Abstract

Modern electronic devices such as smartphones, wearable devices, and robots typically integrate three-dimensional sensors to track the device’s movement in the 3D space. However, sensor measurements in three-dimensional vectors are highly sensitive to device orientation since a slight change in the device’s tilt or heading can change the vector values. To avoid complications, applications using these sensors often use only the magnitude of the vector, as in geomagnetic-based indoor positioning, or assume fixed device holding postures such as holding a smartphone in portrait mode only. However, using only the magnitude of the vector loses the directional information, while ad hoc posture assumptions work under controlled laboratory conditions but often fail in real-world scenarios. To resolve these problems, we propose a universal vector calibration algorithm that enables consistent three-dimensional vector measurements for the same physical activity, regardless of device orientation. The algorithm works in two stages. First, it transforms vector values in local coordinates to those in global coordinates by calibrating device tilting using pitch and roll angles computed from the initial vector values. Second, it additionally transforms vector values from the global coordinate to a reference coordinate when the target coordinate is different from the global coordinate by correcting yaw rotation to align with application-specific reference coordinate systems. We evaluated our algorithm on geomagnetic field-based indoor positioning and bidirectional step detection. For indoor positioning, our vector calibration achieved an 83.6% reduction in mismatches between sampled magnetic vectors and magnetic field map vectors and reduced the LSTM-based positioning error from 31.14 m to 0.66 m. 
For bidirectional step detection, the proposed algorithm with vector calibration improved step detection accuracy from 67.63% to 99.25% and forward/backward classification from 65.54% to 100% across various device orientations.

1. Introduction

Modern devices such as smartphones, wearable devices, robots, and drones incorporate three-dimensional accelerometers, magnetometers, and gyroscopes, which enable the estimation of the device’s movement, heading, speed, and posture. Various applications using these 3D sensors are gaining attention in the smartphone and robot markets. These include PDR (Pedestrian Dead Reckoning) [1], posture estimation [2], gesture recognition [3], activity classification [4], fall detection [5], and augmented-reality overlay alignment [6].

To ensure a reliable measurement with 3D sensors, the first critical step is often applying the general sensor calibration procedure to correct for intrinsic errors. This calibration primarily focuses on enhancing the measurement accuracy of the sensor itself. For example, various techniques are used to compensate for errors like hard and soft iron distortions in magnetometers [7,8,9,10,11], or biases and scale factors in accelerometers and gyroscopes [12,13].

While this traditional sensor calibration procedure targets reliable and accurate measurement with the sensor hardware itself, our vector calibration is a completely different process, targeting the sensor data transformation in the three-dimensional space, since sensor measurements in three-dimensional vectors are highly sensitive to device orientation. This means that the same motion can be recognized as a different motion when the smartphone’s tilt or heading direction is different.

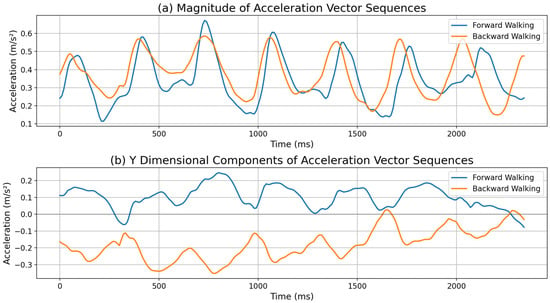

Most studies have addressed this problem by using the magnitude values of three-dimensional vectors [14] or taking ad hoc approaches such as assuming fixed device holding postures [15]. The magnitude of a three-dimensional vector remains constant regardless of device orientation. However, this approach loses the directional information of a motion by converting a three-dimensional vector into a single scalar value. For example, as shown in Figure 1a, forward and backward walking may produce similar acceleration magnitudes despite having opposite directions. Using the magnitude can simplify step-counting algorithms. However, because the individual components of the three-dimensional vector can no longer be interpreted, the exact direction of the movement cannot be determined. Figure 1b shows the acceleration vector values in the y dimension during forward and backward walking. During forward walking, the acceleration in the y dimension is positive, while during backward walking it is negative. By using this polarity difference in the sensor vector values, it is possible to implement directional step counting rather than traditional step counting.

Figure 1.

Comparison of accelerometer values during forward (blue line) and backward (orange line) walking. (a) The magnitudes of accelerometer vector sequences show similar values in both tests. (b) The y dimensional component values of acceleration vector sequences show a clear difference. Therefore, using the vector value enables not only step detection but also classification between forward and backward steps, as discussed in Section 5.
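The contrast between Figure 1a and Figure 1b can be illustrated with a minimal sketch. The two peak vectors below are hypothetical values chosen for illustration, not measurements from the experiments; the y-axis is assumed to point along the walking direction.

```python
import math

def magnitude(v):
    """Euclidean norm of a 3D vector; unchanged by any rotation of the device."""
    return math.sqrt(sum(c * c for c in v))

# Hypothetical peak accelerations (m/s^2); illustrative values only.
forward_step = (0.1, 0.8, 0.3)
backward_step = (0.1, -0.8, 0.3)

# The magnitudes coincide, so a magnitude-only detector cannot tell them apart.
same_magnitude = math.isclose(magnitude(forward_step), magnitude(backward_step))

# The sign of the y component still separates the two walking directions.
opposite_sign = forward_step[1] > 0 > backward_step[1]

print(same_magnitude, opposite_sign)
```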

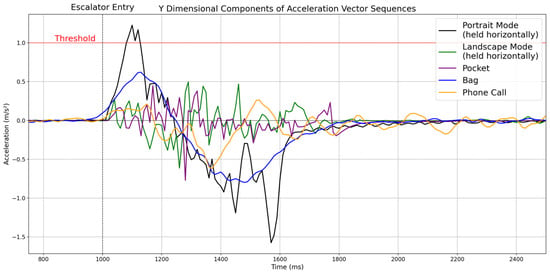

In addition, ad hoc posture assumptions work under controlled laboratory conditions but often fail in real-world scenarios. In the real world, users hold smartphones or wearable devices in various orientations while walking or making a phone call, or carry them in different positions such as pants pockets, shirt pockets, and bags. As an example, Figure 2 shows the acceleration vector values in the device’s y dimension when taking an escalator while carrying a smartphone in various positions. When the acceleration vector value exceeds the threshold (1.0 m/s²) marked in red, it is recognized as taking an escalator. When the user holds the smartphone horizontally in portrait mode, the vector value exceeds the threshold, and the algorithm recognizes the escalator usage (black line). However, when the user holds the smartphone in landscape mode (green line), carries it vertically in the front pocket of the pants with the top end facing down (purple line), carries it in a bag horizontally (blue line), or uses it for a phone call (orange line), the vector value does not exceed the threshold. This occurs because, in these positions, the device’s y-axis is misaligned with the user’s movement direction on the escalator. Consequently, the algorithm fails to detect escalator usage even though the user is taking the escalator. This example demonstrates how orientation-dependent algorithms that perform well under controlled laboratory conditions can fail in real-world scenarios.

Figure 2.

Acceleration vector values measured along the device’s y-axis during escalator entry for five different smartphone-carrying positions: hand-held in portrait mode (black), landscape mode (green), in pants pocket (purple), in a bag (blue), and during a phone call (orange). The red horizontal line denotes the 1.0 m/s² detection threshold, and the dashed vertical line marks the moment of entry. Only the portrait posture exceeds the threshold, whereas the other carrying positions remain below it.
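The failure mode in Figure 2 can be reproduced with a simplified sketch. It assumes the device stays level and is only rotated about the vertical axis, so the y-axis reading is the projection of the forward acceleration onto the device’s y-axis. The entry acceleration of 1.3 m/s² is a hypothetical value, not one taken from the measurements.

```python
import math

THRESHOLD = 1.0  # m/s^2, the detection threshold shown in Figure 2

def device_y(accel_along_motion, yaw_deg):
    """y-axis reading when the device's y-axis is rotated yaw_deg about the
    vertical axis away from the direction of motion (level device assumed)."""
    return accel_along_motion * math.cos(math.radians(yaw_deg))

entry_accel = 1.3  # hypothetical forward acceleration at escalator entry

portrait = device_y(entry_accel, 0)    # y-axis aligned with the motion
landscape = device_y(entry_accel, 90)  # y-axis perpendicular to the motion

detected_portrait = portrait > THRESHOLD
detected_landscape = landscape > THRESHOLD
print(detected_portrait, detected_landscape)
```

The same physical event is detected in one posture and missed in the other, which is the orientation dependency that vector calibration is meant to remove.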

To process a sensor’s three-dimensional vector data regardless of device orientation, we propose a universal vector calibration algorithm that enables consistent three-dimensional vector data measurements for the same physical activity, independent of how users hold or rotate their devices.

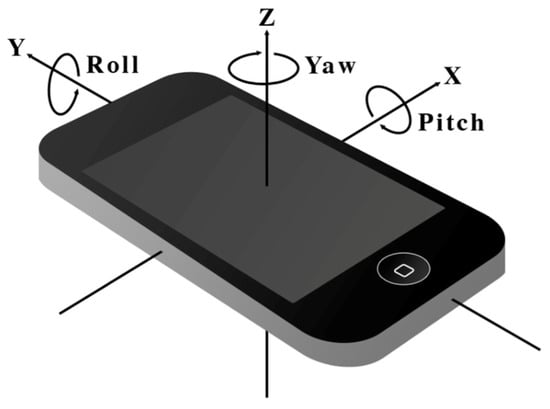

The proposed algorithm consists of two steps. First, we calibrate the device’s tilt using the measured pitch and roll angles. As shown in Figure 3, the pitch angle is the rotation angle about the x-axis, and the roll angle is the rotation angle about the y-axis. Second, we calibrate the device’s yaw rotation, which is the rotation about the z-axis, to align with a reference coordinate system. This reference coordinate system varies depending on the application requirements. It may be a user coordinate system aligned with the user’s eye direction, a global coordinate system aligned with Earth’s gravity and the user’s moving direction, or an absolute coordinate system aligned with an absolute orientation. We discuss the types of coordinate systems for various applications in the following section. Then, we demonstrate how we apply the vector calibration algorithm to two different applications: a geomagnetic field-based indoor positioning system [16,17] and bidirectional step detection with forward and backward walking.

Figure 3.

Roll, pitch, and yaw rotation on a smartphone. This coordinate system is defined such that the XY plane is horizontal to the ground, and the Z-axis is aligned with gravity [18].

2. Classification of Coordinate Systems

Before we introduce our vector calibration algorithm, we first define various categories of coordinate systems used in sensor-based applications. Table 1 shows the types of coordinate systems and the corresponding application examples where each coordinate system is used. We categorize coordinate systems into two main types: relative coordinate systems and absolute coordinate systems. A coordinate system is relative when its coordinate is defined along with an object’s orientation, such as human body orientation or device orientation. In contrast, a coordinate system is absolute when all three axes are aligned to a specific orientation in the three-dimensional space regardless of device rotation or user movement. However, since any object in a space, including Earth, is moving continuously relative to another, it is very difficult to define an absolute coordinate system. Therefore, we define a coordinate system as absolute when its coordinates are aligned, with Earth’s gravity direction being negative in the z dimension while its x-y plane is in parallel to the Earth’s surface. An example of an absolute coordinate system is one where its positive x coordinates point East while its positive y coordinates point North.

Table 1.

Coordinate system types, their characteristics, and the corresponding application examples.

Table 1 shows three examples of relative coordinate systems. First, the local coordinate system is physically aligned with a device’s orientation [19]. As shown in Figure 3, the x-, y-, and z-axes of the coordinate system are aligned with the smartphone’s internal sensor orientations. Second, the user coordinate system is physically aligned with user orientation, where the user’s head becomes the positive z dimension and the user’s eye level defines the xy plane. The direction of the user’s eye becomes the positive y dimension while their left-hand side becomes the negative x dimension. This coordinate system rotates when the user turns their head or changes posture so that it follows the user’s viewing direction. Third, the global coordinate system is an example of a user coordinate system, with the xy plane aligned horizontally to the ground and perpendicular to Earth’s gravity. This coordinate system maintains horizontality regardless of how the user tilts or moves but allows rotation only along the z-axis depending on the user’s movement direction. This is generally called the global coordinate system [12].

Depending on the application requirements, different reference coordinate systems are employed. For example, geomagnetic field-based indoor positioning [16,17] requires an absolute coordinate system aligned with the magnetic field map collection direction, since magnetic fingerprints are collected in a fixed orientation and real-time positioning accuracy depends on aligning three-dimensional magnetic field vectors to this reference orientation. Bidirectional step detection requires the global coordinate system because forward and backward walking are defined relative to the user’s moving direction, rather than to the device orientation. When a user holds a smartphone in various orientations such as portrait and landscape modes or carries the device in their pocket and in a bag, the same physical walking motion may generate different acceleration patterns in the local coordinate system. Therefore, the global coordinate system is essential to consistently distinguish movement directions across different device orientations. Smartwatch applications use the local coordinate system, as user interactions such as gestures and taps are naturally interpreted relative to the watch’s physical orientation on the wrist [20]. VR (Virtual Reality) applications require the user coordinate system since the application must respond to the user’s head and body movements while accommodating various gaming postures including standing, sitting, and lying down [21]. The LiDAR SLAM scanning application requires the global coordinate system to maintain consistent spatial mapping as the device moves through different orientations during environment scanning [22].

Our vector calibration algorithm transforms sensor measurements from the local coordinate system to the target reference coordinate system used in each application. For instance, geomagnetic field-based indoor positioning uses, as its reference coordinate system, the absolute coordinate system defined by the direction of the magnetic field map collection, whereas bidirectional step detection uses the global coordinate system. The following section presents the details of our universal vector calibration algorithm.

3. Vector Calibration Algorithm

A three-dimensional vector is used to measure the sensor readings in the local coordinate system. We denote the raw 3D sensor measurement by

$$\mathbf{v} = [v_x, v_y, v_z]^{T}$$

where $v_x$, $v_y$, and $v_z$ represent the measured values along the sensor’s x-, y-, and z-axes, respectively.

The proposed algorithm consists of two stages. First, we transform the vector from the local coordinate system to the global coordinate system by calibrating the device’s tilt. To calibrate tilting, we first estimate the device’s pitch ($\theta$) and roll ($\phi$) angles using the gravity vector computed from $\mathbf{a}$, which is the raw measurement vector of the accelerometer sensor. The accelerometer measures not only linear acceleration caused by device movement but also the Earth’s gravitational acceleration (approximately 9.8 m/s²). Since gravity is always directed downward toward Earth’s center, the gravity vector computed from the accelerometer’s three axes indicates how the device is tilted relative to the vertical direction. We isolate the gravity vector by filtering out the high-frequency motion components from the raw accelerometer data, retaining only the low-frequency gravitational component [12]. More specifically, we employ an exponential smoothing filter [23], which is a type of low-pass filter particularly well-suited for real-time gravity estimation in mobile devices. Among the various moving average filters [24,25], we chose the exponential smoothing filter because it requires saving only a single previous sample, unlike other moving average filters [26] that require saving multiple previous samples. This results in O(1) memory complexity and minimal processing overhead, making it computationally efficient for real-time operation while balancing noise reduction against responsiveness to orientation changes.

The filtered acceleration vector $\mathbf{a}_f$ converges to the gravity vector $\mathbf{g}$, as the high-frequency linear acceleration components are progressively attenuated while the constant gravitational component is preserved.
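The filtering step can be sketched as follows. This is a minimal illustration that assumes the standard exponential smoothing form (new estimate = alpha × current sample + (1 − alpha) × previous estimate). The coefficient `alpha = 0.1` and the synthetic input are hypothetical, since the value used in the experiments is not stated here.

```python
def smooth_gravity(accel, prev_filtered, alpha=0.1):
    """One exponential-smoothing update. Only the previous filtered sample is
    kept, so memory use is O(1). alpha = 0.1 is an illustrative value."""
    return tuple(alpha * a + (1.0 - alpha) * p
                 for a, p in zip(accel, prev_filtered))

# Synthetic input: device lying flat (gravity on z) with an alternating,
# zero-mean motion component of +/-2.0 m/s^2 on the y-axis.
filtered = (0.0, 0.0, 9.8)
for n in range(200):
    motion_y = 2.0 if n % 2 == 0 else -2.0
    filtered = smooth_gravity((0.0, motion_y, 9.8), filtered)

# The rapidly changing y component is strongly attenuated, while the
# constant gravitational component on z is preserved.
print(filtered)
```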

The roll and pitch angles can be computed as
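Under one common convention, the angles take the following form. This is an assumption rather than a statement of the exact formulation used: the filtered gravity vector is written $\mathbf{g} = [g_x, g_y, g_z]^T$ and reads $[0, 0, +g]^T$ when the device lies flat, pitch $\theta$ is the rotation about the x-axis, and roll $\phi$ is the rotation about the y-axis. Other sign conventions are equally valid as long as the rotation matrices use the same one.

```latex
\theta = \operatorname{atan2}\left(g_y,\; g_z\right), \qquad
\phi = \operatorname{atan2}\left(-g_x,\; \sqrt{g_y^{2} + g_z^{2}}\right)
```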

However, roll and pitch calculated in this way may contain errors. This is because the gravity component cannot be perfectly filtered from the raw accelerometer data. The accelerometer measures the total force acting on it and cannot distinguish between the gravitational force and the inertial forces caused by the device’s movement. Therefore, our low-pass filtering approach operates under the assumption that gravity is a constant, low-frequency signal, while forces from user motion are rapidly changing, high-frequency signals. This assumption is particularly effective during typical user activities such as walking, sitting, or jogging, where the user’s movement can change over a short period of time. In this environment the low-pass filter can effectively separate the gravity vector. However, in an environment where the user’s movement changes slowly and continuously, such as riding in a car or a train, the filter’s ability to separate the gravity vector can be reduced. The resulting error in the calculated pitch and roll angles is typically within ±1° under static conditions and ±3° during normal walking. In situations with slow and continuous acceleration, this error can temporarily increase up to ±10°.

The rotation matrices that correct the device’s tilt (pitch and roll) are defined as follows:

Here, $R_x(\theta)$ represents rotation around the x-axis to eliminate pitch angle $\theta$, and $R_y(\phi)$ represents rotation around the y-axis to eliminate roll angle $\phi$. The combined tilt correction rotation matrix is

By applying this combined rotation matrix to the raw sensor vector, we obtain a tilt-corrected vector.
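Written out with standard right-handed rotation matrices, the tilt correction may look as follows. This is a sketch under stated assumptions: $\theta = \operatorname{atan2}(g_y, g_z)$ and $\phi = \operatorname{atan2}(-g_x, \sqrt{g_y^2 + g_z^2})$ are computed from the filtered gravity vector $\mathbf{g}$, the pitch rotation is applied first, and the symbol names $R_{tilt}$ and $\mathbf{v}_{tilt}$ are chosen here for illustration.

```latex
R_x(\theta) = \begin{bmatrix} 1 & 0 & 0 \\ 0 & \cos\theta & -\sin\theta \\ 0 & \sin\theta & \cos\theta \end{bmatrix}, \qquad
R_y(\phi) = \begin{bmatrix} \cos\phi & 0 & \sin\phi \\ 0 & 1 & 0 \\ -\sin\phi & 0 & \cos\phi \end{bmatrix}

R_{tilt} = R_y(\phi)\, R_x(\theta), \qquad
\mathbf{v}_{tilt} = R_{tilt}\, \mathbf{v}
```

With these definitions, $R_{tilt}\,\mathbf{g} = [0, 0, \lVert\mathbf{g}\rVert]^T$: the pitch rotation removes the y component of gravity and the roll rotation then removes the x component.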

At this point, the tilt-corrected vector is independent of the device’s roll and pitch variations. The tilt correction ensures that consistent vector values are measured for the same physical activity regardless of whether the device is held upright, tilted sideways, or rotated forward or backward. However, when the device is rotated around the z-axis (yaw rotation), different vector values are still measured for the same physical activity.
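A quick numerical check of the tilt correction is sketched below. It assumes one particular angle convention (pitch from `atan2(gy, gz)`, roll from `atan2(-gx, hypot(gy, gz))`, pitch applied before roll); the function names and the test vector are hypothetical.

```python
import math

def tilt_angles(g):
    """Pitch (about x) and roll (about y) from a gravity estimate g."""
    gx, gy, gz = g
    pitch = math.atan2(gy, gz)
    roll = math.atan2(-gx, math.hypot(gy, gz))
    return pitch, roll

def correct_tilt(v, pitch, roll):
    """Apply R_y(roll) * R_x(pitch) to v without building full matrices."""
    x, y, z = v
    # Rotation about the x-axis removes the pitch.
    y, z = (y * math.cos(pitch) - z * math.sin(pitch),
            y * math.sin(pitch) + z * math.cos(pitch))
    # Rotation about the y-axis removes the roll.
    x, z = (x * math.cos(roll) + z * math.sin(roll),
            -x * math.sin(roll) + z * math.cos(roll))
    return (x, y, z)

# A tilted device: gravity is spread over all three local axes.
gravity = (3.0, -4.0, 8.0)
pitch, roll = tilt_angles(gravity)
leveled = correct_tilt(gravity, pitch, roll)
print(leveled)  # gravity now lies along +z only
```

After the correction, the x and y components of gravity vanish and its full magnitude appears on the z-axis, which is what makes the xy plane horizontal.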

Second, to correct yaw rotation, we transform the tilt-corrected vector to align with a target reference coordinate system. As described in Table 1, we select the appropriate reference coordinate system based on the specific application requirements. For instance, geomagnetic field-based indoor positioning requires alignment with the magnetic field map collection direction, while bidirectional step detection aligns with the user’s moving direction. The key principle is that we define a reference direction ($\psi_{ref}$) specific to each application context.

We first obtain the device’s yaw angle $\psi$ from the gyroscope, where $\psi$ denotes the rotation around the z-axis. We then determine the yaw correction angle $\psi_c$ as the difference between the measured yaw angle and the reference direction.

From $\psi_c$, we can build the yaw correction rotation matrix

and apply it to the tilt-corrected vector to obtain the fully calibrated vector.
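In symbols, the yaw stage may be written as follows. This is a sketch that assumes the yaw angle $\psi$ and the reference direction $\psi_{ref}$ are both measured counterclockwise about the z-axis from the same zero direction; with a clockwise convention, such as a compass azimuth, the sign of $\psi_c$ flips. The names $\psi_c$, $\mathbf{v}_{tilt}$, and $\mathbf{v}_{cal}$ are chosen here for illustration.

```latex
\psi_c = \psi - \psi_{ref}, \qquad
R_z(\psi_c) = \begin{bmatrix} \cos\psi_c & -\sin\psi_c & 0 \\ \sin\psi_c & \cos\psi_c & 0 \\ 0 & 0 & 1 \end{bmatrix}, \qquad
\mathbf{v}_{cal} = R_z(\psi_c)\, \mathbf{v}_{tilt}
```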

Now this calibrated vector maintains consistent measurements for the same physical activity regardless of device orientation, including pitch, roll, and yaw rotations.

The pseudo-code of the vector calibration algorithm is shown in Algorithm 1.

| Algorithm 1: Pseudo-code of the vector calibration algorithm | |

| Input: $\mathbf{v}$: A raw 3D vector to be calibrated (e.g., magnetic field vector); $\mathbf{a}$: The raw 3D vector from the accelerometer; $\psi$: The current yaw angle of the device; $\psi_{ref}$: The application-specific reference yaw angle. Output: $\mathbf{v}_{cal}$: The calibrated 3D vector. Constant: $\alpha$ (The smoothing coefficient). Persistent State: $\mathbf{a}_f$: The filtered acceleration vector from the previous time step | |

| 1 | // Stage1: Pitch & Roll Correction |

| 2 | |

| 3 | |

| 4 | |

| 5 | |

| 6 | |

| 7 | |

| 8 | |

| 9 | |

| 10 | |

| 11 | // Stage2: Yaw Correction |

| 12 | |

| 13 | |

| 14 | |

| 15 | |
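The two stages described in this section can be combined into a short runnable sketch. It is an illustration under stated assumptions, not a transcription of Algorithm 1: the class name `VectorCalibrator`, the smoothing coefficient of 0.1, the initial gravity estimate, and the angle conventions (counterclockwise yaw, pitch from `atan2(gy, gz)`, roll from `atan2(-gx, hypot(gy, gz))`) are all hypothetical choices.

```python
import math

class VectorCalibrator:
    """Sketch of the two-stage calibration; angle conventions are assumptions."""

    def __init__(self, alpha=0.1, initial_gravity=(0.0, 0.0, 9.8)):
        self.alpha = alpha               # smoothing coefficient (illustrative value)
        self.filtered = initial_gravity  # persistent filtered acceleration

    def calibrate(self, v, accel, yaw, yaw_ref):
        # Stage 1: pitch and roll correction.
        a = self.alpha
        self.filtered = tuple(a * c + (1.0 - a) * f
                              for c, f in zip(accel, self.filtered))
        gx, gy, gz = self.filtered
        pitch = math.atan2(gy, gz)
        roll = math.atan2(-gx, math.hypot(gy, gz))
        x, y, z = v
        y, z = (y * math.cos(pitch) - z * math.sin(pitch),
                y * math.sin(pitch) + z * math.cos(pitch))
        x, z = (x * math.cos(roll) + z * math.sin(roll),
                -x * math.sin(roll) + z * math.cos(roll))
        # Stage 2: yaw correction toward the reference direction.
        c = yaw - yaw_ref
        x, y = (x * math.cos(c) - y * math.sin(c),
                x * math.sin(c) + y * math.cos(c))
        return (x, y, z)

# Flat device turned 90 degrees counterclockwise from the reference direction:
# a vector along the device's x-axis lies along the reference y-axis.
calibrator = VectorCalibrator()
result = calibrator.calibrate((1.0, 0.0, 0.0), (0.0, 0.0, 9.8),
                              yaw=math.pi / 2, yaw_ref=0.0)
print(result)
```

Each call performs a fixed number of arithmetic and trigonometric operations and stores only one filtered vector between calls, consistent with constant time and memory per input vector.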

The computational complexity of the algorithm is O(1) for each input vector. Each vector calibration involves three 3 × 3 rotation matrix constructions and multiplications, along with trigonometric computations for the rotation matrix elements. Since these operations are performed on constant-sized data structures (3 × 1 vectors and 3 × 3 matrices) regardless of the application scale or dataset size, the processing time remains constant per vector.

We measured the runtime performance of our vector calibration algorithm on a Samsung Galaxy S23 Plus smartphone with Android 14 (Samsung Electronics Co., Ltd., Suwon, Republic of Korea). The average execution time for calibrating a single three-dimensional vector is approximately 0.15 ms. This processing time is significantly lower than typical sensor sampling intervals in Android systems. According to Android 14 specifications, motion sensors are system-limited to specific maximum sampling rates: the accelerometer and the gyroscope operate at up to 200 Hz (5 ms intervals) while the magnetometer operates at up to 50 Hz (20 ms intervals). Our calibration processing time of 0.15 ms represents only 3% of the accelerometer and gyroscope sampling interval, and 0.75% of the magnetometer sampling interval. This substantial margin ensures real-time performance without affecting the overall system responsiveness, even when processing sensor data at maximum sampling rates.
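The percentages above follow directly from the sampling rates, as this short calculation shows (variable names are illustrative):

```python
calibration_ms = 0.15            # measured time per calibrated vector

accel_interval_ms = 1000 / 200   # 200 Hz accelerometer and gyroscope -> 5 ms
mag_interval_ms = 1000 / 50      # 50 Hz magnetometer -> 20 ms

# Share of each sampling interval consumed by one calibration, in percent.
accel_share = calibration_ms / accel_interval_ms * 100
mag_share = calibration_ms / mag_interval_ms * 100

print(f"{accel_share:.2f}% of the accelerometer interval")
print(f"{mag_share:.2f}% of the magnetometer interval")
```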

4. Evaluation of Geomagnetic Field-Based Indoor Positioning

We first applied the proposed vector calibration algorithm to a geomagnetic field-based indoor positioning system (IPS) and evaluated its performance [27]. To build the IPS, we first collected a magnetic field map. We used the Hana Square underground facility in Korea University Science Campus as our IPS testbed, whose dimensions are 26 m × 95 m. We collected magnetic field data at every walkable location at 60 cm intervals while holding the smartphone horizontally and walking in a single, consistent direction, as shown in Figure 4.

Figure 4.

Movement direction during magnetic field map construction. The magnetic vectors were collected while moving in the direction of the red arrows.

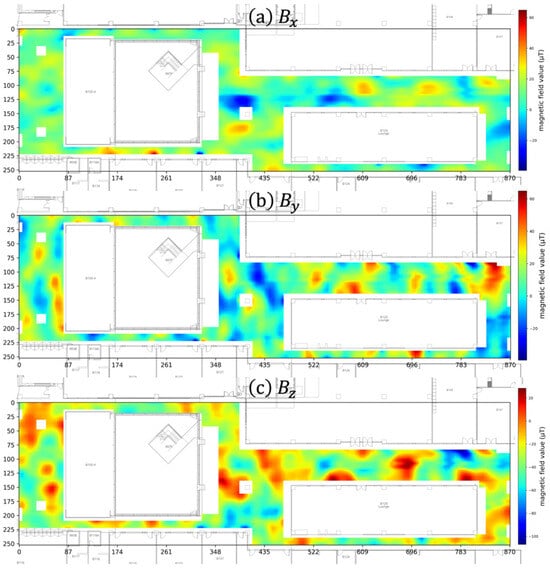

At each point, the smartphone’s magnetometer recorded the three-dimensional magnetic field vector. We then generated a three-dimensional magnetic field vector map by spatially interpolating the collected three-dimensional magnetic field vector data. Figure 5 visualizes the magnetic field map. Specifically, Figure 5a is the map generated by interpolating the x component of the vectors, Figure 5b shows the map for the y component, and Figure 5c shows the map for the z component.

Figure 5.

The three-dimensional magnetic field map of our Hana Square testbed, showing the individual vector components: (a) the x dimension, (b) the y dimension, and (c) the z dimension.

To apply vector calibration in a real-time magnetic field-based IPS [16,17], the measured magnetic field vectors must be aligned with the magnetic field map collection direction. Therefore, we set the map collection direction shown in Figure 4 as the reference direction $\psi_{ref}$. When the user starts walking, we calculate the yaw correction angle using the user’s moving direction estimated from compass and gyroscope data and apply it to calibrate the magnetic field vectors in real time.

To evaluate the performance of our proposed vector calibration algorithm, we first analyzed the sequence matching improvement by comparing the raw and calibrated magnetic vectors against the ground truth map data. Subsequently, we also evaluated the improvement in localization accuracy by applying the calibration to an LSTM-based IPS [17]. A reliable ground truth data collection is essential for this evaluation, including the collection of the real-time magnetic vector sequences for the user’s test paths with varying orientations as well as the selection of the target vector sequences from the magnetic map.

To create this experimental setup, we used six different test paths with varying movement patterns to evaluate our calibration algorithm under different scenarios. Each test path was planned to include multiple directional changes relative to the magnetic field map collection direction. For example, one test path involved walking 30 steps in the same direction as the map collection direction (0°), then turning 90° clockwise and walking 50 steps, followed by another 90° clockwise turn and walking 50 steps, then a final 90° clockwise turn and walking the remaining 70 steps in the final direction (270°) relative to the map collection direction. These directional changes allowed us to evaluate the calibration algorithm’s performance across a range of orientation differences between real-time movement and the original map collection direction. The ground truth map data sequences, as shown in Figure 6, consist of values extracted from the pre-collected magnetic field map at locations corresponding to these test paths. To minimize the errors caused by inter-device sensor biases, both map collection and test evaluations were conducted using the same smartphone.

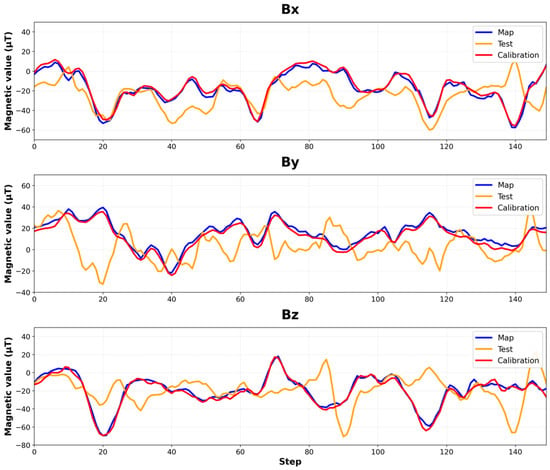

Figure 6.

Magnetic vector sequences in the x, y, and z dimensions for one of our test paths. The blue line shows the sequence of values stored in the magnetic field map while the orange line shows the sequence of values sampled by the user during the test while walking on the same path with a random orientation. The red line shows the result after calibration.

Figure 6 compares the sequence of magnetic vectors in the x, y, and z dimensions in the magnetic field map against the sequences of magnetic vectors collected during a test case with and without vector calibration. The blue line shows the vector sequence of the magnetic field map. The orange line shows the vector sequence during the test without calibration. During the test, if the device’s orientation aligns with the magnetic field map collection direction, the vector sequence collected during the test generally matches well with the corresponding sequence in the magnetic field map. However, if the device orientations are different from each other or the smartphone’s tilting changes during the test, the vector values completely mismatch. In the figure, the average differences between the vectors in the magnetic field map and the collected vectors without vector calibration are 13.83 μT, 16.51 μT, and 16.90 μT in the x, y, and z dimensions, respectively. The maximum difference reaches as high as 71.7 μT. These large differences make indoor positioning impossible with raw vectors in the existing geomagnetic field-based IPS.

However, as shown by the red line in Figure 6, after applying our vector calibration the calibrated vector sequences now match well with the original sequence in the magnetic field map. The calibrated vectors consistently match regardless of device orientation or tilting changes. For six test cases, the average differences between the calibrated vector values and those in the magnetic field map are 2.42 μT, 2.84 μT, and 2.44 μT in the x, y, and z dimensions, respectively. This suggests that our algorithm effectively addresses the device orientation dependency of magnetic field vectors and can be applied for real-time positioning in magnetic field-based indoor positioning systems.
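The per-axis averages quoted above can be turned into relative reductions. The sketch below assumes that the 83.6% figure in the Abstract is the mean of the three per-axis reductions; the text does not state how it was derived. Note also that the uncalibrated values are those reported for Figure 6, while the calibrated values are averaged over six test cases.

```python
# Average per-axis differences from the magnetic field map, in microtesla.
raw = (13.83, 16.51, 16.90)       # without calibration (x, y, z)
calibrated = (2.42, 2.84, 2.44)   # with calibration (x, y, z)

# Relative reduction on each axis, in percent.
per_axis = [(1 - c / r) * 100 for r, c in zip(raw, calibrated)]
mean_reduction = sum(per_axis) / len(per_axis)

print([round(p, 1) for p in per_axis], round(mean_reduction, 1))
```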

To demonstrate the practical benefits of our vector calibration, we evaluated its impact on a geomagnetic field-based IPS using recurrent neural network (RNN) models, including a Long Short-Term Memory (LSTM) model [17]. While basic RNNs can process sequence data, they often struggle with long-term dependencies, gradually forgetting information from earlier parts of a long sequence. LSTM models address this limitation through internal memory cells that enable them to retain and utilize information from extended sequences, making them a better choice for indoor positioning systems with long sequences. The primary objective of our evaluation is to demonstrate the effectiveness of vector calibration across different applications, rather than comparing the impact of deep neural network models on the IPS’s performance.

To train the LSTM model in a supervised manner, we first generated training data from the pre-collected magnetic field map. To create paths that resemble actual pedestrian movements, we used a modified random waypoint model [28]. This model simulates walking patterns by selecting a random starting point within the testbed, moving a random distance in a random direction, and then changing the direction again. Through this process, we generated 400,000 random paths, each of which consists of 100 steps. A total of 60% of the dataset is used for training, 20% for validation, and the remaining 20% for testing. The architecture of our LSTM model consists of three hidden layers, each containing 300 hidden nodes. This network is designed to process an input sequence of 100 three-dimensional magnetic vectors and to output a two-dimensional (x, y) location coordinate. The detailed hyperparameters for the model are summarized in Table 2.
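A path generator in the spirit of the random waypoint model might look like the sketch below. The modifications applied to the model are not described here, so several details are assumptions: a rectangular 26 m × 95 m walkable area without obstacles, a fixed 0.6 m step length, legs of at most 20 steps, and the function name `random_waypoint_path`.

```python
import math
import random

WIDTH_M, LENGTH_M = 26.0, 95.0  # testbed dimensions
STEP_M = 0.6                    # assumed step length (matches the map spacing)

def random_waypoint_path(n_steps=100, max_leg_steps=20, rng=random):
    """Return n_steps (x, y) positions: pick a random start, then walk legs of
    random length in random directions, turning early if a wall is reached."""
    x, y = rng.uniform(0, WIDTH_M), rng.uniform(0, LENGTH_M)
    path = []
    while len(path) < n_steps:
        heading = rng.uniform(0, 2 * math.pi)
        for _ in range(rng.randint(1, max_leg_steps)):
            nx = x + STEP_M * math.cos(heading)
            ny = y + STEP_M * math.sin(heading)
            if not (0 <= nx <= WIDTH_M and 0 <= ny <= LENGTH_M):
                break  # would leave the testbed: choose a new direction
            x, y = nx, ny
            path.append((x, y))
            if len(path) == n_steps:
                break
    return path

path = random_waypoint_path(rng=random.Random(0))

# 60/20/20 split of the 400,000 generated paths.
total_paths = 400_000
n_train = total_paths * 60 // 100
n_val = total_paths * 20 // 100
n_test = total_paths - n_train - n_val
print(len(path), n_train, n_val, n_test)
```

In a full pipeline, each position would be paired with the magnetic vector looked up from the field map to form one 100-step training sequence.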

Table 2.

Hyperparameters of our LSTM model.

After training, our geomagnetic field-based IPS using the LSTM model shows an average positioning error of 0.43 m for the test set. However, in real-time testing with a smartphone, the performance varies dramatically depending on how device orientation is handled. When using raw vectors without any calibration, the positioning error increases to 30.18 m. This severe degradation occurs because device orientation changes during user movement can affect the measured magnetic vector sequences, causing them to mismatch with the vector sequences in the pre-collected magnetic field map, resulting in inaccurate position estimates.

Using the vector magnitude sequences instead of raw vector sequences, which is the most common approach for handling orientation dependency, performed better with an average positioning error of 10.48 m. While the magnitude-based approach resolves device orientation dependency, resulting in better positioning performance than using uncalibrated vectors, the positioning error is still quite large. This limitation arises because this approach uses only a single scalar magnitude value instead of utilizing the full three-dimensional vector. Since the magnitude-based approach represents the current best practice for handling 3D sensor data in geomagnetic field-based indoor positioning, the existing geomagnetic field-based IPSs face inherent limitations in further improving positioning accuracy.

In contrast, by applying our vector calibration algorithm to 3D sensor data, we can use the entire 3D vector sequences for sequence matching in the LSTM model. This reduces the average positioning error of our IPS to 0.66 m, only a modest degradation from the 0.43 m error obtained on the simulated test set. Thus, with vector calibration, we can address the fundamental performance limitation of the existing geomagnetic field-based IPS by preserving the full three-dimensional vector information.

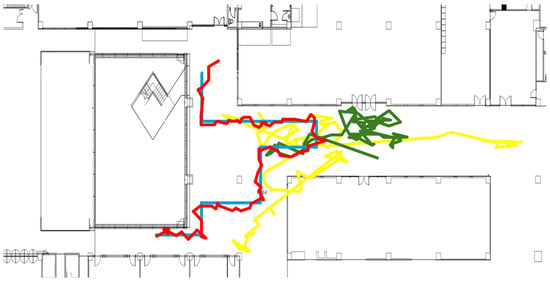

Figure 7 compares the test path with the paths predicted by the LSTM-based IPS for one of the six test paths. The blue line represents the ground truth path, while the other colored lines are paths predicted by the LSTM model using different vector processing approaches. The green line shows the path predicted by the LSTM model without calibration. As shown in the figure, the positioning results do not match the actual path at all. The yellow line shows the results of using vector magnitudes as the input to the LSTM model. As the figure illustrates, the magnitude approach produces better results than using the raw vectors without calibration, but it still exhibits significant positioning errors. With vector calibration, however, the LSTM model estimates a path that closely follows the actual path, as shown by the red line. Table 3 summarizes the average positioning errors of the LSTM model using these three different vector processing approaches for six test paths.

Figure 7.

Positioning results of LSTM-based geomagnetic field IPS for a single test path. The blue line shows the actual test path, while the yellow, green, and red lines show the predicted paths generated by the LSTM model using vector magnitudes only, uncalibrated vectors, and calibrated vectors, respectively.

Table 3.

LSTM positioning errors for six test paths using different vector processing approaches.

5. Evaluation of Bidirectional Step Detection

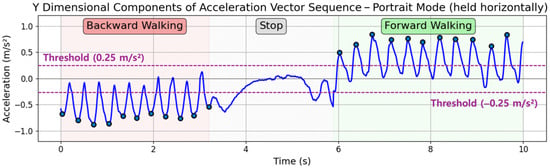

Our second target application is bidirectional step detection. Traditional step detection algorithms [29,30] count only forward steps and therefore miss backward movement. We applied the proposed vector calibration algorithm to extend classical step detection to bidirectional step detection. For bidirectional step detection, we use the y component of the acceleration vector. Since raw acceleration values contain noise that makes analysis difficult, we first apply a low-pass filter to the acceleration data. We then detect both upper and lower peaks in the sampled y-axis acceleration, treating each peak of the roughly sinusoidal waveform as a single step. A forward step is detected when an upper peak exceeds a positive threshold of +0.25 m/s², while a backward step is detected when a lower peak falls below a negative threshold of −0.25 m/s². This is illustrated in Figure 8.
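The peak-and-threshold rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the implementation used in the experiments: the moving-average filter, its window length, and the function names `moving_average` and `detect_steps` are assumptions introduced here, since the text only states that a low-pass filter is applied.

```python
import numpy as np

THRESHOLD = 0.25  # m/s^2, the threshold value reported in the text


def moving_average(signal, window=5):
    """Simple low-pass filter (assumed; the text does not name the filter)."""
    kernel = np.ones(window) / window
    return np.convolve(signal, kernel, mode="same")


def detect_steps(acc_y, threshold=THRESHOLD, window=5):
    """Return a list of (sample_index, label) tuples.

    An upper peak above +threshold is labeled 'forward';
    a lower peak below -threshold is labeled 'backward'.
    """
    y = moving_average(np.asarray(acc_y, dtype=float), window)
    steps = []
    for i in range(1, len(y) - 1):
        is_upper_peak = y[i - 1] < y[i] >= y[i + 1]
        is_lower_peak = y[i - 1] > y[i] <= y[i + 1]
        if is_upper_peak and y[i] > threshold:
            steps.append((i, "forward"))
        elif is_lower_peak and y[i] < -threshold:
            steps.append((i, "backward"))
    return steps
```

Peaks whose filtered amplitude stays inside the ±0.25 m/s² band are ignored, so small oscillations while standing still do not register as steps in this sketch.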

Figure 8.

Bidirectional step detection results with smartphone held horizontal to the ground in portrait mode.

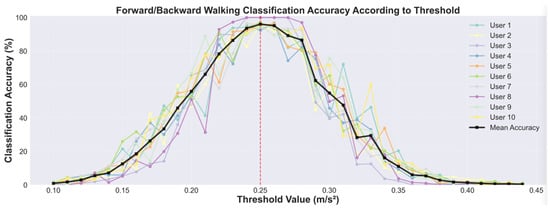

The threshold was determined through experimental validation. We collected a dataset in which 10 users of varying gender, age, and height were instructed to walk 100 steps forward and then 100 steps backward with an approximate step length of 60 cm. We then evaluated the forward and backward walking classification accuracy as we varied the threshold value from ±0.1 to ±0.45 m/s². As shown in Figure 9, the average classification accuracy across all users peaked when the threshold was set to ±0.25 m/s². We therefore selected this threshold value for our bidirectional step detection.
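A threshold sweep of this kind can be sketched as follows. The sketch rests on assumptions that are not stated in the text: each labeled step is represented by the upper and lower peak of its acceleration cycle, a step counts as correctly classified only when exactly one of the two peaks crosses the threshold on the correct side, and the sweep uses a 0.05 m/s² grid. The function names are hypothetical.

```python
import numpy as np


def classify_step(upper_peak, lower_peak, threshold):
    """Label one step from its upper and lower peak values (m/s^2)."""
    forward = upper_peak > threshold
    backward = lower_peak < -threshold
    if forward and not backward:
        return "forward"
    if backward and not forward:
        return "backward"
    return "unknown"  # both or neither peak crossed the threshold


def accuracy(steps, threshold):
    """steps: sequence of (upper_peak, lower_peak, true_label) tuples."""
    correct = sum(
        classify_step(upper, lower, threshold) == label
        for upper, lower, label in steps
    )
    return correct / len(steps)


def sweep_thresholds(steps, thresholds):
    """Return the threshold with the highest accuracy and all accuracies."""
    accuracies = [accuracy(steps, th) for th in thresholds]
    best = int(np.argmax(accuracies))
    return thresholds[best], accuracies


# Candidate grid covering the range studied in the text (0.10 to 0.45 m/s^2).
CANDIDATES = np.round(np.linspace(0.10, 0.45, 8), 2)
```

Under this assumed metric, a threshold that is too low lets both peaks of a cycle cross it, while a threshold that is too high misses the step entirely, which is one plausible reason for the accuracy to peak at an intermediate value.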

Figure 9.

The accuracy of forward and backward step classification in terms of the acceleration vector in the y dimension. The graph shows the classification accuracy for 10 individual users (colored lines) and their means (black line) across a range of threshold values. The mean accuracy is maximized at 96.0% when the threshold is ±0.25 m/s², indicated by the red dashed line.

Figure 8 shows the test results when a user walks backward, stops, and then walks forward while holding a smartphone horizontal to the ground in portrait mode. When the smartphone is held in this orientation, the smartphone’s y-axis aligns with the user’s moving direction, enabling accurate step detection and bidirectional classification without vector calibration. The blue dots marked on the line graph indicate the detected upper peaks for forward steps and lower peaks for backward steps. In this test example, all detected lower peaks during backward walking fall below the −0.25 m/s² threshold and all detected upper peaks during forward walking exceed the +0.25 m/s² threshold, showing that the suggested threshold successfully distinguishes between forward and backward steps.

To apply vector calibration to bidirectional step detection, we set the reference direction to the user’s moving direction. We assume that the user’s moving direction matches the device’s positive y-axis in its local coordinate system when the user holds a smartphone in portrait mode. However, depending on the user’s grip posture, the heading of the smartphone’s y-axis may not match the user’s moving direction, making it difficult to estimate the moving direction accurately with the smartphone’s sensors alone. To solve this problem, we use the IPS to determine the user’s moving direction regardless of the grip posture. By analyzing the sequence of previous coordinates from the IPS, we can estimate the user’s moving direction. We then calculate the difference between this moving direction and the device’s orientation using the gyroscope () and apply it to align the acceleration vectors with the user’s moving direction regardless of how the smartphone is rotated. This alignment enables an accurate distinction between forward and backward walking based on the calibrated acceleration vector.
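The alignment step can be illustrated with a short sketch. It assumes that the acceleration has already been tilt-corrected into the horizontal plane, that the device heading (the direction of the device’s y-axis on the map, in radians counterclockwise from the map’s x-axis) is available from the gyroscope-based orientation estimate, and that the moving direction is taken as the net displacement over the most recent IPS coordinates. The function names and these conventions are hypothetical and are not taken from the implementation evaluated here.

```python
import numpy as np


def moving_direction(positions):
    """Heading of the net displacement over recent IPS fixes (radians).

    positions: sequence of (x, y) map coordinates, oldest first.
    """
    p = np.asarray(positions, dtype=float)
    dx, dy = p[-1] - p[0]
    return np.arctan2(dy, dx)


def wrap_angle(angle):
    """Wrap an angle to the interval [-pi, pi)."""
    return (angle + np.pi) % (2.0 * np.pi) - np.pi


def align_to_moving_direction(acc_xy, device_heading, positions):
    """Rotate horizontal acceleration so +y points along the moving direction.

    acc_xy: array of shape (2,) or (N, 2) with device-frame (x, y) components.
    device_heading: direction of the device y-axis on the map (radians).
    """
    delta = wrap_angle(device_heading - moving_direction(positions))
    c, s = np.cos(delta), np.sin(delta)
    rotation = np.array([[c, -s], [s, c]])
    return np.asarray(acc_xy, dtype=float) @ rotation.T
```

For example, if the user walks along the map’s x-axis while the device’s y-axis points 90° to the left of that direction, a forward acceleration appears on the device’s x-axis, and the rotation moves it onto the calibrated y-axis, where the ±0.25 m/s² rule can be applied.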

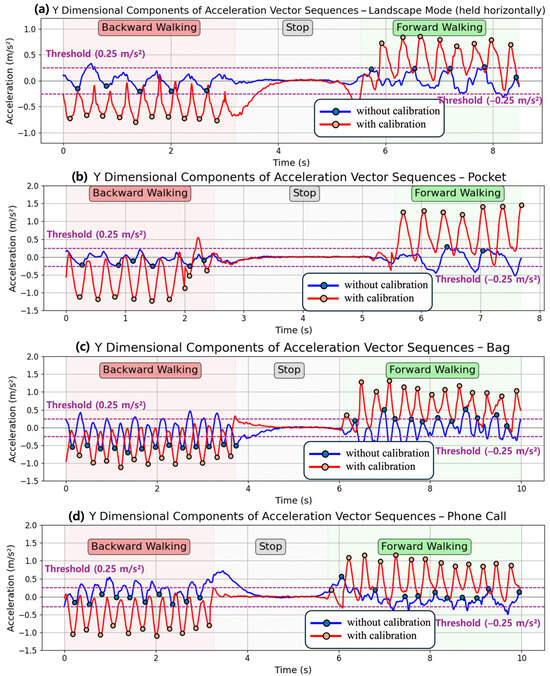

Figure 10 shows the bidirectional step detection results when the smartphone is carried in various orientations and positions: (a) held horizontally in landscape mode, (b) carried in a pants pocket, (c) carried in a backpack, and (d) held during a phone call. In contrast to the multi-user experiment shown in Figure 9, the experiment shown in Figure 10 was conducted with a single user. Unlike the case in Figure 8, when the smartphone’s y-axis is not aligned with the user’s moving direction, the acceleration vector is measured according to the device’s orientation rather than the user’s actual moving direction. The blue lines show the results without vector calibration, where the acceleration patterns vary with device orientation, making it difficult to detect steps accurately and to distinguish between forward and backward walking. In Figure 10a,b, the user took 19 and 16 steps, but only 10 and 8 peaks were detected, resulting in step detection accuracies of 52% and 50%, respectively. Moreover, in Figure 10c,d, bidirectional classification fails with rates of 52% and 81%, respectively, because peaks exceed the threshold during backward walking or remain below the threshold during forward walking.

Figure 10.

Comparison of bidirectional step detection performance when a smartphone is carried in different orientations and positions with and without vector calibration.

With vector calibration, the bidirectional step detection performance improves, as shown by the red lines in Figure 10, because the acceleration vector is aligned with the user’s moving direction. Regardless of device orientation, the calibrated acceleration data reflect the user’s actual movement rather than the device’s orientation, enabling reliable step detection and accurate forward and backward walking classification. Table 4 summarizes the performance of step detection and forward/backward step classification over 200 steps. Comparing the results with and without calibration, step detection accuracy improved from an average of 67.63% to 99.25%, and forward/backward walking classification improved from an average of 65.54% to 100%.

Table 4.

Bidirectional step detection accuracy when a smartphone is carried in various orientations and positions.

6. Conclusions

In this paper, we proposed a universal vector calibration algorithm that addresses the fundamental challenge of orientation dependency in three-dimensional sensor data. The algorithm enables consistent vector measurements for the same physical activity regardless of device orientation, overcoming the limitations of existing magnitude-based approaches and ad hoc posture assumptions.

Our calibration algorithm works in two stages. First, we correct device tilting using pitch and roll angles computed from raw 3D accelerometer vector data. Then, we calibrate yaw rotation to align with application-specific reference coordinate systems. We systematically categorize coordinate systems into relative (local, user, global) and absolute types, providing a framework for selecting appropriate references based on application requirements.

Evaluation on two 3D sensor applications demonstrates the effectiveness of our vector calibration algorithm. For geomagnetic field-based indoor positioning, the calibration algorithm achieved an 83.6% reduction in vector mismatches between sampled magnetic vectors and reference magnetic field map vectors across all three dimensions. Additionally, it reduced the average positioning error from 31.14 m to 0.66 m in the LSTM-based IPS. For bidirectional step detection, the algorithm improved step detection accuracy from 67.63% to 99.25% and forward/backward classification accuracy from 65.54% to 100% across various device orientations and carrying positions.

The proposed universal calibration algorithm provides 3D sensor applications with consistent and reliable performance regardless of how users naturally hold or carry their devices in real-world scenarios. Beyond the evaluated applications, our calibration framework can be readily applied to various three-dimensional sensor-based applications. For smartwatch gesture recognition, the algorithm enables consistent gesture detection regardless of wrist rotation or watch orientation, improving recognition accuracy across different hand positions. In XR gaming applications, our calibration allows for seamless gameplay across diverse user postures by automatically aligning sensor measurements with the user’s orientation, eliminating manual recalibration needs. For LiDAR SLAM applications, the calibration ensures consistent spatial mapping regardless of device orientation during environment scanning, resulting in more robust localization and mapping performance.

Author Contributions

Conceptualization, W.S. and L.C.; methodology, W.S.; writing—original draft preparation, W.S.; writing—review and editing, W.S. and L.C.; supervision, L.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Challengeable Future Defense Technology Research and Development Program through the Agency for Defense Development (ADD) funded by the Defense Acquisition Program Administration (DAPA) in 2024 (No.915073201).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Wang, Q.; Ye, L.; Luo, H.; Men, A.; Zhao, F.; Huang, Y. Pedestrian Dead Reckoning Based on Motion Mode Recognition Using a Smartphone. Sensors 2018, 18, 1811.

2. Roetenberg, D.; Luinge, H.; Slycke, P. Xsens MVN: Full 6DOF Human Motion Tracking Using Miniature Inertial Sensors; Technical Report 2009; Xsens Motion Technologies BV: Enschede, The Netherlands, 2009.

3. Lim, S.; Ahn, H.; Lee, C. Real-Time and Embedded Detection of Hand Gestures with an IMU-Based Glove. Informatics 2018, 5, 28.

4. Ashfaq, T.; Khalil, M.S.; Jafri, S.T.A.; Masood, S.; Malik, M.H.; Ahmed, S.F. Real-time Smartphone Activity Classification Using Inertial Sensors—Recognition of Scrolling, Typing, and Watching Videos While Sitting or Walking. Sensors 2020, 20, 655.

5. Chen, Y.-C.; Huang, S.-W.; Yuan, S.-M.; Luo, C.-H. Fall Recognition Based on an IMU Wearable Device and Fall Verification through a Smart Speaker and the IoT. Sensors 2023, 23, 5472.

6. Yang, J.; Feng, X.; Wang, C. Real-Time Motion Tracking for Mobile Augmented/Virtual Reality Using Adaptive Visual-Inertial Fusion. Sensors 2017, 17, 1263.

7. Guo, P.; Qiu, H.; Yang, Y.; Ren, Z. The soft iron and hard iron calibration method using extended Kalman filter for attitude and heading reference system. In Proceedings of the 2008 IEEE/ION Position, Location and Navigation Symposium (PLANS), Monterey, CA, USA, 28–31 May 2008; pp. 135–142.

8. Janošek, M.; Dressler, M.; Petrucha, V.; Chirtsov, A. Magnetic calibration system with interference compensation. IEEE Trans. Magn. 2018, 55, 1–4.

9. Wu, X.; Song, S.; Wang, J. Calibration-by-pivoting: A simple and accurate calibration method for magnetic tracking system. IEEE Trans. Instrum. Meas. 2022, 71, 1–9.

10. Kotsaras, A.; Spantideas, S.T.; Tsatalas, S.; Vergos, D. Automated estimation of magnetic sensor position and orientation in measurement facilities. IEEE Trans. Electromagn. Compat. 2024, 67, 20–32.

11. Son, D.; Dong, X.; Sitti, M. A simultaneous calibration method for magnetic robot localization and actuation systems. IEEE Trans. Robot. 2018, 35, 343–352.

12. Nakov, S.; Ivanov, T. A Calibration Algorithm for Microelectromechanical Systems Accelerometers in Inertial Navigation Sensors. arXiv 2013, arXiv:1309.5075.

13. Li, X.; Li, Z. Vector-aided in-field calibration method for low-end MEMS gyros in attitude and heading reference systems. IEEE Trans. Instrum. Meas. 2014, 63, 2675–2681.

14. Yurtman, A.; Barshan, B. Activity Recognition Invariant to Sensor Orientation with Wearable Motion Sensors. Sensors 2017, 17, 1838.

15. Anguita, D.; Ghio, A.; Oneto, L.; Parra, X.; Reyes-Ortiz, J.L. A Public Domain Dataset for Human Activity Recognition Using Smartphones. In Proceedings of the 21st European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning, Bruges, Belgium, 24–26 April 2013.

16. Jang, H.J.; Shin, J.M.; Choi, L. Geomagnetic Field Based Indoor Localization Using Recurrent Neural Networks. In Proceedings of the GLOBECOM 2017–2017 IEEE Global Communications Conference, Singapore, 4–8 December 2017; pp. 1–6.

17. Bae, H.; Choi, L. Large-Scale Indoor Positioning using Geomagnetic Field with Deep Neural Networks. In Proceedings of the ICC 2019–2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–6.

18. Android Open-Source Project. Sensor Types-Android Open-Source Project. Available online: https://source.android.com/docs/core/interaction/sensors/sensor-types (accessed on 25 June 2025).

19. Groves, P.D. Principles of GNSS, Inertial, and Multisensor Integrated Navigation Systems, 2nd ed.; Artech House: Boston, MA, USA, 2013.

20. Kwon, M.-C.; Choi, S. Recognition of Wrist Gestures for Controlling Smartwatch Applications. In Proceedings of the 2016 International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Republic of Korea, 19–21 October 2016; pp. 1213–1215.

21. LaValle, S.M. Virtual Reality; Cambridge University Press: Cambridge, UK, 2016.

22. Durrant-Whyte, H.; Bailey, T. Simultaneous localization and mapping: Part I. IEEE Robot. Autom. Mag. 2006, 13, 99–110.

23. Setyawan, I.B.; Huda, A.K.; Nashrullah, F.H.; Kurniawan, I.D.; Frans, S.I.; Hendry, J. Noise Removal in The IMU Sensor Using Exponential Moving Average with Parameter Selection in Remotely Operated Vehicle (ROV). In Proceedings of the 2022 8th International Conference on Science and Technology (ICST), Yogyakarta, Indonesia, 7–8 September 2022; Volume 1, pp. 1–5.

24. Engelberg, S. Implementing Moving Average Filters Using Recursion [Tips & Tricks]. IEEE Signal Process. Mag. 2023, 40, 78–80.

25. Santos, H.I.A.; Beserra, G.S. Design and Implementation of a Simple Moving Average Filter for a UWB/UHF Hybrid RFID Tag. In Proceedings of the Microelectronics Student Forum 2023, Brasília, Brazil, 28 August 2023–1 September 2023; pp. 1–4.

26. Valachovic, E. An Extension of the Iterated Moving Average. arXiv 2025, arXiv:2502.00128.

27. Son, W.; Choi, L. Vector Calibration for Magnetic Field Based Indoor Localization. In Proceedings of the 2019 IEEE International Conference on Indoor Positioning and Indoor Navigation (IPIN), Pisa, Italy, 30 September–3 October 2019.

28. Camp, T.; Boleng, J.; Davies, V. A survey of mobility models for ad hoc network research. Wirel. Commun. Mob. Comput. 2002, 2, 483–502.

29. Weinberg, H. Using the ADXL202 in Pedometer and Personal Navigation Applications. In Analog Devices AN-602 Application Note; Analog Devices, Inc.: Wilmington, MA, USA, 2002.

30. Fortune, E.; Lugade, V.; Morrow, M.; Kaufman, K. Validity of using tri-axial accelerometers to measure human movement-Part II: Step counts at a wide range of gait velocities. Med. Eng. Phys. 2014, 36, 659–669.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).