A Joint LiDAR and Camera Calibration Algorithm Based on an Original 3D Calibration Plate

Abstract

1. Introduction

- A novel 3D calibration plate is designed, integrating depth gradients, localization markers, and corner features;

- A complete extrinsic calibration framework is developed, enabling accurate feature point extraction across modalities;

- A Spatial Clustering Algorithm for LiDAR based on the slope and distance (SCAL-SD) is proposed, tailored to the characteristics of LiDAR data.

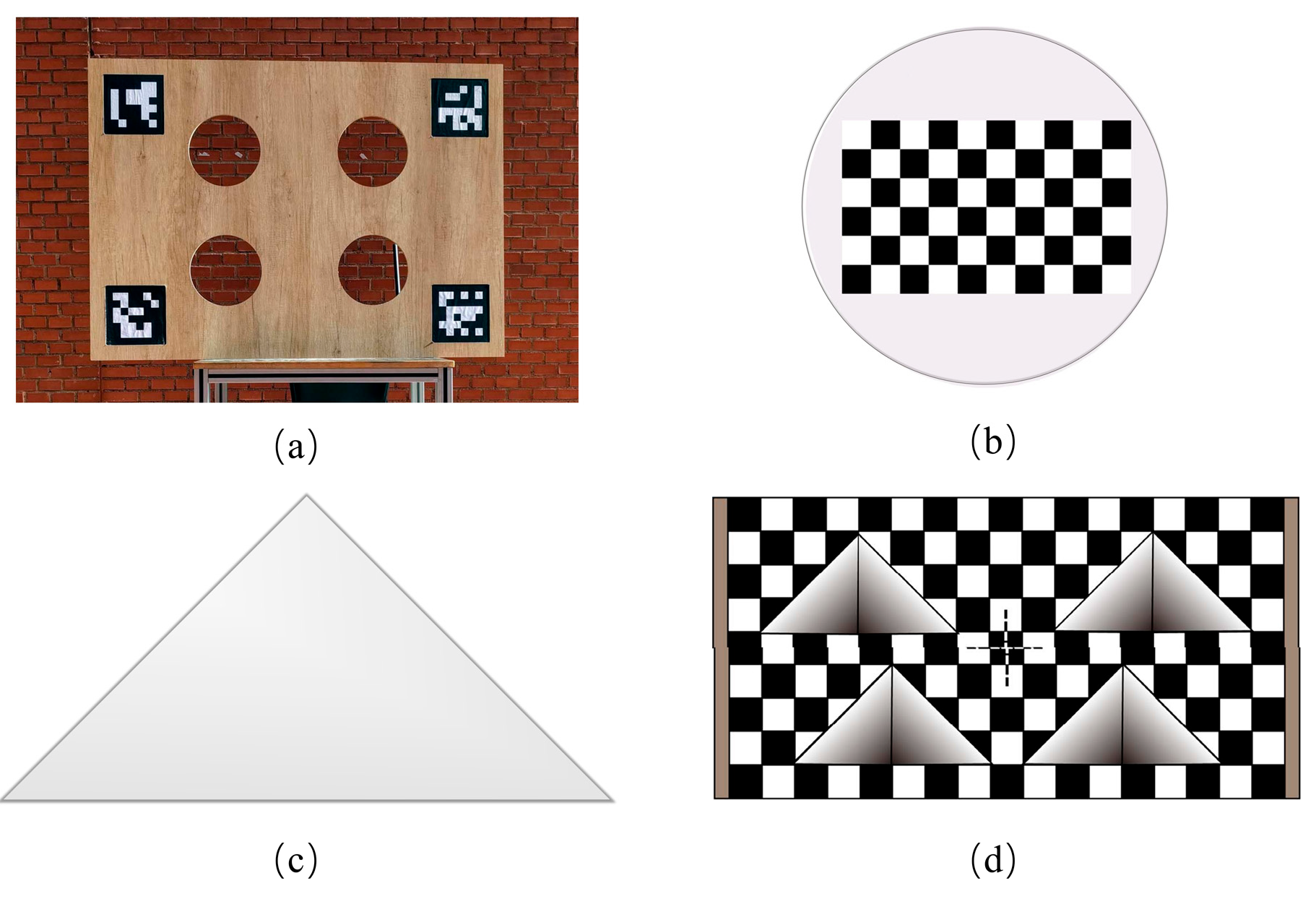

2. Related Work

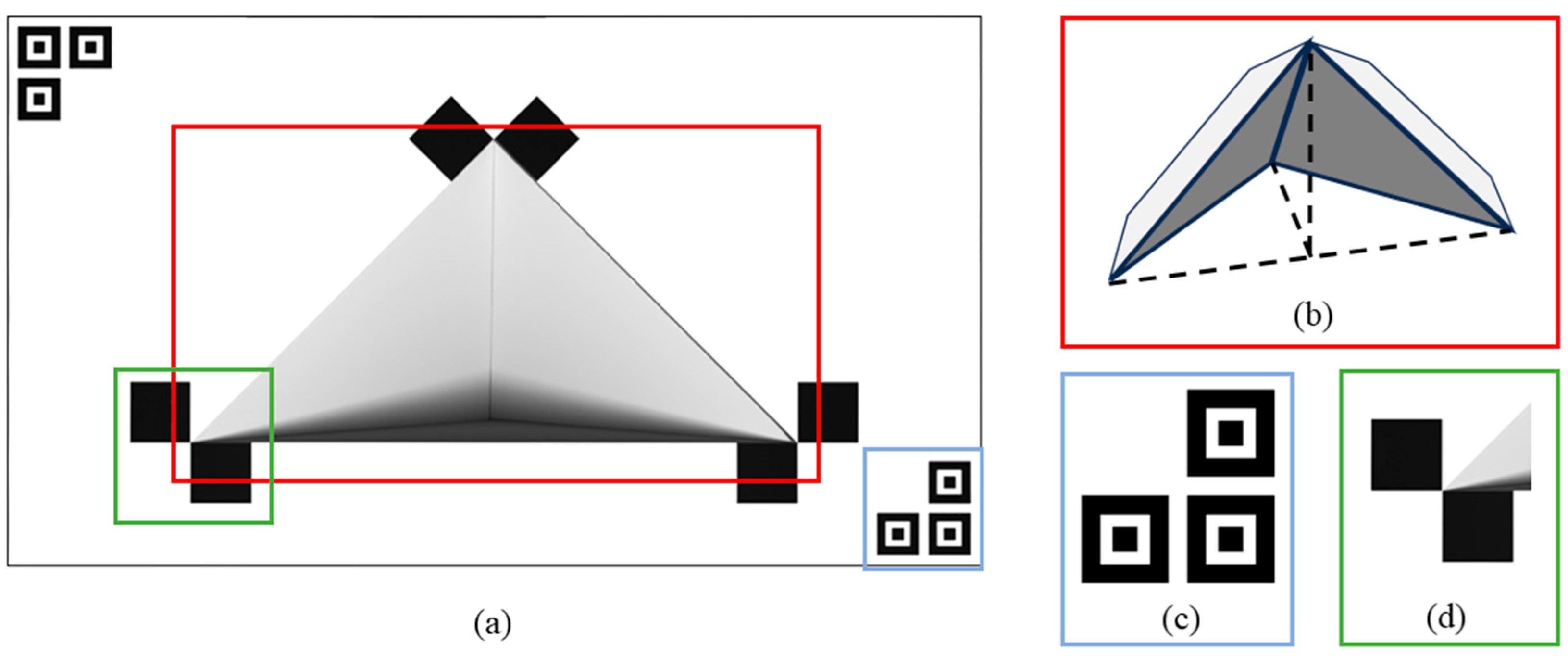

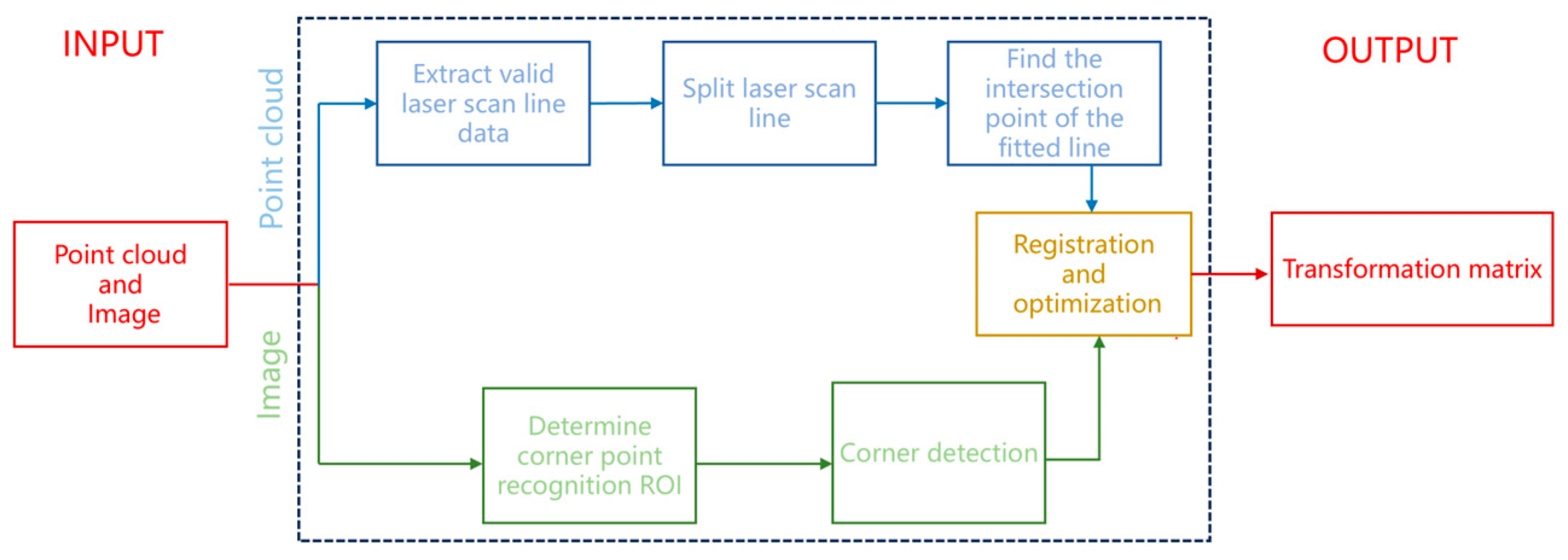

3. Methodology

- At the point cloud level, extract the 3D coordinates of the calibration plate's triangular cavity vertices.

- At the image level, identify the corresponding 2D coordinates of the same vertices.

- Perform 2D–3D feature matching and alignment.

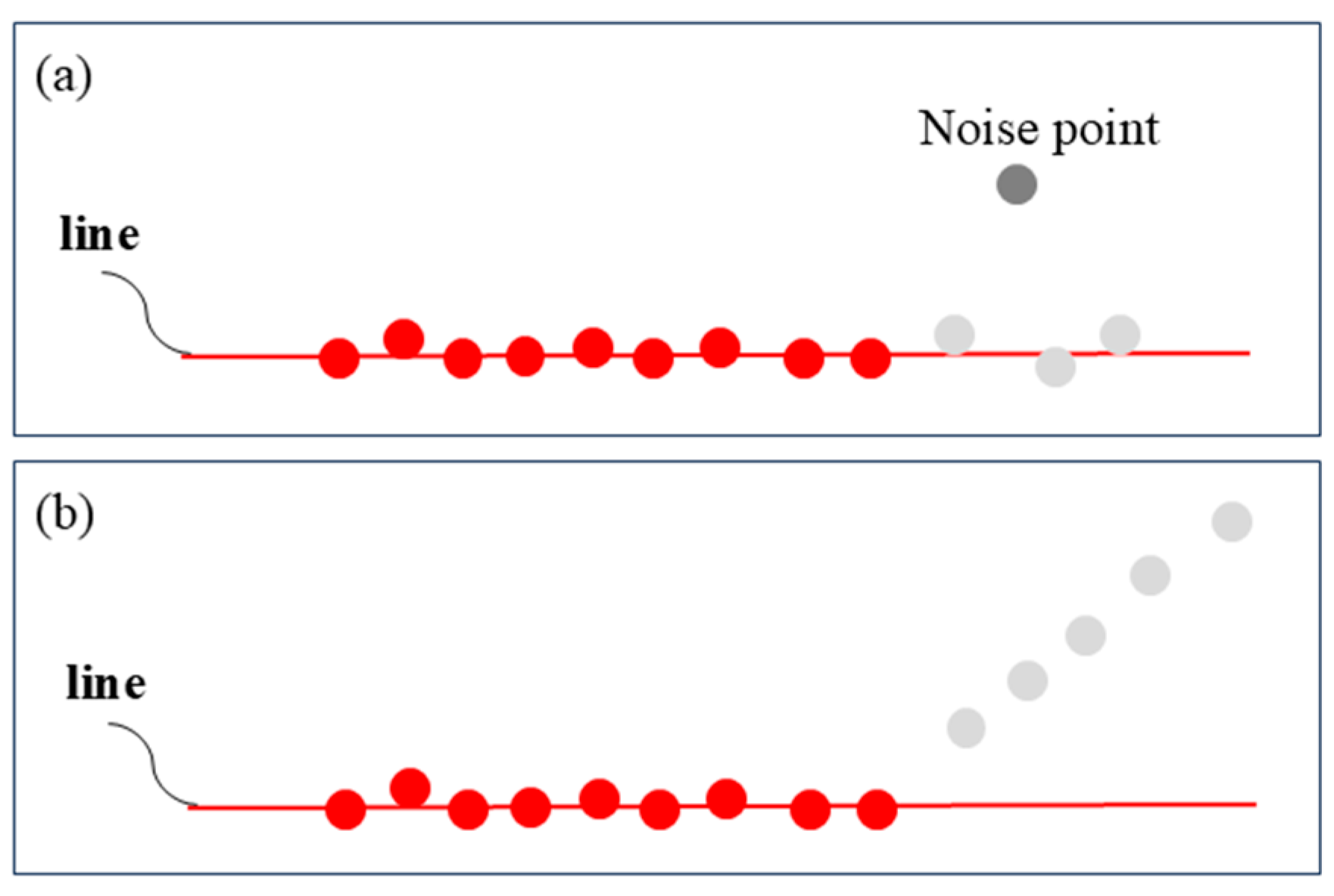

3.1. Extraction of 3D Coordinates from Point Clouds

- Apply the SCAL-SD algorithm to cluster the point cloud.

- Filter and extract relevant line segments to identify contour points.

- Use known cavity dimensions to compute the 3D coordinates of the cavity vertices.
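The clustering step can be illustrated with a minimal sketch. The details of SCAL-SD are not reproduced here, so the code below is only an assumption about how a slope-and-distance criterion might be applied to one ordered LiDAR scan line: a new cluster starts when the gap to the previous point exceeds a distance threshold, or when the segment direction turns by more than an angle threshold. The function name `cluster_scan_line` and the parameters `max_gap` and `max_angle` are hypothetical.

```python
import math


def cluster_scan_line(points, max_gap=0.05, max_angle=math.radians(20)):
    """Split an ordered scan line into clusters (illustrative sketch only).

    points: list of (x, y, z) tuples ordered along the scan.
    max_gap: hypothetical distance threshold in metres.
    max_angle: hypothetical threshold on the change of segment direction, in radians.
    """
    if not points:
        return []
    clusters = [[points[0]]]
    prev_dir = None  # unit direction of the previous segment in the current cluster
    for prev, cur in zip(points, points[1:]):
        seg = tuple(c - p for p, c in zip(prev, cur))
        length = math.sqrt(sum(s * s for s in seg))
        if length == 0.0:
            # Duplicate point: keep it in the current cluster.
            clusters[-1].append(cur)
            continue
        direction = tuple(s / length for s in seg)
        # Distance criterion: a large gap suggests a depth jump (e.g. a cavity edge).
        split = length > max_gap
        # Slope criterion: a sharp turn suggests a different surface.
        if not split and prev_dir is not None:
            cos_a = sum(a * b for a, b in zip(prev_dir, direction))
            cos_a = max(-1.0, min(1.0, cos_a))
            split = math.acos(cos_a) > max_angle
        if split:
            clusters.append([cur])
            prev_dir = None
        else:
            clusters[-1].append(cur)
            prev_dir = direction
    return clusters
```

With a scan line that crosses a cavity, the points on the plate surface and the points at the deeper cavity surface fall into separate clusters, and the cluster boundaries then serve as candidate contour points. Real thresholds would depend on the sensor's angular resolution and range noise.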

| Algorithm 1: Algorithm for Feature Points Extraction from Point Cloud using SCAL-SD |
|---|
| Input: original 3D point cloud |
| Output: 3D coordinates of feature points |

3.2. Capture 2D Coordinates of Feature Points

3.3. 2D–3D Feature Point Matching and Alignment
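Once 3D cavity vertices and their 2D image counterparts are paired, a candidate extrinsic estimate can be checked by projecting the 3D points into the image and measuring the pixel residual. The sketch below assumes an ideal pinhole camera without lens distortion; the function names `project` and `mean_reprojection_error`, and the layout of `R`, `t`, and `K` as nested lists, are illustrative choices rather than part of the paper.

```python
import math


def project(point, R, t, K):
    """Project a LiDAR-frame 3D point to pixel coordinates.

    R (3x3) and t (3,) are the assumed LiDAR-to-camera extrinsics,
    K (3x3) is the camera intrinsic matrix. Lens distortion is ignored.
    """
    # Transform into the camera frame: X_c = R * X_l + t
    xc = [sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0:
        raise ValueError("point is behind the camera")
    fx, fy, cx, cy = K[0][0], K[1][1], K[0][2], K[1][2]
    return (fx * xc[0] / xc[2] + cx, fy * xc[1] / xc[2] + cy)


def mean_reprojection_error(points_3d, pixels, R, t, K):
    """Mean Euclidean pixel distance between projected 3D points and their 2D matches."""
    errors = []
    for p, (u, v) in zip(points_3d, pixels):
        pu, pv = project(p, R, t, K)
        errors.append(math.hypot(pu - u, pv - v))
    return sum(errors) / len(errors)
```

A low mean reprojection error over several plate positions suggests that the 2D–3D correspondences and the estimated extrinsics agree; a large value usually points to a mismatched correspondence or a poor initial estimate.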

4. Simulation and Experiment

4.1. Simulation Experiments

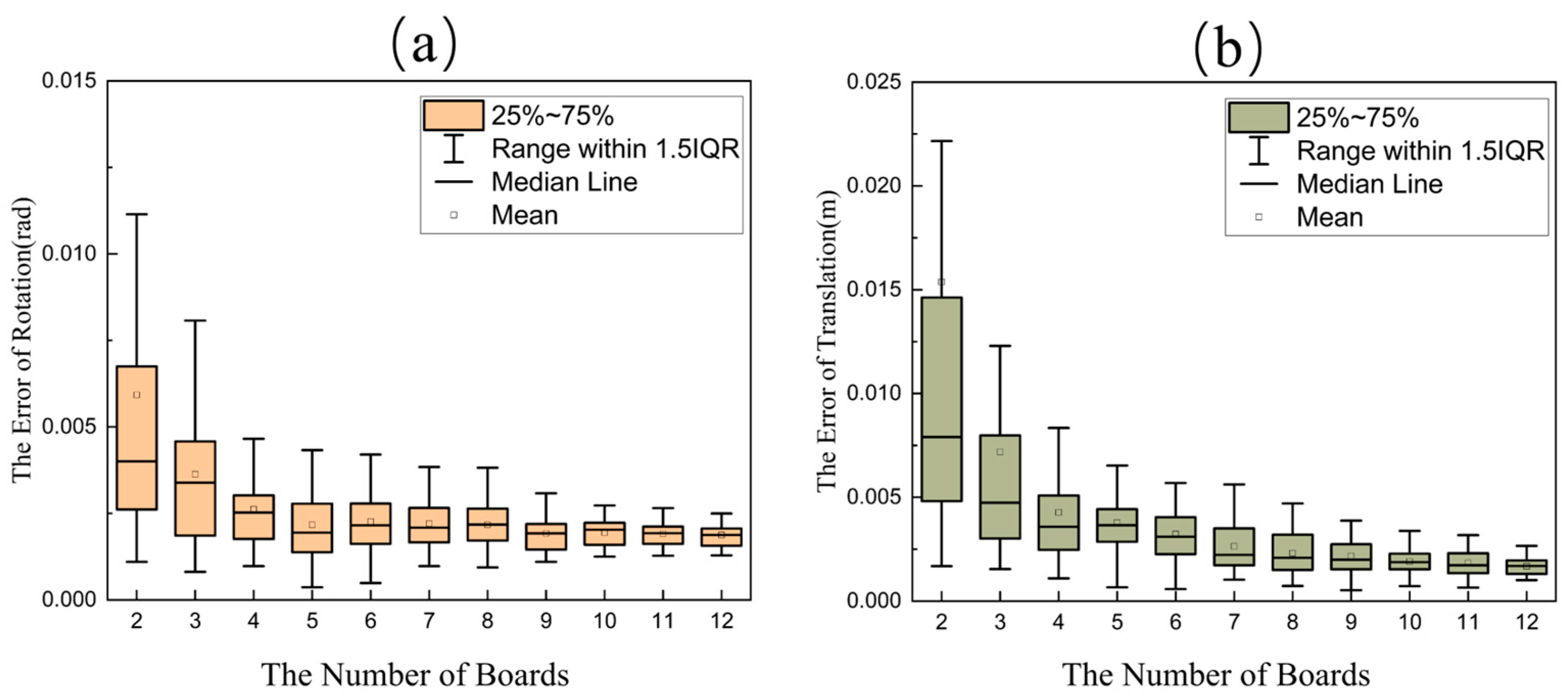

4.1.1. The Influence of the Number of Calibration Plate Positions

4.1.2. Impact of Noise

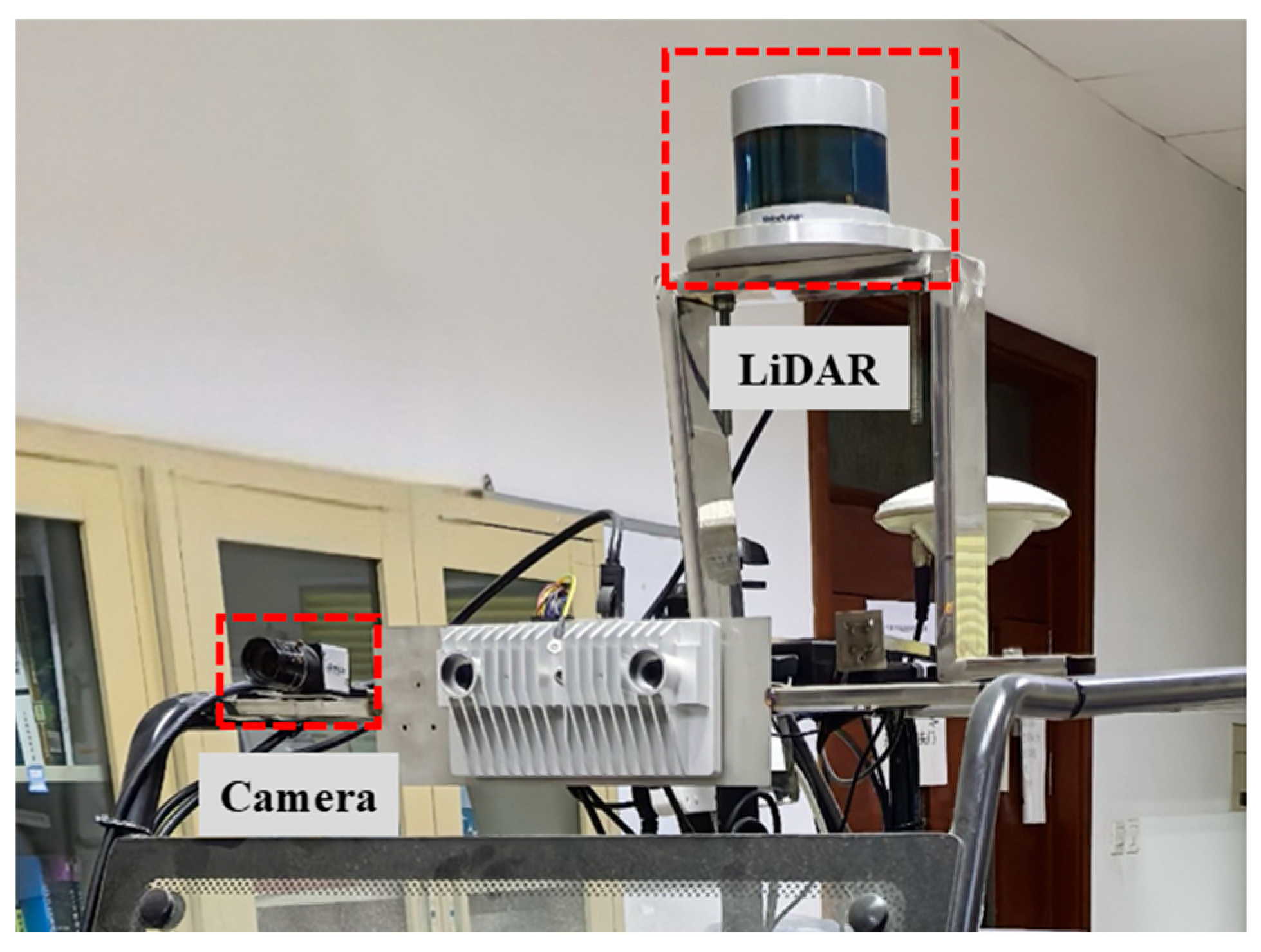

4.2. Experiments Based on Real Scenarios

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Tang, S.; Feng, Y.; Huang, J.; Li, X.; Lv, Z.; Feng, Y.; Wang, W. Robust Calibration of Vehicle Solid-State Lidar-Camera Perception System Using Line-Weighted Correspondences in Natural Environments. IEEE Trans. Intell. Transp. Syst. 2024, 25, 4489–4502.

- Lee, C.-L.; Hou, C.-Y.; Wang, C.-C.; Lin, W.-C. Extrinsic and Temporal Calibration of Automotive Radar and 3-D LiDAR in Factory and On-Road Calibration Settings. IEEE Open J. Intell. Transp. Syst. 2023, 4, 708–719.

- Basso, F.; Menegatti, E.; Pretto, A. Robust intrinsic and extrinsic calibration of RGB-D cameras. IEEE Trans. Robot. 2018, 34, 1315–1332.

- Wallace, A.M.; Halimi, A.; Buller, G.S. Full waveform LiDAR for adverse weather conditions. IEEE Trans. Veh. Technol. 2020, 69, 7064–7077.

- Cai, H.; Pang, W.; Chen, X.; Wang, Y.; Liang, H. A novel calibration board and experiments for 3D LiDAR and camera calibration. Sensors 2020, 20, 1130.

- Iyer, G.; Ram, R.K.; Murthy, J.K.; Krishna, K.M. CalibNet: Geometrically supervised extrinsic calibration using 3D spatial transformer networks. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1110–1117.

- Xie, S.; Yang, D.; Jiang, K.; Zhong, Y. Pixels and 3-D points alignment method for the fusion of camera and LiDAR data. IEEE Trans. Instrum. Meas. 2018, 68, 3661–3676.

- Zhang, Q.; Pless, R. Extrinsic calibration of a camera and laser range finder (improves camera calibration). In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE Cat. No. 04CH37566), Sendai, Japan, 28 September–2 October 2004; pp. 2301–2306.

- Zhou, L.; Li, Z.; Kaess, M. Automatic extrinsic calibration of a camera and a 3d lidar using line and plane correspondences. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 5562–5569.

- Duan, J.; Huang, Y.; Wang, Y.; Ye, X.; Yang, H. Multipath-Closure Calibration of Stereo Camera and 3D LiDAR Combined with Multiple Constraints. Remote Sens. 2024, 16, 258.

- Beltrán, J.; Guindel, C.; De La Escalera, A.; García, F. Automatic extrinsic calibration method for lidar and camera sensor setups. IEEE Trans. Intell. Transp. Syst. 2022, 23, 17677–17689.

- Liu, H.; Xu, Q.; Huang, Y.; Ding, Y.; Xiao, J. A method for synchronous automated extrinsic calibration of LiDAR and cameras based on a circular calibration board. IEEE Sens. J. 2023, 23, 25026–25035.

- Park, Y.; Yun, S.; Won, C.S.; Cho, K.; Um, K.; Sim, S. Calibration between color camera and 3D LIDAR instruments with a polygonal planar board. Sensors 2014, 14, 5333–5353.

- Falcao, G.; Hurtos, N.; Massich, J. Plane-based calibration of a projector-camera system. VIBOT Master 2008, 9, 1–12.

- Weng, J.; Cohen, P.; Herniou, M. Camera calibration with distortion models and accuracy evaluation. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 965–980.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Pandey, G.; McBride, J.R.; Savarese, S.; Eustice, R.M. Automatic extrinsic calibration of vision and lidar by maximizing mutual information. J. Field Robot. 2015, 32, 696–722.

- Bai, Z.; Jiang, G.; Xu, A. LiDAR-camera calibration using line correspondences. Sensors 2020, 20, 6319.

- Ma, T.; Liu, Z.; Yan, G.; Li, Y. CRLF: Automatic calibration and refinement based on line feature for LiDAR and camera in road scenes. arXiv 2021, arXiv:2103.04558.

- Lin, P.-T.; Huang, Y.-S.; Lin, W.-C.; Wang, C.-C.; Lin, H.-Y. Online LiDAR-Camera Extrinsic Calibration Using Selected Semantic Features. IEEE Open J. Intell. Transp. Syst. 2025, 6, 456–464.

- Feng, M.; Hu, S.; Ang, M.H.; Lee, G.H. 2d3d-matchnet: Learning to match keypoints across 2d image and 3d point cloud. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 4790–4796.

- Lv, X.; Wang, B.; Dou, Z.; Ye, D.; Wang, S. LCCNet: LiDAR and camera self-calibration using cost volume network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 2894–2901.

- Zhou, J.; Ma, B.; Zhang, W.; Fang, Y.; Liu, Y.S.; Han, Z. Differentiable registration of images and lidar point clouds with voxelpoint-to-pixel matching. Adv. Neural Inf. Process. Syst. 2023, 36, 51166–51177.

- Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Corfu, Greece, 20–27 September 1999; pp. 1150–1157.

- Zhong, Y. Intrinsic shape signatures: A shape descriptor for 3D object recognition. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision Workshops, ICCV Workshops, Kyoto, Japan, 27 September–4 October 2009; pp. 689–696.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Fu, L.F.T.; Chebrolu, N.; Fallon, M. Extrinsic calibration of camera to lidar using a differentiable checkerboard model. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Abu Dhabi, United Arab Emirates, 1–5 October 2023; pp. 1825–1831.

- Unnikrishnan, R.; Hebert, M. Fast Extrinsic Calibration of a Laser Rangefinder to a Camera; Technical Report, CMU-RI-TR-05-09; Robotics Institute Carnegie Mellon University: Pittsburgh, PA, USA, 2005.

- Geiger, A.; Moosmann, F.; Car, Ö.; Schuster, B. Automatic camera and range sensor calibration using a single shot. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, St. Paul, MN, USA, 14–18 May 2012; pp. 3936–3943.

- Ester, M.; Kriegel, H.-P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proceedings of the KDD, Portland, Oregon, 2–4 August 1996; pp. 226–231.

- Bennett, S.; Lasenby, J. ChESS–Quick and robust detection of chess-board features. Comput. Vis. Image Underst. 2014, 118, 197–210.

- Zheng, Y.; Kuang, Y.; Sugimoto, S.; Astrom, K.; Okutomi, M. Revisiting the pnp problem: A fast, general and optimal solution. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2344–2351.

| Gaussian Noise (m) | Zhou et al. [23] | Park et al. [13] | Ours |
|---|---|---|---|
| 0.005 | 0.0032 rad/0.0084 m | 0.0026 rad/0.0059 m | 0.0018 rad/0.0010 m |
| 0.010 | 0.0040 rad/0.0139 m | 0.0029 rad/0.0078 m | 0.0018 rad/0.0029 m |
| 0.015 | 0.0045 rad/0.0210 m | 0.0030 rad/0.0103 m | 0.0020 rad/0.0055 m |
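Each cell in the table pairs an angular value in radians with a linear value in metres. One common way to obtain such numbers in a simulation is to perturb the point cloud with zero-mean Gaussian noise, re-estimate the extrinsics, and compare them with the ground truth. The sketch below shows that kind of evaluation; whether the reported values were computed exactly this way is an assumption, as is treating the noise level as a per-axis standard deviation. All function names are hypothetical.

```python
import math
import random


def add_gaussian_noise(points, sigma, rng=None):
    """Return a copy of the points with zero-mean Gaussian noise (std sigma, metres) per axis."""
    rng = rng or random.Random(0)
    return [tuple(c + rng.gauss(0.0, sigma) for c in p) for p in points]


def rotation_error_rad(R_est, R_gt):
    """Geodesic angle between two rotation matrices, in radians."""
    # trace(R_est^T R_gt) equals the element-wise (Frobenius) inner product.
    trace = sum(R_est[i][j] * R_gt[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.acos(cos_angle)


def translation_error_m(t_est, t_gt):
    """Euclidean distance between two translation vectors, in metres."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(t_est, t_gt)))


def rot_z(theta):
    """Rotation about the z axis by theta radians (helper for the example)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
```

For example, `rotation_error_rad(rot_z(0.01), rot_z(0.0))` gives approximately 0.01 rad, and `translation_error_m((0.003, 0.0, 0.004), (0.0, 0.0, 0.0))` gives approximately 0.005 m.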

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cui, Z.; Wang, Y.; Chen, X.; Cai, H. A Joint LiDAR and Camera Calibration Algorithm Based on an Original 3D Calibration Plate. Sensors 2025, 25, 4558. https://doi.org/10.3390/s25154558
