Abstract

Autonomous Underwater Vehicles (AUVs) are gradually becoming some of the most important equipment in deep-sea exploration. However, in the dynamic nature of the deep-sea environment, any unplanned fault of AUVs would cause serious accidents. Traditional fault diagnosis models are trained in static and fixed datasets, making them difficult to adopt in new and unknown deep-sea environments. To address these issues, this study explores incremental learning to enable AUVs to continuously adapt to new fault scenarios while preserving previously learned diagnostic knowledge, named the RM-MFKAN model. First, the approach begins by employing the Rainbow Memory (RM) framework to analyze data characteristics and temporal sequences, thereby delineating boundaries between old and new tasks. Second, the model evaluates data importance to select and store key samples encapsulating critical information from prior tasks. Third, the RM is combined with the enhanced KAN network, and the stored samples are then combined with new task training data and fed into a multi-branch feature fusion neural network. The proposed RM-MFKAN model was conducted on the “Haizhe” dataset, and the experimental results have demonstrated that the proposed model achieves superior performance in fault diagnosis for AUVs.

1. Introduction

Concerning strategies in marine resource exploration and environmental surveillance, Autonomous Underwater Vehicles (AUVs) have risen as an indispensable tool for oceanic discovery and development [1]. Despite their great value, these vehicles usually operate in highly complex marine environments where extreme factors like hydrostatic pressure, thermal gradients, and salinity variations can induce performance degradation or catastrophic failures. Such operational disruptions not only compromise mission reliability and efficiency but also entail significant economic liabilities and safety risks [2]. Consequently, the development of intelligent fault diagnosis systems for AUVs has emerged as a challenge in marine engineering, with both academic and long-term engineering significance [3].

In recent years, deep learning has demonstrated considerable success in fault diagnosis for complex electromechanical systems [4]. While conventional fault diagnosis paradigms predominantly rely on static models trained with fixed datasets, their efficacy diminishes in dynamically evolving operational envelopes [5]. As AUVs undertake increasingly complex missions in time-varying marine environments, new fault modes continuously emerge. When confronted with new failure signatures, traditional diagnostic frameworks necessitate labor-intensive data re-acquisition, model retraining, and hyperparameter recalibration [6]. These iterative procedures incur prohibitive computational costs and prolonged update cycles, rendering them unsuitable for real-time fault diagnosis in deep-sea exploration scenarios.

In this context, incremental learning has emerged as a paradigm-shifting methodology to address these challenges [7]. This adaptive learning mechanism enables continuous knowledge acquisition from new data while retaining previously acquired intelligence. Through incrementally updating model parameters, it achieves real-time state estimation and self-optimization, effectively countering environmental dynamics and performance drift in deep-sea deployments. Furthermore, its localized update architecture minimizes computational overhead, rendering it particularly advantageous for resource-constrained AUV platforms. Previous studies on incremental learning have shown encouraging progress. Schaul et al. [8] proposed an improved experience replay approach that better accounts for the importance of different training samples. They proposed an experience prioritization framework that more frequently replays critical transitions, thereby enhancing learning efficiency. Cohen et al. [9] proposed a matrix approximation-based sampling strategy preserving data sparsity patterns, which excels in uniform data distributions but struggles with sparse reward environments. In contrast, Rainbow Memory addresses these limitations by efficiently selecting the most representative replay samples that can significantly improve the sample utilization efficiency and can offer a superior solution for incremental learning’s sample replay mechanism.

To address the challenges of high retraining costs and prolonged model update cycles caused by dynamic marine environmental changes in AUVs, this study proposes an RM-MFKAN model that combines Rainbow Memory (RM)-based incremental learning with a modified Kolmogorov–Arnold Network. The model leverages the advantages of incremental learning to achieve efficient and accurate fault diagnosis for AUVs, providing an innovative and practical solution for intelligent fault detection. The key contributions are as follows.

- (1)

- A new application of Rainbow Memory-augmented incremental learning to AUV fault diagnosis, enabling continuous knowledge consolidation through strategically reintroduced historical samples while assimilating new operational data and mitigating catastrophic forgetting.

- (2)

- A new two-dimensional convolutional feature fusion network is developed to extract both global fault characteristics and local correlation features from AUV multi-sensor data, enabling effective feature-level integration.

- (3)

- The integration of the MFKAN model as the diagnostic backbone, which has been validated on the ‘Haizhe’ AUV benchmark dataset. The results demonstrate its superior multi-modal feature fusion capabilities compared to baseline architectures.

2. Related Work

2.1. AUV Fault Diagnosis

Recent advances in deep learning have led to significant improvements in intelligent fault diagnosis methods for AUVs [6]. To address the complexity of state signals in deep-sea environments, Wu et al. [10] developed a multi-channel fully convolutional neural network-based fault diagnosis algorithm. By integrating multi-sensor signals to establish state-fault mapping relationships, their approach maintains a high accuracy of 91.4% even under missing data conditions. Xia et al. [11] innovatively combined bi-directional Gated Recurrent Units (GRUs) with spatial attention mechanisms for AUV fault diagnosis. Their method employs data attention for dynamic decorrelation and GRUs for temporal feature extraction, achieving exceptional detection performance on field test data from the “Qianlong-2” AUV of the South China Sea. Pei et al. [12] proposed a new NAS-based framework for AUV fault diagnosis that achieves breakthrough performance with a second-level architecture search on a single GPU. For handling the complexity and time-varying nature of large-scale multivariate time series (MTS) data, Xia et al. [13] developed a Hybrid Feature Adaptive Fusion Network (HFAF). This approach utilizes D-CNN and D-RNN to extract spatiotemporal information while employing attention mechanisms to eliminate redundancy across multi-scale features. In response to small-sample scenarios in AUV datasets, Gao et al. [14] integrated physical models with Generative Adversarial Network (GAN), incorporating physical constraints during GAN training to achieve 98.4% diagnostic accuracy with limited samples. Xu et al. [15] investigated an evidential reasoning method that can combine the multi-source fault feature information extracted from vibration signals to effectively reduce the uncertainty in diagnosis. Liu et al. [16] studied a multi-sensor cross-domain fault diagnosis method that can significantly improve the robustness of the fault detection to variations of operating environments. However, while these studies have achieved good results on the fault diagnosis of marine equipment, when the deep-sea environment changes, the data distribution of samples could change, leading to re-training in these situations.

2.2. Incremental Learning-Based Fault Diagnosis

Incremental learning has emerged as a prominent research focus in machine learning due to its demonstrated efficacy in mitigating catastrophic forgetting [17]. Recent studies have developed innovative solutions to address this challenge in deep learning-based multi-task fault diagnosis scenarios. Yin et al. [18] pioneered an approach combining Elastic Weight Consolidation (EWC) with Deep Residual Shrinkage Networks (DRSNs), establishing an effective framework for pressure relief valve fault diagnosis. Xia et al. [19] advanced the field through their Multi-Scale Knowledge Distillation with Label Smoothing system for incremental rotating machinery fault diagnosis, which employs knowledge distillation for model knowledge preservation and label smoothing to reduce model overconfidence, demonstrating robust performance in complex mechanical systems with three critical components.

Wang et al. [20] employed knowledge distillation to retain learned knowledge while employing shared classifiers across various operational scenarios to create a unified diagnostic architecture, achieving exceptional performance in rolling bearing fault diagnosis. Xu et al. [21] proposed the Causality-guided Broad Learning Model, which incorporates global and multi-scale local causal features. Model updates via weight expansion/modification enable effective incremental learning across two bearing datasets. Chen et al. [22] developed a Lifelong Learning Diagnosis Method that features a dual-branch aggregated residual network combined with sample retention. This method successfully overcomes catastrophic forgetting in bearing fault diagnosis with incremental fault types. Fu et al. [23] proposed a Dynamic Branch Layer-based Incremental Learning method. This method preserves historical knowledge using dedicated branch layers for each task and addresses model growth via innovative fusion structures. Although these studies can perform well in the incremental learning field by using knowledge distillation and dynamic architectural design methods, they may not be proficient in systematic preservation and the utilization of historically significant diagnostic information.

Replay-based incremental learning methods have demonstrated remarkable success across computer vision and natural language processing domains by strategically storing and replaying critical samples or features from historical data, thereby effectively mitigating catastrophic forgetting during new task acquisition. This paradigm has recently gained substantial traction in fault diagnosis applications, with several notable methodological advances. Hayes et al. [24] developed the REMIND framework, which introduced two key innovations: feature representation matching and an advanced replay mechanism. Their approach employs product quantization to compress and store feature representations from previous tasks, subsequently replaying these compressed features during new task learning to reinforce prior knowledge. Douillard et al. [25] proposed PODNet, incorporating spatial distillation loss and multi-agent vector representations to maintain an optimal balance between new task learning and old-task retention. This architecture demonstrates particular efficacy in long-term, small-scale incremental learning scenarios, significantly reducing forgetting rates. In mechanical fault diagnosis, Chen et al. [26] introduced an Incremental Fault Diagnosis with Bias Correction (IFD-BiC) method. Their solution combines distillation loss with cross-entropy optimization while employing both sample replay and a dedicated bias correction layer to address class imbalance between new and old categories, achieving superior diagnostic accuracy in diesel engine applications. For bearing fault diagnosis, Fu et al. [27] implemented a Maximum Dissimilarity Sampling (MDS) strategy that iteratively selects the most representative samples in feature space, ensuring comprehensive coverage of class distributions. Shen et al. [28] addressed catastrophic forgetting in imbalanced rotating machinery datasets through dynamic branch layer fusion, where dedicated branch layers preserve old task knowledge while fusion structures accommodate model expansion. Yan et al. [29] advanced wavelet transform-based system-level fault diagnosis through Forward and Backward Compatible representation Incremental Learning (FSCIL). Their method distills representative diagnostic knowledge into a reverse memory bank, providing robust protection against catastrophic forgetting. Based on the merit of replay-based incremental learning methods, this work develops a new RM-MFKAN model that can continuously adapt to new scenarios while preserving previously learned diagnostic knowledge.

3. Methodology

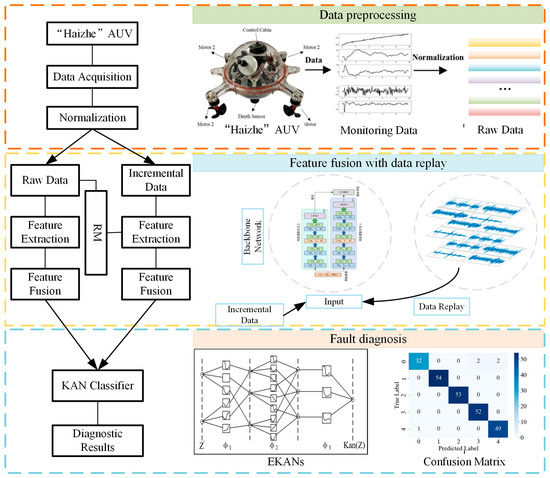

This research presents the RM-MFKAN model, an innovative framework that synergistically combines Rainbow Memory (RM)-based incremental learning with an enhanced Kolmogorov–Arnold Network (KAN) architecture. As illustrated in Figure 1, the model employs Rainbow Memory to analyze data characteristics and temporal patterns, which can establish the precise boundaries between old and new tasks through advanced feature-space partitioning. Following task reintroduction, the proposed RM-MFKAN implements a hierarchical selection process that evaluates samples based on informational significance, feature-space representativeness, and temporal relevance to construct an optimal memory bank of critical historical examples. Then, these preserved samples are integrated with new task data through a multi-branch convolutional network featuring parallel spatial–temporal feature extractors and cross-branch attention mechanisms, enabling comprehensive feature fusion across multiple abstraction levels. Third, the enhanced KAN component subsequently performs fault diagnosis via adaptive basis function optimization and multi-scale correlation analysis. By maintaining historical feature representations during new task assimilation through this sophisticated architecture, the model can effectively eliminate catastrophic forgetting while demonstrating enhanced diagnostic robustness in dynamic operational environments, particularly when handling sequential fault patterns in complex systems. The framework’s ability to balance knowledge retention with adaptive learning represents a significant advance in incremental fault diagnosis methodologies.

Figure 1.

The structure of AUV fault diagnosis using RM-MFKAN model.

3.1. Rainbow Memory Module

To ensure that stored memory samples exhibit both intra-class representativeness and inter-class discriminability, we propose a feature-space sampling strategy [30]. Samples near classification boundaries typically possess high discriminative power, while those near class distribution centers offer greater representativeness. Our method reconciles these dual requirements through strategic feature-space sampling. A key challenge lies in maintaining sample diversity.

To overcome this issue, we developed an effective computational alternative scheme, using the uncertainty of the classification model as a proxy for the estimation of relative positions in the discriminant feature space. The core premise builds upon the empirical observation that classification models demonstrate higher confidence for representative samples located near class centroids. Operationally, we quantify sample uncertainty through a perturbation-based analysis framework. For each sample, we generate augmented variants via stochastic transformations (color jittering, random cropping, and occlusion) and measure output variance across these perturbations. Following Gal et al. [31], we formalize this through Monte Carlo (MC) approximation of the posterior distribution under a defined perturbation prior. x denotes the original sample, denotes perturbed variants, y denotes the label, and A denotes the number of augmentations. The perturbation prior is modeled as a uniform mixture over transformation parameters in Equation (2), where represents hyperparameters for the r-th perturbation. The sample uncertainty with respect to perturbations is quantified from the approximate distribution as formalized in Equations (4) and (5). In these expressions, represents the uncertainty measure for sample x, and is the indicator function. T is the sample number of the class C. Lower values indicate samples residing in high-confidence regions of the model’s feature space.

Building upon the study by Bang et al. [32], we implement a balanced memory allocation scheme that distributes a fixed number of memory slots (kc slots per class) uniformly across all observed classes (N “seen” classes). The algorithm proceeds through three key operations: First, after initial slot assignment, we compute uncertainty metrics for both streaming samples () and stored samples () in task t memory buffer. Second, we rank all candidate samples by their uncertainty scores. Finally, we select samples from the ordered list using systematic interval sampling (stride ), thereby ensuring optimal diversity in the memory bank. This sampling strategy produces several important properties. First, the resulting memory population spans a continuous spectrum from perturbation-robust to perturbation-sensitive samples. Second, it introduces perturbation-induced diversity to episodic memory. Third, it enhances both the representativeness and robustness of the memory bank for incremental learning. Crucially, the interval selection mechanism maintains coverage of the complete uncertainty distribution while preventing cluster formation in the feature space.

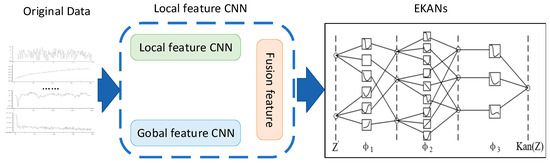

3.2. MFKAN Foundation Model

The MFKAN architecture integrates a feature fusion network with an Enhanced Kolmogorov–Arnold Network (EKAN), as illustrated in Figure 2. The framework initiates with preprocessing operational state data collected from multiple AUV-mounted sensors, followed by systematic processing through a dual-branch feature fusion network. The global analysis branch extracts system-wide fault characteristics while the parallel local branch identifies component-specific signatures. The EKAN module then leverages its adaptive basis functions and dynamic topology optimization to perform a sophisticated analysis of the fused features, enabling precise fault pattern recognition through the hierarchical decomposition of complex feature interactions and nonlinear mapping of failure modes. This integrated architecture achieves robust diagnostic performance by simultaneously capturing both macroscopic system behavior and microscopic component anomalies while maintaining computational efficiency through EKAN’s advanced processing capabilities.

Figure 2.

Network architecture of the MFKAN model.

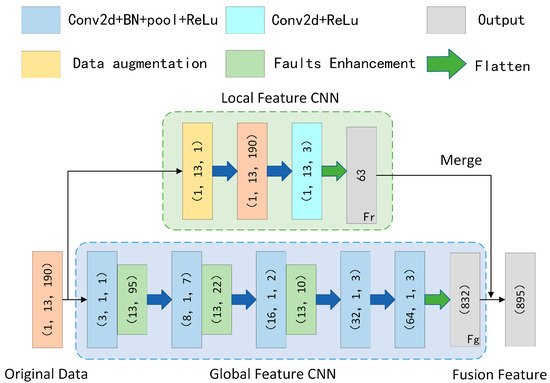

To address the issue of current AUV fault diagnosis methods in comprehensively extracting complete fault features from individual sensors, we propose a new multi-sensor feature fusion network. As illustrated in Figure 3, this architecture incorporates two specialized feature extraction modules: a global feature CNN designed to capture system-wide fault patterns across sensor arrays, and a local feature CNN that focuses on component-specific signatures from individual sensor streams. The integrated framework enables the synergistic analysis of heterogeneous sensor data through coordinated processing of both macroscopic system behavior and microscopic component anomalies, thereby overcoming the inherent constraints of single-sensor diagnostic approaches. This dual-pathway design ensures robust feature representation by simultaneously considering both the holistic system state and detailed local indicators while maintaining computational efficiency through parallel processing architecture. The global branch aggregates cross-sensor correlations to identify distributed fault patterns, whereas the local branch preserves high-resolution temporal and spatial features critical for precise fault localization, with subsequent feature fusion creating a comprehensive diagnostic representation that exceeds the capabilities of conventional single-sensor methods.

Figure 3.

Architecture of the multi-sensor feature fusion network.

To effectively extract both global fault characteristics and local correlation features from the raw monitoring data of AUVs while achieving comprehensive feature fusion, we designed a new two-dimensional convolutional neural network (CNN) architecture employing one-dimensional kernels for global feature extraction. The global feature CNN utilizes a specialized 2D convolutional network with 1D kernels, incorporating carefully selected stride parameters to maintain both the independence and non-mixing of individual sensor data streams. The raw sensor data is structured as a two-dimensional array of dimensions H×W, where H represents the number of sensor types and W denotes the temporal length of sensor readings. The network architecture implements a sequential convolutional stack with kernel sizes of 1, 7, 2, 3, and 3, respectively, employing a consistent stride of 2, while the corresponding channel depths progress through 3, 8, 16, 32, and 64 layers. This optimized configuration enables the efficient extraction of system-wide fault features from AUV operational data, with the complete feature transformation process formally expressed in Equation (6).

The global feature extraction process transforms raw input data into comprehensive fault representations , as formalized in Equation (6). To complement this global analysis, we developed a specialized local correlation network comprising two integrated components: a data augmentation module that emphasizes sensor-specific anomalies, and a local feature extractor that captures inter-sensor dependencies. The augmentation module employs global average pooling to identify anomalous sensor behaviors, subsequently amplified through sigmoid activation to highlight abnormal operational states. This targeted enhancement enables a more discriminative representation of localized fault patterns in AUV systems. The augmentation transformation is mathematically defined in Equation (7), where the adaptive weighting mechanism selectively intensifies diagnostically relevant sensor channels while suppressing normal operational signatures.

The data augmentation process yields enhanced sensor readings, where denotes the augmented data for the n-th sensor and represents the original measurements from the i-th sensor, with indicating the sigmoid activation function. Building upon this augmented representation, we developed a dedicated local feature extraction module to capture inter-sensor correlation patterns. This module analyzes localized dependencies across sensor arrays through a hierarchical convolution operation, systematically modeling both temporal and cross-channel relationships. The mathematical formulation for extracting these local correlation features is presented in Equation (8), which establishes a differentiable mapping from raw sensor measurements to discriminative fault signatures while preserving the physical interpretability of inter-sensor interactions.

The convolutional operation systematically captures inter-sensor correlations in AUV systems through kernel sliding across raw data streams. To holistically represent fault conditions, we performed a feature-level fusion of global fault characteristics and local correlation features, integrating their complementary diagnostic information. This fusion process, formally specified in Equation (9), generates comprehensive fault embeddings that preserve both system-wide patterns and localized inter-sensor dependencies.

The feature fusion process combines local correlation features () with global fault characteristics () to generate comprehensive diagnostic representations. By integrating sensor-level anomalies with system-wide fault patterns at the feature level, the multi-sensor fusion network produces discriminative embeddings that enable both holistic state characterization and precise fault identification.

3.3. Loss Function

The cross-entropy loss function is a fundamental objective function in machine learning, particularly for multi-class classification tasks. It quantifies the dissimilarity between the predicted probability distribution and the true label distribution, serving as a critical optimization target during model training. In multi-class settings, the cross-entropy loss is derived from information theory, measuring the expected number of bits required to encode the true class labels using the predicted probabilities.

Formally, given a classification problem with C classes, let denote the one-hot encoded ground-truth label, where yi = 1 if the sample belongs to class i and is 0 otherwise. Let represent the predicted probability distribution, typically obtained via the softmax function applied to the model’s logits. The multi-class cross-entropy loss is then defined as in Equation (10).

Due to the one-hot nature of y, this expression simplifies the negative log-likelihood of the true class, emphasizing the model’s confidence in the correct prediction. Minimizing this loss encourages the predicted probabilities to align closely with the true distribution, thereby improving classification accuracy.

The cross-entropy loss is widely favored in deep learning due to its well-behaved gradients, which facilitate efficient optimization, and its natural interpretation as a likelihood-based objective. By penalizing incorrect predictions more severely when the model is highly confident, it drives the learning process toward robust and discriminative feature representations. Consequently, it remains the standard choice for training neural networks in multi-class classification scenarios.

4. Experimental Study

4.1. AUV Dataset

The fault data was collected from navigation experiments conducted using Zhejiang University’s “Haizhe” AUV. The Haizhe AUV is a compact unmanned underwater platform equipped with a propulsion motor, depth sensor, and control module, as shown in Table 1. For monitoring purposes, the vehicle was instrumented with both depth sensors and inertial measurement units (IMUs). Table 2 details the sensor data specifications. Each experimental trial introduced a single fault condition lasting 1020 s. The Haizhe platform exhibits five operational states, named normal operation, depth sensor failure, increased payload, minor propeller damage, and severe propeller damage.

Table 1.

Sensor data of “Haizhe” AUV dataset.

Table 2.

Details of “Haizhe” dataset.

The data preprocessing pipeline begins with sample extraction from raw sensor streams using a sliding window approach with a fixed size of 1024 data points. This windowing operation facilitates local feature capture while generating representative segments for subsequent analysis. From the “Haizhe” dataset, we selected 13 sensor modalities (excluding temporal markers) that collectively encode the AUV’s operational states across diverse environmental conditions. Each sensor channel undergoes standardized processing: (1) uniform length adjustment to 190 data points through truncation of excess values or replication of terminal values for shorter sequences, ensuring dimensional consistency while preserving data continuity; (2) min–max normalization to the [−1, 1] interval to enhance comparability across heterogeneous sensors. The processed dataset is then randomly partitioned into training and testing subsets, maintaining a balanced representation of all fault conditions.

4.2. Experimental Setup

All experiments were conducted under identical environmental conditions to ensure comparability. The proposed RM-MFKAN model was implemented on a Windows 10 platform using Python 3.9 and PyTorch 2.2.1, with hardware acceleration provided by an NVIDIA RTX 2080Ti GPU and Intel® Core™ i9-10900X CPU.

To maintain experimental rigor, we standardized hyperparameters across all evaluations using the “Haizhe” dataset, as detailed in Table 3. Specifically, the configuration employed included learning rate, batch size, and epoch number, as described below.

Table 3.

Hyperparameter settings for model training.

4.3. Incremental Learning Performance

“Inc 1” means that all the methods are trained using label 0 and 1. “Inc 2” means that all the methods conducted the incremental learning. “Inc 2” is based on “Inc 1”, and it adds the label 2 and 3. “Inc 3” is an incremental learning as well. It is based on “Inc 2” and adds the label 4.

To rigorously evaluate the performance of RM-MFKAN, we conducted comparative experiments against ten established incremental learning methods, namely Finetuning [33], EEIL [34], EWC [35], Freezing [36], LUCIR [37], LwF [38], LwM [39], Path Integral [40], RWalk [41], and iCaRL [7]. These experiments were performed using a publicly available AUV dataset under identical conditions to ensure fair comparison. As evidenced in Table 4, RM-MFKAN demonstrates superior performance in both classification accuracy and computational efficiency across all incremental phases. The model achieves perfect 100% accuracy in the initial phase, outperforming all baseline methods and validating its exceptional fault diagnosis capability. Remarkably, RM-MFKAN maintains 98.1% accuracy after the second incremental phase (a mere 1.9% decrease) and 96.6% after the third phase (only a 1.5% further decline), significantly surpassing the 97.3% peak accuracy of competing methods. These results collectively demonstrate RM-MFKAN’s robust ability to assimilate new fault categories while effectively mitigating catastrophic forgetting, highlighting its dual strengths in maintaining classification precision and adaptive learning capacity under continuous data integration scenarios. The consistent performance advantage across incremental phases underscores the model’s suitability for real-world deployment, where sustained diagnostic accuracy is paramount.

Table 4.

Incremental learning performance of RM-MFKAN on AUV dataset.

4.4. Ablation Study

Table 5 presents a systematic evaluation of different sampling strategies’ impacts on incremental learning performance. The study compares four methods, including simple random sampling, which selects samples uniformly from the population; reservoir sampling, a stream-processing algorithm that maintains fixed-size representative samples without prior knowledge of total data volume; ring-buffer sampling, where new data overwrites the oldest entries in a circular storage structure; and the proposed Rainbow Memory sampling method. Performance metrics reveal Rainbow Memory’s superior efficacy, achieving peak accuracy rates of 100%, 98.1%, and 96.6% across the three incremental phases, respectively. This represents a statistically significant improvement over conventional methods, demonstrating Rainbow Memory’s unique capability to preserve critical feature distributions while accommodating new data streams. The circular buffer technique showed particular limitations in later phases, with accuracy degradation suggesting insufficient protection against catastrophic forgetting. Reservoir sampling, while theoretically sound for streaming scenarios, proved less effective in maintaining long-term knowledge retention compared to the proposed method’s discriminative sampling approach. These findings substantiate Rainbow Memory’s dual advantages: maintaining representative exemplars from historical distributions while dynamically adapting to emerging data patterns—a crucial requirement for effective incremental learning in AUV fault diagnosis applications. The consistent performance across phases underscores the method’s robustness compared to conventional sampling baselines.

Table 5.

The impact of different sampling strategies on the incremental learning process.

Table 6 illustrates the impact of varying random seeds on the incremental learning process. Classes were randomly partitioned and allocated across five tasks using distinct random seeds (1–3) to construct an incremental learning task configuration. The results reveal a non-monotonic relationship between the random seed value and model accuracy: as the seed increases from 1 to 3, incremental learning performance first rises and then declines. Consequently, a random seed of 2 was selected for the Blurry dataset to optimize accuracy.

Table 6.

The impact of different random seeds on the incremental learning process.

Table 7 demonstrates the impact of varying episodic memory sizes (K) on the incremental learning process. As K increases from 100 to 500, the accuracy rates across all three incremental learning phases show consistent improvement. However, when K is further increased to 600, the performance in phases 2 and 3 begins to decline. Based on these observations, we selected K = 500 as the optimal memory size for our final experiments.

Table 7.

The impact of K on incremental learning.

Table 8 presents a comparative analysis of how different data augmentation strategies affect incremental learning performance. The study evaluates three prominent techniques—CutMix (which synthesizes new samples by blending cropped regions from paired images), Cutout (which occludes random rectangular areas with uniform pixel values), and RandAugment (which provide details regarding where data supporting reported results can be found, including links to stochastic combinations of predefined transformations at varying intensities)—against a non-augmented baseline. Crucially, the baseline model consistently achieved higher accuracy rates (100%, 98.1%, and 96.6% across three incremental learning phases) than all augmented variants, suggesting that standard augmentation approaches may inadvertently compromise the preservation of learned features in incremental learning scenarios. This counterintuitive finding highlights the need for task-specific augmentation design in continual learning systems.

Table 8.

Impact of different data augmentation methods on incremental learning.

5. Conclusions

To address the practical application issues of high retraining costs and prolonged model update cycles caused by evolving fault types in AUVs, this paper proposes an RM-MFKAN-based fault diagnosis method. The approach first designs a multi-sensor feature fusion network to extract both global fault characteristics and local correlation features from AUVs by comprehensive feature-level integration. Second, a Rainbow Memory (RM) module is incorporated for incremental learning. The RM module calculates sample perturbations to select optimal samples for replay. Third, the fault diagnosis is implemented using an EKAN network. During incremental learning updates, the input samples include both new data and stored historical samples. This sample replay mechanism enables the model to acquire new knowledge while effectively retaining critical learned information. Several experiments on AUV datasets have demonstrated RM-MFKAN’s superior performance. The performance of RM-MFKAN achieves 98.1% classification accuracy across all fault categories after the first incremental learning and can maintain 96.6% accuracy after the second incremental update. These results validate RM-MFKAN’s exceptional capability to significantly mitigate catastrophic forgetting while maintaining the robust recognition of novel fault types. In the future, the proposed method can be conducted on a real-world AUV system, and the real-world experiments can be used to validate the proposed RM-MFKAN.

Author Contributions

Conceptualization, Y.L., Y.Y. and L.W.; methodology, Y.L., Y.Y. and L.W.; validation, Y.L., Y.Y., Z.Z. and L.W.; investigation, Y.L. and L.W.; writing—original draft preparation, Y.L. and Y.Y.; writing—review and editing, Y.L., Y.Y. and L.W.; supervision, L.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Shenzhen Science and Technology Program (JCYJ20230807113708016), the Guangdong Basic and Applied Basic Research Foundation (No. 2024A1515011025).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhang, Z.; Wu, Y.; Zhou, Y.; Hu, D. Fault-Tolerant Control of Autonomous Underwater Vehicle Actuators Based on Takagi and Sugeno Fuzzy and Pseudo-Inverse Quadratic Programming under Constraints. Sensors 2024, 24, 3029. [Google Scholar] [CrossRef] [PubMed]

- Fan, Z.; Gao, R.X.; He, Q.; Huang, Y.; Jiang, T.; Peng, Z.; Thévenaz, L.; Xiong, Y.; Zhong, S. New sensing technologies for monitoring machinery, structures, and manufacturing processes. J. Dyn. Monit. Diagn. 2023, 2, 69–88. [Google Scholar]

- Huang, R.; Xia, J.; Zhang, B.; Chen, Z.; Li, W. Compound fault diagnosis for rotating machinery: State-of-the-art, challenges, and opportunities. J. Dyn. Monit. Diagn. 2023, 2, 13–29. [Google Scholar] [CrossRef]

- Gebraeel, N.; Lei, Y.; Li, N.; Si, X.; Zio, E. Prognostics and remaining useful life prediction of machinery: Advances, opportunities and challenges. J. Dyn. Monit. Diagn. 2023, 2, 1–12. [Google Scholar]

- Ma, S.; Leng, J.; Zheng, P.; Chen, Z.; Li, B.; Li, W.; Liu, Q.; Chen, X. A digital twin-assisted deep transfer learning method towards intelligent thermal error modeling of electric spindles. J. Intell. Manuf. 2025, 36, 1659–1688. [Google Scholar] [CrossRef]

- Lin, H.; Huang, X.; Chen, Z.; He, G.; Xi, C.; Li, W. Matching pursuit network: An interpretable sparse time–frequency representation method toward mechanical fault diagnosis. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 12377–12388. [Google Scholar] [CrossRef] [PubMed]

- Rebuffi, S.A.; Kolesnikov, A.; Sperl, G.; Lampert, C.H. icarl: Incremental classifier and representation learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2001–2010. [Google Scholar]

- Schaul, T.; Quan, J.; Antonoglou, I.; Silver, D. Prioritized experience replay. arXiv 2015, arXiv:1511.05952. [Google Scholar]

- Cohen, M.B.; Lee, Y.T.; Musco, C.; Musco, C.; Peng, R.; Sidford, A. Uniform sampling for matrix approximation. In Proceedings of the 2015 Conference on Innovations in Theoretical Computer Science, Rehovot, Israel, 11–13 January 2015; pp. 181–190. [Google Scholar]

- Wu, Y.; Wang, A.; Zhou, Y.; Zhu, Z.; Zeng, Q. Fault diagnosis of autonomous underwater vehicle with missing data based on multi-channel full convolutional neural network. Machines 2023, 11, 960. [Google Scholar] [CrossRef]

- Xia, S.; Zhou, X.; Shi, H.; Li, S.; Xu, C. A fault diagnosis method based on attention mechanism with application in Qianlong-2 autonomous underwater vehicle. Ocean Eng. 2021, 233, 109049. [Google Scholar] [CrossRef]

- Pei, S.; Wang, H.; Han, T. Time-efficient neural architecture search for autonomous underwater vehicle fault diagnosis. IEEE Trans. Instrum. Meas. 2023, 72, 1–11. [Google Scholar] [CrossRef]

- Xia, S.; Zhou, X.; Shi, H.; Li, S. Hybrid feature adaptive fusion network for multivariate time series classification with application in AUV fault detection. Ships Offshore Struct. 2024, 19, 807–819. [Google Scholar] [CrossRef]

- Gao, S.; Liu, J.; Zhang, Z.; Feng, C.; He, B.; Zio, E. Physics-Guided Generative Adversarial Networks for fault detection of underwater thruster. Ocean Eng. 2023, 286, 115585. [Google Scholar] [CrossRef]

- Xu, X.; Huang, W.; Zhang, X.; Zhang, Z.; Liu, F.; Brunauer, G. An evidential reasoning-based information fusion method for fault diagnosis of ship rudder. Ocean Eng. 2025, 318, 120082. [Google Scholar] [CrossRef]

- Liu, J.; Yang, X.; Xie, Y.; Wu, M.; Li, Z.; Mu, W.; Liu, G. Multi-sensor cross-domain fault diagnosis method for leakage of ship pipeline valves. Ocean Eng. 2024, 299, 117211. [Google Scholar] [CrossRef]

- Pettirsch, A.; Garcia-Hernandez, A. Overcoming Data Scarcity in Roadside Thermal Imagery: A New Dataset and Weakly Supervised Incremental Learning Framework. Sensors 2025, 25, 2340. [Google Scholar] [CrossRef] [PubMed]

- Yin, H.; Xu, H.; Fan, W.; Sun, F. Fault diagnosis of pressure relief valve based on improved deep Residual Shrinking Network. Measurement 2024, 224, 113752. [Google Scholar] [CrossRef]

- Xia, Y.; Gao, J.; Shao, X.; Wang, C.; Xiang, J.; Lin, H. Incremental rotating machinery fault diagnosis method based on multi-scale knowledge distillation and label smoothing. Meas. Sci. Technol. 2025, 36, 046208. [Google Scholar] [CrossRef]

- Wang, P.; Xiong, H.; He, H. Bearing fault diagnosis under various conditions using an incremental learning-based multi-task shared classifier. Knowl. Based Syst. 2023, 266, 110395. [Google Scholar] [CrossRef]

- Xu, X.; Bao, S.; Liang, P.; Qiao, Z.; He, C.; Shi, P. A broad learning model guided by global and local receptive causal features for online incremental machinery fault diagnosis. Expert Syst. Appl. 2024, 246, 123124. [Google Scholar] [CrossRef]

- Chen, B.; Ding, C.; Jiang, X.; Shi, J.; Huang, W.; Shen, C. Lifelong learning for bearing fault diagnosis with incremental fault types. In Proceedings of the 2022 IEEE International Conference on Prognostics and Health Management (ICPHM), Romulus, MI, USA, 06–08 June 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 114–120. [Google Scholar]

- Fu, Y.; Cao, H.; Chen, X.; Ding, J. Broad auto-encoder for machinery intelligent fault diagnosis with incremental fault samples and fault modes. Mech. Syst. Signal Process. 2022, 178, 109353. [Google Scholar] [CrossRef]

- Hayes, T.L.; Kafle, K.; Shrestha, R.; Acharya, M.; Kanan, C. Remind your neural network to prevent catastrophic forgetting. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 466–483. [Google Scholar]

- Douillard, A.; Cord, M.; Ollion, C.; Robert, T.; Valle, E. Podnet: Pooled outputs distillation for small-tasks incremental learning. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; part XX 16. Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 86–102. [Google Scholar]

- Chen, T.; Xiang, Y.; Wang, X. IFD-BiC: A Class-incremental Continual Learning Method for Diesel Engine Fault Diagnosis. Eng. Res. Express 2025, 7, 015419. [Google Scholar] [CrossRef]

- Fu, Y.; He, J.; Yang, L.; Luo, Z. Incremental Learning with Maximum Dissimilarity Sampling Based Fault Diagnosis for Rolling Bearings. In Proceedings of the International Conference on Bio-Inspired Computing: Theories and Applications, Changsha, China, 15–17 December 2023; Springer Nature: Singapore, 2023; pp. 213–226. [Google Scholar]

- Shen, C.; He, Z.; Chen, B.; Huang, W.; Li, L.; Wang, D. Dynamic branch layer fusion: A new continual learning method for rotating machinery fault diagnosis. Knowl. Based Syst. 2025, 313, 113177. [Google Scholar] [CrossRef]

- Yan, S.; Shao, H.; Wang, X.; Wang, J. Few-shot class-incremental learning for system-level fault diagnosis of wind turbine. IEEE/ASME Trans. Mechatron. 2024, 1–10. [Google Scholar] [CrossRef]

- Prabhu, A.; Torr, P.H.S.; Dokania, P.K. Gdumb: A simple approach that questions our progress in continual learning. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Part II 16. Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 524–540. [Google Scholar]

- Gal, Y.; Ghahramani, Z. Dropout as a bayesian approximation: Representing model uncertainty in deep learning. In Proceedings of the International Conference on Machine Learning, New York City, NY, USA, 19–24 June 2016; PMLR: Cambridge, MA, USA, 2016; pp. 1050–1059. [Google Scholar]

- Bang, J.; Kim, H.; Yoo, Y.; Ha, J.-W.; Choi, J. Rainbow memory: Continual learning with a memory of diverse samples. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 8218–8227. [Google Scholar]

- Ding, N.; Qin, Y.; Yang, G.; Wei, F.; Yang, Z.; Su, Y.; Hu, S.; Chen, Y.; Chan, C.-M.; Chen, W.; et al. Parameter-efficient fine-tuning of large-scale pre-trained language models. Nat. Mach. Intell. 2023, 5, 220–235. [Google Scholar] [CrossRef]

- Castro, F.M.; Marín-Jiménez, M.J.; Guil, N.; Schmid, C.; Alahari, K. End-to-end incremental learning. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 233–248. [Google Scholar]

- Chefrour, A.; Labiba, S.M.; Difi, I.; Bakkouche, N. A novel incremental learning algorithm based on incremental vector support machina and incremental neural network learn++. Rev. D intelligence Artif. 2019, 33, 181–188. [Google Scholar] [CrossRef][Green Version]

- French, R.M. Catastrophic forgetting in connectionist networks. Trends Cogn. Sci. 1999, 3, 128–135. [Google Scholar] [CrossRef] [PubMed]

- Hou, S.; Pan, X.; Loy, C.C.; Wang, Z.; Lin, D. Learning a unified classifier incrementally via rebalancing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 831–839. [Google Scholar]

- Li, Z.; Hoiem, D. Learning without forgetting. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 2935–2947. [Google Scholar] [CrossRef] [PubMed]

- Dhar, P.; Singh, R.V.; Peng, K.C.; Wu, Z.; Chellappa, R. Learning without memorizing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5138–5146. [Google Scholar]

- Zhu, W.; Guo, X.; Fang, Y.; Zhang, X. A path-integral-based reinforcement learning algorithm for path following of an autoassembly mobile robot. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 4487–4499. [Google Scholar] [CrossRef] [PubMed]

- Chaudhry, A.; Dokania, P.K.; Ajanthan, T.; Torr, P.H.S. Riemannian walk for incremental learning: Understanding forgetting and intransigence. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 532–547. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).