SAM2-DFBCNet: A Camouflaged Object Detection Network Based on the Heira Architecture of SAM2

Abstract

1. Introduction

- We propose a novel camouflaged object detection (COD) network, SAM2-DFBCNet, which significantly enhances COD performance through context-aware feature fusion and dynamic boundary refinement.

- We design three innovative modules—CACEM, CSFIB, and DBRM—to improve the feature representation of camouflaged objects from three perspectives: context perception, cross-scale feature interaction, and boundary structure optimization.

- We conduct extensive experiments on the CAMO, COD10K, and NC4K datasets, demonstrating that SAM2-DFBCNet surpasses existing methods in both segmentation accuracy and robustness.

2. Related Works

2.1. Camouflaged Object Detection Methods

2.2. SAM and SAM2 in COD

3. Materials and Methods

3.1. Network Architecture Overview

3.2. Camouflage-Aware Context-Enhancement Module (CACEM)

3.3. Cross-Scale Feature Interactive Bridge (CSFIB)

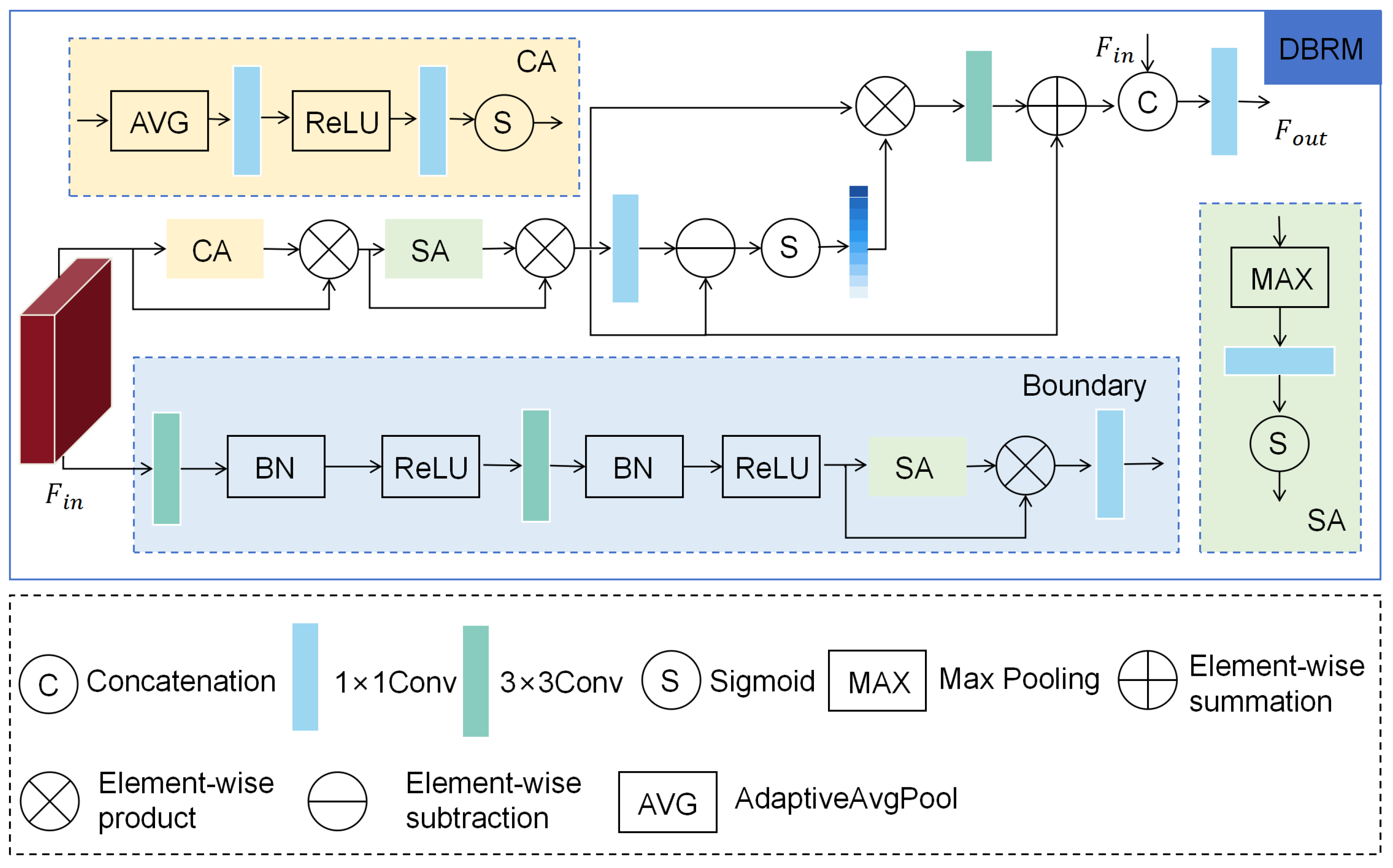

3.4. Dynamic Boundary-Refining Module (DBRM)

3.5. Loss Function

4. Experiment

4.1. Implementation Details

4.2. Dataset

4.3. Evaluation Indicators

4.4. Comparative Experiment

4.4.1. Quantitative Evaluation

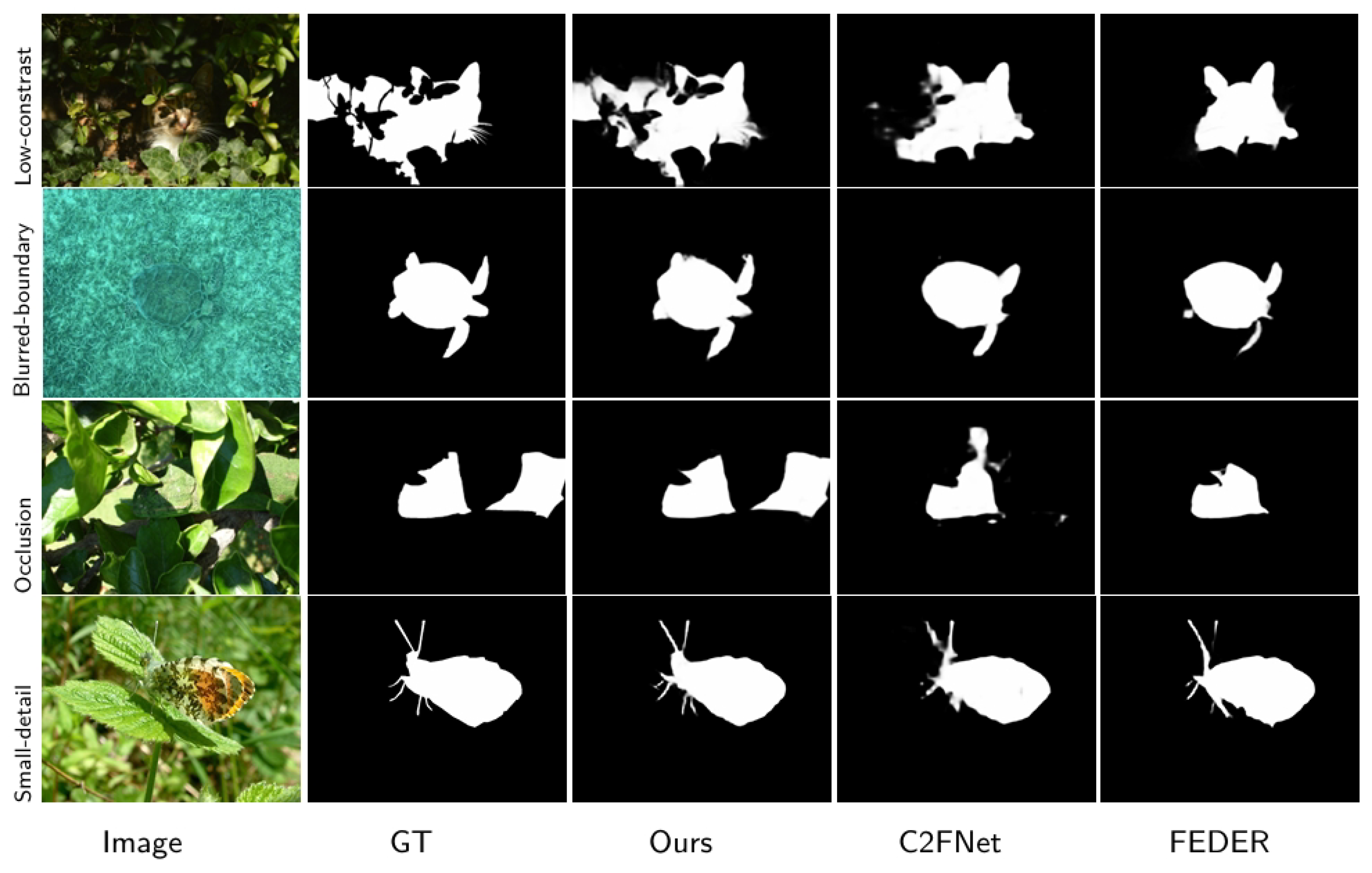

4.4.2. Qualitative Analysis

4.5. Ablation Experiment

4.5.1. The Effectiveness of Different Ingredients

4.5.2. CACEM Analysis

4.5.3. CSFIB Analysis

4.5.4. DBRM Analysis

4.5.5. The Impact of Different Backbone Network Architectures

4.5.6. Ablation of Loss Function

4.6. Calculation Complexity

4.7. Failure Cases

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Datasets | Training Samples | Testing Samples | Target Categories |
|---|---|---|---|
| CAMO | 1000 | 250 | 8 |
| COD10K | 3040 | 6960 | 7 |
| NC4K | 0 | 4121 | 10 |
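The split sizes in the table above can be tallied with a short script. This is a minimal sketch: the names `splits`, `total`, and `test_only` are illustrative, and the script only sums the listed numbers. It does not assert which subsets the authors actually combined for training.

```python
# Split sizes copied from the dataset table above.
splits = {
    "CAMO": {"train": 1000, "test": 250},
    "COD10K": {"train": 3040, "test": 6960},
    "NC4K": {"train": 0, "test": 4121},
}


def total(kind: str) -> int:
    """Sum one split ('train' or 'test') over all listed datasets."""
    return sum(sizes[kind] for sizes in splits.values())


# Datasets that list no training samples are used for testing only.
test_only = [name for name, sizes in splits.items() if sizes["train"] == 0]

print(total("train"))  # 4040
print(total("test"))   # 11331
print(test_only)       # ['NC4K']
```

A dataset with zero training samples, as NC4K has here, can only measure how well a model generalizes to images it was not trained on.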

| Method | Pub./Year | CAMO-Test | | | | COD10K-Test | | | | NC4K | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BGNet | IJCAI22 | 0.812 | 0.789 | 0.870 | 0.073 | 0.831 | 0.753 | 0.901 | 0.033 | 0.851 | 0.820 | 0.907 | 0.044 |
| C2F-Net-v2 | TCSVT22 | 0.799 | 0.770 | 0.859 | 0.077 | 0.811 | 0.725 | 0.887 | 0.036 | 0.840 | 0.802 | 0.896 | 0.048 |
| SegMaR | CVPR22 | 0.815 | 0.795 | 0.874 | 0.071 | 0.833 | 0.757 | 0.899 | 0.034 | 0.841 | 0.821 | 0.896 | 0.046 |
| ZoomNet | CVPR22 | 0.820 | 0.794 | 0.877 | 0.066 | 0.838 | 0.766 | 0.888 | 0.034 | 0.853 | 0.818 | 0.896 | 0.043 |
| OCENet | WACV22 | 0.802 | 0.766 | 0.852 | 0.080 | 0.827 | 0.741 | 0.894 | 0.033 | 0.853 | 0.818 | 0.902 | 0.045 |
| ERRNet | PR22 | 0.779 | 0.720 | 0.842 | 0.085 | 0.786 | 0.678 | 0.867 | 0.043 | 0.827 | 0.778 | 0.887 | 0.054 |
| SINet-V2 | TPAMI22 | 0.820 | 0.782 | 0.882 | 0.070 | 0.815 | 0.718 | 0.887 | 0.037 | 0.847 | 0.805 | 0.903 | 0.048 |
| MRR-Net | TNNLS23 | 0.811 | 0.772 | 0.869 | 0.076 | 0.822 | 0.730 | 0.889 | 0.036 | 0.848 | 0.801 | 0.898 | 0.040 |
| UJSCOD-V2 | arXiv23 | 0.803 | 0.768 | 0.858 | 0.071 | 0.817 | 0.733 | 0.895 | 0.033 | 0.856 | 0.824 | 0.913 | 0.040 |
| PUENet | TIP23 | 0.794 | 0.762 | 0.857 | 0.080 | 0.813 | 0.727 | 0.887 | 0.035 | 0.836 | 0.798 | 0.892 | 0.050 |
| WS-SAM | NeurIPS23 | 0.759 | 0.742 | 0.818 | 0.092 | 0.803 | 0.719 | 0.878 | 0.038 | 0.829 | 0.802 | 0.886 | 0.052 |
| FEDER | CVPR23 | 0.807 | 0.785 | 0.873 | 0.069 | 0.823 | 0.764 | 0.900 | 0.032 | 0.846 | 0.817 | 0.905 | 0.045 |
| PopNet | ICCV23 | 0.808 | 0.784 | 0.859 | 0.077 | 0.851 | 0.786 | 0.910 | 0.028 | 0.861 | 0.833 | 0.909 | 0.042 |
| DGNet | MIR23 | 0.839 | 0.806 | 0.901 | 0.057 | 0.822 | 0.728 | 0.896 | 0.033 | 0.857 | 0.814 | 0.911 | 0.042 |
| UCOS-DA | ICCV23 | 0.701 | 0.646 | 0.784 | 0.127 | 0.689 | 0.546 | 0.740 | 0.096 | 0.755 | 0.689 | 0.819 | 0.085 |
| SAM | ICCV23 | 0.684 | 0.680 | 0.687 | 0.132 | 0.783 | 0.756 | 0.798 | 0.050 | 0.767 | 0.752 | 0.776 | 0.078 |
| FSPNet | CVPR23 | 0.857 | 0.830 | 0.899 | 0.050 | 0.851 | 0.769 | 0.895 | 0.026 | 0.879 | 0.843 | 0.915 | 0.035 |
| SAM2 | arXiv24 | 0.444 | 0.207 | 0.401 | 0.236 | 0.549 | 0.291 | 0.521 | 0.134 | 0.512 | 0.268 | 0.482 | 0.186 |
| Camouflageator | ICLR24 | 0.829 | 0.766 | 0.843 | 0.066 | 0.843 | 0.763 | 0.920 | 0.028 | 0.869 | 0.835 | 0.922 | 0.041 |
| CamoFormer | TPAMI24 | 0.875 | 0.832 | 0.930 | 0.043 | 0.862 | 0.773 | 0.931 | 0.024 | 0.888 | 0.840 | 0.935 | 0.032 |
| SAM2-DFBCNet | Ours | 0.893 | 0.858 | 0.934 | 0.040 | 0.884 | 0.793 | 0.936 | 0.021 | 0.901 | 0.847 | 0.935 | 0.030 |

| Configurations | CAMO | | | | COD10K | | | |
|---|---|---|---|---|---|---|---|---|
| Base | 0.863 | 0.806 | 0.905 | 0.054 | 0.850 | 0.725 | 0.895 | 0.032 |
| Base + CACEM | 0.872 | 0.820 | 0.914 | 0.045 | 0.872 | 0.778 | 0.925 | 0.026 |
| Base + CSFIB | 0.874 | 0.822 | 0.923 | 0.043 | 0.873 | 0.779 | 0.927 | 0.024 |
| Base + DBRM | 0.873 | 0.835 | 0.922 | 0.043 | 0.873 | 0.783 | 0.930 | 0.024 |
| Base + CACEM + CSFIB | 0.885 | 0.846 | 0.926 | 0.043 | 0.880 | 0.788 | 0.933 | 0.023 |
| Base + CACEM + DBRM | 0.884 | 0.849 | 0.928 | 0.042 | 0.882 | 0.789 | 0.936 | 0.021 |
| Base + CSFIB + DBRM | 0.888 | 0.852 | 0.927 | 0.042 | 0.883 | 0.789 | 0.934 | 0.022 |
| Ours | 0.893 | 0.858 | 0.934 | 0.040 | 0.884 | 0.793 | 0.936 | 0.021 |
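To read the ablation table above at a glance, the change from the Base row to the full model can be computed column by column. The sketch below treats the eight score columns purely by position (four for CAMO, then four for COD10K) and does not assume what each column measures. The helper name `deltas` is illustrative.

```python
# Rows copied from the ablation table above:
# four scores on CAMO followed by four scores on COD10K.
base = [0.863, 0.806, 0.905, 0.054, 0.850, 0.725, 0.895, 0.032]
ours = [0.893, 0.858, 0.934, 0.040, 0.884, 0.793, 0.936, 0.021]


def deltas(ref, new):
    """Per-column difference new - ref, rounded to three decimals."""
    return [round(n - r, 3) for r, n in zip(ref, new)]


change = deltas(base, ours)
print("CAMO:  ", change[:4])
print("COD10K:", change[4:])
```

In both groups the first three columns increase and the fourth decreases when all three modules are added to the base configuration.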

| Configurations | CAMO | | | | COD10K | | | |
|---|---|---|---|---|---|---|---|---|
| w/o LocalAtt | 0.885 | 0.847 | 0.925 | 0.043 | 0.882 | 0.787 | 0.934 | 0.021 |
| w/o GlobalAtt | 0.879 | 0.840 | 0.923 | 0.045 | 0.881 | 0.785 | 0.933 | 0.022 |
| w/o BothAtt | 0.879 | 0.812 | 0.908 | 0.051 | 0.870 | 0.740 | 0.915 | 0.027 |
| 4-Uniform | 0.885 | 0.856 | 0.928 | 0.041 | 0.885 | 0.792 | 0.936 | 0.021 |
| 8-Uniform | 0.887 | 0.843 | 0.927 | 0.042 | 0.883 | 0.790 | 0.935 | 0.021 |
| 2-4-6-8-Variable | 0.889 | 0.854 | 0.931 | 0.041 | 0.882 | 0.786 | 0.933 | 0.022 |
| Ours | 0.893 | 0.858 | 0.934 | 0.040 | 0.884 | 0.793 | 0.936 | 0.021 |

| Configurations | CAMO | | | | COD10K | | | |
|---|---|---|---|---|---|---|---|---|
| w/o GRU | 0.885 | 0.848 | 0.929 | 0.042 | 0.882 | 0.788 | 0.934 | 0.023 |
| w/o UpSample | 0.885 | 0.844 | 0.925 | 0.044 | 0.882 | 0.785 | 0.933 | 0.023 |
| Conv-Variant | 0.886 | 0.843 | 0.928 | 0.041 | 0.883 | 0.792 | 0.935 | 0.021 |
| AF | 0.885 | 0.850 | 0.931 | 0.041 | 0.882 | 0.791 | 0.936 | 0.021 |
| GF | 0.886 | 0.843 | 0.926 | 0.043 | 0.883 | 0.790 | 0.933 | 0.021 |
| LSTM | 0.886 | 0.853 | 0.930 | 0.043 | 0.882 | 0.789 | 0.934 | 0.022 |
| VGRU | 0.885 | 0.852 | 0.927 | 0.041 | 0.883 | 0.790 | 0.936 | 0.021 |
| Ours | 0.893 | 0.858 | 0.934 | 0.040 | 0.884 | 0.793 | 0.936 | 0.021 |

| Configurations | CAMO | | | | COD10K | | | |
|---|---|---|---|---|---|---|---|---|
| w/o CA | 0.888 | 0.845 | 0.930 | 0.042 | 0.881 | 0.789 | 0.933 | 0.024 |
| w/o SA | 0.889 | 0.854 | 0.929 | 0.041 | 0.883 | 0.790 | 0.935 | 0.022 |
| w/o Refine | 0.886 | 0.848 | 0.926 | 0.042 | 0.882 | 0.789 | 0.935 | 0.022 |
| w/o Boundary | 0.880 | 0.845 | 0.925 | 0.044 | 0.881 | 0.785 | 0.927 | 0.025 |
| SA → CA | 0.887 | 0.856 | 0.925 | 0.042 | 0.882 | 0.786 | 0.934 | 0.022 |
| Ours | 0.893 | 0.858 | 0.934 | 0.040 | 0.884 | 0.793 | 0.936 | 0.021 |

| Configurations | CAMO | | | | COD10K | | | |
|---|---|---|---|---|---|---|---|---|
| SAM2-DFBCNet-T | 0.826 | 0.784 | 0.870 | 0.066 | 0.827 | 0.709 | 0.885 | 0.035 |
| SAM2-DFBCNet-S | 0.850 | 0.811 | 0.890 | 0.057 | 0.845 | 0.730 | 0.902 | 0.030 |
| SAM2-DFBCNet+ | 0.864 | 0.818 | 0.904 | 0.052 | 0.857 | 0.752 | 0.911 | 0.027 |
| Ours | 0.893 | 0.858 | 0.934 | 0.040 | 0.884 | 0.793 | 0.936 | 0.021 |

| Configurations | CAMO | | | | COD10K | | | |
|---|---|---|---|---|---|---|---|---|
| w/o multi-scale | 0.892 | 0.858 | 0.932 | 0.041 | 0.881 | 0.786 | 0.933 | 0.021 |
| w/o boundary-loss | 0.885 | 0.848 | 0.926 | 0.043 | 0.879 | 0.783 | 0.932 | 0.023 |
| Ours | 0.893 | 0.858 | 0.934 | 0.040 | 0.884 | 0.793 | 0.936 | 0.021 |

| Metrics | BGNet | SINet-V2 | C2F-Net-v2 | FEDER | FSPNet | CamoFormer | Ours |
|---|---|---|---|---|---|---|---|
| Params (M) ↓ | 58.38 | 12.31 | 18.19 | 35.92 | 278.79 | 71.40 | 221.99 |
| FLOPs (G) ↓ | 77.80 | 27.98 | 44.94 | 42.09 | 285.37 | 47.27 | 131.32 |
| Speed (FPS) ↑ | 23.27 | 22.73 | 16.05 | 12.70 | 40.26 | 17.53 | 19.05 |
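The speed row above is given in frames per second; the equivalent per-image latency is 1000 / FPS milliseconds. The following sketch applies that conversion to the listed values. The names `fps` and `latency_ms` are illustrative, and the numbers are only as comparable as the hardware and input sizes behind the original measurements, which are not restated here.

```python
# Speed (FPS) values copied from the complexity table above.
fps = {
    "BGNet": 23.27,
    "SINet-V2": 22.73,
    "C2F-Net-v2": 16.05,
    "FEDER": 12.70,
    "FSPNet": 40.26,
    "CamoFormer": 17.53,
    "Ours": 19.05,
}


def latency_ms(frames_per_second: float) -> float:
    """Convert throughput in frames per second to milliseconds per frame."""
    return 1000.0 / frames_per_second


# Print methods from lowest to highest per-frame latency.
for name, value in sorted(fps.items(), key=lambda item: latency_ms(item[1])):
    print(f"{name:12s} {latency_ms(value):6.1f} ms/frame")
```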

| Metrics | SAM2-DFBCNet+ | SAM2-DFBCNet-S | SAM2-DFBCNet-T |
|---|---|---|---|
| Params (M) ↓ | 32.07 | 39.26 | 75.19 |
| FLOPs (G) ↓ | 41.80 | 24.48 | 20.30 |
| Speed (FPS) ↑ | 37.23 | 46.05 | 49.72 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yuan, C.; Liu, L.; Li, Y.; Li, J. SAM2-DFBCNet: A Camouflaged Object Detection Network Based on the Heira Architecture of SAM2. Sensors 2025, 25, 4509. https://doi.org/10.3390/s25144509
