Extraction of Clinically Relevant Temporal Gait Parameters from IMU Sensors Mimicking the Use of Smartphones

Abstract

Highlights

- Single IMU + CNN-LSTM predicts stride time with <5% errors across hand, pocket, and jacket placements.

- Stance and swing times show moderate errors and double support time errors exceed 20%, yet all correlate moderately to strongly with lab data.

- Smartphone-based IMU enables remote, real-world gait tracking.

- Robust predictions across positions and speeds support scalable monitoring of gait disorders.

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Experimental Design and Instrumentation

2.3. Data Processing

2.4. Statistical Analysis

3. Results

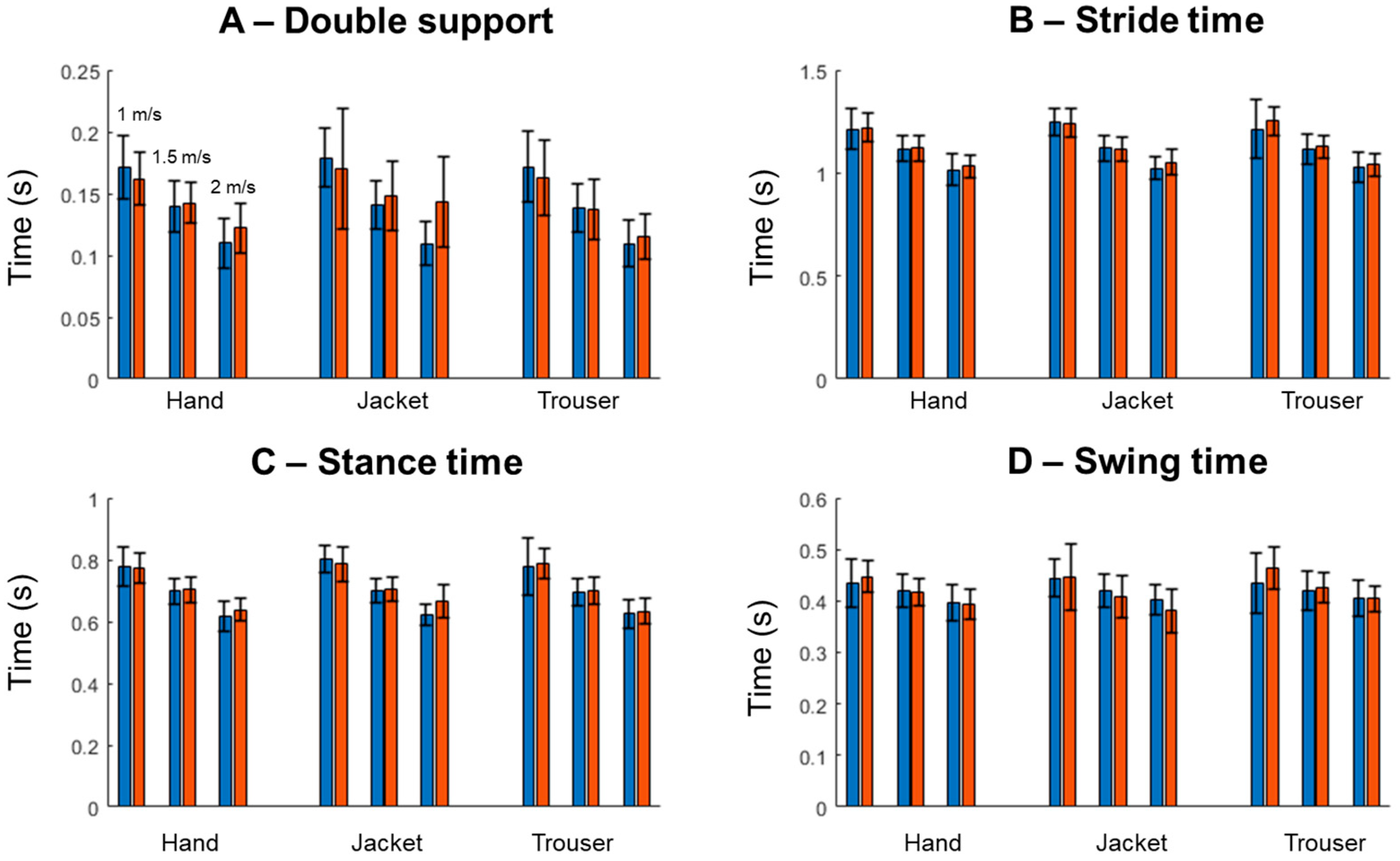

3.1. Comparison Experimental vs. Predicted Gait Parameters

3.2. Relative Error in Predicting Gait Parameters

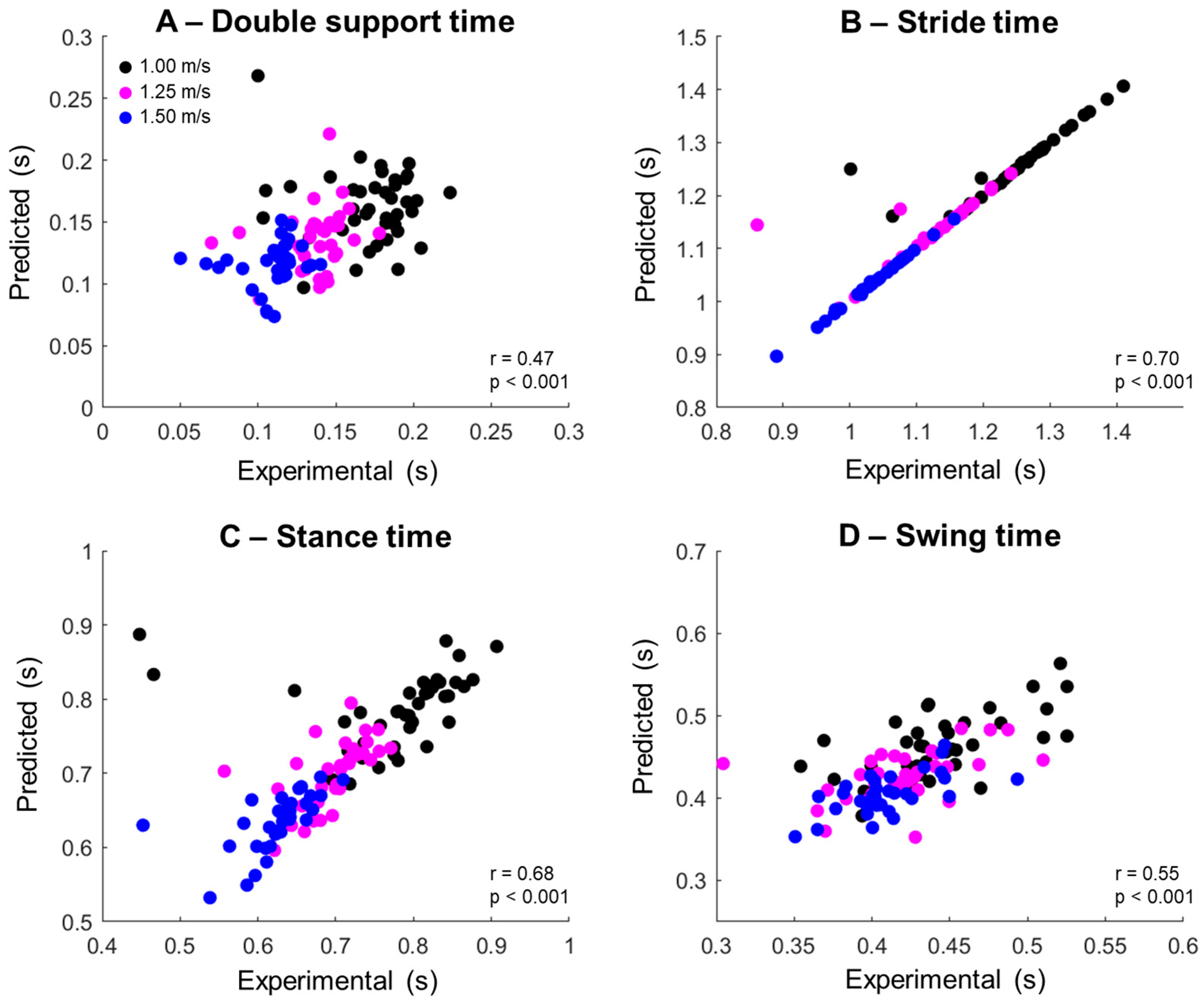

3.3. Inter-Subject Association Between the Experimental and Predicted Gait Parameters

4. Discussion

4.1. Parameter-Dependent Relative Errors

4.2. Fixed vs. Non-Fixed IMU Placement for Gait Assessments

4.3. Limitations of the Study

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Speed (m/s) | Position | Double Support Time: Median | Double Support Time: IQR | Double Support Time: CI Low | Double Support Time: CI High | Stride Time: Median | Stride Time: IQR | Stride Time: CI Low | Stride Time: CI High |
|---|---|---|---|---|---|---|---|---|---|
| 1.00 | Hand | 31.1 | 21.0 | 30.7 | 40.5 | 3.6 | 3.0 | 3.6 | 8.0 |
| 1.00 | Jacket | 42.7 | 20.8 | 40.3 | 54.2 | 4.5 | 4.7 | 4.6 | 8.5 |
| 1.00 | Trouser | 35.0 | 19.0 | 30.6 | 50.0 | 2.6 | 1.4 | 1.9 | 13.6 |
| 1.25 | Hand | 29.9 | 22.5 | 30.9 | 42.3 | 3.0 | 1.6 | 3.2 | 5.3 |
| 1.25 | Jacket | 46.9 | 27.8 | 45.1 | 60.5 | 4.6 | 6.1 | 5.2 | 9.7 |
| 1.25 | Trouser | 32.7 | 20.7 | 31.1 | 44.3 | 2.4 | 0.9 | 1.8 | 5.4 |
| 1.50 | Hand | 47.4 | 21.2 | 43.4 | 60.4 | 3.3 | 5.1 | 3.8 | 9.7 |
| 1.50 | Jacket | 73.4 | 72.3 | 71.8 | 109.7 | 6.0 | 11.4 | 6.4 | 14.2 |
| 1.50 | Trouser | 34.4 | 23.7 | 33.8 | 52.3 | 2.4 | 0.6 | 1.4 | 5.8 |

| Speed (m/s) | Position | Stance Time: Median | Stance Time: IQR | Stance Time: CI Low | Stance Time: CI High | Swing Time: Median | Swing Time: IQR | Swing Time: CI Low | Swing Time: CI High |
|---|---|---|---|---|---|---|---|---|---|
| 1.00 | Hand | 7.5 | 4.6 | 7.5 | 11.3 | 13.2 | 8.8 | 13.3 | 19.7 |
| 1.00 | Jacket | 10.3 | 5.4 | 10.4 | 14.8 | 17.7 | 11.1 | 18.6 | 27.1 |
| 1.00 | Trouser | 7.6 | 4.1 | 6.5 | 18.8 | 13.4 | 8.1 | 12.8 | 23.1 |
| 1.25 | Hand | 7.2 | 5.1 | 7.1 | 10.0 | 11.5 | 7.3 | 11.3 | 14.5 |
| 1.25 | Jacket | 10.1 | 7.3 | 9.6 | 15.6 | 17.0 | 10.0 | 16.0 | 22.5 |
| 1.25 | Trouser | 7.3 | 4.2 | 6.6 | 9.7 | 11.3 | 6.1 | 10.2 | 15.4 |
| 1.50 | Hand | 10.6 | 9.6 | 9.6 | 16.1 | 13.5 | 7.2 | 12.6 | 18.0 |
| 1.50 | Jacket | 15.7 | 19.0 | 15.9 | 26.2 | 20.7 | 19.8 | 19.8 | 29.1 |
| 1.50 | Trouser | 6.2 | 3.4 | 5.7 | 10.4 | 9.9 | 4.9 | 9.3 | 13.5 |
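
The median and IQR columns above can be illustrated with a minimal sketch. It assumes the tabulated values are relative errors in percent, computed as the absolute difference between predicted and reference values divided by the reference value; that definition is an assumption, as is every name in the code. The stride times are made-up illustrative numbers, not study data, and the confidence interval columns are left out because the method used to obtain them is not stated here.

```python
import statistics


def relative_error_percent(predicted, reference):
    """Absolute relative error of one prediction, in percent of the reference.

    Assumed definition; the formula used in the study is not given here.
    """
    return abs(predicted - reference) / reference * 100.0


def summarize(errors):
    """Return the median and interquartile range (IQR) of a list of errors."""
    q1, _, q3 = statistics.quantiles(errors, n=4, method="inclusive")
    return {"median": statistics.median(errors), "iqr": q3 - q1}


# Hypothetical stride times in seconds (illustrative only, not study data).
reference_s = [1.00, 1.10, 1.20, 1.05, 0.95]
predicted_s = [1.02, 1.12, 1.14, 1.10, 0.95]

errors = [relative_error_percent(p, r) for p, r in zip(predicted_s, reference_s)]
summary = summarize(errors)
print(f"median = {summary['median']:.2f} %, IQR = {summary['iqr']:.2f} %")
```

The median and IQR are used in this sketch because they are the summary statistics reported in the table; they are also less sensitive to a few large errors than the mean and standard deviation.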

| Speed | Position | Double Support | Stride Time | Stance Time | Swing Time |
|---|---|---|---|---|---|
| 1.0 m/s | Hand | −0.001 | 0.804 ** | 0.695 ** | 0.492 * |
| 1.0 m/s | Jacket | −0.151 | 0.989 ** | 0.761 ** | 0.535 ** |
| 1.0 m/s | Trouser | 0.026 | 0.614 * | 0.438 * | 0.451 * |
| 1.25 m/s | Hand | 0.060 | 0.951 ** | 0.527 ** | 0.484 ** |
| 1.25 m/s | Jacket | −0.092 | 0.941 ** | 0.480 * | 0.525 ** |
| 1.25 m/s | Trouser | 0.255 | 0.554 * | 0.498 * | 0.296 |
| 1.5 m/s | Hand | −0.130 | 0.344 * | 0.086 * | 0.541 ** |
| 1.5 m/s | Jacket | 0.241 | 0.727 ** | 0.608 ** | 0.464 * |
| 1.5 m/s | Trouser | 0.160 | 0.744 ** | 0.614 ** | 0.545 ** |
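
As a rough illustration of how an inter-subject association such as those tabulated above could be computed, the sketch below correlates one value per participant from the reference system with the matching predicted value. The coefficient type is not stated here, so the use of Pearson's r is an assumption (a rank-based coefficient would be an equally plausible choice). The function name and the data are hypothetical and are not study data.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squared deviations from the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical per-participant mean stride times in seconds (illustrative only).
reference_s = [1.00, 1.10, 1.20, 1.05, 0.95]
predicted_s = [1.02, 1.12, 1.14, 1.10, 0.95]

r = pearson_r(reference_s, predicted_s)
print(f"r = {r:.3f}")
```

A coefficient near zero, as in the double support column, would mean that participants with longer reference values are not systematically predicted to have longer values, even if the average error were small.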

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Larsen, A.G.; Sadolin, L.Ø.; Thomsen, T.R.; Oliveira, A.S. Extraction of Clinically Relevant Temporal Gait Parameters from IMU Sensors Mimicking the Use of Smartphones. Sensors 2025, 25, 4470. https://doi.org/10.3390/s25144470