Smartphone-Based Sensing System for Identifying Artificially Marbled Beef Using Texture and Color Analysis to Enhance Food Safety

Abstract

1. Introduction

1.1. Appearance Difference Between Japanese Wagyu Beef and Fat-Injected Beef

1.2. Research Motivation and Purpose

1.3. Study Limitations

2. Literature Review

2.1. Overview of Beef Inspection and Quality Grades

2.2. Detection Techniques for Beef Marbling

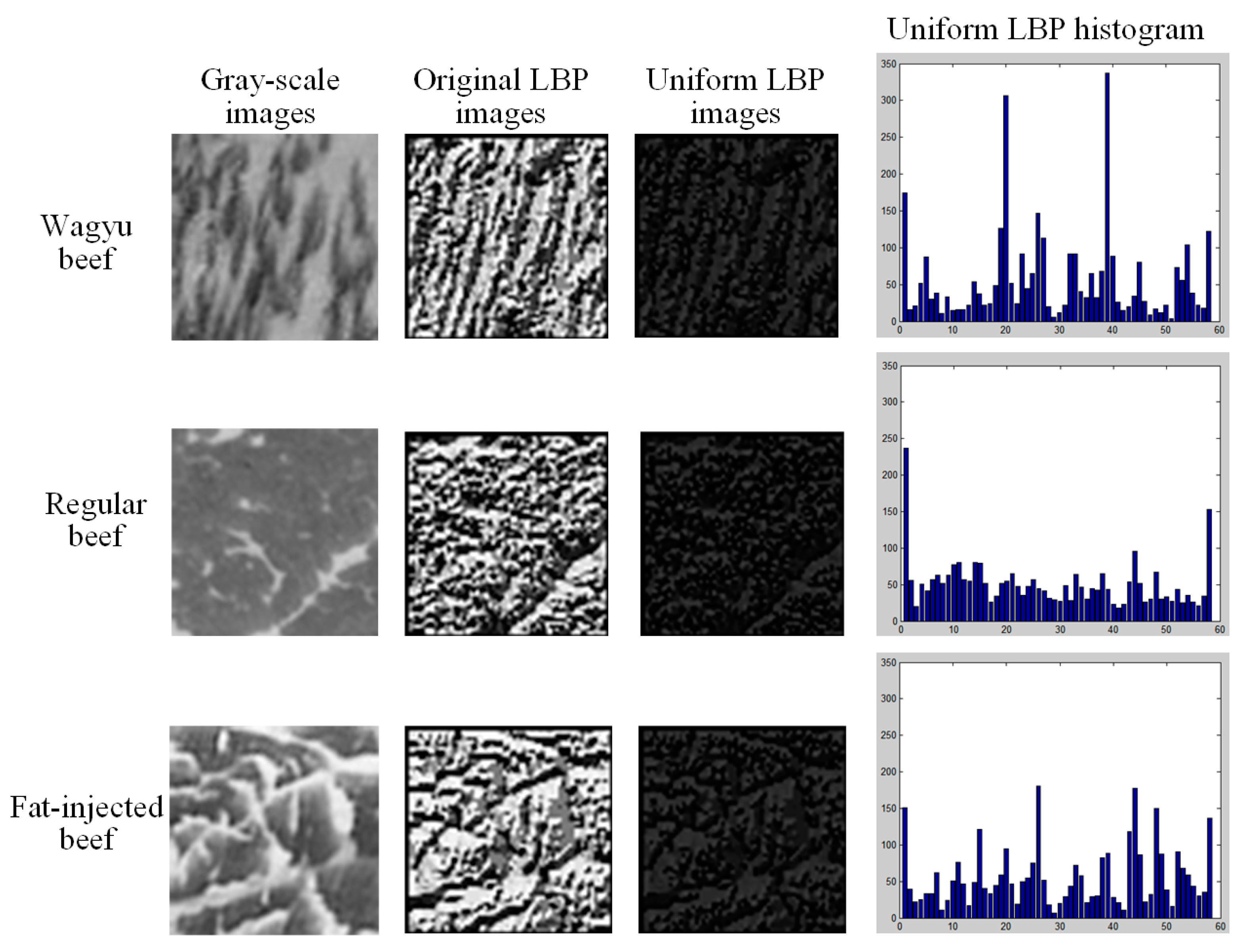

2.3. Texture-Based Analysis Methods for Beef Marbling

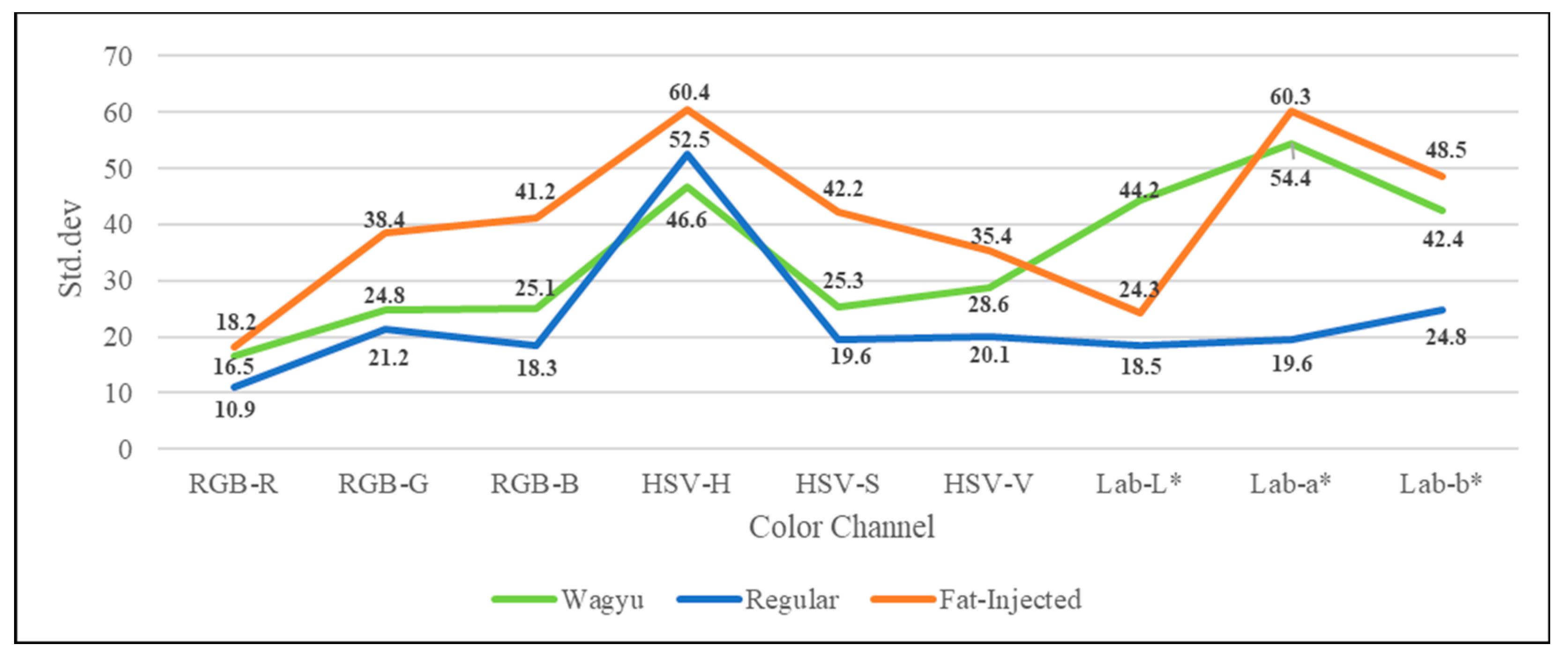

2.4. Color-Based Analysis Models for Beef Marbling

2.5. Machine Learning Approaches for Classification

3. Proposed Methods

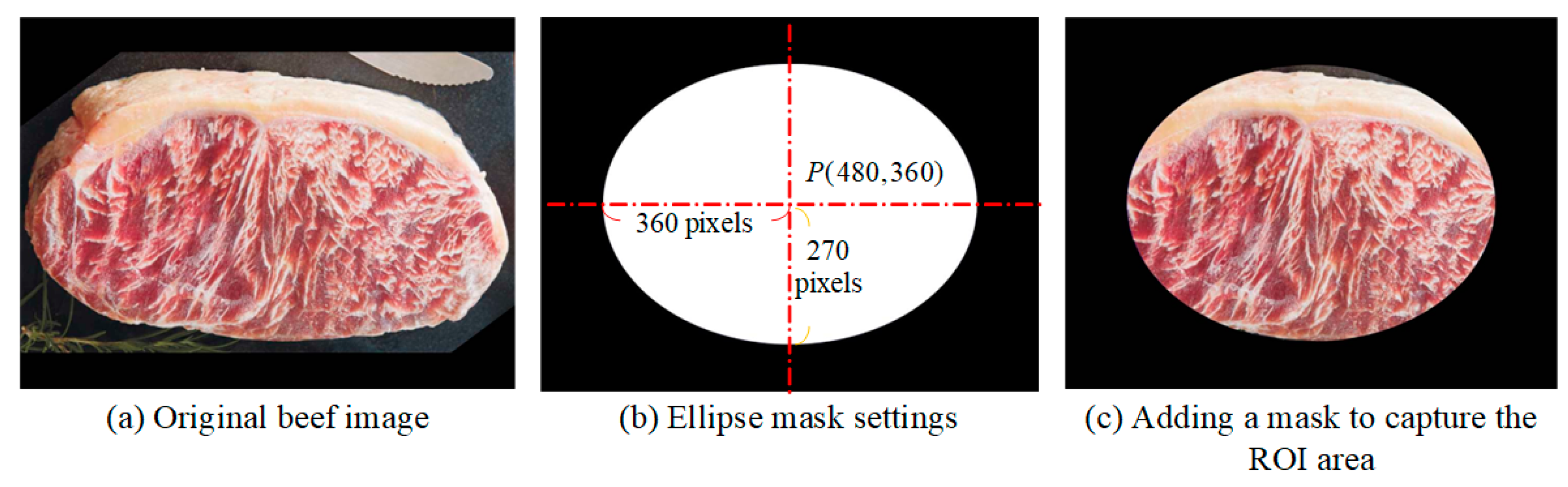

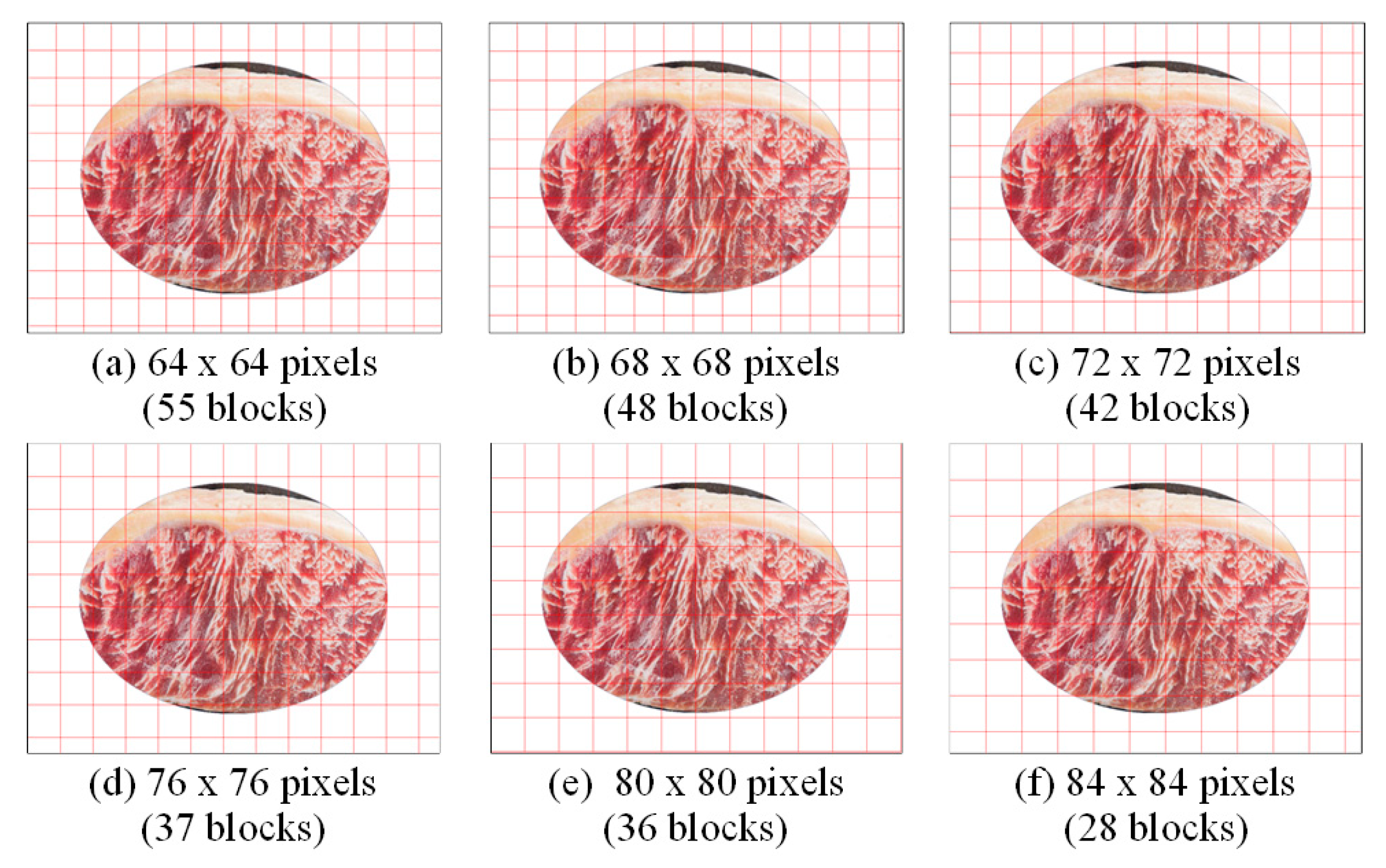

3.1. ROI Extraction and Gridding in a Beef Image

3.2. Feature Extraction in a Gridded ROI Beef Image

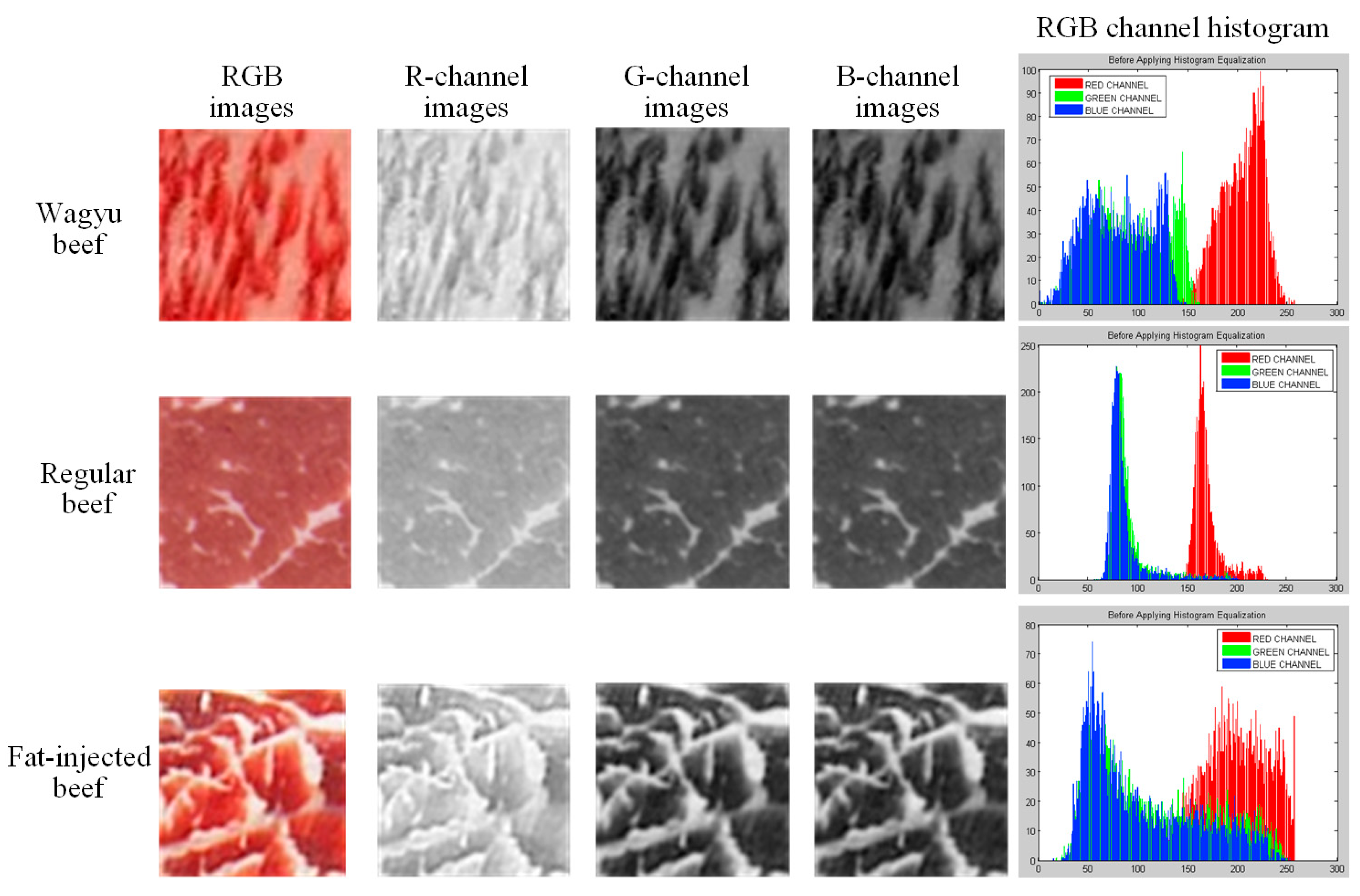

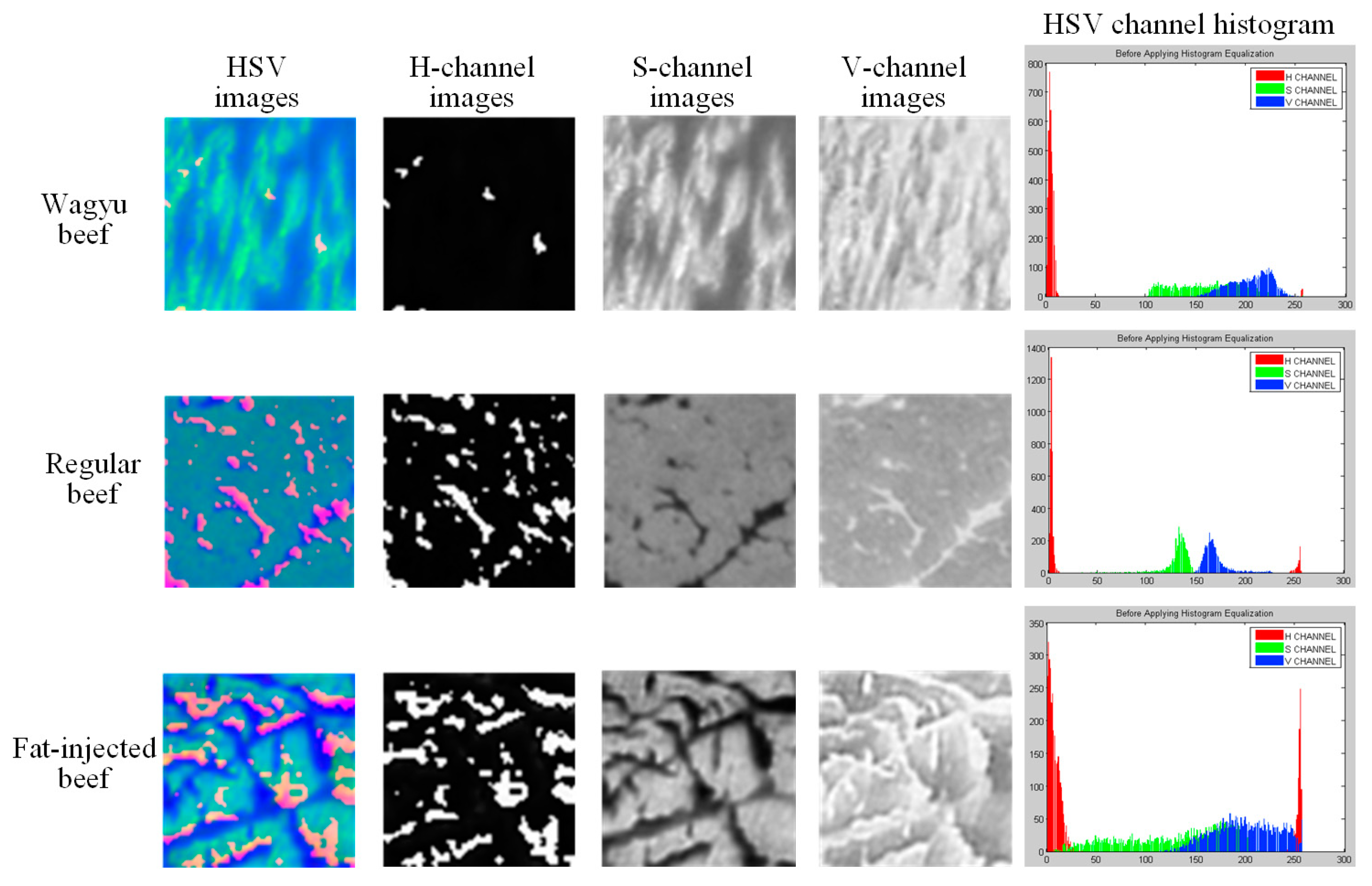

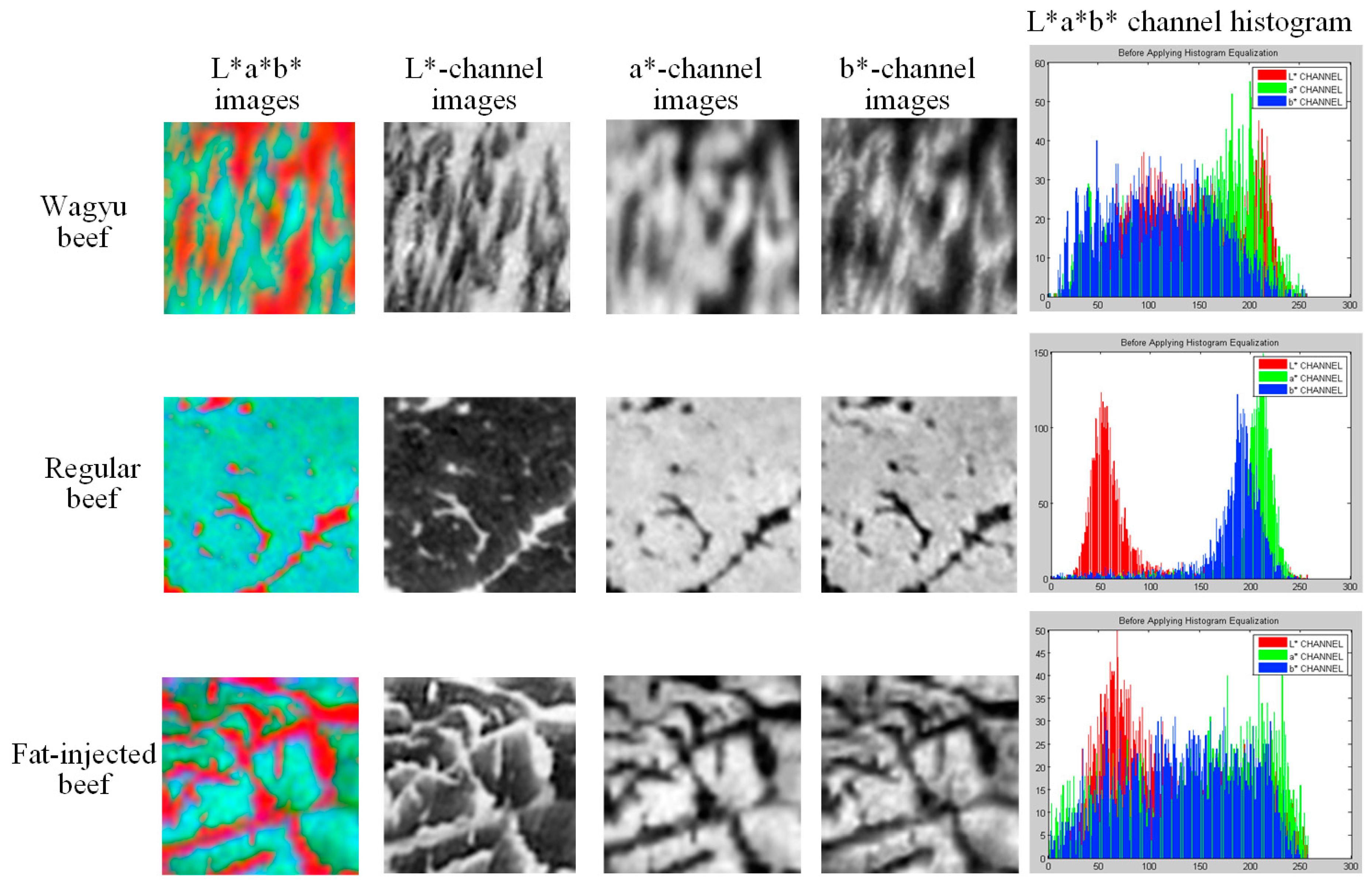

3.2.1. Color Models of Beef Color Features

3.2.2. LBP Model of Beef Texture Features

3.3. Machine Learning Models Applied to Artificially Marbled Beef Detection

3.3.1. SVM Model

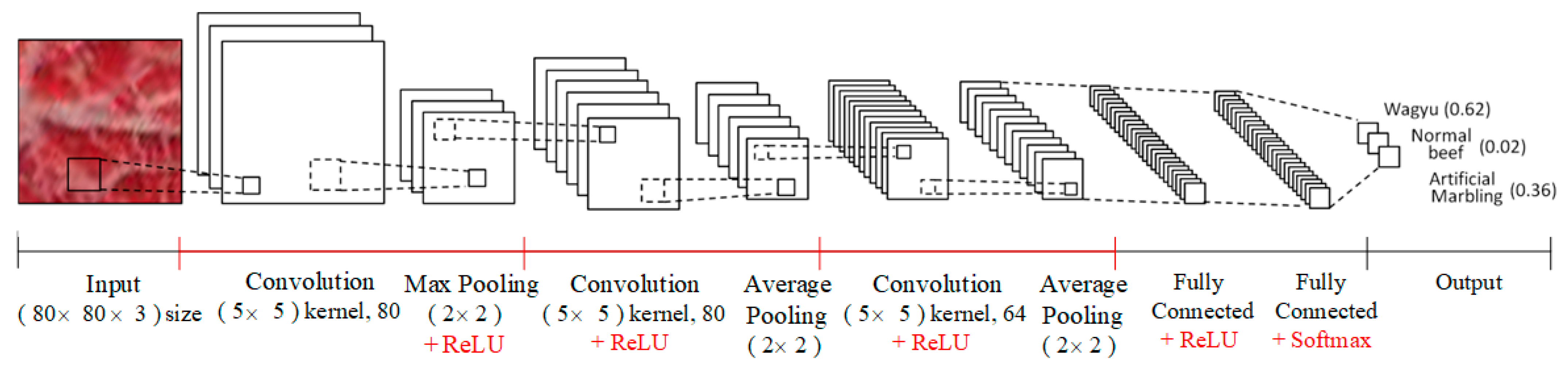

3.3.2. CNN Model

3.4. Artificially Marbled Beef Detection System

3.4.1. Image Capture and System Requirements

3.4.2. Performance Evaluation Metrics

- Performance evaluation based on the block level

- Performance evaluation based on the image level

4. Experiments and Results

4.1. Parameter Optimization Results

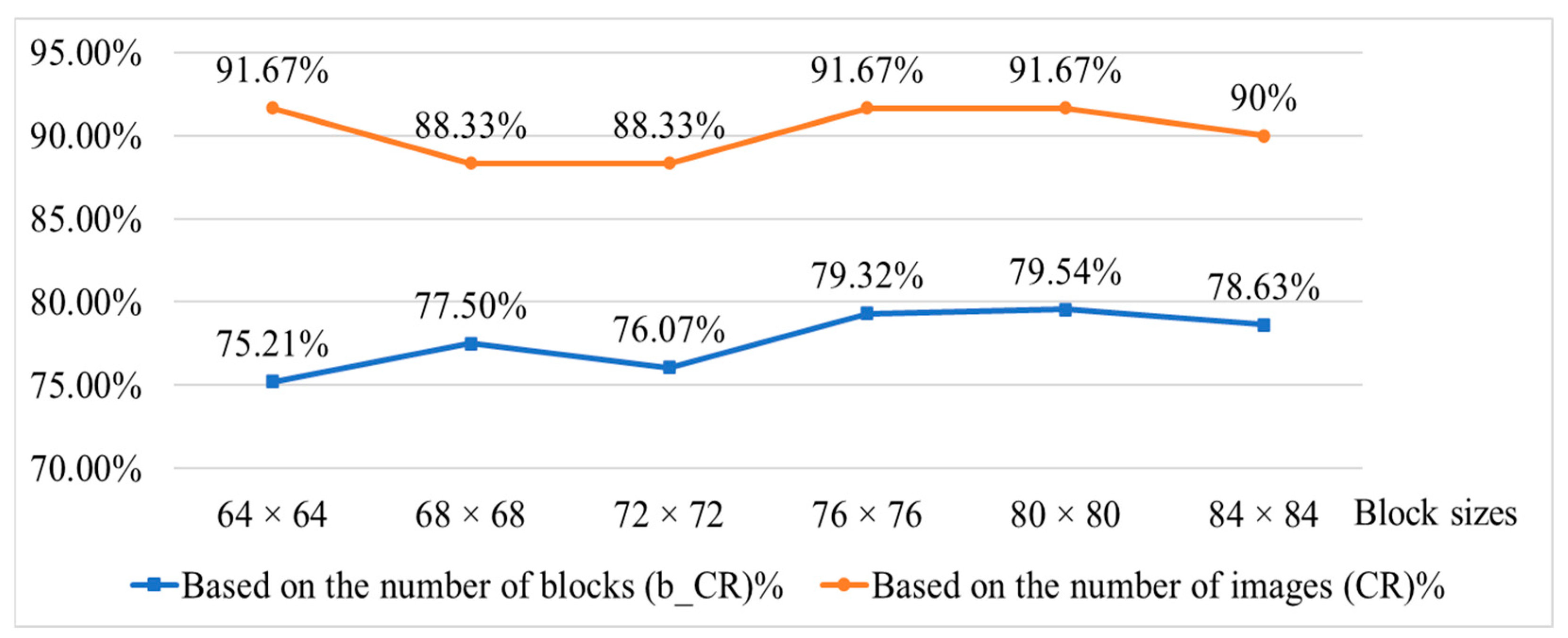

4.1.1. Parameter Setting of Grid Block Size

4.1.2. Parameter Setting of LBP Feature Operator

4.1.3. Parameter Setting of SVM Classification Model

4.1.4. Feature Vector Setting for Different Feature Pattern Combinations

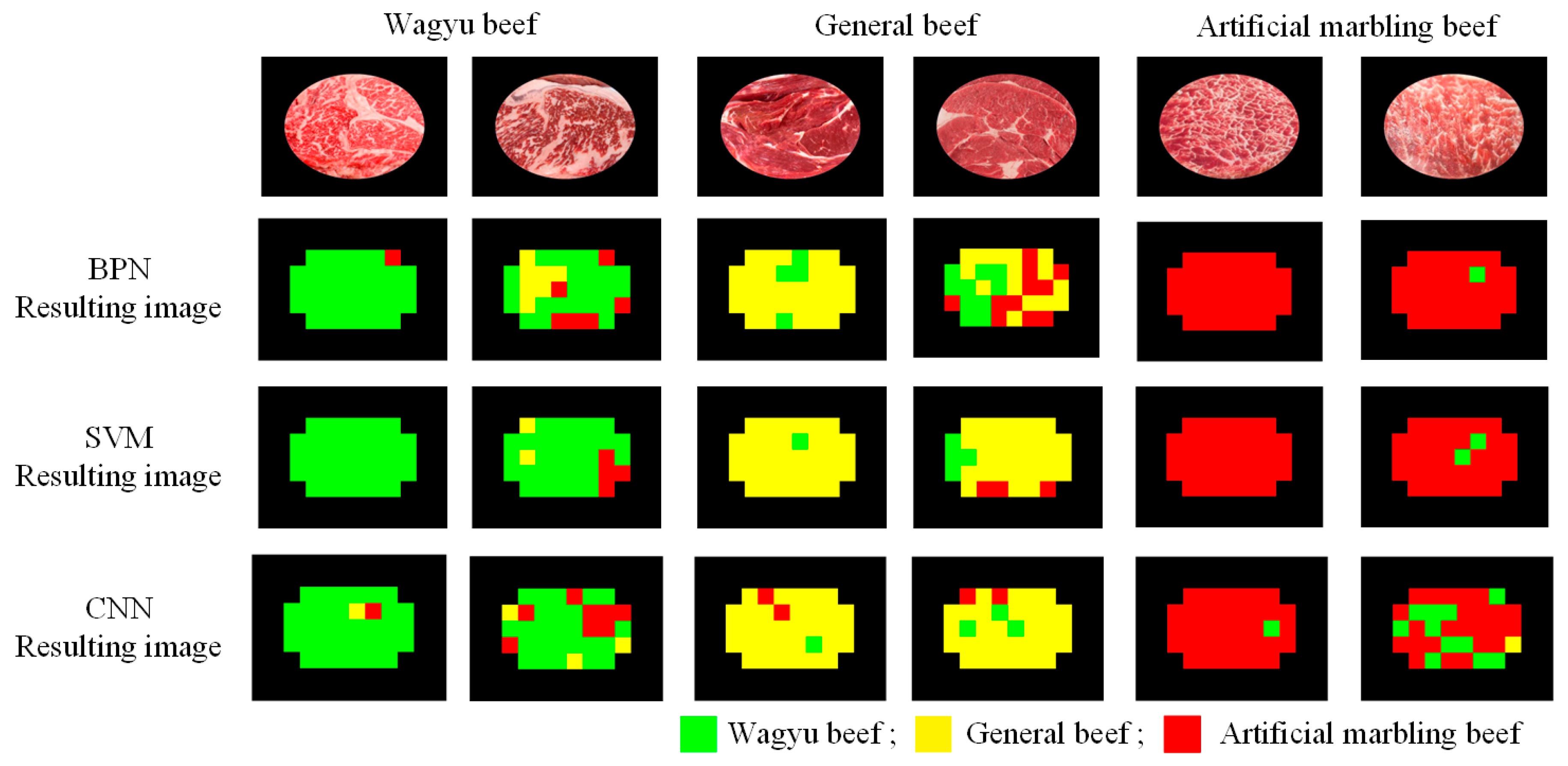

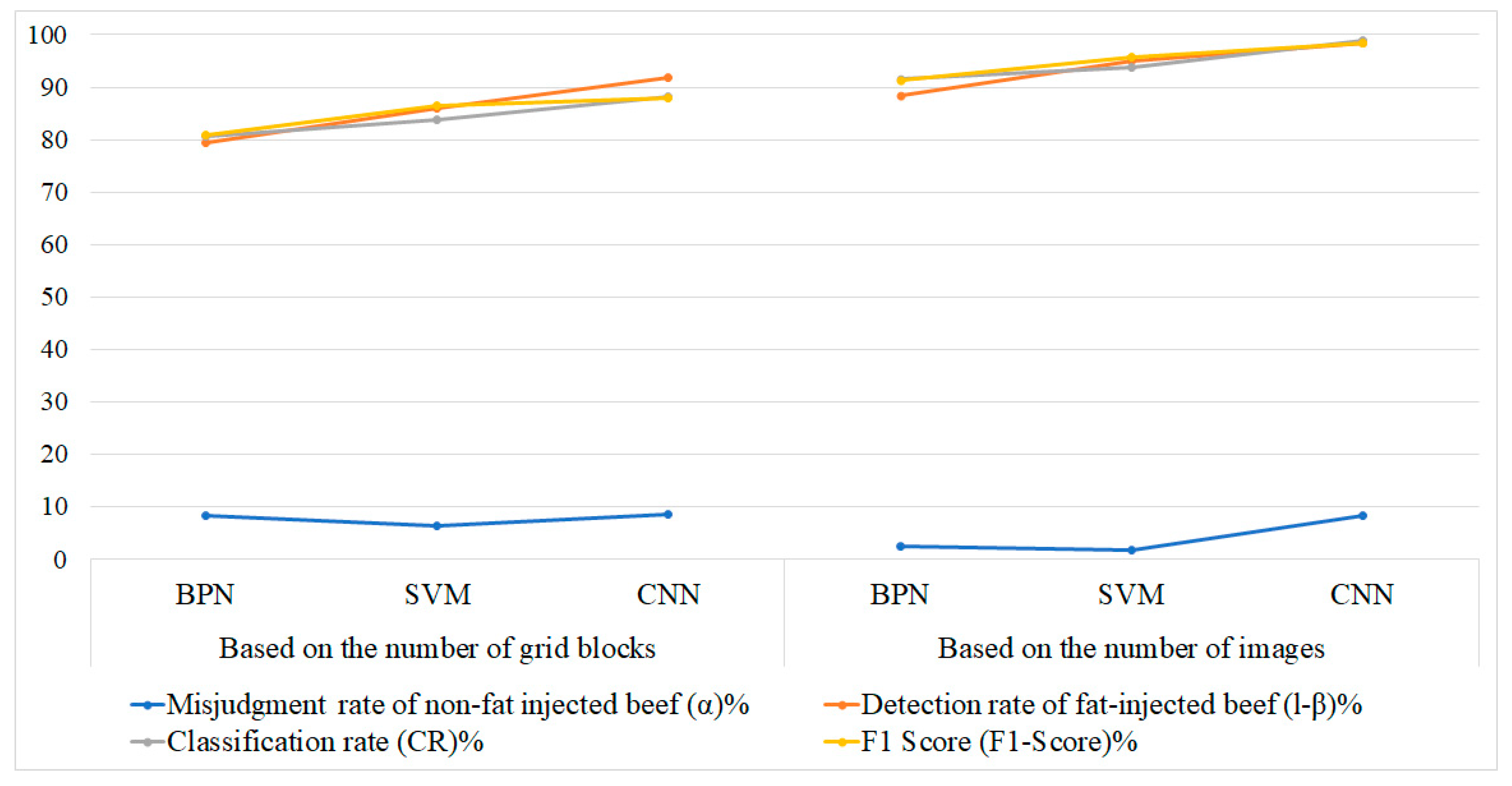

4.2. Performance Results of Large-Sample Experiments

4.3. Impact of External Factors

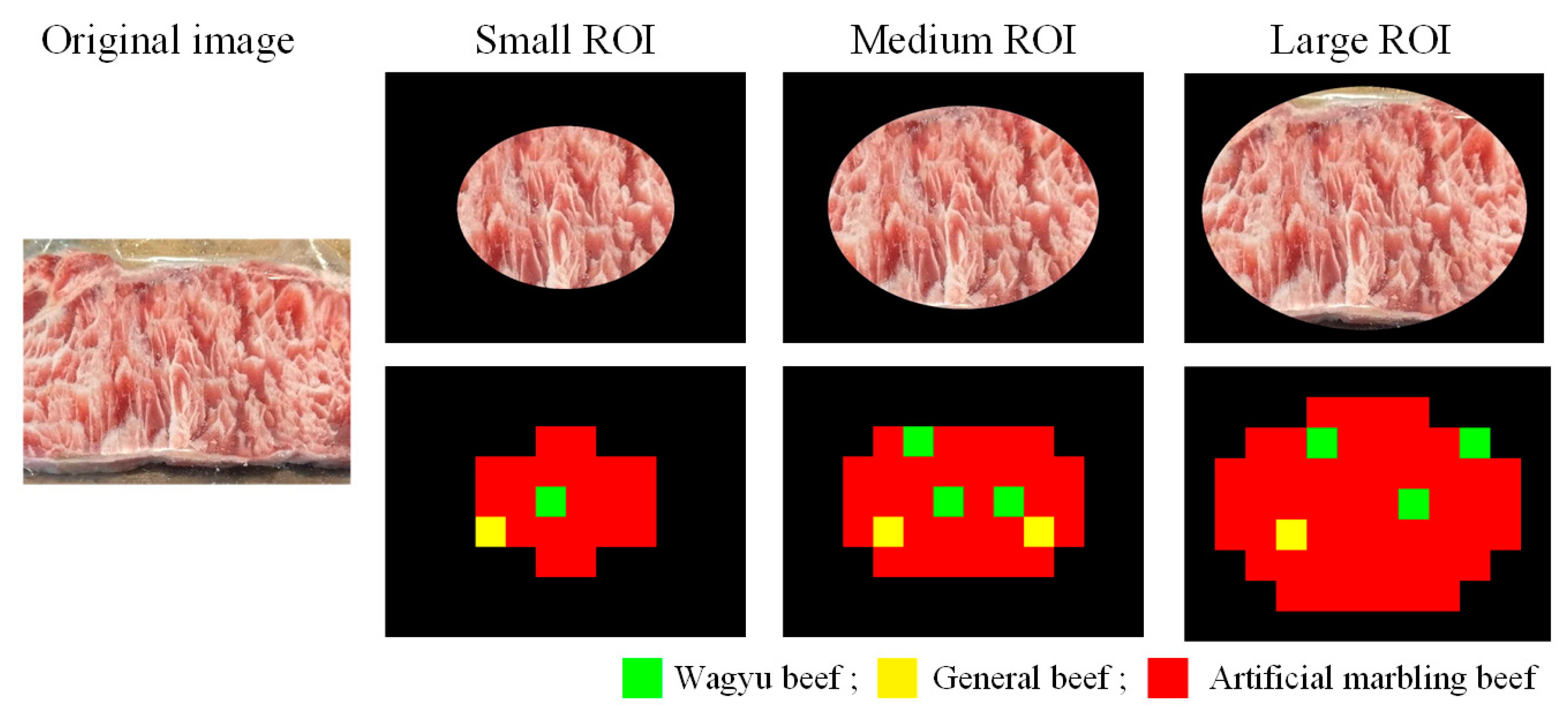

4.3.1. The Impact of ROI Mask Size on Detection Effectiveness

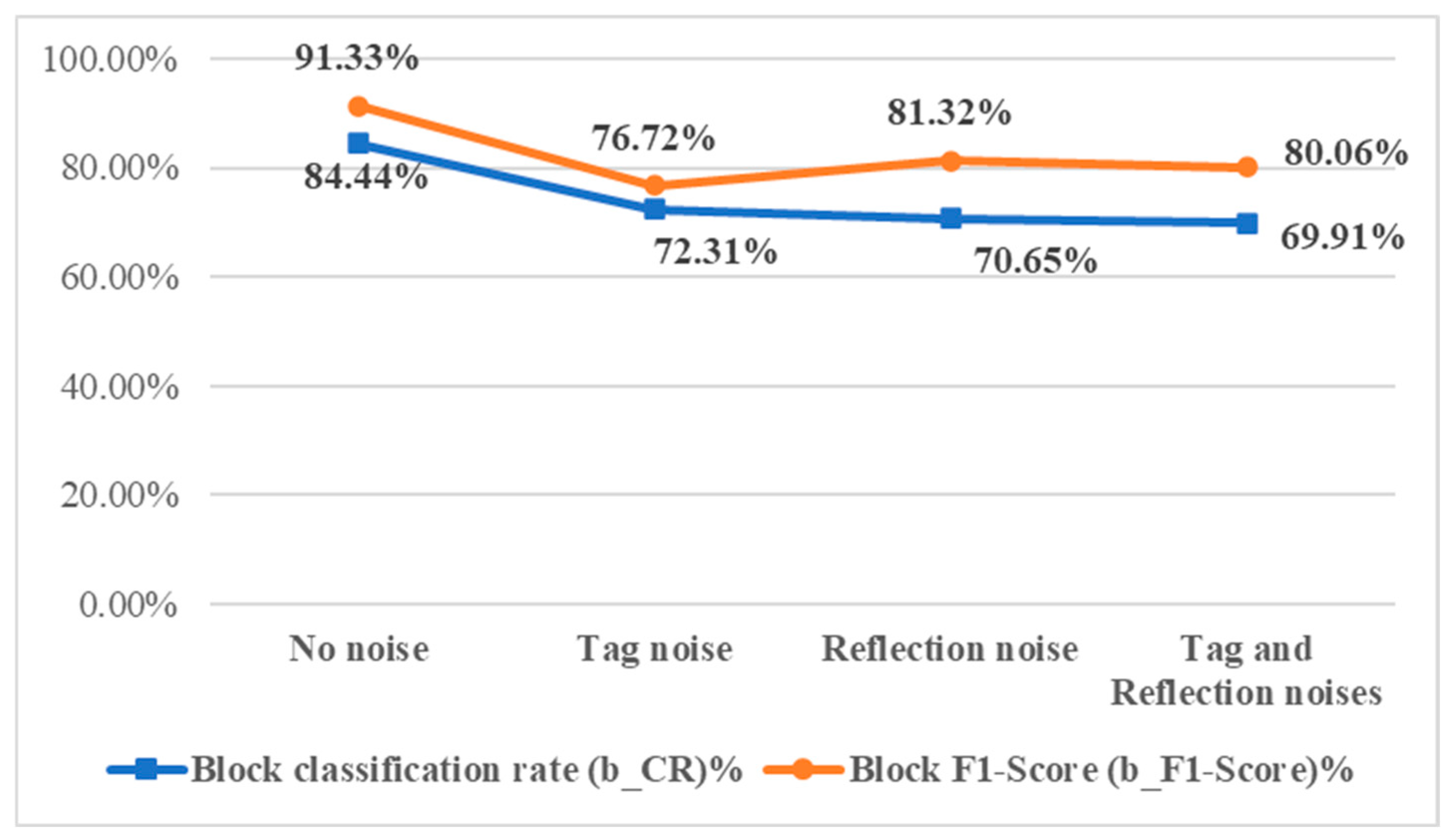

4.3.2. Impact of Surface Noises on Detection Effectiveness

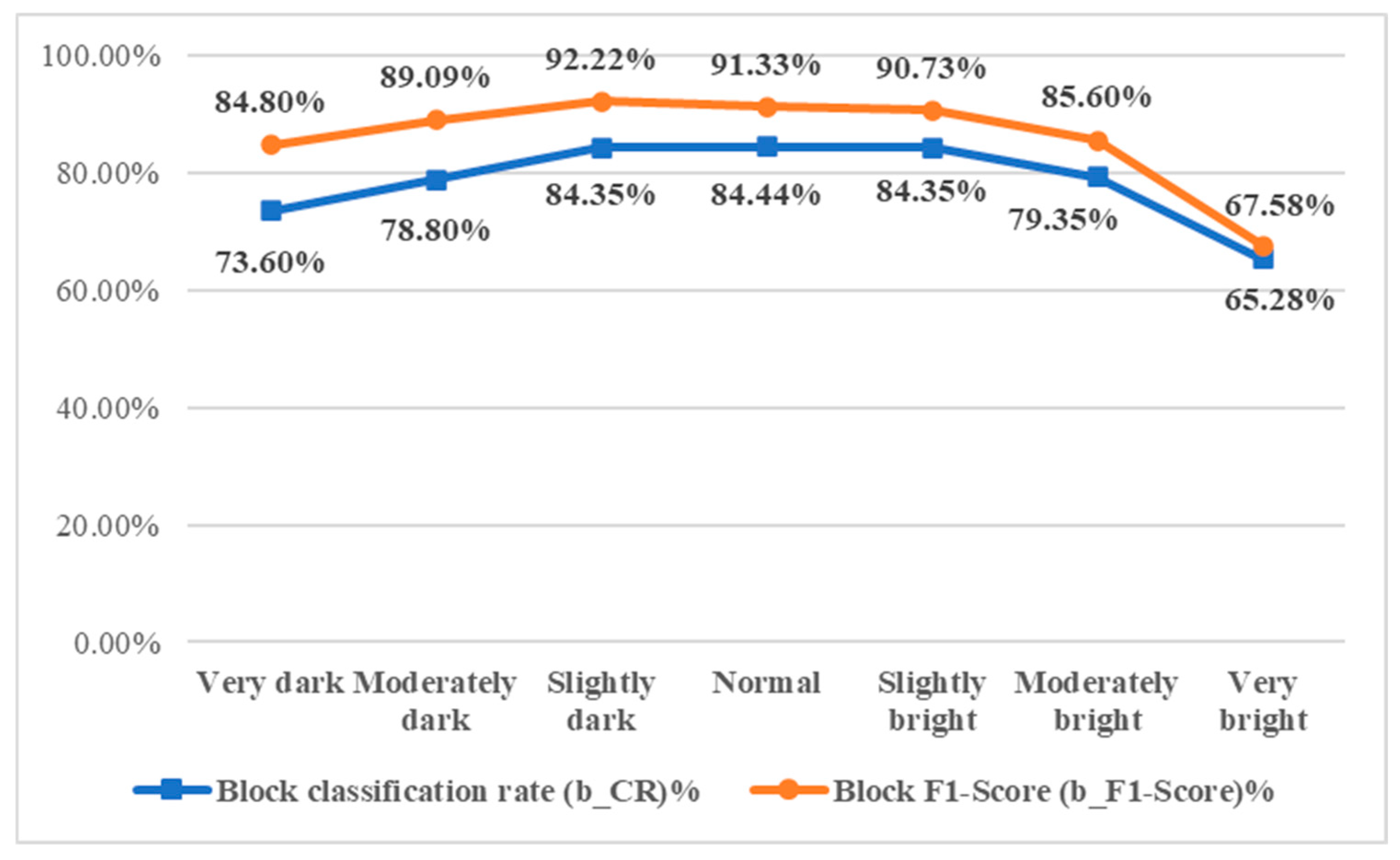

4.3.3. Effect of Changes in Image Brightness on Detection Effectiveness

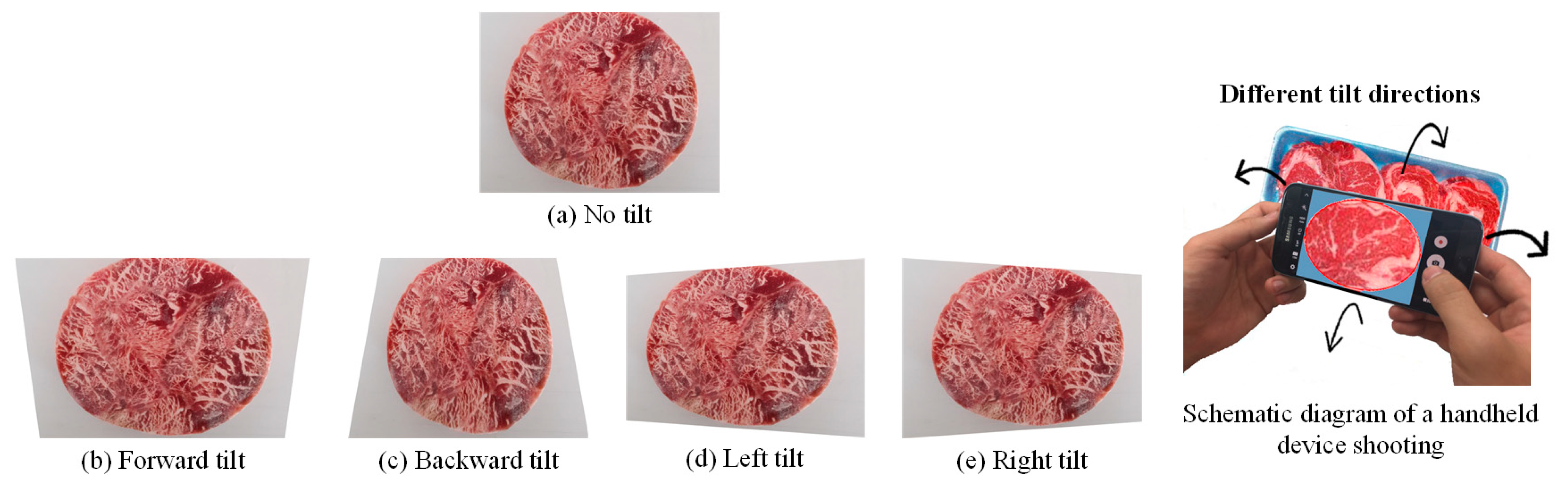

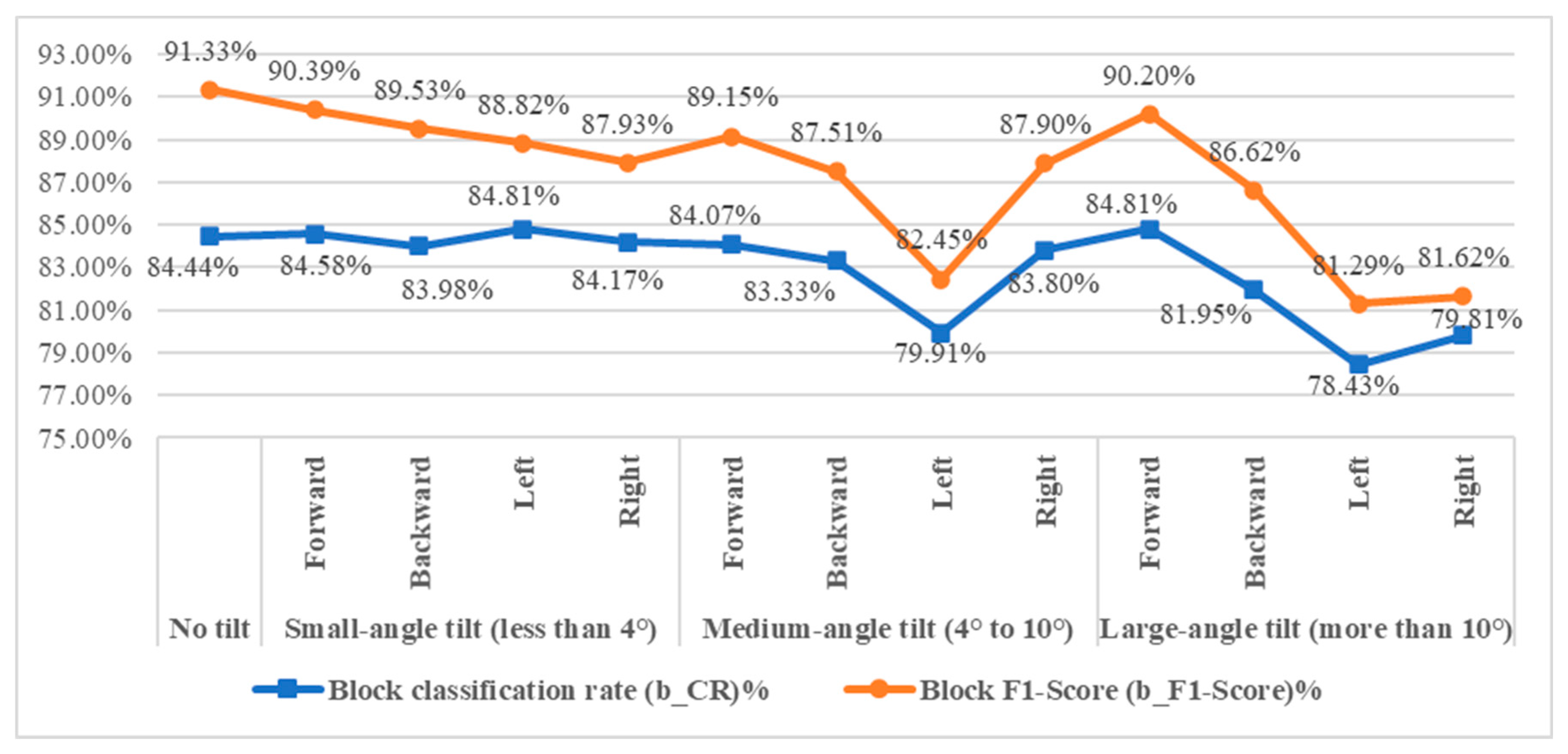

4.3.4. Impact of Changing the Image Capture Angle on Detection Effectiveness

4.4. Results and Discussion

5. Concluding Remarks

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Name | Wagyu Beef | Artificially Marbled Beef |
|---|---|---|
| Captured steak images |  |  |
| Appearance characteristics | 1. Fat appears as dots and streaks, mostly not connected. 2. Fat distribution is scattered and relatively uneven. 3. Larger number of individual fat deposits. 4. Fat thickness varies. 5. Average fat area is relatively small. 6. Fat color varies in intensity; lean meat color is bright red. | 1. Fat appears as streaks, most of which are interconnected. 2. Fat distribution shows clear directionality and is relatively uniform. 3. Fewer individual fat deposits. 4. Fat thickness is relatively uniform. 5. Average fat area is relatively large. 6. Fat color is more uniform; lean meat color tends toward dark red. |

| No. | Various Combinations of Texture and Color Models | Number of Feature Values |
|---|---|---|
| 1 | LBP + RGB | 59 + 6 = 65 |
| 2 | LBP + HSV | 59 + 6 = 65 |
| 3 | LBP + CIE L*a*b* | 59 + 6 = 65 |
| 4 | LBP + RGB + HSV | 59 + 6 + 6 = 71 |
| 5 | LBP + RGB + HSV + CIE L*a*b* | 59 + 6 + 6 + 6 = 77 |
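The 59 + 6 = 65 count in the first row can be illustrated with a minimal sketch. It assumes the 59 texture values are the bins of a uniform LBP(8, 1) histogram (58 uniform patterns plus one shared bin) and the six color values are the per-channel mean and standard deviation of a block. The function names, the square 3 × 3 neighborhood without interpolation, the luminance weights used for grayscale conversion, and the use of the population standard deviation are all assumptions made for illustration, not details confirmed by the tables.

```python
from statistics import mean, pstdev

# The 8 neighbours of a pixel on the 3x3 ring, listed in circular order.
# Assumption: square neighbourhood, no interpolation of diagonal samples.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def transitions(code):
    """Count 0/1 changes around the circular 8-bit pattern."""
    bits = [(code >> i) & 1 for i in range(8)]
    return sum(bits[i] != bits[(i + 1) % 8] for i in range(8))


# The 58 "uniform" codes (at most two transitions) each get their own bin;
# every other code shares bin 58, which gives 59 bins in total.
UNIFORM_CODES = [c for c in range(256) if transitions(c) <= 2]
BIN_OF = {code: i for i, code in enumerate(UNIFORM_CODES)}


def lbp_histogram(gray):
    """Normalised 59-bin uniform LBP histogram of a 2-D list of gray levels."""
    hist = [0] * 59
    for y in range(1, len(gray) - 1):
        for x in range(1, len(gray[0]) - 1):
            code = 0
            for bit, (dy, dx) in enumerate(OFFSETS):
                if gray[y + dy][x + dx] >= gray[y][x]:
                    code |= 1 << bit
            hist[BIN_OF.get(code, 58)] += 1
    total = sum(hist)
    return [count / total for count in hist]


def rgb_statistics(block):
    """Return [muR, muG, muB, sigmaR, sigmaG, sigmaB] for a block of (R, G, B) pixels."""
    channels = [[px[ch] for row in block for px in row] for ch in range(3)]
    return [mean(c) for c in channels] + [pstdev(c) for c in channels]


def feature_vector(block):
    """Concatenate 59 texture values and 6 colour values (65 in total)."""
    # Assumed grayscale conversion: standard luminance weights.
    gray = [[0.299 * r + 0.587 * g + 0.114 * b for r, g, b in row] for row in block]
    return lbp_histogram(gray) + rgb_statistics(block)
```

Calling `feature_vector` on any block of at least 3 × 3 pixels returns a list of length 65; adding HSV or L*a*b* statistics in the same way would extend it to the 71 and 77 values listed in rows 4 and 5.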

| Parameter | Setting |
|---|---|
| Input feature vector | 65 values. Texture features: LBP0, LBP1, LBP2, …, and LBP58 (59 values in total). Color features: μR, μG, μB, σR, σG, and σB (six values in total for the RGB model). |
| Penalty parameter (C) | Original setting 2⁶ (also 2⁴, 2⁵, 2⁷, and 2⁸; five parameters in total) |
| Kernel coefficient (γ) | Original setting 2⁰ (also 2⁻², 2⁻¹, 2¹, and 2²; five coefficients in total) |
| Output class | Y1 (Wagyu beef), Y2 (general beef), and Y3 (fat-injected beef) |

| Small-Sample Experiments (Image Level) | Training Images | Validation Images | Testing Images | Total |
|---|---|---|---|---|
| Wagyu beef | 40 | 20 | 20 | 80 |
| General beef | 40 | 20 | 20 | 80 |
| Fat-injected beef | 40 | 20 | 20 | 80 |
| Total | 120 | 60 | 60 | 240 |

| Small-Sample Experiments (Block Level) | Training Blocks | Validation Blocks | Testing Blocks | Total |
|---|---|---|---|---|
| Wagyu beef | 1440 | 720 | 720 | 2880 |
| General beef | 1440 | 720 | 720 | 2880 |
| Fat-injected beef | 1440 | 720 | 720 | 2880 |
| Total | 4320 | 2160 | 2160 | 8640 |

| Grid Block Sizes | 64 × 64 | 68 × 68 | 72 × 72 | 76 × 76 | 80 × 80 | 84 × 84 |
|---|---|---|---|---|---|---|
| Misjudgment rates of non-fat-injected beef (b_α)%, (α)% | 10.32 (5.00) | 8.54 (5.00) | 9.94 (5.00) | 8.38 (2.50) | 7.57 (2.50) | 9.55 (5.00) |
| Detection rates of fat-injected beef (b_(1 − β))%, (1 − β)% | 73.82 (85.00) | 74.38 (75.00) | 71.90 (75.00) | 75.68 (80.00) | 75.56 (80.00) | 78.04 (80.00) |
| Precisions for fat-injected beef (b_P)%, (P)% | 78.15 (89.47) | 81.32 (89.47) | 78.34 (88.24) | 81.87 (94.12) | 83.31 (94.12) | 80.33 (88.89) |
| Classification rates (b_CR)%, (CR)% | 75.21 (91.67) | 77.50 (88.33) | 76.07 (88.33) | 79.32 (91.67) | 79.54 (91.67) | 78.63 (90.00) |
| F1-Scores (b_F1-Score)%, (F1-Score)% | 75.29 (87.18) | 77.70 (88.28) | 74.98 (88.28) | 78.65 (86.49) | 79.25 (86.49) | 79.17 (84.21) |
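The image-level values in the 80 × 80 column are consistent with the usual confusion-matrix definitions of these indicators, which the following sketch applies. The counts used in the usage note (16 of 20 fat-injected test images detected, 1 of 40 non-fat-injected test images misjudged, 55 of 60 images classified correctly) are hypothetical values chosen because they reproduce the tabulated percentages; they are inferred from the small-sample image counts rather than reported directly. The function names are illustrative.

```python
def detection_metrics(tp, fn, fp, tn):
    """Binary indicators for 'fat-injected' versus 'non-fat-injected', in percent.

    tp: fat-injected samples detected      fn: fat-injected samples missed
    fp: non-fat-injected samples misjudged tn: non-fat-injected samples passed
    """
    alpha = fp / (fp + tn)          # misjudgment rate of non-fat-injected beef
    detection = tp / (tp + fn)      # detection rate of fat-injected beef (1 - beta)
    precision = tp / (tp + fp)      # precision for fat-injected beef
    f1 = 2 * precision * detection / (precision + detection)
    return {
        "alpha": round(100 * alpha, 2),
        "detection": round(100 * detection, 2),
        "precision": round(100 * precision, 2),
        "f1": round(100 * f1, 2),
    }


def classification_rate(correct, total):
    """Share of samples assigned to their true class (three classes), in percent."""
    return round(100 * correct / total, 2)
```

With the hypothetical counts above, `detection_metrics(16, 4, 1, 39)` gives a misjudgment rate of 2.5, a detection rate of 80.0, a precision of 94.12, and an F1-score of 86.49, and `classification_rate(55, 60)` gives 91.67.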

| LBP(P, R) | LBP(8, 2) | LBP(8, 1) | LBP(16, 2) |
|---|---|---|---|
| Schematic diagram of sampling points for different LBP texture features |  |  |  |
| Misjudgment rate of non-fat-injected beef (b_α)% | 8.75 | 7.57 | 11.53 |
| Detection rate of fat-injected beef (b_(1 − β))% | 74.03 | 75.56 | 74.31 |
| Precision for fat-injected beef (b_P)% | 80.88 | 83.31 | 76.32 |
| Block classification rate (b_CR)% | 75.60 | 79.54 | 71.30 |
| Block F1-score (b_F1-Score)% | 77.30 | 79.25 | 75.30 |

| γ (Kernel Coefficient) ↓ / C (Penalty Parameter) → | 16 | 32 | 64 | 128 | 256 |
|---|---|---|---|---|---|
| 0.25 | 69.40% | 72.69% | 76.34% | 78.56% | 78.24% |
| 0.5 | 72.55% | 76.90% | 79.07% | 78.80% | 78.38% |
| 1 | 76.67% | 79.26% | 79.54% | 78.75% | 77.96% |
| 2 | 79.17% | 79.35% | 78.56% | 77.45% | 76.11% |
| 4 | 78.44% | 77.31% | 75.37% | 73.61% | 72.64% |
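The grid above spans five powers of two for each parameter, and the best cell can be read off programmatically. The sketch below simply transcribes the tabulated rates and returns the highest one; it does not retrain any model, and the variable and function names are illustrative.

```python
# Candidate values: C = 2^4 ... 2^8 and gamma = 2^-2 ... 2^2.
C_VALUES = [2 ** k for k in range(4, 9)]
GAMMA_VALUES = [2.0 ** k for k in range(-2, 3)]

# Rates (%) transcribed from the table: one row per gamma, one column per C.
RATES = [
    [69.40, 72.69, 76.34, 78.56, 78.24],
    [72.55, 76.90, 79.07, 78.80, 78.38],
    [76.67, 79.26, 79.54, 78.75, 77.96],
    [79.17, 79.35, 78.56, 77.45, 76.11],
    [78.44, 77.31, 75.37, 73.61, 72.64],
]


def best_setting(rates, gammas, cs):
    """Return (rate, gamma, C) for the cell with the highest rate."""
    return max(
        (rates[i][j], gammas[i], cs[j])
        for i in range(len(gammas))
        for j in range(len(cs))
    )


best_rate, best_gamma, best_c = best_setting(RATES, GAMMA_VALUES, C_VALUES)
```

The maximum of 79.54% lies at C = 64 and γ = 1. The neighboring cells along the diagonal (79.35%, 79.26%, 79.17%) are within 0.4 percentage points, so the optimum is fairly flat in this region.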

| Combinations of Feature Types | Misjudgment Rate of Non-Fat-Injected Beef (b_α)% | Detection Rate of Fat-Injected Beef (b_(1 − β))% | Precision for Fat-Injected Beef (b_P)% | Block Classification Rate (b_CR)% | Block F1-Score (b_F1-Score)% |
|---|---|---|---|---|---|
| LBP + RGB | 7.57 | 75.56 | 83.31 | 79.54 | 79.25 |
| LBP + HSV | 10.49 | 73.47 | 77.79 | 76.02 | 75.57 |
| LBP + L*a*b* | 10.69 | 68.89 | 72.15 | 74.72 | 70.48 |
| LBP + RGB + HSV | 9.93 | 74.03 | 78.85 | 76.81 | 76.36 |
| LBP + RGB + HSV + L*a*b* | 8.06 | 73.89 | 82.10 | 79.95 | 77.78 |

| Related Parameters | Preferred Parameter Selection |
|---|---|
| Image block size | 80 × 80 |
| LBP texture operator configuration | LBP(8, 1) |
| Parameter setting of SVM model | C = 64, γ = 1 |
| Combinations of feature types | LBP + RGB |

| Large-Sample Experiments (Image Level) | Training Images | Validation Images | Testing Images | Total |
|---|---|---|---|---|
| Wagyu beef | 120 | 40 | 40 | 200 |
| General beef | 120 | 40 | 40 | 200 |
| Fat-injected beef | 120 | 40 | 40 | 200 |
| Total | 360 | 120 | 120 | 600 |

| Large-Sample Experiments (Block Level) | Training Blocks | Validation Blocks | Testing Blocks | Total |
|---|---|---|---|---|
| Wagyu beef | 4320 | 1440 | 1440 | 7200 |
| General beef | 4320 | 1440 | 1440 | 7200 |
| Fat-injected beef | 4320 | 1440 | 1440 | 7200 |
| Total | 12,960 | 4320 | 4320 | 21,600 |
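Comparing the image-level and block-level counts shows how many blocks each image contributes. The short check below divides the per-class block counts by the per-class image counts for both the small-sample and large-sample experiments; the figure of 36 blocks per image is an inference from the tables, and the names are illustrative.

```python
# Per-class counts (training, validation, testing) transcribed from the tables.
IMAGE_COUNTS = {"small": (40, 20, 20), "large": (120, 40, 40)}
BLOCK_COUNTS = {"small": (1440, 720, 720), "large": (4320, 1440, 1440)}


def blocks_per_image(image_counts, block_counts):
    """Return the set of block-to-image ratios across all experiments and splits."""
    ratios = set()
    for name, images in image_counts.items():
        for n_images, n_blocks in zip(images, block_counts[name]):
            ratios.add(n_blocks / n_images)
    return ratios


RATIOS = blocks_per_image(IMAGE_COUNTS, BLOCK_COUNTS)
```

Every split yields the same ratio of 36, so the block-level totals (8640 and 21,600) are the image-level totals (240 and 600) multiplied by 36.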

| Classifiers | Effectiveness Indicators | Based on Block Level | Based on Image Level |
|---|---|---|---|
| BPN | Misjudgment rate of non-fat-injected beef (α)% | 8.43 | 2.50 |
| BPN | Detection rate of fat-injected beef (1 − β)% | 79.31 | 88.33 |
| BPN | Classification rate (CR)% | 80.57 | 91.67 |
| BPN | F1-score (%) | 80.86 | 91.37 |
| SVM | Misjudgment rate of non-fat-injected beef (α)% | 6.48 | 1.67 |
| SVM | Detection rate of fat-injected beef (1 − β)% | 85.93 | 95.00 |
| SVM | Classification rate (CR)% | 83.81 | 93.89 |
| SVM | F1-score (%) | 86.41 | 95.80 |
| CNN | Misjudgment rate of non-fat-injected beef (α)% | 8.52 | 8.33 |
| CNN | Detection rate of fat-injected beef (1 − β)% | 91.94 | 98.33 |
| CNN | Classification rate (CR)% | 88.07 | 98.89 |
| CNN | F1-score (%) | 87.99 | 98.33 |

| Processing Time of Classifiers | BPN | SVM | CNN |
|---|---|---|---|
| Training time (min) | 8.09 | 3.83 | 38.82 |
| Testing time (s/image) | 0.12 | 0.08 | 0.16 |

| ROI Mask Types | Small ROI Mask | Medium ROI Mask | Large ROI Mask |
|---|---|---|---|
| Mask specifications | Long axis: 576 pixels; short axis: 432 pixels | Long axis: 720 pixels; short axis: 540 pixels | Long axis: 864 pixels; short axis: 648 pixels |
| Mask area | 196,145 pixels | 302,783 pixels | 440,813 pixels |
| Example images |  |  |  |
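The reported mask areas can be compared with the area of an ellipse having the listed axes. The sketch below assumes the ROI mask is an axis-aligned ellipse (suggested by the long-axis and short-axis specification, but not stated in the table) and counts the pixels whose centers fall inside it. The function name is illustrative.

```python
def ellipse_pixel_count(long_axis, short_axis):
    """Count pixels whose centres lie inside an axis-aligned ellipse.

    The ellipse is inscribed in a long_axis x short_axis bounding box.
    """
    a, b = long_axis / 2, short_axis / 2   # semi-axes in pixels
    count = 0
    for y in range(short_axis):
        dy = (y + 0.5 - b) / b
        for x in range(long_axis):
            dx = (x + 0.5 - a) / a
            if dx * dx + dy * dy <= 1.0:
                count += 1
    return count


# Axes and reported areas transcribed from the table.
MASKS = {
    "small": (576, 432, 196145),
    "medium": (720, 540, 302783),
    "large": (864, 648, 440813),
}

# Relative difference between the rasterised ellipse and the reported area.
DEVIATIONS = {
    name: abs(ellipse_pixel_count(long_px, short_px) - reported) / reported
    for name, (long_px, short_px, reported) in MASKS.items()
}
```

Under this assumption the rasterized areas come out within roughly 1% of the reported values. The remaining gap suggests the actual masks differ slightly from an ideal ellipse, for example in how boundary pixels are handled.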

| Brightness Levels | Very Dark | Moderately Dark | Slightly Dark | Normal | Slightly Bright | Moderately Bright | Very Bright |
|---|---|---|---|---|---|---|---|
| Brightness standard | μ − 4.5σ | μ − 3σ | μ − 1.5σ | μ | μ + 1.5σ | μ + 3σ | μ + 4.5σ |
| Average brightness | 21.01 | 46.98 | 72.94 | 98.91 | 124.88 | 150.85 | 176.82 |
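The seven brightness levels are spaced 1.5σ apart around the normal mean μ = 98.91. The sketch below recovers σ from two adjacent table entries (about 17.31, an inferred value that is not stated in the table) and regenerates the target levels. The `shift_brightness` helper shows one simple way to move a set of gray levels to a target mean by adding a constant offset; how brightness was actually varied in the experiments is not given here, so that helper is purely an illustrative assumption.

```python
from statistics import mean

MU = 98.91                               # average brightness of the "Normal" level
SIGMA = (124.88 - 98.91) / 1.5           # inferred from the "Slightly Bright" entry
STEPS = [-4.5, -3.0, -1.5, 0.0, 1.5, 3.0, 4.5]


def brightness_targets(mu, sigma, steps):
    """Target mean brightness for each level, mu + k * sigma."""
    return [mu + k * sigma for k in steps]


def shift_brightness(pixels, target_mean):
    """Add a constant offset so the mean moves to target_mean, clipped to 0..255.

    Assumption: a plain additive shift; clipping can move the final mean
    slightly away from the target for very dark or very bright images.
    """
    offset = target_mean - mean(pixels)
    return [min(255, max(0, round(p + offset))) for p in pixels]


TARGETS = brightness_targets(MU, SIGMA, STEPS)
```

The regenerated targets agree with the tabulated averages to within rounding (differences below 0.02).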

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, H.-D.; Hsieh, Y.-T.; Lin, C.-H. Smartphone-Based Sensing System for Identifying Artificially Marbled Beef Using Texture and Color Analysis to Enhance Food Safety. Sensors 2025, 25, 4440. https://doi.org/10.3390/s25144440
