Wind Turbine Blade Defect Recognition Method Based on Large-Vision-Model Transfer Learning

Abstract

1. Introduction

1.1. Literature Review

1.2. Motivation and Contributions

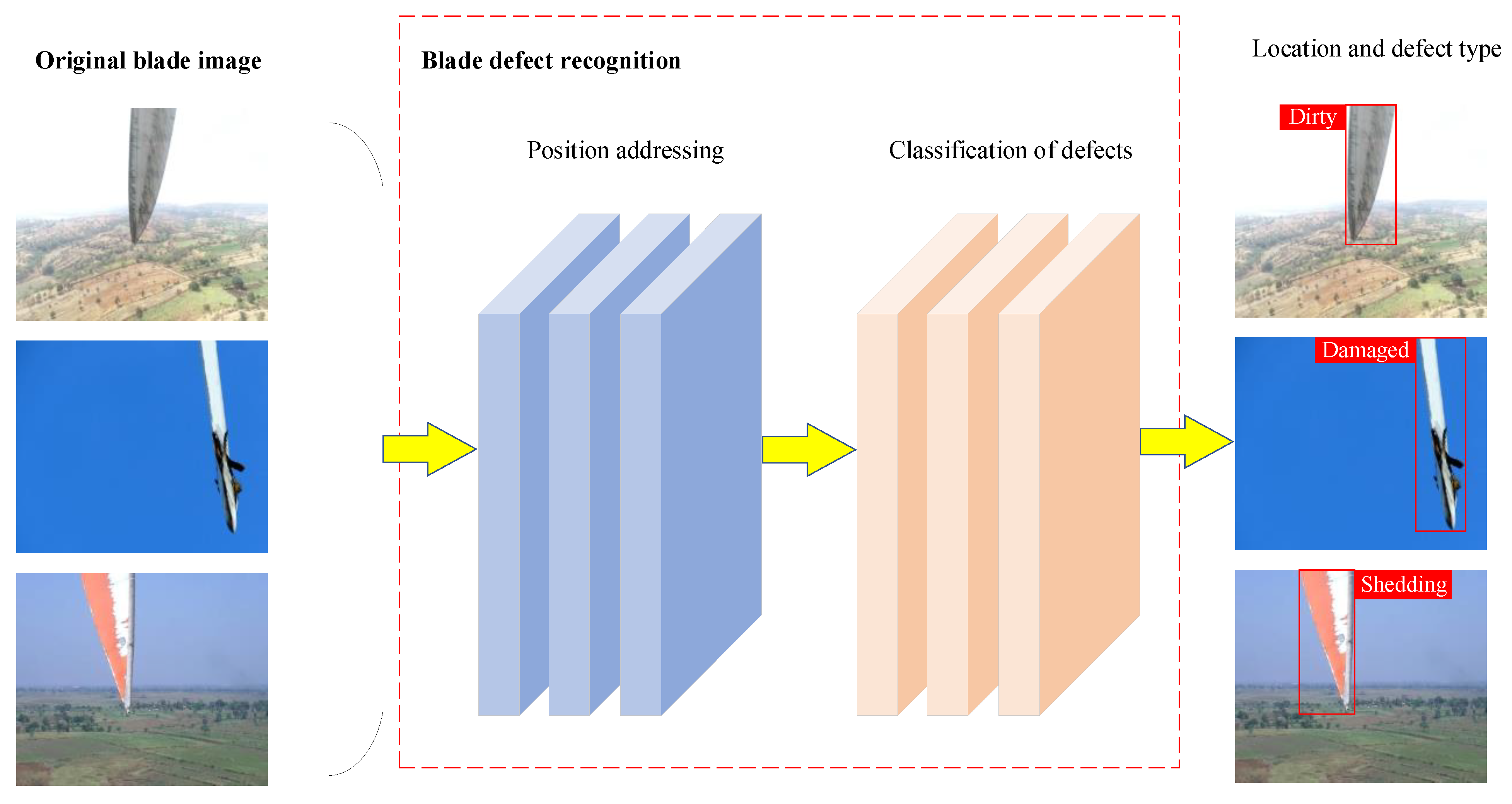

2. Wind Turbine Blade Positioning and Defect Recognition

2.1. Framework for Wind Turbine Blade Defect Recognition

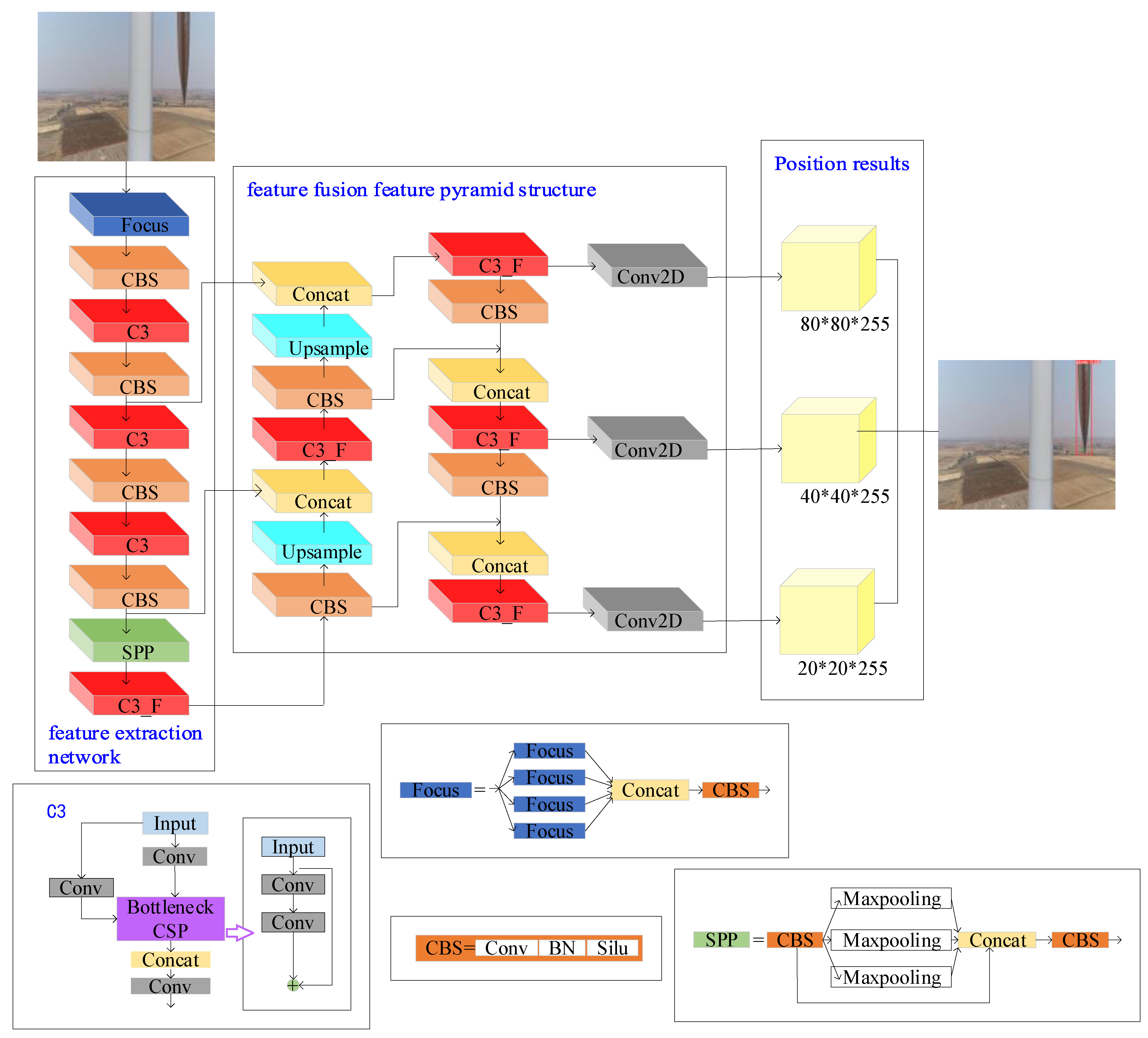

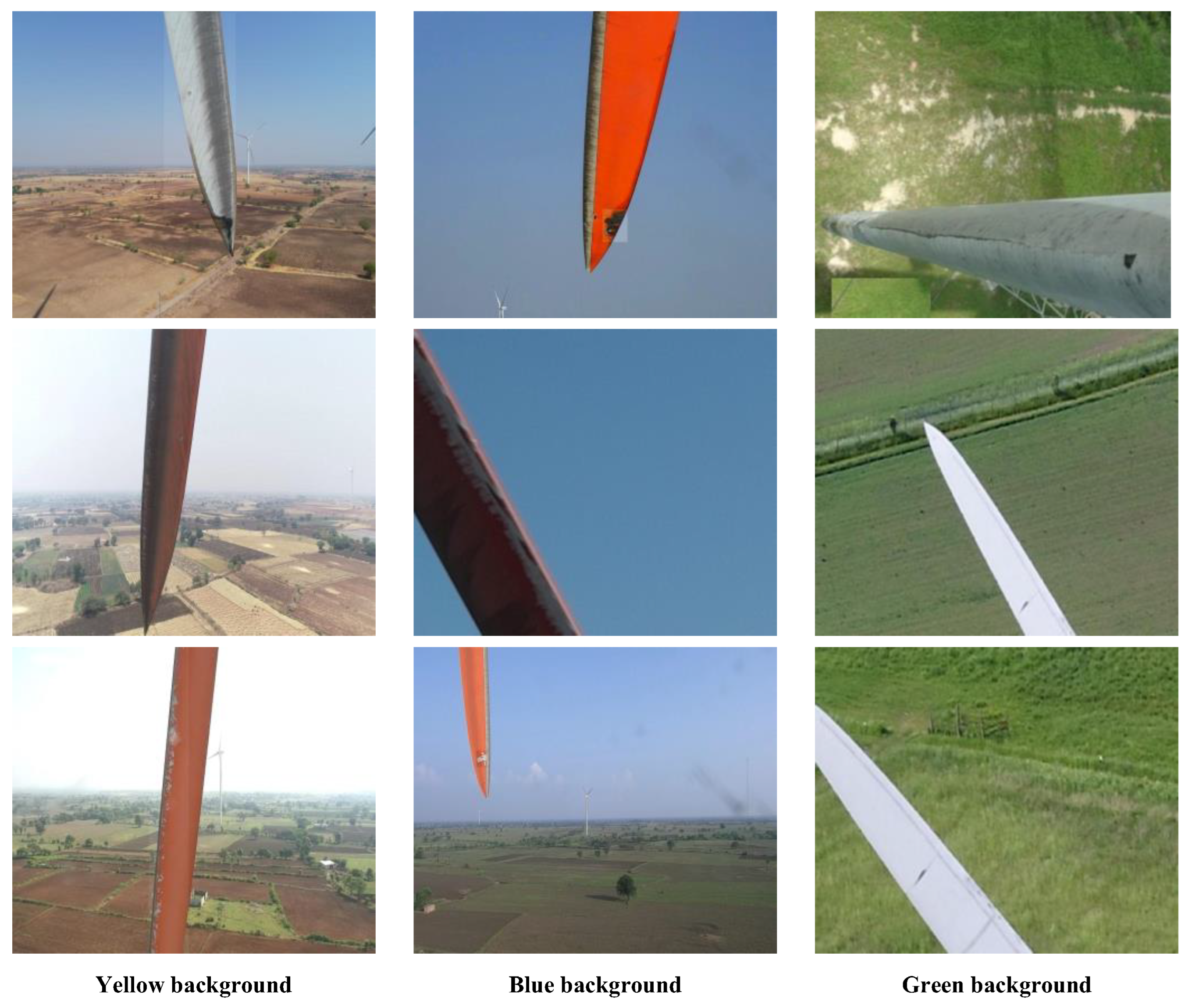

2.2. Wind Turbine Blade Area Positioning Method

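| Algorithm 1: Batch normalization |

| Input: $A = \{x_1, \ldots, x_n\}$, where $n$ is the input batch size; Output: $B = \{y_1, \ldots, y_n\}$ |

| 1 For $i = 1$ to $n$ do |

| 2 Calculate the input mean: $\mu = \frac{1}{n} \sum_{j=1}^{n} x_j$ |

| 3 Calculate the variance: $\sigma^2 = \frac{1}{n} \sum_{j=1}^{n} (x_j - \mu)^2$ |

| 4 Normalize each input using the variance and mean: $\hat{x}_i = \frac{x_i - \mu}{\sqrt{\sigma^2 + \varepsilon}}$, where $\varepsilon$ is a small nonzero decimal number |

| 5 Introduce the learnable parameters $\gamma$ and $\beta$ and perform a linear transformation: $y_i = \gamma \hat{x}_i + \beta$ |

| 6 End For |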
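The steps of Algorithm 1 can be sketched in a few lines of Python. This is a minimal illustration for a batch of scalars, not the implementation used in the positioning network; the names `gamma`, `beta`, and `eps` are assumptions chosen to match the usual batch-normalization notation.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize a batch of scalars, then apply a learnable scale and shift."""
    n = len(batch)
    mean = sum(batch) / n                           # step 2: batch mean
    var = sum((x - mean) ** 2 for x in batch) / n   # step 3: (biased) batch variance
    # steps 4-5: normalize each input, then apply y = gamma * x_hat + beta
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]


outputs = batch_norm([1.0, 2.0, 3.0, 4.0])
out_mean = sum(outputs) / len(outputs)
out_var = sum((y - out_mean) ** 2 for y in outputs) / len(outputs)
print(out_mean, out_var)
```

With the default `gamma = 1` and `beta = 0`, the outputs have a mean of approximately zero and a variance just below one; the small gap comes from `eps`, which keeps the division well defined when the batch variance is zero.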

2.3. Classification of Wind Turbine Blade Defects

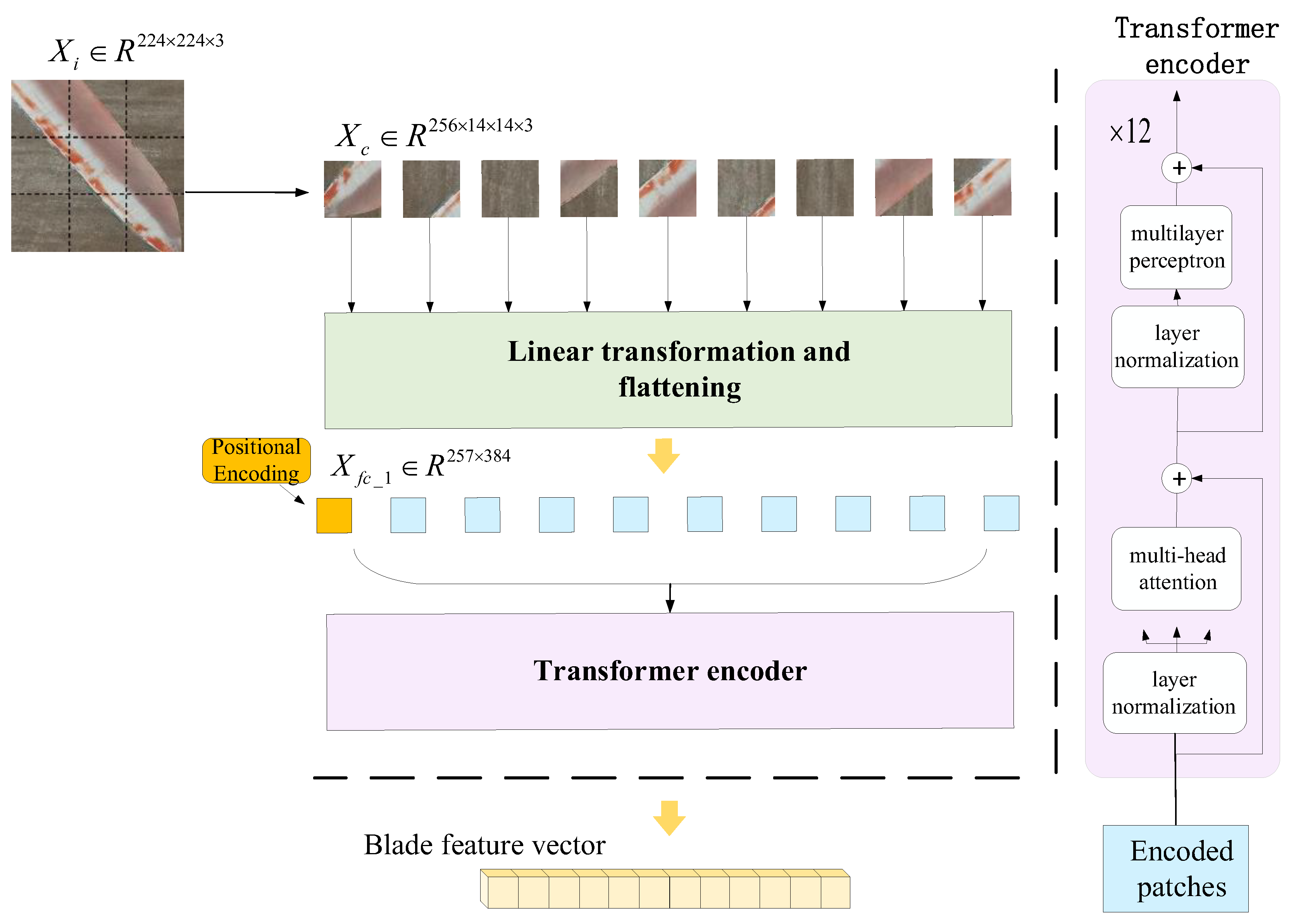

2.3.1. Method for Extracting Features Utilizing DINOv2

| Algorithm 2: DINOv2 wind turbine blade feature extraction algorithm |

| Input: blade image; Output: blade feature vector $y$ |

| 1 Divide the blade image into 16 × 16 image blocks of 14 × 14 pixels each (224 × 224 pixels in total) to obtain a sequence of 256 blocks |

| 2 Flatten the three channels of each block to get a vector of 14 × 14 × 3 = 588 values per block |

| 3 Linearly project each flattened block onto a 384-dimensional vector space |

| 4 To distinguish the relative positions of the sequence elements, add the embedded position encoding |

| 5 For $i = 1$ to 12 do |

| 6 Layer normalization |

| 7 Attention mechanism |

| 8 Layer normalization |

| 9 First fully connected layer of the multi-layer perceptron |

| 10 Second fully connected layer of the multi-layer perceptron |

| 11 End For |

| 12 Layer normalization |

| 13 The blade feature vector $y$ is the first row of the layer-normalized output |
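Steps 1 and 2 of Algorithm 2 (block splitting and flattening) can be illustrated with plain Python lists. The sketch below is only meant to make the shapes concrete: the function name `split_into_patches` and the dummy image are hypothetical, and the projection, attention, and normalization steps are left to the pretrained model.

```python
def split_into_patches(image, patch=14):
    """Split an H x W x C nested-list image into flattened patch vectors."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be a multiple of the patch size")
    patches = []
    for top in range(0, height, patch):          # walk the patch grid row by row
        for left in range(0, width, patch):
            vec = []
            for r in range(top, top + patch):    # flatten pixels and channels
                for c in range(left, left + patch):
                    vec.extend(image[r][c])
            patches.append(vec)
    return patches


# Hypothetical 224 x 224 RGB image filled with a dummy gradient.
image = [[[r / 223, c / 223, 0.5] for c in range(224)] for r in range(224)]
patches = split_into_patches(image)
print(len(patches), len(patches[0]))  # 256 588
```

A 224 × 224 input yields 16 × 16 = 256 blocks, each flattened to 14 × 14 × 3 = 588 values, which is the sequence that the 384-dimensional linear projection in step 3 operates on.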

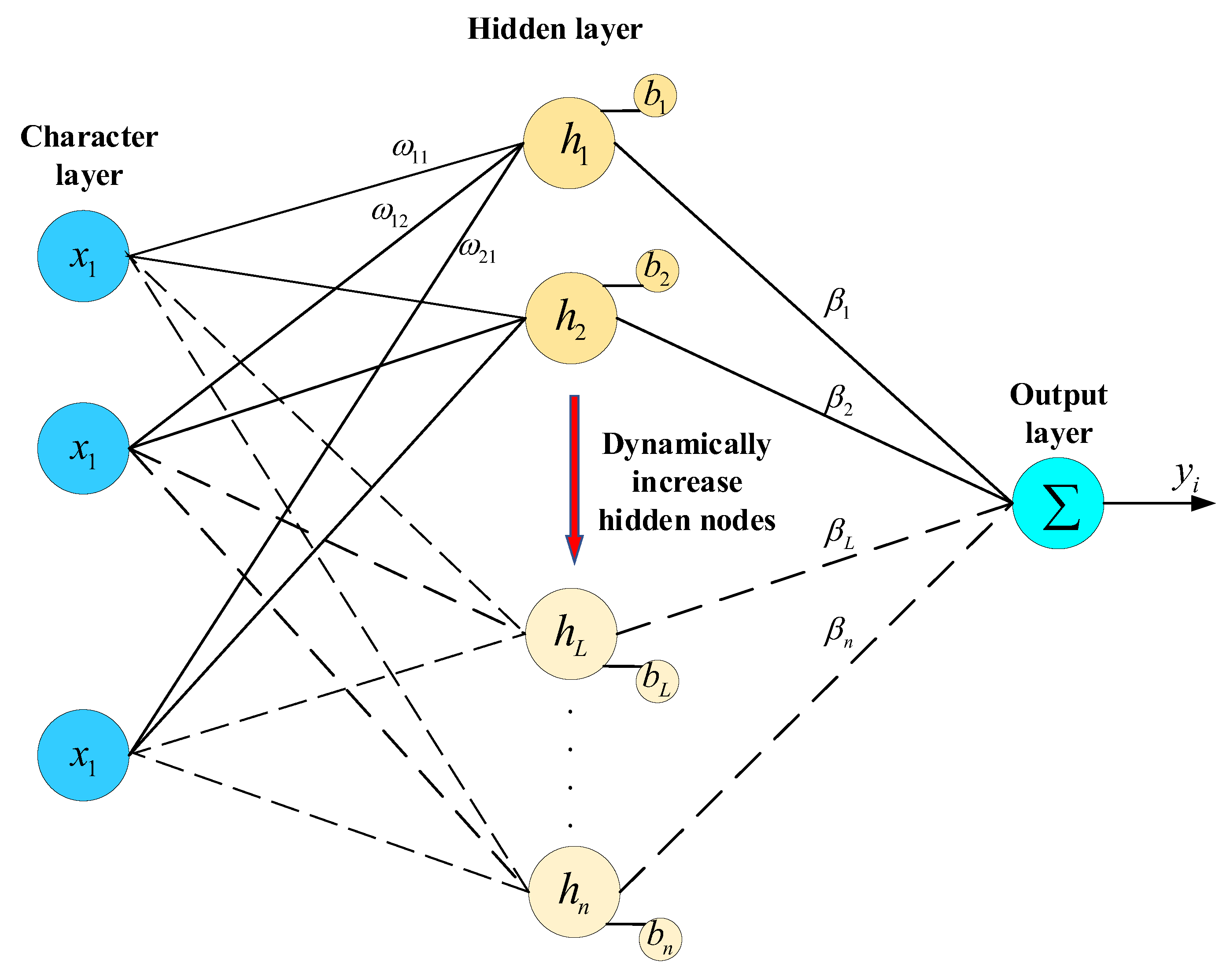

2.3.2. Feature Vector Classification Based on a Stochastic Configuration Network

3. Experimental Validation and Evaluation

3.1. Experimental Environment

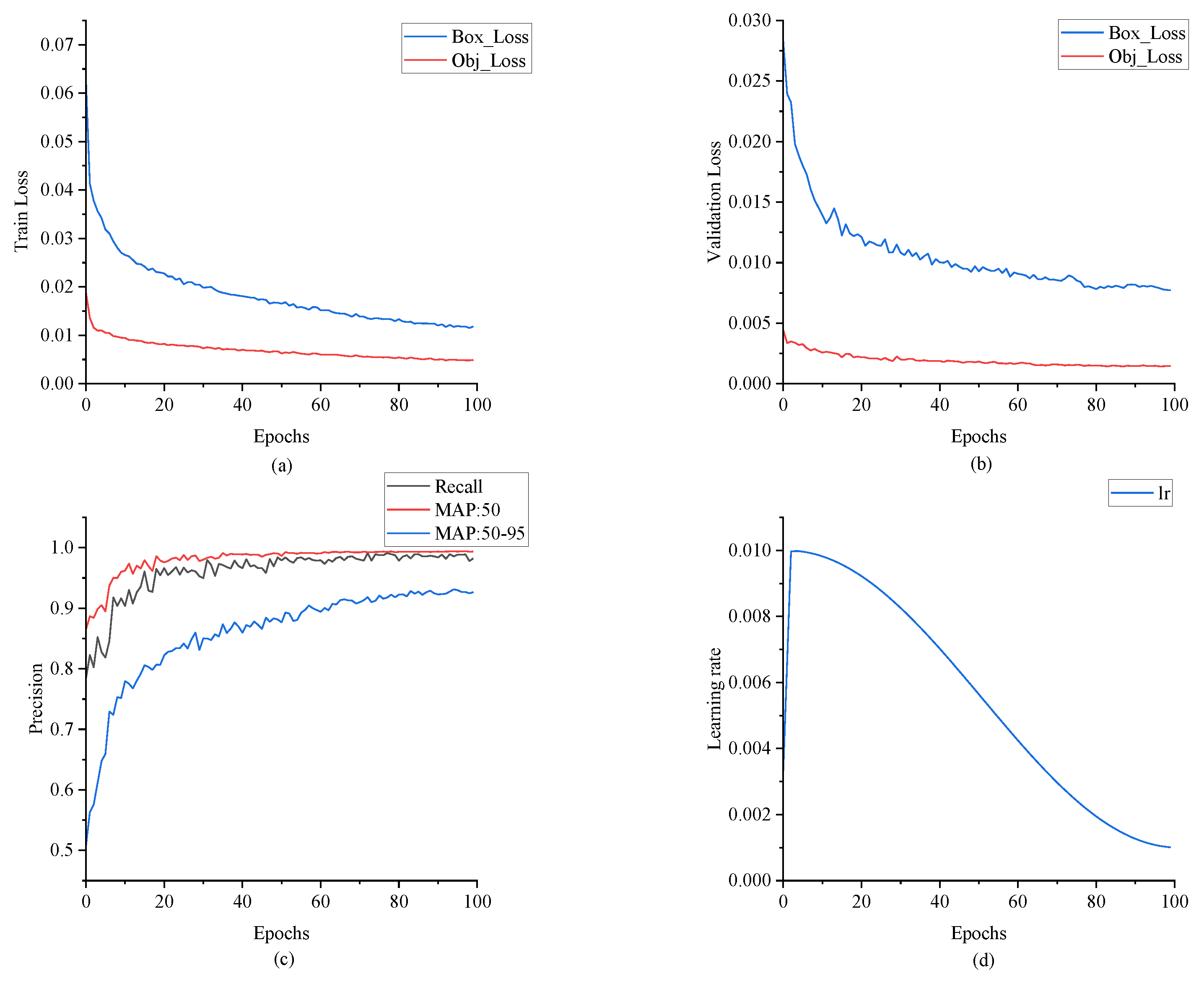

3.2. Model Architecture and Parameter Setting

3.3. Analysis of Wind Turbine Blade Area Positioning

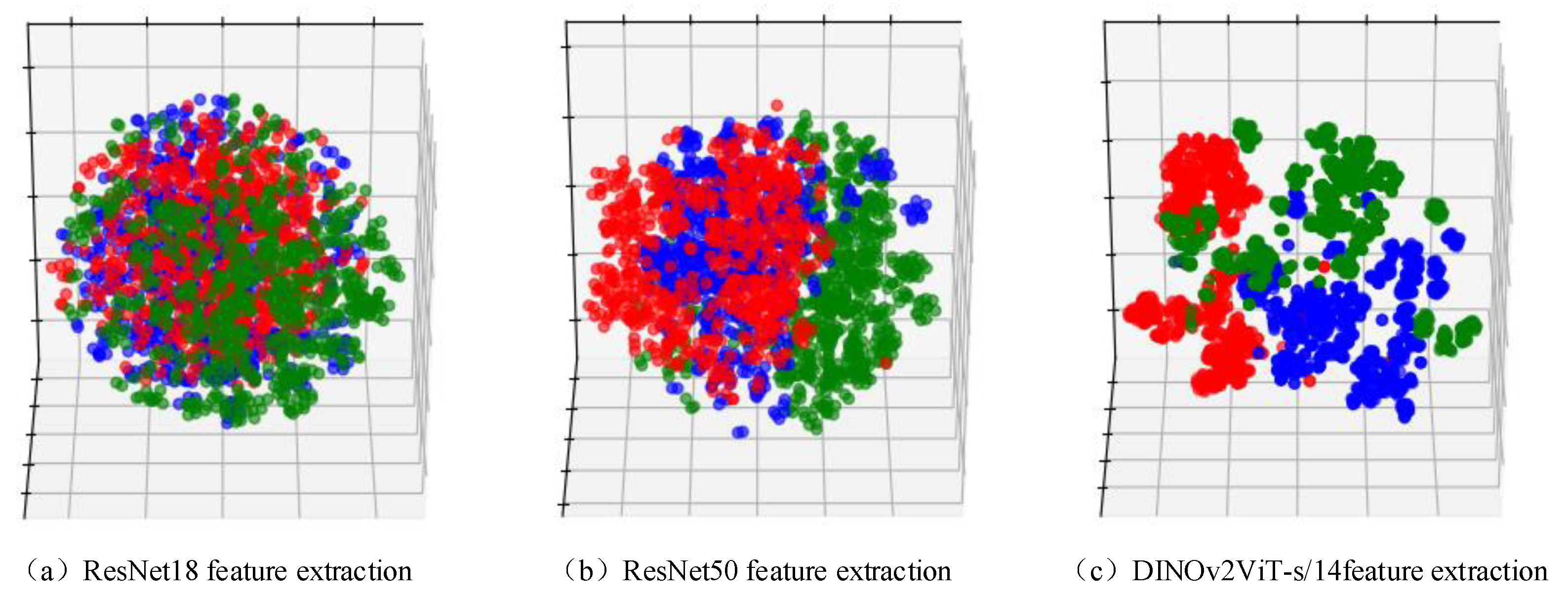

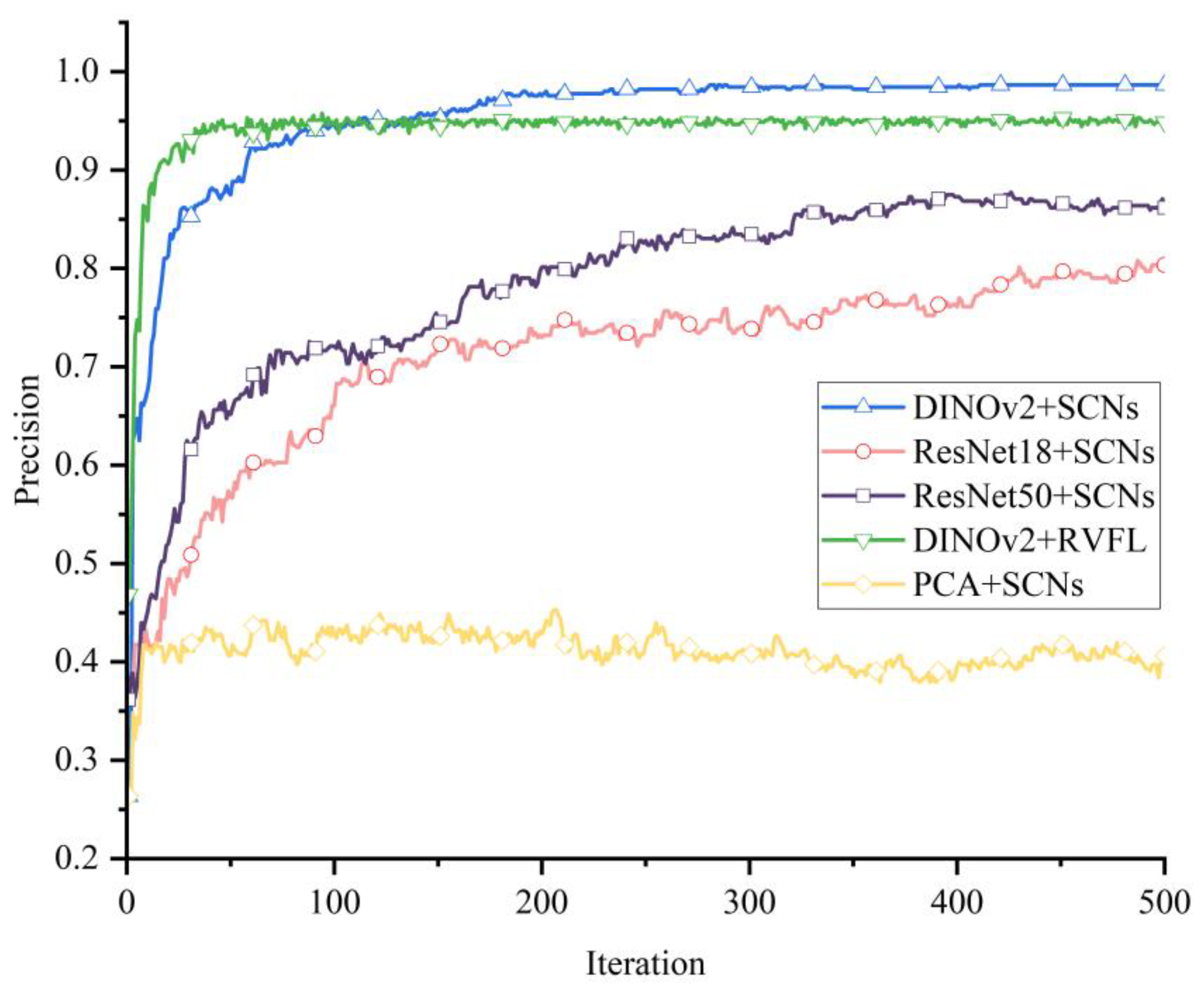

3.4. Analysis of Wind Turbine Blade Defect Classification

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Full Name |
|---|---|
| YOLO | You Only Look Once |
| SCN | Stochastic Configuration Network |
| DINO | Self-Distillation with No Labels |
| CNN (Conv) | Convolutional Neural Network |
| Faster-RCNN | Faster Region-Based Convolutional Neural Network |
| VGG | Visual Geometry Group Network |
| ResNet | Residual Network |
| SSD | Single-Shot Multi-Box Detector |
| SVM | Support Vector Machine |
| RPCA | Recursive Principal Component Analysis |
| MCNN | Multi-Channel Convolutional Neural Network |
| ART | Adaptive Resonance Theory |
| CSP | Cross-Stage Partial Network |
| CBS | Conv + Batch Normalization + SiLU |
| SPP | Spatial Pyramid Pooling |
| BN | Batch Normalization |
| SiLU | Sigmoid Linear Unit |
| AP | Average Precision |
| IoU | Intersection over Union |
| PCA | Principal Component Analysis |
| t-SNE | t-Distributed Stochastic Neighbor Embedding |
| KNN | K-Nearest Neighbors |
| K-means | K-Means Clustering |

References

1. Joshuva, A.; Sugumaran, V. A Comparative Study of Bayes Classifiers for Blade Fault Diagnosis in Wind Turbines through Vibration Signals. Struct. Durab. Health Monit. 2017, 12, 69–90.

2. Rezamand, M.; Kordestani, M.; Carriveau, R.; Ting, D.S.-K.; Saif, M. A New Hybrid Fault Detection Method for Wind Turbine Blades Using Recursive PCA and Wavelet-Based PDF. IEEE Sens. J. 2020, 20, 2023–2033.

3. Wang, M.H.; Lu, S.D.; Hsieh, C.C.; Hung, C.C. Fault Detection of Wind Turbine Blades Using Multi-Channel CNN. Sustainability 2022, 14, 1781.

4. Guo, J.; Liu, C.; Cao, J.; Jiang, D. Damage Identification of Wind Turbine Blades with Deep Convolutional Neural Networks. Renew. Energy 2021, 174, 122–133.

5. Yang, X.; Zhang, Y.; Lv, W.; Wang, D. Image Recognition of Wind Turbine Blade Damage Based on a Deep Learning Model with Transfer Learning and an Ensemble Learning Classifier. Renew. Energy 2021, 163, 386–397.

6. Shihavuddin, A.S.; Chen, X.; Fedorov, V.; Nymark Christensen, A.; Andre Brogaard Riis, N.; Branner, K.; Bjorholm Dahl, A.; Reinhold Paulsen, R. Wind Turbine Surface Damage Detection by Deep Learning Aided Drone Inspection Analysis. Energies 2019, 12, 676.

7. Xu, D.; Wen, C.; Liu, J. Wind Turbine Blade Surface Inspection Based on Deep Learning and UAV-Taken Images. J. Renew. Sustain. Energy 2019, 11, 053305.

8. Lv, L.; Yao, Z.; Wang, E.; Ren, X.; Pang, R.; Wang, H.; Zhang, Y.; Wu, H. Efficient and Accurate Damage Detector for Wind Turbine Blade Images. IEEE Access 2022, 10, 2169–3536.

9. Zhao, X.Y.; Dong, C.Y.; Zhou, P.; Zhu, M.J.; Ren, J.W.; Chen, X.Y. Detecting Surface Defects of Wind Turbine Blades Using an AlexNet Deep Learning Algorithm. IEICE Trans. Fundam. Electron. Commun. Comput. Sci. 2019, E102-A, 1817–1824.

10. Zhu, J.; Wen, C.; Liu, J. Defect Identification of Wind Turbine Blade Based on Multi-Feature Fusion Residual Network and Transfer Learning. Energy Sci. Eng. 2022, 10, 219–229.

11. Yu, Y.; Cao, H.; Yan, X.; Wang, T.; Ge, S.S. Defect Identification of Wind Turbine Blades Based on Defect Semantic Features with Transfer Feature Extractor. Neurocomputing 2020, 376, 1–9.

12. Mao, Y.; Wang, S.; Yu, D.; Zhao, J. Automatic Image Detection of Multi-Type Surface Defects on Wind Turbine Blades Based on Cascade Deep Learning Network. Intell. Data Anal. 2021, 25, 463–482.

13. Liu, Z.H.; Chen, Q.; Wei, H.L.; Lv, M.Y.; Chen, L. Channel-Spatial Attention Convolutional Neural Networks Trained with Adaptive Learning Rates for Surface Damage Detection of Wind Turbine Blades. Measurement 2023, 217, 113097.

14. Zhang, C.; Wen, C.; Liu, J. Mask-MRNet: A Deep Neural Network for Wind Turbine Blade Fault Detection. J. Renew. Sustain. Energy 2020, 12, 053302.

15. Yang, P.; Dong, C.; Zhao, X.; Chen, X. The Surface Damage Identifications of Wind Turbine Blades Based on ResNet50 Algorithm. In Proceedings of the 2020 39th Chinese Control Conference (CCC), Shenyang, China, 27–29 July 2020.

16. Yao, Y.; Wang, G.; Fan, J. WT-YOLOX: An Efficient Detection Algorithm for Wind Turbine Blade Damage Based on YOLOX. Energies 2023, 16, 3776.

17. Ran, X.; Zhang, S.; Wang, H.; Zhang, Z. An Improved Algorithm for Wind Turbine Blade Defect Detection. IEEE Access 2022, 10, 122171–122181.

18. Foster, A.; Best, O.; Gianni, M.; Khan, A.; Collins, K.; Sharma, S. Drone Footage Wind Turbine Surface Damage Detection. In Proceedings of the 2022 IEEE 14th Image, Video, and Multidimensional Signal Processing Workshop (IVMSP), Nafplio, Greece, 26–29 June 2022.

19. Zhang, Y.; Yang, Y.; Sun, J.; Ji, R.; Zhang, P.; Shan, H. Surface Defect Detection of Wind Turbine Based on Lightweight YOLOv5s Model. Measurement 2023, 220, 113222.

20. Zhang, R.; Wen, C. SOD-YOLO: A Small Target Defect Detection Algorithm for Wind Turbine Blades Based on Improved YOLOv5. Adv. Theory Simul. 2022, 5, 2100631.

21. Ran, X.; Suyaroj, N.; Tepsan, W.; Lei, M.; Ma, H.; Zhou, X.; Deng, W. A Novel Fuzzy System-Based Genetic Algorithm for Trajectory Segment Generation in Urban Global Positioning System. J. Adv. Res. 2025, in press.

22. Chen, H.; Sun, Y.; Li, X.; Zheng, B.; Chen, T. Dual-Scale Complementary Spatial-Spectral Joint Model for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 6772–6789.

23. Wang, C.; Yang, J.; Jie, H.; Zhao, Z.; Wang, W. An Energy-Efficient Mechanical Fault Diagnosis Method Based on Neural-Dynamics-Inspired Metric SpikingFormer for Insufficient Samples in Industrial Internet of Things. IEEE Internet Things J. 2025, 12, 1081–1097.

24. Wang, C.; Jie, H.; Yang, J.; Gao, T.; Zhao, Z.; Chang, Y.; See, K.Y. A Multi-Source Domain Feature-Decision Dual Fusion Adversarial Transfer Network for Cross-Domain Anti-Noise Mechanical Fault Diagnosis in Sustainable City. Inf. Fusion 2025, 115, 102739.

25. Wang, C.; Shu, Z.; Yang, J.; Zhao, Z.; Jie, H.; Chang, Y.; Jiang, S.; See, K.Y. Learning to Imbalanced Open Set Generalize: A Meta-Learning Framework for Enhanced Mechanical Diagnosis. IEEE Trans. Cybern. 2025, 55, 1464–1475.

26. Zheng, Y.; Liu, Y.; Wei, T.; Jiang, D.; Wang, M. Wind Turbine Blades Surface Crack-Detection Algorithm Based on Improved YOLO-v5 Model. J. Electron. Imaging 2023, 32, 033012.

27. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

28. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

29. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

30. Liu, X.; Liu, C.; Jiang, D. Wind Turbine Blade Surface Defect Detection Based on YOLO Algorithm. In Proceedings of the International Congress and Workshop on Industrial AI and eMaintenance 2023, Luleå, Sweden, 13–15 June 2023; pp. 367–380.

31. Oquab, M.; Darcet, T.; Moutakanni, T.; Vo, H.; Szafraniec, M.; Khalidov, V.; Fernandez, P.; Haziza, D.; Massa, F.; El-Nouby, A.; et al. DINOv2: Learning Robust Visual Features without Supervision. arXiv 2023, arXiv:2304.07193.

32. Caron, M.; Touvron, H.; Misra, I.; Jegou, H.; Mairal, J.; Bojanowski, P.; Joulin, A. Emerging Properties in Self-Supervised Vision Transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 9650–9660.

33. Wang, D.; Li, M. Stochastic Configuration Networks: Fundamentals and Algorithms. IEEE Trans. Cybern. 2017, 47, 3466–3479.

34. van der Maaten, L.; Hinton, G. Visualizing Data Using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

| References | Wind Turbine Blade Positioning | Blade Defect Feature Extraction | Blade Defect Classification |
|---|---|---|---|
| [1] | - | Descriptive statistical parameters + J48 decision tree algorithm | Bayesian classification |
| [2] | - | RPCA | RPCA |
| [3] | - | MCNN | ART |
| [4] | Haar-AdaBoost | Improved VGG16 | Fully connected layer of VGG16 |
| [5] | Otsu algorithm | AlexNet | Random forest |
| [6] | - | Inception-ResNet-v2 | Fully connected layer of Faster-RCNN |
| [7] | - | Improved VGG11 | Fully connected layer of VGG11 |
| [8] | - | ResNet50 | Fully connected layer of SSD |
| [9] | - | AlexNet | AlexNet |
| [10] | - | Improved ResNet34 | Fully connected layer of |
| [11] | - | DCNN | SVM |
| [12] | Improved k-means algorithm | Fine-tuned ResNet101 | Fully connected layer of R-CNN |
| [13] | - | Improved VGG16 | Fully connected layer of VGG16 |
| [14] | MR algorithm | ResNet50 | DenseNet-121 |
| [15] | - | ResNet50 | Fully connected layer |
| [16] | - | CSPDarknet + RepVGG | Fully connected layer of YOLOX |
| [17] | - | CSPDarknet | Fully connected layer of YOLOv5 |
| [18] | - | CSPDarknet | Fully connected layer of YOLOv5 |
| [19] | - | MobileNetv3 | Fully connected layer of YOLOv5 |
| [20] | - | CSPDarknet | Fully connected layer |
| This study | YOLOv5 | Feature extraction of DINOv2 large vision model | Stochastic Configuration Network |

| Computer Software and Hardware | Version/Model |
|---|---|
| GPU | NVIDIA RTX 4090 (24 GB) |
| CPU | Intel Xeon Platinum 8352V |
| Operating System | Ubuntu 22.04 |
| Python | 3.8 |
| CUDA | 11.3 |
| PyTorch | 1.10 |

| Blade-Positioning Model | AP@0.5 | Inference Time |
|---|---|---|
| YOLOv3 | 0.993 | 10.3 ms |
| YOLOv5 | 0.994 | 9.0 ms |
| YOLOv7 | 0.941 | 8.1 ms |
| YOLOv8 | 0.990 | 21.9 ms |
| YOLOv9 | 0.994 | 31.3 ms |
| Faster-RCNN | 0.909 | 9.6 ms |
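The AP column above appears to be average precision at an IoU threshold of 0.5, which is an assumption because the table does not define it. Under that reading, a predicted blade box counts as correct when its intersection over union with the ground-truth box is at least 0.5. The sketch below shows the IoU computation only; the box coordinates and the `(x1, y1, x2, y2)` corner format are hypothetical.

```python
def iou(box_a, box_b):
    """IoU of two boxes given as (x1, y1, x2, y2) with x1 < x2 and y1 < y2."""
    # Corners of the overlapping rectangle (empty if the boxes do not overlap).
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


predicted = (10, 10, 110, 210)      # hypothetical predicted blade box
ground_truth = (20, 20, 120, 220)   # hypothetical labeled blade box
score = iou(predicted, ground_truth)
is_match = score >= 0.5             # assumed AP threshold
print(round(score, 3), is_match)    # 0.747 True
```

Here the two 100 × 200 boxes overlap in a 90 × 190 region, so the IoU is 17100 / 22900 and the prediction would count as a match at the 0.5 threshold.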

| Sample | Damage | Paint Shedding | Dirt Buildup |
|---|---|---|---|
| Training set | 647 | 687 | 758 |
| Validation set | 138 | 148 | 163 |
| Testing set | 139 | 147 | 162 |
| Total images | 924 | 982 | 1083 |
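As a quick consistency check on the table above, the per-class counts can be summed and converted to split proportions. The snippet uses only the numbers from the table; the variable names are illustrative.

```python
# (training, validation, testing) image counts per defect class, from the table.
counts = {
    "Damage": (647, 138, 139),
    "Paint Shedding": (687, 148, 147),
    "Dirt Buildup": (758, 163, 162),
}

totals = {name: sum(split) for name, split in counts.items()}
shares = {
    name: tuple(round(part / totals[name], 2) for part in split)
    for name, split in counts.items()
}

for name in counts:
    print(name, totals[name], shares[name])
```

The totals reproduce the last row of the table (924, 982, and 1083 images), and every class is split roughly 70% / 15% / 15% between training, validation, and testing.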

| Wind Turbine Blade Defect Classification Algorithm | Accuracy |
|---|---|
| DINOv2 + SCN | 0.987 |
| DINOv2 + K-means | 0.515 |
| DINOv2 + KNN | 0.982 |
| DINOv2 + SVM | 0.906 |
| DINOv2 + random forest | 0.983 |
| DINOv2 + RVFL | 0.958 |
| ResNet50 + SCN | 0.873 |
| ResNet18 + SCN | 0.795 |
| PCA + SCN | 0.453 |
| ResNet18 + KNN | 0.759 |
| ResNet18 + K-means | 0.360 |
| DINOv2 + SCN (no YOLOv5 positioning) | 0.944 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, X.; Tian, J.; Pang, X.; Shen, L.; Li, H.; Zheng, Z. Wind Turbine Blade Defect Recognition Method Based on Large-Vision-Model Transfer Learning. Sensors 2025, 25, 4414. https://doi.org/10.3390/s25144414
