Utilizing Tympanic Membrane Temperature for Earphone-Based Emotion Recognition

Abstract

1. Introduction

- Introducing TMT as a novel physiological signal for emotion recognition;

- Developing custom earphone-based devices for naturalistic, continuous TMT measurement;

- Offering a comprehensive assessment of TMT for emotion recognition by utilizing autobiographical recall and scenario imagination methods;

- Demonstrating that the right-to-left difference in TMT can effectively support emotion classification across different experiments.

2. Related Work

2.1. The Association Between TMT Lateralization and Emotion

2.2. Measurement of TMT

3. Materials and Methods

3.1. Development of Earphone-Type Thermometer

3.2. Overview of Experiments

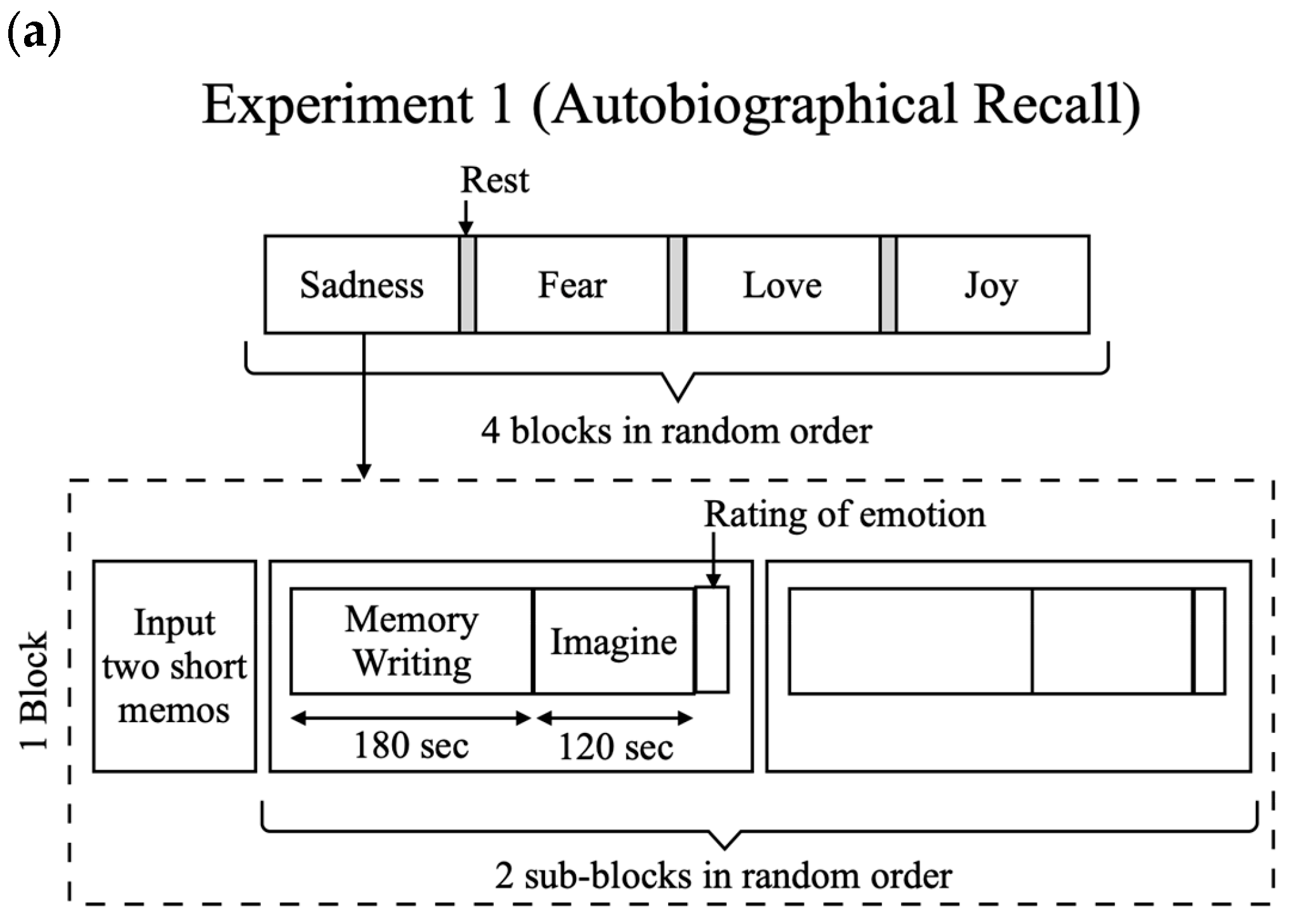

3.3. Experiment 1: Autobiographical Recall Experiment

3.4. Experiment 2: Scenario Imagination Experiment

4. Data Analysis

4.1. Descriptive Analysis of Emotion Ratings

4.2. Preprocessing of Temperature Data

4.3. Analysis of Temporal Mean Temperature

4.4. Classification

- Gaussian Naïve Bayes (GNB): We used the GaussianNB classifier with default parameters.

- Support Vector Machine (SVM): We employed an SVC with a radial basis function kernel. The penalty parameter C was set to 0.5, with other parameters set to default.

- Multilayer Perceptron (MLP): Our MLPClassifier consists of three hidden layers, hidden_layer_sizes = (300, 300, 300), with other parameters set to default.
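The three configurations listed above can be sketched as follows. This is a minimal sketch that assumes scikit-learn, whose class names match the ones mentioned (GaussianNB, SVC, MLPClassifier); the helper name `build_classifiers` is hypothetical. Feature extraction and the validation scheme are not shown here.

```python
from sklearn.naive_bayes import GaussianNB
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC


def build_classifiers():
    """Return the three classifiers with the hyperparameters stated in the text.

    Only the parameters named in the text are set explicitly; every other
    parameter is left at the library default (assuming scikit-learn).
    """
    return {
        # Gaussian Naive Bayes with default parameters.
        "GNB": GaussianNB(),
        # SVC with a radial basis function kernel and penalty parameter C = 0.5.
        "SVM": SVC(kernel="rbf", C=0.5),
        # MLP with three hidden layers of 300 units each.
        "MLP": MLPClassifier(hidden_layer_sizes=(300, 300, 300)),
    }
```

Each returned estimator exposes the usual `fit(X, y)` and `predict(X)` methods, so the same feature matrix can be passed to all three for a like-for-like comparison.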

5. Results

5.1. Emotion Ratings

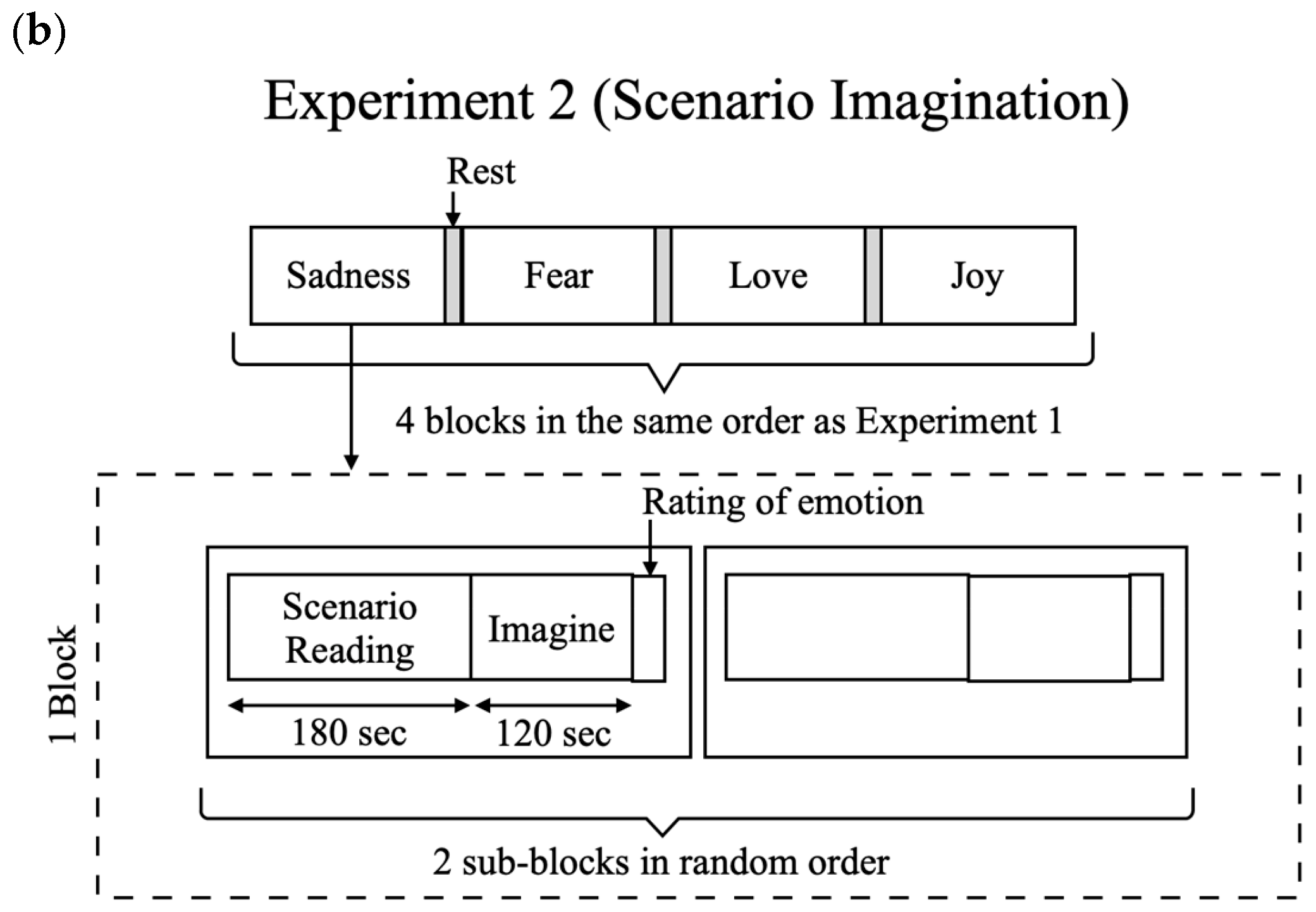

5.2. Temporal Temperature Differences

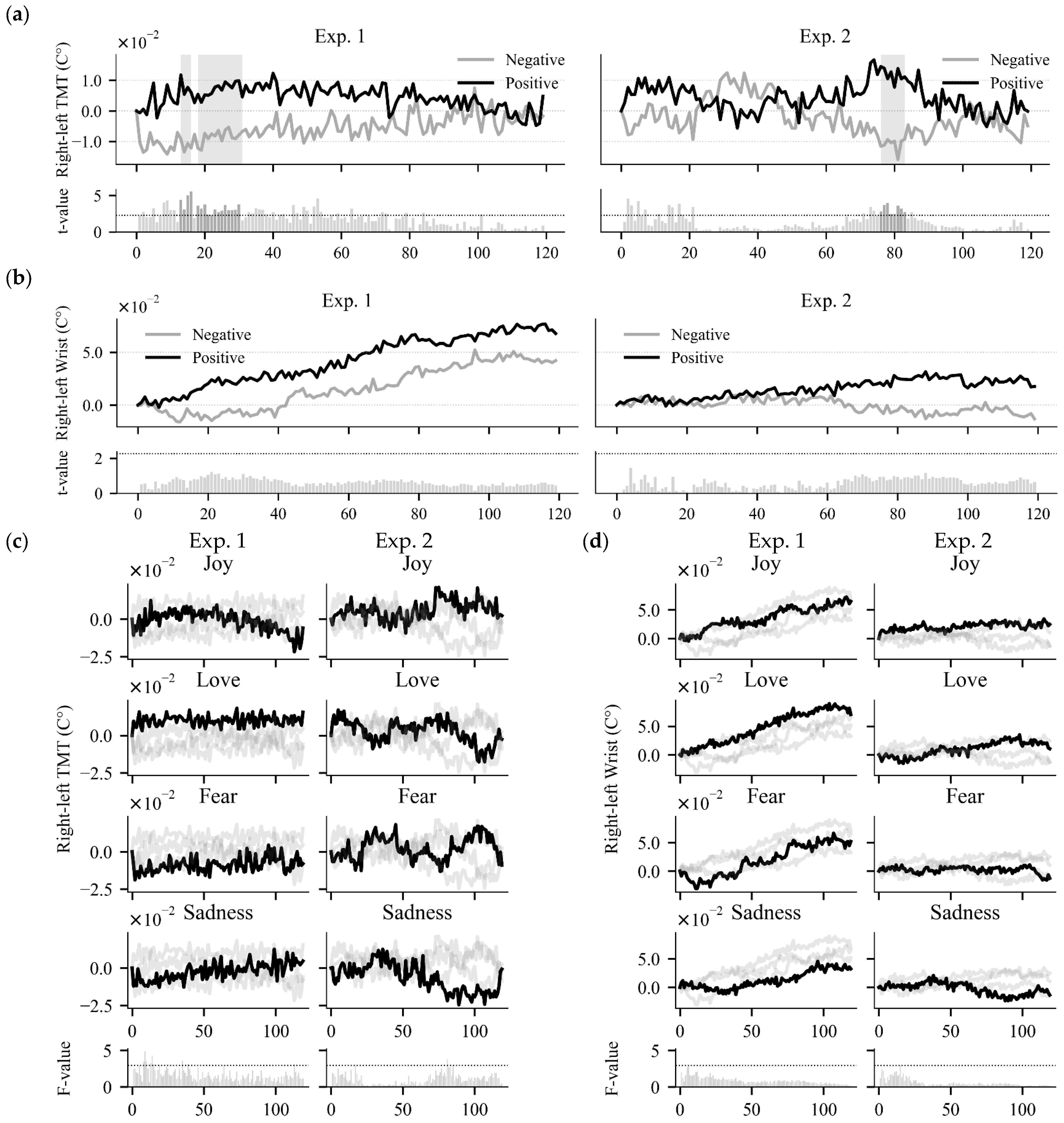

5.3. Classification Results

6. Discussion

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| TMT | Tympanic Membrane Temperature |
| M | The mean |
| SD | The standard deviation |
| CI | Confidence Interval |


Emotion Ratings

| Exp. | Target Emotion | Joy | Tenderness | Inspire | Amusement | Anger | Disgust | Fear | Sadness |
|---|---|---|---|---|---|---|---|---|---|
| Exp. 1 | Positive | 6.6 ± 1.8 | 6.0 ± 2.5 | 5.0 ± 2.9 | 3.2 ± 2.4 | 1.8 ± 1.6 | 1.4 ± 1.2 | 1.6 ± 1.2 | 3.3 ± 2.4 |
| Exp. 1 | Joy | 7.3 ± 0.9 | 5.4 ± 2.6 | 5.5 ± 2.6 | 4.2 ± 2.5 | 1.3 ± 0.6 | 1.2 ± 0.5 | 1.6 ± 1.3 | 2.3 ± 1.6 |
| Exp. 1 | Love | 5.8 ± 2.2 | 6.6 ± 2.4 | 4.4 ± 3.1 | 2.2 ± 1.8 | 2.3 ± 2.1 | 1.6 ± 1.6 | 1.7 ± 1.2 | 4.4 ± 2.7 |
| Exp. 1 | Negative | 1.5 ± 0.7 | 1.8 ± 1.5 | 1.8 ± 1.6 | 1.3 ± 0.8 | 4.2 ± 2.6 | 4.3 ± 2.9 | 5.7 ± 2.7 | 6.2 ± 2.6 |
| Exp. 1 | Fear | 1.4 ± 0.7 | 1.2 ± 0.5 | 1.8 ± 1.7 | 1.5 ± 0.9 | 3.8 ± 2.5 | 4.3 ± 3.0 | 7.6 ± 1.0 | 5.1 ± 3.1 |
| Exp. 1 | Sadness | 1.5 ± 0.8 | 2.4 ± 1.9 | 1.8 ± 1.6 | 1.2 ± 0.5 | 4.5 ± 2.6 | 4.4 ± 2.9 | 3.8 ± 2.5 | 7.4 ± 1.0 |
| Exp. 2 | Positive | 7.0 ± 1.2 | 6.8 ± 1.9 | 5.7 ± 2.7 | 3.0 ± 2.0 | 1.2 ± 0.4 | 1.1 ± 0.2 | 1.1 ± 0.4 | 2.1 ± 1.7 |
| Exp. 2 | Joy | 7.3 ± 0.9 | 6.2 ± 2.3 | 6.4 ± 2.4 | 3.1 ± 2.2 | 1.2 ± 0.4 | 1.1 ± 0.3 | 1.2 ± 0.4 | 1.3 ± 0.7 |
| Exp. 2 | Love | 6.6 ± 1.3 | 7.5 ± 1.3 | 5.0 ± 2.9 | 2.9 ± 2.0 | 1.2 ± 0.4 | 1.1 ± 0.1 | 1.1 ± 0.3 | 2.9 ± 2.0 |
| Exp. 2 | Negative | 1.2 ± 0.6 | 1.6 ± 1.3 | 1.4 ± 1.1 | 1.1 ± 0.2 | 3.3 ± 2.6 | 3.4 ± 2.6 | 6.0 ± 3.0 | 6.4 ± 2.4 |
| Exp. 2 | Fear | 1.2 ± 0.6 | 1.1 ± 0.3 | 1.5 ± 1.4 | 1.1 ± 0.3 | 3.4 ± 2.6 | 4.5 ± 2.6 | 8.1 ± 0.9 | 4.9 ± 2.4 |
| Exp. 2 | Sadness | 1.2 ± 0.7 | 2.1 ± 1.7 | 1.3 ± 0.7 | 1.1 ± 0.2 | 3.1 ± 2.7 | 2.4 ± 2.2 | 3.9 ± 2.9 | 8.0 ± 1.0 |

| Class | Data | Chance | GNB | SVM | MLP |
|---|---|---|---|---|---|
| Valence | TMT | 50.0 | 63.7 ± 13.1 | 67.5 ± 13.9 | 72.5 ± 16.6 |
| Valence | Wrist | 50.0 | 53.8 ± 21.0 | 50.0 ± 20.9 | 43.8 ± 17.0 |
| Discrete | TMT | 25.0 | 36.2 ± 8.8 | 35.0 ± 12.2 | 35.0 ± 13.5 |
| Discrete | Wrist | 25.0 | 27.5 ± 10.9 | 21.2 ± 8.0 | 27.5 ± 9.4 |

| Class | Data | Chance | GNB | SVM | MLP |
|---|---|---|---|---|---|
| Valence | TMT | 50.0 | 62.5 ± 15.8 | 63.7 ± 13.1 | 68.8 ± 15.1 |
| Valence | Wrist | 50.0 | 41.2 ± 14.8 | 40.0 ± 13.5 | 38.8 ± 20.5 |
| Discrete | TMT | 25.0 | 32.5 ± 15.0 | 42.5 ± 16.0 | 36.2 ± 15.3 |
| Discrete | Wrist | 25.0 | 18.8 ± 8.4 | 16.2 ± 9.8 | 18.8 ± 12.8 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Furukawa, K.; Shui, X.; Li, M.; Zhang, D. Utilizing Tympanic Membrane Temperature for Earphone-Based Emotion Recognition. Sensors 2025, 25, 4411. https://doi.org/10.3390/s25144411
