Abstract

Star sensors, as the most precise attitude measurement devices currently available, play a crucial role in spacecraft attitude estimation. However, traditional frame-based cameras tend to suffer from target blur and loss under high-dynamic maneuvers, which severely limit the applicability of conventional star sensors in complex space environments. In contrast, event cameras—drawing inspiration from biological vision—can capture brightness changes at ultrahigh speeds and output a series of asynchronous events, thereby demonstrating enormous potential for space detection applications. Based on this, this paper proposes an event data extraction method for weak, high-dynamic space targets to enhance the performance of event cameras in detecting space targets under high-dynamic maneuvers. In the target denoising phase, we fully consider the characteristics of space targets’ motion trajectories and optimize a classical spatiotemporal correlation filter, thereby significantly improving the signal-to-noise ratio for weak targets. During the target extraction stage, we introduce the DBSCAN clustering algorithm to achieve the subpixel-level extraction of target centroids. Moreover, to address issues of target trajectory distortion and data discontinuity in certain ultrahigh-dynamic scenarios, we construct a camera motion model based on real-time motion data from an inertial measurement unit (IMU) and utilize it to effectively compensate for and correct the target’s trajectory. Finally, a ground-based simulation system is established to validate the applicability and superior performance of the proposed method in real-world scenarios.

1. Introduction

In spacecraft attitude estimation, star sensors are the most accurate devices available, offering drift-free performance and long operational lifetimes [1,2]. Unlike the coarse estimation methods used during satellite separation, star sensors provide critical support for mission objectives during stable flight through precise measurements, such as accurately aligning with space or Earth target regions. As imaging devices that incorporate advanced image-processing algorithms, star sensors estimate spacecraft attitude by recognizing star distribution patterns—their key function is the accurate extraction of star information from images [3,4,5,6].

As mentioned above, star sensors usually operate during stable flight conditions. However, when used in high-dynamic scenarios—especially during initial orbit insertion, maneuvers, or high-angle attitude adjustments of high-dynamic platforms (such as agile satellites and long-range weapons)—several problems may occur: (1) During the integration period, star points move within the image sensor’s photosensitive area, forming streaks on the sensor’s target plane. This motion blur reduces the image’s signal-to-noise ratio (SNR) and the accuracy of star point centroid localization. (2) Errors in star point centroid localization can lead to redundant or false matching during star map recognition, thereby decreasing both recognition speed and accuracy. (3) Significant positional changes of star points between adjacent frames increase the difficulty in tracking star targets, reducing the success rate and efficiency of star tracking [7,8,9]. Since the 1990s, institutions such as Jena–Optronik (ASTRO–APS) [10] in Germany, AeroAstro (MST) [11] in the United States, and Sodern (SED-16) [12] in France have conducted extensive research on high-dynamic star sensors, achieving a dynamic performance of approximately 10°/s. However, as star sensors are now being applied to high-speed, supersonic, and hypersonic flights, where dynamic rates may reach 15–30°/s, traditional star sensors face severe challenges under these high-dynamic conditions.

Bio-inspired event cameras, as a novel type of sensor, differ from conventional cameras that rely on frame-based imaging modalities. Instead, event cameras can asynchronously capture brightness changes and output event streams containing timestamp, spatial coordinate, and polarity information. Owing to its advantages of microsecond-level temporal resolution, a high-dynamic range (HDR), and low power consumption, this technology has demonstrated significant application potential in space exploration. For instance, in 2017, Cohen et al. successfully detected moving targets in space ranging from low-Earth orbit (LEO) to geosynchronous orbit (GEO) using event cameras in conjunction with large-aperture, long-focal-length ground-based telescopes [13]; in 2019, Chin et al. further explored the application of event cameras in star tracking [14,15]; and in 2021, Roffe et al. validated the reliable operational performance of event cameras in harsh space radiation environments [16].

However, event cameras still face multiple challenges in space target detection applications, with calibration accuracy and high-dynamic-range target extraction emerging as two key technical bottlenecks. In the domain of event camera calibration techniques, Muglikar et al. proposed a general event camera calibration framework based on image reconstruction in 2021, which leverages neural-network-based image reconstruction to break through the limitations of traditional methods that rely on flickering LED patterns [17]. In the same year, Huang et al. developed a dynamic event camera calibration method capable of directly extracting calibration patterns from raw event streams, making it suitable for high-dynamic scenarios [18]. In 2023, Salah et al. developed the E-Calib calibration toolbox, which utilizes the robustness of asymmetric circular grids to handle defocused scenes and introduces an efficient reweighted-least-squares (eRWLS) method for the subpixel-precision extraction of circular features in calibration patterns [19]. In 2024, Salah et al. further advanced the E-Calib toolbox by proposing the eRWLS method, achieving subpixel-precision feature extraction under diverse lighting conditions [20]. Concurrently, Liu et al. introduced the LECalib method, which, for the first time, enabled event camera calibration based on line features and is particularly suitable for artificial targets, such as spacecraft with abundant geometric lines [21,22,23]. These advancements in calibration techniques have provided critical support for the application of event cameras in high-precision space measurements.

Event cameras have also demonstrated unique advantages in space target tracking and attitude estimation. In 2023, Jawaid et al. developed the SPADES dataset to address the domain gap issue in satellite attitude estimation, systematically investigating the application of event cameras in space target attitude estimation for the first time [24]. During the Second Satellite Pose Estimation Competition (SPEC2021), organized by Park et al. in 2023, the domain gap between synthetic and real images was highlighted, driving technological progress in this field [25]. In terms of algorithmic innovation, Liu et al. proposed ESVO (Event Stereo Visual Odometry) in 2023, achieving real-time localization and mapping based on stereo event cameras for the first time [26]. In 2024, Wang et al. developed an asynchronous event-stream-processing framework, based on spiking neural networks (SNNs), which suppresses noise through spatiotemporal correlation filtering and achieves target trajectory association using event density features, maintaining stable tracking capabilities even in low signal-to-noise-ratio (SNR) space scenarios [27]. In 2025, Liu et al. proposed a six-degree-of-freedom (6-DOF) pose tracking method for stereo event cameras (stereo-event-based 6-DOF pose tracking). By constructing a target wireframe model and adopting an event-line association strategy, this method enables the continuous pose estimation of non-cooperative spacecraft. Combined with smoothness-constrained optimization, it resolves the motion blur caused by high-speed movements [22].

In summary, while the algorithmic systems for the event stream processing of event cameras have become relatively comprehensive, there remain notable deficiencies in their dedicated algorithms tailored for space exploration. Specifically, although event cameras have not yet matched the detection accuracy of traditional star trackers for space targets, their performance in high-dynamic scenarios—characterized by microsecond-level temporal resolution and wide-dynamic ranges (WDRs)—significantly surpasses that of conventional cameras, providing a critical complement to high-speed-maneuver target detection. However, in the more challenging context of detecting weak high-dynamic space targets, traditional spatiotemporal correlation filtering methods encounter substantial bottlenecks: They struggle to effectively distinguish target events from background noise. This limitation arises from the inherent characteristics of space targets, which feature weak signals and low signal-to-noise ratios (SNRs), compounded by the energy dispersion induced by high-dynamic maneuvers, which further degrade the target recognition performance of conventional methods. To address this core challenge, this paper proposes an event-driven extraction method for weak high-dynamic targets, aiming to enhance the space target detection capability of event cameras in high-dynamic-maneuver scenarios. The key contributions of this work are summarized as follows:

- For weak space targets’ event stream data, we improve the conventional spatiotemporal correlation filter. Specifically, the event stream is first segmented into pseudo-image frames at fixed time intervals; then, based on the trajectory characteristics of the space targets, a circular local sliding window is employed to determine the spatial neighborhood of the events; finally, noise within the event neighborhoods in the pseudo-image frames is assessed based on the event density—either within a single neighborhood or across multiple neighborhoods—thereby achieving effective denoising of the event stream data for weak space targets (Section 2);

- To extract space targets from the event stream, we introduce a density-based DBSCAN clustering algorithm to achieve subpixel target centroid extraction. For certain ultrahigh-dynamic scenarios—where rapid and nonuniform camera motions lead to target information distortion and data discontinuity—we utilize real-time motion data from an inertial measurement unit (IMU) to construct a camera motion model that infers the camera’s position and orientation at any given moment, thereby enabling the precise compensation and correction of trajectory distortions arising during motion (Section 3);

- We developed a ground-based simulation system to verify the applicability of our proposed method with actual cameras and in real-world scenarios (Section 4).

2. Denoising of Weak High-Dynamic Targets

2.1. Methodology

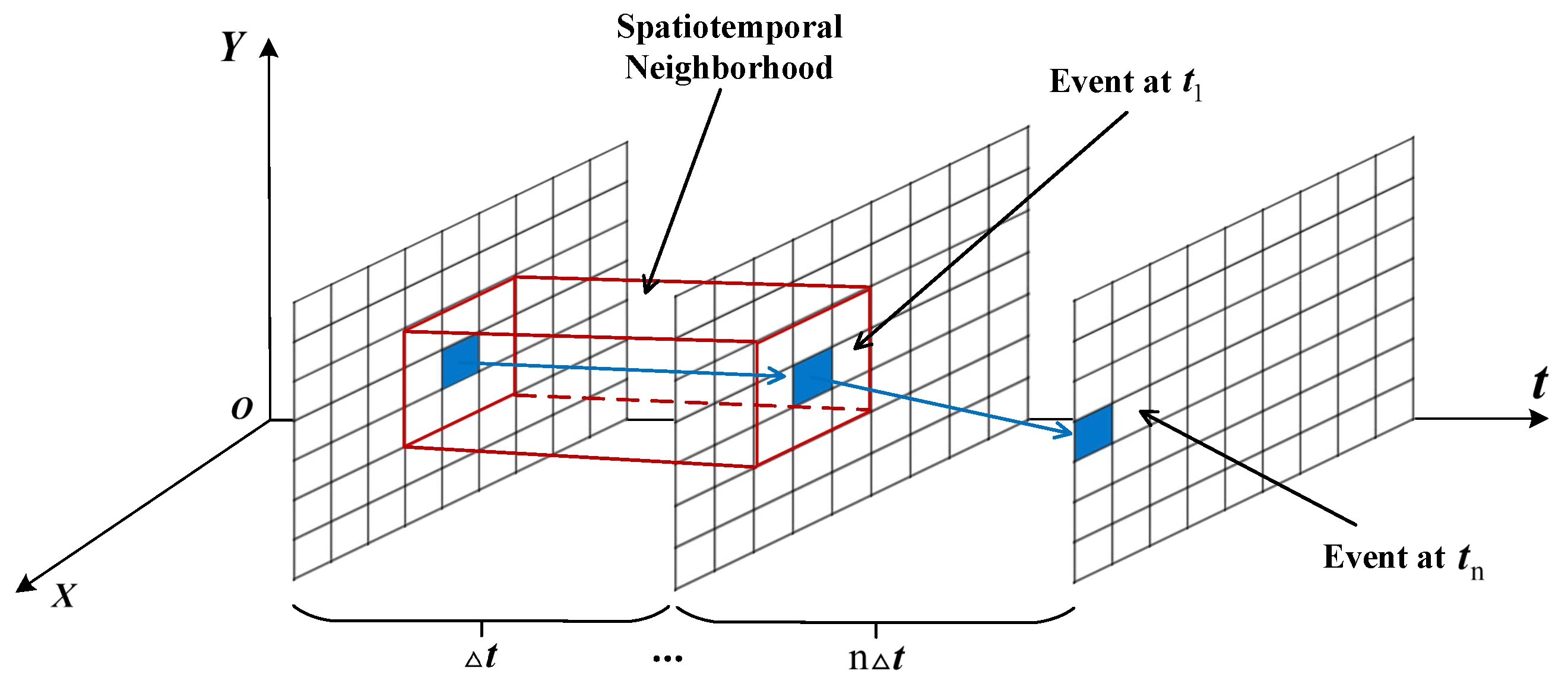

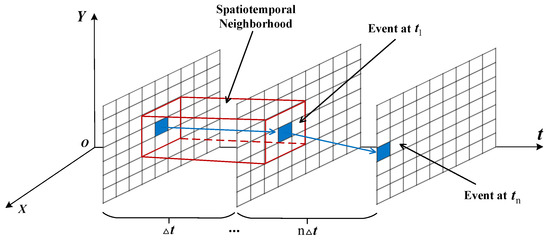

Target events are generated by intensity changes caused by the relative motion between the spacecraft and the target, and the activated pixels are typically adjacent within a certain time period. Noise events, in contrast, lack spatiotemporal correlation within a spatial neighborhood. By exploiting this difference, noise can be filtered out by checking for events produced by neighboring pixels, a process known as the classical spatiotemporal correlation filter (hereafter, the classical filter). As illustrated in Figure 1, the principle is to store, for each pixel, the timestamps of events from its adjacent pixels and then check whether the time difference between the current timestamp and the previous one is less than a preset time threshold; if so, the event is retained; otherwise, it is filtered out [28,29].

Figure 1. Schematic diagram of the classical spatiotemporal correlation filter principle.
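The classical filter described in this subsection can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the implementation used in the paper: the function name `classical_filter`, the event layout `(x, y, t, p)` with microsecond timestamps, the 8-connected neighborhood, and the 5 ms threshold `dt_us` are all hypothetical choices made for the example.

```python
def classical_filter(events, width, height, dt_us=5000):
    """Keep an event only if a neighboring pixel fired less than dt_us earlier.

    events: iterable of (x, y, t, p) tuples sorted by timestamp t (microseconds).
    dt_us:  assumed time threshold; the value is illustrative only.
    """
    # last_ts[y][x] is the latest timestamp written to pixel (x, y) by one of
    # its 8 neighbors; None means no neighbor has fired yet.
    last_ts = [[None] * width for _ in range(height)]
    kept = []
    for x, y, t, p in events:
        prev = last_ts[y][x]
        if prev is not None and t - prev < dt_us:
            kept.append((x, y, t, p))
        # Propagate this timestamp to the 8 adjacent pixels (not to the pixel
        # itself), so that support must come from a neighbor.
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                if dx == 0 and dy == 0:
                    continue
                nx, ny = x + dx, y + dy
                if 0 <= nx < width and 0 <= ny < height:
                    last_ts[ny][nx] = t
    return kept
```

An isolated event is therefore always dropped, and the first event of a genuine trajectory is dropped as well because it has no earlier neighbor to support it.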

However, space targets often suffer from weak signals and low signal-to-noise ratios, making it challenging for conventional spatiotemporal correlation filters to differentiate target events from noise in the event stream. To overcome this limitation, we propose an enhanced filtering method that builds on classical filters. In this approach, the event stream is compressed into pseudo-image frames, and a local sliding window is applied to each frame to adjust the spatial neighborhood selection. By leveraging event density information, the filter determines whether an event is noise. This method is termed the Neighborhood-Density-based Spatiotemporal Event Filter (NDSEF). The detailed procedure is as follows:

The NDSEF’s denoising approach begins by sequentially reading the event stream at fixed time intervals to generate pseudo-image frames, each containing the series of events recorded within its interval.

In the spatial event stream, the motion trajectory of a target signal typically exhibits a roughly cylindrical pattern, and its corresponding local spatial region in a pseudo-image frame tends to be circular or elliptical rather than matching the rectangular window used in conventional spatiotemporal correlation filters. Therefore, in each pseudo-image frame, we employ a circular local sliding window to iteratively examine every event neighborhood, thereby capturing the spatial distribution characteristics of the target signal more precisely.

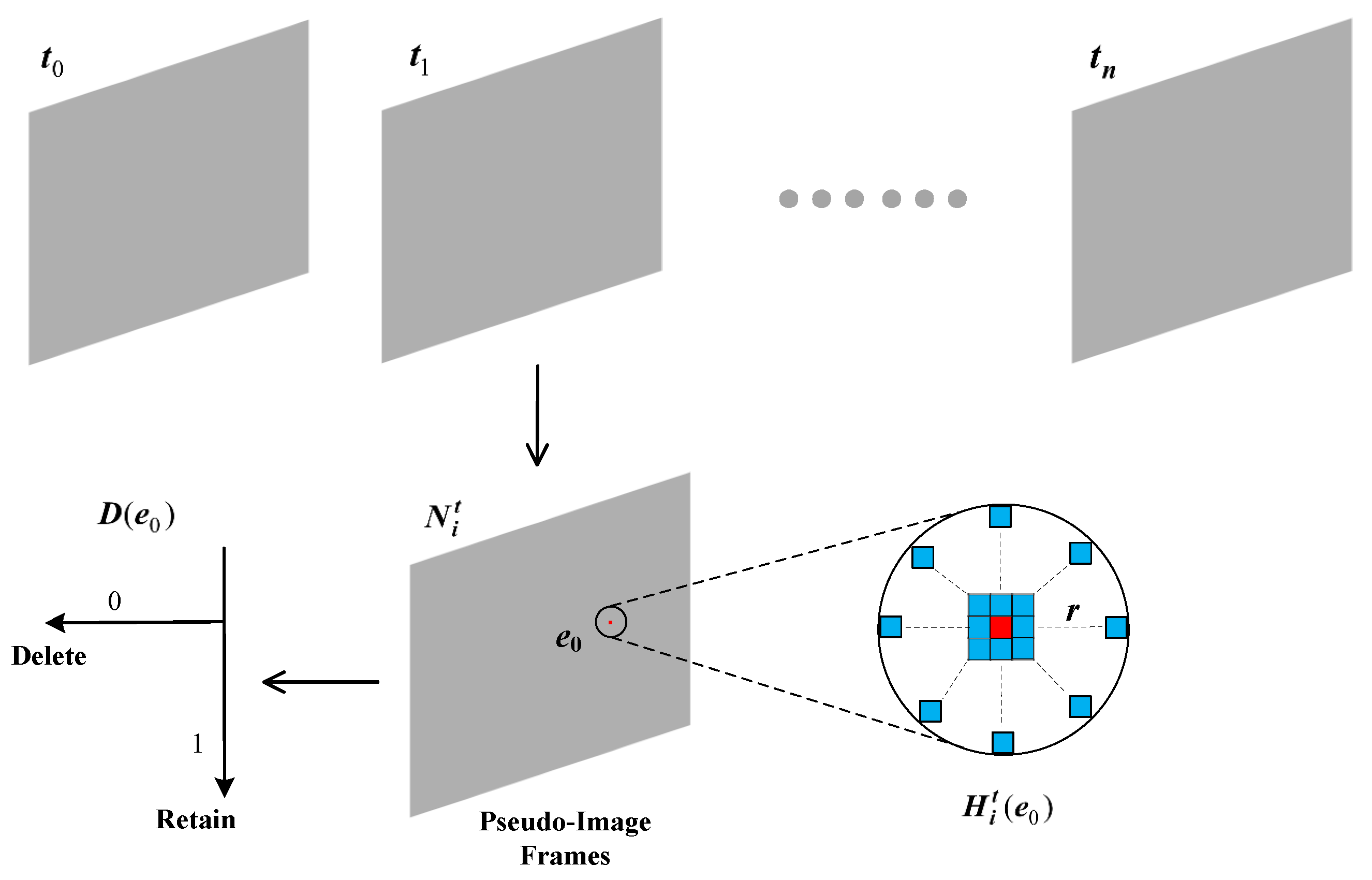

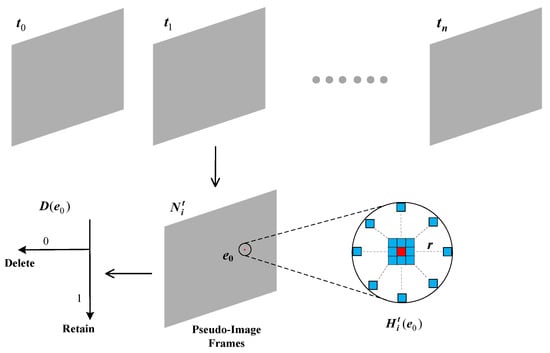

As shown in Figure 2, by setting a neighborhood radius (r), we calculate the density value for the event marked in red in the pseudo-image frame, defined as the number of events in the frame that lie within a distance of r from that event. This density value not only reflects the correlation between events across different timestamps but also reveals the spatial distribution characteristics of events within the local neighborhood.

Figure 2. Noise reduction principle of the NDSEF.
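The neighborhood density can be written compactly. The notation here is an assumption introduced for illustration, since the original symbols are not available in this text: $F$ denotes one pseudo-image frame, $e_i = (x_i, y_i, t_i, p_i)$ an event in that frame, and $\rho_r(e_i)$ its density at radius $r$.

```latex
\rho_r(e_i) = \Bigl|\bigl\{\, e_j \in F \;:\; (x_j - x_i)^2 + (y_j - y_i)^2 \le r^2 \,\bigr\}\Bigr|
```

Whether the event itself is counted is a convention; in this form it is included, so $\rho_r(e_i) \ge 1$.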

At each event location, we characterize the sparsity of an event by the event density accumulated over a fixed time interval. Noise is filtered out by applying an event density threshold, and the filtering outcome is represented by a binary function (D), where 1 denotes a target event and 0 denotes a noise event: D equals 1 when the density exceeds the threshold and 0 otherwise.
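The single-radius NDSEF procedure can be sketched as follows. This is a hedged illustration rather than the authors' code: the names `split_into_frames` and `ndsef_filter`, the event layout `(x, y, t, p)`, and the default values of `r` and `density_threshold` are assumptions, and the brute-force neighbor search is chosen for readability, not speed.

```python
def split_into_frames(events, interval_us):
    """Group (x, y, t, p) events into pseudo-image frames of fixed duration."""
    frames = {}
    for e in events:
        frames.setdefault(e[2] // interval_us, []).append(e)
    return [frames[k] for k in sorted(frames)]


def ndsef_filter(frame_events, r=3.0, density_threshold=4):
    """Single-radius neighborhood-density filter on one pseudo-image frame.

    Returns (kept_events, decisions), where decisions[i] is 1 for a target
    event and 0 for a noise event.
    """
    r2 = r * r
    decisions = []
    for xi, yi, _, _ in frame_events:
        # Count events inside the circular window, including the event itself.
        density = sum(
            1 for xj, yj, _, _ in frame_events
            if (xj - xi) ** 2 + (yj - yi) ** 2 <= r2
        )
        # An event is kept when its neighborhood count exceeds the threshold.
        decisions.append(1 if density > density_threshold else 0)
    kept = [e for e, d in zip(frame_events, decisions) if d == 1]
    return kept, decisions
```

A compact group of events passes the test while an isolated event does not, which is the behavior the density threshold is meant to produce.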

2.2. Optimization

While the aforementioned method achieves satisfactory denoising in low-dynamic space target scenarios, it encounters challenges when an aircraft is in a high-dynamic maneuvering state. Under such conditions, the spatial distribution of target events becomes elongated within an extremely short time. In these cases, the NDSEF method—which relies solely on a fixed-scale neighborhood radius (r) for density calculations—tends to preserve noise due to the larger neighborhood, thereby significantly reducing its denoising performance.

To address the deterioration in the denoising performance under high-dynamic maneuvering conditions, we apply multiple neighborhood radii (ordered from the smallest to the largest) to the same pseudo-image frame, conducting a neighborhood search and density estimation at each scale. Subsequently, the results across different scales are fused to determine whether a particular event should be retained or discarded. The primary steps are described as follows:

- Define a set of radii ordered from the smallest to the largest. For each event in the pseudo-image frame, its neighborhood set at each radius consists of the events in the frame that lie within that radius of the event;

- At each radius, the local neighborhood density is defined as the number of events within the kth-scale neighborhood that are sufficiently close to the event;

- To integrate the density information across multiple scales, we introduce a multiscale fusion function that describes the overall density of an event within the neighborhoods defined by the different radii. Only those events that maintain a certain density across all the scales are recognized as target events, thereby effectively eliminating false detections caused by local noise aggregation;

- In a manner similar to that in the single-scale filtering approach, a final decision threshold is applied to the fused density to determine whether an event is retained (denoted as 1) or discarded (denoted as 0).
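The four steps above can be sketched as follows. The exact fusion function is not recoverable from this text, so the sketch assumes one plausible rule: the fused value is the minimum area-normalized density over all radii, which is high only if the event stays dense at every scale. The names `multiscale_densities`, `fuse`, and `mr_ndsef_filter` and the default `radii` and `fused_threshold` are hypothetical.

```python
import math


def multiscale_densities(frame_events, radii):
    """For each event, return its neighbor count at every radius in `radii`."""
    out = []
    for xi, yi, _, _ in frame_events:
        d2 = [(xj - xi) ** 2 + (yj - yi) ** 2 for xj, yj, _, _ in frame_events]
        out.append([sum(1 for v in d2 if v <= rk * rk) for rk in radii])
    return out


def fuse(densities, radii):
    """Assumed fusion rule: minimum density per unit window area over all scales."""
    return min(rho / (math.pi * rk * rk) for rho, rk in zip(densities, radii))


def mr_ndsef_filter(frame_events, radii=(3, 5, 7), fused_threshold=0.04):
    """Multi-radius filter: keep events whose fused density exceeds the threshold."""
    dens = multiscale_densities(frame_events, radii)
    decisions = [1 if fuse(d, radii) > fused_threshold else 0 for d in dens]
    kept = [e for e, keep in zip(frame_events, decisions) if keep]
    return kept, decisions
```

Under this rule, a tight pair of noise events looks dense at the smallest radius but is rejected because its normalized density collapses at the larger radii, whereas an elongated streak remains dense at every scale.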

Multiscale neighborhood fusion, indeed, enhances the filtering accuracy for weak targets under high-dynamic conditions, but it also incurs additional computational overhead. To ensure the real-time performance, we adopt the following optimization strategies:

- Hierarchical screening: First, a rapid preliminary screening is performed using a small radius to eliminate the vast majority of the sparse noise; only the events retained from this initial screening are then subjected to a more refined density evaluation using larger radii;

- Parallel/neighborhood accumulation: When computing the density across various scales, an octree spatial data structure is employed, enabling a single query to return the aggregation count for multiple radii, thereby reducing redundant scanning.
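Both optimization strategies can be illustrated together. The sketch below deliberately swaps the octree for a simpler uniform grid (a spatial hash), which serves the same purpose of restricting each query to nearby events; it is not the data structure described above. The function names, the per-radius minimum counts in `min_counts`, and the toy default values are assumptions.

```python
from collections import defaultdict


def build_grid(frame_events, cell):
    """Bucket event coordinates into square cells with side length `cell`."""
    grid = defaultdict(list)
    for x, y, _, _ in frame_events:
        grid[(int(x // cell), int(y // cell))].append((x, y))
    return grid


def counts_for_radii(x, y, grid, cell, radii):
    """One pass over the 3x3 block of cells yields the count for every radius.

    Valid as long as no radius exceeds the cell size.
    """
    limits = [r * r for r in radii]
    counts = [0] * len(radii)
    cx, cy = int(x // cell), int(y // cell)
    for gx in (cx - 1, cx, cx + 1):
        for gy in (cy - 1, cy, cy + 1):
            for px, py in grid.get((gx, gy), ()):
                d2 = (px - x) ** 2 + (py - y) ** 2
                for k, lim in enumerate(limits):
                    if d2 <= lim:
                        counts[k] += 1
    return counts


def hierarchical_screen(frame_events, radii=(3, 5, 7), min_counts=(3, 5, 7)):
    """Cheap small-radius screening first, larger radii only for survivors."""
    r_small = radii[0]
    coarse = build_grid(frame_events, r_small)
    survivors = [
        e for e in frame_events
        if counts_for_radii(e[0], e[1], coarse, r_small, [r_small])[0] > min_counts[0]
    ]
    if len(radii) == 1:
        return survivors
    fine = build_grid(frame_events, radii[-1])
    kept = []
    for e in survivors:
        counts = counts_for_radii(e[0], e[1], fine, radii[-1], radii[1:])
        if all(c > m for c, m in zip(counts, min_counts[1:])):
            kept.append(e)
    return kept
```

Because most sparse noise fails the first test, the more expensive multi-radius query runs on only a small fraction of the events in a frame.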

3. Extraction of Weak High-Dynamic Targets

Compared to traditional frame cameras, such as star sensors, event cameras exhibit significantly higher temporal resolutions, with a response speed of up to 1 microsecond/event [30]. This high temporal resolution effectively mitigates target position extraction bias caused by motion blur in high-dynamic scenarios. In this study, we convert the event stream to pseudo-image frames at fixed time intervals and apply a density-based DBSCAN (Density-Based Spatial Clustering of Applications with Noise) clustering algorithm to precisely extract subpixel centroids of moving space targets [31,32].

The DBSCAN clustering algorithm relies on two key parameters: the neighborhood radius and the minimum number of samples per event cluster. In pseudo-image frames, the extraction of target centroids depends on the clustering performance of the DBSCAN algorithm.

The centroid’s coordinates are computed as the weighted average of the spatial coordinates of all the events within each event cluster. Each centroid represents the target’s position in the current frame, and by analyzing the variation in the centroid’s positions across consecutive frames, the target’s motion trajectory can be effectively tracked.
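The clustering and centroid computation can be sketched with a small, dependency-free DBSCAN. This is an illustrative sketch, not the paper's implementation: `eps` and `min_samples` stand for the two DBSCAN parameters named above, and the per-event `weights` are an assumption (uniform by default), because the weighting scheme is not specified in this text.

```python
def region_query(points, i, eps):
    """Indices of all points within eps of points[i], including i itself."""
    xi, yi = points[i]
    return [j for j, (xj, yj) in enumerate(points)
            if (xj - xi) ** 2 + (yj - yi) ** 2 <= eps * eps]


def dbscan(points, eps, min_samples):
    """Return one label per point: 0, 1, ... for clusters and -1 for noise."""
    labels = [None] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_samples:
            labels[i] = -1          # provisional noise; may become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = list(neighbors)
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reached from a core point: border point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nb = region_query(points, j, eps)
            if len(nb) >= min_samples:
                queue.extend(nb)     # j is a core point, so keep expanding
    return labels


def cluster_centroids(points, labels, weights=None):
    """Weighted mean position of each cluster, giving subpixel centroids."""
    if weights is None:
        weights = [1.0] * len(points)
    sums = {}
    for (x, y), lab, w in zip(points, labels, weights):
        if lab == -1:
            continue
        sx, sy, sw = sums.get(lab, (0.0, 0.0, 0.0))
        sums[lab] = (sx + w * x, sy + w * y, sw + w)
    return {lab: (sx / sw, sy / sw) for lab, (sx, sy, sw) in sums.items()}
```

Because the centroid is an average over integer pixel coordinates, it generally falls between pixels, which is what yields subpixel resolution.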

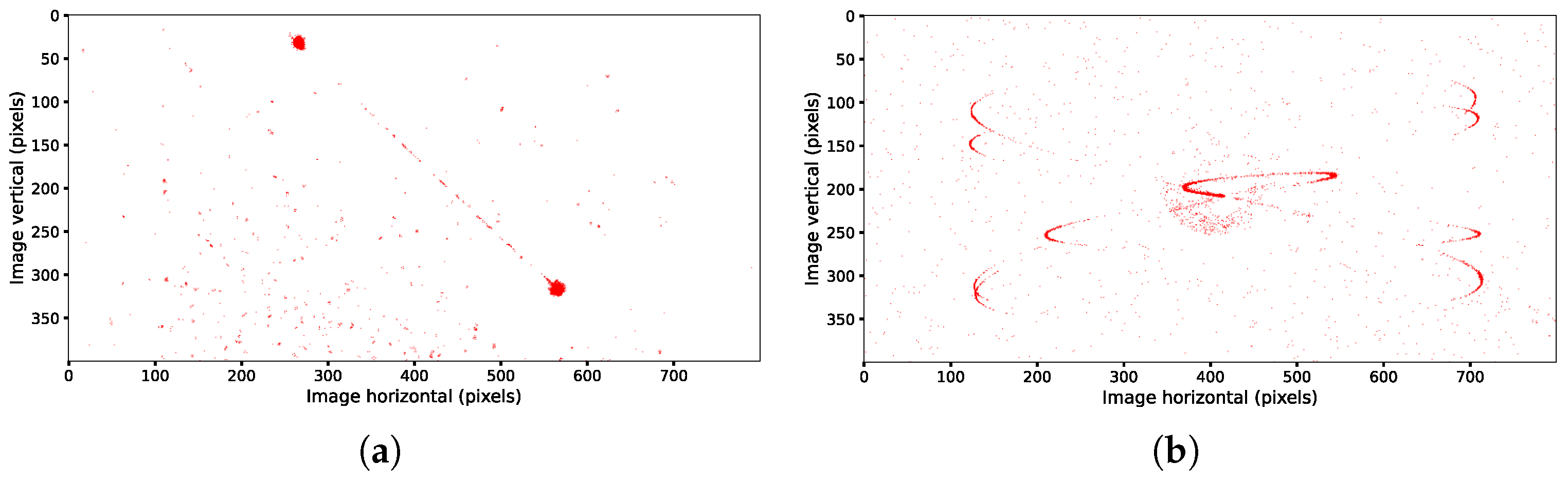

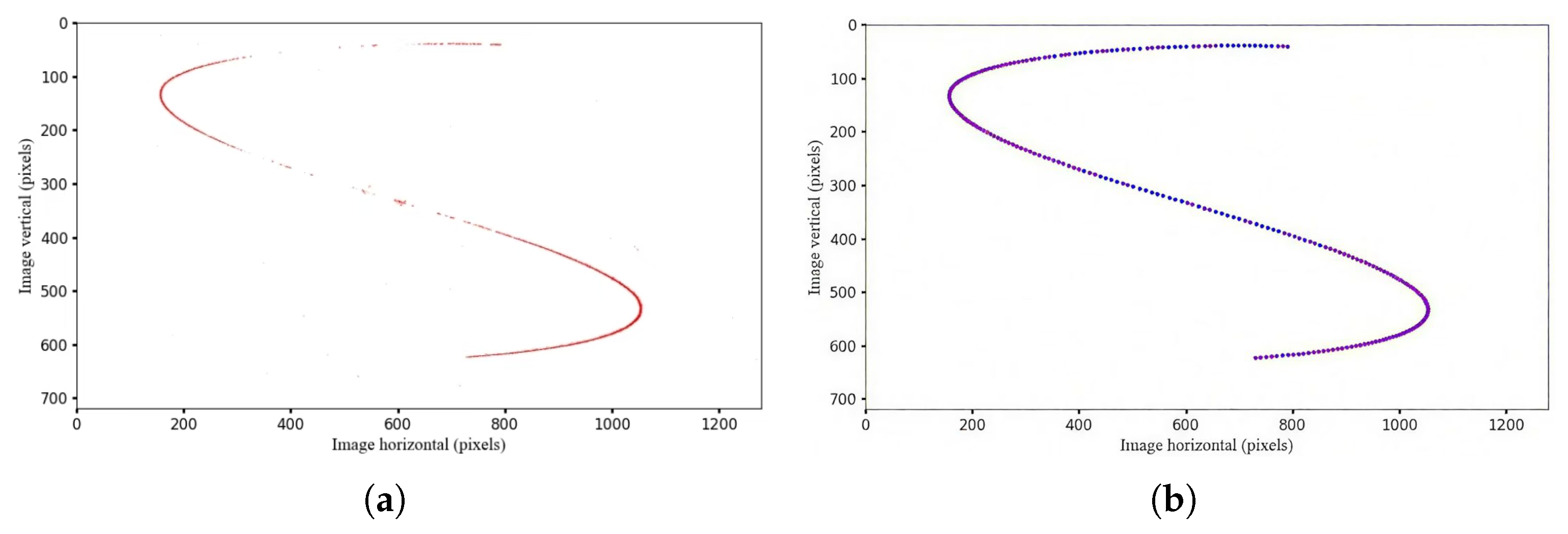

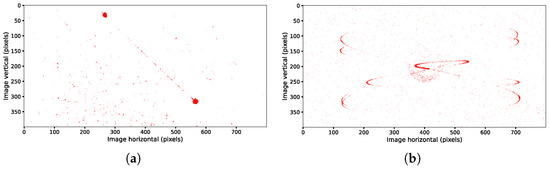

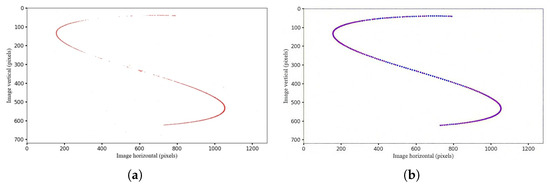

However, under certain ultrahigh-dynamic conditions, the target trajectories captured by the event camera are often distorted due to the camera’s extremely rapid and nonuniform motion. The acquired data exhibit significant discontinuities with large gaps, which compromise the accuracy of the centroid extraction, as shown in Figure 3.

Figure 3. Schematic of the target’s trajectory after optimized-time single-channel filtering. (a) Linear motion with an angular velocity of 20°/s; (b) sinusoidal motion with an amplitude of 10° and a frequency of 1 Hz.

To compensate for the trajectory distortion caused by the camera’s ultrahigh-speed motion and to improve the accuracy of the target centroid extraction, we incorporate camera acceleration and angular velocity data provided by an inertial measurement unit (IMU) to infer and correct the camera’s trajectory. The DVXplorer Lite event camera from iniVation is equipped with an integrated IMU [33]. The real-time motion information supplied by the IMU facilitates the construction of an accurate camera motion model, which allows us to determine the camera’s position and orientation at any given moment and achieve precise compensation for the trajectory distortions incurred during motion.

The camera’s motion consists of translational and rotational components, which can be described using the accelerometer and gyroscope data from the IMU [34,35]. The acceleration measured by the accelerometer allows us to calculate the camera’s velocity and position. Given the camera’s initial velocity and initial position at the starting time, the velocity at any time (t) is the initial velocity plus the time integral of the acceleration, and the position is the initial position plus the time integral of the velocity.

This method calculates the camera’s positional change by doubly integrating the acceleration. The angular velocity, measured by the gyroscope, describes the camera’s rotational speed around the three axes. By integrating the angular velocity over time and adding the result to the camera’s initial orientation, the camera’s orientation at any time can be obtained. Integrating the angular velocity thus gives the camera’s rotational change in three-dimensional space.
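The integration of the IMU data can be sketched numerically as follows. This is a simplified illustration under several assumptions that are not stated in the text: the acceleration is already gravity-compensated and expressed in a fixed frame, the orientation is represented by three per-axis angles integrated independently (reasonable for small or single-axis rotations such as a turntable sweep), and the trapezoidal rule is used. The function name `integrate_imu` and the sample layout are hypothetical.

```python
def integrate_imu(samples, v0=(0.0, 0.0, 0.0), p0=(0.0, 0.0, 0.0),
                  theta0=(0.0, 0.0, 0.0)):
    """Dead-reckon velocity, position, and orientation from IMU samples.

    samples: list of (t, (ax, ay, az), (wx, wy, wz)) with t in seconds,
             sorted by time. Returns a list of (t, v, p, theta) tuples.
    """
    v, p, th = list(v0), list(p0), list(theta0)
    out = [(samples[0][0], tuple(v), tuple(p), tuple(th))]
    for (t0, a0, w0), (t1, a1, w1) in zip(samples, samples[1:]):
        dt = t1 - t0
        for k in range(3):
            # Velocity: integral of acceleration (trapezoidal rule).
            v_new = v[k] + 0.5 * (a0[k] + a1[k]) * dt
            # Position: integral of velocity over the same step.
            p[k] += 0.5 * (v[k] + v_new) * dt
            v[k] = v_new
            # Orientation: integral of angular velocity.
            th[k] += 0.5 * (w0[k] + w1[k]) * dt
        out.append((t1, tuple(v), tuple(p), tuple(th)))
    return out
```

Any constant bias in the accelerometer grows quadratically in the position estimate under this double integration, which is one reason such estimates are usually corrected by an additional filter.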

The IMU and the event camera sample at different rates: the event camera records asynchronous events from rapidly changing dynamic scenes, while the IMU delivers acceleration and angular velocity data at its own sampling frequency, from which the camera’s motion state at each moment is inferred. In practical applications, we interpolate the IMU data to match the event timestamps. After time synchronization, the IMU’s acceleration and angular velocity data can be accurately aligned with each event point captured by the event camera, thereby providing camera motion parameters for every event.
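The time alignment can be sketched with linear interpolation. The interpolation scheme is not specified in the text, so linear interpolation is an assumption here, as are the function name `interpolate_imu` and the choice to clamp event timestamps that fall outside the IMU record.

```python
from bisect import bisect_right


def interpolate_imu(imu_t, imu_values, event_t):
    """Linearly interpolate per-axis IMU values at the event timestamps.

    imu_t:      strictly increasing IMU timestamps.
    imu_values: one tuple of readings per IMU timestamp.
    event_t:    event timestamps in the same time base as imu_t.
    """
    out = []
    for t in event_t:
        if t <= imu_t[0]:
            out.append(tuple(imu_values[0]))    # clamp before the first sample
            continue
        if t >= imu_t[-1]:
            out.append(tuple(imu_values[-1]))   # clamp after the last sample
            continue
        hi = bisect_right(imu_t, t)             # first IMU sample later than t
        lo = hi - 1
        w = (t - imu_t[lo]) / (imu_t[hi] - imu_t[lo])
        out.append(tuple(a + w * (b - a)
                         for a, b in zip(imu_values[lo], imu_values[hi])))
    return out
```

For example, with IMU samples at 0, 1000, and 2000 µs, an event at 1500 µs receives the midpoint of the second and third readings.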

Using the motion information provided by the IMU, we can correct the event points. Each event captured by the camera at time t is described by its position in the image and its polarity (enhancement or suppression). Given the camera’s motion state (position and orientation) at the event’s capture time, obtained from the IMU’s motion data, the corrected position of the event point is computed by applying the compensation model (T) to the event’s image position and the camera’s position and orientation at time t.
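One possible form of the compensation model is sketched below. The actual model T is not given in this text, so the sketch makes explicit assumptions: the target is effectively at infinity (as for stars), so only rotation matters; the camera follows an ideal pinhole model with focal length `f` in pixels and principal point `(cx, cy)`; and `theta` is a rotation vector that takes rays from the camera frame at the event time into the reference frame. All names are hypothetical.

```python
import math


def rodrigues(rx, ry, rz):
    """Rotation matrix for the rotation vector (rx, ry, rz), in radians."""
    angle = math.sqrt(rx * rx + ry * ry + rz * rz)
    if angle < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = rx / angle, ry / angle, rz / angle
    c, s = math.cos(angle), math.sin(angle)
    v = 1.0 - c
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]


def compensate_event(u, v, theta, f, cx, cy):
    """Map pixel (u, v), seen at orientation theta, into the reference view."""
    R = rodrigues(*theta)
    # Back-project the pixel to a viewing ray in the current camera frame.
    ray = ((u - cx) / f, (v - cy) / f, 1.0)
    # Rotate the ray into the reference frame.
    x, y, z = (sum(R[i][j] * ray[j] for j in range(3)) for i in range(3))
    # Re-project onto the reference image plane.
    return (cx + f * x / z, cy + f * y / z)
```

With zero rotation the pixel is returned unchanged, and a pure rotation about the camera's y axis shifts the principal point horizontally by `f * tan(angle)`.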

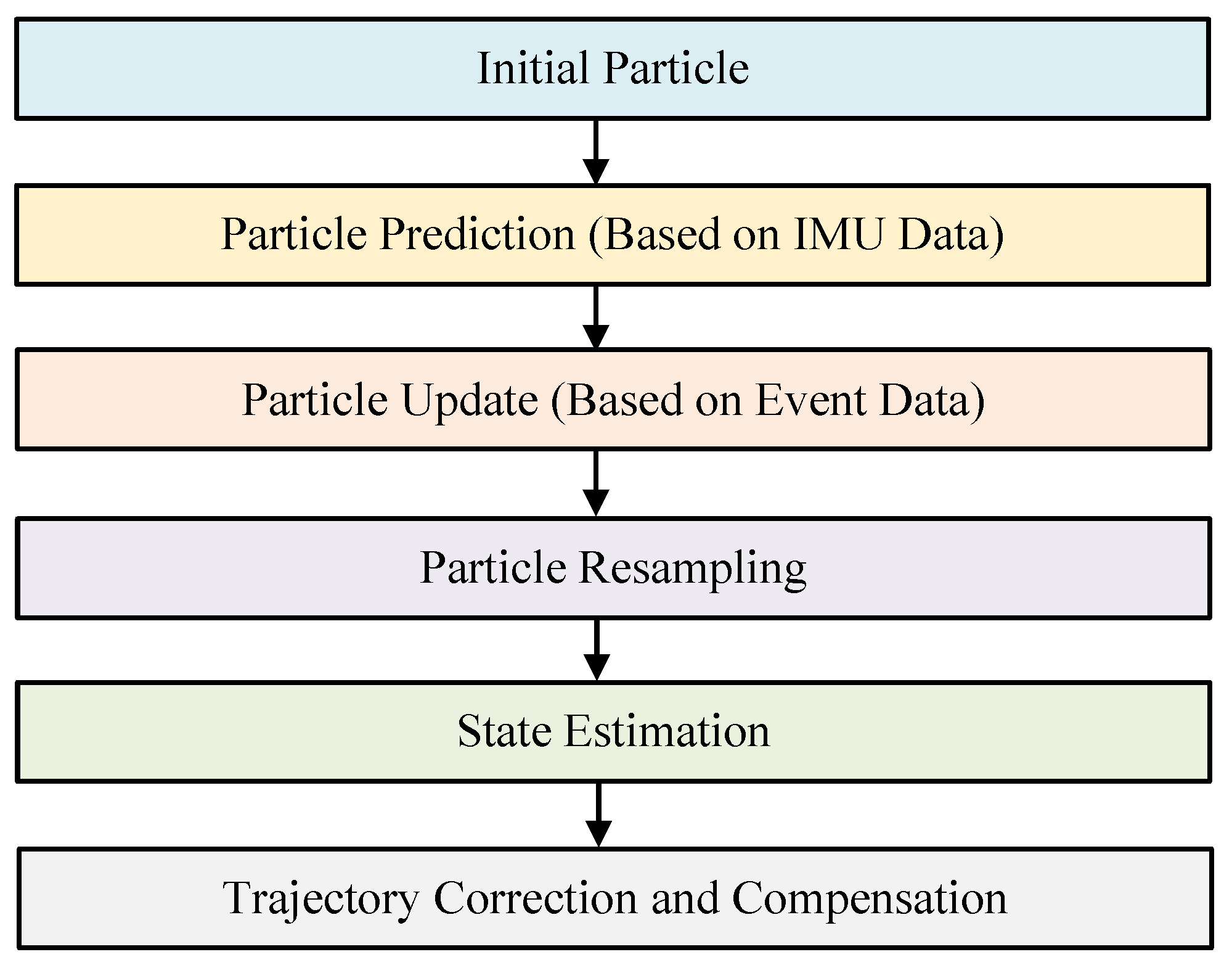

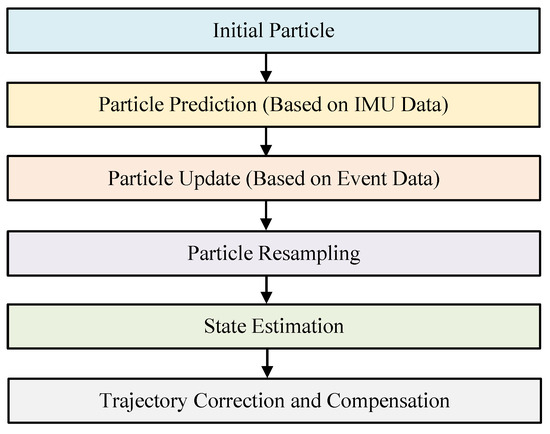

According to the above, we have developed a method that estimates the camera’s motion using IMU data to compensate for the high-dynamic trajectories of star points. However, the IMU sensor itself introduces high-frequency random noise under dynamic conditions and may accumulate biases and drifts over prolonged use, due to factors such as zero offset and temperature variations. To further improve the accuracy of the target trajectory’s compensation, this paper introduces a particle-filtering algorithm to correct compensation errors caused by noise or sensor offsets. Particle filtering is a state estimation algorithm, based on Monte Carlo methods, which estimates the true state of the system by incorporating multiple hypothesized states (particles) and assigning a weight to each, thereby overcoming the limitations of traditional Kalman filtering in handling nonlinearities and non-Gaussian noise. The computational flowchart for the particle-filtering algorithm is shown in Figure 4.

Figure 4. Flowchart of the particle-filtering algorithm.

- Initializing the Particle Set: The first step in particle filtering is initializing a set of particles, each representing a possible state of the camera described by its motion parameters, namely, position, velocity, and orientation. These particles can be initialized using the IMU’s initial data or randomly based on a prior motion model;

- Particle Prediction (Based on IMU Data): At each time step (k), each particle’s current state is predicted from its previous state and the IMU’s acceleration and angular velocity through the motion model;

- Particle Update (Based on Event Data and Observations): The core of particle filtering is updating the particles’ weights using the event camera’s observational data. Each particle’s weight is proportional to how closely its predicted state matches the actual observational data. For each particle (i), the match between the particle’s predicted state and the event camera’s observational data is calculated to determine the particle’s weight, and particles with higher match values receive higher weights;

- Particle Resampling: Particle filtering resamples the particles based on their weights, creating a new particle set. This eliminates low-weight particles and focuses on those that better match the actual observational data, improving accuracy and robustness;

- State Estimation: The optimal estimate at the current time is obtained as the weighted average of the particles’ states, that is, the sum of the states of all the particles, each multiplied by its normalized weight;

- Trajectory Correction and Compensation: Using the optimal estimated state, the trajectory of star points captured by the event camera can be corrected. Particle filtering compensates for lost or distorted event points, corrects deviations in the camera’s trajectory, and restores the original target event’s trajectory.
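The steps above can be sketched with a deliberately reduced bootstrap particle filter. To stay short, the sketch tracks a single orientation angle instead of the full position, velocity, and orientation state, uses a Gaussian likelihood as the match function, and applies systematic resampling; all of these, together with the function names and noise parameters, are assumptions made for illustration.

```python
import math
import random


def predict(particles, omega, dt, rate_noise, rng):
    """Propagate each particle with the gyro rate plus random rate noise."""
    return [th + (omega + rng.gauss(0.0, rate_noise)) * dt for th in particles]


def update(particles, z, meas_std):
    """Normalized weights from a Gaussian match between particles and observation z."""
    w = [math.exp(-0.5 * ((z - th) / meas_std) ** 2) for th in particles]
    total = sum(w)
    if total == 0.0:
        return [1.0 / len(particles)] * len(particles)
    return [wi / total for wi in w]


def resample(particles, weights, rng):
    """Systematic resampling: high-weight particles are duplicated, low-weight dropped."""
    n = len(particles)
    step = 1.0 / n
    u = rng.random() * step
    out, cum, i = [], weights[0], 0
    for _ in range(n):
        while u >= cum and i < n - 1:
            i += 1
            cum += weights[i]
        out.append(particles[i])
        u += step
    return out


def estimate(particles, weights):
    """Weighted average of the particle states."""
    return sum(p * w for p, w in zip(particles, weights))


def run_filter(omegas, observations, dt, n=500, rate_noise=5.0,
               meas_std=0.2, seed=0):
    """Run predict, update, estimate, and resample for every time step."""
    rng = random.Random(seed)
    particles = [rng.gauss(0.0, 1.0) for _ in range(n)]
    estimates = []
    for omega, z in zip(omegas, observations):
        particles = predict(particles, omega, dt, rate_noise, rng)
        weights = update(particles, z, meas_std)
        # The estimate is taken before resampling, while the weights still
        # carry the information from the observation.
        estimates.append(estimate(particles, weights))
        particles = resample(particles, weights, rng)
    return estimates
```

In this reduced form, `observations` would be the angle implied by the extracted target centroid in each pseudo-image frame, and `omegas` the gyroscope readings interpolated to the same times.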

4. Experiments and Results

4.1. Experimental Setup

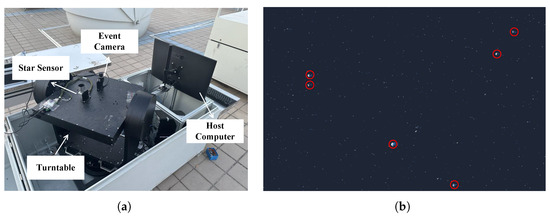

Experimental targets primarily consist of high-dynamic objects in space. Most of these targets are at great distances and appear as point-like objects on the imaging plane, while a subset are extended targets. Notably, the point-like targets occupy from only a few to a dozen pixels in the captured images, closely resembling the appearance of distant stars. Therefore, the design of the light source’s brightness in the experiment can be referenced to stellar magnitude standards.

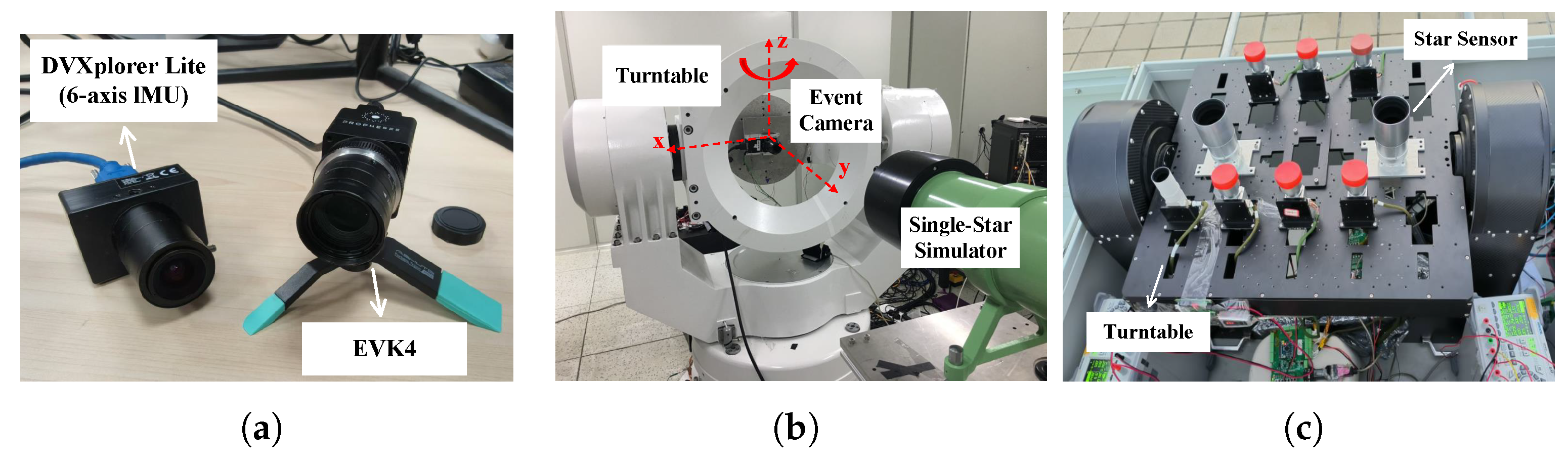

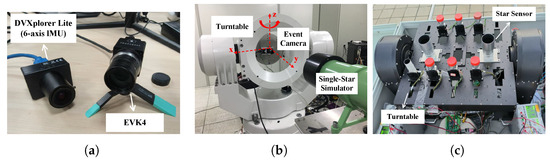

Since starlight can be regarded as parallel light, a single-star simulator was constructed using a combination of an LED light source, a mask, and a collimator. Specifically, the single-star simulator generates parallel light, with a brightness similar to that of a star, through the LED light source, uses the mask to adjust the spot size, and then transmits the beam to the event camera’s field of view through the collimator. To ensure that the parallel light emitted by the single-star simulator adequately covers the field of view of the event camera, the event camera is mounted on a high-precision turntable that is aligned parallel to the single-star simulator, as shown in Figure 5b. In addition, for the brightness calibration of the LED light source in the single-star simulator, the experiment referenced nighttime observational data from a star sensor. Figure 5c shows the experimental setup for nighttime star observation, which provided the basis for the light source calibration in this experiment.

Figure 5. Experimental environment and equipment. (a) The event cameras used in the experiment (DVXplorer Lite: iniVation, Basel, Switzerland; EVK4: Sony, Atsugi, Japan, and PROPHESEE); (b) high-dynamic-measurement experimental setup (turntable: State Key Laboratory of Precision Measurement Technology and Instruments, Tsinghua University, Beijing, China); (c) real night stargazing experimental setup (turntable and star sensors: State Key Laboratory of Precision Measurement Technology and Instruments, Tsinghua University).

In this study, the aforementioned experimental apparatus was employed to conduct multiple experiments within an angular velocity range of 4–20°/s. Detailed analyses and comparisons of the noise reduction performances and centroid extraction accuracies of the proposed method were carried out, thereby investigating the capability of the event camera to detect space targets under high-dynamic conditions.

The proposed event-based method for extracting weak high-dynamic targets involves multiple parameters, whose optimization critically determines the algorithm’s feasibility; this section provides implementation guidance for optimizing the key parameters.

- Temporal Interval: Event cameras typically operate at microsecond temporal resolutions. Conventional spatiotemporal denoising methods applied at these resolutions risk event loss during high-dynamic imaging. To mitigate this, our algorithm integrates events into pseudo-image frames, using intervals calibrated against typical star tracker exposure durations (e.g., 5 ms, 10 ms, and 20 ms). This ensures that sufficient space target events are captured per frame while maintaining the advantages of a high temporal resolution;

- Neighborhood Parameters (r, R) and Density Threshold: These values were empirically determined through the statistical analysis of experimental data across 4–20°/s angular velocities, with scenario-adaptive adjustments: Stronger motion dynamics (which reduce the target energy) necessitate larger radii (r) and lower density thresholds, while elevated stray light conditions require smaller radii (r) and higher thresholds. Spatial distribution analysis under varying angular velocities yielded the following statistically optimal parameters, based on high-dynamic star-imaging characteristics: a neighborhood radius set of R = 3, 5, 7, 9, 11, … pixels and a density threshold of 25–100 pixel densities (equivalent to 5 × 5–10 × 10 pixel areas). Target events are identified when neighborhood event counts exceed these thresholds.

4.2. Experimental Results and Analysis

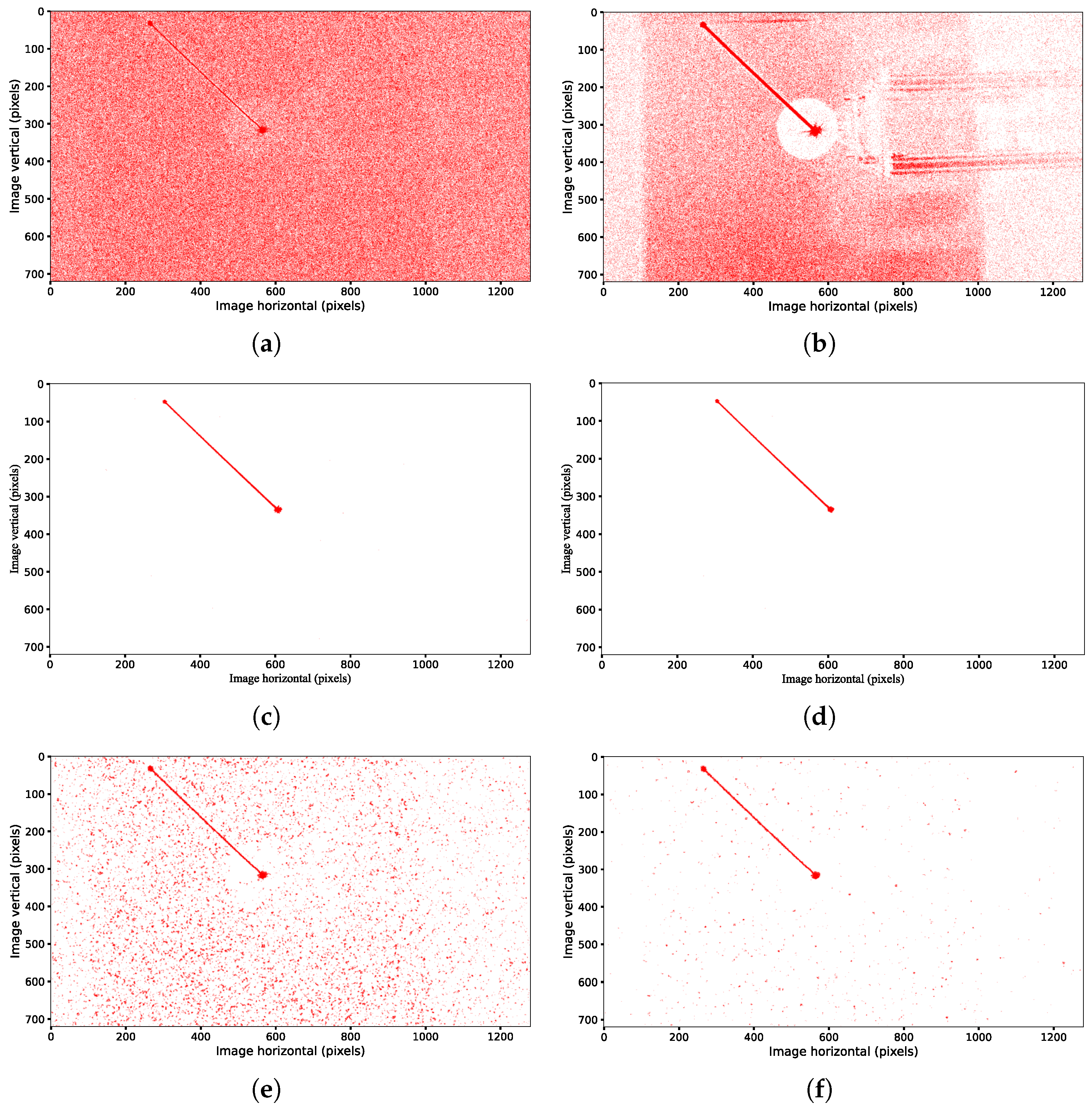

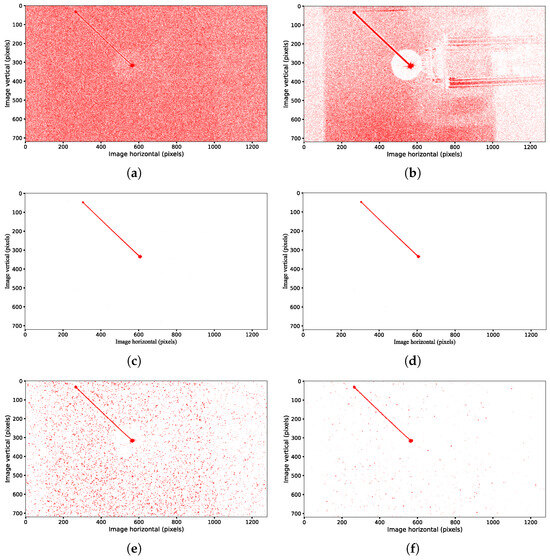

Figure 6 presents a comparison of the denoising performances among the classical filter, the NDSEF filter, and the multi-neighborhood-radius-based NDSEF filter (referred to as MR-NDSEF) in various angular velocity scenarios, with a constant light source brightness (equivalent to that of a magnitude-three star). Notably, the results in Figure 6e,f are based on IMU data for target trajectory compensation, and their performances can be compared with the compensation results shown in Figure 3.

Figure 6. Denoising and optical-flow compensation results. (a) Original image at 10°/s. (b) Classical filter at 10°/s. (c) NDSEF filter at 10°/s. (d) MR-NDSEF filter at 10°/s. (e) NDSEF filter at 20°/s. (f) MR-NDSEF filter at 20°/s.

From Figure 6a–d, it can be observed that when the angular velocity is relatively low for high-dynamic maneuvers (10°/s), the classical filter, although capable of preserving target signal events, still leaves a significant amount of noise after filtering, thereby increasing the difficulty of the subsequent target extraction. In contrast, both the NDSEF filter and the MR-NDSEF filter markedly improve the denoising performance: the target signals appear much clearer, and the use of a circular local sliding window better conforms to the characteristics of the target signals’ trajectory in the spatial data. Moreover, the results in Figure 6e,f indicate that when the camera operates at higher angular velocities, the energy of the target signal is further diminished, and the MR-NDSEF filter exhibits an even better denoising performance than the NDSEF filter.

Evaluating the denoising performance requires not only qualitative visual assessments but also scientifically rigorous and comprehensive quantitative metrics. To facilitate the quantitative evaluation of the noise filters, this paper employs the signal-to-noise ratio (SNR) for quantitative analysis. The SNR reflects the energy ratio between the target signal and the noise before and after filtering and is defined by the following equations:

The quantities in the equations are the number of events after denoising, the number of events before denoising, the original event stream, the event stream with added noise, and the signal-to-noise ratios before and after denoising.
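As a rough illustration of how an event-stream SNR can be evaluated before and after filtering, the sketch below uses one common convention, the decibel ratio of target-event count to noise-event count. This is an assumption and may differ from the paper's own definition, whose equations are not available in this text. The names `snr_db` and `snr_before_after` are hypothetical, and the ground-truth labels `is_target` would have to come from a known target trajectory.

```python
import math


def snr_db(n_signal, n_noise):
    """Assumed SNR convention: 10 * log10(target events / noise events)."""
    if n_noise == 0:
        return math.inf
    if n_signal == 0:
        return -math.inf
    return 10.0 * math.log10(n_signal / n_noise)


def snr_before_after(is_target, kept):
    """SNR of the raw stream and of the filtered stream.

    is_target: one boolean per event, True for ground-truth target events.
    kept:      one boolean per event, True if the filter retained the event.
    """
    sig_before = sum(1 for s in is_target if s)
    noise_before = len(is_target) - sig_before
    sig_after = sum(1 for s, k in zip(is_target, kept) if s and k)
    noise_after = sum(1 for s, k in zip(is_target, kept) if (not s) and k)
    return snr_db(sig_before, noise_before), snr_db(sig_after, noise_after)
```

Reporting both values makes it clear whether a filter gains SNR by removing noise or merely by discarding events of both kinds.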

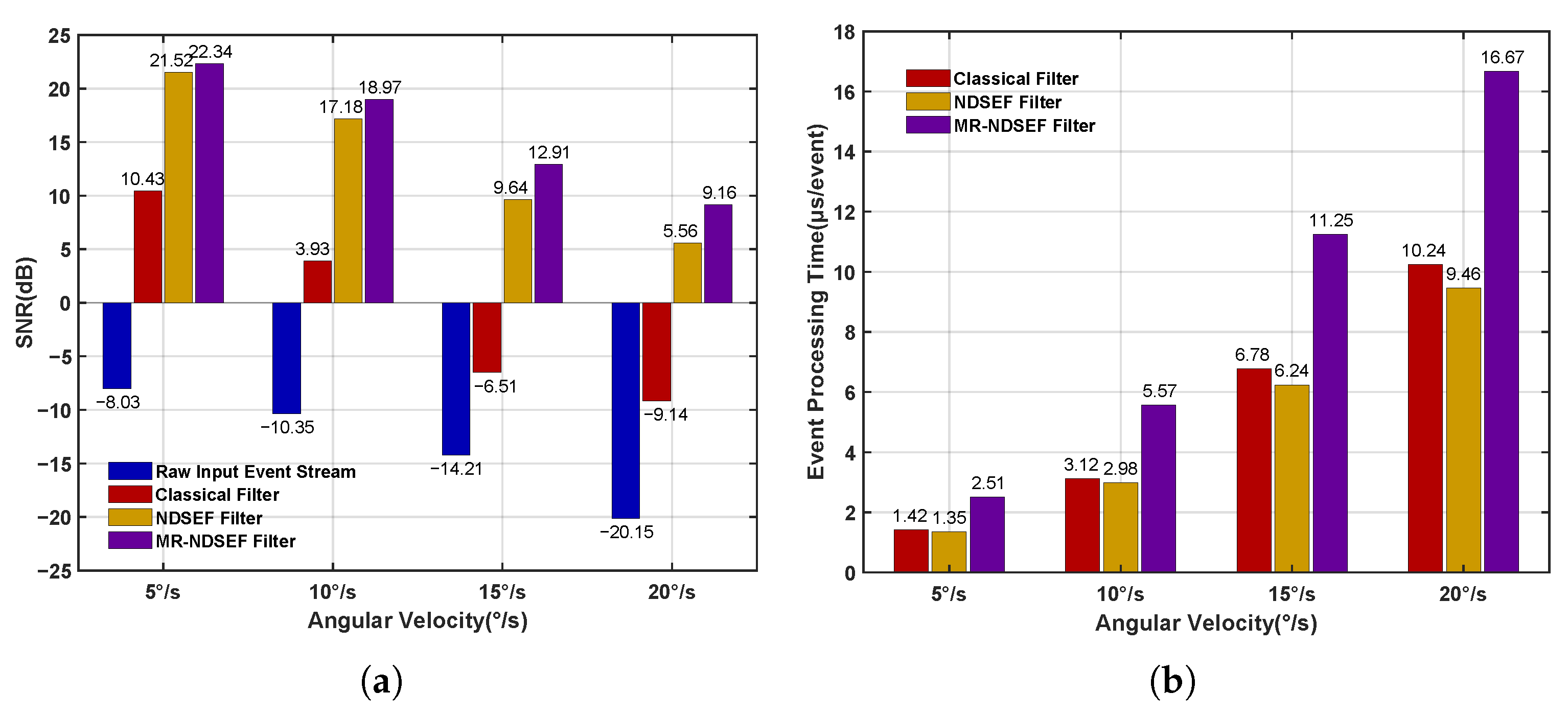

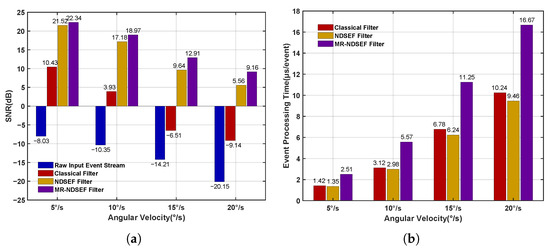

Figure 7 quantitatively evaluates the filtering qualities of the classical filter, the NDSEF filter, and the MR-NDSEF filter, using two metrics: the signal-to-noise ratio (SNR) and event-processing time. As shown in the figure, after denoising with both the NDSEF and MR-NDSEF filters, the signal-to-noise ratio of the space target’s event stream is significantly improved. Notably, at relatively high angular velocities, the MR-NDSEF filter exhibits a particularly outstanding filtering performance. Furthermore, the event-processing time of the NDSEF filter is comparable to that of the classical filter, while the MR-NDSEF filter requires a relatively longer processing time, which is generally maintained at approximately 10 µs, thereby satisfying the real-time requirements of space exploration.

Figure 7. Evaluation of the noise reduction effect. (a) Signal-to-noise ratio (SNR). (b) Event-processing time.

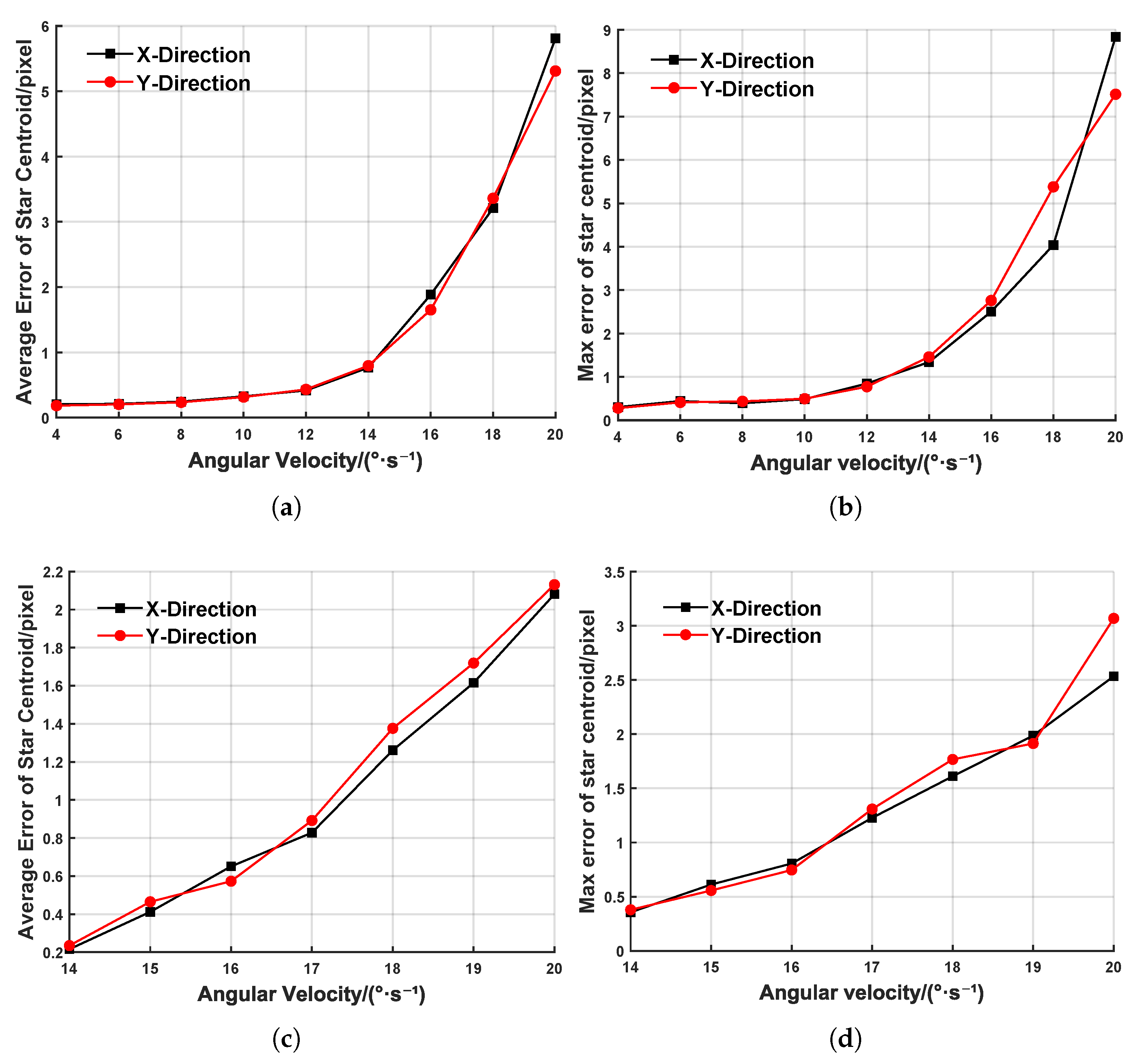

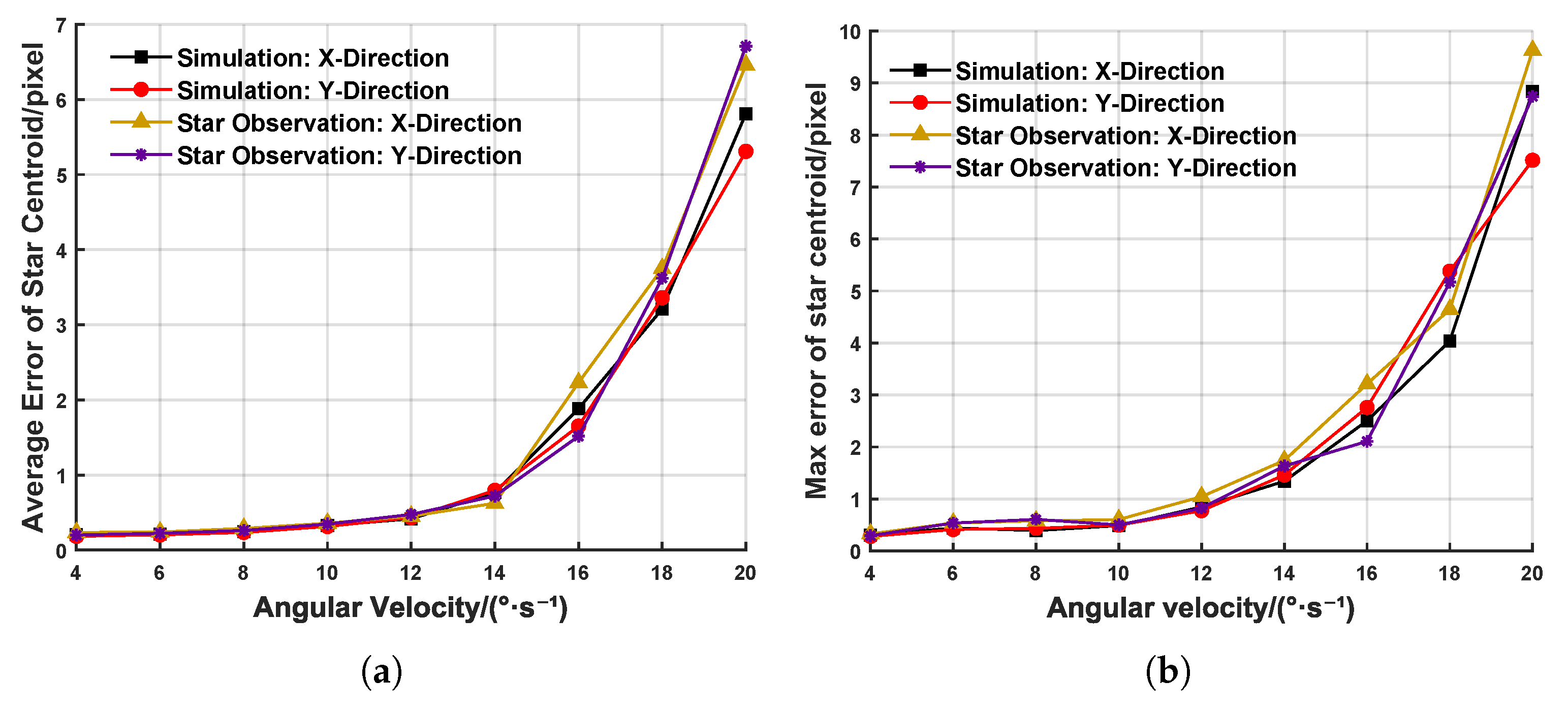

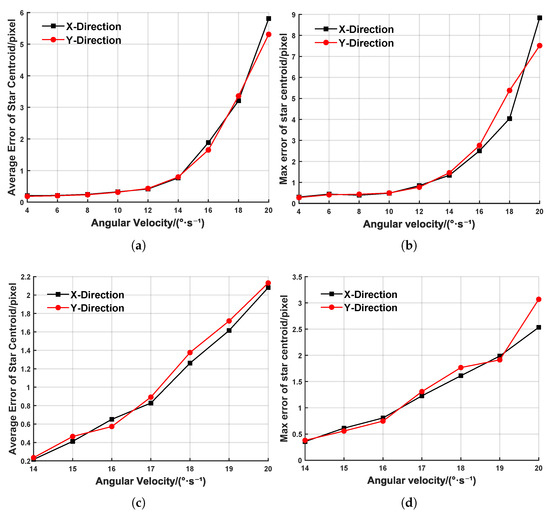

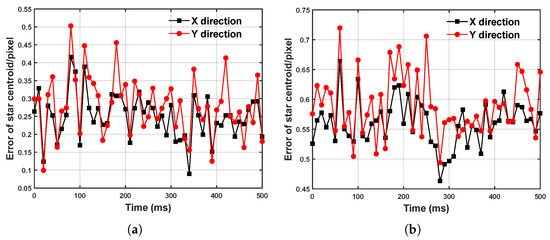

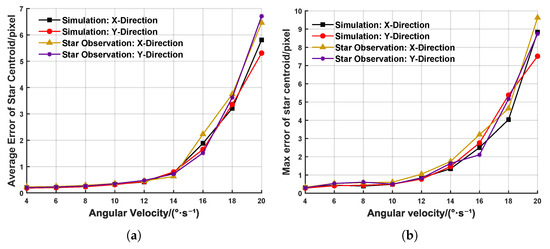

To validate the effectiveness of the DBSCAN algorithm and the IMU-based event compensation method for target centroid extraction under high-dynamic conditions, we selected, for each angular velocity, 500 ms event streams with a stable angular velocity and a stable acceleration. We extracted the target centroids and computed the average and maximum errors between the extracted centroids and the theoretical ground truth, as shown in Figure 8. In Figure 8a,b, when the angular velocity is in the range 4–12°/s, the average centroid error is less than 0.5 pixels, and the maximum error remains at the subpixel level. However, when the angular velocity increases to 12–20°/s, the extremely rapid and nonuniform camera motion leads to missing target events and gaps between events, and both the average and maximum centroid errors rise sharply. At 20°/s, the average error exceeds five pixels, which in principle makes the uncompensated result unsuitable for space target exploration. Figure 8c,d presents the centroid extraction errors after IMU-based event compensation and shows a significant improvement in the extraction accuracy. At 20°/s, the average error is reduced by 65% and the maximum error by 71%, which demonstrates the effectiveness of the IMU-based compensation method in mitigating missing events under extremely high-dynamic conditions.

Figure 8. Error of centroid extraction at different angular velocities. (a) Average error of centroid extraction. (b) Maximum error of centroid extraction. (c) Average error of centroid extraction (after IMU compensation). (d) Maximum error of centroid extraction (after IMU compensation).
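The evaluation described above (cluster the events, take a subpixel centroid, then compare with the ground truth) can be sketched in a few lines of Python. This is a minimal, dependency-free illustration rather than the implementation used in the experiments: the textbook DBSCAN below, the choice of the largest cluster as the target, the unweighted mean as the centroid, and the parameter names `eps` and `min_pts` are all assumptions.

```python
import math


def dbscan(points, eps, min_pts):
    """Minimal textbook DBSCAN; returns one label per point (-1 = noise)."""
    n = len(points)
    labels = [None] * n

    def neighbors(i):
        xi, yi = points[i]
        return [j for j in range(n)
                if math.hypot(points[j][0] - xi, points[j][1] - yi) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = -1               # provisionally noise
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster      # border point reached from a core point
            if labels[j] is not None:
                continue                 # already assigned, do not expand again
            labels[j] = cluster
            nbrs = neighbors(j)
            if len(nbrs) >= min_pts:
                queue.extend(nbrs)       # j is a core point: keep expanding
    return labels


def largest_cluster_centroid(points, labels):
    """Unweighted mean position of the most populated cluster (None if none)."""
    counts = {}
    for lab in labels:
        if lab != -1:
            counts[lab] = counts.get(lab, 0) + 1
    if not counts:
        return None
    best = max(counts, key=counts.get)
    members = [p for p, lab in zip(points, labels) if lab == best]
    cx = sum(p[0] for p in members) / len(members)
    cy = sum(p[1] for p in members) / len(members)
    return cx, cy


def centroid_errors(estimates, truths):
    """Average and maximum Euclidean error (pixels) over paired centroids."""
    errs = [math.hypot(ex - tx, ey - ty)
            for (ex, ey), (tx, ty) in zip(estimates, truths)]
    return sum(errs) / len(errs), max(errs)
```

Because the centroid is a mean over integer pixel coordinates, it takes fractional values, which is what allows subpixel accuracy. Applying `centroid_errors` to the centroids from successive time slices of a 500 ms stream would give average and maximum errors of the kind reported in Figure 8.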

All the experiments described above were conducted under conditions of a constant angular velocity. To further validate the reliability of our method for target centroid extraction, we analyzed an event stream collected while the camera underwent sinusoidal motion at varying frequencies and amplitudes (with non-constant angular velocities and accelerations). Figure 9a shows the event stream acquired under sinusoidal motion with an amplitude of 10° and a frequency of 1 Hz, while Figure 9b presents the target centroid results extracted from the event stream after applying event compensation based on IMU data.

Figure 9. Event trajectory acquired by the event camera under sinusoidal motion (after noise reduction). (a) Before event compensation. (b) After event compensation.
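The idea behind IMU-based event compensation can be illustrated with a simplified single-axis model. The sketch below integrates gyroscope rate samples into a rotation angle and warps each event's x coordinate back to a common reference time. It is an assumption-laden illustration, not the camera motion model constructed in this paper: it assumes a pinhole camera with focal length `f_px` in pixels and principal point `cx`, rotation about one axis only, a sign convention in which a positive rotation decreases the target's bearing in the camera frame, and it neglects the smaller change in y. All function names are hypothetical.

```python
import math
from bisect import bisect_right


def integrate_gyro(times, rates):
    """Trapezoidal integration of angular rate (rad/s) into angle (rad),
    with the angle set to zero at the first sample."""
    angles = [0.0]
    for k in range(1, len(times)):
        dt = times[k] - times[k - 1]
        angles.append(angles[-1] + 0.5 * (rates[k] + rates[k - 1]) * dt)
    return angles


def angle_at(t, times, angles):
    """Linear interpolation of the integrated angle at time t."""
    k = min(max(bisect_right(times, t), 1), len(times) - 1)
    t0, t1 = times[k - 1], times[k]
    w = (t - t0) / (t1 - t0)
    return angles[k - 1] + w * (angles[k] - angles[k - 1])


def compensate_events(events, times, rates, f_px, cx, t_ref):
    """Warp events (x, y, t) to where they would appear at time t_ref."""
    angles = integrate_gyro(times, rates)
    theta_ref = angle_at(t_ref, times, angles)
    out = []
    for x, y, t in events:
        # Bearing of the event in the camera frame at its own timestamp.
        bearing = math.atan((x - cx) / f_px)
        # Undo the rotation accumulated between t_ref and t (assumed sign).
        bearing_ref = bearing + angle_at(t, times, angles) - theta_ref
        out.append((cx + f_px * math.tan(bearing_ref), y, t))
    return out
```

With rate samples taken from a sinusoidal motion of amplitude 10° and frequency 1 Hz, events from a fixed target that are spread over more than a hundred pixels before compensation collapse onto nearly a single x position afterward, provided the gyroscope is sampled densely enough for the trapezoidal integration to be accurate.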

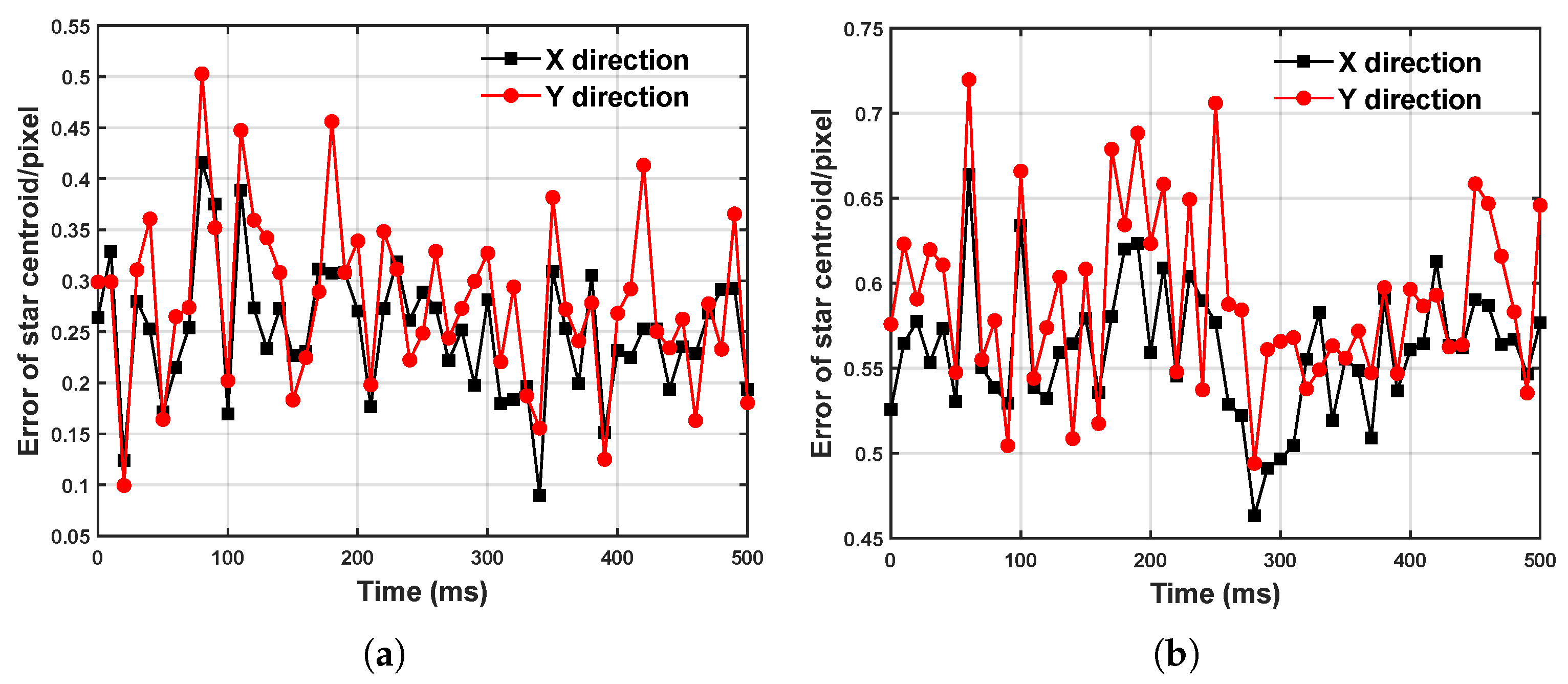

From this event stream, we randomly selected two 500 ms segments of event data and calculated, for each segment, the average error between the extracted centroid and the theoretical ground truth. As shown in Figure 10a,b, under nonlinear and nonuniform motion conditions, the average error in the target centroid extraction remains at the subpixel level. This indicates that even under complex motion conditions, the event compensation method based on IMU data effectively improves the accuracy of the target extraction.

Figure 10. Error of centroid extraction under sinusoidal motion. (a) The first 500 ms event data stream. (b) The second 500 ms event data stream.

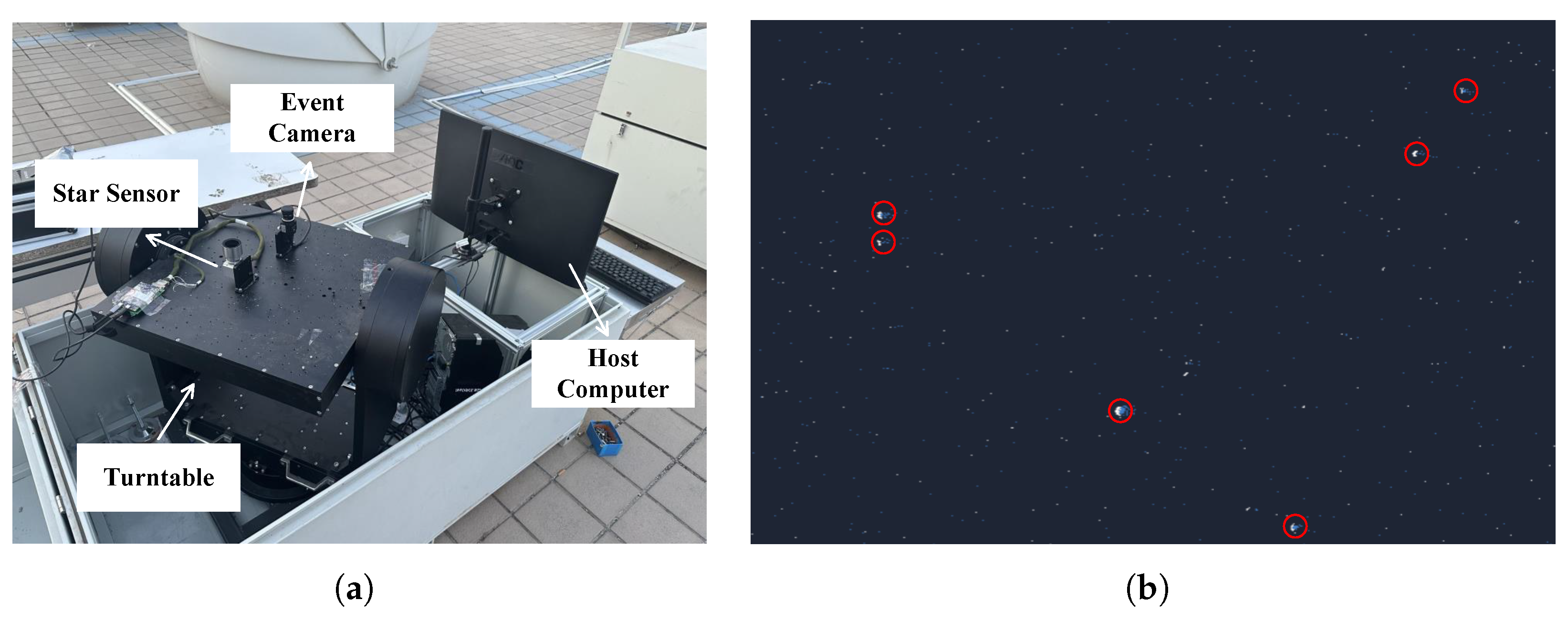

5. Verification

For validation, we conducted an outdoor stellar observation experiment, as illustrated in Figure 11a. In this experiment, a star sensor and an event camera were co-mounted on a rotary table. Using the well-established target recognition algorithm of the star sensor, celestial objects were precisely identified, which enabled the calibration of the equivalent stellar magnitudes of the targets captured by the event camera. Figure 11b presents the experimental results of this outdoor stellar observation, which demonstrate the feasibility of using event cameras for target detection in space.

Figure 11. Nighttime star observation experiment. (a) Experimental equipment. (b) Experimental results (red circles mark the identifiable navigation stars).

By leveraging the existing equipment and resources, we conducted multiple stellar observation experiments and compared the experimental results with those from ground-based simulation experiments (targeting magnitude-three stars). The error distributions are shown in Figure 12. These results validate the effectiveness and reliability of the proposed event-based extraction method for weak high-dynamic targets.

Figure 12. Error of centroid extraction at different angular velocities in ground simulation and stargazing experiments. (a) Average error of centroid extraction. (b) Maximum error of centroid extraction.

6. Conclusions

In this paper, we proposed an event data extraction method tailored for weak, high-dynamic targets. Unlike existing event target extraction algorithms, our approach accounts for the trajectory characteristics of moving targets during the event-stream-denoising stage by employing the multiscale neighborhood density for noise determination, thereby significantly enhancing the signal-to-noise ratios of high-dynamic space targets. In the target extraction phase, we integrate the DBSCAN clustering algorithm and also address trajectory distortion and data discontinuity in ultrahigh-dynamic scenarios: a camera motion model built from real-time inertial measurement unit (IMU) data is used to compensate for and correct the target’s trajectory. The experimental results validate the robustness and reliability of our algorithm in both denoising and target extraction. Future work will focus on the following directions: (1) extending the algorithms to multitarget high-dynamic scenarios in practical space exploration missions, (2) designing large-aperture lenses integrated with event cameras and continuing ground-based high-dynamic stellar observation experiments to investigate the detection limits of event cameras, and (3) developing a high-dynamic motion dataset for space targets and applying machine-learning techniques to sparse event stream data, with the aim of achieving adaptive parameter selection for the algorithms.

Author Contributions

Conceptualization, H.L.; data curation, S.W. and G.R.; formal analysis, S.W., Y.T., and G.R.; funding acquisition, T.S. and F.X.; investigation, H.L., Y.T., and K.Y.; methodology, H.L., T.S., Y.T., and S.W.; project administration, T.S., F.X., and H.W.; resources, T.S., S.W., and H.W.; software, H.L., Z.Z., K.Y., and G.R.; supervision, F.X. and X.W.; validation, H.L., Z.Z., and G.R.; visualization, Z.Z., F.X., X.W., and H.W.; writing—original draft, H.L., T.S., Y.T., and K.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grant No. 62375022).

Institutional Review Board Statement

Ethical review and approval were waived because the study did not involve humans or animals.

Data Availability Statement

The synthetic data supporting the results of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Xing, F.; You, Z.; Sun, T.; Wei, M. Principle and Implementation of APS CMOS Star Tracker; National Defense Industry Press: Beijing, China, 2017.

- Liu, H.N.; Sun, T.; Wu, S.Y.; Yang, K.; Yang, B.S.; Ren, G.T.; Song, J.H.; Li, G.W. Development and validation of full-field simulation imaging technology for star sensors under hypersonic aero-optical effects. Opt. Express 2025, 33, 6526–6542.

- Liebe, C. Accuracy performance of star trackers - a tutorial. IEEE Trans. Aerosp. Electron. Syst. 2002, 38, 587–599.

- Yang, B.; Fan, Z.; Yu, H. Aero-optical effects simulation technique for starlight transmission in boundary layer under high-speed conditions. Chin. J. Aeronaut. 2020, 33, 1929–1941.

- Liu, H.; Sun, T.; Yang, K.; Xing, F.; Wang, X.; Wu, S.; Peng, Y.; Tian, Y. Aero-Thermal Radiation Effects Simulation Technique for Star Sensor Imaging Under Hypersonic Conditions. IEEE Sens. J. 2025, 25, 21803–21813.

- Li, T.; Zhang, C.; Kong, L.; Wang, J.; Pang, Y.; Li, H.; Wu, J.; Yang, Y.; Tian, L. Centroiding Error Compensation of Star Sensor Images for Hypersonic Navigation Based on Angular Distance. IEEE Trans. Instrum. Meas. 2024, 73, 8508211.

- Wang, J. Research on Key Technologies of High Dynamic Star Sensor. Ph.D. Thesis, University of Chinese Academy of Sciences (Changchun Institute of Optics, Fine Mechanics and Physics, Chinese Academy of Sciences), Changchun, China, 2019.

- Wan, X.; Wang, G.; Wei, X.; Li, J.; Zhang, G. ODCC: A dynamic star spots extraction method for star sensors. IEEE Trans. Instrum. Meas. 2021, 70, 5009114.

- Zeng, S.; Zhao, R.; Ma, Y.; Zhu, Z.; Zhu, Z. An Event-based Method for Extracting Star Points from High Dynamic Star Sensors. Acta Photonica Sin. 2022, 51, 0912003.

- Schmidt, U. ASTRO APS-the next generation Hi-Rel star tracker based on active pixel sensor technology. In Proceedings of the AIAA Guidance, Navigation, and Control Conference and Exhibit, San Francisco, CA, USA, 15–18 August 2005; p. 5925.

- Cassidy, L.W. Miniature star tracker. In Proceedings of the Space Guidance, Control, and Tracking; SPIE: Bellingham, WA, USA, 1993; Volume 1949, pp. 110–117.

- Foisneau, T.; Piriou, V.; Perrimon, N.; Jacob, P.; Blarre, L.; Vilaire, D. SED16 autonomous star tracker night sky testing. In Proceedings of the International Conference on Space Optics - ICSO 2000, Toulouse, France, 5–7 December 2000; SPIE: Bellingham, WA, USA, 2017; Volume 10569, pp. 281–290.

- Cohen, G.; Afshar, S.; Morreale, B.; Bessell, T.; Wabnitz, A.; Rutten, M.; van Schaik, A. Event-based sensing for space situational awareness. J. Astronaut. Sci. 2019, 66, 125–141.

- Chin, T.J.; Bagchi, S.; Eriksson, A.; Van Schaik, A. Star tracking using an event camera. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

- Bagchi, S.; Chin, T.J. Event-based star tracking via multiresolution progressive Hough transforms. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass, CO, USA, 1–5 March 2020; pp. 2143–2152.

- Roffe, S.; Akolkar, H.; George, A.D.; Linares-Barranco, B.; Benosman, R.B. Neutron-induced, single-event effects on neuromorphic event-based vision sensor: A first step and tools to space applications. IEEE Access 2021, 9, 85748–85763.

- Muglikar, M.; Gehrig, M.; Gehrig, D.; Scaramuzza, D. How to calibrate your event camera. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 1403–1409.

- Chen, S.; Li, X.; Yuan, L.; Liu, Z. eKalibr: Dynamic Intrinsic Calibration for Event Cameras From First Principles of Events. arXiv 2025, arXiv:2501.05688.

- Salah, M.; Ayyad, A.; Humais, M.; Gehrig, D.; Abusafieh, A.; Seneviratne, L.; Scaramuzza, D.; Zweiri, Y. E-calib: A fast, robust and accurate calibration toolbox for event cameras. IEEE Trans. Image Process. 2024, 33, 3977–3990.

- Peng, X.; Wang, Y.; Gao, L.; Kneip, L. Globally-optimal event camera motion estimation. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXVI 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 51–67.

- Liu, Z.; Liang, S.; Guan, B.; Tan, D.; Shang, Y.; Yu, Q. Collimator-assisted high-precision calibration method for event cameras. Opt. Lett. 2025, 50, 4254–4257.

- Liu, Z.; Guan, B.; Shang, Y.; Bian, Y.; Sun, P.; Yu, Q. Stereo Event-based, 6-DOF Pose Tracking for Uncooperative Spacecraft. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5607513.

- Cieslewski, T.; Choudhary, S.; Scaramuzza, D. Data-Efficient Decentralized Visual SLAM. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 2466–2473.

- Elms, E.; Jawaid, M.; Latif, Y.; Chin, T. SEENIC: Dataset for Spacecraft PosE Estimation with NeuromorphIC Vision. 2022. Available online: https://zenodo.org/records/7214231 (accessed on 18 November 2024).

- Park, T.H.; Märtens, M.; Jawaid, M.; Wang, Z.; Chen, B.; Chin, T.J.; Izzo, D.; D’Amico, S. Satellite Pose Estimation Competition 2021: Results and Analyses. Acta Astronaut. 2023, 204, 640–665.

- Liu, Z.; Shi, D.; Li, R.; Zhang, Y.; Yang, S. T-ESVO: Improved event-based stereo visual odometry via adaptive time-surface and truncated signed distance function. Adv. Intell. Syst. 2023, 5, 2300027.

- Wang, R.; Wang, L.; He, Y.; Li, L. A space point object tracking method based on asynchronous event stream. Aerosp. Control Appl. 2024, 50, 46–55.

- Liu, H.; Brandli, C.; Li, C.; Liu, S.C.; Delbruck, T. Design of a spatiotemporal correlation filter for event-based sensors. In Proceedings of the 2015 IEEE International Symposium on Circuits and Systems (ISCAS), Lisbon, Portugal, 24–27 May 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 722–725.

- Gouda, M.; Abreu, S.; Bienstman, P. Surrogate gradient learning in spiking networks trained on event-based cytometry dataset. Opt. Express 2024, 32, 16260–16272.

- Raviv, D.; Barsi, C.; Naik, N.; Feigin, M.; Raskar, R. Pose estimation using time-resolved inversion of diffuse light. Opt. Express 2014, 22, 20164–20176.

- Çelik, M.; Dadaşer-Çelik, F.; Dokuz, A.Ş. Anomaly detection in temperature data using DBSCAN algorithm. In Proceedings of the 2011 International Symposium on Innovations in Intelligent Systems and Applications, Istanbul, Turkey, 15–18 June 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 91–95.

- Deng, D. Research on anomaly detection method based on DBSCAN clustering algorithm. In Proceedings of the 2020 5th International Conference on Information Science, Computer Technology and Transportation (ISCTT), Shenyang, China, 13–15 November 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 439–442.

- Stewart, T.; Drouin, M.A.; Picard, M.; Djupkep Dizeu, F.B.; Orth, A.; Gagné, G. A virtual fence for drones: Efficiently detecting propeller blades with a dvxplorer event camera. In Proceedings of the International Conference on Neuromorphic Systems 2022, Knoxville, TN, USA, 27–29 July 2022; pp. 1–7.

- Ahmad, N.; Ghazilla, R.A.R.; Khairi, N.M.; Kasi, V. Reviews on various inertial measurement unit (IMU) sensor applications. Int. J. Signal Process. Syst. 2013, 1, 256–262.

- Yan, H.; Shan, Q.; Furukawa, Y. RIDI: Robust IMU double integration. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 621–636.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).