A Dynamic Kalman Filtering Method for Multi-Object Fruit Tracking and Counting in Complex Orchards

Abstract

1. Introduction


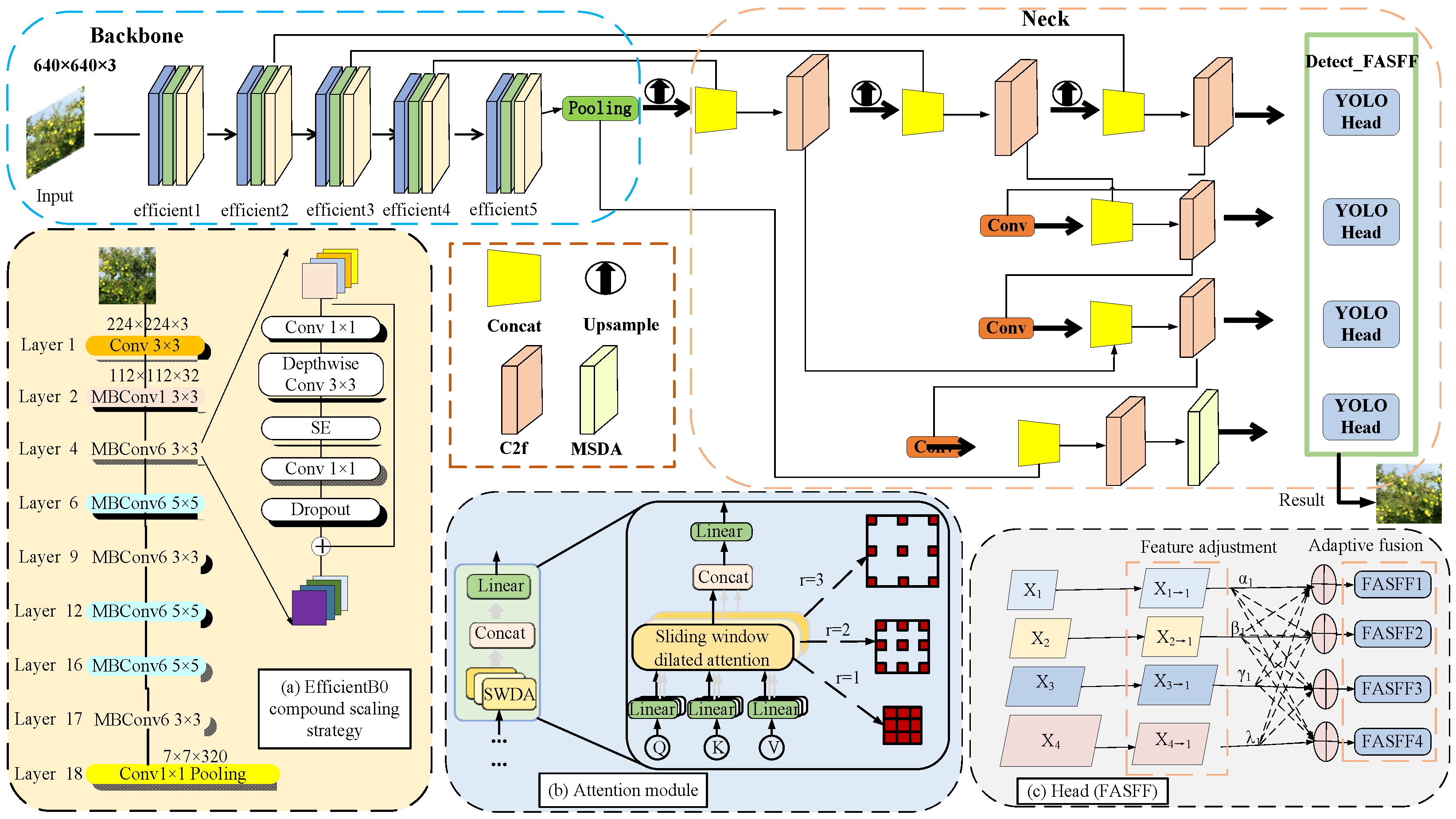

- The developed fruit tracking and detection model is demonstrated, including improvements to the network architecture through enhanced feature extraction modules, optimized detection heads, and integrated attention mechanisms.


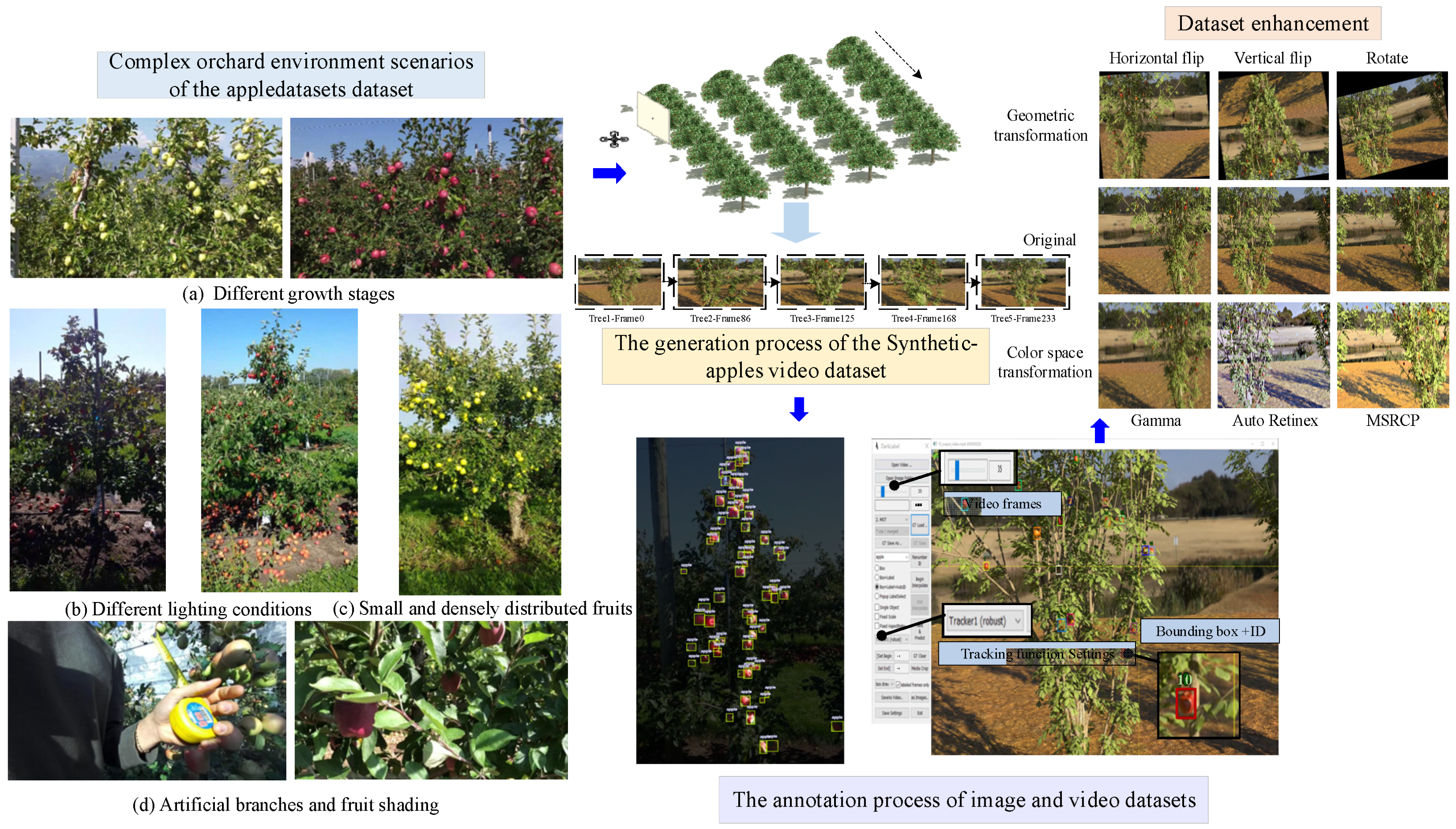

- The construction of the dataset used for model training and testing, built from appledatasets and Synthetic-apples, is explained.


- The results that evaluate the performance of the fruit detection module (using an improved YOLOv8 model) and the fruit tracking module (with enhanced Kalman filtering) are presented.

2. Dynamic Kalman Filtering Method for Multi-Object Fruit Tracking and Counting

2.1. Dataset Construction

2.2. Fruit Detector by the Improved YOLO Model

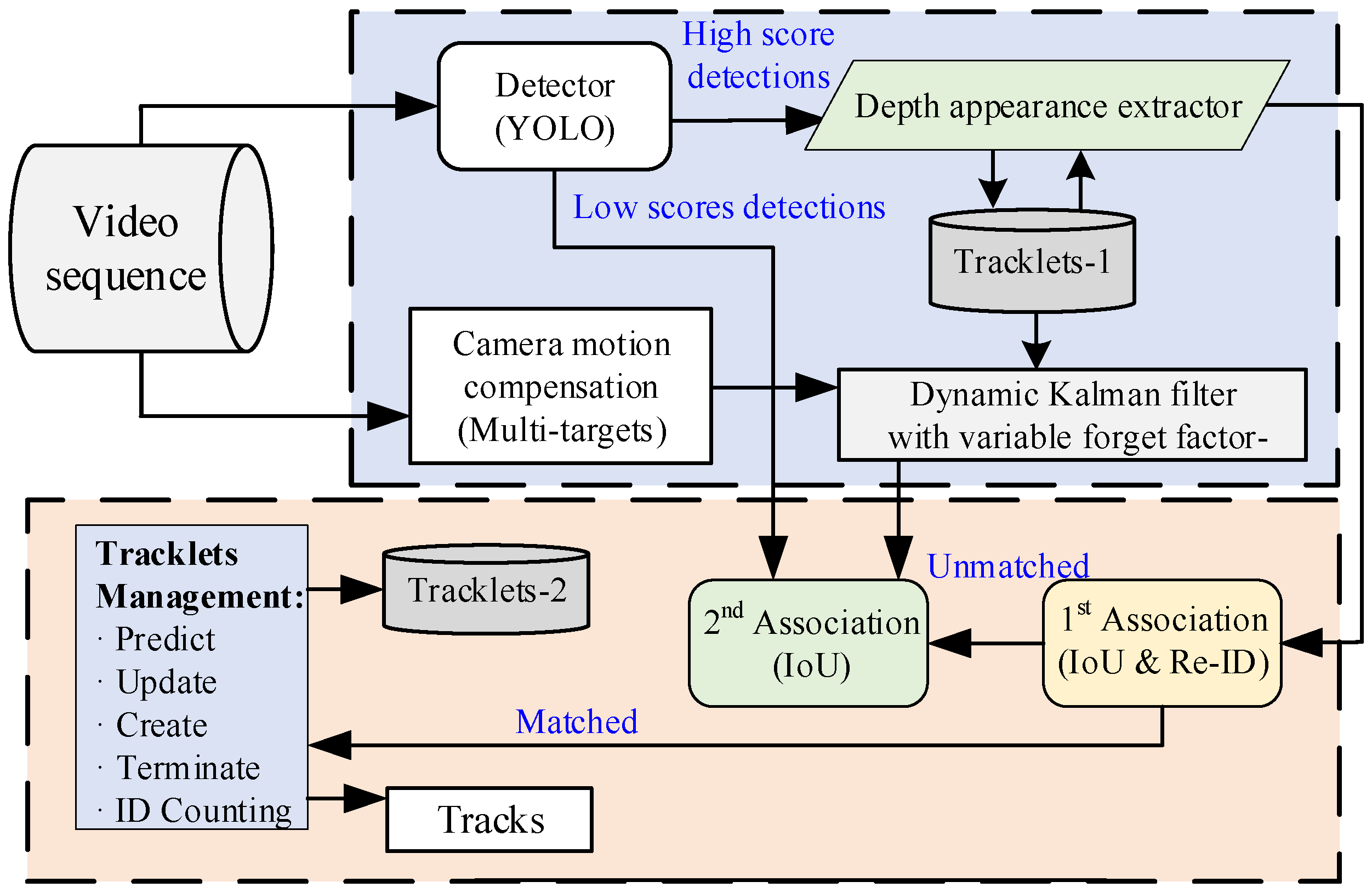

2.3. Fruit Tracking and Counting Modules

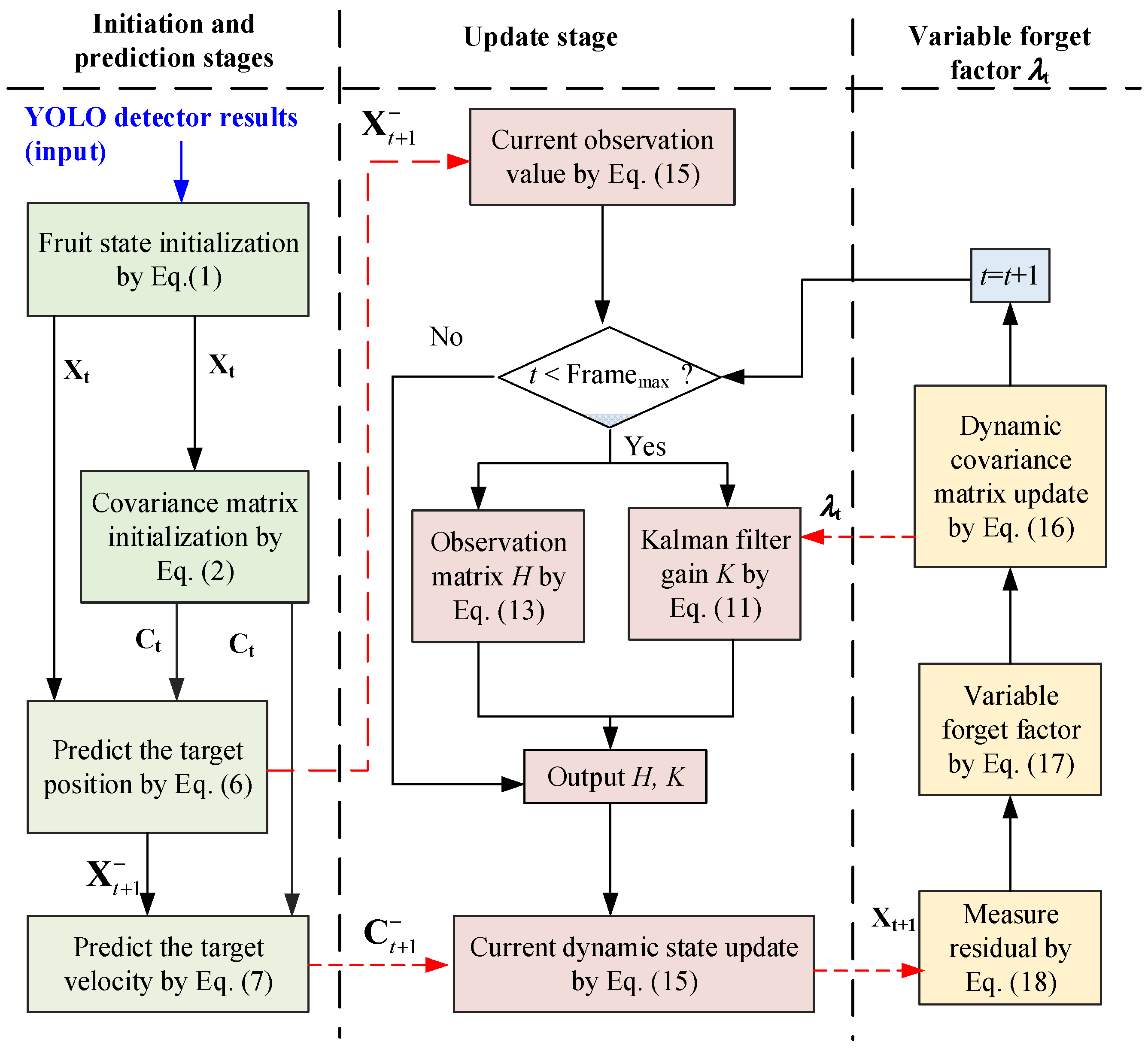

2.3.1. Dynamic Kalman Filtering Method

2.3.2. Camera Motion Compensation Method

2.3.3. Data Association and Matching Method

2.4. Experimental Environment and Evaluation Metrics

3. Results and Discussion

3.1. Comparisons of YOLO Detection Model

3.2. Comparison of Fruit Tracking Performance


- The comparative results in Table 2 demonstrated that the proposed improved YOLO detection model performed better than YOLOv8n at recognizing individual fruits in scenes with complex backgrounds, occlusions, and overlapping fruit: every tracker achieved higher MOTA, IDF1, and HOTA values with the improved detector. The improved detector typically provided more accurate target positions, which helped to improve the stability and precision of subsequent tracking.


- SORT was computationally efficient, with fast processing and low computational cost. However, its ID switch frequency was relatively high under target overlap and heavy occlusion, resulting in lower MOTA and IDF1 values. With the improved YOLO detector, SORT performed better overall, reaching a MOTA of 69.7%, IDF1 of 42.0%, and HOTA of 70.2%, compared with 65.0%, 39.0%, and 59.1% with YOLOv8n.
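To make the link between ID switches and these scores explicit, the sketch below uses the standard CLEAR MOT definition of MOTA and the usual IDF1 formula. The counts are hypothetical, chosen only to show that raising the number of ID switches (IDSW) while keeping misses (FN) and false positives (FP) fixed lowers MOTA. IDF1 has no IDSW term, but identity breaks still reduce it by turning identity true positives (IDTP) into IDFP and IDFN errors. HOTA is omitted because it averages over several localization thresholds and needs the full frame-by-frame matching.

```python
def mota(fn: int, fp: int, idsw: int, num_gt: int) -> float:
    """CLEAR MOT accuracy: 1 - (FN + FP + IDSW) / GT, with counts summed over all frames."""
    return 1.0 - (fn + fp + idsw) / num_gt


def idf1(idtp: int, idfp: int, idfn: int) -> float:
    """Identity F1 score: 2*IDTP / (2*IDTP + IDFP + IDFN)."""
    return 2 * idtp / (2 * idtp + idfp + idfn)


# Hypothetical totals for 1000 ground-truth fruit boxes: identical misses and
# false positives, but the second tracker switches IDs more often under occlusion.
few_switches = mota(fn=50, fp=30, idsw=5, num_gt=1000)
many_switches = mota(fn=50, fp=30, idsw=60, num_gt=1000)
print(f"MOTA: {few_switches:.3f} vs {many_switches:.3f}")
print(f"IDF1: {idf1(idtp=800, idfp=200, idfn=200):.3f}")
```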


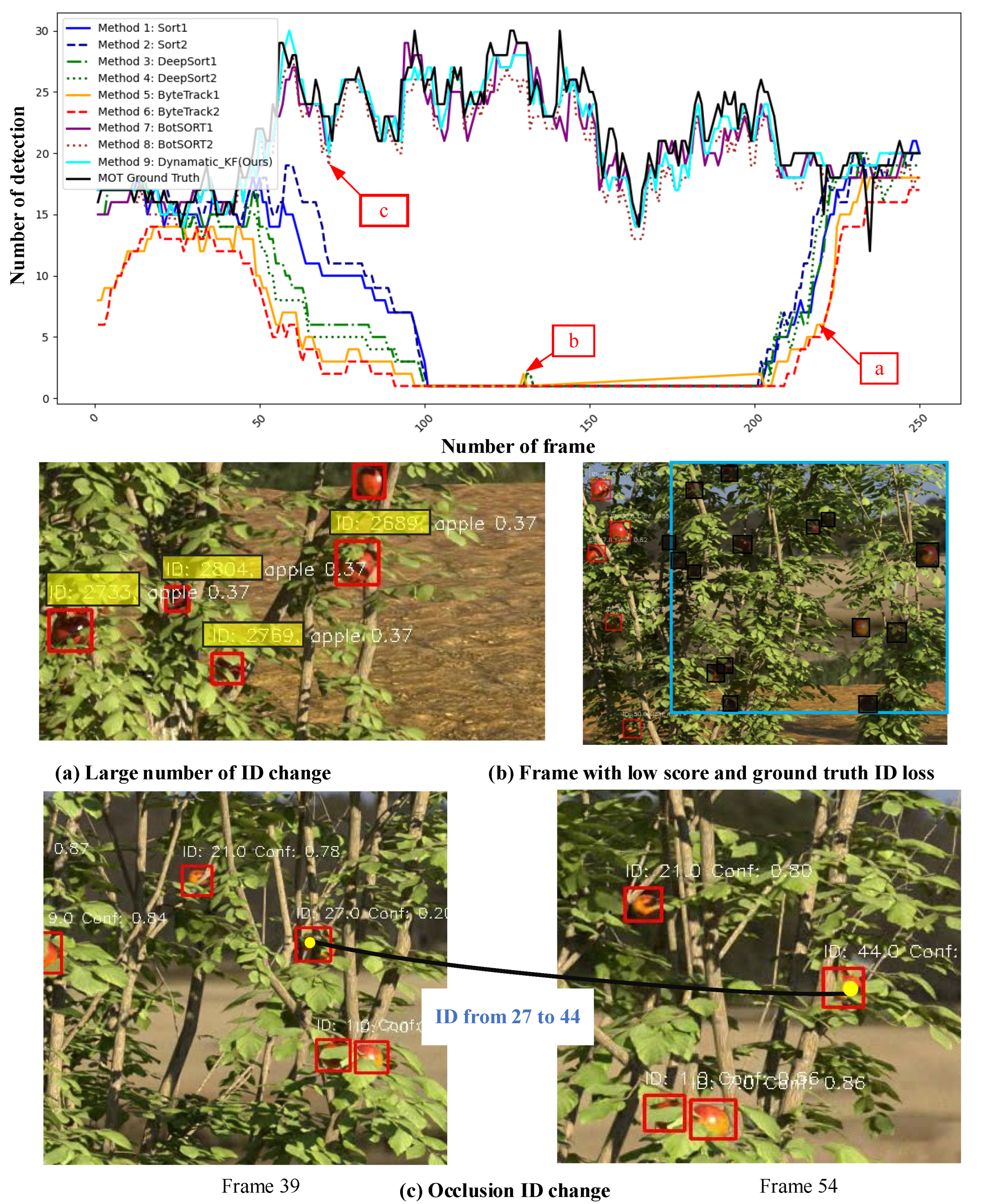

- ByteTrack was found to be more robust in complex environments because it associates both high-score and low-score detection boxes. Although the overall performance of ByteTrack was slightly inferior to that of DeepSORT, its handling of low-score targets improved the continuity of target tracking. With the improved YOLO detector, ByteTrack achieved a MOTA of 75.2%, IDF1 of 38.4%, and HOTA of 69.1%, overcoming the typical trade-off between handling occlusions and maintaining ID consistency. This demonstrates the effectiveness of the multi-score detection approach in addressing the challenges posed by complex orchard environments, as shown in Figure 7. Methods 1 to 6 performed relatively well in the early frames, but the fluctuations in the detected and tracked fruit counts then grew larger. In subsequent frames the detected counts were relatively low, leading to tracking loss, large ID jumps, and missed detections, as shown in Figure 7a,b.
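The second association pass for low-score boxes is what distinguishes ByteTrack. The following is a simplified, hypothetical illustration of that two-stage logic for axis-aligned boxes. Greedy IoU matching stands in for the Hungarian algorithm used by the original trackers, the Kalman prediction step is omitted (track boxes are taken as already predicted), and the thresholds `high_thr`, `low_thr`, and `min_iou` are placeholder values, not the settings used in this study.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(tracks: Dict[int, Box], dets: List[Box], min_iou: float) -> Dict[int, int]:
    """Match track IDs to detection indices, best IoU first (a stand-in for Hungarian matching)."""
    pairs = sorted(
        ((iou(t_box, d_box), tid, di) for tid, t_box in tracks.items() for di, d_box in enumerate(dets)),
        reverse=True,
    )
    matches: Dict[int, int] = {}
    used_dets = set()
    for score, tid, di in pairs:
        if score < min_iou:
            break  # every remaining pair overlaps too little
        if tid in matches or di in used_dets:
            continue
        matches[tid] = di
        used_dets.add(di)
    return matches


def byte_associate(tracks: Dict[int, Box], dets: List[Box], scores: List[float],
                   high_thr: float = 0.6, low_thr: float = 0.1,
                   min_iou: float = 0.3) -> Tuple[Dict[int, int], List[int]]:
    """Two-stage association; returns (track ID -> detection index, unmatched high-score detections)."""
    high = [i for i, s in enumerate(scores) if s >= high_thr]
    low = [i for i, s in enumerate(scores) if low_thr <= s < high_thr]
    # Stage 1: confident detections against all tracks.
    m1 = greedy_match(tracks, [dets[i] for i in high], min_iou)
    result = {tid: high[j] for tid, j in m1.items()}
    # Stage 2: low-score detections (often partly occluded fruit) against the leftover tracks.
    leftover = {tid: box for tid, box in tracks.items() if tid not in result}
    m2 = greedy_match(leftover, [dets[i] for i in low], min_iou)
    result.update({tid: low[j] for tid, j in m2.items()})
    # Unmatched high-score detections would start new tracks; unmatched low-score ones are dropped.
    new_track_dets = [i for i in high if i not in result.values()]
    return result, new_track_dets


tracks = {1: (0, 0, 10, 10), 2: (20, 20, 30, 30)}
dets = [(1, 1, 11, 11), (21, 21, 31, 31), (50, 50, 60, 60)]
scores = [0.9, 0.3, 0.8]  # detection 1 is a low-confidence, partly occluded fruit
matches, new = byte_associate(tracks, dets, scores)
print(matches, new)  # {1: 0, 2: 1} [2]
```

In this toy frame, track 2 would be lost if only high-score detections were considered; the second pass keeps its identity alive through the low-confidence box.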


- BoTSORT extends ByteTrack with camera motion compensation, a crucial factor for tracking performance in dynamic environments. BoTSORT achieved a MOTA of 84.7%, IDF1 of 55.5%, and HOTA of 76.4% with YOLOv8n, and a MOTA of 89.2%, IDF1 of 61.9%, and HOTA of 80.2% with the improved YOLO detector, making it a compelling choice for complex fruit tracking tasks.
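Camera motion compensation can be illustrated with a simplified sketch. BoT-SORT estimates a global affine transform between consecutive frames and applies it to the Kalman-predicted track states before association; the hypothetical helper `compensate_box` below instead warps a predicted box directly, which is enough to show why compensation restores overlap when the camera moves. Estimating the 2x3 matrix (for example, from matched keypoints with OpenCV's `cv2.estimateAffinePartial2D`) is assumed to happen elsewhere, and the 15-pixel pan in the usage example is made up.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Affine = List[List[float]]               # 2x3 matrix [[a11, a12, tx], [a21, a22, ty]]


def warp_point(m: Affine, x: float, y: float) -> Tuple[float, float]:
    """Apply a 2x3 affine transform to one image point."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


def compensate_box(box: Box, m: Affine) -> Box:
    """Warp all four corners of a predicted box and return their axis-aligned bounds."""
    corners = [(box[0], box[1]), (box[2], box[1]), (box[0], box[3]), (box[2], box[3])]
    pts = [warp_point(m, x, y) for x, y in corners]
    xs = [p[0] for p in pts]
    ys = [p[1] for p in pts]
    return (min(xs), min(ys), max(xs), max(ys))


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


# The camera pans right by 15 px, so a static fruit shifts 15 px left in the image.
pan = [[1.0, 0.0, -15.0], [0.0, 1.0, 0.0]]
predicted = (100.0, 50.0, 120.0, 70.0)  # track box predicted from the previous frame
detection = (85.0, 50.0, 105.0, 70.0)   # where the fruit actually appears now
print(iou(predicted, detection), iou(compensate_box(predicted, pan), detection))
```

Without compensation the overlap is only 1/7, below a typical matching threshold, so the tracker would likely spawn a new ID; after warping, the prediction and detection coincide.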


- The dynamic Kalman filter tracker proposed in this study introduced a Kalman filter with a variable forgetting factor and combined IoU and Re-ID features to enhance target matching accuracy, effectively improving tracking accuracy and stability. The experimental results showed that the proposed method outperformed all other methods on MOTA, IDF1, and HOTA, reaching 95.0%, 65.5%, and 82.4%, respectively.
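The exact update rule for the variable forgetting factor is not given here, so the following is only a hypothetical sketch of one common way to realize the idea: a fading-memory Kalman filter for a single box coordinate under a constant-velocity model, in which the propagated covariance F P F^T is multiplied by a factor lam >= 1 chosen from the current innovation, in the spirit of strong-tracking filters. When a measurement agrees with the prediction, lam stays at 1 and the filter behaves like a standard Kalman filter; when the fruit jumps (for example, from camera shake), lam grows so that old estimates are forgotten faster. The class name `FadingKF1D`, the noise values `q` and `r`, and the one-step innovation estimate are assumptions, and the IoU and Re-ID fusion used for matching is not shown.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class FadingKF1D:
    """Hypothetical constant-velocity Kalman filter for one box coordinate with an
    innovation-driven forgetting factor lam >= 1 (a sketch, not the paper's exact rule)."""
    x: List[float] = field(default_factory=lambda: [0.0, 0.0])  # [position, velocity]
    P: List[List[float]] = field(default_factory=lambda: [[10.0, 0.0], [0.0, 10.0]])
    q: float = 0.01   # process-noise variance per state (assumed)
    r: float = 1.0    # measurement-noise variance (assumed)
    dt: float = 1.0   # one video frame
    adaptive: bool = True
    lam: float = 1.0  # forgetting factor used in the latest step

    def step(self, z: float) -> float:
        dt = self.dt
        # Predict the state with F = [[1, dt], [0, 1]].
        x_pred = [self.x[0] + dt * self.x[1], self.x[1]]
        (a, b), (c, d) = self.P
        # F P F^T written out for the 2x2 case.
        fp00 = a + dt * (b + c) + dt * dt * d
        fp01 = b + dt * d
        fp10 = c + dt * d
        fp11 = d
        # Innovation for the position measurement (H = [1, 0]).
        nu = z - x_pred[0]
        # Choose lam so that the predicted innovation variance lam*fp00 + q + r
        # equals nu**2 when the residual is larger than expected; otherwise lam = 1.
        if self.adaptive and fp00 > 0:
            self.lam = max(1.0, (nu * nu - self.r - self.q) / fp00)
        else:
            self.lam = 1.0
        p00 = self.lam * fp00 + self.q
        p01 = self.lam * fp01
        p10 = self.lam * fp10
        p11 = self.lam * fp11 + self.q
        # Standard Kalman update: K = P H^T / S, then P = (I - K H) P.
        s = p00 + self.r
        k0, k1 = p00 / s, p10 / s
        self.x = [x_pred[0] + k0 * nu, x_pred[1] + k1 * nu]
        self.P = [[(1 - k0) * p00, (1 - k0) * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]
        return self.x[0]


def run(adaptive: bool) -> Tuple[float, float]:
    kf = FadingKF1D(adaptive=adaptive)
    for t in range(10):     # fruit centre moving 1 px per frame
        kf.step(float(t))
    est = kf.step(30.0)     # sudden 20 px jump, e.g. from camera shake
    return est, kf.lam


print(run(adaptive=True), run(adaptive=False))
```

With the adaptive factor, the estimate lands almost on the new measurement after the jump, while the fixed filter lags well behind it. The trade-off is that a single spurious detection is followed just as closely, which is one reason practical variants smooth the innovation over several frames or gate outliers before adapting.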


- As depicted in Figure 7, the overall curves of Method 7, Method 8, and the proposed method were more consistent with the ground truth, showing robust detection across most frames and maintaining high tracking accuracy. However, the BoTSORT-based methods still assigned changing identities to the same fruit when it was occluded, as illustrated in Figure 7c. The Fréchet distance was used to quantify the similarity between each method's curve and the ground truth curve. Unlike a point-wise average error, this metric accounts for both the distances between points and the ordering of the points along the two trajectories. The Fréchet distance of the proposed dynamic Kalman filter tracker was 5.0, substantially lower than the values of 46.5 and 24.3 for Methods 7 and 8, respectively. This demonstrates the suitability of the proposed method for the critical task of tracking and counting fruit in complex orchard environments.
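The text does not state whether the continuous or the discrete Fréchet distance was used, or how the frame and count axes were scaled. The sketch below assumes the discrete variant, computed with the standard dynamic program over monotone couplings, on per-frame (frame index, fruit count) points; the short count series are made-up examples, not data from Figure 7.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (frame index, fruit count)


def discrete_frechet(p: Sequence[Point], q: Sequence[Point]) -> float:
    """Discrete Fréchet distance: the smallest achievable maximum point distance
    over all monotone couplings of the two curves (dynamic programming)."""
    n, m = len(p), len(q)
    ca: List[List[float]] = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            d = math.dist(p[i], q[j])
            if i == 0 and j == 0:
                ca[i][j] = d
            elif i == 0:
                ca[i][j] = max(ca[0][j - 1], d)
            elif j == 0:
                ca[i][j] = max(ca[i - 1][0], d)
            else:
                ca[i][j] = max(min(ca[i - 1][j], ca[i - 1][j - 1], ca[i][j - 1]), d)
    return ca[n - 1][m - 1]


def as_curve(counts: Sequence[int]) -> List[Point]:
    """Turn a per-frame count series into (frame, count) points."""
    return [(float(t), float(c)) for t, c in enumerate(counts)]


ground_truth = as_curve([3, 5, 6, 6, 8])
tracked = as_curve([3, 4, 6, 7, 8])
print(discrete_frechet(ground_truth, tracked))  # 1.0
```

Because the distance is a maximum over the best pairing rather than an average, a single large gap between the curves, such as a burst of ID jumps that inflates the count, dominates the value.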

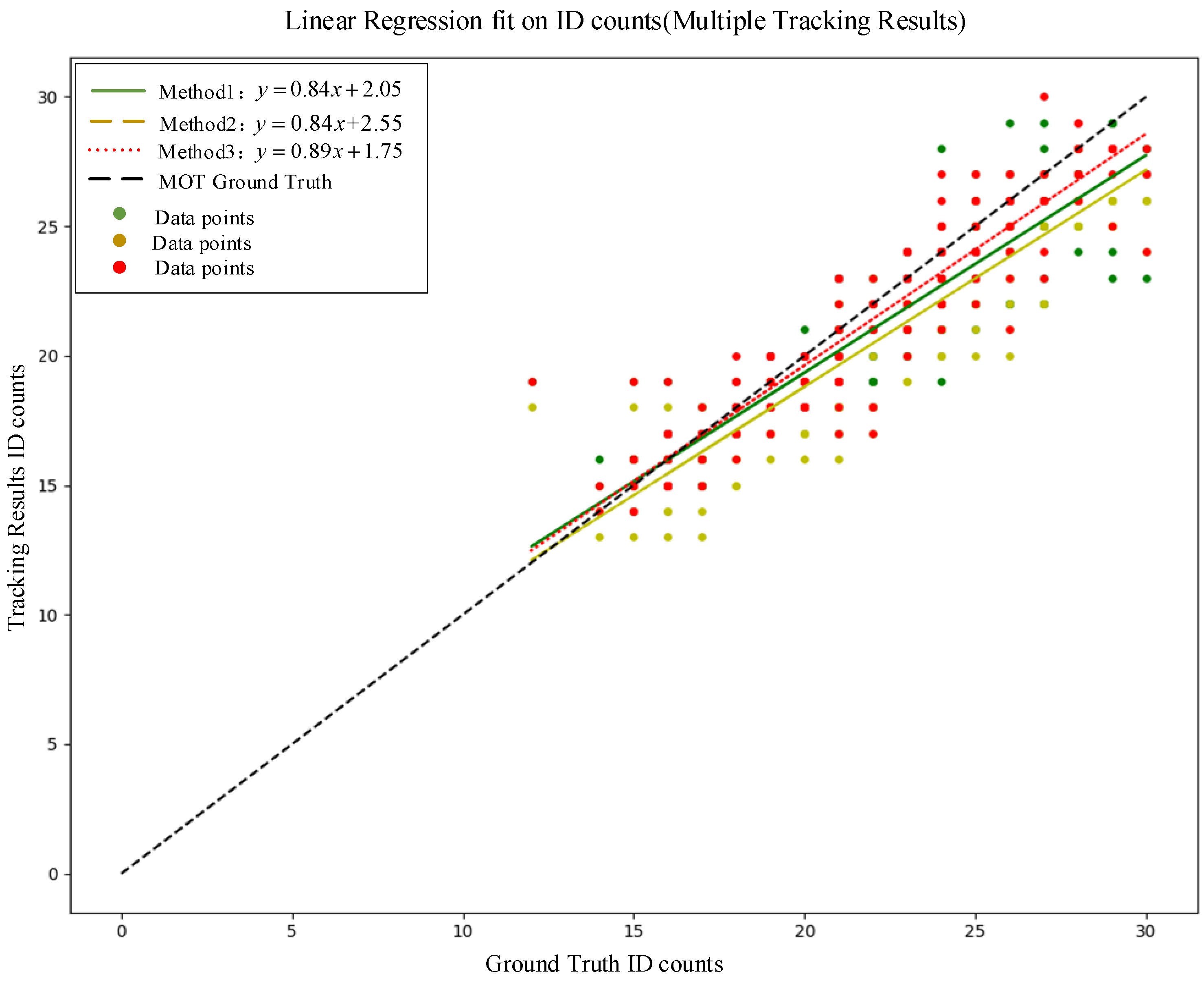

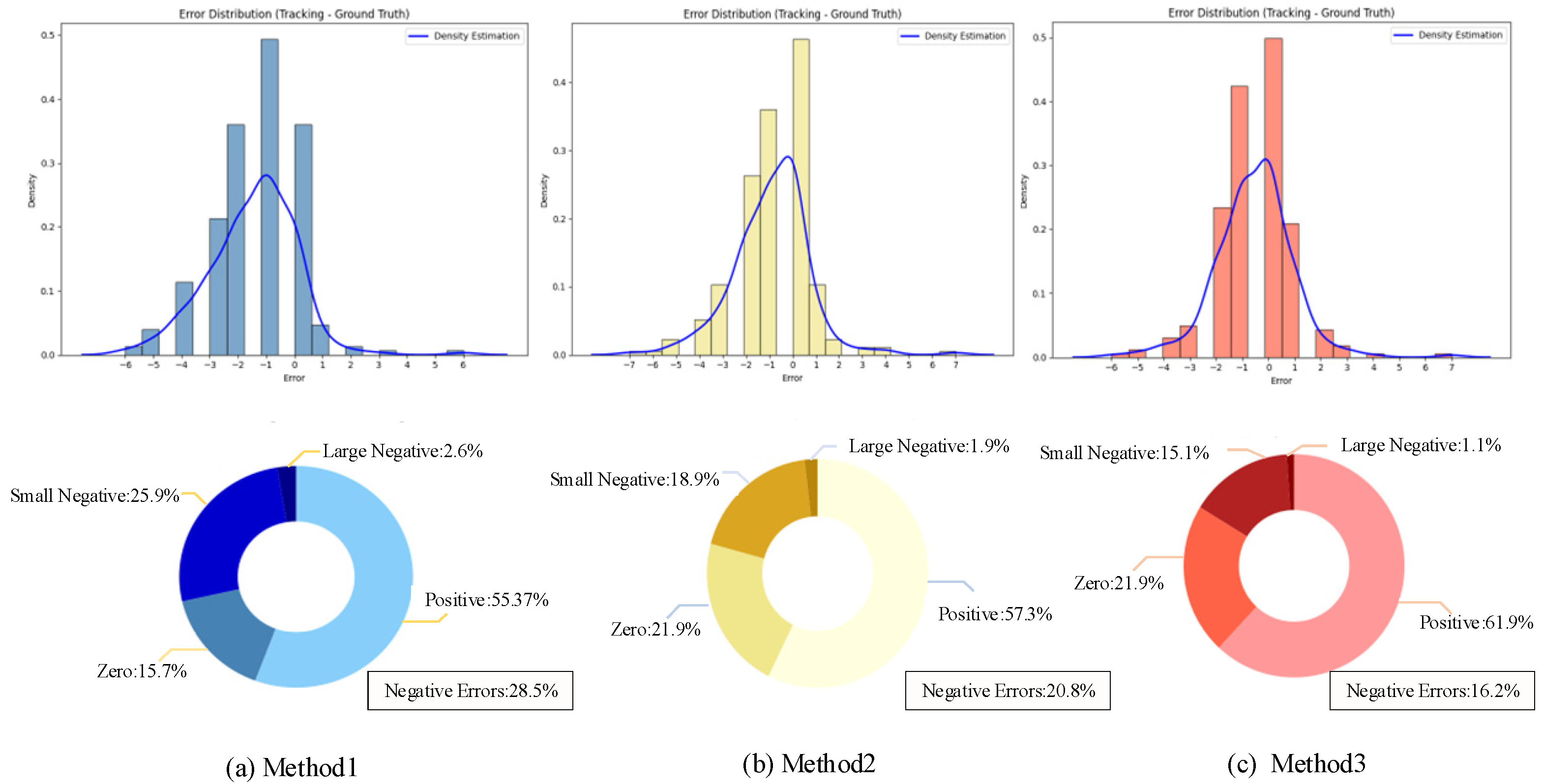

3.3. Comparison of Fruit Counting Performance

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Models | EfficientNetV1 | MSDA | FASFFHead | P/% | mAP@0.5/% | mAP@0.5:0.95/% | GFLOPs |
|---|---|---|---|---|---|---|---|
| YOLOv5n | - | - | - | 81.0 | 84.1 | 38.0 | 4.2 |
| YOLOv7 | - | - | - | 53.1 | 79.0 | 33.1 | 103.5 |
| YOLOv8n * | - | - | - | 84.9 | 85.8 | 42.3 | 8.1 |
| YOLOv10n | - | - | - | 84.8 | 83.1 | 40.4 | 8.2 |
| YOLOv11 | - | - | - | 85.5 | 84.2 | 41.0 | 6.3 |
| YOLOv12n | - | - | - | 84.8 | 83.5 | 38.7 | 6.0 |
| Model 1 | √ | - | - | 84.0 | 87.1 | 44.5 | 5.6 |
| Model 2 | - | √ | - | 83.6 | 86.3 | 42.8 | 8.4 |
| Model 3 | - | - | √ | 83.2 | 88.7 | 44.9 | 15.4 |
| Model 4 | √ | √ | - | 85.6 | 87.2 | 44.3 | 5.7 |
| Model 5 | √ | - | √ | 85.6 | 89.2 | 46.1 | 12.7 |
| Model 6 | - | √ | √ | 85.7 | 88.3 | 45.2 | 15.4 |
| Model 7 (proposed method) | √ | √ | √ | 86.7 | 89.5 | 47.3 | 12.9 |

| Tracker Method | YOLOv8n | Proposed Improved YOLO | MOTA/% | IDF1/% | HOTA/% |
|---|---|---|---|---|---|
| SORT (method 1) | √ | | 65.0 | 39.0 | 59.1 |
| DeepSORT (method 2) | √ | | 76.0 | 49.0 | 73.7 |
| ByteTrack (method 3) | √ | | 75.0 | 37.0 | 68.6 |
| BoTSORT (method 4) | √ | | 84.7 | 55.5 | 76.4 |
| SORT (method 5) | | √ | 69.7 | 42.0 | 70.2 |
| DeepSORT (method 6) | | √ | 86.7 | 49.7 | 74.2 |
| ByteTrack (method 7) | | √ | 75.2 | 38.4 | 69.2 |
| BoTSORT (method 8) | | √ | 89.2 | 61.9 | 80.2 |
| Dynamic Kalman filter tracker (proposed method) | | √ | 95.0 | 65.5 | 82.4 |

| Method | Detector + Tracker | R² | RMSE | Regression Equation |
|---|---|---|---|---|
| 1 | YOLOv8 + BoTSORT | 0.72 | 2.13 | |
| 2 | Improved YOLO + BoTSORT | 0.78 | 1.87 | |
| 3 | Improved YOLO + dynamic Kalman filter tracker | 0.85 | 1.57 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhai, Y.; Zhang, L.; Hu, X.; Yang, F.; Huang, Y. A Dynamic Kalman Filtering Method for Multi-Object Fruit Tracking and Counting in Complex Orchards. Sensors 2025, 25, 4138. https://doi.org/10.3390/s25134138

Zhai Y, Zhang L, Hu X, Yang F, Huang Y. A Dynamic Kalman Filtering Method for Multi-Object Fruit Tracking and Counting in Complex Orchards. Sensors. 2025; 25(13):4138. https://doi.org/10.3390/s25134138

Chicago/Turabian Style: Zhai, Yaning, Ling Zhang, Xin Hu, Fanghu Yang, and Yang Huang. 2025. "A Dynamic Kalman Filtering Method for Multi-Object Fruit Tracking and Counting in Complex Orchards" Sensors 25, no. 13: 4138. https://doi.org/10.3390/s25134138

APA Style: Zhai, Y., Zhang, L., Hu, X., Yang, F., & Huang, Y. (2025). A Dynamic Kalman Filtering Method for Multi-Object Fruit Tracking and Counting in Complex Orchards. Sensors, 25(13), 4138. https://doi.org/10.3390/s25134138