Abstract

Specific emitter identification (SEI) is a core technology for wireless device security that plays a crucial role in protecting wireless communication systems from various security threats. However, current deep learning-based SEI methods heavily rely on large amounts of labeled data for supervised training, facing challenges in non-cooperative communication scenarios. To address these issues, this paper proposes a novel contrastive asymmetric masked learning-based SEI (CAML-SEI) method, effectively solving the problem of SEI under scarce labeled samples. The proposed method constructs an asymmetric auto-encoder architecture, comprising an encoder network based on channel squeeze-and-excitation residual blocks to capture radio frequency fingerprint (RFF) features embedded in signals, while employing a lightweight single-layer convolutional decoder for masked signal reconstruction. This design promotes the learning of fine-grained local feature representations. To further enhance feature discriminability, a learnable non-linear mapping is introduced to compress high-dimensional encoded features into a compact low-dimensional space, accompanied by a contrastive loss function that simultaneously achieves feature aggregation of positive samples and feature separation of negative samples. Finally, the network is jointly optimized by combining signal reconstruction and feature contrast tasks. Experiments conducted on real-world ADS-B and Wi-Fi datasets demonstrate that the proposed method effectively learns generalized RFF features, and the results show superior performance compared with other SEI methods.

1. Introduction

In the current era of highly developed digitalization, wireless communication technologies have been widely applied in various fields. From the interconnection of smart home devices in the civilian domain to the transmission of critical instructions in the military domain, the security of wireless communication is of utmost importance [1,2]. For example, during the flight of an aircraft, it needs to maintain close contact with the ground station through wireless communication and transmit key information such as position, speed, altitude, and flight attitude in real time. The accurate and secure transmission of this information is crucial for ensuring flight safety. In the traditional encryption authentication method, the security of the encryption algorithm depends on the confidentiality of the key. Once the key is leaked, the entire communication system will face huge risks. Moreover, the encryption and decryption processes are computationally intensive, which will severely affect the performance of some resource-constrained devices [3,4]. Specific emitter identification (SEI) is a non-cryptographic authentication method based on device physical characteristics [5]. Due to hardware characteristic differences, such as I/Q imbalance [6], power amplifier non-linearity [7], and frequency offset [8], each transmitter leaves unique and hard-to-forge radio frequency fingerprints (RFFs) in the signal. SEI technology has significant advantages compared with cryptography-based methods and has great application value in fields such as cognitive radio, spectrum monitoring, and electronic countermeasures [9,10,11].

Traditional methods based on feature engineering rely heavily on expert knowledge and human experience. They manually extract features such as signal amplitude, phase, and frequency [12,13,14] and design classifiers to identify different transmitters. With the continuous increase in the number of wireless devices, the selection of effective fingerprint features becomes increasingly difficult, making it hard to adapt to the rapidly changing electromagnetic environment.

In recent years, deep learning (DL) [15] has achieved remarkable success in various fields, such as computer vision [16] and natural language processing [17]. With its outstanding automatic feature extraction capabilities, deep learning can extract the subtle feature patterns hidden in radio frequency signals from large amounts of data. Therefore, the deep learning method was applied to SEI. Some methods use the original In-phase and Quadrature (IQ) data as the input of convolutional neural networks (CNNs) [18,19,20] and recurrent neural network (RNNs) [21]. Merchant et al. [22] utilized a CNN to extract features from IQ signals for identifying ZigBee devices. The experimental results demonstrated the method’s robustness across various signal-to-noise ratios (SNRs). Sankhe et al. [23] proposed the ORACLE model, composed of two convolutional layers and two fully connected layers, to extract and fuse features from I and Q signals. Yu et al. [24] introduced a robust method based on a multisampling convolutional neural network (MSCNN), employing multiple downsampling transformations for multiscale feature extraction. Al-Shawabka et al. [25] fed IQ signals into the RFF-LSTM model, which consists of three stacked Long Short-Term Memory (LSTM) layers and one fully connected layer. In [26], the authors proposed the ConvRNN model, which combines convolutional layers with RNN layers to simultaneously extract spatial and temporal features from IQ signals. A lightweight Transformer-based GLFormer method was presented in [27]. This method automatically extracts long-term dependencies from IQ signals. Wang et al. [28] proposed the complex-valued neural network (CVNN), comprising nine complex-valued convolutional layers and two fully connected layers, along with the model compression method. This approach aims to reduce model complexity while maintaining accuracy.

Other methods adopt bispectrum transform [29], time–frequency transform [30], Hilbert–Huang transform [31], constellation diagrams [32], etc., as the input to extract the features in the transform domain. Shen et al. [33] used the time–frequency spectrograms obtained through Short-Time Fourier Transform (STFT) as input to the Transformer model. To overcome the impact of wireless channels, Shen et al. [34] proposed the channel independent spectrogram and data augmentation method to enhance the system’s robustness against channel variations. In [35], the authors processed the transmitter signals through empirical mode decomposition (EMD) and Hilbert transform (HT) into graph tensors and applied graph neural networks (GNNs) for graph classification. Peng et al. [32] introduced an SEI method based on a differential constellation trace figure (DCTF). The CNN was designed to recognize the DCTF features of different devices. Subsequently, Peng et al. [8] proposed the heat constellation trace figure (HCTF) method. Lin et al. [36] proposed converting the IQ signal into a contour stellar image (CSI) with statistical significance and then using a CNN model for classification.

However, DL-based SEI methods are data-driven and rely heavily on a large amount of labeled samples. Labeling massive samples requires expensive manual overhead, as it requires professional knowledge to accurately label each transmitter signal to determine its corresponding transmitter category. In many practical non-cooperative electromagnetic environments, only unlabeled samples can be obtained, and it is difficult to acquire a sufficient number of labeled data to train deep neural networks (DNNs) adequately, which often leads to overfitting of the model.

To fully leverage all unlabeled data, self-supervised learning (SSL) has been proposed. Unlike traditional supervised learning, SSL does not rely on a large number of labeled samples. Instead, it designs pre-training tasks that enable the model to generate high-quality feature representations, thereby enhancing the performance of downstream tasks. Contrastive and generative methods are two key paradigms in SSL pre-training. In the framework of contrastive methods, feature representations are learned by constructing positive and negative samples. Specifically, for a given input sample, two augmented views are generated. These augmented views are regarded as positive sample pairs relative to the original input sample. While the augmented views may differ in form due to data augmentation, they share the same semantic content. In contrast, augmented views derived from different input samples are considered negative samples. The model aims to minimize the distance between positive sample pairs and maximize the distance between positive and negative samples, thereby learning discriminative feature representations. Wu et al. [37] devised positive sample pairs via random slicing for contrastive learning, employing the pre-trained model as an initialization in the subsequent fine-tuning stage. Meanwhile, Liu et al. [38] utilized BYOL [39] as the backbone for self-supervised contrastive learning, pre-training an RFF extractor and introducing adversarial augmentation to enhance the self-supervised learning process. Thereafter, they utilized a limited set of labeled samples for fine-tuning the extractor and classifier. Inspired by SimCLR [40], Liu et al. [41] converted signals into constellation trace figures and adopted image augmentation techniques from the computer vision domain to generate extensive positive and negative sample pairs for contrastive learning. In contrast to contrastive methods, which emphasize distinguishing between different sample pairs, generative methods focus more on learning how to reconstruct or predict partially observed data. By enabling the model to learn the reconstruction of input data from latent representations or the generation of new samples consistent with the real data distribution, these methods capture the intrinsic structures and features of the data. Xie et al. [42] utilized the Hilbert time–frequency spectrum as the input to an auto-encoder for pre-training, followed by fine-tuning the pre-trained auto-encoder using a small amount of labeled data. Huang et al. [43] proposed a pre-training method based on a symmetric masked auto-encoder (MAE), which predicted the masked segments of signals. To achieve better feature extraction performance and reduce the computational cost associated with symmetric decoders, Yao et al. [44] introduced an asymmetric masked auto-encoder (AMAE) for pre-training the RFF extractor. Li et al. [45] proposed a CML framework for few-shot SEI, which decouples masked reconstruction and contrastive learning into a dual-branch architecture comprising an encoder–decoder branch for signal reconstruction and a momentum encoder branch for instance discrimination.

Inspired by the aforementioned analysis, contrastive learning demonstrates remarkable advantages in feature extraction by learning discriminative feature representations through the construction of positive–negative sample pairs. Meanwhile, generative methods effectively capture latent distribution characteristics via data reconstruction. While contrastive methods excel in inter-sample discriminability, they overlook critical fine-grained local patterns, whereas generative methods capture signal structures but lack explicit mechanisms for inter-class separation. Considering this, we bridge the gap by combining masked reconstruction with contrastive learning, which reinforces device identity discrimination through feature aggregation and separation. This approach achieves representation learning from local details to global discriminability. Distinct from the dual-branch architecture in [45], the proposed method employs a single-branch asymmetric auto-encoder incorporating a lightweight single-layer convolutional decoder. This design significantly reduces parameter and computational costs while retaining effective signal reconstruction capabilities. Furthermore, deviating from conventional random masking techniques, we introduce a novel complementary masking data augmentation strategy. This approach generates complementary masked views of identical signals, compelling the model to learn robust feature representations by correlating information from non-overlapping signal segments. To mitigate limitations in generic feature extraction, we integrate channel squeeze-and-excitation residual blocks within the encoder. These blocks dynamically recalibrate feature responses to enhance focus on discriminative radio frequency fingerprint regions. Additionally, a lightweight feature projection head is integrated to compress features into a compact, discriminative latent space. We further establish dual optimization objectives. Unlike traditional methods reconstructing the entire signal, the reconstruction task specifically targets the complementary masked regions. Simultaneously, the contrastive task explicitly optimizes inter-device discrimination. This dual focus on local detail reconstruction and global separability effectively overcomes the limitations of single-paradigm approaches.

To this end, we propose a novel contrastive asymmetric masked learning-based SEI (CAML-SEI) method, aiming to integrate the synergistic advantages of contrastive and generative learning to build robust and discriminative RFF feature representations. Specifically, we first employ a complementary masking data augmentation technique to construct positive and negative sample pairs for RFF feature representation contrastive learning. Subsequently, we design channel squeeze-and-excitation residual blocks (CSERBlocks) to build an encoder architecture that maps sample pairs into the feature space. Additionally, a lightweight feature projection head is introduced to eliminate redundant information while enhancing feature discriminability. Finally, we jointly optimize the network by combining masked signal reconstruction loss and contrastive loss. The main contributions of this paper are summarized as follows:

- An effective CAML-SEI method is proposed for SSL-SEI. This method enhances the model’s ability to extract RFF features and effectively overcomes the limitation of limited labeled samples.

- A novel asymmetric auto-encoder architecture is designed to implement CAML-SEI, comprising a CSERBlock-based encoder, a lightweight decoder, and a lightweight feature projection head.

- The pre-trained encoder is fine-tuned using only a limited number of labeled samples, and a simple classifier containing only one fully connected layer is trained to classify the RFF features for SEI.

- The proposed CAML-SEI method is evaluated on real-world ADS-B and Wi-Fi datasets. Simulation results show that the recognition performance of the proposed method is superior to other comparison methods.

2. Signal Model and Problem Formulation

2.1. Signal Model

Under the assumption that only one transmitter is active at any given time and its signal can be captured individually without confusion with other transmitters’ signals, the received signal from this transmitter can be described as follows:

where represents the channel impulse response between the receiver and the transmitter, denotes the original signal transmitted by the transmitter, and represents additive white Gaussian noise (AWGN). The symbol * here denotes the convolution operation. When the continuous-time signal is sampled at a sampling frequency , a discrete complex sequence, known as IQ data, is obtained. The k-th signal is expressed as follows:

where the size of is , with L being the length of each sampled signal sequence. is the index of the k-th signal, and N is the total number of signals. These IQ signals are used as inputs to the SEI model to identify the transmitters.

2.2. SEI Problem

Given the transmitter signal dataset , where each represents a signal and is the one-hot encoded label vector. When the sample belongs to the c-th transmitter, the label vector satisfies , where C denotes the total number of transmitters. Here, and represent the signal space and class label space, respectively. The goal of SEI is to construct a mapping function by learning the relationship between signals and transmitters. The optimization objective can be formulated as minimizing the following loss:

where denotes a supervised loss function that quantifies the discrepancy between the model prediction and the ground-truth label . By minimizing this loss function, the model parameters can be optimized to ensure the predictions align closely with the true labels. However, when the number of labeled samples N is small (e.g., or 10), the model’s generalization capability deteriorates significantly, often leading to overfitting.

2.3. SSL-SEI Problem

In the SSL-SEI problem, the training dataset consists of an unlabeled dataset and a small labeled dataset , i.e., . Here, denotes the number of unlabeled samples, denotes the number of labeled samples, and . During the pre-training stage, the feature encoder is trained through the self-supervised learning task to learn general feature representations of the signals. The optimization objective at this stage can be formulated as minimizing the following loss:

where denotes the loss function of the self-supervised learning task and is the target of the task. Upon completion of pre-training, the optimized parameters of the feature encoder are saved. Subsequently, during the fine-tuning stage, the pre-trained feature encoder is utilized: its learned parameters are loaded and serve as the initialization for the encoder within the SEI model. The entire SEI model (now comprising the pre-trained encoder and a newly added classifier ) is then further optimized using the labeled dataset to adapt to the SEI task. The loss function at this stage can be expressed as follows:

where represents the loss function of the supervised learning task, typically the cross-entropy loss, and denotes the combined SEI mapping function. Through this process of initializing the encoder with pre-trained weights, the generic feature representations learned by the encoder during pre-training are effectively transferred to the fine-tuning stage. During fine-tuning, all model parameters (both and ) are further optimized to minimize . Ultimately, the model combining pre-training and fine-tuning achieves superior performance even with limited labeled data.

3. Proposed Method

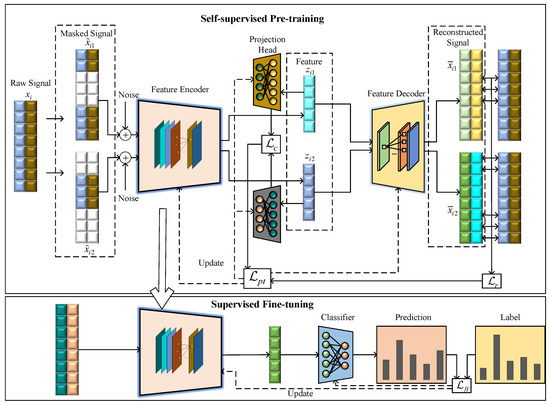

The proposed CAML-SEI method is illustrated in Figure 1. The framework incorporates a masked signal reconstruction task to enable the model to learn local structural characteristics of signals, while simultaneously employing a contrastive learning task to enhance intra-class compactness and inter-class separability among samples from similar devices. The joint optimization of these two objectives enables the model to simultaneously capture fine-grained features and establish globally discriminative representations. Furthermore, the CSERBlock is specifically designed to construct the feature encoder.

Figure 1.

Contrastive asymmetric masked learning-based specific emitter identification method.

During the pre-training stage, only unlabeled samples are required to learn generalized signal characteristics. During the fine-tuning stage, the encoder parameters obtained from self-supervised pre-training are used as the initialization parameters for the encoder. The encoder and classifier undergo end-to-end training, ensuring the encoder can both learn generalized RFF features from unlabeled data and adapt to task specificity through a small number of labeled samples. Extensive experiments are conducted to validate the enhanced feature learning capability of the proposed method, which demonstrates advanced recognition performance compared with other methods.

3.1. Data Augmentation

Traditional masked signal reconstruction methods typically employ a single random mask for training by applying it to the input signal, while contrastive learning paradigms rely on similarity learning between paired augmented samples. The data augmentation in this paper consists of two steps, which are masking and adding Gaussian white noise. To achieve synergistic optimization of these two tasks, a complementary mask enhancement strategy is proposed to enable the model to infer complete signal features from different local information, thereby enhancing the comprehensiveness of feature extraction. The strategy generates two augmented samples with complementary mask positions from the original signal based on a preset masking ratio . Specifically, the starting point of the masked interval is randomly selected within the signal length L, and the ending point is calculated according to , ensuring that two mask blocks and operate on non-overlapping regions of the signal. sets the interval to zero while preserving the remaining parts, whereas retains this interval and masks out other regions. This complementary masking facilitates the model in extracting effective features from distinct local segments and establishing cross-region feature associations, thereby effectively mitigating the potential issue of local feature overfitting that may arise from single-mask training. Additionally, after performing complementary masking, Gaussian white noise and are, respectively, added to the two masked signals to generate augmented samples and :

where ⊙ denotes the Hadamard product. Therefore, the two complementarily masked signals obtained through the aforementioned data augmentation will serve as inputs to the feature encoder.

3.2. Network Structure

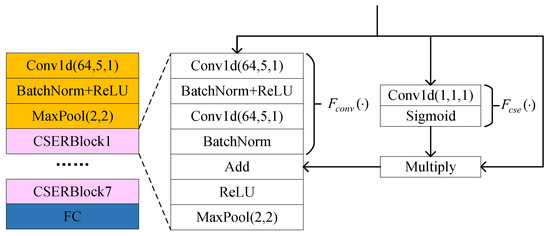

To enhance the feature extraction capability of the encoder, we design an encoder architecture based on the CSERBlock, as illustrated in Figure 2. The encoder comprises a 1D convolutional layer, seven CSERBlocks, and a fully connected layer. Here, Conv1d (C, K, S) denotes a 1D convolutional layer with output channels C, kernel size K, and stride S. Here, the output channel number C is set to 64 to balance feature richness and computational efficiency. An insufficient number of channels would limit the model’s ability to capture key features, while excessive channels would increase computational overhead and easily lead to overfitting in few-shot scenarios. The convolution kernel size K is set to 5 as a trade-off choice to accommodate both short-term and long-term feature extraction. The stride S = 1 maximizes the retention of fingerprint details. These structural hyperparameters remain fixed throughout the training process, with only the convolution kernel weights being adaptively updated through backpropagation. In the CSERBlock, the input features are first transformed into a more compact representation through 1D convolution. In the “excitation” step, weights are generated via activation functions to adaptively recalibrate feature responses at different positions on the feature maps. This mechanism enables the model to focus on more informative regions, thereby optimizing the quality of feature representations. Simultaneously, residual connections ensure direct gradient flow, mitigating the vanishing gradient problem during the training of deep networks. Consequently, the residual block with channel squeeze-and-excitation enhances critical features while suppressing less significant ones by reweighting the importance of features at each position, thereby improving the model’s feature extraction capability. Finally, a max-pooling layer is employed to complete the feature extraction process.

Figure 2.

Structure of CSERNet.

Specifically, in the CSERBlock, the channel squeeze-and-excitation function is composed of a 1D convolutional layer followed by the sigmoid activation function that maps inputs to values within the range of 0 to 1. By applying to the input feature map , the output response is generated as follows:

Each element in indicates the relative importance of the feature at position i. The input feature map is then adjusted through the weighting mechanism, thereby recalibrating the importance of features at each position. This process enhances the influence of critical features while suppressing non-critical ones as follows:

where · denotes element-wise multiplication across channels. The excitation mechanism enhances focus on discriminative regions through a spatial attention mechanism that dynamically assigns location-specific weights to feature maps. Specifically, as defined in (13), a 1D convolutional layer compresses multi-channel features at each temporal position into a scalar value, followed by a sigmoid activation to generate a spatial weight vector. These weights recalibrate the original features via element-wise multiplication, where higher weights intensify responses to discriminative features, while lower weights suppress less informative regions. This weighting mechanism is adaptively optimized through backpropagation during training.

The function consists of two 1D convolutional layers applied to the input feature map . Its output is added to the recalibrated feature map , thereby forming a residual connection. This residual connection ensures direct gradient flow, which helps mitigate the vanishing gradient problem during the training of deep networks. Finally, a max-pooling layer is utilized to achieve dimensionality reduction and feature extraction as follows:

We adopt an asymmetric encoder–decoder architecture, where the decoder employs only a single 1D convolutional layer to reconstruct the signal. Compared to traditional symmetric encoder–decoder architectures, this asymmetric design significantly simplifies the decoder’s complexity. Due to the reduced parameters, the asymmetric decoder exhibits stronger generalization capability on training data, effectively mitigating the risk of overfitting. Furthermore, this design enhances flexibility and reduces demands on computational resources, thereby significantly lowering the model’s computational burden while maintaining efficient performance.

In contrastive learning tasks, two complementary masked augmented signals from the same signal may exhibit potential distribution shifts in their encoded features due to differences in semantic information density within masked regions. This asymmetric semantic distribution hinders the contrastive loss function from effectively minimizing the intra-class distance of positive sample pairs, thereby limiting the model’s capability to learn useful representations. Consequently, direct contrastive learning on raw features may encounter significant challenges in efficiently extracting discriminative feature representations. To address this issue, we introduce two lightweight feature projection heads subsequent to the feature encoder. Each projection head comprises merely a single fully connected layer, which employs non-linear projection to compress high-dimensional features into a low-dimensional space, thereby eliminating redundant information while enhancing feature discriminability. This design aims to mitigate distributional discrepancies among contrastive features and facilitate model optimization.

The classifier in the fine-tuning stage comprises a fully connected layer and a dropout layer to mitigate overfitting effects. Detailed network configurations are presented in Table 1.

Table 1.

Network structure of CAML-SEI method.

3.3. Pre-Training Stage

During the self-supervised pre-training stage, two augmented masked signals and are fed into the feature encoder to extract latent fingerprint representations and . For the signal reconstruction task, the latent representations are passed to the decoder to produce reconstructed signals and . To minimize the discrepancy between reconstructed and original signals, the mean squared error (MSE) is employed as the loss function, with the loss calculated exclusively on the masked regions between the decoder predictions and the original signals:

where B denotes the training batch size. Since , the model must fully reconstruct the signal from these two mutually exclusive subsets. This compels the encoder to learn a global feature representation from local fragments and maintain semantic consistency across fragments. For random masking, due to the existence of overlapping regions, the model might neglect feature extraction from certain regions. For the latent fingerprint features and of two augmented samples, the features are further compressed via projection heads to obtain and . We utilize cosine similarity as the similarity metric. Its advantage lies in its robustness to the magnitudes of feature vectors, enabling the model to focus on learning discriminative semantic features. The cosine similarity between them is computed as follows:

To establish a discriminative feature representation space, we further design a contrastive learning-based optimization objective. Specifically, for positive signal pairs (i.e., the two masked augmented signals) derived from the same original signal, their latent features are encouraged to be close to each other, while being repelled from negative signal features originating from different signals. Formally, given a training batch containing B signals, for each signal , the features of its two augmented versions, and , form a positive pair. The negative signal set comprises the complementary mask signal features from other signals within the batch. Based on this construction, the contrastive loss is formulated as follows:

Similarly, is defined as

The total contrastive loss is defined as

In this way, the model learns to discriminate between positive signals derived from the same signal and negative signals derived from different signals, thereby improving the discriminative capability of latent features. The overall learning objective is formulated as a combination of the reconstruction loss and the contrastive loss , defined as

Since the model is required to recover complete signals from masked inputs, the reconstruction loss facilitates the model in capturing the inherent structures and patterns of the signals, thereby enabling the learning of more comprehensive and enriched feature representations. The contrastive loss focuses on optimizing both the matching degree between two augmented signals and the contrastiveness between signals. This mechanism encourages the learned features to exhibit desirable instance discriminability.

3.4. Fine-Tuning Stage

After completing the parameter optimization of the feature encoder during the pre-training stage, we propose to transfer the pre-trained weights of the encoder as initial parameters to the model fine-tuning stage. This design effectively leverages the general feature representation capabilities acquired through self-supervised pre-training, thereby providing a more efficient initial foundation for subsequent fine-tuning tasks. Additionally, the classifier incorporates a fully connected layer. To minimize the discrepancy between the probability distribution predicted by the model and that of the ground-truth labels, the cross-entropy loss function is employed as the optimization objective:

where is the batch size for model training in the fine-tuning stage. The training procedure of CAML-SEI is summarized in Algorithm 1.

| Algorithm 1 Training procedure of CAML-SEI method. |

| Input: |

|

| Output: Trained parameters and . |

| Pre-training Stage: |

|

| Fine-tuning Stage: |

|

4. Experimental Results and Discussions

4.1. Dataset and Experimental Setup

The model performance is evaluated using a real-world collected ADS-B dataset containing 30 emitters and an open-source Wi-Fi dataset [23] containing 16 emitters. The ADS-B dataset is constructed based on a receiver system comprising the AD9361 (Analog Devices, Norwood, MA, USA) RF front-end and the ZYNQ-7020 (Xilinx, San Jose, CA, USA) programmable logic platform, with the system’s center frequency set to 1090 MHz and a sampling rate of 40 MHz. The SNR of ADS-B signals is 12–15 dB. Each raw signal frame contains 4780 sampling points, which are segmented into samples using a sliding window with a length of and a step size of . During the pre-training stage, 100 signal frames per aircraft are selected and sliced to generate 500 training samples; in the testing stage, 80 signal frames per aircraft are sliced to produce 400 test samples. The Wi-Fi dataset consists of signals complying with the IEEE 802.11a standard [46] and has the SNR of 30 dB. It is collected using a USRP B210 (Ettus Research, Austin, TX, USA) device, with a center frequency of 2450 MHz. The signal sources are 16 USRP X310 (Ettus Research, Austin, TX, USA) devices. The signals are generated using a sliding window with a fixed window length of and a stride of . Each device contains 1000 signals in the pre-training stage and 800 signals per device in the testing stage. In the fine-tuning stage, experiments are conducted with 10, 15, 20, and 25 labeled signals per emitter.

During the pre-training stage, the batch size is set to 128, and the number of training epochs is set to 100. The Adam optimizer is employed with a learning rate of 0.001. The masking ratio is set to 0.4. The random seed is 300. In the fine-tuning stage, the same batch size of 128 is maintained, with the number of training epochs set to 200. The Adam optimizer is used with a learning rate of 0.001, coupled with a CosineAnnealingLR scheduler for learning rate adjustment. All our experiments were executed on a NVIDIA GeForce RTX 3090 GPU.

4.2. Comparison with Baseline Methods

The proposed method is compared with various generative approaches, including AE [47], DCGAN [48], MAE [43], AMAE [44], as well as contrastive learning methods, such as BYOL [39], SimCLR [40], and SA2SEI [38]. We conducted ten independent experiments and calculated statistical indicators including mean accuracy and standard deviation. Experimental results demonstrate that the proposed method exhibits significant performance advantages in two SEI tasks. Table 2 presents the performance comparison of various methods on the ADS-B dataset with 10, 15, 20, or 25 labeled samples per class. The results show that the proposed method significantly outperforms other methods and demonstrates robustness to label scarcity. The core robustness of our approach lies in its stable performance under extremely limited labeled data scenarios. Specifically, with only 10 labeled samples per class, the proposed method achieves a recognition accuracy of 78.29%, surpassing the suboptimal baseline method AMAE (74.04%) by 4.25%. As the number of labeled samples increases to 25 per class, the accuracy of the proposed method further improves to 88.00%, representing a 2.74% enhancement over the suboptimal AMAE approach. This indicates that the robust feature representation constructed during the self-supervised pre-training stage effectively captures the fingerprint characteristics of ADS-B signals.

Table 2.

Recognition accuracy of different methods on the ADS-B dataset.

Table 3 demonstrates the performance of different methods on the Wi-Fi dataset. Compared to baseline methods, the proposed CAML-SEI method consistently outperforms in four few-shot labeled sample scenarios. When the number of labeled samples is 10, CAML-SEI achieves an accuracy of 96.37%, outperforming the suboptimal baseline method MAE by 4.23%. As the number of labeled samples increases to 25, the accuracy of CAML-SEI further improves to 99.53%. It is noteworthy that the contrastive learning method SimCLR achieves an accuracy of 99.04% with 25 labeled samples per class, which is close to the proposed method’s accuracy of 99.53%. However, when only 10 labeled samples per class are available, SimCLR’s accuracy drops to 85.92%, which is 10.45% lower than the 96.37% achieved by the proposed CAML-SEI method. These results fully validate the robustness and effectiveness of the proposed method under limited labeled data conditions. Under the challenging condition of only 10 to 25 samples per class, the sustained lead fully demonstrates its strong tolerance to label scarcity, making it a reliable solution for few-shot SEI tasks.

Table 3.

Recognition accuracy of different methods on the Wi-Fi dataset.

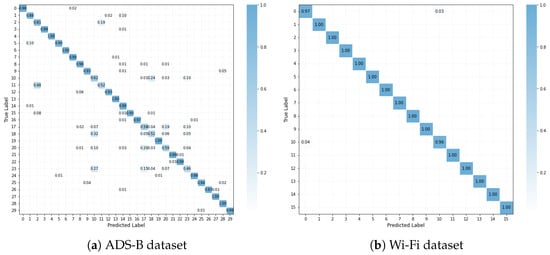

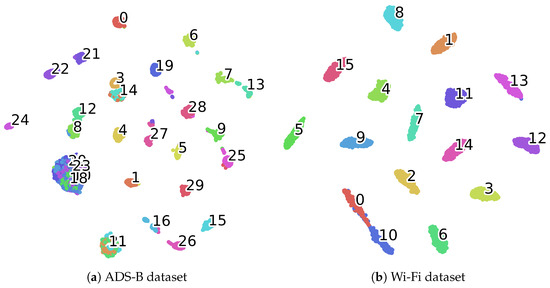

Table 4 and Table 5 present the F1-score and recall of the proposed CAML-SEI under different numbers of labeled samples. It can be observed that the F1-score and recall are generally consistent with the accuracy, which indicates that the model has relatively balanced prediction performance across all categories and shows certain robustness in terms of class balance. Figure 3 presents the confusion matrices of the proposed method on two datasets. The vast majority of the diagonal elements have very high values, intuitively confirming that most transmitters can be correctly identified. The t-SNE method is adopted to visualize the features learned by the model. As illustrated in Figure 4, the visualization results on two datasets demonstrate that the features of most categories form distinct clusters in the low-dimensional embedded space, exhibiting intra-class compactness and inter-class separation characteristics. This high degree of intra-class compactness and inter-class separation is a direct manifestation of the feature discriminability successfully achieved by the mechanism of the contrastive loss . Through its loss function design, effectively pulls together the different views of the same signal while pushing apart views from different signals. Although some classes in Figure 4a may overlap partially due to environmental factors such as noise and different flight states, the majority of classes still form clear clusters, which confirms the strong discriminability of CAML-SEI for global features.

Table 4.

F1-score and recall of the CAML-SEI method on the ADS-B dataset.

Table 5.

F1-score and recall of the CAML-SEI method on the Wi-Fi dataset.

Figure 3.

Confusion matrices.

Figure 4.

Feature visualization using t-SNE. Each color represents the feature distribution of the device signal, with numbers indicating the corresponding devices.

4.3. Ablation Study

Table 6 and Table 7 present the experimental results on two datasets, where “w/o CSE” denotes removing the channel squeeze-and-excitation operation, “w/o Proh” indicates removing the feature projection head, “w/o ” represents eliminating the reconstruction loss, and “w/o ” signifies the removal of the contrastive loss. “only ” means using only , while removing the components CSE, Proh, and . “only ” means using only , while removing the components CSE, Proh, and . The results demonstrate that all components contribute significantly to model performance improvement across a different number of labeled samples scenarios.

Table 6.

Ablation experiment on the ADS-B dataset.

Table 7.

Ablation experiment on the Wi-Fi dataset.

The ablation of the CSE module exhibits a particularly notable impact on model performance. On the ADS-B dataset with 10 labeled samples per class, the recognition accuracy drops by 10.99%, while a 4.96% decrease is observed on the Wi-Fi dataset under the same conditions. These results strongly validate the critical role of the feature weighting mechanism in CSERBlock. RFF features are generated by hardware defects and exhibit fine-grained local patterns in signals. The CSE module dynamically enhances responses in key feature regions by reweighting features. When the CSE module is removed, discriminative regions become averaged out, which causes the model’s ability to identify genuine RFF features to diminish, thus leading to a decline in recognition performance. The removal of the feature projection head negatively affects both datasets, causing accuracy reductions of 1.61% on the ADS-B dataset and 0.76% on the Wi-Fi dataset when 15 samples per class are labeled. Further analysis of loss function impacts reveals that the ablation of reconstruction loss leads to significant performance degradation on both datasets. With 10 labeled samples per class, the accuracy decreases by 5.64% on the ADS-B dataset and 3.75% on the Wi-Fi dataset, confirming the necessity of masked reconstruction for fine-grained feature extraction. This significant performance decline clearly demonstrates that the reconstruction task is crucial for the model to learn the inherent structures and patterns of signals. Removing it prevents the model from effectively learning comprehensive and enriched feature representations from masked signals, thereby impairing downstream classification performance. The reconstruction task forces the model to recover masked signals from complementary masked samples, enabling the capture of structural detail features in signals. When the reconstruction loss is removed, the model neglects these local features and tends to learn global features. However, such features are prone to lacking device specificity, which ultimately results in a drop in recognition performance. The removal of the contrastive loss has an even more pronounced impact, causing a 37.12% accuracy drop on the Wi-Fi dataset and a 14.09% drop on the ADS-B dataset when there are 10 labeled samples per class. This proves the core role of contrastive loss in optimizing the matching degree of positive sample pairs and the contrastiveness between positive and negative samples. Without , the features learned by the model severely lack the ability to distinguish between different signal instances, leading to a substantial deterioration in classification performance. The design of contrastive loss explicitly achieves this goal by maximizing the similarity ratio between positive sample pairs versus negative sample pairs. Additionally, using either the reconstruction loss or the contrastive loss alone leads to a decrease in recognition accuracy. For example, on the ADS-B dataset with 10 labeled samples per class, using only the reconstruction loss results in a 15.74% drop in accuracy; on the Wi-Fi dataset, it leads to a 30.72% drop. On the ADS-B dataset with 10 labeled samples per class, using only the contrastive loss results in a 5.68% drop in accuracy; on the Wi-Fi dataset, it leads to a 6.13% drop. The complete model that uses both and consistently outperforms the models using either loss function alone. This indicates that and are complementary rather than redundant. Therefore, the ablation experiments comprehensively verify the design rationality of each module from multiple perspectives, providing solid experimental support for the overall architecture of the proposed model.

4.4. Impact of Hyperparameters

4.4.1. The Impact of Different Masking Ratios

Table 8 and Table 9 illustrate the impact of different masking ratios on model performance for the ADS-B and Wi-Fi datasets, respectively. For the 30-class ADS-B task, the optimal masking ratio is = 0.4, achieving peak accuracy of 78.29%, 82.78%, 84.47%, and 88.00% across 10 to 25 labeled samples per class. This ratio balances the difficulty of local reconstruction with the integrity of global semantics, enabling robust feature extraction. For the 16-class Wi-Fi dataset, = 0.6 yields accuracies of 97.99%, 99.45%, 99.46%, and 99.72% under the same labeled sample sizes. It can be seen that excessively high or low masking ratios (e.g., 0.1 and 0.9) lead to significant performance degradation. When the masking ratio is too low, the model only needs to reconstruct local signal fragments, failing to compel the encoder to learn global dependency features of device fingerprints. Conversely, an excessively high ratio results in the over-masking of critical signal structures, causing both the reconstruction task and contrastive task to converge to suboptimal local solutions, thereby impairing the model’s ability to recover discriminative information effectively. Therefore, a moderate masking ratio ( = 0.2 to 0.8) is considered appropriate, as it balances the difficulty of local reconstruction with the integrity of global semantics, enabling robust feature extraction and achieving higher accuracy.

Table 8.

Recognition accuracy with different masking ratios on the ADS-B dataset.

Table 9.

Recognition accuracy with different masking ratios on the Wi-Fi dataset.

4.4.2. The Impact of Different Batch Sizes

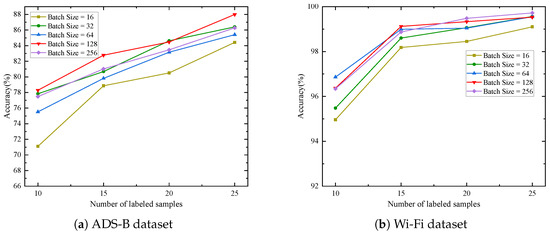

Figure 5 illustrates the impact of different training batch sizes on model performance during the pre-training stage for the ADS-B and Wi-Fi datasets. Experimental results demonstrate that a batch size of 128 achieves the highest or near-highest recognition accuracy across varying numbers of labeled samples in both datasets. Specifically, on the ADS-B dataset, the batch size of 128 yields the highest accuracy for three cases (10, 15, and 25 labeled samples). On the Wi-Fi dataset, all batch sizes except for a batch size of 16 achieved high accuracy. Even with smaller batch sizes (such as 32), the model maintains high accuracy. These observations suggest that a batch size of 128 may provide relatively stable gradient estimates and sufficient update frequency. A moderate batch size can effectively balance gradient update stability and model generalization ability.

Figure 5.

Recognition accuracy under different batch sizes.

4.4.3. The Impact of the Number of Unlabeled Samples

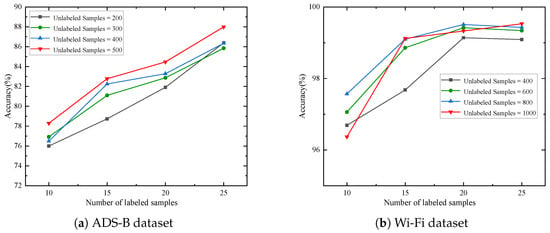

The impact of the number of unlabeled samples on test accuracy is systematically evaluated for both ADS-B and Wi-Fi datasets, as shown in Figure 6. For the ADS-B dataset, raising unlabeled samples per class from 200 to 500 yields a clear upward trend in recognition accuracy. For example, with 10 labeled samples, accuracy improves from 76.00% to 78.29%. Similarly, for the Wi-Fi dataset, increasing unlabeled samples per class from 400 to 1000 generally boosts accuracy across most labeled sample counts. Notably, with 15 labeled samples, accuracy rises from 97.68% to 99.12%, and with 25 labeled samples, it increases from 99.09% to 99.53%. Although a minor fluctuation occurs for 10 labeled samples on the Wi-Fi dataset, the overall results confirm that self-supervised learning effectively extracts generalizable feature representations from unlabeled data, mitigating performance degradation due to labeled data scarcity.

Figure 6.

Recognition accuracy under different number of unlabeled samples.

4.5. Other Analyses

4.5.1. The Impact of the Number of Projection Head Layers

To evaluate the impact of projection head layers on the proposed method, Table 10 and Table 11 present the recognition accuracy under different layer configurations for the ADS-B and Wi-Fi datasets. The results demonstrate that on ADS-B, a single FC layer achieves 88.00% accuracy with 25 labeled samples, while increasing the depth to two and three layers degrades performance by 3.31% and 4.78%, respectively, indicating overfitting risks. On the Wi-Fi dataset, although the two-layer head demonstrates better recognition performance, its advantage diminishes with increasing labeled samples. Crucially, the three-FC-layer configuration underperforms the single-layer head in all Wi-Fi scenarios. These findings confirm that excessively increasing projection head complexity hampers generalization.

Table 10.

Impact of projection head layers on the ADS-B dataset.

Table 11.

Impact of projection head layers on the Wi-Fi dataset.

4.5.2. The Impact of Different Feature Encoders

Table 12 and Table 13 compare the recognition accuracy, number of parameters (Params), floating point operations (FLOPs), and training time per epoch for each class with 25 labeled samples of the proposed CSERNet with CVCNN [28], VGG [49], ResNet [50], and EfficientNet [51] when they are employed as feature encoders under varying numbers of labeled samples. The results show that on the ADS-B dataset, CSERNet significantly outperforms VGG, ResNet, and CVCNN models and performs better than EfficientNet when every class only has 10 and 25 samples. Specifically, with 10 labeled samples per class, CSERNet attains an accuracy of 78.29%, exhibiting improvements of 1.41%, 24.01%, 6.84%, and 0.55% over CVCNN, VGG, ResNet, and EfficientNet, respectively. On the Wi-Fi dataset, the performance of models like CVCNN and EfficientNet is slightly higher than that of CSERNet, but CSERNet remains highly competitive, exceeding 99% accuracy with 15 labeled samples per class. Crucially, CSERNet has the fewest parameters and the lowest FLOPs, but it still achieves the highest or a relatively high accuracy, demonstrating its robustness to model simplification. Additionally, its training time is shorter than that of CVCNN, ResNet, and EfficientNet, which validates the balance of CSERNet between high feature extraction capability and significantly lower resource requirements.

Table 12.

Impact of different encoders on the ADS-B dataset.

Table 13.

Impact of different encoders on the Wi-Fi dataset.

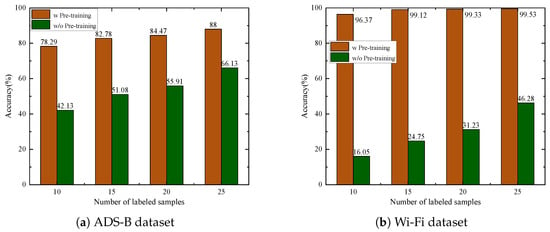

4.5.3. The Impact of Self-Supervised Pre-Training

To validate the effectiveness of the proposed self-supervised pre-training method, we compared it with a baseline approach that does not use pre-training. In the baseline, both the feature encoder and classifier parameters are randomly initialized and then fine-tuned using 10, 15, 20, or 25 labeled samples. The experimental results shown in Figure 7 on both datasets demonstrate that the proposed self-supervised pre-training method consistently outperforms the baseline across various scenarios. Specifically, on the ADS-B dataset, with 10 labeled samples, the pre-trained model achieves an accuracy of 78.29%, which is 36.16% higher than the baseline without pre-training. On the Wi-Fi dataset, with 10 labeled samples, the pre-trained model achieves an accuracy of 96.37%, while the baseline without pre-training is only 16.05%. In scenarios with 15, 20, and 25 labeled samples, the proposed method continues to maintain robust performance. These results clearly indicate that the proposed method has significant advantages in scenarios with limited labeled samples. This validates its ability to maintain high performance with fewer labeled samples while effectively leveraging unlabeled data to maximize its potential.

Figure 7.

Recognition accuracy with/without pre-training.

4.5.4. The Impact of Masking Mode

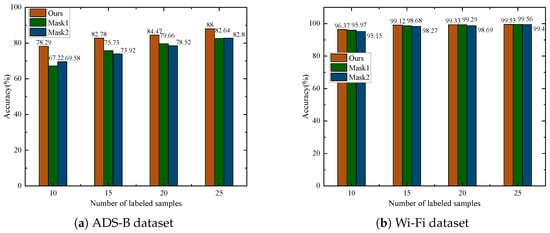

This experiment aims to validate the effectiveness of the proposed complementary masking mode. Two baseline methods are designed: Mask1, which applies random masking with identical masking ratios to both augmented signals, and Mask2, which employs random masking with masking ratios summing to 1 for the two augmented signals. The experimental results shown in Figure 8 demonstrate that the proposed complementary masking strategy achieves better performance under the condition of having very few labeled samples per class (e.g., 10 or 15). On the ADS-B dataset, when the number of labeled samples per class is 25, the proposed method achieves an accuracy of 88.00%, which is 5.36% and 5.2% higher than the other two methods, respectively. With only 10 labeled samples, the performance gap becomes more pronounced, where the proposed method achieves improvements of 11.07% and 8.71% over Mask1 and Mask2. On the Wi-Fi dataset, with only 10 labeled samples per class, the proposed method achieves 0.4% and 1.22% higher accuracy than Mask1 and Mask2, respectively. The experimental results indicate that the complementary masking mechanism enhances the robustness of feature learning, thus making it more adaptable to scenarios with scarce labeled data.

Figure 8.

Impact of masking mode.

4.5.5. The Impact of Encoder–Decoder Symmetry

To validate the effectiveness of the proposed asymmetric encoder–decoder architecture, its performance is compared against a symmetric architecture. Results based on the ADS-B and Wi-Fi datasets are presented in Table 14 and Table 15, clearly demonstrating the advantage of the asymmetric design. On the ADS-B dataset, the asymmetric architecture consistently outperforms the symmetric counterpart across all labeled sample scenarios, with a performance improvement of 6.25% to 8.96%. Similarly, on the Wi-Fi dataset, the asymmetric architecture maintains performance advantages, achieving a 3.57% advantage with 10 samples per class. While the performance difference diminished as the number of labeled samples increased to 25, the asymmetric design still achieved marginally higher accuracy. The asymmetric architecture effectively reduces model complexity and computational overhead by employing a powerful encoder to extract rich features while utilizing a simple decoder focused solely on reconstruction. This design mitigates overfitting of the complex decoder to the reconstruction task, facilitating the learning of more discriminative and generalizable feature representations by the encoders.

Table 14.

Impact of encoder–decoder symmetry on the ADS-B dataset.

Table 15.

Impact of encoder–decoder symmetry on the Wi-Fi dataset.

4.5.6. The Impact of Signal Length

Table 16 presents the recognition performance of the model on the ADS-B dataset under three different input signal lengths: L = 3750, L = 4000, and L = 4250. As can be seen from the table, when there are 10 labeled samples per class, the model achieves the highest recognition accuracy under the condition of L = 4000. When there are 15, 20, and 25 labeled samples per class, the model achieves the highest recognition accuracy under the condition of L = 4250. Under the four few-shot conditions, the model is able to maintain a high level of recognition accuracy under different signal lengths, and the maximum fluctuation in the model’s recognition performance across different signal lengths is approximately 3%. This demonstrates that the CAML-SEI model exhibits certain robustness to changes in signal length. Its self-supervised feature extraction mechanism does not strictly depend on a fixed-length signal segment. The model is able to learn effective RFF features from signals of different lengths.

Table 16.

Impact of signal length.

5. Conclusions

Aiming at the challenge of scarce labeled samples in SEI tasks under non-cooperative communication scenarios, this paper proposes a self-supervised learning method based on contrastive asymmetric masked learning. The method innovatively integrates dual optimization mechanisms of masked signal reconstruction and contrastive learning. Through complementary masking augmentation strategies, it preserves critical fingerprint information in signals while utilizing masked regions to guide robust feature learning. Furthermore, a channel squeeze-and-excitation residual network combined with an asymmetric encoder–decoder architecture is designed to enhance key feature representation while reducing model complexity, significantly improving feature extraction capability. Experimental results demonstrate that the proposed CAML-SEI method outperforms other comparative methods across multiple extreme labeled conditions (only 10, 15, 20, 25 samples per category) on both the 30-class ADS-B dataset and 16-class Wi-Fi dataset. This conclusively verifies its superiority in learning robust and generalizable fingerprint characteristics for RFFs, providing a reliable technical solution for addressing RFF feature extraction challenges in label-scarce scenarios.

The proposed CAML-SEI method offers several advantages, and our experiments have validated its robustness under moderate SNR conditions. However, performance may not be optimal under low SNR conditions, for example, at SNR levels below 0 dB. Therefore, future work will integrate a signal denoising module to enhance robustness for low SNR conditions. Simultaneously, considering the issue of signal interference in non-cooperative scenarios, we will incorporate interference detection as an auxiliary pre-training task in the self-supervised framework to improve model performance. Moreover, regarding future research directions, we will focus on the study of cross-domain SEI, specifically investigating whether pre-trained models can be adapted to SEI recognition tasks where signal distributions may change over time. To address the cross-domain SEI problem, we plan to introduce domain adaptation techniques in future work. Additionally, we will employ model compression strategies such as model pruning, quantization, and knowledge distillation to further reduce model size, enabling edge deployment. Furthermore, for ultra-large-scale device identification scenarios, we will explore combining memory bank mechanisms with adaptive hard sample mining to further improve the quality of negative samples and training scalability.

Author Contributions

Conceptualization, D.W. and Y.Z.; methodology, D.W. and Y.Z.; software, D.W.; validation, D.W. and Y.Z.; formal analysis, D.W. and Y.H.; investigation, D.W. and T.C.; resources, D.W.; data curation D.W. and T.C.; writing—original draft preparation, D.W. and Y.H.; writing—review and editing, D.W., Y.H., T.C. and Y.Z.; visualization, D.W.; supervision, Y.Z. and Y.H.; project administration, Y.Z. and Y.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Angueira, P.; Val, I.; Montalban, J.; Seijo, Ó.; Iradier, E.; Fontaneda, P.S.; Fanari, L.; Arriola, A. A survey of physical layer techniques for secure wireless communications in industry. IEEE Commun. Surv. Tutor. 2022, 24, 810–838. [Google Scholar] [CrossRef]

- Bai, L.; Zhu, L.; Liu, J.; Choi, J.; Zhang, W. Physical layer authentication in wireless communication networks: A survey. J. Commun. Inf. Netw. 2020, 5, 237–264. [Google Scholar] [CrossRef]

- Zhang, J.; Shen, G.; Saad, W.; Chowdhury, K. Radio Frequency Fingerprint Identification for Device Authentication in the Internet of Things. IEEE Commun. Mag. 2023, 61, 110–115. [Google Scholar] [CrossRef]

- Xie, N.; Li, Z.; Tan, H. A survey of physical-layer authentication in wireless communications. IEEE Commun. Surv. Tutor. 2020, 23, 282–310. [Google Scholar] [CrossRef]

- Fadul, M.K.M.; Reising, D.R.; Weerasena, L.P.; Loveless, T.D.; Sartipi, M.; Tyler, J.H. Improving RF-DNA Fingerprinting Performance in an Indoor Multipath Environment Using Semi-Supervised Learning. IEEE Trans. Inf. Forensics Secur. 2024, 19, 3194–3209. [Google Scholar] [CrossRef]

- Zha, X.; Chen, H.; Li, T.; Qiu, Z.; Feng, Y. Specific Emitter Identification Based on Complex Fourier Neural Network. IEEE Commun. Lett. 2022, 26, 592–596. [Google Scholar] [CrossRef]

- Li, Y.; Ding, Y.; Goussetis, G.; Zhang, J. Power amplifier enabled RF fingerprint identification. In Proceedings of the 2021 IEEE Texas Symposium on Wireless and Microwave Circuits and Systems (WMCS), Waco, TX, USA, 18–20 May 2021; pp. 1–6. [Google Scholar]

- Peng, Y.; Liu, P.; Wang, Y.; Gui, G.; Adebisi, B.; Gacanin, H. Radio frequency fingerprint identification based on slice integration cooperation and heat constellation trace figure. IEEE Wirel. Commun. Lett. 2021, 11, 543–547. [Google Scholar] [CrossRef]

- Gao, N.; Jing, X.; Huang, H.; Mu, J. Robust collaborative spectrum sensing using phy-layer fingerprints in mobile cognitive radio networks. IEEE Commun. Lett. 2017, 21, 1063–1066. [Google Scholar] [CrossRef]

- Tian, Q.; Lin, Y.; Guo, X.; Wen, J.; Fang, Y.; Rodriguez, J.; Mumtaz, S. New security mechanisms of high-reliability IoT communication based on radio frequency fingerprint. IEEE Internet Things J. 2019, 6, 7980–7987. [Google Scholar] [CrossRef]

- Hao, X.; Feng, Z.; Liu, R.; Yang, S.; Jiao, L.; Luo, R. Contrastive self-supervised clustering for specific emitter identification. IEEE Internet Things J. 2023, 10, 20803–20818. [Google Scholar] [CrossRef]

- Ellis, K.; Serinken, N. Characteristics of radio transmitter fingerprints. Radio Sci. 2001, 36, 585–597. [Google Scholar] [CrossRef]

- Hall, J.; Barbeau, M.; Kranakis, E. Detection of transient in radio frequency fingerprinting using signal phase. Wirel. Opt. Commun. 2003, 9, 13. [Google Scholar]

- Hall, J.; Barbeau, M.; Kranakis, E. Radio frequency fingerprinting for intrusion detection in wireless networks. IEEE Trans. Defendable Secur. Comput. 2005, 12, 1–35. [Google Scholar]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef]

- Archana, R.; Jeevaraj, P.E. Deep learning models for digital image processing: A review. Artif. Intell. Rev. 2024, 57, 11. [Google Scholar] [CrossRef]

- Luo, S.; Ivison, H.; Han, S.C.; Poon, J. Local interpretations for explainable natural language processing: A survey. ACM Comput. Surv. 2024, 56, 232. [Google Scholar] [CrossRef]

- Ahmed, A.; Quoitin, B.; Gros, A.; Moeyaert, V. A comprehensive survey on deep learning-based LoRa radio frequency fingerprinting identification. Sensors 2024, 24, 4411. [Google Scholar] [CrossRef]

- Wang, M.; Fang, S.; Fan, Y.; Hou, S. Resource-Constrained Specific Emitter Identification Based on Efficient Design and Network Compression. Sensors 2025, 25, 2293. [Google Scholar] [CrossRef]

- Ying, S.; Huang, S.; Chang, S.; He, J.; Feng, Z. AMSCN: A novel dual-task model for automatic modulation classification and specific emitter Identification. Sensors 2023, 23, 2476. [Google Scholar] [CrossRef]

- Wang, X.; Zhang, Y.; Zhang, H.; Li, Y.; Wei, X. Radio frequency signal identification using transfer learning based on LSTM. Circuits Syst. Signal Process. 2020, 39, 5514–5528. [Google Scholar] [CrossRef]

- Merchant, K.; Revay, S.; Stantchev, G.; Nousain, B. Deep learning for RF device fingerprinting in cognitive communication networks. IEEE J. Sel. Top. Signal Process. 2018, 12, 160–167. [Google Scholar] [CrossRef]

- Sankhe, K.; Belgiovine, M.; Zhou, F.; Riyaz, S.; Ioannidis, S.; Chowdhury, K. ORACLE: Optimized radio classification through convolutional neural networks. In Proceedings of the IEEE INFOCOM 2019-IEEE Conference on Computer Communications, Paris, France, 29 April–2 May 2019; pp. 370–378. [Google Scholar]

- Yu, J.; Hu, A.; Li, G.; Peng, L. A robust RF fingerprinting approach using multisampling convolutional neural network. IEEE Internet Things J. 2019, 6, 6786–6799. [Google Scholar] [CrossRef]

- Al-Shawabka, A.; Pietraski, P.; Pattar, S.B.; Restuccia, F.; Melodia, T. DeepLoRa: Fingerprinting LoRa devices at scale through deep learning and data augmentation. In Proceedings of the Twenty-second International Symposium on Theory, Algorithmic Foundations, and Protocol Design for Mobile Networks and Mobile Computing, Shanghai, China, 26–29 July 2021; pp. 251–260. [Google Scholar]

- Soltani, N.; Sankhe, K.; Dy, J.; Ioannidis, S.; Chowdhury, K. More is better: Data augmentation for channel-resilient RF fingerprinting. IEEE Commun. Mag. 2020, 58, 66–72. [Google Scholar] [CrossRef]

- Deng, P.; Hong, S.; Qi, J.; Wang, L.; Sun, H. A lightweight transformer-based approach of specific emitter identification for the automatic identification system. IEEE Trans. Inf. Forensics Secur. 2023, 18, 2303–2317. [Google Scholar] [CrossRef]

- Wang, Y.; Gui, G.; Gacanin, H.; Ohtsuki, T.; Dobre, O.A.; Poor, H.V. An efficient specific emitter identification method based on complex-valued neural networks and network compression. IEEE J. Sel. Areas Commun. 2021, 39, 2305–2317. [Google Scholar] [CrossRef]

- Liu, M.; Chai, Y.; Li, M.; Wang, J.; Zhao, N. Transfer Learning-Based Specific Emitter Identification for ADS-B over Satellite System. Remote Sens. 2024, 16, 2068. [Google Scholar] [CrossRef]

- Yan, X.; Han, B.; Su, Z.; Hao, J. SignalFormer: Hybrid Transformer for Automatic Drone Identification Based on Drone RF Signals. Sensors 2023, 23, 9098. [Google Scholar] [CrossRef]

- Pan, Y.; Yang, S.; Peng, H.; Li, T.; Wang, W. Specific emitter identification based on deep residual networks. IEEE Access 2019, 7, 54425–54434. [Google Scholar] [CrossRef]

- Peng, L.; Zhang, J.; Liu, M.; Hu, A. Deep learning based RF fingerprint identification using differential constellation trace figure. IEEE Trans. Veh. Technol. 2019, 69, 1091–1095. [Google Scholar] [CrossRef]

- Shen, G.; Zhang, J.; Marshall, A.; Valkama, M.; Cavallaro, J. Radio Frequency Fingerprint Identification for Security in Low-Cost IoT Devices. In Proceedings of the 2021 55th Asilomar Conference on Signals, Systems, and Computers, Pacific Grove, CA, USA, 31 October–3 November 2021; pp. 309–313. [Google Scholar]

- Shen, G.; Zhang, J.; Marshall, A.; Cavallaro, J.R. Towards scalable and channel-robust radio frequency fingerprint identification for LoRa. IEEE Trans. Inf. Forensics Secur. 2022, 17, 774–787. [Google Scholar] [CrossRef]

- Li, H.; Liao, Y.; Wang, W.; Hui, J.; Liu, J.; Liu, X. A novel time-domain graph tensor attention network for specific emitter identification. IEEE Trans. Instrum. Meas. 2023, 72, 1–14. [Google Scholar] [CrossRef]

- Lin, Y.; Tu, Y.; Dou, Z.; Chen, L.; Mao, S. Contour stella image and deep learning for signal recognition in the physical layer. IEEE Trans. Cogn. Commun. Netw. 2020, 7, 34–46. [Google Scholar] [CrossRef]

- Wu, Z.; Wang, F.; He, B. Specific emitter identification via contrastive learning. IEEE Commun. Lett. 2023, 27, 1160–1164. [Google Scholar] [CrossRef]

- Liu, C.; Fu, X.; Wang, Y.; Guo, L.; Liu, Y.; Lin, Y.; Zhao, H.; Gui, G. Overcoming Data Limitations: A Few-Shot Specific Emitter Identification Method Using Self-Supervised Learning and Adversarial Augmentation. IEEE Trans. Inf. Forensics Secur. 2024, 19, 500–513. [Google Scholar] [CrossRef]

- Grill, J.B.; Strub, F.; Altché, F.; Tallec, C.; Richemond, P.; Buchatskaya, E.; Doersch, C.; Avila Pires, B.; Guo, Z.; Gheshlaghi Azar, M.; et al. Bootstrap your own latent-a new approach to self-supervised learning. Adv. Neural Inf. Process. Syst. 2020, 33, 21271–21284. [Google Scholar]

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning, PMLR, Vienna, Austria, 13–18 July 2020; pp. 1597–1607. [Google Scholar]

- Liu, B.; Yu, H.; Du, J.; Wu, Y.; Li, Y.; Zhu, Z.; Wang, Z. Specific emitter identification based on self-supervised contrast learning. Electronics 2022, 11, 2907. [Google Scholar] [CrossRef]

- Xie, C.; Zhang, L.; Zhong, Z. Few-shot unsupervised specific emitter identification based on density peak clustering algorithm and meta-learning. IEEE Sens. J. 2022, 22, 18008–18020. [Google Scholar] [CrossRef]

- Huang, K.; Yang, J.; Liu, H.; Hu, P. Deep Learning of Radio Frequency Fingerprints from Limited Samples by Masked Autoencoding. IEEE Wirel. Commun. Lett. 2022, 1. [Google Scholar] [CrossRef]

- Yao, Z.; Fu, X.; Guo, L.; Wang, Y.; Lin, Y.; Shi, S.; Gui, G. Few-Shot Specific Emitter Identification Using Asymmetric Masked Auto-Encoder. IEEE Commun. Lett. 2023, 27, 2657–2661. [Google Scholar] [CrossRef]

- Li, W.; Wang, J.; Liu, T.; Xu, G. Few-Shot Specific Emitter Identification Based on a Contrastive Masked Learning Framework. IEEE Commun. Lett. 2025, 29, 408–412. [Google Scholar] [CrossRef]

- IEEE Std. 802.11a-1999; IEEE Standard for Information Technology—Telecommunications and Information Exchange Between Systems—Local and Metropolitan Area Networks—Specific Requirements Part 11: Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications; Amendment 1: High-Speed Physical Layer in the 5 GHz Band. IEEE: Piscataway, NJ, USA, 1999.

- Bengio, Y.; Courville, A.; Vincent, P. Representation Learning: A Review and New Perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1798–1828. [Google Scholar] [CrossRef] [PubMed]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778. [Google Scholar]

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).