Immersive Teleoperation via Collaborative Device-Agnostic Interfaces for Smart Haptics: A Study on Operational Efficiency and Cognitive Overflow for Industrial Assistive Applications

Abstract

1. Introduction

1.1. Background and Motivation

1.2. QoE in Immersive Teleoperation

1.3. Contributions of This Study

1.4. Structure of the Document

2. Related Work

2.1. Immersive Teleoperation Systems

2.2. Haptic Feedback Devices and Modalities

2.3. Quality of Experience in Robotics and Haptics

2.4. Device-Agnostic Teleoperation Frameworks

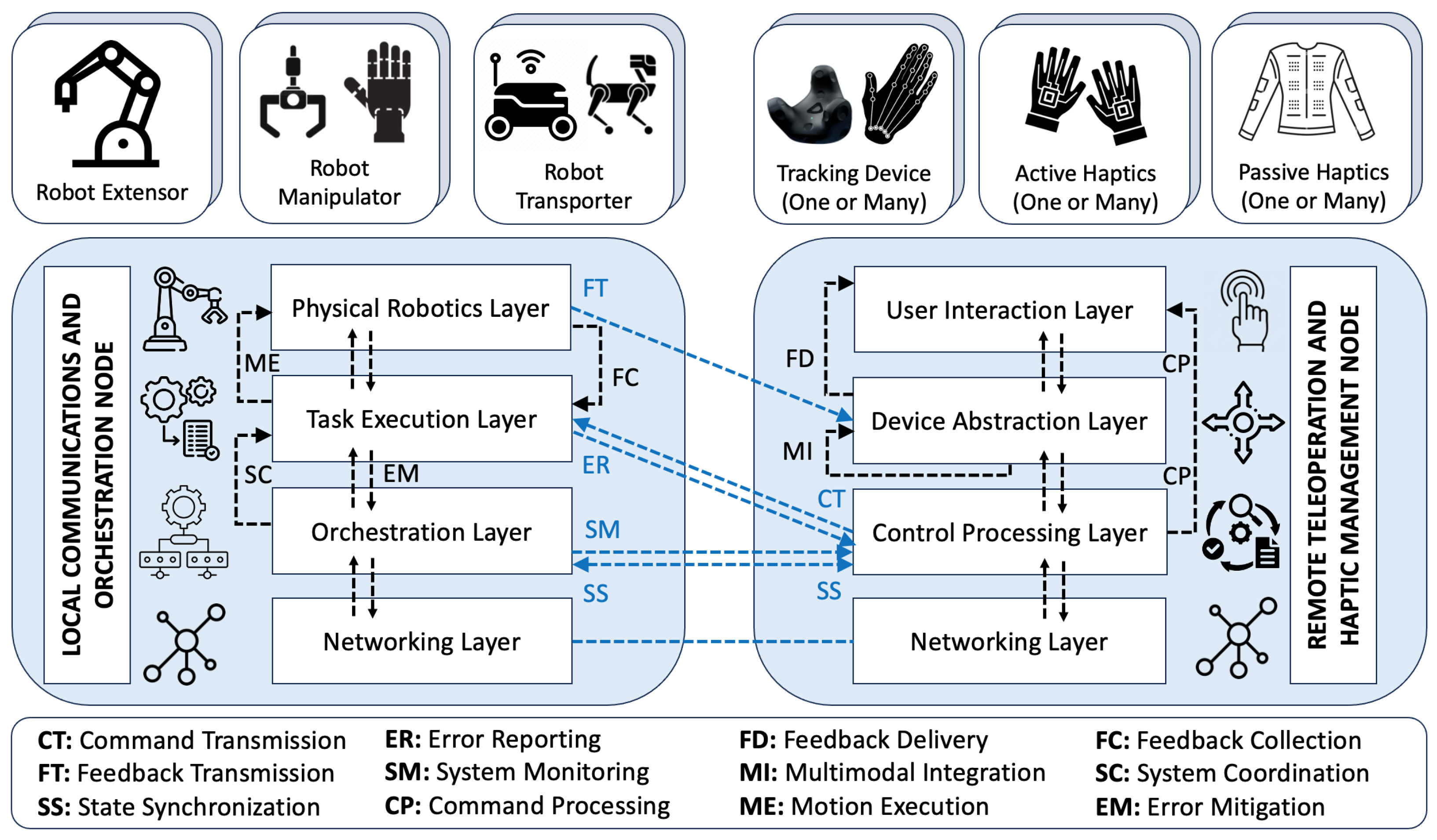

3. System Design and Architecture

3.1. Overview of the Teleoperation Framework

3.2. Device-Agnostic Layer for Interoperability

3.3. Integration of Haptic Devices and Trackers

- D1: Touch Device (3D Systems). This grounded kinaesthetic device provides precise positional tracking and high-fidelity force feedback, making it well suited to tasks that demand fine motor control and spatial accuracy. It connects via USB using the official “TouchDeviceDriver v2023.1.4” driver and runs as a ROS node, publishing six-DOF pose and force feedback data at a 1 kHz refresh rate over the USB-serial link using standard ROS message types.

- D2: DualSense Controller. This handheld controller doubles as a mobile haptic feedback device, offering ease of use and portability. Its intuitive interface suits rapid task execution in dynamic environments. It is paired via BLE and managed by the community-supported “ds5_ros” ROS node, which is built on the “pydualsense” library. The node publishes input data (axes and buttons) as sensor_msgs messages and subscribes to feedback topics (rumble and triggers), so that haptic events are encapsulated in the abstraction layer.

- D3: bHaptics TactGloves with RealSense Camera Tracker. This combination of a vibrotactile glove and a camera-based tracking system enables immersive interactions, particularly for one-handed operations requiring tactile feedback. Hand gestures are recognized from the video stream by a convolutional neural network (CNN) that estimates 21 hand landmark positions. The TactGlove connects via BLE and is driven by the bHaptics “tact-python” library, which publishes haptic commands over ROS topics. The camera is connected via USB and runs a ROS wrapper based on OpenCV and MediaPipe for real-time CNN pose estimation.

- D4: SenseGlove Nova with VIVE Trackers. This combination integrates force-feedback and vibrotactile functionality with extended movement tracking and hand gesture recognition, delivering an advanced multi-modal haptic experience. Gestures are recognized by a supervised feedforward neural network whose inputs are the string tensions measured at each finger joint. The SenseGlove connects via BLE and uses the SGConnect protocol together with the SGCore API to stream multi-finger force and position data. Tracking data from the VIVE Trackers are obtained via USB through the SteamVR subsystem. Both streams are integrated via ROS nodes that publish standardized messages.
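The way the abstraction layer encapsulates haptic events can be illustrated with a small dispatch table that turns one device-agnostic cue into device-specific commands. This is a minimal sketch under stated assumptions, not the framework's actual implementation: the `HapticEvent` schema, the adapter functions, the 0 to 255 rumble range, the six-actuator glove and the per-finger force levels are all hypothetical, and in practice each returned dictionary would be converted into whatever ROS message the corresponding driver node expects.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class HapticEvent:
    """Hypothetical device-agnostic haptic cue (not the authors' message type)."""
    intensity: float   # normalized: 0.0 = off, 1.0 = maximum
    duration_ms: int


def _clamp01(x: float) -> float:
    """Keep a normalized value inside [0, 1]."""
    return min(max(x, 0.0), 1.0)


def to_dualsense(event: HapticEvent) -> Dict[str, int]:
    # Assumption: both rumble motors accept integer levels from 0 to 255.
    level = round(_clamp01(event.intensity) * 255)
    return {"left_motor": level, "right_motor": level, "duration_ms": event.duration_ms}


def to_vibrotactile_glove(event: HapticEvent, n_actuators: int = 6) -> Dict[str, object]:
    # Assumption: one percentage (0-100) per vibration motor; actuator count is hypothetical.
    pct = round(_clamp01(event.intensity) * 100)
    return {"intensities": [pct] * n_actuators, "duration_ms": event.duration_ms}


def to_force_glove(event: HapticEvent, n_fingers: int = 5) -> Dict[str, object]:
    # Assumption: a normalized force level per finger; real units depend on the SDK.
    return {"finger_force_levels": [_clamp01(event.intensity)] * n_fingers}


# Hypothetical registry keyed by the device labels used in the text (D1 omitted).
ADAPTERS: Dict[str, Callable[[HapticEvent], Dict[str, object]]] = {
    "D2": to_dualsense,
    "D3": to_vibrotactile_glove,
    "D4": to_force_glove,
}


def dispatch(event: HapticEvent, device_id: str) -> Dict[str, object]:
    """Translate one abstract event into the command format of the target device."""
    adapter = ADAPTERS.get(device_id)
    if adapter is None:
        raise ValueError(f"no haptic adapter registered for {device_id}")
    return adapter(event)
```

For example, `dispatch(HapticEvent(intensity=0.8, duration_ms=120), "D2")` sets both rumble motors to level 204. Keeping the mapping in one table means that supporting a new device only requires registering another adapter, while the rest of the pipeline keeps emitting the same abstract events.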
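Although D3 and D4 recognize gestures from very different signals, both can hand the same abstraction layer a discrete gesture label. The sketch below uses simple stand-ins for the trained models described above, so it should be read as an illustration only: for D3, a rule-based pinch test over the 21 hand landmarks (indices follow MediaPipe's hand model; the 0.25 threshold is an assumption) replaces the CNN classifier, and for D4, the forward pass of a tiny feedforward network uses hand-picked, untrained weights, one input per finger (a simplification of the per-joint tensions) and two hypothetical gesture labels.

```python
import math
from typing import List, Sequence, Tuple

# ---- D3: rule-based pinch test on camera landmarks ----
# Indices follow MediaPipe's 21-point hand model.
WRIST, THUMB_TIP, INDEX_TIP, MIDDLE_MCP = 0, 4, 8, 9


def is_pinch(landmarks: Sequence[Tuple[float, float, float]], threshold: float = 0.25) -> bool:
    """True when the thumb-index gap is small relative to palm length (threshold assumed)."""
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    palm = math.dist(landmarks[WRIST], landmarks[MIDDLE_MCP])  # scale reference
    if palm == 0.0:
        return False
    return math.dist(landmarks[THUMB_TIP], landmarks[INDEX_TIP]) / palm < threshold


# ---- D4: tiny feedforward network over per-finger tension values ----
def dense(x: Sequence[float], w: Sequence[Sequence[float]], b: Sequence[float]) -> List[float]:
    """Fully connected layer: y[j] = sum_i w[j][i] * x[i] + b[j]."""
    return [sum(wji * xi for wji, xi in zip(row, x)) + bj for row, bj in zip(w, b)]


def softmax(z: Sequence[float]) -> List[float]:
    m = max(z)  # subtract the maximum for numerical stability
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]


def mlp_classify(tensions, w1, b1, w2, b2, labels):
    """One ReLU hidden layer followed by a softmax output layer."""
    hidden = [max(0.0, h) for h in dense(tensions, w1, b1)]
    probs = softmax(dense(hidden, w2, b2))
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs


# Hypothetical, hand-picked weights (not trained): hidden unit 0 grows with total
# tension, hidden unit 1 with total slack; five inputs = one value per finger.
W1 = [[1.0] * 5, [-1.0] * 5]
B1 = [0.0, 2.5]
W2 = [[0.0, 1.0], [1.0, 0.0]]  # output 0 = "open" (slack), output 1 = "fist" (tension)
B2 = [0.0, 0.0]
LABELS = ["open", "fist"]
```

With normalized tensions of 0.9 on every finger, `mlp_classify` returns `"fist"`; with 0.1 on every finger it returns `"open"`. A real deployment would learn `W1`, `B1`, `W2` and `B2` from labelled glove recordings, as the supervised training mentioned above implies.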

4. Experimental Design and Methodology

4.1. Experimental Set-Up

4.1.1. System Configuration for XU-XE Scenarios

- 1U-1E: One User with One Equipment. In this configuration, a single user operated one device at a time, evaluating its standalone capabilities in performing teleoperation tasks.

- 1U-ME: One User with Multiple Equipment. In this configuration, a single user operated two or more devices simultaneously, requiring coordination and adaptability to perform teleoperation tasks.

  - D1 and D2: The Touch Device provided high-precision manipulation, while the DualSense controller was used for navigation or secondary object placement. This scenario tested the user’s ability to switch focus between detailed and generalized control.

  - D3 and D4: The bHaptics DK2 glove was used for tactile interaction, while the SenseGlove Nova handled force-feedback tasks. This combination assessed the user’s ability to manage and integrate two distinct haptic modalities.

- MU-ME: Multiple Users with Multiple Equipment. This configuration involved multiple users operating separate devices simultaneously to accomplish collaborative tasks. The objective was to evaluate coordination, shared control, and system performance in multi-user scenarios.

  - D1 and D3: One user employed the Touch Device for precision-based manipulation, while another used the bHaptics DK2 glove for tactile object interaction. This set-up tested complementary task distribution among users.

  - D2 and D4: The DualSense controller was used for navigation and coarse manipulation, while the SenseGlove Nova provided force-feedback capabilities for detailed adjustments. This scenario assessed collaborative efficiency in handling complex objects and trajectories.
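The XU-XE naming can be made explicit by deriving a session's label from which user operates which devices. The helper below is a hypothetical illustration rather than part of the described framework; `PAIRINGS` simply records the device pairs listed above, and the function rejects a device shared between users because the MU-ME scenarios assign separate equipment to each participant.

```python
from typing import Dict, Set

# Device pairings evaluated in the multi-device scenarios described above.
PAIRINGS = {
    "1U-ME": [("D1", "D2"), ("D3", "D4")],
    "MU-ME": [("D1", "D3"), ("D2", "D4")],
}


def scenario_label(assignment: Dict[str, Set[str]]) -> str:
    """Classify a session from a user -> set-of-devices map (hypothetical helper)."""
    active = {user: devs for user, devs in assignment.items() if devs}
    if not active:
        raise ValueError("at least one user must operate a device")
    devices = set().union(*active.values())
    if len(active) == 1:
        return "1U-1E" if len(devices) == 1 else "1U-ME"
    # MU-ME requires each user to hold separate equipment.
    if sum(len(d) for d in active.values()) != len(devices):
        raise ValueError("a device is shared between users; not covered by the XU-XE scheme")
    return "MU-ME"
```

For instance, `{"u1": {"D1", "D2"}}` is labelled `1U-ME`, while `{"u1": {"D1"}, "u2": {"D3"}}` is labelled `MU-ME`.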

4.1.2. Object Manipulation and 3D Trajectory Tasks

4.2. Participant Demographics and Selection

- Novice Users: Individuals with little or no prior experience with teleoperation or haptic devices;

- Intermediate Users: Participants with moderate familiarity, such as casual gamers or those with minimal robotics exposure;

- Expert Users: Professionals or researchers with significant experience in robotics, haptics, or related technologies.

4.3. Objective Performance Metrics

4.4. Subjective Experience Metrics

- Presence: Commonly referred to as “telepresence”, this is defined in the literature as the user’s illusion of being physically present in a mediated environment, an experiential phenomenon where the medium itself “disappears” from awareness [47].

- Engagement: This is defined as the degree of psychological investment, focus, and enjoyment a user experiences during a task [48]. We assessed engagement through self-reports and behavioral indicators such as persistence and error recovery.

- Control: This refers to the user’s perceived command over the teleoperation system, encompassing confidence, responsiveness, and synchronization of actions and feedback [49].

- Sensory integration: This denotes the cohesion of multisensory cues—visual, haptic, and proprioceptive—and their combined effect on perceptual realism [48].

- Cognitive load: This evaluates the mental effort and resources required to operate the system, particularly the effort needed to manage sensory input and control complexity [50].

4.5. Data Collection and Analysis Techniques

- To what extent did you feel immersed in the remote environment during the task?

- Did haptic feedback make you feel as if you were physically interacting with objects?

- How focused and attentive did you feel during the task?

- Did you enjoy the teleoperation experience using this configuration?

- How confident were you in your ability to control the robotic system?

- Did the system respond accurately and promptly to your commands?

- How naturally did the visual, haptic, and proprioceptive cues combine during tasks?

- Were you able to easily interpret the feedback from the device(s)?

- How mentally demanding did you find the task?

- Did you need to frequently pause or think carefully to continue the operation?
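The ten questions appear to map, two at a time and in order, onto the five dimensions defined in Section 4.4 (presence, engagement, control, sensory integration, cognitive load). Assuming that grouping and a 1 to 5 Likert scale, neither of which is stated explicitly here, per-dimension scores for one participant and configuration could be computed as in this sketch; `DIMENSIONS` and `score_dimensions` are hypothetical names.

```python
from statistics import mean
from typing import Dict, Sequence

# Assumed grouping: questions 1-2 -> presence, 3-4 -> engagement, 5-6 -> control,
# 7-8 -> sensory integration, 9-10 -> cognitive load.
DIMENSIONS = ("presence", "engagement", "control", "sensory_integration", "cognitive_load")


def score_dimensions(responses: Sequence[int], scale_max: int = 5) -> Dict[str, float]:
    """Average the two Likert items belonging to each QoE dimension."""
    expected = 2 * len(DIMENSIONS)
    if len(responses) != expected:
        raise ValueError(f"expected {expected} responses, got {len(responses)}")
    if any(not 1 <= r <= scale_max for r in responses):
        raise ValueError(f"responses must lie between 1 and {scale_max}")
    return {dim: float(mean(responses[2 * i:2 * i + 2])) for i, dim in enumerate(DIMENSIONS)}
```

Note that a higher cognitive-load score means a more demanding experience, so that dimension would have to be reversed before combining it with the other four into any overall QoE index.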

5. Results and Analysis

5.1. Objective Performance Results

5.1.1. Temporal Efficiency Across Device Configurations

5.1.2. Trajectory Similarity Across Scenarios

5.2. Subjective Experience Results

5.2.1. User Feedback on Presence and Engagement

5.2.2. Insights into Sensory Integration and Control

5.2.3. Cognitive Load Variations Across Devices

5.3. Comparative Analysis of Configurations

5.3.1. Performance Trade-Offs Between Configurations

5.3.2. Correlations Between Objective and Subjective QoE

6. Discussion

6.1. Implications for Device-Agnostic Teleoperation

6.2. Optimizing Robotics Learning Through QoE Metrics

6.3. Challenges in Balancing Objective and Subjective QoE

6.4. Applications in Industrial and Assistive Scenarios

7. Conclusions and Future Work

7.1. Summary of Findings

7.2. Contributions to Robotics and Haptics Research

7.3. Limitations of the Study

7.4. Directions for Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Hernandez-Gobertti, F.; Lozano, R.; Kousias, K.; Alay, O.; Griwodz, C.; Gomez-Barquero, D. Exploring Performance and User Experience in Haptic Teleoperation Systems: A Study on QoS/QoE Dynamics on Immersive Communications. In Proceedings of the 2025 IEEE 26th International Symposium on a World of Wireless, Mobile and Multimedia Networks (WoWMoM), Fort Worth, TX, USA, 27–30 May 2025; pp. 188–194.

- Dekker, I.; Kellens, K.; Demeester, E. Design and evaluation of an intuitive haptic teleoperation control system for 6-dof industrial manipulators. Robotics 2023, 12, 54.

- Gordon, D.F.; Christou, A.; Stouraitis, T.; Gienger, M.; Vijayakumar, S. Adaptive assistive robotics: A framework for triadic collaboration between humans and robots. R. Soc. Open Sci. 2023, 10, 221617.

- Huang, F.; Yang, X.; Yan, T.; Chen, Z. Telepresence augmentation for visual and haptic guided immersive teleoperation of industrial manipulator. ISA Trans. 2024, 150, 262–277.

- Oliveira, A.M.; de Araujo, I.B.Q. A Brief Overview of Teleoperation and Its Applications. In Proceedings of the 2023 15th IEEE International Conference on Industry Applications (INDUSCON), São Bernardo do Campo, Brazil, 22–24 November 2023; pp. 601–602.

- Lee, H.J.; Brell-Cokcan, S. Data-Driven Actuator Model-Based Teleoperation Assistance System. In Proceedings of the 2023 20th International Conference on Ubiquitous Robots (UR), Honolulu, HI, USA, 25–28 June 2023; pp. 552–558.

- Smith, A.; Doe, B. Gesture-Based Control Interface for Multi-Robot Teleoperation using ROS and Gazebo. Sci. Rep. 2024, 14, 30230.

- Trinitatova, D.; Tsetserukou, D. Study of the Effectiveness of a Wearable Haptic Interface with Cutaneous and Vibrotactile Feedback for VR-Based Teleoperation. IEEE Trans. Haptics 2023, 16, 463–469.

- Zhang, Z.; Qian, C. Wearable Teleoperation Controller with 2-DoF Robotic Arm and Haptic Feedback for enhanced interaction in virtual reality. Front. Neurorobot. 2023, 17, 1228587.

- Hejrati, M.; Mustalahti, P.; Mattila, J. Robust Immersive Bilateral Teleoperation of Dissimilar Systems with Enhanced Transparency and Sense of Embodiment. arXiv 2025, arXiv:2505.14486.

- Black, D.G.; Andjelic, D.; Salcudean, S.E. Evaluation of communication and human response latency for (human) teleoperation. IEEE Trans. Med. Robot. Bionics 2024, 6, 53–63.

- Si, W.; Wang, N.; Yang, C. Design and quantitative assessment of teleoperation-based human–robot collaboration method for robot-assisted sonography. IEEE Trans. Autom. Sci. Eng. 2024, 22, 317–327.

- Liu, S.; Xu, X.; Wang, Z.; Yang, D.; Jin, Z.; Steinbach, E. Quality of task perception based performance optimization of time-delayed teleoperation. In Proceedings of the 2023 32nd IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Busan, Republic of Korea, 28–31 August 2023; pp. 1968–1974.

- Pacchierotti, C.; Prattichizzo, D. Cutaneous/tactile haptic feedback in robotic teleoperation: Motivation, survey, and perspectives. IEEE Trans. Robot. 2023, 40, 978–998.

- Ghasemi, A.; Yousefi, K.; Yazdankhoo, B.; Beigzadeh, B. Cost-effective Haptic Teleoperation Framework: Design and Implementation. In Proceedings of the 2023 11th RSI International Conference on Robotics and Mechatronics (ICRoM), Tehran, Iran, 19–21 December 2023; pp. 253–258.

- Kamtam, S.B.; Lu, Q.; Bouali, F.; Haas, O.C.; Birrell, S. Network Latency in Teleoperation of Connected and Autonomous Vehicles: A Review of Trends, Challenges, and Mitigation Strategies. Sensors 2024, 24, 3957.

- Scott, L.; Liu, T.; Wu, L. A Low-Cost Teleoperable Surgical Robot with a Macro-Micro Structure and a Continuum Tip for Open-Source Research. arXiv 2024, arXiv:2405.16084.

- Galarza, B.R.; Ayala, P.; Manzano, S.; Garcia, M.V. Virtual reality teleoperation system for mobile robot manipulation. Robotics 2023, 12, 163.

- Esaki, H.; Sekiyama, K. Immersive Robot Teleoperation Based on User Gestures in Mixed Reality Space. Sensors 2024, 24, 5073.

- Su, Y.P.; Chen, X.Q.; Zhou, C.; Pearson, L.H.; Pretty, C.G.; Chase, J.G. Integrating virtual, mixed, and augmented reality into remote robotic applications: A brief review of extended reality-enhanced Robotic systems for Intuitive Telemanipulation and Telemanufacturing tasks in Hazardous conditions. Appl. Sci. 2023, 13, 12129.

- Ahmed, M.; Daksha, L.; Kahar, V.; Mahavar, N.; Abbas, Q.; Kumar, R.; Kherani, A.; Lall, B. Towards characterizing feasibility of Edge driven split-control in Bilateral Teleoperation of Robots. Wirel. Pers. Commun. 2024, 2024, 1–26.

- Charpentier, V.; Slamnik-Kriještorac, N.; Limani, X.; Pinheiro, J.F.N.; Marquez-Barja, J. Demonstrating Situational Awareness of Remote Operators with Edge Computing and 5G Standalone. In Proceedings of the IEEE INFOCOM 2024—IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Vancouver, BC, Canada, 20 May 2024; pp. 1–2.

- Najm, A.; Banakou, D.; Michael-Grigoriou, D. Development of a Modular Adjustable Wearable Haptic Device for XR Applications. Virtual Worlds 2024, 3, 436–458.

- Frisoli, A.; Leonardis, D. Wearable haptics for virtual reality and beyond. Nat. Rev. Electr. Eng. 2024, 1, 666–679.

- Kuang, L.; Chinello, F.; Giordano, P.R.; Marchal, M.; Pacchierotti, C. Haptic Mushroom: A 3-DoF shape-changing encounter-type haptic device with interchangeable end-effectors. In Proceedings of the 2023 IEEE World Haptics Conference (WHC), Delft, The Netherlands, 10–13 July 2023; pp. 467–473.

- van Wegen, M.; Herder, J.L.; Adelsberger, R.; Pastore-Wapp, M.; Van Wegen, E.E.; Bohlhalter, S.; Nef, T.; Krack, P.; Vanbellingen, T. An overview of wearable haptic technologies and their performance in virtual object exploration. Sensors 2023, 23, 1563.

- Zheng, C.; Wang, K.; Gao, S.; Yu, Y.; Wang, Z.; Tang, Y. Design of multi-modal feedback channel of human–robot cognitive interface for teleoperation in manufacturing. J. Intell. Manuf. 2024, 2024, 1–21.

- Cerón, J.C.; Sunny, M.S.H.; Brahmi, B.; Mendez, L.M.; Fareh, R.; Ahmed, H.U.; Rahman, M.H. A novel multi-modal teleoperation of a humanoid assistive robot with real-time motion mimic. Micromachines 2023, 14, 461.

- Wang, Z.; Mei, F.; Xu, X.; Steinbach, E. Towards subjective experience prediction for time-delayed teleoperation with haptic data reduction. In Proceedings of the 2022 31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Napoli, Italy, 29 August–2 September 2022; pp. 129–134.

- Woźniak, M.; Ari, I.; De Tommaso, D.; Wykowska, A. The influence of autonomy of a teleoperated robot on user’s objective and subjective performance. In Proceedings of the 2024 33rd IEEE International Conference on Robot and Human Interactive Communication (ROMAN), Pasadena, CA, USA, 26–30 August 2024; pp. 1950–1956.

- Sankar, G.; Djamasbi, S.; Li, Z.; Xiao, J.; Buchler, N. Systematic Literature Review on the User Evaluation of Teleoperation Interfaces for Professional Service Robots. In Proceedings of the International Conference on Human-Computer Interaction, Copenhagen, Denmark, 23–28 July 2023; pp. 66–85.

- Wan, Y.; Sun, J.; Peers, C.; Humphreys, J.; Kanoulas, D.; Zhou, C. Performance and usability evaluation scheme for mobile manipulator teleoperation. IEEE Trans. Human-Mach. Syst. 2023, 53, 844–854.

- Tavares, A.; Silva, J.L.; Ventura, R. Physiologically attentive user interface for improved robot teleoperation. In Proceedings of the 28th International Conference on Intelligent User Interfaces, Sydney, Australia, 27–31 March 2023; pp. 776–789.

- Schmaus, P.; Batti, N.; Bauer, A.; Beck, J.; Chupin, T.; Den Exter, E.; Grabner, N.; Köpken, A.; Lay, F.; Sewtz, M.; et al. Toward Multi User Knowledge Driven Teleoperation of a Robotic Team with Scalable Autonomy. In Proceedings of the 2023 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Honolulu, HI, USA, 1–4 October 2023; pp. 867–873.

- Black, D.; Nogami, M.; Salcudean, S. Mixed reality human teleoperation with device-agnostic remote ultrasound: Communication and user interaction. Comput. Graph. 2024, 118, 184–193.

- Guhl, J.; Tung, S.; Kruger, J. Concept and architecture for programming industrial robots using augmented reality with mobile devices like microsoft HoloLens. In Proceedings of the 2017 22nd IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Limassol, Cyprus, 12–15 September 2017; pp. 1–4.

- Hu, B.H.; Krausz, N.E.; Hargrove, L.J. A Novel Method for Bilateral Gait Segmentation Using a Single Thigh-Mounted Depth Sensor and IMU. In Proceedings of the 2018 7th IEEE International Conference on Biomedical Robotics and Biomechatronics (Biorob), Enschede, The Netherlands, 26–29 August 2018; pp. 807–812.

- Wei, X.; Wu, D.; Zhou, L.; Guizani, M. Cross-modal communication technology: A survey. Fundam. Res. 2023; in press.

- Hazarika, A.; Rahmati, M. Towards an Evolved Immersive Experience: Exploring 5G- and Beyond-Enabled Ultra-Low-Latency Communications for Augmented and Virtual Reality. Sensors 2023, 23, 3682.

- Franco, E. Combined Adaptive and Predictive Control for a Teleoperation System with Force Disturbance and Input Delay. Front. Robot. AI 2016, 3, 48.

- Kim, C.; Lee, D.Y. Adaptive Model-Mediated Teleoperation for Tasks Interacting with Uncertain Environment. IEEE Access 2021, 9, 128188–128201.

- Demonstration Video from iTEAM Research Institute. Available online: https://youtu.be/QMck8VuOr-s (accessed on 15 May 2025).

- Odoh, G.; Landowska, A.; Crowe, E.M.; Benali, K.; Cobb, S.; Wilson, M.L.; Maior, H.A.; Kucukyilmaz, A. Performance metrics outperform physiological indicators in robotic teleoperation workload assessment. Sci. Rep. 2024, 14, 30984.

- Tugal, H.; Tugal, I.; Abe, F.; Sakamoto, M.; Shirai, S.; Caliskanelli, I.; Skilton, R. Operator Expertise in Bilateral Teleoperation: Performance, Manipulation, and Gaze Metrics. Electronics 2025, 14, 1923.

- Triantafyllidis, E.; Hu, W.; McGreavy, C.; Li, Z. Metrics for 3D Object Pointing and Manipulation in Virtual Reality. arXiv 2021, arXiv:2106.06655.

- Poignant, A.; Morel, G.; Jarrassé, N. Teleoperation of a robotic manipulator in peri-personal space: A virtual wand approach. arXiv 2024, arXiv:2406.09309.

- Lee, K.M. Presence, explicated. Commun. Theory 2004, 14, 27–50.

- Xavier, R.; Silva, J.L.; Ventura, R.; Jorge, J. Pseudo-haptics Survey: Human–Computer Interaction in Extended Reality & Teleoperation. arXiv 2024, arXiv:2406.01102.

- Zhang, D.; Tron, R.; Khurshid, R.P. Haptic Feedback Improves Human–Robot Agreement and User Satisfaction in Shared-Autonomy Teleoperation. arXiv 2021, arXiv:2103.03453.

- Zhong, N.; Hauser, K. Attentiveness Map Estimation for Haptic Teleoperation of Mobile Robots. IEEE Robot. Autom. Lett. 2024, 9, 2152–2159.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hernandez-Gobertti, F.; Kudyk, I.D.; Lozano, R.; Nguyen, G.T.; Gomez-Barquero, D. Immersive Teleoperation via Collaborative Device-Agnostic Interfaces for Smart Haptics: A Study on Operational Efficiency and Cognitive Overflow for Industrial Assistive Applications. Sensors 2025, 25, 3993. https://doi.org/10.3390/s25133993

Hernandez-Gobertti F, Kudyk ID, Lozano R, Nguyen GT, Gomez-Barquero D. Immersive Teleoperation via Collaborative Device-Agnostic Interfaces for Smart Haptics: A Study on Operational Efficiency and Cognitive Overflow for Industrial Assistive Applications. Sensors. 2025; 25(13):3993. https://doi.org/10.3390/s25133993

Chicago/Turabian Style: Hernandez-Gobertti, Fernando, Ivan D. Kudyk, Raul Lozano, Giang T. Nguyen, and David Gomez-Barquero. 2025. "Immersive Teleoperation via Collaborative Device-Agnostic Interfaces for Smart Haptics: A Study on Operational Efficiency and Cognitive Overflow for Industrial Assistive Applications" Sensors 25, no. 13: 3993. https://doi.org/10.3390/s25133993

APA Style: Hernandez-Gobertti, F., Kudyk, I. D., Lozano, R., Nguyen, G. T., & Gomez-Barquero, D. (2025). Immersive Teleoperation via Collaborative Device-Agnostic Interfaces for Smart Haptics: A Study on Operational Efficiency and Cognitive Overflow for Industrial Assistive Applications. Sensors, 25(13), 3993. https://doi.org/10.3390/s25133993