Abstract

This paper presents the design and evaluation of a novel soft pneumatic actuator with two independent bending chambers, enabling independent actuation of the finger joints for rehabilitation. The dual-chamber configuration provides flexibility for applications that require customized bending profiles. To measure the bending angles of the finger joints in real time, a camera-based system is employed, in which a deep learning detection model localizes the joints and estimates their bending angles. This approach is a non-intrusive alternative to hardware-based measurement methods that requires no sensors on the hand, reducing the complexity and wiring typically associated with wearable devices. Experimental results show that the proposed actuator achieves bending angles of 105 degrees at the metacarpophalangeal (MCP) joint and 95 degrees at the proximal interphalangeal (PIP) joint, as well as a fingertip gripping force of 9.3 N. The vision system measures bending angles with a precision of 98%, indicating potential applications in fields such as rehabilitation and human–robot interaction.

1. Introduction

Soft robotics has gained significant attention for its potential to transform robotic applications in fields such as rehabilitation, human–robot interaction, and biomedical engineering. Unlike traditional rigid robots, soft robots use flexible materials and actuation methods to achieve bio-inspired movements, which makes them well suited to applications requiring safe, adaptable interaction with humans and delicate objects [1]. Among the available actuation strategies, pneumatic actuation has emerged as a promising approach because of its compliance, lightweight structure, and ability to produce continuous, smooth deformations [2]. Current soft pneumatic technology can be improved by enhancing control accuracy and simplifying feedback mechanisms through novel designs and advanced sensing methods, leading to greater functionality and efficiency [3]. In this regard, Chen et al. [4] reviewed recent developments in soft actuators, including pneumatic actuators, with a focus on optimizing soft robotic designs for enhanced performance. Yap et al. [5] explored high-force printable pneumatics for soft robotic applications, emphasizing novel manufacturing techniques. Soliman et al. [6] studied the effects of positive and vacuum pressure on the inclination angle of a soft pneumatic actuator, modeling the actuator’s work envelope to improve design efficiency and functional performance. Elsayed et al. [7] investigated design optimization strategies using finite element analysis to enhance soft pneumatic actuator performance. Owing to their safe interaction and compliance, pneumatic actuators have gained increasing attention for use in wearable devices. For example, Ma et al. [8] designed a reconfigurable exomuscle system employing pneumatic artificial muscles to assist hip flexion and ankle plantarflexion. Their system allows configuration switching and parameter tuning to match the user’s gait, and it achieved notable reductions in metabolic cost during walking.

Despite the advantages of soft robots, their inherent nonlinearity and compliance make accurate estimation of their instantaneous angular position a challenging and ongoing area of research [9]. For soft bending actuators, traditional methods for measuring bending angles rely on embedded sensors such as flex sensors [10], electromagnetic tracking systems [11], or inertial measurement units [12]. While these approaches provide real-time feedback, they add complexity and weight and raise durability concerns. To circumvent these issues, vision-based measurement systems have been explored as non-intrusive alternatives. By using a camera to track actuator movement, such systems eliminate additional wiring and sensor integration, simplifying the design and enhancing reliability [13]. Recent studies have explored vision-based sensing for soft robotics extensively. Hofer et al. [14] developed a vision-based sensing approach for a spherical soft robotic arm, using deep learning to enhance real-time pose estimation. Wang et al. [15] investigated deep-learning-based vision systems for real-time proprioception in soft robots, achieving precise shape sensing through high-resolution optical tracking. Zhang et al. [16] developed a finite-element-based vision sensing method to estimate external forces acting on soft robots, offering insights into force perception and interaction modeling. Li et al. [17] applied vision-based reinforcement learning to soft robot manipulators, achieving precise tip trajectory tracking through real-time visual feedback. Ogunmolu et al. [18] investigated vision-based control of a soft robot designed for maskless head and neck cancer radiotherapy, demonstrating its applicability in medical robotics. Werner et al. [19] proposed a vision-based proprioceptive sensing system for soft actuators that improves deformation tracking accuracy. Oguntosin et al. [20] introduced vision algorithms for sensing soft robots, focusing on geometric parameter estimation and motion control. Wu et al. [21] developed a vision-based tactile intelligence system for soft robotic metamaterials, improving the detection of external forces and environmental interactions.

After reviewing studies in this field, the authors were motivated to create a system that continuously captures the instantaneous angular and linear positions of finger segments, even when the hand is covered by a soft rehabilitation assistive device. (Although the device developed in this study is technically a soft assistive device covering only the index finger and thumb, the term “glove” is used throughout this paper for ease of reference.) Such a system enables accurate finger joint localization and dynamic, real-time tracking of finger bending angles. By processing motion data in real time, the system can adapt the level of assistance to individual rehabilitation needs. Some previous studies rely on predefined gestures or fixed relationships between pressure and bending angle. However, because hand injuries vary among patients, resistance to bending and extension can differ significantly, resulting in inconsistent finger movements and bending angles for the same input pressure. This poses a major challenge for tasks such as gaming or lifting, where precise control over finger opening and closing is essential. In this context, the system must reliably deliver sufficient bending angles and fingertip force to maintain effective task performance.

This paper advances real-time motion analysis of the hand coupled with a soft pneumatic actuator. First, a novel pneumatic actuator with independent joint actuation is designed to support the index finger. The design is evaluated to ensure that the fingertip force and joint bending angles closely resemble those of a natural finger, avoiding injury or fatigue for the user. Independent actuation of the two joints allows therapists to rehabilitate each joint separately and, as in a natural finger, lets each joint bend individually.

Next, a non-intrusive vision-based method is proposed for dynamic joint localization in a hand occluded by a glove. Although the joints are not visible, the method localizes them accurately. As reported by Ji et al. [20], when an actuator bends and extends repeatedly, bending sensors exhibit a drift in overall electrical resistance for the same deformation, which can reduce estimation accuracy over time. A vision system captures the overall bending of the finger without such drift. Because the proposed method is entirely non-intrusive and requires no wires on the hand, it also eliminates constraints related to wiring and disconnections.

The setup described in this paper provides a solid foundation for real-time motor–cognitive hand rehabilitation systems by implementing communication protocols in which all experimental components, except for the camera, are controlled by a microcomputer wirelessly connected to a PC. The use of multithreading techniques along with a server–client protocol ensures seamless and real-time communication. While not a direct contribution of this paper, this capability could pave the way for personalized training systems, wherein assistance is dynamically adjusted based on user input.

2. Description of the Soft Pneumatic Actuator

2.1. Design

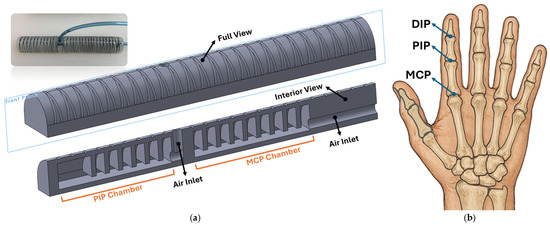

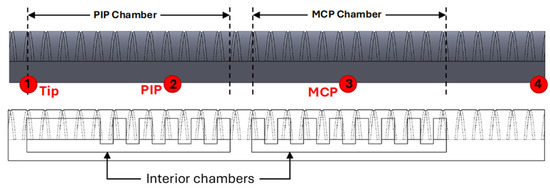

The soft pneumatic actuator, designed in SolidWorks 2023 SP3.0, is shown in Figure 1a. The actuator is composed of two independent chambers that inflate when pressurized. The chambers are designed to be mounted over the MCP and PIP joints of the index finger (see Figure 1b), providing the same degrees of freedom as natural finger bending. The design should not only provide enough grasp force to meet the 7.3 N requirement for hand rehabilitation [11] but also produce bending angles similar to those of the finger joints within a safe internal pressure range. Many researchers have presented soft actuators with uniform (non-segmented) chambers [22]. However, a segmented chamber design offers finer control, which is especially valuable in applications requiring gradual, precise movement, such as rehabilitation. In a continuous chamber, pressure produces a single, expansive force, whereas segmented chambers distribute this force across individual sections. This segmented structure introduces internal boundaries that naturally “buffer” and stabilize the motion, reducing the risk of overshooting or uncontrolled bending.

Figure 1.

(a) CAD design of the soft pneumatic actuator; (b) metacarpophalangeal (MCP), proximal interphalangeal (PIP), and distal interphalangeal (DIP) finger joints.

The term “buffer” refers to the way each segment absorbs and moderates the pressure applied across the chamber. Rather than the entire chamber expanding uniformly, each segment expands somewhat independently within its own boundaries, creating a damping effect. This damped expansion smooths the actuator’s response to pressure changes, making it more gradual and preventing sudden or excessive bending. Each segment acts as a localized control point that allows finer, incremental adjustment, which is essential for guiding safe, controlled motion during rehabilitation exercises.

The actuator design incorporates two chambers to produce the bending motions of the MCP and PIP joints (see Figure 1a). Van Zwieten et al. [23] provided a detailed analysis of the interdependence between the distal interphalangeal (DIP) and proximal interphalangeal (PIP) joints, demonstrating that their movements are not independent but are governed by a complex biomechanical relationship. Through an analytical model based on anatomical structures and kinematics, they showed that DIP flexion is inherently linked to PIP flexion through the mechanical constraints imposed by the extensor assembly, including the spiral fibers and lateral bundles. As the PIP joint flexes, the lateral bands of the extensor mechanism slide distally while being suspended by the spiral fibers, which in turn facilitates DIP flexion. This yields a predictable mathematical correlation in which the DIP joint angle can be expressed as a function of the PIP joint angle. Accordingly, instead of assigning a separate chamber to the DIP joint, the proposed actuator extends the PIP chamber toward the fingertip so that both joints share a single chamber. This design ensures that bending of the PIP joint naturally induces bending of the DIP joint. If, instead, the DIP joint were held straight while the PIP joint flexed, it would experience unnecessary strain, leading to increased pressure and fatigue in the joint. The extension of the PIP chamber is a uniform, non-segmented structure (see Figure 1a), which further increases the generated force and improves performance during gripping tasks.
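To make the coupling concrete, the DIP angle can be approximated from the PIP angle. The sketch below assumes a linear ratio θ_DIP ≈ (2/3)·θ_PIP, an approximation widely used in hand-kinematics modeling; it is an illustrative assumption, not the analytical model of Van Zwieten et al. [23], and the function name is hypothetical.

```python
def dip_from_pip(theta_pip_deg: float, ratio: float = 2.0 / 3.0) -> float:
    """Estimate DIP flexion (degrees) from PIP flexion under a linear coupling.

    Assumes theta_DIP = ratio * theta_PIP; the default 2/3 is a common
    hand-modeling approximation, used here only for illustration.
    """
    if theta_pip_deg < 0:
        raise ValueError("PIP flexion angle must be non-negative")
    return ratio * theta_pip_deg


if __name__ == "__main__":
    for pip in (0.0, 45.0, 90.0):
        print(pip, round(dip_from_pip(pip), 1))
```

Under this assumption, a 90° PIP flexion corresponds to roughly 60° of DIP flexion, which illustrates how much strain a design that held the DIP joint straight would impose.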

The actuator has a hemi-circular cross-section, which allows more flexible and efficient bending. Polygerinos et al. [22] compared actuators with rectangular, hemi-circular, and circular cross-sections and found the rectangular shape less suitable, since it required the highest pressure to achieve the same bending angle, whereas the hemi-circular shape was found to bend more easily.

2.2. Fabrication Process

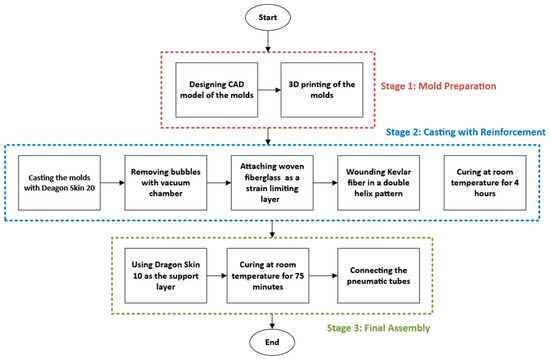

The flowchart of the manufacturing process of the soft pneumatic actuator is presented in Figure 2. The first step was to design the molds in SolidWorks, with two hemi-circular chambers, each segmented to allow independent control and bending at the joints. The mold geometry was modeled to match the actuator dimensions listed in Table 1. Once the design was complete, it was exported as stereolithography (STL) files for 3D printing.

Figure 2.

Soft pneumatic actuator manufacturing flowchart.

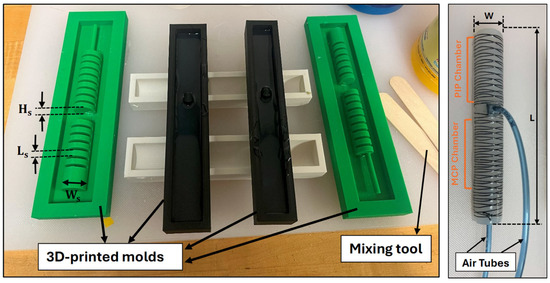

With the molds ready, silicone casting began. Dragon Skin 20 silicone (Smooth-On, Inc., Macungie, PA, USA) [24] was chosen for the chambers because of its flexibility and durability. The silicone was prepared by thoroughly mixing its two components. To remove air bubbles, which could otherwise weaken the final product, the mixed silicone was degassed in a vacuum chamber (Model PB-4CFM-3T, P PBAUTOS, Taizhou, China). The silicone was then carefully poured into the molds, ensuring that it filled all cavities (see Figure 3), and left to cure at room temperature for 4 h to achieve the desired mechanical properties.

Figure 3.

Soft pneumatic actuator molding and fabrication.

Once the silicone was fully cured, a woven fiberglass sheet was attached to the bottom side of the actuator as a strain-limiting layer. Kevlar fiber was also wound in a double-helix pattern along the length of the actuator body to limit radial expansion, so that the actuator bends when pressurized. The end face, shown in Figure 1a, did not require an additional support layer, since the actuator was designed with a sufficient gap between the inner and outer walls to prevent rupture. To stiffen the actuator and protect the wound Kevlar fiber, Dragon Skin 10 (Smooth-On, Inc., Macungie, PA, USA) was applied as a thin yet stretchable cover layer over the entire actuator and left to cure at room temperature for 75 min. Finally, the pneumatic lines were connected to each segmented chamber. The specifications of the actuator, including material and dimensions, are provided in Table 1.

Table 1.

Specifications of the soft actuator.

3. Experimental Setup

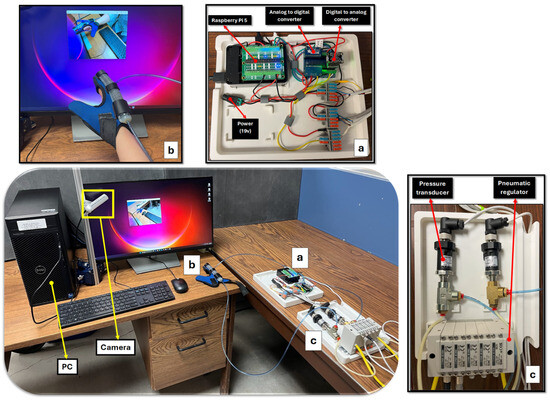

A schematic of the experimental components is shown in Figure 4. A Raspberry Pi 5 acts as an interface unit that controls the pressure regulators and receives data from the T2 pressure transducers (Ashcroft Inc., Stratford, CT, USA) (see Figure 4a), while the main computational tasks are handled by the PC (see Figure 4b). The Raspberry Pi 5 has sufficient processing power to handle multiple input and output operations, including control of the pneumatic actuator, and its GPIO (General Purpose Input/Output) pins facilitate integration with external hardware components such as pressure sensors. To measure the bending angles of the fingers, an Intel RealSense D455f camera is used. This camera was chosen for its high precision and its ability to capture detailed 3D images at up to 90 frames per second (fps). The T2 pressure transducers measure the internal pressure of the chambers. An ITV0030-3UMN electro-pneumatic regulator (SMC Corporation, Tokyo, Japan) controls the air pressure supplied to the pneumatic actuators (see Figure 4c). This regulator has a control range of 0.001 to 0.5 MPa and a response time of 0.1 s (without load), so pressure adjustments can be made quickly and reliably in real-time applications. To send analog signals from the Raspberry Pi 5 to the electro-pneumatic regulator, an MCP4728 quad-channel Digital-to-Analog Converter (DAC) (Microchip Technology Inc., Chandler, AZ, USA) is used; its 12-bit resolution provides 4096 discrete output voltage levels, allowing the smooth and accurate control needed for delicate rehabilitation movements. For the Raspberry Pi 5 to receive analog signals from the pressure transducers, an ADS1115 16-bit Analog-to-Digital Converter (ADC) (Texas Instruments Inc., Dallas, TX, USA) is employed; it offers four single-ended or two differential input channels and a programmable data rate of up to 860 samples per second.

Figure 4.

Experimental setup layout: (a) data acquisition system including Raspberry Pi 5, DAC, and ADC; (b) PC as the main processing unit; (c) pneumatic modules comprising regulators and pressure transducers.
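The converter resolutions quoted above translate directly into voltage steps. The sketch below is a minimal illustration, assuming the MCP4728 uses its 3.3 V supply as reference with unity gain (so V_out = V_ref · code / 4096) and the ADS1115 is set to its ±4.096 V full-scale range; these settings are assumptions, not values reported for this setup, and the function names are hypothetical. It performs only the arithmetic and does not communicate with the hardware.

```python
DAC_BITS = 12  # MCP4728 resolution
ADC_BITS = 16  # ADS1115 resolution (signed two's-complement result)


def volts_to_dac_code(volts: float, vref: float = 3.3) -> int:
    """Convert a desired DAC output voltage to a 12-bit code.

    Assumes V_out = vref * code / 4096 (internal gain x1); the code is
    clamped to the valid range 0..4095.
    """
    full_scale = 1 << DAC_BITS  # 4096 levels
    code = round(volts / vref * full_scale)
    return max(0, min(full_scale - 1, code))


def dac_code_to_volts(code: int, vref: float = 3.3) -> float:
    """Inverse mapping: nominal output voltage for a 12-bit code."""
    return vref * code / (1 << DAC_BITS)


def adc_counts_to_volts(raw: int, fsr: float = 4.096) -> float:
    """Convert a signed ADS1115 reading to volts for a +/-fsr input range."""
    return raw * fsr / (1 << (ADC_BITS - 1))  # one LSB = fsr / 32768


if __name__ == "__main__":
    code = volts_to_dac_code(1.2)
    print(code, round(dac_code_to_volts(code), 4))
    print(adc_counts_to_volts(8000))
```

Under these assumptions, one DAC step is about 0.81 mV and one ADC count is 125 µV.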

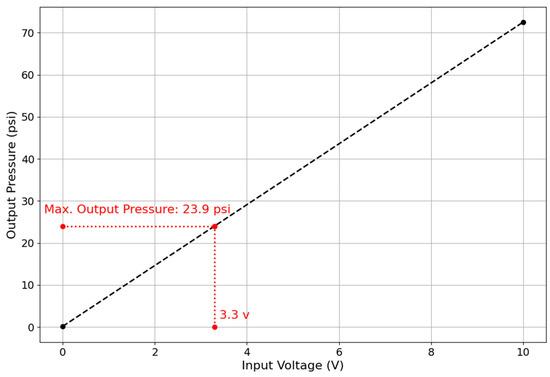

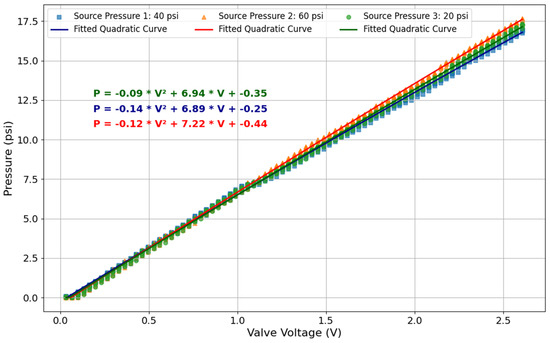

As shown in Figure 5, with a 3.3 V input, the ITV0030-3UMN pneumatic regulator delivers a maximum output pressure of 23.9 psi. Consequently, any pressure demand above 23.9 psi saturates at this value. Below this limit, the regulator output depends on the input voltage, as shown in Figure 6, where the measured output pressure is plotted against input voltage for different source pressures (20, 40, and 60 psi).

Figure 5.

Input and output range for the SMC ITV0030-3UMN pneumatic regulator.

Figure 6.

Predictive model for estimating regulator output pressure based on input voltage given different source pressures.
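The 23.9 psi ceiling is consistent with a simple linear command map. Assuming the ITV0030-3UMN accepts a 0–10 V command spanning its 0.5 MPa (≈72.5 psi) full scale, a 3.3 V command corresponds to 0.33 × 72.5 ≈ 23.9 psi, and the output can never exceed the source pressure. The sketch below encodes only that idealized behaviour; the 0–10 V span is an assumption inferred from these numbers, the function name is hypothetical, and the measured curves in Figure 6 are modeled with a quadratic fit rather than this line.

```python
PSI_PER_MPA = 145.0377  # 1 MPa expressed in psi


def nominal_output_psi(command_v: float, supply_psi: float,
                       v_full_scale: float = 10.0,
                       p_full_scale_mpa: float = 0.5) -> float:
    """Idealized regulator output for a command voltage.

    Assumes a linear 0..v_full_scale command mapped onto 0..p_full_scale_mpa;
    the output is additionally capped by the available source pressure.
    """
    p_full_scale_psi = p_full_scale_mpa * PSI_PER_MPA   # about 72.5 psi
    command_v = min(max(command_v, 0.0), v_full_scale)  # clamp to valid input
    return min(command_v / v_full_scale * p_full_scale_psi, supply_psi)


if __name__ == "__main__":
    for supply in (20.0, 40.0, 60.0):  # source pressures used in Figure 6
        print(supply, round(nominal_output_psi(3.3, supply), 1))
```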

To verify that the pressure estimated from the input voltage is reliable, ten pressure readings were collected at each voltage step for each constant source pressure (see Figure 6), and a quadratic curve was fitted to all the data points. Given the regulator’s precision in maintaining the set pressure and the established relationship between regulator voltage and output pressure (validated against the pressure transducer readings), the pressure transducer can be removed to simplify the system. Because the regulator’s response to the input voltage is well characterized, the output pressure can be estimated reliably from the applied voltage, reducing system complexity while maintaining accurate pressure control.
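The calibration step can be sketched with NumPy’s `polyfit`: fit pressure as a quadratic function of command voltage (for example, one fit per source pressure), then invert the fit to find the voltage for a desired pressure. The data below are synthetic placeholders and the function names are hypothetical; the coefficients fitted in the study are not reproduced here.

```python
import numpy as np


def fit_regulator_curve(voltages, pressures, degree: int = 2) -> np.ndarray:
    """Least-squares polynomial p(V); coefficients are ordered highest power first."""
    return np.polyfit(np.asarray(voltages, float), np.asarray(pressures, float), degree)


def voltage_for_pressure(coeffs, target_psi: float,
                         v_min: float = 0.0, v_max: float = 3.3) -> float:
    """Invert the fitted curve: smallest real root of p(V) = target in [v_min, v_max]."""
    shifted = np.array(coeffs, dtype=float)
    shifted[-1] -= target_psi                      # solve p(V) - target = 0
    roots = np.roots(shifted)
    real = roots[np.isclose(roots.imag, 0.0)].real
    valid = real[(real >= v_min) & (real <= v_max)]
    if valid.size == 0:
        raise ValueError("target pressure is outside the reachable command range")
    return float(valid.min())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    steps = np.repeat(np.linspace(0.3, 3.3, 11), 10)   # ten readings per voltage step
    readings = 0.5 * steps**2 + 6.0 * steps + 0.2 + rng.normal(0.0, 0.05, steps.size)
    coeffs = fit_regulator_curve(steps, readings)
    print("fitted coefficients:", np.round(coeffs, 3))
    print("command for 10 psi:", round(voltage_for_pressure(coeffs, 10.0), 3), "V")
```

Inverting the fit in software is what allows the transducer to be dropped: the controller computes the command voltage directly from the requested pressure.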

4. Bending Angle Measurement

4.1. Joint Localization

To measure the bending angles of a finger coupled with the soft actuator while it is fully covered with a glove, the YOLOv8s Pose model is utilized to detect specific keypoints corresponding to the wrist, MCP, PIP, and the fingertip, as shown in Figure 7 and Figure 8. This method provides a non-intrusive, robust solution for monitoring joint movements without requiring direct visibility of the hand.

Figure 7.

Keypoint locations on the soft actuator. Keypoint 1 is located at the fingertip, Keypoint 2 at the PIP joint, Keypoint 3 at the MCP joint, and Keypoint 4 at the wrist.

Figure 8.

Expected keypoints to be detected by the YOLOv8s Pose model.

With soft gloves, a key challenge is that the finger is fully occluded by the glove, which makes the underlying joints invisible to conventional methods such as MediaPipe [25]. Despite this occlusion, we show that careful annotation and a robust pose estimation network can predict the four keypoints of the finger (wrist, MCP, PIP, and fingertip) purely from the external glove shape. Our approach adopts the YOLOv8s Pose framework to detect the glove-covered finger in real time and simultaneously infer its hidden keypoints.

4.2. Model Interpretation

The YOLOv8s Pose model consists of three key components: (i) a backbone for feature extraction, (ii) a neck for multi-scale feature fusion, and (iii) a pose head dedicated to bounding box and keypoint predictions. This architecture follows the advancements of previous YOLO versions while incorporating an anchor-free detection approach for improved accuracy and efficiency.

The backbone extracts hierarchical features, capturing the glove’s deformation due to finger movements and pressure variations. The neck fuses multi-scale spatial cues, enabling the detection of joint locations even when the glove fully obscures the hand. The pose head predicts bounding boxes and refines keypoints by learning spatial correlations between glove wrinkles, material stretching, and underlying finger positions.

4.3. Bending Angle Calculations

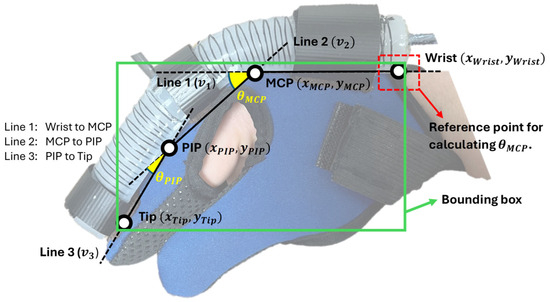

Using the detected keypoints for the wrist, MCP, PIP, and the tip, we compute the bending angles at the MCP and PIP joints based on the relative orientation between connected points, as shown in Figure 9.

Figure 9.

Relative orientation between connected points of the index finger.

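The bending angle at a joint is determined from the angle between two adjacent segment vectors, computed with the dot-product (cosine) formula. Let each segment be represented as a vector in image coordinates, where $\mathbf{P}_{\mathrm{W}}$, $\mathbf{P}_{\mathrm{MCP}}$, $\mathbf{P}_{\mathrm{PIP}}$, and $\mathbf{P}_{\mathrm{T}}$ denote the detected wrist, MCP, PIP, and fingertip keypoints:

$$\mathbf{v}_1 = \mathbf{P}_{\mathrm{MCP}} - \mathbf{P}_{\mathrm{W}} \tag{1}$$

$$\mathbf{v}_2 = \mathbf{P}_{\mathrm{PIP}} - \mathbf{P}_{\mathrm{MCP}} \tag{2}$$

$$\mathbf{v}_3 = \mathbf{P}_{\mathrm{T}} - \mathbf{P}_{\mathrm{PIP}} \tag{3}$$

The bending angles at the MCP and PIP joints are the angles between adjacent segments. For the MCP joint bending angle $\theta_{\mathrm{MCP}}$, the angle between Line 1 ($\mathbf{v}_1$) and Line 2 ($\mathbf{v}_2$) is given by

$$\theta_{\mathrm{MCP}} = \arccos\!\left(\frac{\mathbf{v}_1 \cdot \mathbf{v}_2}{\lVert \mathbf{v}_1 \rVert\, \lVert \mathbf{v}_2 \rVert}\right) \tag{4}$$

where $\cdot$ denotes the dot product and $\lVert \mathbf{v} \rVert$ is the Euclidean norm of vector $\mathbf{v}$. Similarly, for the PIP joint bending angle $\theta_{\mathrm{PIP}}$, the angle between Line 2 ($\mathbf{v}_2$) and Line 3 ($\mathbf{v}_3$) is

$$\theta_{\mathrm{PIP}} = \arccos\!\left(\frac{\mathbf{v}_2 \cdot \mathbf{v}_3}{\lVert \mathbf{v}_2 \rVert\, \lVert \mathbf{v}_3 \rVert}\right) \tag{5}$$

These angles provide a direct measure of finger articulation and can be used to track motion during rehabilitation: a fully extended finger (collinear segments) gives 0°, and the angle grows as the joint flexes.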
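Equations (4) and (5) can be sketched in a few lines of NumPy. The function below is a hypothetical helper, assuming the four keypoints arrive as pixel coordinates in the Figure 7 order (fingertip, PIP, MCP, wrist), for instance one detection’s row of Ultralytics’ `Results.keypoints.xy` converted to a NumPy array. The cosine is clipped to [−1, 1] so that floating-point rounding cannot push `arccos` outside its domain.

```python
import numpy as np


def joint_bending_angles(keypoints) -> tuple[float, float]:
    """Return (theta_MCP, theta_PIP) in degrees from four 2D keypoints.

    `keypoints` is ordered as in Figure 7: fingertip, PIP, MCP, wrist.
    """
    tip, pip, mcp, wrist = np.asarray(keypoints, dtype=float)
    v1 = mcp - wrist   # Line 1: wrist -> MCP
    v2 = pip - mcp     # Line 2: MCP -> PIP
    v3 = tip - pip     # Line 3: PIP -> fingertip

    def angle_between(a: np.ndarray, b: np.ndarray) -> float:
        norms = np.linalg.norm(a) * np.linalg.norm(b)
        if norms == 0.0:
            raise ValueError("coincident keypoints: segment length is zero")
        cos_theta = np.clip(np.dot(a, b) / norms, -1.0, 1.0)
        return float(np.degrees(np.arccos(cos_theta)))

    return angle_between(v1, v2), angle_between(v2, v3)


if __name__ == "__main__":
    # MCP flexed by 90 degrees, PIP straight (pixel coordinates)
    kps = [(10, 20), (10, 10), (10, 0), (0, 0)]
    print(joint_bending_angles(kps))
```

Because the angle is unsigned, the downward-pointing image y-axis does not affect the result.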

4.4. System Integration and Workflow

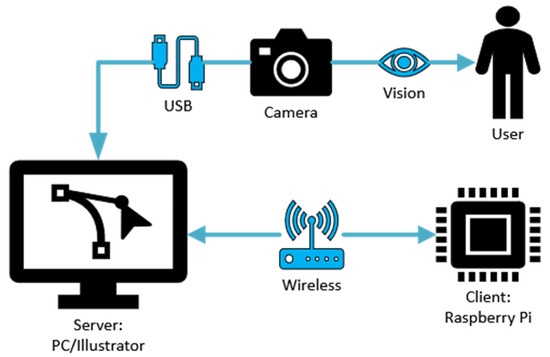

The system workflow begins with the initialization of the Raspberry Pi, which sets up communication with the pneumatic pressure regulators and pressure transducers. When YOLOv8s Pose is used, the RealSense camera is connected to the PC, and a wireless socket-based server–client connection is established between the PC and the Raspberry Pi. In this setup, the PC performs the detection tasks and sends the results to the Raspberry Pi via sockets for further processing. For example, a GUI or a game running on the PC can generate events, such as a detected movement or an in-game action, in response to which the Raspberry Pi receives commands to adjust the actuator pressure. If the system detects that assistance is needed for grasping, the Raspberry Pi increases the pressure; if resistance training is required, it reduces the pressure. Figure 10 visualizes the communication within the hand rehabilitation system.

Figure 10.

Communication flows within the rehabilitation system.

Additionally, multithreading is implemented not only to manage communication efficiently but also to run the YOLOv8s Pose model in parallel without interrupting the real-time data transmission. This prevents processing delays caused by the computational load of the model and ensures that actuator control commands and sensor readings remain responsive. The multithreading approach enhances system reliability, making it suitable for dynamic and adaptive rehabilitation scenarios.
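A minimal sketch of this pattern using Python’s standard library is shown below, assuming a TCP connection carrying newline-delimited JSON messages; the message fields (`mcp_deg`, `pip_deg`) and function names are hypothetical and not the authors’ protocol. The Raspberry Pi side runs its receive loop on a background thread so that the main thread stays free for other work, such as actuator control; on the PC, the same idea keeps YOLOv8s Pose inference from blocking transmission. Both ends run on the loopback interface here so the example is self-contained; on the real system, the PC would connect to the Raspberry Pi’s network address.

```python
import json
import socket
import threading


def _serve_once(server: socket.socket, received: list) -> None:
    """Raspberry Pi side: accept one PC client and collect its JSON messages."""
    conn, _addr = server.accept()
    with conn, conn.makefile("r", encoding="utf-8") as stream:
        for line in stream:                    # one JSON object per line
            received.append(json.loads(line))  # a controller would act on each message here


def start_pi_server(host: str = "127.0.0.1", port: int = 0):
    """Bind a listening socket and run the receive loop on a background thread."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server.bind((host, port))                  # port 0 lets the OS pick a free port
    server.listen(1)
    received: list = []
    worker = threading.Thread(target=_serve_once, args=(server, received), daemon=True)
    worker.start()
    return server, worker, received


def send_angles(host: str, port: int, samples) -> None:
    """PC side: stream (theta_MCP, theta_PIP) pairs as newline-delimited JSON."""
    with socket.create_connection((host, port)) as sock:
        for mcp_deg, pip_deg in samples:
            message = {"mcp_deg": mcp_deg, "pip_deg": pip_deg}
            sock.sendall((json.dumps(message) + "\n").encode("utf-8"))


if __name__ == "__main__":
    server, worker, received = start_pi_server()
    send_angles("127.0.0.1", server.getsockname()[1], [(30.0, 45.0), (60.0, 20.0)])
    worker.join(timeout=5)
    server.close()
    print(received)
```

Newline framing is a simple way to split a TCP byte stream into messages; a fixed-length header would serve equally well.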

5. Results

5.1. Design Verification

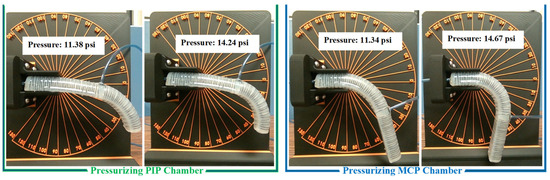

Range of motion (ROM): The maximum pressure for both chambers was set to 15 psi. Higher pressures are possible but were avoided to preserve the actuator’s durability and repeatability. At this pressure, the maximum bending angles of the actuator, measured when it was not attached to a hand, were 105 degrees for the MCP joint and 95 degrees for the PIP joint, as shown in Figure 11.

Figure 11.

Bending of the soft actuator at two actuation pressures when each chamber is pressurized.

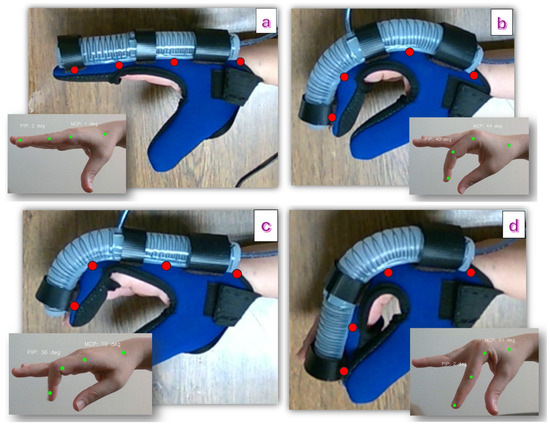

As shown in Figure 12, several joint angles of a healthy, uncovered index finger were captured using the MediaPipe library in Python 3.11 to serve as references for cases in which the finger is covered by the glove. These gestures were selected to include the approximate maximum and minimum bending angles of each joint, a fully extended finger, and a case in which both joints bend simultaneously. The objective is to use these reference angles as targets for the hand supported by the soft actuator and to assess whether the actuator can reproduce them and provide natural finger movement. The results in Table 2 show that the actuator produces bending angles similar to those of the healthy finger for each gesture.

Figure 12.

Bending movements caused by the actuator for specific desired gestures, (a) finger extension, (b) MCP and PIP bend, (c) PIP bend, (d) MCP bend. Red keypoints are detected using YOLOv8s Pose, while green keypoints are obtained using MediaPipe.

Table 2.

Comparison between bending angles in healthy index finger and finger supported by soft actuator.
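The reference angles can be derived from MediaPipe Hands output using the same angle definition as Equations (4) and (5). The sketch below assumes the 21 hand landmarks have already been extracted as normalized (x, y) pairs, for example from `results.multi_hand_landmarks[0].landmark` in the legacy `mp.solutions.hands` API; MediaPipe numbers the wrist 0 and the index finger MCP, PIP, and tip 5, 6, and 8. Because the normalized x and y are scaled separately by image width and height, converting them to pixels first avoids distorting angles on non-square frames. The helper names are hypothetical.

```python
import math

# MediaPipe Hands landmark indices for the wrist and the index finger
WRIST, INDEX_MCP, INDEX_PIP, INDEX_TIP = 0, 5, 6, 8


def _angle_deg(a, b) -> float:
    """Unsigned angle between two 2D vectors, in degrees."""
    norm = math.hypot(*a) * math.hypot(*b)
    if norm == 0.0:
        raise ValueError("zero-length segment")
    cos_theta = (a[0] * b[0] + a[1] * b[1]) / norm
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


def reference_angles(landmarks, width: int = 1, height: int = 1):
    """(theta_MCP, theta_PIP) from 21 normalized MediaPipe (x, y) landmarks.

    Pass the image width and height so both axes are in pixels before the
    angles are computed.
    """
    w, m, p, t = ((landmarks[i][0] * width, landmarks[i][1] * height)
                  for i in (WRIST, INDEX_MCP, INDEX_PIP, INDEX_TIP))
    v1 = (m[0] - w[0], m[1] - w[1])  # wrist -> MCP
    v2 = (p[0] - m[0], p[1] - m[1])  # MCP -> PIP
    v3 = (t[0] - p[0], t[1] - p[1])  # PIP -> fingertip
    return _angle_deg(v1, v2), _angle_deg(v2, v3)
```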

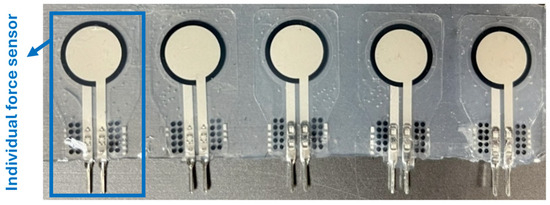

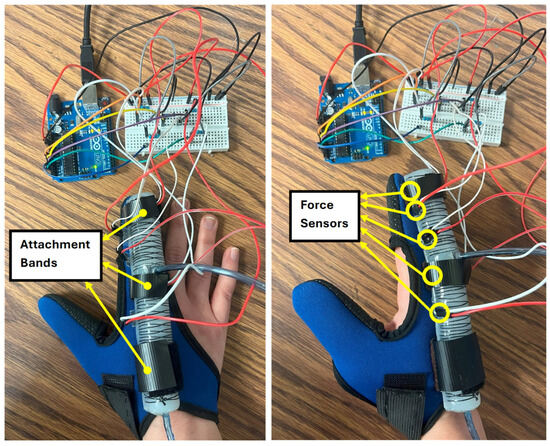

Comfort on pressure points: A UNeo GD-10 force sensor was used to measure the contact forces between the actuator and the finger. This sensor has a resolution of 0.1 N, making it suitable for detecting subtle variations in applied force. To map the interaction forces along the finger, five sensors were joined into an integrated array, as shown in Figure 13. The sensors were located at the MCP joint, between the MCP and PIP joints, at the PIP joint, at the DIP joint, and at the fingertip (see Figure 14). The sensor readings were displayed as a heat map to better visualize the exerted force. Because the sensors in the array were spaced apart, the force in regions not directly covered by any individual sensor was estimated using a Gaussian weighting function. This function is derived from the Gaussian (normal) distribution, which is commonly used to model smooth, continuous transitions between data points, and is defined as:

$$w_i(x) = \exp\!\left(-\frac{(x - x_i)^2}{2\sigma^2}\right) \tag{6}$$

where $w_i(x)$ is the Gaussian weighting function, $x$ is the input location along the finger, $x_i$ is the position of the $i$-th sensor, and $\sigma$ is the standard deviation, which controls how broadly each sensor’s influence spreads.

Figure 13.

Integrated force sensors consisting of five individual force sensors.

Figure 14.

Force sensors integrated between the index finger and the soft pneumatic actuator.

Function (6) was used to compute a smooth spatial weighting based on the distance between the target point and each sensor location. The estimated force intensity at a given location was calculated by summing the weighted contributions of all sensors:

$$I(x) = \sum_{i=1}^{N} F_i\, w_i(x) \tag{7}$$

where $F_i$ is the force measured by the $i$-th sensor, and $N$ is the total number of sensors.

To avoid artificially low values near the edges (caused by reduced overlap with fewer neighboring sensors), the interpolated intensity values were normalized and an offset was added:

$$\hat{I}(x) = \frac{\sum_{i=1}^{N} F_i\, w_i(x)}{\sum_{i=1}^{N} w_i(x)} + c \tag{8}$$

where $\hat{I}(x)$ is the normalized intensity at position $x$, and the offset $c$ ensured a smoother transition and avoided black edges in the visualization.
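The interpolation described above can be sketched with NumPy. The code assumes sensor positions along the finger in millimetres and, as one plausible reading of the edge normalization, divides the weighted sum by the total Gaussian weight at each point before adding the offset. The positions, forces, σ, and offset shown are illustrative placeholders, not measured values, and the function names are hypothetical.

```python
import numpy as np


def gaussian_weights(x, sensor_pos, sigma: float) -> np.ndarray:
    """w_i(x) = exp(-(x - x_i)^2 / (2 sigma^2)); returns shape (len(x), n_sensors)."""
    d = np.asarray(x, float)[:, None] - np.asarray(sensor_pos, float)[None, :]
    return np.exp(-d**2 / (2.0 * sigma**2))


def force_profile(x, sensor_pos, forces, sigma: float,
                  offset: float = 0.0, normalize: bool = True) -> np.ndarray:
    """Interpolated force intensity along the finger."""
    w = gaussian_weights(x, sensor_pos, sigma)
    intensity = w @ np.asarray(forces, float)   # sum_i F_i * w_i(x)
    if normalize:
        intensity = intensity / w.sum(axis=1)   # assumed edge correction
    return intensity + offset


if __name__ == "__main__":
    positions_mm = [0.0, 20.0, 40.0, 60.0, 80.0]  # hypothetical spacing of the five sites
    forces_n = [0.4, 0.2, 0.5, 0.3, 1.1]          # illustrative readings only
    xs = np.linspace(0.0, 80.0, 161)
    profile = force_profile(xs, positions_mm, forces_n, sigma=8.0, offset=0.05)
    print(profile.min(), profile.max())
```

Dividing by the summed weights keeps a uniform load uniform all the way to the ends of the finger, which is the edge artifact the normalization is meant to remove.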

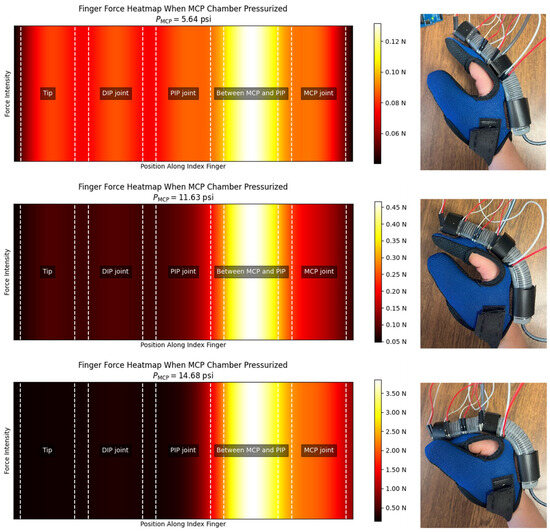

Figures 15 and 16 show the interaction forces between the soft actuator and the finger, measured to assess wearer comfort in two conditions: (i) only the MCP chamber pressurized and (ii) only the PIP chamber pressurized. The main focus is on the finger joints that are intended to bend, to ensure that there is no discomfort or resistance during bending and extension. During pressurization, no signs of discomfort or irritation were observed at the joints intended to bend. The bending motion of the actuator closely matched the natural movement of the finger, and no pressure points formed on the joints under any pressurization condition (MCP chamber, PIP chamber, or both).

Figure 15.

Heatmap of the contact forces exerted on the index finger when the PIP chamber is pressurized.

Figure 16.

Heatmap of the contact forces exerted on the index finger when the MCP chamber is pressurized.

It is important to note that these interaction forces were measured when the actuator was fully pressurized. As the actuator includes a bottom fiberglass layer and Kevlar reinforcement in a double helix pattern, it bends as intended and does not exhibit significant bulging on the bottom side that could cause discomfort. Additionally, as shown in Figure 14, the actuator is mounted using attachment bands with a base (brown in the figure) that lifts it approximately 1 mm off the finger surface to enhance comfort by preventing direct contact or pressure from any minor bottom deformation.

The heatmaps also show effective force transmission at the fingertip (labeled “Tip” in Figures 15 and 16), making the actuator suitable for tasks such as grasping and lifting objects. When both chambers were pressurized, a fingertip force of 9.3 N was recorded, exceeding the 7.3 N requirement for hand rehabilitation cited in Section 2.1.

5.2. Training the Model for Joint Localization

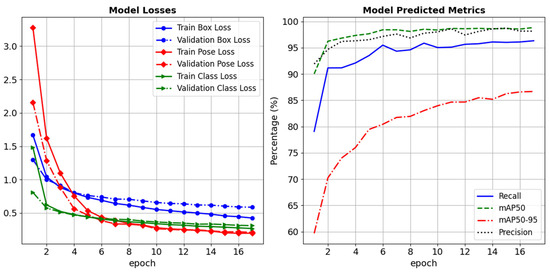

The YOLOv8s Pose model was fine-tuned on custom data with specific training configurations to detect the finger keypoints. Weights pre-trained on the COCO dataset served as the starting point for transfer learning, allowing the model to adapt efficiently to the custom data. Table 3 summarizes the key training specifications, and the training and validation losses and metrics are shown in Figure 17.

Table 3.

Key specifications for training YOLOv8s Pose models.

Figure 17.

Training and validation losses and metrics.
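The sketch below shows one way to describe a four-keypoint dataset in the Ultralytics pose-dataset YAML format and to start fine-tuning from the COCO-pretrained `yolov8s-pose.pt` weights. The dataset paths, class name, epoch count, and image size are placeholders (the settings actually used are listed in Table 3), and the training call only runs when the `ultralytics` package is installed. The keypoint order must match the order used in the label files; here it is assumed to follow Figure 7.

```python
from pathlib import Path

# Keypoint order assumed to follow Figure 7: 1 fingertip, 2 PIP, 3 MCP, 4 wrist.
KEYPOINT_NAMES = ["fingertip", "PIP", "MCP", "wrist"]


def dataset_yaml(root: str) -> str:
    """Build an Ultralytics-style pose dataset file (paths are hypothetical)."""
    n = len(KEYPOINT_NAMES)
    return "\n".join([
        f"path: {root}",
        "train: images/train",
        "val: images/val",
        f"kpt_shape: [{n}, 3]  # x, y, visibility for each keypoint",
        f"flip_idx: {list(range(n))}  # no left/right keypoint pairs to swap",
        "names:",
        "  0: index_finger",
    ]) + "\n"


if __name__ == "__main__":
    from ultralytics import YOLO  # requires the ultralytics package

    cfg = Path("glove_finger.yaml")
    cfg.write_text(dataset_yaml("datasets/glove_finger"), encoding="utf-8")
    model = YOLO("yolov8s-pose.pt")                     # COCO-pretrained pose weights
    model.train(data=str(cfg), epochs=100, imgsz=640)   # placeholder settings
```

`flip_idx` tells the horizontal-flip augmentation which keypoints swap places; a single finger has no mirrored pairs, so the identity mapping is used.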

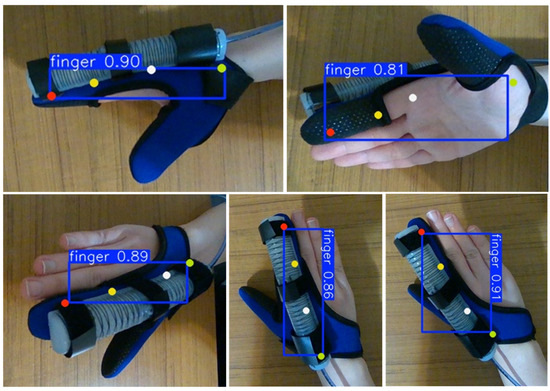

The keypoint detection model trained with YOLOv8s Pose is versatile: it can detect the finger joints directly even when the finger is covered by the glove, as shown in Figure 18. Because images of all sides of the finger were included in training, the model can detect keypoints in various hand orientations. The confidence score of each detection (e.g., 0.81) is also shown in this figure. The model is also less sensitive to changes in lighting than the tag detection model. Furthermore, because it is trained to detect specific anatomical points within a defined region, it is less likely to misinterpret other pixels or objects as keypoints, making it more robust for real-time applications.

Figure 18.

Detection of keypoints of the index finger using YOLOv8s Pose.

In the experiments, reliable joint localization and accurate angle estimation were achieved when certain conditions were met during camera-based tracking. The hand had to remain within the camera’s field of view throughout operation, as the model relies on visual input to detect keypoints. Although the model was trained on a variety of hand orientations to improve generalization (as shown in Figure 18), detection performance was best when the finger was viewed from the side. This perspective provided a clear view of joint bending, improving both the stability and the accuracy of the estimated angles. In addition, while the model was robust to moderate lighting variations owing to extensive data augmentation during training, consistent and diffuse lighting further reduced detection noise and supported stable real-time performance.

In summary, the advantages of YOLOv8s Pose for this application are as follows:

- Non-Intrusive Monitoring: By using vision-based keypoint detection, the system avoids the need for intrusive or bulky sensors, preserving the glove’s natural design.

- Real-Time Performance: YOLOv8s Pose delivers fast inference speeds, making it ideal for applications where real-time feedback is critical, such as rehabilitation or gaming interfaces.

- High Accuracy: In our tests, the model localized keypoints precisely, even when the hand was fully covered by the glove.

- Scalability and Adaptability: YOLOv8s Pose can be retrained to detect different keypoints or accommodate various glove designs, making it highly versatile.

- Integration Capability: The model’s lightweight structure should allow it to run on embedded systems or edge devices alongside existing hardware, supporting portability and ease of deployment.

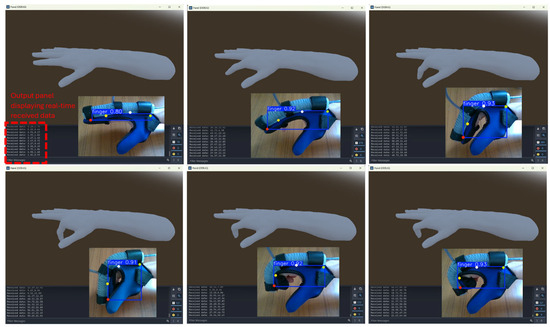

To assess the camera’s detection performance, the camera was positioned at a height of 37 cm above the desk, allowing the hand to move freely within the field of view. This setup ensured that the camera could track hand movements under natural conditions without restricting motion. To visualize the keypoint detection process and evaluate the accuracy of angle estimation, the Godot game engine was utilized with a rigged 3D hand model, as shown in Figure 19. The MCP and PIP joint angles were computed on the main PC and transmitted to the Godot environment using UDP communication. The real-time update of joint angles in Godot effectively mirrored the detected movements, providing a dynamic and interactive representation of keypoint tracking accuracy in the rehabilitation system.

Figure 19.

Real-time visualization of the bending angles using the Godot game engine.
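The text states only that the MCP and PIP angles are sent from the main PC to Godot over UDP. A minimal Python sketch of the sending side follows, assuming a JSON payload and a Godot listener on a hypothetical local port 4242; the message fields (`t`, `mcp`, `pip`) are illustrative, not the authors’ protocol.

```python
import json
import socket
import time

# Hypothetical endpoint: a Godot scene listening for UDP datagrams on this port.
GODOT_ADDR = ("127.0.0.1", 4242)


def encode_angles(mcp_deg: float, pip_deg: float, t: float) -> bytes:
    """Serialize one frame of joint angles as a small JSON datagram."""
    msg = {"t": t, "mcp": round(mcp_deg, 2), "pip": round(pip_deg, 2)}
    return json.dumps(msg).encode("utf-8")


def send_angles(sock: socket.socket, addr, mcp_deg: float, pip_deg: float) -> None:
    """Fire-and-forget send: a lost datagram is simply superseded by the next frame."""
    sock.sendto(encode_angles(mcp_deg, pip_deg, time.time()), addr)
```

In a tracking loop, a single `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` would be created once and `send_angles(sock, GODOT_ADDR, mcp, pip)` called per frame. UDP suits this kind of streaming because retransmitting stale angles would only add latency; on the Godot side, a `PacketPeerUDP` object could receive the datagrams and parse the JSON before rotating the rig’s finger bones.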

In the experiments, minor shifts were observed in the detected keypoint positions across consecutive frames, a phenomenon known as jitter. Jitter refers to small, unintended variations in keypoint localization that can degrade the stability of real-time tracking systems. It can be caused by several factors, including calibration errors, image noise, occlusion, and insufficient representation of certain hand poses in the training dataset [26,27]. Temporal discontinuities and feature-tracking mismatches during dynamic movements or partial occlusions can further contribute to this instability [27], and apparent motion caused by system-induced disturbances has been shown to introduce false displacements in static features [28]. In our system, the jitter amounted to a fluctuation of approximately ±5 pixels per keypoint, even when the hand remained stationary.
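How the ±5 pixel jitter figure was computed is not specified. One plausible reading, sketched below under that assumption, is half the peak-to-peak range of a keypoint’s x and y coordinates over frames recorded while the hand is held still; the standard deviation and radial RMS are included as alternative, less outlier-sensitive summaries.

```python
import numpy as np


def keypoint_jitter(track_px) -> dict:
    """Jitter statistics for one keypoint recorded while the hand is held still.

    track_px: array-like of shape (T, 2) with (x, y) pixel positions over T frames.
    """
    track = np.asarray(track_px, dtype=float)
    centred = track - track.mean(axis=0)
    radial = np.linalg.norm(centred, axis=1)      # distance from the mean position
    return {
        # Half the peak-to-peak range per axis: the "±" value under this reading.
        "half_range_px": (track.max(axis=0) - track.min(axis=0)) / 2.0,
        "std_px": track.std(axis=0),
        "rms_radial_px": float(np.sqrt(np.mean(radial ** 2))),
        "max_radial_px": float(radial.max()),
    }
```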

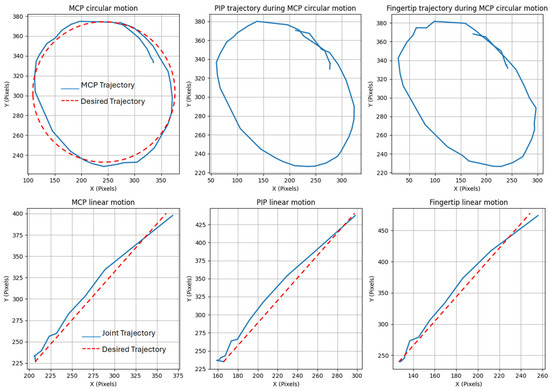

As an additional check of keypoint detection accuracy and consistency, predefined circular and linear trajectories were used as reference paths to assess how well the camera detects keypoints during whole-hand translational movements, rather than finger bending alone. These trajectories served as desired movement patterns for checking whether the detected MCP and PIP joint positions remained stable and followed the expected motion as the hand was placed at different locations relative to the camera. As shown in Figure 20, moving the hand along these trajectories confirmed that joint detection remained consistent across varying positions and orientations, with no undetected movements. This validation highlights the robustness of the joint localization approach, showing that the dynamic joint positions can be reliably obtained in real time regardless of where the hand is within the camera’s field of view.

Figure 20.

Consistency of joint localization using keypoints with different trajectories.

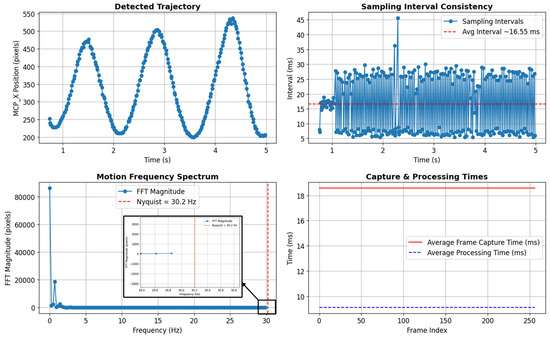
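The trajectory-based consistency check is reported qualitatively. A minimal sketch of how it could be quantified for the circular trajectory follows: a detection rate (fraction of frames with a keypoint) and an algebraic least-squares (Kåsa) circle fit whose radial residuals show how closely the detected joint positions follow a circle. This is an assumed analysis, not the authors’ procedure; a linear trajectory would be handled analogously with a line fit.

```python
from typing import Optional, Sequence, Tuple

import numpy as np


def detection_rate(frames: Sequence[Optional[Tuple[float, float]]]) -> float:
    """Fraction of frames in which the keypoint was detected (None = missed)."""
    return sum(f is not None for f in frames) / len(frames)


def fit_circle(points):
    """Algebraic (Kasa) least-squares circle fit.

    Solves x^2 + y^2 = a*x + b*y + c, so the centre is (a/2, b/2) and
    r^2 = c + (a/2)^2 + (b/2)^2. Returns centre, radius and radial residuals.
    """
    pts = np.asarray(points, dtype=float)
    x, y = pts[:, 0], pts[:, 1]
    A = np.column_stack([x, y, np.ones_like(x)])
    (a, b, c), *_ = np.linalg.lstsq(A, x ** 2 + y ** 2, rcond=None)
    xc, yc = a / 2.0, b / 2.0
    r = float(np.sqrt(c + xc ** 2 + yc ** 2))
    residuals = np.hypot(x - xc, y - yc) - r   # signed distance to the fitted circle
    return (float(xc), float(yc)), r, residuals
```

The algebraic fit needs no initial guess, which suits a quick per-recording check; a geometric (orthogonal-distance) fit would be the next step if the residuals were large.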

The vision system was evaluated in real time to assess its ability to track rapid finger movements without aliasing. As shown in Figure 21, the x-coordinate of the keypoint corresponding to the MCP joint over a 5 s period followed a smooth, periodic trajectory, indicating stable and continuous tracking. The sampling interval remained consistent, averaging about 16.55 ms, which corresponds to a frame rate of approximately 60 fps ($f_s = 1/\overline{\Delta t} \approx 1/(16.55\ \mathrm{ms}) \approx 60.4\ \mathrm{Hz}$). In other words, each new frame was processed and available within about 16.55 ms, giving latency low enough for real-time control applications; minor fluctuations in the interval were attributed to processing overhead. The corresponding Nyquist frequency, $f_s/2 \approx 30.2$ Hz, means that motion components below this frequency can be captured without aliasing. Since voluntary human hand movements typically occur in the 2–5 Hz range [29,30], and rapid flicks or ballistic movements reach up to 10 Hz [29], the system is well within acceptable real-time limits. In addition, physiological finger tremor in healthy individuals has been reported at 5–15 Hz, with a dominant peak around 9–10 Hz [31], which further indicates that the sampling rate is sufficient to track normal hand motion without distortion or aliasing.

Figure 21.

Evaluation of the camera-based detection pipeline in terms of sampling consistency, processing time and motion frequency content.
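The interval-to-rate arithmetic in the text can be reproduced from per-frame timestamps, for example values recorded with `time.perf_counter()` after each processed frame (an assumption about the instrumentation). The sketch computes the mean interval, the effective rate $f_s = 1/\overline{\Delta t}$ and the Nyquist frequency $f_s/2$, and checks the upper ends of the motion bands quoted in the text (5, 10 and 15 Hz) against it.

```python
import numpy as np

# Upper ends of the motion bands quoted in the text (Hz).
MOTION_BANDS_HZ = {"voluntary": 5.0, "rapid/ballistic": 10.0, "physiological tremor": 15.0}


def sampling_stats(timestamps_s) -> dict:
    """Frame-interval statistics from per-frame timestamps in seconds."""
    t = np.asarray(timestamps_s, dtype=float)
    dt = np.diff(t)
    fs = 1.0 / dt.mean()                 # effective sampling rate f_s = 1 / mean(dt)
    return {
        "mean_dt_ms": 1e3 * dt.mean(),
        "std_dt_ms": 1e3 * dt.std(),     # spread caused by processing overhead
        "fs_hz": fs,
        "nyquist_hz": fs / 2.0,          # highest frequency representable without aliasing
    }


def bands_below_nyquist(nyquist_hz: float) -> dict:
    """True for each motion band whose upper frequency lies below the Nyquist limit."""
    return {name: f_max < nyquist_hz for name, f_max in MOTION_BANDS_HZ.items()}
```

With synthetic timestamps spaced 16.55 ms apart, `sampling_stats` gives a rate of about 60.4 Hz and a Nyquist frequency of about 30.2 Hz, matching the values in the text.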

To further assess tracking performance, a Fast Fourier Transform (FFT) was applied to the detected keypoint positions [32]. The analysis shows that the dominant motion frequencies remained well below the Nyquist limit of 30.2 Hz, confirming that the sampling rate was sufficient to avoid aliasing. The Nyquist frequency was computed from the measured sampling rate using Equation (10).
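Equation (10) is not reproduced in this section; it presumably expresses the standard relation $f_N = f_s/2$. The sketch below illustrates the FFT check on a single keypoint coordinate trace: remove the mean, apply a Hann window to limit spectral leakage, and report the strongest non-zero frequency alongside $f_N$. The windowing choice is an assumption, not a detail from the paper.

```python
import numpy as np


def dominant_frequency(x, fs: float):
    """Return (dominant frequency in Hz, Nyquist frequency fs/2) of a 1-D keypoint trace."""
    x = np.asarray(x, dtype=float)
    x = (x - x.mean()) * np.hanning(x.size)   # remove DC offset, taper to reduce leakage
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    k = 1 + int(np.argmax(spectrum[1:]))      # skip the 0 Hz bin
    return float(freqs[k]), fs / 2.0
```

For a 5 s trace at about 60.4 fps, the frequency resolution is roughly $f_s/N \approx 0.2$ Hz, fine enough to separate the 2–10 Hz bands discussed in the text.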

Table 4 compares the contributions of this work with those of other research studies on soft robotic gloves, providing a fair and transparent comparison. As shown in the table, several key aspects of this system, such as joint-specific actuation, vision-based joint localization, and dynamic bending measurement, are not fully addressed in existing studies, underscoring the novelty and contributions of this work.

Table 4.

Comparison of selected research studies on soft robotic gloves with the current study.

Table 5 presents a quantitative comparison of pneumatic soft actuators used for hand rehabilitation in selected research studies and in the current study. The compared metrics include maximum tip force, operating pressure, achievable bending angles at the MCP, PIP, and DIP joints, and repeatability (where reported). Previous studies suggest that forces above 7.3 N and joint angles between 33° and 86° are typically needed for effective rehabilitation, and the proposed actuator meets both requirements: it delivers sufficient force (9.3 N) and bending angles of 105° at the MCP joint and 95° at the PIP joint while operating at a relatively low pressure of 105 kPa. The design also accounts for the natural anatomical coupling between the PIP and DIP joints, enabling more biomimetic movement patterns that can better support functional hand therapy. Repeatability was estimated from vision-based measurements: the observed pixel drift corresponds to approximately ±2.9°, indicating reliable joint tracking during actuation.

Table 5.

Quantitative comparison of soft pneumatic actuators for hand rehabilitation across selected studies and the current study.

In soft pneumatic actuators, it is generally observed—both in our experimental results and in previous studies summarized in Table 5—that increasing the internal pressure tends to produce greater bending angles due to the expansion of internal chambers that induces structural curvature. Concurrently, the force output at the actuator’s tip is affected by both the applied pressure and the bending configuration. Higher tip forces are typically observed when the actuator is mechanically constrained, whereas larger bending angles occur in unconstrained conditions with reduced force generation. This trade-off between force and angular displacement is a fundamental property of soft pneumatic actuators, as consistently reported in prior modeling and experimental studies, including the work by Polygerinos et al. [22].

6. Conclusions

This paper presented a soft pneumatic actuator with dual independent bending chambers that enable independent actuation of the metacarpophalangeal (MCP) and proximal interphalangeal (PIP) joints while accounting for the mechanical dependency between the PIP and distal interphalangeal (DIP) joints. A camera-based detection system powered by a deep learning model localizes the finger joints and estimates their bending angles dynamically and in real time. The estimated angles were visualized in a game engine, illustrating the consistency of detection. The system achieves real-time performance with a low delay of 16.63 ms. The experimental results demonstrate the actuator’s ability to produce adequate bending angles and gripping force. The sensor-free, vision-based approach simplifies the design, reduces wiring, and enhances the practicality and comfort of wearable use.

Although the current study focuses on the index finger, the proposed system is designed to be modular and scalable, enabling future extension to other fingers and more complex hand gestures. Given the structural and functional similarities among the fingers—particularly in terms of joint configuration and range of motion—the actuator developed for the index finger can be adapted to other digits. This provides a foundation for a full-hand rehabilitation system capable of supporting coordinated multi-finger movements.

While the proposed system offers accurate detection, minor shifts in the detected keypoints were observed, especially during hand movements, owing to the markerless tracking approach. Future work will address this issue by developing methods to reduce detection noise and stabilize angle estimation. The current setup also relies on camera visibility for angle estimation, which can be affected by external conditions such as lighting variations, camera angle, rapid hand movements, and occlusions; these factors may lead to inaccurate or failed detections in dynamic environments. Future work will address these limitations by incorporating state estimation and sensor fusion techniques to ensure robust and continuous angle predictions, even when visual tracking is partially lost or degraded. Finally, since the current setup uses a PC for real-time processing, future developments can focus on a fully portable system that replaces the PC with an embedded processing unit. This upgrade would support a compact, wearable, and user-friendly soft robotic glove suitable for home-based or mobile rehabilitation.
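As one possible form of the state estimation named as future work, the sketch below runs a constant-velocity Kalman filter on a single joint angle. When a frame has no reliable detection, the filter skips the correction step and coasts on its prediction, which keeps the angle estimate continuous through brief tracking losses. The noise parameters `q` and `r` are hypothetical tuning values, not values from this study.

```python
from typing import Optional

import numpy as np


class AngleKalman:
    """Constant-velocity Kalman filter for one joint angle (degrees).

    State x = [angle, angular velocity]. Hypothetical tuning: q is the
    acceleration-noise variance (deg^2/s^4), r the measurement variance (deg^2).
    """

    def __init__(self, dt: float, q: float = 50.0, r: float = 4.0):
        self.x = np.zeros(2)
        self.P = np.eye(2) * 1e4                      # diffuse prior
        self.F = np.array([[1.0, dt], [0.0, 1.0]])
        self.Q = q * np.array([[dt ** 4 / 4, dt ** 3 / 2],
                               [dt ** 3 / 2, dt ** 2]])
        self.H = np.array([[1.0, 0.0]])
        self.R = np.array([[r]])

    def step(self, z: Optional[float]) -> float:
        """Predict one frame ahead, then correct with z unless the detection is missing."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        if z is not None:
            innovation = z - (self.H @ self.x)[0]
            S = self.H @ self.P @ self.H.T + self.R     # 1x1 innovation covariance
            K = (self.P @ self.H.T) / S[0, 0]          # 2x1 Kalman gain
            self.x = self.x + K[:, 0] * innovation
            self.P = (np.eye(2) - K @ self.H) @ self.P
        return float(self.x[0])
```

Sensor fusion would extend the same structure by adding measurement rows to `H` and `R` for other sources; filtering each angle independently, as here, is the simplest starting point.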

To further evaluate system robustness, future studies will also include inter-subject testing to assess performance consistency across different users and hand sizes during repeated gestures.

Author Contributions

Conceptualization, N.G., W.K. and N.S.; Data Curation, N.G.; Investigation, N.G.; Writing – original draft preparation, N.G.; Writing – review and editing, N.G., N.S., W.K. and T.S.; Visualization, N.G.; Supervision, N.S., W.K. and T.S.; Project Administration, N.S.; Funding Acquisition, N.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Sciences and Engineering Research Council of Canada (NSERC). Grant number: RGPIN-2018-05352.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data needed to evaluate the conclusions of the paper are present in the paper.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Rahman, N.; Diteesawat, R.S.; Hoh, S.; Morris, L.; Turton, A.; Cramp, M.; Rossiter, J. Soft Scissor: A Cartilage-Inspired, Pneumatic Artificial Muscle for Wearable Devices. IEEE Robot. Autom. Lett. 2025, 10, 2367–2374.

- Ghobadi, N.; Sepehri, N.; Kinsner, W.; Szturm, T. Beyond Human Touch: Integrating Soft Robotics with Environmental Interaction for Advanced Applications. Actuators 2024, 13, 507.

- Yang, Y.; Chen, Y. Innovative Design of Embedded Pressure and Position Sensors for Soft Actuators. IEEE Robot. Autom. Lett. 2017, 3, 656–663.

- Chen, F.; Wang, M.Y. Design Optimization of Soft Robots: A Review of the State of the Art. IEEE Robot. Autom. Mag. 2020, 27, 27–43.

- Yap, H.K.; Ng, H.Y.; Yeow, C.-H. High-Force Soft Printable Pneumatics for Soft Robotic Applications. Soft Robot. 2016, 3, 144–158.

- Soliman, M.; Saleh, M.A.; Mousa, M.A.; Elsamanty, M.; Radwan, A.G. Theoretical and Experimental Investigation Study of Data Driven Work Envelope Modelling for 3D Printed Soft Pneumatic Actuators. Sens. Actuators A Phys. 2021, 331, 112978.

- Elsayed, Y.; Lekakou, C.; Geng, T.; Saaj, C.M. Design Optimisation of Soft Silicone Pneumatic Actuators Using Finite Element Analysis. In Proceedings of the 2014 IEEE/ASME International Conference on Advanced Intelligent Mechatronics, Besançon, France, 8–11 July 2014; pp. 44–49.

- Ma, Z.; Wang, Y.; Zhang, T.; Liu, J. Reconfigurable Exomuscle System Employing Parameter Tuning to Assist Hip Flexion or Ankle Plantarflexion. IEEE/ASME Trans. Mechatron. 2025, early access.

- Loo, J.Y.; Ding, Z.Y.; Baskaran, V.M.; Nurzaman, S.G.; Tan, C.P. Robust Multimodal Indirect Sensing for Soft Robots Via Neural Network-Aided Filter-Based Estimation. Soft Robot. 2022, 9, 591–612.

- Lai, J.; Song, A.; Shi, K.; Ji, Q.; Lu, Y.; Li, H. Design and Evaluation of a Bidirectional Soft Glove for Hand Rehabilitation-Assistance Tasks. IEEE Trans. Med. Robot. Bionics 2023, 5, 730–740.

- Polygerinos, P.; Wang, Z.; Galloway, K.C.; Wood, R.J.; Walsh, C.J. Soft robotic glove for combined assistance and at-home rehabilitation. Robot. Auton. Syst. 2015, 73, 135–143.

- Liu, H.; Xie, X.; Millar, M.; Edmonds, M.; Gao, F.; Zhu, Y.; Santos, V.J.; Rothrock, B.; Zhu, S.-C. A Glove-Based System for Studying Hand-Object Manipulation via Joint Pose and Force Sensing. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 6617–6624.

- Hassani, S.; Dackermann, U. A Systematic Review of Advanced Sensor Technologies for Non-Destructive Testing and Structural Health Monitoring. Sensors 2023, 23, 2204.

- Hofer, M.; Sferrazza, C.; D’Andrea, R. A Vision-Based Sensing Approach for a Spherical Soft Robotic Arm. Front. Robot. AI 2021, 8, 630935.

- Wang, R.; Wang, S.; Du, S.; Xiao, E.; Yuan, W.; Feng, C. Real-Time Soft Body 3D Proprioception via Deep Vision-Based Sensing. IEEE Robot. Autom. Lett. 2020, 5, 3382–3389.

- Zhang, Z.; Dequidt, J.; Duriez, C. Vision-Based Sensing of External Forces Acting on Soft Robots Using Finite Element Method. IEEE Robot. Autom. Lett. 2018, 3, 1529–1536.

- Li, J.; Ma, J.; Hu, Y.; Zhang, L.; Liu, Z.; Sun, S. Vision-Based Reinforcement Learning Control of Soft Robot Manipulators. Robot. Intell. Autom. 2024, 44, 783–790.

- Ogunmolu, O.P.; Gu, X.; Jiang, S.; Gans, N.R. A Real-Time, Soft Robotic Patient Positioning System for Maskless Head-and-Neck Cancer Radiotherapy: An Initial Investigation. In Proceedings of the 2015 IEEE International Conference on Automation Science and Engineering (CASE), Gothenburg, Sweden, 24–28 August 2015; pp. 1539–1545.

- Werner, P.; Hofer, M.; Sferrazza, C.; D’Andrea, R. Vision-Based Proprioceptive Sensing: Tip Position Estimation for a Soft Inflatable Bellow Actuator. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 8889–8896.

- Oguntosin, V.; Akindele, A.; Alashiri, O. Vision Algorithms for Sensing Soft Robots. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2019; Volume 1378, p. 032102.

- Wu, T.; Dong, Y.; Liu, X.; Han, X.; Xiao, Y.; Wei, J.; Wan, F.; Song, C. Vision-Based Tactile Intelligence with Soft Robotic Metamaterial. Mater. Des. 2024, 238, 112629.

- Polygerinos, P.; Wang, Z.; Overvelde, J.T.B.; Galloway, K.C.; Wood, R.J.; Bertoldi, K.; Walsh, C.J. Modeling of Soft Fiber-Reinforced Bending Actuators. IEEE Trans. Robot. 2015, 31, 778–789.

- Van-Zwieten, K.J.; Schmidt, K.P.; Bex, G.J.; Lippens, P.L.; Duyvendak, W. An Analytical Expression for the DIP–PIP Flexion Interdependence in Human Fingers. Acta Bioeng. Biomech. 2015, 17, 129–135.

- Marechal, L.; Balland, P.; Lindenroth, L.; Petrou, F.; Kontovounisios, C.; Bello, F. Toward a Common Framework and Database of Materials for Soft Robotics. Soft Robot. 2021, 8, 284–297.

- Pu, M.; Chong, C.Y.; Lim, M.K. Robustness Evaluation in Hand Pose Estimation Models Using Metamorphic Testing. In Proceedings of the 2023 IEEE/ACM 8th International Workshop on Metamorphic Testing (MET), Melbourne, Australia, 14 May 2023; pp. 31–38.

- Luo, J.; Zhu, L.; Li, L.; Hong, P. Robot Visual Servoing Grasping Based on Top-down Keypoint Detection Network. IEEE Trans. Instrum. Meas. 2023, 73, 5000511.

- Pavlovych, A.; Stuerzlinger, W. The Tradeoff between Spatial Jitter and Latency in Pointing Tasks. In Proceedings of the 1st ACM SIGCHI Symposium on Engineering Interactive Computing Systems, Pittsburgh, PA, USA, 15–17 July 2009; pp. 187–196.

- Tsai, C.; Lee, P.; Bui, T.; Xu, G.; Sheu, M. Moving Object Detection for Remote Sensing Video with Satellite Jitter. In Proceedings of the 2023 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 6–8 January 2023; pp. 1–2.

- Groß, J.; Timmermann, L.; Kujala, J.; Dirks, M.; Schmitz, F.; Salmelin, R.; Schnitzler, A. The Neural Basis of Intermittent Motor Control in Humans. Proc. Natl. Acad. Sci. USA 2002, 99, 2299–2302.

- van Galen, G.P.; van Huygevoort, M. Error, Stress and the Role of Neuromotor Noise in Space Oriented Behaviour. Biol. Psychol. 2000, 51, 151–171.

- Halliday, A.M.; Redfearn, J.W.T. An Analysis of the Frequencies of Finger Tremor in Healthy Subjects. J. Physiol. 1956, 134, 600.

- Brigham, E.O.; Morrow, R.E. The Fast Fourier Transform. IEEE Spectr. 1967, 4, 63–70.

- Meng, F.; Liu, C.; Li, Y.; Hao, H.; Li, Q.; Lyu, C.; Wang, Z.; Ge, G.; Yin, J.; Ji, X. Personalized and Safe Soft Glove for Rehabilitation Training. Electronics 2023, 12, 2531.

- Chen, X.; Gong, L.; Wei, L.; Yeh, S.-C.; Da Xu, L.; Zheng, L.; Zou, Z. A Wearable Hand Rehabilitation System with Soft Gloves. IEEE Trans. Ind. Inform. 2020, 17, 943–952.

- Wang, J.; Fei, Y.; Pang, W. Design, Modeling, and Testing of a Soft Pneumatic Glove with Segmented Pneunets Bending Actuators. IEEE/ASME Trans. Mechatron. 2019, 24, 990–1001.

- Wang, Z.; Wang, D.; Zhang, Y.; Liu, J.; Wen, L.; Xu, W.; Zhang, Y. A Three-Fingered Force Feedback Glove Using Fiber-Reinforced Soft Bending Actuators. IEEE Trans. Ind. Electron. 2019, 67, 7681–7690.

- Duanmu, D.; Wang, X.; Li, X.; Wang, Z.; Hu, Y. Design of Guided Bending Bellows Actuators for Soft Hand Function Rehabilitation Gloves. Actuators 2022, 11, 346.

- Heung, K.H.L.; Tong, R.K.Y.; Lau, A.T.H.; Li, Z. Robotic Glove with Soft-Elastic Composite Actuators for Assisting Activities of Daily Living. Soft Robot. 2019, 6, 289–304.

- Wang, J.; Liu, Z.; Fei, Y. Design and Testing of a Soft Rehabilitation Glove Integrating Finger and Wrist Function. J. Mech. Robot. 2019, 11, 011015.

- Li, X.; Hao, Y.; Zhang, J.; Wang, C.; Li, D.; Zhang, J. Design, Modeling and Experiments of a Variable Stiffness Soft Robotic Glove for Stroke Patients with Clenched Fist Deformity. IEEE Robot. Autom. Lett. 2023, 8, 4044–4051.

- Yap, H.K.; Khin, P.M.; Koh, T.H.; Sun, Y.; Liang, X.; Lim, J.H.; Yeow, C.-H. A Fully Fabric-Based Bidirectional Soft Robotic Glove for Assistance and Rehabilitation of Hand Impaired Patients. IEEE Robot. Autom. Lett. 2017, 2, 1383–1390.

- Kokubu, S.; Vinocour, P.E.T.; Yu, W. Development and Evaluation of Fiber Reinforced Modular Soft Actuators and an Individualized Soft Rehabilitation Glove. Robot. Auton. Syst. 2024, 171, 104571.

- Ha, J.; Kim, D.; Jo, S. Use of Deep Learning for Position Estimation and Control of Soft Glove. In Proceedings of the 2018 18th International Conference on Control, Automation and Systems (ICCAS), Pyeong Chang, Republic of Korea, 17–20 October 2018; pp. 570–574.

- Cheng, N.; Phua, K.S.; Lai, H.S.; Tam, P.K.; Tang, K.Y.; Cheng, K.K.; Yeow, R.C.-H.; Ang, K.K.; Guan, C.; Lim, J.H. Brain-Computer Interface-Based Soft Robotic Glove Rehabilitation for Stroke. IEEE Trans. Biomed. Eng. 2020, 67, 3339–3351.

- Rho, E.; Lee, H.; Lee, Y.; Lee, K.-D.; Mun, J.; Kim, M.; Kim, D.; Park, H.-S.; Jo, S. Multiple Hand Posture Rehabilitation System Using Vision-Based Intention Detection and Soft-Robotic Glove. IEEE Trans. Ind. Inform. 2024, 20, 6499–6509.

- Kwon, H.; Hwang, C.; Jo, S. Vision Combined with MI-Based BCI in Soft Robotic Glove Control. In Proceedings of the 2022 10th International Winter Conference on Brain-Computer Interface (BCI), Gangwon-do, Republic of Korea, 21–23 February 2022; pp. 1–5.

- Jadhav, S.; Kannanda, V.; Kang, B.; Tolley, M.T.; Schulze, J.P. Soft Robotic Glove for Kinesthetic Haptic Feedback in Virtual Reality Environments. Electron. Imaging 2017, 29, 19–24.

- Shen, Z.; Yi, J.; Li, X.; Lo, M.H.P.; Chen, M.Z.Q.; Hu, Y.; Wang, Z. A Soft Stretchable Bending Sensor and Data Glove Applications. Robot. Biomim. 2016, 3, 22.

- Park, M.; Park, T.; Park, S.; Yoon, S.J.; Koo, S.H.; Park, Y.-L. Stretchable Glove for Accurate and Robust Hand Pose Reconstruction Based on Comprehensive Motion Data. Nat. Commun. 2024, 15, 5821.

- Glauser, O.; Wu, S.; Panozzo, D.; Hilliges, O.; Sorkine-Hornung, O. Interactive Hand Pose Estimation Using a Stretch-Sensing Soft Glove. ACM Trans. Graph. (TOG) 2019, 38, 1–15.

- Syringas, P.; Economopoulos, T.; Kouris, I.; Kakkos, I.; Papagiannis, G.; Triantafyllou, A.; Tselikas, N.; Matsopoulos, G.K.; Fotiadis, D.I. Rehabotics: A Comprehensive Rehabilitation Platform for Post-Stroke Spasticity, Incorporating a Soft Glove, a Robotic Exoskeleton Hand and Augmented Reality Serious Games. Eng. Proc. 2023, 50, 2.

- Hume, M.C.; Gellman, H.; McKellop, H.; Brumfield, R.H., Jr. Functional Range of Motion of the Joints of the Hand. J. Hand Surg. Am. 1990, 15, 240–243.

- Yap, H.K.; Lim, J.H.; Nasrallah, F.; Yeow, C.-H. Design and Preliminary Feasibility Study of a Soft Robotic Glove for Hand Function Assistance in Stroke Survivors. Front. Neurosci. 2017, 11, 547.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).