Flash 3D Imaging of Far-Field Dynamic Objects: An EMCCD-Based Polarization Modulation System

Abstract

Highlights

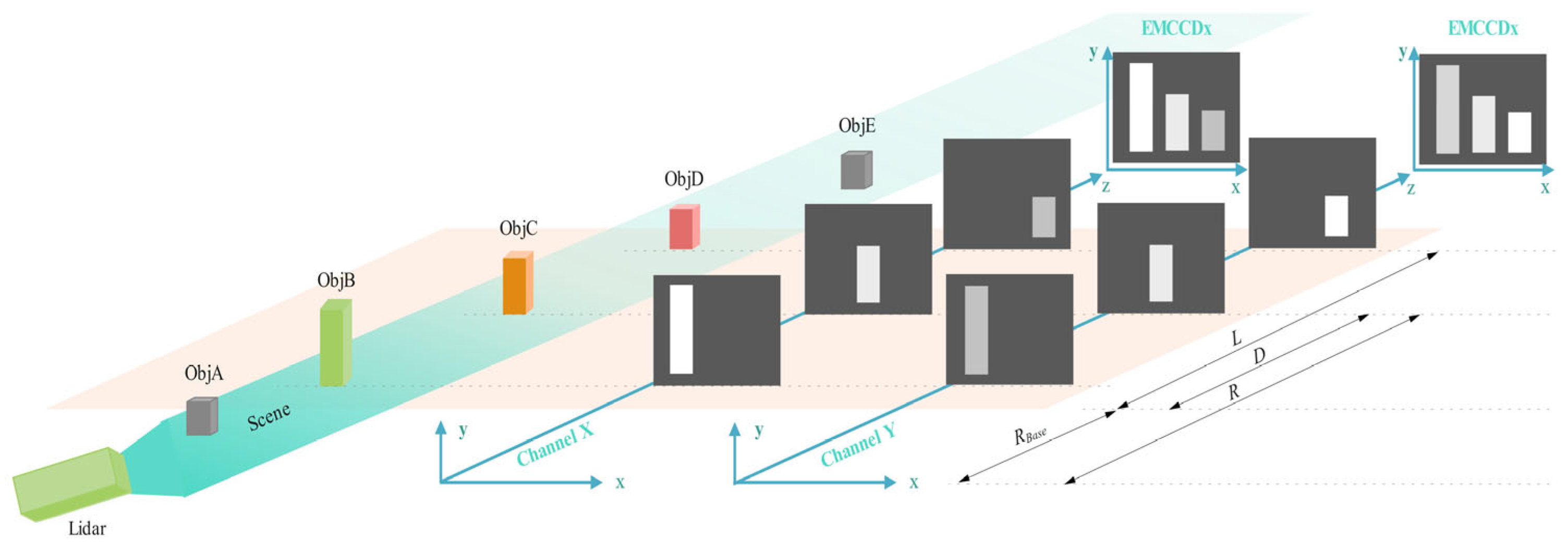

- Polarization-Modulated 3D Imaging: The key novelty is the synergy between polarization modulation and a dual-EMCCD framework. In the dual-camera structure, the ratio of the intensity in one camera to that in the other provides range information, while the sum of the intensities in both cameras provides transverse information. A 3D image of the scene is then obtained by 3D reconstruction. The advantage of this technique is that a pair of EMCCD cameras suffices for super-resolution 3D imaging: each 3D frame is reconstructed from a single frame of polarization-modulated images.

- Dual-EMCCD Framework for Dynamic Scene Reconstruction: A dual-EMCCD setup simultaneously reconstructs high-resolution depth images and grayscale intensity images from a single raw data frame. Combined with adaptive gate-opening range technology, the system achieves 10 cm range resolution for specific objects, overcoming motion-induced artifacts in dynamic environments (e.g., moving objects or platforms).

- Advancing High-Speed 3D Imaging: The EMCCD’s low bandwidth and rapid shuttering capabilities address the limitations of traditional avalanche photodiode (APD) arrays, enabling ultrafast, noise-resilient 3D imaging for applications requiring real-time depth sensing in dynamic scenarios (e.g., autonomous vehicles, robotics).

- Enhanced Versatility for Complex Environments: The dual-image reconstruction (depth + grayscale) and adaptive range gating provide motion-agnostic imaging performance, making the system robust in scenarios with rapid object/platform movement or cluttered scenes. This innovation could revolutionize applications like remote sensing of fast-moving targets where both spatial and temporal resolution are critical.
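The ratio-and-sum principle stated in the first highlight can be illustrated with a minimal sketch. The modulation model here is an assumption, not the authors' documented calibration: the retardation is taken to ramp linearly from 0 to π across the gate, so the two polarization channels carry `sin²` and `cos²` fractions of the return. The names `reconstruct`, `simulate_channels`, `gate_start_s`, and `gate_width_s` are hypothetical.

```python
import numpy as np

C = 299_792_458.0  # speed of light in vacuum, m/s


def simulate_channels(range_m, reflectance, gate_start_s, gate_width_s):
    """Forward model (assumed): linear retardation ramp 0..pi over the gate.

    Only meaningful for ranges whose round-trip time falls inside the gate.
    """
    tof = 2.0 * np.asarray(range_m, dtype=float) / C
    phase = np.pi * (tof - gate_start_s) / gate_width_s
    i1 = reflectance * np.sin(phase / 2.0) ** 2  # camera 1 channel
    i2 = reflectance * np.cos(phase / 2.0) ** 2  # camera 2 channel
    return i1, i2


def reconstruct(i1, i2, gate_start_s, gate_width_s, min_signal=1e-6):
    """Return (range_m, intensity) from one pair of channel frames."""
    i1 = np.asarray(i1, dtype=float)
    i2 = np.asarray(i2, dtype=float)
    total = i1 + i2                       # sum -> transverse (grayscale) image
    valid = total > min_signal            # skip pixels with no usable return
    ratio = np.divide(i1, total, out=np.zeros_like(total), where=valid)
    ratio = np.clip(ratio, 0.0, 1.0)      # guard against noise outside [0, 1]
    phase = 2.0 * np.arcsin(np.sqrt(ratio))            # invert sin^2(phase/2)
    tof = gate_start_s + gate_width_s * phase / np.pi  # invert the linear ramp
    range_m = np.where(valid, 0.5 * C * tof, np.nan)   # ratio -> range
    return range_m, total


if __name__ == "__main__":
    # Hypothetical scene: four pixels between 1000 m and 1001 m,
    # observed through a gate covering 999.5 m to 1001.5 m.
    true_range = np.array([[1000.0, 1000.25], [1000.5, 1001.0]])
    reflectance = np.array([[0.2, 0.9], [0.5, 0.7]])
    t0 = 2.0 * 999.5 / C
    width = 2.0 * 2.0 / C
    i1, i2 = simulate_channels(true_range, reflectance, t0, width)
    est_range, intensity = reconstruct(i1, i2, t0, width)
    print(est_range)
    print(intensity)
```

Because the range estimate depends only on the ratio, it is independent of target reflectance in this idealized model; a real system would additionally need noise handling, channel registration, and a measured modulation curve in place of the assumed linear ramp.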

1. Introduction

2. Materials and Methods

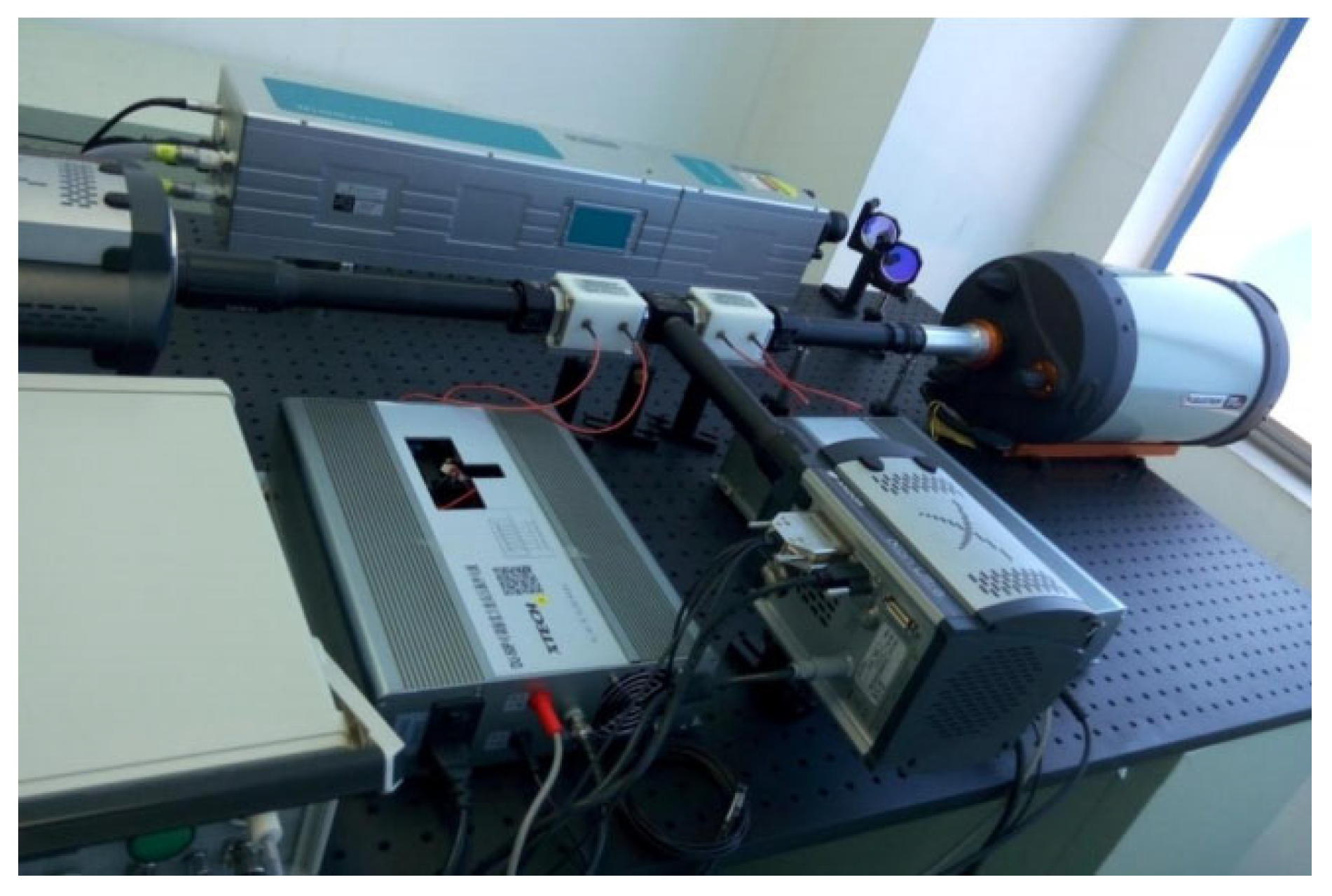

2.1. 3D Imaging Setup

2.2. Image Acquisition and Processing

2.3. 3D Structure Reconstruction

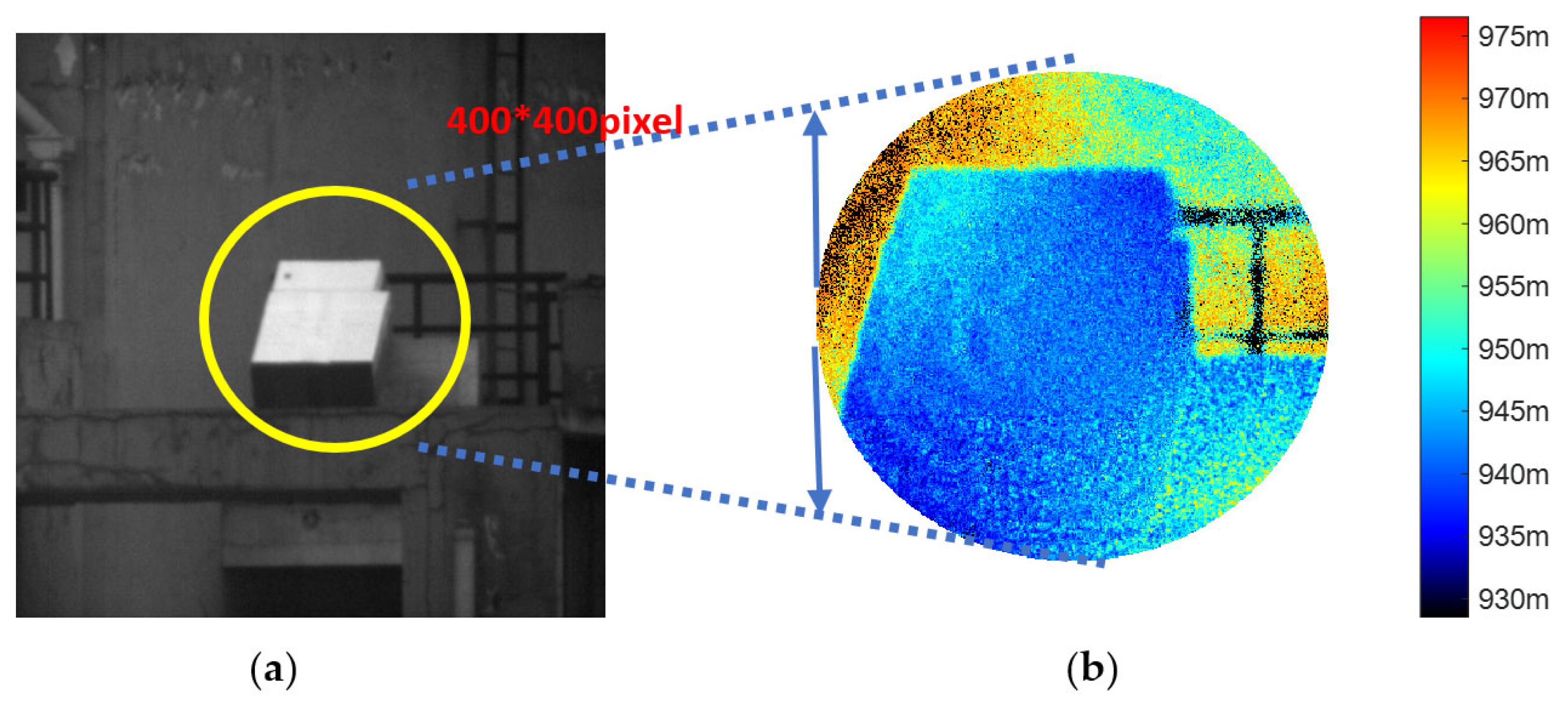

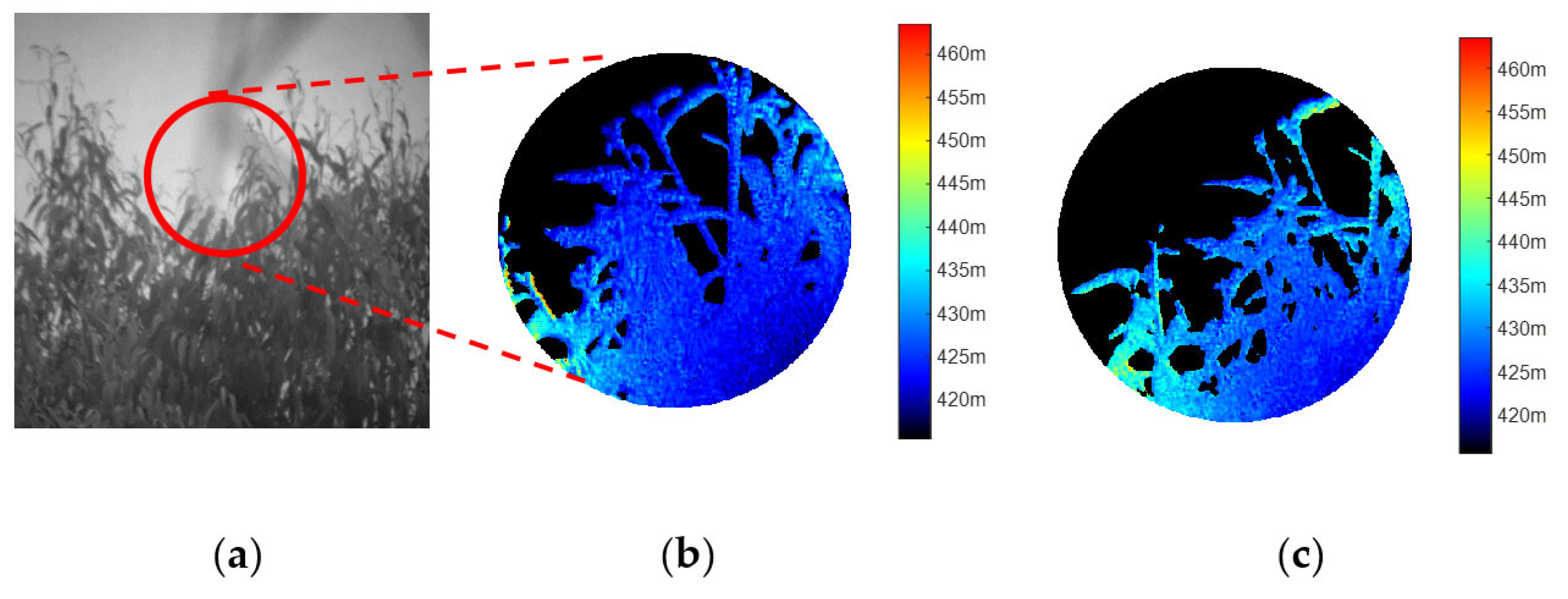

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 3D | three-dimensional |
| APD | avalanche photodiode |
| EMCCD | electron-multiplying charge-coupled device |
| SNR | signal-to-noise ratio |
| LiDAR | light detection and ranging |
| TOF | time-of-flight |
| GM-APD | Geiger-mode avalanche photodiode |
| SPAD | single-photon avalanche diode |
| TCSPC | time-correlated single-photon counting |
| ROIC | readout integrated circuit |
| ICCD | intensified charge-coupled device |
| QE | quantum efficiency |
| MCP | micro-channel plate |
| EOM | electro-optic modulator |
| NBF | narrowband filter |
| Obj | object |
| EM | electron multiplying |

| System Parameter | Value |
|---|---|
| Wavelength | 532 nm |
| Pulse energy | 200 mJ |
| Pulse duration | 8 ns |
| Frame rate | 10 Hz |
| Resolution | 1024 × 1024 pixels |
| Background light intensity | 0.1–1 lux |
| Target reflectance | 87.3% ± 1.5% |

| Characteristic/Sensor Type | Avalanche Photodiode (APD) | Intensified CCD (ICCD) | Electron-Multiplying CCD (EMCCD) |
|---|---|---|---|
| Weak-Light Sensitivity | Moderate (saturation issues) | High (but noise-affected) | Optimal (single-photon sensitivity) |
| Noise Level | Low | High (MCP-induced noise) | Ultra-low (deep cooling technology) |
| Quantum Efficiency | Not specified | Not specified | >90% (visible spectrum) |
| Spatial Resolution | Low (10–50 μm pixel pitch) | Moderate (spatial distortion) | High |
| Dynamic Response | Limited linearity | Restricted dynamic range | Wide dynamic range & linear response |
| Core Strength | Ultra-high speed (ps-level response) | Gated imaging capability | Sensitivity + spatial fidelity |
| Primary Limitation | Low resolution, saturation-prone | MCP noise, spatial distortion | High cost, moderate frame rates |
| Optimal Use Case | High-speed imaging | Time-gated imaging | Low-light 3D imaging (depth mapping) |
| Signal-to-Noise Ratio (SNR) | Moderate | MCP noise-limited | Best |
| System Compatibility | Standard circuitry | Requires sync triggering | Strong |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, S.; Yang, X.; Su, D.; Cao, W.; Zhang, X. Flash 3D Imaging of Far-Field Dynamic Objects: An EMCCD-Based Polarization Modulation System. Sensors 2025, 25, 3852. https://doi.org/10.3390/s25133852
