A Multi-Feature Fusion Approach for Road Surface Recognition Leveraging Millimeter-Wave Radar

Abstract

1. Introduction

1.1. Motivation

1.2. Literature Review

1.3. Contributions

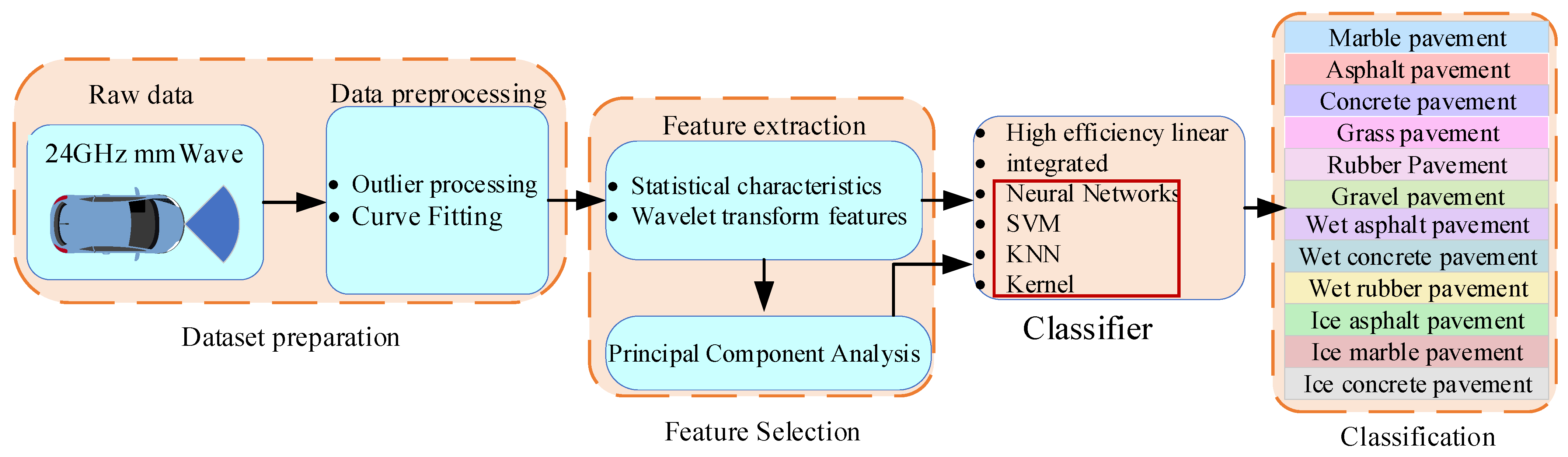

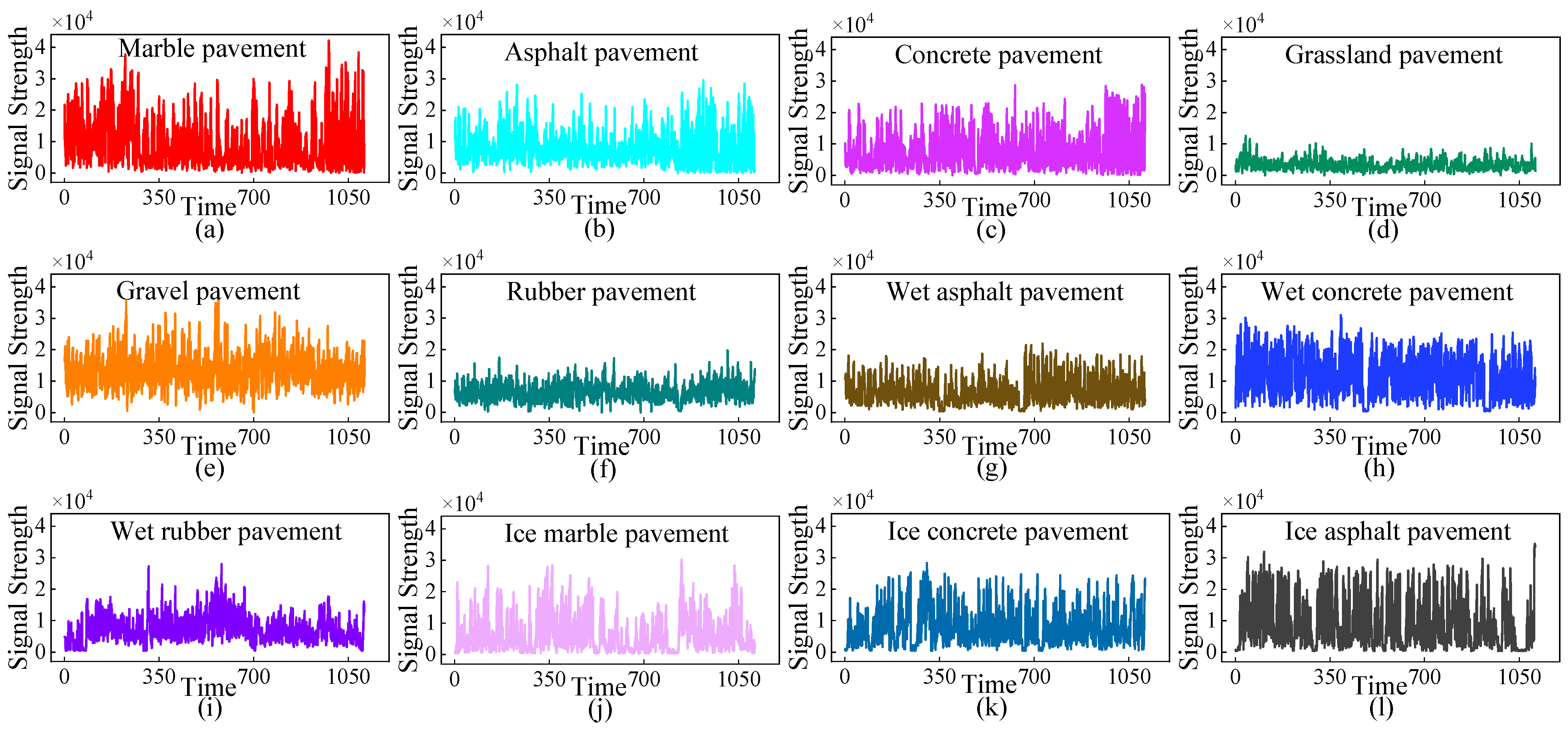

- This study explores the feasibility of using a low-cost, high-efficiency 24 GHz millimeter-wave radar to identify road surface type and state. Analysis of the radar echo signals reveals pronounced differences in echo intensity among road types (e.g., asphalt, gravel) and moisture states (dry, wet, icy). Statistical features extracted from these signals, such as the mean and variance, provide strong class separability across road conditions. The use of a 24 GHz millimeter-wave radar to identify road moisture states has rarely been explored in prior research and is a key innovation of this work.

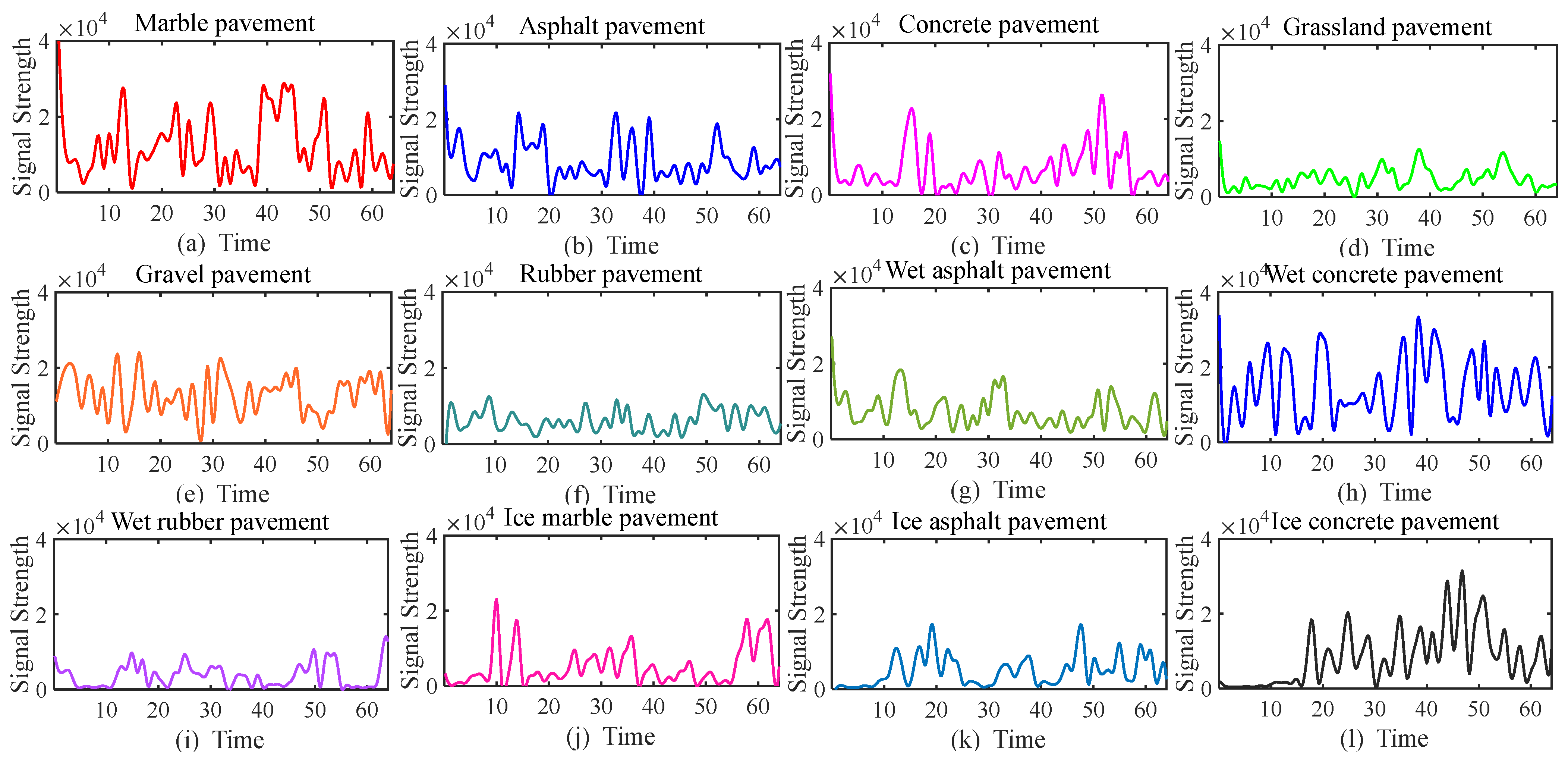

- This study reconstructs radar data as time-series sequences, converting discrete radar signals into curve-based image representations that visualize signal dynamics over time. A wavelet transform is then applied to the resulting curve images to extract multi-scale features, capturing both low-frequency trends (e.g., long-term signal patterns) and high-frequency details (e.g., transient reflections). This approach strengthens the temporal–spatial correlation of the echo information and reduces sensitivity to localized road surface irregularities or damage, providing a robust, novel framework for road surface recognition.

- Feature-level fusion is achieved by integrating wavelet transform-derived multi-scale features with statistical characteristics extracted from raw radar signals, generating richer and more discriminative feature vectors for road condition analysis. This integrated strategy significantly enhances the accuracy and robustness of road surface identification by leveraging the complementary information from both time-domain statistics and frequency-domain structural details.
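The feature-level fusion described in the contributions above can be illustrated with a minimal sketch. It is not the authors' implementation: the paper applies the wavelet transform to curve images, whereas this example, as a simplifying assumption, runs a 1-D Haar discrete wavelet transform directly on a short amplitude sequence. The function names, the choice of the Haar wavelet, the three decomposition levels, and the use of band energies as features are all hypothetical choices made for illustration.

```python
import math
import statistics


def haar_dwt(signal):
    """One level of the Haar DWT: returns (approximation, detail) coefficients."""
    if len(signal) % 2:
        # Assumption: pad odd-length input by repeating the last sample.
        signal = signal + [signal[-1]]
    s = math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) / s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / s for i in range(0, len(signal), 2)]
    return approx, detail


def wavelet_energy_features(signal, levels=3):
    """Detail-band energy at each level, followed by the final approximation energy."""
    features = []
    approx = list(signal)
    for _ in range(levels):
        approx, detail = haar_dwt(approx)
        features.append(sum(d * d for d in detail))
    features.append(sum(a * a for a in approx))
    return features


def fused_feature_vector(signal, levels=3):
    """Feature-level fusion: concatenate statistical and wavelet-derived features."""
    stats = [statistics.fmean(signal), statistics.stdev(signal)]
    return stats + wavelet_energy_features(signal, levels)


# Made-up echo amplitudes for illustration only, not measured radar data.
window = [4.0, 6.0, 10.0, 12.0, 8.0, 6.0, 5.0, 5.0]
vector = fused_feature_vector(window)
print(vector)
```

The fused vector here is simply the concatenation of two statistics with four wavelet band energies. In practice the two feature groups would likely need scaling to comparable ranges before being passed to a classifier.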

1.4. Paper Organization

2. Feature Extraction Based on Statistics

2.1. Road Surface Separability Analysis of 24 GHz Millimeter-Wave Radar

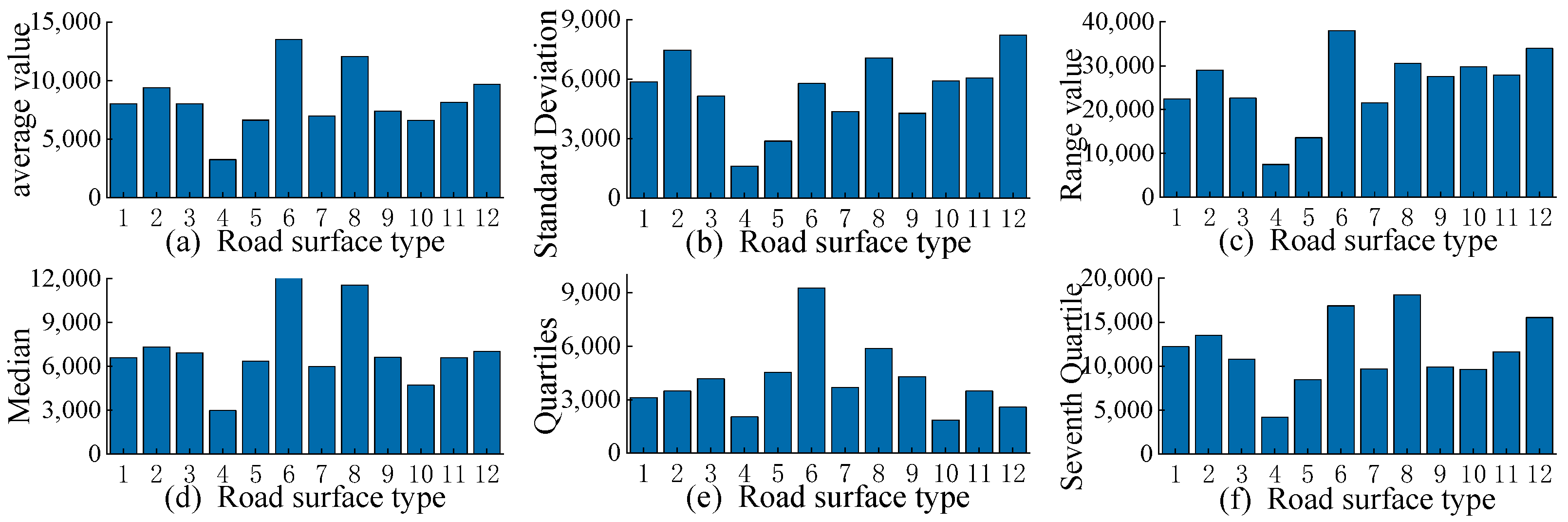

2.2. Statistical Feature Extraction

1. Mean (μ): The arithmetic mean of the sample signal strength, calculated as $\mu = \frac{1}{n}\sum_{i=1}^{n} x_i$, where $x_i$ denotes the $i$-th echo amplitude in the sample and $n$ the number of values.

2. Standard Deviation (σ): The dispersion of the sample signal strength about the mean, based on the unbiased ($n-1$) variance estimate and calculated as $\sigma = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}(x_i - \mu)^2}$.

3. Range: The difference between the maximum and minimum values, calculated as $R = x_{\max} - x_{\min}$.

4. Median: The middle value after all echo amplitude values are arranged in ascending order (the average of the two middle values when the number of values is even).

5. First Quartile (Q1): With all values in the sample arranged in ascending order and divided into four equal parts, the value at approximately the 25% position.

6. Third Quartile (Q3): With all values in the sample arranged in ascending order and divided into four equal parts, the value at approximately the 75% position.
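The statistics listed above can be computed with Python's standard `statistics` module, as in the following minimal sketch. The function name `statistical_features` and the sample window are hypothetical. The quartile interpolation rule (`method="inclusive"`) is also an assumption, since the text only says the quartile is the value "around" the given percentage.

```python
import statistics


def statistical_features(echo):
    """Return six descriptive statistics for one window of echo amplitudes."""
    # quantiles(n=4) returns the three cut points: lower quartile, median, upper quartile.
    q1, median, q3 = statistics.quantiles(echo, n=4, method="inclusive")
    return {
        "mean": statistics.fmean(echo),
        "std": statistics.stdev(echo),    # sample standard deviation (n - 1 denominator)
        "range": max(echo) - min(echo),
        "median": median,
        "q1": q1,                         # value at roughly the 25% position
        "q3": q3,                         # value at roughly the 75% position
    }


# Made-up amplitudes for illustration only, not measured radar data.
window = [1, 2, 3, 4, 5, 6, 7, 8, 9]
features = statistical_features(window)
print(features)
```

A different quartile convention (for example `method="exclusive"`) would give slightly different quartile values on short windows, so the convention should be kept fixed across training and test data.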

3. Feature Extraction Utilizing Wavelet Transform

3.1. Curve Fitting Graphical Modeling

3.2. Feature Extraction Based on Wavelet Transform

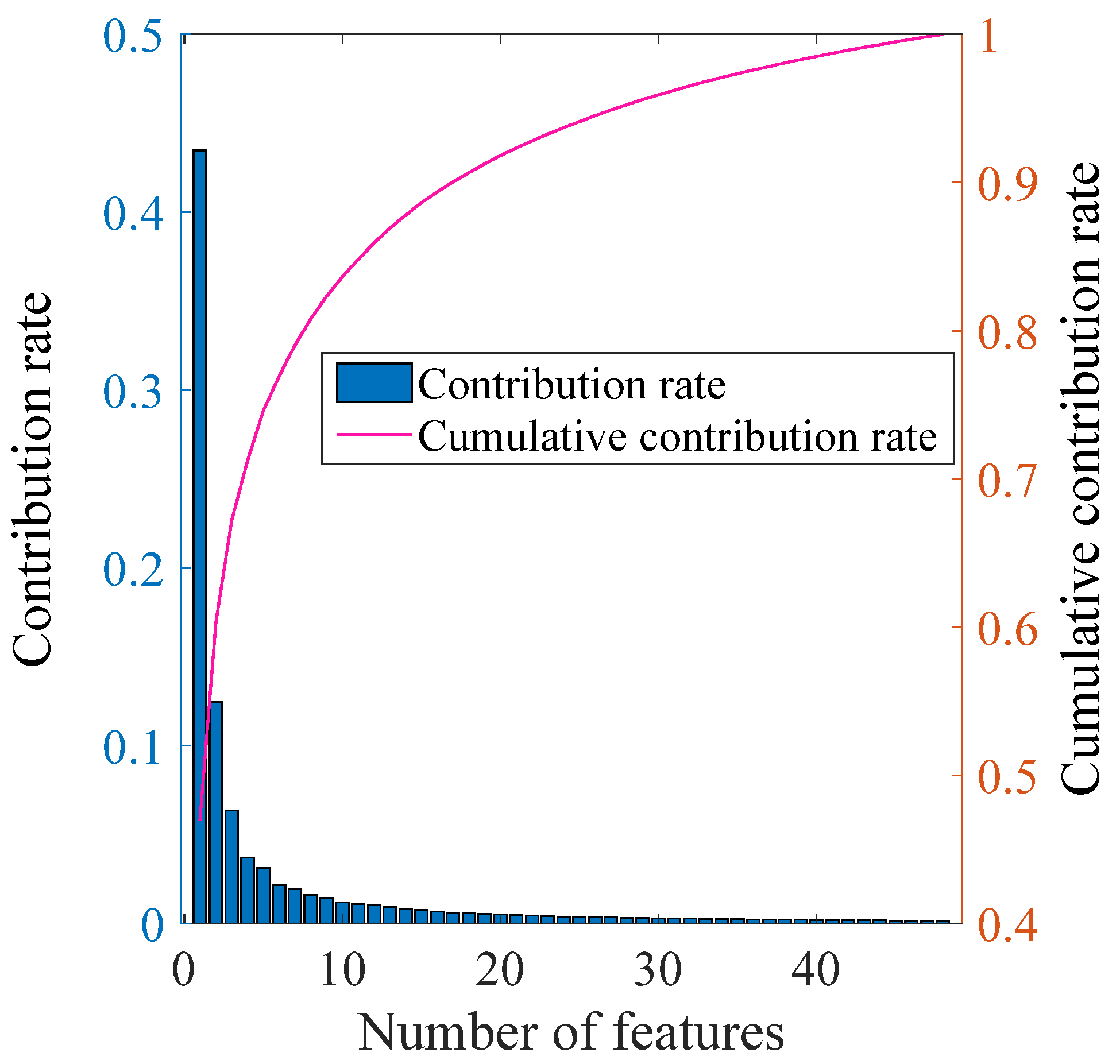

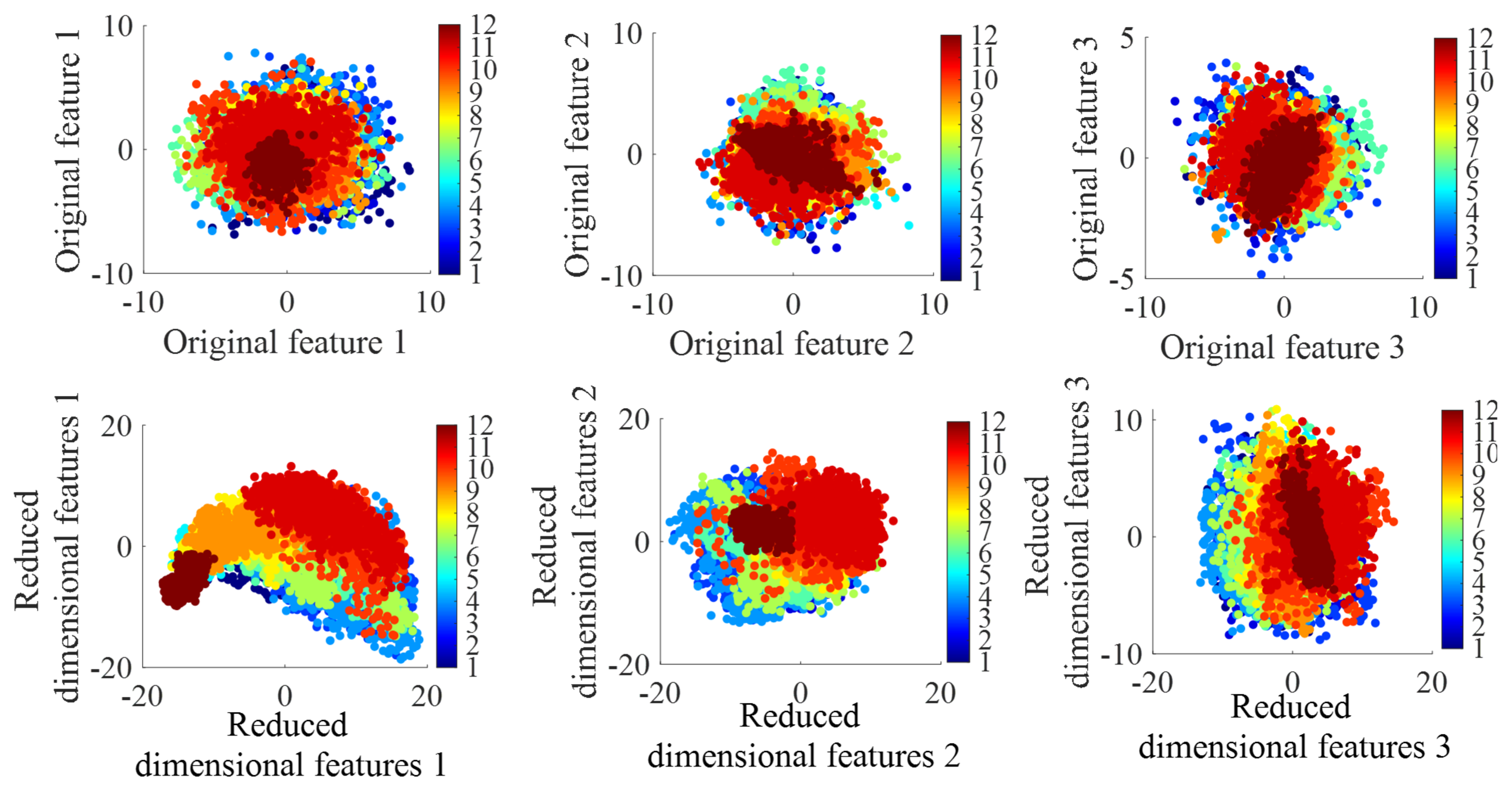

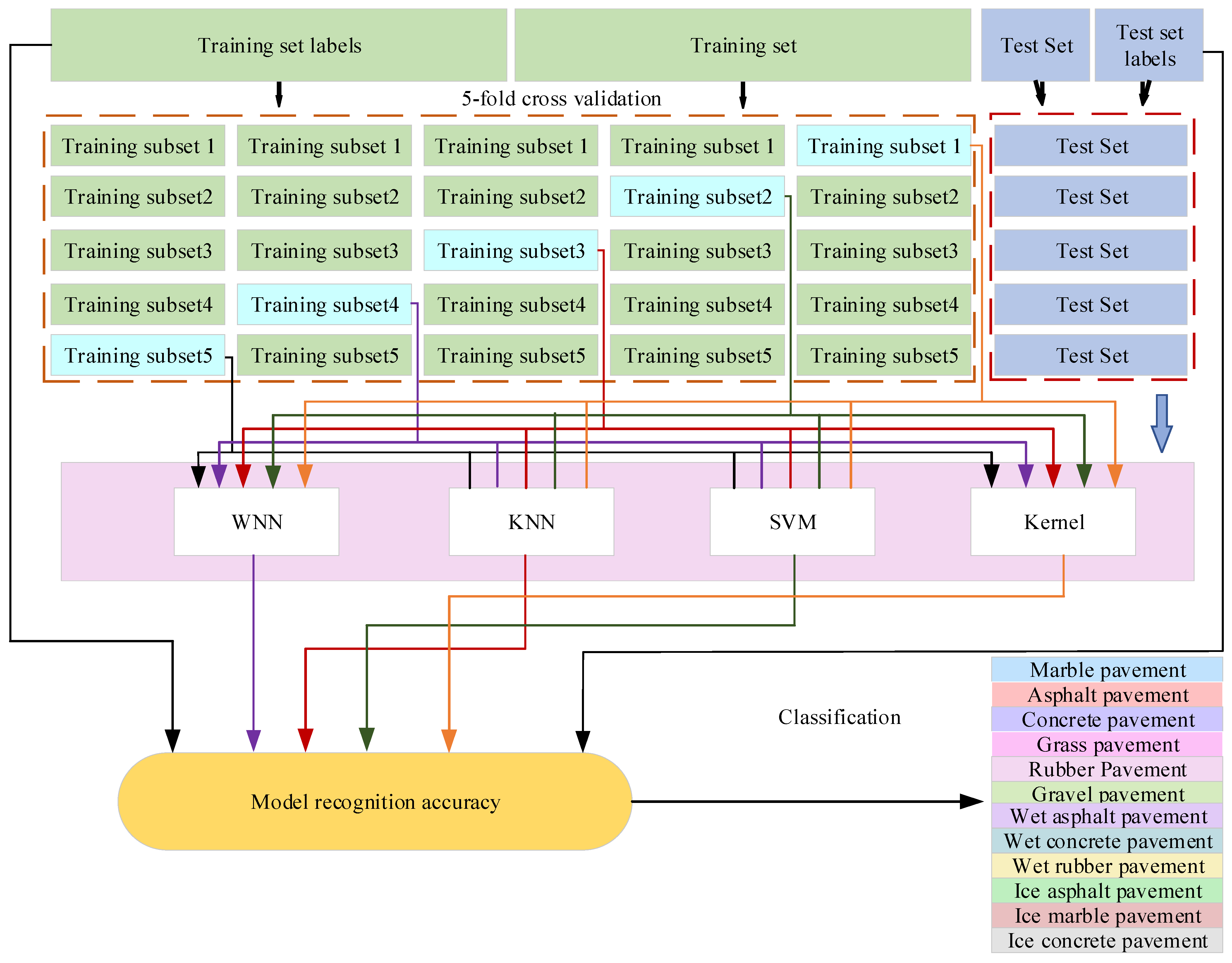

4. Feature-Level Fusion and Machine Learning Classifier

4.1. Dataset Construction

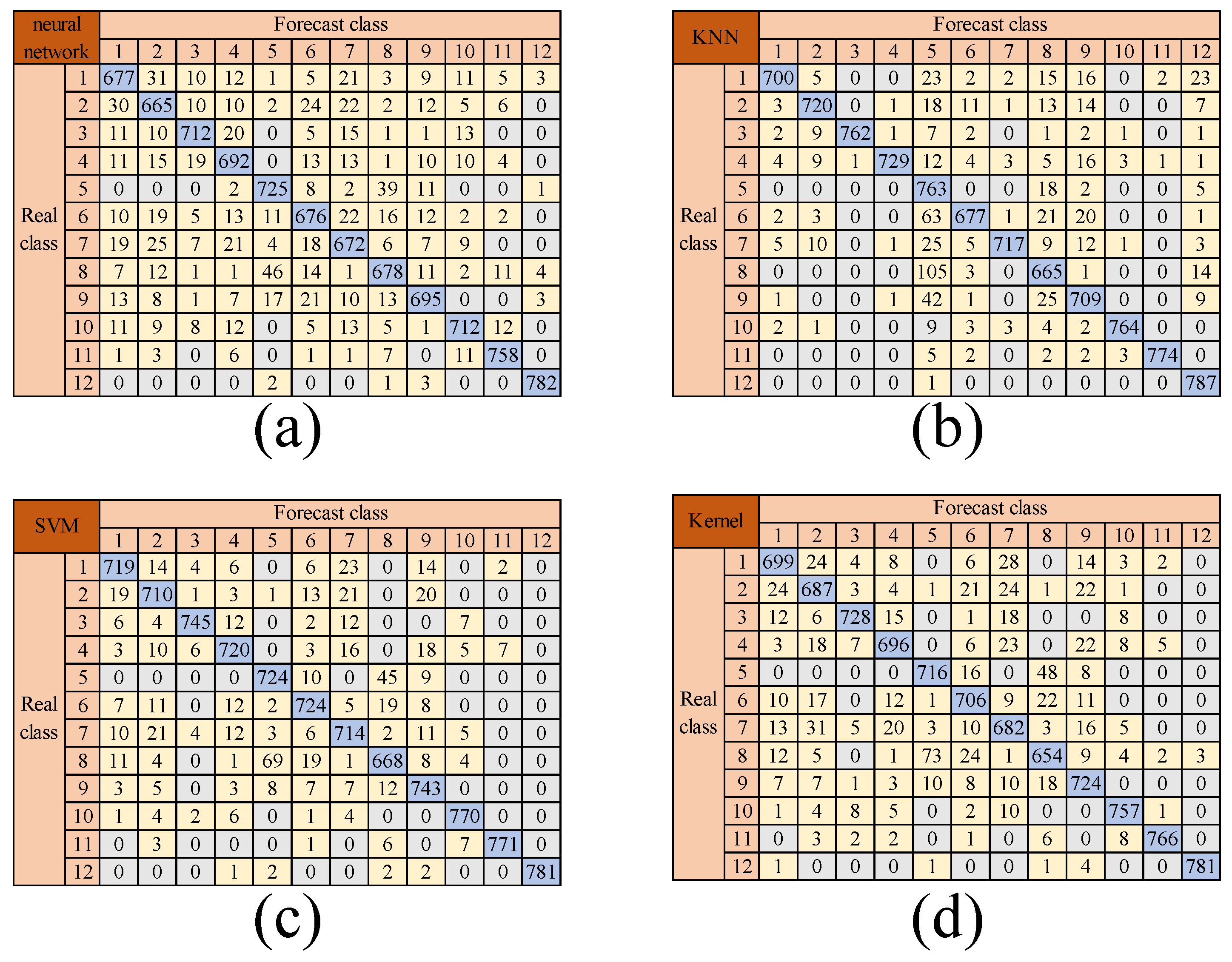

4.2. Road Surface Recognition Classifier Training

5. Real Vehicle Test Verification

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| WNN | Multidisciplinary Digital Publishing Institute |

| KNN | K-Nearest Neighbors |

| SVM | Support Vector Machine |

| CNN | Convolutional Neural Network |

| RBF | Radial Basis Function |

| BP | Backpropagation |

| MFCC | Mel-Frequency Cepstral Coefficient |

| ANN | Artificial Neural Network |

| CWT | Continuous Wavelet Transform |

| DWT | Discrete Wavelet Transform |

| PCA | Principal Component Analysis |

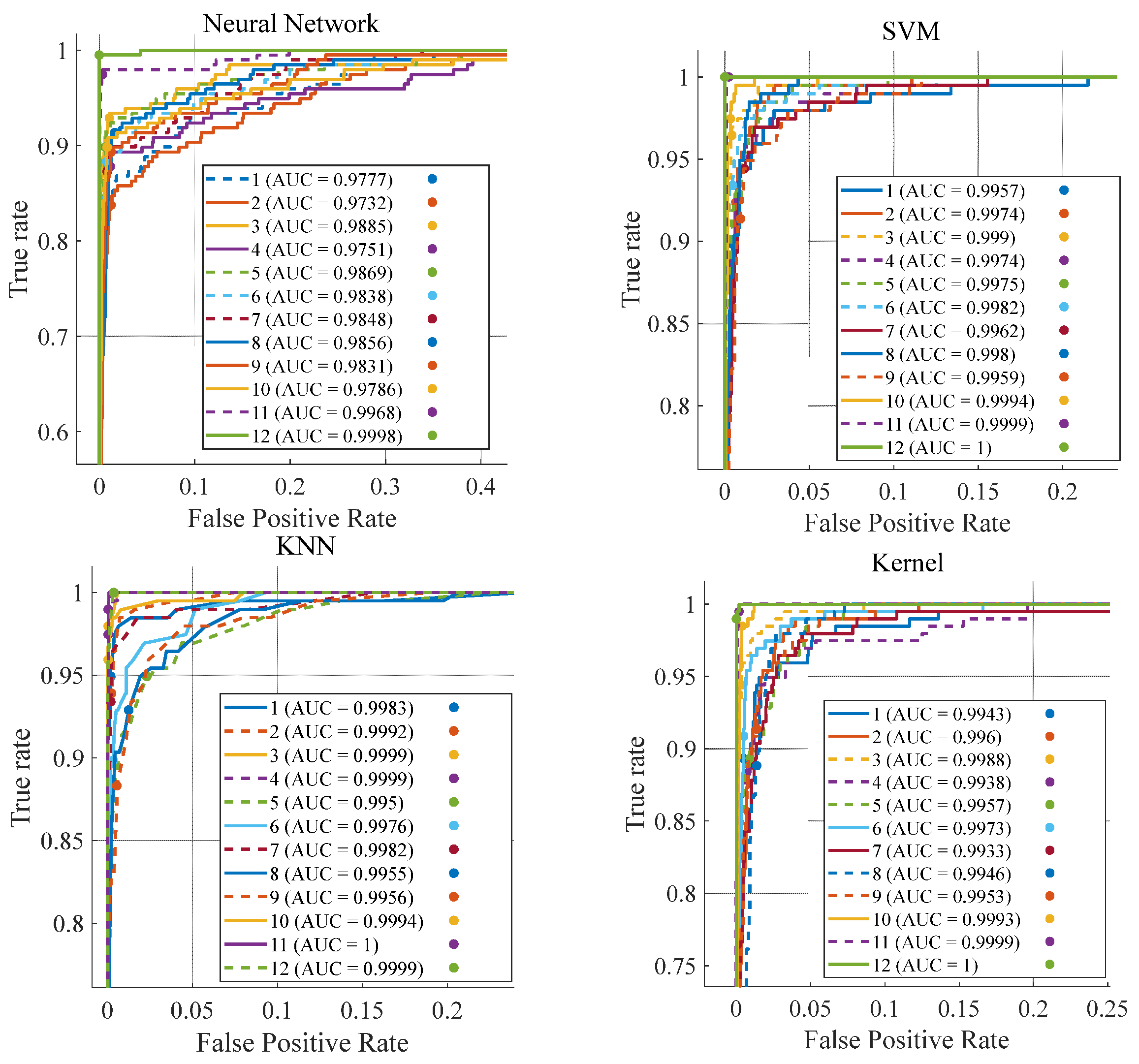

| ROC | Receiver Operating Characteristic |

| TPR | True Positive Rate |

| FPR | False Positive Rate |

| AUC | Area Under the Curve |


| Road Surface Type | Mean | Standard Deviation | Range | Median | First Quartile (Q1) | Third Quartile (Q3) |
|---|---|---|---|---|---|---|
| Concrete | 8020.94 | 5872.46 | 22,421.27 | 6606.87 | 3124.5 | 12,216.23 |
| Marble | 9403.71 | 7466.94 | 29,010.04 | 7321.58 | 3483.61 | 13,524 |
| Asphalt | 8031.41 | 5146.02 | 22,615.45 | 6933.04 | 4176.95 | 10,811.43 |
| Grass | 3273.85 | 1622.93 | 7494.25 | 2987.36 | 2046.17 | 4215.78 |
| Rubber | 6634.26 | 2890.66 | 13,612.79 | 6347.72 | 4543.9 | 8465.43 |
| Gravel | 13,499.05 | 5793.34 | 38,027 | 12,686 | 9247 | 16,850.25 |
| Wet asphalt | 6992.37 | 4368.66 | 21,527 | 5978 | 3695.25 | 9702.75 |
| Wet concrete | 12,057.94 | 7075.07 | 30,550 | 11,537 | 5881.75 | 18,081.5 |
| Wet rubber | 7397.6 | 4287.82 | 27,568 | 6623 | 4294.75 | 9919.5 |
| Ice marble | 6623.7 | 5910.18 | 29,734 | 4719 | 1857.75 | 9635.25 |
| Ice asphalt | 8163.09 | 6072.19 | 27,927 | 6609 | 3489.75 | 11,620.75 |
| Ice concrete | 9677.6 | 8224.39 | 33,982 | 7018 | 2579.75 | 15,509.25 |

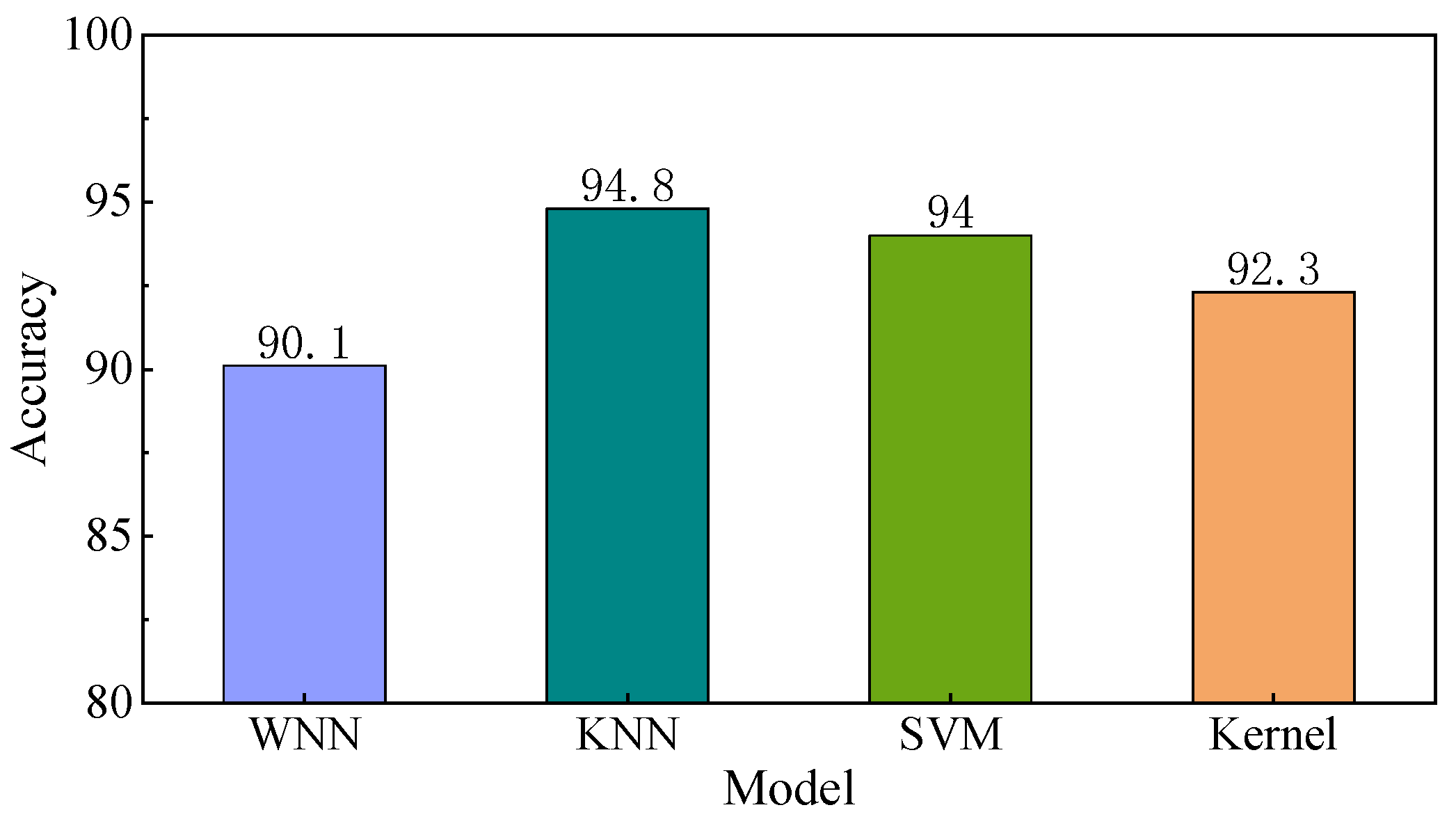

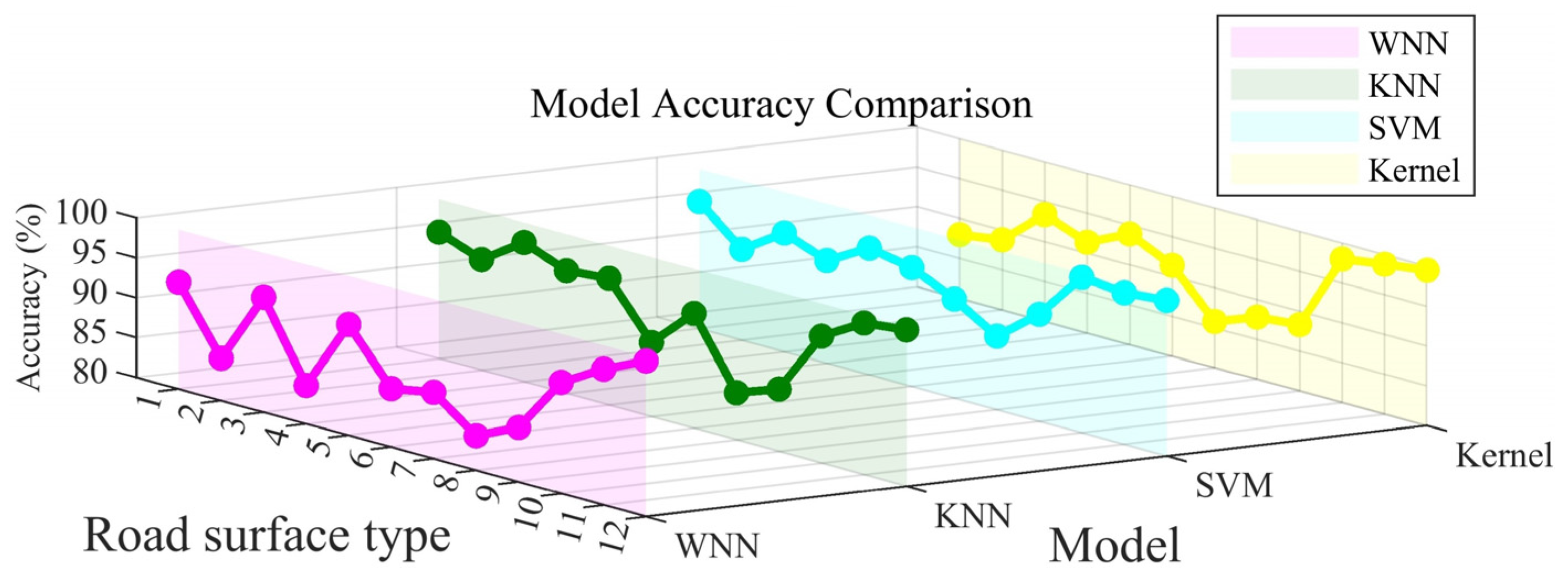

| Road Surface Type | WNN (%) | KNN (%) | SVM (%) | Kernel (%) |
|---|---|---|---|---|
| Ice marble | 84 | 88.8 | 90.9 | 86.5 |
| Ice asphalt | 82 | 91.9 | 91.8 | 86.7 |
| Ice concrete | 90.5 | 95.1 | 94.4 | 92.6 |
| Marble | 85.9 | 92.6 | 91.1 | 87.2 |
| Rubber | 89.7 | 95.4 | 91.6 | 89.8 |
| Asphalt | 89.2 | 87.7 | 92.6 | 89.7 |
| Concrete | 84.4 | 89.6 | 90.6 | 86.8 |
| Wet rubber | 87.2 | 85.9 | 85.2 | 81.9 |
| Wet asphalt | 89.1 | 89 | 93.3 | 91.4 |
| Wet concrete | 90 | 95.6 | 97.6 | 96.2 |
| Gravel | 95.1 | 98.1 | 97.8 | 97.8 |
| Grass | 98.5 | 99.6 | 98.7 | 98.7 |
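To compare the classifiers in the table above at a glance, the per-surface figures can be averaged, as in the short sketch below. This is plain arithmetic on the tabulated values. It yields an unweighted (macro) average over the twelve surface classes, which is not necessarily the overall accuracy reported by the authors, because the number of test samples per class is not given here. The variable names are hypothetical.

```python
# Per-surface accuracy (%) copied from the table, in row order:
# ice marble, ice asphalt, ice concrete, marble, rubber, asphalt,
# concrete, wet rubber, wet asphalt, wet concrete, gravel, grass.
accuracy = {
    "WNN": [84, 82, 90.5, 85.9, 89.7, 89.2, 84.4, 87.2, 89.1, 90, 95.1, 98.5],
    "KNN": [88.8, 91.9, 95.1, 92.6, 95.4, 87.7, 89.6, 85.9, 89, 95.6, 98.1, 99.6],
    "SVM": [90.9, 91.8, 94.4, 91.1, 91.6, 92.6, 90.6, 85.2, 93.3, 97.6, 97.8, 98.7],
    "Kernel": [86.5, 86.7, 92.6, 87.2, 89.8, 89.7, 86.8, 81.9, 91.4, 96.2, 97.8, 98.7],
}

# Unweighted mean over the twelve classes for each classifier.
macro_average = {name: sum(values) / len(values) for name, values in accuracy.items()}

# Classifier with the highest unweighted mean.
best = max(macro_average, key=macro_average.get)

for name, value in macro_average.items():
    print(f"{name}: {value:.2f}%")
print("Highest macro-average:", best)
```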

| Parameter | Value |
|---|---|
| Frequency (GHz) | 24 |
| Update period (ms) | 50 |
| Actual detection distance (m) | <50 |
| Transmit power (dBm) | 10 |
| Output voltage (V) | 8–16 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qiu, Z.; Shao, J.; Guo, D.; Yin, X.; Zhai, Z.; Duan, Z.; Xu, Y. A Multi-Feature Fusion Approach for Road Surface Recognition Leveraging Millimeter-Wave Radar. Sensors 2025, 25, 3802. https://doi.org/10.3390/s25123802
