SGF-SLAM: Semantic Gaussian Filtering SLAM for Urban Road Environments

Abstract

1. Introduction

- We designed a new multi-task frontend network that integrates semantic segmentation and feature point detection, and trained it with a tailored loss function, achieving both fast and accurate performance.

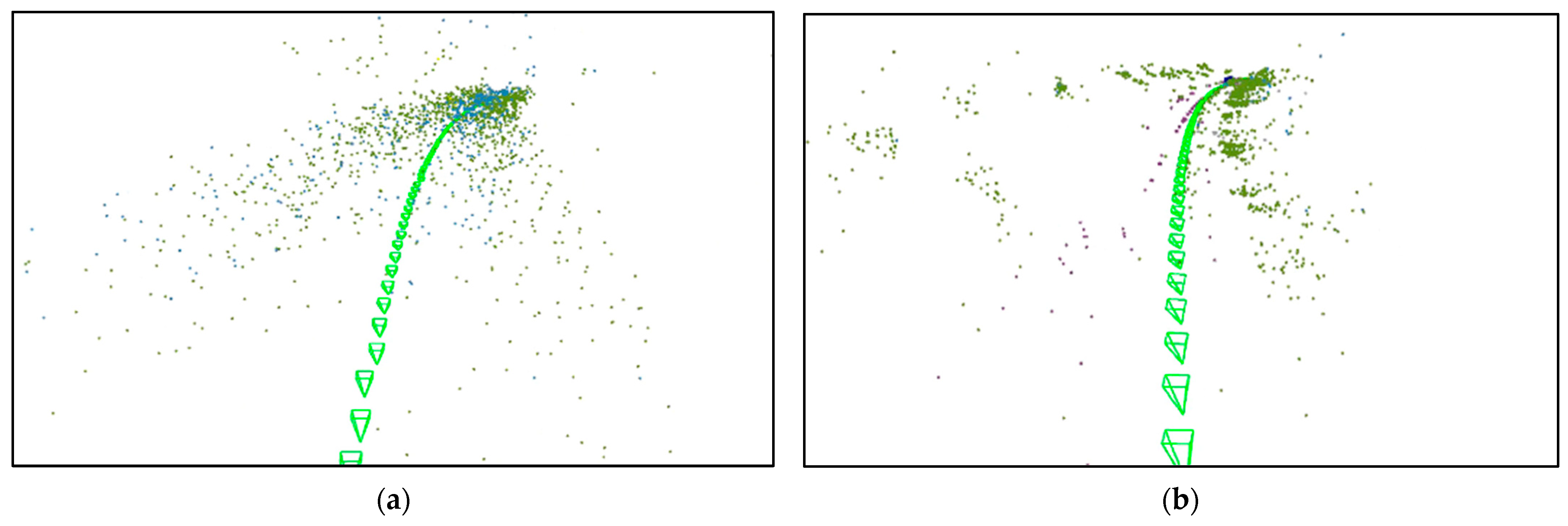

- We proposed a Semantic Gaussian Filter for street environments and designed a dynamic mapping framework that effectively masks out dynamic objects whose presence in the scene changes over time.

- We used semantic information to continuously update the map in areas occluded by dynamic objects, which provides stable navigation services and map rendering capabilities.
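The contributions above state that semantic information is used to keep dynamic objects out of the map, without giving the mechanism at this point. As a rough illustration only, the sketch below shows one simple form of semantic gating: keypoints that land on dynamic-class pixels are discarded, and 3D Gaussians whose centers project onto dynamic-class pixels are flagged. The class IDs (Cityscapes trainIds 11 to 18), the pinhole projection, and the function names `filter_static_keypoints` and `flag_dynamic_gaussians` are assumptions made for this example; this is not the authors' Semantic Gaussian Filter.

```python
import numpy as np

# Assumption: labels follow the Cityscapes trainId convention, where
# 11-18 are person, rider, car, truck, bus, train, motorcycle, bicycle.
DYNAMIC_TRAIN_IDS = np.arange(11, 19)


def filter_static_keypoints(keypoints, label_map, dynamic_ids=DYNAMIC_TRAIN_IDS):
    """Drop keypoints that fall on pixels labelled as a dynamic class.

    keypoints: (N, 2) array of (x, y) pixel coordinates.
    label_map: (H, W) integer semantic label image.
    Returns the static keypoints and the boolean keep mask.
    """
    kp = np.asarray(keypoints, dtype=np.float64).reshape(-1, 2)
    h, w = label_map.shape
    # Round to the nearest pixel and clamp to the image bounds.
    xs = np.clip(np.round(kp[:, 0]).astype(int), 0, w - 1)
    ys = np.clip(np.round(kp[:, 1]).astype(int), 0, h - 1)
    keep = ~np.isin(label_map[ys, xs], dynamic_ids)
    return kp[keep], keep


def flag_dynamic_gaussians(means_w, T_cw, K, label_map, dynamic_ids=DYNAMIC_TRAIN_IDS):
    """Flag Gaussians whose centers project onto dynamic-class pixels.

    means_w: (M, 3) Gaussian centers in world coordinates.
    T_cw: (4, 4) world-to-camera transform; K: (3, 3) pinhole intrinsics.
    Gaussians behind the camera or outside the image are not flagged.
    """
    means_w = np.asarray(means_w, dtype=np.float64).reshape(-1, 3)
    pc = means_w @ T_cw[:3, :3].T + T_cw[:3, 3]  # world -> camera frame
    in_front = pc[:, 2] > 1e-6
    uvw = pc @ K.T  # homogeneous pixel coordinates
    z = np.where(in_front, uvw[:, 2], 1.0)  # avoid dividing by depth <= 0
    u = np.round(uvw[:, 0] / z).astype(int)
    v = np.round(uvw[:, 1] / z).astype(int)
    h, w = label_map.shape
    visible = in_front & (u >= 0) & (u < w) & (v >= 0) & (v < h)
    flags = np.zeros(len(means_w), dtype=bool)
    flags[visible] = np.isin(label_map[v[visible], u[visible]], dynamic_ids)
    return flags
```

Flagged Gaussians could then be excluded from optimization or rendering for that frame; the actual filtering and map-update rules of SGF-SLAM (Sections 2.3 and 2.4) may differ.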

2. Materials and Methods

2.1. Multi-Task Network

2.2. Network Loss

2.3. Semantic Gaussian Filter

2.4. Map Update and Reload

3. Results

3.1. Setup

3.2. Evaluation of Frontend Network

3.3. Experiments on Odometry Accuracy

3.4. Experiments on Rendering

3.5. Experiments on Campus Datasets

3.6. Experiments on Map Update and Reload

3.7. Ablation Experiments

- Baseline-A: Feature point detection network only. The semantic segmentation branch is removed, and the feature point detection network is constructed purely with the C2F module.

- Baseline-A1: Similar to Baseline-A, but with reduced Conv4 and C2F_4 layers.

- Baseline-B: Semantic segmentation network only. The feature point detection branch is removed, keeping only the STDC-seg backbone, which outputs a standard semantic segmentation map.

- Naive Fusion: Simple parallel training. The C2F and STDC-seg branches run in parallel without any multi-task feature-interaction mechanism.

- Proposed Full Model: The full fusion model, in which a shared input head network feeds a dual-branch architecture with the C2F and STDC-seg branches running in parallel.
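Baseline-B, Naive Fusion, and the full model are compared on segmentation quality through the mIoU column of the ablation table. The computation is not spelled out here; the sketch below uses the usual confusion-matrix definition (per-class IoU = TP / (TP + FP + FN), averaged over the classes that actually occur), assuming an ignore label of 255 as in Cityscapes trainId maps. The function names are illustrative, not taken from the authors' code.

```python
import numpy as np


def confusion_matrix(pred, gt, num_classes, ignore_index=255):
    """Rows index ground-truth classes, columns index predicted classes."""
    pred = np.asarray(pred).ravel()
    gt = np.asarray(gt).ravel()
    valid = gt != ignore_index  # skip unlabeled pixels
    idx = gt[valid].astype(np.int64) * num_classes + pred[valid].astype(np.int64)
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def mean_iou(conf):
    """Mean IoU over classes that appear in either prediction or ground truth."""
    tp = np.diag(conf).astype(np.float64)
    fp = conf.sum(axis=0) - tp  # predicted as c, labelled otherwise
    fn = conf.sum(axis=1) - tp  # labelled c, predicted otherwise
    denom = tp + fp + fn
    valid = denom > 0
    if not valid.any():
        return float("nan")
    return float((tp[valid] / denom[valid]).mean())
```

In practice the confusion matrix is accumulated over the whole validation set before `mean_iou` is called, so that large and small images are weighted by pixel count rather than averaged per image.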

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Qin, T.; Li, P.; Shen, S. VINS-Mono: A robust and versatile monocular visual-inertial state estimator. IEEE Trans. Robot. 2018, 34, 1004–1020.

2. Kerbl, B.; Kopanas, G.; Leimkühler, T.; Drettakis, G. 3D Gaussian splatting for real-time radiance field rendering. ACM Trans. Graph. 2023, 42, 139:1–139:14.

3. Fisher, A.; Cannizzaro, R.; Cochrane, M.; Nagahawatte, C.; Palmer, J.L. ColMap: A memory-efficient occupancy grid mapping framework. Robot. Auton. Syst. 2021, 142, 103755.

4. Liao, Y.; Xie, J.; Geiger, A. KITTI-360: A novel dataset and benchmarks for urban scene understanding in 2D and 3D. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 3292–3310.

5. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

6. Yan, C.; Qu, D.; Xu, D.; Zhao, B.; Wang, Z.; Wang, D.; Li, X. GS-SLAM: Dense visual SLAM with 3D Gaussian splatting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16 June 2024; pp. 19595–19604.

7. Matsuki, H.; Murai, R.; Kelly, P.H.; Davison, A.J. Gaussian splatting SLAM. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16 June 2024; pp. 18039–18048.

8. Straub, J.; Whelan, T.; Ma, L.; Chen, Y.; Wijmans, E.; Green, S.; Engel, J.J.; Mur-Artal, R.; Ren, C.; Verma, S. The Replica dataset: A digital replica of indoor spaces. arXiv 2019, arXiv:1906.05797.

9. Schubert, D.; Goll, T.; Demmel, N.; Usenko, V.; Stückler, J.; Cremers, D. The TUM VI benchmark for evaluating visual-inertial odometry. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1680–1687.

10. Keetha, N.; Karhade, J.; Jatavallabhula, K.M.; Yang, G.; Scherer, S.; Ramanan, D.; Luiten, J. SplaTAM: Splat, track & map 3D Gaussians for dense RGB-D SLAM. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16 June 2024; pp. 21357–21366.

11. Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. NeRF: Representing scenes as neural radiance fields for view synthesis. Commun. ACM 2021, 65, 99–106.

12. Ha, S.; Yeon, J.; Yu, H. RGBD GS-ICP SLAM. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 180–197.

13. Huang, H.; Li, L.; Cheng, H.; Yeung, S.-K. Photo-SLAM: Real-time simultaneous localization and photorealistic mapping for monocular, stereo, and RGB-D cameras. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16 June 2024; pp. 21584–21593.

14. Homeyer, C.; Begiristain, L.; Schnörr, C. DROID-Splat: Combining end-to-end SLAM with 3D Gaussian Splatting. arXiv 2024, arXiv:2411.17660.

15. Deng, T.; Chen, Y.; Zhang, L.; Yang, J.; Yuan, S.; Liu, J.; Wang, D.; Wang, H.; Chen, W. Compact 3D Gaussian splatting for dense visual SLAM. arXiv 2024, arXiv:2403.11247.

16. Fei, B.; Xu, J.; Zhang, R.; Zhou, Q.; Yang, W.; He, Y. 3D Gaussian splatting as new era: A survey. IEEE Trans. Vis. Comput. Graph. 2024, 1–20.

17. Liu, Y.; Chen, X.; Yan, S.; Cui, Z.; Xiao, H.; Liu, Y.; Zhang, M. ThermalGS: Dynamic 3D Thermal Reconstruction with Gaussian Splatting. Remote Sens. 2025, 17, 335.

18. Liu, Y.; Luo, C.; Fan, L.; Wang, N.; Peng, J.; Zhang, Z. CityGaussian: Real-time high-quality large-scale scene rendering with Gaussians. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 265–282.

19. Lin, J.; Li, Z.; Tang, X.; Liu, J.; Liu, S.; Liu, J.; Lu, Y.; Wu, X.; Xu, S.; Yan, Y. VastGaussian: Vast 3D Gaussians for large scene reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16 June 2024; pp. 5166–5175.

20. Liu, Y.; Luo, C.; Mao, Z.; Peng, J.; Zhang, Z. CityGaussianV2: Efficient and Geometrically Accurate Reconstruction for Large-Scale Scenes. arXiv 2024, arXiv:2411.00771.

21. Yan, Y.; Lin, H.; Zhou, C.; Wang, W.; Sun, H.; Zhan, K.; Lang, X.; Zhou, X.; Peng, S. Street Gaussians: Modeling dynamic urban scenes with Gaussian splatting. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 156–173.

22. Zhou, H.; Shao, J.; Xu, L.; Bai, D.; Qiu, W.; Liu, B.; Wang, Y.; Geiger, A.; Liao, Y. HUGS: Holistic urban 3D scene understanding via Gaussian splatting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16 June 2024; pp. 21336–21345.

23. Huang, N.; Wei, X.; Zheng, W.; An, P.; Lu, M.; Zhan, W.; Tomizuka, M.; Keutzer, K.; Zhang, S. S³Gaussian: Self-Supervised Street Gaussians for Autonomous Driving. arXiv 2024, arXiv:2405.20323.

24. Xiao, R.; Liu, W.; Chen, Y.; Hu, L. LiV-GS: LiDAR-Vision Integration for 3D Gaussian Splatting SLAM in Outdoor Environments. IEEE Robot. Autom. Lett. 2024, 10, 421–428.

25. Shen, J.; Yu, H.; Wu, J.; Yang, W.; Xia, G.-S. LiDAR-enhanced 3D Gaussian Splatting Mapping. arXiv 2025, arXiv:2503.05425.

26. Jiang, C.; Gao, R.; Shao, K.; Wang, Y.; Xiong, R.; Zhang, Y. LI-GS: Gaussian splatting with LiDAR incorporated for accurate large-scale reconstruction. IEEE Robot. Autom. Lett. 2024, 10, 1864–1871.

27. Wang, S.; Leroy, V.; Cabon, Y.; Chidlovskii, B.; Revaud, J. DUSt3R: Geometric 3D vision made easy. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16 June 2024; pp. 20697–20709.

28. Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.; Tardós, J.D. ORB-SLAM3: An accurate open-source library for visual, visual–inertial, and multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

29. Fan, M.; Lai, S.; Huang, J.; Wei, X.; Chai, Z.; Luo, J.; Wei, X. Rethinking BiSeNet for real-time semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 9716–9725.

30. Varghese, R.; Sambath, M. YOLOv8: A novel object detection algorithm with enhanced performance and robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

31. Potje, G.; Cadar, F.; Araujo, A.; Martins, R.; Nascimento, E.R. XFeat: Accelerated features for lightweight image matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16 June 2024; pp. 2682–2691.

32. DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-supervised interest point detection and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 224–236.

33. Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

34. Kerbl, B.; Meuleman, A.; Kopanas, G.; Wimmer, M.; Lanvin, A.; Drettakis, G. A hierarchical 3D Gaussian representation for real-time rendering of very large datasets. ACM Trans. Graph. 2024, 43, 1–15.

35. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

36. Balntas, V.; Lenc, K.; Vedaldi, A.; Mikolajczyk, K. HPatches: A benchmark and evaluation of handcrafted and learned local descriptors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5173–5182.

37. Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

38. Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A benchmark for the evaluation of RGB-D SLAM systems. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 573–580.

39. Cabon, Y.; Murray, N.; Humenberger, M. Virtual KITTI 2. arXiv 2020, arXiv:2001.10773.

40. Zhao, X.; Wu, X.; Miao, J.; Chen, W.; Chen, P.C.; Li, Z. ALIKE: Accurate and lightweight keypoint detection and descriptor extraction. IEEE Trans. Multimed. 2022, 25, 3101–3112.

41. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.-Y. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 4015–4026.

| Methods | Acc (5°) ↑ | Acc (10°) * ↑ | Descriptor dim ↓ | FPS ↑ |
|---|---|---|---|---|
| ORB [28] | 13.8 | 31.9 | 256-b | 45.6 |
| SuperPoint [32] | 45.0 | 67.4 | 256-f | 4.3 |
| XFeat [31] | 41.9 | 74.9 | 64-f | 22.5 |
| Ours (separate) | 42.1 | 75.2 | 64-f | 29.1 |
| Ours | 42.0 | 75.4 | 64-f | 35.8 |
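The feature table above reports Acc (5°) and Acc (10°) without defining them here. A common definition in relative-pose benchmarks, assumed for this sketch and not confirmed by the text, counts the percentage of image pairs whose pose error (the larger of the rotation error and the translation-direction error, both in degrees) falls below the threshold. The helper names are illustrative.

```python
import numpy as np


def rotation_error_deg(R_est, R_gt):
    """Geodesic angle between two rotation matrices, in degrees."""
    cos = (np.trace(R_est.T @ R_gt) - 1.0) / 2.0
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))


def translation_error_deg(t_est, t_gt):
    """Angle between translation directions; relative pose is known only up to scale."""
    t_est = np.asarray(t_est, dtype=np.float64)
    t_gt = np.asarray(t_gt, dtype=np.float64)
    cos = t_est @ t_gt / (np.linalg.norm(t_est) * np.linalg.norm(t_gt))
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))


def pose_accuracy(pair_errors_deg, threshold_deg):
    """Percentage of pairs whose pose error is strictly below the threshold."""
    errors = np.asarray(pair_errors_deg, dtype=np.float64)
    return 100.0 * float(np.mean(errors < threshold_deg))


# Per-pair error, under the assumed definition:
#   err = max(rotation_error_deg(R_est, R_gt), translation_error_deg(t_est, t_gt))
```

Some evaluation scripts additionally fold the translation error as min(e, 180° − e) to absorb the sign ambiguity of essential-matrix decomposition; this sketch does not.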

| Methods | Trajectory error (m) ↓ | Rotational drift (deg/100 m) ↓ | Translational drift (%) ↓ |
|---|---|---|---|
| ORB-SLAM3 [28] | 2.56 | 0.22 | 0.72 |
| Photo-SLAM [13] | 2.55 | 0.24 | 0.77 |
| DROID-Splat [14] | 10.53 | 1.66 | 2.32 |
| Ours | 2.37 | 0.21 | 0.69 |
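The odometry table reports an error in meters alongside KITTI-style drift rates. Assuming the meter column is an absolute trajectory error (ATE) RMSE computed after rigid alignment, a common convention that the text does not state, it can be computed as below. The alignment is the standard Kabsch/Umeyama solution without scale; monocular pipelines often also estimate a scale factor (Sim(3) alignment), which this sketch omits. The per-100 m drift metrics are not covered.

```python
import numpy as np


def align_rigid(est, gt):
    """Least-squares R, t minimizing sum ||R @ est_i + t - gt_i||^2.

    est, gt: (N, 3) arrays of time-associated positions (Kabsch/Umeyama, no scale).
    """
    mu_e, mu_g = est.mean(axis=0), gt.mean(axis=0)
    H = (est - mu_e).T @ (gt - mu_g)  # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    D = np.eye(3)
    D[2, 2] = np.sign(np.linalg.det(Vt.T @ U.T))  # guard against reflections
    R = Vt.T @ D @ U.T
    t = mu_g - R @ mu_e
    return R, t


def ate_rmse(est, gt, align=True):
    """Root-mean-square position error in meters, optionally after rigid alignment."""
    est = np.asarray(est, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    if align:
        R, t = align_rigid(est, gt)
        est = est @ R.T + t
    return float(np.sqrt(np.mean(np.sum((est - gt) ** 2, axis=1))))
```

Aligning first removes the arbitrary choice of world frame, so the score reflects trajectory shape and drift rather than the initial pose.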

| Methods | KITTI 02 PSNR ↑ | KITTI 02 SSIM ↑ | KITTI 02 LPIPS ↓ | KITTI 06 PSNR ↑ | KITTI 06 SSIM ↑ | KITTI 06 LPIPS ↓ | vKITTI 02 PSNR ↑ | vKITTI 02 SSIM ↑ | vKITTI 02 LPIPS ↓ |
|---|---|---|---|---|---|---|---|---|---|
| Photo-SLAM [13] | 24.04 | 0.654 | 0.182 | 24.67 | 0.689 | 0.141 | 23.92 | 0.611 | 0.098 |
| HUGS [22] | 25.42 | 0.821 | 0.092 | 28.20 | 0.919 | 0.027 | 26.21 | 0.911 | 0.040 |
| Ours | 26.13 | 0.828 | 0.088 | 29.40 | 0.906 | 0.026 | 27.49 | 0.913 | 0.037 |
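The rendering tables score synthesized views with PSNR, SSIM, and LPIPS. PSNR is the simplest of the three, PSNR = 10 · log10(MAX² / MSE), where MAX is the peak pixel value and MSE is the mean squared error between rendered and reference images. A minimal NumPy sketch, assuming images stored as float arrays in [0, 1] (so MAX = 1 by default); SSIM needs windowed local statistics and LPIPS a pretrained network, so neither is reproduced here.

```python
import numpy as np


def psnr(rendered, reference, max_val=1.0):
    """Peak signal-to-noise ratio in dB between two images of the same shape."""
    rendered = np.asarray(rendered, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    mse = np.mean((rendered - reference) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))
```

For 8-bit images pass `max_val=255.0`; every tenfold reduction in MSE adds 10 dB.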

| Methods | PSNR ↑ | SSIM ↑ | LPIPS ↓ | Tracking FPS ↑ | Rendering FPS ↑ | Trajectory error (m) ↓ |
|---|---|---|---|---|---|---|
| ORB-SLAM3 [28] | - | - | - | 59.31 | - | 2.32 |
| Photo-SLAM [13] | 21.33 | 0.778 | 0.365 | 47.47 | 798.72 | 2.47 |
| HUGS [22] | 22.26 | 0.820 | 0.184 | - | 92.57 | - |
| Ours | 23.38 | 0.870 | 0.156 | 25.64 | 782.63 | 2.26 |

| Methods | Repeatability ↑ | Acc (10°) * ↑ | mIoU ↑ | FPS ↑ |
|---|---|---|---|---|
| Baseline-A | 71.4 | 76.1 | - | 37.5 |
| Baseline-A1 | 45.0 | 55.7 | - | 41.3 |
| Baseline-B | - | - | 74.5 | 88.3 |
| Naive Fusion | 70.9 | 75.6 | 74.3 | 29.1 |
| Proposed Full Model | 71.5 | 75.3 | 74.2 | 35.8 |
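The ablation table reports keypoint repeatability. A common definition (used, for example, in the SuperPoint evaluation on HPatches) warps keypoints between two views with the ground-truth homography, keeps only those visible in both images, and counts a keypoint as repeated if a detection in the other image lies within ε pixels; repeatability is the repeated count divided by the number of kept keypoints. Whether the authors follow exactly this protocol is an assumption, as is ε = 3 px; the function names are illustrative.

```python
import numpy as np


def warp_points(pts, H):
    """Apply a 3x3 homography to (N, 2) points given as (x, y)."""
    pts_h = np.hstack([pts, np.ones((len(pts), 1))])
    warped = pts_h @ H.T
    return warped[:, :2] / warped[:, 2:3]


def _inside(pts, shape):
    h, w = shape
    return (pts[:, 0] >= 0) & (pts[:, 0] < w) & (pts[:, 1] >= 0) & (pts[:, 1] < h)


def repeatability(kpts_a, kpts_b, H_ab, shape_a, shape_b, eps=3.0):
    """Fraction of keypoints (from both images) re-detected within eps pixels."""
    kpts_a = np.asarray(kpts_a, dtype=np.float64)
    kpts_b = np.asarray(kpts_b, dtype=np.float64)
    # Keep only keypoints that fall inside the other image (shared view).
    a_in_b = warp_points(kpts_a, H_ab)
    a_warped = a_in_b[_inside(a_in_b, shape_b)]
    b_in_a = warp_points(kpts_b, np.linalg.inv(H_ab))
    b_pts = kpts_b[_inside(b_in_a, shape_a)]
    if len(a_warped) == 0 or len(b_pts) == 0:
        return 0.0
    # Pairwise distances in image B's frame.
    d = np.linalg.norm(a_warped[:, None, :] - b_pts[None, :, :], axis=-1)
    count_a = np.sum(d.min(axis=1) <= eps)
    count_b = np.sum(d.min(axis=0) <= eps)
    return float(count_a + count_b) / (len(a_warped) + len(b_pts))
```

Multiplying the result by 100 gives a percentage comparable to the values in the table.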

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Deng, Zhongliang; Wang, Runmin. SGF-SLAM: Semantic Gaussian Filtering SLAM for Urban Road Environments. Sensors 2025, 25(12), 3602. https://doi.org/10.3390/s25123602