Abstract

Scientific machine learning (SciML) offers an emerging alternative to traditional modeling approaches for wave propagation. Traditional physics-based models rely on computationally demanding numerical techniques, whereas SciML extends artificial neural network-based wave models with the capability of learning wave physics. In contrast to the physics-intensive methods reviewed earlier, particularly physics-informed neural networks (PINNs), this study presents the data-driven frameworks of physics-guided neural networks (PgNNs) and neural operators (NOs). Unlike PINNs and PgNNs, which focus on specific PDEs with their respective boundary conditions, NOs solve a family of PDEs and have the potential to handle different boundary conditions easily. Hence, NOs provide a more generalized SciML approach. NOs extend neural networks to map between functions rather than vectors, enhancing their applicability. This review highlights the potential of NOs in wave propagation modeling, aiming to advance wave-based structural health monitoring (SHM). Through comparative analysis of existing NO algorithms applied across different engineering fields, this study demonstrates how NOs improve generalization, accelerate inference, and enhance scalability for practical wave modeling scenarios. Lastly, this article identifies current limitations and suggests promising directions for future research on NO-based methods within computational wave mechanics.

1. Introduction

Monitoring structural performance for damage and durability is instrumental in different engineering disciplines, including aerospace [1,2], civil [3], mechanical [4], and naval [5,6,7] structures. Structural health monitoring (SHM) now provides automated, real-time insights, moving beyond the limitations of traditional non-destructive testing and evaluation (NDT&E) [8,9]. An SHM system consists of an in-service data collection setup and signal analysis capabilities. The core concept is to collect structural responses with distributed sensors, followed by analyzing them to extract damage-sensitive features and predict the health status using a physics-based or data-driven model [10,11]. Over the past decades, a broad range of SHM techniques have been introduced for practical applications [12]. Among them, guided wave-based SHM techniques are widely adopted in the community [13,14,15].

Guided waves are specific types of elastic waves in ultrasonic and acoustic frequencies that propagate in solid plates or layers, governed by the structural form or geometric boundary of the medium [16,17]. Therefore, the propagation characteristics depend on the density and elastic properties of the medium. Two key features make guided waves highly effective for damage detection: short wavelengths at high frequencies and low attenuation over distance. Due to this nature, they are highly sensitive to small defects and efficient at covering large structural areas [12]. While guided waves operate over larger structural scales, surface acoustic waves (SAWs) are suited for surface-sensitive or micro-scale SHM applications. Beyond their diverse applications in biosensing [18], microfluidics [19], and MEMS [20], SAWs are extensively used for non-destructive material characterization [21,22]. Their ability to detect minute surface disturbances makes them well-suited for localized damage monitoring [20]. Thus, it is evident that the success of building robust and reliable SHM critically depends on the study of elastic and acoustic wave analysis. Given this necessity, efficient numerical tools are indispensable to facilitate the study of wave analysis.

To date, many numerical methods are available for wave analysis. Among them, finite element (FE)-based methods are the most common [23,24]. Others include the spectral element method (SEM) [25,26,27], finite difference method (FDM) [28], boundary element method (BEM) [29], mass spring lattice model (MSLM) [30], finite strip method (FSM) [31,32], peri-elastodynamic [33], cellular automata [34,35], and the elastodynamic finite integration technique (EFIT) [36]. These techniques are well established and have served as standard practice for decades. However, they raise some key concerns. First, they are computationally very demanding due to their mesh-based nature, so solving higher-dimensional problems becomes challenging. This issue is referred to as the curse of dimensionality (CoD) [37]. Second, if the grid size is not sufficiently small relative to the wavelength, discretization errors arise, noticeably compromising the resolution [38]. Third, the Gibbs phenomenon is a well-known numerical artifact characterized by spurious oscillations near non-smooth or discontinuous regions. It is common in most computational methods due to their reliance on polynomials, piecewise polynomials, and other basis functions [39,40,41]. Despite their individual advantages and disadvantages, all of these methods share the common challenge of high computational cost [42].
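The link between grid size, wavelength, and dimensionality can be made concrete with a short calculation. The sketch below estimates the node count of a uniform grid as the number of spatial dimensions grows. The rule of ten nodes per wavelength, the function name `grid_nodes`, and the domain size, wave speed, and frequency are illustrative assumptions, not values taken from the cited studies.

```python
import math

def grid_nodes(domain_size_m, wave_speed_m_s, frequency_hz, dims,
               nodes_per_wavelength=10):
    """Estimate the node count of a uniform grid that resolves one wavelength
    with `nodes_per_wavelength` nodes (an assumed rule of thumb)."""
    wavelength = wave_speed_m_s / frequency_hz
    spacing = wavelength / nodes_per_wavelength
    nodes_per_axis = math.ceil(domain_size_m / spacing) + 1
    return nodes_per_axis ** dims

# Hypothetical case: 0.5 m domain, 3000 m/s wave speed, 200 kHz excitation.
for dims in (1, 2, 3):
    print(dims, grid_nodes(0.5, 3000.0, 200e3, dims))
```

The node count grows as a power of the number of dimensions, which is the scaling behind the computational burden described above.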

In an effort to lessen the computational expense, researchers have proposed many semi-analytical methods. The distributed point source method (DPSM) is one of the most used techniques. It uses displacement and stress Green’s functions in its meshless semi-analytical problem formulation. This method is comparatively more accurate and faster than FEM, BEM, and SEM, especially in the frequency domain [37,43]. However, the model matches the required conditions only at some specific points (apex), which makes the simulated wavefield somewhat weaker than expected [38]. The local interaction simulation approach (LISA) is another noteworthy time-domain semi-analytical method [39,40,41]. This technique is computationally heavier, as it requires additional local interaction of material points in the temporal and spatial domains, and parallel computing is needed to run it efficiently. Thus, the computational burden persists despite this progress, making the existing numerical and semi-analytical models impractical for real-world applications [42].

In recent years, there has been a notable shift toward leveraging machine learning (ML) techniques for wave propagation modeling [11,44]. ML methods have an exceptional ability to capture high-level features and the relation between multidimensional data and target variables [45]. Moreover, these methods offer an effective solution to the computational expense associated with existing physics-based models. However, this does not lessen the importance of physics-based models, as they play a key role by providing the ground truth to train the ML models. With the growing data from these physics models and the breakthroughs of ML, the term “scientific machine learning (SciML)” has come to the forefront in many areas. SciML especially refers to the inclusion of domain knowledge (physical principles, constraints, correlations in space and time, etc.) in the ML models through data or modification of the architecture [46,47,48,49,50]. The advantages of SciML include (1) a meshless solution technique, which avoids the mesh-induced CoD; (2) better performance in higher-dimensional spaces with advanced neural network (NN) architectures [51,52]; (3) gradient-based optimization instead of linear solvers [53,54]; (4) the nonlinear representation of NNs, with no reliance on linear piecewise polynomials; and (5) solutions for forward and inverse problems under the same optimization problem [55,56,57].

Owing to its proven benefits, the field is witnessing diversified research efforts. Thus, the current literature of SciML refers to different nomenclature such as “physics-guided”, “physics-enabled”, “physics-based”, “physics-informed”, “physics-constrained”, and “theory-guided”, to name a few. Faroughi et al. [58] classified the SciML models into four prime methods: physics-guided neural networks (PgNNs), physics-informed neural networks (PINNs), physics-encoded neural networks (PeNNs), and neural operators (NOs). Among these four models, PINNs and PeNNs are considered physics intensive. On the other hand, NOs and PgNN approaches are mostly data driven.

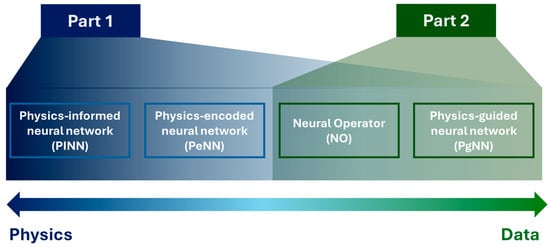

This article is a direct continuation of Ref. [59], which reviewed the progression of the four SciML approaches and their definitions in the context of wave propagation. The first part of Ref. [59] thoroughly discusses the underlying physics of acoustic, elastic, and guided waves, and its latter part concentrates only on the physics-intensive PINN model and its application in different engineering fields involving wave propagation. Figure 1 outlines the topics addressed in this two-part review. In this article, the authors focus on data-driven approaches, shown as Part 2 in Figure 1. By definition, the PgNN is the oldest data-driven method to learn patterns from wavefields with and without damage. According to the literature, the number of articles combining deep learning (DL) and SHM has increased every year to date [60]. In addition to guided wave signals, researchers in this field have used vibration signals, images, acoustic emission signals, etc., to train off-the-shelf statistical methods. The records show that, among different data types, vibration signals (displacement, acceleration, strain) are the most used for the PgNN approach. Among the different DL methods, the convolutional neural network (CNN) is the most widely adopted for damage-based feature extraction. A good number of review papers already cover PgNNs for guided wave-based SHM [61,62,63,64,65]. Thus, this article particularly emphasizes the newly emerging neural operators (NOs).

Figure 1.

Outline of the two-fold study for the application of physics-driven artificial intelligence tailored for wave propagation.

The structure of this article is as follows. Section 2 presents the underlying algorithms of neural operators (NOs) applied to wave propagation problems. Section 3 reviews the use of various NO frameworks in modeling acoustic, elastic, and guided wave phenomena. Section 4 concludes this paper by summarizing key findings, highlighting current challenges, and suggesting directions for future research. For the theoretical formulation of wave equations and related physics, readers are referred to Part 1 of this study [59].

2. Data-Intensive SciML Models: Architecture and Algorithms

SciML models are primarily employed for three core purposes: (i) solving PDEs (PDE solver), (ii) discovering governing equations from data (PDE discovery), and (iii) learning solution operators (operator learning). PDE solvers include methods such as PINNs [66], PeNNs [67,68,69], and PgNNs [70]. These models can be highly data-dependent or physics-dependent based on the objective and problem type (inverse or forward). These approaches are mostly useful for solving existing PDEs for a specific set of physical constraints and fixed parameters for parametric PDEs. However, the second category regarding PDE discovery works on the data to reveal the structure or coefficients of the PDE without any prior knowledge of the equation. Sparse identification of nonlinear dynamical systems (SINDy) by Brunton et al. [71] is an excellent example of this powerful tool.

In particular, this section focuses on the third category, operator learning. It is a purely data-driven approach to solving a family of PDEs, both parametric and nonparametric. To date, operator learning has primarily focused on forward problems, aiming to generalize the solution space [72]. Before different neural operator architectures are examined, Section 2.1 briefly describes operator learning in the context of wave equations. The neural operator architectures already used to simulate wave propagation are discussed afterward.

2.1. Concept of Operator Learning

A series of established studies [72,73,74] on universal approximation theorems demonstrates that sufficiently large shallow (two-layer) NNs can approximate any continuous function within a bounded domain. This theoretical guarantee makes NNs powerful approximators. Scholars have therefore extended this capability of NNs to approximate operators, that is, maps from one function space to another, unlike the usual vector-to-vector mapping. More specifically, operator learning is a data-driven framework designed to approximate nonlinear operators that map between infinite-dimensional Banach or Hilbert spaces of functions [75,76]. The origin of this concept can be traced back to early work in regression on function spaces, later formalized through rigorous approximation theory for neural operators [77]. According to a study by Kovachki et al. [78], neural operators are currently the only class of models proven to support both universal approximation and discretization invariance, unlike conventional deep learning models that rely on fixed-grid inputs and fail to generalize when those grids are changed.

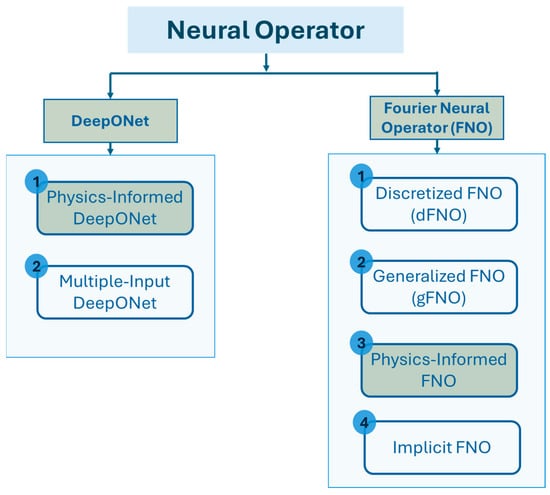

Based on this concept, until now, scholars in this field have proposed various neural operator architectures, namely deep operator network (DeepONet) [79], Fourier neural operator (FNO) [80], wavelet neural operator (WNO) [81], Laplace neural operator (LNO) [82], convolutional neural operator (CNO) [83], spectral neural operator (SNO) [84], and many more. To date, only DeepONet and FNO, along with some of their variants, have been applied to wave PDEs. Figure 2, based on Goswami et al. [85], illustrates some of the major DeepONet and FNO variants, highlighting those used in wave propagation studies. Figure 3 and Figure 4 summarize the key challenges addressed by each variant and outline the corresponding solution strategies within the two neural operator frameworks.

Figure 2.

Variants of DeepONet and FNO architectures [85]; models highlighted in green have been utilized for wave equation modeling.

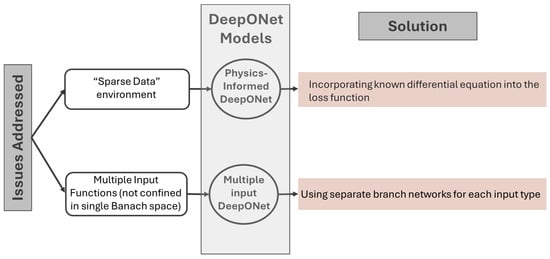

Figure 3.

Motivation and architectural adaptations in DeepONet.

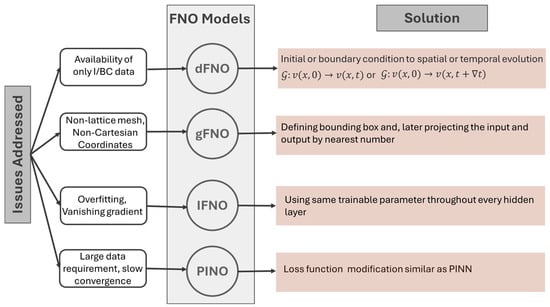

Figure 4.

Motivation and architectural adaptations in FNOs.

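Problem Formulation: To illustrate the concept of neural operators in the context of wave equations, this paper considers the homogeneous 3D wave equation expressed by Equation (1).

$$\frac{\partial^2 u}{\partial t^2} = c^2\left(\frac{\partial^2 u}{\partial x^2} + \frac{\partial^2 u}{\partial y^2} + \frac{\partial^2 u}{\partial z^2}\right) \tag{1}$$

Here, $u$ denotes the wave displacement field, $(x, y, z)$ the spatial coordinates, and $t$ the temporal variable. To keep the problem simple, homogeneous Dirichlet boundary conditions are considered. Considering space-dependent wave speed in the material, Equation (1) can be rewritten in the following form:

$$\frac{\partial^2 u(\mathbf{x}, t)}{\partial t^2} = c(\mathbf{x})^2\, \nabla^2 u(\mathbf{x}, t), \quad \mathbf{x} \in \Omega,\ t \in (0, T] \tag{2}$$

Here, $\mathbf{x} = (x, y, z)$ is used as a vector notation for the 3D space. The material system is modeled within the spatial domain $\Omega$, where the wave speed is defined by a bounded function $c(\mathbf{x})$ within $\Omega$. The initial condition of the system is presented by Equation (3), where $u_0$ denotes the initial displacement and $v_0$ denotes the initial velocity. The boundary condition for the system is presented by Equation (4). Here, the final time $T > 0$.

$$u(\mathbf{x}, 0) = u_0(\mathbf{x}), \quad \frac{\partial u}{\partial t}(\mathbf{x}, 0) = v_0(\mathbf{x}), \quad \mathbf{x} \in \Omega \tag{3}$$

$$u(\mathbf{x}, t) = 0, \quad \mathbf{x} \in \partial\Omega,\ t \in [0, T] \tag{4}$$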
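Because physics-based solvers supply the ground truth for operator learning, it may help to see how such training data could be produced. The following is a minimal sketch, assuming a 1D reduction of the problem above and a standard leapfrog (central-difference) scheme; the function name `solve_wave_1d` and all numerical values are hypothetical, and a production solver would differ.

```python
import numpy as np

def solve_wave_1d(c, u0, v0, dx, dt, n_steps):
    """Leapfrog solver for u_tt = c(x)^2 u_xx with u = 0 at both ends.

    Returns an array of shape (n_steps + 1, n_points) with u at each time level.
    """
    c = np.asarray(c, dtype=float)
    if np.max(c) * dt / dx > 1.0:
        raise ValueError("CFL condition violated: reduce dt or increase dx")
    r2 = (c * dt / dx) ** 2  # squared Courant number at every node

    def second_difference(u):
        d2 = np.zeros_like(u)
        d2[1:-1] = u[2:] - 2.0 * u[1:-1] + u[:-2]
        return d2

    u_prev = np.array(u0, dtype=float)
    u_prev[[0, -1]] = 0.0  # homogeneous Dirichlet boundary condition
    # First step from a Taylor expansion that uses the initial velocity v0.
    u_curr = (u_prev + dt * np.asarray(v0, dtype=float)
              + 0.5 * r2 * second_difference(u_prev))
    u_curr[[0, -1]] = 0.0
    history = [u_prev.copy(), u_curr.copy()]
    for _ in range(n_steps - 1):
        u_next = 2.0 * u_curr - u_prev + r2 * second_difference(u_curr)
        u_next[[0, -1]] = 0.0
        u_prev, u_curr = u_curr, u_next
        history.append(u_curr.copy())
    return np.stack(history)

# Hypothetical usage: unit wave speed, sinusoidal initial displacement, zero velocity.
x = np.linspace(0.0, 1.0, 101)
wavefield = solve_wave_1d(np.ones_like(x), np.sin(np.pi * x), np.zeros_like(x),
                          dx=x[1] - x[0], dt=0.005, n_steps=200)
```

Each call yields one input-output pair (initial conditions or wave speed profile in, wavefield out); repeating it over many inputs would give a training set for the operators discussed next.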

The primary goal here is not to find the solution of the PDE explicitly every time, but to learn the operator. In this context, frameworks such as DeepONet and FNOs aim to learn the operator $\mathcal{G}_\theta$, where $\mathcal{G}_\theta$ maps the input function $f$, which consists of initial conditions and the medium properties (for parametric PDEs) or only initial conditions (for nonparametric PDEs with fixed parameters), to predict the solution $u(\mathbf{x}, t)$. Here, $\theta$ refers to the trainable parameters, namely the weights $W$ and biases $b$. $\mathcal{G}$ can be expressed in the integral form as follows:

$$u(\mathbf{x}, t) = \mathcal{G}(f)(\mathbf{x}, t) = \int_0^{t}\!\!\int_\Omega G(\mathbf{x}, t; \mathbf{x}', t')\, f(\mathbf{x}', t')\, d\mathbf{x}'\, dt' \tag{5}$$

More generally,

$$\mathcal{G}_\theta(f)(\mathbf{x}) = \int_D \kappa_\theta(\mathbf{x}, \mathbf{y})\, f(\mathbf{y})\, d\mathbf{y} \tag{6}$$

Here, in Equation (5), $G$ is Green’s function for the system. It represents the fundamental solution describing the response at point $\mathbf{x}$ and time $t$ due to a unit impulse applied at location $\mathbf{x}'$ and time $t'$, subject to the same boundary and initial conditions as the original wave equation. In Equation (6), $\kappa_\theta$ is the generalized kernel function from the formulation perspective of a neural operator, which will be thoroughly discussed in later sections. In the context of wave propagation, parametric and nonparametric PDEs are discussed herein to make the following sections easier to follow. If the velocity profile $c(\mathbf{x})$ on the entire structure is fixed (i.e., nonparameterized), which could be inhomogeneous and anisotropic, then the operator that is intended to be learned is parameter-independent. However, if the intention is to find the mapping operator that can map any given velocity profile $c(\mathbf{x})$ to an output displacement wavefield $u(\mathbf{x}, t)$, then the operator is a parameter-dependent neural operator.

For wave propagation problems, it is necessary to find the entire displacement wavefield $u(\mathbf{x}, t)$ in a material or structure (i.e., at every spatial point $\mathbf{x} \in \Omega$) over a period $[0, T]$. Let us divide this time period into two segments, which we can call the train and predict segments, $[0, T_{\text{train}}]$ and $(T_{\text{train}}, T]$. To clarify further, for the parameter-independent neural operator $\mathcal{G}_\theta$, the profile $c(\mathbf{x})$ is not provided as input, as it is irrelevant for a fixed wave speed profile. Please note that a fixed wave speed profile does not mean a constant homogeneous speed over the entire space. Rather, the wave speed profile could be inhomogeneous and/or anisotropic, but the operator is learned for that fixed profile $c(\mathbf{x})$. Hence, to learn the parameter-independent operator $\mathcal{G}_\theta$, the input function $f$, as initial conditions, would consist of $\{u_0(\mathbf{x}), v_0(\mathbf{x})\}$, with prior knowledge of the profile $c(\mathbf{x})$. During the training stage, for a given $f$, the corresponding $u(\mathbf{x}, t)$ needs to be provided. After learning this nonparametric operator, $\mathcal{G}_\theta$ (for this fixed profile $c(\mathbf{x})$) would become an $f$-independent, or initial-condition-independent, operator. $\mathcal{G}_\theta$ would be ready to predict the wavefield $u(\mathbf{x}, t)$ with any other arbitrary initial conditions as input. In this case, the boundary conditions can also be fixed, irrespective of being Dirichlet, Neumann, or mixed boundary conditions.

Alternatively, if the problem is parameterized and the entire displacement wavefield $u(\mathbf{x}, t)$ in a material or structure (i.e., at every spatial point $\mathbf{x} \in \Omega$) over a period $[0, T]$ is sought for any arbitrary wave speed profile $c(\mathbf{x})$, then it is necessary to have several random wave speed profiles to train the parametric operator $\mathcal{G}_\theta$. In this setup, to learn the parametric neural operator $\mathcal{G}_\theta$, the input function $f$ would consist of $c(\mathbf{x})$ together with the initial conditions, and the output function would consist of $u(\mathbf{x}, t)$. Several such random input functions $f$ and their corresponding $u(\mathbf{x}, t)$ should be used for the training. After learning, for any given profile $c(\mathbf{x})$ (different from what was used in training), the parametric $\mathcal{G}_\theta$ would predict the full wavefield $u(\mathbf{x}, t)$.

DeepONet and FNOs are both applicable for parametric and nonparametric PDEs. However, their strength lies in mapping and learning the parametric PDEs. Generally speaking, any physical system governed by PDEs can be expressed as an integral operator mapping from one function to another function through a kernel function or a Green’s function.
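The statement that a PDE solution can be written as a kernel integral acting on an input function can be checked numerically on a small problem. The sketch below is an illustration only: it swaps the wave equation for the simpler static problem $-u'' = f$ on $[0, 1]$ with zero boundary values, whose Green's function is known in closed form, and discretizes the integral with the trapezoidal rule. The function names are hypothetical.

```python
import numpy as np

def apply_kernel_operator(kernel, f_values, grid):
    """Approximate (K f)(x_i) = integral of kernel(x_i, y) f(y) dy
    with the trapezoidal rule on a uniform grid."""
    weights = np.full(grid.shape, grid[1] - grid[0])
    weights[0] *= 0.5
    weights[-1] *= 0.5
    K = kernel(grid[:, None], grid[None, :])  # matrix of kernel(x_i, y_j)
    return K @ (weights * f_values)

def poisson_green(x, y):
    """Green's function of -u'' = f on [0, 1] with u(0) = u(1) = 0."""
    return np.where(x <= y, x * (1.0 - y), y * (1.0 - x))

grid = np.linspace(0.0, 1.0, 101)
u = apply_kernel_operator(poisson_green, np.ones_like(grid), grid)
# For f = 1 the exact solution is u(x) = x (1 - x) / 2.
max_error = np.max(np.abs(u - grid * (1.0 - grid) / 2.0))
```

A neural operator replaces the known kernel matrix `K` with a learned parameterization, which is what makes the approach usable when no closed-form Green's function exists.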

2.2. DeepONet

DeepONet, proposed by Lu et al. [79], is one of the most widely adopted neural operator frameworks. Following the problem statement in Section 2.1, DeepONet approximates the solution operator $\mathcal{G}_\theta: \mathcal{A} \to \mathcal{U}$, where $\mathcal{A}$ is the space of input functions such as initial displacement $u_0$ or initial velocity $v_0$, and $\mathcal{U}$ is the solution space containing the corresponding wavefield $u(\mathbf{x}, t)$.

To learn the mapping, DeepONet uses a two-network architecture. The branch network takes as input a discretized form of the function (e.g., the set of initial velocity or initial displacement values at sensor points), while the trunk network takes spatiotemporal coordinates $(\mathbf{x}, t)$ as input. Outputs from the two networks are then combined by the inner product to obtain the final prediction. Equation (7) represents the final prediction.

$$\mathcal{G}_\theta(f)(\mathbf{x}, t) \approx \sum_{k=1}^{p} b_k(f)\, \tau_k(\mathbf{x}, t) \tag{7}$$

Here, $b_k$ and $\tau_k$ denote the outputs of the branch and trunk networks, respectively, and $p$ is the latent dimension. This architecture allows the network to learn a generalized operator that can predict solutions at arbitrary spatiotemporal coordinates for a wide range of initial and boundary conditions. The final prediction is compared to the actual wave displacement field to calculate the loss, which is minimized through traditional backpropagation methods [86] to optimize the weights and biases.
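The branch-trunk combination described above can be sketched in a few lines. This is a minimal, untrained forward pass in NumPy under stated assumptions: the layer widths, the tanh activation, the random initialization, and the names `init_mlp`, `mlp`, and `deeponet_forward` are all illustrative choices, and the target wavefield is a placeholder rather than simulated data.

```python
import numpy as np

def init_mlp(layer_sizes, rng):
    """Random weights and zero biases for a fully connected network."""
    return [(rng.normal(0.0, np.sqrt(1.0 / m), size=(m, n)), np.zeros(n))
            for m, n in zip(layer_sizes[:-1], layer_sizes[1:])]

def mlp(params, x):
    """Apply tanh hidden layers followed by a linear output layer."""
    for W, b in params[:-1]:
        x = np.tanh(x @ W + b)
    W, b = params[-1]
    return x @ W + b

def deeponet_forward(branch_params, trunk_params, f_sensors, coords):
    """f_sensors: (batch, m) input-function samples at m sensor points.
    coords: (n_query, d) query coordinates, e.g. (x, t) pairs."""
    b = mlp(branch_params, f_sensors)  # (batch, p) branch outputs
    tau = mlp(trunk_params, coords)    # (n_query, p) trunk outputs
    return b @ tau.T                   # inner product over the latent dimension p

rng = np.random.default_rng(0)
m, p = 64, 16                          # sensor count and latent dimension (assumed)
branch = init_mlp([m, 32, p], rng)
trunk = init_mlp([2, 32, p], rng)
f_sensors = rng.normal(size=(4, m))    # four hypothetical input functions
coords = rng.uniform(size=(50, 2))     # fifty hypothetical (x, t) query points
u_pred = deeponet_forward(branch, trunk, f_sensors, coords)
u_true = np.zeros_like(u_pred)         # placeholder for simulated wavefields
loss = np.mean((u_pred - u_true) ** 2) # mean squared error to be minimized
```

Because the trunk network accepts arbitrary coordinates, the same trained weights can be queried at points that were never part of the training grid.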

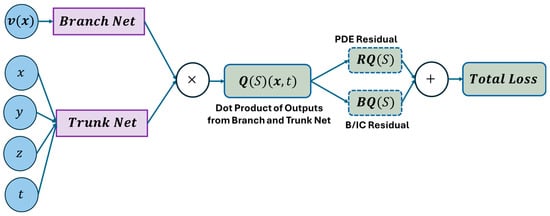

There are a few variants of DeepONet, including physics-informed DeepONet [85,87,88] and multiple-input DeepONet [89,90]. Among these variants, only physics-informed DeepONet has been used to simulate wave propagation. Figure 5 shows the architecture of a physics-informed DeepONet for modeling wave propagation. The architecture follows the method explained in this section; the only difference is the weak formulation of the loss function, which incorporates the physics in the model. The detailed loss formulation can be found in [91]. The pseudocode presented in Algorithm 1 outlines how DeepONet is used to simulate wave propagation.

| Algorithm 1 DeepONet |
| 1 to 10: [step contents not recoverable] |
| 11: else |
| 12: [step content not recoverable] |
| 13: end if |
| 14: [step content not recoverable] |
| 15: end for |
| 16: end for |

Figure 5.

A schematic architecture of DeepONet (physics-informed variant). Reproduced from [91].

2.3. Physical Understanding of DeepONet

Sometimes, it is confusing and challenging to recognize why and how DeepONet could have the potential to understand the physics of a system. The concept of DeepONet is explained herein in relation to elastic and acoustic wave propagation. To do so, it is necessary to revisit the eigenfunction expansion method for solving a partial differential equation (PDE) from the fundamentals. It is known that any solution to the governing PDE is the superposition of dominant eigenfunctions multiplied by their respective contribution coefficients. The solution of the wave PDE in Equation (1) could be written as

$$u(\mathbf{x}, t) = \sum_{n=1}^{N} a_n\, \phi_n(\mathbf{x})\, q_n(t) \tag{8}$$

where $\phi_n$ is the $n$-th basis or space-dependent eigenfunction and $q_n$ is the $n$-th temporal eigenfunction associated with its respective participation coefficient $a_n$. $N$ is the number of modes, bases, or eigenfunctions considered in the superposition. Separating the participation coefficients and combining the temporal and spatial functions into one function $\Psi_n(\mathbf{x}, t) = \phi_n(\mathbf{x})\, q_n(t)$, the displacement wavefield could be written as

$$u(\mathbf{x}, t) = \sum_{n=1}^{N} a_n\, \Psi_n(\mathbf{x}, t) \tag{9}$$

Specific to the problem presented in Equation (1), if the initial conditions $u_0$ and $v_0$ are known and a wavefield $u(\mathbf{x}, t)$ is computationally or experimentally found at some spatiotemporal space or at some sensor locations, then these known datasets create an opportunity to find the internal mapping functions that consist of inherent eigenfunctions of the system. The objective of the problem is to solve the PDE and find the displacement wavefield $u(\mathbf{x}, t)$ at any point in space $\mathbf{x}$ and at any time $t$. Hence, according to Equation (9), if the participation coefficients $a_n$ and the eigenfunctions $\Psi_n$ are found, then the solution at any point in space and at any time could be explicitly obtained. With this fundamental background, DeepONet aims to find $a_n$ and $\Psi_n$ for $N$ modes. By now, it is clear that $p$ (latent dimension) in Equation (7) is equivalent to the parameter $N$, which indicates how many eigenfunctions are to be considered in the solution and is taken as an input from the user. The branch net takes the initial conditions and tries to find the coefficients $a_n$ for all modes. In other words, the branch net tries to determine the contribution of each mode or eigenfunction to the final solution based on the initial condition. The trunk net in Equation (7), in turn, tries to find the eigenfunction $\Psi_n$ for all modes, that is, to evaluate the response of each mode at each point in space $\mathbf{x}$ and time $t$.

Having these two answers, based on Equation (9), it follows that the outputs from the branch net and trunk net need to be multiplied to find the displacement wavefield $u(\mathbf{x}, t)$. To train the model, the total loss is minimized based on the initial condition and the user-provided wavefield as training data. Once the model is trained, it is expected to find the displacement wavefield at any query point $(\mathbf{x}, t)$, given any initial conditions $u_0$ and $v_0$. Please note that the initial conditions are not required to be the same as the initial conditions used for training. This philosophy is the heart of DeepONet, and it makes the approach much faster and more generalized than PINNs.
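The analogy between the branch and trunk networks and the modal expansion can be illustrated with a case where the eigenfunctions are known analytically. The sketch below assumes a 1D string on a grid starting at zero, fixed ends, constant wave speed, and zero initial velocity, so that the modes are sines in space and cosines in time. The names `modal_coefficients`, `modal_basis`, and `modal_wavefield` are hypothetical, and nothing here is learned; the two functions simply play the roles that the branch and trunk networks approximate.

```python
import numpy as np

def modal_coefficients(u0, x, n_modes):
    """Branch-like role: project the initial displacement onto sine modes.
    Assumes a uniform grid starting at 0, fixed ends, and zero initial velocity."""
    length = x[-1] - x[0]
    dx = x[1] - x[0]
    n = np.arange(1, n_modes + 1)[:, None]
    modes = np.sin(n * np.pi * x[None, :] / length)   # (n_modes, n_points)
    # The modes vanish at both ends, so a plain sum equals the trapezoidal rule.
    return (2.0 / length) * dx * (modes @ u0)

def modal_basis(xq, tq, n_modes, c, length):
    """Trunk-like role: evaluate sin(n pi x / L) cos(n pi c t / L) at query points."""
    n = np.arange(1, n_modes + 1)[None, :]
    return (np.sin(n * np.pi * xq[:, None] / length)
            * np.cos(n * np.pi * c * tq[:, None] / length))  # (n_query, n_modes)

def modal_wavefield(u0, x, xq, tq, n_modes=20, c=1.0):
    """Combine coefficients and basis values by an inner product over the modes."""
    a = modal_coefficients(u0, x, n_modes)
    psi = modal_basis(xq, tq, n_modes, c, x[-1] - x[0])
    return psi @ a

x = np.linspace(0.0, 1.0, 201)
u0 = np.sin(np.pi * x) + 0.5 * np.sin(3.0 * np.pi * x)
xq = np.array([0.25, 0.5, 0.8])   # hypothetical query locations
tq = np.array([0.1, 0.7, 1.3])    # hypothetical query times
u_query = modal_wavefield(u0, x, xq, tq)
```

Changing `u0` changes only the coefficients, while the basis evaluation stays the same, which mirrors why a trained DeepONet can accept new initial conditions without retraining.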

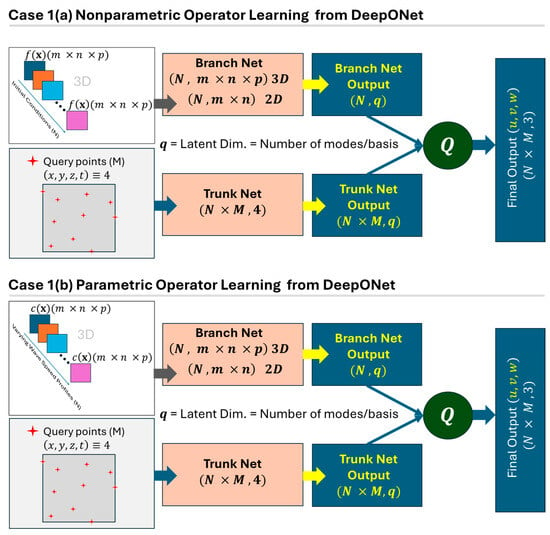

With the above concepts, generally speaking, DeepONet does not explicitly construct the kernel function $\kappa$ in Equation (6) but represents the operator via latent basis functions and coefficients. Comparing Equations (6) and (9), it can be said that DeepONet replaces the continuous effect of the kernel integral with a weighted combination of learned basis functions, where the weights depend on the input function, and the basis functions capture how each point in the domain responds. Figure 6 shows two different cases with parametric and nonparametric PDEs modeled using DeepONet for wave propagation.

Figure 6.

A schematic architecture of two cases for nonparametric and parametric PDE modeling using DeepONet (physics-informed variant).

2.4. Fourier Neural Operator (FNO)

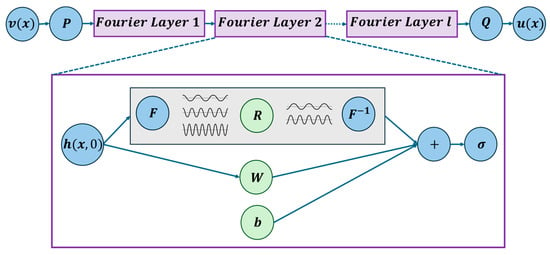

In 2020, Li et al. [80] introduced the FNO. In contrast to DeepONet, the FNO replaces the general kernel integral operator with a convolution operator defined in Fourier space. This modification allows the model to capture long-range dependencies more efficiently and to generalize across varying discretizations. Unlike DeepONet, the FNO avoids explicit basis construction and implicitly learns the operator kernel by manipulating Fourier modes.

In the context of the 3D wave propagation problem described in Section 2.1, the goal is to approximate the operator $\mathcal{G}_\theta$ that maps the input function $f$ to the wavefield $u(\mathbf{x}, t)$. For nonparametric PDEs, the input function can refer to the initial displacement or initial velocity at time zero, or to the displacement or velocity at any other time $t_i$, labeled $u(\mathbf{x}, t_i)$ and $v(\mathbf{x}, t_i)$, respectively, or to any combination of these functions (e.g., $\{u(\mathbf{x}, t_i), v(\mathbf{x}, t_i)\}$). It is important to note that, in many wave propagation problems, the initial conditions at $t = 0$ do not contain essential features, or sometimes the displacement and velocity values are zero. Thus, instead of training with the data at $t = 0$, it is essential to provide the data at $t = t_i$ to learn the physics. This is considered a forward nonparametric problem under FNO. Alternatively, a forward parametric problem could be better suited for FNO, where a spatially varying wave speed $c(\mathbf{x})$ is the input to an FNO model and the displacement or velocity wavefields are its output. The power of FNO comes from learning global, resolution-independent mappings between functions in Fourier space, whether those functions encode variable parameters (as in parametric PDEs) or time-evolving fields (as in nonparametric cases).

Irrespective of input types, the input is first lifted to a high-dimensional latent space using a lifting operator $P$, which can be a shallow NN or just a linear transformation, resulting in $h(\mathbf{x}, 0) = P(f)(\mathbf{x})$, where the second argument of the function signifies the $l$-th Fourier layer. This is passed through a sequence of Fourier layers. $h(\mathbf{x}, 0)$ is fed to the first Fourier layer, and the process is subsequently repeated. The operation at the $l$-th layer is given by

$$h(\mathbf{x}, l+1) = \sigma\left(W_l\, h(\mathbf{x}, l) + \mathcal{F}^{-1}\left(R_l \cdot \mathcal{F}\left(h(\cdot, l)\right)\right)(\mathbf{x}) + b_l\right) \tag{10}$$

where $\mathcal{F}$ and $\mathcal{F}^{-1}$ are the Fourier and inverse Fourier transforms, $R_l$ is a learnable parameter in Fourier space, $W_l$ is a pointwise linear operator in physical space, and $b_l$ is a bias term. The nonlinearity $\sigma$, typically a GELU [92] or ReLU [93] function or any other activation function, adds expressiveness to the model. After passing through all layers, the final representation is projected back to the output space by a decoder $Q$, yielding the predicted wavefield $\hat{u}(\mathbf{x}, t)$. The loss is then calculated in the traditional way or, depending on the problem, physics is included in the weak form. Figure 7 provides a schematic representation of the FNO model. Algorithm 2 presents the pseudocode for the FNO applied to solving the wave equation.
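The lifting, Fourier layer, and projection steps described above can be sketched for a 1D periodic grid as follows. This is a minimal, untrained illustration in NumPy: the channel width, the number of retained modes `k_max`, the tanh approximation of GELU, the purely linear lifting and projection, and the names `fourier_layer` and `fno_forward` are all assumptions made for the example, not the configuration of any cited model.

```python
import numpy as np

def gelu(x):
    """Tanh approximation of the GELU activation."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))

def fourier_layer(h, R, W, b, activation=gelu):
    """One Fourier layer on a 1D periodic grid.
    h: (n_points, width) real features; R: (k_max, width, width) complex weights;
    W: (width, width) pointwise weights; b: (width,) bias."""
    n_points, k_max = h.shape[0], R.shape[0]
    h_hat = np.fft.rfft(h, axis=0)                      # to Fourier space
    out_hat = np.zeros_like(h_hat)
    # Multiply the lowest k_max modes by learnable weights; drop the rest.
    out_hat[:k_max] = np.einsum("kio,ki->ko", R, h_hat[:k_max])
    spectral = np.fft.irfft(out_hat, n=n_points, axis=0)  # back to physical space
    return activation(h @ W + spectral + b)

def fno_forward(f, P, layers, Q):
    """Lift with P, apply the Fourier layers in sequence, project with Q."""
    h = f @ P
    for R, W, b in layers:
        h = fourier_layer(h, R, W, b)
    return h @ Q

rng = np.random.default_rng(0)
width, k_max = 4, 8
P = rng.normal(size=(2, width))
Q = rng.normal(size=(width, 1))
layer = (rng.normal(size=(k_max, width, width))
         + 1j * rng.normal(size=(k_max, width, width)),
         rng.normal(size=(width, width)), rng.normal(size=width))

def sample_input(n_points):
    """Two hypothetical input channels sampled on a periodic grid of n_points."""
    x = np.arange(n_points) / n_points
    return np.stack([np.sin(2 * np.pi * x), np.cos(4 * np.pi * x)], axis=1)

coarse = fno_forward(sample_input(64), P, [layer], Q)   # 64-point grid
fine = fno_forward(sample_input(128), P, [layer], Q)    # same weights, 128 points
```

The same weights are applied on both grids because they are attached to Fourier modes rather than grid nodes, which is the mechanism behind the resolution independence noted earlier.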

| Algorithm 2 FNO |
| 1 to 7: [step contents not recoverable] |
| 8: end for |
| 9 to 11: [step contents not recoverable] |
| 12: end for |
| 13: end for |

Figure 7.

A schematic architecture of FNO. Reproduced from [80].

2.5. Physical Understanding of FNOs

It is even more confusing and challenging than with DeepONet to recognize why and how FNOs can be faster and have broader potential for understanding the physics of a system. The concept of FNOs is explained herein in relation to elastic and acoustic wave propagation. Because FNOs are mesh independent, a model trained on a small set of low-resolution data can predict a solution at a finer resolution while the spatial dimension of the problem remains the same. FNOs also have the potential to solve inverse problems. As discussed for the general neural operator, an operator maps one function to another function. Hence, multiple such operators can be placed in a sequence, one after another (these are the layers in FNOs). Every operator performs the same action as presented in Equation (6) and needs its own kernel function for its input–output mapping. Given how DeepONet performs the mapping (Section 2.3), it can be said that the FNO performs the mapping differently. The FNO approximates the integral operator in Equation (6) with Fourier transforms, using the convolution theorem, as follows:

$$(\mathcal{K} f)(\mathbf{x}) = \int_D \kappa(\mathbf{x} - \mathbf{y})\, f(\mathbf{y})\, d\mathbf{y} = (\kappa * f)(\mathbf{x}) \tag{11}$$

The reasoning behind this form is that the influence of the input function on the output function is taken to depend only on the relative distance between $\mathbf{x}$ and $\mathbf{y}$, not on their absolute positions. As convolution in physical space is equivalent to elementwise multiplication in Fourier space, the Fourier transform of Equation (11) reads as follows:

$$\mathcal{F}(\mathcal{K} f) = \mathcal{F}(\kappa) \cdot \mathcal{F}(f) \tag{12}$$

As the kernel function is not known, its Fourier coefficients are also not known; thus, the Fourier coefficients of the kernel function become the learnable parameter, denoted by $R$ as depicted in Figure 7. After taking the inverse Fourier transform, the mapped function can be retrieved as follows:

$$(\mathcal{K} f)(\mathbf{x}) = \mathcal{F}^{-1}\left(R \cdot \mathcal{F}(f)\right)(\mathbf{x}) \tag{13}$$

Based on this explanation, it is easier to understand Equation (10), where multiple FNO layers are present, designated with index $l$. Every FNO layer has its own inputs and respective outputs in a sequence. The pointwise linear operator $W$, the bias $b$, and the activation function $\sigma$ in Equation (10) are self-explanatory from the fundamentals of neural networks.
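The convolution theorem that underlies this construction can be verified numerically. The sketch below compares a direct evaluation of the periodic convolution integral with the product of Fourier transforms on a uniform grid. The Gaussian-shaped kernel and the input signal are arbitrary illustrative choices, and the function names are hypothetical.

```python
import numpy as np

def circular_convolution_direct(kappa, f, dx):
    """Riemann-sum approximation of the periodic convolution integral:
    result[i] = dx * sum_j kappa[(i - j) mod n] * f[j]."""
    n = len(f)
    idx = (np.arange(n)[:, None] - np.arange(n)[None, :]) % n
    return dx * (kappa[idx] @ f)

def circular_convolution_fft(kappa, f, dx):
    """Same quantity through elementwise multiplication in Fourier space."""
    return dx * np.fft.ifft(np.fft.fft(kappa) * np.fft.fft(f)).real

n = 128
x = np.arange(n) / n
kappa = np.exp(-50.0 * np.minimum(x, 1.0 - x) ** 2)   # periodic, distance-based kernel
f = np.sin(2.0 * np.pi * x) + 0.3 * np.cos(6.0 * np.pi * x)
direct = circular_convolution_direct(kappa, f, 1.0 / n)
spectral = circular_convolution_fft(kappa, f, 1.0 / n)
max_difference = np.max(np.abs(direct - spectral))
```

The direct route costs on the order of n squared operations, whereas the FFT route costs on the order of n log n, which is one reason the Fourier-space formulation is attractive for large grids.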

Further, it is useful to visualize the difference between DeepONet and FNO. A comparative analysis is presented in Table 1.

Table 1.

Comparison of DeepONet and FNO.

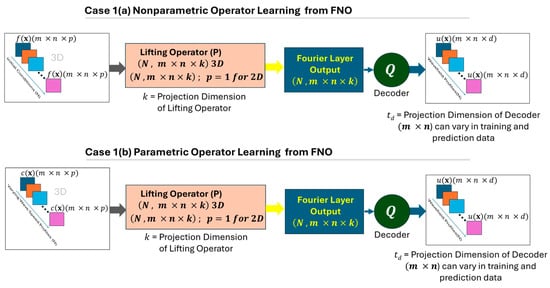

2.6. Application Cases of FNO

Here, two cases that occur widely in NDE/SHM and could benefit from FNO are explained from the fundamental physics and mathematical perspectives. These two cases are (a) Case 1: the forward problem and (b) Case 2: the inverse problem. At its heart, the FNO is a data-driven approximation of a nonlinear operator mapping one function space to another. In physical systems like wave propagation, the governing PDE (like the elastic wave equation) describes how a wavefield evolves over space and time based on material properties like wave speed.

Case 1(a): Predicting the displacement wavefield at a later time from a given displacement wavefield at an earlier time, with a known and fixed velocity profile (for nonparametric operators) in the material. Velocity and density could be homogeneous or nonhomogeneous, isotropic or anisotropic throughout the material. This case does not apply when one is interested in finding the wavefield for different random velocity or density profiles.

Case 1(b): Predicting the displacement wavefield for a given velocity or density profile (considered parameters in wave propagation). The training data will consist of velocity or density maps $c(\mathbf{x})$, which could be homogeneous or nonhomogeneous, isotropic or anisotropic throughout the material, and the model should be trained on several random realizations. This mapping process is mandatory when one is interested in finding the wavefield for different random velocity or density profiles. Figure 8 shows two different cases with parametric and nonparametric PDEs for modeling wave propagation with the FNO.

Figure 8.

A schematic architecture of two cases for nonparametric and parametric PDE modeling using FNO.
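One way to read the two cases in Figure 8 is in terms of what is placed in the input channels of the network. The following is a minimal sketch under stated assumptions, not the pipeline of any cited study: the function names, the 32 × 32 grid, the channel ordering, and the choice to append normalized coordinates are all hypothetical.

```python
import numpy as np

def grid_coords(nx, ny):
    """Normalized (x, y) coordinates in [0, 1], shape (nx, ny, 2)."""
    x = np.linspace(0.0, 1.0, nx)
    y = np.linspace(0.0, 1.0, ny)
    xx, yy = np.meshgrid(x, y, indexing="ij")
    return np.stack([xx, yy], axis=-1)

def build_nonparametric_input(u_prev):
    """Case 1(a): fixed velocity profile, so only the earlier wavefield
    (plus coordinates) is supplied. Output shape (nx, ny, 3)."""
    nx, ny = u_prev.shape
    return np.concatenate([u_prev[..., None], grid_coords(nx, ny)], axis=-1)

def build_parametric_input(u_prev, velocity):
    """Case 1(b): the velocity map is an extra input channel, so one network
    can be queried for different profiles. Output shape (nx, ny, 4)."""
    nx, ny = u_prev.shape
    return np.concatenate(
        [u_prev[..., None], velocity[..., None], grid_coords(nx, ny)], axis=-1
    )

# Hypothetical data: a random wavefield snapshot and a random velocity map (m/s).
rng = np.random.default_rng(0)
u0 = rng.standard_normal((32, 32))
c_map = 1500.0 + 100.0 * rng.standard_normal((32, 32))

a_input = build_nonparametric_input(u0)
b_input = build_parametric_input(u0, c_map)
```

In this layout the only difference between the two cases is the additional velocity channel; a density map could be appended in the same way.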

Case 2: Inferring the material velocity profile from observed wave propagation or from wavefield data recorded at specific sensor points. In this case, each pixel is allowed to have a different velocity, so the velocity profile is expressed as a function of pixel location.

The core idea is to approximate the forward or inverse operator not in the physical space but in the Fourier space, where global spatial dependencies (such as wave propagation, reflection, and dispersion) are naturally captured through Fourier modes. The Fourier transform decomposes a wavefield into a set of standing and traveling wave modes (sinusoids). The FNO learns how the amplitude and phase of each mode should evolve, depending on the underlying material properties and source characteristics. This is achieved by learning, during training, a complex multiplier (the weight tensor) for each mode. By transforming the learned updates back to physical space, the FNO then reconstructs the updated wavefield or predicts material properties.

In the forward problem (Case 1), the FNO predicts how a wavefield will propagate over time based on its current state, essentially learning a surrogate model for time-stepping.
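The Fourier-space update described above can be sketched in a few lines of NumPy. This is an illustrative 1D version under stated assumptions: the names `spectral_conv_1d` and `fourier_layer` are hypothetical, the weights are fixed or random rather than trained, and ReLU stands in for whatever activation a given study uses.

```python
import numpy as np

def spectral_conv_1d(v, weights):
    """Multiply the lowest Fourier modes of v by complex weights.

    v:       real array, shape (n_points, c_in)
    weights: complex array, shape (n_modes, c_in, c_out),
             with n_modes <= n_points // 2 + 1
    """
    n_points = v.shape[0]
    n_modes, _, c_out = weights.shape
    v_hat = np.fft.rfft(v, axis=0)                      # to Fourier space
    out_hat = np.zeros((v_hat.shape[0], c_out), dtype=complex)
    # Per-mode channel mixing; modes above n_modes are truncated (left at zero).
    out_hat[:n_modes] = np.einsum("ki,kio->ko", v_hat[:n_modes], weights)
    return np.fft.irfft(out_hat, n=n_points, axis=0)    # back to physical space

def fourier_layer(v, weights, w_pointwise, bias):
    """One layer: activation(spectral path + pointwise linear path + bias)."""
    z = spectral_conv_1d(v, weights) + v @ w_pointwise + bias
    return np.maximum(z, 0.0)  # ReLU used here as a stand-in activation

n = 64
x = np.arange(n) / n
signal = (np.sin(2 * np.pi * 2 * x) + np.sin(2 * np.pi * 10 * x))[:, None]

# Unit weights on the first 4 modes act as a low-pass filter:
# the mode-2 sinusoid survives, the mode-10 sinusoid is removed.
low_pass = np.ones((4, 1, 1), dtype=complex)
filtered = spectral_conv_1d(signal, low_pass)

# Random (untrained) weights lifting 1 channel to 8 channels.
rng = np.random.default_rng(0)
random_weights = rng.standard_normal((4, 1, 8)) + 1j * rng.standard_normal((4, 1, 8))
hidden = fourier_layer(signal, random_weights, rng.standard_normal((1, 8)), np.zeros(8))
```

The low-pass example shows why mode truncation matters: whatever lies above the retained modes can only be recovered through the pointwise linear path and the nonlinearity.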

In the inverse problem (Case 2), the FNO effectively learns to invert the wave propagation operator by associating observed wavefield patterns with their generating velocity profiles.
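For the forward problem, the time-stepping surrogate idea can be illustrated with a case where the per-mode multiplier is known in closed form. The sketch below is not a trained FNO: it assumes the one-way wave equation u_t + c u_x = 0 on a periodic 1D domain, for which each Fourier mode with wavenumber k is advanced by the phase factor exp(-i k c Δt). A trained FNO would have to learn multipliers of this kind from data. All names and parameter values are hypothetical.

```python
import numpy as np

def make_mode_propagator(n_points, length, speed, dt):
    """Return a one-step operator acting mode by mode in Fourier space."""
    # Angular wavenumbers of the real FFT on a periodic domain.
    k = 2.0 * np.pi * np.fft.rfftfreq(n_points, d=length / n_points)
    multiplier = np.exp(-1j * k * speed * dt)  # pure phase shift per mode

    def step(u):
        return np.fft.irfft(multiplier * np.fft.rfft(u), n=n_points)

    return step

def rollout(step, u0, n_steps):
    """Autoregressive prediction: feed each output back in as the next input."""
    states = [u0]
    for _ in range(n_steps):
        states.append(step(states[-1]))
    return np.stack(states)

n, length, speed = 128, 1.0, 1.0
dx = length / n
dt = 4 * dx / speed                      # the pulse moves 4 grid points per step
x = np.arange(n) * dx
u0 = np.exp(-((x - 0.3) / 0.05) ** 2)    # Gaussian pulse as the initial wavefield

states = rollout(make_mode_propagator(n, length, speed, dt), u0, n_steps=8)
```

After 8 steps the pulse has moved 32 grid points to the right without changing shape, which is the behavior a learned surrogate is expected to reproduce over long rollouts.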

3. NO Applications in Wave Propagation

3.1. Wave Propagation with DeepONet

DeepONet has been widely adopted in several research areas. However, few studies apply it to simulating wave propagation, and only a handful of works, published in 2024, have been found. Notably, most of these works extend the standard DeepONet architecture to improve performance in solving wave equations.

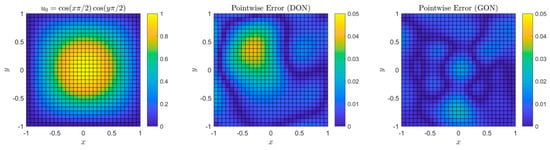

Aldirany et al. [91] first introduced GreenONets, a variant of DeepONet inspired by Green’s functions. The model’s performance was evaluated against the ground truth and the vanilla DeepONet by solving the linear wave equation in 1D and 2D homogeneous and heterogeneous domains. Although GreenONet demonstrated improved accuracy in these cases, the authors raised concerns about the generalizability of the model. A comparative analysis of the performance of DeepONet and GreenONet is presented in Figure 9.

Figure 9.

(Left) initial condition; (middle) pointwise error at t = 1.5 using DeepONets; (right) pointwise error at t = 1.5 using GreenONets, adapted from [91].

Later, Zhu et al. [94] proposed another variation of the DeepONet, named Fourier DeepONet. The authors combined the concepts of FNOs and DeepONet to perform full waveform inversion (FWI). While the original DeepONet architecture was largely retained, the final dot product of the branch and trunk networks was passed through one Fourier layer followed by three U-Fourier layers to enhance the model’s ability to capture high-frequency components. The inclusion of U-Fourier layers resulted in improved predictive accuracy but also introduced a higher computational cost, as U-Fourier layers [95] are more expensive to train. The proposed model demonstrated robust performance across a range of datasets, including flat layers, curved layers, faulted media, and style-transferred geological configurations.
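The dot product of the branch and trunk networks mentioned above is the core of the vanilla DeepONet. The following is a minimal sketch with randomly initialized, untrained weights. The layer sizes, the number of sensors, and the helper names `init_mlp`, `mlp`, and `deeponet` are assumptions made for illustration, and the Fourier and U-Fourier layers of the Fourier DeepONet are not included.

```python
import numpy as np

def init_mlp(sizes, rng):
    """Random weights for a fully connected network with the given layer sizes."""
    return [
        (rng.standard_normal((m, n)) / np.sqrt(m), np.zeros(n))
        for m, n in zip(sizes[:-1], sizes[1:])
    ]

def mlp(x, params):
    """Forward pass: tanh on hidden layers, linear output layer."""
    for w, b in params[:-1]:
        x = np.tanh(x @ w + b)
    w, b = params[-1]
    return x @ w + b

def deeponet(u_sensors, y_query, branch, trunk, bias=0.0):
    """G(u)(y) ~ sum_k branch_k(u) * trunk_k(y) + bias.

    u_sensors: (batch, n_sensors) input functions sampled at fixed sensors
    y_query:   (n_query, dim)     coordinates where the output is evaluated
    returns:   (batch, n_query)
    """
    b = mlp(u_sensors, branch)   # (batch, p) coefficients from the input function
    t = mlp(y_query, trunk)      # (n_query, p) basis values at the query points
    return b @ t.T + bias

rng = np.random.default_rng(0)
n_sensors, p = 50, 16
branch = init_mlp([n_sensors, 32, p], rng)
trunk = init_mlp([2, 32, p], rng)          # query coordinates are (x, t) pairs

u = rng.standard_normal((3, n_sensors))    # 3 hypothetical input functions
y = rng.uniform(size=(10, 2))              # 10 query points
out = deeponet(u, y, branch, trunk)
```

Because the trunk network takes coordinates as input, the output can be evaluated at any query point, independent of the sensor layout used by the branch network.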

In another study, Guo et al. [96] introduced Inversion DeepONet, a DeepONet-based architecture incorporating an encoder–decoder framework. This approach effectively reduced the dependence on large training datasets by using only source parameters, such as frequency and location, as input. It also addressed the limitations associated with the standard dot product operation between the trunk and branch networks, which often leads to suboptimal performance. The literature suggests that a substantial portion of DeepONet-related work is concentrated in geophysical applications. While most studies propose architectural variations of DeepONet, Li et al. [97] focused on developing more generalized datasets to overcome the limitations of the existing OpenFWI data. As a result, they introduced GlobalTomo, a comprehensive dataset encompassing 3D acoustic and elastic wave propagation for full waveform inversion and seismic wavefield modeling.

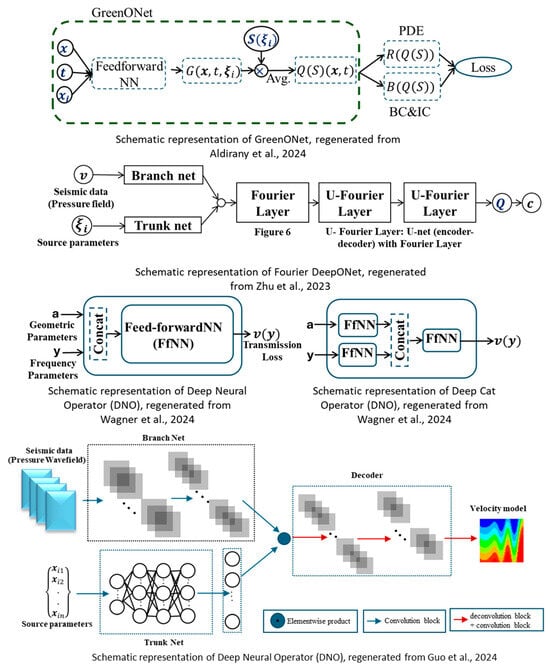

To date, only a single research article has been found that uses DeepONet for material property characterization. Wagner et al. [98] explored four operator learning approaches to investigate the material properties of sonic crystals by solving the acoustic wave equation. Two of these are DeepONet and FNO, whereas the deep neural operator (DNO) and deep cat operator (DCO) are two new ones inspired by the vanilla DeepONet. The two new approaches outperformed DeepONet and FNO. However, the study did not compare the results with simulations from traditional models, which leaves the accuracy of the four models unverified. Figure 10 depicts the architectures of the different DeepONet modifications, giving a clear idea of each model’s algorithm. Table 2 presents a summary of the research works discussed in this section.

Figure 10.

Different variants of DeepONet architectures to model wave propagation [91,94,96,98].

Table 2.

A selective list of studies leveraging DeepONet for modeling wave propagation for addressing different engineering problems.

3.2. Wave Propagation with FNOs

This section provides a comprehensive review of recent studies employing FNOs for solving wave equations in applied domains. The integration of physics-based wave modeling with data-driven approaches has gained momentum across diverse disciplines, including structural health monitoring [99,100], material design [101,102], and medical imaging [103]. Notably, geophysics stands out for adopting NOs. Scholars in this field are actively harnessing this new approach to reconstruct higher-resolution earth models.

Yang et al. [104] were the first to use an NO to simulate the seismic wavefield, in 2021. Training data for the FNO were generated with the spectral element method (SEM) for 5000 random velocity models. To create a varied range of velocity models, the authors used the von Karman covariance function and different source–receiver combinations and locations. The trained model successfully reconstructed smooth heterogeneous surfaces and surfaces with sharp discontinuities and shorter wavelengths. This approach bypasses the need for adjoint wavefields, which are typically required in numerical methods for full waveform inversion (FWI). The study concludes that a larger training dataset improves the model’s ability to generalize and enables more accurate capture of complex wave phenomena such as reflections, which are challenging for FNOs trained on limited data.
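Random velocity models with von Karman statistics are commonly drawn by filtering white noise in the wavenumber domain. The sketch below assumes the standard 2D von Karman power spectrum, proportional to (1 + k²a²)^-(H+1), with correlation length a and Hurst exponent H. The grid size, background velocity, and all parameter values are hypothetical and are not the settings used by Yang et al. [104].

```python
import numpy as np

def von_karman_field(nx, nz, dx, corr_len, hurst, std, rng):
    """Zero-mean 2D random field with a von Karman-type power spectrum."""
    kx = 2.0 * np.pi * np.fft.fftfreq(nx, d=dx)
    kz = 2.0 * np.pi * np.fft.fftfreq(nz, d=dx)
    k2 = kx[:, None] ** 2 + kz[None, :] ** 2
    # 2D von Karman spectrum up to a constant: (1 + k^2 a^2)^-(H + d/2), d = 2.
    psd = (1.0 + k2 * corr_len**2) ** (-(hurst + 1.0))
    noise = rng.standard_normal((nx, nz))
    # Shape the white-noise spectrum with the square root of the target PSD.
    field = np.fft.ifft2(np.fft.fft2(noise) * np.sqrt(psd)).real
    field = field - field.mean()
    # Rescale empirically to the requested standard deviation.
    return field * (std / field.std())

rng = np.random.default_rng(0)
field = von_karman_field(nx=64, nz=64, dx=50.0, corr_len=500.0,
                         hurst=0.3, std=0.05, rng=rng)

# Hypothetical background velocity of 3000 m/s with 5% fluctuations.
velocity = 3000.0 * (1.0 + field)
```

Drawing many such fields with different seeds, correlation lengths, and Hurst exponents gives a family of velocity models of the kind needed to train a parametric operator.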

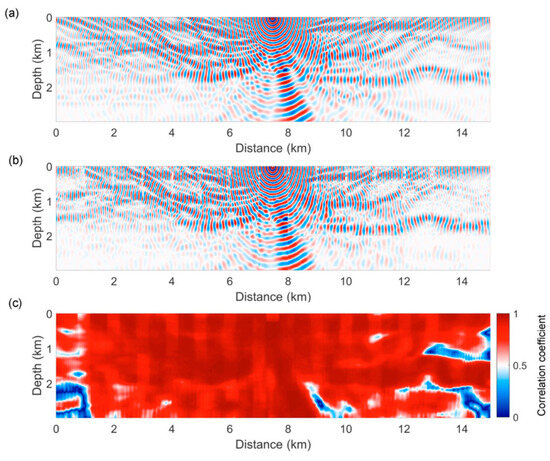

While the previous research group focused on time domain FWI, the following year Song and Yang [105] trained FNOs for frequency domain wavefield extrapolation. The group specifically focused on mapping the low-frequency wavefield (5–12 Hz) to a higher-frequency wavefield (13–30 Hz). Synthetic data were generated using the FDM based on the Marmousi model to train the FNO. The aim of the study was to obtain dispersion-free high-frequency wavefields for large-scale subsurface models at lower computational cost. The trained model was tested on three cases: a strongly smoothed model, a moderately smoothed model, and the original Marmousi model. For the first two cases, the results from the FDM and the FNO were in good agreement. However, for the original Marmousi model, the correlation coefficient was comparatively high, specifically in the shallow low-frequency region where FDM suffered from higher dispersion error. Figure 11 shows the performance comparison for the original Marmousi model. The FNO outperformed the FDM in both dispersion error and computational cost. According to this study, the trained FNO model was two orders of magnitude faster than the FDM.

Figure 11.

Wavefields at 13 Hz from (a) the finite-difference method, (b) from the FNO, and (c) the correlation coefficients between (a,b) corresponding to the original Marmousi model [105].
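Agreement between a reference wavefield and a predicted wavefield, as in comparisons of this kind, is often summarized with a correlation coefficient or a relative error. The sketch below shows two common global metrics on synthetic data. It is an assumption-laden illustration: the fields are made up, and the cited study may compute its correlation coefficients differently (for example, locally rather than over the whole field).

```python
import numpy as np

def correlation_coefficient(reference, prediction):
    """Pearson correlation between two wavefields of identical shape."""
    return np.corrcoef(reference.ravel(), prediction.ravel())[0, 1]

def relative_l2_error(reference, prediction):
    """Norm of the misfit divided by the norm of the reference."""
    return np.linalg.norm(prediction - reference) / np.linalg.norm(reference)

# Synthetic stand-ins: a smooth reference field and a noisy copy of it.
x = np.linspace(0.0, 1.0, 50)
xx, zz = np.meshgrid(x, x, indexing="ij")
reference = np.sin(2 * np.pi * xx) * np.cos(2 * np.pi * zz)

rng = np.random.default_rng(0)
prediction = reference + 0.01 * rng.standard_normal(reference.shape)

corr = correlation_coefficient(reference, prediction)
err = relative_l2_error(reference, prediction)
```

The correlation coefficient is insensitive to a uniform amplitude scaling of the prediction, whereas the relative L2 error is not, so reporting both gives a fuller picture.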

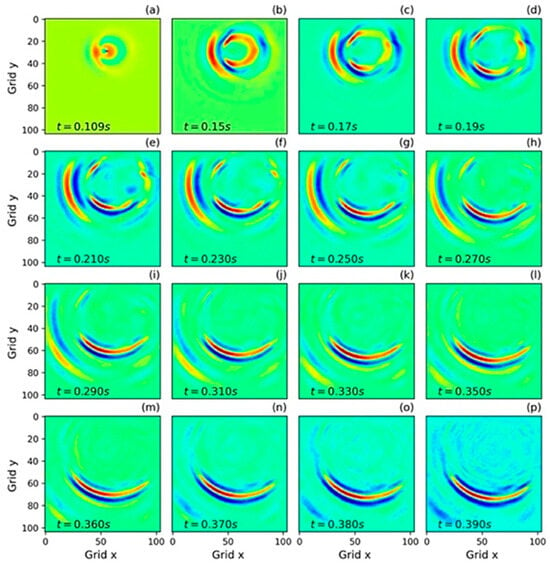

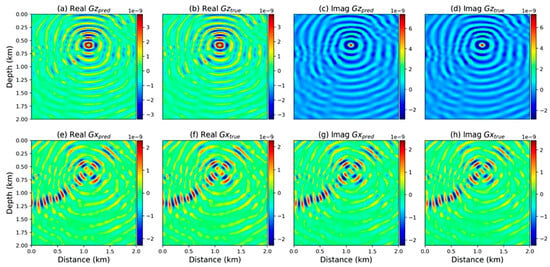

Zhang et al. [106] were the first to investigate NOs for 2D elastic wave propagation in both the time and frequency domains, in 2022. The time domain training dataset was built from 100 velocity models with varying shapes and source locations. For the frequency domain training dataset, 800 models were derived from a 3D overthrust model, with source locations and frequencies varying within a specific range. The trained FNO performed well in both cases, as shown in Figure 12 and Figure 13. However, in the time domain case, the performance degrades noticeably as time increases, showing amplitude mismatch and source distortion effects. In the frequency domain, the model faced challenges in simulating high-frequency components with intricate patterns.

Figure 12.

Nine snapshots of the x component of the wavefields generated with the FNO, with a dimension projection width of 60 and 33 Fourier modes, adapted from [106].

Figure 13.

The training results in the frequency domain. The main frequency is 31 Hz, and the source location is located at 0.25 km in depth and 1.37 km in distance from the model. (a,b) are the real parts of the wavefields in the z direction. (c,d) are the wavefields in the x direction. (e,f) are the real parts of the fields in the x direction. (g,h) are the imaginary parts of the fields in the x direction, adapted from [106].

From 2021 to 2022, a small number of research works incorporated NOs for simulating acoustic and elastic wave equations. A recurring trend in the literature is the use of traditional numerical methods to generate training datasets for specific model configurations. When training the FNO, the overarching structure of the model remains consistent, while key hyperparameters are tuned to the specific problem being addressed. In contrast, 2023 marked a significant diversification in model architectures and solution domains. There were also notable advancements in robust generalization techniques and a growing shift toward application-focused research beyond geophysics. Li et al. [107] first introduced a variation of the FNO model, the parallel FNO (PFNO), to solve the 2D acoustic wave equation with improved generalization capability. Instead of using one velocity model with variations, the proposed method trains on several velocity models using different FNOs in the same simulation setup. Although training the PFNO is computationally very demanding, its generalization capability exceeds that of PINNs and FNOs.

Lehmann et al. [108] first utilized NOs to simulate elastic wave propagation in 3D heterogeneous isotropic materials. The authors proposed another variation of the NO, named the U-shaped neural operator (UNO). In this architecture, the Fourier layers are arranged in an encoder–decoder structure with skip connections, which eases training and balances the capture of global and local features. The entire experiment was carried out with a layered model, with heterogeneities introduced in each layer using von Karman random fields. Kong et al. [109] also studied 3D elastic waves, focusing on modeling both P and S waves. The authors compared the predictive performance of the FNO and UNO for low-velocity zones and vertical slab structures. Although the UNO outperformed the FNO on small-scale simulations, it was slower by a factor of 4.

While most research articles report that the FNO struggles to capture the wavefield as time evolves, Middleton et al. [110] experimented with multiple input–output ratios in simulating the 2D linear acoustic wave equation in the free field domain to give an overall picture of the model’s capability. Rosofsky et al. [111] proposed the PINO, which combines the PINN and FNO by adding a physics-informed loss term to the FNO structure. The model was tested on the 1D wave equation, the 2D wave equation, and the 2D wave equation with nonconstant coefficients. The model faces challenges with higher-magnitude initial data (input value > 1); as a solution, the wavefield was normalized for training. PINO has been successfully applied to simulate the frequency domain acoustic wave equation in vertically transverse isotropic (VTI) media with high accuracy.
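The idea of adding a physics-informed term to a data-driven loss can be sketched for the 1D wave equation u_tt = c² u_xx. The example below is a generic illustration, not the loss used in the cited PINO studies: the residual is evaluated with central finite differences, the weighting between the two terms is a hypothetical parameter, and in practice `u_pred` would be the output of a neural operator rather than an analytic expression.

```python
import numpy as np

def wave_residual(u, dx, dt, c):
    """Interior residual u_tt - c^2 u_xx for u[t, x], by central differences."""
    center = u[1:-1, 1:-1]
    u_tt = (u[2:, 1:-1] - 2.0 * center + u[:-2, 1:-1]) / dt**2
    u_xx = (u[1:-1, 2:] - 2.0 * center + u[1:-1, :-2]) / dx**2
    return u_tt - c**2 * u_xx

def pino_style_loss(u_pred, u_data, dx, dt, c, physics_weight=1.0):
    """Data misfit plus weighted mean-squared PDE residual."""
    data_term = np.mean((u_pred - u_data) ** 2)
    physics_term = np.mean(wave_residual(u_pred, dx, dt, c) ** 2)
    return data_term + physics_weight * physics_term

c, dx, dt = 1.0, 0.01, 0.01
x = np.arange(101) * dx
t = np.arange(101) * dt

# A right-going wave that satisfies the wave equation with speed c.
u_exact = np.sin(2 * np.pi * (x[None, :] - c * t[:, None]))
# A wave travelling at half the speed, which violates the PDE for speed c.
u_wrong = np.sin(2 * np.pi * (x[None, :] - 0.5 * c * t[:, None]))

loss_exact = pino_style_loss(u_exact, u_exact, dx, dt, c)
loss_wrong = pino_style_loss(u_wrong, u_exact, dx, dt, c)
```

Here `loss_exact` is zero up to round-off, while `loss_wrong` is penalized by both the data term and the PDE residual, so the physics term can still guide training where labeled data are sparse or absent.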

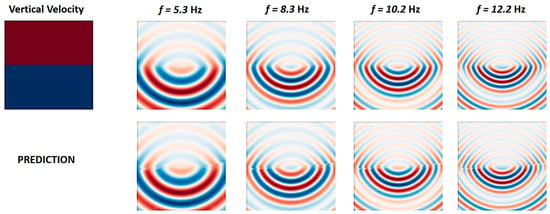

Konuk and Shragge [112] proposed a novel approach to train the PINO without any pre-simulated data. The group utilized the real and imaginary components of the background wavefield, which were analytically incorporated into the training process. This method allowed the model to accurately mimic wave propagation in anisotropic media. Figure 14 illustrates the predictive performance of their proposed approach.

Figure 14.

The real component of monochromatic scattered wavefields for the two-layer model obtained using a numerical solver (top row) and predicted by the proposed neural network (bottom row), adapted from [112].

Although NO-based wave propagation studies are currently confined mostly to geophysics, Guan et al. [113] were the first to solve a photoacoustic wave equation with FNOs for simulating photoacoustic tomography (PAT). To address this problem, the researchers modified the FNO architecture by incorporating a convolutional neural network (CNN). Instead of using only the Fourier layer as the linear operator, the authors combined the CNN and Fourier layers. The CNN captures local features of the spatial data (edges, textures, etc.), while the Fourier layer captures global features (resolution invariance, sharp transition, etc.). The outputs of the two linear operators are then added and passed to the activation function (GELU). The study experimented with two key hyperparameters: channels and frequency modes. The models were trained on the breast vascular simulation dataset. Their generalization ability was tested on other phantoms, such as breast tumor, Shepp–Logan, synthetic vascular, and Mason-M logo phantoms, where the models performed well in most cases. The authors suggested that tuning the channels and frequency modes could further improve accuracy and robustness. Table 3 summarizes the research works discussed in this section.
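The combination of a local convolution path and a global Fourier path, summed and passed through GELU, can be sketched as follows. This is a single-channel 1D simplification with hand-picked weights, intended only to show the data flow. The cited work operates on 2D images with trained multi-channel layers, and the function names, the periodic padding, and the tanh approximation of GELU are assumptions made here.

```python
import numpy as np

def gelu(x):
    """Tanh approximation of the GELU activation."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def local_branch(v, kernel):
    """Small-kernel convolution with periodic padding (kernel length odd, >= 3)."""
    pad = len(kernel) // 2
    padded = np.concatenate([v[-pad:], v, v[:pad]])
    return np.convolve(padded, kernel, mode="valid")

def global_branch(v, weights):
    """Fourier path: scale the lowest len(weights) modes, drop the rest."""
    v_hat = np.fft.rfft(v)
    out_hat = np.zeros_like(v_hat)
    out_hat[: len(weights)] = weights * v_hat[: len(weights)]
    return np.fft.irfft(out_hat, n=len(v))

def hybrid_layer(v, kernel, weights):
    """Sum of the local and global linear paths, followed by GELU."""
    return gelu(local_branch(v, kernel) + global_branch(v, weights))

rng = np.random.default_rng(0)
v = rng.standard_normal(32)

smoothing_kernel = np.array([0.25, 0.5, 0.25])   # hypothetical local filter
mode_weights = np.ones(4, dtype=complex)         # hypothetical spectral weights
out = hybrid_layer(v, smoothing_kernel, mode_weights)
```

Setting the spectral weights to zero reduces the layer to an ordinary convolution followed by GELU, which makes the separate contribution of the Fourier path easy to inspect.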

Table 3.

A selective list of studies leveraging FNOs for modeling wave propagation for addressing different engineering problems.

4. Conclusions

This article underscores the potential and applicability of NOs in wave propagation modeling. Unlike traditional solvers or other SciML methods, NOs directly learn the solution operator of a PDE, enabling rapid and accurate predictions over a distribution of input functions. This review primarily focuses on DeepONet and FNO, along with their different variants. These methods have demonstrated strong generalization and inference efficiency across diverse wave modeling tasks. According to the literature, seismic imaging is comparatively the most active field for successfully incorporating NOs in wave modeling.

The integration of NOs, including FNO, DeepONet, and GreenONet, holds transformative potential for the fields of non-destructive evaluation (NDE) and structural health monitoring (SHM). These frameworks offer a paradigm shift from conventional data-driven models to physics-informed, operator-learning architectures capable of directly learning mappings between infinite-dimensional function spaces. In the context of NDE/SHM, this enables real-time, scalable, and highly generalizable models for tasks such as wavefield reconstruction, damage localization, and forward/inverse problem-solving in complex structures.

Future research directions will likely focus on leveraging FNOs for the efficient modeling of wave propagation phenomena in heterogeneous and anisotropic materials, significantly accelerating simulations used in guided wave-based inspections. DeepONets and GreenONets, with their capacity to learn solution operators for partial differential equations (PDEs), are well-suited for developing surrogate models for inverse problems, such as inferring damage characteristics from sparse measurement data. Additionally, combining these neural operator frameworks with physics-informed loss functions and uncertainty quantification techniques could improve model interpretability and reliability, addressing key challenges in safety-critical SHM applications.

Moreover, these operator-learning models can support the development of digital twins for structural systems, providing near real-time predictive maintenance insights by rapidly simulating the structural response under varying operational and damage scenarios. The synergy between NO architectures and edge computing platforms may further enable onboard, in situ SHM for aerospace, civil infrastructure, and energy sector applications, making advanced NDE/SHM more accessible, adaptive, and autonomous.

Despite these positive outcomes, it is necessary to be aware that the robustness of these models comes at the cost of training on larger datasets. Their performance is often sensitive to architectural design, data distribution, and training stability. Future work should explore self-supervised or hybrid training strategies, improved operator expressiveness, and integration of uncertainty quantification to broaden their applicability.

Overall, NOs represent a paradigm shift in scientific computing for wave propagation. Their data efficiency and resolution invariance make them a compelling alternative to mesh-based methods. By bridging computational physics and deep learning, NOs are poised to play a central role in the next generation of simulation tools for wave-based diagnostics and design.

Author Contributions

Conceptualization, S.B.; methodology, N.M.; investigation, N.M.; resources, S.B.; data curation, N.M.; writing—original draft preparation, N.M.; writing—review and editing, S.B. and N.M.; visualization, N.M.; supervision, S.B.; project administration, S.B.; funding acquisition, S.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by the Office of Naval Research (ONR), grant number N00178-24-1-0007.

Acknowledgments

The authors acknowledge the support provided by the high-performance computing (HPC) facility at the University of South Carolina, Columbia, SC.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Pant, S.; Laliberte, J.; Martinez, M. Structural Health Monitoring (SHM) of composite aerospace structures using Lamb waves. In Proceedings of the Conference: ICCM19—The 19th International Conference on Composite Materials, Montréal, QC, Canada, 28 July–2 August 2013. [Google Scholar]

- Rocha, H.; Semprimoschnig, C.; Nunes, J.P. Sensors for process and structural health monitoring of aerospace composites: A review. Eng. Struct. 2021, 237, 112231. [Google Scholar] [CrossRef]

- Feng, D.; Feng, M.Q. Computer vision for SHM of civil infrastructure: From dynamic response measurement to damage detection—A review. Eng. Struct. 2018, 156, 105–117. [Google Scholar] [CrossRef]

- Barski, M.; Kędziora, P.; Muc, A.; Romanowicz, P. Structural health monitoring (SHM) methods in machine design and operation. Arch. Mech. Eng. 2014, 61, 653–677. [Google Scholar] [CrossRef]

- Mondoro, A.; Soliman, M.; Frangopol, D.M. Prediction of structural response of naval vessels based on available structural health monitoring data. Ocean Eng. 2016, 125, 295–307. [Google Scholar] [CrossRef]

- Sabra, K.G.; Huston, S. Passive structural health monitoring of a high-speed naval ship from ambient vibrations. J. Acoust. Soc. Am. 2011, 129, 2991–2999. [Google Scholar] [CrossRef]

- Sielski, R.A. Ship structural health monitoring research at the Office of Naval Research. JOM 2012, 64, 823–827. [Google Scholar] [CrossRef]

- Farrar, C.R.; Worden, K. Structural Health Monitoring: A Machine Learning Perspective; John Wiley & Sons: Hoboken, NJ, USA, 2012. [Google Scholar]

- Mitra, M.; Gopalakrishnan, S. Guided wave based structural health monitoring: A review. Smart Mater. Struct. 2016, 25, 053001. [Google Scholar] [CrossRef]

- Farrar, C.R.; Worden, K. An introduction to structural health monitoring. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2007, 365, 303–315. [Google Scholar] [CrossRef]

- Yang, Z.; Yang, H.; Tian, T.; Deng, D.; Hu, M.; Ma, J.; Gao, D.; Zhang, J.; Ma, S.; Yang, L. A review on guided-ultrasonic-wave-based structural health monitoring: From fundamental theory to machine learning techniques. Ultrasonics 2023, 133, 107014. [Google Scholar] [CrossRef]

- Willberg, C.; Duczek, S.; Vivar-Perez, J.M.; Ahmad, Z.A. Simulation methods for guided wave-based structural health monitoring: A review. Appl. Mech. Rev. 2015, 67, 010803. [Google Scholar] [CrossRef]

- Abbas, M.; Shafiee, M. Structural health monitoring (SHM) and determination of surface defects in large metallic structures using ultrasonic guided waves. Sensors 2018, 18, 3958. [Google Scholar] [CrossRef] [PubMed]

- Memmolo, V.; Monaco, E.; Boffa, N.; Maio, L.; Ricci, F. Guided wave propagation and scattering for structural health monitoring of stiffened composites. Compos. Struct. 2018, 184, 568–580. [Google Scholar] [CrossRef]

- Sun, Z.; Rocha, B.; Wu, K.-T.; Mrad, N. A methodological review of piezoelectric based acoustic wave generation and detection techniques for structural health monitoring. Int. J. Aerosp. Eng. 2013, 2013, 928627. [Google Scholar] [CrossRef]

- Light, G. Nondestructive evaluation technologies for monitoring corrosion. In Techniques for Corrosion Monitoring; Elsevier: Amsterdam, The Netherlands, 2021; pp. 285–304. [Google Scholar]

- Viktorov, I.A. Rayleigh Lamb Waves; Springer: Berlin/Heidelberg, Germany, 1967; p. 113. [Google Scholar]

- Länge, K.; Rapp, B.E.; Rapp, M. Surface acoustic wave biosensors: A review. Anal. Bioanal. Chem. 2008, 391, 1509–1519. [Google Scholar] [CrossRef]

- Ding, X.; Li, P.; Lin, S.-C.S.; Stratton, Z.S.; Nama, N.; Guo, F.; Slotcavage, D.; Mao, X.; Shi, J.; Costanzo, F. Surface acoustic wave microfluidics. Lab Chip 2013, 13, 3626–3649. [Google Scholar] [CrossRef]

- Mandal, D.; Banerjee, S. Surface acoustic wave (SAW) sensors: Physics, materials, and applications. Sensors 2022, 22, 820. [Google Scholar] [CrossRef]

- Frye, G.C.; Martin, S.J. Materials characterization using surface acoustic wave devices. Appl. Spectrosc. Rev. 1991, 26, 73–149. [Google Scholar] [CrossRef]

- Hess, P. Surface acoustic waves in materials science. Phys. Today 2002, 55, 42–47. [Google Scholar] [CrossRef]

- Ham, S.; Bathe, K.-J. A finite element method enriched for wave propagation problems. Comput. Struct. 2012, 94, 1–12. [Google Scholar] [CrossRef]

- Moser, F.; Jacobs, L.J.; Qu, J. Modeling elastic wave propagation in waveguides with the finite element method. Ndt E Int. 1999, 32, 225–234. [Google Scholar] [CrossRef]

- Ha, S.; Chang, F.-K. Optimizing a spectral element for modeling PZT-induced Lamb wave propagation in thin plates. Smart Mater. Struct. 2009, 19, 015015. [Google Scholar] [CrossRef]

- Ge, L.; Wang, X.; Wang, F. Accurate modeling of PZT-induced Lamb wave propagation in structures by using a novel spectral finite element method. Smart Mater. Struct. 2014, 23, 095018. [Google Scholar] [CrossRef]

- Zou, F.; Aliabadi, M. On modelling three-dimensional piezoelectric smart structures with boundary spectral element method. Smart Mater. Struct. 2017, 26, 055015. [Google Scholar] [CrossRef]

- Balasubramanyam, R.; Quinney, D.; Challis, R.; Todd, C. A finite-difference simulation of ultrasonic Lamb waves in metal sheets with experimental verification. J. Phys. D Appl. Phys. 1996, 29, 147. [Google Scholar] [CrossRef]

- Cho, Y.; Rose, J.L. A boundary element solution for a mode conversion study on the edge reflection of Lamb waves. J. Acoust. Soc. Am. 1996, 99, 2097–2109. [Google Scholar] [CrossRef]

- Yim, H.; Sohn, Y. Numerical simulation and visualization of elastic waves using mass-spring lattice model. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2000, 47, 549–558. [Google Scholar]

- Bergamini, A.; Biondini, F. Finite strip modeling for optimal design of prestressed folded plate structures. Eng. Struct. 2004, 26, 1043–1054. [Google Scholar] [CrossRef]

- Diehl, P.; Schweitzer, M.A. Simulation of wave propagation and impact damage in brittle materials using peridynamics. In Recent Trends in Computational Engineering-CE2014; Springer: Cham, Switzerland, 2015; pp. 251–265. [Google Scholar]

- Rahman, F.M.M.; Banerjee, S. Peri-elastodynamic: Peridynamic simulation method for guided waves in materials. Mech. Syst. Signal Process. 2024, 219, 111560. [Google Scholar] [CrossRef]

- Nishawala, V.V.; Ostoja-Starzewski, M.; Leamy, M.J.; Demmie, P.N. Simulation of elastic wave propagation using cellular automata and peridynamics, and comparison with experiments. Wave Motion 2016, 60, 73–83. [Google Scholar] [CrossRef]

- Kluska, P.; Staszewski, W.; Leamy, M.; Uhl, T. Cellular automata for Lamb wave propagation modelling in smart structures. Smart Mater. Struct. 2013, 22, 085022. [Google Scholar] [CrossRef]

- Leckey, C.A.; Rogge, M.D.; Miller, C.A.; Hinders, M.K. Multiple-mode Lamb wave scattering simulations using 3D elastodynamic finite integration technique. Ultrasonics 2012, 52, 193–207. [Google Scholar] [CrossRef] [PubMed]

- McEneaney, W.M. A curse-of-dimensionality-free numerical method for solution of certain HJB PDEs. SIAM J. Control Optim. 2007, 46, 1239–1276. [Google Scholar] [CrossRef]

- Connell, K.O.; Cashman, A. Development of a numerical wave tank with reduced discretization error. In Proceedings of the 2016 International Conference on Electrical, Electronics, and Optimization Techniques (ICEEOT), Chennai, India, 3–5 March 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 3008–3012. [Google Scholar]

- Biondini, G.; Trogdon, T. Gibbs phenomenon for dispersive PDEs. arXiv 2015, arXiv:1411.6142. [Google Scholar] [CrossRef]

- Bernardi, C.; Maday, Y. Spectral methods. In Handbook of Numerical Analysis; Elsevier: Amsterdam, The Netherlands, 1997. [Google Scholar]

- Shizgal, B.D.; Jung, J.-H. Towards the resolution of the Gibbs phenomena. J. Comput. Appl. Math. 2003, 161, 41–65. [Google Scholar] [CrossRef]

- Banerjee, S.; Leckey, C.A. Computational Nondestructive Evaluation Handbook: Ultrasound Modeling Techniques; CRC Press: Boca Raton, FL, USA, 2020. [Google Scholar]

- Rahani, E.K.; Kundu, T. Gaussian-DPSM (G-DPSM) and Element Source Method (ESM) modifications to DPSM for ultrasonic field modeling. Ultrasonics 2011, 51, 625–631. [Google Scholar] [CrossRef]

- Monaco, E.; Rautela, M.; Gopalakrishnan, S.; Ricci, F. Machine learning algorithms for delaminations detection on composites panels by wave propagation signals analysis: Review, experiences and results. Prog. Aerosp. Sci. 2024, 146, 100994. [Google Scholar] [CrossRef]

- Cantero-Chinchilla, S.; Wilcox, P.D.; Croxford, A.J. Deep learning in automated ultrasonic NDE–developments, axioms and opportunities. Ndt E Int. 2022, 131, 102703. [Google Scholar] [CrossRef]

- Carleo, G.; Cirac, I.; Cranmer, K.; Daudet, L.; Schuld, M.; Tishby, N.; Vogt-Maranto, L.; Zdeborová, L. Machine learning and the physical sciences. Rev. Mod. Phys. 2019, 91, 045002. [Google Scholar] [CrossRef]

- Cuomo, S.; Di Cola, V.S.; Giampaolo, F.; Rozza, G.; Raissi, M.; Piccialli, F. Scientific machine learning through physics–informed neural networks: Where we are and what’s next. J. Sci. Comput. 2022, 92, 88. [Google Scholar] [CrossRef]

- Hey, T.; Butler, K.; Jackson, S.; Thiyagalingam, J. Machine learning and big scientific data. Philos. Trans. R. Soc. A 2020, 378, 20190054. [Google Scholar] [CrossRef]

- Takamoto, M.; Praditia, T.; Leiteritz, R.; MacKinlay, D.; Alesiani, F.; Pflüger, D.; Niepert, M. Pdebench: An extensive benchmark for scientific machine learning. Adv. Neural Inf. Process. Syst. 2022, 35, 1596–1611. [Google Scholar]

- Thiyagalingam, J.; Shankar, M.; Fox, G.; Hey, T. Scientific machine learning benchmarks. Nat. Rev. Phys. 2022, 4, 413–420. [Google Scholar] [CrossRef]

- Li, Y.; Zhang, X.; Cheng, L.; Xie, M.; Cao, K. 3D wave simulation based on a deep learning model for spatiotemporal prediction. Ocean Eng. 2022, 263, 112420. [Google Scholar] [CrossRef]

- Moseley, B.; Markham, A.; Nissen-Meyer, T. Solving the wave equation with physics-informed deep learning. arXiv 2020, arXiv:2006.11894. [Google Scholar]

- Daoud, M.S.; Shehab, M.; Al-Mimi, H.M.; Abualigah, L.; Zitar, R.A.; Shambour, M.K.Y. Gradient-based optimizer (GBO): A review, theory, variants, and applications. Arch. Comput. Methods Eng. 2023, 30, 2431–2449. [Google Scholar] [CrossRef]

- Haji, S.H.; Abdulazeez, A.M. Comparison of optimization techniques based on gradient descent algorithm: A review. PalArch’s J. Archaeol. Egypt/Egyptol. 2021, 18, 2715–2743. [Google Scholar]

- Karimpouli, S.; Tahmasebi, P. Physics informed machine learning: Seismic wave equation. Geosci. Front. 2020, 11, 1993–2001. [Google Scholar] [CrossRef]

- Kim, Y.; Nakata, N. Geophysical inversion versus machine learning in inverse problems. Lead. Edge 2018, 37, 894–901. [Google Scholar] [CrossRef]

- Smaragdakis, C.; Taroudaki, V.; Taroudakis, M.I. Using machine learning techniques in inverse problems of acoustical oceanography. Stud. Appl. Math. 2024, 153, e12704. [Google Scholar] [CrossRef]

- Faroughi, S.A.; Pawar, N.M.; Fernandes, C.; Raissi, M.; Das, S.; Kalantari, N.K.; Kourosh Mahjour, S. Physics-guided, physics-informed, and physics-encoded neural networks and operators in scientific computing: Fluid and solid mechanics. J. Comput. Inf. Sci. Eng. 2024, 24, 040802. [Google Scholar] [CrossRef]

- Mehtaj, N.; Banerjee, S. Scientific Machine Learning for Guided Wave and Surface Acoustic Wave (SAW) Propagation: PgNN, PeNN, PINN, and Neural Operator. Sensors 2025, 25, 1401. [Google Scholar] [CrossRef] [PubMed]

- Jia, J.; Li, Y. Deep learning for structural health monitoring: Data, algorithms, applications, challenges, and trends. Sensors 2023, 23, 8824. [Google Scholar] [CrossRef] [PubMed]

- Capineri, L.; Bulletti, A. Ultrasonic guided-waves sensors and integrated structural health monitoring systems for impact detection and localization: A review. Sensors 2021, 21, 2929. [Google Scholar] [CrossRef]

- Eltouny, K.; Gomaa, M.; Liang, X. Unsupervised learning methods for data-driven vibration-based structural health monitoring: A review. Sensors 2023, 23, 3290. [Google Scholar] [CrossRef] [PubMed]

- Flah, M.; Nunez, I.; Ben Chaabene, W.; Nehdi, M.L. Machine learning algorithms in civil structural health monitoring: A systematic review. Arch. Comput. Methods Eng. 2021, 28, 2621–2643. [Google Scholar] [CrossRef]

- Gomez-Cabrera, A.; Escamilla-Ambrosio, P.J. Review of machine-learning techniques applied to structural health monitoring systems for building and bridge structures. Appl. Sci. 2022, 12, 10754. [Google Scholar] [CrossRef]

- Yuan, F.-G.; Zargar, S.A.; Chen, Q.; Wang, S. Machine learning for structural health monitoring: Challenges and opportunities. In Sensors and Smart Structures Technologies for Civil, Mechanical, and Aerospace Systems 2020; SPIE Digital Library: Bellingham, WA, USA, 2020; Volume 11379, p. 1137903. [Google Scholar]

- Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 2019, 378, 686–707. [Google Scholar] [CrossRef]

- Rao, C.; Ren, P.; Liu, Y.; Sun, H. Discovering nonlinear PDEs from scarce data with physics-encoded learning. arXiv 2022, arXiv:2201.12354.

- Rao, C.; Ren, P.; Wang, Q.; Buyukozturk, O.; Sun, H.; Liu, Y. Encoding physics to learn reaction–diffusion processes. Nat. Mach. Intell. 2023, 5, 765–779.

- Rao, C.; Sun, H.; Liu, Y. Hard encoding of physics for learning spatiotemporal dynamics. arXiv 2021, arXiv:2105.00557.

- Li, W.; Bazant, M.Z.; Zhu, J. A physics-guided neural network framework for elastic plates: Comparison of governing equations-based and energy-based approaches. Comput. Methods Appl. Mech. Eng. 2021, 383, 113933.

- Brunton, S.L.; Proctor, J.L.; Kutz, J.N. Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proc. Natl. Acad. Sci. USA 2016, 113, 3932–3937.

- Cybenko, G. Approximation by superpositions of a sigmoidal function. Math. Control Signals Syst. 1989, 2, 303–314.

- Funahashi, K.-I. On the approximate realization of continuous mappings by neural networks. Neural Netw. 1989, 2, 183–192.

- Hornik, K.; Stinchcombe, M.; White, H. Multilayer feedforward networks are universal approximators. Neural Netw. 1989, 2, 359–366.

- Boullé, N.; Townsend, A. A mathematical guide to operator learning. arXiv 2023, arXiv:2312.14688.

- Maier, A.; Köstler, H.; Heisig, M.; Krauss, P.; Yang, S.H. Known operator learning and hybrid machine learning in medical imaging—A review of the past, the present, and the future. Prog. Biomed. Eng. 2022, 4, 022002.

- Chen, T.; Chen, H. Universal approximation to nonlinear operators by neural networks with arbitrary activation functions and its application to dynamical systems. IEEE Trans. Neural Netw. 1995, 6, 911–917.

- Kovachki, N.; Li, Z.; Liu, B.; Azizzadenesheli, K.; Bhattacharya, K.; Stuart, A.; Anandkumar, A. Neural operator: Learning maps between function spaces with applications to PDEs. J. Mach. Learn. Res. 2023, 24, 1–97.

- Lu, L.; Jin, P.; Pang, G.; Zhang, Z.; Karniadakis, G.E. Learning nonlinear operators via DeepONet based on the universal approximation theorem of operators. Nat. Mach. Intell. 2021, 3, 218–229.

- Li, Z.; Kovachki, N.; Azizzadenesheli, K.; Liu, B.; Bhattacharya, K.; Stuart, A.; Anandkumar, A. Fourier neural operator for parametric partial differential equations. arXiv 2020, arXiv:2010.08895.

- Tripura, T.; Chakraborty, S. Wavelet neural operator: A neural operator for parametric partial differential equations. arXiv 2022, arXiv:2205.02191.

- Cao, Q.; Goswami, S.; Karniadakis, G.E. Laplace neural operator for solving differential equations. Nat. Mach. Intell. 2024, 6, 631–640.

- Raonic, B.; Molinaro, R.; Rohner, T.; Mishra, S.; de Bezenac, E. Convolutional neural operators. In Proceedings of the ICLR 2023 Workshop on Physics for Machine Learning, Kigali, Rwanda, 4 May 2023.

- Fanaskov, V.S.; Oseledets, I.V. Spectral neural operators. Dokl. Math. 2023, 108, S226–S232.

- Goswami, S.; Bora, A.; Yu, Y.; Karniadakis, G.E. Physics-informed deep neural operator networks. In Machine Learning in Modeling and Simulation: Methods and Applications; Springer: Berlin/Heidelberg, Germany, 2023; pp. 219–254.

- Cilimkovic, M. Neural Networks and Back Propagation Algorithm; Institute of Technology Blanchardstown: Dublin, Ireland, 2015; Volume 15, p. 18.

- Goswami, S.; Yin, M.; Yu, Y.; Karniadakis, G.E. A physics-informed variational DeepONet for predicting crack path in quasi-brittle materials. Comput. Methods Appl. Mech. Eng. 2022, 391, 114587.

- Wang, S.; Wang, H.; Perdikaris, P. Learning the solution operator of parametric partial differential equations with physics-informed DeepONets. Sci. Adv. 2021, 7, eabi8605.

- Jin, P.; Meng, S.; Lu, L. MIONet: Learning multiple-input operators via tensor product. SIAM J. Sci. Comput. 2022, 44, A3490–A3514.

- Tan, L.; Chen, L. Enhanced DeepONet for modeling partial differential operators considering multiple input functions. arXiv 2022, arXiv:2202.08942.

- Aldirany, Z.; Cottereau, R.; Laforest, M.; Prudhomme, S. Operator approximation of the wave equation based on deep learning of Green’s function. Comput. Math. Appl. 2024, 159, 21–30.

- Hendrycks, D.; Gimpel, K. Gaussian error linear units (GELUs). arXiv 2016, arXiv:1606.08415.

- Li, Y.; Yuan, Y. Convergence analysis of two-layer neural networks with ReLU activation. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Zhu, M.; Feng, S.; Lin, Y.; Lu, L. Fourier-DeepONet: Fourier-enhanced deep operator networks for full waveform inversion with improved accuracy, generalizability, and robustness. Comput. Methods Appl. Mech. Eng. 2023, 416, 116300.

- Wen, G.; Li, Z.; Azizzadenesheli, K.; Anandkumar, A.; Benson, S.M. U-FNO—An enhanced Fourier neural operator-based deep-learning model for multiphase flow. Adv. Water Resour. 2022, 163, 104180.

- Guo, Z.; Chai, L.; Huang, S.; Li, Y. Inversion-DeepONet: A Novel DeepONet-Based Network with Encoder-Decoder for Full Waveform Inversion. arXiv 2024, arXiv:2408.08005.

- Li, S.; Li, Z.; Mu, Z.; Xin, S.; Dai, Z.; Leng, K.; Zhang, R.; Song, X.; Zhu, Y. GlobalTomo: A global dataset for physics-ML seismic wavefield modeling and FWI. arXiv 2024, arXiv:2406.18202.

- Wagner, J.E.; Burbulla, S.; de Benito Delgado, M.; Schmid, J.D. Neural Operators as Fast Surrogate Models for the Transmission Loss of Parameterized Sonic Crystals. In Proceedings of the NeurIPS 2024 Workshop on Data-driven and Differentiable Simulations, Surrogates, and Solvers, Vancouver, BC, Canada, 15 December 2024.

- Bao, Y.; Li, H. Machine learning paradigm for structural health monitoring. Struct. Health Monit. 2021, 20, 1353–1372.

- Smarsly, K.; Dragos, K.; Wiggenbrock, J. Machine learning techniques for structural health monitoring. In Proceedings of the 8th European Workshop on Structural Health Monitoring (EWSHM 2016), Bilbao, Spain, 5–8 July 2016; pp. 5–8.

- Gubernatis, J.; Lookman, T. Machine learning in materials design and discovery: Examples from the present and suggestions for the future. Phys. Rev. Mater. 2018, 2, 120301.

- Moosavi, S.M.; Jablonka, K.M.; Smit, B. The role of machine learning in the understanding and design of materials. J. Am. Chem. Soc. 2020, 142, 20273–20287.

- Erickson, B.J.; Korfiatis, P.; Akkus, Z.; Kline, T.L. Machine learning for medical imaging. RadioGraphics 2017, 37, 505–515.

- Yang, Y.; Gao, A.F.; Castellanos, J.C.; Ross, Z.E.; Azizzadenesheli, K.; Clayton, R.W. Seismic wave propagation and inversion with neural operators. Seism. Rec. 2021, 1, 126–134.

- Song, C.; Wang, Y. High-frequency wavefield extrapolation using the Fourier neural operator. J. Geophys. Eng. 2022, 19, 269–282.

- Zhang, T.; Innanen, K.; Trad, D. Learning the elastic wave equation with Fourier Neural Operators. Geoconvention 2022, 2022, 1–5.

- Li, B.; Wang, H.; Feng, S.; Yang, X.; Lin, Y. Solving seismic wave equations on variable velocity models with Fourier neural operator. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–18.

- Lehmann, F.; Gatti, F.; Bertin, M.; Clouteau, D. Fourier neural operator surrogate model to predict 3D seismic waves propagation. arXiv 2023, arXiv:2304.10242.

- Kong, Q.; Rodgers, A. Feasibility of Using Fourier Neural Operators for 3D Elastic Seismic Simulations; Lawrence Livermore National Laboratory (LLNL): Livermore, CA, USA, 2023.

- Middleton, M.; Murphy, D.T.; Savioja, L. The application of Fourier neural operator networks for solving the 2D linear acoustic wave equation. In Forum Acusticum; European Acoustics Association: Turin, Italy, 2023.

- Rosofsky, S.G.; Al Majed, H.; Huerta, E. Applications of physics informed neural operators. Mach. Learn. Sci. Technol. 2023, 4, 025022.

- Konuk, T.; Shragge, J. Physics-guided deep learning using Fourier neural operators for solving the acoustic VTI wave equation. In Proceedings of the 82nd EAGE Annual Conference & Exhibition, Amsterdam, The Netherlands, 18–21 October 2021; European Association of Geoscientists & Engineers: Utrecht, The Netherlands, 2021.

- Guan, S.; Hsu, K.-T.; Chitnis, P.V. Fourier neural operator network for fast photoacoustic wave simulations. Algorithms 2023, 16, 124.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).