Abstract

In this study, we propose a digital domain TDI-CMOS dynamic imaging method based on minimum search domain alignment, which consists of five steps: image-motion vector computation, image jitter estimation, feature pair matching, global displacement estimation, and TDI accumulation. To solve the challenge of matching feature point pairs in dark and low-contrast images, our method first optimizes the size and position of the search box using an image motion compensation mathematical model and a satellite platform jitter model. Then, the feature point pairs that best match the extracted feature points of the reference frame are identified within the search box of the target frame. After that, a kernel density estimation algorithm is proposed for calculating the displacement probability density of each feature point pair to fit the actual displacement between two frames. Finally, we align and superimpose all the frames in the digital domain to generate a delayed integral image. Experimental results show that this method greatly improves the alignment speed and accuracy of dark and low-contrast images during dynamic imaging. It effectively mitigates the effects of image motion and jitter from the spatial camera, and the fitted global image motion error is kept below 0.01 pixels, which is compensated to improve the MTF coefficient of the image motion and jitter link to 0.68, thus improving the imaging quality of TDI.

1. Introduction

High-resolution optical remote sensing satellites hold significant value in various fields, including Earth observation, military reconnaissance, geographic mapping, and resource surveys. Complementary metal–oxide–semiconductor (CMOS) devices equipped with time delay integration (TDI) functionality are widely utilized in high-resolution satellite image acquisition due to their high signal-to-noise ratio (SNR) and sensitivity. However, the imaging quality of TDI is influenced by several factors, such as image motion and satellite platform jitter [1].

Image motion occurs during normal push-broom scanning, where the image of the ground target and the image detector surface experience relative motion. Orbital parameters and satellite attitude typically determine the direction and magnitude of this motion. In dynamic scenes, rapid movement of the target can lead to blurring and displacement of the image during exposure, which in turn affects the clarity and accuracy of the final image [2,3].

Current methods for compensating image motion can be categorized into four types [4,5,6]: mechanical, optical, electronic, and image-based techniques. The image-motion compensation of mainstream high-resolution remote sensing satellites mainly relies on a combination of mechanical adjustment of the bias angle and electronic correction of the magnitude of the image-motion velocity. Specifically, the bias mechanism is calibrated based on the calculated results of the image-motion vector to correct for angular deviations, while the magnitude of motion speed deviation is addressed by adjusting the transmission frequency of the TDI line.

However, the incorporation of additional mechanical structures introduces significant complexity, increasing the size and mass of the payload and posing challenges in meeting the high-speed, multi-directional, and high-frequency imaging requirements of agile satellites. In light of these limitations, advancements in onboard computational capabilities and digital image processing technologies have led to a growing emphasis on image-based self-compensation for image motion. This innovative approach has garnered considerable attention and is rapidly evolving, presenting a promising alternative to traditional compensation methods.

Satellite platform jitter also significantly affects TDI imaging quality [7]. Satellite jitter arises from attitude adjustments and the periodic motion of satellite components during orbital operations. Due to the high sensitivity and uncontrollability of satellite jitter, its effects are challenging to mitigate through physical means [8]. Offsets occur when satellite jitter is transmitted to the image plane, altering the geometric and radiometric information of the image and directly leading to a degradation in image quality. The primary jitter sources on the satellite platform include the solar sail panel, the magnetic torque rod, the attitude control flywheel, and the control moment gyroscope (CMG). These jitter sources cause distortion and blurring in TDI images, and the impact of satellite platform jitters on imaging quality worsens as the spatial resolution of the payload increases [9].

For the compensation of satellite platform jitter effects, one is to use the data collected by the attitude sensor for direct image distortion correction, and the other is to reconstruct the degraded image to its original form by image restoration through Wiener filtering, constrained least squares filtering, inverse filtering, the Lucy–Richardson algorithm, and so on, using the platform tremor a priori knowledge [10].

However, these methods have notable shortcomings. First, resampling techniques may result in the loss of image information when addressing high-frequency jitter, particularly in the presence of multiple vibration sources on the starboard platform. Second, reconstruction methods that rely on a priori knowledge are highly dependent on the accuracy of the jitter model; inaccuracies in the model can significantly diminish reconstruction quality. Additionally, existing techniques often struggle to achieve optimal compensation effects in complex scenes with multi-source dithering, leading to unsatisfactory final image quality.

This paper introduces an innovative digital domain TDI-CMOS imaging technique that utilizes minimum search domain alignment to address these challenges. This approach aims to simultaneously mitigate the effects of satellite platform jitter on TDI imaging throughout the imaging process. The proposed method is specifically designed for the area-array push-scan imaging mode. It facilitates the integration of area-array dark and low-contrast images by optimizing the image alignment process and estimating the global displacement between frames. In the following sections, we will elaborate on the underlying principles of the method and evaluate its effectiveness in practical applications.

The remainder of this paper is organized as follows: Section 2 presents the working principle of the digital domain TDI-CMOS. Section 3 describes each imaging process of this method. Section 4 provides a simulation experiment of the digital domain TDI-CMOS imaging process. The simulation analysis is discussed in Section 5. Finally, the conclusion of this study is given.

2. Digital Image Domain TDI-CMOS Operating Principle

TDI-CMOS is a widely used imaging technology in remote sensing. Its working principle is based on TDI technology, wherein the image sensor comprises multiple rows, each capable of independently receiving optical signals. When the space camera and the scene move relative to each other, each row of the sensor captures optical signals from the same target at different times. This process enhances imaging quality and improves the SNR by successively sampling the same target across multiple time windows.

In contrast to traditional analog TDI, the digital domain TDI technique motions the charge signal integration process to an off-chip field-programmable gate array (FPGA) or processor, where the analog signal has been converted into digital format. In off-chip TDI imaging, the line time, frame period, null time, and number of TDI stages are calculated based on the requirements of TDI imaging. The CMOS then adjusts the drive timing according to these parameters, continuously outputting signals obtained from multiple exposures of the same target. These digital signals are synchronized with optical scanning and superimposed in the FPGA with memory, resulting in an integrated digital signal output [11].

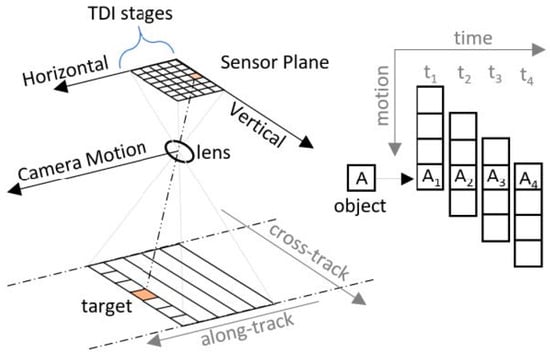

The digital domain TDI is commonly employed in area-scanning imaging mode, where the area-scanning detector continuously captures images at a high frame rate along the track direction. This imaging mode allows for acquiring multi-frame images with high overlaps of the same scene. As shown in Figure 1, the imaging mode under which the satellite flies along its orbit and images a ground scene takes place at the sensor plane through the lens, and multiple frames of high overlap images to of the same scene A can be obtained, which are aligned to realize the superposition of the digital images in the off-chip FPGAs. The original frames, which may have low SNR, can be matched and superimposed, improving the SNR of the final output image by a factor of on M-stage TDI. Additionally, redundant information in the highly overlapping multi-frame images provides significant flexibility for both spaceborne and ground processing.

Figure 1.

Schematic diagram of space-based TDI imaging.

Our previous work [12] proposed an off-chip digital domain TDI-CMOS camera and an image-motion self-compensation algorithm. In this study, we build upon this foundation by simultaneously implementing self-compensation for inter-frame image motion and tremor in the digital domain, without relying on a mechanical deflection angle adjustment mechanism.

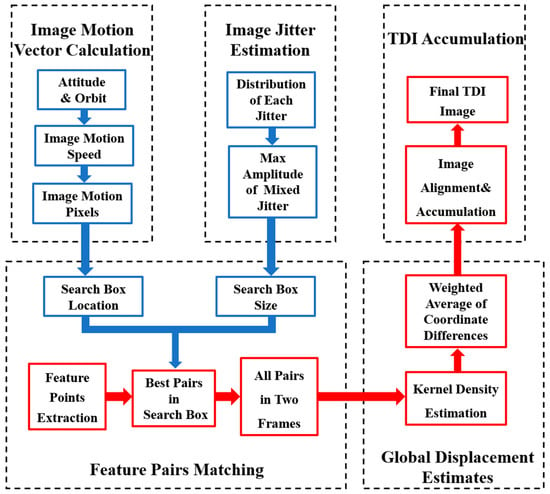

3. TDI Imaging Method Based on Minimum Search Domain

This method comprises five steps: (1) calculating the image-motion vector at the moment of imaging, (2) estimating the image jitter volume, (3) matching feature point pairs within a minimum search domain, (4) estimating the global displacement volume, and (5) aligning and performing TDI accumulation. The specific flow is illustrated in Figure 2.

Figure 2.

Block diagram of TDI imaging method flow based on minimum search domain.

3.1. Image-Motion Vector Calculation

Currently, the primary method for calculating image displacement is based on the coordinate transformation of the image displacement velocity vector. This approach necessitates the use of matrix transformation to establish a precise coordinate relationship between the object point and the image point. Subsequently, the coordinate equation of the image point concerning the target’s image plane position is derived through coordinate correspondence. The image displacement velocity vector equations for the points on the image plane can be obtained by differentiating this equation concerning time.

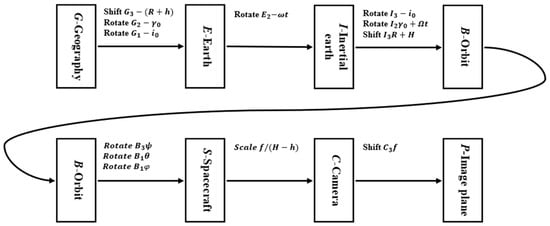

As shown in Figure 3, according to the 11 matrix linear transformations of 7 coordinate systems proposed in the literature [13] as an example, it has gone through the geographic coordinate system G(, , ) to the earth coordinate system E(, , ) to the geocentric inertial coordinate system I(, , ) to the spacecraft orbit coordinate system B(, , ) to the spacecraft coordinate system S(, , ) to the camera coordinate system C(, , ) and finally to the image plane coordinate system P(, , ) of the seven transformations. The scene in the image plane at the coordinates of P(, ) can be calculated by its coordinates in the geographical coordinate system G(, ). The process for calculating the image motion velocity vector on the image plane is as follows:

Figure 3.

Transformation relationships between major coordinate systems in Earth imaging.

Differentiating Equation (1) with respect to time t yields the equation for the image motion velocity at the image plane:

In this context, represents the radius of the Earth relative to its center; is the spacecraft orbital altitude; is the focal length of the camera lens; is the height of the feature terrain at the photographed target; , , and are the roll, pitch, and yaw attitude angles of the spacecraft’s coordinate system for the orbital coordinate system, respectively; , with being the central angle corresponding to the point of descending crossover of the spacecraft to the orbital plane at the moment of photo-graphing, and being the average angular rate of spacecraft rotation around the earth’s center along the orbit; is the orbital inclination; and ω is the angular rate of earth rotation.

The calculated values , represent the image motion velocities in the X and Y direction of the image surface. For a single frame exposure time , the image motion during the imaging time interval from the 1st frame to the kth frame, can be expressed in terms of the deviation of the image motion in the rows and columns of the image:

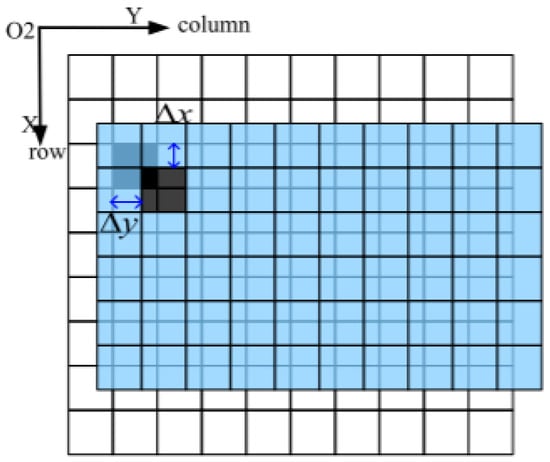

Similarly, as shown in Figure 4, for an image detector with pixel size , the number of pixels motioned in the row and column directions of the image in the imaging plane during the time interval between the 1st frame and the kth frame can be expressed as follows:

Figure 4.

Diagram of image motion at the focal plane.

3.2. Image Jitter Estimation

During Earth observation using space-based optical remote sensors, platform jitters are characterized by small amplitudes, a wide frequency spectrum, and various forms. The amplitude typically occurs on the sub-pixel scale, ranging from 0.1 Hz to 1 kHz [10]. These jitters are often not simply harmonic or linear; instead, they manifest as the superposition of multiple jitter sources and combinations of motions. This complexity complicates predicting and inhibiting jitter amplitudes at the imaging focal plane at any given moment.

In this paper, we examine combined jitters as a case study to investigate the displacement of a focal plane image resulting from the superposition of low-frequency, high-frequency, and random jitter sources [14]. Their functional distributions and key parameters are presented in Table 1.

Table 1.

Platform jitter parameter types.

According to the 3σ quasi-measurement, for a single random jitter that follows a normal distribution , there is 99.73% certainty that its amplitude is within the interval . The maximum amplitude of this single random jitter can be expressed as follows:

The maximum amplitude of the mixed jitter resulting from the superposition of multiple low-frequency, high-frequency, and random jitter sources can be expressed as follows:

3.3. Feature Pairs Matching

Traditional image-matching methods typically employ the same feature description operator to extract all feature points from two frames. During the subsequent matching point pair search process, each feature point in the target frame is calculated and compared with every feature point in the reference frame to obtain the globally optimal matching feature point pair. However, when dealing with a large number of feature points, the matching point pair search can consume up to 70% of the computation time [15]. Additionally, due to the large frame width, low brightness, and low signal-to-noise ratio of single-frame surface-array TDI images, traditional image-matching methods are often computationally intensive and prone to matching errors, making it challenging to meet the high-precision and fast-response imaging requirements of on-planet TDI.

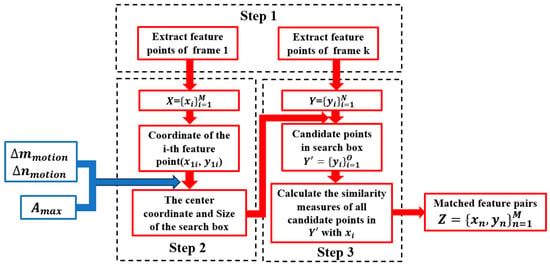

The feature point pair matching in the minimum search domain proposed in this paper consists of the following steps outlined in Figure 5:

Figure 5.

Flowchart of feature point pair matching.

First, all feature points in the reference frame and the frame to be matched are extracted using a null domain feature point operator. The SIFT (scale-invariant feature transform) operator is a classical and most commonly used feature extraction operator and is selected for this experiment due to its invariance to rotation, scale, and affine transformations, as well as for its stability to noise, viewing angle, and illumination [16].

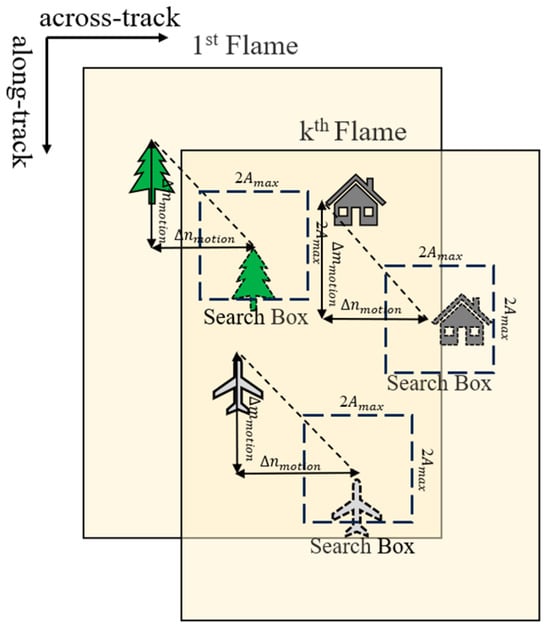

In the second step, as shown in Figure 6, the position of the search box is determined based on the inter-frame image motion derived from the image motion vector mathematical model in Section 3.1, and the size of the search box is determined based on the maximum magnitude derived from the image motion modelling in Section 3.2; each feature point extracted from the reference frame is found to have a desired position in the target frame, and a search box is built with the center of the point.

Figure 6.

Schematic of the search box.

Finally, the similarity measure, such as the Euclidean distance, is calculated between all feature points in the search box and their corresponding feature points in the base frame, leading to the identification of the matching feature point pair with the smallest distance.

Through the aforementioned three steps, all feature points in the base frame have identified matched candidates in the target frame. We then combine the matched point pairs into a set . For any pair of matched points between the base frame and the target frame with coordinates and , we can calculate their coordinate differences as follows:

The coordinate differences and of all feature points are then plotted as a scatter plot, which visually represents the displacement distribution between the feature points. By examining the shape and distribution of the scatter plot, we can initially assess the direction and magnitude of the global displacement.

3.4. Global Displacement Estimate

Kernel density estimation (KDE) is a non-parametric method that smooths data by placing a kernel function around each data point, thereby filling in the gaps between isolated observations and enabling the evaluation of the probability density of a random variable [17]. In Section 3.3, we obtained a set of matched feature point pairs. In this section, we will employ kernel density estimation to analyze the coordinate differences derived from these matched feature point pairs. These coordinate differences reflect the motion information between neighboring frames and can be utilized to estimate global displacement.

The formula for kernel density estimation is defined as follows [17]:

where is any variable belonging to the overall data, n is the number of data points in the sample, K is the kernel density function, and is the smoothing bandwidth that controls the degree of smoothing (with larger values of resulting in smoother data). In this study, according to the literature [18], the mean integrated squared error (MISE) is used to measure the difference between the estimated density function and the true density function, and its minimum value is used to determine the optimal bandwidth . And is a data point in the sample [19]. In this paper, the Gaussian distribution probability density function is employed as the kernel function, expressed as follows:

Substituting Equation (12) into Equation (11) yields the Gaussian kernel density function as follows:

By analyzing the kernel density estimation results for each coordinate difference point, the weighted average of these coordinate differences serves as an estimate of the global displacement. The pixel displacements corresponding to the image motion deviation between the frame to be aligned and the reference frame in the row and column directions are denoted by and :

3.5. TDI Accumulation

To align the images of each frame in the TDI accumulation process, we use M-stage integration as an example. The first frame in an imaging cycle serves as the reference frame, while the remaining M-1 frames are designated as the frames to be aligned. The integer parts of the pixel displacements in the row and column directions between the kth frame and the first frame are denoted as and :

Respectively. The fractional parts of the pixel displacements and can be expressed as follows:

Since the gray value of an image pixel is proportional to the number of photogenerated charges, which correspond to the light-sensitive area and the luminance of the target object [12], the contribution of each pixel cell to the pixel gray value must be considered. The pixel array of the kth frame image aligned with the first frame can be expressed as follows:

Finally, by summing the inter-frame images of the digital domain TDI-CMOS camera after M-frame alignment, we can obtain the pixel gray value of each pixel cell of the M-stage TDI cumulative image :

4. Experiments

4.1. Experimental Parameter Setting

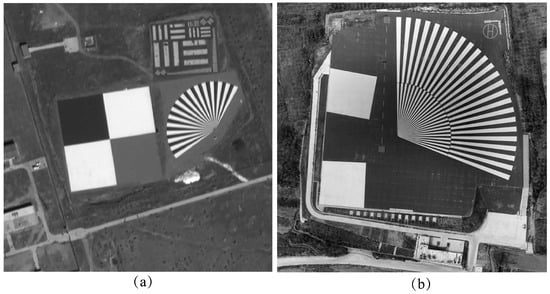

To verify the effectiveness of the proposed TDI imaging technique, we selected a satellite remote sensing image from the Baotou range and the Songshan range shown in Figure 7a and Figure 7b, respectively, with sizes of 1024 pixel × 1024 pixel and 2048 pixel × 2048 pixel to simulate image detectors with different numbers of image elements. The satellite platform and camera parameters at their imaging moments are detailed in Table 2.

Figure 7.

Raw remote sensing imagery of the target range. (a) Baotou range; (b) Songshan range.

Table 2.

Spacecraft and image detector indicators.

According to the image motion calculation model presented in Section 3.1, the image motion velocities in the along-track and across-track directions at the time of imaging are 0.01187 m/s and 0.001455 m/s, respectively. These velocities correspond to image motions of 0.9926 pixels and 0.1217 pixels between two adjacent frames in the along-track and across-track directions, respectively.

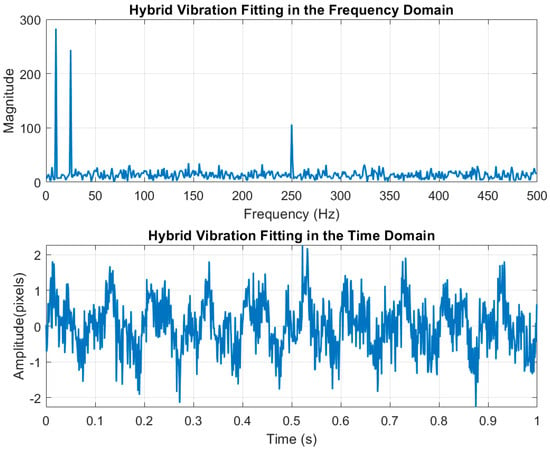

For the image jitter model, we established a hybrid jitter that encompasses low-frequency, high-frequency, and random jitters to simulate the jitter of the satellite platform during the imaging process. The parameters of this hybrid jitter are presented in Table 3, and the time-domain and frequency-domain spectra of the superimposed hybrid jitter are illustrated in Figure 8. The maximum amplitude of the hybrid jitter is 2 pixels, with a corresponding minimum search box size of 4 pixels by 4 pixels.

Table 3.

Satellite platform jitter type and parameter indicators.

Figure 8.

Frequency-domain and time-domain fitting plots for hybrid jitters.

4.2. Analysis of Alignment Indicators

In this section, we analyze the effectiveness and advancements of the algorithm proposed in this paper regarding both speed and accuracy. Because the single-frame image is too dark and weak, it is necessary to lower the values of contrast-threshold, edge-threshold, Sigma, and other parameters in the SIFT operator as much as possible in order to extract as many feature points as possible to avoid incomplete extraction of feature points. So, we extracted varying numbers of feature points by applying different thresholds with the SIFT operator on the experimental images. We then matched the feature point pairs using both the SIFT global and minimum search domains within the same algorithmic framework. The time taken for the matching process is presented in Table 4, which indicates that our method significantly outperforms SIFT global pairing. Notably, as the search box size decreases, the time required for matching also decreases, particularly in scenarios with a large number of feature points, where the running speed can reach up to 3.86 times that of SIFT global pairing.

Table 4.

Feature point pair matching and durations.

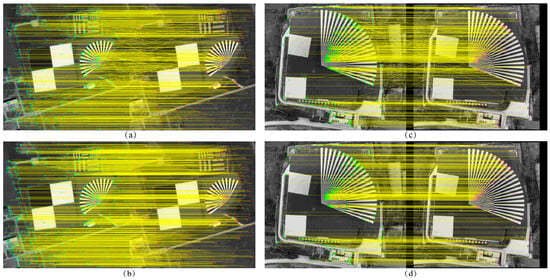

Figure 9 shows the comparison of the alignment effect of the dark and weak images between frames, and bolded parts indicate the shortest matching elapsed time for the same number of feature pairs. It can be seen that when the feature point extraction parameter threshold is set lower, a large number of mismatched points appear in the inter-frame displacement image, whereas the minimum search domain method aligns with fewer mismatched points and has better results in both experimental images.

Figure 9.

Comparison of the effect of inter-frame image alignment (a) Global matching of Baotou range (b) Minimum search domain matching of Baotou range (c) Global matching of Songshan range (d) Minimum search domain matching of Songshan range.

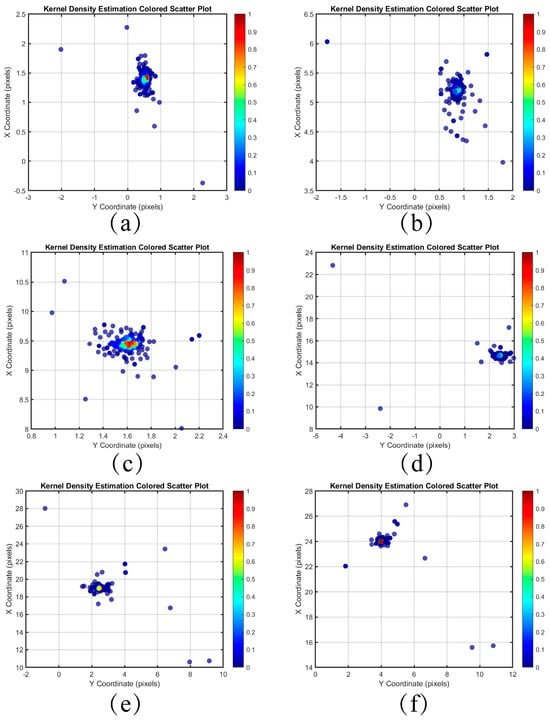

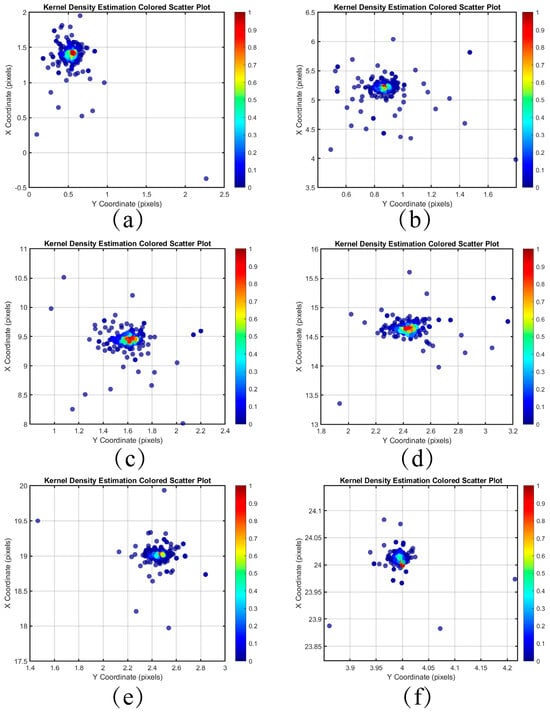

The distribution of the differences between the coordinates of the feature point pairs in each frame and the first frame after pairing the images of the Baotou range is shown in Figure 10 and Figure 11. The horizontal and vertical axes represent the coordinate differences in the Y and X directions, respectively. The colors of the scattered points indicate the kernel density estimation of the differences, with denser distributions corresponding to higher probability densities. Compared to inter-frame matching using only SIFT, the scatter point distribution obtained through the minimum search domain method is more concentrated, effectively mitigating the influence of outliers on the results.

Figure 10.

Kernel density estimation distribution of difference in coordinate pairs of feature points between each frame and the first frame using SIFT only. (a) Frame 2 (b) Frame 6 (c) Frame 10 (d) Frame 15 (e) Frame 20 (f) Frame 24.

Figure 11.

Kernel density estimation distribution of coordinate differences between each frame and the first frame feature point pair for the minimum search domain. (a) Frame 2 (b) Frame 6 (c) Frame 10 (d) Frame 15 (e) Frame 20 (f) Frame 24.

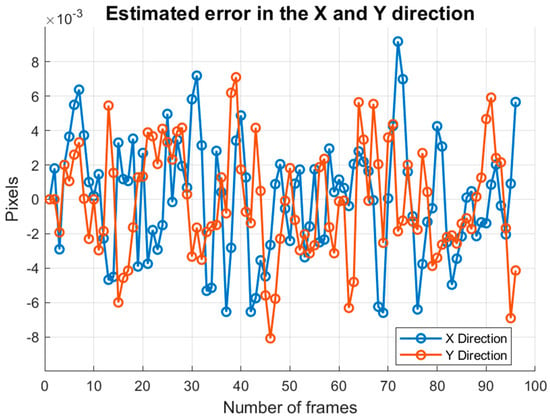

The coordinate differences of all feature point pairs in each frame are weighted and averaged to derive the global displacement estimates for each frame relative to the first frame. The error values are calculated by subtracting the global displacement estimates from the true displacements. As shown in Table 5, the minimum search domain method yields smaller error values compared to inter-frame matching using SIFT only, indicating greater accuracy in the inter-frame global displacement estimation. Figure 12 illustrates the distribution of error values from the minimum search domain algorithm across the 1st frame to the 96th frame. It is evident that the error values of the inter-frame displacements computed by this algorithm, along with the true displacements affected by image motion and platform tremor, remain within 0.01 pixels in both the across-track and along-track directions.

Table 5.

Analysis of global displacement estimates for each frame with respect to the first frame.

Figure 12.

Error analysis of the estimated displacement values for each frame.

4.3. Analysis of TDI Imaging Results

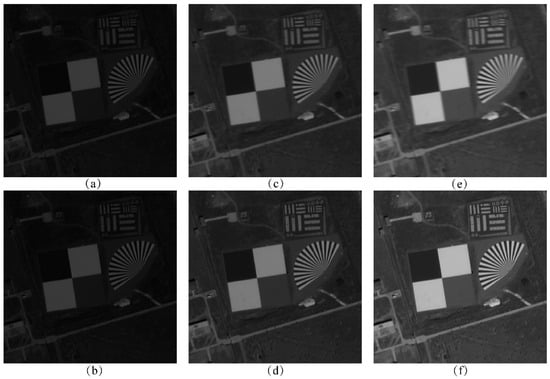

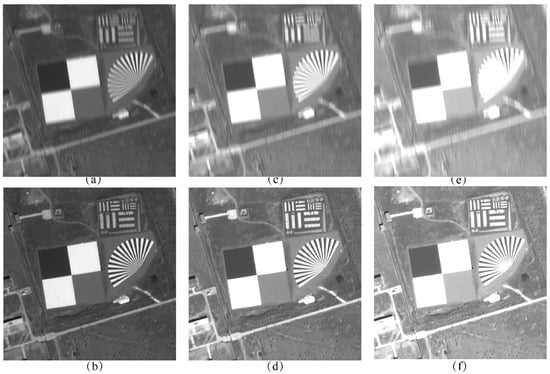

After aligning each frame of the dark and weak image according to the estimated global image motion, TDI images were generated using integration stages 6, 12, 18, 24, 36, and 48. Additionally, TDI images were generated using an uncompensated method for comparison. The simulation results of this algorithm and the uncompensated method for stages 6 to 18 are presented in Figure 13, while the results for stages 24 to 48 are shown in Figure 14.

Figure 13.

TDI stacking effects for 6–18 stages: (a) 6-stage without compensation; (b) 6-stage with our algorithm; (c) 12-stage without compensation; (d) 12-stage with our algorithm; (e) 18-stage without compensation; (f) 18-stage with our algorithm.

Figure 14.

TDI stacking effects for 24–48 stages: (a) 24-stage without compensation; (b) 24-stage with our algorithm; (c) 36-stage without compensation; (d) 36-stage with our algorithm; (e) 48-stage without compensation; (f) 48-stage with our algorithm.

Table 6 displays the objective metrics of the images generated at each TDI stage, utilizing five metrics: structural similarity index (SSIM), peak signal-to-noise ratio (PSNR), cross-correlation (CC), and information entropy to evaluate the generated TDI images. SSIM evaluates the perceived similarity of two images through brightness, contrast, and structural information; the closer the value is to 1, the more similar the two images are. PSNR quantifies the fidelity of an image based on the pixel mean-square error; the higher the value is, the lower the distortion. CC measures the degree of linear correlation of the pixel values of the two images; the higher the value is, the better the global consistency. And entropy shows the complexity of image information, calculated by the probability of grayscale distribution; the richer the texture, the higher the entropy value. The four metrics are used to evaluate the image characteristics in terms of perceptual quality, numerical accuracy, statistical correlation, and informativeness dimensions, respectively [20], so we use these four metrics to evaluate the generated TDI images.

Table 6.

Indicators for objective evaluation of TDI imaging results.

Observing Figure 13 and Figure 14, and Table 6, it can be found that the quality of the images generated by the algorithm is better than that of the uncompensated images at all stages, and the higher the stage, the higher the percentage of objective evaluation indexes improved.

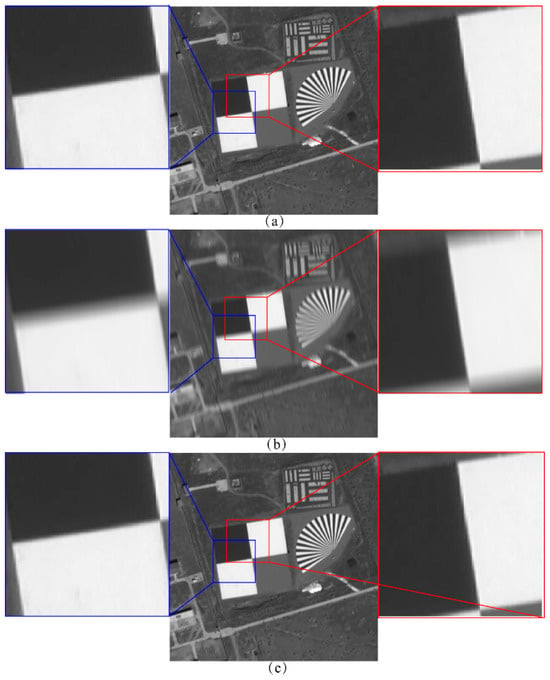

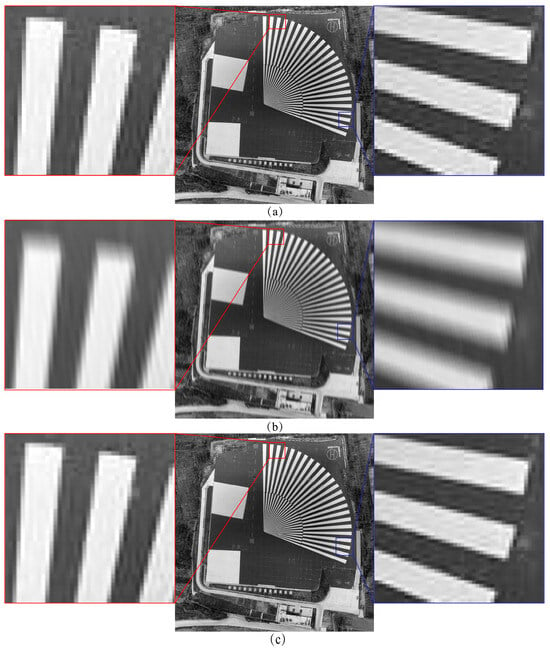

MTF (modulation transfer function) is a comprehensive index describing the response of the whole imaging link to spatial frequency, reflecting the ability of the system to transfer image details at different spatial frequencies, and it is an important parameter for evaluating the quality of remote-sensing images. According to ISO 12233 [21], the tilted edge method is a standard method for testing the resolution of electronic still image cameras [22], in which the response of an edge (usually a black and white demarcation line) is analyzed by placing it at an angle in the field of view of the imaging system, using gradient analysis.

Since the experimental images achieved the highest combined objective evaluation index at 24 integration stages, we analyzed the MTF of the TDI images obtained from both the uncompensated method and our algorithm. Figure 15 and Figure 16 illustrate the MTF in the across and along-track directions, measured using the tilted edge method, while Table 7 presents the calculated values for these directions.

Figure 15.

MTF of Baotou region images measured by the tilted edge method: (a) Ideal image; (b) The image without image motion and jitter compensation; (c) The image obtained by our algorithm.

Figure 16.

MTF of Songshan region images measured by the tilted edge method: (a) Ideal image; (b) The image without image motion and jitter compensation; (c) The image obtained by our algorithm.

Table 7.

Across-track and along-track MTF analysis.

It can be seen that the sharpness degradation of the image in the across-track direction is more drastic in the case of no compensation. The MTF of the Baotou region image is 0.08 times the ideal image in the across-track direction and 0.19 times in the along-track direction, and the MTF of the across-track and along-track direction is 0.68 times the ideal image after compensation by our algorithm. The MTF of the Songshan region image in the across-track direction is 0.15 times the ideal image, and the MTF in the along-track direction is 0.21 times the ideal, while the MTF in the across-track and along-track directions after compensation by our algorithm is 0.72 times and 0.69 times the ideal image, respectively. It is proved that the present algorithm can reduce the effects of image motion and jitter on the sharpness of TDI images in both across-track and along-track directions.

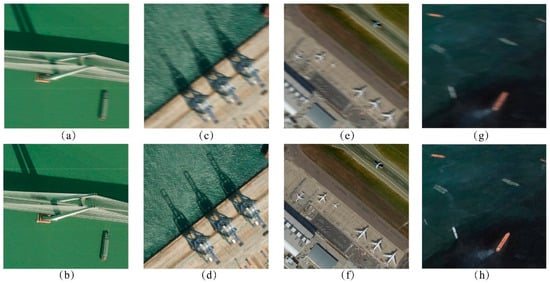

In the multispectral TDI imaging experiment, we extended the proposed digital domain TDI algorithm to multispectral remote-sensing images to evaluate its effectiveness with multispectral data. We selected a set of multispectral remote-sensing images from Google Maps containing scenes with various feature types. During the TDI imaging process, we employed the same algorithmic framework as for the panchromatic images, updating the satellite platform and image detector parameters at the time of imaging. Figure 17 shows that the multispectral images obtained using this algorithm are visually superior to uncompensated images. The quantitative analysis in Table 8 reveals that the SSIM of the images produced by this method improved by an average of 0.2886, and the PSNR value increased by an average of 15.02 dB compared to images without compensation, indicating a significant enhancement in imaging quality. These results demonstrate that the proposed algorithm is well-suited and effective for multispectral remote-sensing images.

Figure 17.

Multi-spectral TDI imaging results: (a) Bridge without compensation; (b) Bridge with our algorithm; (c) Harbor without compensation; (d) Harbor with our algorithm; (e) Airport without compensation; (f) Airport with our algorithm; (g) Ships without compensation; (h) Ships with our algorithm.

Table 8.

Indicators for objective evaluation of multispectral imaging results.

5. Discussion

Firstly, a complete set of digital domain TDI algorithms and processes are proposed for the on-star image-motion model and the jitter mechanism. Compared with the traditional image-motion compensation method, the algorithm (off-chip digital domain self-aligned TDI-CMOS camera) has the advantages of simple optical and mechanical structures and simultaneous elimination of image motion and tremor during the imaging process. The effectiveness of the algorithm is verified by simulation and imaging experiments. Secondly, the experimental results show that compared with the global search, this method can improve the alignment speed and alignment accuracy of inter-frame dark and weak images, and the error of inter-frame global image motion estimation is less than 0.01 pixels, which overcomes the difficulties of the TDI process, such as the large number of calculations of dark and weak image alignment and the large number of false matching points. The simulation and experimental results show that this method can improve the alignment speed of the dark and weak images between frames, and the error of the global image motion estimation between frames is less than 0.01 pixels. Compared with TDI imaging without image motion and jitter compensation, this method has obvious improvement in the indexes of structural similarity, peak signal-to-noise ratio, number of interrelationships, information entropy, and modulation transfer function.

In the future, this algorithm is expected to be embedded in the off-chip digital domain self-aligning TDI-CMOS camera, using the satellite attitude and orbit information and the tremor parameter to achieve the establishment of the minimum search domain in the alignment process, and then to achieve the accurate estimation of the global image motion estimate between frames, in order to release the digital compensation in the TDI imaging process with the help of the on-board processing system.

However, there are still some areas that can be improved: firstly, for the feature point extraction part, this method only uses the existing common operators, which can be optimized for new lightweight operators to adapt to the application scenarios of low illumination and complex texture, as well as to the application requirements of inter-frame displacement alignment. Then, this method can only achieve the motion compensation caused by image motion and jitter in the TDI process, and the experiment uses an ideal image without aberration; however, in actual space-based remote sensing, due to the non-ideal nature of the optical system, there are many kinds of aberrations which lead to distortion of the original image captured by the image detector, yet it can then be combined with the wavefront aberration compensation technology of the optical system and the aberration correction technology of the digital image processing so that it can also achieve aberration compensation during the TDI process.

6. Conclusions

The minimum search domain alignment method proposed in this study solves the three core problems of image motion and jitter compensation in TDI imaging by combining the strategy of optimized feature alignment and inter-frame displacement estimation:

- Joint compensation of image motion and jitter: Optimizing the search domain based on the image motion compensation model and the satellite platform jitter model can eliminate the effects of image motion and jitter on the imaging quality in the imaging process at the same time.

- Matching feature pairs between frames in the imaging process: Based on the constraints of image motion and jitter, the minimum search domain is used to take the local optimal pair as the global optimal pair, which significantly reduces the time-consumption of the matching process compared with the worldwide search.

- High-precision global displacement estimation: A kernel density estimation algorithm is proposed to deal with the probability distribution of inter-frame displacements efficiently, and the weighted assignment of each feature pair achieves sub-pixel level displacement estimation with an error of less than 0.01 pixels.

This method achieves inter-frame image alignment in the digital domain, avoids the complexity of traditional external mechanical compensation, and provides an efficient and low-cost solution for TDI-CMOS cameras in aerospace remote sensing. Future research can further combine the back-illumination technique and digital-to-analog conversion method of TDI-CMOS to expand the usage scenarios of non-push-scan imaging modes, and further explore methods such as deep learning to optimize sub-pixel-level self-compensation in the digital domain.

Author Contributions

H.L. did all the experimental work, data acquisition, and analysis; S.T., Q.F., and Z.L. contributed expertise, direction, materials, and experimental tools. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 62075219, in part by the Key Technological Research Projects of Jilin Province, China, under Grant 20240302018GX and 20250402007ZP, and in part by the Youth Innovation Promotion Association, Chinese Academy of Sciences, under Grant 2021218.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ruan, N.; Zhuang, X.; Li, T.; Man, Y. Full-link simulation and analysis of space optical remote sensing systems. Aerosp. Return Remote Sens. 2013, 34, 36–43. [Google Scholar]

- Zhuang, X.; Wang, Z.; Ruan, N.; He, J. Analysis of the impact of image motion on the imaging quality of spaceborne TDI CCD cameras. Aerosp. Return Remote Sens. 2013, 34, 66–73. [Google Scholar]

- Wahballah, W.A.; Bazan, T.M.; El-Tohamy, F.; Fathy, M. Analysis of smear in high-resolution remote sensing satellites. In Proceedings of the SPIE 10000, Sensors, Systems, and Next-Generation Satellites XX, Edinburgh, UK, 19 October 2016; Volume 100001J. [Google Scholar]

- Hao, W.; Yan, P.; Li, Z.; Cheng, Z.; She, W. Research on image motion and compensation technology development trends in space-based optical imaging systems (Invited). J. Photonics 2023, 52, 58–80. [Google Scholar]

- Li, W. Research on Image Motion Compensation Methods for Agile Imaging of High-Resolution Space Cameras. Master’s Thesis, Graduate School of the Chinese Academy of Sciences, Changchun Institute of Optics, Fine Mechanics and Physics, Changchun, China, 2012. [Google Scholar]

- Zhang, Y. Research on Image Acquisition and Processing System of Wide-Field Large-Area CCD Cameras. Master’s Thesis, Graduate School of the Chinese Academy of Sciences, Changchun Institute of Optics, Fine Mechanics and Physics, Changchun, China, 2010. [Google Scholar]

- Shi, J.; Xue, X.; Guo, Y. The impact of satellite vibration on the imaging quality of TDI CCD and compensation methods. Optoelectron. Eng. 2010, 37, 11–16. [Google Scholar]

- Pan, J.; Ye, G.; Zhu, Y.; Song, X.; Hu, F.; Zhang, C.; Wang, M. Jitter detection and image restoration based on continuous dynamic shooting model for high-resolution TDI CCD satellite images. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4915–4933. [Google Scholar] [CrossRef]

- Liu, S.; Zhang, H.; Tong, X.; Ye, Z.; Xie, H.; Lin, F. Effect of the matching window size and TDI stage number on image-based satellite jitter detection. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6508805. [Google Scholar] [CrossRef]

- Xing, W.; Tuo, W.; Li, X.; Wang, T.; Yang, C. Micro-vibration suppression and compensation techniques for in-orbit satellites: A review. Chin. J. Aeronaut. 2024, 37, 1–19. [Google Scholar] [CrossRef]

- Qu, H.; Zhang, Y.; Jin, G. Improvement of area array CMOS image sensor functions based on digital domain TDI algorithm. Opt. Precis. Eng. 2010, 18, 1896–1903. [Google Scholar]

- Tao, S.; Zhang, X.; Xu, W.; Qu, H. Realize the image motion self-registration based on TDI in digital domain. IEEE Sens. J. 2019, 19, 11666–11674. [Google Scholar] [CrossRef]

- Wang, J.; Yu, P.; Yan, C.; Ren, J.; He, B. Mathematical model for calculating the velocity vector of image motion in aerospace optical remote sensors. Acta Opt. Sin. 2004, 12, 1585–1589. [Google Scholar]

- Liu, H.; Li, X.; Xue, X.; Han, C.; Xue, X.; Sun, X. Detection of vibration parameters of spatial cameras using rolling shutter area array CMOS autocorrelation imaging. Opt. Precis. Eng. 2016, 24, 1474–1481. [Google Scholar]

- Dawn, S.; Saxena, V.; Sharma, B. Remote Sensing Image Registration Techniques: A Survey. In Image and Signal Processing; Elmoataz, A., Lezoray, O., Nouboud, F., Mammass, D., Meunier, J., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2010; Volume 6134. [Google Scholar] [CrossRef]

- Brown, M.; Lowe, D.G. Automatic panoramic image stitching using invariant features. Int. J. Comput. Vis. 2007, 74, 59–73. [Google Scholar] [CrossRef]

- Latecki, L.J.; Lazarevic, A.; Pokrajac, D. Outlier Detection with Kernel Density Functions. In Machine Learning and Data Mining in Pattern Recognition; Perner, P., Ed.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2007; Volume 4571. [Google Scholar] [CrossRef]

- Gasser, T.; Müller, H.G.; Mammitzsch, V. Kernels for Nonparametric Curve Estimation. J. R. Stat. Soc. Ser. B (Methodol.) 1985, 47, 238–252. [Google Scholar] [CrossRef]

- Wang, G.; Wang, Z.; Chen, Y.; Zhao, W.; Liu, X. Fuzzy correspondences and kernel density estimation for contaminated point set registration. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics, Hong Kong, China, 9 October 2015; pp. 1936–1941. [Google Scholar] [CrossRef]

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612. [Google Scholar] [CrossRef] [PubMed]

- Photography-Electronic Still Picture Imaging-Resolution and Spatial Frequency Responses: ISO 12233:2017[S/OL]. Available online: https://www.iso.org/standard/71696.html (accessed on 26 November 2021).

- Li, T.; Tao, X.; Feng, H.; Xu, Z. Calculation of Modulation Transfer Function Based on the Inclined Edge Method and Image Restoration. Acta Opt. Sin. 2010, 30, 2891–2897. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).