Abstract

Accurate track segment association plays an important role in modern sensor data processing systems to ensure the temporal and spatial consistency of target information. Traditional methods face a series of challenges in association accuracy when handling complex scenarios involving short tracks or multi-target intersections. This study proposes an intelligent association method that includes a multi-dimensional track data preprocessing algorithm and the characteristic-aware attention long short-term memory (CA-LSTM) network. The algorithm can segment and temporally align track segments containing multi-dimensional characteristics. The CA-LSTM model is built to perform track segment association and has two basic parts. One part focuses on the target characteristic dimension and utilizes the separation and importance evaluation of physical characteristics to make association decisions. The other part focuses on the time dimension, matching the application scenarios of short, medium and long tracks by obtaining the temporal characteristics of different time spans. The method is verified on a multi-source track association dataset. Experimental results show that association accuracy rate is 85.19% for short-range track segments and 96.97% for long-range track segments. Compared with the typical traditional method LSTM, this method has a 9.89% improvement in accuracy on short tracks.

1. Introduction

In modern military defense and air traffic control systems, multiple networked radars and sensors are deployed to form a system that can achieve multi-dimensional data processing and target tracking. As a core technology in radar data processing, multi-sensor track segment association and fusion is the key to situational awareness [1], directly determining the system’s global perception accuracy and decision reliability. With the widespread application of distributed heterogeneous sensors, track data will be affected by error superposition and spatiotemporal asynchrony [2].

Traditional track segment association methods are categorized into probabilistic statistical, fuzzy mathematical, and anti-bias tracks association algorithms. Probabilistic statistical methods, such as hypothesis testing-based methods, evaluate track correlation through statistics. Probabilistic statistical track segment association algorithms, such as weighted methods and statistical double threshold approaches, show good performance under low noise conditions. Wang et al. [3] solved the sensor-target count mismatch problem by using sequential m-best algorithms with cost likelihood function refinements. However, the real-time performance of such methods degrades due to the combinatorial explosion in dense target environments [2,4]. Li et al. [5] optimized the distance weight allocation of the optimal sub-pattern assignment (OSPA) and introduced a sliding window function. Wang et al. [6] proposed an engineering-oriented probabilistic data association (JPDA) algorithm to improve the data association accuracy and practicality in multi-target tracking scenarios. Fuzzy mathematical methods, including grey relational analysis, enhance adaptability to complex environments [7]. Xiong et al. [8] constructed multidimensional grey relational matrices with a dynamic feedback mechanism to mitigate the impact of time-varying noise. Guo et al. [9] reduced computational complexity in asynchronous multi-node scenarios via a hybrid virtual-real sequence temporal divergence algorithm. Su et al. [10] achieved track clustering using fuzzy membership functions. Chen et al. [11] proposed an asynchronous track-to-track association method based on pseudo-nearest neighbor distance and grey correlation theory. Zhang et al. [12] proposed to take the advantages of communicable collaboration in a peer-to-peer structure and establish an error correction model between high-precision and low-precision nodes. However, fuzzy mathematics methods are dependent on parameter settings and cannot adapt to dynamically changing complex environments. Anti-bias tracks association algorithms focus on reducing sensor biases and false alarms. Yang et al. [13] designed visual equivalence factors based on target measurement error distributions for cross radar associations using classical assignment methods. Cui et al. [14] developed generalized spatiotemporal intersection matching models to suppress regional bias effects. Zhang et al. [15] enhanced association stability in dense clutter environments through relative coordinate allocation matrices (RCAM). However, the robust association method is not capable of modeling features and cannot be generalized.

Breakthroughs in the field of deep learning have provided new methods for track segment association and fusion [16]. With their powerful characteristic extraction and nonlinear modeling capabilities, these methods achieve data-driven association decisions [17,18]. Deep learning architecture substantially overcomes the limitations of traditional approaches caused by dependence on specific motion models, allowing it to avoid the impact caused by the difference between the hypothetical model and the complex track scenarios in the real world. Convolutional neural networks (CNNs) and long short-term memory (LSTM) networks effectively exploit dynamic temporal patterns in tracks and reduce the reliance of traditional methods on predefined motion models [19,20]. Generative adversarial networks (GANs) and contrastive learning further enhance the robustness of discontinuous track segment association [21,22]. However, current research predominantly focuses on single scenario associations, and areas such as end-to-end fusion frameworks and multi-scene adaptive algorithms need further exploration [23].

Multilayer perceptions (MLPs) were initially introduced for time series classification (TSC) tasks due to their simplicity, but they struggled to capture temporal dependencies and multi-scale characteristics [24]. Variants of CNNs, such as multi-channel deep convolutional neural networks (MC-DCNN), can handle multivariate data by processing each channel independently [25]. Fully convolutional network (FCN) enhances input flexibility through global average pooling [26]. Through the modular network architecture, multi-level models based on specific networks and parallel fusion structures are also proposed [27,28]. Cao et al. [29] proposes the contrastive Transformer encoder representation network (TSA-cTFER) based on contrastive learning and Transformer encoder, integrating a two-stage online algorithm for dynamic management of track segment association.

To address these challenges, this paper proposes an association data preprocessing algorithm and the CA-LSTM model. The model establishes a track segment association method through the collaborative optimization of multi-scale dilated convolution, characteristic-aware attention, and gated adaptive LSTM. The main contributions are as follows:

- This paper developed an association data preprocessing algorithm that can segment and time-series synchronize the data from different sensors within the same time window. At the same time, different tracks are combined to form inputs suitable for the model.

- The CA-LSTM model optimizes characteristic representation through three mechanisms: characteristic group embedding, encoding, and characteristic-aware attention. This method can assign characteristic weights based on model training results, effectively solving the waste of multi-dimensional data caused by using only position characteristic data in traditional methods.

- The model combines a gated adaptive LSTM and a multi-scale dilated convolution to optimize the representation of the time dimension. The two modules can extract the characteristics of the input in the time dimension, and obtain the short-range and medium-range correlations of adjacent nodes and the long-range correlations of the entire track.

Verification on the multi-source track segment association dataset shows that the proposed method outperforms existing deep learning models in core metrics. This method provides a technical method for intelligent collaborative sensing technology in distributed sensor networks.

2. Track Segment Association Problem Formulation

2.1. Problem Description

In multi-sensor information fusion systems, track segment association aims to correlate track data from heterogeneous sensors to the same physical target while eliminating systematic biases. Let source , for example, radar station A, provide a track set containing tracks, and source , for example, radar station B, provides with tracks. Each track comprises sequential state observations

where denotes the sensor source, is the track index, and represents the temporal index set. defines the state vector at time , including Cartesian coordinates , velocity , heading angle , and some other dimension characteristic data. Assume that the number of characteristics is represented by parameter , and the dimension of vector is .

The core objective is to derive an optimal mapping function which associates each track from source to its counterpart from source , or labels it as unassociated. This reduces to a binary classification task for track pairs to determine their association likelihood.

2.2. Association Characteristic Processing Algorithm

In actual scenarios, significant heterogeneity exists in track data acquisition from different sensors. Some sensors in the network operate at higher refresh rates, while others have latency. Variations in update cycles can cause track points to be inconsistent in time and have differences in the number of observations within the same time interval. These differences require temporal alignment through data preprocessing methods.

The alignment process begins with the association data processing module, which filters and combines the raw association results. By analyzing the data characteristics, specific track data combinations meeting predefined criteria are selected, establishing a foundation for subsequent processing. In the time alignment part, the shared temporal coverage is first determined for different sensors’ data matrices. This involves identifying the minimum and maximum timestamps across matrices to define a common time interval. Data within this interval are then filtered and aligned using window-based operations. For example, given a row of characteristic data from sensor A with timestamp , a time window centered at is defined as the data of sensor B. The average value of the relevant characteristics within this window is calculated to achieve preliminary time alignment. Subsequently, the scenario data processing function groups data by source identifiers and batch numbers. Using the results from the associated data processing stage, corresponding grouped data undergo further alignment and merging operations. This ensures the consistency of the multi-sensor dataset in structure and time.

Through these preprocessing steps, challenges caused by sensor temporal inconsistencies and observation quantity differences are effectively mitigated. The implemented temporal alignment provides a unified and accurate data foundation for subsequent track-based analysis and applications.

The temporal data alignment and windowing process is initiated as follows, for two temporal data matrices A and B, where matrix A consists of the state vectors of the track connected in time order, and matrix B is constructed in a way similar to track . The shared temporal range is first determined by formula

where . The data matrices and are filtered out within this time range, and a numerical column aggregation alignment operation is performed on the data matrices to finally obtain . The calculation is as follows

At this time, the dimension of matrix and matrix is . In the window division stage, the number of windows is calculated based on predefined window size and sliding step

where denotes the index count of track data matrix . In the subsequent experiments in this study, the window size is generally determined to be the length of the multidimensional data of the track segment. Assuming that the track segment is a 10-point track segment, the window size is defined as . The sliding step size is set as , one fifth of the window length. This ensures that the track segment data generates more training samples, enhances the robustness of the model, and does not increase the computational cost too much while covering data changes.

For each window, the and indices are computed as

After extracting windowed data, matrices and are combined in chronological order to form the windowed result matrix . This structured windowing process facilitates setting a standardized data format for subsequent analysis and model training.

Differential processing is applied to paired track data within to compute column-wise differences

where denotes the -th window’s track data, , represents the -th timestamp within the track segment, , and indicates the longitude value at the -th timestamp in the -th window of sensor .

Normalization is subsequently executed. Taking longitude as an example following

represents the maximum value in , and represents the minimum value in . Latitude , speed , and heading undergo analogous normalization. These preprocessing steps produce standardized data suitable for subsequent analysis and modeling tasks. The algorithm adopts a sequential architecture for track segment association analysis, as detailed in Algorithm 1.

| Algorithm 1: Association characteristic processing algorithm |

| Input : Sensor matrices A(α), B(β), window size w, slide step δ = ∅ = max (min (, min ()) = min (max (), max ()) ] n = ⌈((length of ) − w)/δ⌉ + 1 for γ = 1: n do s = (γ − 1) * δ, ) [s:e] if ≠ ∅ then ) else = ∅ if ≠ ∅ then for f = x, y, v, θ do = (Δf − min(Δf))/(max(Δf) − min(Δf)) end end end |

2.3. Association Decision Output

The association decision uses a specific algorithm to evaluate the track data in pairs to measure the correlation or other association metrics between the tracks. If a pair of tracks can be determined to belong to the same target according to predefined criteria and thresholds, they are labeled as positive class, usually represented by 1. Conversely, if the pair is considered to represent different targets, it is labeled as a negative class, which is typically denoted as 0. For a given track pair , the association decision algorithm calculates an association metric value . The boundary between association and non-association is divided by a pre-defined threshold .

The association decision output is formally defined as a binary classification label

where denotes the output label for the track pair , and is a threshold determined by empirical or theoretical analysis. The analysis is determined according to specific application scenarios and data characteristics.

In deep learning approaches, the track characteristics to be adjudicated are fed into a trained classifier that outputs binary labels. The input track data tensor undergoes characteristic transformation and nonlinear mapping through fully connected layers and leaky rectified linear unit activation function , thereby generating an output vector

where denotes the first layer weight matrix and denotes the second layer weight matrix. and represent the bias vectors of the two layers, respectively. The output vector is converted into a probability vector through the soft maximum function , and the final classification label is determined by comparative probability values following

3. The CA-LSTM Model

3.1. Overall Architecture

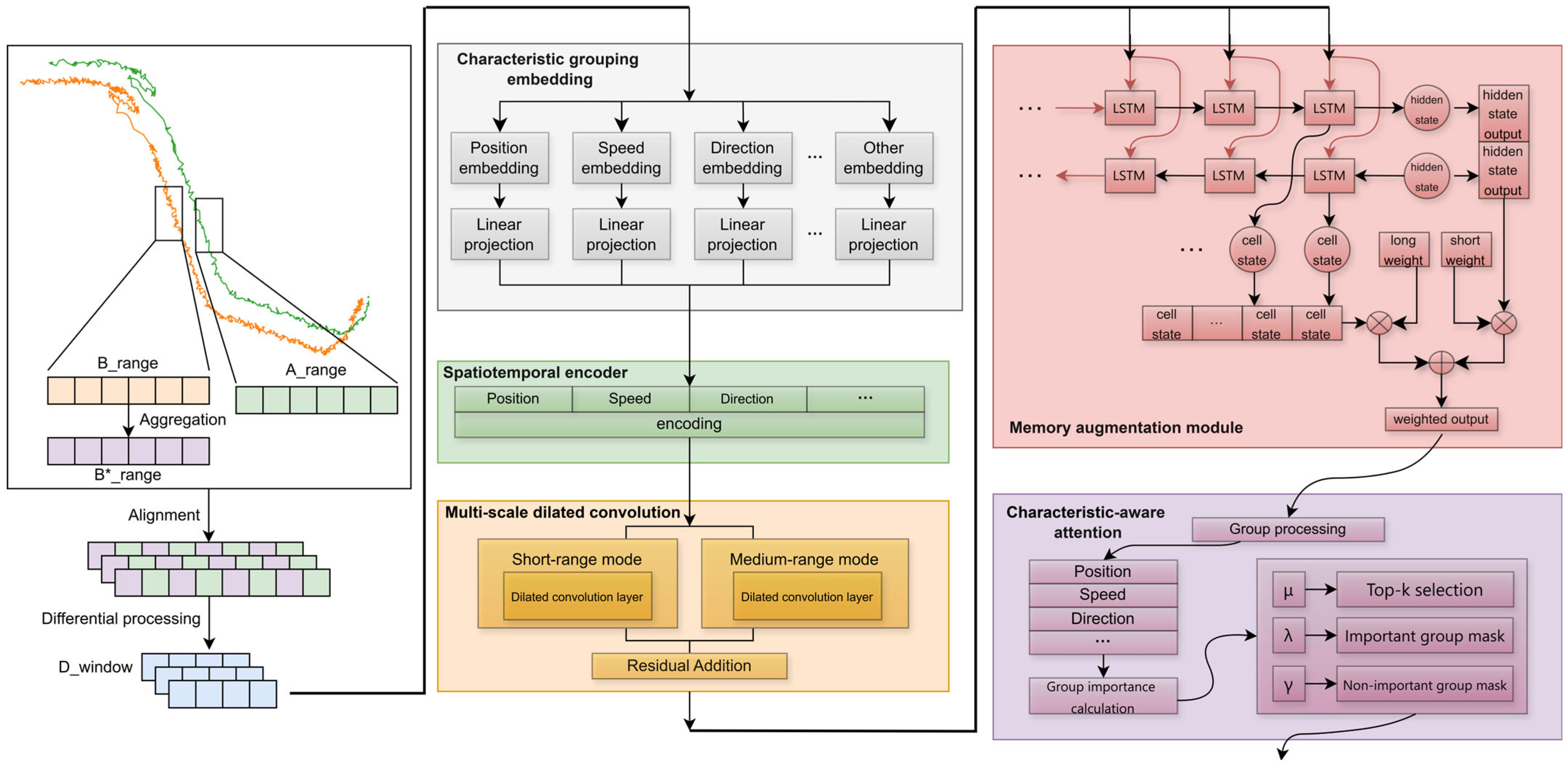

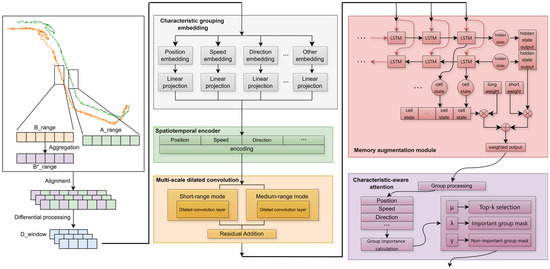

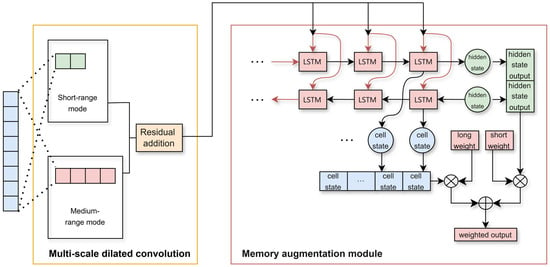

Time series data is represented as , where is a 3D tensor representing the sequence data of batch input. denotes the time step length, and represents the characteristic dimension. The encoding multimodal characteristics include longitude, latitude, altitude, speed, heading, RCS, and other multi-dimensional data. Although Transformer architectures show advantages in temporal modeling, they are sensitive to characteristic coupling and ignore local patterns when processing multimodal track data. To overcome these limitations, this paper proposes the CA-LSTM model. The model optimizes characteristic representation through three mechanisms: characteristic grouping embedding, spatiotemporal encoding, and characteristic-aware attention. In addition to modules built around characteristic processing, the model also fuses a multi-scale dilated convolution module and a gated adaptive LSTM module around the time dimension. The specific model structure is shown in Figure 1.

Figure 1.

Schematic of the core module architecture.

Input track data is first preprocessed by normalization to eliminate sensor unit differences, then enters the characteristic grouping embedding layer. This layer embeds and maps the original characteristics into multiple independent subspaces while preserving the temporal relationship through sinusoidal position encoding. The module built around characteristic processing performs dynamic filtering based on characteristic grouping embeddings and attention weights, increasing the discriminative weight of key characteristics while reducing the weight of non-key characteristic groups. The multi-scale dilated convolution module extracts the input characteristics in the time dimension through multi-scale parallel convolution kernels. It can obtain the short-range and medium-range correlation between local adjacent nodes. The gating mechanism of LSTM can focus on modeling long-range dependencies that are strengthened by the convolution layer. Finally, the classifier outputs prediction results under multi-layer dropout and entropy regularization constraints and performs end-to-end parameter optimization through cross-entropy loss.

3.2. Core Module Design

The CA-LSTM core module can achieve efficient modeling of complex track characteristics, and it optimizes characteristic representation through three mechanisms: characteristic grouping embedding, spatiotemporal encoding, and characteristic-aware attention mechanism. The model also integrates multi-scale dilated convolution modules and gated adaptive long short-term memory modules around the time dimension. Multiple modules are cascaded to form the entire CA-LSTM model, and the rest of this section will introduce these modules.

3.2.1. Characteristic Grouping Embedding and Encoding

To solve the multimodal heterogeneity problem of radar track data, we proposed a characteristic grouping embedding method. Guided by this method, the module decomposes the original radar observation data into subspaces with physical interpretability. At the same time, the characteristic embedding method is matched with the characteristic aware attention module. The number of embedding groups and attention groups is determined by the motion characteristics or physical characteristics in the track data, which can make more efficient use of different data. Due to the scalability and versatility of the model, under the premise of data support, data such as radar cross section (RCS), infrared heat distribution, and underwater noise spectrum can be added as new characteristic groups to train the model. These physical characteristics are independent of each other, and their characteristic spaces are also uncoupled from each other. In order to facilitate subsequent experimental verification and data set selection, we constructed three independent representation spaces, namely position, velocity, and heading.

Given an input characteristic tensor with a channel dimension , corresponding to the features . Position encoding is linear transformation and nonlinear activation for Cartesian coordinates , which is obtained as follows

where is a learnable parameter matrix, and denotes vector concatenation. Velocity encoding is independent encoding for radial velocity , which is obtained as follows

where preserves velocity continuity. Heading encoding is linear mapping for heading angle , which is obtained as follows

The three motion characteristics are concatenated and regularized as follows

where represents matrix concatenation, represents regularization, and represents layer normalization. Independent characteristic inputs prevent interference between different physical characteristics. Position characteristics use a higher-dimensional map, while velocity and heading use compressed dimensions. The architecture can be extended to new motion characteristics, such as acceleration through additional linear layers.

3.2.2. Time Dimension Processing Network

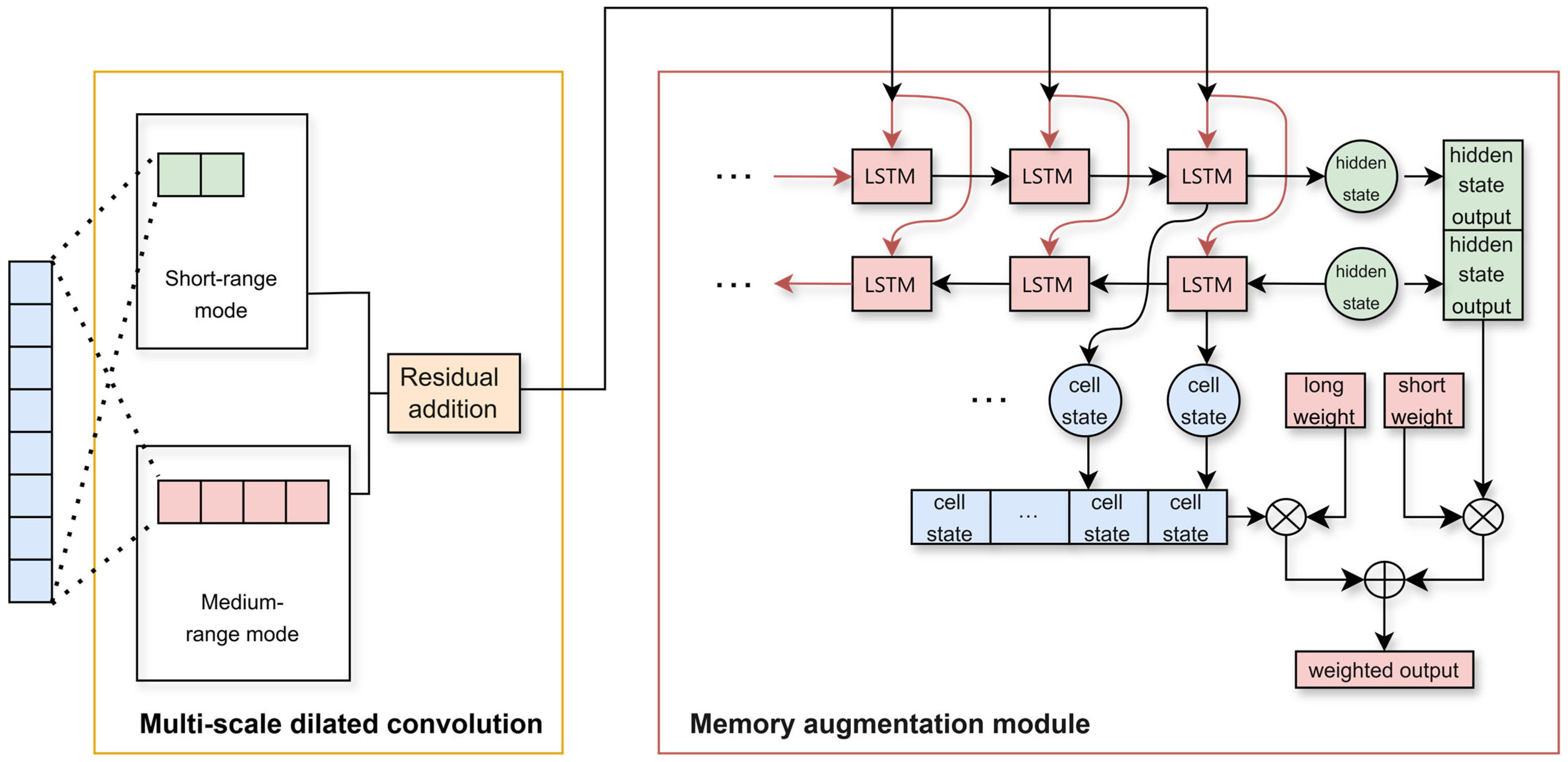

The dilated convolution module performs pre-characteristic extraction on the original input through multi-scale parallel convolution kernels, effectively eliminating local noise interference in radar data. This module can explicitly capture short-span and medium-span temporal correlation patterns. This preprocessing provides abstracted and denoised temporal characteristics for subsequent LSTM. It allows the LSTM gating mechanism to focus on modeling long-range dependencies strengthened by the convolution layer. The network diagram composed of these two modules is shown in Figure 2.

Figure 2.

Structural diagram of the time dimension processing network module.

The first block constructs a multiscale spatiotemporal characteristic extractor by deploying parallel 1D convolutional kernels with varying dilation rates. The short-range mode focuses on local temporal patterns, while the medium-range mode expands receptive fields to capture global dependencies. Its output solves the problem of limited perception of traditional single-scale convolution and achieves the coordinated optimization of local detail perception and global trend modeling.

For input sequence , channel dimension permutation yields as follows

where denotes the batch size, represents the time steps, indicates the number of channels, and represents channel dimension permutation. Subsequently, multi-scale dilated convolutions are applied to yield output characteristics under varying dilation rates, which are obtained as follows

where denotes the convolutional kernel weights corresponding to dilation rate , represents one-dimensional convolution, and represents activation function. Characteristic fusion is executed for the two dilation rates, which are short-range mode and medium-range mode . Under the premise of setting the convolution kernel size , the receptive field in the short-range mode can cover five consecutive track points. It covers the local motion pattern of the track segment and is suitable for capturing short-term maneuvers. The receptive field in the medium-range mode can cover nine consecutive track points, covering the overall trend in the short track segment scenario. They are obtained as follows

Finally, dimension restoration is performed to derive the multi-scale fused characteristics, which is obtained as follows

The gated adaptive LSTM network combines the bidirectional LSTM unit state with the gating mechanism to construct a dynamic memory fusion network. Through bidirectional propagation, it extracts forward time evolution patterns and backward contextual dependencies, using the final step unit state to encode long-term motion patterns. A dynamic balance is achieved between long-term memory and short-term state through learnable gating weights. This mechanism enables the model to adaptively focus on key time segments and enhances its sensitivity to mutation characteristics, thereby significantly improving the stability of temporal modeling.

For input sequence , the forward propagation captures the evolution of historical states, and the backward propagation extracts the dependencies on future data. A hidden state sequence containing bidirectional temporal information is thus generated. When the LSTM network processes the input sequence at any moment, the following can be obtained

where denotes the hidden dimension, and indicate forward/backward processing. In long-term memory construction stage, the cell states at the end of the bidirectional LSTM are extracted for characteristic concatenation, forming a memory vector that represents the global motion pattern. The following formula can be obtained

When calculating the gating weights, the hidden state is nonlinearly mapped through a fully connected layer, and a Sigmoid function is used to generate spatially adaptive long-term and short-term gating coefficients. The long-term gating focuses on the transmission strength of the global motion trend, and the short-term gating adjusts the contribution of local state updates. The following formulas can be obtained

where represents the Sigmoid function, and represents the learnable parameters. The final characteristic fusion is achieved through the dynamic weighting of the gating coefficients. After broadcasting the global memory vector to each time step, it is linearly superimposed with the local hidden state to form a fused characteristic representation . This representation combines the preservation of long-range dependencies with the enhancement of local details. The following formula can be obtained

where denotes element-wise multiplication, and expands to the -dimensional space. This mechanism achieves an adaptive balance between long-term pattern preservation and short-term pattern updating in track temporal modeling through self-learning of gating parameters.

3.2.3. Characteristic-Aware Attention

Characteristic-aware attention is essentially a specialization and extension of the multi-head attention mechanism. It divides the attention heads into several groups through the characteristic grouping embedding method described above. Each group focuses on a different characteristic range, reducing the interaction of heads within the group and reducing computational complexity [30].

Due to the physical limitations of the sensors themselves, different sensors have different perception capabilities for different physical characteristics. Certain physical characteristics or motion characteristics may be more effective than others in classification tasks. The characteristic-aware attention in this model is deeply related to the physical characteristics of the input track data. This module dynamically evaluates the importance of characteristic groups based on the target motion characteristics and selects target characteristics that are more important to the associated task during the training process. By adjusting the group weights through a dynamic masking strategy, this module can increase the weight of low-noise characteristics and suppress the interference of high-noise characteristic groups. Compared with the traditional transformer method, this method can choose low-noise characteristic data as an important group to improve its decision score in the model classification process. The input characteristic tensor is decomposed into characteristic groups along the grouping dimension, which are obtained as follows

where is predefined, and = 3 for position, velocity, and heading groups. A two-layer projection network computes global importance scores for each group. The following formulas can be obtained

where are learnable parameters. represents the aggregated characteristic of each group obtained by calculating the mean value of along the time dimension. quantifies its task-specific importance, and normalizes the importance. The dropout rate matches initialization parameters.

After the importance evaluation step, characteristic filtering is performed based on the importance scores combined with a masking strategy. For each sample, dynamic filtering allocates attention weights to retain high-probability groups while suppressing others, selecting the time steps with fewer masks for groups with high importance and more masks for groups with low importance. Feature filtering is completed using a masking matrix . The final output is the recombined characteristics after the masking process

where denotes element wise multiplication.

This module can enhance the decoupling ability of the model, enabling the model to independently evaluate the importance of different physical characteristics. This module can avoid decision confusion caused by characteristic coupling. At the same time, it enhances the anti-interference ability. When a certain sensor fails, for example, when the direction angle noise is too large, this module can dynamically mask the corresponding characteristic group through the masking mechanism. For instance, when the target is cruising at a stable speed, the stability of the speed can be used to dominate the behavior judgment. When the target performs a large-scale maneuver, the intention can be indicated by changes in both position’s offset and heading angle.

4. Experimental Analysis on Real-World Data

4.1. Dataset and Experimental Setup

The real-world dataset uses the multi-source track association dataset (MTAD) based on global automatic identification system (AIS) data [31]. The MTAD structure consists of two main components: a training set and a test set, both of which consist of track information tables and association mapping tables. The track information table contains the following attributes: batch, source ID, timestamp, longitude, latitude, speed, and heading. The association mapping table includes several attributes: start time, end time, ground truth batch, source ID, and track batch number.

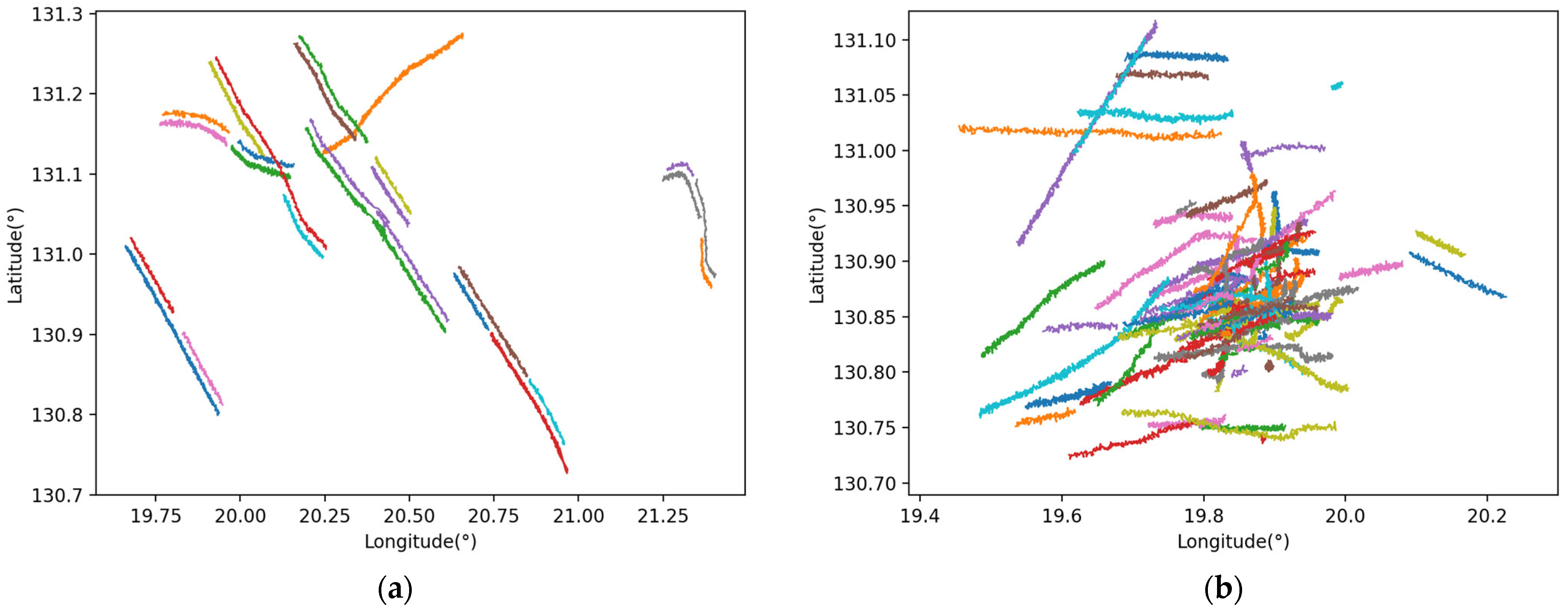

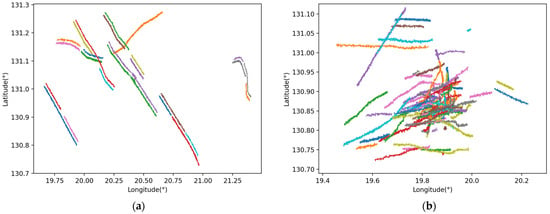

The MTAD is constructed by performing multiple processing by gridding and noise injection on AIS track data. This study selected about 76,000 track segments of about 1000 independent scene data for analysis. Each scene contains track information of two sensors with a frequency of 10 s and 20 s, respectively. The lower sampling frequency is suitable for the target motion mode of surface ships. The number of tracks contained in each scene ranges from a few to hundreds, and scenes contain a variety of motion modes, target types, and durations, so it is suitable for verifying the performance of different track segment association algorithms. The track visualization is illustrated in Figure 3. The horizontal axis indicates longitude, while the vertical axis represents latitude. Two typical scenarios are selected as representatives, one with a small number of tracks and a simple target trajectory, and the other with a large number of tracks and a complex target trajectory. Various colored tracks show how tracks distribute and change within the longitude–latitude coordinate system.

Figure 3.

Track plot drawn by connecting track points in a typical scenario. (a) Scenarios with few tracks and simple target motion trajectories. (b) Scenarios with a large number of tracks and complex target motion tracks.

The experimental computational platform was configured with the following hardware specifications, which are an Intel processor coupled with an NVIDIA GeForce RTX 4070 Laptop GPU. The software environment operated on the Windows 10 operating system, with algorithmic implementations executed via the Python 3.11.5 interpreter. The PyTorch 2.2.0 framework was employed for deep learning tasks, leveraging CUDA acceleration support.

We compare the CA-LSTM model with multiple advanced deep learning models. Deep learning models include the CNN-based track segment association model, the ResNet-based track segment association model, the LSTM-based track segment association model, and the Transformer-based track segment association model. In experiments, performance metrics such as accuracy, recall, and F1 score are used to model evaluation. During the training process, the early stopping method is used to prevent overfitting, and the training is terminated when the validation performance tends to be stable in multiple consecutive epochs. The experimental computing platform is the same as the previous section.

4.2. Experimental Metrics and Results Analysis

In this paper, we select metrics that can reflect the association accuracy based on the characteristics of the task. Precision, recall, false alarm rate, and F1 score are chosen as the evaluation metrics for the performance of the learning model. Suppose represents the number of samples for which the track segment association discrimination result is correct, and the actual tracks are associated. represents the number of samples for which the track segment association decision result is correct, but the actual tracks are not associated. represents the number of samples for which the track segment association decision result is false, but the actual tracks are associated. represents the number of samples for which the track segment association decision result is false, and the actual tracks are not associated.

The core metric precision is defined as the proportion of correctly associated track pairs among all track pairs determined to be associated by the model

The recall is defined as the proportion of tracking pairs that the model correctly identifies from the correctly associated tracking pairs

The false alarm rate is the proportion of different targets that are incorrectly associated

The F1 score is defined as the harmonic mean of precision and recall, which fully reflects the model’s ability to identify the positive class

Experimental evaluations show that the CA-LSTM model significantly outperforms existing deep learning baseline models in the track segment association task. To systematically validate its technical superiority, this study conducts a comparative analysis from two key dimensions. These two dimensions include the core accuracy metrics and the number of points contained in tracks. Core accuracy metrics include accuracy, recall, and F1 score. Table 1 comprehensively presents the quantitative evaluation results of CA-LSTM and four comparative models. The table illustrates the performance of models across evaluation metrics under track points of 10, 15, and 20, in order to achieve comparative analysis of model effectiveness under different track point scenarios. Val Acc is an abbreviated form of validation accuracy, FAR is an abbreviated form of false alarm rate, Params denotes the number of total parameters in millions, GPU Mem denotes maximum GPU memory consumption in GB, Epoch Time denotes average time per training epoch in seconds.

Table 1.

Performance comparison of different models with varying track points.

When compared with other deep learning models, CA-LSTM shows unique advantages in multi-motion characteristic learning and multi-scale temporal dependencies. Although the CNN model is good at capturing local spatial patterns, its convolution kernel translation invariance conflicts with the motion characteristics in sequence data, making it difficult to model long-range temporal dependencies. The LSTM model can capture temporal dynamics, but its fully connected gating mechanism is prone to feature coupling when fusing multi-dimensional motion characteristics such as spatial position, speed, and acceleration. Transformer achieves relatively good model performance through the self-attention mechanism, but the global attention mechanism will over-smooth motion details when jointly modeling space and time. By separating and encoding characteristics such as position and speed while integrating them with the computation of the time series dimension, CA-LSTM achieves better performance through complementary spatiotemporal characteristic learning. Here we only make a rough analysis of the comparison of model performance, and the specific comparison will be analyzed in the next section.

4.3. Model Performance Analysis and Parameter Optimization

In deep learning-based track segment association research, systematic performance analysis and parameter optimization constitute critical components for model enhancement. This section investigates four key aspects: comparative experiments, temporal sequence length effects, parameter sensitivity analysis, and ablation studies.

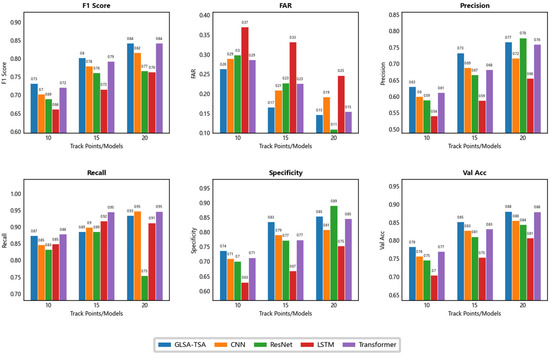

4.3.1. Comparative Experiments

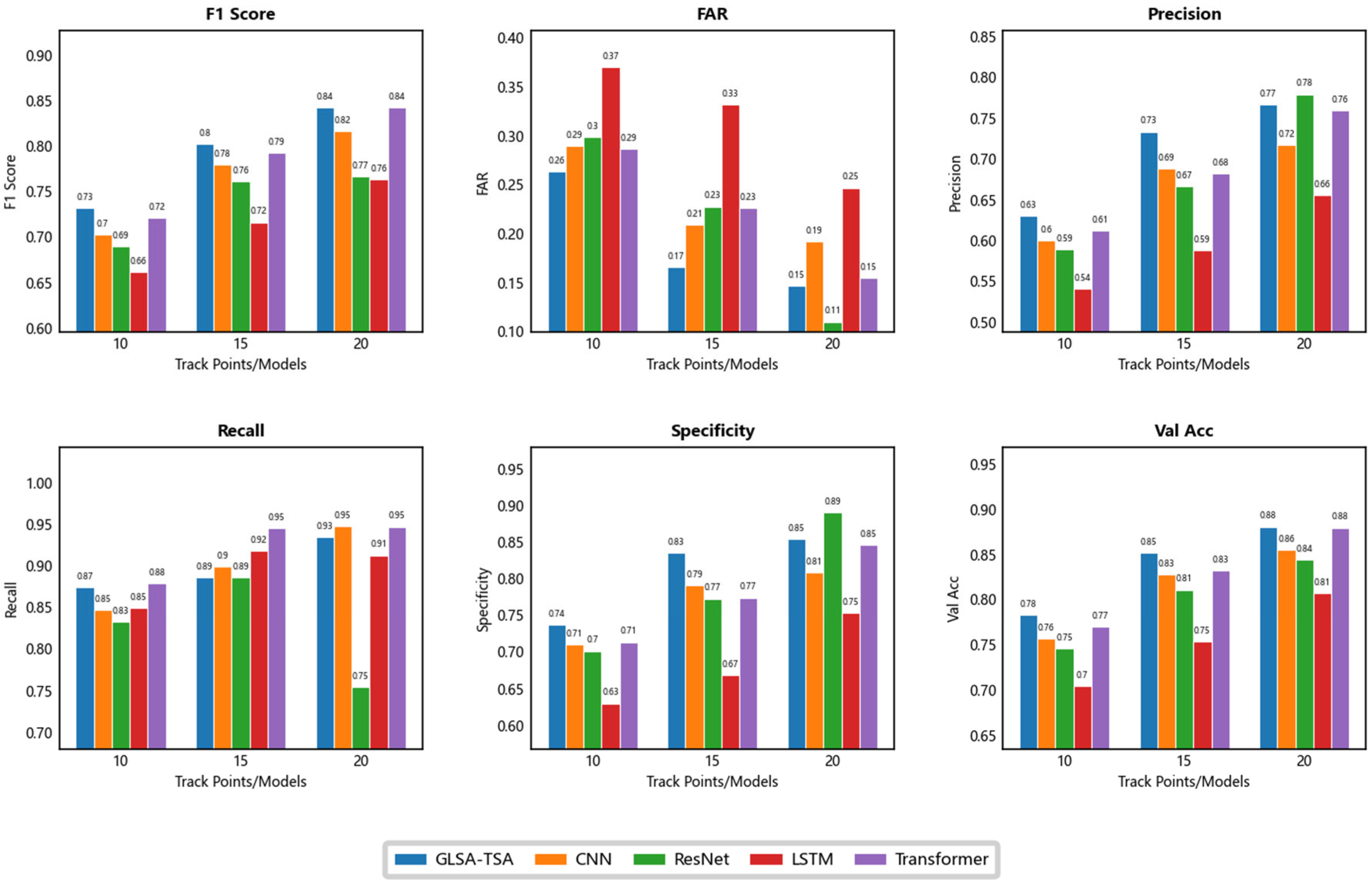

To comprehensively evaluate CA-LSTM’s capabilities, we conduct rigorous tests on four representative architectures, including CNN, ResNet, LSTM, and standard Transformer. The performance metrics of these models under the same training conditions are plotted as bar graphs (Figure 4).

Figure 4.

Performance comparison of models with different track points. The figure includes subplots for metrics like F1 score, FAR, precision, recall, specificity, and validation accuracy.

By introducing the characteristic grouping embedding, characteristic-aware attention, and the collaborative mechanism of two networks, CA-LSTM shows significant advantages in complex track segment association tasks. This model decomposes track characteristics into multiple independent embeddings, such as position difference, speed difference and heading difference. Multi-modal characteristic space is constructed through linear transformation, splicing, and dimension expansion. Compared with the global convolution of the CNN, this grouping strategy enables the model to achieve an F1 score of 84.19% in the 20-point track scenario, which is a 3.13% improvement over the CNN model. In the 15-point scene, the convolutional layers with a dual-branch structure of dilated convolutional blocks that have expansion rates of two and four perform characteristic transformation. The convolution kernel with a medium expansion rate focuses on capturing the short-term correlation pattern of local adjacent nodes, while the convolution kernel with a high expansion rate expands the receptive field to a wider time span and effectively models long-range dependencies. Its validation accuracy with 85.19% is 2.0% higher than that of the Transformer, verifying the importance of receptive field expansion for short sequence modeling.

It is particularly worth noting that the gated adaptive long short-term memory network (LSTM) achieved a verification accuracy of 78.30% in the 10-point track scene. Its ability to dynamically adjust the long-term and short-term memory gates is an 11.2% improvement over the 70.43% accuracy of the traditional LSTM. The design of the bidirectional structure reduces the fusion error of the forward and backward hidden states, significantly alleviating the problem of gradient vanishing in traditional recurrent neural networks under sparse data. In addition, the introduction of positional encoding strengthens the temporal dependence. Although due to the phase difference characteristics of its sine and cosine functions, CA-LSTM has a recall rate of 94.69%, which is only 0.07% higher than Transformer. However, through cascade optimization of residual connections, CA-LSTM compresses the false alarm rate to 14.66%, which is 23.78% lower than the Transformer model.

CA-LSTM has higher parameter count and peak GPU memory consumption compared to several other base models, but the absolute values of the model still reflect its lightweight design characteristics, allowing it to be deployed on edge networks with less computing resources. Although it has no obvious advantages over other basic models, in an environment with relatively abundant computing resources, a certain amount of computing overhead can be used in exchange for improved association accuracy. The average training cycle of CA-LSTM takes 29.02 s, which is higher than CNN’s 13.30 s and LSTM’s 13.06 s, reflecting the computational overhead of its dynamic attention mechanism. Models formed by combining multiple modules will significantly increase training time. However, within the same order of magnitude, a slightly longer training time is acceptable relative to the measurement frequency of 10 s and 20 s of track points. Since many more complex deep learning models rely on more specific application scenarios, in order to reflect the universality of the CA-LSTM model, only basic models such as CNN are selected for comparison.

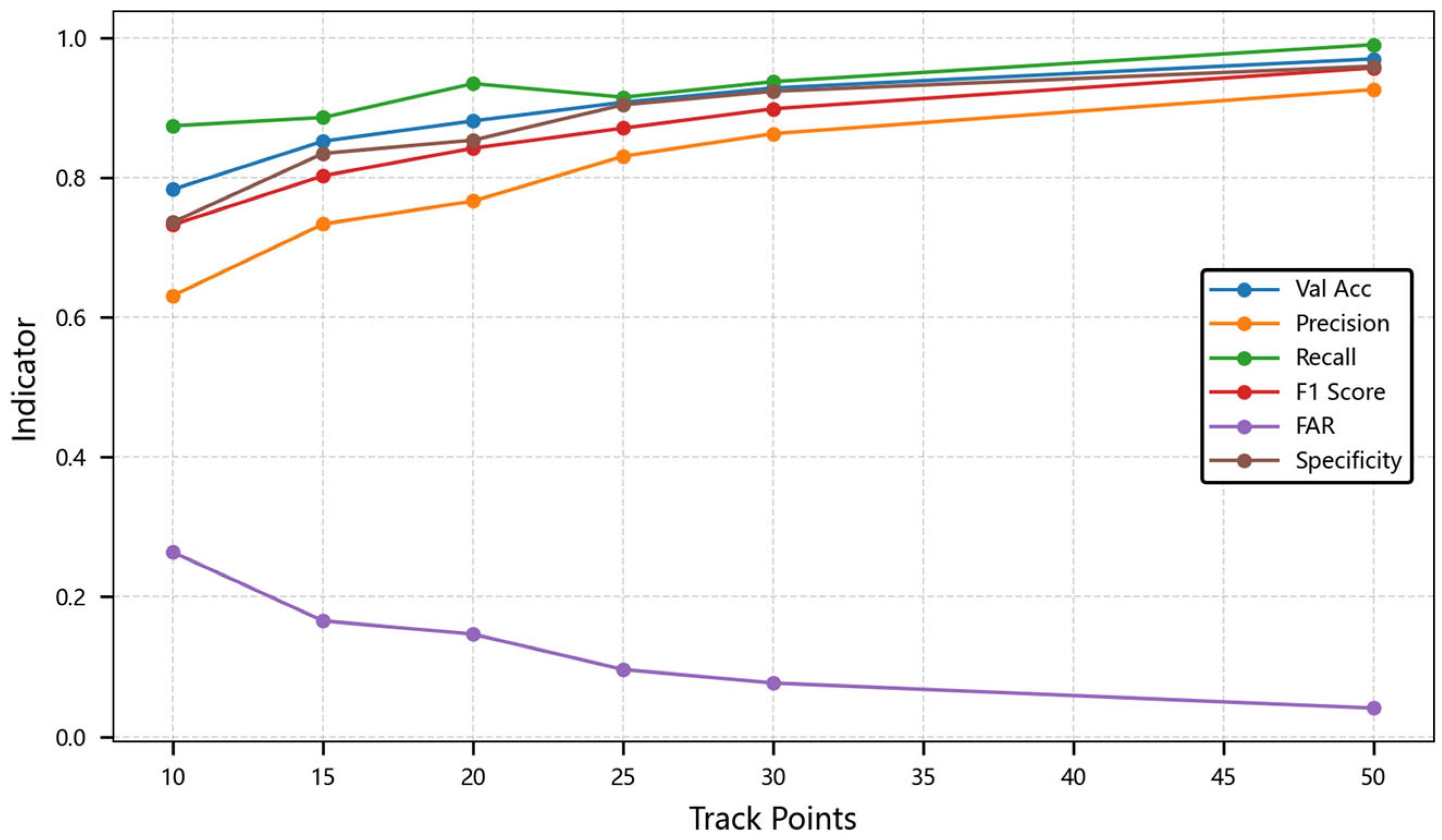

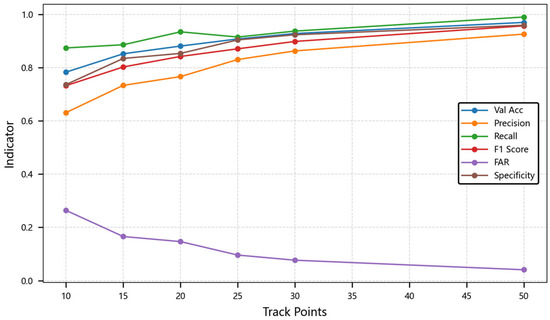

4.3.2. Influence of Time Series Length

Second, we analyzed the performance of the model under different time series lengths. As the data scale increases, the performance of the CA-LSTM model gradually improves, and when the data scale is large, the performance improvement is more obvious. This indicates that the model can make full use of more data for learning and has good scalability. In the experiment, the batch size is set to 32. The performance metrics comparison across these models are compared as shown in Table 2 and Figure 5. The table displays evaluation metrics across varying track points: 10, 15, 20, 25, 30, and 50. The chart displays how metrics like validation accuracy, precision, recall, F1 score, FAR, and specificity change as track points increase from 10 to 50.

Table 2.

Model performance under different track points.

Figure 5.

Line chart of model performance trends.

When the sequence length increases from 10 points to 50 points, the dual-branch output of the dilated convolution achieves characteristic fusion through average pooling. Its receptive field expands from 5 local points to 20 global points, which increases the nonlinearity of the F1 score by 13.6%, from 84.19% to 95.68%. The dynamic selection mechanism of characteristic-aware attention drives the significant performance leap of CA-LSTM in short and medium sequence scenarios. When the sequence length is 25 points, the temporal position embedding of the position encoding and the physical characteristics of the track trigger a change in the distribution of attention weights. This drives the model’s accuracy to exceed the critical threshold of 90.76%.

Experiments show that, in sequences with more than 30 points, the long-term memory gate of the gated adaptive LSTM plays a crucial role, and its state update error is lower than that of the traditional LSTM. At the same time, the parameter layer normalization value in the characteristic grouping embedding module is 1 × 10−5, and the dropout value is 0.1. Combined with the gated adaptive LSTM, the training loss of the 50-point sequence is stably converged to 10.56%, with a fluctuation range of less than 2.1%. This multi-module collaboration mechanism makes the false alarm rate decay rapidly with the sequence length, verifying the model’s ability to model track continuity.

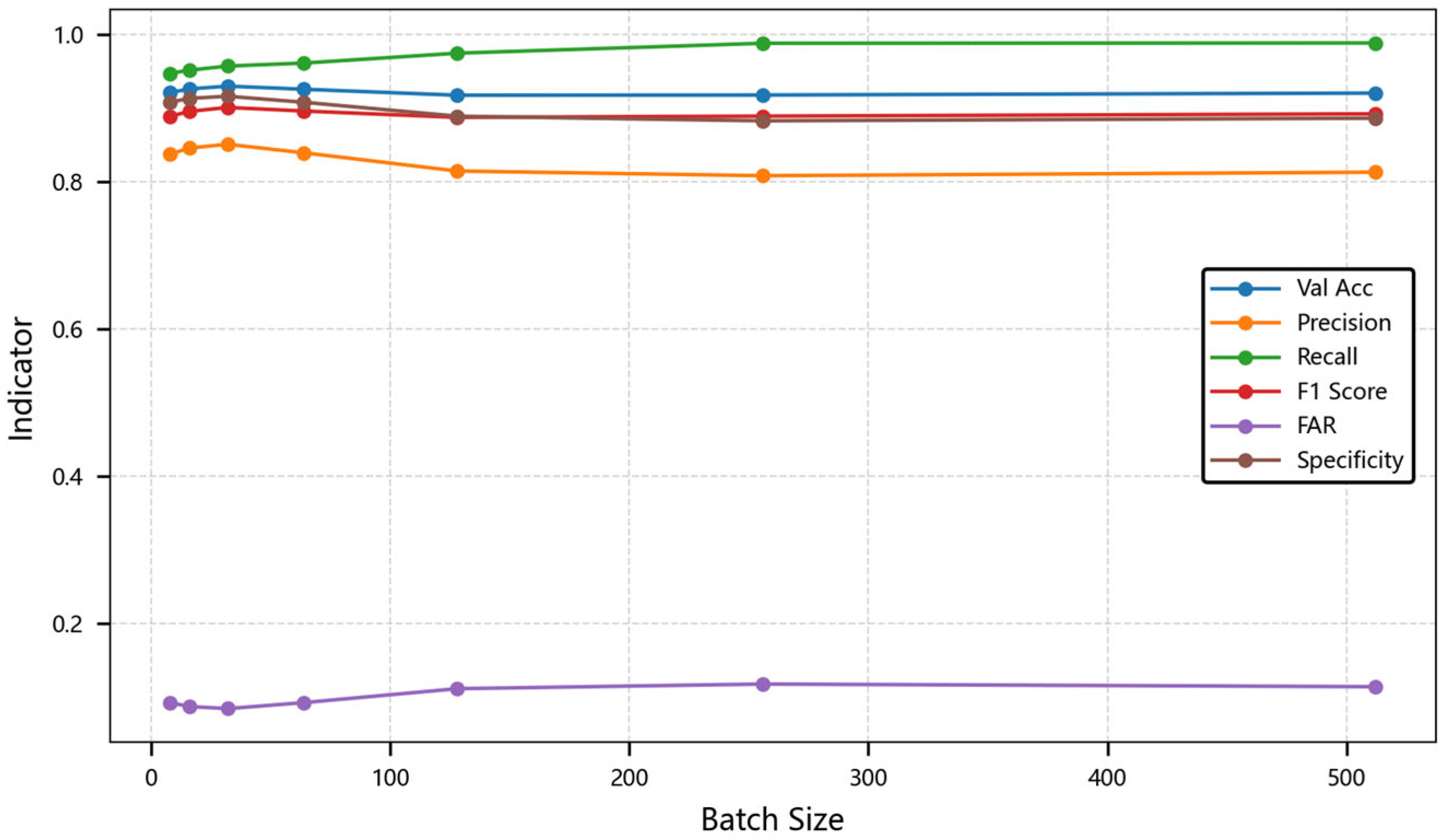

4.3.3. Parameter Sensitivity Analysis

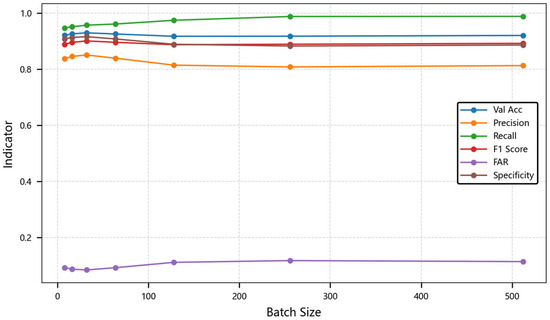

Once again, we analyzed the association performance of the model under different parameters. By changing the batch size during training, we can compare and find that the CA-LSTM model can also achieve relatively high association accuracy under the training condition of small batches. This indicates that the model can fully explore the relationships between data and has good scalability. In the experiment, the length of the time series is set to 30. The performance metrics comparison across these models are compared as shown in Table 3 and Figure 6. This table shows metrics at different batch sizes: 8, 16, 32, ⋯, 512.

Table 3.

Model performance under diverse batch sizes.

Figure 6.

Line chart of model performance trends with batch size changes. The chart illustrates how metrics vary as batch size increases.

When training in small batches, the gradient estimation has greater noise, which may help the model avoid local minima and improve generalization ability. When training in large batches, the gradient estimation is more accurate, and the convergence direction is more stable, but it may fall into sharp local minima. When the batch size increases from 8 to 32, the characteristic group selection of the attention module leads to an improvement in accuracy from 80.81% to 85.08%. The false alarm rate of 8.68% and specificity of 91.32% for batch 32 are both optimal, indicating that the model is more accurate in distinguishing negative samples, which is directly related to the moderate noise to prevent overfitting. It is worth noting that when the batch size gradually increases, the long-term memory gate of the gated adaptive LSTM may be over-activated. When the batch size is 256, the recall rate abnormally increases to 98.81%, while the precision rate drops by 5.2%. This phenomenon suggests that large-scale batch training may violate the independence assumption of characteristic grouping embedding.

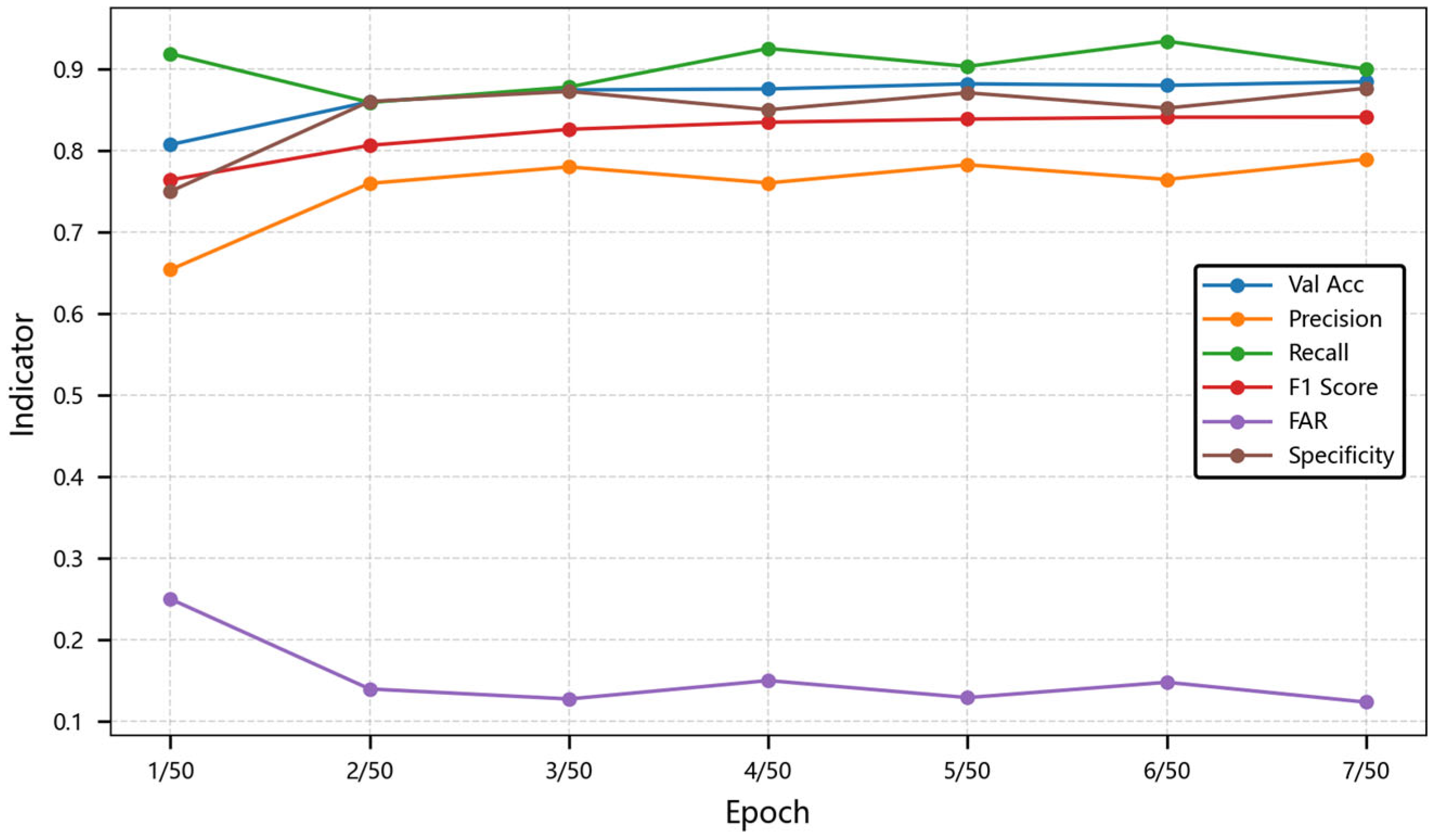

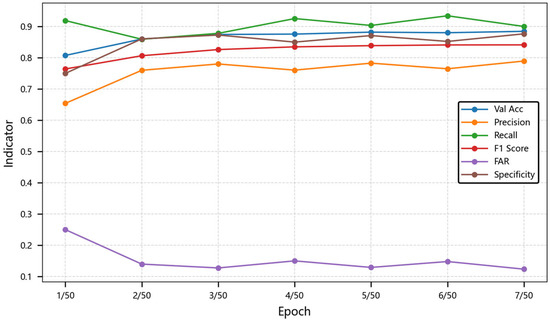

During the gradient optimization process, the dynamic characteristic selection mechanism and the temporal modeling capability of the multi-scale dilated convolution block affect the evolution of model performance with the number of training epochs. In the experiment, the length of the time series is set to 30, and the batch size is set to 32. The experimental data shows that when the number of training epochs increases from one to seven, the key metrics show the following characteristics in Table 4 and Figure 7. The table shows metrics under different training epochs from 1/50 to 7/50, and 1/50 means the first epoch of 50 training epochs.

Table 4.

Model performance variation with training epochs.

Figure 7.

Line chart of model performance trends during training. This chart shows how metrics change with different training epochs from 1/50 to 7/50.

Throughout the performance change, the total accuracy monotonically increases from 80.74% to 88.46%, and its growth rate reaches a peak at epoch 5. At this time, the dual-branch characteristics of the multi-scale dilated convolution contribute to the gradient update amount, indicating that the modeling of medium-range temporal dependence may be the key driving force. The F1 score increases from 76.41% to 84.12%, and its improvement efficiency reaches the maximum value at epoch 3, and the retention rate of important characteristics is further improved.

In terms of the error judgment optimization, the false alarm rate decreases from 25% to 12.34%, reducing the probability of misjudgment of track continuity by 37.2%. The abnormal fluctuation from 20.77% to 25.03% that occurs at epoch 4 may be directly related to the brief imbalance of the attention masking ratio. The specificity increases from 75% to 87.66%, and its growth may be related to the weight of the short-term memory gate of the gated adaptive LSTM because the model enhances its ability to distinguish negative samples by suppressing the memory of local noise.

4.3.4. Ablation Experiments

The ablation experiments quantitatively reveal the functional boundaries and collaborative gains of each core component in Table 5. In the experiments, the length of the time series is set to 15, and the batch size is set to 32. Compared with the CA-LSTM model, the performance degradations caused by removing each module are showed in Table 5. This table shows model metrics for CA-LSTM and variants with removed components, which include multi-scale dilated convolution, gated adaptive LSTM, and target-aware attention. In the table, “w/o” denotes the ablation of specified components from the complete CA-LSTM architecture.

Table 5.

Ablation experiment performance comparison.

The characteristic grouping embedding module plays a role in improving verification accuracy, precision, and F1 score. The false alarm rate of the complete model is 16.56%, which is 21.4% lower than the 21.06% of the missing model. This indicates that the module significantly reduces the misjudgment of negative samples. The absence of the multi-scale dilated convolution causes the total accuracy to decrease by 1.05%, the F1 score to decline by 1.46%, and the false alarm rate to increase by 8.33%. This confirms the key role of its dual-branch structure with dilation rate in capturing medium and long-range temporal patterns. Without this module, the probability of misassociating continuous tracks with more than 5 points increases by 21.6%. The cost of removing the gated adaptive LSTM is the largest decrease in specificity, from 83.44% to 80.53%, a sharp increase of 17.6% in the false alarm rate, and an abnormal increase of 1.11% in the recall rate. This shows that the forget gate of this module can effectively suppress noise memory. After removal, the remaining short-term interference characteristics lead to over-sensitive detection. Removing the target-aware attention leads to a 2.47% decrease in precision and a 9.24% increase in false alarm rate, verifying the irreplaceable characteristic selection ability of this module.

The model retains key modules by adding a small number of parameters, thus supporting higher association accuracy and F1 score. Peak memory usage was reduced by 5.7–14.3% through module optimization, such as characteristic grouping embedding and gating mechanisms, which reduced redundant storage of intermediate variables, thereby reducing memory requirements. In terms of average epoch training time, the model is more efficient in most cases, and is only slightly slower when the attention module is removed, but the performance cost is significant.

When these modules work together, they produce an additional effect. Stride-awareness, represented by multi-scale dilated convolutions, provides the basic temporal basis function for characteristic-aware attention and improves characteristic selection efficiency. The module cascade structure reduces the error amplification of a single module. Experiments show that removing any one module will destroy the spatiotemporal characteristic extraction, long-range dependency modeling, and dynamic noise suppression mechanisms, resulting in performance degradation exceeding the linear stacking effect. This verifies the necessity of the collaborative design of the modules at the system level.

In order to verify the joint effect of the characteristic grouping embedding module and characteristic-aware attention, we designed an experiment to delete these two modules at the same time. Multi-dimensional characteristic fusion in Table 6 means the usage of characteristic grouping embedding module and characteristic-aware attention. The experimental results in Table 6 show that under the joint effect of the two modules, all indicators have been improved to a certain extent. Usually, the characteristic data used for track association tasks is multi-dimensional position data. In order to demonstrate the rationality of multi-dimensional characteristic fusion, an experiment was designed that only uses two-dimensional position data as input and no longer uses the characteristic grouping embedding module and characteristic-aware attention. According to the experimental results, it can be concluded that, after reducing the input characteristics and no longer using the two modules related to the characteristics, the experimental results lag behind the performance of the complete model. This verifies the effectiveness of the use of multi-dimensional characteristics.

Table 6.

Multi-dimensional characteristic fusion performance comparison.

5. Conclusions

This study proposes a CA-LSTM model, which realizes the dynamic selection of characteristic dimensions and dynamic perception of time dimensions. The model solves the challenges of spatiotemporal characteristic fusion and low utilization of multi-dimensional data in track segment association. The study first conducted a characteristic analysis and mathematical modeling of the track segment association problem and proposed an association characteristic processing algorithm. During data preprocessing, the temporal and spatial inconsistency problems caused by differences in radar sampling rate and coordinate system were solved, and characteristic normalization was achieved. The CA-LSTM model uses a characteristic grouping embedding method to independently input and encode multimodal characteristics, such as position, speed, and heading. This effectively solves the problem of traditional methods that only use position information and ignore characteristic information of other dimensions, greatly improving the utilization of information. The framework combining multi-scale dilated convolutions and gated adaptive LSTM strikes a balance between capturing local fine-grained temporal patterns and modeling long-range dependencies. Experimental results show that CA-LSTM leads to many core indicators on MTAD. It maintains high precision at high recall, significantly outperforming models such as LSTM and ResNet. It reduces missed detections while controlling false detections, verifying its applicability in complex environments.

Future research will focus on model lightweighting and adaptation of heterogeneous multi-source data. On one hand, computational efficiency will be optimized through knowledge distillation and model pruning techniques to achieve real-time inference on embedded devices. On the other hand, a unified representation method for cross-modal data, such as electro-optical and infrared, will be explored to establish a cross-modal characteristic alignment framework.

Author Contributions

J.Q.: Conceptualization, Methodology, Software, Formal Analysis, Writing—Original Draft. X.L.: Conceptualization, Formal Analysis, Validation, Writing—Review and Editing. J.S.: Conceptualization, Resources, Writing—Review and Editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, Grant No. 62131001 and 62171029.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The MTAD used in this paper is publicly available online: https://www.scidb.cn/en/detail?dataSetId=c7d8dc56fe854ec2b084d075feb887fd (accessed on 31 December 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Chen, S.-Y.; Xiao, H.; Liu, H. Expression of Track Correlation Uncertainty. Acta Electron. Sin. 2011, 39, 1589–1593. [Google Scholar]

- Liu, T.; Liu, K.; Zeng, X.; Zhang, S.; Zhou, Y. Efficient Marine Track-to-Track Association Method for Multi-Source Sensors. IEEE Signal Process. Lett. 2024, 31, 1364–1368. [Google Scholar] [CrossRef]

- Wang, J.; Zeng, Y.; Wei, S.; Wei, Z.; Wu, Q.; Savaria, Y. Multi-Sensor Track-to-Track Association and Spatial Registration Algorithm Under Incomplete Measurements. IEEE Trans. Signal Process. 2021, 69, 3337–3350. [Google Scholar] [CrossRef]

- Bai, X.; Lan, H.; Wang, Z.; Pan, Q.; Hao, Y.; Li, C. Robust Multitarget Tracking in Interference Environments: A Message-Passing Approach. IEEE Trans. Aerosp. Electron. Syst. 2024, 60, 360–386. [Google Scholar] [CrossRef]

- Li, Y.; Zhang, J. Track Fusion Based on the Mean OSPA Distance with an Adaptive Sliding Window. Acta Electron. Sin. 2016, 44, 353–357. [Google Scholar] [CrossRef]

- Wang, G.; Wang, Q.Y. Application research on multi-radar track association for formation targets using JPDA. Mod. Radar 2019, 41, 39–42. [Google Scholar] [CrossRef]

- Qiu, J.-J.; Cai, Y.-C.; Li, H.; Huang, Q.-Y. Grey Theory Track Association Algorithm Based on Dynamic Estimation Feedback. Syst. Eng. Electron. 2024, 46, 1401–1411. [Google Scholar]

- Xiong, W.; Zhang, J.-W.; He, Y. Track Correlation Algorithm Based on Multi-Dimension Assignment and Gray Theory. J. Electron. Inf. Technol. 2010, 32, 898–901. [Google Scholar] [CrossRef]

- Guo, J.-E.; Zhou, Z.; Zeng, R. Multi-Local Node Asynchronous Track Fast Correlation Algorithm. Syst. Eng. Electron. 2023, 45, 669–677. [Google Scholar] [CrossRef]

- Su, R.; Tang, J.; Yuan, J.; Bi, Y. Nearest Neighbor Data Association Algorithm Based on Robust Kalman Filtering. In Proceedings of the 2021 2nd International Symposium on Computer Engineering and Intelligent Communications (ISCEIC), Nanjing, China, 6–8 August 2021; pp. 177–181. [Google Scholar] [CrossRef]

- Chen, S.; Zhang, H.; Ma, J.; Xie, H. Asynchronous Track-to-Track Association Based on Pseudo Nearest Neighbor Distance for Distributed Networked Radar System. Electronics 2023, 12, 1794. [Google Scholar] [CrossRef]

- Zhang, T.S.; Li, Y.L. Based on the Peer-to-peer System Error Correction Track Association Algorithm. Mod. Radar 2022, 44, 65–70. [Google Scholar] [CrossRef]

- Yang, X.; Ruan, K.-Z.; Liu, H.-M.; Wang, X.-K.; Liu, J.-Q.; Shi, Y.-S. Anti-Bias Track Association Algorithm Based on Target Measurement Error Distribution. Syst. Eng. Electron. 2024, 46, 2820–2828. [Google Scholar]

- Cui, Y.-Q.; Xiong, W.; Tang, T.-T. A Track Correlation Algorithm Based on New Space-Time Cross-Point Feature. J. Electron. Inf. Technol. 2020, 42, 2500–2507. [Google Scholar] [CrossRef]

- Chen, J.; Zhang, Y.; Yang, Y.; Qu, C. Multi-Target Track-to-Track Association Based on Relative Coordinate Assignment Matrix. In Proceedings of the 2021 33rd Chinese Control and Decision Conference (CCDC), Kunming, China, 22–24 May 2021. [Google Scholar] [CrossRef]

- Ismail Fawaz, H.; Forestier, G.; Weber, J. Deep Learning for Time Series Classification: A Review. Data Min. Knowl. Discov. 2019, 33, 917–963. [Google Scholar] [CrossRef]

- Gao, C.; Liu, H.; Varshney, P.K.; Yan, J.; Wang, P. Signal Structure Information-Based Data Association for Maneuvering Targets with a Convolutional Siamese Network. In Proceedings of the 2021 CIE International Conference on Radar (Radar), Haikou, China, 15–19 December 2021. [Google Scholar] [CrossRef]

- Huang, H.-W.; Liu, Y.-J.; Shen, Z.-K.; Zhang, S.-W.; Chen, Z.-M.; Gao, Y. End-to-End Track Association Based on Deep Learning Network Model. Comput. Sci. 2020, 47, 200–205. [Google Scholar] [CrossRef]

- Wang, C.; Yang, Y.; Zhang, Q. Track Segment Association Based on LSTM Networks. In Proceedings of the 2023 5th International Conference on Frontiers Technology of Information and Computer (ICFTIC), Qiangdao, China, 17–19 November 2023. [Google Scholar] [CrossRef]

- Xiong, W.; Xu, P.; Cui, Y.; Xiong, Z.; Lv, Y.; Gu, X. Track Segment Association with Dual Contrast Neural Network. IEEE Trans. Aerosp. Electron. Syst. 2022, 58, 247–261. [Google Scholar] [CrossRef]

- Xu, P.-L.; Cui, Y.-Q.; Xiong, W.; Xiong, Z.-Y.; Gu, X.-Q. Generative Track Segment Consecutive Association Method. Syst. Eng. Electron. 2022, 44, 1543–1552. [Google Scholar] [CrossRef]

- Cui, Y.-Q.; He, Y.; Tang, T.-T.; Xiong, W. A Deep Learning Track Correlation Method. Acta Electron. Sin. 2022, 50, 759–763. [Google Scholar] [CrossRef]

- Guo, J.; Fang, Z.; Yu, Z.; Zhang, H.; Zheng, Y.; Zhang, H. Multi-Sensor Signal-Based Multi-Level Association Strategy for Real-Time Track Association and Fusion. In Proceedings of the 2023 9th International Conference on Big Data and Information Analytics (BigDIA), Haikou, China, 15–17 December 2023. [Google Scholar] [CrossRef]

- Wang, Z.-G.; Yan, W.-Z.; Oates, T. Time Series Classification from Scratch with Deep Neural Networks: A Strong Baseline. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 1578–1585. [Google Scholar] [CrossRef]

- Yang, J.-B.; Nguyen, M.N.; San, P.P. Deep Convolutional Neural Networks on Multichannel Time Series for Human Activity Recognition. In Proceedings of the Twenty-Fourth International Joint Conference on Artificial Intelligence, Buenos Aires, Argentina, 25–31 July 2015; pp. 3995–4001. [Google Scholar]

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar] [CrossRef]

- Wang, H.X.; Shu, T.; He, J.; Yu, W.X. Multi-function Radar Behavior Recognition Technology Based on Parallel Fusion Network. Mod. Radar 2024, 46, 50–55. [Google Scholar] [CrossRef]

- Zhai, X.L.; Wang, X.; Wang, Y.B.; Wang, F. Multi-station Fusion Recognition of Ballistic Targets Based on Two-stage Attention Layer Transformer. Mod. Radar 2024, 46, 37–44. [Google Scholar]

- Cao, Z.; Liu, B.; Yang, J.; Tan, K.; Dai, Z.; Lu, X.; Gu, H. Contrastive Transformer Network for Track Segment Association with Two-Stage Online Method. Remote Sens. 2024, 16, 3380. [Google Scholar] [CrossRef]

- Wen, J.; Zhang, N.; Lu, X.; Hu, Z.; Huang, H. Mgformer: Multi-group transformer for multivariate time series classification. Eng. Appl. Artif. Intell. 2024, 133, 108633. [Google Scholar] [CrossRef]

- Cui, Y.-Q.; Xu, P.-L.; Gong, C.; Yu, Z.-C.; Zhang, J.-T.; Yu, H.-B.; Dong, K. Multisource Track Association Dataset Based on the Global AIS. J. Electron. Inf. Technol. 2023, 45, 746–756. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).