1. Introduction

Red tide is a marine ecological phenomenon characterized by the excessive proliferation of phytoplankton, such as algae, in seawater, which turns the water red or other colors [1]. As the marine environment along China’s coast has changed, red tides have become more frequent. Between 2011 and 2017, a total of 212 red tide events occurred in the East China Sea, including 6, 56, and 143 events in the waters off Shanghai, Fujian, and Zhejiang, respectively. Apart from two large-scale red tides off Shanghai in 2016, Zhejiang experienced both the highest frequency of red tides and the most extensive affected areas. The scale of red tides and the resulting economic losses have been growing, with increasingly negative impacts on marine ecosystems, coastal aquaculture, and the wider ecological environment. Toxins produced during red tides also threaten human health [2]. With the acceleration of industrialization and urbanization in China, large amounts of pollutants have been discharged into the ocean, worsening the marine environment and further promoting the occurrence of red tides. Red tide disasters have therefore attracted increasing attention, making red tide detection an important area of research.

China has made significant progress in red tide detection in recent years. Traditional monitoring technologies use platforms such as ships and satellites, combined with ground observations and laboratory analyses, to monitor and study the occurrence, development, and dissipation of red tides. There are currently three main traditional methods for detecting red tides: “visual observation”, “instrumental measurement”, and “remote sensing”. The first two require substantial human and material resources, and harsh field conditions make detection more difficult. Remote sensing, which uses satellites to detect red tides, is an advanced method built on modern science and technology. It relies entirely on instruments for observation and can provide timely, synchronous, large-scale monitoring of red tides. Remote sensing methods for red tide detection fall into three categories. The first uses parameters such as chlorophyll content to identify red tides [3,4]. In 2018, Jiang Dejuan and colleagues used chlorophyll concentration and other parameters to visually identify red tides in the Bohai Sea [5]. The second detects red tides from the significant spectral differences of water bodies during red tide events. The explosive proliferation or aggregation of protozoa or bacteria during a red tide changes the water color and spectral characteristics, with particularly noticeable fluctuations around the green band (550 nm), and remote sensing detects these spectral changes to identify red tides [6,7]. In 2017, Jiang Binbin and colleagues used the normalized water-leaving radiance at 550 nm as a characteristic band for red tide detection. The third category detects red tides through algal fluorescence; for example, some algae fluoresce in the red band [8]. In 2018, Zhang Feng and colleagues used the fluorescence line height of different water bodies to extract red tide indices and thereby determine the state of the water [9]. In 2021, Wang Siyuan and colleagues selected bands at 460, 530, 650, and 750 nm for red tide detection. Traditional red tide detection methods rely on expert experience, prior knowledge, and qualitative analysis of extensive reports; their thresholds are often inaccurate and of limited applicability, which restricts further gains in detection accuracy.

Deep learning has been widely applied in remote sensing tasks such as image classification, target recognition, and image fusion. Owing to its strong capabilities in big data mining and feature extraction, it has also been used for red tide detection with promising results [10,11]. This paper aims to detect red tides from satellite remote sensing imagery, treating the task primarily as image classification. In China, as deep learning technology continues to develop, a growing number of researchers have applied it to red tide detection. For instance, convolutional neural networks (CNNs) have been used to identify red tides in satellite remote sensing images: by training models to extract features from red tide images, red tides can be identified and monitored automatically. In 2018, Hu et al. proposed a CNN composed of eight fully connected layers, which achieved higher red tide detection accuracy than traditional machine learning methods [12]. In 2019, Kim et al. used GOCI data and the U-Net network to study red tide detection in the waters around the Korean Peninsula [13]. U-Net, which is widely used for pixel classification and target segmentation, has shown good performance in red tide detection. In 2022, Zhao et al. proposed the RDU-Net network based on the HY-1D coastal imager, improving detection accuracy for red tide edges and dispersed distribution areas. These studies validate the feasibility of deep learning for remote sensing-based red tide detection [14]. In 2023, Ding et al. used GOCI data and an improved U-Net network to study red tide detection in the East China Sea, achieving good detection results for red tides on the day of occurrence [15]. Overall, deep learning-based red tide detection and prediction technologies are still developing, both in China and internationally. Although progress has been made, challenges remain. For example, CNN-based models using remote sensing information achieve high detection accuracy in areas of concentrated red tide but perform less well on dispersed red tides. U-Net-based methods perform well on large-scale concentrated and strip-distributed red tides and can effectively detect red tides on the day of occurrence; however, their detection accuracy for time-series red tide samples still needs improvement. Building on this research, this paper proposes a red tide detection method based on a time series fusion network model.

3. CSF-RTDNet Red Tide Detection Model

3.1. Flowchart of Red Tide Detection Based on the CSF-RTDNet Model

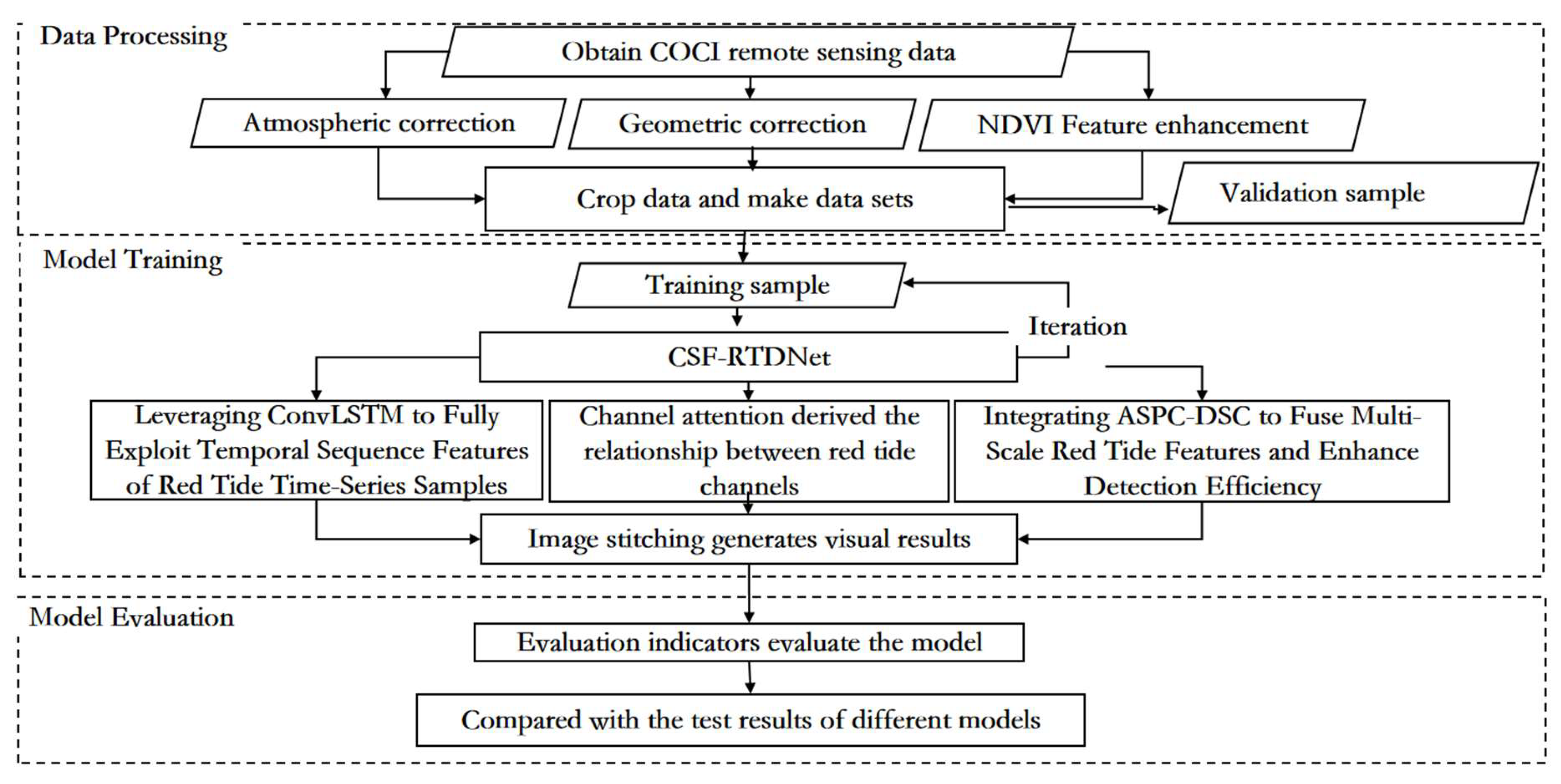

The overall technical approach of this paper is shown in

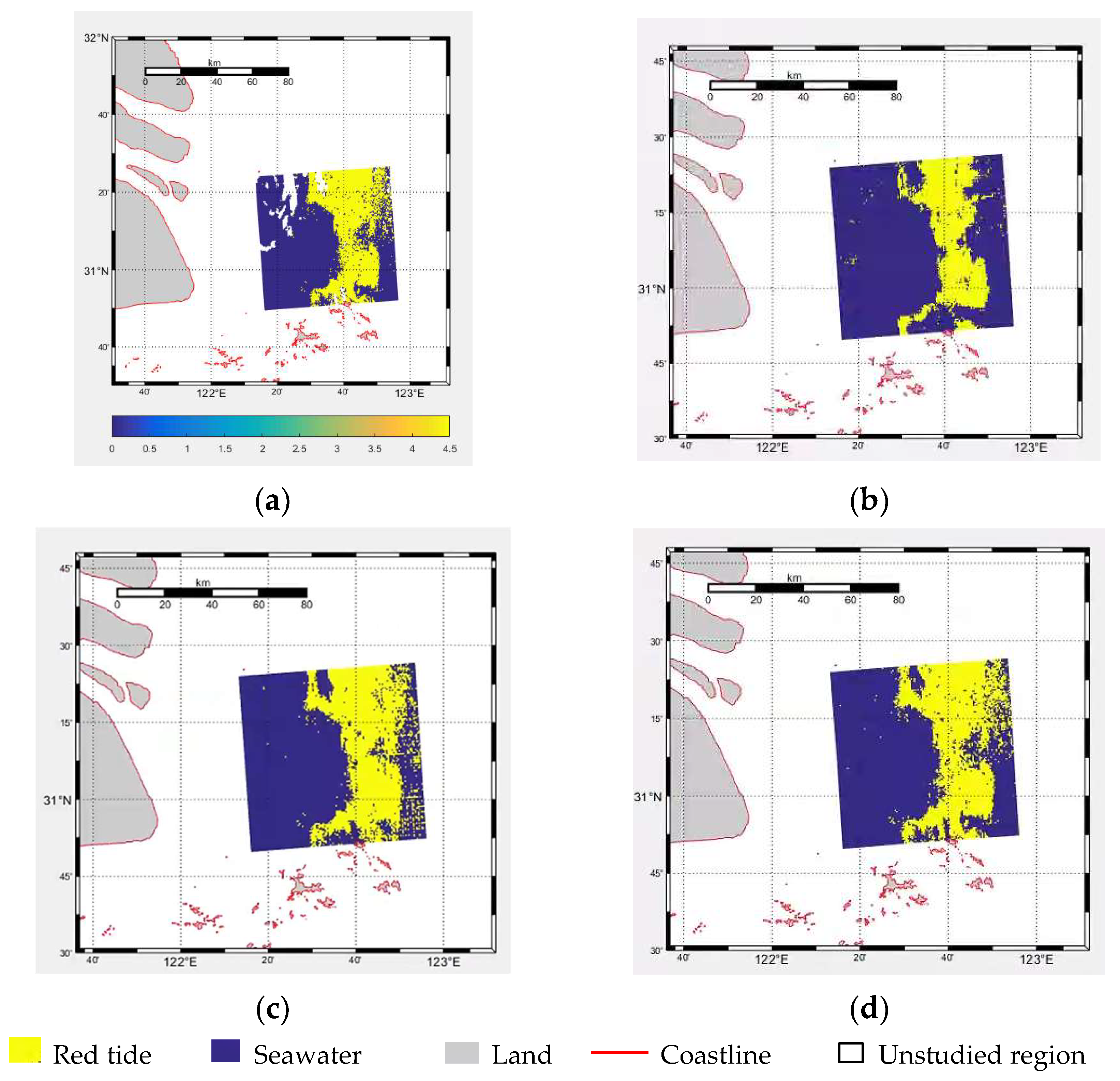

Figure 3, which is mainly divided into three parts: data processing, model training, and model evaluation. Firstly, the L1B data from the GOCI satellite is preprocessed through geometric correction, atmospheric correction, and other data processing methods, and the NDVI feature is fused to enhance the characteristics of red tides. Then, the data are cropped to create a dataset. Model training involves inputting the processed dataset into the red tide detection model based on the time series fusion network model for training. The red tide detection method based on the time series fusion network model introduces the ECA channel attention mechanism module, the atrous spatial pyramid convolution and depthwise separable convolution fusion module (ASPC-DSC), and the dropout layer on the basis of the traditional U-Net. This allows the model to effectively achieve cross-channel interaction, assign different weights according to the influence of different channels, and aggregate multi-scale contextual red tide feature information under multiple sampling rates, reducing computational resource usage while enhancing the ability to capture spatial information and improving the computational efficiency and inference speed of the model. The introduction of the convolutional long short-term memory network (ConvLSTM) effectively integrates the spatiotemporal feature changes of red tides at different time periods, fully exploiting the time series features of red tide samples. This enables better detection of red tides, and the relevant parameters are saved after the model is trained. Finally, the model evaluation uses metrics such as accuracy, precision, recall, and the kappa coefficient to assess the detection performance of the model and compares it with other red tide detection methods such as the support vector machine (SVM) model, the fully convolutional neural network (FCN), and the basic U-Net network model.
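The evaluation metrics named above can be illustrated with a short NumPy sketch. It assumes pixel-wise evaluation of a binary mask (1 = red tide, 0 = seawater); the function name `binary_metrics` is hypothetical and this is not the authors' evaluation code.

```python
import numpy as np

def binary_metrics(y_true, y_pred):
    """Pixel-wise accuracy, precision, recall and Cohen's kappa for a binary red tide mask.

    Hypothetical helper: 1 = red tide, 0 = seawater.
    """
    y_true = np.asarray(y_true).ravel().astype(bool)
    y_pred = np.asarray(y_pred).ravel().astype(bool)
    tp = np.sum(y_true & y_pred)    # red tide correctly detected
    tn = np.sum(~y_true & ~y_pred)  # seawater correctly rejected
    fp = np.sum(~y_true & y_pred)   # false alarms
    fn = np.sum(y_true & ~y_pred)   # missed red tide pixels
    n = tp + tn + fp + fn
    accuracy = (tp + tn) / n
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Kappa corrects the observed agreement p_o for the agreement p_e expected by chance
    p_o = accuracy
    p_e = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / n**2
    kappa = (p_o - p_e) / (1 - p_e) if p_e != 1 else 1.0
    return {"accuracy": float(accuracy), "precision": float(precision),
            "recall": float(recall), "kappa": float(kappa)}

# Toy 4-pixel example: one of two red tide pixels is missed, no false alarms
print(binary_metrics([1, 1, 0, 0], [1, 0, 0, 0]))
```

For this toy mask the result is accuracy 0.75, precision 1.0, recall 0.5, and kappa 0.5. Kappa is lower than accuracy because part of the agreement would be expected by chance alone.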

3.2. Feature Enhancement—NDVI

During a red tide event, microalgae, protozoa, or bacteria in the water undergo explosive proliferation or aggregation under certain environmental conditions, leading to an increase in chlorophyll content and changes in the water’s spectral characteristics. In GOCI remote sensing images, significant changes occur in the original six bands (412, 443, 490, 555, 660, and 680 nm), with the most pronounced fluctuations observed in the blue and green bands.

Because the spectral differences between pixels at red tide boundaries are small, red tides and seawater are only weakly separable, which makes it difficult to delineate the complex boundaries of small-scale, dispersed, and strip-like red tides accurately. Previous studies have shown that high-resolution remote sensing images contain rich red tide feature information, with strong responses in the red and near-infrared bands, and that the NDVI (normalized difference vegetation index) of red tides differs significantly from that of seawater and other objects. This paper therefore introduces NDVI as an input feature. The mean NDVI values of both red tides and seawater are below 0, with seawater having the lowest value, followed by red tides. Introducing NDVI enhances the feature information of red tides, making small-scale, dispersed, and strip-like boundary red tides easier to distinguish. Combining GOCI’s original six-band data with NDVI increases the spectral difference between red tides and seawater and thus improves their separability. In GOCI band data, the red and near-infrared bands correspond to B5 and B8, respectively. NDVI is computed by Formula (2):

$$\mathrm{NDVI} = \frac{B_8 - B_5}{B_8 + B_5} \tag{2}$$
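A minimal sketch of the NDVI feature-fusion step, assuming the corrected GOCI data are already a reflectance array of shape (8, H, W) ordered B1 to B8, so that B5 (660 nm, red) is index 4 and B8 (865 nm, near-infrared) is index 7. NDVI uses the standard definition (B8 − B5)/(B8 + B5); the sketch keeps B1 to B6 (the six bands listed above) and appends NDVI as a seventh channel. The function name `build_input` and the small `eps` guard against division by zero are illustrative assumptions, not details from the paper.

```python
import numpy as np

def build_input(stack8, eps=1e-6):
    """Return a (7, H, W) input: GOCI B1..B6 plus an NDVI channel (hypothetical helper)."""
    stack8 = np.asarray(stack8, dtype=np.float32)
    if stack8.shape[0] != 8:
        raise ValueError("expected 8 GOCI bands (B1..B8) on axis 0")
    red, nir = stack8[4], stack8[7]               # B5 (660 nm), B8 (865 nm)
    ndvi = (nir - red) / (nir + red + eps)        # eps avoids 0/0 over dark pixels
    return np.concatenate([stack8[:6], ndvi[np.newaxis]], axis=0)
```

Over water the near-infrared reflectance is usually lower than the red reflectance, so the NDVI channel tends to be negative, consistent with the mean values reported above.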

3.3. ECA Channel Attention Mechanism

The basic idea of an attention mechanism is to compute an importance distribution over the model’s inputs (such as word sequences or different regions of an image) and then weight the inputs by this distribution, so that the model focuses on the most useful information. This is typically achieved by computing a weighted sum of input features, where the weights are learned dynamically by the model and reflect the importance of each feature to the current task. Studies have shown that red tides tend to occur in high-chlorophyll waters but may also occur in low-chlorophyll waters. When the chlorophyll content of the water rises during a red tide outbreak, the blue and green band data in remote sensing images change significantly. Therefore, assigning different weights to bands according to their importance for red tide detection can effectively improve the classification accuracy of the detection model.
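In its simplest form, the importance distribution described above is a softmax over per-input scores, followed by a weighted sum. The sketch below is a generic illustration, not part of CSF-RTDNet. In a real model the scores come from learned layers, but here they are passed in directly. The name `attention_pool` is hypothetical. ECA, discussed next, uses a sigmoid per channel rather than a softmax across inputs.

```python
import numpy as np

def attention_pool(features, scores):
    """Softmax-weighted sum of N feature vectors (generic illustration).

    features: (N, D) array; scores: (N,) unnormalized importance scores.
    Returns (weights, pooled) where weights sum to 1 and pooled has shape (D,).
    """
    scores = np.asarray(scores, dtype=float)
    weights = np.exp(scores - scores.max())   # subtract max for numerical stability
    weights /= weights.sum()                  # importance distribution over the N inputs
    pooled = weights @ np.asarray(features, dtype=float)
    return weights, pooled

# Equal scores give a plain average; a dominant score concentrates the weight
w, p = attention_pool(np.eye(3), [0.0, 0.0, 0.0])
print(w, p)
```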

The ECA module achieves efficient cross-channel interaction and assigns weights to channels according to their influence, thereby improving the classification accuracy of the detection model. Given an input feature map of size H × W × C, global average pooling (with a pooling size of H × W) is first applied to obtain a 1 × 1 × C feature map that retains only the channel dimension. A 1D convolution with shared weights then lets each channel interact with its neighboring channels, and a sigmoid layer produces a 1 × 1 × C vector of channel weights. Finally, the original H × W × C feature map is multiplied channel-wise by this 1 × 1 × C weight map to obtain the attention-enhanced feature map. This reweighting adjusts the relative importance of the channels in the feature map and improves the model’s accuracy [23]. The specific structure is illustrated in Figure 4:

The ECA module structure is color-coded as follows: light blue represents the compressed features after global average pooling (GAP), green denotes the adaptive selection of 1D convolution kernel size for local cross-channel interaction, and orange indicates the final channel attention weights generated via Sigmoid activation. Colored arrows visualize dynamic interaction paths between channels, while brown highlights the output features recalibrated by attention weights.
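The ECA forward pass described above can be sketched in NumPy for a single H × W × C feature map. The adaptive kernel-size rule in `eca_kernel_size` follows the original ECA-Net paper (γ = 2, b = 1). Whether CSF-RTDNet uses these exact values is an assumption. In a trained network the 1D kernel weights are learned, but here they are passed in. Both function names are hypothetical.

```python
import math
import numpy as np

def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive 1D kernel size from ECA-Net: nearest odd value of |(log2 C + b) / gamma|."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1

def eca_forward(x, conv_weights):
    """ECA channel attention on a feature map x of shape (H, W, C).

    conv_weights: 1D kernel of odd length k, shared by all channels.
    """
    c = x.shape[-1]
    k = len(conv_weights)
    y = x.mean(axis=(0, 1))                  # global average pooling -> (C,)
    y_pad = np.pad(y, k // 2)                # zero padding so the output keeps length C
    # local cross-channel interaction: each channel sees its k nearest neighbours
    z = np.array([np.dot(y_pad[i:i + k], conv_weights) for i in range(c)])
    w = 1.0 / (1.0 + np.exp(-z))             # sigmoid -> channel weights in (0, 1)
    return x * w                             # broadcast the (C,) weights over H and W

print(eca_kernel_size(64), eca_kernel_size(256))
```

Because the 1D kernel spans only k neighbouring channels, ECA adds just k parameters per module. Its SE-style counterpart instead uses fully connected layers whose size grows with C.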

3.4. Atrous Spatial Pyramid Convolution and Depthwise Separable Convolution (ASPC-DSC)

Atrous spatial pyramid pooling (ASPP) is a convolutional neural network structure that combines atrous convolution with spatial pyramid pooling [24]. It is primarily used to process image data containing multi-scale information and has proven highly effective. ASPP samples the input in parallel with atrous convolutions at different sampling rates and combines them with global average pooling to form a feature pyramid, thereby aggregating multi-scale contextual information. Atrous convolution expands the receptive field of the convolutional kernel without downsampling. Specifically, atrous convolutions with rates of 1, 3, and 5 and a kernel size of 3 × 3 are employed, and depthwise separable convolution is used to reduce the number of parameters. The ASPP module is implemented as follows: the first branch uses a 1 × 1 standard convolution to keep the original receptive field; the second to fourth branches use depthwise separable convolutions with different dilation rates to extract features with different receptive fields; and the fifth branch applies global average pooling to the input to capture global features. Finally, the feature maps from the five branches are concatenated along the channel dimension, and a 1 × 1 standard convolution fuses the multi-scale information.

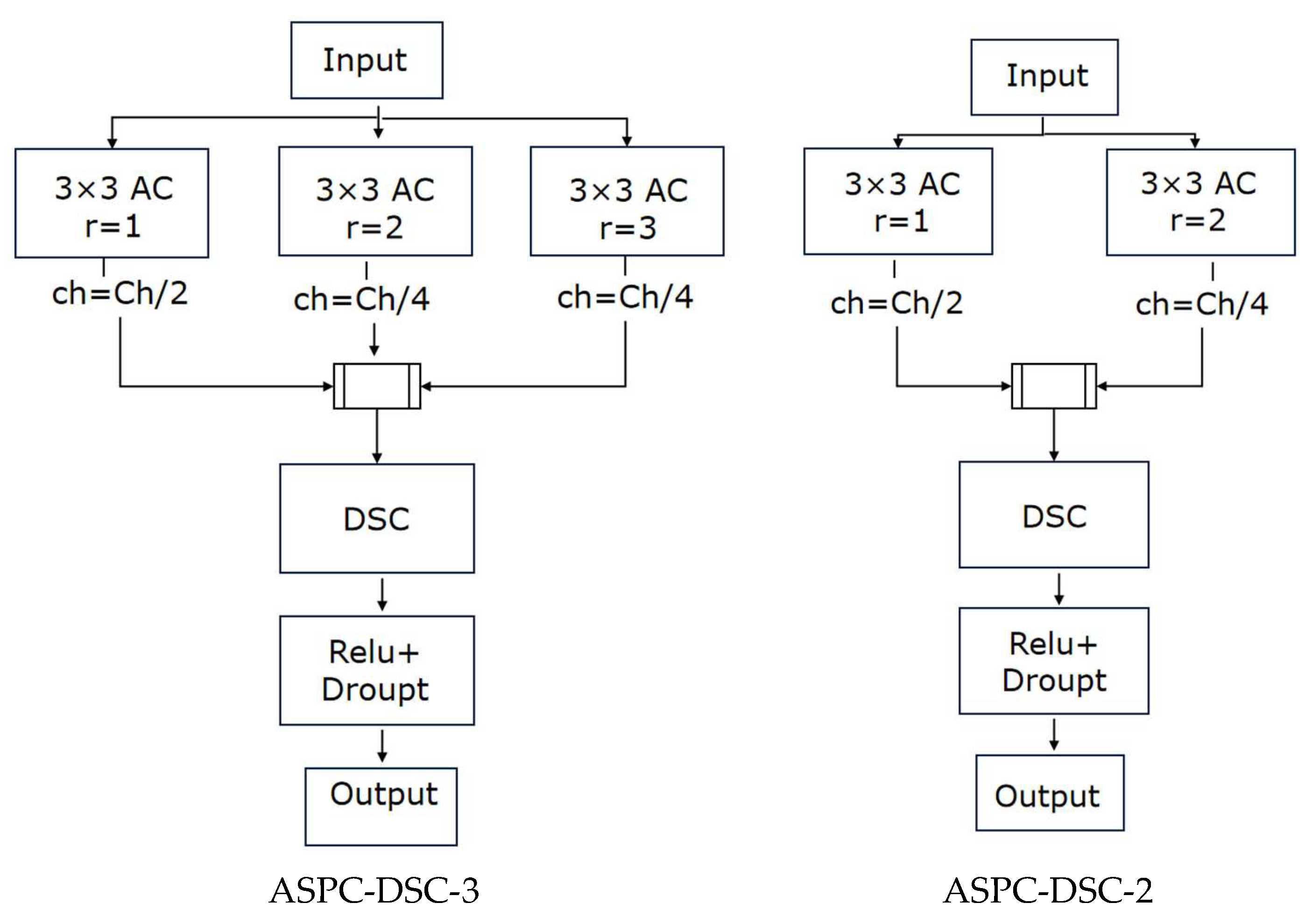
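The receptive-field arithmetic behind atrous convolution can be checked in a few lines. This sketch assumes stride-1 convolutions with no pooling between layers, and the function names are hypothetical. A k × k kernel with dilation rate d spans k + (k − 1)(d − 1) pixels per side. Each additional stacked layer adds its span minus one.

```python
def effective_kernel(k, d):
    """Side length covered by a k x k kernel with dilation rate d."""
    return k + (k - 1) * (d - 1)

def stacked_receptive_field(rates, k=3):
    """Receptive field of stride-1 dilated k x k convolutions applied in sequence."""
    rf = 1
    for d in rates:
        rf += effective_kernel(k, d) - 1
    return rf

# Single 3 x 3 branches with the ASPP rates 1, 3 and 5
print([effective_kernel(3, d) for d in (1, 3, 5)])
# Two stacked 3 x 3 layers
print(stacked_receptive_field([1, 2]), stacked_receptive_field([2, 3]))
```

The three ASPP branches with rates 1, 3, and 5 therefore see 3 × 3, 7 × 7, and 11 × 11 neighbourhoods while keeping the same number of weights.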

Inspired by ASPP, this paper proposes the atrous spatial pyramid convolution with depthwise separable convolution (ASPC-DSC) module. ASPC consists of multiple parallel convolutions with different dilation rates, forming a feature pyramid that expands the receptive field of the convolutional kernel and thereby aggregates multi-scale contextual information. This strengthens the model’s ability to extract features of red tides with complex boundaries at different scales, improving its detection of small-scale, dispersed, and strip-like red tides. Building on ASPC, ASPC-DSC introduces depthwise separable convolution (DSC) [25] to form an efficient feature extraction module. The module not only expands the receptive field and aggregates multi-scale contextual information, enhancing the detection of red tide regions with complex boundaries and varying scales, but also reduces computational complexity through DSC. As a result, it speeds up inference without compromising detection accuracy. ASPC-DSC adopts a parallel atrous convolution structure: ASPC-DSC-3 has three branches with dilation rates of 1, 2, and 3 and channel proportions of 50%, 25%, and 25%, respectively, while ASPC-DSC-2 has two branches with dilation rates of 1 and 2 and channel proportions of 50% each. As convolutional layers are stacked, the receptive field of ASPC-DSC grows linearly with the dilation rate. For example, a single 3 × 3 kernel with a dilation rate of 2 covers a 5 × 5 region and one with a dilation rate of 3 covers 7 × 7; stacking such layers enlarges the receptive field further (a rate-2 layer following a rate-1 layer covers 7 × 7, and a rate-3 layer following a rate-2 layer covers 11 × 11), ensuring that the model can aggregate multi-scale contextual red tide feature information. The integrated DSC further optimizes feature extraction on top of ASPC, enabling the model to capture spatial information more effectively while using fewer computational resources, thereby improving red tide detection accuracy. The specific structure is illustrated in Figure 5:
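The following parameter-counting sketch makes the channel proportions and the savings from DSC concrete. It assumes that each branch receives all C_in input channels and produces its share of the output channels, with branch outputs concatenated. It also ignores biases, normalization, and any fusion convolution. The function names are hypothetical and the branch layout is an assumption, not the authors' exact implementation. Dilation changes where the kernel samples, not how many weights it has, so the rates do not enter the count.

```python
def split_channels(out_channels, proportions):
    """Integer channel count per branch; any rounding remainder goes to the first branch."""
    counts = [int(out_channels * p) for p in proportions]
    counts[0] += out_channels - sum(counts)
    return counts

def conv_params(k, c_in, c_out):
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out

def dsc_params(k, c_in, c_out):
    """Depthwise k x k (one filter per input channel) plus pointwise 1 x 1 convolution."""
    return k * k * c_in + c_in * c_out

def aspc_params(c_in, c_out, proportions, k=3, separable=True):
    """Total weights of the parallel dilated branches of an ASPC(-DSC) block."""
    per_branch = dsc_params if separable else conv_params
    return sum(per_branch(k, c_in, c) for c in split_channels(c_out, proportions))

aspc_dsc_3 = (0.50, 0.25, 0.25)   # dilation rates 1, 2, 3
aspc_dsc_2 = (0.50, 0.50)         # dilation rates 1, 2
print(split_channels(64, aspc_dsc_3), split_channels(64, aspc_dsc_2))
print(aspc_params(64, 64, aspc_dsc_3, separable=False), aspc_params(64, 64, aspc_dsc_3))
```

For a 64-to-64-channel ASPC-DSC-3 block under these assumptions, the branches receive 32, 16, and 16 channels. The standard-convolution version needs 36,864 weights, while the depthwise separable version needs 5,824, roughly a sixfold reduction.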

3.5. Long Short-Term Memory Network (LSTM)

Long short-term memory (LSTM) is a special type of recurrent neural network (RNN) architecture. It was designed to mitigate the vanishing-gradient problem that traditional RNNs encounter when processing long sequences, enabling the network to learn and retain long-term dependencies. Its main feature is the introduction of gate mechanisms and a memory cell that store and control long-term information. Specifically, the forget gate determines which historical information is discarded from the memory cell, the input gate decides which new candidate information is written to the memory cell, and the output gate controls how much of the memory cell is exposed as the hidden state. This mechanism enables LSTM to retain long-term information and use it for prediction and decision-making when needed [26].
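The gate equations described above can be written as a single time-step function. This is a NumPy sketch with hypothetical names. The gate ordering [input, forget, output, candidate] within the stacked weight matrices is an arbitrary choice made here. Weights are passed in rather than learned.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    x: (D,); h_prev, c_prev: (H,); W: (4H, D); U: (4H, H); b: (4H,).
    Rows of W, U and b are stacked as [input, forget, output, candidate].
    """
    z = W @ x + U @ h_prev + b
    i, f, o, g = np.split(z, 4)
    i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)  # input, forget and output gates
    g = np.tanh(g)                                # candidate memory content
    c = f * c_prev + i * g                        # discard part of the old memory, write new content
    h = o * np.tanh(c)                            # output gate controls what the cell exposes
    return h, c

# With all-zero weights every gate is 0.5 and the candidate is 0, so the cell halves
D, H = 3, 2
h, c = lstm_step(np.ones(D), np.zeros(H), np.array([1.0, -1.0]),
                 np.zeros((4 * H, D)), np.zeros((4 * H, H)), np.zeros(4 * H))
print(c)
```

The additive update `c = f * c_prev + i * g` is what lets gradients flow across many time steps when the forget gate stays close to 1.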

LSTM has been applied successfully in many fields, including speech recognition, image captioning, and natural language processing. In speech recognition, it handles long-term dependencies in speech signals and thereby improves recognition accuracy; in image captioning, it generates descriptive text that matches the image content; and in natural language processing, it captures long-term dependencies within sentences or paragraphs, improving tasks such as text classification and sentiment analysis.

3.6. CSF-RTDNet Model Framework

A review of previous red tide detection models shows that traditional methods, which rely on expert experience and qualitative analysis of extensive reports, often have inaccurate thresholds and poor applicability. In addition, the features they use are limited and insufficiently rich, which restricts further improvement of detection accuracy. Because the development of red tides has strong temporal continuity and correlation, current U-Net-based methods can effectively detect red tides on the day they occur but perform poorly on the following days, since they do not sufficiently mine the temporal features of time-series samples. Therefore, building on the traditional U-Net, the ECA module is introduced to assign weights according to the influence of different bands, so that the model focuses on the most informative channels and classification accuracy improves. At the same time, the ordinary convolutional blocks in the first two layers of the U-Net are replaced with the ASPC-DSC-3 module, and the ordinary convolutional block in the third layer is replaced with the ASPC-DSC-2 module. This fuses multi-scale contextual red tide feature information under multiple sampling rates and strengthens the model’s ability to extract features at different scales. Depthwise separable convolution further optimizes feature extraction, allowing the model to capture spatial information better while using fewer computational resources, which improves computational efficiency and inference speed. While preserving multi-scale perception, the expanded receptive field strengthens the extraction of red tide features in complex boundary regions and improves detection accuracy.
The model also introduces convolutional long short-term memory (ConvLSTM), which combines the temporal sequence processing of LSTM with the spatial feature processing of CNNs to integrate the spatiotemporal feature changes of red tides across different time periods. This fully exploits the temporal features of red tide time-series samples, enabling more precise identification of red tides and improving the classification accuracy of the detection model. Finally, because the model is trained on a small training set, a dropout layer is added before upsampling to mitigate overfitting. The overall structure of the improved red tide detection model proposed in this paper is shown in Figure 6.
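ConvLSTM replaces the matrix products of the LSTM gates with convolutions, so gates and states become feature maps that keep their spatial layout. The NumPy forward-pass sketch below assumes "same" padding, stride 1, no peephole connections (the original ConvLSTM formulation by Shi et al. includes them), and the gate order [input, forget, output, candidate]. The input is taken to be a sequence of T co-registered frames of shape (C_in, H, W), for example the seven-channel band-plus-NDVI stacks. All names and shapes are hypothetical, not taken from the CSF-RTDNet implementation.

```python
import numpy as np

def conv2d_same(x, w):
    """Stride-1, 'same'-padded 2D cross-correlation.

    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k. Returns (C_out, H, W).
    """
    c_out, _, k, _ = w.shape
    p = k // 2
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros((c_out, h, wd))
    for di in range(k):
        for dj in range(k):
            # contribution of kernel tap (di, dj) to every output pixel at once
            out += np.einsum("oc,chw->ohw", w[:, :, di, dj], xp[:, di:di + h, dj:dj + wd])
    return out

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def convlstm_step(x, h_prev, c_prev, w, b):
    """One ConvLSTM step: x (C_in, H, W); h_prev, c_prev (C_h, H, W);
    w (4*C_h, C_in + C_h, k, k); b (4*C_h,)."""
    z = conv2d_same(np.concatenate([x, h_prev], axis=0), w) + b[:, None, None]
    i, f, o, g = np.split(z, 4, axis=0)            # each gate is a (C_h, H, W) feature map
    c = sigmoid(f) * c_prev + sigmoid(i) * np.tanh(g)
    h = sigmoid(o) * np.tanh(c)
    return h, c

def run_sequence(frames, w, b, c_h):
    """Feed T frames of shape (C_in, H, W) through the cell and return the last hidden state."""
    _, _, h, wd = frames.shape
    state_h = np.zeros((c_h, h, wd))
    state_c = np.zeros_like(state_h)
    for x in frames:                               # iterate over the time axis
        state_h, state_c = convlstm_step(x, state_h, state_c, w, b)
    return state_h

rng = np.random.default_rng(0)
frames = rng.standard_normal((3, 7, 8, 8))         # T=3 frames, 7 channels, 8 x 8 tile
w = 0.1 * rng.standard_normal((16, 7 + 4, 3, 3))   # 4 gates x 4 hidden channels
print(run_sequence(frames, w, np.zeros(16), c_h=4).shape)
```

The final hidden state has the same spatial size as each input tile. That is what allows such a layer to sit inside a U-Net-style encoder-decoder and pass a time-aggregated feature map onward.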

5. Conclusions

The occurrence of red tides is related to various factors, including the physical and chemical conditions of the marine environment, the dynamics of biological populations, and meteorological conditions. For example, high nutrient concentrations, suitable water temperature and light conditions, and the proliferation of certain plankton populations can all contribute to red tides. Detecting red tides is challenging because their formation is a complex process involving the interaction of multiple factors. In addition, the development of red tides exhibits strong temporal continuity and correlation, yet current deep learning-based detection methods often mine temporal features inadequately, which limits their effectiveness. The CSF-RTDNet model proposed in this study addresses these challenges by integrating multi-temporal data. It combines data acquired at the same time of day on previous days with data acquired at multiple times on the same day to capture richer spatiotemporal features, thereby significantly improving red tide detection accuracy. Specifically, CSF-RTDNet first uses ConvLSTM to learn the spatiotemporal feature changes of the input remote sensing data. ConvLSTM combines the temporal sequence processing capability of LSTM with the spatial feature processing capability of CNNs, integrating the spatiotemporal feature changes of red tides across different time periods and fully exploiting the temporal features of red tide samples. Furthermore, the ECA channel attention mechanism assigns different weights to bands according to their influence on red tide detection, enabling deeper extraction of red tide features.
The atrous spatial pyramid convolution with depthwise separable convolution (ASPC-DSC) module fuses multi-scale contextual red tide feature information under multiple sampling rates, enhancing the model’s ability to extract features at different scales. Depthwise separable convolution further optimizes feature extraction, allowing the model to capture spatial information better while using fewer computational resources, thereby improving computational efficiency and inference speed. By combining these temporal features with deep and shallow features and multi-scale spatial features, the model addresses the insufficient mining of temporal features in existing methods and significantly improves red tide detection accuracy.

Red tide detection is of great significance for prevention and management. By detecting the occurrence of red tides, timely measures can be taken, such as reducing pollutant emissions and adjusting fishing activities, to mitigate the impact of red tides on marine ecosystems and human health. This study explores the application of GOCI remote sensing data in red tide detection, and the experimental results demonstrate that the proposed method achieves promising detection performance, providing a new reference for red tide detection methods.