Abstract

Infrared and visible image fusion (IVIF) integrates complementary information from two modalities to enhance scene perception and comprehension. However, many existing methods fail to explicitly separate modality-specific and modality-shared features, which compromises fusion quality. To overcome this limitation, we introduce a novel hierarchical dual-branch fusion network (HDF-Net). The network decomposes the source images into low-frequency components, which capture shared structural information, and high-frequency components, which preserve modality-specific details. Specifically, we propose a pinwheel-convolutional transformer (PCT) module that integrates local convolutional processing with directional attention to improve low-frequency feature extraction, thereby enabling more robust global–local context modeling. We further introduce a hierarchical feature refinement (HFR) block that adaptively integrates multiscale features using kernel-based attention and dilated convolutions, improving fusion accuracy. Extensive experiments on four public IVIF datasets (MSRS, TNO, RoadScene, and M3FD) demonstrate that HDF-Net is highly competitive against 12 state-of-the-art methods. On the RoadScene dataset, HDF-Net achieves top performance across six key metrics (EN, SD, AG, SF, SCD, and SSIM), surpassing the second-best method by 0.67%, 1.85%, 17.67%, 5.26%, 3.33%, and 1.01%, respectively. These findings verify the generalization and efficacy of HDF-Net in practical IVIF scenarios.

1. Introduction

Image fusion has emerged as a crucial research field in multimodal visual perception, driven by the rapid advancement of computer vision technologies [1,2]. It has a variety of applications, including remote sensing, medical imaging, autonomous driving, object detection, and surveillance [3]. Among these tasks, infrared and visible image fusion (IVIF) is particularly significant in challenging environments, such as those with low illumination, nighttime scenes, and adverse weather conditions [4]. Infrared images capture thermal radiation [5], highlighting salient targets that may not be visible in low-light conditions, while visible images offer richer textures and spatial details. Fusing these modalities integrates thermal and structural information, benefiting downstream tasks such as object detection, action recognition, and segmentation [6].

Despite their complementarity, IR and VIS images differ substantially in imaging mechanism and semantic content, which makes effective feature extraction and integration challenging. A key difficulty in the fusion process is balancing fine-grained details against background structures [7]. IR images often lack sharp textures and edges, whereas VIS images are prone to noise and illumination variations. Failure to balance these aspects can lead to fused images with insufficient detail clarity or compromised background consistency, directly degrading the performance of downstream applications. For instance, inadequate fusion in surveillance and autonomous driving scenarios may result in missed targets or misclassification, reducing system reliability and safety [8]. Traditional image fusion methods [9], such as multiscale decomposition or handcrafted fusion rules, have advanced considerably, but their reliance on predefined operations and fixed fusion strategies limits their adaptability to dynamic environments and frequently leads to the loss of critical information.

Recent advances in deep learning have considerably enhanced IVIF performance. Convolutional neural networks (CNNs) have demonstrated strong local feature extraction capabilities [10], while generative adversarial networks (GANs) utilize adversarial learning to improve visual realism [11,12]. However, CNN-based approaches are inherently constrained by their limited receptive fields, making them less effective in modeling long-range dependencies and cross-modal relationships [13,14]. GAN-based methods, although promising, often suffer from training instability and mode collapse, and require large-scale datasets [15,16]. More recently, transformer-based architectures have been introduced into IVIF due to their success in modeling long-range dependencies and global context [17,18]. Compared with CNNs, transformers offer globally consistent feature representations and better preserve fine details in complex scenes. Nevertheless, current transformer-based fusion methods face challenges such as high computational complexity and inefficient feature alignment, which limit their scalability and practical application [19].

Moreover, existing deep learning-based methods often fail to explicitly differentiate between modality-shared features (e.g., backgrounds, global structures) and modality-specific features (e.g., textures, contours). This lack of targeted feature separation may lead to overfusion of low-frequency content and suppression of high-frequency details, compromising the overall quality of the fused image [20,21,22]. Studies have demonstrated that low-frequency features are frequently shared between IR and VIS images, while high-frequency features are typically modality-specific [23,24].

In response to these challenges, we propose a novel fusion framework named HDF-Net (hierarchical dual-branch feature extraction fusion network), designed explicitly for IVIF tasks. HDF-Net adopts a dual-branch encoder structure to extract modality-shared and modality-specific features separately, followed by a unified decoder to reconstruct the fused image [25]. The low-frequency branch is designed to capture global contextual information common to both modalities, whereas the high-frequency branch concentrates on intricate structures and textures.

To enhance the extraction of low-frequency information, we propose the pinwheel-convolutional transformer (PCT) module [26], which integrates a multilayer lite transformer architecture with a pinwheel-shaped convolutional kernel. The PCT module fulfils two critical functions. Firstly, employing asymmetric convolutions with pinwheel-shaped kernels effectively enlarges the receptive field, enabling robust feature extraction from low-contrast regions and large-scale background structures. Secondly, the PCT module adopts a localized, lightweight attention mechanism to replace conventional global attention, significantly reducing computational complexity while maintaining the Transformer’s capacity for global context modeling. This dual design addresses the inherent limitations of traditional convolutional layers in capturing global structural information and mitigates the high computational overhead commonly associated with standard transformer-based fusion networks.

In the high-frequency branch, we incorporate an invertible neural network (INN) module [27], which performs reversible transformations to preserve fine details, such as edges and textures, during fusion. The INN ensures lossless information flow, which is essential for generating high-fidelity outputs.

The main contributions of this work are as follows:

- (1) We propose a hierarchical dual-branch fusion architecture that demonstrates exceptional performance in image fusion. This architecture effectively integrates infrared and visible images by separating them into low-frequency, shared features and high-frequency, modality-specific details, ensuring optimal fusion quality.

- (2) We design a novel PCT module to extract robust low-frequency features, strengthening the network’s capability to capture background and large-scale structural information. Additionally, we employ an INN module to retain modality-specific high-frequency details with high fidelity.

- (3) We design a hierarchical feature refinement (HFR) module to refine fused features further. In contrast to conventional linear refinement, HFR incorporates kernel operations (KO) and dilated convolutions (DC) to enhance feature expression and facilitate robust multiscale fusion. By integrating KO and DC, representation learning becomes more flexible and effective across both frequency branches, thus improving the overall quality and stability of the fusion.

- (4) Our method surpasses existing approaches in visual quality and quantitative performance, as demonstrated by extensive experiments conducted on various public IVIF benchmark datasets, including MSRS [28], TNO [29], RoadScene [30], and M3FD [31].

2. Related Work

2.1. CNN- and GAN-Based Methods for IVIF

IVIF has attracted growing interest recently due to its broad applicability in computer vision tasks. Compared to single-modality imaging, fusing infrared (IR) and visible (VIS) images enables more comprehensive scene understanding, thereby boosting the robustness and validity of tasks such as object detection, scene perception, and target tracking [9,32,33,34].

Researchers have widely adopted CNN-based methods for their effectiveness in extracting local features. Early work, such as DenseFuse [35], used an autoencoder (AE) framework with dense connections to mitigate feature loss and enhance representation flow. However, its reliance on predefined fusion rules limited adaptability. Subsequently, IFCNN [36] introduced a fully trainable end-to-end architecture that learned both feature extraction and fusion in a data-driven manner. PMGI [37] proposed balancing gradient and intensity preservation, effectively maintaining detail and contrast in the fused images. SuperFusion [38] further addressed misalignment issues in IR and VIS images by introducing a feature alignment mechanism into the CNN framework. DAF-Net [39] integrated Restormer blocks and invertible neural networks (INNs) to improve global semantic consistency, better balancing local texture preservation and global structure modeling.

Despite these advances, CNN-based fusion approaches face several inherent challenges [40]. Their limited receptive fields impede the modeling of long-range dependencies and global context. Their lack of frequency-aware mechanisms frequently leads to overfusion of shared features and neglect of modality-specific details. Additionally, their architectural rigidity limits their adaptability to various scene types and cross-modal data characteristics.

GAN-based approaches, such as FusionGAN [41], introduced adversarial training to generate perceptually realistic results. GANMcC [42] and AttentionFGAN [43] enhanced this framework with multidiscriminator setups and attention-based modules. TarDAL [44] introduced target-aware discriminators to better guide the fusion process for downstream tasks. However, these methods remain highly data-dependent, suffer from training instability, and often provide limited control over the interpretability of fused features.

2.2. Transformer-Driven Approaches and Limitations

Introducing the transformer architecture [45,46]—initially for NLP—marked a turning point in global modeling. Vision transformer (ViT) [47] and swin transformer [48] adapted this paradigm for image-level tasks. Due to their self-attention mechanism, transformers can more effectively model cross-modal relationships and long-range dependencies than CNNs [49,50].

SwinFuse [51] adopted swin transformer blocks for IVIF, balancing salient thermal targets and texture-rich visible details. However, its windowed attention introduces fragmented context modeling, potentially missing global consistency. CoCoNet [52] introduced contrastive learning and multilevel supervision but relied on extensive sample pairs and suffered from high training costs. CDDFuse [53] proposed a correlation-driven decomposition mechanism using Transformer–CNN hybrids to decouple shared and specific modality features. While it effectively improved feature disentanglement, it introduced high computational cost and a complex pipeline design. It also suffered from information loss in high-frequency regions due to its dependence on global pooling strategies. PDFusion [54] adopted progressive dual-branch transformer fusion. Although it improved cross-layer semantic alignment, the framework lacked explicit frequency domain separation and involved repeated feature interactions that increased memory consumption and latency.

Despite leveraging Transformer structures, most prior methods do not explicitly separate frequency-aware representations and their attention mechanisms are computationally heavy, especially on high-resolution images. Furthermore, cross-modal alignment remains an open problem due to insufficient frequency-specific guidance.

To address the unresolved limitations in existing IVIF approaches, we propose HDF-Net, a fusion framework designed to achieve efficient and frequency-aware feature integration. Unlike prior works such as CDDFuse and PDFusion, which rely on heavy attention structures or implicit feature decoupling, HDF-Net employs a hierarchical dual-branch design that explicitly separates low-frequency shared content from high-frequency modality-specific details. This architectural decoupling improves interpretability while reducing feature entanglement.

Additionally, we present a lightweight transformer-based module (PCT) that integrates asymmetric convolutional operations to improve global modeling efficacy without imposing a high computational cost. PCT enables enhanced global context encoding with an expanded receptive field while avoiding the complexity of conventional full-attention designs. Furthermore, a feature refinement module (HFR) incorporating dilated convolutions and kernel operations is implemented to enhance multiscale alignment and suppress redundant activations. HDF-Net resolves numerous constraints associated with contemporary transformer-based fusion techniques, such as inadequate frequency-level separation, excessive computational overhead, and deficient cross-modal consistency.

3. Methods

This section begins by outlining the overall workflow of HDF-Net, followed by a comprehensive description of each module. Subsequently, we present the loss functions used in the model. For clarity, we will refer to the low-frequency global features as “core features” and the high-frequency local features as “refined features” in the following discussion.

3.1. Overview

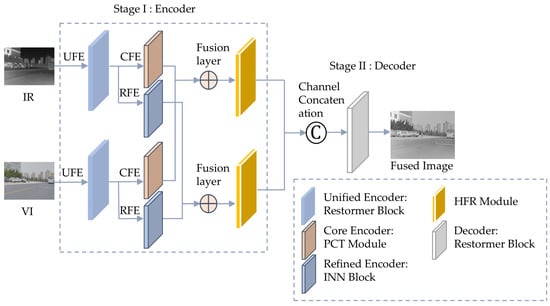

HDF-Net comprises a dual-branch encoder responsible for feature extraction and decomposition, and a decoder that generates the fused image. Figure 1 illustrates the overall architecture of HDF-Net.

Figure 1. The overall architecture of HDF-Net. The encoder includes three main modules: a unified feature encoder (UFE), core feature encoder (CFE), and refined feature encoder (RFE), followed by a hierarchical feature refinement (HFR) module and a unified decoder.

3.2. Encoder Architecture

As shown in Figure 1, the encoder comprises four essential components: the unified feature encoder (UFE), the core feature encoder (CFE), the refined feature encoder (RFE), and the hierarchical feature refinement (HFR) module. We denote these components as $\mathcal{U}(\cdot)$, $\mathcal{C}(\cdot)$, $\mathcal{R}(\cdot)$, and $\mathcal{H}(\cdot)$, respectively. Each module plays a distinct role in extracting and refining multiscale and modality-specific features, ensuring comprehensive feature representation.

3.2.1. Unified Feature Encoder

The UFE captures shallow, shared representations from both the infrared and visible images, $I_{ir}$ and $I_{vis}$, extracting initial cross-modality information while preserving crucial structural details, i.e.,

$$\Phi_{ir}^{U} = \mathcal{U}(I_{ir}), \qquad \Phi_{vis}^{U} = \mathcal{U}(I_{vis}),$$

where $\mathcal{U}(\cdot)$ denotes the UFE.

In the UFE, we adopt the Restormer block, a transformer-based block originally designed for image restoration. It applies self-attention across the channel dimension rather than the spatial dimension, enabling the extraction of global features from high-resolution inputs while preserving computational efficiency [55].

3.2.2. Core Feature Encoder

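To obtain the core features $\Phi_{ir}^{C}$ and $\Phi_{vis}^{C}$ from the extracted shallow features $\Phi_{ir}^{U}$ and $\Phi_{vis}^{U}$, we define the transformation as:

$$\Phi_{ir}^{C} = \mathcal{C}(\Phi_{ir}^{U}), \qquad \Phi_{vis}^{C} = \mathcal{C}(\Phi_{vis}^{U}),$$

where $\mathcal{C}(\cdot)$ denotes the core feature encoder (CFE). We employ the pinwheel-convolutional transformer (PCT) module as the core component of the CFE, which enhances both global and local feature representations.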

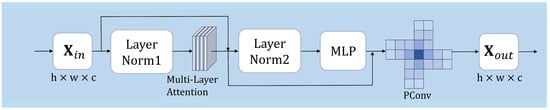

As illustrated in Figure 2, the PCT module integrates the multi-layer attention mechanism (MLA) and pinwheel-shaped convolution (PConv) to strengthen feature extraction. The MLA consists of multiple stacked self-attention layers, enabling effective modeling of long-range dependencies and feature refinement across various scales.

Figure 2. The architecture of the PCT module.

Given an input feature map $X$, the MLA module applies hierarchical self-attention operations to model long-range dependencies. In the first layer, we define the initial attention computation as:

$$A_1 = \mathrm{Attn}_1(X),$$

where $\mathrm{Attn}_1(\cdot)$ represents the first AttentionBase module. The subsequent layers refine the attention output recursively:

$$A_l = \mathrm{Attn}_l(A_{l-1}), \quad l = 2, \dots, L,$$

where $L$ represents the total number of MLA layers. We apply a 1 × 1 convolution after the final attention layer to ensure consistency in feature dimensionality:

$$X_{\mathrm{MLA}} = \mathrm{Conv}_{1 \times 1}(A_L),$$

where $\mathrm{Conv}_{1 \times 1}(\cdot)$ represents a pointwise convolution, ensuring channel adaptation while maintaining the original spatial resolution.

To refine the extracted features further, we apply pinwheel convolution (PConv) after the MLA module. To enhance directional sensitivity and spatial diversity, PConv uses four asymmetric padding strategies, one for each convolution branch. Each padding tuple (top, right, bottom, left) defines an asymmetric spatial offset, shifting the receptive field off-center. This design enables the network to capture directional variations and spatial asymmetries, attributes often ignored by standard symmetric convolution.

As demonstrated in prior work [26], such asymmetry aligns with the Gaussian-like distribution patterns commonly observed in infrared small targets, enhancing the feature extraction of weak signals. Unlike conventional symmetric kernels, which aggregate features uniformly, our pinwheel-shaped kernels emphasize directional sensitivity, improving the detection of delicate structures and contour transitions in low-contrast or cluttered backgrounds. Mirroring the kernels would result in overlapping receptive fields, which would dilute feature diversity and hinder the network’s ability to model orientation-aware features.

After applying padding, we perform four parallel convolutions:

$$X_i = \mathrm{SiLU}\big(\mathrm{BN}(X_{P_i} \otimes W_i)\big), \quad i = 1, \dots, 4,$$

where $X_{P_i}$ denotes the input padded with the $i$-th asymmetric padding strategy and $W_i$ is the convolution kernel of the $i$-th branch. The sigmoid linear unit (SiLU), also known as the Swish activation, is defined as $\mathrm{SiLU}(x) = x \cdot \sigma(x)$, where $\sigma$ is the logistic sigmoid. It provides smooth activation and better convergence than ReLU. Batch normalization (BN) stabilizes and accelerates training by normalizing intermediate activations across each mini-batch. The convolution operation is denoted by $\otimes$, where each convolution kernel has a specific receptive field. For instance, $W_1^{(1,3,c')}$ represents a 1 × 3 convolution kernel with $c'$ output channels, capturing horizontal spatial dependencies. Each convolution branch thus extracts a different directional feature response. We then concatenate these outputs along the channel dimension, where Cat refers to the channel-wise concatenation operator that merges the multi-branch outputs into a unified representation:

$$X' = \mathrm{Cat}(X_1, X_2, X_3, X_4).$$

Finally, the concatenated feature tensor passes through a 2 × 2 convolution kernel without additional padding. This operation refines the extracted features while maintaining spatial consistency. The dimensions of the output feature map are adjusted to the predefined dimensions $h_2$ and $w_2$, ensuring seamless integration with standard convolutional layers. Moreover, PConv serves as an adaptive channel-attention mechanism by effectively analyzing the significance of different convolutional orientations. The final output representation is computed as follows:

$$Y = \mathrm{SiLU}\big(\mathrm{BN}(X' \otimes W^{(2,2,c_2)})\big),$$

where $X'$ is the concatenated feature tensor and $W^{(2,2,c_2)}$ is a 2 × 2 convolution kernel with $c_2$ output channels.

The final transformation adjusts the output spatial dimensions while ensuring compatibility with standard convolutional layers. The effectiveness of PConv lies in its hierarchical multiscale receptive field, which mimics the Gaussian distribution of small target features.
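The shape bookkeeping behind PConv can be illustrated with a minimal single-channel sketch in plain Python. The padding values, the averaging kernel weights, and the helper names (`pad`, `conv2d`, `pconv_branches`, `pconv`) are assumptions chosen for illustration, and batch normalization is omitted; only the overall pattern (four asymmetrically padded 1 × k or k × 1 branches, SiLU, then a 2 × 2 convolution without padding) follows the description above.

```python
import math

def silu(v):
    """SiLU / Swish activation: v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))

def pad(x, top, right, bottom, left):
    """Zero-pad a 2-D list asymmetrically; tuple order (top, right, bottom, left)."""
    width = len(x[0]) + left + right
    out = [[0.0] * width for _ in range(top)]
    out += [[0.0] * left + list(row) + [0.0] * right for row in x]
    out += [[0.0] * width for _ in range(bottom)]
    return out

def conv2d(x, kernel):
    """Stride-1 cross-correlation without padding."""
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(x[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(len(x[0]) - kw + 1)]
            for i in range(len(x) - kh + 1)]

def pconv_branches(x, k=3):
    """Four directional branches; each yields an (h+1) x (w+1) map."""
    horiz = [[1.0 / k] * k]               # 1 x k kernel (assumed weights)
    vert = [[1.0 / k] for _ in range(k)]  # k x 1 kernel (assumed weights)
    padded = [
        (pad(x, 1, 0, 0, k), horiz),  # window lies to the left of the pixel
        (pad(x, 0, k, 1, 0), horiz),  # window extends to the right
        (pad(x, k, 1, 0, 0), vert),   # window lies above the pixel
        (pad(x, 0, 0, k, 1), vert),   # window extends downward
    ]
    return [[[silu(v) for v in row] for row in conv2d(p, w)] for p, w in padded]

def pconv(x, k=3):
    """Merge the branches with a 2 x 2 convolution (no padding): back to h x w."""
    merge = [[0.0625, 0.0625], [0.0625, 0.0625]]  # assumed uniform weights
    maps = [conv2d(b, merge) for b in pconv_branches(x, k)]
    h, w = len(maps[0]), len(maps[0][0])
    # A 2 x 2 convolution over four concatenated channels equals the sum of
    # the per-channel 2 x 2 convolutions.
    return [[sum(m[i][j] for m in maps) for j in range(w)] for i in range(h)]
```

With a 4 × 5 input, each branch produces a 5 × 6 map and the merged output is again 4 × 5, which is why no extra padding is needed in the final 2 × 2 convolution.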

3.2.3. Refined Feature Encoder

In contrast to the CFE, the RFE focuses on extracting high-frequency refined features from the unified features, expressed as:

$$\Phi_{ir}^{R} = \mathcal{R}(\Phi_{ir}^{U}), \qquad \Phi_{vis}^{R} = \mathcal{R}(\Phi_{vis}^{U}).$$

Here, $\mathcal{R}(\cdot)$ denotes the RFE, which is implemented using an invertible neural network (INN) with affine coupling layers to preserve the edge and texture details that are crucial for image fusion. Because the input and output features of an INN are mutually reconstructable, the module extracts features without information loss.
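The invertibility property that the RFE relies on can be checked with a small affine coupling layer. This is a minimal sketch on flat feature vectors of even length; the functions `_scale` and `_shift` are hypothetical stand-ins for the learned sub-networks and are not taken from the paper.

```python
import math

def _scale(v):
    """Hypothetical stand-in for a learned scale sub-network."""
    return [math.tanh(0.5 * t) for t in v]

def _shift(v):
    """Hypothetical stand-in for a learned translation sub-network."""
    return [0.3 * t + 0.1 for t in v]

def coupling_forward(x):
    """Affine coupling: keep the first half, transform the second half."""
    half = len(x) // 2
    x1, x2 = x[:half], x[half:]
    s, t = _scale(x1), _shift(x1)
    y2 = [b * math.exp(si) + ti for b, si, ti in zip(x2, s, t)]
    return x1 + y2

def coupling_inverse(y):
    """Exact inverse: the untouched half lets us recompute scale and shift."""
    half = len(y) // 2
    y1, y2 = y[:half], y[half:]
    s, t = _scale(y1), _shift(y1)
    x2 = [(b - ti) * math.exp(-si) for b, si, ti in zip(y2, s, t)]
    return y1 + x2
```

Because the first half passes through unchanged, the inverse never needs to invert `_scale` or `_shift` themselves, so these sub-networks can be arbitrarily complex while the layer remains lossless.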

3.2.4. Fusion Layer

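The fusion layer integrates the extracted core features and refined features separately, maintaining consistency with the encoder’s feature extraction process, formulated as:

$$\Phi^{C} = \mathcal{F}_{C}(\Phi_{ir}^{C}, \Phi_{vis}^{C}), \qquad \Phi^{R} = \mathcal{F}_{R}(\Phi_{ir}^{R}, \Phi_{vis}^{R}),$$

where $\Phi_{ir}^{C}$, $\Phi_{vis}^{C}$ and $\Phi_{ir}^{R}$, $\Phi_{vis}^{R}$ are the core and refined features of the infrared and visible images, and the fusion operators $\mathcal{F}_{C}$ and $\mathcal{F}_{R}$ each perform element-wise addition followed by a pointwise convolution (1 × 1 Conv), i.e., a linear transformation.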
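Element-wise addition followed by a pointwise convolution amounts to a per-pixel linear map over channels. The sketch below makes this concrete for nested-list feature maps; the function name `fuse` and the weight layout are illustrative assumptions.

```python
def fuse(feat_ir, feat_vis, weight, bias):
    """Add two [C][H][W] feature maps, then apply a 1 x 1 convolution.

    weight has shape [C_out][C] and bias has shape [C_out]; a 1 x 1
    convolution mixes channels independently at every spatial position.
    """
    c, h, w = len(feat_ir), len(feat_ir[0]), len(feat_ir[0][0])
    summed = [[[feat_ir[k][i][j] + feat_vis[k][i][j] for j in range(w)]
               for i in range(h)] for k in range(c)]
    return [[[bias[o] + sum(weight[o][k] * summed[k][i][j] for k in range(c))
              for j in range(w)] for i in range(h)]
            for o in range(len(weight))]
```

With identity weights and zero bias the output is simply the element-wise sum, so any departure from plain addition is carried entirely by the learned 1 × 1 weights.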

3.2.5. HFR Module

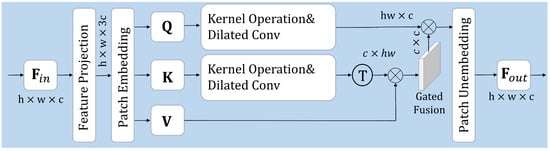

We introduce the hierarchical feature refinement (HFR) module after obtaining the fused low-frequency and high-frequency features from the respective encoding branches. We further integrate multimodal information to ensure more effective interaction and representation of features.

As shown in Figure 3, an input feature map first undergoes a feature projection step that transforms it into an expanded representation to enhance channel-wise feature richness. Subsequently, a patch embedding operation partitions the projected feature into nonoverlapping segments, producing the embedded representations Q, K, and V, which are then processed by the self-attention mechanism.

Figure 3. The architecture of the HFR module.

To improve feature representation robustness, we adopt a Mercer-based kernel operation to reconstruct Q and K through a projection mapping. Next, dilated convolution (DC) is employed to process the kernel-mapped Q and K. Attention is then computed from the processed Q and K, with a weighting term that dynamically adjusts the attention weights to balance the contributions of different features, enabling adaptive fusion between core and refined features.

HFR integrates kernel operation (KO) with dilated convolution (DC) to construct a hierarchical representation space. KO maps the input features into a higher-dimensional space, allowing for more expressive similarity measurement between Q and K. Meanwhile, DC expands the receptive field while preserving spatial structures, effectively capturing multiscale dependencies. The refined Q and K are then utilized to compute adaptive attention weights, followed by a gated fusion mechanism that combines these attention-weighted responses with the value (V) representation.
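The way dilation enlarges the receptive field without adding weights can be seen in a one-dimensional sketch. The function names are illustrative, and the example is not the HFR implementation; it only demonstrates the standard relation that a kernel of size k with dilation d spans (k − 1)·d + 1 input positions.

```python
def receptive_field(kernel_size, dilation):
    """Number of input positions spanned by one dilated kernel application."""
    return (kernel_size - 1) * dilation + 1

def dilated_conv1d(signal, kernel, dilation=1):
    """Stride-1 dilated cross-correlation without padding."""
    span = receptive_field(len(kernel), dilation)
    return [sum(kernel[j] * signal[i + j * dilation] for j in range(len(kernel)))
            for i in range(len(signal) - span + 1)]
```

For example, a three-tap kernel covers 3, 5, and 9 positions at dilation rates 1, 2, and 4, so stacking or combining several rates gives multiscale context at the cost of a fixed number of weights.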

3.3. Decoder

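The decoder $\mathcal{D}(\cdot)$ reconstructs the fused image $F$ by concatenating the core and refined features along the channel dimension:

$$F = \mathcal{D}\big(\mathrm{Cat}(\Phi^{C}, \Phi^{R})\big),$$

where $\Phi^{C}$ and $\Phi^{R}$ denote the fused core and refined features passed to the decoder.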

To ensure consistency with the unified feature encoder (UFE), the decoder employs a series of Restormer blocks as its core computational units. These blocks are configured with the same embedding dimensionality, attention heads, and feedforward structures as in UFE, facilitating effective feature reconstruction while preserving global context and local detail integrity. This design balances structural simplicity and representational fidelity, avoiding redundant computation while ensuring that cross-modal semantic information is effectively integrated into the final fused output.

3.4. Loss Function

Because no ground truth is available in IVIF, conventional supervised learning methods are inapplicable. To address this, we design an unsupervised loss function that encourages the preservation of shared low-frequency structures and modality-specific high-frequency details. The overall loss combines a reconstruction term, a gradient term, a decomposition term, and a feature similarity term.

Here, the reconstruction loss ensures that the fused image F remains structurally close to the most informative parts of both source images (IR and VIS) by minimizing the intensity and structural similarity errors.

The max operator selects salient targets (e.g., warm bodies from IR and textures from VIS), and the SSIM term improves structural integrity by maintaining local luminance and contrast.

To enhance edge and texture information, especially in the high-frequency regions extracted by the INN block, we introduce a gradient loss that aligns the gradients of the fused image with those of the input images. This term is critical for capturing contours (e.g., pedestrians, vehicles). The Sobel operator ∇ detects image gradients, so that the spatial edges of the fused result align with those of the sources.
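The intensity and gradient terms can be sketched as follows. The exact formulas are not reproduced here, so this sketch assumes a form common in prior fusion work: a mean absolute error between the fused image (or its Sobel gradient magnitude) and the element-wise maximum of the two sources. The SSIM term is omitted, and all function names are illustrative.

```python
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def sobel_magnitude(img):
    """|gx| + |gy| at interior pixels of a 2-D list (no padding)."""
    out = []
    for i in range(1, len(img) - 1):
        row = []
        for j in range(1, len(img[0]) - 1):
            gx = sum(SOBEL_X[a][b] * img[i + a - 1][j + b - 1]
                     for a in range(3) for b in range(3))
            gy = sum(SOBEL_Y[a][b] * img[i + a - 1][j + b - 1]
                     for a in range(3) for b in range(3))
            row.append(abs(gx) + abs(gy))
        out.append(row)
    return out

def l1_to_max(fused, src_a, src_b):
    """Mean absolute error between `fused` and the element-wise max of sources."""
    diffs = [abs(f - max(a, b))
             for fr, ar, br in zip(fused, src_a, src_b)
             for f, a, b in zip(fr, ar, br)]
    return sum(diffs) / len(diffs)

def intensity_loss(fused, ir, vis):
    """Assumed intensity term: stay close to the brighter source per pixel."""
    return l1_to_max(fused, ir, vis)

def gradient_loss(fused, ir, vis):
    """Assumed gradient term: match the stronger source edge per pixel."""
    return l1_to_max(sobel_magnitude(fused), sobel_magnitude(ir),
                     sobel_magnitude(vis))
```

Under this assumed form, a fused image that copies the sharper source edge at every location incurs zero gradient loss, whereas a flat output is penalized in proportion to the edge strength it discards.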

The decomposition loss is designed to enforce a high correlation between the core features while encouraging the refined features to remain distinct. It is formed as a ratio of Pearson correlation coefficients (CC), computed between the IR and VIS refined features in the numerator and between the IR and VIS core features in the denominator. Minimizing the numerator suppresses the correlation between the refined features, so that modality-specific details remain distinct; maximizing the denominator promotes a high correlation between the core features, encouraging shared structure. This balance avoids over-fusion and preserves modality-specific cues.
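The behavior of a correlation-ratio loss of this kind can be checked numerically. The sketch below operates on flattened feature vectors and assumes a squared numerator and a stabilizing constant `eps` slightly above 1 (which keeps the denominator positive because CC lies in [−1, 1]); both choices are assumptions borrowed from related correlation-driven decomposition work, not values confirmed by the text.

```python
import math

def pearson_cc(a, b):
    """Pearson correlation coefficient of two equal-length sequences.

    Raises ZeroDivisionError if either input is constant.
    """
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)

def decomposition_loss(core_ir, core_vis, refined_ir, refined_vis, eps=1.01):
    """Ratio loss: small when core features agree and refined features differ."""
    numerator = pearson_cc(refined_ir, refined_vis) ** 2
    denominator = pearson_cc(core_ir, core_vis) + eps
    return numerator / denominator
```

The loss is near zero when the core features are strongly correlated and the refined features are uncorrelated, and it grows sharply when the roles are reversed, which matches the intended decoupling.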

Moreover, considering that the HFR module refines feature representations through multiscale interactions, we further introduce a feature similarity loss, which ensures that the features refined by HFR remain consistent with their original counterparts.

The HFR module generates enhanced representations via multiscale fusion. This term regulates feature drift, ensuring that:

- (1) Refined high-level features (after HFR) remain consistent with their low-level counterparts (before HFR).

- (2) Oversmoothing and semantic deviation are prevented during refinement.

4. Results and Analysis

This section presents a systematic series of experiments conducted under various settings to validate the effectiveness and generalization capability of the proposed HDF-Net. First, we outline the experimental environment, which includes datasets, implementation details, and evaluation metrics. Second, we conduct comparative experiments against state-of-the-art fusion methods to showcase the superiority of HDF-Net in both qualitative and quantitative evaluations. Finally, we perform an ablation study to analyze the contributions of key components in our model and verify their impact on fusion performance.

4.1. Experimental Setup

4.1.1. Datasets

In our IVIF experiments, we assess the proposed method on four widely recognized benchmark datasets: MSRS, TNO, RoadScene, and M3FD. The infrared images are captured using long-wave infrared (LWIR) thermal sensors operating primarily in the 8–14 µm wavelength range. We train the HDF-Net model on the MSRS training set, which comprises 1083 pairs of infrared and visible images. For performance evaluation, we test the model on the MSRS test set (300 pairs), RoadScene (55 pairs), TNO (42 pairs), and the M3FD test set (300 pairs). These datasets encompass diverse real-world scenarios, allowing us to assess the stability and versatility of the proposed method. We thoroughly evaluate the model’s ability to preserve structural details, enhance contrast, and produce high-quality fusion results across different datasets.

4.1.2. Implementation Details

We conducted all experiments on a workstation equipped with an Intel(R) Core(TM) i7-14700KF CPU (Intel Corporation, Santa Clara, CA, USA) and an NVIDIA GeForce RTX 4070 Ti Super GPU (NVIDIA Corporation, Santa Clara, CA, USA). For preprocessing, we randomly crop the training images into 128 × 128 patches. The network is trained for 100 epochs in a single-stage setting with a batch size of 16. We utilize the Adam optimizer, starting with a learning rate of , which decays by a factor of 0.5 every 20 epochs. For the network’s hyperparameter settings, the unified feature encoder (UFE) consists of four Restormer blocks, each with eight attention heads and an embedding dimension of 64. The core feature encoder (CFE) is constructed using lite transformer (LT) blocks that are dimensionally consistent with those used in the UFE, sharing the same embedding size and number of attention heads to maintain feature transformation compatibility. The loss function parameters used in Equation (13) are empirically set to , respectively, based on a grid search on the MSRS validation set. These values balance texture enhancement, modality decoupling, and feature consistency to ensure training stability and fusion quality. For RGB visible images (e.g., Figure 9), we convert them to the YCrCb color space, perform fusion on the Y (luminance) channel using our proposed method, and then recombine the original Cr and Cb channels with the fused Y to reconstruct the final color image.
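The color-handling step can be sketched per pixel as follows. The conversion constants are the widely used 8-bit BT.601-style values (as in OpenCV's RGB/YCrCb conversion); the `fuse_y` callback is a hypothetical stand-in for the fusion network, and clipping to the valid range is omitted for brevity.

```python
def rgb_to_ycrcb(r, g, b):
    """RGB to YCrCb for 8-bit values (chroma offset 128)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cr = (r - y) * 0.713 + 128.0
    cb = (b - y) * 0.564 + 128.0
    return y, cr, cb

def ycrcb_to_rgb(y, cr, cb):
    """Approximate inverse of rgb_to_ycrcb."""
    r = y + 1.403 * (cr - 128.0)
    g = y - 0.714 * (cr - 128.0) - 0.344 * (cb - 128.0)
    b = y + 1.773 * (cb - 128.0)
    return r, g, b

def fuse_color_pixel(rgb, ir_value, fuse_y):
    """Fuse only the luminance; keep the visible image's chroma untouched.

    `fuse_y(y, ir_value)` is a placeholder for the fusion model (assumption).
    """
    y, cr, cb = rgb_to_ycrcb(*rgb)
    return ycrcb_to_rgb(fuse_y(y, ir_value), cr, cb)
```

Passing `fuse_y=lambda y, ir: y` returns the original color (up to rounding in the constants), which is a convenient sanity check that the chroma channels survive the round trip.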

4.1.3. Metrics

The comparative analysis is conducted across multiple benchmark datasets. We evaluate the performance using a set of widely recognized quantitative metrics, including entropy (EN), standard deviation (SD), average gradient (AG), spatial frequency (SF), structural similarity index (SSIM), mutual information (MI), and sum of correlation of differences (SCD). These metrics comprehensively assess the fusion outcomes in preserving information, contrast enhancement, detail retention, and structural consistency. Since the IVIF task lacks a ground truth fused image, all evaluation metrics (e.g., SSIM, MI, SCD, EN) are computed between the fused image and each input source image (IR and VIS), following widely adopted practice in prior fusion studies [36,39,53].
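Four of the no-reference metrics (EN, SD, AG, and SF) can be computed directly from a single grayscale image. The sketch below uses common textbook definitions on a 2-D list of integer gray levels; normalization conventions differ slightly between toolboxes, so absolute values may not match those reported by a particular evaluation package.

```python
import math

def entropy(img, levels=256):
    """Shannon entropy (bits) of the gray-level histogram."""
    counts = [0] * levels
    for row in img:
        for v in row:
            counts[v] += 1
    n = sum(counts)
    return -sum((c / n) * math.log2(c / n) for c in counts if c)

def std_dev(img):
    """Population standard deviation of pixel intensities."""
    vals = [v for row in img for v in row]
    mu = sum(vals) / len(vals)
    return math.sqrt(sum((v - mu) ** 2 for v in vals) / len(vals))

def average_gradient(img):
    """Mean of sqrt((dx^2 + dy^2) / 2) over forward differences."""
    h, w = len(img), len(img[0])
    total = 0.0
    for i in range(h - 1):
        for j in range(w - 1):
            dx = img[i][j + 1] - img[i][j]
            dy = img[i + 1][j] - img[i][j]
            total += math.sqrt((dx * dx + dy * dy) / 2.0)
    return total / ((h - 1) * (w - 1))

def spatial_frequency(img):
    """sqrt(RF^2 + CF^2) from row-wise and column-wise differences."""
    h, w = len(img), len(img[0])
    rf = sum((img[i][j] - img[i][j - 1]) ** 2
             for i in range(h) for j in range(1, w)) / (h * w)
    cf = sum((img[i][j] - img[i - 1][j]) ** 2
             for i in range(1, h) for j in range(w)) / (h * w)
    return math.sqrt(rf + cf)
```

A constant image scores zero on all four metrics, while a high-contrast checkerboard maximizes the gradient-based ones, which illustrates why higher EN, SD, AG, and SF are read as richer information, contrast, and texture.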

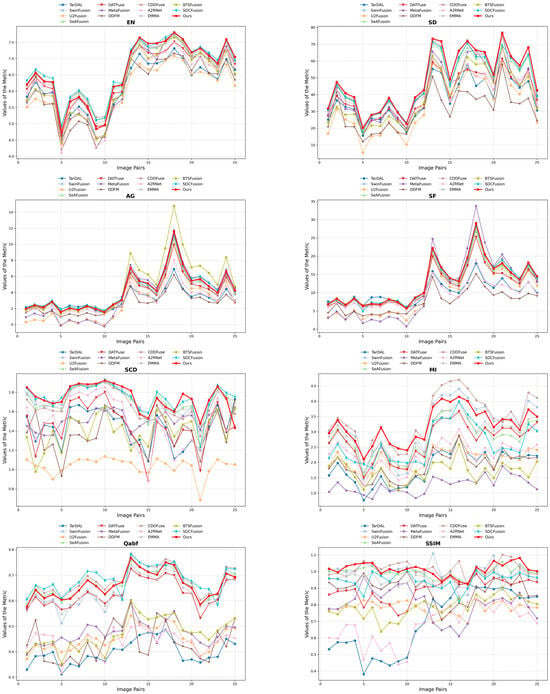

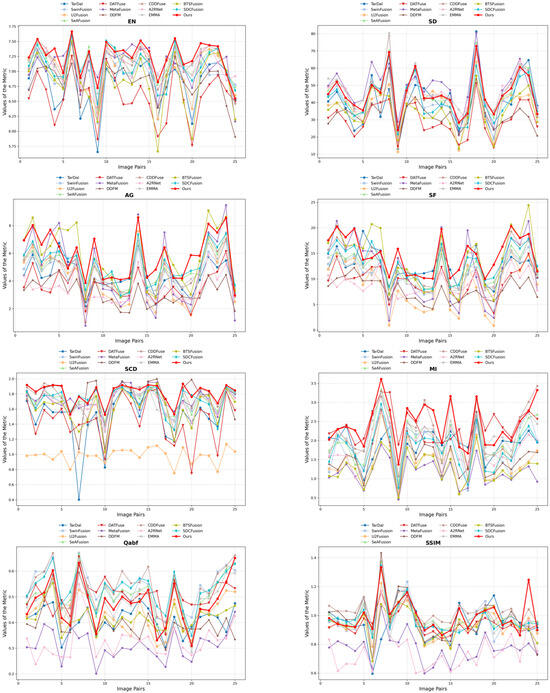

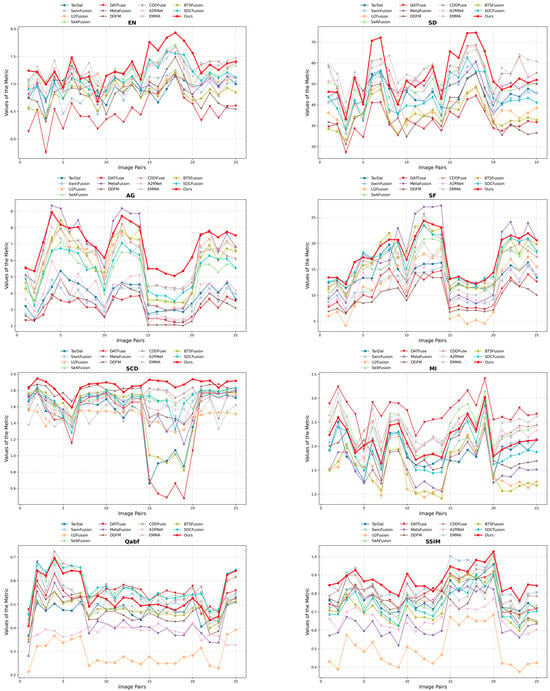

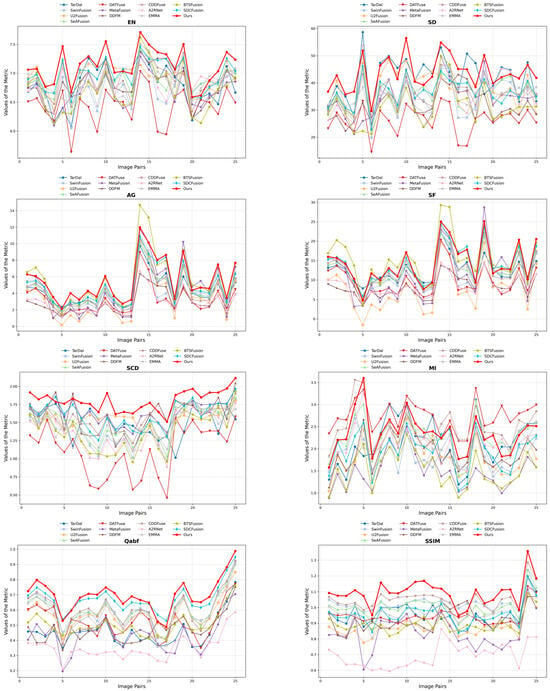

4.2. Comparison Results

To thoroughly assess the effectiveness of our proposed HDF-Net, we perform extensive comparisons with 12 state-of-the-art (SOTA) image fusion methods through qualitative and quantitative analyses. The selected baseline methods include TarDAL [44], SwinFusion [56], U2Fusion [57], SeAFusion [58], DATFuse [59], MetaFusion [60], DDFM [61], CDDFuse [53], A2RNet [62], EMMA [63], BTSFusion [64], and SDCFusion [65]. To guarantee an impartial and consistent evaluation, we utilize the publicly available implementations of these methods and apply the same experimental settings, including adversarial conditions, hyperparameters, and input preprocessing procedures. Appendix A (Figure A1, Figure A2, Figure A3 and Figure A4) shows per-pair metric performance curves for a more detailed performance trajectory across each dataset.

4.2.1. Results of the MSRS Dataset

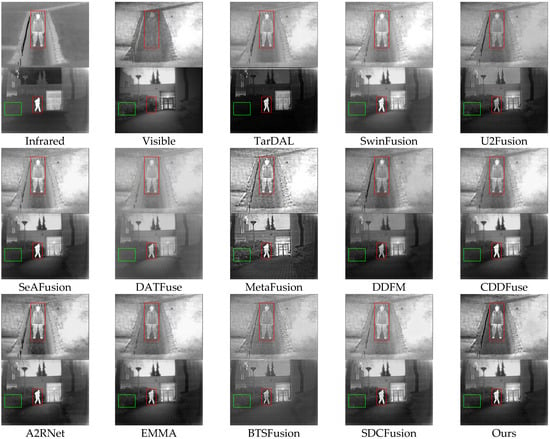

Qualitative analysis: Figure 4 shows fused results from multiple methods on the MSRS dataset. In the first scene, models such as SwinFusion, U2Fusion, DATFuse, and BTSFusion suffer from blurred edges and loss of detail in pedestrians and building contours. A2RNet improves target clarity but fails to maintain background definition. TarDAL performs poorly overall, while MetaFusion enhances infrared intensity but introduces unnatural contrast artifacts. In contrast, HDF-Net delivers sharp foreground-background separation and precise texture details, attributed to its PCT module’s expanded receptive field and the HFR module’s effective cross-scale refinement.

Figure 4. Qualitative comparison of HDF-Net with 12 state-of-the-art methods on the MSRS dataset.

Appendix B (Figure A5) presents enlarged views of challenging regions for closer inspection. HDF-Net consistently maintains structural clarity and clean textures under intense lighting and low contrast, whereas other methods show blur or oversharpening. This highlights the robustness of our frequency-aware architecture in preserving fine textures and resisting illumination-induced degradation.

Quantitative analysis: Figure A1 compares HDF-Net and twelve advanced methods on 25 image pairs from the MSRS dataset, with average results summarized in Table 1. HDF-Net performs best in EN, SD, SF, SCD, and SSIM and ranks second in AG and MI. High EN and SF scores indicate rich information content and well-preserved textures, while superior SD reflects enhanced contrast. Leading SCD and SSIM values confirm strong structural preservation and similarity to source images, illustrating the robustness and overall capability of the proposed method.

Table 1.

Quantitative results of comparative experiments on the MSRS dataset. The highest values are presented in bold to highlight the comparative performance, while the second-highest values are underlined.
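The averaging and ranking behind such a table can be sketched as follows. This is only an illustration under stated assumptions: the method names and scores are synthetic placeholders (not results from this paper), every metric is treated as higher-is-better, and the relative margin is taken with respect to the runner-up.

```python
from statistics import mean

def rank_methods(per_pair_scores: dict[str, list[float]]):
    """Average per-pair scores, then return the best and second-best methods
    and the relative margin (in percent) of the best over the second-best."""
    means = {method: mean(scores) for method, scores in per_pair_scores.items()}
    ordered = sorted(means, key=means.get, reverse=True)  # higher is better (assumed)
    best, second = ordered[0], ordered[1]
    margin_pct = 100.0 * (means[best] - means[second]) / means[second]
    return means, best, second, margin_pct

# Synthetic per-pair scores for one metric; hypothetical method names.
scores = {
    "method_a": [6.0, 7.0],
    "method_b": [5.0, 5.0],
    "method_c": [4.0, 5.0],
}
means, best, second, margin_pct = rank_methods(scores)
print(f"bold: {best}, underlined: {second}, margin: {margin_pct:.2f}%")
```

In a real evaluation each list would hold one score per test pair (25 per dataset in the per-pair curves of Appendix A), and the function would be called once per metric.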

4.2.2. Results of the TNO Dataset

Qualitative analysis: Figure 5 shows results from TNO scenes, where methods such as TarDAL, SwinFusion, U2Fusion, and BTSFusion fail to preserve structural clarity under thermal and illumination discrepancies. TarDAL and U2Fusion produce blurred pedestrian figures, while SeAFusion struggles to preserve vegetation structure. A2RNet improves pedestrian representation but underperforms on background texture. EMMA and CDDFuse offer more precise contours, but minor texture degradation remains. In contrast, HDF-Net preserves both small-scale pedestrian features and large-scale background structures with high visibility. These results reflect the strength of HDF-Net in capturing modality-specific structures. Specifically, the INN module preserves edge details without introducing artifacts, while the PCT-enhanced CFE ensures global structure consistency under complex thermal-visual discrepancies.

Figure 5.

Qualitative comparison of HDF-Net with 12 state-of-the-art methods on the TNO dataset.

Quantitative analysis: Figure A2 compares HDF-Net with the twelve competing fusion methods on the TNO dataset, with average results summarized in Table 2. HDF-Net achieves leading performance in EN, SF, SCD, and MI and ranks second in SD, AG, and SSIM. These results highlight the robust design of HDF-Net, particularly the dual-branch architecture and the HFR module, which ensure effective fusion across modalities even under varying thermal contrast and in complex scenes.
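For readers less familiar with the two source-referenced metrics named above, the sketch below shows one common way to compute SCD (sum of the correlations of differences) and MI for a fused image. The definitions follow general usage in the fusion literature and are assumptions here, as are the function names, the 256-bin histogram estimate, and the random stand-in images.

```python
import numpy as np

def pearson(a: np.ndarray, b: np.ndarray) -> float:
    """Pearson correlation between two images (0.0 if either is constant)."""
    a = a.astype(np.float64).ravel()
    b = b.astype(np.float64).ravel()
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def scd(ir: np.ndarray, vis: np.ndarray, fused: np.ndarray) -> float:
    """SCD: what remains after subtracting one source should correlate with the other."""
    f = fused.astype(np.float64)
    return pearson(f - vis, ir) + pearson(f - ir, vis)

def mutual_information(x: np.ndarray, y: np.ndarray, bins: int = 256) -> float:
    """MI (bits) estimated from the joint intensity histogram of two images."""
    joint, _, _ = np.histogram2d(x.ravel(), y.ravel(), bins=bins,
                                 range=[[0, 256], [0, 256]])
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)   # marginal of x, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)   # marginal of y, shape (1, bins)
    nz = pxy > 0                          # only occupied joint bins contribute
    return float((pxy[nz] * np.log2(pxy[nz] / (px @ py)[nz])).sum())

def fusion_mi(ir: np.ndarray, vis: np.ndarray, fused: np.ndarray) -> float:
    """Fusion MI: information the fused image shares with each source, summed."""
    return mutual_information(ir, fused) + mutual_information(vis, fused)

# Random stand-ins for a registered infrared/visible pair (synthetic data).
rng = np.random.default_rng(0)
ir = rng.integers(0, 256, size=(32, 32), dtype=np.uint8)
vis = rng.integers(0, 256, size=(32, 32), dtype=np.uint8)
fused = ((ir.astype(np.float64) + vis) / 2).astype(np.uint8)  # naive average fusion
print(scd(ir, vis, fused), fusion_mi(ir, vis, fused))
```

SCD is bounded by 2 and reaches that value when the fused image is exactly the sum of the two sources, so a higher SCD is read as the fused image retaining more complementary content from both inputs. Histogram-based MI is biased upward on small images, so values are only comparable between methods evaluated on the same pairs.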

Table 2.

Quantitative results of comparative experiments on the TNO dataset. The highest values are presented in bold to highlight the comparative performance, while the second-highest values are underlined.

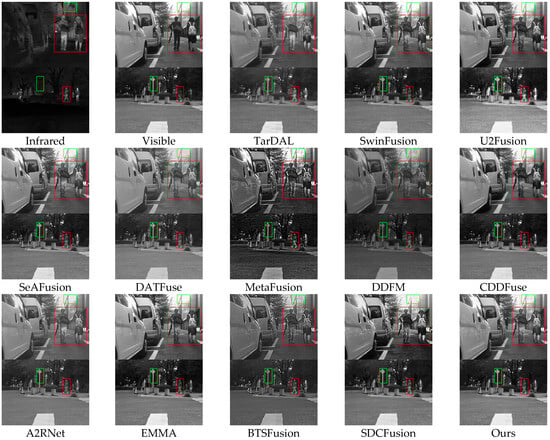

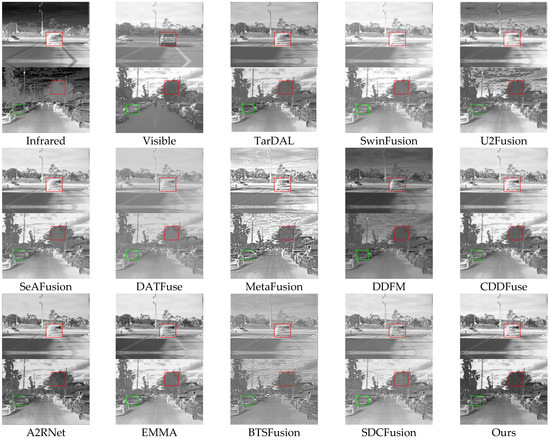

4.2.3. Results of the RoadScene Dataset

Qualitative analysis: Figure 6 illustrates representative fusion results in driving scenes. Several methods, including SwinFusion, DATFuse, DDFM, and BTSFusion, produce dark or low-contrast outputs, especially in regions containing vehicles, pedestrians, and roadside vegetation. TarDAL and U2Fusion blur critical object boundaries, while MetaFusion over-enhances the image and introduces unrealistic visual artifacts. Although CDDFuse, A2RNet, and EMMA preserve more detail, they still struggle with fine texture in dense regions. HDF-Net consistently produces natural textures, well-defined contours, and high perceptual clarity. The HFR module plays a crucial role in these complex street scenes by adaptively integrating frequency-specific features, so that vehicles, pedestrians, and vegetation retain clarity and contrast, which allows HDF-Net to deliver stable results in cluttered environments.

Figure 6.

Qualitative comparison of HDF-Net with 12 state-of-the-art methods on the RoadScene dataset.

Quantitative analysis: Figure A3 compares HDF-Net with the twelve competing methods on the RoadScene dataset, with mean results in Table 3. Evaluated using eight standard metrics, HDF-Net ranks highest in EN, SD, AG, SF, SCD, and SSIM, confirming its robustness in diverse fusion scenarios. These results underscore the benefits of the lightweight PCT module and the hierarchical fusion strategy, which together yield more precise and more semantically consistent representations in complex road scenes.

Table 3.

Quantitative results of comparative experiments on the RoadScene dataset. The highest values are presented in bold to highlight the comparative performance, while the second-highest values are underlined.

4.2.4. Results of the M3FD Dataset

Qualitative analysis: Figure 7 illustrates fused images produced by different methods across two representative scenes. TarDAL, U2Fusion, SeAFusion, BTSFusion, and A2RNet exhibit noticeable blurring, leading to indistinct pedestrian and architectural details. In Scene 1, SwinFusion, DATFuse, and DDFM suffer from inadequate brightness and contrast, so that distant targets blend into the background and structural features are obscured. MetaFusion displays excessive contrast and unnatural sharpness, particularly in building regions. While EMMA and CDDFuse effectively preserve pedestrian and vehicle details, they show slight texture loss and reduced background clarity. In contrast, HDF-Net delivers superior visual quality across all targets and environments, thanks to its efficient separation of frequency components and robust refinement across modalities.

Figure 7.

Qualitative comparison of HDF-Net with 12 state-of-the-art methods on the M3FD dataset.

Quantitative analysis: Figure A4 compares the proposed HDF-Net with twelve state-of-the-art fusion methods on the M3FD dataset, with average results presented in Table 4. Evaluated using eight standard metrics, HDF-Net outperforms the other methods, achieving top results in EN, SD, SCD, , and SSIM. These scores confirm the method’s capability to integrate complementary infrared and visible information, capturing fine details while preserving visual consistency, and demonstrate its robustness and effectiveness in complex and dynamic scenes.

Table 4.

Quantitative results of comparative experiments on the M3FD dataset. The highest values are presented in bold to highlight the comparative performance, while the second-highest values are underlined.

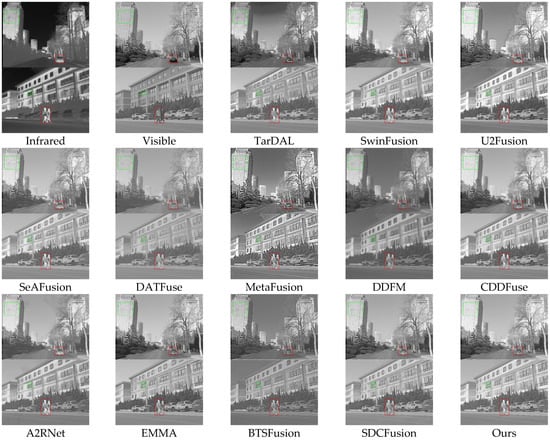

4.3. Ablation Study

To evaluate the contribution of the pinwheel-convolutional transformer (PCT) module and the hierarchical feature refinement (HFR) module to image fusion, we conduct a series of ablation studies on the MSRS dataset. We design three experiments: (i) replacing the PCT module in the core feature encoder (CFE) with the standard lite transformer (LT) to examine the contributions of multilayer attention and pinwheel convolution to low-frequency feature extraction; (ii) removing the HFR module to analyze its role in multi-scale feature refinement and cross-modality alignment; and (iii) removing both PCT and HFR to form a minimal baseline for comparison.
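The ablation settings above can be written down as a small configuration table, sketched here in Python. The class, field, and variant names are hypothetical and not taken from the authors' code; in particular, treating the minimal baseline (iii) as using the lite transformer in place of PCT is an assumption, since the text only states that both modules are removed.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AblationVariant:
    name: str
    low_freq_block: str   # "PCT" or "LT" (lite transformer) inside the CFE
    use_hfr: bool         # whether the hierarchical feature refinement module is kept

VARIANTS = [
    AblationVariant("full HDF-Net", low_freq_block="PCT", use_hfr=True),
    AblationVariant("(i) PCT replaced by LT", low_freq_block="LT", use_hfr=True),
    AblationVariant("(ii) without HFR", low_freq_block="PCT", use_hfr=False),
    # Assumption: the minimal baseline falls back to LT when PCT is removed.
    AblationVariant("(iii) without PCT and HFR", low_freq_block="LT", use_hfr=False),
]

def changed_components(variant: AblationVariant) -> list[str]:
    """List which components differ from the full model for a given variant."""
    changes = []
    if variant.low_freq_block != "PCT":
        changes.append("PCT")
    if not variant.use_hfr:
        changes.append("HFR")
    return changes

for v in VARIANTS:
    print(f"{v.name}: changed = {changed_components(v) or 'none'}")
```

Keeping all other settings identical across the four variants is what allows metric differences to be attributed to the changed component.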

As shown in Table 5, the full HDF-Net achieves top performance across all evaluation metrics, demonstrating the benefit of the proposed modules. Notably, removing the PCT module degrades detail representation and contrast, highlighting its effectiveness in enhancing semantic low-frequency features. Omitting the HFR module causes significant drops in the structural similarity metrics (SCD and SSIM), indicating its contribution to preserving spatial coherence and enhancing global-local feature interactions. Removing both modules yields the worst performance, underscoring their complementary benefits.

Table 5.

Ablation study results on the MSRS test set.

Figure 8 illustrates visual comparisons under different ablation settings. Incorporating the HFR module enhances the preservation of delicate structures, resulting in more precise textures of trees and sidewalk edges and improving global-local consistency. The PCT module notably enhances image contrast and dynamic range, making thermal targets (e.g., pedestrians) more distinguishable and seamlessly integrated with the background under complex illumination conditions. By combining HFR and PCT, the complete HDF-Net achieves the best balance between thermal target enhancement and visible detail preservation, demonstrating robustness and effectiveness in real-world fusion scenarios.

Figure 8.

Visual comparison of ablation study results for each module on the MSRS dataset.

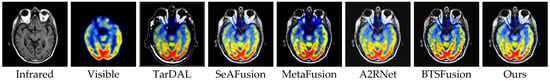

4.4. Medical Image Fusion

We further evaluated medical image fusion (MIF) on 100 pairs of PET-CT and MRI-PET images. All models were trained on the IVIF task and applied directly to the MIF task. The evaluation metrics are the same as those used in the IVIF setting, consistent with state-of-the-art methods.

As shown in Figure 9, our method achieves visually and quantitatively superior performance. In particular, it demonstrates an enhanced capacity to preserve anatomical structures from MRI and metabolic details from PET, yielding fused images with more explicit tissue boundaries and better representation of functional intensity. Compared with SOTA methods such as SeAFusion, MetaFusion, and BTSFusion, our approach integrates cross-modal features more effectively, achieving a more balanced and informative fusion result across different MIF modalities.

Figure 9.

Visual comparison with 5 state-of-the-art methods for the MIF task.

4.5. Discussion

This section has demonstrated the superior performance of HDF-Net across multiple standard benchmarks, including MSRS, TNO, RoadScene, and M3FD. The proposed network consistently achieves leading results in key objective metrics such as EN, SD, AG, SF, SCD, and SSIM, validating its effectiveness in preserving structural details, enhancing salient targets, and maintaining global contrast under diverse imaging conditions.

While HDF-Net exhibits overall robustness, certain limitations persist. Fine-grained texture preservation can remain suboptimal in scenarios involving extremely low-contrast illumination or highly saturated infrared signals (e.g., intense thermal reflections at night). These observations provide meaningful guidance for the future extension and deployment of HDF-Net in broader fusion scenarios.

5. Conclusions

This paper presents HDF-Net, a hierarchical dual-branch network designed explicitly for fusing infrared and visible images. HDF-Net addresses the longstanding trade-off between structural integrity and texture detail preservation in fusion tasks by explicitly modeling modality-shared core and modality-specific refined features through a dedicated dual-branch encoder. To further enhance global contextual representation, particularly in low-contrast regions, we propose the pinwheel-convolutional transformer (PCT) module, which integrates multi-head attention with directional pinwheel convolution to expand the receptive field significantly. In addition, the hierarchical feature refinement (HFR) module leverages Mercer kernel mapping and dilated convolutions to construct a robust multi-scale attention mechanism, enabling more adaptive and discriminative cross-modal feature integration.

Comprehensive experiments on four publicly accessible IVIF datasets show that HDF-Net achieves leading results on most of the eight quantitative evaluation metrics against 12 state-of-the-art fusion approaches, yielding superior information preservation and structural fidelity. Furthermore, we extend HDF-Net to medical image fusion tasks such as MRI-PET, validating its strong generalization capabilities and practical utility beyond conventional fusion scenarios. In future research, we aim to explore the deployment of HDF-Net in broader fusion contexts, including multi-exposure image fusion and night vision enhancement, as well as its potential to improve performance in downstream vision tasks such as object recognition, instance segmentation, and scene comprehension.

Author Contributions

Conceptualization, Y.Z. (Yanghang Zhu); methodology, Y.Z. (Yanghang Zhu); software, Y.Z. (Yanghang Zhu); validation, Y.Z. (Yanghang Zhu), Y.Z. (Yaohua Zhu) and M.H.; resources, M.H.; data curation, J.J.; writing—original draft preparation, Y.Z. (Yanghang Zhu); writing—review and editing, Y.Z. (Yong Zhang). All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The authors confirm that the data supporting this study’s findings are available within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

This appendix provides the complete per-image metric comparisons referenced in Section 4 of the main manuscript. For each of the four benchmark datasets—MSRS, TNO, RoadScene, and M3FD—we include detailed performance curves for the proposed method (HDF-Net) and 12 representative fusion baselines.

Figure A1.

Quantitative comparison of HDF-Net with 12 state-of-the-art methods on 25 pairs of images in the MSRS dataset.

Figure A2.

Quantitative comparison of HDF-Net with 12 state-of-the-art methods on 25 pairs of images in the TNO dataset.

Figure A3.

Quantitative comparison of HDF-Net with 12 state-of-the-art methods on 25 pairs of images in the RoadScene dataset.

Figure A4.

Quantitative comparison of HDF-Net with 12 state-of-the-art methods on 25 pairs of images in the M3FD dataset.

Appendix B

Figure A5 presents enlarged regions from representative MSRS scenes to supplement the main results. These examples highlight challenging conditions such as intense illumination and low-contrast structures. Compared with other methods, HDF-Net preserves clearer textures and edges without introducing artifacts, confirming its advantage in handling fine-grained details under complex visual conditions.

Figure A5.

Qualitative close-up comparisons on a challenging scene from the MSRS dataset.

References

- Ma, W.; Wang, K.; Li, J.; Yang, S.X.; Li, J.; Song, L.; Li, Q. Infrared and Visible Image Fusion Technology and Application: A Review. Sensors 2023, 23, 599.

- Neama, R.M.; Al-asadi, T.A. The Deep Learning Methods for Fusion Infrared and Visible Images: A Survey. Rev. D’intelligence Artif. 2024, 38, 575–585.

- Zhang, X.; Demiris, Y. Visible and Infrared Image Fusion Using Deep Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 10535–10554.

- Wu, Z.; Liu, W.; Wang, X. A Fusion Algorithm for Infrared and Visible Images Based on the Dual-Branch of CNN and Transformer. Proc. SPIE 2023, 12916, 129160S.

- Yang, X.; Huo, H.; Wang, R.; Li, C.; Liu, X.; Li, J. DGLT-Fusion: A Decoupled Global–Local Infrared and Visible Image Fusion Transformer. Infrared Phys. Technol. 2022, 125, 104522.

- Rao, D.; Wu, X.; Xu, T. TGFuse: An Infrared and Visible Image Fusion Approach Based on Transformer and Generative Adversarial Network. arXiv 2022, arXiv:2201.10147.

- Chen, J.; Ding, J.; Yu, Y.; Gong, W. THFuse: An Infrared and Visible Image Fusion Network Using Transformer and Hybrid Feature Extractor. Neurocomputing 2023, 527, 71–82.

- Vinoth, K.; Sasikumar, P. Multi-Sensor Fusion and Segmentation for Autonomous Vehicle Multi-Object Tracking Using Deep Q Networks. Sci. Rep. 2024, 14, 31130.

- Xu, D.; Wang, Y.; Xu, S.; Zhu, K.; Zhang, N.; Zhang, X. Infrared and Visible Image Fusion with a Generative Adversarial Network and a Residual Network. Appl. Sci. 2020, 10, 554.

- Zhang, C.; Li, X.; Xu, Y.; Wang, J.; Chen, R. CTDPGAN: Infrared and Visible Image Fusion Using CNN-Transformer Dual-Process-Based Generative Adversarial Network. Proc. Chin. Control. Conf. 2023, 42, 8044–8051.

- Li, L.; Shi, Y.; Lv, M.; Jia, Z.; Liu, M.; Zhao, X.; Zhang, X.; Ma, H. Infrared and Visible Image Fusion via Sparse Representation and Guided Filtering in Laplacian Pyramid Domain. Remote Sens. 2024, 16, 3804.

- Du, K.; Fang, L.; Chen, J.; Chen, D.; Lai, H. CTFusion: CNN-Transformer-Based Self-Supervised Learning for Infrared and Visible Image Fusion. Math. Biosci. Eng. 2024, 21, 6710–6730.

- Li, H.; Wu, X.J.; Kittler, J. RFN-Nest: An end-to-end residual fusion network for infrared and visible images. Inf. Fusion 2021, 73, 72–86.

- Jiang, Z.; Chen, X.; Zan, G.; Yang, M. FCMDNet: A Feature Correlation-Based Multi-Scale Dual-Branch Network for Infrared and Visible Image Fusion. Proc. Int. Conf. Robot. Comput. Vis. 2024, 6, 139–143.

- Yin, H.; Xiao, J.; Chen, H. CSPA-GAN: A Cross-Scale Pyramid Attention GAN for Infrared and Visible Image Fusion. IEEE Trans. Instrum. Meas. 2023, 72, 5027011.

- Lu, G.; Fang, Z.; Tian, J.; Huang, H.; Xu, Y.; Han, Z.; Kang, Y.; Feng, C.; Zhao, Z. GAN-HA: A Generative Adversarial Network with a Novel Heterogeneous Dual-Discriminator Network and a New Attention-Based Fusion Strategy for Infrared and Visible Image Fusion. Infrared Phys. Technol. 2024, 132, 105548.

- Yang, X.; Huo, H.; Li, C.; Liu, X.; Wang, W.; Wang, C. Semantic Perceptive Infrared and Visible Image Fusion Transformer. Pattern Recognit. 2024, 149, 110223.

- Gao, X.; Liu, S. BCMFIFuse: A Bilateral Cross-Modal Feature Interaction-Based Network for Infrared and Visible Image Fusion. Remote Sens. 2024, 16, 3136.

- Li, X.; He, H.; Shi, J. HDCCT: Hybrid Densely Connected CNN and Transformer for Infrared and Visible Image Fusion. Electronics 2024, 13, 3470.

- Luo, Y.; Luo, Z. Infrared and Visible Image Fusion: Methods, Datasets, Applications, and Prospects. Appl. Sci. 2023, 13, 10891.

- Xing, M.; Liu, G.; Tang, H.; Qian, Y.; Zhang, J. CFNet: An Infrared and Visible Image Compression Fusion Network. Pattern Recognit. 2024, 156, 110774.

- Park, S.; Vien, A.G.; Lee, C. Cross-Modal Transformers for Infrared and Visible Image Fusion. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 770–785.

- Li, J.; Liu, L.; Song, H.; Huang, Y.; Jiang, J.; Yang, J. DCTNet: A Heterogeneous Dual-Branch Multi-Cascade Network for Infrared and Visible Image Fusion. IEEE Trans. Instrum. Meas. 2023, 72, 5030914.

- Xu, X.; Shen, Y.; Han, S. Dense-FG: A Fusion GAN Model by Using Densely Connected Blocks to Fuse Infrared and Visible Images. Appl. Sci. 2023, 13, 4684.

- Fu, Y.; Wu, X.J. A Dual-Branch Network for Infrared and Visible Image Fusion. In Proceedings of the 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; IEEE: New York, NY, USA, 2021; pp. 10675–10680.

- Yang, J.; Liu, S.; Wu, J.; Su, X.; Hai, N.; Huang, X. Pinwheel-shaped Convolution and Scale-based Dynamic Loss for Infrared Small Target Detection. arXiv 2024, arXiv:2412.16986.

- Dinh, L.; Sohl-Dickstein, J.; Bengio, S. Density Estimation Using Real NVP. arXiv 2016, arXiv:1605.08803.

- Tang, L.; Yuan, J.; Zhang, H.; Jiang, X.; Ma, J. PIAFusion: A Progressive Infrared and Visible Image Fusion Network Based on Illumination Aware. Inf. Fusion 2022, 83–84, 79–92.

- Toet, A. The TNO Multiband Image Data Collection. Data Brief 2017, 15, 249–251.

- Xu, H.; Ma, J.; Le, Z.; Jiang, J.; Guo, X. Fusiondn: A Unified Densely Connected Network for Image Fusion. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12484–12491.

- Liu, J.; Fan, X.; Huang, Z.; Wu, G.; Liu, R.; Zhong, W.; Luo, Z. Target-aware Dual Adversarial Learning and a Multi-Scenario Multi-Modality Benchmark to Fuse Infrared and Visible for Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5802–5811.

- Sun, C.; Zhang, C.; Xiong, N. Infrared and Visible Image Fusion Techniques Based on Deep Learning: A Review. Electronics 2020, 9, 2162.

- Patel, A.; Chaudhary, J. A Review on Infrared and Visible Image Fusion Techniques. In Intelligent Communication Technologies and Virtual Mobile Networks: ICICV 2019; Springer: Cham, Switzerland, 2020; pp. 127–144.

- Duan, J.; Xiong, J.; Li, Y.; Ding, W. Deep Learning Based Multimodal Biomedical Data Fusion: An Overview and Comparative Review. Inf. Fusion 2024, 112, 102536.

- Li, H.; Wu, X.J.; Kittler, J. DenseFuse: A Fusion Approach to Infrared and Visible Images. IEEE Trans. Image Process. 2019, 28, 4796–4810.

- Zhang, Y.; Liu, Y.; Sun, P.; Yan, H.; Zhao, X.; Zhang, L. IFCNN: A General Image Fusion Framework Based on Convolutional Neural Networks. Inf. Fusion 2020, 54, 99–118.

- Zhang, H.; Xu, H.; Xiao, Y.; Guo, X.; Ma, J. Rethinking the Image Fusion: A Fast Unified Image Fusion Network Based on Proportional Maintenance of Gradient and Intensity. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12797–12804.

- Tang, L.; Deng, Y.; Ma, Y.; Huang, J.; Ma, J. SuperFusion: A Versatile Image Registration and Fusion Network with Semantic Awareness. IEEE/CAA J. Autom. Sin. 2022, 9, 2121–2137.

- Xu, J.; He, X. DAF-Net: A Dual-Branch Feature Decomposition Fusion Network with Domain Adaptive for Infrared and Visible Image Fusion. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39.

- Zhang, X. Deep Learning-Based Multi-Focus Image Fusion: A Survey and a Comparative Study. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 4819–4838.

- Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A Generative Adversarial Network for Infrared and Visible Image Fusion. Inf. Fusion 2019, 48, 11–26.

- Ma, J.; Zhang, H.; Shao, Z.; Liang, P.; Xu, H. GANMcC: A Generative Adversarial Network with Multiclassification Constraints for Infrared and Visible Image Fusion. IEEE Trans. Instrum. Meas. 2021, 70, 5005014.

- Rao, Y.; Liu, J.; Zhang, L. AttentionFGAN: Multi-Scale Attention Mechanism for Infrared and Visible Image Fusion. IEEE Trans. Multimed. 2024, 23, 1383–1396.

- Yang, B.; Zhang, H.; Zhang, Z. TarDAL: Target-Aware Dual Adversarial Learning Network for Image Fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023.

- Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A Survey on Visual Transformer. arXiv 2020, arXiv:2012.12556.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All You Need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2021.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

- Ma, J.; Ma, Y.; Li, C. Infrared and Visible Image Fusion Methods and Applications: A Survey. Inf. Fusion 2019, 45, 153–178.

- Ali, A.M.; Benjdira, B.; Koubaa, A.; El-Shafai, W.; Khan, Z.; Boulila, W. Vision Transformers in Image Restoration: A Survey. Sensors 2023, 23, 2385.

- Wang, Z.; Chen, Y.; Shao, W.; Li, H.; Zhang, L. SwinFuse: A Residual Swin Transformer Fusion Network for Infrared and Visible Images. IEEE Trans. Instrum. Meas. 2022, 71, 5016412.

- Liu, J.; Lin, R.; Wu, G.; Liu, R.; Luo, Z.; Fan, X. CoCoNet: Coupled Contrastive Learning Network with Multi-Level Feature Ensemble for Multi-Modality Image Fusion. Int. J. Comput. Vis. 2024, 132, 1748–1775.

- Zhao, Z.; Bai, H.; Zhang, J.; Zhang, Y.; Xu, S.; Lin, Z.; Timofte, R.; Van Gool, L. CDDFuse: Correlation-Driven Dual-Branch Feature Decomposition for Multi-Modality Image Fusion. arXiv 2022, arXiv:2211.14461.

- Xu, S.; Zhou, C.; Xiao, J.; Tao, W.; Dai, T. PDFusion: A Dual-Branch Infrared and Visible Image Fusion Network Using Progressive Feature Transfer. J. Vis. Commun. Image Represent. 2024, 92, 104190.

- Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.-H. Restormer: Efficient Transformer for High-Resolution Image Restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 5728–5739.

- Ma, J.; Tang, L.; Fan, F.; Huang, J.; Mei, X.; Ma, Y. SwinFusion: Cross-domain Long-range Learning for General Image Fusion via Swin Transformer. IEEE/CAA J. Autom. Sin. 2022, 9, 1200–1217.

- Xu, H.; Ma, Y.; Guo, X.; Huang, J.; Fan, F.; Zhang, J.; Xu, Y. U2Fusion: A Unified Unsupervised Image Fusion Network. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 502–518.

- Tang, L.; Yuan, J.; Ma, J. Image Fusion in the Loop of High-Level Vision Tasks: A Semantic-Aware Real-Time Infrared and Visible Image Fusion Network. Inf. Fusion 2022, 82, 28–42.

- Tang, W.; He, F.; Liu, Y.; Duan, Y.; Si, T. DATFuse: Infrared and Visible Image Fusion via Dual Attention Transformer. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 3159–3172.

- Zhao, W.; Xie, S.; Zhao, F.; He, Y.; Lu, H. Metafusion: Infrared and Visible Image Fusion via Meta-Feature Embedding from Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 13955–13965.

- Zhao, Z.; Bai, H.; Zhu, Y.; Zhang, J.; Xu, S.; Zhang, Y.; Zhang, K.; Meng, D.; Timofte, R.; Van Gool, L. DDFM: Denoising Diffusion Model for Multi-Modality Image Fusion. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1 October 2023; IEEE: New York, NY, USA, 2023; pp. 8048–8059.

- Li, J.; Yu, H.; Chen, J.; Ding, X.; Wang, J.; Liu, J.; Ma, H. A2RNet: Adversarial Attack Resilient Network for Robust Infrared and Visible Image Fusion. arXiv 2024, arXiv:2412.09954.

- Zhao, Z.; Bai, H.; Zhang, J.; Zhang, Y.; Zhang, K.; Xu, S.; Chen, D.; Timofte, R.; Van Gool, L. Equivariant Multi-Modality Image Fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–20 June 2024; pp. 25912–25921.

- Qian, Y.; Liu, G.; Tang, H.; Xing, M.; Chang, R. BTSFusion: Fusion of Infrared and Visible Image via a Mechanism of Balancing Texture and Salience. Opt. Lasers Eng. 2024, 173, 107925.

- Liu, X.; Huo, H.; Li, J.; Pang, S.; Zheng, B. A Semantic-driven Coupled Network for Infrared and Visible Image Fusion. Inf. Fusion 2024, 108, 102352.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).