Enhancing Robustness in UDC Image Restoration Through Adversarial Purification and Fine-Tuning

Abstract

1. Introduction

2. Related Work

2.1. UDC Image Restoration

2.2. General Adversarial Attacks and Defenses

2.3. Adversarially Robust Image Restoration

3. Adversarial Attacks on UDC IR

3.1. White-Box Attacks

3.2. Black-Box Attacks

4. Adversarial Purification and Fine-Tuning

4.1. Adversarial Purification

4.2. Model Fine-Tuning

5. Experiments

5.1. Implementation Details

- Dataset. Following [3,8], we synthesize the dataset using nine existing point spread functions (PSFs) of the ZTE Axon 20 phone (ZTE, Shenzhen, China) and one real-scene PSF [56]. From 2016 training images and 360 testing images collected from the HDRI Haven dataset [57], we generate a total of 21,060 training image pairs and 3600 testing image pairs.
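The PSF-based synthesis can be pictured with the NumPy sketch below. It is an illustration under assumptions, not the exact pipeline of [3,8]: it assumes the common UDC image-formation model in which the sharp HDR image is convolved channel by channel with the PSF, perturbed by Gaussian noise, and clipped to [0, 1] to mimic sensor saturation. The helper names `convolve_same` and `synthesize_udc_image`, the Gaussian noise model, and `noise_std` are hypothetical.

```python
import numpy as np


def convolve_same(channel: np.ndarray, psf: np.ndarray) -> np.ndarray:
    """Linear 2-D convolution via FFT, cropped to the input size ("same" mode)."""
    h, w = channel.shape
    kh, kw = psf.shape
    # Zero-pad to the full linear-convolution size so the FFT product does not wrap around.
    full_shape = (h + kh - 1, w + kw - 1)
    spectrum = np.fft.rfft2(channel, s=full_shape) * np.fft.rfft2(psf, s=full_shape)
    full = np.fft.irfft2(spectrum, s=full_shape)
    # Keep the central h x w window, aligned with the PSF center.
    top, left = (kh - 1) // 2, (kw - 1) // 2
    return full[top:top + h, left:left + w]


def synthesize_udc_image(hdr: np.ndarray, psf: np.ndarray,
                         noise_std: float = 0.01, rng=None) -> np.ndarray:
    """Degrade an H x W x 3 HDR image with a kh x kw x 3 PSF (hypothetical helper).

    Assumed model: y = clip(x * k + n, 0, 1), applied per color channel.
    """
    rng = np.random.default_rng() if rng is None else rng
    # Normalize each PSF channel to sum to one so overall brightness is preserved.
    psf = psf / psf.sum(axis=(0, 1), keepdims=True)
    blurred = np.stack(
        [convolve_same(hdr[..., c], psf[..., c]) for c in range(hdr.shape[-1])],
        axis=-1,
    )
    noisy = blurred + rng.normal(0.0, noise_std, size=blurred.shape)
    # Clipping to [0, 1] imitates sensor saturation of the HDR scene.
    return np.clip(noisy, 0.0, 1.0)
```

Pairing each source image with each PSF in this way gives one degraded input per (image, PSF) combination; the pipelines in [3,8] may include further steps, such as tone mapping, that are not shown here.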

- Experimental details. We adopt a supervised loss that combines an L2 reconstruction loss with a perceptual loss computed on VGG-19 features (relu3_3 and relu4_2). For models involving adversarial training, the adversarial loss is also incorporated. The learning rate is initialized at and decayed using cosine annealing to . Fine-tuning is conducted with a batch size of 16. The fine-tuning data are derived from the same synthetic dataset, using images purified by the DDPM process with varying numbers of diffusion steps (e.g., 50–100) to improve data diversity. Data augmentation includes random horizontal flips, 256 × 256 cropping, and light color jittering. For white-box attacks, PGD uses 20 iterations with step sizes of 1/256, 2/256, 4/256, and 8/256 for the different difficulty levels, while C&W uses its L2 version with 9 binary search steps and zero confidence. For black-box attacks, SimBA runs for 1000 iterations with a step size of 4/256. All experiments are performed on a server equipped with 8 NVIDIA RTX 3090 GPUs (Nvidia, Santa Clara, CA, USA) and 2 Intel Xeon Silver 4314 CPUs (Intel, Santa Clara, CA, USA). Runtimes are measured with standard Python 3.8 timing tools and reported as the average inference time over all test images.
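The cosine-annealing decay mentioned above is usually the SGDR-style schedule without restarts, lr(t) = lr_min + ½(lr_max − lr_min)(1 + cos(πt/T)). The sketch below assumes the rate is updated once per step over `total_steps` updates; `lr_max` and `lr_min` are left as parameters because their values are not given here, and the numbers in the example schedule are illustrative only.

```python
import math


def cosine_annealing_lr(step: int, total_steps: int, lr_max: float, lr_min: float) -> float:
    """Learning rate at `step` for cosine annealing from lr_max to lr_min (no restarts)."""
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    # Clamp progress to [0, 1] so the rate stays at lr_min after the last step.
    progress = min(max(step, 0), total_steps) / total_steps
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))


# Illustrative values only; these are not the settings used in this work.
schedule = [cosine_annealing_lr(s, 100, lr_max=1e-3, lr_min=1e-6) for s in range(101)]
```

In PyTorch, the same schedule is available as `torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max, eta_min)`, where `T_max` plays the role of `total_steps` and `eta_min` that of `lr_min`.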

- UDC IR methods. We select six state-of-the-art UDCIR (Under-Display Camera image restoration) methods for comparison: DAGF (Deep Atrous Guided Filter) [27], DISCNet (Dynamic Skip Connection Network) [3], UDCUNet (Under-Display Camera Image Restoration via U-shape Dynamic Network) [5], BNUDC (A Two-Branched Deep Neural Network for Restoring Images from Under-Display Cameras) [4], SRUDC (Under-Display Camera Image Restoration with Scattering Effect) [8], and DWFormer (Dynamic Window Transformer) [7]. For a more comprehensive evaluation, we also include a general image restoration method, UFormer (U-Shaped Transformer) [58]. All methods are retrained on our training dataset with the same settings to ensure a fair comparison. We adopt PSNR (Peak Signal-to-Noise Ratio) [59] and SSIM (Structural Similarity Index Measure) [52] as the evaluation metrics.
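PSNR, the metric reported in Tables 1, 3, and 5, is 10·log10(MAX²/MSE) in decibels. The NumPy sketch below assumes images scaled to [0, 1] (so `data_range` = 1; use 255 for 8-bit images). Whether the reported numbers are computed on RGB or on a luminance channel is not stated here, so the function simply averages the squared error over all array elements.

```python
import numpy as np


def psnr(restored: np.ndarray, reference: np.ndarray, data_range: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range**2 / MSE)."""
    restored = np.asarray(restored, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    if restored.shape != reference.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((restored - reference) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))
```

Because PSNR is logarithmic in the MSE, a drop of about 10 units (as seen for some methods under PGD) corresponds to roughly a tenfold increase in mean squared error.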

5.2. Robustness Evaluation on Attack Methods Comparison

- From the aspect of PSNR. Table 1 compares the PSNR of the deep UDCIR methods on clean images and under different attack methods. All methods suffer a significant performance drop under adversarial attack. DISCNet performs best on clean images, with a PSNR of 35.237. UDCUNet and DAGF also perform well, with PSNRs of 27.427 and 24.972, respectively. DWFormer and UFormer perform relatively worse. For DWFormer, this may be because it was originally designed for the TOLED and POLED datasets [1] and has a larger number of parameters; for UFormer, the limited size of the training set relative to its large model size may prevent it from reaching its full potential.

1. Single attack method on different restoration methods. PGD generally degrades the performance of all models. DAGF suffers the largest decline, dropping from 24.972 to 13.772, followed by BNUDC, which drops from 24.911 to 14.565. DISCNet and UDCUNet, by contrast, retain better restoration performance, indicating greater robustness to this attack. Under C&W, most models decline significantly, particularly DISCNet and UDCUNet, whereas UFormer remains notably robust. SimBA has a relatively minor impact on the UDCIR methods and exposes no significant vulnerabilities; DISCNet and BNUDC hold a slight advantage in maintaining stable performance. Square Attack affects the models quite differently: UFormer and DISCNet cope with it better, while DWFormer is particularly sensitive to it and shows a considerable decline in performance.

2. Single restoration method against different attack methods. DISCNet performs strongly overall, excelling on clean images and remaining effective under most attack types. However, it is notably sensitive to the C&W attack, which points to a weakness against this specific attack. UDCUNet is robust across attack scenarios, with balanced performance under the various adversarial conditions, but it is also sensitive to C&W, under which its performance drops noticeably. BNUDC stands out under SimBA, where it performs best. However, its performance drops significantly under the other attack types, most notably C&W, suggesting that it needs further optimization or protective measures. DWFormer is most vulnerable to Square Attack, under which its performance is weakest; its performance under the other attacks and on clean images is average, without standout results compared with its counterparts. UFormer is remarkably resilient under C&W attacks and performs consistently across the attack types without a specific weakness.
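Unlike PGD and C&W, SimBA [21] needs only forward queries: it perturbs one randomly chosen basis direction at a time and keeps the step only if the loss increases. The NumPy sketch below is a minimal adaptation to restoration under stated assumptions: it uses the pixel (Cartesian) basis, assumes the attacker maximizes the MSE between the model output and a `reference` image (for example, the model's output on the clean input), and treats `model` as any black-box callable. The function name `simba_pixel` is hypothetical; the defaults of 1000 iterations and a step of 4/256 follow Section 5.1.

```python
import numpy as np


def simba_pixel(model, x, reference, eps=4 / 256, max_iters=1000, rng=None):
    """Untargeted SimBA (pixel basis) adapted to image restoration.

    Assumption: the attacker maximizes mean((model(x_adv) - reference) ** 2)
    using only forward queries to `model`.
    """
    rng = np.random.default_rng() if rng is None else rng
    x_adv = x.copy()
    best_loss = np.mean((model(x_adv) - reference) ** 2)
    queries = 1
    # Coordinates are sampled without replacement, so each pixel changes at most
    # once and the perturbation stays inside an L_inf ball of radius eps.
    coords = rng.permutation(x.size)[:max_iters]
    for idx in coords:
        for sign in (1.0, -1.0):
            candidate = x_adv.copy()
            flat = candidate.reshape(-1)  # view into the contiguous copy
            flat[idx] = np.clip(flat[idx] + sign * eps, 0.0, 1.0)
            loss = np.mean((model(candidate) - reference) ** 2)
            queries += 1
            if loss > best_loss:  # keep the step only if it increases the loss
                x_adv, best_loss = candidate, loss
                break  # skip the opposite sign once a step is accepted
    return x_adv, best_loss, queries
```

Each iteration costs at most two model queries, which is why SimBA is practical when gradients are unavailable but can be slow on full-resolution images.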

- From the aspect of SSIM. Table 2 compares the SSIM of these methods under different attack methods. Overall, DISCNet is the most effective method: it leads on clean images with an SSIM of 0.960 and keeps the top position with an average SSIM of under the four attack methods. This indicates that DISCNet is best suited to unattacked clean images and also shows the strongest resilience and best overall performance under attack. To gain a more detailed understanding of the robustness of the UDCIR methods against different attacks, we analyze this table from two perspectives:

1. Single attack method on different restoration methods. Each method has its own strengths against specific attacks. For instance, DISCNet shows the best resistance against PGD and Square Attack, whereas BNUDC and SRUDC perform better under SimBA. UFormer, on the other hand, performs relatively well against the C&W attack.

2. Single restoration method against different attack methods. Under the PGD attack, DISCNet is the most resilient, achieving the highest SSIM of . Conversely, UDCUNet and UFormer are more vulnerable, with SSIMs of and , respectively, suggesting greater sensitivity to PGD. Under the C&W attack, the ranking shifts notably: UFormer is the most resistant, with an SSIM of 0.441, while SRUDC is highly vulnerable, scoring only , which reveals its weak defense against C&W. Under SimBA, BNUDC and SRUDC show commendable resilience, scoring and , respectively. These scores reflect their robustness in the black-box setting, where the attacker can only query the model's outputs and has no access to its gradients. In contrast, DISCNet and DAGF perform comparatively worse under SimBA. Under Square Attack, DISCNet again stands out, with an SSIM of , underscoring its resistance to this query-efficient attack, whereas DWFormer shows a clear lack of resistance with a score of .
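SSIM compares local luminance, contrast, and structure between two images. The NumPy sketch below follows the Gaussian-window formulation of Wang et al. (11 × 11 window, σ = 1.5, K1 = 0.01, K2 = 0.03) and averages the SSIM map over the valid (unpadded) region. It assumes single-channel images in [0, 1]; how color images are handled in this work is not stated, so the per-channel average in the hypothetical helper `ssim_rgb` is an assumption.

```python
import numpy as np


def gaussian_window(size: int = 11, sigma: float = 1.5) -> np.ndarray:
    """Normalized 1-D Gaussian; the 2-D window is its outer product (separable)."""
    coords = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(coords ** 2) / (2.0 * sigma ** 2))
    return g / g.sum()


def filter_valid(img: np.ndarray, g: np.ndarray) -> np.ndarray:
    """Separable 2-D filtering without padding ('valid' region only)."""
    rows = np.apply_along_axis(lambda r: np.convolve(r, g, mode="valid"), 1, img)
    return np.apply_along_axis(lambda c: np.convolve(c, g, mode="valid"), 0, rows)


def ssim(x: np.ndarray, y: np.ndarray, data_range: float = 1.0,
         k1: float = 0.01, k2: float = 0.03) -> float:
    """Mean SSIM of two single-channel images (each side >= 11 pixels)."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    g = gaussian_window()
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    mu_x, mu_y = filter_valid(x, g), filter_valid(y, g)
    # Local (co)variances via E[xy] - E[x]E[y] under the Gaussian window.
    var_x = filter_valid(x * x, g) - mu_x ** 2
    var_y = filter_valid(y * y, g) - mu_y ** 2
    cov_xy = filter_valid(x * y, g) - mu_x * mu_y
    ssim_map = ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )
    return float(ssim_map.mean())


def ssim_rgb(x: np.ndarray, y: np.ndarray, data_range: float = 1.0) -> float:
    """Assumption: average of per-channel SSIM for H x W x 3 images."""
    return float(np.mean([ssim(x[..., c], y[..., c], data_range) for c in range(x.shape[-1])]))
```

Because SSIM is bounded above by 1 and is driven mainly by local structure, a value such as 0.441 under C&W indicates that much of the image structure is lost even for the most resistant model.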

5.3. Robustness Evaluation on Attack Objectives Comparison

- Comparison of different attack objectives in PGD in terms of PSNR. Table 3 shows the PSNR of the deep UDCIR methods under different attack objectives of the PGD attack. The data reveal that the MSE objective tends to cause more pronounced degradation than the LPIPS objective. For DISCNet, SRUDC, DWFormer, DAGF, and UFormer, the two objectives yield only marginally different results, with variations of approximately . BNUDC, however, behaves quite differently: its PSNR drops sharply from 24.911 to 14.565 under the MSE objective, but only to 22.356 under the LPIPS objective. Thus, although the two objectives are comparably effective against most restoration methods, the MSE objective is clearly stronger in specific cases.

- Comparison of different attack objectives in PGD in terms of SSIM. Table 4 compares the SSIM under the different PGD attack objectives. For most restoration methods, the MSE objective yields a stronger attack than the LPIPS objective. DISCNet and BNUDC, however, are less robust to the LPIPS objective, which we attribute mainly to their use of VGG-based [60] perceptual losses. The other methods are more susceptible to the MSE objective.
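For the white-box setting, PGD [19] repeats a signed gradient-ascent step on the attack objective followed by projection onto an L∞ ball around the input. The NumPy sketch below assumes the MSE objective is measured between the restored output and the ground truth, and replaces the restoration network with a fixed linear map `A` so the gradient can be written by hand; in practice the gradient would come from automatic differentiation through the network, and the LPIPS objective would swap the MSE for a learned perceptual distance (not shown). The names `pgd_linf`, `mse_objective`, and `mse_objective_grad` are hypothetical. The 20 iterations and the 1/256 step size follow Section 5.1, while `eps` = 8/256 is an illustrative budget because the perturbation bound is not stated in this section.

```python
import numpy as np


def pgd_linf(x, loss_grad, eps, alpha, steps=20, random_start=False, rng=None):
    """L_inf PGD that maximizes a loss, given a function returning its input gradient."""
    rng = np.random.default_rng() if rng is None else rng
    x_adv = x.copy()
    if random_start:
        x_adv = np.clip(x_adv + rng.uniform(-eps, eps, size=x.shape), 0.0, 1.0)
    for _ in range(steps):
        x_adv = x_adv + alpha * np.sign(loss_grad(x_adv))  # signed ascent step
        x_adv = np.clip(x_adv, x - eps, x + eps)           # project onto the eps-ball
        x_adv = np.clip(x_adv, 0.0, 1.0)                   # keep a valid image
    return x_adv


# Toy "restoration network": a fixed linear map f(x) = A @ x on flattened pixels.
rng = np.random.default_rng(0)
n = 64
A = np.eye(n) + 0.05 * rng.standard_normal((n, n))
x_clean = rng.uniform(0.2, 0.8, size=n)  # degraded (UDC) input
y_gt = rng.uniform(0.0, 1.0, size=n)     # ground-truth sharp image


def mse_objective(x):
    return np.mean((A @ x - y_gt) ** 2)


def mse_objective_grad(x):
    # d/dx mean((A x - y)^2) = 2 A^T (A x - y) / n
    return 2.0 * A.T @ (A @ x - y_gt) / n


x_adv = pgd_linf(x_clean, mse_objective_grad, eps=8 / 256, alpha=1 / 256, steps=20)
```

Because the objective here is a convex quadratic and each projected step moves every pixel in the direction of its gradient sign (or not at all), the loss cannot decrease from one iteration to the next; with a deep network this guarantee no longer holds, which is one reason random restarts are sometimes used.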

5.4. Robustness Evaluation on Attack Levels Comparison

5.5. Defense Strategy Results

- PGD Objective Comparison. Table 5 presents the PSNR of adversarial training and of our proposed defense strategy under the PGD attack. After adversarial training, DISCNet becomes more robust against PGD, but its restoration performance on clean images decreases slightly, from 35.237 to 34.082. UFormer, on the other hand, gains substantially in robustness after adversarial training, while its performance on clean images remains almost unchanged. The DiffPure (DP) strategy [24] purifies adversarial examples with a diffusion model: a forward diffusion process first perturbs the input with noise, and a reverse generative process then reconstructs a clean image, thereby defending against the attack. When DP is applied alone, DISCNet suffers a more noticeable decline on clean images, whereas UFormer improves slightly; this suggests that DISCNet is more sensitive to noise. With our proposed defense strategy, UFormer improves markedly on clean images compared with the original model, increasing from 18.795 to 19.312. DISCNet's performance on clean images remains similar to that of the original model, while its robustness under attack improves significantly.
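The purification described above can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions, not the exact implementation used here: it uses the discrete DDPM [23] formulation with the linear β schedule from that paper (DiffPure [24] itself is formulated with a continuous-time SDE), `eps_model` stands in for a pretrained noise-prediction network that is not shown, and `t_star` sets how much noise is injected before denoising. The names `linear_beta_schedule` and `diffusion_purify` are hypothetical.

```python
import numpy as np


def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule with the default values of the original DDPM paper."""
    return np.linspace(beta_start, beta_end, T)


def diffusion_purify(x_adv, eps_model, t_star, betas, rng=None):
    """DDPM-style purification: diffuse to step t_star, then denoise back to step 0.

    eps_model(x_t, t) is a hypothetical pretrained noise-prediction network.
    """
    if not 1 <= t_star <= len(betas):
        raise ValueError("t_star must lie in [1, T]")
    rng = np.random.default_rng() if rng is None else rng
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    # Forward process in closed form: x_t = sqrt(a_bar) * x_0 + sqrt(1 - a_bar) * noise.
    a_bar = alpha_bars[t_star - 1]
    x = np.sqrt(a_bar) * x_adv + np.sqrt(1.0 - a_bar) * rng.standard_normal(x_adv.shape)
    # Reverse (ancestral) sampling from t_star down to 1.
    for t in range(t_star, 0, -1):
        i = t - 1
        eps_hat = eps_model(x, t)
        mean = (x - betas[i] / np.sqrt(1.0 - alpha_bars[i]) * eps_hat) / np.sqrt(alphas[i])
        if t > 1:
            x = mean + np.sqrt(betas[i]) * rng.standard_normal(x.shape)  # sigma_t^2 = beta_t
        else:
            x = mean  # no noise is added at the final step
    return x
```

A small `t_star` removes little of the adversarial perturbation, while a large one can wash out image detail, which is consistent with the clean-image drop observed for DISCNet + DP; Section 5.1 mentions 50–100 diffusion steps for generating the purified fine-tuning data. DDPM-based models usually expect inputs scaled to [-1, 1], which is an additional assumption of this sketch.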

5.6. Other Attack Methods Comparison

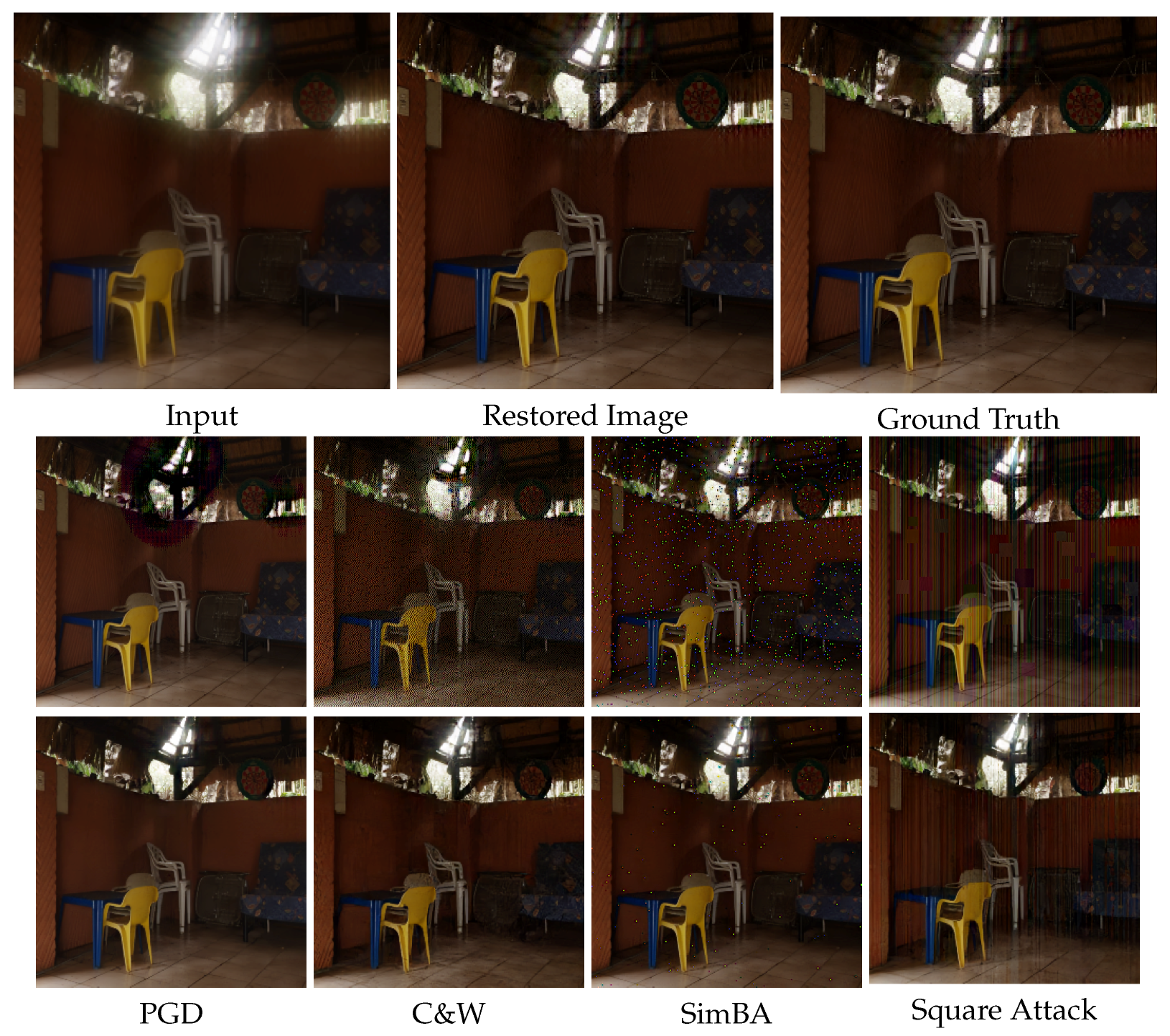

5.7. Visual Comparison

- Comparison of DISCNet under C&W attack with different defense strategies. Figure 6 presents visual results of DISCNet with adversarial training and with our proposed defense strategy under the C&W attack. Without any defense, DISCNet fails to restore the attacked image and outputs a completely black image. With adversarial training, the restored images exhibit black color patches and noticeable glare, and light diffusion is especially pronounced in the second row. With DP alone, the glare and irregular color patches are noticeably reduced, although some irregular patches remain, especially in the second row. With our proposed defense strategy, the diffusion around the light source in the second row is significantly reduced, and no irregular color patches are observed in the third row.

- Comparison of UFormer under C&W attack with different defense strategies. Figure 7 presents additional visual results of UFormer under the C&W attack. As the third column shows, UFormer produces numerous irregular patches when attacked, making it difficult to recover the original image. Adversarial training slightly reduces these irregular patches. In the fifth and sixth columns, in contrast, both DP alone and our proposed defense strategy substantially reduce them. Compared with the restoration results on clean images, our proposed defense strategy preserves the overall structure of the original image and maintains good robustness.

- Comparison of DISCNet and UFormer under black-box attacks with different defense strategies. Figure 8 visually compares adversarial training and our proposed defense strategy against Square Attack and SimBA. In the first row, DISCNet exhibits conspicuous large and small color blocks under Square Attack. With our proposed method, these color blocks are significantly reduced and the glare is largely resolved. In the second row, UFormer performs better under SimBA when our method is applied.
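Square Attack [22] proposes square patches that are constant within each color channel and keeps a proposal only if the loss increases; in the original method the square size also shrinks over the iterations, which plausibly accounts for the large and small color blocks visible in Figure 8. The sketch below is a simplified NumPy version under stated assumptions: it uses a fixed square size instead of the original schedule, assumes the attacker maximizes the MSE between the model output and a `reference` image, and treats `model` as any black-box callable. The name `square_attack_linf` and the default `eps`, `iters`, and `side` values are hypothetical and illustrative.

```python
import numpy as np


def square_attack_linf(model, x, reference, eps=8 / 256, iters=200, side=4, rng=None):
    """Simplified L_inf Square Attack for an H x W x C image in [0, 1].

    Differences from the original: a fixed square side (no schedule) and an
    MSE-to-reference loss that the attacker maximizes (an assumption).
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w, c = x.shape

    def loss(z):
        return np.mean((model(z) - reference) ** 2)

    # Initialization as in the original method: vertical stripes of +/- eps per channel.
    stripes = rng.choice([-eps, eps], size=(1, w, c))
    x_adv = np.clip(x + stripes, 0.0, 1.0)
    best = loss(x_adv)
    for _ in range(iters):
        r = rng.integers(0, h - side + 1)
        s = rng.integers(0, w - side + 1)
        delta = x_adv - x
        # Overwrite one square window with a new constant +/- eps value per channel.
        delta[r:r + side, s:s + side, :] = rng.choice([-eps, eps], size=(1, 1, c))
        candidate = np.clip(x + delta, 0.0, 1.0)
        cand_loss = loss(candidate)
        if cand_loss > best:  # accept only proposals that increase the loss
            x_adv, best = candidate, cand_loss
    return x_adv, best
```

Since every accepted update is a per-channel constant block, the residual perturbation after restoration tends to appear as blocky color artifacts rather than the fine-grained noise typical of gradient-based attacks.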

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhou, Y.; Ren, D.; Emerton, N.; Lim, S.; Large, T.A. Image Restoration for Under-Display Camera. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021.

- Ali, A.M.; Benjdira, B.; Koubaa, A.; El-Shafai, W.; Khan, Z.; Boulila, W. Vision transformers in image restoration: A survey. Sensors 2023, 23, 2385.

- Feng, R.; Li, C.; Chen, H.G.; Li, S.; Loy, C.C.; Gu, J. Removing Diffraction Image Artifacts in Under-Display Camera via Dynamic Skip Connection Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021.

- Koh, J.; Lee, J.; Yoon, S. BNUDC: A Two-Branched Deep Neural Network for Restoring Images from Under-Display Cameras. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

- Liu, X.; Hu, J.; Chen, X.; Dong, C. UDC-UNet: Under-Display Camera Image Restoration via U-shape Dynamic Network. In Proceedings of the European Conference on Computer Vision Workshops, Tel Aviv, Israel, 23–27 October 2022.

- Luo, J.; Ren, W.; Wang, T.; Li, C.; Cao, X. Under-Display Camera Image Enhancement via Cascaded Curve Estimation. IEEE Trans. Image Process. 2022, 31, 4856–4868.

- Zhou, Y.; Song, Y.; Du, X. Modular Degradation Simulation and Restoration for Under-Display Camera. In Proceedings of the Asian Conference on Computer Vision, Macao, China, 4–8 December 2022.

- Song, B.; Chen, X.; Xu, S.; Zhou, J. Under-Display Camera Image Restoration with Scattering Effect. In Proceedings of the International Conference on Computer Vision, Paris, France, 1–6 October 2023.

- Tan, J.; Chen, X.; Wang, T.; Zhang, K.; Luo, W.; Cao, X. Blind Face Restoration for Under-Display Camera via Dictionary Guided Transformer. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 4914–4927.

- Chen, X.; Wang, T.; Shao, Z.; Zhang, K.; Luo, W.; Lu, T.; Liu, Z.; Kim, T.; Li, H. Deep Video Restoration for Under-Display Camera. arXiv 2023, arXiv:2309.04752.

- Wang, Z.; Zhang, K.; Sankaranarayana, R.S. LRDif: Diffusion Models for Under-Display Camera Emotion Recognition. In Proceedings of the 2024 IEEE International Conference on Image Processing, Abu Dhabi, United Arab Emirates, 27–30 October 2024.

- Rossmann, K. Point spread-function, line spread-function, and modulation transfer function: Tools for the study of imaging systems. Radiology 1969, 93, 257–272.

- Fukushima, K.; Miyake, S.; Ito, T. Neocognitron: A neural network model for a mechanism of visual pattern recognition. IEEE Trans. Syst. Man Cybern. 1983, 13, 826–834.

- Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic Convolution: Attention Over Convolution Kernels. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the Conference and Workshop on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Aleissaee, A.A.; Kumar, A.; Anwer, R.M.; Khan, S.; Cholakkal, H.; Xia, G.S.; Khan, F.S. Transformers in remote sensing: A survey. Remote Sens. 2023, 15, 1860.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations, Virtual Event, Austria, 3–7 May 2021.

- Zhang, K.; Ren, W.; Luo, W.; Lai, W.; Stenger, B.; Yang, M.; Li, H. Deep Image Deblurring: A Survey. Int. J. Comput. Vis. 2022, 130, 2103–2130.

- Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards Deep Learning Models Resistant to Adversarial Attacks. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Carlini, N.; Wagner, D.A. Towards Evaluating the Robustness of Neural Networks. In Proceedings of the IEEE Symposium on Security and Privacy, San Jose, CA, USA, 22–26 May 2017.

- Guo, C.; Gardner, J.R.; You, Y.; Wilson, A.G.; Weinberger, K.Q. Simple Black-box Adversarial Attacks. In Proceedings of the ICML, Proceedings of Machine Learning Research, Long Beach, CA, USA, 9–15 June 2019; Volume 97, pp. 2484–2493.

- Andriushchenko, M.; Croce, F.; Flammarion, N.; Hein, M. Square Attack: A Query-Efficient Black-Box Adversarial Attack via Random Search. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020.

- Ho, J.; Jain, A.; Abbeel, P. Denoising Diffusion Probabilistic Models. In Proceedings of the Conference and Workshop on Neural Information Processing Systems, Virtual, 6–12 December 2020.

- Nie, W.; Guo, B.; Huang, Y.; Xiao, C.; Vahdat, A.; Anandkumar, A. Diffusion Models for Adversarial Purification. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022.

- Zhou, Y.; Kwan, M.; Tolentino, K.; Emerton, N.; Lim, S.; Large, T.A.; Fu, L.; Pan, Z.; Li, B.; Yang, Q.; et al. UDC 2020 Challenge on Image Restoration of Under-Display Camera: Methods and Results. In Proceedings of the European Conference on Computer Vision Workshops, Glasgow, UK, 23–28 August 2020.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer Assisted Intervention, Munich, Germany, 5–9 October 2015.

- Sundar, V.; Hegde, S.; Kothandaraman, D.; Mitra, K. Deep Atrous Guided Filter for Image Restoration in Under Display Cameras. In Proceedings of the ECCV Workshops, Glasgow, UK, 23–28 August 2020.

- Isola, P.; Zhu, J.; Zhou, T.; Efros, A.A. Image-to-Image Translation with Conditional Adversarial Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.J.; Fergus, R. Intriguing properties of neural networks. In Proceedings of the International Conference on Learning Representations (Poster), Banff, AB, Canada, 14–16 April 2014.

- Xu, H.; Ma, Y.; Liu, H.; Deb, D.; Liu, H.; Tang, J.; Jain, A.K. Adversarial Attacks and Defenses in Images, Graphs and Text: A Review. Int. J. Autom. Comput. 2020, 17, 151–178.

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and Harnessing Adversarial Examples. In Proceedings of the International Conference on Learning Representations (Poster), San Diego, CA, USA, 7–9 May 2015.

- Moosavi-Dezfooli, S.; Fawzi, A.; Frossard, P. DeepFool: A Simple and Accurate Method to Fool Deep Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2574–2582.

- Chen, P.; Zhang, H.; Sharma, Y.; Yi, J.; Hsieh, C. ZOO: Zeroth Order Optimization Based Black-box Attacks to Deep Neural Networks without Training Substitute Models. In Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security, Dallas, TX, USA, 3 November 2017.

- Rahmati, A.; Moosavi-Dezfooli, S.; Frossard, P.; Dai, H. GeoDA: A Geometric Framework for Black-Box Adversarial Attacks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Gandikota, K.V.; Chandramouli, P.; Möller, M. On Adversarial Robustness of Deep Image Deblurring. In Proceedings of the IEEE International Conference on Image Processing, Bordeaux, France, 16–19 October 2022.

- Yu, Y.; Yang, W.; Tan, Y.; Kot, A.C. Towards Robust Rain Removal Against Adversarial Attacks: A Comprehensive Benchmark Analysis and Beyond. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

- Gui, J.; Cong, X.; Peng, C.; Tang, Y.Y.; Kwok, J.T. Adversarial Attack and Defense for Dehazing Networks. arXiv 2023, arXiv:2303.17255v2.

- Morris, J.X.; Lifland, E.; Yoo, J.Y.; Grigsby, J.; Jin, D.; Qi, Y. TextAttack: A Framework for Adversarial Attacks, Data Augmentation, and Adversarial Training in NLP. arXiv 2020, arXiv:2005.05909.

- Zhuang, H.; Zhang, Y.; Liu, S. A Pilot Study of Query-Free Adversarial Attack against Stable Diffusion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Vancouver, BC, Canada, 17–24 June 2023.

- Guo, R.; Chen, Q.; Liu, H.; Wang, W. Adversarial robustness enhancement for deep learning-based soft sensors: An adversarial training strategy using historical gradients and domain adaptation. Sensors 2024, 24, 3909.

- Schott, L.; Rauber, J.; Bethge, M.; Brendel, W. Towards the first adversarially robust neural network model on MNIST. In Proceedings of the International Conference on Learning Representations (Poster), New Orleans, LA, USA, 6–9 May 2019.

- Ding, G.W.; Lui, K.Y.C.; Jin, X.; Wang, L.; Huang, R. On the Sensitivity of Adversarial Robustness to Input Data Distributions. In Proceedings of the International Conference on Learning Representations (Poster), New Orleans, LA, USA, 6–9 May 2019.

- Guo, C.; Rana, M.; Cissé, M.; van der Maaten, L. Countering Adversarial Images using Input Transformations. In Proceedings of the International Conference on Learning Representations (Poster), Vancouver, BC, Canada, 30 April–3 May 2018.

- Jia, X.; Wei, X.; Cao, X.; Foroosh, H. ComDefend: An Efficient Image Compression Model to Defend Adversarial Examples. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019.

- Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. In Proceedings of the International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014.

- Samangouei, P.; Kabkab, M.; Chellappa, R. Defense-GAN: Protecting Classifiers Against Adversarial Attacks Using Generative Models. In Proceedings of the International Conference on Learning Representations (Poster), Vancouver, BC, Canada, 30 April–3 May 2018.

- Zhou, J.; Liang, C.; Chen, J. Manifold Projection for Adversarial Defense on Face Recognition. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020.

- Mustafa, A.; Khan, S.H.; Hayat, M.; Shen, J.; Shao, L. Image Super-Resolution as a Defense Against Adversarial Attacks. IEEE Trans. Image Process. 2019, 29, 1711–1724.

- Choi, J.; Zhang, H.; Kim, J.; Hsieh, C.; Lee, J. Evaluating Robustness of Deep Image Super-Resolution Against Adversarial Attacks. In Proceedings of the International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

- Yue, J.; Li, H.; Wei, P.; Li, G.; Lin, L. Robust Real-World Image Super-Resolution against Adversarial Attacks. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual Event, China, 20–24 October 2021.

- Song, Z.; Zhang, Z.; Zhang, K.; Luo, W.; Fan, Z.; Ren, W.; Lu, J. Robust Single Image Reflection Removal Against Adversarial Attacks. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

- Dong, Z.; Wei, P.; Lin, L. Adversarially-Aware Robust Object Detector. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022.

- Cai, M.; Wang, X.; Sohel, F.; Lei, H. Diffusion Models-Based Purification for Common Corruptions on Robust 3D Object Detection. Sensors 2024, 24, 5440.

- Wang, Z.; Zhang, Z.; Zhang, X.; Zheng, H.; Zhou, M.; Zhang, Y.; Wang, Y. DR2: Diffusion-Based Robust Degradation Remover for Blind Face Restoration. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

- Yang, A.; Sankaranarayanan, A.C. Designing Display Pixel Layouts for Under-Panel Cameras. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 2245–2256.

- Poly Haven, The Public 3D Asset Library. Available online: https://polyhaven.com/hdris (accessed on 26 May 2025).

- Wang, Z.; Cun, X.; Bao, J.; Zhou, W.; Liu, J.; Li, H. Uformer: A General U-Shaped Transformer for Image Restoration. In Proceedings of the Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

Table 1. PSNR of the UDCIR methods on clean images and under different attack methods.

| Input | DISCNet [3] | UDCUNet [5] | BNUDC [4] | SRUDC [8] | DWFormer [7] | DAGF [27] | UFormer [58] |
|---|---|---|---|---|---|---|---|
| Clean | 35.237 | 27.427 | 24.911 | 21.904 | 17.651 | 24.972 | 19.795 |
| PGD | | | | | | | |
| C&W | | | | | | | |
| SimBA | | | | | | | |
| SquareA | | | | | | | |

Table 2. SSIM of the UDCIR methods on clean images and under different attack methods.

| Input | DISCNet [3] | UDCUNet [5] | BNUDC [4] | SRUDC [8] | DWFormer [7] | DAGF [27] | UFormer [58] |
|---|---|---|---|---|---|---|---|
| Clean | 0.960 | 0.937 | 0.8917 | 0.834 | 0.715 | 0.855 | 0.642 |
| PGD | | | | | | | |
| C&W | | | | | | | |
| SimBA | | | | | | | |
| SquareA | | | | | | | |

Table 3. PSNR of the UDCIR methods under different PGD attack objectives.

| Method | Clean | MSE | LPIPS |
|---|---|---|---|
| DISCNet [3] | 35.237 | | |
| UDCUNet [5] | 27.427 | | |
| BNUDC [4] | 24.911 | | |
| SRUDC [8] | 21.904 | | |
| DWFormer [7] | 17.651 | | |
| DAGF [27] | 24.972 | | |
| UFormer [58] | 18.795 | | |

Table 4. SSIM of the UDCIR methods under different PGD attack objectives.

| Method | Clean | MSE | LPIPS |
|---|---|---|---|
| DISCNet [3] | 0.960 | | |
| UDCUNet [5] | 0.937 | | |
| BNUDC [4] | 0.892 | | |
| SRUDC [8] | 0.834 | | |
| DWFormer [7] | 0.715 | | |
| DAGF [27] | 0.855 | | |
| UFormer [58] | 0.642 | | |

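Table 5. PSNR of adversarial training (Adv.), DiffPure (DP), and our defense strategy (DP + FT) under the PGD attack with different objectives.

| Method | Clean | MSE | LPIPS |
|---|---|---|---|
| DISCNet Adv. [3] | 34.082 | | |
| DISCNet [3] + DP | 32.773 | | |
| DISCNet [3] + DP + FT | 34.942 | | |
| UFormer Adv. [58] | 18.819 | | |
| UFormer [58] + DP | 18.950 | | |
| UFormer [58] + DP + FT | 19.938 | | |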

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dong, W.; Song, Z.; Zhang, Z.; Lin, X.; Lu, J. Enhancing Robustness in UDC Image Restoration Through Adversarial Purification and Fine-Tuning. Sensors 2025, 25, 3386. https://doi.org/10.3390/s25113386
