Abstract

Numerous existing methods demonstrate impressive performance in brightening low-illumination images but fail in detail enhancement and color correction. To tackle these challenges, this paper proposes a dual-branch network including three main parts: color space transformation, a color correction network (CC-Net), and a light-boosting network (LB-Net). Specifically, we first transfer the input into the CIELAB color space to extract luminosity and color components. Afterward, we employ LB-Net to effectively explore multiscale features via a carefully designed large–small-scale structure, which can adaptively adjust the brightness of the input images. And we use CC-Net, a U-shaped network, to generate noise-free images with vivid color. Additionally, an efficient feature interaction module is introduced for the interaction of the two branches’ information. Extensive experiments on low-light image enhancement public benchmarks demonstrate that our method outperforms state-of-the-art methods in restoring the quality of low-light images. Furthermore, experiments further indicate that our method significantly enhances performance in object detection under low-light conditions.

1. Introduction

Images and videos play significant roles in our daily lives, as they transmit messages and record significant moments. However, images captured under insufficient lighting conditions (e.g., nighttime) typically present color casts, as well as unnatural-looking visibility, and further deliver unsatisfactory or incorrect information for object detection and other advanced vision tasks [1,2]. Although it is possible to increase the brightness of an image by using a flashlight, increasing the ISO, or extending the exposure time during the shooting stage, these techniques require the photographer to have both advanced hardware and high technical proficiency [2,3].

Image enhancement techniques can effectively yield visually pleasing results from degraded inputs without upgrading equipment. Many traditional enhancement approaches, including pixel transformation-based [4], Retinex-based [5], and fusion-based [6,7] methods, have been reported to improve the quality of low-light images. Among them, the former directly processes the image pixels for generating high-quality images while introducing observable local over-enhancement. Retinex-based methods to enhance low-light images typically follow a “decomposition–adjustment–exposure control” process, but they yield extra color casts. Fusion-based techniques fuse multiple feature maps according to a certain rule for achieving promising performance but fail in detail enhancement.

Recently, the deep convolutional neural network (CNN) has been widely applied in medical image processing, object detection, image segmentation, etc., due to its powerful feature extraction and representation capabilities [8,9]. LLNet [10], based on the sparse denoising autoencoder, is a deep learning pioneering network which can achieve the goals of light enhancement and denoising. Subsequently, Retinex theory [11], semantic information [12], contrastive learning [13], and other technologies [14,15] were introduced into the CNN to improve its nonlinear mapping capacity from a degraded input to the corresponding high-quality image. Although these proposed learning-based methods can solve the problems encountered in traditional methods, they exhibit massive parameter space and heavily rely on computational resources. And many CNN-based models seldom utilize multiscale features at different scale spaces, introducing color distortion, blurry details, and unsatisfactory visual experiences.

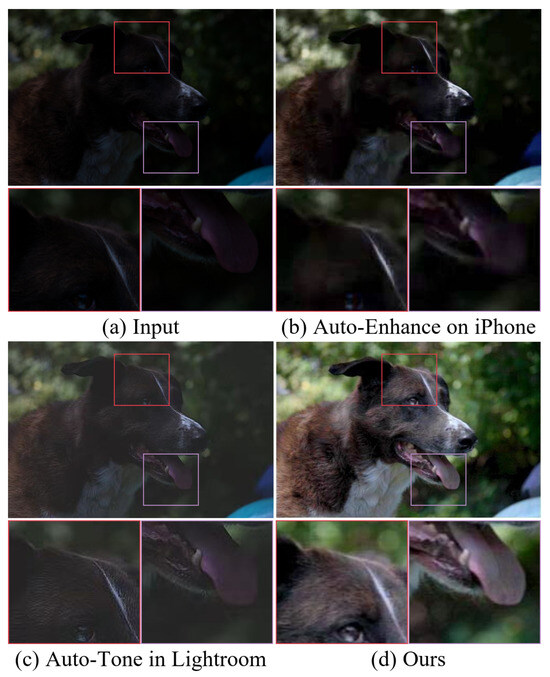

In this paper, we provide a feasible LLIE method called Large–small-scale Structure blended U-shaped network (LSUNet). LSUNet can effectively remove color casts and noise from images captured under suboptimal lighting conditions. Specifically, the original data are transferred into the HSV color space for separating the luminosity and color components to effectively overcome color casts. A light-boosting network (LB-Net) is used for the luminosity component, i.e., channel L, for light enhancement, and a color correction network (CC-Net) is employed for the color components, i.e., channel A and channel B, for color correction. Additionally, we also design an efficient feature interaction module (EFIM) to transfer luminosity and color features between CC-Net and LB-Net. Figure 1 shows the results generated by our method and other existing techniques. Intuitively, our method produces visually satisfactory images with high contrast, vivid color, and clearer details thanks to our carefully designed modules.

Figure 1.

Visual comparison on low-light image. (a) Low-light image randomly selected from MIT-Adobe FiveK. Light-enhanced results of (b) iPhone, (c) Lightroom, and (d) the proposed method. Clearly, our method can effectively brighten low-light images and remove color distortion.

To summarize, our main contributions are as follows:

- We propose a robust and feasible LLIE method which can process the luminosity and color components of low-light images in the HSV space. Experiments show that our LSUNet can yield noise-free and natural-looking images with clear details and vivid color. Additionally, our method is also helpful for object detection under low-light conditions.

- We propose a lightweight light-boosting network built on a large–small-scale structure for fully exploring multiscale features at different scale spaces. In comparison with existing multiscale structures, our method offers faster speed for light enhancement without extra observable under- and over-enhancement.

- We propose a color correction network based on a U-shaped network with a strided convolution strategy to remove color casts and noise. Additionally, an efficient feature interaction module (EFIM) is also presented to explore the relationship of luminosity and color features for generating natural-looking visibility and clearer details.

The remainder of this paper is organized as follows: In Section 2, we provide an overview of works related to the proposed approach. In Section 3, we introduce the proposed method in detail. In Section 4, we discuss the train details and benchmarks, as well as an ablation study, a comparison of computational complexity, and an application test. Finally, we present the conclusions on the proposed method in Section 5.

2. Related Work

Images captured under poor lighting conditions typically exhibit unsatisfactory visibility and low contrast. Consequently, plenty of LLIE techniques including traditional and learning-based methods have been proposed to improve the quality of degraded images.

2.1. Traditional Methods

In the early stage, pixel transformation-based and histogram equalization (HE)-based methods are usually used to restore image quality. The former, including Linear stretch, Gamma correction, and S-type function, can simplify the brightening of low-illumination images [16,17], but they introduce observable local over-/under-enhancement because of pixel stretch globally. HE-based methods, based on the statistical information of pixels including global/local histogram equalization, have been widely used for restoring the quality of degraded images acquired under suboptimal lighting conditions [18]. Huang et al. [19] proposed contrast limited dynamic quadri-histogram equalization, which includes sub-histogram partition and adaptive histogram clipping, as well as sub-histogram mapping and equalization. Dyke et al. [20] proposed an adaptive kernel-based method that seeks to address the issue of histogram sparsity for downstream applications. Yuan et al. [21] presented an adaptive histogram equalization method with visual perception consistency. Additionally, the genetic algorithm [22], multi-stage processing [23], the differential evolution algorithm [24], and other techniques have been proposed to optimize HE-based methods. But these methods show poor performance in robustness and generalization for the LLIE task and inevitability introduce unwanted color casts and blurry details.

Physical model-based methods, including Retinex and the atmospheric scattering model, exhibit good interpretability in light enhancement. Retinex-based methods [25,26] separate the image into illumination and reflectance components to brighten low-illumination images. Cai et al. [27] employed a cortex-like contour extraction algorithm and retina-inspired textural gradient detection for Retinex decomposition. Jia et al. [28] proposed a robust Retinex-based model with reflectance map re-weighting to improve and re-balance brightness. Veluchamy et al. [29] proposed a Retinex variational decomposition-based detail-preserving noise suppression model to address quality degradation issues in low-light images. Yang et al. [30] proposed the Weighted Low-Rank Tensor regularization Retinex (WLRT-Retinex) model, which introduces weighted low-rank tensor priors in the Retinex decomposition process. These Retinex-based methods suffer from observable color deviation and blurry details. Jeon et al. [31] designed an efficient and fast low-light image enhancement method using an atmospheric scattering model based on an inverted low-light image. Zhang et al. [32] utilized the atmospheric scattering model and color correction to yield a dehazed background sub-image.

2.2. Learning-Based Methods

Deep learning has revolutionized low-light enhancement, with convolutional neural network (CNN)-based methods [33,34] leading recent advancements. Wei et al. [35] incorporated Retinex theory into CNNs and proposed deep Retinex-Net for illumination adjustment. Zhang et al. [36] further built an effective network, Kindling the Darkness. Zhu et al. [37] proposed a robust Retinex decomposition network (named RRDNet) to predict noise for denoising while restoring underexposed images. Jiang et al. [38] reported a Retinex-based real-low to real-normal network which employs Decom-Net, Denoise-Net, and Relight-Net for decomposing, denoising, and performing contrast enhancement, respectively. Wu et al. [39] proposed a category-specific processing network which crops an input into patches and classifies these patches into “simple”, “medium”, and “hard” categories based on their information. Lim et al. [40] designed a deep stacked Laplacian restorer (DSLR) by leveraging useful properties of the Laplacian pyramid both in image and feature spaces. Notably, Zero-DCE [41] achieves unsupervised enhancement through learnable nonlinear curves, which are further optimized in Zero-DCE++ [42] by using lightweight depth-wise convolutions. Self-supervised approaches, such as maximum-entropy Retinex [43] and EnlightenGAN [14], reduce reliance on paired data.

Recently, researchers introduced Transformers from the field of NLP into low-light image enhancement, such as Retinexformer [44], a one-stage Retinex-based low-light enhancement framework. Xu et al. [45] proposed an unbalanced point-guided multiscale Transformer-based conditional normalizing flow for low-light image enhancement. Kou et al. [46] proposed a lightweight two-stage Transformer which contains an FFT-guidance block (FGB) and YOLOv3. Jiang et al. [47] proposed a Retinex-based framework to characterize the specific knowledge of the reflectance and illumination components while removing perturbation. Wang et al. [48] designed a luminance and chrominance dual-branch network, termed LCDBNet, for low-light image enhancement which divides low-light image enhancement into two sub-tasks, i.e., luminance adjustment and chrominance restoration. In addition, Nguyen et al. [49] proposed an LLIE method using a conditional diffusion model, which can effectively reduce training time and computational cost. Jiang et al. [50] proposed a diffusion-based unsupervised framework that incorporates physically explainable Retinex theory with diffusion models for low-light image enhancement. Although these learning-based methods show powerful generalization and robustness in the LLIE task, they yield color casts in the light-enhanced results.

3. Method

3.1. Motivation

Low-light images present low brightness and contrast, as well as unsatisfactory visibility. Although numerous learning-based methods show better performance in light enhancement and contrast stretch in the LLIE task in the RGB color space, they inevitably yield color casts and blurry details in the light-enhanced results. The main reason may be that the R, G, and B channels exhibit a strong correlation in color representation. Previous studies, e.g., Zero DCE [41] and CIDNet [51], have proven that transferring the image captured under suboptimal lighting conditions into the HSV, LAB, or other color spaces to separate the luminosity and color components can remove color casts to some extent. However, these methods present either poor multiscale feature representation or high computational complexity.

Inspired by Zero DCE [41], we design a large–small-scale structure blended U-shaped network to enhance the quality of low-light images in the LAB color space. Specifically, a large–small-scale structure and an enhanced U-shaped network are carefully designed to explore multiscale hierarchical features. And we also design an efficient feature interaction module (EFIM) to explore the relationship of luminosity and color features.

3.2. Network Architecture

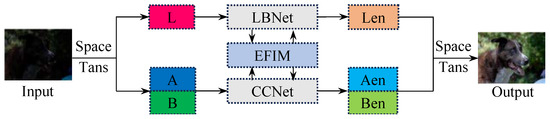

As shown in Figure 2, the proposed network, LSUNet, consists of a light-boosting network (LB-Net), a color correction network (CC-Net), and an efficient feature interaction module (EFIM).

Figure 2.

The architecture of the proposed method (LSUNet), which contains a light-boosting network (LB-Net), a color correction network (CC-Net), and an efficient feature interaction module (EFIM). The LB-Net is built upon the enhanced U-shaped network, the CC-Net is composed of the large–small-scale structure, and the EFIM is designed based on collaborative cross attention.

The input low-light image is first transferred into the LAB color space to separate its luminosity feature and color feature , a stage that can be formulated as

where denotes the color space transformation operation.

Subsequently, we apply the LB-Net and the CC-Net on luminosity feature and color feature to perform light boosting and color correction, respectively, for images acquired under suboptimal lighting conditions.

where , , and denote the enhanced luminosity and color features, respectively. and stand for the light-boosting and color correction operations. Additionally, the EFIM is employed for feature interaction in both branches.

3.2.1. Color Space Transformation

In the RGB color space, the image’s A, B, and C channels exhibit strong relationships in color representation. Hence, LLIE methods can restore the quality of low-light images in the RGB color space, but they may introduce observable color casts and cause an unnatural appearance. To handle this problem, we process the luminosity and color components of low-light images in the LAB color space for yielding visually pleasing images with vivid color. Specifically, we first normalize the pixels of the input image to ; then, the R, G, and B channels of the normalized pixels are processed by the linear transformation matrix to obtain X, Y, and Z features. This can be defined as

So, the image pixels values in the LAB color space can be calculated as

where is the nonlinear transformation function, which can be expressed as

where , and the inverse transformation can be defined as

where denotes the inverse of the linear transformation matrix .

3.2.2. Color Correction Network

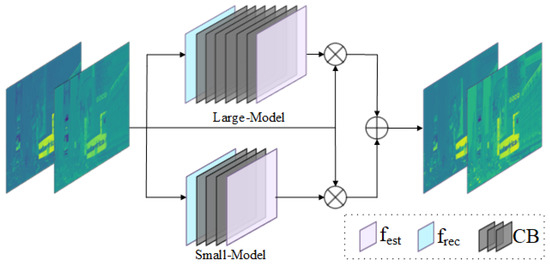

Numerous LLIE methods cannot successfully remove color casts. Additionally, image features exhibit different representations at different scale spaces. Therefore, we propose a color correction network (CC-Net), a dual-branch structure including a large-scale module (LM) and a small-scale module (SM), to explore the multiscale features of the A and B channels. Notably, these two modules consist of a feature extraction layer , a convolution block (CB), and a feature reconstruction layer . The detailed architecture is shown in Figure 3 and Table 1. In this work, we apply the CC-Net on the A and B channels to avoid color casts for low-light images.

Figure 3.

The structure of our CC-Net, which consists of a small-scale module (SM) and a large-scale model (LM). Notably, these modules include a feature extraction layer, a convolution block, and a feature reconstruction layer.

Table 1.

The parameters and details of the CC-Net model.

Specifically, we feed the color components, i.e., the A and B channels, into CC-Net. In the LM, the feature extraction layer is utilized to extract the shallow features. Then, the convolution block (CB), consisting of Conv and Conv, is applied to explore the depth feature. Finally, a feature reconstruction layer is used to refine the depth feature and further multiply it by the input in a pixel-by-pixel manner to obtain the large-scale feature . This stage can be formulated as

where ⊗ denotes pixel-wise multiplication and denotes the convolution block (CB) in the LM. denotes the A and B channels of the input. The SM presents similar processing to the LM, but its CB contains a different number of convolutions. The small-scale feature can be defined as

Finally, we integrate the small-scale feature and the large-scale feature for generating enhanced A and B channels as

where denotes the enhanced A and B channels and ⊕ denotes pixel-wise summation. Through the above processing, we can effectively mine multiscale features to achieve the goals of color correction and detail enhancement in low-light image enhancement.

3.2.3. Light-Boosting Network

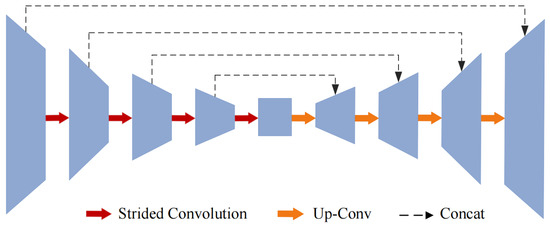

In our work, we propose a light-boosting network (LB-Net) based on the U-shaped network to process the luminosity component for light enhancement. The structure of LB-Net is shown in Figure 4 and consists of an encoder, a decoder, and a skip connection. The encoder employs four convolutions with batch normalization and ReLU function (CBRs) for detecting multi-level features. In addition, we integrate a strided convolution into two successive CBRs rather than max pooling to reduce computational complexity. Given the luminosity component of an input image, the encoding stage can be defined as

where denotes an convolution operation, denotes batch normalization, denotes the rectified linear unit function, denotes the strided convolution, ↓ denotes downsampling, and → indicates that the feature generated by the CBR is fed into the one.

Figure 4.

The structure of LB-Net including an encoder, a decoder, and a strided convolution.

The decoder has the same structure as the encoder, and the encoded feature is processed by the successive upsampling operation and the CBR to restore the feature maps of the encoder to their original resolution. Additionally, we also employ the skip connection between the CBR in the encoder and its corresponding CBR in the decoder to fully explore the hierarchical features. Finally, the output can be defined as

where i is chosen from the largest to the smallest in and l is chosen in the opposite order. denotes the concatenation operation. ↑ denotes the upsampling operation.

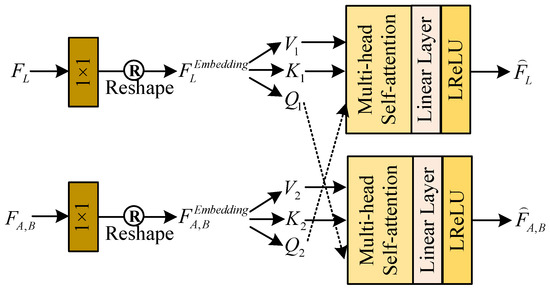

3.2.4. Efficient Feature Interaction Module

For taking full advantage of the correlation and complementarity of luminosity and color features, we design an efficient feature interaction module (EFIM) for information interaction between LB-Net and CC-Net. And the structure of the proposed EFIM is shown in Figure 5.

Figure 5.

The structure of the EFIM.

Firstly, we employ a Conv layer with kernel sizes of and the reshape operation on and to generate new sequence features and . Then, they are further split into query (Q), key (K), and value (V) subsequences with the same dimension.

where denotes the split operation, denotes the feature reshaping operation, and denotes the Conv layer with a kernel size of .

Subsequently, we exchange and , further perform successive multi-head self-attention, and apply a linear layer and the LeakyReLU function on and to generate mixed features and They can be defined as

3.3. Loss Function

In this paper, we employ the , , and loss functions to train the proposed method, which can assess the difference between the enhanced image and its corresponding ground truth. We analyze these three loss functions as follows.

Loss can improve the results of the proposed method by minimizing the differences between the predicted value and its ground truth. It can be defined as

where N is the number of samples, X is the input image, and Y is the ground truth.

Spatial Consistency Loss ensures that the output and input images share similar spatial consistency by calculating the difference between adjacent pixels. is defined as

where M is the number of local regions and is the four neighboring regions (top, down, left, and right) centered at area i.

Structure Similarity Loss . Compared with the and loss functions, , based on the Human Visual System (HVS), is sensitive to local structural changes in an image. Therefore, it was employed to measure the structural similarity between the enhanced image x and its corresponding ground truth y, and is defined as

where and are the pixel average value of the enhanced images and the ground truth. and represent the variance values of the pixels in the x and y directions, respectively; is the pixel covariance; and and are constants.

Total Loss. For generating visually pleasing results by the proposed method, we employ the total loss function including three types of losses, i.e., , , and , to train it. can be expressed as

where and represent the corresponding balance coefficients. We empirically set and to make our approach works well in the LLIE task.

4. Experiment and Analysis

In this section, we briefly describe the experimental details, datasets, and evaluation metrics. We then validate the effectiveness of the proposed method by using several public datasets. Finally, an ablation study and object detection in the dark are implemented.

4.1. Experimental Details

4.1.1. Training Details

As illustrated in Figure 2, the original data are converted into LAB images, and the output is transformed back into the original format. We first resize all input images to dimensions of before training. The Adam optimizer [52] is employed to train the proposed network, with the batch size being set to 8. The initial learning rate is established as 0.001 and is subsequently reduced by a factor of one-tenth after 50 epochs, for a total of 100 epochs. The proposed method is implemented by using the PyTorch-1.3 framework, and all validation experiments are conducted on an NVIDIA Tesla P100 GPU.

4.1.2. Datasets and Evaluation Metrics

We train our method on the MIT-Adobe FiveK [53] and LOL datasets [35]. The MIT-Adobe FiveK dataset comprises 4500 training image pairs and 500 test image pairs, while the LOL dataset consists of 485 training pairs and 15 test pairs. We evaluate performance by using PSNR, SSIM, and learned perceptual image patch similarity (LPIPS) [54]. Additionally, we perform tests on real-world datasets, including MEF [55], Fusion [56], and VV, using the natural image quality evaluator (NIQE) [57], neural feature-based image quality assessment (NFERM), lightness order error (LOE) [58], and information entropy (IE) to objectively evaluate image quality.

4.2. Ablation Study

For better understanding LSUNet, we conduct an ablation study, including color space transformation and the use of the loss function and CC-Net, on public datasets.

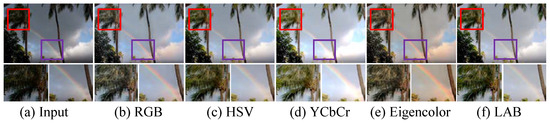

4.2.1. Study of Color Space Transformation

To verify the effectiveness of color space transformation, we apply the proposed network with identical configurations to improve low-illumination images in the RGB, HSV, YCbCr, Eigencolor, and LAB color spaces.

Concretely, the input is first converted into the HSV or LAB color space to separate color and luminance components; then, they are fed into LB-Net and CC-Net to restore the quality of the low-light images. Figure 6 presents light-enhanced images randomly selected from the MIT-Adobe FiveK dataset in different color spaces. Illustratively, the result generated in the YCbCr color space exhibits local over-enhancement, and that generated in Eigencolor exhibits color casts, while those generated in the RGB and HSV color spaces exhibit low contrast and blurry details. In contrast, the images improved in the LAB color space exhibit natural-looking visibility, vivid color, and clearer details.

Figure 6.

Ablation study of color space transformation. (a) Input image. Results generated in (b) RGB, (c) HSV, (d) YCbCr, (e) Eigencolor, and (f) LAB color spaces. The red and purple boxes indicate the magnified local detail regions. The red box highlights the improvement in detail clarity after enhancement, while the purple box emphasizes the accuracy and naturalness of color restoration.

Additionally, Table 2 depicts the average PSNR, SSIM, and AG scores of our LSUNet on MIT-Adobe FiveK in different color spaces. It can be seen that LSUNet in the LAB color space exhibits comparable and higher values of the AG, PSNR, and SSIM metrics compared with the other color spaces. In summary, the qualitative and quantitative evaluations show that our method in the LAB color space has superior performance on the LLIE task.

Table 2.

Average AG, PSNR, and SSIM scores of MIT Adobe FiveK in different color spaces.

4.2.2. Study of CC-Net

To evaluate the performance of the small-scale module (SM) and the large-scale module (LM) in CC-Net, we remove them from LSUNet. Table 3 demonstrates the average PSNR and SSIM scores of these operations. The results shows that the LM outperforms the SM in PSNR and SSIM. And our CC-Net can yield comparable and satisfactory PSNR and SSIM scores, benefiting from our carefully designed SM and LM.

Table 3.

Comparison of different modules in CC-Net on PSNR and SSIM.

4.2.3. Study of Loss Function

To evaluate the effectiveness of all mentioned loss functions, we train many versions of our method by using various combinations of loss functions. The average PSNR and SSIM scores on the MIT-Adobe Five-K dataset processed by these different versions of our method are presented in Table 4. As observed, , , and demonstrate similar performance in generating PSNR scores. In terms of SSIM values, the results indicate that outperforms by 0.1508 and by 0.0729. Overall, the total loss function effectively combines the advantages of these three losses and outperforms other combinations in generating satisfactory and comparable average PSNR and SSIM scores.

Table 4.

The average PSNR and SSIM scores generated by different versions of LSUNet.

4.3. Benchmark Evaluations

4.3.1. Comprehensive Evaluation on Synthetic Datasets

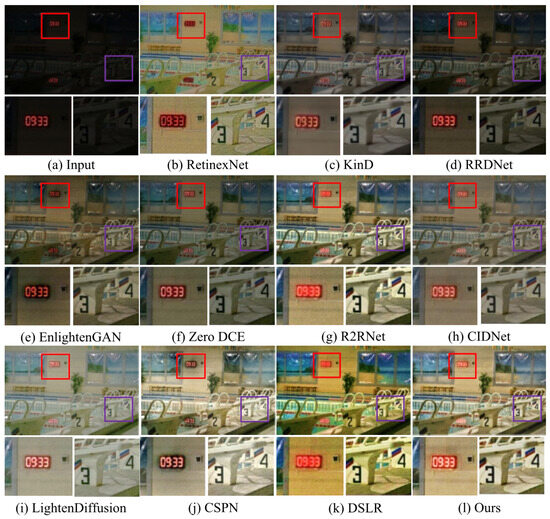

We first conduct visual comparisons and quantitative analyses on two synthetic benchmark datasets (i.e., MIT-Adobe FiveK and LOL) to demonstrate the effectiveness of our LSUNet in brightening low-light images.

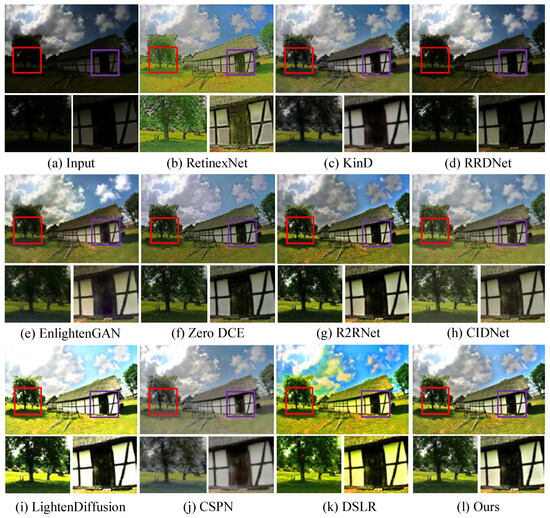

Qualitative analysis. We first test LSUNet and other state-of-the-art LLIE methods on the MIT Adobe FiveK benchmark, and randomly selected visual comparison results are depicted in Figure 7. It can be seen that RRDNet and Zero DCE fail to increase the brightness of low-light images and make the details clearer. EnlightGAN cannot effectively tackle local darkness. RetinexNet introduces unnatural-looking visibility, blurry details, and unwanted halo artifacts in the light-enhanced results. R2RNet improves brightness, contrast, and saturation, but the light-enhanced result presents unsatisfactory structural detail. KinD shows unsatisfactory performance in detail enhancement and removing local darkness. CIDNet fails to brighten low-illumination images. DSLR significantly enhances image brightness, but it yields observable color casts and local over-enhancement. LightenDiffusion effectively improves image contrast while generating blurry details. The CSPN-enhanced image exhibits unsatisfactory contrast. In contrast, our method outperforms other comparison LLIE methods in terms of contrast stretching, detail enhancement, and color correction.

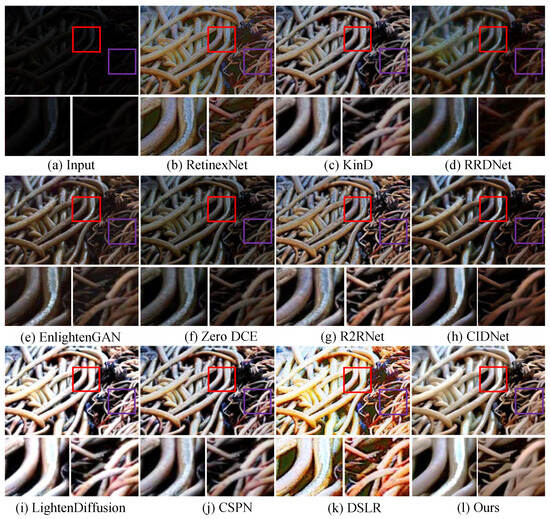

Figure 7.

Visual comparisons of different LLIE methods on MIT-Adobe FiveK dataset. The red and purple boxes highlight local detail zoom-in regions.

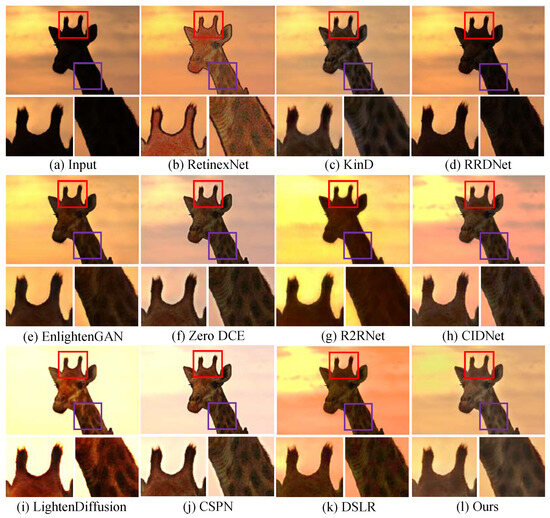

Additionally, Figure 8 shows the enhanced images generated by different LLIE methods. It can be easily observed that R2RNet shows satisfactory performance in light enhancement and contrast stretching while introducing observable halo artifacts and blurry details. RetinexNet cannot effectively remove inherent noise and unnatural visual appearance. RRDNet fails to yield high-quality results from the corresponding original input. EnlightenGAN and Zero DCE show similar performance on LLIE tasks, and their enhanced images still exhibit unwanted noise, local darkness, and blurry details. KinD shows satisfactory performance in detail sharpening, but it cannot remove the haze-like appearance completely and generate visually pleasing brightness. LightenDiffusion and CSPN show satisfactory performance in light enhancement, but the former generates a whitish tone in the light-enhanced images, and the latter generates unwanted halo artifacts. DSLR introduces an unnatural appearance, color casts, and amplified noise in the light-enhanced images. CIDNet shows poor performance in contrast stretching. On the contrary, LSUNet effectively removes color casts and improves visibility without observable amplified noise, over-/under-enhancement, and local darkness.

Figure 8.

Visual comparisons of different LLIE methods on LOL dataset. The red and purple boxes highlight local detail zoom-in regions.

Quantitative analysis. To quantitatively evaluate the performance of our LSUNet, we compare it with other LLIE methods by using PSNR, SSIM, and LPIPS. The average PSNR, SSIM, and LPIPS scores of different LLIE methods on synthetic datasets including MIT-Adobe FiveK and LOL are presented in Table 5. It can be easily seen that LSUNet shows better performance in creating the highest PSNR score and the lowest LPIPS score on MIT-Adobe FiveK than the comparison methods. In addition, our method has the highest SSIM score and the lowest LPIPS score on the LOL dataset. The qualitative and quantitative analyses suggest that our carefully designed LSUNet generally yields satisfactory visibility and a natural-looking appearance in the LLIE task.

Table 5.

Quantitative comparison of different methods on MIT-Adobe FiveK and LOL datasets.

4.3.2. Comprehensive Evaluation on Real Datasets

To further assess the generalization and robustness of LSUNet, we also conduct evaluation experiments on real datasets, including MEF, Fusion, and VV. Subsequently, we perform qualitative and quantitative evaluations on the light-enhanced results.

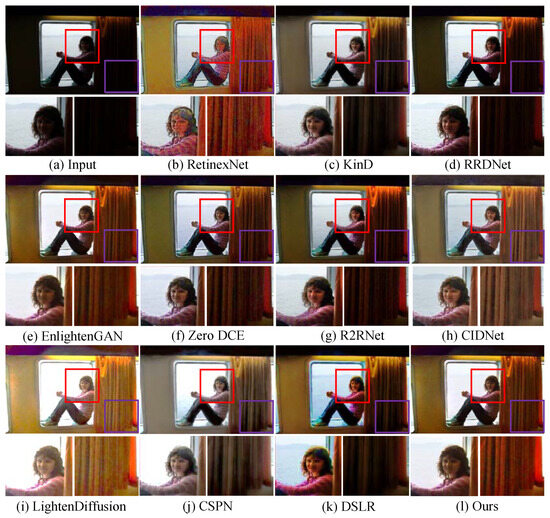

Qualitative analysis.Figure 9 shows the light-enhanced results randomly selected from the MEF dataset to test the performance of LSUNet and other comparison methods on local extremely low-light images. It can be easily found that KinD and RRDNet show poor performance in removing local darkness and contrast stretching. Although EnlightGAN, Zero DCE, and R2RNet outperform KinD and RRDNet in light enhancement for images captured under suboptimal lighting conditions, R2RNet introduces undesired halo artifacts and blurry details, Zero DCE yields a haze-like appearance in some light-enhanced images, and EnlightenGAN generates extra yellowish artifacts. RetinexNet significantly brightens low-illumination images, but the enhanced images exhibit unnatural-looking visibility and amplified noise. DSLR generates obvious halo artifacts and unnatural-looking appearance, LightenDiffusion fails in local enhancement and presents blurry details, and CSPN yields color casts. In comparison, the proposed method effectively removes local extremely low light and improves contrast without under-/over-enhancement.

Figure 9.

Visual comparisons of different LLIE methods on MEF dataset. The red and purple boxes highlight local detail zoom-in regions.

We further test our method on the Fusion and VV public datasets, and the light-enhanced example images generated by different LLIE methods are shown in Figure 10 and Figure 11, respectively. As shown in Figure 10, Zero DCE succeeds in light enhancement in low-illumination images but introduces an observable whitish tone and blurry details. R2RNet and RRDNet fail in detail boosting and local light enhancement in a yellow low-light image. Additionally, R2RNet generates observable local over-enhancement. KinD and EnlightenGAN cannot successfully tackle low contrast, and the former yields obvious color casts. RetinexNet fails to create visually pleasing images and remove the unnatural appearance. CIDNet and DSLR cannot remove local over-enhancement and local low contrast. LightenDiffusion generates an unnatural appearance and color casts. As illustrated in Figure 11, RRDNet, KinD, and R2RNet have unsatisfactory performance in removing local extremely low-illumination areas. RetinexNet introduces obvious noise, and EnlightenGAN produces local over-enhancement. LightenDiffusion shows poor performance in color correction and denoising. DSLR fails to brighten partial darkness, and CSPN generates a whitish tone and color casts in the light-enhanced results. CIDNet is also unsuccessful in removing color casts. In contrast, our method outperforms other comparison methods in generating noise-free images with vivid color and clearer details.

Figure 10.

Visual comparisons of different LLIE methods on Fusion dataset. The red and purple boxes highlight local detail zoom-in regions.

Figure 11.

Visual comparisons of different LLIE methods on VV dataset. The red and purple boxes highlight local detail zoom-in regions.

Quantitative analysis. We perform the quantitative analysis on three public benchmarks, i.e., MEF, Fusion, and VV, to verify the performance of the different methods. The latter’s average NIQE, NFERM, IE, and LOE scores on MEF, Fusion, and VV are shown in Table 6. From the quantitative evaluation scores illustrated in Table 6, it can be observed that LSUNet can generate comparable and more satisfactory values of the NIQE, NFERM, IE, and NIQE metrics than the comparison state-of-the-art LLIE methods. Overall, our method performs satisfactorily on images acquired under low-light conditions in terms of qualitative and quantitative evaluations.

Table 6.

Quantitative comparison of different methods on MEF, Fusion, and VV datasets.

Besides the above quantitative and qualitative analyses, we further conducted subjective visual evaluation involving 30 volunteers from various age groups and professions on the MEF, Fusion, and VV public datasets. Participants blindly rated light-enhanced real-world images on a scale of 1 to 5, with higher scores indicating more satisfactory visual quality. As shown in Table 7, it can be easily found that images improved by our method exhibit the highest visual scores. That is, our method outperforms other state-of-the-art LLIE methods in yielding natural-looking images with satisfactory visibility.

Table 7.

Visual ratings of performance of different methods on MEF, Fusion, and VV datasets given by participants.

4.4. Comprehensive Evaluation of Computational Complexity

We further compare computational complexity, including Param, Flops, and runtime, of all the above-listed LLIE methods on the LOL dataset to verify the efficiency of LSUNet, and the results are illustrated in Table 8. It can be easily found that our method is superior to other comparison methods in computational complexity. That is to say, our method can effectively restore the quality of low-illumination images.

Table 8.

Computational complexity comparison of existing LLIE methods on LOL benchmark.

4.5. Object Detection in the Dark

We also regard low-light image enhancement approaches as a pre-processing step for object detection under low-illumination conditions. Specifically, we first test several LLIE methods on the ExDark dataset [59], which comprises 5891 training images and 1472 test images taken under suboptimal lighting conditions, and further employ YOLO V5 on original and light-enhanced images to verify the effectiveness of the methods. Figure 12 demonstrates the object detection results of YOLO V5, and their corresponding average precision (AP) scores are shown in Table 9. It can be easily found that the LLIE methods can improve the performance of YOLO V5 under low-light conditions, and our LSUNet has superior performance in object detection in the dark compared with the other state-of-the-art LLIE methods.

Figure 12.

Comparison of object detection under low-light conditions. (a) shows the detection results of two giant pandas, (b) shows the detection results of a small car, (c,d) show the detection results of humans. The red and purple boxes in the figure highlight key areas of object detection to facilitate comparison of detection performance. From top to bottom are object detection results of original and light-enhanced images, respectively.

Table 9.

Quantitative analysis of our method on original/enhanced ExDark.

5. Conclusions

In this paper, we propose a network called large–small-scale structure blended U-Net (LSUNet) for low-light image enhancement which includes color space transformation, a light-boosting network (LB-Net), and a color correction network (CC-Net). This method processes low-light images in the LAB color space to extract the luminosity and color components. LB-Net is built upon the enhanced U-shaped network to explore the hierarchical features of the luminosity component, and CC-Net relies on the large–small-scale structure to extract the multiscale features of the color component. Additionally, an efficient feature interaction module is introduced for the interaction of the two branches. Extensive experiments demonstrate that this method can be effectively used for low-light image enhancement and can further improve object detection under inadequate lighting conditions. Although our method shows better performance in light enhancement and color correction, it fails to remove local over-enhancement and inherent noise. In the future, we can integrate a brightness perception module and hand-craft priors in the designed LSUNet for addressing these challenges.

Author Contributions

Conceptualization, H.C.; Writing—original draft, K.P. and H.L.; Writing—review & editing, K.P. and H.L.; Project administration, H.L.; Validation, W.W.; Supervision, Z.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by National Natural Science Foundation of China (62462017), Guangxi Natural Science Foundation (2025GXNSFBA069390), Guangxi Science and Technology Project (AB2401008), Autonomous Region-Level Guilin University of Electronic Technology Student Innovation and Entrepreneurship Training Program Project (S202410595284), and Innovation Project of GUET Graduate Education (2025YCXB008).

Data Availability Statement

The data supporting the findings of this study are publicly available. No new data were created in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Li, J.; Li, B.; Tu, Z.; Liu, X.; Guo, Q.; Juefei-Xu, F.; Xu, R.; Yu, H. Light the night: A multi-condition diffusion framework for unpaired low-light enhancement in autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 15205–15215. [Google Scholar]

- Ghari, B.; Tourani, A.; Shahbahrami, A.; Gaydadjiev, G. Pedestrian detection in low-light conditions: A comprehensive survey. Image Vis. Comput. 2024, 148, 105106. [Google Scholar] [CrossRef]

- Wang, H.; Köser, K.; Ren, P. Large Foundation Model Empowered Discriminative Underwater Image Enhancement. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5609317. [Google Scholar] [CrossRef]

- Wei, R.; Wei, X.; Xia, S.; Chang, K.; Ling, M.; Nong, J.; Xu, L. Multi-scale wavelet feature fusion network for low-light image enhancement. Comput. Graph. 2025, 127, 104182. [Google Scholar] [CrossRef]

- Wu, W.; Weng, J.; Zhang, P.; Wang, X.; Yang, W.; Jiang, J. Uretinex-net: Retinex-based deep unfolding network for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5901–5910. [Google Scholar]

- Zou, D.; Yang, B. Infrared and low-light visible image fusion based on hybrid multiscale decomposition and adaptive light adjustment. Opt. Lasers Eng. 2023, 160, 107268. [Google Scholar] [CrossRef]

- Xu, H.; Wang, M.; Chen, S. Multiscale luminance adjustment-guided fusion for the dehazing of underwater images. J. Electron. Imaging 2024, 33, 013007. [Google Scholar] [CrossRef]

- Prinzi, F.; Currieri, T.; Gaglio, S.; Vitabile, S. Shallow and deep learning classifiers in medical image analysis. Eur. Radiol. Exp. 2024, 8, 26. [Google Scholar] [CrossRef]

- Ganga, B.; Lata, B.; Venugopal, K. Object detection and crowd analysis using deep learning techniques: Comprehensive review and future directions. Neurocomputing 2024, 597, 127932. [Google Scholar] [CrossRef]

- Lore, K.G.; Akintayo, A.; Sarkar, S. LLNet: A deep autoencoder approach to natural low-light image enhancement. Pattern Recognit. 2017, 61, 650–662. [Google Scholar] [CrossRef]

- Fan, G.; Yao, Z.; Chen, G.Y.; Su, J.N.; Gan, M. IniRetinex: Rethinking Retinex-type Low-Light Image Enhancer via Initialization Perspective. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 2834–2842. [Google Scholar]

- Zhang, M.; Yin, J.; Zeng, P.; Shen, Y.; Lu, S.; Wang, X. TSCnet: A text-driven semantic-level controllable framework for customized low-light image enhancement. Neurocomputing 2025, 625, 129509. [Google Scholar] [CrossRef]

- Zheng, Y.; Zhan, J.; He, S.; Dong, J.; Du, Y. Curricular contrastive regularization for physics-aware single image dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 5785–5794. [Google Scholar]

- Jiang, Y.; Gong, X.; Liu, D.; Cheng, Y.; Fang, C.; Shen, X.; Yang, J.; Zhou, P.; Wang, Z. Enlightengan: Deep light enhancement without paired supervision. IEEE Trans. Image Process. 2021, 30, 2340–2349. [Google Scholar] [CrossRef]

- Yi, X.; Xu, H.; Zhang, H.; Tang, L.; Ma, J. Diff-Retinex++: Retinex-Driven Reinforced Diffusion Model for Low-Light Image Enhancement. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 1–18. [Google Scholar] [CrossRef] [PubMed]

- Wang, H.; Zhang, W.; Ren, P. Self-organized underwater image enhancement. ISPRS J. Photogramm. Remote Sens. 2024, 215, 1–14. [Google Scholar] [CrossRef]

- Zhang, W.; Zhou, L.; Zhuang, P.; Li, G.; Pan, X.; Zhao, W.; Li, C. Underwater image enhancement via weighted wavelet visual perception fusion. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 2469–2483. [Google Scholar] [CrossRef]

- Dhal, K.G.; Das, A.; Ray, S.; Gálvez, J.; Das, S. Histogram equalization variants as optimization problems: A review. Arch. Comput. Methods Eng. 2021, 28, 1471–1496. [Google Scholar] [CrossRef]

- Huang, Z.; Wang, Z.; Zhang, J.; Li, Q.; Shi, Y. Image enhancement with the preservation of brightness and structures by employing contrast limited dynamic quadri-histogram equalization. Optik 2021, 226, 165877. [Google Scholar] [CrossRef]

- Dyke, R.M.; Hormann, K. Histogram equalization using a selective filter. Vis. Comput. 2023, 39, 6221–6235. [Google Scholar] [CrossRef]

- Yuan, Q.; Dai, S. Adaptive histogram equalization with visual perception consistency. Inf. Sci. 2024, 668, 120525. [Google Scholar] [CrossRef]

- Samraj, D.; Ramasamy, K.; Krishnasamy, B. Enhancement and diagnosis of breast cancer in mammography images using histogram equalization and genetic algorithm. Multidimens. Syst. Signal Process. 2023, 34, 681–702. [Google Scholar] [CrossRef]

- Sule, O.O.; Ezugwu, A.E. A two-stage histogram equalization enhancement scheme for feature preservation in retinal fundus images. Biomed. Signal Process. Control 2023, 80, 104384. [Google Scholar] [CrossRef]

- Rivera-Aguilar, B.A.; Cuevas, E.; Pérez, M.; Camarena, O.; Rodríguez, A. A new histogram equalization technique for contrast enhancement of grayscale images using the differential evolution algorithm. Neural Comput. Appl. 2024, 36, 12029–12045. [Google Scholar] [CrossRef]

- Jobson, D.J.; Rahman, Z.u.; Woodell, G.A. A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 1997, 6, 965–976. [Google Scholar] [CrossRef] [PubMed]

- Rahman, Z.u.; Jobson, D.J.; Woodell, G.A. Retinex processing for automatic image enhancement. J. Electron. Imaging 2004, 13, 100–110. [Google Scholar] [CrossRef]

- Cai, R.; Chen, Z. Brain-like retinex: A biologically plausible retinex algorithm for low light image enhancement. Pattern Recognit. 2023, 136, 109195. [Google Scholar] [CrossRef]

- Jia, F.; Wong, H.S.; Wang, T.; Zeng, T. A reflectance re-weighted retinex model for non-uniform and low-light image enhancement. Pattern Recognit. 2023, 144, 109823. [Google Scholar] [CrossRef]

- Veluchamy, M.; Subramani, B. Detail preserving noise aware retinex model for low light image enhancement. J. Opt. 2025, 1–16. [Google Scholar] [CrossRef]

- Yang, W.; Gao, H.; Zou, W.; Liu, T.; Huang, S.; Ma, J. Low-Light Image Enhancement via Weighted Low-Rank Tensor Regularized Retinex Model. In Proceedings of the 2024 International Conference on Multimedia Retrieval, Phuket, Thailand, 10–14 June 2024; pp. 767–775. [Google Scholar]

- Jeon, J.J.; Park, J.Y.; Eom, I.K. Low-light image enhancement using gamma correction prior in mixed color spaces. Pattern Recognit. 2024, 146, 110001. [Google Scholar] [CrossRef]

- Zhang, W.; Liu, Q.; Feng, Y.; Cai, L.; Zhuang, P. Underwater Image Enhancement via Principal Component Fusion of Foreground and Background. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 10930–10943. [Google Scholar] [CrossRef]

- Wang, H.; Sun, S.; Chang, L.; Li, H.; Zhang, W.; Frery, A.C.; Ren, P. INSPIRATION: A reinforcement learning-based human visual perception-driven image enhancement paradigm for underwater scenes. Eng. Appl. Artif. Intell. 2024, 133, 108411. [Google Scholar] [CrossRef]

- Jiang, Q.; Mao, Y.; Cong, R.; Ren, W.; Huang, C.; Shao, F. Unsupervised Decomposition and Correction Network for Low-Light Image Enhancement. IEEE Trans. Intell. Transp. Syst. 2022, 23, 19440–19455. [Google Scholar] [CrossRef]

- Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep Retinex Decomposition for Low-Light Enhancement. In Proceedings of the British Machine Vision Conference, Newcastle, UK, 3–6 September 2018. [Google Scholar] [CrossRef]

- Zhang, Y.; Zhang, J.; Guo, X. Kindling the Darkness: A Practical Low-Light Image Enhancer. In Proceedings of the 27th ACM International Conference on Multimedia, MM ’19, Nice, France, 21–25 October 2019; pp. 1632–1640. [Google Scholar] [CrossRef]

- Zhu, A.; Zhang, L.; Shen, Y.; Ma, Y.; Zhao, S.; Zhou, Y. Zero-Shot Restoration of Underexposed Images via Robust Retinex Decomposition. In Proceedings of the 2020 IEEE International Conference on Multimedia and Expo (ICME), London, UK, 6–10 July 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Hai, J.; Xuan, Z.; Yang, R.; Hao, Y.; Zou, F.; Lin, F.; Han, S. R2rnet: Low-light image enhancement via real-low to real-normal network. J. Vis. Commun. Image Represent. 2023, 90, 103712. [Google Scholar] [CrossRef]

- Wu, H.; Wang, C.; Tu, L.; Patsch, C.; Jin, Z. CSPN: A Category-specific processing network for low-light image enhancement. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 11929–11941. [Google Scholar] [CrossRef]

- Lim, S.; Kim, W. DSLR: Deep stacked Laplacian restorer for low-light image enhancement. IEEE Trans. Multimed. 2020, 23, 4272–4284. [Google Scholar] [CrossRef]

- Guo, C.; Li, C.; Guo, J.; Loy, C.C.; Hou, J.; Kwong, S.; Cong, R. Zero-Reference Deep Curve Estimation for Low-Light Image Enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 1780–1789. [Google Scholar]

- Li, C.; Guo, C.; Loy, C.C. Learning to Enhance Low-Light Image via Zero-Reference Deep Curve Estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 4225–4238. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Y.; Di, X.; Zhang, B.; Wang, C. Self-Supervised Image Enhancement Network: Training with Low-Light Images Only. arXiv 2020, arXiv:2002.11300. [Google Scholar] [CrossRef]

- Cai, Y.; Bian, H.; Lin, J.; Wang, H.; Timofte, R.; Zhang, Y. Retinexformer: One-stage Retinex-based Transformer for Low-light Image Enhancement. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 12504–12513. [Google Scholar]

- Xu, L.; Hu, C.; Hu, Y.; Jing, X.; Cai, Z.; Lu, X. UPT-Flow: Multi-scale transformer-guided normalizing flow for low-light image enhancement. Pattern Recognit. 2025, 158, 111076. [Google Scholar] [CrossRef]

- Kou, K.; Yin, X.; Gao, X.; Nie, F.; Liu, J.; Zhang, G. Lightweight two-stage transformer for low-light image enhancement and object detection. Digit. Signal Process. 2024, 150, 104521. [Google Scholar] [CrossRef]

- Jiang, K.; Wang, Q.; An, Z.; Wang, Z.; Zhang, C.; Lin, C.W. Mutual Retinex: Combining Transformer and CNN for Image Enhancement. IEEE Trans. Emerg. Top. Comput. Intell. 2024, 8, 2240–2252. [Google Scholar] [CrossRef]

- Wang, H.; Yan, X.; Hou, X.; Li, J.; Dun, Y.; Zhang, K. Division gets better: Learning brightness-aware and detail-sensitive representations for low-light image enhancement. Knowl.-Based Syst. 2024, 299, 111958. [Google Scholar] [CrossRef]

- Nguyen, C.M.; Chan, E.R.; Bergman, A.W.; Wetzstein, G. Diffusion in the Dark: A Diffusion Model for Low-Light Text Recognition. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2024; pp. 4146–4157. [Google Scholar]

- Jiang, H.; Luo, A.; Liu, X.; Han, S.; Liu, S. Lightendiffusion: Unsupervised low-light image enhancement with latent-retinex diffusion models. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 161–179. [Google Scholar]

- Yan, Q.; Feng, Y.; Zhang, C.; Pang, G.; Shi, K.; Wu, P.; Dong, W.; Sun, J.; Zhang, Y. HVI: A New color space for Low-light Image Enhancement. arXiv 2025, arXiv:2502.20272. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar] [CrossRef]

- Bychkovsky, V.; Paris, S.; Chan, E.; Durand, F. Learning photographic global tonal adjustment with a database of input/output image pairs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011; pp. 97–104. [Google Scholar] [CrossRef]

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595. [Google Scholar]

- Ma, K.; Zeng, K.; Wang, Z. Perceptual Quality Assessment for Multi-Exposure Image Fusion. IEEE Trans. Image Process. 2015, 24, 3345–3356. [Google Scholar] [CrossRef] [PubMed]

- Wang, Q.; Fu, X.; Zhang, X.P.; Ding, X. A fusion-based method for single backlit image enhancement. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 4077–4081. [Google Scholar] [CrossRef]

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “Completely Blind” Image Quality Analyzer. IEEE Signal Process. Lett. 2013, 20, 209–212. [Google Scholar] [CrossRef]

- Ying, Z.; Li, G.; Gao, W. A bio-inspired multi-exposure fusion framework for low-light image enhancement. arXiv 2017, arXiv:1711.00591. [Google Scholar] [CrossRef]

- Loh, Y.P.; Chan, C.S. Getting to know low-light images with the Exclusively Dark dataset. Comput. Vis. Image Underst. 2019, 178, 30–42. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).