Abstract

In recent times, there has been a notable surge in multimodal approaches that decorate raw LiDAR point clouds with camera-derived features to improve object detection performance. However, we found that these methods still grapple with the inherent sparsity of LiDAR point cloud data, primarily because fewer points are enriched with camera-derived features for sparsely distributed objects. To tackle this issue and facilitate the detection of sparsely distributed objects, particularly those that are occluded or distant, we present an approach that generates virtual LiDAR points from camera images and enhances these virtual points with semantic labels obtained from image-based segmentation networks. Furthermore, we integrate a distance-aware data augmentation (DADA) technique that generates specialized training samples to improve the model’s ability to recognize these sparsely distributed objects. Our approach is a versatile solution that can be integrated with various 3D frameworks and 2D semantic segmentation methods, and it significantly improves overall detection accuracy. Evaluation on the KITTI and nuScenes datasets demonstrates substantial gains on both 3D and bird’s eye view (BEV) detection benchmarks.

1. Introduction

3D object detection plays a pivotal role in enhancing scene understanding for safe autonomous driving. In recent years, a large number of 3D object detection techniques have been proposed [1,2,3,4,5,6,7]. These algorithms primarily leverage information from LiDAR and camera sensors to perceive their surroundings. LiDAR provides low-resolution shape and depth information [8], while cameras capture dense images rich in color and texture. Although multimodal 3D object detection has advanced significantly in recent years, its performance still deteriorates notably on sparse point cloud data. Painting-based methods such as PointPainting [6] have recently gained popularity as an attempt to address this issue; they decorate the LiDAR points with camera features. However, a fundamental challenge persists for objects that lack corresponding points, such as distant and occluded objects: even when camera features exist for such objects, there are no LiDAR points to carry them. As a result, although these methods improve overall 3D detection performance, they still contend with sparse point clouds, as illustrated in Figure 1.

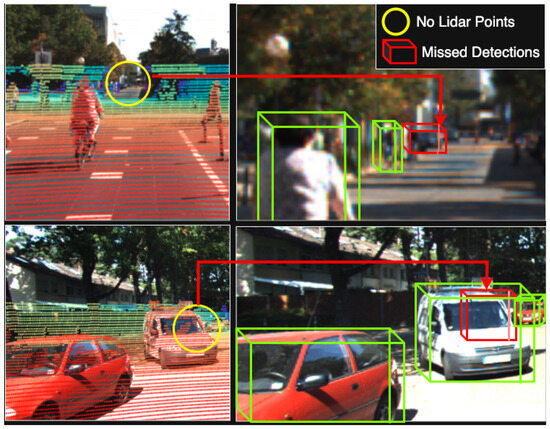

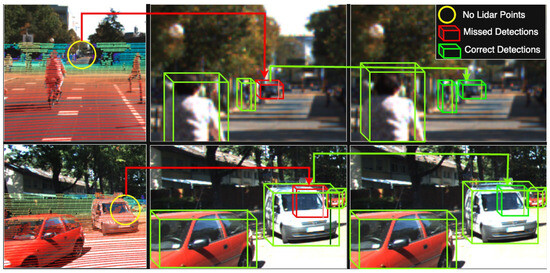

Figure 1.

Drawback of painting-based methods [6]. The yellow circle serves as an indicator of the absence of point clouds in image projection, while the red bounding box signifies the consequent detection failure resulting from the sparse point cloud. Despite the presence of certain semantic cues for the object within the yellow circle, the absence of LiDAR points prevents the integration of these semantic cues with LiDAR data.

In the context of autonomous driving, remote objects refer to those located beyond 30–50 m from the ego vehicle, often resulting in very few or no LiDAR points due to sensor limitations. Occluded objects are partially or fully blocked by other objects, such as parked vehicles or pedestrians behind large structures. These scenarios are common and pose critical challenges for object detection systems. Our goal is to enhance detection accuracy under these conditions by enriching sparse LiDAR inputs with semantically meaningful virtual points derived from camera images. In Figure 1, the yellow circle highlights a pedestrian that is occluded in the LiDAR view, resulting in the absence of 3D point cloud data at that location. The red bounding box shows a failure in detection caused by this sparsity, even though semantic information exists in the camera image. This illustrates a common failure case where conventional LiDAR-only or even painted-point approaches fall short due to the lack of geometric support.

Risk anticipation and planning are one practical application of our model. In autonomous driving systems, close-range detection is crucial for immediate response, but early recognition of distant and occluded objects also plays a vital role in risk anticipation and planning, particularly at high speeds (e.g., highway scenarios) or at occluded urban intersections. For instance, recognizing a pedestrian or cyclist partially visible behind a parked vehicle from a distance allows an autonomous system to adjust its speed or trajectory proactively rather than react late. Thus, detecting sparse and remote objects is essential for both proactive safety and smoother navigation [7]. To counter the inherent sparsity of LiDAR data, several methods generate pseudo or virtual points that supplement the sparse point cloud around the existing LiDAR points. For example, Multimodal Virtual Point (MVP) [9] generates virtual points by completing the depth of 2D instance points using the nearest 3D points. Similarly, Sparse Fuse Dense (SFD) [10] creates virtual points with a depth completion network [11]. These virtual points enhance the geometric representation of distant objects and can significantly improve the performance of 3D detection methods. However, current implementations have yet to fully exploit the benefit of combining semantic results from segmentation networks with virtual points. Incorporating semantic information into the augmented point cloud, which includes both the original and virtual points, not only enriches the data but also increases the model’s overall robustness [6].

Most recent fusion-based methods concentrate on the stage at which fusion happens: early fusion, which combines LiDAR and camera data at the input stage; deep fusion, which combines camera and LiDAR features inside the network; and late fusion, which combines the output candidates or results of separate LiDAR and camera detection frameworks. However, little attention is paid to the quality of the training data, a crucial aspect of any detection framework. In particular, many fusion-based methods lack sufficient sparse training samples for sparsely distributed objects such as occluded and distant objects. Without such samples, the trained model is fragile and fails to detect distant objects effectively at test time. Moreover, fusion-based methods face the challenge of inadequate data augmentation. The inherent disparities between 2D image data and 3D LiDAR data make it difficult to adapt several augmentation techniques that are effective for LiDAR-only models, since augmentations such as pasting objects into the point cloud or rotating the whole scene have no consistent counterpart in the image. This limitation is a significant barrier and a primary factor behind the generally lower performance of multimodal methods compared with their single-modal counterparts [12].

To address these issues, we propose a simple yet effective framework called “VirtualPainting”. It tackles the sparsity of LiDAR points by generating virtual points with the depth completion network PENet [11]. Specifically, we first generate supplementary virtual points and merge them with the original points, yielding an augmented LiDAR point cloud. This augmented point cloud then undergoes a “painting” process using camera-derived features, namely per-pixel semantic class scores: each augmented point is concatenated with the class scores of the pixel it projects to, producing a feature-rich point cloud. The result is twofold: the point cloud becomes denser, and camera features are combined with it seamlessly. Because the virtual points generated by the depth completion network are now linked with camera features, the data representation becomes more complete. In previous painting methods, camera features without corresponding LiDAR points remained unused. Although high-resolution cameras provide rich semantic information, they lack the precise depth data that is critical for accurate 3D localization and object boundary estimation [7]. LiDAR complements this by offering geometrically accurate range information. Our method leverages both modalities and particularly benefits cases like the one shown in Figure 1, where high-resolution semantic cues exist but corresponding LiDAR depth is missing. Without LiDAR depth, such objects are semantically identifiable but cannot be localized in 3D space, which is essential for autonomous navigation.

Additionally, we address the shortage of training samples for distant objects and the lack of adequate data augmentation by integrating distance-aware data augmentation (DADA). In this approach, we deliberately generate sparse training samples from objects that are originally observed densely by applying a distance offset. Because real-world scenes frequently contain incomplete data due to occlusion, we also randomly remove portions of the points to simulate occlusion. Training with these samples makes our model more robust at test time, especially for sparsely distributed objects such as occluded or distant ones, which are frequently missed. Typical examples of objects with sparse point coverage include pedestrians stepping out from behind vehicles, cyclists approaching from a distance, and vehicles at intersections more than 50 m away. Our method improves detection accuracy in these cases by generating virtual points where LiDAR returns are missing and enriching them with semantic cues. In brief, our contributions are summarized as follows:

- An innovative approach that augments virtual points, obtained through the joint use of LiDAR and camera data, with semantic labels derived from image-based semantic segmentation.

- Integration of a distance-aware data augmentation method that improves the model’s ability to identify sparse and occluded objects by creating dedicated training samples.

- A generic method that can incorporate any 3D detection framework and any 2D semantic segmentation network to improve overall detection accuracy.

- Evaluation on the KITTI and nuScenes datasets shows major improvement in both 3D and BEV detection benchmarks, especially for distant objects.

2. Related Work

2.1. Single Modality

Existing LiDAR-based methods can be categorized into four main groups based on their data representation: point-based, grid-based, point-voxel-based, and range-based. PointNet [13] and PointNet++ [14] are early pioneering works that apply neural networks directly to point clouds. PointRCNN [15] introduced a novel approach that generates 3D proposals directly from raw point clouds. VoxelNet [1] introduced the voxel feature encoding (VFE) layer to learn unified feature representations for 3D voxels. Building upon VoxelNet, CenterPoint [16] devised an anchor-free, center-based framework derived from CenterNet [17] and achieved state-of-the-art performance. SECOND [2] used sparse convolution [18] to reduce the computational cost of 3D convolution. PV-RCNN [19] bridged the gap between voxel-based and point-based methods to extract more discriminative features. Voxel-RCNN [3] showed that precise positioning of raw points might not be necessary, which contributes to the efficiency of 3D object detection. PointPillars [12] extracted features from vertical columns (pillars) using PointNet [13]. Despite these advancements, all these approaches share a common challenge: the inherent sparsity of LiDAR point cloud data, which limits their detection performance.

2.2. Fusion-Based

The inherent sparsity of LiDAR point cloud data has sparked research interest in multimodal fusion-based methods. MV3D [4] and AVOD [20] create a multi-channel bird’s eye view (BEV) image by projecting the raw point cloud into BEV. AVOD [20] takes both LiDAR point clouds and RGB images as input to generate features shared by the Region Proposal Network (RPN) and the refinement network. MMF [21] benefits from multi-task learning and multi-sensor fusion, 3D-CVF [5] fuses features from multi-view images, and Sniffer Faster R-CNN [22] combines and refines the 2D and 3D proposals together at the final stage of detection. CLOCs [23] and Sniffer Faster R-CNN++ [24] go one step further and refine the confidences of 3D candidates using 2D candidates in a learnable manner. These methods are limited in how much image information they can use because of the sparse correspondences between images and point clouds. Fusion-based methods also face a second challenge: a lack of sufficient data augmentation. In this paper, we address both issues by exploiting virtual points and data augmentation techniques.

2.3. Point Decoration Fusion

Recent developments in fusion-based methods have paved the way for point decoration fusion methods, which enhance LiDAR points with camera features. PointPainting [6], for instance, augments each LiDAR point with the semantic scores derived from camera images. PointAugmenting [7] acknowledges the limitations of semantic scores and instead enhances LiDAR points with deep features extracted from a 2D object detector. FusionPainting [25] goes a step further by using both 2D and 3D semantic networks to extract additional features from camera images and the LiDAR point cloud. Nevertheless, these methods also grapple with the sparse nature of LiDAR point cloud data, as illustrated in Figure 1. Although PointAugmenting applies data augmentation techniques, it still lacks sparse training samples for sparse objects, which leads to detection failures.

3. VirtualPainting

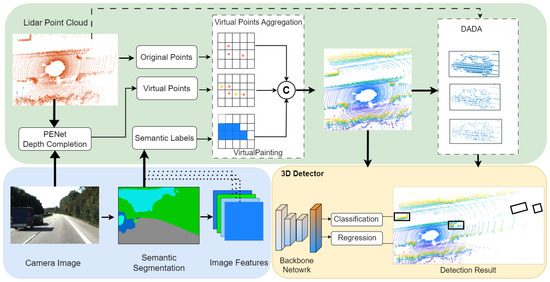

We provide an overview of the proposed “VirtualPainting” framework in Figure 2. It addresses the sparsity of LiDAR point clouds and the lack of training samples for sparse objects by introducing multiple enhancement modules. These include semantic segmentation, depth completion, virtual point painting, and distance-aware data augmentation, ultimately feeding into a 3D object detection network. For details on each stage, please refer to Figure 2. Each component plays a specific role in improving the quality and density of point cloud data. Together, they contribute to more reliable detection of distant and occluded objects in challenging driving scenarios.

Figure 2.

Overview of our VirtualPainting architecture, which comprises five stages: (1) a 2D semantic segmentation module computes pixel-wise segmentation scores; (2) an image-based depth completion network, PENet, generates the virtual LiDAR point cloud; (3) the VirtualPainting step paints both the virtual and the original LiDAR points with the semantic segmentation scores; (4) the distance-aware data augmentation (DADA) component applies a distance-aware sampling strategy that creates sparse training samples primarily from nearby dense objects; and (5) a 3D detector produces the final detection results.

3.1. Image-Based Semantic Network

Two-dimensional images captured by cameras are rich in texture, shape, and color information, which complements point clouds and ultimately enhances 3D detection. To leverage this synergy, we employ a semantic segmentation network to generate pixel-wise semantic labels. The network takes multi-view images as input and outputs pixel-wise classification labels for both foreground instances and the background. Our architecture is flexible and can incorporate various semantic segmentation networks to generate these labels. For our KITTI experiments, we use BiSeNetV2 [26], pretrained on the Cityscapes [27] dataset and subsequently fine-tuned on KITTI using PyTorch 2.0 [28]. For simplicity of implementation, we ignore the cyclist and bike classes, because the definition of a cyclist differs between the KITTI semantic segmentation and object detection tasks: in object detection, a cyclist is the combination of the rider and the bike, whereas in semantic segmentation, a cyclist is only the rider, and the bike is a separate class. For nuScenes, we trained a custom network on the nuImages dataset, which comprises 100,000 images with 2D bounding boxes and segmentation labels for all nuScenes classes. This segmentation network uses a ResNet backbone to extract features at strides ranging from 8 to 64 and an FCN segmentation head to predict nuScenes segmentation scores.
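The painting step needs, for every pixel, a vector of C class scores. The NumPy sketch below shows one way to obtain such a score map from raw segmentation logits; it assumes the scores are softmax probabilities and that several segmentation classes may be merged into one painting class by summing their probabilities. The function name and the `class_map` argument are hypothetical and not part of BiSeNetV2 or the authors' code. The map is stored rows-first as (H, W, C) rather than W × H × C.

```python
import numpy as np


def pixel_class_scores(logits, class_map, num_paint_classes):
    """Turn segmentation logits of shape (K, H, W) into an (H, W, C) score map.

    class_map is a hypothetical length-K sequence that assigns each of the K
    segmentation classes to one of the C painting classes.
    """
    # Numerically stable softmax over the class axis.
    shifted = logits - logits.max(axis=0, keepdims=True)
    probs = np.exp(shifted)
    probs /= probs.sum(axis=0, keepdims=True)
    # Sum probabilities of segmentation classes that share a painting class,
    # so every pixel still carries a distribution that sums to one.
    num_seg_classes, height, width = logits.shape
    scores = np.zeros((height, width, num_paint_classes))
    for k in range(num_seg_classes):
        scores[..., class_map[k]] += probs[k]
    return scores


# Example: 6 segmentation classes collapsed into 4 painting classes.
logits = np.random.default_rng(0).normal(size=(6, 4, 5))
score_map = pixel_class_scores(logits, [0, 0, 1, 2, 3, 3], 4)
print(score_map.shape)  # (4, 5, 4)
```

Summing probabilities, rather than taking a maximum, keeps each pixel's scores a valid distribution after classes are merged.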

3.2. PENet for Virtual Point Generation

The geometry of nearby objects in LiDAR scans is often relatively complete, whereas that of distant objects is not. Data augmentation is a further challenge because of the inherent disparities between 2D image data and 3D LiDAR data: several augmentation techniques that work well with 3D LiDAR data are difficult to apply in multimodal approaches, which contributes significantly to the usual underperformance of multimodal methods relative to their single-modal counterparts. To address these issues, we employ PENet to transform 2D images into 3D virtual point clouds. This transformation unifies the representations of images and raw point clouds, allowing us to handle images much like raw point cloud data. We align the virtual points generated by the depth completion network with the original points to create an augmented point cloud. This enhances the geometric information of sparse objects and establishes a unified representation of images and point clouds. Like SFD, our approach relies on the virtual points produced by PENet. PENet is trained exclusively on the KITTI depth completion dataset, which provides color images and aligned sparse depth maps created by projecting 3D LiDAR points onto the corresponding image frames. The dataset comprises 86,000 frames for training, 7000 frames for validation, and 1000 frames for testing; our PENet is trained on the training set. The images are standardized to a resolution of 1216 × 352. A sparse depth map typically contains approximately 5% valid pixels, whereas the ground-truth dense depth maps cover approximately 16% of pixels [11].
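The sparse depth maps described above come from projecting LiDAR points into the image. A minimal NumPy sketch of that projection follows, assuming a 4 × 4 LiDAR-to-camera transform `T` (with any rectification folded in) and a 3 × 4 projection matrix `P`; the function name is hypothetical, and keeping the nearest depth when several points hit one pixel is our assumption, not a detail taken from PENet.

```python
import numpy as np


def sparse_depth_map(points_xyz, T, P, height, width):
    """Project (N, 3) LiDAR points into an image to build a sparse depth map.

    Pixels without a LiDAR return stay 0; when several points fall into the
    same pixel, the smallest depth is kept.
    """
    homo = np.hstack([points_xyz, np.ones((len(points_xyz), 1))])  # (N, 4)
    cam = (T @ homo.T).T                                            # camera frame
    cam = cam[cam[:, 2] > 0]                                        # drop points behind the camera
    uvw = (P @ cam.T).T                                             # (N', 3)
    u = np.floor(uvw[:, 0] / uvw[:, 2]).astype(int)                 # column
    v = np.floor(uvw[:, 1] / uvw[:, 2]).astype(int)                 # row
    depth = cam[:, 2]
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    depth_map = np.full((height, width), np.inf)
    # Unbuffered minimum: correct even when several points share one pixel.
    np.minimum.at(depth_map, (v[inside], u[inside]), depth[inside])
    depth_map[np.isinf(depth_map)] = 0.0
    return depth_map


# Toy pinhole camera (focal 100 px, principal point (50, 25)), LiDAR frame = camera frame.
P = np.array([[100.0, 0, 50, 0], [0, 100.0, 25, 0], [0, 0, 1, 0]])
pts = np.array([[0.0, 0, 10], [0.0, 0, 5], [0.0, 0, -3], [100.0, 0, 1]])
dm = sparse_depth_map(pts, np.eye(4), P, height=50, width=100)
print(np.count_nonzero(dm), dm[25, 50])  # 1 5.0
```

The fraction of valid pixels, `(dm > 0).mean()`, is the statistic behind the roughly 5% coverage quoted above.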

3.3. Painting Virtual Points

Current LiDAR point cloud painting methods do not exploit the benefit of associating semantic results from the segmentation network with virtual points. Incorporating semantic information into the augmented point cloud, which contains both the original and the virtual points, not only enriches the data but also enhances the model’s robustness. We refer to the original points from the LiDAR scan as the “raw point cloud”, denoted by R, and to the points generated by the depth completion network as “virtual points”, denoted by V. Starting from the raw point cloud R, we transform it into a sparse depth map S using the known LiDAR-to-image projection. Given the image I corresponding to R, we feed I and S into the depth completion network to obtain a dense depth map D. Applying the known inverse (image-to-LiDAR) projection to D then yields the set of virtual points V.
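The last step, turning the dense depth map D back into virtual points V, inverts the projection pixel by pixel. The NumPy sketch below assumes a projection matrix of the form P = K[I | 0] (zero fourth column, so the intrinsics are `P[:, :3]`), pixel-centre sampling, and the same 4 × 4 LiDAR-to-camera transform `T` as the forward projection; the function name is hypothetical.

```python
import numpy as np


def depth_to_virtual_points(depth_map, P, T):
    """Back-project every pixel with positive depth into LiDAR coordinates.

    Assumes P = K [I | 0], so K = P[:, :3]; T is the 4x4 LiDAR-to-camera
    transform used for the forward projection. Returns an (M, 3) array.
    """
    K = P[:, :3]
    v, u = np.nonzero(depth_map > 0)          # rows, columns of valid pixels
    z = depth_map[v, u]
    # Pixel centres in homogeneous image coordinates, scaled by depth.
    pix = np.stack([(u + 0.5) * z, (v + 0.5) * z, z])     # (3, M)
    cam = np.linalg.solve(K, pix)                         # camera frame
    cam_h = np.vstack([cam, np.ones((1, cam.shape[1]))])  # (4, M)
    return np.linalg.solve(T, cam_h)[:3].T                # LiDAR frame


P = np.array([[100.0, 0, 50, 0], [0, 100.0, 25, 0], [0, 0, 1, 0]])
D = np.zeros((50, 100))
D[25, 50] = 10.0
V = depth_to_virtual_points(D, P, np.eye(4))
print(V)  # one point at approximately (0.05, 0.05, 10.0)
```

Every pixel of a completed depth map yields one point, which is why the virtual cloud is far denser than the raw scan.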

Our VirtualPainting algorithm comprises three main stages. First, we align the virtual points obtained from the depth completion network with the original raw LiDAR points, generating an augmented LiDAR point cloud A with N points. Second, as described above, the segmentation network produces C class scores per pixel. For KITTI [29], C equals 4 (car, pedestrian, cyclist, and background), while for nuScenes [30], C is 11 (10 detection classes plus background). Finally, the augmented LiDAR points are projected onto the image, and the segmentation scores at the corresponding pixel coordinates (h, w) are appended to each augmented point, producing a painted LiDAR point. This projection consists of a homogeneous transformation followed by a camera projection, as given in Algorithm 1, which outlines the process of generating painted augmented LiDAR points. Its inputs and operations are as follows:

- $L \in \mathbb{R}^{N_L \times D}$ denotes the original raw LiDAR point cloud, where $N_L$ is the number of raw points and $D \geq 3$ is the per-point dimension (e.g., the spatial coordinates $x, y, z$).

- $V \in \mathbb{R}^{N_V \times D}$ represents the virtual points generated by the depth completion network. These are spatially aligned with the original LiDAR points.

- The augmented point cloud $A$ is obtained by combining the raw and virtual points, i.e., $A = L \cup V$, where $A \in \mathbb{R}^{N \times D}$ and $N = N_L + N_V$.

- $S \in \mathbb{R}^{W \times H \times C}$ is the semantic segmentation score map, where W and H are the width and height of the image, and C is the number of semantic classes.

- $T \in \mathbb{R}^{4 \times 4}$ is the homogeneous transformation matrix used to transform 3D points into the camera coordinate frame.

- $M \in \mathbb{R}^{3 \times 4}$ is the camera projection matrix used to map 3D coordinates to 2D image coordinates.

The output is $P \in \mathbb{R}^{N \times (D + C)}$, the painted augmented point cloud containing both spatial and semantic features.

The algorithm iterates over each point $a \in A$:

1. The 3D point $a_{xyz}$ is projected onto the image plane to obtain 2D coordinates $a_{img}$ using the ‘PROJECT’ function, which applies the transformation T followed by projection through M.

2. The semantic class score vector $s$ is retrieved from S at the pixel coordinates $a_{img}$, i.e., $s = S[a_{img}[0], a_{img}[1], :]$.

3. The final painted point $p$ is generated by concatenating the point $a$ with its corresponding class score vector, i.e., $p = \mathrm{Concatenate}(a, s)$.

This painted representation enriches the spatial LiDAR data with semantic context from the image, enabling better detection of sparse or distant objects.

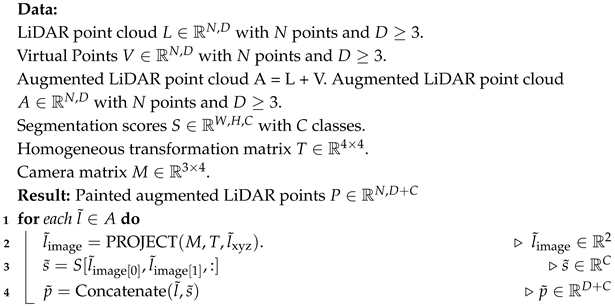

Algorithm 1: VirtualPainting (L, V, A, S, T, M).
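Algorithm 1 visits the points one at a time; the same three steps can be written in vectorized form. The NumPy sketch below is an illustration, not the authors' implementation. It assumes that raw and virtual points share the same column layout (x, y, z first, e.g., with a placeholder intensity for virtual points), that the score map is stored rows-first as (H, W, C), and that points projecting outside the image are discarded rather than padded with zeros. The function name is hypothetical.

```python
import numpy as np


def virtual_painting(raw_points, virtual_points, scores, T, M):
    """Vectorized sketch of Algorithm 1: paint A = L ∪ V with class scores.

    raw_points: (N_L, D), virtual_points: (N_V, D); columns 0-2 are x, y, z.
    scores: (H, W, C) per-pixel class scores indexed as scores[row, col].
    T: (4, 4) LiDAR-to-camera transform; M: (3, 4) camera matrix.
    Returns an (N', D + C) array of painted points inside the image.
    """
    A = np.vstack([raw_points, virtual_points])            # augmented cloud
    xyz1 = np.hstack([A[:, :3], np.ones((len(A), 1))])     # homogeneous coords
    uvw = (M @ (T @ xyz1.T)).T                             # PROJECT(M, T, a_xyz)
    in_front = uvw[:, 2] > 0
    A, uvw = A[in_front], uvw[in_front]
    u = np.floor(uvw[:, 0] / uvw[:, 2]).astype(int)        # image column
    v = np.floor(uvw[:, 1] / uvw[:, 2]).astype(int)        # image row
    H, W, _ = scores.shape
    inside = (u >= 0) & (u < W) & (v >= 0) & (v < H)
    s = scores[v[inside], u[inside]]                       # (N', C) score vectors
    return np.hstack([A[inside], s])                       # Concatenate(a, s)


M = np.array([[100.0, 0, 50, 0], [0, 100.0, 25, 0], [0, 0, 1, 0]])
S = np.zeros((50, 100, 4))
S[25, 50] = [0.1, 0.2, 0.3, 0.4]
raw = np.array([[0.0, 0.0, 10.0, 0.5]])
virt = np.array([[0.0, 0.0, 5.0, 0.0], [0.0, 0.0, -1.0, 0.0]])
painted = virtual_painting(raw, virt, S, np.eye(4), M)
print(painted.shape)  # (2, 8): D = 4 point features + C = 4 scores
```

The virtual point behind the camera is dropped, and both remaining points pick up the scores of the pixel they land on.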

3.4. Distance-Aware Data Augmentation

As mentioned earlier, the lack of complete geometric information for sparse objects can significantly hamper detection performance. To overcome this challenge, we aim to improve the model’s understanding of the geometry of sparsely observed distant objects by generating training samples from densely observed nearby objects. Established methods such as random sampling or farthest point sampling often produce an uneven distribution pattern that does not match a real LiDAR scan. We therefore adopt a sampling strategy [31] that accounts for both the LiDAR scanning mechanism and scene occlusion. Given a nearby ground-truth box and its inside points, we apply a random distance offset that moves the box and its points farther from the sensor. We then convert the points into a spherical coordinate system and voxelize them into spherical voxels that match the LiDAR’s angular resolution. Within each voxel, we compute the distances between the points; if they lie closer together than a predefined threshold, we replace them with their average. The resulting sampled points closely mimic the distribution pattern of real scanned points, as depicted in Figure 2. During training, similar to the GT-AUG approach [15], we insert these sampled points and their bounding boxes into the training samples as data augmentation. This technique helps address the shortage of training samples for distant objects. Additionally, we randomly remove portions of dense LiDAR points to simulate occlusion, which helps address the scarcity of occluded samples during training.
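A minimal NumPy sketch of the distance-aware sampling and random occlusion described above follows. The exact offset formula, angular resolutions, and threshold of [31] are not given here, so every choice below is an assumption: the points are shifted radially away from a sensor at the origin, the spherical grid uses illustrative resolutions of 0.2° (azimuth) and 0.4° (elevation), points in one voxel are averaged when their largest pairwise distance is under 0.5 m (otherwise kept unchanged), and occlusion is simulated by dropping a random azimuth sector. All function names are hypothetical.

```python
import numpy as np


def shift_outward(points, center, offset):
    """Move a box centre and its inside points `offset` metres away from the
    sensor (assumed at the origin) along the sensor-to-centre direction."""
    direction = center / np.linalg.norm(center)
    return points + offset * direction, center + offset * direction


def spherical_resample(points, az_res_deg=0.2, el_res_deg=0.4, threshold=0.5):
    """Group points into (azimuth, elevation) voxels and merge close points.

    Resolutions and threshold are illustrative values, not the paper's settings.
    Assumes no point lies exactly at the sensor origin.
    """
    r = np.linalg.norm(points, axis=1)
    az = np.degrees(np.arctan2(points[:, 1], points[:, 0]))
    el = np.degrees(np.arcsin(points[:, 2] / r))
    keys = zip(np.floor(az / az_res_deg).astype(int),
               np.floor(el / el_res_deg).astype(int))
    voxels = {}
    for idx, key in enumerate(keys):
        voxels.setdefault(key, []).append(idx)
    out = []
    for members in voxels.values():
        group = points[members]
        spread = np.linalg.norm(group[:, None, :] - group[None, :, :], axis=-1).max()
        if spread < threshold:
            out.append(group.mean(axis=0))  # one return per angular cell
        else:
            out.extend(group)               # points genuinely apart are kept
    return np.array(out)


def random_occlusion(points, drop_fraction, rng):
    """Drop the points in a random azimuth sector covering `drop_fraction` of
    the object's azimuth span (assumes no wrap-around at +/-180 degrees)."""
    az = np.arctan2(points[:, 1], points[:, 0])
    lo, hi = az.min(), az.max()
    width = drop_fraction * (hi - lo)
    start = rng.uniform(lo, hi - width)
    keep = (az < start) | (az > start + width)
    return points[keep]


# A dense 1 m x 1 m patch observed at 5 m, moved out to 45 m.
ys, zs = np.meshgrid(np.linspace(-0.5, 0.5, 51), np.linspace(-0.5, 0.5, 51))
near = np.stack([np.full(ys.size, 5.0), ys.ravel(), zs.ravel()], axis=1)
far, far_center = shift_outward(near, np.array([5.0, 0.0, 0.0]), 40.0)
sparse = spherical_resample(far)
occluded = random_occlusion(far, 0.3, np.random.default_rng(0))
print(len(near), len(sparse))
```

With these illustrative resolutions, the 2601 points of the patch collapse into a few dozen after the shift, which is the kind of sparse sample the augmentation is meant to produce.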
In the last phase of our architecture, the 3D detector receives the painted augmented point cloud as input. Because the backbones and other architectural components are unchanged, the painted point cloud can be fed directly to any 3D detector, such as PointRCNN, VoxelNet, PV-RCNN, or PointPillars, to obtain the final detection results. For our main experimental evaluation, we primarily used PV-RCNN because of its strong baseline performance on KITTI and nuScenes; we also tested PointPillars and PointRCNN to demonstrate the generalizability of our approach.

3.5. LiDAR Network Details

For KITTI, we use the OpenPCDet [32] toolbox with 3D detectors such as PV-RCNN, PointPillars, PointRCNN, and VoxelNet, adjusting only the point cloud feature dimension. To accommodate the segmentation scores, we expand the per-point dimension by the total number of classes used in the segmentation network. Since the architecture is otherwise unchanged, our method remains generic and can be applied to any 3D detector without complex configuration changes. For nuScenes, we use an enhanced version of PointPillars, as detailed in [6]. These enhancements alter the pillar resolution, network architecture, and data augmentation. First, we reduce the pillar resolution from 0.25 m to 0.2 m to improve the localization of small objects. Second, we revise the network architecture to include additional layers earlier in the network. Lastly, we adjust the global yaw augmentation range following [6].
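Expanding the input dimension is simple bookkeeping: each point grows from D to D + C channels. The plain-Python helper below is hypothetical; it assumes KITTI points carry x, y, z, and intensity (D = 4) and uses the C = 4 classes listed in Section 3.3. How the resulting list is wired into an OpenPCDet dataset config depends on the toolbox version and is not shown.

```python
def painted_feature_list(base_features, class_names):
    """Per-point feature names after painting: D raw features plus C scores."""
    return list(base_features) + [f"score_{name}" for name in class_names]


# KITTI example (assumed layout): x, y, z, intensity plus C = 4 class scores.
kitti_features = painted_feature_list(
    ["x", "y", "z", "intensity"],
    ["car", "pedestrian", "cyclist", "background"],
)
print(len(kitti_features))  # 8
```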

4. Experimental Setup and Results

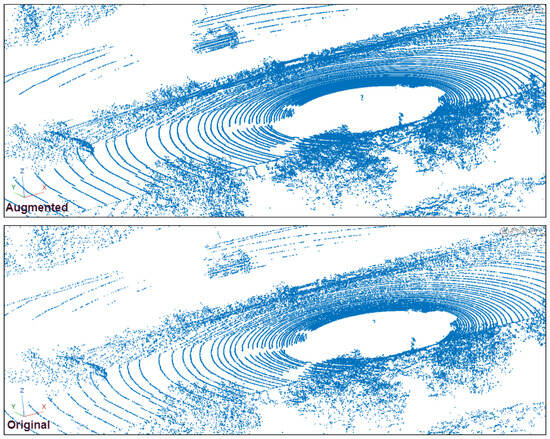

We evaluate the effectiveness of our proposed VirtualPainting framework using two standard large-scale 3D object detection datasets: KITTI and nuScenes. The KITTI dataset comprises approximately 7500 training and 7500 testing frames, featuring objects such as cars, pedestrians, and cyclists. Detection difficulty is categorized into easy, moderate, and hard, based on object occlusion, truncation, and distance. The dataset exhibits a long-tail distribution with many pedestrians, cars and cyclists located beyond 30 m, contributing to severe point sparsity in LiDAR scans. Each sample includes front-view RGB images and Velodyne 3D point clouds, with accurate calibration between modalities. The nuScenes dataset includes 1000 scenes (20 s each) captured at 2 Hz across urban environments, and comprises over 1.4M annotated 3D bounding boxes across 10 classes including car, truck, pedestrian, bicycle, and motorcycle. It contains data from six cameras, one LiDAR, and five radars, offering full 360-degree coverage (see Figure 3). Its densely annotated frames, complex city scenes, and large class imbalance introduce challenging cases of sparsity and occlusion, making it ideal for evaluating robust multimodal methods like ours. While Table 1, Table 2 and Table 3 present our method’s quantitative improvements, we also include qualitative visualizations in Figure 4. These examples highlight successful detections of occluded or distant objects by our VirtualPainting-based models that were missed by baseline approaches, demonstrating our method’s robustness in sparse detection scenarios.

Figure 3.

Illustration of the augmented and original LiDAR point clouds. The augmented point cloud is slightly denser than the original because it combines the raw LiDAR points with the virtual points generated by PENet.

Table 1.

Results on the KITTI test BEV detection benchmark. L&I denotes LiDAR and Image input, i.e., multimodal models. “Improvement” refers to the increase in Average Precision (AP) of our VirtualPainting-based multimodal models relative to their corresponding LiDAR-only baselines. Performance gains are most evident for pedestrians and cyclists, particularly at longer distances where sparse LiDAR points often lead to missed detections. ↑ denotes additional improvement over baseline methods.

Table 2.

Comparison of PointPainting-based models with our VirtualPainting-based models.

Table 3.

Comparisons of performance on the nuScenes test set, with reported metrics including NDS, mAP, and class-specific AP.

Figure 4.

Qualitative comparison of object detection results. The baseline method fails to detect the distant object highlighted in the scene, while our proposed VirtualPainting approach successfully identifies it, demonstrating improved robustness for sparse and occluded objects.

4.1. KITTI Results

We first assess our model on the KITTI dataset and compare it with current state-of-the-art methods. Table 1 reports the results on the KITTI test BEV detection benchmark. Our approach substantially improves mean average precision (mAP) over both single-modal and multimodal baselines such as AVOD, MV3D, PointRCNN, PointPillars, and PV-RCNN. The improvement is most prominent for the pedestrian and cyclist categories, which are harder to detect, especially when objects are sparse. Within these categories, the moderate and hard difficulty levels improve more than the easy level, as shown in Table 1. For PointRCNN, for example, our approach yields gains of +4.09 and +3.79 for pedestrians and +4.52 and +2.89 for cyclists at the moderate and hard difficulty levels, respectively. This trend is consistent across the other models. Table 2 compares our method with PointPainting-based approaches; although the mAP gains are smaller there, the improvements are still notable.

4.2. nuScenes Results

We also evaluate our model on the nuScenes dataset, as shown in Table 3. Our approach outperforms the single-modal PointPillars-based model in all categories. Compared with the enhanced variant PaintedPointPillars, which applies PointPainting to PointPillars, our method also improves the nuScenes Detection Score (NDS) and Average Precision (AP) across all ten classes in the nuScenes dataset. As in the KITTI results, the improvement is most noticeable for classes such as pedestrian, bicycle, and motorcycle, which are more likely to go undetected in occluded and distant sparse regions.

5. Ablation Studies

5.1. VirtualPainting Is a Generic and Flexible Method

As shown in Table 1 and Table 2, we assess the generality of our approach by integrating it with established 3D detection frameworks. We carry out three sets of comparisons, each pairing a single-modal method with its multimodal counterpart. The three LiDAR-only models are PointPillars, PointRCNN, and PV-RCNN. As Table 2 shows, VirtualPainting consistently improves all single-modal detection baselines, which suggests that it is generally applicable and could be extended to other 3D object detection frameworks. Likewise, Table 4 and Table 5 show that components such as virtual points and semantic segmentation can be incorporated into the architecture without intricate modifications, highlighting the flexibility of our approach. We also evaluated the inference speed of our method on an NVIDIA RTX 3090 GPU. Although its inference time is higher than that of the original PointPainting method, it remains faster than other single-modality methods, as indicated in Table 5.

Table 4.

Impact of different components on the KITTI test set, measured by AP computed over 40 recall positions for the pedestrian and cyclist classes.

Table 5.

Ablation study of different components on the nuScenes dataset. As on KITTI, the semantic segmentation network plays a pivotal role in improving performance on nuScenes.

5.2. Where Does the Improvement Come from?

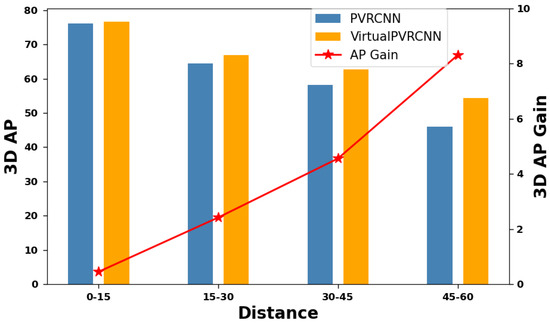

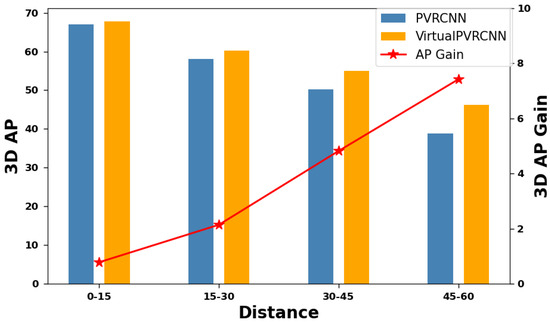

To understand how VirtualPainting uses camera cues and augmentation of the LiDAR points to improve 3D object detection, we analyze its behavior both qualitatively and quantitatively. First, we group objects into three categories by their distance from the ego vehicle: within 30 m, between 30 and 50 m, and beyond 50 m. Figure 5 and Figure 6 show the relative improvements from multimodal fusion within each distance group. VirtualPainting improves accuracy in every distance range, and the gains are considerably larger for long-range objects than for short-range ones. A likely explanation is that long-range objects are covered by few LiDAR points: densifying these sparse regions through augmentation, together with attaching high-resolution camera semantic labels, bridges this information gap. For the per-bin sample statistics, we use 15 m intervals (0–15 m, 15–30 m, and so on) to match the typical ranges over which LiDAR resolution drops off. In KITTI, the numbers of cyclist and pedestrian samples per bin are approximately 860 (0–15 m), 720 (15–30 m), 510 (30–45 m), and 260 (45–60 m), which is consistent with how such objects are expected to be distributed over distance in real-world driving.

Likewise, as shown in Table 4 and Table 5, adding semantic segmentation improves precision considerably more than the other components, namely the virtual point cloud and DADA. Although integrating both virtual points and DADA yields a noticeable improvement, on both KITTI and nuScenes the main contribution comes from attaching semantic labels to the augmented point cloud, which contains both the virtual points and the original LiDAR points.
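
The distance-based breakdown can be reproduced with a simple binning step. The sketch below assumes that an object's distance is the bird's-eye-view Euclidean distance of its box center from the ego vehicle and that a boundary value (e.g., exactly 30 m) falls into the farther bin; both are assumptions, since the exact convention is not stated. `range_bin`, `count_per_bin`, and `relative_gain` are illustrative names.

```python
import math
from bisect import bisect_right
from typing import Iterable, List, Sequence, Tuple


def range_bin(x: float, y: float, edges: Sequence[float] = (30.0, 50.0)) -> int:
    """Index of the distance bin for an object centred at (x, y) in the ego frame.

    With the default edges, bin 0 is [0, 30) m, bin 1 is [30, 50) m and bin 2 is >= 50 m.
    """
    return bisect_right(edges, math.hypot(x, y))


def count_per_bin(centers: Iterable[Tuple[float, float]],
                  edges: Sequence[float] = (30.0, 50.0)) -> List[int]:
    """Number of objects falling into each distance bin."""
    counts = [0] * (len(edges) + 1)
    for x, y in centers:
        counts[range_bin(x, y, edges)] += 1
    return counts


def relative_gain(baseline_ap: float, new_ap: float) -> float:
    """Relative improvement of new_ap over baseline_ap (0.1 means +10%)."""
    if baseline_ap <= 0:
        raise ValueError("baseline AP must be positive")
    return (new_ap - baseline_ap) / baseline_ap
```

Passing `edges=(15.0, 30.0, 45.0, 60.0)` gives the 15 m intervals mentioned above, with a final bin for objects beyond 60 m.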

Figure 5.

Performance improvement at different detection distances on the KITTI test set (3D AP, cyclist class).

Figure 6.

Performance improvement at different detection distances on the KITTI test set (3D AP, pedestrian class).

Table 6.

Inference speed across various multi- and single-modality frameworks.

6. Conclusions

In this paper, we propose a generic 3D object detector that combines LiDAR point clouds and camera images to improve detection accuracy, especially for sparsely distributed objects. We address the sparsity of LiDAR point clouds and the inadequacy of existing data augmentation by jointly using camera and LiDAR data. A depth completion network generates virtual points that densify the LiDAR point cloud, and a point decoration mechanism then decorates this augmented point cloud with image semantics, improving the detection of objects that typically go undetected because of sparse LiDAR points. Moreover, we design a distance-aware data augmentation technique that makes the model more robust to occluded and distant objects. Experimental results show that our approach significantly improves detection accuracy, particularly for these objects.
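
Generating virtual points from a completed depth map amounts to back-projecting each pixel through the camera model. A minimal pinhole-camera sketch, assuming a dense metric depth map from some depth completion network, known intrinsics `fx`, `fy`, `cx`, `cy`, and illustrative `stride` and `max_depth` parameters for subsampling and range clipping. It returns points in the camera frame, which would still need the inverse of the LiDAR-to-camera extrinsic transform before merging with the LiDAR scan; it is not necessarily the exact sampling used by VirtualPainting.

```python
import numpy as np


def depth_to_virtual_points(depth: np.ndarray, fx: float, fy: float, cx: float, cy: float,
                            stride: int = 1, max_depth: float = 80.0) -> np.ndarray:
    """Back-project a dense depth map into 3D points in the camera frame.

    depth: (H, W) array of metric depths, 0 where no estimate is available.
    Returns an (M, 3) array of (x, y, z) points with x right, y down and z forward.
    """
    h, w = depth.shape
    # Pixel grid, optionally subsampled to limit the number of virtual points.
    rows, cols = np.mgrid[0:h:stride, 0:w:stride]
    z = depth[rows, cols]
    keep = (z > 0) & (z <= max_depth)
    u = cols[keep].astype(np.float64)
    v = rows[keep].astype(np.float64)
    z = z[keep]
    # Inverse pinhole model: u = fx * x / z + cx and v = fy * y / z + cy.
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=1)
```

A larger `stride` trades point density for speed, which matters because every virtual point is also painted and processed by the detector.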

Author Contributions

Conceptualization, S.D.; Methodology, S.D.; Formal analysis, S.D.; Investigation, D.Q. and M.D.T.; Resources, M.D.T.; Data curation, D.Q. and D.C.; Writing—original draft, S.D.; Writing—review & editing, S.D.; Visualization, D.C.; Supervision, Q.Y.; Project administration, Q.Y.; Funding acquisition, Q.Y. All authors have read and agreed to the published version of this manuscript.

Funding

This research was funded by the National Science Foundation under grants CNS-1852134, OAC-2017564, and ECCS-2010332.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available on arXiv at https://doi.org/10.48550/arXiv.2312.16141.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499.

- Yan, Y.; Mao, Y.; Li, B. SECOND: Sparsely Embedded Convolutional Detection. Sensors 2018, 18, 3337.

- Deng, J.; Shi, S.; Li, P.; Zhou, W.; Zhang, Y.; Li, H. Voxel R-CNN: Towards High Performance Voxel-Based 3D Object Detection. arXiv 2020, arXiv:2012.15712.

- Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-View 3D Object Detection Network for Autonomous Driving. arXiv 2017, arXiv:1611.07759.

- Yoo, J.H.; Kim, Y.; Kim, J.S.; Choi, J.W. 3D-CVF: Generating Joint Camera and LiDAR Features Using Cross-View Spatial Feature Fusion for 3D Object Detection. arXiv 2020, arXiv:2004.12636.

- Vora, S.; Lang, A.H.; Helou, B.; Beijbom, O. PointPainting: Sequential Fusion for 3D Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 4603–4611.

- Wang, C.; Ma, C.; Zhu, M.; Yang, X. PointAugmenting: Cross-Modal Augmentation for 3D Object Detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 11789–11798.

- Dhakal, S.; Qu, D.; Carrillo, D.; Yang, Q.; Fu, S. OASD: An Open Approach to Self-Driving Vehicle. In Proceedings of the 2021 Fourth International Conference on Connected and Autonomous Driving (MetroCAD), Richardson, TX, USA, 27–28 May 2021; pp. 54–61.

- Yin, T.; Zhou, X.; Krähenbühl, P. Multimodal Virtual Point 3D Detection. arXiv 2021, arXiv:2111.06881.

- Wu, X.; Peng, L.; Yang, H.; Xie, L.; Huang, C.; Deng, C.; Liu, H.; Cai, D. Sparse Fuse Dense: Towards High Quality 3D Detection with Depth Completion. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 5408–5417.

- Shi, Y.; Hu, J.; Li, L. PENet: Pre-Enhanced Network for Object Detection and Instance Segmentation. In Proceedings of the 2023 3rd International Conference on Neural Networks, Information and Communication Engineering (NNICE), Xi’an, China, 24–26 February 2023; pp. 184–189.

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast Encoders for Object Detection from Point Clouds. arXiv 2018, arXiv:1812.05784.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 77–85.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. arXiv 2017, arXiv:1706.02413.

- Shi, S.; Wang, X.; Li, H. PointRCNN: 3D Object Proposal Generation and Detection From Point Cloud. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 770–779.

- Yin, T.; Zhou, X.; Krähenbühl, P. Center-based 3D Object Detection and Tracking. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 11779–11788.

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint Triplets for Object Detection. arXiv 2019, arXiv:1904.08189.

- Liu, B.; Wang, M.; Foroosh, H.; Tappen, M.; Pensky, M. Sparse Convolutional Neural Networks. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 806–814.

- Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. PV-RCNN: Point-Voxel Feature Set Abstraction for 3D Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 10526–10535.

- Ku, J.; Mozifian, M.; Lee, J.; Harakeh, A.; Waslander, S.L. Joint 3D Proposal Generation and Object Detection from View Aggregation. arXiv 2017, arXiv:1712.02294.

- Liang, M.; Yang, B.; Chen, Y.; Hu, R.; Urtasun, R. Multi-Task Multi-Sensor Fusion for 3D Object Detection. arXiv 2020, arXiv:2012.12397.

- Dhakal, S.; Chen, Q.; Qu, D.; Carrillo, D.; Yang, Q.; Fu, S. Sniffer Faster R-CNN: A Joint Camera-LiDAR Object Detection Framework with Proposal Refinement. In Proceedings of the 2023 IEEE International Conference on Mobility, Operations, Services and Technologies (MOST), Dallas, TX, USA, 18–20 October 2023; pp. 1–10.

- Pang, S.; Morris, D.; Radha, H. CLOCs: Camera-LiDAR Object Candidates Fusion for 3D Object Detection. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 10386–10393.

- Dhakal, S.; Carrillo, D.; Qu, D.; Yang, Q.; Fu, S. Sniffer Faster R-CNN++: An Efficient Camera-LiDAR Object Detector with Proposal Refinement on Fused Candidates. ACM J. Auton. Transport. Syst. 2023, 36, 1138.

- Xu, S.; Zhou, D.; Fang, J.; Yin, J.; Zhou, B.; Zhang, L. FusionPainting: Multimodal Fusion with Adaptive Attention for 3D Object Detection. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 3047–3054.

- Yu, C.; Gao, C.; Wang, J.; Yu, G.; Shen, C.; Sang, N. BiSeNet V2: Bilateral Network with Guided Aggregation for Real-Time Semantic Segmentation. Int. J. Comput. Vis. 2021, 129, 3051–3068.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. arXiv 2019, arXiv:1912.01703.

- Geiger, A.; Lenz, P.; Urtasun, R. Are We Ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A Multimodal Dataset for Autonomous Driving. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 11618–11628.

- Wu, H.; Wen, C.; Li, W.; Li, X.; Yang, R.; Wang, C. Transformation-Equivariant 3D Object Detection for Autonomous Driving. In Proceedings of the Thirty-Seventh AAAI Conference on Artificial Intelligence and Thirty-Fifth Conference on Innovative Applications of Artificial Intelligence and Thirteenth Symposium on Educational Advances in Artificial Intelligence (AAAI’23/IAAI’23/EAAI’23), Washington, DC, USA, 7–14 February 2023; pp. 1–8.

- OpenPCDet Development Team. OpenPCDet: An Open-Source Toolbox for 3D Object Detection from Point Clouds. Available online: https://github.com/open-mmlab/OpenPCDet (accessed on 2 April 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).