A Hybrid Security Framework for Train-to-Ground (T2G) Communication Using DOA-Optimized BPNN Detection, Bayesian Risk Scoring, and RL-Based Response

Abstract

1. Introduction

- We analyze the security vulnerabilities of the T2G communication system to identify potential attack surfaces and abnormal behavior patterns.

- A hybrid feature selection strategy combining logistic regression and forward sequential selection is proposed to obtain a compact and relevant feature subset, thereby improving model efficiency and generalization.

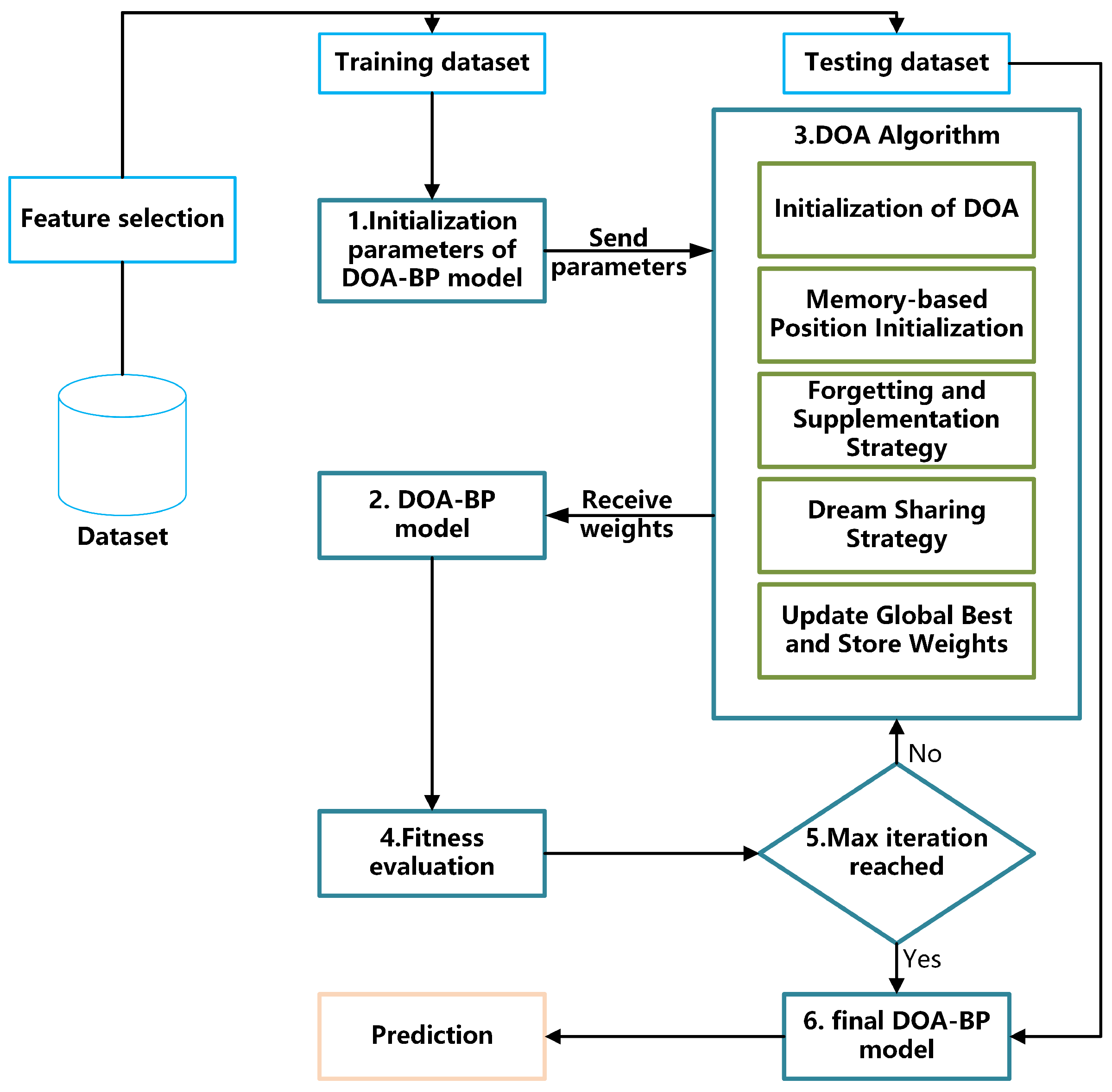

- An intrusion detection model based on DOA-BPNN is developed. The DOA enhances the initialization of network weights, accelerates convergence, and reduces the risk of local minima. Simulation results show that the proposed method achieves improved detection accuracy and training stability compared with the standard BP model.

- A Bayesian risk scoring mechanism is introduced to quantify threat levels based on detection results. It incorporates detection confidence, anomaly intensity, and contextual adjustment to generate standardized real-time risk scores, enhancing threat perception and supporting dynamic defense decisions.

- A reinforcement learning–based response module is incorporated, using Q-learning to adaptively select optimal defense actions under varying risk levels and attack types. This enables closed-loop, feedback-driven responses, providing intelligent and scalable protection for the T2G communication system.

- To comprehensively evaluate the proposed framework, comparative experiments are conducted with other optimization algorithms in both binary and multi-class classification tasks. The framework’s effectiveness is also evaluated in scenarios with and without active response mechanisms.
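This section does not say exactly how logistic regression and forward sequential selection are combined. One plausible reading works in two stages:

- Rank features by the magnitude of their logistic-regression coefficients and keep the top candidates.
- Run scikit-learn's forward `SequentialFeatureSelector`, using a logistic-regression estimator, on that shortlist.

The sketch below follows that reading. The shortlist size, final subset size, number of cross-validation folds, and synthetic data are assumptions, not values from the paper.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import SequentialFeatureSelector
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import MinMaxScaler


def select_features(X, y, n_prefilter=8, n_final=4):
    """Hypothetical hybrid selection: LR coefficient filter, then forward SFS."""
    X = MinMaxScaler().fit_transform(X)  # put features on [0, 1] so coefficients are comparable

    # Stage 1: rank features by total |coefficient| across classes.
    lr = LogisticRegression(max_iter=1000).fit(X, y)
    importance = np.abs(lr.coef_).sum(axis=0)
    shortlist = np.argsort(importance)[::-1][:n_prefilter]

    # Stage 2: greedy forward selection on the shortlist, scored by cross-validation.
    sfs = SequentialFeatureSelector(
        LogisticRegression(max_iter=1000),
        n_features_to_select=n_final,
        direction="forward",
        cv=3,
    )
    sfs.fit(X[:, shortlist], y)
    return np.sort(shortlist[sfs.get_support()])  # indices into the original columns


if __name__ == "__main__":
    X, y = make_classification(n_samples=300, n_features=12, n_informative=4, random_state=0)
    print("selected feature indices:", select_features(X, y))
```

The cheap coefficient filter limits how many candidates the more expensive forward search must evaluate.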

2. Related Work

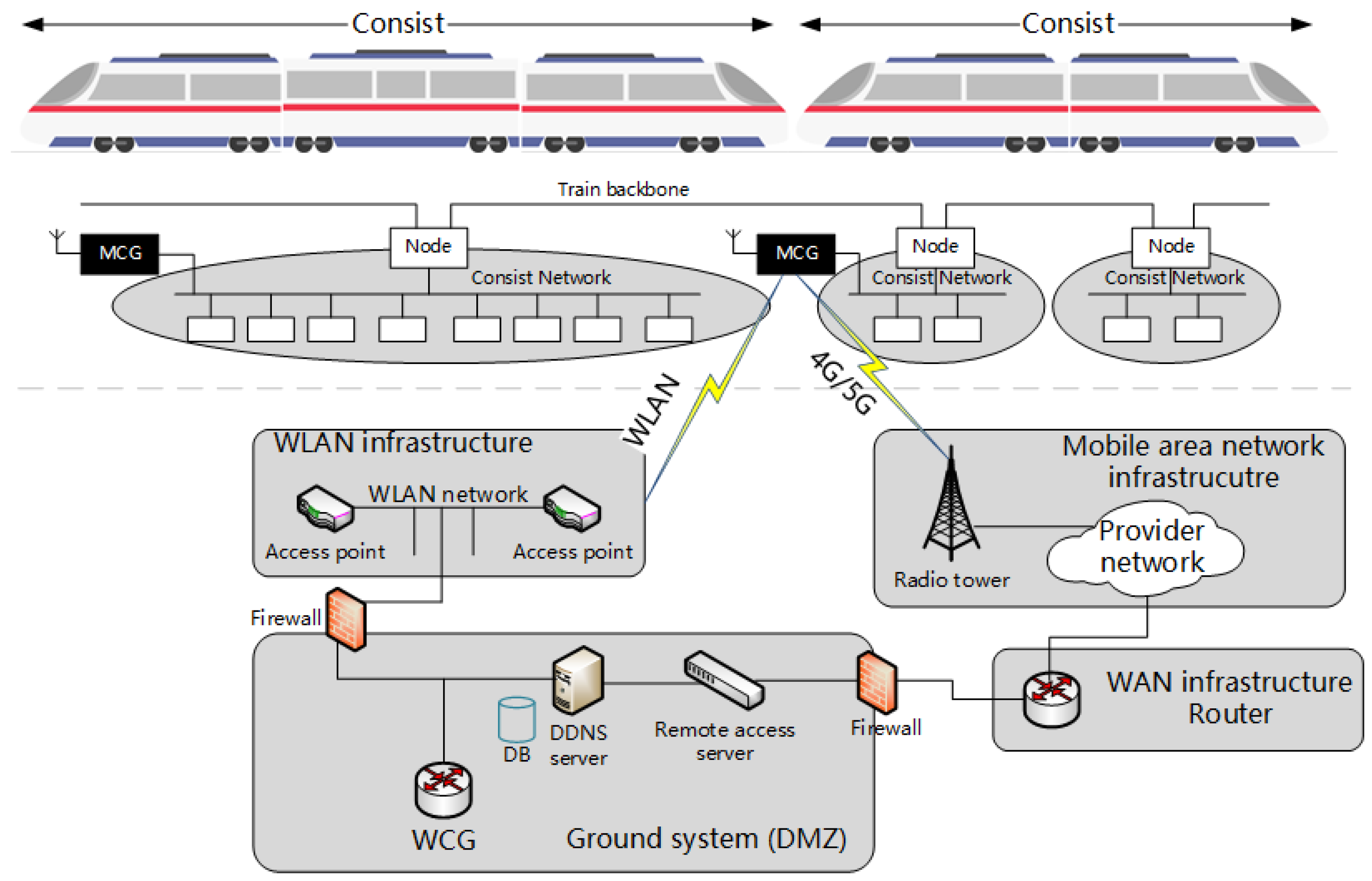

3. T2G Communication Security, Vulnerabilities, and Threats

- The attacker possesses in-depth knowledge of the T2G system and may be capable of physically following the train;

- The attacker can impersonate legitimate communication entities or replay previously captured T2G data packets;

- The attacker is able to access communication devices and modify or relay data packets at different times and locations;

- The attacker may exploit radio equipment deployed along the railway to eavesdrop on, forge, or jam T2G communications;

- The attacker cannot physically dismantle, damage, or illegally relocate T2G devices without being detected.

3.1. Threats Overview

- Eavesdropping and Traffic Analysis: T2G communication often relies on public networks (e.g., 4G/5G), making it susceptible to passive attacks. Adversaries can deploy eavesdropping equipment along the railway to intercept and analyze transmitted packets. Even when encryption is applied, traffic analysis techniques (e.g., observing packet size, frequency, and timing) may reveal sensitive information such as train operating patterns, schedules, or equipment status.

- Message Tampering and Forgery: Attackers may conduct man-in-the-middle (MitM) attacks by hijacking onboard communication channels or accessing network points at stations or along the track. This allows them to inject falsified operational parameters, fault reports, or location data, which could mislead the ground control system.

- Replay Attack: Because T2G data transmissions are periodic and predictable, attackers can record legitimate packets and replay them later to fabricate operational states, potentially misleading decision-making on the ground side.

- Denial-of-Service (DoS) Attacks: Attackers may disrupt T2G services by launching network-layer or physical-layer DoS attacks. These include flooding the channel with excessive traffic, exhausting system resources, or using high-power jamming signals. In busy station environments, such attacks could lead to widespread train delays and service disruption.

- Unauthorized Access and Device Compromise: Key T2G hardware components—such as Mobile Communication Gateways (MCGs), data acquisition units, and transmission controllers—may be compromised through supply chain vulnerabilities, misconfigurations, or leaked credentials. Without physically damaging the device, attackers could implant malicious code, enabling persistent access, data leakage, or remote shutdown of train communication functions.

- Data Integrity Attacks: T2G data is often uploaded in batches for trajectory reconstruction, fault diagnostics, and performance analysis. Attackers could corrupt historical data or inject false supplementary records, undermining the consistency of datasets and impairing predictive maintenance or safety evaluations.

- Routing and Protocol Exploits: In multi-train or edge-computing environments, T2G communication may leverage Software-Defined Networking (SDN), Mobile Edge Computing (MEC), or dynamic routing protocols. Adversaries may exploit protocol vulnerabilities to perform attacks such as route spoofing or cache poisoning, manipulating data paths or delaying service responses.

- Mobile Network-Specific Threats: When relying on public 4G/5G infrastructures, T2G systems are also exposed to mobile-specific threats such as rogue base stations, DNS spoofing, and signaling storms. These techniques can intercept sessions, downgrade encryption, or force channel switching, potentially enabling more sophisticated or coordinated attacks.

3.2. Multi-Stage Advanced Attacks

- Forgery-Jamming Combination During Online Operation: While the train is in active service, attackers may monitor T2G transmissions to infer data formats and forge onboard status messages with structurally identical characteristics. Short-term jamming—achieved via high-frequency interference or intentional data congestion—is then introduced during legitimate message transmission windows, preventing the ground system from receiving authentic data. As a result, the forged message may be erroneously accepted as the “current train status”. This attack vector can be exploited to simulate faults, mislead train scheduling systems, or conceal actual anomalies.

- Low-Frequency Injection with Replay: During normal operation, attackers intermittently inject low-frequency anomalies—such as borderline temperature, voltage, or current fluctuations—to mimic natural operational variations without triggering alarms. Once the train enters a depot for centralized data upload, historical “normal” messages may be replayed or combined with fabricated records, introducing logical inconsistencies in the dataset. This undermines predictive maintenance algorithms and health monitoring processes, potentially resulting in incorrect lifecycle estimations, false alarms, or unnecessary maintenance interventions.

- Sequence Tampering and Timestamp Drift: T2G data packets transmitted during high-speed operation often contain critical temporal metadata such as timestamps and sequence numbers, which are essential for event reconstruction by ground-based systems. By subtly modifying timestamps (e.g., shifting by a few seconds) or scrambling the order of transmission, attackers can distort causal sequences (e.g., “braking → acceleration → fault alarm”), leading to misinterpretation of events and compromising situational awareness and decision-making on the ground.
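The following check is not part of the proposed framework. It only makes the effects of replay, reordering, and timestamp drift concrete, using a simple rule-based consistency test that a ground system could run over a packet stream.

Several details are hypothetical:

- the `Packet` fields;
- the 2-second drift tolerance;
- the assumption that train and ground clocks are synchronized.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    seq: int          # sequence number assigned by the train
    timestamp: float  # send time on the train clock (s), assumed synchronized
    arrival: float    # receive time on the ground clock (s)


def check_stream(packets, max_drift=2.0):
    """Flag replayed, reordered, or time-shifted packets in arrival order."""
    alerts = []
    seen = set()
    last_seq = last_ts = None
    for p in packets:
        if p.seq in seen:
            alerts.append((p.seq, "duplicate sequence number (possible replay)"))
        elif last_seq is not None and p.seq < last_seq:
            alerts.append((p.seq, "sequence regression (possible reordering)"))
        if last_ts is not None and p.timestamp < last_ts:
            alerts.append((p.seq, "timestamp earlier than a previous packet"))
        if abs(p.arrival - p.timestamp) > max_drift:
            alerts.append((p.seq, "timestamp drift exceeds tolerance"))
        seen.add(p.seq)
        last_seq = p.seq if last_seq is None else max(last_seq, p.seq)
        last_ts = p.timestamp if last_ts is None else max(last_ts, p.timestamp)
    return alerts


if __name__ == "__main__":
    stream = [Packet(1, 0.0, 0.1), Packet(2, 1.0, 1.1), Packet(2, 1.0, 3.05),
              Packet(4, 3.0, 3.1), Packet(3, 2.0, 3.2)]
    for seq, reason in check_stream(stream):
        print(seq, reason)
```

Rules like these catch only crude manipulations. A shift that stays within the tolerance, or a forged packet with a fresh sequence number, passes unnoticed, which is one reason learned detectors are considered for these attacks.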

3.3. Attack Classification

4. Methodology

4.1. Proposed System Overview

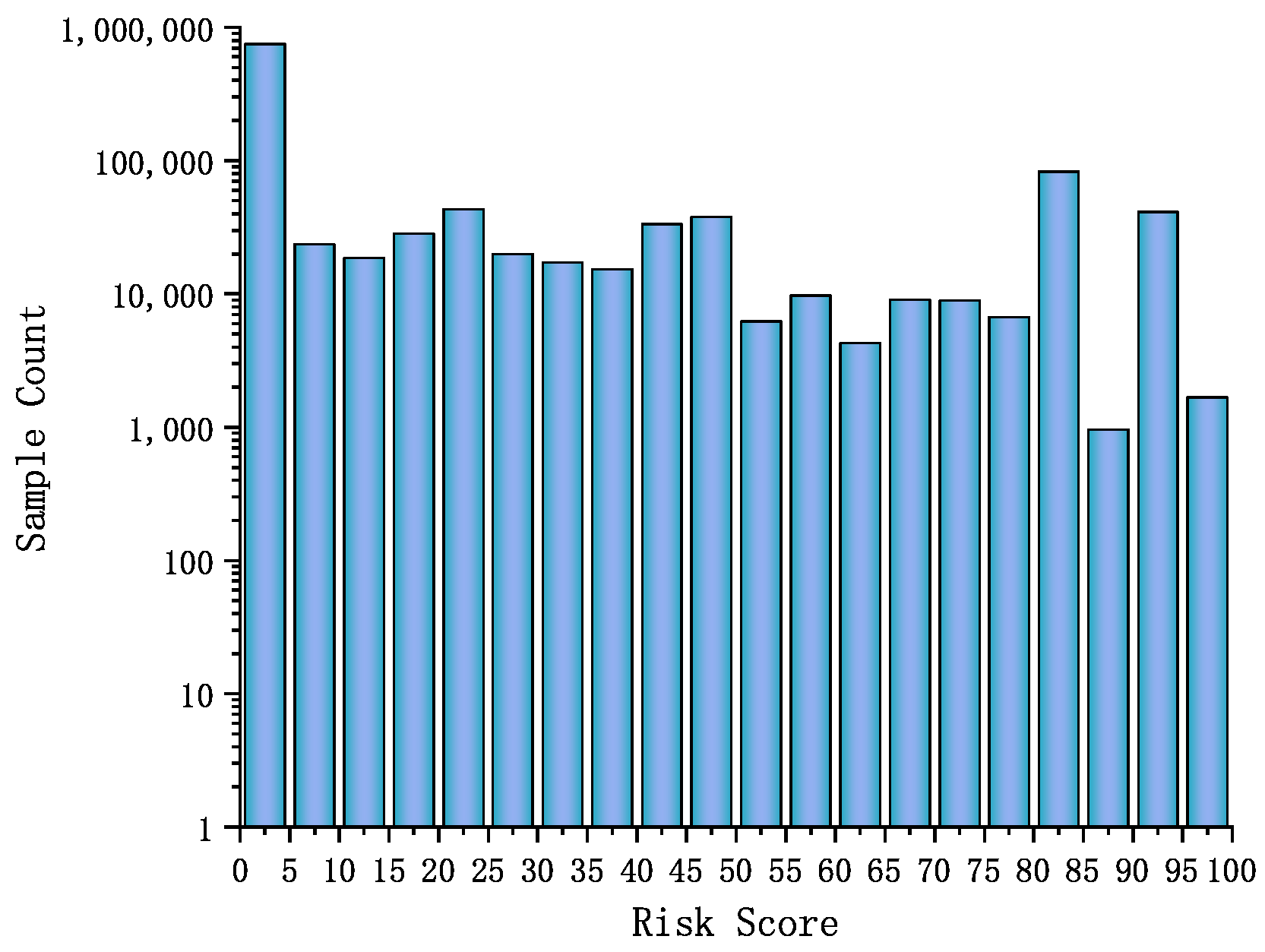

4.2. Dataset Description

4.3. Dataset Pre-Processing

- Data Cleaning: Duplicate records and samples with more than 75% missing values were removed to reduce noise and improve data quality.

- Dataset Splitting: The dataset was first split into two parts, with 80% allocated to the DOA-BP intrusion detection module and the remaining 20% reserved for the Bayesian risk scoring and reinforcement learning–based response modules. Within the 80% assigned to intrusion detection, an additional 80:20 split was applied to create the training and test sets. This two-stage partitioning yields a final distribution of 64% for training, 16% for testing, and 20% for evaluation of downstream components. This hierarchical strategy ensures data independence between modules, prevents information leakage, and supports unbiased performance evaluation. Stratified sampling was employed throughout to maintain consistent class distribution across all subsets.

- Class Balancing: Stratified random sampling was used so that each subset remains representative of the full dataset. All samples of rare attack types (e.g., mitm, ransomware, injection) were retained. A 20% sampling rate was applied to normal traffic and a 10% rate to other common attacks, balancing data volume against computational cost.

- GAN-Based Augmentation: For attack types with very few samples (e.g., mitm, with only 1473 samples), a Generative Adversarial Network (GAN) was used to create additional synthetic data, enhancing generalization by matching the original distribution.

- Feature Engineering and Encoding: Non-numeric fields were converted into numeric format, and formatted numeric fields were cleaned and standardized. Categorical features were label-encoded, and mappings were saved to ensure interpretability and traceability. Finally, all numeric features were normalized to the [0, 1] range using min–max scaling to standardize the input for model training.
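The two-stage stratified split described above can be sketched with scikit-learn's `train_test_split`. The function name and random seed are illustrative assumptions, and the synthetic data only checks the proportions.

```python
import numpy as np
from sklearn.model_selection import train_test_split


def two_stage_split(X, y, seed=42):
    """80/20 split into detection vs. downstream data, then 80/20 train/test."""
    # Stage 1: 80% for DOA-BP detection, 20% held out for risk scoring and RL response.
    X_det, X_down, y_det, y_down = train_test_split(
        X, y, test_size=0.20, stratify=y, random_state=seed)
    # Stage 2: split the detection share 80:20 into training and test sets.
    X_train, X_test, y_train, y_test = train_test_split(
        X_det, y_det, test_size=0.20, stratify=y_det, random_state=seed)
    return (X_train, y_train), (X_test, y_test), (X_down, y_down)


if __name__ == "__main__":
    X = np.random.rand(10_000, 20)
    y = np.repeat(np.arange(10), 1_000)  # 10 balanced classes
    (Xtr, ytr), (Xte, yte), (Xdn, ydn) = two_stage_split(X, y)
    print(len(Xtr), len(Xte), len(Xdn))  # 64% / 16% / 20% of 10,000
```

Passing `stratify` at both stages keeps the class proportions the same in all three subsets.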

4.4. Feature Selection

4.5. Dream Optimization Algorithm (DOA)

4.6. DOA-BPNN Model

Algorithm 1. DOA Optimization for BPNN Parameters
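The general idea behind DOA-BPNN has two steps: a population-based search chooses the network's initial weights, and standard backpropagation then refines them. The NumPy sketch below illustrates that idea.

The DOA's own update rules are not reproduced here. A simple greedy perturbation search stands in for them, so this is not the authors' algorithm. The following choices are also assumptions:

- a single `tanh` hidden layer instead of [128, 64];
- a binary sigmoid output;
- the learning rate;
- the synthetic data.

The population size of 20 and the 60 iterations mirror the parameter table.

```python
import numpy as np


def n_params(n_in, n_hid):
    return n_in * n_hid + n_hid + n_hid + 1


def unpack(theta, n_in, n_hid):
    """Split a flat vector into W1 (n_in x n_hid), b1, W2 (n_hid x 1), b2."""
    i = n_in * n_hid
    W1 = theta[:i].reshape(n_in, n_hid)
    b1 = theta[i:i + n_hid]
    W2 = theta[i + n_hid:i + 2 * n_hid].reshape(n_hid, 1)
    b2 = theta[i + 2 * n_hid:]
    return W1, b1, W2, b2


def forward(theta, X, n_hid):
    W1, b1, W2, b2 = unpack(theta, X.shape[1], n_hid)
    h = np.tanh(X @ W1 + b1)                        # hidden activations
    z = np.clip(h @ W2 + b2, -30, 30)               # avoid overflow in exp
    return h, (1.0 / (1.0 + np.exp(-z))).ravel()    # sigmoid output


def bce(theta, X, y, n_hid):
    """Binary cross-entropy: the search fitness and the training loss."""
    _, p = forward(theta, X, n_hid)
    p = np.clip(p, 1e-7, 1 - 1e-7)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))


def search_init(X, y, n_hid, pop_size=20, iters=60, seed=0):
    """Stand-in for DOA: greedy population search over initial weights."""
    rng = np.random.default_rng(seed)
    dim = n_params(X.shape[1], n_hid)
    pop = rng.uniform(-1.0, 1.0, size=(pop_size, dim))
    fit = np.array([bce(t, X, y, n_hid) for t in pop])
    for k in range(iters):
        best = pop[np.argmin(fit)].copy()
        sigma = 0.2 * (1.0 - k / iters)             # explore early, exploit late
        for j in range(pop_size):
            cand = pop[j] + rng.random() * (best - pop[j]) + sigma * rng.standard_normal(dim)
            f = bce(cand, X, y, n_hid)
            if f < fit[j]:                          # accept only improving moves
                pop[j], fit[j] = cand, f
    return pop[np.argmin(fit)].copy()


def backprop(theta, X, y, n_hid, lr=0.05, epochs=400):
    """Full-batch gradient descent on BCE, starting from the searched weights."""
    theta = theta.copy()
    for _ in range(epochs):
        _, _, W2, _ = unpack(theta, X.shape[1], n_hid)
        h, p = forward(theta, X, n_hid)
        d_z = (p - y)[:, None] / len(y)             # dL/dz for sigmoid + BCE
        d_a = (d_z @ W2.T) * (1.0 - h ** 2)         # back through tanh
        grad = np.concatenate([(X.T @ d_a).ravel(), d_a.sum(axis=0),
                               (h.T @ d_z).ravel(), d_z.sum(axis=0)])
        theta -= lr * grad
    return theta


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = rng.normal(size=(200, 4))
    y = (X[:, 0] + X[:, 1] > 0).astype(float)
    theta0 = search_init(X, y, n_hid=8)
    theta1 = backprop(theta0, X, y, n_hid=8)
    print("loss after search:", bce(theta0, X, y, 8), "after BP:", bce(theta1, X, y, 8))
```

Because the search accepts only improving moves, the chosen starting point is never worse than the best random initialization in the first population.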

4.7. Bayesian Risk Scoring

4.8. Reinforcement Learning-Based Response Decision Model

- Monitoring and Logging: This action records and monitors train status and communication activity to detect threats such as eavesdropping, traffic analysis, or unauthorized access, which correspond to backdoor attacks in the TON_IoT dataset. By tracking data flows between the train and ground systems, it helps identify unusual communication patterns or unauthorized access attempts in real time.

- Limiting Train Communication: In response to DoS/DDoS or communication jamming attacks, which are related to DDoS or flooding attacks in the TON_IoT dataset, this action limits the transmission rate of non-critical data, such as diagnostic messages, ensuring that crucial T2G control data, like operational commands or fault information, are prioritized and protected from overload.

- Train Deceleration: If a MitM or message tampering attack is detected (corresponding to MitM or injection attacks in the TON_IoT dataset), this action automatically initiates deceleration so that the train continues to operate safely at reduced speed. This allows time to verify data integrity and control the situation before a critical failure occurs.

- Emergency Braking: In cases of replay or data integrity attacks (corresponding to replay or ransomware attacks in the TON_IoT dataset), this action triggers emergency braking to stop the train rapidly. This prevents compromised operational data, such as false fault reports or location data, from leading to unsafe situations, thereby protecting passengers and infrastructure.

- System Reset: If a device compromise or routing exploit (similar to backdoor or command injection attacks in the TON_IoT dataset) is detected, this action performs a secure reboot or restoration of the affected T2G devices, such as MCG, to ensure the systems are cleared of any malicious modifications and return to a secure state, allowing communication to resume safely.

5. Experiments and Analysis of Results

5.1. Experimental Setup

5.2. Evaluation Metrics

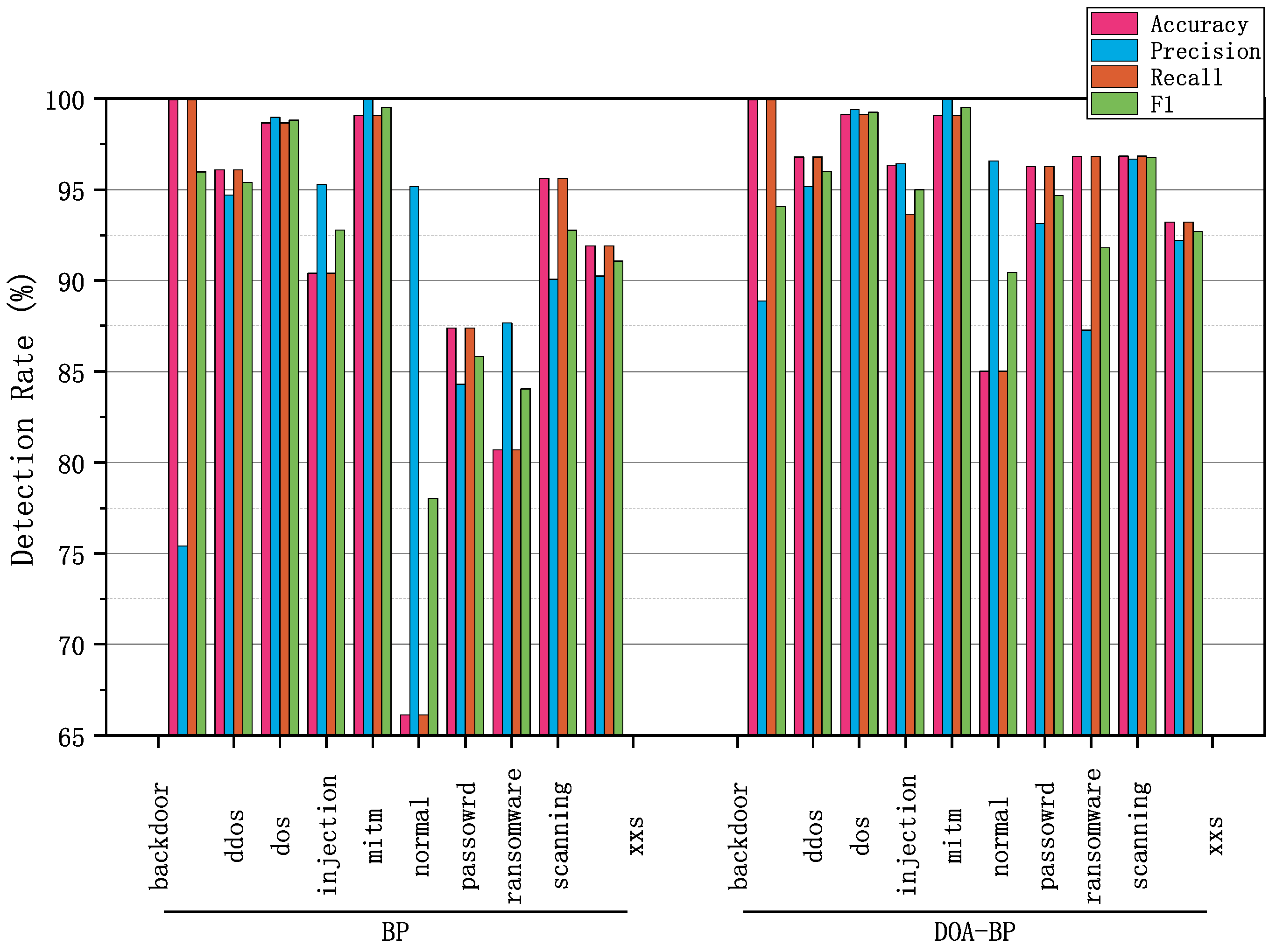

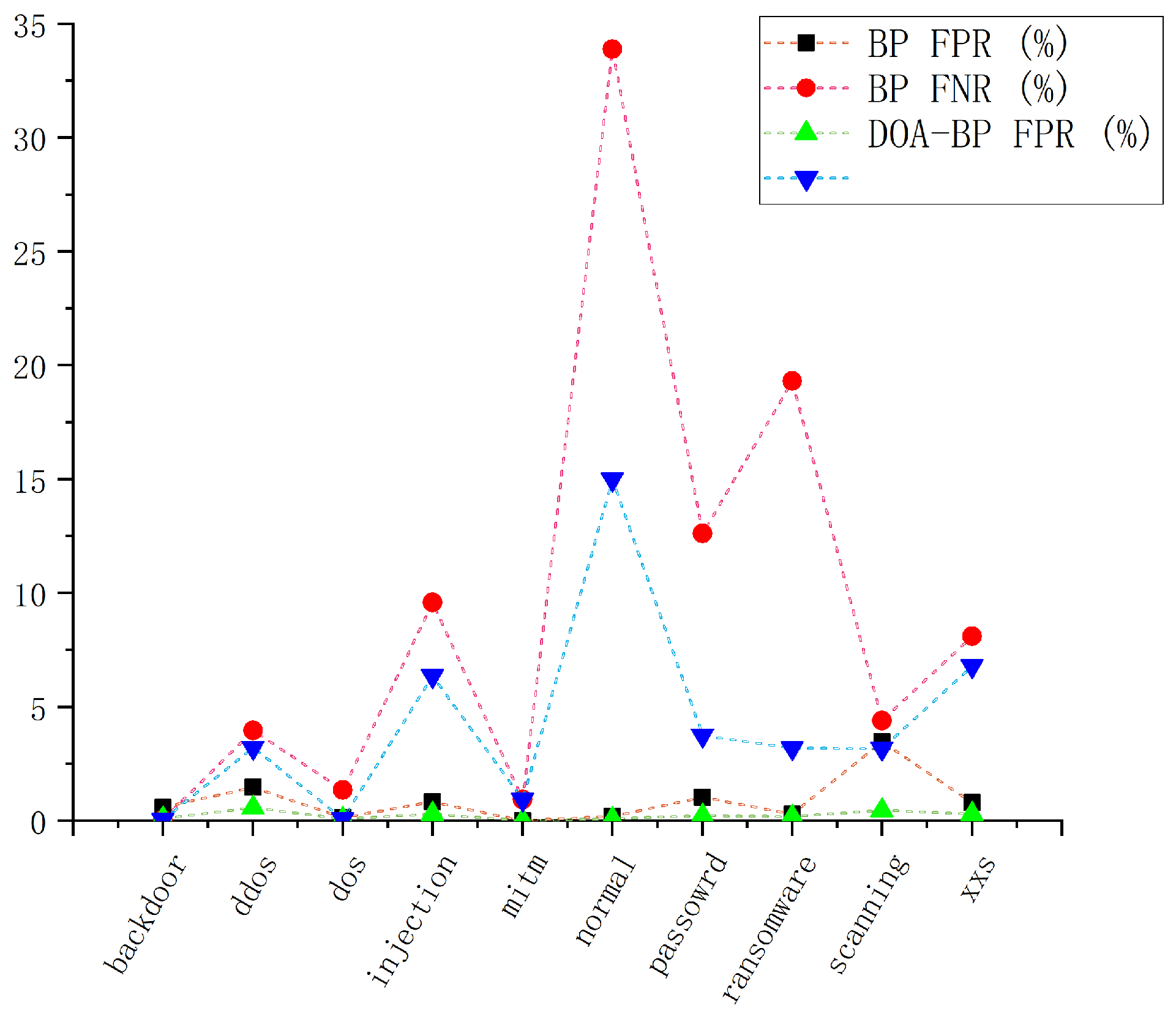

5.3. Performance of DOA-BPNN Model

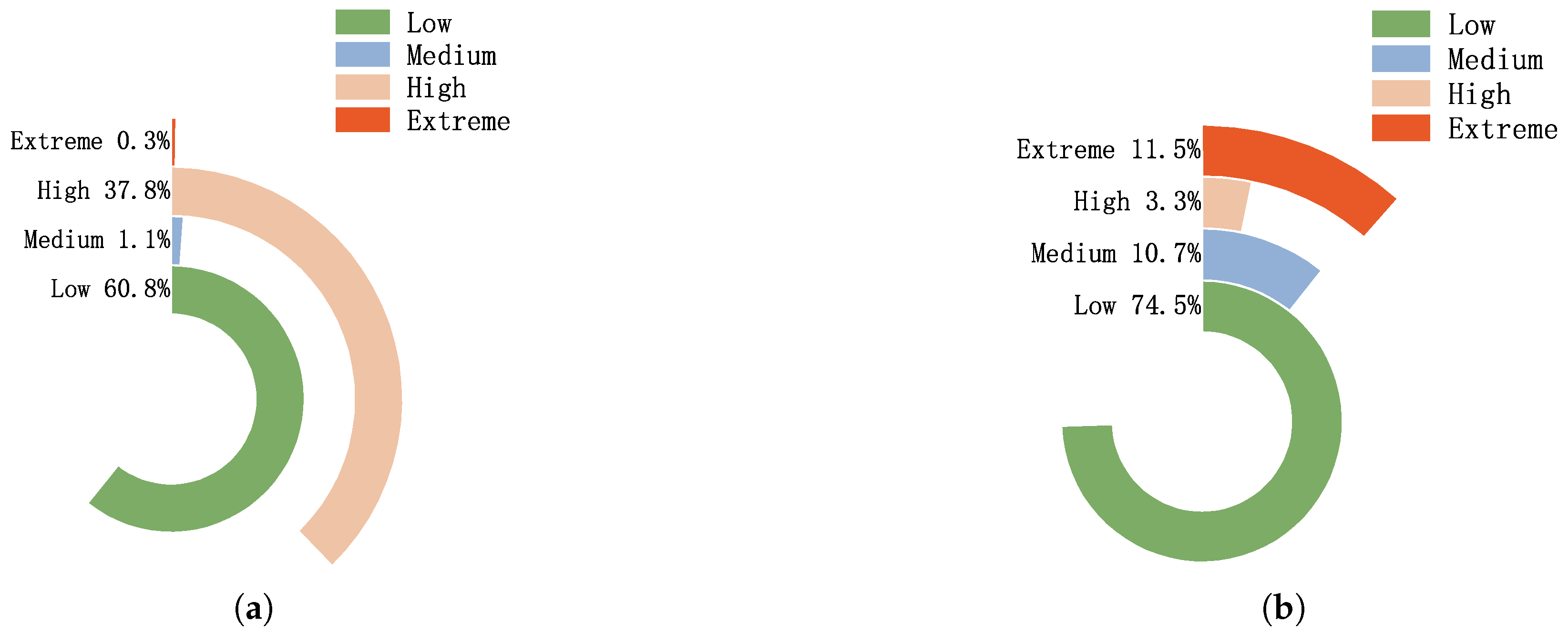

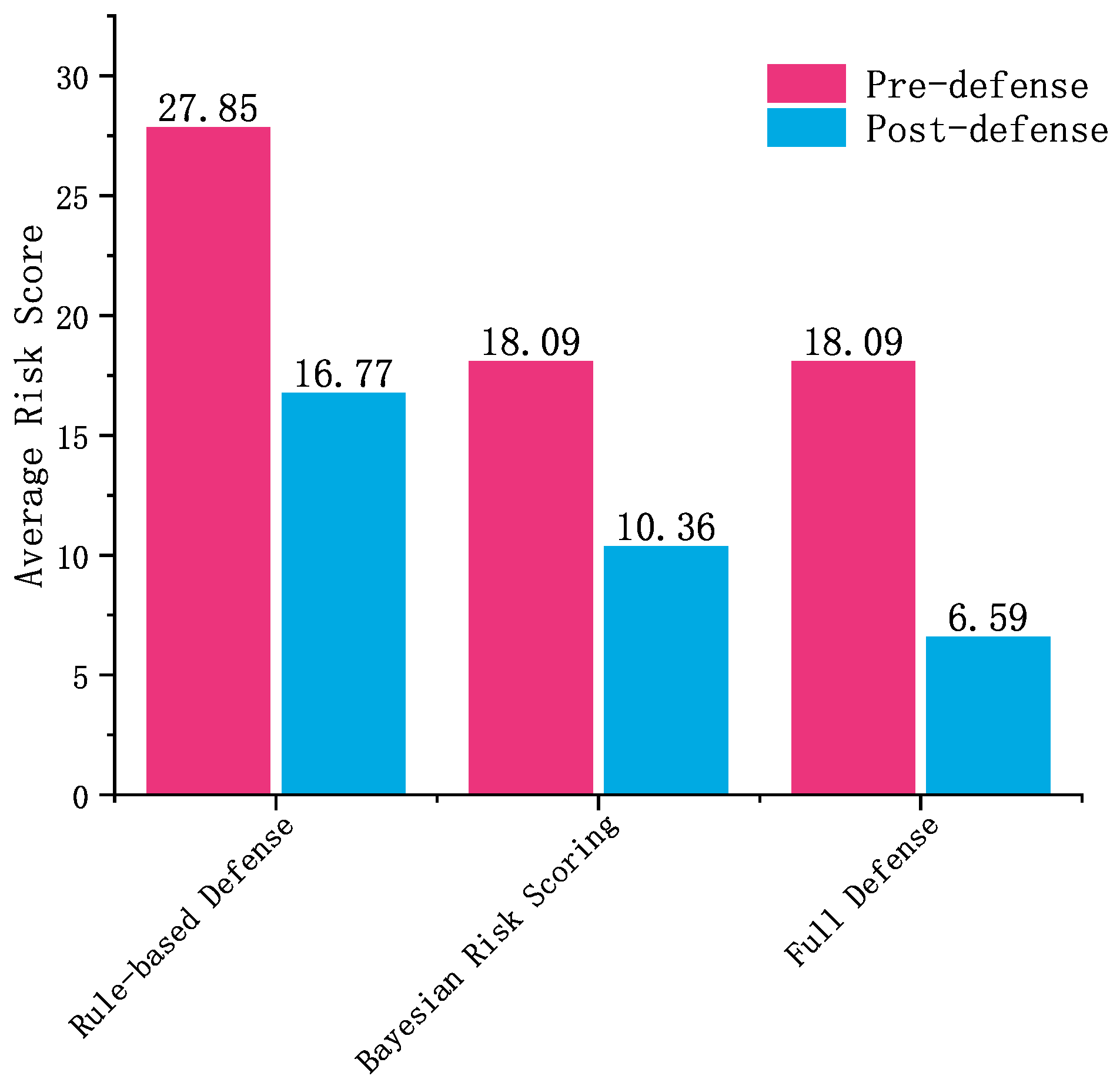

5.4. Risk-Aware Decision System Results

- Rule-based Defense: This configuration uses the DOA-BP detection model to identify attacks, converts anomaly scores directly into risk scores, and applies basic rule-based responses, without Bayesian risk scoring or reinforcement learning. It represents the approach typically used in traditional security systems.

- Bayesian Risk Scoring: This configuration extends the rule-based defense scenario by adding Bayesian risk scoring. Because the DOA-BP detection model is unchanged, the better risk identification and more precise risk evaluation observed relative to the rule-based scenario are attributable to the Bayesian scoring stage.

- Full Defense: This configuration extends the Bayesian risk scoring scenario by incorporating an RL-based response module. The Bayesian risk model evaluates risks based on attack type impact, detection confidence, and environmental factors, while the RL module dynamically selects optimal defense actions based on the assessed risk, offering a more adaptive and context-aware defense system.

- Pre-Defense: The risk score calculated immediately after an attack is detected, before any defensive action is applied. This reflects the initial severity of the detected threat.

- Post-Defense: The risk score measured after applying the corresponding defense action. This shows how effectively the defense reduces the risk.

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- International Union of Railways. Railway Statistics Synopsis, 2024th ed.; International Union of Railways: Paris, France, 2024.

- IEC 61375-2-6; Electronic Railway Equipment—Train Communication Network (TCN)—Part 2-6: On-board to Ground Communication. Edition 1.0; International Electrotechnical Commission (IEC): Geneva, Switzerland, 2018.

- Moustafa, N. A new distributed architecture for evaluating AI-based security systems at the edge: Network TON_IoT datasets. Sustain. Cities Soc. 2021, 72, 102994.

- Booij, T.M.; Chiscop, I.; Meeuwissen, E.; Moustafa, N.; Den Hartog, F.T. ToN_IoT: The role of heterogeneity and the need for standardization of features and attack types in IoT network intrusion data sets. IEEE Internet Things J. 2021, 9, 485–496.

- Alsaedi, A.; Moustafa, N.; Tari, Z.; Mahmood, A.; Anwar, A. TON_IoT telemetry dataset: A new generation dataset of IoT and IIoT for data-driven intrusion detection systems. IEEE Access 2020, 8, 165130–165150.

- Lang, Y.; Gao, Y. Dream Optimization Algorithm (DOA): A novel metaheuristic optimization algorithm inspired by human dreams and its applications to real-world engineering problems. Comput. Methods Appl. Mech. Eng. 2025, 436, 117718.

- Jain, A.K.; Mao, J.; Mohiuddin, K.M. Artificial neural networks: A tutorial. Computer 1996, 29, 31–44.

- Bonafede, C.E.; Giudici, P. Bayesian networks for enterprise risk assessment. Phys. A Stat. Mech. Its Appl. 2007, 382, 22–28.

- Arulkumaran, K.; Deisenroth, M.P.; Brundage, M.; Bharath, A.A. Deep reinforcement learning: A brief survey. IEEE Signal Process. Mag. 2017, 34, 26–38.

- Gao, B.; Bu, B.; Zhang, W.; Li, X. An intrusion detection method based on machine learning and state observer for train-ground communication systems. IEEE Trans. Intell. Transp. Syst. 2021, 23, 6608–6620.

- Song, Y.; Bu, B.; Zhu, L. A novel intrusion detection model using a fusion of network and device states for communication-based train control systems. Electronics 2020, 9, 181.

- Lu, H.; Zhao, Y.; Song, Y.; Yang, Y.; He, G.; Yu, H.; Ren, Y. A transfer learning-based intrusion detection system for zero-day attack in communication-based train control system. Clust. Comput. 2024, 27, 8477–8492.

- Yin, B.; Bu, B.; Gao, B.; Li, Q. A hybrid intrusion detection method using improved stacking ensemble algorithm and false positive elimination strategy for CBTC. In Proceedings of the 2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC), Macau, China, 8–12 October 2022; pp. 4253–4258.

- Li, W.; Yan, Z.; He, R.; Zong, L.; Zhang, F.; Zhan, Y. A novel machine learning based intrusion detection method for 5G empowered CBTC systems. In Proceedings of the 2022 International Wireless Communications and Mobile Computing (IWCMC), Dubrovnik, Croatia, 30 May–3 June 2022; pp. 211–216.

- Kong, X.Y.; Yang, G.H. An intrusion detection method based on self-generated coding technology for stealthy false data injection attacks in train-ground communication systems. IEEE Trans. Ind. Electron. 2022, 70, 8468–8476.

- Fakhereldine, A. Security of Communication-Based Train Control Systems. Ph.D. Thesis, Queen’s University, Kingston, ON, Canada, 2025.

- Chowdhury, R.; Sen, S.; Goswami, A.; Purkait, S.; Saha, B. An implementation of bi-phase network intrusion detection system by using real-time traffic analysis. Expert Syst. Appl. 2023, 224, 119831.

- Sheikhi, S. An effective fake news detection method using WOA-xgbTree algorithm and content-based features. Appl. Soft Comput. 2021, 109, 107559.

- Jiang, H.; He, Z.; Ye, G.; Zhang, H. Network intrusion detection based on PSO-XGBoost model. IEEE Access 2020, 8, 58392–58401.

- Hsu, C.M.; Azhari, M.Z.; Hsieh, H.Y.; Prakosa, S.W.; Leu, J.S. Robust network intrusion detection scheme using long-short term memory based convolutional neural networks. Mob. Netw. Appl. 2021, 26, 1137–1144.

- Lv, L.; Wang, W.; Zhang, Z.; Liu, X. A novel intrusion detection system based on an optimal hybrid kernel extreme learning machine. Knowl.-Based Syst. 2020, 195, 105648.

- Liu, T.; Yao, J.; Sun, Q. Intrusion detection algorithm of EPSO combined with BP neural network. In Proceedings of the 2020 International Conference on Intelligent Transportation, Big Data & Smart City (ICITBS), Vientiane, Laos, 11–12 January 2020; pp. 893–896.

- Zhao, J.; Jing, X.; Yan, Z.; Pedrycz, W. Network traffic classification for data fusion: A survey. Inf. Fusion 2021, 72, 22–47.

- Abbasi, M.; Shahraki, A.; Taherkordi, A. Deep learning for network traffic monitoring and analysis (NTMA): A survey. Comput. Commun. 2021, 170, 19–41.

- Attique, D.; Wang, H.; Wang, P. Fog-assisted deep-learning-empowered intrusion detection system for RPL-based resource-constrained smart industries. Sensors 2022, 22, 9416.

- Azimjonov, J.; Kim, T. Stochastic gradient descent classifier-based lightweight intrusion detection systems using the efficient feature subsets of datasets. Expert Syst. Appl. 2024, 237, 121493.

- Tavallaee, M.; Bagheri, E.; Lu, W.; Ghorbani, A.A. A detailed analysis of the KDD CUP 99 data set. In Proceedings of the 2009 IEEE Symposium on Computational Intelligence for Security and Defense Applications, Ottawa, ON, Canada, 8–10 July 2009; pp. 1–6.

- Moustafa, N.; Turnbull, B.; Choo, K.K.R. An ensemble intrusion detection technique based on proposed statistical flow features for protecting network traffic of internet of things. IEEE Internet Things J. 2018, 6, 4815–4830.

- Moustafa, N.; Slay, J. UNSW-NB15: A comprehensive data set for network intrusion detection systems (UNSW-NB15 network data set). In Proceedings of the 2015 Military Communications and Information Systems Conference (MilCIS), Canberra, ACT, Australia, 10–12 November 2015; pp. 1–6.

- Siddique, K.; Akhtar, Z.; Khan, F.A.; Kim, Y. KDD cup 99 data sets: A perspective on the role of data sets in network intrusion detection research. Computer 2019, 52, 41–51.

- Tareq, I.; Elbagoury, B.M.; El-Regaily, S.; El-Horbaty, E.S.M. Analysis of ton-iot, unw-nb15, and edge-iiot datasets using dl in cybersecurity for iot. Appl. Sci. 2022, 12, 9572.

- Yaras, S.; Dener, M. IoT-based intrusion detection system using new hybrid deep learning algorithm. Electronics 2024, 13, 1053.

- De Menezes, F.S.; Liska, G.R.; Cirillo, M.A.; Vivanco, M.J. Data classification with binary response through the Boosting algorithm and logistic regression. Expert Syst. Appl. 2017, 69, 62–73.

- George, P.G.; Renjith, V. Evolution of safety and security risk assessment methodologies towards the use of bayesian networks in process industries. Process Saf. Environ. Prot. 2021, 149, 758–775.

- Sanders, R. The Pareto principle: Its use and abuse. J. Serv. Mark. 1987, 1, 37–40.

- Rainio, O.; Teuho, J.; Klén, R. Evaluation metrics and statistical tests for machine learning. Sci. Rep. 2024, 14, 6086.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Rezaei, H.; Bozorg-Haddad, O.; Chu, X. Grey wolf optimization (GWO) algorithm. In Advanced Optimization by Nature-Inspired Algorithms; Springer: Singapore, 2017; pp. 81–91.

- Mirjalili, S. SCA: A sine cosine algorithm for solving optimization problems. Knowl.-Based Syst. 2016, 96, 120–133.

- Wang, D.; Tan, D.; Liu, L. Particle swarm optimization algorithm: An overview. Soft Comput. 2018, 22, 387–408.

| Class | Training Set (records) | Testing Set (records) | Evaluation Set (records) |
|---|---|---|---|
| Backdoor | 45,526 | 11,382 | 14,227 |
| DDoS | 552,385 | 138,097 | 172,620 |
| DoS | 302,429 | 75,608 | 94,509 |
| Injection | 405,582 | 101,397 | 126,744 |
| Mitm | 94,259 | 23,565 | 29,456 |
| Normal | 142,710 | 35,678 | 44,597 |
| Password | 153,983 | 38,497 | 48,119 |
| Ransomware | 65,233 | 16,309 | 20,385 |
| Scanning | 639,757 | 159,940 | 199,924 |
| Xss | 188,960 | 47,240 | 59,050 |
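As a quick consistency check, the per-class shares in the dataset table can be recomputed in Python. Each row works out to the 64% / 16% / 20% proportions stated in Section 4.3, to within about one record of rounding.

```python
# Records per class from the dataset table: (training, testing, evaluation).
COUNTS = {
    "Backdoor": (45_526, 11_382, 14_227),
    "DDoS": (552_385, 138_097, 172_620),
    "DoS": (302_429, 75_608, 94_509),
    "Injection": (405_582, 101_397, 126_744),
    "Mitm": (94_259, 23_565, 29_456),
    "Normal": (142_710, 35_678, 44_597),
    "Password": (153_983, 38_497, 48_119),
    "Ransomware": (65_233, 16_309, 20_385),
    "Scanning": (639_757, 159_940, 199_924),
    "Xss": (188_960, 47_240, 59_050),
}
TARGET = (0.64, 0.16, 0.20)  # 0.8 * 0.8, 0.8 * 0.2, 0.2


def split_shares(counts):
    """Fraction of a class's records that falls in each subset."""
    total = sum(counts)
    return tuple(c / total for c in counts)


if __name__ == "__main__":
    for name, counts in COUNTS.items():
        shares = split_shares(counts)
        print(f"{name:<10} " + " / ".join(f"{100 * s:.1f}%" for s in shares))
```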

| No. | Feature Name | No. | Feature Name |
|---|---|---|---|
| 1 | src_ip | 11 | src_pkts |
| 2 | src_port | 12 | src_ip_bytes |
| 3 | dst_ip | 13 | dst_pkts |
| 4 | dst_port | 14 | dst_ip_bytes |
| 5 | proto | 15 | dns_qclass |
| 6 | duration | 16 | dns_qtype |
| 7 | src_bytes | 17 | dns_rcode |
| 8 | dst_bytes | 18 | http_request_body_len |
| 9 | conn_state | 19 | http_response_body_len |
| 10 | missed_state | 20 | http_status_code |

| Risk Level | Range |
|---|---|
| Low | [0, 25] |
| Medium | (25, 50] |
| High | (50, 75] |
| Extreme | (75, 100] |
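The exact Bayesian formulation is not given in this part of the text. The sketch below assumes one simple reading of it, in three steps:

- Bayes' rule combines a prior attack probability with the detector's true-positive and false-positive rates to give a posterior detection confidence.
- Hypothetical impact and context multipliers scale that confidence to a score on a 0-100 range.
- The score is mapped to the levels in the risk-level table above.

The multipliers and example numbers are assumptions, not values from the paper.

```python
def posterior_attack(prior, tpr, fpr):
    """Bayes' rule: P(attack | alarm) = TPR * prior / P(alarm)."""
    p_alarm = tpr * prior + fpr * (1.0 - prior)
    return tpr * prior / p_alarm


def risk_score(confidence, impact, context=1.0):
    """Hypothetical 0-100 score: confidence x attack impact x context, clipped."""
    return min(100.0, max(0.0, 100.0 * confidence * impact * context))


def risk_level(score):
    """Map a 0-100 score to Low [0, 25], Medium (25, 50], High (50, 75], Extreme (75, 100]."""
    if not 0.0 <= score <= 100.0:
        raise ValueError("score must lie in [0, 100]")
    if score <= 25:
        return "Low"
    if score <= 50:
        return "Medium"
    if score <= 75:
        return "High"
    return "Extreme"


if __name__ == "__main__":
    conf = posterior_attack(prior=0.1, tpr=0.95, fpr=0.05)  # about 0.679
    score = risk_score(conf, impact=0.9, context=1.1)       # about 67.2
    print(f"confidence={conf:.3f} score={score:.1f} level={risk_level(score)}")
```

Because the intervals are left-open, the boundary scores 25, 50, and 75 fall into the lower of the two adjacent levels.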

| Decision | Description |
|---|---|
| Monitoring and Logging | Record and monitor train status and anomalies to detect potential threats in real time |
| Limiting Train Communication | Limit the transmission rate of non-critical communications to prioritize train device data |
| Train Deceleration | Automatically initiate deceleration to ensure smooth operation during an attack |
| Emergency Braking | Trigger emergency braking to quickly stop the train in case of a severe threat |
| System Reset | Perform a secure reboot or restore the train device to a safe state |

| Component | Specification |
|---|---|
| Processor | Intel Core i7 (2.30 GHz, 11th Generation) |
| Operating System | Windows 10 |
| RAM | 32 GB |
| Language | Python |
| Matlab Version | Matlab R2022a |
| GPU | NVIDIA GeForce RTX 3060 |
| IDE | Jupyter Notebook |
| Numpy | 2.2.5 |
| PyTorch | 2.7.0 |
| Pandas | 2.2.3 |
| Scikit-learn | 1.6.1 |

| Parameter | Value |
|---|---|
| Batch Size | 1024 |
| Population Size | 20 |
| Maximum Iterations | 60 |
| Training Epochs | 80 |
| Hidden Layer Structure | [128, 64] |
| Learning Rate Scheduler | Cosine Annealing |
| Initial Learning Rate | 0.001 |
| Minimum Learning Rate | |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, C.; Zhang, W.; Sun, P.; Wang, H.; Yang, C. A Hybrid Security Framework for Train-to-Ground (T2G) Communication Using DOA-Optimized BPNN Detection, Bayesian Risk Scoring, and RL-Based Response. Sensors 2025, 25, 3208. https://doi.org/10.3390/s25103208


Chicago/Turabian style: Sun, Chaoyuan, Weijiao Zhang, Peng Sun, Hui Wang, and Chunhui Yang. 2025. "A Hybrid Security Framework for Train-to-Ground (T2G) Communication Using DOA-Optimized BPNN Detection, Bayesian Risk Scoring, and RL-Based Response." Sensors 25, no. 10: 3208. https://doi.org/10.3390/s25103208
