Abstract

Light detection and ranging (LiDAR) sensor technology for people detection offers a significant advantage in data protection. However, to design these systems cost- and energy-efficiently, the relationship between the measurement data and the final object detection output of deep neural networks (DNNs) has to be established. Therefore, this paper presents augmentation methods to analyze the influence of the distance, resolution, noise, and shading parameters of a LiDAR sensor in real point clouds for people detection. Furthermore, their influence on object detection using DNNs was investigated. For the measurement setup, the quality requirements for the point clouds could be reduced significantly with only minor degradation at the object list level. The DNNs PointVoxel-Region-based Convolutional Neural Network (PV-RCNN) and Sparsely Embedded Convolutional Detection (SECOND) both show a decrease in object detection performance of less than 5% for Gaussian noise of up to μ = … and σ = …, for a resolution reduced by a factor of up to 32, and for a fourfold increase in distance. In addition, both networks require an unshaded height of approx. 0.5 m from a detected person's head downwards to ensure good people detection performance without special training for these cases. The results obtained, such as the shadowing information, are transferred to a software program that determines the minimum number of sensors and their orientation based on the mounting height of the sensor, the sensor parameters, and the ground area under consideration, both for detection at the point cloud level and at the object detection level.

1. Introduction

In addition to the application field of highly automated driving, light detection and ranging (LiDAR) sensors are increasingly being used to count people in publicly accessible places and in security applications [1,2]. In these applications, it is important to reliably detect people in the sensor's working area while remaining as cost-effective as possible. Edge devices, on which the data are processed locally, are often used for this purpose [3]. For easy installation, they must be particularly energy-efficient. People are usually detected with neural networks. In order to design the entire sensor system as efficiently as possible, both the requirements for the optical sensor of the LiDAR and the required computing power of the entire edge device should be optimized. For this purpose, the influences of different LiDAR sensor parameters on the resulting object detection with deep neural networks (DNNs) must be investigated, as was done for cameras in [4]. Because of the black-box characteristics of DNNs [5], these relationships usually cannot be derived analytically and therefore have to be determined in a data-driven manner. Accordingly, this paper analyzes the correlation between sensor and sensor setup parameters and object detection using various DNNs to define future optimization potential. For this purpose, the dependence of DNN-based people detection on the distance between the scene and the sensor, on the resolution of the sensor, and on noise and shading in the point cloud is investigated.

The remainder of this paper is organized as follows. Section 2 discusses the related work on point cloud augmentation and LiDAR-based people detection using deep learning. The augmentation of the point clouds with respect to the resolution, distance, noise, and shading parameters is explained in Section 3. Section 4 describes the structure of the data set used, and Section 5 describes the deep learning methods used for training and testing. The effects of the augmented parameters on LiDAR-based object detection are discussed in Section 6. The work of this paper is summarized in Section 8, and an outlook is given in Section 9.

2. State of the Art

In the field of artificial neural networks (ANNs) based on camera image data, the influence of different camera effects has been investigated both on the accuracy of the camera system itself and, as in [6], on the prediction performance of object recognition algorithms and DNNs. In [7], the authors investigate the influence of noise and brightness on the performance of camera-based DNNs for object detection. For LiDAR data, the authors of [8] show the differences between image and point cloud object detection and the influence of distant objects on object detection in point clouds. To overcome the influence of different point densities at different distances, the authors of [9] present a method in which the point density of the objects to be detected is distributed evenly over the entire point cloud. However, the point density available for object detection depends on both the distance to the sensor and the sensor's resolution. This is why the authors of [10,11] work on making DNNs robust to interchanging data from LiDAR sensors with different resolutions. Sensor-specific effects also influence the quality of object detection with DNNs, as shown in [12] using the example of the motion distortion effect. Another important challenge, both in developing measurement systems used with deep learning methods and in developing the deep learning methods themselves, is the lack of data. For this reason, the authors of [13] present a measurement setup for automatically generating a labeled data set for people detection. In [14,15], the authors present a method for varying the pose of the point cloud in order to develop robust deep learning methods. The authors of [16] present a method for generating new synthetic point clouds by interpolating existing point clouds. In [17], new synthetic point clouds are generated by combining existing point clouds of rotating LiDAR sensors along the azimuth axis and tilting the inserted sections.
This allows the data set to be expanded artificially and makes the developed DNNs more transferable to other measurement setups. Augmenting existing point clouds is another way of artificially enlarging data sets and recreating certain effects [18]. In [19,20,21,22], methods for augmenting point clouds with a reduced resolution are presented. In [19], the point cloud is divided into different cubes, and random points in these cubes are removed. In [20], a position in the 2D view of the point cloud is selected randomly, and all points within a random radius around this position are removed. The authors of [21] present a method in which the key points of the point cloud are identified and removed using adversarial learning. In [22], edges are defined as key points from which only a few points are removed, and surfaces as zones from which more points can be removed. In [23], the shape of objects is distorted based on a vector field to better generalize object detection with DNNs. The same work also tests the sensitivity to noise, which is generated by adding critical points to the point cloud or deleting them from it. In [24,25], the point clouds are augmented with noise. In [24], random noise is added to the Cartesian coordinates of the points. The authors of [25] augment the homogeneous coordinates of each point of the point cloud with noise drawn from a uniform distribution.

However, none of these papers follows a hardware-oriented approach in which the resolution and noise of the point cloud are augmented according to the operating principle of the real LiDAR sensor's hardware.

This paper presents a functional principle-based augmentation of sensor and measurement setup parameters based on LiDAR point clouds for people detection. For this purpose, after the fundamentals of the sensor and the measurement setup have been considered, specific parameters of real measured point clouds for people detection are presented. Sensor-internal parameters, such as noise in the angle measurement of the mirrors and in the depth measurement as well as a lower resolution of the scan pattern in both the azimuth and elevation directions, are augmented close to the functional principle of the hardware. The mounting height and the shadowing of people by other objects are also augmented and discussed. The influences of the individual parameters on the performance of DNNs for object detection are then presented. Based on the acquired results, a software tool for optimizing LiDAR-based measurement setups for people detection is introduced. To the best of the authors' knowledge, this is the first work that applies such functional principle-based sensor parameter augmentations to real measured point clouds for people detection and quantifies their influence on the object detection performance of DNNs. In the following sections, the functionality of the sensors used is described in more detail.

2.1. ToF LiDAR Sensors

LiDAR sensors measure distances in space via reflected light beams to detect objects. They emit laser pulses, which hit obstacles in the sensor's scanning range, the so-called field of view (FoV), where they are partially reflected and then detected in the sensor. For so-called time-of-flight (ToF) LiDAR sensors, the ToF between the emission of the light pulse from the sensor and the re-arrival of the reflected pulse at the sensor's detector is measured. The radial distance r between the location of the reflection and the sensor is calculated from the speed of light c and the ToF [26], as shown in Equation (1).
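For a pulsed ToF sensor, the relation behind Equation (1) is commonly written as r = c · ToF / 2, where the factor 1/2 accounts for the pulse travelling to the object and back. A minimal Python sketch of this conversion (the function name `tof_to_range` is illustrative and not part of any sensor SDK):

```python
# Speed of light in vacuum in m/s (exact SI value); the small difference
# to the speed of light in air is ignored in this sketch.
SPEED_OF_LIGHT = 299_792_458.0


def tof_to_range(tof_s: float) -> float:
    """Return the radial distance r in metres for a round-trip time of flight in seconds.

    The pulse travels to the reflecting surface and back, so the one-way
    distance is half of c * ToF.
    """
    if tof_s < 0:
        raise ValueError("time of flight must be non-negative")
    return SPEED_OF_LIGHT * tof_s / 2.0


# A round trip of 20 ns corresponds to roughly 3 m.
print(round(tof_to_range(20e-9), 3))  # 2.998
```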

LiDAR sensors vary in size and performance depending on their area of application. However, strict safety standards must be observed when they are used in the vicinity of people, as the sensor's laser operates in a wavelength range that is invisible to humans, so the eye's natural protective mechanisms are limited. To ensure safety and prevent eye injuries, the average transmitting power of the laser is therefore limited [27]. This energy limitation makes it difficult to detect the received light power at the sensor detector, which is further reduced by diffuse scattering in the atmosphere, partial absorption at the object, and non-directional backscattering. Without any effect on the average transmission power, the laser beam can be focused more tightly, which increases the energy density. However, there is a risk that small spatial angles and homogeneous surfaces will result in total reflection of the light beam. For this reason, high-performance detectors must be used [28] to obtain detections at the point cloud level in challenging environments and at large distances. In addition to the ToF and the output angles of the laser beam, the relative velocity of an object with respect to the sensor can also be determined and the intensity of the returning light measured [28,29]. Different LiDAR sensor types control the direction of the laser pulse in various ways. The scanning mechanism of the sensor used in this work is presented below.

2.2. Qb2 Operating Principle

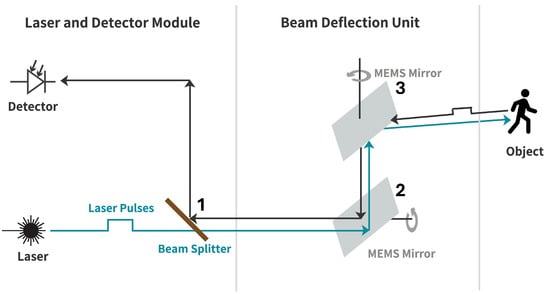

The Qb2 from Blickfeld GmbH (Munich, Germany) is used in this paper because it provides the data structure described in [13] for the automated labeling toolchain used and has a verifiable scan pattern. The Qb2 is a microelectromechanical system (MEMS)-based LiDAR sensor whose structure and operating principle are shown schematically in Figure 1. The laser beam passes through a beam splitter (1) and is emitted in the desired direction via two MEMS-based mirrors (2, 3). The two mirrors perform oscillating rotational movements to generate a defined scan pattern [26]. The measured distances and angles are saved in the form of point clouds.

Figure 1.

Working principle of the Qb2 LiDAR sensor [26].

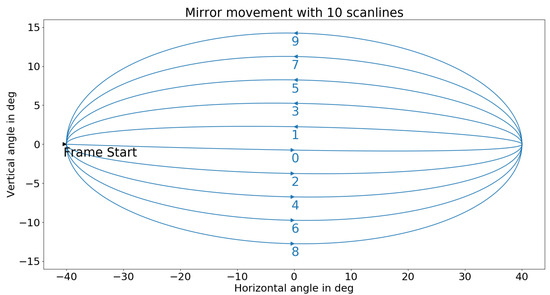

By oscillating the mirrors (2, 3) in Figure 1, the sensor generates an elliptical scan pattern, as shown in Figure 2.

Figure 2.

Visualization of the scan pattern of the Qb2 LiDAR sensor from Blickfeld GmbH [30].

2.3. Artificial Neural Networks for 3D Object Detection

Like their natural counterpart, the brain, ANNs consist of individual neurons, although the complexity of nature, with about … nerve cells in a human brain and an estimated … connections, remains unmatched by artificial applications to date [31]. The artificial neurons have inputs and outputs and are usually organized in different layers. An output value is generated from an input vector using an activation function and passed on via weighted connections. This creates an information flow from the neurons in the input layer via one or multiple inner layers to the output nodes. The learning method forms the basis of ANNs, as the weights of the connections between the nodes are adjusted according to its scheme during training. These adjustments make it possible to reproduce output values for identical and similar inputs and thus achieve a learning capability [32]. There are various training methods for ANNs. The supervised training used in this work requires labeled data. This means that a correct result is already available as a label, and the ANN's output is compared with it during training. The resulting error is then minimized. Other learning methods include unsupervised training, for example, for pattern recognition, and reinforcement learning, in which no error between the actual and desired output is calculated; instead, only feedback on success or failure is provided [33].
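As a minimal sketch of the neuron model described above (weighted inputs plus a bias, passed through an activation function) and of the error that supervised training minimizes, the following Python example uses a sigmoid activation and a squared error. Both choices, and all names, are illustrative assumptions and not the specific networks used in this work.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic activation function."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs: list[float], weights: list[float], bias: float) -> float:
    """Weighted sum of the input vector plus bias, passed through the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


def squared_error(prediction: float, label: float) -> float:
    """Error between the network output and the label in supervised training."""
    return (prediction - label) ** 2


y = neuron_output([1.0, 2.0], [0.5, -0.25], bias=0.0)
print(y)                      # 0.5, since the weighted sum is 0
print(squared_error(y, 1.0))  # 0.25
```

During training, backpropagation adjusts the weights and the bias in the direction that reduces this error over the labeled examples.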

2.3.1. Feedforward Neural Networks

Feedforward neural networks (FNNs) form one category of ANNs. These networks only have connections from one layer to the following layer. FNNs are fully connected if each neuron has a connection to each neuron in the next layer. There are also variants in which connections skip one or more layers [34].
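For illustration, a fully connected feedforward pass, in which every neuron of a layer receives every output of the previous layer and information flows in one direction only, can be written as repeated weighted sums. This is a generic sketch with ReLU activations and made-up weights, not one of the networks used later.

```python
def relu(v: list[float]) -> list[float]:
    """Rectified linear activation applied element-wise."""
    return [max(0.0, x) for x in v]


def dense(x: list[float], weights: list[list[float]], biases: list[float]) -> list[float]:
    """Fully connected layer: one weight row per output neuron, one column per input."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def feedforward(x: list[float], layers: list[tuple[list[list[float]], list[float]]]) -> list[float]:
    """Propagate x from the input layer through all layers to the output nodes."""
    for i, (weights, biases) in enumerate(layers):
        x = dense(x, weights, biases)
        if i < len(layers) - 1:  # no activation on the output layer in this sketch
            x = relu(x)
    return x


# 2 inputs -> 3 hidden neurons -> 1 output
layers = [
    ([[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]], [0.0, 0.0, 0.0]),
    ([[1.0, 1.0, 1.0]], [0.5]),
]
print(feedforward([2.0, 3.0], layers))  # [5.5]
```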

2.3.2. Convolutional Neural Networks

Convolutional neural networks (CNNs) are FNNs with limited connections between the layers, primarily designed for image processing. The pixel values of an image distributed across the input layer are grouped into small subsets to identify structures that emerge later in the network. For this purpose, the input values are convolved with filter kernels in convolution layers. The results of these operations are then reduced in pooling layers before the information is processed further in the hidden layers of an FNN [35]. Classical 2D CNNs perform worse on 3D data than on 2D images, so these models must be specially adapted for 3D applications. One method is to project the point clouds onto a plane to obtain different 2D views [36]. Another common practice is to divide the data into so-called voxels, small three-dimensional cubes that represent the points within their volume. Although this variant is computationally efficient, it also entails a loss of information within the voxels [37]. The two CNNs used in the course of this work are presented below. They are specially optimized for 3D object detection with LiDAR point clouds and are based on the basic concept of CNNs.
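The voxel representation mentioned above can be sketched as follows: each point is mapped to the integer index of the cube that contains it, and points sharing an index are grouped. The voxel size and the point coordinates below are arbitrary assumptions; detectors such as SECOND additionally limit the number of points per voxel and the number of voxels.

```python
import numpy as np


def voxelize(points: np.ndarray, voxel_size: float) -> tuple[np.ndarray, np.ndarray]:
    """Group an (N, 3) point array into cubic voxels.

    Returns the unique integer voxel coordinates of all occupied voxels
    and the number of points that fall into each of them.
    """
    indices = np.floor(points / voxel_size).astype(np.int64)
    voxel_coords, counts = np.unique(indices, axis=0, return_counts=True)
    return voxel_coords, counts


points = np.array([
    [0.05, 0.10, 0.02],
    [0.12, 0.18, 0.09],   # same 0.2 m voxel as the first point
    [1.05, 0.55, 0.31],
])
coords, counts = voxelize(points, voxel_size=0.2)
print(coords.tolist())  # [[0, 0, 0], [5, 2, 1]]
print(counts.tolist())  # [2, 1]
```

Only occupied voxels are returned, which is the property that sparse convolution exploits.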

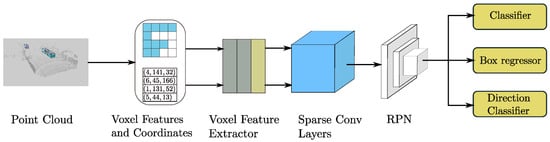

2.3.3. SECOND

Sparsely Embedded Convolutional Detection (SECOND) uses voxels, as described previously, for object detection. The coordinates of each voxel and the number of points it contains are recorded during voxel creation. After the voxel features are extracted from the individual points using an FNN, as shown in Figure 3, spatially sparse convolution is applied. This means that only voxels containing data points are considered in the CNN. Since a 3D data set contains large empty volumes, SECOND thus remains computationally efficient. Finally, the resulting features are processed by a region proposal network (RPN), which creates so-called regions of interest (ROIs). Within these, the exact positions of objects are determined, and the corresponding bounding boxes are created [36].
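The idea of spatially sparse convolution, computing only where data exist, can be illustrated with a dictionary of occupied voxels. The sketch below implements a submanifold-style variant (outputs only at already occupied voxels) with one scalar feature per voxel and a 3×3×3 kernel. Practical implementations use dedicated GPU sparse convolution libraries and multi-channel features, so this is only a conceptual model with hypothetical names.

```python
import itertools

import numpy as np


def sparse_conv3d(features: dict[tuple[int, int, int], float],
                  kernel: np.ndarray) -> dict[tuple[int, int, int], float]:
    """Submanifold-style sparse 3D convolution on a dict {voxel index: feature}.

    Outputs are computed only at occupied voxels, and empty neighbours
    contribute nothing, so the cost scales with the number of occupied
    voxels rather than with the full grid volume.
    """
    radius = kernel.shape[0] // 2
    offsets = list(itertools.product(range(-radius, radius + 1), repeat=3))
    out = {}
    for (i, j, k) in features:
        acc = 0.0
        for di, dj, dk in offsets:
            neighbour = (i + di, j + dj, k + dk)
            if neighbour in features:  # skip empty space
                acc += kernel[di + radius, dj + radius, dk + radius] * features[neighbour]
        out[(i, j, k)] = float(acc)
    return out


grid = {(0, 0, 0): 1.0, (0, 0, 1): 2.0, (10, 10, 10): 3.0}
result = sparse_conv3d(grid, np.ones((3, 3, 3)))
print(result)  # {(0, 0, 0): 3.0, (0, 0, 1): 3.0, (10, 10, 10): 3.0}
```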

Figure 3.

Structure of the SECOND model ([36], p. 4).

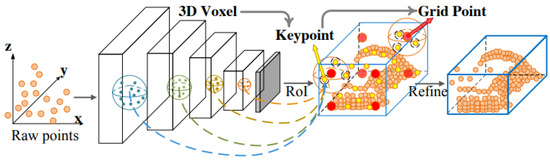

2.3.4. PV-RCNN

The PointVoxel-Region-based Convolutional Neural Network (PV-RCNN) also creates a defined number of voxels from the point clouds. Apart from processing these voxels using sparse convolution and creating ROIs, as in the SECOND model, it additionally uses point-based methods. Key points are sampled using furthest point sampling, which selects points that are as far apart from each other as possible so that they represent the scene evenly, and the voxel features are aggregated at these key points. Further information about the ROIs is then extracted from these key points. This combination of the two approaches, illustrated in Figure 4, gives PV-RCNN a high accuracy in object detection [37].
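Furthest point sampling itself is a simple greedy procedure: starting from one point, it repeatedly adds the point whose distance to the already selected set is largest. A compact NumPy sketch (the function name and the choice of the first point are assumptions):

```python
import numpy as np


def furthest_point_sampling(points: np.ndarray, n_samples: int, start: int = 0) -> np.ndarray:
    """Greedily select up to n_samples indices from an (N, 3) array that are spread out.

    min_dist[i] holds the distance from point i to its nearest already
    selected key point; the next key point is the one maximizing it.
    """
    n_samples = min(n_samples, len(points))
    if n_samples <= 0:
        return np.array([], dtype=int)
    selected = [start]
    min_dist = np.linalg.norm(points - points[start], axis=1)
    for _ in range(n_samples - 1):
        nxt = int(np.argmax(min_dist))
        selected.append(nxt)
        min_dist = np.minimum(min_dist, np.linalg.norm(points - points[nxt], axis=1))
    return np.array(selected)


pts = np.array([[0.0, 0.0, 0.0], [0.1, 0.0, 0.0], [5.0, 0.0, 0.0], [2.5, 0.0, 0.0]])
print(furthest_point_sampling(pts, 3).tolist())  # [0, 2, 3]
```

The nearby point at 0.1 m is skipped because it adds little coverage, which is the intended effect for key point selection.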

Figure 4.

Structure of the PV-RCNN model ([37], p. 1).

2.4. Validation Metrics

To evaluate the results of the DNNs on the different variations in the augmented data sets, metrics are required that describe the performance of the people detection models. As in [38], a threshold value for the Intersection over Union (IoU) is used; predictions whose IoU with a label reaches this threshold are considered true positives. As per Equation (2), the IoU is calculated as the ratio of the intersection to the union of the predicted bounding box and the label bounding box [38].
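The IoU of Equation (2) divides the intersection volume of a predicted box and a label box by their union volume. The sketch below assumes axis-aligned 3D boxes given as (x_min, y_min, z_min, x_max, y_max, z_max) for simplicity; the detectors considered here predict boxes rotated about the vertical axis, for which a rotated-box IoU is needed instead.

```python
def box_volume(box: tuple[float, ...]) -> float:
    """Volume of an axis-aligned box; empty boxes have volume 0."""
    x0, y0, z0, x1, y1, z1 = box
    return max(0.0, x1 - x0) * max(0.0, y1 - y0) * max(0.0, z1 - z0)


def iou_3d(pred: tuple[float, ...], label: tuple[float, ...]) -> float:
    """Intersection over Union of two axis-aligned boxes (min corner, max corner)."""
    # The intersection is again an axis-aligned box (possibly empty).
    inter = (
        max(pred[0], label[0]), max(pred[1], label[1]), max(pred[2], label[2]),
        min(pred[3], label[3]), min(pred[4], label[4]), min(pred[5], label[5]),
    )
    inter_vol = box_volume(inter)
    union_vol = box_volume(pred) + box_volume(label) - inter_vol
    return inter_vol / union_vol if union_vol > 0 else 0.0


pred = (0.0, 0.0, 0.0, 1.0, 1.0, 2.0)
label = (0.5, 0.0, 0.0, 1.5, 1.0, 2.0)
print(iou_3d(pred, label))  # 0.3333333333333333
```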

Precision is the ratio of the correct predictions (TP) of the neural network to the total number of predictions (TP + FP). It can be calculated using Equation (3) [38].

Recall is the ratio of the correct predictions (TP) to the total number of labeled objects (TP + FN). It can be calculated using Equation (4) [38].
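Once the predictions have been matched to the labels with the IoU threshold, precision and recall reduce to simple ratios of true positives (TP), false positives (FP), and false negatives (FN). A sketch with made-up counts:

```python
def precision(tp: int, fp: int) -> float:
    """Share of all predictions (TP + FP) that are correct."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of all labeled objects (TP + FN) that were found."""
    return tp / (tp + fn) if tp + fn else 0.0


# Example: 90 matched people, 10 false detections, 30 missed people
print(precision(90, 10))  # 0.9
print(recall(90, 30))     # 0.75
```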

The average precision (AP) is calculated, as described in [39], as the area under the precision-recall curve. The AP can be calculated from the precision P and the recall R according to Equation (5).
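One common way to evaluate this area numerically is all-point interpolation, as used in the PASCAL VOC benchmark since 2010: precision is first made monotonically decreasing, and rectangle areas are then summed wherever recall increases. The exact interpolation scheme of [39] and [40] may differ (KITTI-style evaluation, for example, samples a fixed number of recall points), so this is only a sketch.

```python
import numpy as np


def average_precision(recall: np.ndarray, precision: np.ndarray) -> float:
    """Area under the precision-recall curve (all-point interpolation).

    recall must be sorted in ascending order, as obtained by sweeping the
    confidence threshold from high to low.
    """
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    # Make precision monotonically decreasing from right to left.
    p = np.maximum.accumulate(p[::-1])[::-1]
    # Sum rectangle areas wherever recall increases.
    steps = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[steps + 1] - r[steps]) * p[steps + 1]))


rec = np.array([0.25, 0.5, 0.5, 0.75])
prec = np.array([1.0, 1.0, 0.67, 0.75])
print(average_precision(rec, prec))  # 0.6875
```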

To calculate the mean intersection over union (mIoU) of the bounding boxes, the IoU values of the n bounding boxes predicted by the neural network are averaged according to Equation (6).
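Written out, and assuming that IoU_i denotes the IoU of the i-th predicted box with its associated label, this average takes the form:

```latex
\mathrm{mIoU} = \frac{1}{n} \sum_{i=1}^{n} \mathrm{IoU}_i
```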

The values for recall, AP, and an average of the predicted number of objects were output as implemented in [40] according to [38,39]; the further metrics were implemented according to the requirements of this work.

3. Augmentation of the LiDAR Dataset

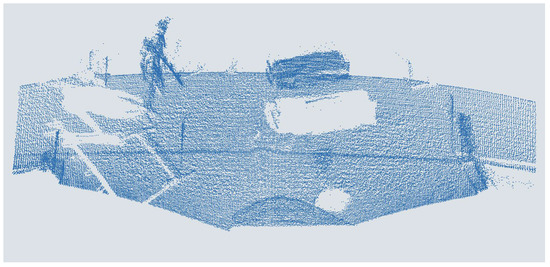

DNNs for LiDAR object detection are usually trained using supervised learning. During training, the deviation between the prediction of the network and the ground truth is calculated, and after each batch, the weights of the neurons in the ANN are adapted via backpropagation. A labeled data set is required for this. Therefore, the data set presented in [13] is used for the people detection considered in this work. This data set was generated with the Qb2 LiDAR sensor from Blickfeld GmbH [41] and contains 14,819 labeled point clouds. An exemplary point cloud is shown in Figure 5. The sensor was mounted stationary on the ceiling of the entrance area of the canteen at Kempten University of Applied Sciences at a height of approximately …; this area is almost … wide and around … long. The objects appearing in the LiDAR point clouds are mostly people walking or standing and various objects of different sizes that were placed in or moved through the area. At the edges of the Qb2's FoV, parts of the door frames and a wall can be seen. Labels were generated automatically using the object lists from reference cameras mounted in the room. The labels are stored as .txt files in the unified 3D box label format [30].

Figure 5.

Person in a point cloud of a MEMS-based scanning ToF LiDAR sensor.

In order to investigate the influences of the LiDAR sensor parameters resolution, distance, noise, and shading on people detection with DNNs, the effects of these sensor parameters on the point cloud must be represented in the respective data set. To implement this as time- and cost-efficiently as possible, these effects are augmented onto the existing real data set. In this way, the data set can build on existing data containing real scenes and people. Augmentation allows large amounts of data to be generated very quickly, whereas a corresponding generation with real hardware would be very time-consuming and cost-intensive. Simulating the point cloud from scratch with a high-fidelity sensor model, such as in [42], and a correspondingly complex scenario is also more time-consuming and computationally intensive, as all scenes, objects, and simulation parameters have to be defined and calculated. With the augmentations, however, only a slight deviation from reality is to be expected, as the intensity reduction in the weather-protected area is governed by a small extinction coefficient, and the deviation in the curvature of the scan lines only results in a slight shift in the input features of the DNNs due to the preceding voxelization. Nevertheless, synthetically generated or adjusted data may deviate to a certain extent from real data, for example, due to cross-effects between different parameters or simplifying assumptions. In particular, when augmenting the distance, the change in intensity is neglected, and the curvature of the scan lines is simplified. These limitations must therefore be assessed depending on the application and the trade-off between accuracy and efficiency.

3.1. Resolution Augmentation

Two parameters of the scan pattern determine the resolution of the MEMS-based LiDAR sensor used in this work. In the scan pattern shown in Figure 2, the number of scan lines in the elevation direction and the number of points per scan line, and thus the spacing between two neighboring points, in the azimuth direction can be adjusted. The experimental setup described in [13] and used in this paper provides no additional information from which a higher resolution could be augmented, and, as shown in [13], its point cloud resolution is already sufficient for people detection. Therefore, only a reduction in resolution is augmented. For this purpose, the azimuth and elevation resolution of the scan pattern are reduced, as described below.

3.1.1. Reduction in the Azimuth Resolution

To augment the data set with a lower azimuth resolution, corresponding to a sensor with a lower azimuth resolution, more and more points per scan line were deleted step by step. For this purpose, each point in a point cloud is assigned a point ID in Python (v.3.11.6) according to its scan sequence. In each step, every second remaining point was removed, which halves the number of points; this step size achieves a continuous and efficient reduction in resolution without the computational effort that an unnecessarily small step size would entail. In this way, the original quantity of around 83,000 points per point cloud was reduced to as few as 20 points. This reduction in point density results in twelve new versions of the original data set, corresponding to an increase in the sensor-to-object distance by a factor of up to 64 (2⁶). The lowest resolution corresponds to the point density on an object from a sensor at a distance of about 243 m. An exemplary point cloud is shown in Figure 6.
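A minimal sketch of this step-wise reduction, assuming the point cloud is a NumPy array already ordered by point ID (scan sequence): each step keeps every second remaining point and thus halves the point count, so 12 steps take 83,000 points down to about 20. Because the point density on an object falls with the square of the distance when both scan directions thin out, a 2¹² = 4096-fold reduction in points matches the density at a 2⁶ = 64-fold distance. The function name is hypothetical and not taken from the authors' code.

```python
import numpy as np


def reduce_azimuth_resolution(points: np.ndarray, steps: int) -> np.ndarray:
    """Halve the azimuth resolution `steps` times.

    `points` is an (N, C) array sorted by point ID, i.e. in scan order, so
    dropping every second row thins out each scan line along the azimuth.
    """
    for _ in range(steps):
        points = points[::2]
    return points


cloud = np.random.default_rng(0).normal(size=(83_000, 4))  # x, y, z, intensity
versions = [reduce_azimuth_resolution(cloud, s) for s in range(1, 13)]
print([len(v) for v in versions])
# [41500, 20750, 10375, 5188, 2594, 1297, 649, 325, 163, 82, 41, 21]
```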

Figure 6.

People in a point cloud augmented with one-eighth azimuth resolution from a MEMS-based scanning LiDAR sensor.

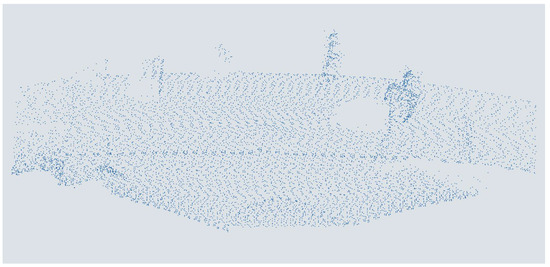

3.1.2. Reduction in the Elevation Resolution

To augment the data set with a lower elevation resolution, corresponding to a sensor with a lower elevation resolution, an increasing number of scan lines was deleted step by step. To do this, the individual points are first assigned to their scan lines by determining the minima and maxima of the x-values along the scan sequence, which mark the edge points of the scan lines. Then, of every two consecutive scan lines, the first one is removed and the second one is kept. In this way, seven augmented versions are generated from the original data set, down to 1095 points per point cloud, corresponding to the point density at an 8-fold mounting height. An exemplary point cloud is shown in Figure 7.
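The scan-line assignment can be sketched by looking for sign changes of the x-increments along the scan sequence, which mark the turning points at the ends of each line; removing the first of every two consecutive lines then halves the elevation resolution. This sketch assumes that the points are in scan order, that x increases or decreases strictly along each line, and one reading of the description above (lines 1, 3, 5, ... are kept); the helper names are hypothetical.

```python
import numpy as np


def assign_scan_lines(x: np.ndarray) -> np.ndarray:
    """Return a scan-line index per point, given x-values in scan order.

    A new line starts after each point where the direction of travel in x
    reverses, i.e. after a local minimum or maximum (the line's edge point).
    """
    if len(x) < 2:
        return np.zeros(len(x), dtype=int)
    direction = np.sign(np.diff(x))
    turns = np.concatenate(([False], direction[1:] != direction[:-1]))
    return np.concatenate(([0], np.cumsum(turns)))


def reduce_elevation_resolution(points: np.ndarray, x_column: int = 0) -> np.ndarray:
    """Remove the first of every two consecutive scan lines (keeps lines 1, 3, 5, ...)."""
    lines = assign_scan_lines(points[:, x_column])
    return points[lines % 2 == 1]


# Three synthetic scan lines sweeping back and forth in x; column 1 holds the point ID
x = np.array([0, 1, 2, 1, 0, 1, 2], dtype=float)
pts = np.column_stack([x, np.arange(7.0)])
print(assign_scan_lines(x).tolist())  # [0, 0, 0, 1, 1, 2, 2]
print(reduce_elevation_resolution(pts)[:, 1].tolist())  # [3.0, 4.0]
```

Applying the function repeatedly halves the number of scan lines in each step.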

Figure 7.

People in a point cloud augmented with one-eighth elevation resolution from a MEMS-based scanning LiDAR sensor.

3.2. Distance Augmentation

If the distance between the sensor and the object is increased, the distance between the points in the point cloud and the origin of the sensor coordinate system increases on the one hand, and the point density on the object decreases on the other. In addition, the intensity of the measured, partially reflected laser pulse decreases due to attenuation in the atmosphere, and the scene captured by the sensor becomes larger. To augment the influence of a greater distance between the sensor and the working area in the point cloud, the existing point cloud must therefore be shifted away from the coordinate origin in the y-direction, the point density on the object must be reduced, and the surrounding scene must be extended to fill the FoV of the LiDAR sensor. The change in intensity over distance was not addressed within the scope of this work. The focus is on the geometric properties, as the extinction coefficient for the weather-protected measurement setup is small, and the influence of intensity on DNNs based on point clouds, as in [43], is often negligible. This augmentation of the point cloud is discussed in more detail in the following sections.

3.2.1. Reduction in the Resolution

To augment the distance between the sensor and the object in the data set on a sensor-specific basis, the scan pattern of the Qb2 in Figure 2 must be considered. For this augmentation, the reductions in resolution described in Section 3.1 are combined. As a result, the point density on the object decreases with increasing distance in both dimensions, azimuth and elevation, in accordance with real data. The data sets processed in this way comprise three versions with simulated 2-fold, 4-fold, and 8-fold mounting heights, i.e., with a quarter, a sixteenth, and a sixty-fourth of the points.
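The relation between the simulated mounting-height factor H and the retained share of points follows from the point spacing on the object growing linearly with distance in both azimuth and elevation, so the point density falls with 1/H². The short calculation below reproduces the quarter, sixteenth, and sixty-fourth stated above and shows how many halving steps per scan direction this implies; the starting count of 83,000 points is taken from Section 3.1.1, and the helper name is hypothetical.

```python
import math


def halving_steps_per_axis(height_factor: int) -> int:
    """Halving steps needed in azimuth and in elevation for an H-fold distance."""
    steps = math.log2(height_factor)
    if not steps.is_integer():
        raise ValueError("this sketch only supports powers of two")
    return int(steps)


for h in (2, 4, 8):
    k = halving_steps_per_axis(h)
    share = 1 / (2 ** k) ** 2  # azimuth and elevation each reduced by 2**k
    print(h, k, share, round(83_000 * share))
# 2 1 0.25 20750
# 4 2 0.0625 5188
# 8 3 0.015625 1297
```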

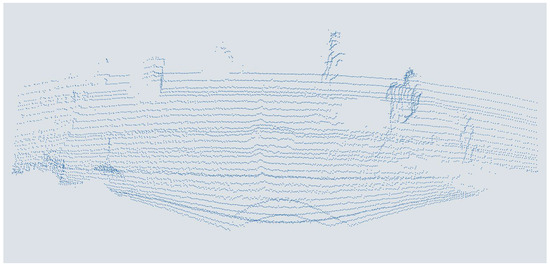

3.2.2. Expanding the Measured Point Cloud

The height multiplier H is introduced to expand the point cloud of the surrounding scene in the sensor's FoV. In addition to the reduced point density, the FoV, which is increased by a factor of …, should also be taken into account to make the adjustment as close to reality as possible; for this reason, new points are added around the remaining original data. The FoV is increased by a factor of … to achieve a continuous and efficient increase in height without the computational effort that an unnecessarily small step size would entail. Since no measured information is available for the added area, which does not exist in the test area, a ground surface is used. This approximation assumes that cut-off objects and their shadows at the edge of the original FoV are not continued in the generated part. For this purpose, a reference height must be determined as the average of the highest values of the existing points. To extend the existing scan lines on both sides, the azimuth angles are first determined from the Cartesian coordinates of the edge points, as described in Equation (7).

Since these edge points should remain the maximum and minimum azimuth values, their new azimuth angles can be calculated by adjusting the y-values of the original edge points, as described in Equation (8). The two values form the limits of an interval in which the azimuth angles of the points to be created are located. The angular spacing of the new points should correspond to the average spacing between the remaining points on the scan line.
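Filling the widened azimuth interval (and, analogously, the elevation interval for new scan lines) with new sample angles at the average spacing of the existing points can be sketched as below. Equations (7) and (8) themselves are not reproduced here; the sketch simply takes the existing angles and the new interval limits in radians as given, and all names are hypothetical.

```python
import numpy as np


def extend_angles(existing: np.ndarray, new_min: float, new_max: float) -> np.ndarray:
    """Generate angles outside [existing.min(), existing.max()] up to the new limits.

    The spacing equals the mean spacing of the sorted existing angles, so
    the added points continue the scan line at its original resolution.
    """
    existing = np.sort(existing)
    step = float(np.mean(np.diff(existing)))
    # Small tolerances keep a limit that falls exactly on the grid.
    lower = np.arange(existing[0] - step, new_min - 1e-12, -step)[::-1]
    upper = np.arange(existing[-1] + step, new_max + 1e-12, step)
    return np.concatenate((lower, upper))


old = np.radians([-10.0, -5.0, 0.0, 5.0, 10.0])
added = extend_angles(old, np.radians(-20.0), np.radians(20.0))
print(np.round(np.degrees(added), 6).tolist())  # [-20.0, -15.0, 15.0, 20.0]
```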

In addition, complete scan lines must be added at the top and bottom, which is why the elevation angle is calculated from the Cartesian coordinates, as described in Equation (9). As with the azimuth angle, an interval can be determined in which new scan lines are inserted at the average elevation spacing.

Using the reference height of the ground, the height multiplier H, and the generated angles, the remaining Cartesian coordinates can be determined, as described in Equations (10) and (11).

A noise function is applied to the values of the newly generated points for a more realistic arrangement. Finally, a height offset is added to the y-values of the original points and the labels to adjust the mounting height in the y-coordinates. The augmented point cloud thus replicates an increased mounting height of the sensor much better than a simple reduction in point density. However, the modified data differ somewhat from real measurements. On the one hand, the scan lines are not straight but converge at the ends, as shown in Figure 2. The scan lines can nevertheless be extended from their endpoints, as the sensor already cuts them off before their intersection point. On the other hand, as shown in Figure 8, they must be continued with a constant elevation angle to avoid an excessively high point density in the middle of the sides of the FoV. In addition, the shadows of objects at the edge of the original area cannot be continued in the newly generated ground, and no change in intensity is assumed. These assumptions lead to deviations in the point distribution, and thus in the scan pattern of the point cloud, which can influence the predictions of the DNNs. Due to the black-box characteristics of the DNNs, this influence cannot be determined precisely by analytical means. However, it can be assumed that the influence on object detection is transferable between scan patterns. This also applies to the relationship between objects at the edge of the original FoV and their shadows: as the pattern is consistent, the DNNs are likely to learn it, which should lead to only a slight deviation in the results. The deviation in the curvature of the scan lines only results in a slight shift in the input features of the DNNs due to the preceding voxelization. The influence of the simplified intensity reduction can be classified as minor, as the extinction coefficient for the weather-protected measurement setup is small, and the intensity feature has only a minor influence, as shown, for example, in [43].
However, due to the deviation, the trained models should not be transferred directly to an application; instead, they should be optimized with real data and evaluated for robustness.
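Equations (10) and (11) are not reproduced in this text, so the following sketch assumes a simple geometry: the sensor looks along +y towards the ground, and a ground point at distance y has x = y·tan(azimuth) and z = y·tan(elevation). Under these assumptions, new ground points are generated at the scaled reference height H·y_ref with small Gaussian noise on y, and the original points are shifted by (H − 1)·y_ref. The angle convention, the noise level, the offset formula, and the function names are all illustrative assumptions, not the authors' equations.

```python
import numpy as np


def generate_ground_points(azimuth: np.ndarray, elevation: np.ndarray, y_ref: float,
                           height_factor: float, noise_std: float = 0.01,
                           rng=None) -> np.ndarray:
    """Create (N, 3) ground points for all azimuth/elevation pairs.

    Assumed convention: y is the distance along the sensor's viewing axis,
    x = y * tan(azimuth), z = y * tan(elevation).
    """
    if rng is None:
        rng = np.random.default_rng()
    az, el = np.meshgrid(azimuth, elevation, indexing="ij")
    # Ground at the scaled reference height, with Gaussian noise on y.
    y = np.full(az.shape, height_factor * y_ref) + rng.normal(0.0, noise_std, az.shape)
    x = y * np.tan(az)
    z = y * np.tan(el)
    return np.column_stack((x.ravel(), y.ravel(), z.ravel()))


def raise_mounting_height(points: np.ndarray, y_ref: float, height_factor: float) -> np.ndarray:
    """Shift original points (and, analogously, labels) away from the sensor along y."""
    shifted = points.copy()
    shifted[:, 1] += (height_factor - 1.0) * y_ref
    return shifted


ground = generate_ground_points(np.radians([-30.0, 30.0]), np.radians([0.0]), y_ref=3.0,
                                height_factor=2.0, noise_std=0.0,
                                rng=np.random.default_rng(0))
print(np.round(ground, 3).tolist())  # [[-3.464, 6.0, 0.0], [3.464, 6.0, 0.0]]
```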

Figure 8.

Point cloud of people augmented with double distance from a scanning ToF LiDAR sensor.

3.3. Noise Augmentation

Figure 1 shows the working principle of a scanning ToF LiDAR sensor. To capture 3D information about its environment, the sensor calculates the position of a reflection in the point cloud from the ToF and the azimuth and elevation angles of the emitted laser pulse, and it also measures the intensity of the reflected laser pulse. These fundamental measurement variables can be influenced by noise. Internal sources, such as temperature variations, edge effects, and clock errors, introduce deviations in range and angle measurements. In contrast, external sources, including material properties, ambient light, and vibrations, distort signal intensity and geometry. These combined influences degrade the ability of the LiDAR sensor to capture precise spatial information. Random noise sources such as background radiation noise, detector noise, and circuit noise all follow Gaussian probability density functions, as stated in [44]. In [45], the authors show a Gaussian noise distribution for the ToF measurement. This is why a Gaussian noise distribution is used as the basis for the augmentation of the point clouds described below. These works do not specify whether this distribution has a non-zero mean, which would suggest a systematic bias, or a zero mean, which would suggest entirely random noise. Real-world LiDAR noise is likely to combine both, resulting from software processing, instrument imperfections, and environmental circumstances. The robustness and performance of trained machine learning algorithms may be affected if the augmentation considers only zero-mean or only non-zero-mean Gaussian noise and thus fails to capture the underlying properties of LiDAR noise. The Gaussian distribution, also called the normal distribution, is a continuous probability distribution with a bell-shaped curve [46] that is determined by its mean μ and standard deviation σ. Equation (12) gives the probability density function of a Gaussian distribution, as in [46].
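For reference, the standard form of the Gaussian probability density function with mean μ and standard deviation σ is:

```latex
f(x \mid \mu, \sigma) = \frac{1}{\sigma \sqrt{2\pi}} \exp\!\left( -\frac{(x - \mu)^2}{2\sigma^2} \right)
```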

In this work, several noise-augmented data sets are generated by adding noise distributions with different values of both μ and σ to the point clouds, as shown in Figure 9. The noise is increased step by step until a test group of ten people, whose experience ranged from no prior exposure to point clouds to having labeled approx. 300,000 point clouds, could no longer reliably distinguish people from other objects in the point clouds with the naked eye. Despite their different levels of experience, all participants identified the same threshold. This threshold value is then subdivided and combined with to show the progression of the influence and the individual parameters. Table 1 shows the μ and σ combinations used for the augmentation of the point clouds. It should be noted that the distributions and , as well as and , are purely theoretical distributions that have no relevance in practice but are included for completeness and clarity of the evaluation.

Figure 9.

People in a point cloud augmented with Gaussian distributed noise with and from a MEMS-based scanning ToF LiDAR sensor.

Table 1.

Augmented parameters of Gaussian distributions.

To assess the influence of noise in the various measured variables of the sensor on object detection, the original data set is augmented with each parameter combination in Table 1, separately for the geometric quantities ToF, azimuth angle, and elevation angle of the emitted laser pulse, as well as for the intensity values. In addition, combined noise on the angle measurements (azimuth and elevation) and on the ToF and angle measurements (ToF, azimuth, and elevation) is augmented. In this way, 66 different data sets with different noise parameters are created.
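The per-quantity augmentation can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the points are assumed to be an (N, 4) NumPy array with columns x, y, z, intensity in a sensor-centred frame; ToF noise is expressed as range noise via r = c·t/2, so a ToF standard deviation σ_t corresponds to a range standard deviation c·σ_t/2; and the function name `augment_gaussian_noise` and its channel names are hypothetical.

```python
import numpy as np


def augment_gaussian_noise(points, noise, rng=None):
    """Add Gaussian noise to the measured quantities of a LiDAR point cloud.

    points: (N, 4) array with columns x, y, z, intensity (assumed layout).
    noise: dict mapping "range", "azimuth", "elevation", or "intensity" to a
        (mu, sigma) tuple; angles in radians, range in metres. Channels that
        are not listed stay unchanged.
    """
    rng = np.random.default_rng() if rng is None else rng
    x, y, z, intensity = (points[:, i].astype(float) for i in range(4))

    # Cartesian -> spherical measurement space (range, azimuth, elevation).
    r = np.sqrt(x**2 + y**2 + z**2)
    az = np.arctan2(y, x)
    ratio = np.divide(z, r, out=np.zeros_like(z), where=r > 0)
    el = np.arcsin(np.clip(ratio, -1.0, 1.0))
    channels = {"range": r, "azimuth": az, "elevation": el, "intensity": intensity}

    # Draw independent N(mu, sigma) samples for every point of each noisy channel.
    for name, (mu, sigma) in noise.items():
        channels[name] = channels[name] + rng.normal(mu, sigma, size=len(points))

    # Spherical -> Cartesian, keeping the (possibly noisy) intensity as 4th column.
    r, az, el = channels["range"], channels["azimuth"], channels["elevation"]
    return np.column_stack([
        r * np.cos(el) * np.cos(az),
        r * np.cos(el) * np.sin(az),
        r * np.sin(el),
        channels["intensity"],
    ])
```

Combined noise, as used for the azimuth + elevation and ToF + azimuth + elevation data sets, corresponds to passing several channels at once, e.g. `{"azimuth": (0.0, s), "elevation": (0.0, s)}` for some standard deviation `s`.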

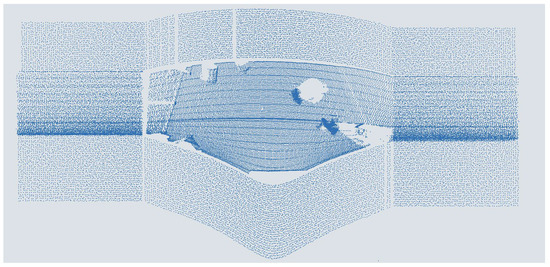

3.4. Shading Augmentation

Shadowing can occur when people stand close together or when the sensor is not mounted centrally. As described in [13], this means that other obstacles in the direction of the laser beam partially obscure people or objects. For example, very short people can disappear completely from the point cloud, while at least the head of an adult is usually captured. To simulate occlusions of various sizes, points directly above the ground are removed in strips with heights ranging from to in steps. This covers the range from no shading to complete occlusion of an adult of average height [47] with steadily increasing shading, while avoiding the excessive computational effort that an overly small step size would cause. An example is shown in Figure 10. The aim is to determine how much of a body must be visible for people to be detected reliably. The points to be removed are identified by their y-values after sorting the data points by height and are then deleted.
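A minimal sketch of this shading step, assuming an (N, k) NumPy array of points and a known ground height: the points are sorted by height so that the strip to be removed is one contiguous slice, which is then located by binary search. The name `augment_shading` and its parameters are hypothetical; `height_axis=1` would correspond to the y-values mentioned above if y is the vertical axis of the sensor frame.

```python
import numpy as np


def augment_shading(points, shade_height, ground_level=0.0, height_axis=2):
    """Delete all points with ground_level < height <= ground_level + shade_height.

    This emulates an occlusion that hides everything in a horizontal strip
    directly above the ground. The returned points are ordered by height.
    """
    points = np.asarray(points)
    # Sort by height so that the strip to remove is one contiguous slice.
    order = np.argsort(points[:, height_axis], kind="stable")
    sorted_points = points[order]
    heights = sorted_points[:, height_axis]
    # Binary search for the first index above the ground and above the strip.
    start = np.searchsorted(heights, ground_level, side="right")
    stop = np.searchsorted(heights, ground_level + shade_height, side="right")
    return np.concatenate([sorted_points[:start], sorted_points[stop:]])
```

Points lying exactly at `ground_level` are kept, so the ground plane itself is not removed; sweeping `shade_height` over the chosen step values yields one shaded data set per step.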

Figure 10.

People in a point cloud augmented with 1 m shading from a MEMS-based scanning ToF LiDAR sensor.

4. Dataset for the Influence Analysis

Based on the measurement setup presented in [13], a data set is recorded with the Qb2 (SN: ZXRPMBIAC, Firmware: CHIARA v1.12.7) to train the DNNs. From this data set, further data sets with different resolutions, distances to the scene, noise levels, and degrees of shading of people in the point cloud of the LiDAR sensor are augmented to investigate the individual influence of each parameter on the object detection performance of DNNs. To train DNNs for people detection, the data sets are converted into the custom data set format of the OpenPCDet Toolbox described in [40]. For this purpose, the point clouds are converted from .pcd format to .npy files in the x, y, z, format, and the labels are converted from JSON format to the unified 3D box definition label format for each object i and the maximum number of detectable classes n, as described in [13] with : .
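The conversion can be sketched as below. This is an illustrative sketch, not the authors' tooling: it reads only ASCII-encoded .pcd files, assumes the point features x, y, z, intensity and, to our understanding of the OpenPCDet custom-data-set tutorial, a `points/*.npy` and `labels/*.txt` layout with one `x y z dx dy dz heading_angle category_name` line per box. The JSON keys `center`, `size`, `yaw`, and `label`, as well as all function names, are hypothetical, since the source label schema is not reproduced here.

```python
import json
from pathlib import Path

import numpy as np


def read_ascii_pcd(path, fields=("x", "y", "z", "intensity")):
    """Read an ASCII-encoded .pcd file into an (N, len(fields)) float32 array."""
    lines = Path(path).read_text().splitlines()
    header_fields = None
    for i, line in enumerate(lines):
        key, _, value = line.partition(" ")
        if key == "FIELDS":
            header_fields = value.split()
        elif key == "DATA":
            if value.strip() != "ascii":
                raise NotImplementedError("this sketch only reads DATA ascii")
            if header_fields is None:
                raise ValueError("FIELDS line missing before DATA")
            data = np.loadtxt(lines[i + 1:], ndmin=2)
            columns = [header_fields.index(f) for f in fields]
            return data[:, columns].astype(np.float32)
    raise ValueError("no DATA line found")


def box_to_openpcdet_line(box):
    """Format one box as 'x y z dx dy dz heading_angle category_name'."""
    x, y, z = box["center"]  # hypothetical JSON keys
    dx, dy, dz = box["size"]
    values = (x, y, z, dx, dy, dz, box["yaw"])
    return " ".join(f"{v:.3f}" for v in values) + f" {box['label']}"


def convert_frame(pcd_path, json_path, out_dir, frame_id):
    """Write points/<frame_id>.npy and labels/<frame_id>.txt below out_dir."""
    out_dir = Path(out_dir)
    (out_dir / "points").mkdir(parents=True, exist_ok=True)
    (out_dir / "labels").mkdir(parents=True, exist_ok=True)
    np.save(out_dir / "points" / f"{frame_id}.npy", read_ascii_pcd(pcd_path))
    boxes = json.loads(Path(json_path).read_text())
    lines = [box_to_openpcdet_line(box) for box in boxes]
    (out_dir / "labels" / f"{frame_id}.txt").write_text("\n".join(lines) + "\n")
```

Binary-encoded .pcd files would need a different reader, for example one of the established point cloud libraries.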

In this way, 95 data sets with 14,819 different labeled point clouds are generated for the training and testing of the DNNs. Each data set is divided into train, test, and validation data, as shown in Table 2. A sample of each data set is publicly available at https://huggingface.co/datasets/LukasPro/PointCloudPeople (accessed on 11 March 2025) and licensed under the CC-BY-4.0.

Table 2.

Training, test, and validation data split.

5. Artificial Neural Network Training

The OpenPCDet Toolbox (v0.5.2) is used to train the DNNs. The data sets are loaded as custom data sets in the configuration file, and the SECOND and PV-RCNN networks are selected for training. Since only people are to be detected, the configurations of both network architectures are adapted to the person class contained in the data sets. Two scenarios are examined during training and testing. In the first, a network is trained with optimal data, i.e., the original data, and then tested with the augmented data; this corresponds to deploying a network trained on high-quality data on more cost-efficient sensor hardware. In the second, the networks are both trained and tested with the augmented data. The hyperparameters learning rate and batch size are optimized, and both network architectures are trained for a maximum of 100 epochs each.

6. Results

This section presents the results of training and testing the DNNs SECOND and PV-RCNN with the different augmented data sets, organized in the following subsections by augmented parameter. For each augmented data set, the DNNs are trained with the original data set and tested with the corresponding augmented data set (E), and trained and tested with the augmented data set (TE). For calculating the AP and , as described in Section 2.4, a threshold value of is used to calculate the confusion metrics, as in [39]. Both DNNs show promising people detection results on the original, non-augmented data set. The SECOND network achieves an and a for the best epoch. The PV-RCNN achieves an and a of for the best epoch. Thus, both networks achieve very good people detection results for both metrics compared to the existing literature, as reported for SECOND in [36,37,48] and for PV-RCNN in [37,48]. To assess the influence of the augmented effects on the predictions of the DNNs, the metric is primarily used in the remainder of this work for the best possible comparability, since the AP presented in [39] is only partially applicable.
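Section 2.4 is not reproduced here, so the exact AP variant used is not restated. As a generic reference only, a Pascal VOC-style all-point interpolated AP can be sketched as follows, assuming the detections have already been matched to the ground truth at the chosen IoU threshold; the function name and its inputs are hypothetical.

```python
import numpy as np


def average_precision(scores, is_true_positive, num_ground_truth):
    """All-point interpolated AP from detections already matched to ground truth.

    scores: confidence of each detection.
    is_true_positive: 1 if the detection matched an unused ground-truth box at
        the chosen IoU threshold, else 0 (the matching itself is not shown).
    num_ground_truth: number of ground-truth objects.
    """
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_true_positive, dtype=float)[order]
    tp_cum, fp_cum = np.cumsum(tp), np.cumsum(1.0 - tp)
    recall = tp_cum / num_ground_truth
    precision = tp_cum / (tp_cum + fp_cum)

    # Precision envelope: the best precision achieved at any recall >= r.
    mrec = np.concatenate([[0.0], recall, [1.0]])
    mpre = np.concatenate([[0.0], precision, [0.0]])
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]
    # Sum the rectangle areas wherever recall increases.
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))
```

For three detections ranked TP, FP, TP against two ground-truth people, the sketch returns 5/6: recall 0.5 is reached at precision 1, and recall 1.0 at precision 2/3.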

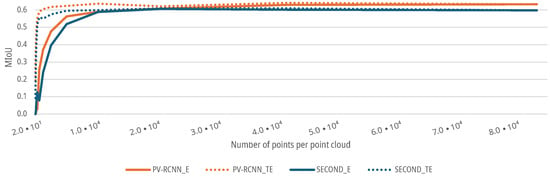

6.1. Results for Azimuth Resolution Augmentation

For the data sets with augmented azimuth resolution, as described in Section 3.1.1, both SECOND and PV-RCNN show a lower for a lower azimuth resolution of the corresponding point cloud scenes. The initial of both DNNs of for SECOND and for PV-RCNN remains stable for both the E- and TE-cases down to a resolution of 10,402 points per point cloud with a degradation (see Figure 11). Beyond that point, the of both DNNs in the E-case decreases further with decreasing azimuth resolution. In the TE-case, the of both DNNs only drops to a of for SECOND and for PV-RCNN down to a resolution of 2601 points, before it also decreases significantly for both networks with decreasing resolution (see Figure 11). Thus, both DNNs show that the prediction accuracy decreases with decreasing azimuth resolution. For the present measurement setup or scenario, the azimuth resolution of the sensor could be reduced by a factor of 8 without significant degradation in the prediction accuracy of the DNNs. The results also show that even networks trained with high-resolution data deliver good results up to an eightfold reduction in azimuth resolution, with a degradation of the of <5% with for SECOND and for PV-RCNN; by training the DNNs with lower-resolution data, this performance can even be maintained up to a 32-fold reduction in resolution, with a degradation of the of <5% with for SECOND and for PV-RCNN.
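The "<5% degradation" criterion used in this and the following subsections can be expressed as a small helper. It assumes the degradation is measured relative to the metric on the original data set, i.e. (baseline - metric) / baseline; if absolute percentage points are meant instead, the `degradation` line would change accordingly. The function name is hypothetical.

```python
def max_factor_within_tolerance(baseline, results, tolerance=0.05):
    """Largest reduction factor before the relative degradation first reaches `tolerance`.

    baseline: metric on the original (non-augmented) data set.
    results: iterable of (factor, metric) pairs, e.g. resolution reduction factors.
    Returns None if the smallest tested factor already violates the tolerance.
    """
    best = None
    for factor, metric in sorted(results):
        degradation = (baseline - metric) / baseline  # relative drop
        if degradation >= tolerance:
            break  # stop at the first factor that violates the criterion
        best = factor
    return best
```

Stopping at the first violation mirrors the reading of the curves above, where the metric stays stable up to a certain factor and then decreases.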

Figure 11.

Impact of augmented azimuth resolution on the of DNNs for people detection.

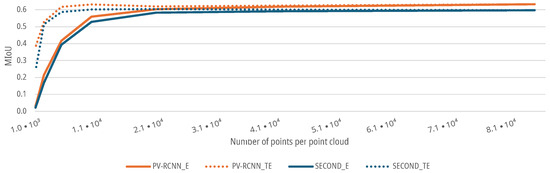

6.2. Results for Elevation Resolution Augmentation

For the data sets with augmented elevation resolution, as described in Section 3.1.2, both DNNs behave similarly to the case of reduced azimuth resolution. SECOND and PV-RCNN show a lower for a lower elevation resolution of the respective point cloud scenes. The initial of both DNNs of for SECOND and for PV-RCNN remains stable for both the E- and TE-cases down to a resolution of 20,804 points per point cloud with a degradation (see Figure 12). Subsequently, the of both DNNs in the E-case decreases further with decreasing elevation resolution. In the TE-case, the of both networks only drops to a of for SECOND and for PV-RCNN down to a resolution of 5201 points, before it also decreases sharply with decreasing resolution (see Figure 12). Thus, both DNNs show that the prediction accuracy decreases with decreasing elevation resolution. For the present measurement setup or scenarios, the elevation resolution of the sensor could be reduced by a factor of 4 without significant degradation in the prediction accuracy of the DNNs. The results also show that, in this case, even DNNs trained with high-resolution data deliver good results up to a fourfold reduction in elevation resolution, with a deterioration of the of <5% with for SECOND and for PV-RCNN; by training the DNNs with lower-resolution data, the performance can even be maintained up to a 16-fold reduction in resolution, with a decrease in the of <5% with for SECOND and for PV-RCNN.

Figure 12.

Impact of augmented elevation resolution on the of DNNs for people detection.

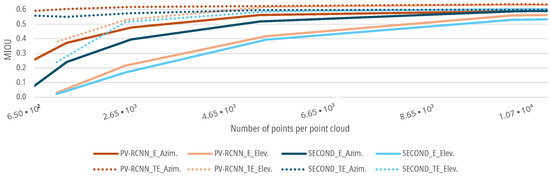

Figure 13 shows that, for both DNNs and both training methods, the curves fall more steeply when the elevation resolution is reduced than when the azimuth resolution is reduced. Thus, for the present measurement setup, the elevation resolution has a greater influence on people detection by the DNNs than the azimuth resolution of the sensor. Due to the black box behavior of the DNNs, the reason for this cannot be derived analytically. However, the difference could be due to the data set: groups of people who know each other tend to walk side by side rather than one behind the other and therefore require less resolution in the elevation direction in this measurement setup. It could also be due to the voxelization of the input features. In the implementation of the OpenPCDet Toolbox, the voxels have different resolutions in the longitudinal, lateral, and height directions. The voxels in the height direction, which is primarily influenced by the elevation resolution, are larger, so the resolution in this direction is lower. This can lead to a cross-influence of the elevation resolution augmentation on the prediction quality of the models in the measurement setup used here. A more detailed analysis of this correlation is left for future work, as discussed in Section 9.

Figure 13.

Impact comparison of augmented azimuth and elevation resolution on the of DNNs for people detection.

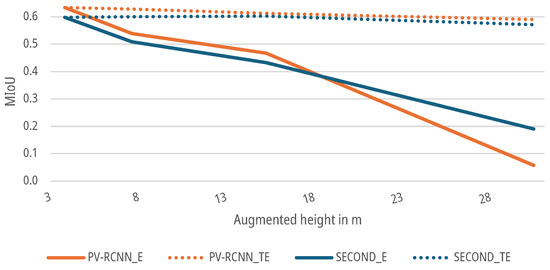

6.3. Results for Distance Augmentation

For the data sets with an augmented higher mounting height, as described in Section 3.2, SECOND and PV-RCNN are again trained and tested for both the E-case and the TE-case. As shown in Figure 14, the of both DNNs in the E-case drops to a of for SECOND and for PV-RCNN up to an augmented height of . Both networks already exceed the 5% degradation threshold at the first measurement point, where the distance of the original measurement setup is doubled. In the TE-case, the of both networks drops only marginally, to a of for SECOND and for PV-RCNN at a working distance of . Here, the networks stay within the threshold up to the measurement point at a measurement height of , with for SECOND and for PV-RCNN compared to the initial data sets.
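To illustrate why an increased distance acts similarly to a reduced resolution, the following sketch estimates the number of points on a flat target facing the sensor under a small-angle approximation: neighbouring points are spaced by roughly distance × angular step in each direction, so the count scales with 1/distance². It ignores beam divergence, the overhead viewing geometry, and the actual Qb2 scan pattern; the function name, target size, and angular steps are hypothetical inputs.

```python
import math


def points_on_target(width_m, height_m, distance_m, az_step_deg, el_step_deg):
    """Approximate number of scan points on a flat target facing the sensor.

    Small-angle approximation: neighbouring points are about distance * step
    apart in each direction, so the count falls with 1 / distance**2.
    """
    spacing_az = distance_m * math.radians(az_step_deg)
    spacing_el = distance_m * math.radians(el_step_deg)
    return (width_m / spacing_az) * (height_m / spacing_el)
```

Under this approximation, quadrupling the distance reduces the number of points on the target by a factor of 16, as does reducing both the azimuth and the elevation resolution by a factor of 4. This is in line with the crossover effects anticipated in Section 9, but it is not a model of the measured results.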

Figure 14.

Impact of augmented height on the of DNNs for people detection.

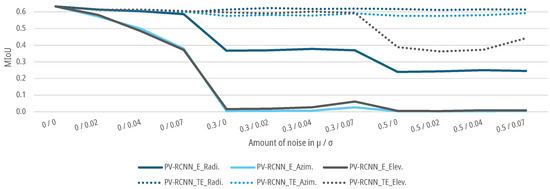

6.4. Results for Noise Augmentation

For the data sets with augmented noise on the geometric parameters of the point clouds, as described in Section 3.3, SECOND and PV-RCNN are again trained and tested for the E-case and the TE-case. As shown in Figures 15 and 16, the of both DNNs in the E-case drops to a of for azimuth noise and for elevation noise for SECOND, and to for azimuth noise and for elevation noise for PV-RCNN, up to an augmented noise of and . The DNNs fall below the threshold for noise with a and with for azimuth noise and for elevation noise for SECOND, and for azimuth noise and for elevation noise for PV-RCNN. For radial noise, both DNNs in the E-case drop to a of for SECOND and for PV-RCNN up to an augmented noise of and . The DNNs fall below the threshold for noise with a and for SECOND with and for PV-RCNN with . In the E-case, both DNNs are significantly less sensitive to noise in the radial measurement than to noise in the azimuth or elevation measurement. In the TE-case, the of both DNNs drops only marginally, to a of , , and for radial, azimuth, and elevation noise, respectively, for SECOND, and to , , and for PV-RCNN. This means that the DNNs can learn people detection on data with noise in the radial, azimuth, or elevation measurement without significant degradation. The results of both models in the E-case show that has a more substantial effect on the models than the of the noise. This could be due to the voxelization in the deep learning methods: owing to the lower effective resolution and the averaging, is already attenuated during data processing before the actual DNN, whereas a propagates into the network and can be compensated there only to a limited extent. As shown in Figures 15 and 16, this is critical for the E-cases, as the model cannot compensate for the bias without having been trained on it.
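The suggested mechanism, that averaging inside a voxel attenuates σ but not μ, can be illustrated with a short simulation. It assumes that each of the N points in a voxel carries independent noise drawn from N(μ, σ) and that the voxel feature is their plain mean; this is a simplification of the actual feature encoders of SECOND and PV-RCNN, and the function name is hypothetical.

```python
import numpy as np


def voxel_mean_error(mu, sigma, points_per_voxel, trials=10_000, seed=0):
    """Error of a voxel's mean position when every point carries N(mu, sigma) noise.

    Returns (mean of the error, standard deviation of the error) over many
    simulated voxels.
    """
    rng = np.random.default_rng(seed)
    noise = rng.normal(mu, sigma, size=(trials, points_per_voxel))
    voxel_error = noise.mean(axis=1)  # mean pooling of the points in each voxel
    return float(voxel_error.mean()), float(voxel_error.std())
```

The spread of the voxel mean shrinks roughly as σ/√N, while its offset stays at μ, so in this simplified model a bias survives voxelization and reaches the network largely unchanged.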

Figure 15.

Impact of augmented noise on the of SECOND for people detection.

Figure 16.

Impact of augmented noise on the of PV-RCNN for people detection.

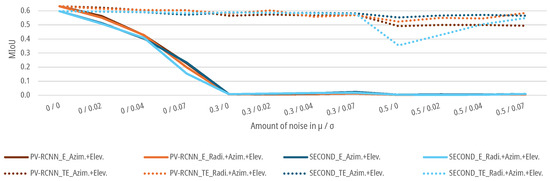

In addition, noise is combined for the azimuth and elevation measurements as well as for the radial, azimuth, and elevation measurements. In these cases, the object detection performance of both models drops more sharply for both test cases with slight noise on the measurement variables up to and than with a single noisy measurement variable, as shown in Figure 17. No significant deviations are observed for higher noise in the TE-case. Both DNNs can learn the correlations from the data for both test cases. However, from a noise level of onwards, both models show a slight deterioration in learning the correlations for combined noise.

Figure 17.

Impact of combined augmented noise on the radial, azimuth, and elevation measurement on the of DNNs for people detection.

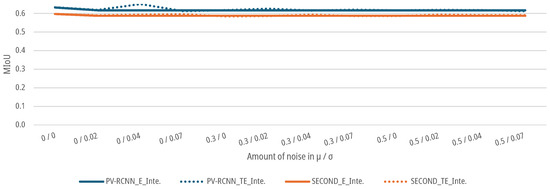

As shown in Figure 18, neither SECOND nor PV-RCNN shows significant sensitivity to the data sets augmented with noise on the intensity values of the point cloud. In the E-case, both DNNs drop to a of for SECOND and for PV-RCNN up to an augmented noise of and . The prediction accuracy of the DNNs does not fall below the threshold. In the TE-case, the of both networks drops only marginally, to a of for SECOND and for PV-RCNN at an augmented noise of and . Again, the networks do not exceed the threshold compared to the initial data sets.

Figure 18.

Impact of augmented noise on the intensity parameters on the of DNNs for people detection.

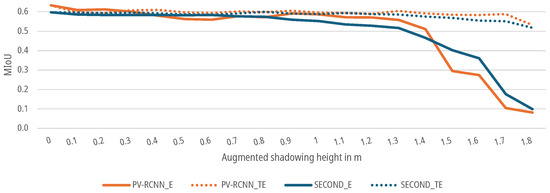

6.5. Results for Shading Augmentation

For the augmented data sets that emulate shading, as described in Section 3.4, both SECOND and PV-RCNN show a continuous decrease in the with increasing augmented shading in both the E-case and the TE-case, as shown in Figure 19. In the E-case, the of the predictions of both DNNs drops only marginally between 0 and augmented shading, to a of for SECOND and a of for PV-RCNN. In the range from to , the then drops significantly to for SECOND and for PV-RCNN. SECOND undercuts the 5% threshold at with , and PV-RCNN at with . In the TE-case, the of the predictions of both DNNs drops only marginally up to a shading height of , to a of for SECOND and a of for PV-RCNN. The 5% threshold is reached at an augmented shading height of for SECOND with and, for PV-RCNN, for the first time at with and with . In the range from to , the of both DNNs begins to drop significantly. For test subjects whose height lies in this range, the retrained networks show that they can detect people from only very few points and the shadows those people cast on the ground plane.

Figure 19.

Impact of augmented shadowing on the of DNNs for people detection.

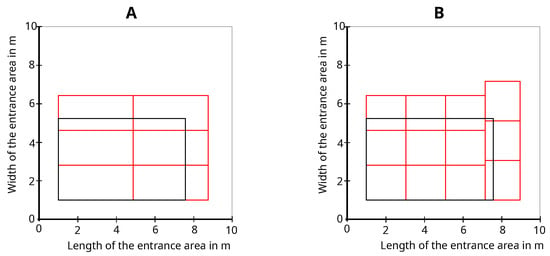

7. Optimum Number of Sensors and Sensor Position

To cover a working area as cost-effectively and efficiently as possible, the number of sensors must be minimized, since the sensors account for a significant proportion of the cost of the entire measurement setup. In [13], a sufficient ceiling height is calculated to ensure that a sensor detects at least one point of each person whose height lies between the 5th and 95th percentiles of body heights in Germany, at typical distances between people. Overlapping FoVs of different sensors are not considered since, according to [13], overlap reduces the efficiency of the measurement setup without guaranteeing reliability. This approach is combined with the results from Section 6.5, where a maximum shadowing of can be accepted with a degradation of less than 5% in the TE-case. The TE-case was selected because it reflects the implementation of a real measurement setup, in which the models would presumably be trained on the data that this setup provides. For a test group with a mean height of [47], combined with the maximum shadowing of , a minimum height of of the person's body that must be captured in the FoV of the sensors can be defined for the given body height distribution; the DNNs used here need this height to detect people reliably without being explicitly trained on shadowing. Thus, is defined in the program as the minimum height from which a person can be detected; in contrast to [13], this allows the number of sensors to be optimized for detection at object detection level rather than at point level. Based on this method, a program is developed that finds the optimal arrangement of LiDAR sensors for working areas of different sizes, so that the minimum number of sensors can be determined to save costs and resources. The program inputs are the sensor parameters horizontal FoV angle and vertical FoV angle , the mounting height h, and the length l and width b of the working area.
The program then generates a plot of the optimized sensor positions above the working area for detection at both point cloud level and object detection level. Figure 20 shows how the program calculates the optimum sensor alignment.

Figure 20.

Flowchart of the program calculating the minimum number of sensors for a defined working area.

The setup-dependent maximum permissible FoV, which forms the basis for optimizing the number and orientation of the sensors, is calculated with Equation (13) from the minimum scan height of for shadowed people detection and from the minimum distance between two people and their height difference described in [49]. For the measurement setup used to generate the data set, this optimization yields the number of sensors and sensor orientation shown in Figure 21A for detection at point cloud level and in Figure 21B for detection at object list level. The black rectangle shows the given working area, and the red rectangles show the detection area of each sensor. Compared with detection at point cloud level in Figure 21A, the detection area for detection at object list level in Figure 21B is visibly smaller, because object detection requires a minimum unshaded height of a person's body.
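The program itself and Equation (13) are not reproduced here. The following sketch only shows the basic tiling step under simplifying assumptions: a rectangular working area, identical ceiling-mounted sensors looking straight down, no FoV overlap (as in [13]), a regular grid, and usable FoV angles that have already been limited, for example to the maximum permissible FoV. The function name and the `plane_height_m` parameter (the height above the floor at which the footprints are evaluated) are hypothetical.

```python
import math


def sensors_needed(length_m, width_m, mount_height_m, fov_h_deg, fov_v_deg, plane_height_m=0.0):
    """Tile a rectangular working area with identical, non-overlapping sensor footprints.

    fov_h_deg / fov_v_deg: usable full opening angles of one sensor.
    Both sensor orientations are tried. Returns (number_of_sensors, rotated),
    where rotated=True means the horizontal FoV is aligned with the width.
    """
    distance = mount_height_m - plane_height_m
    if distance <= 0:
        raise ValueError("mounting height must exceed the evaluation plane height")
    foot_h = 2 * distance * math.tan(math.radians(fov_h_deg) / 2)
    foot_v = 2 * distance * math.tan(math.radians(fov_v_deg) / 2)

    def tiles(extent, footprint):
        # Small tolerance so exact multiples are not rounded up by float error.
        return math.ceil(extent / footprint - 1e-9)

    normal = tiles(length_m, foot_h) * tiles(width_m, foot_v)
    rotated = tiles(length_m, foot_v) * tiles(width_m, foot_h)
    return (rotated, True) if rotated < normal else (normal, False)
```

In this simplified model, a smaller permissible FoV, such as the one required at object detection level, shrinks the footprints and can only keep or increase the sensor count; trying both orientations matters for sensors whose horizontal and vertical FoVs differ.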

Figure 21.

Optimized number and orientation of the sensors for detection at point cloud level (A) and at object detection level (B) for the measurement setup.

8. Conclusions

This article investigates the influence of different LiDAR sensor and mounting parameters on the people detection performance of DNNs by augmenting a real data set. For this purpose, 95 data sets are generated by varying the sensor and mounting parameters resolution, noise, scene distance, and shadowing of detected people, and their influence on the people detection performance of different state-of-the-art DNNs is compared. Through hardware-oriented augmentation of the original data set, real scenarios are processed and evaluated with respect to people detection performance. The results show that, within the measurement setup used in this project, the resolution of the sensors can be reduced and the working distance increased with only minor degradation at object list level of less than 5%, for a resolution reduced by up to a factor of 32 or a distance increased by a factor of 4. In addition, a higher noise level can be tolerated in the geometric and intensity measurements, with a degradation at object list level of less than 5% for Gaussian noise up to and . The results show that the tested DNNs are more sensitive to reduced elevation resolution than to reduced azimuth resolution. This could be due to the information extraction in the DNNs, which partially compensates for a wide spread of the distribution, whereas a shift of the entire distribution also shifts the information. Due to the black box characteristics of the DNNs, a fully analytical approach is not feasible here either. In addition, the networks show a higher sensitivity to noise in the azimuth and elevation measurements than to noise in the ToF measurement. For the shading of people, it is shown that of a person's body should be mapped in the point cloud from the head downwards to guarantee reliable people detection.
Based on this information, a software program is presented that determines the minimum number of sensors required and their position from the parameters of the measurement setup for detection at both point cloud and object detection levels. The results make it possible to optimize the sensor systems and the entire measurement setup, making LiDAR people detection systems more efficient, cost-effective, and reliable.

9. Outlook

Based on the results presented in this paper, LiDAR sensors can be developed more specifically for people counting and security applications in the future. Using these results, sensor manufacturers can reduce costs and increase the energy efficiency of their sensors through adapted sensor technology. The augmentation methods presented can also enrich data sets and thus contribute to developing and training more robust DNNs. In addition, the insights gained into shadowing and the software for optimizing the number of sensors in an overall measurement setup can contribute to the wider adoption of LiDAR-based people counting and security applications and thus further improve reliability and data protection. Future work should investigate how combinations of the parameters considered separately here, as well as different model configurations such as the voxelization resolution, affect object detection, since crossover effects are likely. This applies, for example, to the combination of an increased distance and a lower resolution: the increased distance already reduces the point density on a measurement object, which influences object detection quality, and a lower sensor resolution would reduce the point density on the object even further and further impair object detection quality. Similar effects could occur when noise is combined with a lower point density on the measurement object, for example, due to a lower resolution or a greater distance.

Author Contributions

Conceptualization, L.H.; data curation, L.H., F.S., J.Z. and S.D.; methodology, L.H.; software, L.H., F.S., J.Z. and S.D.; supervision, T.Z., M.K. and A.W.K.; validation, L.H., F.S., J.Z. and S.D.; visualization, L.H., F.S., J.Z. and S.D.; writing—original draft, L.H.; writing—review and editing, L.H., T.Z., M.K., F.B., M.J. and A.W.K. All authors have read and agreed to the published version of the manuscript.

Funding

This paper shows results from the project LiPeZ. This project is supported by the German Federal Ministry for Economic Affairs and Climate Action (BMWK) on the basis of a decision by the German Bundestag (Grant number: KK5227004DF1). The authors acknowledge the financial support from the Ministry; the responsibility for the content remains with the authors.

Data Availability Statement

A subset of the original data presented in this study is openly available at https://huggingface.co/datasets/LukasPro/PointCloudPeople (accessed on 11 March 2025) and licensed under CC-BY-4.0.

Conflicts of Interest

Author Florian Bindges was employed by the company Blickfeld GmbH. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Blickfeld GmbH. 3D-LiDAR-Basierter Perimeterschutz. Available online: https://www.blickfeld.com/de/anwendungen/security/ (accessed on 17 December 2024).

- Blickfeld GmbH. Datenschutzkonforme Personenzählung und Analyse von Menschenmengen. Available online: https://www.blickfeld.com/de/anwendungen/crowd-analytics/ (accessed on 17 December 2024).

- IBM Deutschland GmbH. What is Edge AI? Available online: https://www.ibm.com/topics/edge-ai (accessed on 17 December 2024).

- Borel-Donohue, C.; Young, S.S. Image quality and super resolution effects on object recognition using deep neural networks. In Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications; SPIE: Baltimore, MD, USA, 2019; Volume 11006, pp. 596–604. [Google Scholar]

- University of Michigan. AI’s Mysterious ‘Black Box’ Problem, Explained. Available online: https://umdearborn.edu/news/ais-mysterious-black-box-problem-explained (accessed on 17 December 2024).

- Wu, Y.; Tsotsos, J. Active control of camera parameters for object detection algorithms. arXiv 2017, arXiv:1705.05685. [Google Scholar]

- Rodríguez-Rodríguez, J.A.; López-Rubio, E.; Ángel-Ruiz, J.A.; Molina-Cabello, M.A. The Impact of Noise and Brightness on Object Detection Methods. Sensors 2024, 24, 821. [Google Scholar] [CrossRef] [PubMed]

- Engels, G.; Aranjuelo, N.; Arganda-Carreras, I.; Nieto, M.; Otaegui, O. 3D object detection from lidar data using distance dependent feature extraction. arXiv 2020, arXiv:2003.00888. [Google Scholar]

- Corral-Soto, E.R.; Grandhi, A.; He, Y.Y.; Rochan, M.; Liu, B. Effects of Range-based LiDAR Point Cloud Density Manipulation on 3D Object Detection. In Proceedings of the 2024 IEEE Intelligent Vehicles Symposium (IV), Jeju Island, Republic of Korea, 2–5 June 2024; pp. 3166–3172. [Google Scholar]

- Richter, J.; Faion, F.; Feng, D.; Becker, P.B.; Sielecki, P.; Glaeser, C. Understanding the Domain Gap in LiDAR Object Detection Networks. arXiv 2022, arXiv:2204.10024. [Google Scholar]

- Theodose, R.; Denis, D.; Chateau, T.; Frémont, V.; Checchin, P. A deep learning approach for LiDAR resolution-agnostic object detection. IEEE Trans. Intell. Transp. Syst. 2021, 23, 14582–14593. [Google Scholar] [CrossRef]

- Haider, A.; Haas, L.; Koyama, S.; Elster, L.; Köhler, M.H.; Schardt, M.; Zeh, T.; Inoue, H.; Jakobi, M.; Koch, A.M.; et al. Modeling of Motion Distortion Effect of Scanning LiDAR Sensors for Simulation-based Testing. IEEE Access 2024, 12, 13020–13036. [Google Scholar] [CrossRef]

- Haas, L.; Zedelmeier, J.; Bindges, F.; Kuba, M.; Zeh, T.; Jakobi, M.; Koch, A.W. Data Protection Regulation Compliant Dataset Generation for LiDAR-Based People Detection Using Neural Networks. In Proceedings of the 2024 Conference on AI, Science, Engineering, and Technology (AIxSET), Laguna Hills, CA, USA, 30 September–2 October 2024; pp. 98–105. [Google Scholar] [CrossRef]

- Zhang, C.; Li, A.; Zhang, D.; Lv, C. PCAlign: A general data augmentation framework for point clouds. Sci. Rep. 2024, 14, 21344. [Google Scholar] [CrossRef] [PubMed]

- Morin-Duchesne, X.; Langer, M.S. Simulated lidar repositioning: A novel point cloud data augmentation method. arXiv 2021, arXiv:2111.10650. [Google Scholar]

- Chen, Y.; Hu, V.T.; Gavves, E.; Mensink, T.; Mettes, P.; Yang, P.; Snoek, C.G. Pointmixup: Augmentation for point clouds. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part III 16; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 330–345. [Google Scholar]

- Xiao, A.; Huang, J.; Guan, D.; Cui, K.; Lu, S.; Shao, L. Polarmix: A general data augmentation technique for lidar point clouds. Adv. Neural Inf. Process. Syst. 2022, 35, 11035–11048. [Google Scholar]

- Zhu, Q.; Fan, L.; Weng, N. Advancements in point cloud data augmentation for deep learning: A survey. Pattern Recognit. 2024, 153, 110532. [Google Scholar] [CrossRef]

- Zhang, Z.; Girdhar, R.; Joulin, A.; Misra, I. Self-supervised pretraining of 3D features on any point-cloud. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Online, 19–25 June 2021; pp. 10252–10263. [Google Scholar]

- He, Y.; Zhang, Z.; Wang, Z.; Luo, Y.; Su, L.; Li, W.; Wang, P.; Zhang, W. IPC-Net: Incomplete point cloud classification network based on data augmentation and similarity measurement. J. Vis. Commun. Image Represent. 2023, 91, 103769. [Google Scholar] [CrossRef]

- Ma, W.; Chen, J.; Du, Q.; Jia, W. PointDrop: Improving object detection from sparse point clouds via adversarial data augmentation. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 10004–10009. [Google Scholar]

- Li, D.; Shi, G.; Li, J.; Chen, Y.; Zhang, S.; Xiang, S.; Jin, S. PlantNet: A dual-function point cloud segmentation network for multiple plant species. ISPRS J. Photogramm. Remote Sens. 2022, 184, 243–263. [Google Scholar] [CrossRef]

- Lehner, A.; Gasperini, S.; Marcos-Ramiro, A.; Schmidt, M.; Mahani, M.A.N.; Navab, N.; Busam, B.; Tombari, F. 3D-VField: Adversarial augmentation of point clouds for domain generalization in 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17295–17304. [Google Scholar]

- Kong, L.; Liu, Y.; Chen, R.; Ma, Y.; Zhu, X.; Li, Y.; Hou, Y.; Qiao, Y.; Liu, Z. Rethinking range view representation for lidar segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 228–240. [Google Scholar]

- Zhang, W.; Xu, X.; Liu, F.; Zhang, L.; Foo, C.S. On automatic data augmentation for 3D point cloud classification. arXiv 2021, arXiv:2112.06029. [Google Scholar]

- Blickfeld GmbH. Qb2 Documentation. Available online: https://docs.blickfeld.com/qb2/Qb2/index.html (accessed on 29 September 2024).

- Wang, C.; Yang, X.; Xi, X.; Nie, S.; Dong, P. Introduction to LiDAR Remote Sensing; CRC Press: Boca Raton, FL, USA, 2024. [Google Scholar]

- Gotzig, H.; Geduld, G.O. LIDAR-Sensorik; Springer Fachmedien Wiesbaden: Wiesbaden, Germany, 2015; pp. 317–334. [Google Scholar]

- Haas, L.; Leuze, N.; Haider, A.; Kuba, M.; Zeh, T.; Schöttl, A.; Jakobi, M.; Koch, A.W. VelObPoints: A Neural Network for Vehicle Object Detection and Velocity Estimation for Scanning LiDAR Sensors. In 2024 IEEE SENSORS; IEEE: New York, NY, USA; pp. 1–4.

- Blickfeld GmbH. Scan Pattern. Available online: https://docs.blickfeld.com/qb2/Qb2/working_principles/scan_pattern.html (accessed on 18 December 2024).

- Traeger, M.; Eberhart, A.; Geldner, G.; Morin, A.M.; Putzke, C.; Wulf, H.; Eberhart, L.H.J. Künstliche neuronale Netze [Artificial Neural Networks]. Der Anaesthesist 2003, 52, 1055–1061.

- Paetz, J. Neuronale Netze: Grundlagen [Neural Networks: Fundamentals]; Springer: Berlin/Heidelberg, Germany, 2006; pp. 27–41.

- Krauss, P. Wie Lernt Künstliche Intelligenz? [How Does Artificial Intelligence Learn?]; Springer: Berlin/Heidelberg, Germany, 2023; pp. 125–138.

- Lippe, W.-M. Künstliche Neuronale Netze [Artificial Neural Networks]; Springer: Berlin/Heidelberg, Germany, 2006; pp. 45–243.

- Dörn, S. Neuronale Netze [Neural Networks]; Springer: Berlin/Heidelberg, Germany, 2017; pp. 89–148.

- Yan, Y.; Mao, Y.; Li, B. SECOND: Sparsely Embedded Convolutional Detection. Sensors 2018, 18, 3337.

- Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. PV-RCNN: Point-voxel feature set abstraction for 3D object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10529–10538.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. 3D Object Detection Evaluation 2017. Available online: https://www.cvlibs.net/datasets/kitti/eval_object.php?obj_benchmark=3d (accessed on 18 December 2024).

- OpenPCDet Development Team. OpenPCDet: An Open-source Toolbox for 3D Object Detection from Point Clouds. Available online: https://github.com/open-mmlab/OpenPCDet (accessed on 22 May 2024).

- Blickfeld GmbH. Qb2 Datasheet. Available online: https://www.blickfeld.com/lidar-sensor-products/qb2/ (accessed on 22 May 2024).

- Haider, A.; Pigniczki, M.; Köhler, M.H.; Fink, M.; Schardt, M.; Cichy, Y.; Zeh, T.; Haas, L.; Poguntke, T.; Jakobi, M.; et al. Development of High-Fidelity Automotive LiDAR Sensor Model with Standardized Interfaces. Sensors 2022, 22, 7556.

- Zeh, T.; Haas, L.; Haider, A.; Kastner, L.; Kuba, M.; Jakobi, M.; Koch, A.W. Data-Driven Performance Evaluation of Machine Learning for Velocity Estimation Based on Scan Artifacts from LiDAR Sensors. SAE Int. J. CAV 2025, 8, 11.

- Jiang, Y.; Zhu, J.; Jiang, C.; Xie, T.; Liu, R.; Wang, Y. Adaptive Suppression Method of LiDAR Background Noise Based on Threshold Detection. Appl. Sci. 2023, 13, 3772.

- Belhedi, A.; Bartoli, A.; Bourgeois, S.; Gay-Bellile, V.; Hamrouni, K.; Sayd, P. Noise modelling in time-of-flight sensors with application to depth noise removal and uncertainty estimation in three-dimensional measurement. IET Comput. Vis. 2015, 9, 967–977.

- Chugani, V. Gaussian Distribution: A Comprehensive Guide. Available online: https://www.datacamp.com/tutorial/gaussian-distribution (accessed on 22 January 2025).

- Statista GmbH. Mittelwerte von Körpergröße, -gewicht und BMI bei Männern in Deutschland nach Altersgruppe im Jahr 2021 [Mean Values of Body Height, Weight, and BMI for Men in Germany by Age Group in 2021]. Available online: https://de.statista.com/statistik/daten/studie/260920/umfrage/mittelwerte-von-groesse-gewicht-und-bmi-bei-maennern-nach-alter/ (accessed on 28 April 2025).

- Xu, Q.; Zhong, Y.; Neumann, U. Behind the curtain: Learning occluded shapes for 3D object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Volume 36, pp. 2893–2901.

- Galaktionow, B. Wie Viel Nähe Schickt Sich [How Much Closeness Is Appropriate?]. Available online: https://www.sueddeutsche.de/wissen/frage-der-woche-wie-viel-naehe-schickt-sich-1.584878 (accessed on 22 May 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).