1. Introduction

Spatially resolved thermal measurements [1] of incandescent objects [2] have important applications in several fields, ranging from the industrial production of metals [2,3] and ceramics [4] to the monitoring of volcanic eruptive activity [5,6]. Recent interest in monitoring and controlling incandescent or molten metals has been driven by the rapid development of additive manufacturing deposition processes [7,8]. In such techniques, a spatially focused energy source, such as a laser, an electron beam, or an electric arc, locally melts a metallic substrate [8,9], generating a melt pool. The melt pool is enlarged by adding extra material, supplied as a wire or ejected as powder by nozzles, which solidifies and deposits a solid track when the heating source moves away. The part to be printed is sliced into multiple tracks in several layers, defining a sequence of instructions that are executed by the printing machine [9]. Although considerable research effort has been devoted to these processes [10], some issues are still open: the geometrical match between the part and its design cannot be guaranteed for all geometries because of excessive or insufficient metal deposition [11]. Both defects may also be related to the spatial temperature distribution in the part, which influences the melt pool temperature. A warmer melt pool solidifies later, collecting more raw material and potentially deforming the part, thus affecting the final geometry [11]. High-speed thermal monitoring based on pyrometers [12,13] or mid-infrared cameras [14] has proved able to control and stabilize the deposition rate [10] and to monitor porosity [14]; nonetheless, pyrometer-based control suffers significant limitations along complex paths [15]. Pyrometers lack the spatial resolution needed to monitor the geometry of the track [16,17]. Temperature measurements by mid-infrared cameras are affected by the uncertainty of the emissivity coefficient, which changes during phase transitions or because of oxidation [13,18]. To overcome this limitation, ratio pyrometers split the collected light into two spatially superimposed narrow wavebands, reducing the effect of emissivity uncertainty on the temperature estimate [12,13,15]. Ratio pyrometers provide accurate temperature measurements but lack spatial resolution [13]. Implementing ratio pyrometry with cameras requires two narrow-band filtered, spatially superimposed images [13], which calls for a dedicated optical setup with high cost and complexity [19]. A wide application of additive manufacturing techniques requires reducing the cost of monitoring systems while improving speed and spatial resolution. This could be achieved by replacing existing systems, such as mid-infrared cameras or ratio pyrometers, with much less expensive and widely available visible CMOS cameras based on the Bayer filter pattern [17,20,21].
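The ratio (two-color) principle mentioned above can be illustrated with the textbook Wien-approximation form for two ideal narrow wavebands. The sketch below is a minimal illustration under that assumption, not the calibration of any cited system: `wien_radiance` and `two_color_temperature` are hypothetical helper names, the two wavelengths are placeholders, and the first radiation constant $c_1$ is omitted because it cancels in the ratio.

```python
import numpy as np

C2 = 1.438777e-2  # second radiation constant c2 = hc/k_B, m K


def wien_radiance(lam, temp_k, eps=1.0):
    """Graybody spectral radiance in the Wien approximation, up to the constant c1."""
    return eps * lam ** -5 * np.exp(-C2 / (lam * temp_k))


def two_color_temperature(i1, i2, lam1, lam2):
    """Temperature from the intensity ratio at two narrow wavebands (Wien approximation).

    From ln(i1/i2) = 5 ln(lam2/lam1) - (c2/T)(1/lam1 - 1/lam2); a wavelength-independent
    emissivity appears in both intensities and cancels in the ratio.
    """
    ratio = np.asarray(i1, dtype=float) / np.asarray(i2, dtype=float)
    return C2 * (1.0 / lam1 - 1.0 / lam2) / (5.0 * np.log(lam2 / lam1) - np.log(ratio))


# Usage: synthetic intensities at 650 nm and 850 nm for a graybody with eps = 0.3
lam_1, lam_2 = 650e-9, 850e-9
i_1 = wien_radiance(lam_1, 2000.0, eps=0.3)
i_2 = wien_radiance(lam_2, 2000.0, eps=0.3)
print(two_color_temperature(i_1, i_2, lam_1, lam_2))  # recovers 2000 K up to rounding
```

With broadband Bayer or mosaic filters, each channel integrates over a wide spectral range, so assigning a single effective wavelength to each channel is only an approximation.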

1.1. Temperature Measurement with Cameras

Early research on the temperature measurement of incandescent objects relied on monochrome cameras [22,23,24], proposing calibration models that directly relate the gray level to temperature. To improve robustness against variations of the emissivity coefficient, systems based on the ratio pyrometry principle [13], also known as two-color pyrometry, have been developed. The ratio pyrometry algorithm requires two spatially superimposed images representing the same scene at the same time in two different spectral bands, obtained by filtering the incoming light with two narrow-band spectral filters [19,25,26,27,28,29]. All these systems require a specific optical setup to guarantee the spatial superposition of the images acquired at the different wavebands: a dichroic mirror [25,26,28], a filter assembly [27], or a plenoptic lens [19,29]. Using a color or multispectral camera, with color filters positioned on each pixel of the CMOS sensor, avoids the need for a dedicated optical setup. Nonetheless, the filters installed on the sensor have a broad spectral transmission, which makes the ratio pyrometry algorithm not fully applicable [13,17]. Several calibration approaches have been proposed specifically to address the broad spectral response of the sensor filters, which departs from the idealized narrow-band assumption [17,20,30,31]. One of them maps the color space of the camera into the xy chromaticity space defined by the CIE 1931 standard [32] with a conversion matrix and then relies on the standard observer to model the Planckian locus that associates chromaticity values with temperature. This color space conversion approach has been tested only above 1700 K [21]. Moreover, the method does not yet appear ready for industrial application, since a robust calibration procedure is missing: the conversion to the xy space of the standard observer is sensitive to the white balance setting and to the optimization of the color conversion matrix, both of which are strongly illumination-dependent and require specific lighting equipment for reliable calibration. A recent approach models the spectral overlap of RGB cameras by mapping the red, green, and blue channels to equivalent wavelengths so that the two-color method can be applied [17]. In addition, the blue channel is subtracted from the others to compensate for the near-infrared light collected by the camera through filter leakage, but this approximation holds only up to around 2000 K. Processing high-melting-point metals in additive manufacturing easily exceeds this limit [33,34], requiring an algorithm that better approximates the physics of the system and generalizes to higher temperatures. A preliminary test has been performed with multispectral cameras with narrow-band filters by optimizing a matrix that removes band crosstalk; this optimization is specific to the optical system [34]. Calibration data are collected by observing a black body furnace at a known temperature [34,35]. The anti-crosstalk matrix does not appear applicable to RGB cameras because of their broader spectral bands, which require a full physical description of the system. A full model regressing temperature from the RGB color space is proposed in [36], but it is limited to the working range of the black body used for calibration, which is typically too low for additive manufacturing.

1.2. Planckian Locus in RGB Color Space

To overcome the limitation imposed by the restricted temperature range of the black body sources used for calibration, while taking into account the complexity of the system, a consistent physical model has to be developed. The Planckian locus, which relates chromaticity to temperature, is therefore obtained by integrating the radiation emitted by an incandescent object at a given temperature over the wavelength range of the camera. The radiated intensity is given by Planck's law, modeling the incandescent object as a graybody with a constant emissivity coefficient. The radiated light is attenuated by the transmission of the optical chain, including the lens, bandpass or attenuation filters, and the filters installed directly on the sensor. The latter are modeled through the quantum efficiency of the camera [17,31,36]. Estimating the transformation from observed colors to temperature therefore requires accurate knowledge of the quantum efficiency (QE) of the camera together with its optical chain. QE is itself uncertain, partly because of the variability among sensors, filters, and lenses of the same model that should be nominally identical [37]. This QE uncertainty can affect the calibration and has to be compensated for the model to work [17].

1.3. Quantum Efficiency

The QE of cameras [37] is measured according to the EMVA 1288 standard [38]: monochromatic light of known intensity is conveyed onto the sensor by a monochromator that scans the wavelength, and the signal generated by the camera is recorded for each wavelength and each band. The procedure requires specific instrumentation, dedicated laboratories, and optical expertise [17,30]. Alternative methods infer QE from several light-emitting diodes with different peak emission wavelengths [39], from sets of filters [40], or with deep learning networks [41]. These methods require dedicated tools and provide limited precision. Consequently, no method is available that is specifically dedicated to optimizing QE for temperature calibration, a task that is strongly sensitive to the near-infrared region of the spectrum between 700 and 1000 nm.
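The linear camera model behind an EMVA 1288-style QE measurement can be sketched as follows. This is a simplified illustration assuming the standard's linear model $\mu_y = \mu_{y.dark} + K\,\eta\,\mu_p$, with mean photon number $\mu_p = A E t_{exp} \lambda/(hc)$ per pixel; the system gain $K$ (DN per electron) is assumed to be already known, for example from a photon-transfer measurement. Function names and numeric values are hypothetical, and the "measured" gray values are synthesized from a known QE purely to show the inversion.

```python
import numpy as np

H = 6.62607015e-34  # Planck constant, J s
C = 2.99792458e8    # speed of light in vacuum, m/s


def mean_photons(irradiance, pixel_area, t_exp, wavelength):
    """Mean photons per pixel: mu_p = A * E * t_exp * lambda / (h * c)."""
    return pixel_area * irradiance * t_exp * wavelength / (H * C)


def quantum_efficiency(mu_y, mu_y_dark, system_gain, mu_p):
    """Invert mu_y = mu_y_dark + K * eta * mu_p for the quantum efficiency eta."""
    mu_y = np.asarray(mu_y, dtype=float)
    return (mu_y - mu_y_dark) / (system_gain * np.asarray(mu_p, dtype=float))


# Hypothetical monochromator scan: one irradiance value per wavelength step
wavelengths = np.array([500e-9, 600e-9, 700e-9])  # m
irradiance = np.full(3, 2.0e-3)                  # W/m^2 at the sensor plane
mu_p = mean_photons(irradiance, (5.5e-6) ** 2, 1e-3, wavelengths)

# Synthetic gray values generated from an invented QE, then recovered
eta_true = np.array([0.45, 0.55, 0.40])
system_gain, dark = 0.1, 5.0
mu_y = dark + system_gain * eta_true * mu_p
eta = quantum_efficiency(mu_y, dark, system_gain, mu_p)
```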

1.4. Regression of Chromaticity to Temperature

Once the Planckian locus is determined, temperature has to be regressed from chromaticity. Proposed methods approximate the CIE 1931 Planckian locus with a function [42,43] or rely on interpolation [44], matrix search [36], or regression with a neural network [21]. Function approximation is defined only over limited temperature ranges of the CIE 1931 Planckian locus and therefore cannot be generalized to an arbitrary locus. The neural network shows higher robustness on noisy data than the other methods [21] and generalizes to multispectral cameras, which are not supported by interpolation [44] or matrix search [36].
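For comparison with neural network regression, the matrix search idea can be sketched as a nearest-neighbour lookup in a tabulated locus. This is a hypothetical minimal version, not the implementation of [36]: it assumes the locus is stored as a table of chromaticity tuples sampled on a temperature grid, and the toy table below is invented for illustration only.

```python
import numpy as np


def matrix_search(locus_t, locus_chroma, observed):
    """Nearest-neighbour lookup of temperature on a tabulated locus.

    locus_t:      (N,) temperatures of the tabulated locus
    locus_chroma: (N, D) chromaticity tuples at those temperatures
    observed:     (M, D) or (D,) observed chromaticity tuples
    Returns an (M,) array with the temperature of the closest table entry.
    """
    observed = np.atleast_2d(np.asarray(observed, dtype=float))
    # Squared Euclidean distance between every observation and every table row
    d2 = ((observed[:, None, :] - locus_chroma[None, :, :]) ** 2).sum(axis=-1)
    return locus_t[np.argmin(d2, axis=1)]


# Toy locus (invented): two ratios that vary monotonically with temperature
locus_t = np.arange(1000.0, 3001.0, 10.0)
locus_chroma = np.column_stack([3000.0 / locus_t, locus_t / 3000.0])
print(matrix_search(locus_t, locus_chroma, locus_chroma[[5, 100]] + 1e-4))
```

The resolution of such a lookup is limited to the grid step (10 K in the toy table), and each query scales with the table size, which is one practical reason to prefer a trained regressor.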

1.5. Our Approach

In this paper, we present a novel method for temperature measurement in industrial process monitoring of molten metals, with particular emphasis on applications in additive manufacturing. The approach is designed to operate over the broad temperature ranges typical of arc discharge and laser-based processes, while supporting acquisition rates of tens of Hz and spatial resolutions down to tens of micrometers. Unlike state-of-the-art techniques that prioritize accuracy within narrow calibration ranges or rely on dedicated optical setups—such as two-color pyrometry methods, which require spatially superimposed images filtered through narrow spectral bands—our method emphasizes robustness and generalizability. Specifically, our method enables temperature estimation using widely available and relatively low-cost color or multispectral cameras.

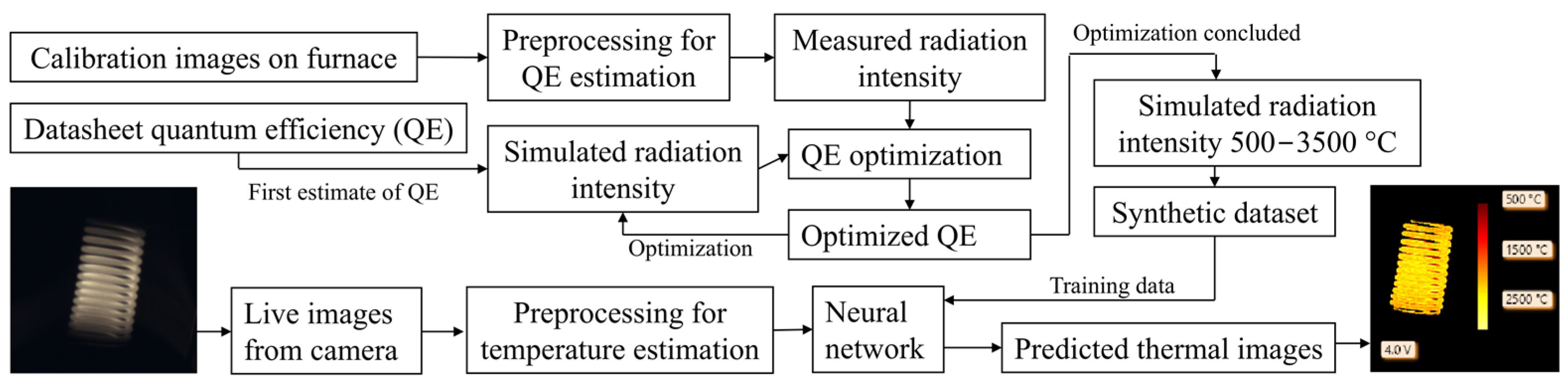

To address the limitations posed by the complex, wavelength-dependent quantum efficiency of such sensors, we incorporate the physical principles governing the optical system into the calibration process as a physics-based abstraction. This enables temperature prediction beyond the range covered by standard black body calibration, while accounting for the full spectral response of the imaging system. In particular, we refine the quantum efficiency (QE) values, typically reported in camera datasheets, using images acquired from a furnace that approximates a black body source at various temperatures. An overview of the full pipeline, from camera calibration and QE optimization to temperature prediction via a neural network, is illustrated in Figure 1.

The proposed method involves two main stages: a calibration phase for quantum efficiency (QE) optimization and a temperature estimation phase using a trained neural network. In the first stage, calibration images are acquired by imaging an Inconel 718 plate heated in a furnace across a known temperature range. An initial estimate of the camera’s spectral quantum efficiency is obtained from manufacturer datasheets. To account for the limited dynamic range of industrial cameras in the calibration phase, multiple images with varying exposure times at the same temperature are acquired. The calibration images are then preprocessed to extract measured radiation intensities, which are compared with simulated intensities based on Planck’s law and the initial QE estimate. A numerical optimization procedure is used to adjust the QE curve to minimize the discrepancy between measured and simulated data. The resulting optimized QE is subsequently used to simulate spectral radiation intensities over a wide temperature range (500−3500 °C), generating a synthetic dataset for the expected radiation intensities.

In the second stage, this synthetic dataset, consisting of simulated images paired with known temperatures, is used to train a neural network that regresses temperature from normalized camera data. During inference, live images acquired by the camera are preprocessed in the same way and fed to the trained network, which outputs thermal images. The neural network approach improves robustness to noise [21,34,45] and overcomes the limitations of previous methods based on approximating the Planckian locus with polynomials [42,43,44]. Our method relies on normalization procedures that cancel all effects that change the magnitude of the signal without altering its spectral distribution, which improves robustness to variations in exposure time or working distance while reducing the effect of emissivity uncertainty.

An extensive experimental campaign validates the calibration method on both a standard color camera and a multispectral camera, confirming that QE estimation is feasible for different cameras. Temperature measurements on the calibration images are reported together with an estimate of their uncertainty. The generalization capability has been demonstrated by measuring the filament temperature of a temperature-calibrated halogen lamp. Our approach enables thermal imaging across a broad temperature range, including regions where acquiring calibration images at a known temperature is impractical, while ensuring consistency with the physical characteristics of the imaging system.

The paper is structured as follows. Section 2 describes the materials and methods used for the experiment, including the cameras, the furnace for heat treatment of metals, and the quantum efficiency calibration method. Section 3 presents the experimental results obtained with both a color camera and a multispectral camera. Section 4 discusses the results and compares them with the state of the art, while Section 5 presents the conclusions.

2. Materials and Methods

This section provides a detailed overview of the proposed method and experimental setup. Section 2.1 describes the industrial color camera used for the experiment, together with the furnace used for metal heat treatment, which approximates a black body source. The dataset acquisition procedure is detailed in Section 2.2. The full experiment has been repeated with a multispectral camera, introduced in Section 2.3. Section 2.4 explains the physical modeling of the optical system, including the camera, lens, and filters. Initial values for the model parameters are reported in Section 2.5. Section 2.6 describes the preprocessing of experimental data, and Section 2.7 introduces the calibration method used to refine the optical properties of the imaging system and to predict the Planckian locus over a wide temperature range. The neural network used for temperature inference, trained exclusively on a synthetic dataset describing the Planckian locus, is detailed in Section 2.8. Section 2.9 describes the method for uncertainty evaluation.

Finally, alternative temperature calibration methods from the literature are discussed in Section 2.10 and Section 2.11. The two-color method [17], which estimates temperature from the ratio of intensities at two wavelengths, is presented in Section 2.10. Another approach, presented in Section 2.11, derives the Planckian locus directly from quantum efficiency [36], offering insight into its physical significance. Calibration approaches specific to multispectral cameras are also discussed, particularly those involving the optimization of an anti-crosstalk matrix [34,35]. Results obtained with an anti-crosstalk matrix using the same camera and furnace as in this paper have been reported previously [34].

2.1. Camera and Lens

A general-purpose industrial color CMOS camera has been chosen for testing the calibration algorithm: model UI-3370CP-C-GL-R2 (IDS Imaging Development Systems GmbH, Obersulm, Germany). The maximum camera resolution is 2048 × 2048 pixels, and the pixel size is 5.5 μm. The optical chain includes a visible bandpass filter, model BP550 (Midwest Optical Systems, Palatine, IL, USA), used to reduce sensitivity outside the 400–700 nm range, and a 50 mm focal length lens, model LM50JC10M (Kowa Optimed Deutschland GmbH, Duesseldorf, Germany). The dark level offset is set to 50 so as to include 95% of the noise distribution of the dark current signal. The digital gain is set to the minimum and the sensor gain to −7 to maximize the dynamic range. Finally, a pseudo white balance is applied by setting the gains of the red, green, and blue channels to 1.0, 2.0, and 4.0, respectively.

2.2. Dataset Acquisition

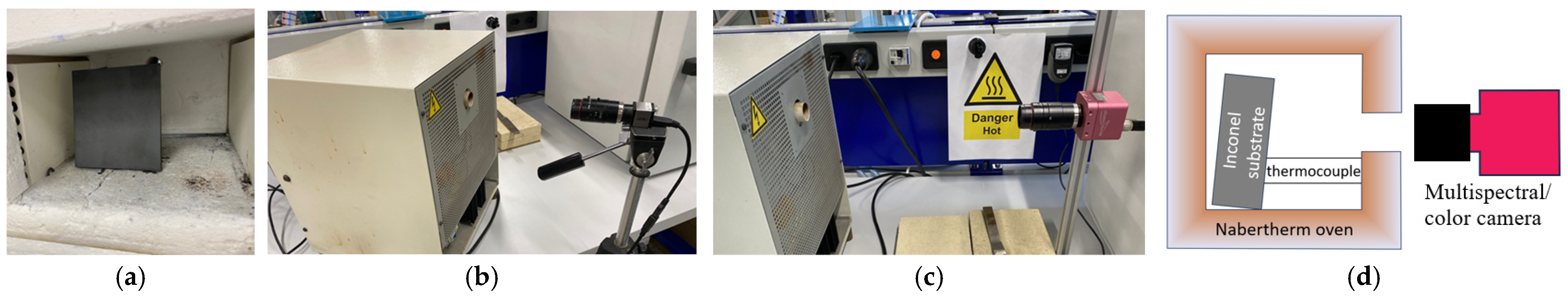

The experimental setup used for dataset acquisition (Figure 2) includes an oven for heat treatment of metals capable of reaching 1100 °C (Nabertherm GmbH, Lilienthal, Germany). A hole in the lateral wall allows the camera to look inside the oven at an Inconel 718 substrate, as shown in Figure 2. The inner chamber approximates a black body source of radiation.

The ground truth temperature of the substrate, a metallic plate of Inconel 718, is measured with a type K thermocouple in contact with its surface. The thermocouple features mineral insulation and an Inconel 600 metallic sheath of 3 mm diameter, with a maximum operating temperature of 1100 °C. Accuracy data are reported in Section 2.9. To ensure good thermal contact, the plate is slightly tilted so that the thermocouple rests firmly against its surface. The thermocouple is connected to a temperature controller that drives the heating resistances of the oven.

The camera is positioned approximately 30 cm from the oven, with its optical axis roughly aligned with the axis of a viewing hole in the oven wall, providing a clear line of sight to the Inconel sample inside. The optics are focused on the surface of the Inconel plate, and the aperture is set to f/4.0.

Following the installation of the camera, the oven is switched on and set to its maximum operating temperature of 1100 °C. The heating process takes several hours, after which an additional hour is allowed to ensure thermal equilibrium between the oven, the sample, and the camera. Once thermal stability is achieved, a batch of images corresponding to 1100 °C is acquired.

Subsequently, the target temperature on the controller is decreased in 10 °C steps down to 700 °C, so that the calibration temperature array is $T_{cal} = \{700, 710, \ldots, 1100\}$ °C. At each step, a pause of a few minutes allows thermal stabilization before the next image batch is acquired. An additional acquisition is performed at 100 °C, where incandescence is negligible; these images serve as dark references, capturing the sensor dark current across exposure times.

Ambient light is minimized during all measurements to avoid interference. For each temperature level, multiple exposure times are tested. The shortest exposure is 0.1 ms for the color camera and 10 ms for the multispectral camera. Each subsequent exposure is obtained by multiplying the previous one by approximately 1.26, forming a logarithmic scale. This scale is chosen to match the exponential dependence of blackbody radiation intensity on temperature.

At each temperature and exposure setting, 10 images are acquired and averaged to reduce temporal noise. Dark-frame subtraction is applied using the 100 °C reference images. Demosaicing is then performed, and the average gray level for each spectral band is calculated within a predefined region of interest (ROI) on the Inconel 718 surface. The ROI corresponds to an elliptical area of approximately 10,000 pixels.
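The per-temperature processing described above (frame averaging, dark subtraction, ROI averaging) can be sketched for a single band plane as follows. This is a minimal illustration assuming the frames have already been demosaiced into one 2-D array per band; the ellipse center and semi-axes are hypothetical parameters, and the helper names are not taken from the original work.

```python
import numpy as np


def elliptical_mask(shape, center, semi_axes):
    """Boolean mask of an axis-aligned ellipse (center and semi-axes in pixels, row/col order)."""
    rows, cols = np.indices(shape)
    (cy, cx), (ay, ax) = center, semi_axes
    return ((rows - cy) / ay) ** 2 + ((cols - cx) / ax) ** 2 <= 1.0


def roi_mean_gray(frames, dark_frames, mask):
    """Average the frame stack, subtract the averaged dark reference, return the ROI mean."""
    signal = np.mean(frames, axis=0, dtype=np.float64)     # temporal averaging (e.g. 10 frames)
    dark = np.mean(dark_frames, axis=0, dtype=np.float64)  # 100 degC reference, same exposure
    return float((signal - dark)[mask].mean())


# Synthetic example: 10 frames at gray level 120 and 10 dark frames at 20
frames = np.full((10, 400, 400), 120, dtype=np.uint8)
dark_frames = np.full((10, 400, 400), 20, dtype=np.uint8)
mask = elliptical_mask((400, 400), center=(200, 200), semi_axes=(50, 64))
print(mask.sum(), roi_mean_gray(frames, dark_frames, mask))
```

Semi-axes of 50 × 64 pixels give an area of about π · 50 · 64 ≈ 10,050 pixels, comparable to the ROI size mentioned above. Converting to float before subtraction avoids the wrap-around that unsigned 8-bit arithmetic would produce for pixels darker than the reference.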

The full dataset includes 13,330 images for the color camera and 5440 for the multispectral one.

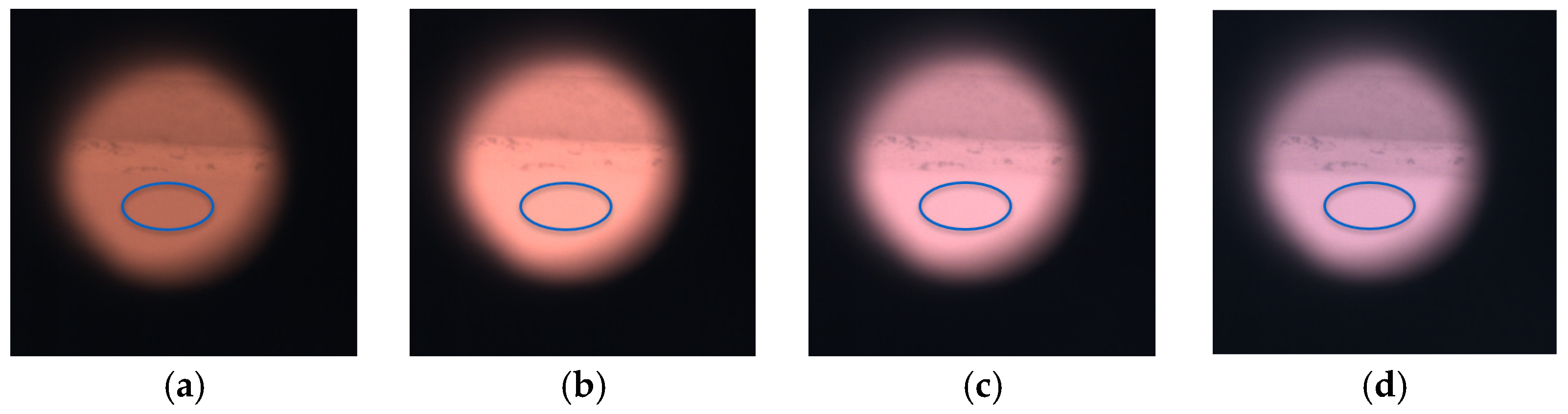

Figure 3 presents representative examples of the images acquired with the color camera: the circular field of view corresponds to the oven’s lateral aperture, with the surrounding area appearing dark. The Inconel 718 substrate is located at the lower portion of the frame, where the elliptical ROI is defined.

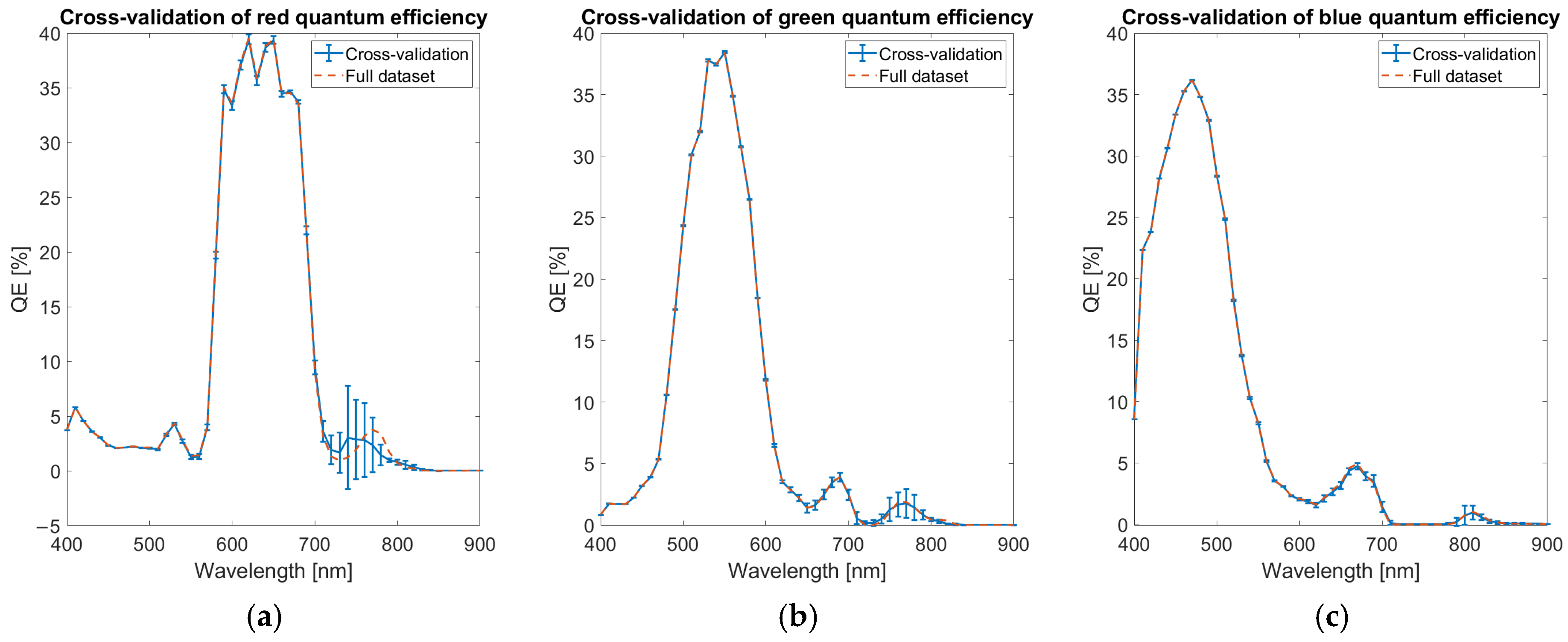

2.3. Multispectral Camera

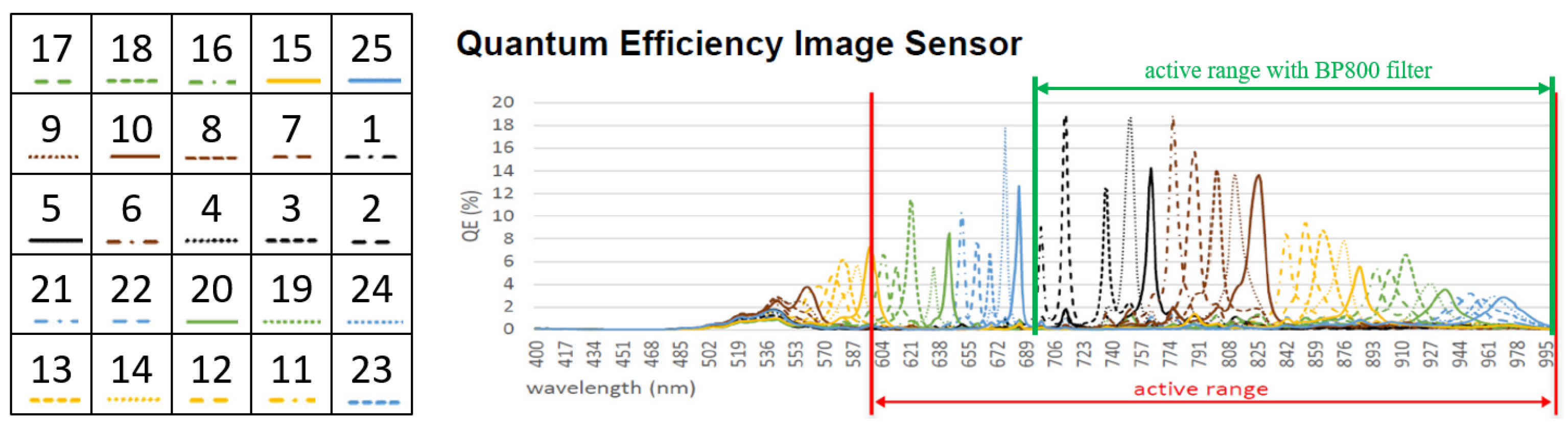

A second experimental campaign captured images of the same substrate with a multispectral camera operating in the near-infrared range. The camera used is the MV1-D2048x1088-HS02-96-G2 (PhotonFocus AG, Lachen, Switzerland), based on the CMV2K-SM5x5-NIR sensor (Imec, Leuven, Belgium). The sensor has a 5 × 5 mosaic pattern with 25 different microfilters installed on the pixels. The quantum efficiency plot (Figure 4) shows significant spectral overlap of the QE among microfilters, meaning that a photon of a given wavelength can produce a count in multiple spectral bands. A BP800 bandpass filter (Midwest Optical Systems) has been installed to restrict the camera sensitivity to the 700–1000 nm range, and the same lens as in the previous setup has been used. A neutral density filter ND200 (Midwest Optical Systems) has been installed to attenuate light by a factor of about 100. Images have been collected in the 800–1100 °C range with 10 °C steps, since the attenuation filter made the images in the 700–800 °C range too dim. As in the previous case, 10 images are collected and averaged, dark images are subtracted, demosaicing is performed according to the scheme in Figure 4, and the gray level for each band and temperature is evaluated by averaging over an ROI corresponding to the metallic substrate.
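A simple way to separate the 25 bands of a 5 × 5 mosaic is strided sub-sampling, which yields one lower-resolution plane per microfilter without interpolation. The sketch below assumes this plain sub-sampling scheme and a row-major band index (`k = row_offset * 5 + col_offset`); the actual mapping between pixel offsets and filter wavelengths must be taken from the sensor documentation (Figure 4), and `split_mosaic` is a hypothetical helper.

```python
import numpy as np


def split_mosaic(raw, pattern=5):
    """Split a raw mosaic frame into pattern**2 band planes by strided sub-sampling.

    Band index k = row_offset * pattern + col_offset (assumed ordering). Rows and
    columns that do not form a complete mosaic cell are cropped.
    Returns an array of shape (pattern**2, H // pattern, W // pattern).
    """
    h, w = raw.shape
    raw = raw[: h - h % pattern, : w - w % pattern]
    return np.stack([raw[r::pattern, c::pattern]
                     for r in range(pattern) for c in range(pattern)])


frame = np.zeros((1088, 2048), dtype=np.uint16)  # placeholder raw frame
bands = split_mosaic(frame)
print(bands.shape)  # (25, 217, 409)
```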

2.4. Physical Modelling

The physical model of the imaging system is represented in Figure 5. The surface of the incandescent metal at temperature $T$ emits radiation with a spectral radiance described by Planck's law. Attenuation by transmission through air, $\tau_a(\lambda)$, is considered negligible. Radiation is collected by the lens within a solid angle $\Omega$ and attenuated by transmission through the optical filters, $\tau_f(\lambda)$, and through the lens, $\tau_l(\lambda)$. The sensor converts photons into electrons with a quantum efficiency $QE_b(\lambda)$ that depends on the band $b$ (red, green, blue) and on the wavelength $\lambda$; therefore, Planck's law is rewritten in terms of photon density. Each pixel collects the photons hitting its sensitive area $A_p$ and integrates them over the exposure time $t_{exp}$ and over the entire spectral range. The photoelectron signal is then amplified by a band-dependent gain $G_b$, quantized into an 8-bit digital signal, and postprocessed to obtain a color image.

To estimate the number of photoelectrons generated in a camera pixel by blackbody radiation, we begin with the spectral radiance $L(\lambda, T)$ of the light emitted by a black body at temperature $T$ and wavelength $\lambda$. The spectral radiance is described by Planck's law and expressed in units of W·sr⁻¹·m⁻³. An emissivity coefficient $\varepsilon$, assumed to be independent of wavelength and temperature in the graybody approximation, is introduced to describe the non-ideality of the emission. The spectral radiance is attenuated by the transmission spectra of the atmosphere, $\tau_a(\lambda)$, of the lens, $\tau_l(\lambda)$, and of the filters, $\tau_f(\lambda)$:

$$L(\lambda, T) = \varepsilon\, \tau_a(\lambda)\, \tau_l(\lambda)\, \tau_f(\lambda)\, \frac{c_1}{\lambda^5}\, \frac{1}{\exp\left(\frac{c_2}{\lambda T}\right) - 1} \tag{1}$$

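The parameters $c_1 = 2hc^2$ and $c_2 = hc/k_B$, the latter equal to 0.0144 m·K [46], are the first and the second radiation constants, respectively. The spectral radiance is converted to spectral photon radiance $L_p(\lambda, T)$ by dividing by the energy of a single photon, $hc/\lambda$, yielding

$$L_p(\lambda, T) = \frac{\lambda}{hc}\, L(\lambda, T) = \varepsilon\, \tau_a(\lambda)\, \tau_l(\lambda)\, \tau_f(\lambda)\, \frac{c_1}{hc\, \lambda^4}\, \frac{1}{\exp\left(\frac{c_2}{\lambda T}\right) - 1}.$$

The total number of photons incident on a pixel is then obtained by integrating this quantity over the wavelength sensitivity range of the sensor, $[\lambda_{min}, \lambda_{max}]$, and multiplying by the pixel area $A_p$, the solid angle of collection $\Omega$, and the exposure time $t_{exp}$. To account for the detector efficiency, the integrand is weighted by the quantum efficiency $QE_b(\lambda)$ of each band $b$ (3 for the color camera and 25 for the multispectral one). The resulting number of photoelectrons $N_b(T)$ collected by a pixel of band $b$ at temperature $T$ is thus given by Equation (2):

$$N_b(T) = A_p\, \Omega\, t_{exp} \int_{\lambda_{min}}^{\lambda_{max}} QE_b(\lambda)\, L_p(\lambda, T)\, d\lambda \tag{2}$$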

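The signal $D_b$ digitized by the camera is obtained by multiplying the photoelectrons $N_b$ by the internal gain of the camera $G_b$, which may differ among the red, green, and blue bands $b$ because of the white balance. Dark signal subtraction compensates for the contribution of the dark current, whose effect is therefore neglected in the simulation. The coefficient $K_b = G_b\, \varepsilon\, A_p\, \Omega\, c_1/(hc)$ collects all the terms independent of wavelength and exposure time. Furthermore, the system quantum efficiency $QE_{sys,b}(\lambda) = QE_b(\lambda)\, \tau_a(\lambda)\, \tau_l(\lambda)\, \tau_f(\lambda)$ is defined to take into account all the wavelength-dependent terms. The digital signal collected by each pixel of the camera, after dark signal subtraction, is reported in Equation (3):

$$D_b(T, t_{exp}) = G_b\, N_b(T) = K_b\, t_{exp} \int_{\lambda_{min}}^{\lambda_{max}} \frac{QE_{sys,b}(\lambda)}{\lambda^4 \left[\exp\left(\frac{c_2}{\lambda T}\right) - 1\right]}\, d\lambda \tag{3}$$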

To make the color estimate versus temperature independent of exposure time, Equation (3) is divided by $t_{exp}$, obtaining Equation (4):

$$S_b(T) = \frac{D_b(T, t_{exp})}{t_{exp}} = K_b \int_{\lambda_{min}}^{\lambda_{max}} \frac{QE_{sys,b}(\lambda)}{\lambda^4 \left[\exp\left(\frac{c_2}{\lambda T}\right) - 1\right]}\, d\lambda \tag{4}$$

where $S_b(T)$ is the thermally generated signal simulated for a single pixel of the camera for band $b$ and temperature $T$. The $K_b$ coefficients have to be determined in the calibration step for each band independently. They are the product of the internal gains $G_b$, related to the camera configuration; the emissivity $\varepsilon$, which depends on the sample; $A_p\,\Omega$, which depends on the experimental setup; and $c_1/(hc)$, which is constant. The gains $G_b$ are estimated in the calibration phase, while $\varepsilon$ and $A_p\,\Omega$ cancel when calculating the ratio of multiple bands.

2.5. Numerical Approximations

Integration is performed numerically by summing values calculated at selected sampling wavelengths. The integration range is chosen to cover the wavelengths where the optical system may show some sensitivity, with particular attention to longer wavelengths, where the emission of incandescent objects is stronger. The color camera shows sensitivity from the UV to the near infrared, with a smooth QE curve versus wavelength. The multispectral camera has microfilters transmitting only narrow bands, and its sensitivity lies in the near-infrared range; therefore, finer sampling and a different integration range are used. The integration parameters for the color and the multispectral cameras are reported in Table 1.
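Equation (4) with the rectangle-rule summation described above can be sketched as follows. The Gaussian QE curve, the 5 nm wavelength grid, and the function name `simulated_signal` are hypothetical placeholders (the actual sampling parameters are those of Table 1, and the actual QE comes from datasheets and optimization); $K_b$ defaults to 1 because it is unknown before calibration.

```python
import numpy as np

C2 = 1.438777e-2  # second radiation constant hc/k_B, m K


def simulated_signal(temp_k, wavelengths, qe_sys, k_b=1.0):
    """Rectangle-rule approximation of Eq. (4) for one band.

    temp_k:      temperature in kelvin
    wavelengths: (N,) linearly spaced sampling wavelengths in metres
    qe_sys:      (N,) system QE of the band at those wavelengths, in 0..1
    k_b:         wavelength-independent coefficient K_b
    """
    step = wavelengths[1] - wavelengths[0]
    # lambda^-4 / (exp(c2 / (lambda T)) - 1); expm1 keeps precision for small exponents
    photon_term = wavelengths ** -4 / np.expm1(C2 / (wavelengths * temp_k))
    return k_b * np.sum(qe_sys * photon_term) * step


# Hypothetical red-like band: Gaussian QE centred at 600 nm, sampled every 5 nm
wavelengths = np.linspace(350e-9, 1100e-9, 151)
qe_red = 0.4 * np.exp(-0.5 * ((wavelengths - 600e-9) / 40e-9) ** 2)
t_cal_k = np.arange(700.0, 1101.0, 10.0) + 273.15
s = np.array([simulated_signal(t, wavelengths, qe_red) for t in t_cal_k])
s_norm = s / s[-1]  # normalization at 1100 degC cancels K_b (cf. Section 2.7)
```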

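An initial estimate of the system quantum efficiency, $QE^0_{sys,b}(\lambda)$, is obtained by multiplying the QE of each band, $QE^0_b(\lambda)$, by the transmission of the lens, $\tau_l(\lambda)$, and of the filters, $\tau_f(\lambda)$, as reported in Equation (5):

$$QE^0_{sys,b}(\lambda) = QE^0_b(\lambda)\, \tau_l(\lambda)\, \tau_f(\lambda) \tag{5}$$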

The transmission of the atmosphere, $\tau_a(\lambda)$, is approximated as 1, since the system is designed mainly for applications in additive manufacturing, where the working distance is limited to a few tens of centimeters and air absorption is negligible. Initial data are obtained from datasheets. For the multispectral camera, the datasheet QE of each microfilter (Figure 4) has been approximated by a Lorentzian (Cauchy) profile with intensity $I_b$, half-width at half-maximum $\gamma_b$, and location parameter $\lambda_{0,b}$, multiplied by the transmission of the filters, $\tau_f(\lambda)$, and of the lens, $\tau_l(\lambda)$, according to Equation (6):

$$QE^0_{sys,b}(\lambda) = I_b\, \frac{\gamma_b^2}{(\lambda - \lambda_{0,b})^2 + \gamma_b^2}\, \tau_f(\lambda)\, \tau_l(\lambda) \tag{6}$$

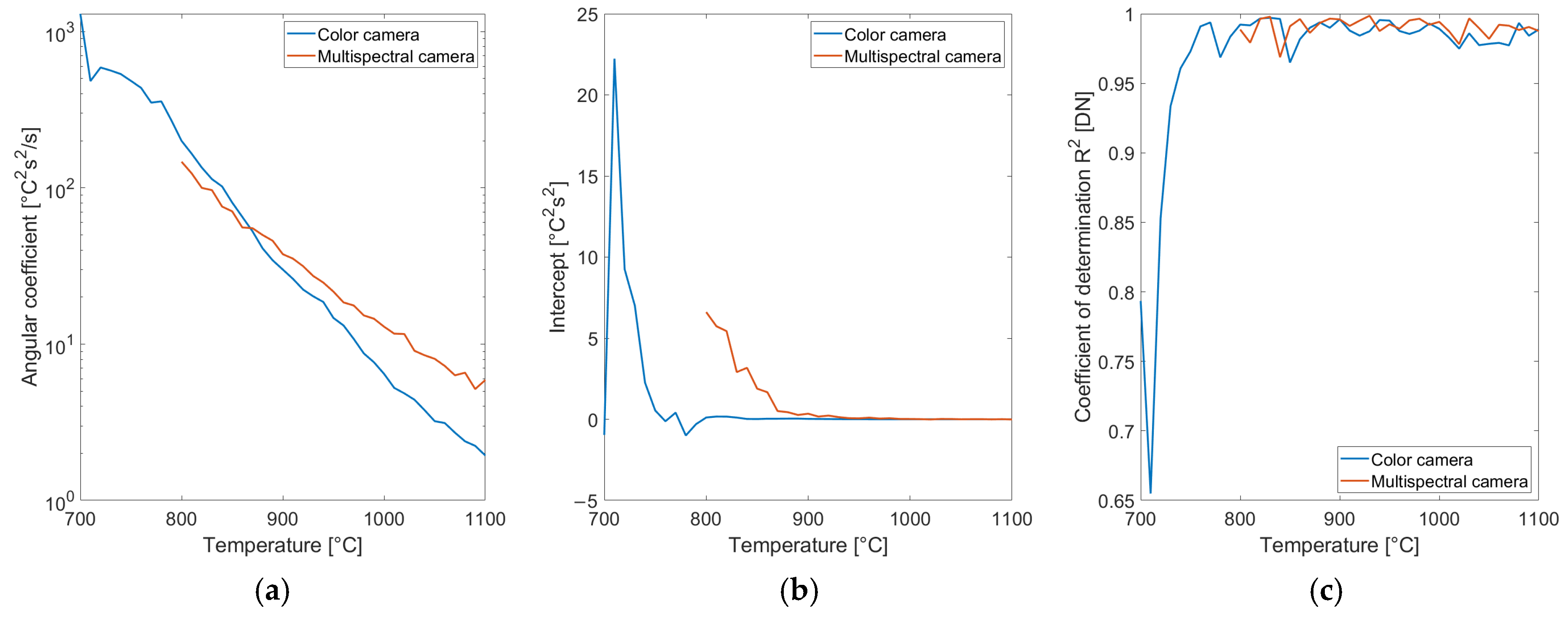
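Equations (5) and (6) can be sketched for one microfilter band as below. The three-parameter Lorentzian uses $I_b$ as the peak height, so the profile equals $I_b$ at $\lambda_{0,b}$ and $I_b/2$ at $\lambda_{0,b} \pm \gamma_b$. The band parameters, the idealized box-shaped filter transmission, and the flat lens transmission are hypothetical values chosen only for illustration; real values come from the datasheets.

```python
import numpy as np


def lorentzian(lam, intensity, hwhm, center):
    """Three-parameter Lorentzian (Cauchy) profile with peak height `intensity` at `center`."""
    return intensity * hwhm ** 2 / ((lam - center) ** 2 + hwhm ** 2)


def initial_system_qe(lam, intensity, hwhm, center, tau_filter, tau_lens):
    """Eq. (6): Lorentzian fit of the datasheet QE times filter and lens transmission."""
    return lorentzian(lam, intensity, hwhm, center) * tau_filter * tau_lens


lam_nm = np.linspace(600.0, 1100.0, 501)                              # 1 nm sampling (hypothetical)
tau_filter = np.where((lam_nm >= 700) & (lam_nm <= 1000), 0.9, 0.0)  # idealized BP800-like box
tau_lens = np.full_like(lam_nm, 0.95)                                 # flat lens transmission (assumed)
qe0 = initial_system_qe(lam_nm, intensity=0.35, hwhm=8.0, center=850.0,
                        tau_filter=tau_filter, tau_lens=tau_lens)
```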

2.6. Data Preprocessing

The goal of preprocessing is to prepare the experimental data

representing the average gray values measured on calibration images versus band, temperature, and exposure time for the optimization step of

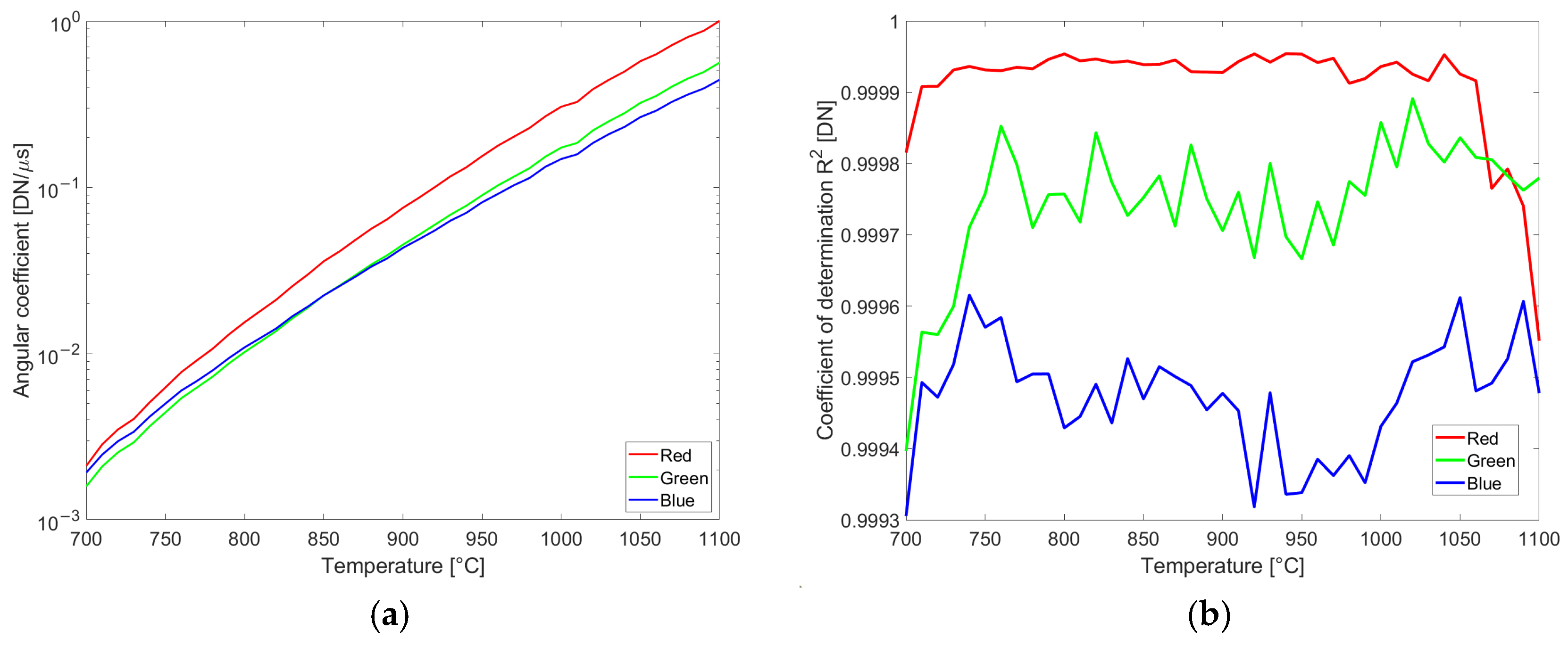

. Dividing the average gray value measured on the calibration images by exposure time is not accurate, due to the uncertainty in the dark level, even after dark image subtraction. Therefore, an alternative approach based on images acquired with multiple exposure times is proposed. First, the average gray values

collected for the same temperature and the same band are grouped and plotted versus exposure time. Then, pixel intensities near saturation (here set above 240), and too dark (below 10), are discarded. On the remaining data, a least squares fitting of a line is performed. The angular coefficient

is an accurate estimation of the thermally generated signal

evaluated at the calibration temperatures

. Plots of angular coefficients and coefficients of determination R

2 for the color camera are reported in

Figure 6.

The proximity of the coefficient of determination $R^2$ to 1 confirms the linearity of the light collection and readout of the sensor versus exposure time. The same range of $R^2$ values is obtained when processing the data acquired with the multispectral camera.
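The slope-based estimate of the thermally generated signal can be sketched as follows. The sketch assumes the thresholds of this section (10 and 240 on 8-bit data) and an ordinary least-squares line computed with `numpy.polyfit`; the intercept absorbs any residual dark offset, which is why the slope is preferred over dividing single gray values by exposure time. `fit_slope` is a hypothetical helper, and the example data are synthetic.

```python
import numpy as np


def fit_slope(exposures, gray, low=10.0, high=240.0):
    """Least-squares line gray = slope * t + intercept on non-saturated, non-dark samples.

    Returns (slope, intercept, r2).
    """
    t = np.asarray(exposures, dtype=float)
    g = np.asarray(gray, dtype=float)
    keep = (g >= low) & (g <= high)
    if keep.sum() < 2:
        raise ValueError("need at least two valid exposures to fit a line")
    slope, intercept = np.polyfit(t[keep], g[keep], 1)
    residuals = g[keep] - (slope * t[keep] + intercept)
    ss_res = np.sum(residuals ** 2)
    ss_tot = np.sum((g[keep] - g[keep].mean()) ** 2)
    return slope, intercept, 1.0 - ss_res / ss_tot


t_ms = 0.1 * 1.26 ** np.arange(25)           # exposure ladder in ms, as in Section 2.2
gray = np.minimum(2.0 + 20.0 * t_ms, 255.0)  # synthetic: 2 DN offset, 20 DN/ms, clipped at 255
slope, intercept, r2 = fit_slope(t_ms, gray)
```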

2.7. Quantum Efficiency Optimization

The optimization algorithm improves the estimate of the QE by reducing the discrepancy between the simulated data $S_b(T)$ (Section 3.1 and Section 3.2) and the experimental data $\hat{S}_b(T)$ (Section 3.3) for all calibration temperatures $T \in T_{cal}$ and bands. The optimization is performed independently on each band. Since the coefficient $K_b$ is unknown, the simulated data are divided by their value at 1100 °C, obtaining $s_b(T) = S_b(T)/S_b(1100\ °C)$, and the same is done for the measured data, obtaining $\hat{s}_b(T) = \hat{S}_b(T)/\hat{S}_b(1100\ °C)$. The initial QE of the system composed of camera, lens, and filters is obtained from datasheets according to Equation (5) and identified as $QE^0_{sys,b}(\lambda)$. QE is defined as an array of values sampled at linearly spaced wavelengths with the step indicated in Table 1 and scaled from the range 0–100% to 0–1. The iterative optimization is an evolutionary algorithm that modifies the QE array and keeps the modification if the loss function improves. Each optimization step changes one element of the QE array by adding or subtracting a constant. If the result lies outside the definition range of QE (0–1), the element is set to the nearest limit (0 if negative, 1 if above 1). The modified QE array is used to estimate $S_b(T)$ according to Equation (3), followed by division by the estimated intensity at 1100 °C to obtain $s_b(T)$. The loss function is then calculated: if the loss improves, the new QE array is kept; otherwise, the modification is discarded. The step is repeated on the next element of the array, up to the end. To enforce convergence, the constant change is weighted over wavelength, with a weight equal to 0.01 in the transmission range of the optical filter (BP550 or BP800), decreasing gradually to 0.0001 outside. The optimization cycle over the entire array is repeated for 400 iterations, reducing the change by a factor of 10 every 100 iterations. The loss function is the sum of two terms:

The first term checks that the predicted intensities are correct for all the calibration temperatures for a specific band $b$;

The second term verifies that the QE does not differ too much from the original one, through the rms of the difference between the estimated and nominal QEs.

The optimization is repeated for the 3 bands of the color camera and for the 25 bands of the multispectral camera. The final system QE obtained at the end of the optimization is identified as $QE^{opt}_{sys,b}(\lambda)$; results are reported in Section 3.1. At the end of the optimization, the simulated data for the color camera are divided by their maximum, which is the value simulated for the red band at the highest calibration temperature, identified as $S_{max}$. The experimental data obtained with the color camera are also divided by their maximum, $\hat{S}_{max}$. The gain coefficients $G_b$ are then estimated for each band as the ratio of the observed and simulated data at 1100 °C, and the simulated data are adjusted accordingly. The optimized quantum efficiency $QE^{opt}_{sys,b}(\lambda)$ is used to simulate the Planckian locus relating color to temperature according to Equation (3).
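The coordinate-wise evolutionary search described above can be sketched as follows. This is a minimal illustration, not the authors' code: the form of the first loss term (an RMS error between normalized simulated and measured signals) and the choice to try a positive change before a negative one are assumptions, the weights are a two-level step function rather than a gradual transition, and all names and test data are hypothetical. The rectangle-rule step and $K_b$ cancel in the normalization at the reference temperature, so they are omitted.

```python
import numpy as np

C2 = 1.438777e-2  # second radiation constant hc/k_B, m K


def normalized_signal(qe, wavelengths, temps_k, t_ref_k):
    """Eq. (4) sums at each temperature divided by the sum at t_ref_k (K_b and step cancel)."""
    photon = wavelengths[None, :] ** -4 / np.expm1(C2 / (wavelengths[None, :] * temps_k[:, None]))
    photon_ref = wavelengths ** -4 / np.expm1(C2 / (wavelengths * t_ref_k))
    return (photon @ qe) / np.dot(photon_ref, qe)


def loss(qe, qe_nominal, wavelengths, temps_k, t_ref_k, measured_norm):
    """Assumed RMS fit term plus RMS deviation from the nominal QE."""
    predicted = normalized_signal(qe, wavelengths, temps_k, t_ref_k)
    fit = np.sqrt(np.mean((predicted - measured_norm) ** 2))
    prior = np.sqrt(np.mean((qe - qe_nominal) ** 2))
    return fit + prior


def optimize_qe(qe_nominal, weights, wavelengths, temps_k, t_ref_k, measured_norm,
                iterations=400, decay_every=100):
    """Greedy element-wise search: keep a +/- change only if the loss decreases."""
    args = (qe_nominal, wavelengths, temps_k, t_ref_k, measured_norm)
    qe = qe_nominal.copy()
    best = loss(qe, *args)
    scale = 1.0
    for it in range(iterations):
        if it > 0 and it % decay_every == 0:
            scale /= 10.0  # reduce the change by a factor of 10
        for i in range(qe.size):
            for sign in (1.0, -1.0):
                trial = qe.copy()
                # Clip to the QE definition range 0..1
                trial[i] = np.clip(qe[i] + sign * scale * weights[i], 0.0, 1.0)
                value = loss(trial, *args)
                if value < best:
                    qe, best = trial, value
                    break
    return qe, best


# Synthetic check: "measured" data generated from a perturbed QE (invented values)
wavelengths = np.linspace(400e-9, 1000e-9, 61)
qe_nominal = 0.4 * np.exp(-0.5 * ((wavelengths - 600e-9) / 50e-9) ** 2)
qe_true = np.clip(qe_nominal + 0.05 * np.exp(-0.5 * ((wavelengths - 680e-9) / 20e-9) ** 2), 0.0, 1.0)
temps_k = np.arange(700.0, 1101.0, 10.0) + 273.15
measured_norm = normalized_signal(qe_true, wavelengths, temps_k, temps_k[-1])
weights = np.where(wavelengths <= 700e-9, 0.01, 1e-4)  # two-level stand-in for the gradual weights
qe_opt, final_loss = optimize_qe(qe_nominal, weights, wavelengths, temps_k, temps_k[-1],
                                 measured_norm, iterations=40, decay_every=10)
```

Because a change is kept only when it lowers the loss, the final loss can never exceed the initial one; the prior term keeps the solution close to the datasheet QE where the calibration data carry little information.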

2.8. Neural Network for Temperature Estimation

Temperature is estimated with a regression neural network trained on a synthetic dataset corresponding to the previously calculated Planckian locus. Training entirely on synthetic data is motivated by the difficulty of obtaining real training data with accurate ground truth above 1100 °C, the maximum operating temperature of the black body source. Synthetic data enable robust, physics-informed training under controlled conditions. The results presented in Section 3.2 and Section 3.4, obtained by testing the network on real measurements, show no bias.

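Each image of the synthetic dataset has a width of 1 pixel and a height equal to the number of bands of the camera, representing the simulated radiation intensities $S_b(T)$ that could be observed at a specific temperature $T$. The training data, corresponding to the Planckian locus, are generated by Equation (3) with the optimized QE $QE^{opt}_{sys,b}(\lambda)$, providing $S_b(T)$. The simulated data are normalized to cancel the dependence on emissivity, exposure time, and experimental setup. For the color camera, the training data are stored as an array of two-element tuples obtained by dividing the red and blue simulated data by the green ones, $(S_R/S_G,\ S_B/S_G)$; the green-over-green ratio, always equal to 1, is discarded. Equation (7) shows that this normalization cancels the effects of the optical configuration, $A_p\,\Omega$, and of the emissivity, $\varepsilon$, in the graybody approximation:

$$\frac{S_R(T)}{S_G(T)} = \frac{G_R\, \varepsilon\, A_p\, \Omega\, \frac{c_1}{hc} \int_{\lambda_{min}}^{\lambda_{max}} \frac{QE_{sys,R}(\lambda)}{\lambda^4 \left[\exp\left(\frac{c_2}{\lambda T}\right) - 1\right]}\, d\lambda}{G_G\, \varepsilon\, A_p\, \Omega\, \frac{c_1}{hc} \int_{\lambda_{min}}^{\lambda_{max}} \frac{QE_{sys,G}(\lambda)}{\lambda^4 \left[\exp\left(\frac{c_2}{\lambda T}\right) - 1\right]}\, d\lambda} = \frac{G_R \int_{\lambda_{min}}^{\lambda_{max}} \frac{QE_{sys,R}(\lambda)}{\lambda^4 \left[\exp\left(\frac{c_2}{\lambda T}\right) - 1\right]}\, d\lambda}{G_G \int_{\lambda_{min}}^{\lambda_{max}} \frac{QE_{sys,G}(\lambda)}{\lambda^4 \left[\exp\left(\frac{c_2}{\lambda T}\right) - 1\right]}\, d\lambda} \tag{7}$$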

Furthermore, all the bands are acquired with the same exposure time; therefore, the effect of exposure time is also canceled. For the multispectral camera, the simulated data are normalized by dividing them by the average of the bands in the mid-spectral range (bands 9 to 16). The dataset consists of 3000 synthetic tuples, generated at 1 °C intervals over a temperature range from 500 °C to 3500 °C, and is randomly split into 70% for training and 30% for testing. Synthetic data are used because accurate ground-truth measurements are difficult to obtain across the full temperature range up to 3500 °C; they provide consistent, noise-free labels, which makes them well suited to developing and validating the regression network. The network is a multilayer perceptron with 5 layers (250, 200, 150, 100, and 50 neurons, respectively, with ReLU activation).
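Why the ratios remove emissivity, optics, and exposure time can be checked numerically: in the graybody approximation these factors multiply every band by the same constant, which cancels in R/G and B/G (or in the division by the mean of bands 9 to 16 for the multispectral camera). The sketch below also builds a dataset on a 1 °C grid and applies a 70/30 random split. The Gaussian band responses are hypothetical stand-ins for the optimized QE and the integration grid is an assumption; note that an inclusive 500–3500 °C grid at 1 °C steps gives 3001 samples.

```python
import numpy as np

C1 = 1.191042972e-16  # first radiation constant for spectral radiance (W m^2 sr^-1)
C2 = 1.438776877e-2   # second radiation constant (m K)


def planck(wl_nm, t_c):
    """Blackbody spectral radiance at wavelengths wl_nm (nm) and temperature t_c (deg C)."""
    wl = wl_nm * 1e-9
    return C1 / wl**5 / np.expm1(C2 / (wl * (t_c + 273.15)))


wl = np.arange(400.0, 1001.0, 5.0)
# Hypothetical Gaussian responses (B, G, R) standing in for the optimized QE of the color camera.
bands = [(460.0, 30.0), (540.0, 35.0), (610.0, 40.0)]
qe = np.stack([0.5 * np.exp(-0.5 * ((wl - c) / w) ** 2) for c, w in bands])


def band_signals(t_c, scale=1.0):
    """Simulated B, G, R signals; `scale` lumps graybody emissivity, optics and exposure time."""
    return scale * (qe @ planck(wl, t_c)) * (wl[1] - wl[0])


def color_features(signals):
    """Red/green and blue/green ratios; the green/green ratio (always 1) is dropped."""
    b, g, r = signals
    return np.array([r / g, b / g])


def multispectral_features(signals):
    """Divide all bands by the mean of bands 9-16 (1-based), i.e. indices 8..15."""
    signals = np.asarray(signals, dtype=float)
    return signals / signals[8:16].mean()


temps = np.arange(500.0, 3501.0, 1.0)   # 1 deg C steps; inclusive endpoints give 3001 samples
X = np.array([color_features(band_signals(t)) for t in temps])
y = temps

rng = np.random.default_rng(0)
order = rng.permutation(len(temps))
n_train = int(0.7 * len(temps))          # 70 % training, 30 % test
train_idx, test_idx = order[:n_train], order[n_train:]
```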

The loss is the L2 (mean squared error) loss, and no dropout is used. The L2 loss is well suited to regression tasks such as temperature estimation: it penalizes large errors more heavily than small ones, encouraging accurate predictions across the entire temperature range, and it provides smooth gradients that promote stable convergence during optimization. Since training is conducted exclusively on synthetic data, free from noise or experimental variability, noise-robust alternatives such as the L1 or Huber loss are not needed. Training is executed on an Intel CPU for 400 epochs using the Adam optimizer with a learning rate of 0.00001 and a batch size of 32.
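The architecture can be written out as a plain forward pass to see its size. This sketch assumes that the five listed sizes are hidden layers followed by a single linear output neuron, and uses He initialization; neither detail is stated in the text. Training itself (Adam, learning rate 0.00001, batch size 32, 400 epochs) would normally be delegated to a deep learning framework and is not reproduced here.

```python
import numpy as np


def init_mlp(n_in, hidden=(250, 200, 150, 100, 50), seed=0):
    """He-initialized dense layers: ReLU hidden layers followed by one linear output neuron."""
    rng = np.random.default_rng(seed)
    sizes = [n_in, *hidden, 1]
    return [(rng.normal(0.0, np.sqrt(2.0 / a), size=(a, b)), np.zeros(b))
            for a, b in zip(sizes[:-1], sizes[1:])]


def forward(params, x):
    """Forward pass: ReLU on every hidden layer, linear output giving the temperature."""
    h = x
    for w, b in params[:-1]:
        h = np.maximum(h @ w + b, 0.0)
    w, b = params[-1]
    return (h @ w + b)[:, 0]


def l2_loss(pred, target):
    """Mean squared error, i.e. the L2 regression loss."""
    return np.mean((pred - target) ** 2)


def n_params(params):
    """Total number of weights and biases."""
    return sum(w.size + b.size for w, b in params)


def flops_per_sample(params):
    """Two operations per weight (multiply and add); activations and biases are ignored."""
    return sum(2 * w.size for w, _ in params)


params = init_mlp(n_in=2)                 # color camera: inputs are R/G and B/G
pred = forward(params, np.array([[1.8, 0.2], [2.4, 0.3]]))
```

With two inputs, this network has 101,301 parameters and roughly 2 × 10^5 floating-point operations per pixel, which gives a sense of the limited computational load mentioned in Section 4.2.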

In inference, the network accepts as input gray-value data, normalized in the same way as the simulated data, and predicts a temperature. To a first-order approximation, normalizing the gray values removes all the contributions that do not alter the spectral shape of the signal, including exposure time, optics aperture, and working distance, and reduces the effect of the uncertainty on emissivity.

In Section 3, the results obtained by the proposed approach are compared with those obtained with two state-of-the-art methods, described in Section 2.10 and Section 2.11.

2.9. Uncertainty Evaluation

To ensure the traceability and reliability of the temperature estimation, an uncertainty budget is evaluated according to the principles of the Guide to the Expression of Uncertainty in Measurement (GUM). The uncertainty of the estimated temperature is evaluated by propagating the uncertainties of the input parameters through the calibration model using the law of propagation of uncertainty. Assuming that the noise sources are independent, the sensitivity coefficients of the temperature estimation function with respect to each uncertain parameter, such as camera noise and emissivity slope, are determined. These are combined with the standard uncertainties of the parameters to compute the combined standard uncertainty.

The temperature estimation process includes the following steps: image acquisition, dark image subtraction, normalization, and neural network regression. After dark subtraction, the resulting signal in band $b$ is denoted $S_b$. The final estimated temperature is expressed as a differentiable function $f$ (representing normalization and neural network regression) that is optimized during calibration, so that $T = f(S_1, \ldots, S_N)$, with $N$ the number of bands. Under the hypothesis that the signals in different bands are affected by uncorrelated noise sources, the squared combined uncertainty is the sum over the bands of the squared products of the partial derivative of the calibration model with respect to the signal of each band and the uncertainty of that band, plus the squared uncertainty affecting the calibration model itself.

The main uncertainty sources affecting the calibration model itself are the uncertainty on the emissivity slope

and on the reference temperature. Zero-order emissivity effects are removed through normalization, but a residual dependence on the emissivity slope remains. The uncertainty on the emissivity slope has been estimated by considering how emissivity could change among several materials. The contribution of image noise to the calibration process is considered negligible because 10 images are averaged over a region of 10,000 pixels, reducing the variance by a factor of 10 × 10,000 = 100,000. The uncertainty of the reference temperature

is determined by the intrinsic uncertainty of the thermocouple (±4.4 °C, IEC 60584-2 Class 1), assuming a rectangular distribution because of the limited available data; this corresponds to a standard uncertainty of 4.4/√3 ≈ 2.5 °C. The sensitivity coefficient

describing the propagation of the uncertainty on the reference temperature through the calibration model has been estimated by the Monte Carlo method. The partial-derivative method does not appear applicable, since the uncertainty on the reference temperature affects several parameters that are correlated through the calibration model. The contributions to the uncertainty are summarized in Table 2.

The temperature measurement relies on images acquired by a color camera (sensor CMV4000 by CMOSIS) and by a multispectral camera (sensor CMV2000 by CMOSIS), making image acquisition a central contributor to uncertainty. The sensor of the color camera has a saturation capacity of , and a readout noise variance of . The sensor of the multispectral camera has a saturation capacity of , and a readout noise variance of . According to the EMVA 1288 standard, the digital output signal is given by $\mu_{y,b} = K_b(\mu_{e,b} + \mu_d)$, where $\mu_{e,b}$ is the number of photoelectrons, $\mu_d$ is the dark current contribution, and $K_b$ is the internal gain for band $b$. The total variance of the digital signal is $\sigma_{y,b}^2 = K_b^2(\sigma_{e,b}^2 + \sigma_d^2) + \sigma_q^2$, where $\sigma_{e,b}^2 = \mu_{e,b}$ is the contribution of shot noise with Poisson distribution, $\sigma_d^2$ is the noise related to the sensor readout, and $\sigma_q^2$ is the quantization error. For high signal levels and short exposure times, shot noise dominates, leading to a signal-to-noise ratio (SNR) of 92 on the color camera, corresponding to a noise standard deviation of 2.78 DN. The SNR of the multispectral camera is 116, corresponding to a noise standard deviation of 2.19 DN. Since the pixel bit depth is 8 bits for both cameras, the quantization noise has a standard deviation of approximately 0.29 DN (that is, 1/√12 DN) and is independent of exposure time and gain.
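The EMVA 1288 noise model above is easy to evaluate directly. The sketch takes the gain and readout noise as parameters, because their datasheet values are not given here, and checks two figures quoted in the text: the quantization noise of 1/√12 ≈ 0.289 DN, and the fact that the SNR and noise pairs (92 with 2.78 DN, 116 with 2.19 DN) are both consistent with a signal close to the 8-bit full scale of about 255 DN. That full-scale interpretation is our assumption.

```python
import math

QUANT_VAR_DN2 = 1.0 / 12.0  # quantization noise variance for a 1 DN step (DN^2)


def emva_noise_std_dn(mu_e, sigma_d_e, gain_dn_per_e):
    """Temporal noise (DN) of the linear EMVA 1288 camera model.

    mu_e: mean number of photoelectrons; Poisson shot noise has variance mu_e
    sigma_d_e: readout/dark noise (electrons, rms)
    gain_dn_per_e: overall system gain K (DN per electron)
    """
    return math.sqrt(gain_dn_per_e**2 * (sigma_d_e**2 + mu_e) + QUANT_VAR_DN2)


def snr(signal_dn, noise_std_dn):
    """Signal-to-noise ratio of a mean signal and its noise standard deviation."""
    return signal_dn / noise_std_dn


quant_std = math.sqrt(QUANT_VAR_DN2)  # about 0.289 DN, independent of exposure time and gain
# Consistency check on the quoted figures, assuming a signal near the 8-bit full scale (255 DN):
snr_color = snr(255.0, 2.78)          # about 91.7
snr_multispectral = snr(255.0, 2.19)  # about 116.4
```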

The full uncertainty budget is presented in Equation (8).

The partial derivatives

have been estimated numerically by running the temperature prediction model on synthetic data according to Equation (9):

For the partial derivatives involving the camera signal, the step has been set to 1 DN. For the partial derivatives involving the emissivity slope, the step has been set to 0.01 . The sensitivity coefficient on the reference temperature has been evaluated with the Monte Carlo method, by running several QE optimizations with the full calibration model after applying a noise distribution with the described characteristics to the reference temperatures.
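The propagation scheme can be illustrated with the GUM law for uncorrelated inputs. In this sketch, a two-band Wien ratio formula is a hypothetical stand-in for the trained network, the partial derivatives use central differences with a 1 DN step (the exact form of Equation (9) is not reproduced here), the signal values and the reference-temperature sensitivity `c_ref` are placeholders rather than the values of Table 2, and the emissivity-slope term is omitted for brevity. The rectangular-distribution conversion (4.4/√3) and the 3 × 3 pixel averaging follow the text.

```python
import math

C2 = 1.438776877e-2  # second radiation constant (m K)


def toy_temperature_c(s_red, s_green, lam_r=620e-9, lam_g=540e-9):
    """Stand-in estimator (two-band Wien ratio); NOT the trained network of the paper."""
    t_k = C2 * (1 / lam_r - 1 / lam_g) / math.log((lam_g / lam_r) ** 5 * s_green / s_red)
    return t_k - 273.15


def sensitivity(f, args, i, h=1.0):
    """Central-difference estimate of df/d(args[i]) with step h (1 DN for camera signals)."""
    up, down = list(args), list(args)
    up[i] += h
    down[i] -= h
    return (f(*up) - f(*down)) / (2 * h)


def combined_uncertainty(terms):
    """GUM law of propagation for uncorrelated inputs: sqrt(sum((c_i * u_i) ** 2))."""
    return math.sqrt(sum((c * u) ** 2 for c, u in terms))


signals = (180.0, 24.0)                 # hypothetical dark-subtracted red, green signals (DN)
u_signal = 2.78 / math.sqrt(9)          # noise of a 3 x 3 pixel mean, from 2.78 DN per pixel
u_ref = 4.4 / math.sqrt(3)              # rectangular distribution, half-width 4.4 deg C
c_ref = 1.0                             # hypothetical Monte Carlo sensitivity coefficient
variance_reduction = 10 * 10_000        # 10 images x 10,000 pixels in calibration

terms = [(sensitivity(toy_temperature_c, signals, i), u_signal) for i in range(2)]
terms.append((c_ref, u_ref))
u_c = combined_uncertainty(terms)
U_95 = 2.0 * u_c                        # expanded uncertainty, coverage factor k = 2
```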

Results of uncertainty versus temperature for the color and the multispectral camera are reported in Section 3.5.

2.10. Two-Color Method

The approach most widely used in the state of the art to estimate temperature with color cameras consists of approximating the red and green channels as narrow bands and applying the ratio pyrometer formula [13]. This method completely neglects both the spectral overlap of the red and green channels and the infrared light in the range from 700 to 1000 nm collected by the sensor because of imperfections in the infrared-cut filter. One of the latest implementations of the two-color approach partially solves these issues [17]: under the hypothesis that the temperature is below 2000 K, the intensity of the blue channel is assumed to be mainly due to infrared light above 700 nm. Therefore, dimensionality reduction is applied as

and

. The blue channel

is subtracted from the red

and from the green one

to reduce the infrared light component. To take the spectral overlap into account, the equivalent wavelengths of the red and green channels,

and

, are chosen by an optimization procedure. The formula for temperature estimation (in °C) is:

In Equation (10), the estimated temperature is

while

is the second radiation constant. Calibration requires two steps. The first step estimates the gains

applied to the measured values. Gains are determined by plotting the measured data

versus the data

simulated with the datasheet QE, obtaining three plots, one for each band

. In each plot, the data are fitted with a line: the intercept is negligible, and the gain for each band

is calculated as the inverse of the slope. The procedure is then repeated by comparing the normalized data after blue subtraction with the simulated ones, determining the gain ratio

. Once the gains are determined, the second calibration step optimizes the equivalent wavelengths

and

by nonlinear minimization. The objective function is the root mean square of the temperature prediction error

for all the calibration temperatures

. The initial values are 500 nm for green and 620 nm for red. Results are reported in Section 3.6.
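For reference, the textbook ratio-pyrometer formula under the Wien approximation, combined with the blue subtraction described above, can be sketched as follows. This is a generic form with a hypothetical `gain_ratio` parameter, not necessarily identical to Equation (10); the default wavelengths are the initial values quoted above, whereas the method optimizes them during calibration.

```python
import math

C2 = 1.438776877e-2  # second radiation constant (m K)


def two_color_temperature_c(r, g, b, lam_r_nm=620.0, lam_g_nm=500.0, gain_ratio=1.0):
    """Ratio-pyrometer temperature (deg C) in the Wien approximation, after blue subtraction.

    r, g, b: dark-subtracted channel signals; b is used as an estimate of the infrared
    leak and removed from r and g. gain_ratio is a hypothetical calibration factor
    applied to (r - b) / (g - b).
    """
    ratio = gain_ratio * (r - b) / (g - b)
    lam_r, lam_g = lam_r_nm * 1e-9, lam_g_nm * 1e-9
    t_k = C2 * (1.0 / lam_r - 1.0 / lam_g) / math.log((lam_g / lam_r) ** 5 / ratio)
    return t_k - 273.15


def wien(lam_nm, t_c):
    """Unnormalized Wien radiance, used only to build a self-consistent test signal."""
    lam = lam_nm * 1e-9
    return lam ** -5 * math.exp(-C2 / (lam * (t_c + 273.15)))


# Synthetic check: signals generated with Wien's law at 1200 deg C plus a common offset b.
b = 5.0
r = 200.0 + b
g = 200.0 * wien(500.0, 1200.0) / wien(620.0, 1200.0) + b
t_est = two_color_temperature_c(r, g, b)
```

Because both signals are generated here with Wien's law at single wavelengths, the formula recovers the input temperature exactly; on real channels with broad, overlapping responses this no longer holds, which is the limitation discussed in Section 4.1.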

2.11. Effect of Quantum Efficiency

To investigate the impact of quantum efficiency on temperature estimation, an additional method from the literature for the temperature calibration of a color camera is considered. This approach estimates the Planckian locus directly from the QE [36], mapping color to temperature in a two-dimensional color space. Temperature is then regressed from color using Ohno's interpolation method [44]. Estimated color data

are obtained according to Equations (3) and (4) with the quantum efficiency dataset

obtaining

. The measured data

for each band are adjusted to match the simulated values by multiplying them by the

coefficients. These gains are calculated as the ratio

, where

represents the simulated value at 1100 °C and

is the corresponding measured value. After determining the gains

both the simulated and the measured data are converted into chromaticity coordinates, obtaining a two-dimensional representation that describes color independently of luminance. Experimental data coordinates are

and

while simulated data coordinates are

and

. The temperature

is estimated for each value of

by mapping the experimental coordinates

onto the Planckian locus defined by

with triangular interpolation [44]. The simulation has been repeated with the QE from the datasheet

and with the QE optimized by our method

. To account for some infrared absorption not captured by

, the simulation has been repeated by increasing

, mapped between 0 and 1, by 0.01, 0.02, and 0.05. Results are reported in Section 3.6.
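The triangular interpolation step can be sketched as follows, in the spirit of Ohno's triangular solution: locate the locus sample closest to the measured chromaticity, then interpolate temperature along the chord between its two neighbors using the distances to them. Ohno formulates the method in CIE 1960 (u, v) coordinates; here it is applied to generic camera chromaticities (r, g) = (R, G)/(R + G + B), which is an assumption, and the straight toy locus is hypothetical, chosen only so that the expected answer (1030 °C) is easy to verify by hand.

```python
import numpy as np


def chromaticity(rgb):
    """Luminance-independent coordinates (r, g) = (R, G) / (R + G + B)."""
    rgb = np.asarray(rgb, dtype=float)
    return rgb[..., :2] / rgb.sum(axis=-1, keepdims=True)


def triangular_temperature(point, locus_xy, locus_t):
    """Temperature from the nearest locus sample and its two neighbours (triangular solution).

    locus_xy: (N, 2) chromaticities of the simulated Planckian locus, ordered by temperature
    locus_t:  (N,) corresponding temperatures
    """
    diff = locus_xy - np.asarray(point, dtype=float)
    d = np.hypot(diff[:, 0], diff[:, 1])
    m = int(np.clip(np.argmin(d), 1, len(locus_t) - 2))  # keep both neighbours inside the table
    chord = locus_xy[m + 1] - locus_xy[m - 1]
    l = np.hypot(chord[0], chord[1])
    x = (d[m - 1] ** 2 - d[m + 1] ** 2 + l**2) / (2.0 * l)  # projection of the point on the chord
    return locus_t[m - 1] + (locus_t[m + 1] - locus_t[m - 1]) * x / l


# Toy locus (hypothetical): a straight segment, so the expected answer is easy to check.
locus_t = np.arange(500.0, 1600.0, 100.0)
locus_xy = np.column_stack([locus_t / 1000.0, np.zeros_like(locus_t)])
t_est = triangular_temperature((1.03, 0.01), locus_xy, locus_t)
```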

3. Results

Results regarding QE optimization (Section 3.1), temperature estimation on calibration images (Section 3.2), and electronic noise (Section 3.3) are presented first. The capability of generalizing outside the calibration range is shown in Section 3.4, the overall comparison of measurements and uncertainties in Section 3.5, and the results obtained with alternative calibration methods in Section 3.6.

3.1. Results for Optimized Quantum Efficiency

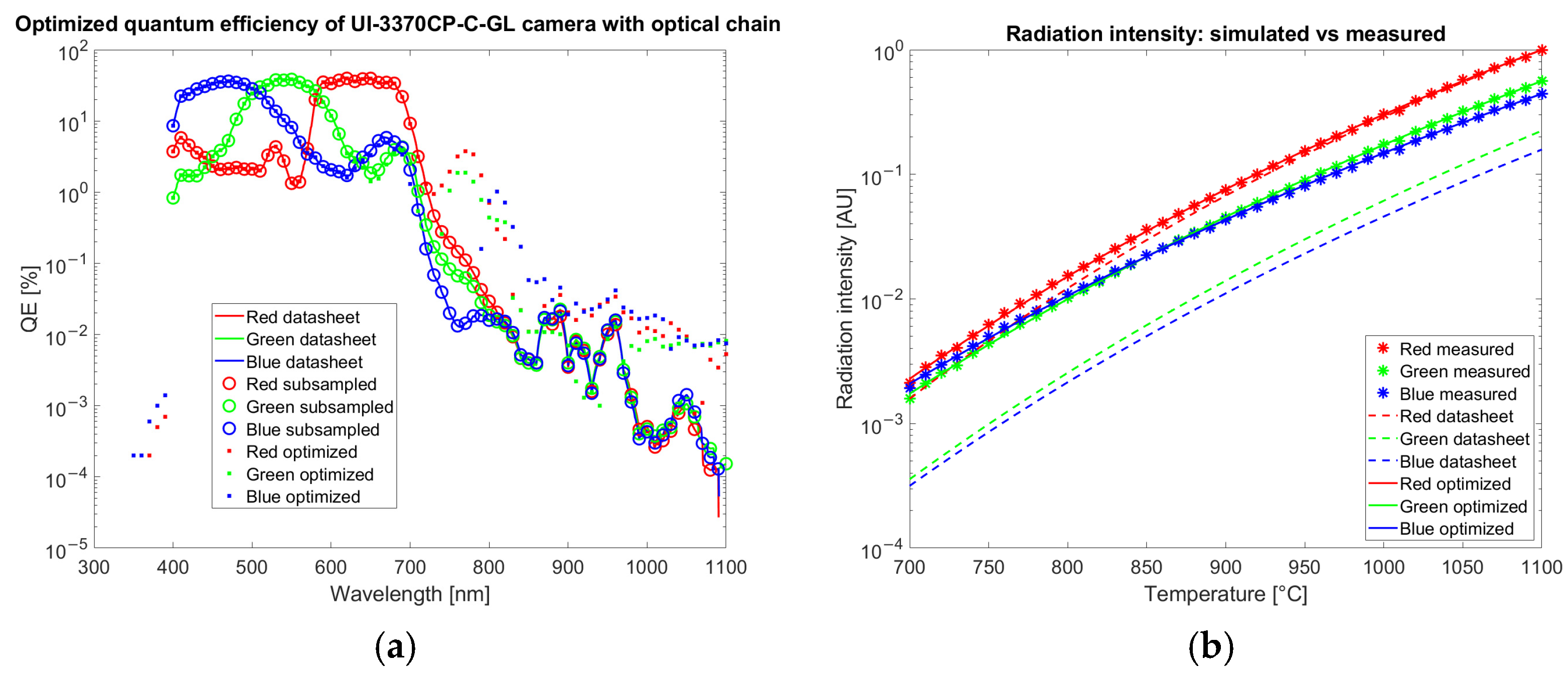

The results of the QE optimization are reported in Figure 7 for the color camera and in Figure 8 for the multispectral camera. The radiation intensities are estimated with Equation (3) and corrected by the gain coefficient

. Estimation is repeated both with QE from the datasheet

, and with optimized QE

.

The data reported in Figure 7a show that the correction applied to the QE by the optimization is around 1% from 700 to 900 nm and about 0.01% from 900 to 1100 nm, where 100% corresponds to the collection of all the incoming photons. On average, the quantum efficiency increased by 0.05%. The rms of the difference between the QE before and after the optimization is 0.66%. After optimization, the rms of the percentage error between measured and theoretical values is 2.3% on the entire dataset.

The correction applied to the QE of the multispectral camera (Figure 8a) by the optimization algorithm is around 1% or lower.

For both the color and the multispectral camera, the optimization strongly improves the agreement of the simulated data with the experimental measurements acquired in calibration, as observed in Figure 7b and Figure 8b (the crosses corresponding to measured data are superimposed on the continuous lines calculated with the optimized QE and not on the dashed lines obtained from the datasheet QE).

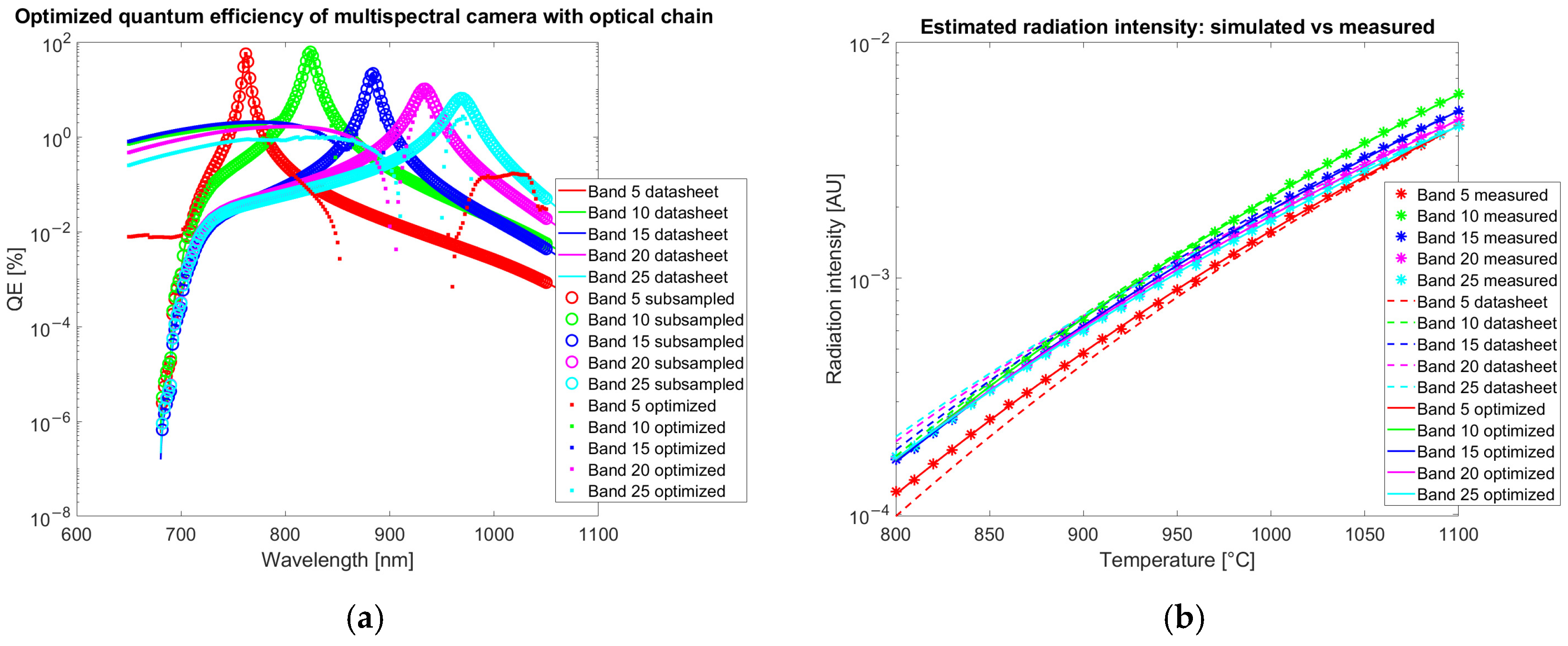

To assess the robustness of the QE estimation, a cross-validation procedure is performed. The calibration dataset is divided into five distinct temperature groups, uniformly sampled across the available temperature range. The QE optimization is run independently on each of these subsets, resulting in five separate QE curves. The average and standard deviation across these curves are then computed at each wavelength, providing an estimate of the uncertainty associated with the QE determination. This wavelength-dependent uncertainty is reported as error bars with a coverage factor of 1 in the cross-validation QE plots in Figure 9, offering insight into the sensitivity of the calibration process to variations in the calibration data. The cross-validation confirms the robustness of the QE estimation algorithm, with only limited variations of a few percent in the spectral region between 700 and 850 nm, where the leakage of the infrared-cut filters occurs.
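The cross-validation statistics can be computed with a small helper. The QE fitting routine is passed in as a hypothetical callable, the five groups are formed by interleaving the sorted calibration temperatures so that each spans the whole range (our reading of "uniformly sampled"), and the sample standard deviation (ddof = 1) is used as the coverage-factor-1 error bar; these choices are assumptions.

```python
import numpy as np


def cross_validate_qe(temps, fit_qe, n_groups=5):
    """Fit one QE curve per temperature subset; return per-wavelength mean and std.

    temps: calibration temperatures; fit_qe: hypothetical callable mapping a subset of
    temperatures to a QE array. Subsets interleave the sorted temperatures so that each
    one spans the whole range.
    """
    ordered = np.sort(np.asarray(temps, dtype=float))
    groups = [ordered[k::n_groups] for k in range(n_groups)]
    curves = np.stack([fit_qe(group) for group in groups])
    return curves.mean(axis=0), curves.std(axis=0, ddof=1)  # std = error bar with k = 1
```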

3.2. Temperature Estimation in the Calibration Range

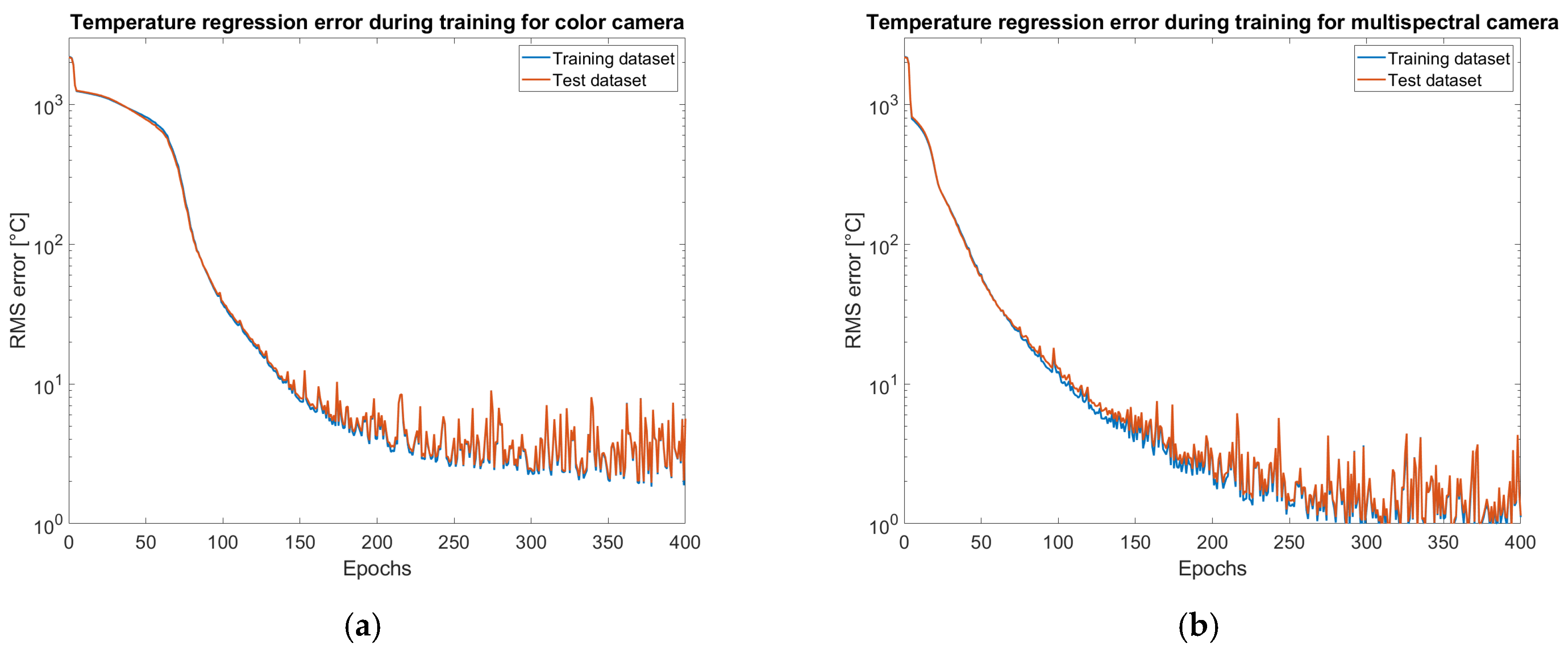

The results of the training of the neural network for temperature estimation are reported in Table 3. Images are downsampled by a factor of two to avoid the interpolation introduced by demosaicing. The network is trained and tested on the synthetic dataset simulated with the optimized quantum efficiency. The test has been repeated by estimating the temperature from single pixels of the calibration images.

The regression errors on the training and test datasets have been recorded at the end of each training epoch. The plots of the rms error of temperature prediction versus training epoch reported in Figure 10 show a smooth convergence behavior, with no signs of overfitting. The model achieves consistent generalization performance on the test dataset.

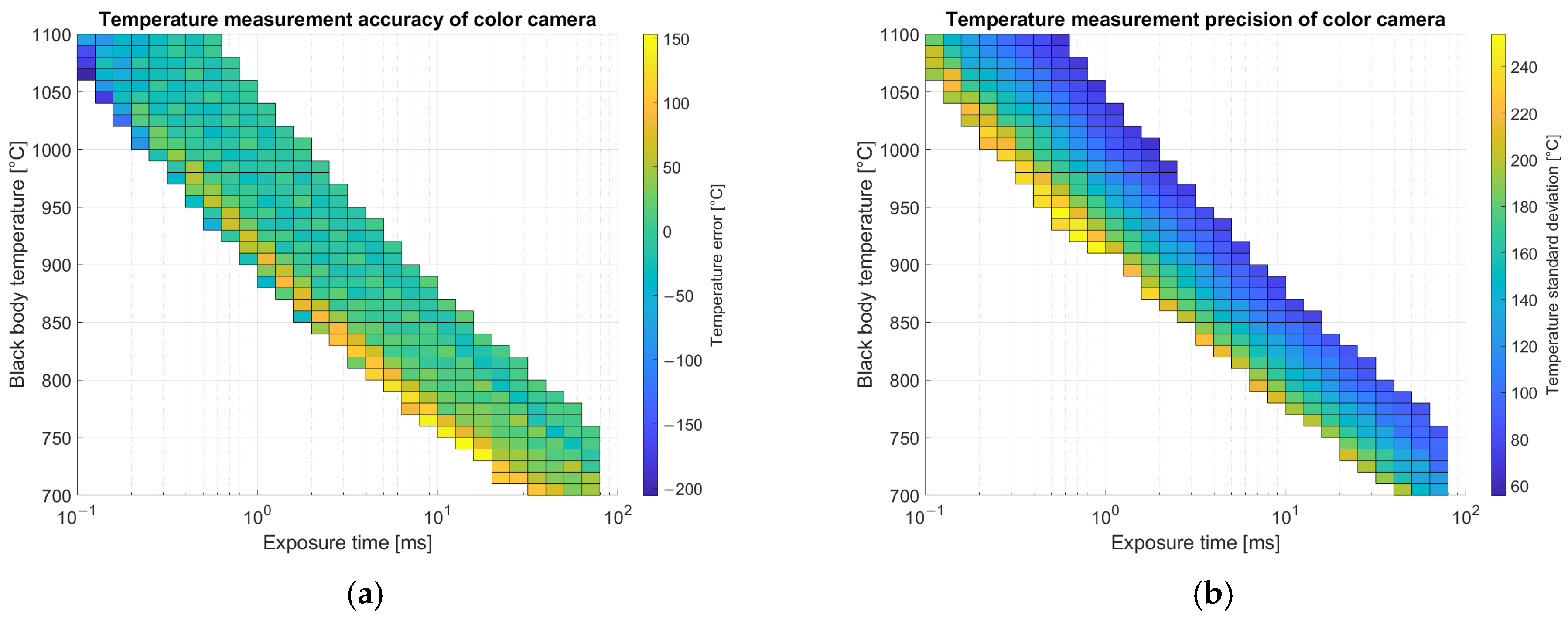

Inference generates a temperature image pixel by pixel. First, the dark image is subtracted; then, demosaicing is applied and the images of the single channels are separated. For pixels that are neither saturated nor too dim in any band (20–220 digital numbers), an estimator independent of exposure time is evaluated: for the color camera, the red-over-green and blue-over-green ratios. The normalized estimators are provided to the network, which regresses a temperature value. The average error and standard deviation on calibration images are shown in Figure 11 for the color camera and in Figure 12 for the multispectral camera.
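The per-pixel preprocessing that precedes the network can be sketched as follows for demosaiced color frames. It assumes R, G, B channel order and applies the 20–220 DN validity window to the raw values, before dark subtraction, since the text does not specify which; invalid pixels receive NaN features so that the network is never queried on them. The function name is hypothetical.

```python
import numpy as np


def color_estimators(raw_rgb, dark_rgb, lo=20, hi=220):
    """Per-pixel R/G and B/G features plus a validity mask for demosaiced H x W x 3 frames.

    Channel order R, G, B is assumed. A pixel is valid only if every raw channel lies in
    [lo, hi] DN and every dark-subtracted channel is positive; invalid pixels get NaN.
    """
    raw = raw_rgb.astype(float)
    signal = raw - dark_rgb.astype(float)
    valid = np.all((raw >= lo) & (raw <= hi), axis=-1) & np.all(signal > 0, axis=-1)
    r, g, b = signal[..., 0], signal[..., 1], signal[..., 2]
    with np.errstate(divide="ignore", invalid="ignore"):
        feats = np.stack([r / g, b / g], axis=-1)
    feats[~valid] = np.nan
    return feats, valid
```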

The results reported in Figure 11 refer only to images with a sufficient number of valid pixels (neither saturated nor dim) in the Region of Interest (ROI) corresponding to the metallic substrate, shown in Figure 3 as a blue ellipse. In the ROI, assumed to be at uniform temperature, the average and standard deviation of the predicted temperatures over the pixels are evaluated. Accuracy is estimated as the difference between the temperature measured on each pixel and the ground truth measured with the thermocouple, averaged over the entire ROI. Pixel-to-pixel variations, evaluated by the standard deviation, provide an estimate of precision. Accuracy is better on images with exposure times intermediate between the minimum and the maximum allowed for that temperature. On the other hand, the standard deviation is lower (i.e., precision is better) at longer exposure times, thanks to the better signal-to-noise ratio.

The results reported in Figure 12 for the multispectral camera likewise refer only to images with a sufficient number of valid pixels (neither saturated nor dim) in the ROI corresponding to the metallic substrate. Accuracy depends less on exposure time than for the color camera, but depends slightly on temperature. Precision is better on images with longer exposure times thanks to the better signal-to-noise ratio.

3.3. Results About Electronic Noise

The plots reported in Figure 11b and Figure 12b show the standard deviation of the estimated temperature versus reference temperature and exposure time for the color and the multispectral camera, respectively. Both follow a similar pattern: at a fixed reference temperature, the standard deviation of the predicted temperature in a uniform region decreases as the exposure time increases. This behavior suggests a close relation between exposure time, reference temperature, and uncertainty, mainly related to the electronic noise of the camera. To verify this assumption, a model of the electronic noise versus exposure time is proposed. According to EMVA 1288, the noise variance

can be obtained by summing the shot noise, readout noise, and quantization error

as explained in Section 2.9. The signal generated by the camera is

, considering the dark subtraction. The normalization computes the ratios of pairs of signals: red (

over green (

corresponding to

and blue (

over green corresponding to

. Therefore, according to uncertainty propagation, the total noise variance of the red-to-green ratio can be calculated according to Equation (11):

Considering that the number of photoelectrons depends on the photon flux

, on the quantum efficiency

and on the exposure time

, the signal can be expressed as

. Since shot noise has a Poisson distribution, its variance can be expressed in terms of the photoelectron signal

. Substituting into Equation (11) and grouping the terms by exposure time, Equation (12) is obtained:

Equation (12) can be simplified as

by defining a first term

that multiplies

dependent on electronic noise, and a second term

that multiplies

independent of electronic noise and dependent only on ratios of gains, quantum efficiencies, and photon fluxes. The total noise variance is the sum of the variances of the two ratios, weighted by sensitivity coefficients determined by propagation through the neural network. The total variance is therefore

. Substituting the results obtained for

and

and multiplying by

Equation (13) is obtained:

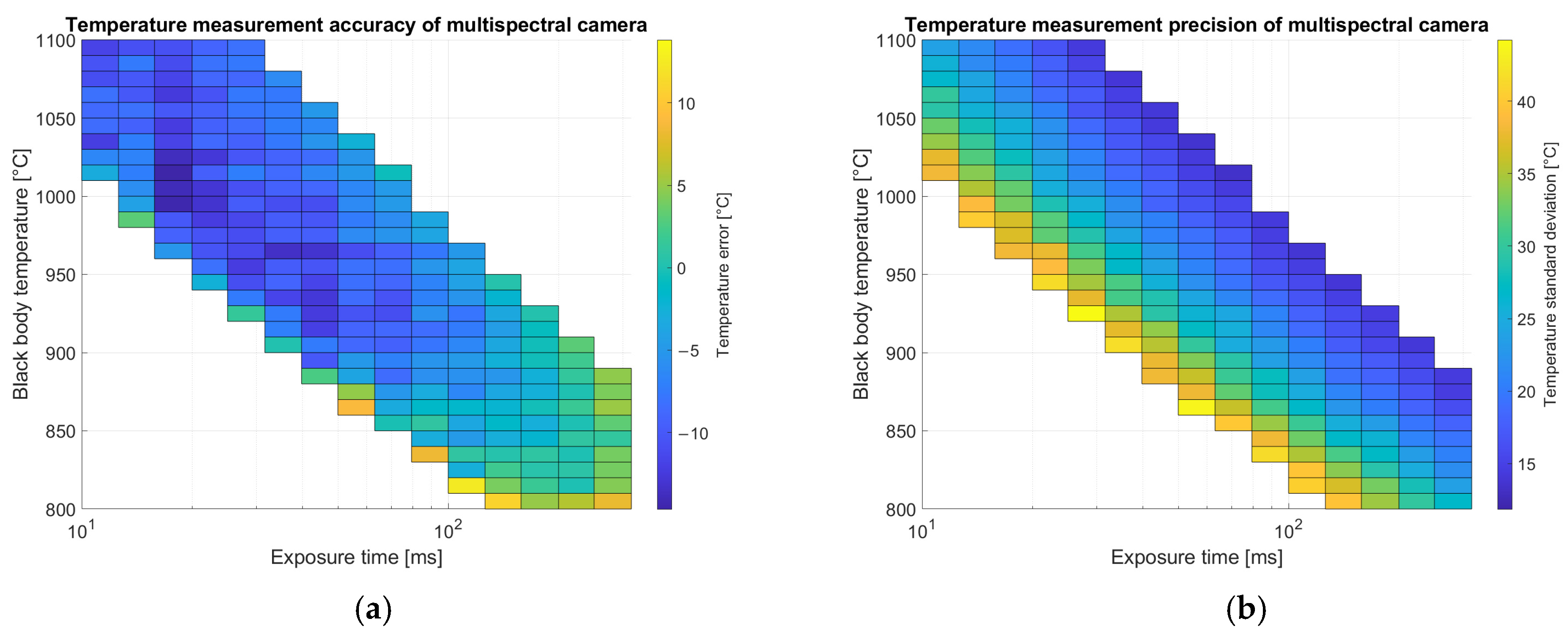

Equation (13) describes a line as a function of exposure time. To verify the model, the standard deviation data reported in Figure 11b and Figure 12b are grouped by reference temperature. In each group, the data are squared, multiplied by the square of the exposure time, and fitted with a line versus exposure time. The fitting results for the color and multispectral cameras are reported in Figure 13 as a function of reference temperature.
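The regression described above, t²σ_T² = A + B·t for each reference temperature, can be sketched with NumPy's `polyfit`. The coefficients and exposure times below are synthetic placeholders used only to check that the fit recovers a known intercept and slope.

```python
import numpy as np


def fit_noise_line(exposure, temp_std):
    """Fit t^2 * sigma_T^2 = A + B * t for one reference temperature.

    Returns the intercept A (electronic-noise term), the slope B (shot-noise term) and R^2.
    """
    t = np.asarray(exposure, dtype=float)
    y = (np.asarray(temp_std, dtype=float) * t) ** 2
    slope, intercept = np.polyfit(t, y, 1)  # polyfit returns the highest degree first
    residual = y - (intercept + slope * t)
    r2 = 1.0 - np.sum(residual**2) / np.sum((y - y.mean()) ** 2)
    return intercept, slope, r2


# Synthetic check with hypothetical coefficients (exposure in ms, sigma_T in deg C).
A_true, B_true = 0.04, 5.0
t_ms = np.array([0.1, 0.2, 0.5, 1.0, 2.0])
sigma_T = np.sqrt(A_true / t_ms**2 + B_true / t_ms)
A_fit, B_fit, r2 = fit_noise_line(t_ms, sigma_T)
```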

The results reported in Figure 13 confirm the validity of the proposed model of electronic noise, since the coefficient of determination is close to one for almost all temperatures except the lowest ones. The model highlights the key role of exposure time in the uncertainty. The line intercept, which accounts for the effect of electronic noise, is almost negligible above 800 °C for the color camera and above 900 °C for the multispectral one, showing the limited contribution of this term. The largest contribution to the noise comes from the slope, which accounts for the imbalance of the photon flux among the channels.
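The linear form of Equation (13) can be traced with a short first-order derivation. The following is a hedged reconstruction in our own notation, not necessarily the symbols of Equations (11)–(13): $K_b$ is the gain of band $b$, $\Phi_b$ the photon flux, $\eta_b$ the quantum efficiency, $t$ the exposure time, $\sigma_d$ the readout noise in electrons, $\sigma_q^2$ the quantization variance, $c_{RG}$ and $c_{BG}$ the network sensitivity coefficients, and the shot-noise variance in DN² is $K_b S_b$, as in EMVA 1288.

```latex
\begin{aligned}
S_b &= K_b\,\Phi_b\,\eta_b\,t,
\qquad
\sigma_{S_b}^2 = \underbrace{K_b^2\sigma_d^2 + \sigma_q^2}_{a_b} + K_b S_b,
\qquad
r_{RG} = \frac{S_R}{S_G} = \frac{K_R\Phi_R\eta_R}{K_G\Phi_G\eta_G},\\[4pt]
\sigma_{r_{RG}}^2 &\approx \frac{\sigma_{S_R}^2}{S_G^2} + \frac{S_R^2\,\sigma_{S_G}^2}{S_G^4}
= \underbrace{\frac{a_R + r_{RG}^2\,a_G}{(K_G\Phi_G\eta_G)^2}}_{\alpha_{RG}}\,\frac{1}{t^2}
+ \underbrace{\frac{r_{RG}\,(K_R + r_{RG}K_G)}{K_G\Phi_G\eta_G}}_{\beta_{RG}}\,\frac{1}{t},\\[4pt]
\sigma_T^2 &\approx c_{RG}^2\,\sigma_{r_{RG}}^2 + c_{BG}^2\,\sigma_{r_{BG}}^2
\;\Longrightarrow\;
t^2\sigma_T^2 = \underbrace{c_{RG}^2\alpha_{RG} + c_{BG}^2\alpha_{BG}}_{A}
+ \underbrace{\left(c_{RG}^2\beta_{RG} + c_{BG}^2\beta_{BG}\right)}_{B}\,t .
\end{aligned}
```

The intercept $A$ collects only electronic-noise terms and the slope $B$ only shot-noise terms, which matches the reading of Figure 13 given above.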

3.4. Temperature Estimation Outside the Calibration Range

The temperature estimation network is trained using simulated data covering a temperature range of 500–3500 °C for both a color camera and a multispectral camera. To evaluate its ability to generalize beyond the calibration range (700–1100 °C), images of a temperature-calibrated halogen bulb (Stefan Boltzmann lamp by 3B Scientific) are captured and processed using both cameras.

The filament temperature is adjusted by controlling the applied voltage, ranging from 1.5 V (corresponding to 800 °C, where incandescence becomes visible) to a maximum of 12.0 V (2600 °C), which represents the filament’s nominal operating condition. Temperature is calculated from the electrical resistance of the filament. Images are captured at temperature increments of 100 °C, from 800 °C up to 2600 °C, setting the corresponding voltage on the tunable power supply.

For each temperature level, multiple exposure times are used, matching those applied during image acquisition at the furnace and extended down to the minimum exposure time allowed by each camera (40 μs for the color camera and 50 μs for the multispectral camera). At each exposure time and voltage, five images are captured and averaged to reduce quantization error. The dark signal is corrected by subtracting the average of five images taken with the same exposure time but with the lamp turned off.

A rectangular region of interest (ROI) is defined in the image over the filament. Pixels with gray values exceeding 240 (before dark subtraction) are considered saturated and removed, as well as those with gray values below 10. If more than half of the pixels in the ROI remain after filtering, the image is processed by evaluating the temperature for each individual pixel.
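The frame averaging, dark correction, and pixel filtering described above can be sketched for a single band as follows. The ROI convention, the choice of applying both thresholds to the averaged raw frame before dark subtraction, and the function name are assumptions; for a color or multispectral image, a pixel would be kept only if it passes the test in every band.

```python
import numpy as np


def roi_temperature_input(frames, darks, roi, lo=10, hi=240, min_fraction=0.5):
    """Average repeated frames, filter pixels and dark-correct a rectangular ROI (single band).

    frames, darks: arrays of shape (n, H, W); roi: (row0, row1, col0, col1) slice bounds.
    Thresholds are applied to the averaged raw frame, before dark subtraction (assumption).
    Returns the dark-corrected ROI, the validity mask and whether the image is processed.
    """
    r0, r1, c0, c1 = roi
    raw = frames.astype(float).mean(axis=0)[r0:r1, c0:c1]
    dark = darks.astype(float).mean(axis=0)[r0:r1, c0:c1]
    valid = (raw >= lo) & (raw <= hi)          # drop saturated (> hi) and dim (< lo) pixels
    return raw - dark, valid, bool(valid.mean() > min_fraction)  # "more than half" is strict
```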

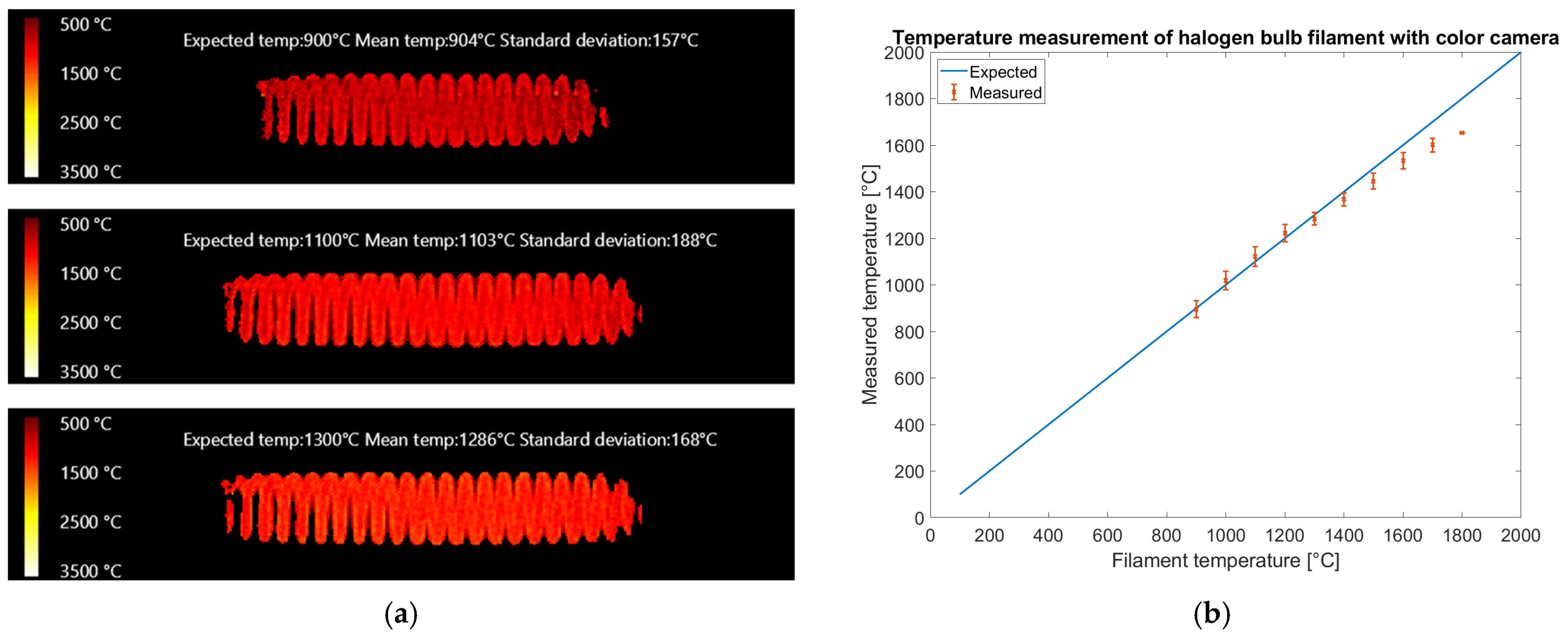

Temperature images of the filament are shown in Figure 14a, reporting the expected temperature, the mean temperature, and the standard deviation evaluated on all the valid pixels of the region of interest.

Figure 14b plots the average temperature estimates for images taken at the same halogen bulb voltage but with different exposure times. The error bars represent twice the standard deviation of the mean temperatures measured on images with the same filament temperature but different exposure times.

The results reported in Figure 14 show that the color camera can measure up to 1800 °C: above that value, the red band saturates even at the shortest exposure time allowed by the camera (40 μs), and color-to-temperature conversion is not possible. The reference temperature is within the error bars up to 1400 °C, showing that our calibration method generalizes above the calibration range (700–1100 °C). Between 1400 °C and 1800 °C, the temperatures appear underestimated. The rms error of the filament temperature measurements on the reduced dataset in the expected temperature range of 900–1400 °C is 28 °C on 40 valid filament images, i.e., images with enough pixels that are neither saturated nor dim. The rms error on the full temperature range, including 59 valid images, is 58 °C.

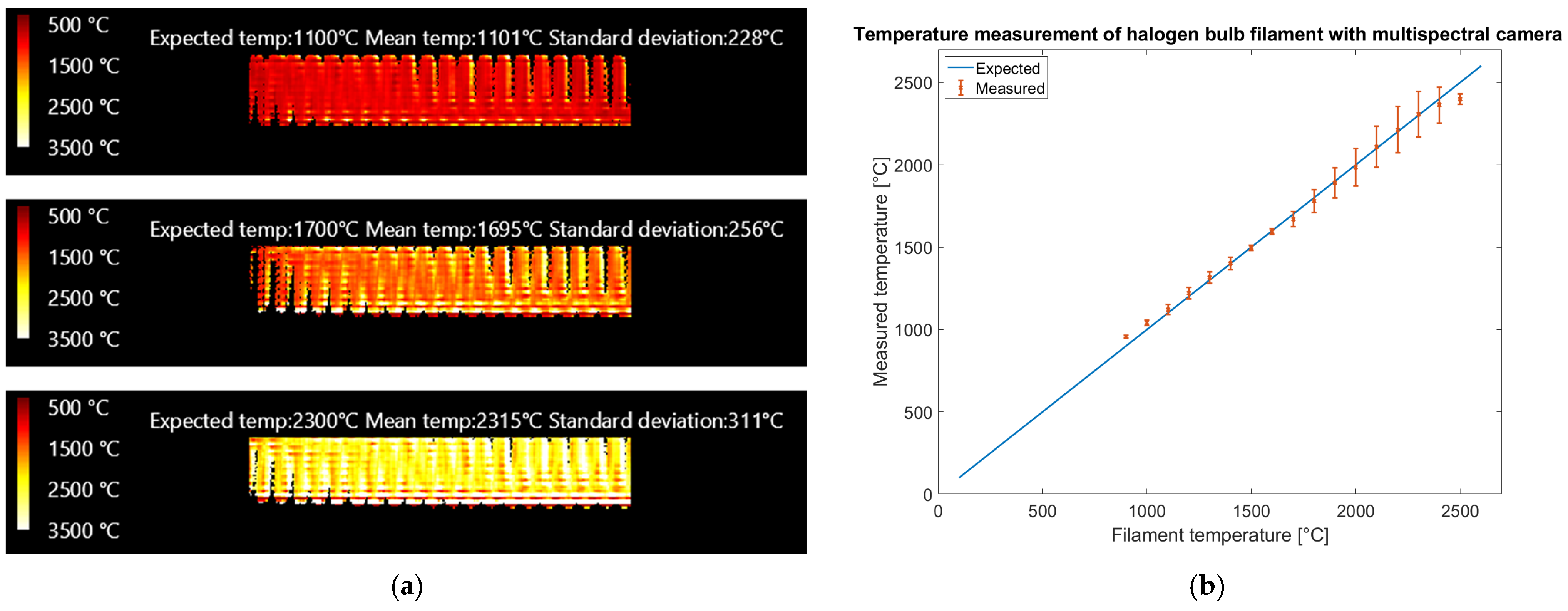

Figure 15 presents the results obtained with the multispectral camera.

The results reported in Figure 15 show that the multispectral camera can measure up to 2600 °C, although the uncertainty starts increasing above 1800 °C because some bands saturate. Unlike the color camera, a limited number of saturated bands is accepted in the processing, at the price of a higher uncertainty. The reference temperature is within the error bars up to 2400 °C, showing that our calibration method generalizes above the calibration range (700–1100 °C). The rms error of the filament temperature prediction, evaluated on the entire dataset of 123 valid images up to 2600 °C (images in which at least 50% of the filament pixels are neither saturated nor dim), is 45.8 °C. When the temperature range is limited to 1000–1400 °C, as for the color camera, 46 valid images remain and the rms error of the temperature prediction decreases to 31.0 °C.

The color camera generates temperature images (Figure 14a) with higher spatial resolution than the multispectral camera (Figure 15a), allowing the wires of the coil to be resolved. This can be explained by considering that the pixel size of both cameras is 5.5 μm and that the magnification provided by the lens is roughly the same, but the multispectral camera requires a subregion of 5 × 5 sensor pixels to generate a temperature reading, while the color camera, thanks to the demosaicing algorithm with interpolation, generates a temperature reading for every pixel. On the multispectral camera, the distance between the sampling positions of the different bands is much larger than on the color camera, which can be critical when sharp temperature edges are present: the bands in the subregion used for temperature estimation may observe regions of the sample at different temperatures, potentially generating false temperature readings. Nonetheless, the operating range of the multispectral camera is wider, covering the temperature range from 1000 °C to 2500 °C, while the color camera is limited to 1800 °C. The color camera works in the visible spectral range (400–700 nm), while the multispectral camera works in the near infrared (700–950 nm), where the intensity varies less with temperature, allowing a wider temperature range. On the other hand, the color camera provides higher spatial resolution at a lower equipment cost and requires fewer processing steps than the multispectral one. Color cameras with extremely wide dynamic range, developed for automotive applications, could extend the temperature range while keeping the other advantages. An overall limitation of the color camera is the interference of ambient light; therefore, low-temperature measurements (below 1000 °C) are possible only in a dark environment. The multispectral camera is more robust in this regard, since near-infrared light is usually absent in lab or industrial environments.

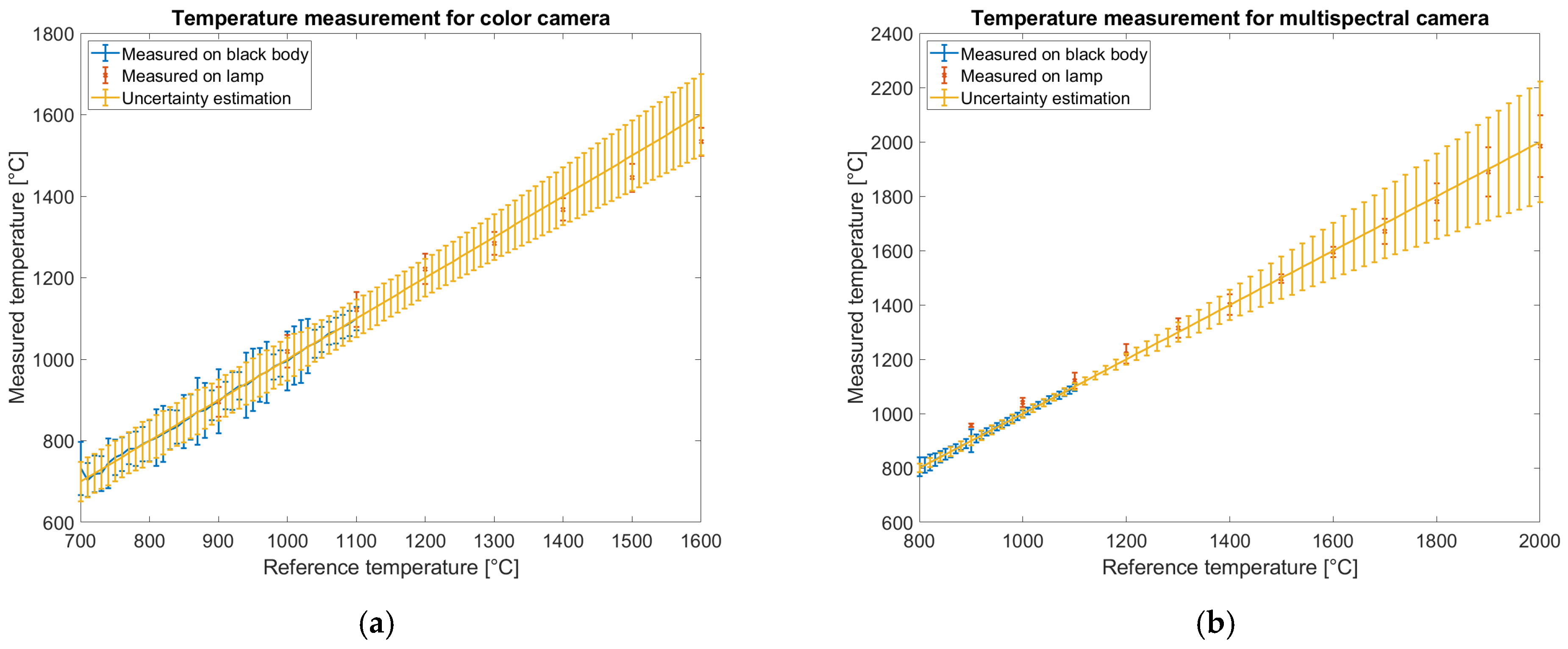

3.5. Results Comparison

The overall comparison of temperature measurements with the color and multispectral cameras is presented in Figure 16. Each plot shows measurements performed on the blackbody source and on the lamp, along with the estimated Type B uncertainty, as described in Section 2.9. Blackbody images are acquired in the temperature range from 700 °C to 1100 °C for the color camera and from 800 °C to 1100 °C for the multispectral camera. The uncertainties of the experimental data are estimated from the standard deviation of the measured temperature within the region of interest. Of the data shown in Figure 11b and Figure 12b, only the measurement corresponding to the optimized exposure time is reported. For the lamp, the data reflect the statistical uncertainty of the measurements.

Type B uncertainty accounts for the emissivity uncertainty (which has a negligible effect), the propagation of signal uncertainty via partial derivatives, and the uncertainty of the reference temperature, which increases outside the calibration range. All uncertainties are reported with a coverage factor of 2, corresponding to a confidence level of approximately 95%. A good agreement between the measured and predicted uncertainties is observed. If required by the application, the uncertainty could be further reduced by averaging across more pixels. In this analysis, uncertainties are calculated assuming a 3 × 3 pixel region for the color camera and a 2 × 2 pixel region for the multispectral camera. The color camera exhibits higher uncertainty than the multispectral camera within the calibration range; however, its uncertainty increases more slowly with temperature, suggesting better suitability for extended-range temperature measurements.

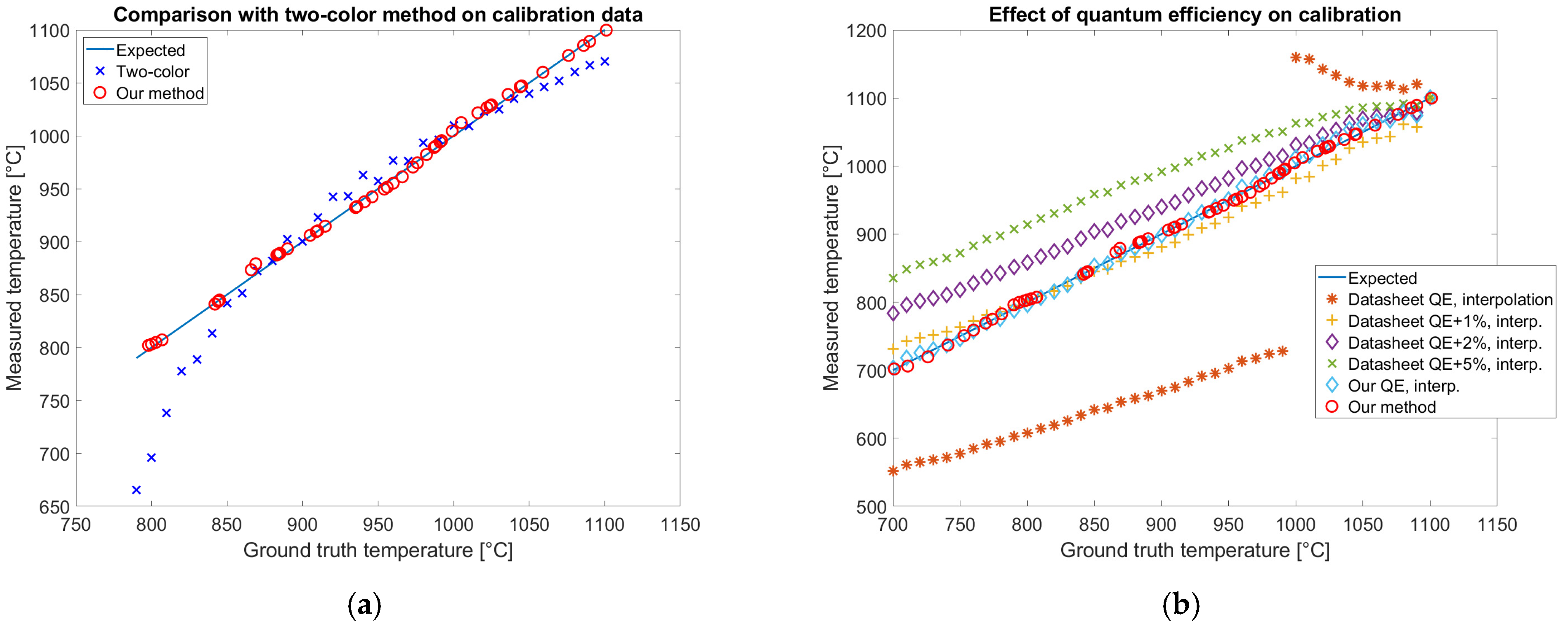

3.6. Results of Alternative Approaches

The alternative calibration approaches are the two-color method [17], presented in Section 2.10, and the direct estimation of temperature from the simulated Planckian locus with temperature regression based on triangular interpolation [44], presented in Section 2.11. Both methods have been tested on the calibration data of the color camera, after removing the dependence on exposure time. The results are reported in Figure 17.

For each method, the average, the standard deviation, and the root mean square of the temperature prediction errors have been evaluated and reported in Table 4.
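The three statistics in Table 4 are related: with the population standard deviation, rms² = mean² + std², so a large rms error can come from bias, from spread, or from both. A minimal helper, assuming ddof = 0 (the convention used for Table 4 is not stated):

```python
import numpy as np


def error_summary(predicted, reference):
    """Mean, standard deviation and rms of the temperature prediction errors."""
    err = np.asarray(predicted, dtype=float) - np.asarray(reference, dtype=float)
    mean = err.mean()
    std = err.std()                   # population std (ddof=0), so rms**2 == mean**2 + std**2
    rms = np.sqrt(np.mean(err**2))
    return mean, std, rms
```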

The results reported in Figure 17 and in Table 4 show that our method fits the calibration data better than all the alternative approaches tested, with the lowest rms error of 3.7 °C. The temperature measured by the two-color method (Figure 17a) does not fully capture the variability of the data, with an rms error of 35.5 °C. The best alternative to our method is the temperature measurement with the optimized QE and Ohno interpolation, with an rms error of 6.0 °C. This is higher than the 3.7 °C obtained with our method, confirming that the neural network outperforms interpolation. If the datasheet QE is used with the same method, the temperature prediction error is almost two orders of magnitude worse. The modified datasheet QE reproduces the variability of the data when a constant of 0.02 is uniformly added, showing the need for a QE optimization step in calibration.

4. Discussion

Our method introduces innovations over the state of the art in quantum efficiency estimation and in temperature estimation from RGB color. These topics are discussed by comparing our results with previous ones in Section 4.1 and Section 4.2. Section 4.3 discusses the effect of exposure time, and the limits of the calibration are presented in Section 4.4.

4.1. Quantum Efficiency Estimation

Quantum efficiency estimation is a key step in camera calibration for temperature estimation. This has been shown by simulating the signal collected in each band by both the color and the multispectral camera with the QE reported in the datasheet: the simulated signal does not match the gray levels observed experimentally on images of an Inconel 718 plate in a furnace at a known temperature. The discrepancy can be observed by comparing the simulated and measured data reported in Figure 7b and Figure 8b. The relevance of the QE to temperature estimation is shown in Figure 17b, where the datasheet QE is perturbed. On the other hand, ignoring the spectral overlap of the bands and approximating them as narrow, as done in the two-color method, introduces an error in the temperature estimation, as shown in Figure 17a. The temperature predicted by the two-color method shows a trend that could be attributed to the excessive simplification of the system.

Figure 17b shows that the optimized QE allows the temperature to be estimated, correctly capturing the complexity of the system both with our neural-network-based method and with an interpolation method. The adjustment performed by the optimization is less than 1%, which confirms how sensitive the color-to-temperature simulation is to the uncertainty of the QE. Our QE optimization method relies on images of a furnace approximating a blackbody source, which is typically available in labs where glowing hot parts are present. Alternative methods that infer the QE from several light-emitting diodes with different peak emission wavelengths [39] or from sets of narrow-band filters [40] require dedicated tools and may not reach the required accuracy.

A limitation concerns the convergence of the QE optimization method, which has been shown experimentally for both a color camera and a multispectral one, but without a full mathematical proof. Convergence is attributed to the fact that the spectra acquired at different temperatures are linearly independent, providing a robust dataset for the fit. The hyperparameters have been chosen by trial and error, and a general rule for choosing the wavelength integration range, subsampling, weights, and weight decay cannot be formulated. Since the vast majority of commercially available color cameras have similar datasheet QEs, the hypothesis that the presented hyperparameters can guarantee general convergence appears reasonable. Moreover, the estimated QE does not differ much from the datasheet one; therefore, it is believed to be reliable, even though it has not been verified with an independent technique. Finally, the estimated QE also includes the optical chain and the filters and does not directly provide information on the camera sensor itself.

4.2. Temperature Estimation from RGB Color

Our approach estimates temperature directly from RGB color, without converting the camera color space into a standard one such as CIE 1931 or CIE 1976. This avoids the uncertainty introduced by the conversion matrix. Furthermore, the conversion becomes noisy or impossible when one of the bands is too dim or saturated. In that case, working in the camera color space potentially makes it possible to discard the critical band and reconstruct the temperature from the remaining data. Conversion matrices are applied to multispectral cameras to compensate for band crosstalk [34,35]. They do not appear applicable to color cameras, however, since they cannot capture the complexity of the system QE. Conversion matrices have been applied above 1700 K [21], a temperature range where few calibration references are available. At lower temperatures, a more robust approach appears to be modeling the Planckian locus in the camera color space, with a black body source as the calibration reference. In our approach, temperature is estimated from color by a neural network trained on the Planckian locus computed after calibration. The results reported in Table 4 show that the neural network outperforms the interpolation method, yielding a lower RMS measurement error. The network was designed to require a limited number of floating-point operations, which could be accelerated by dedicated hardware.
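Working directly in the camera color space, and discarding a dim or saturated band, can be sketched as a lookup on a tabulated Planckian locus. The Python example below is a generic interpolation scheme under stated assumptions. It is not the neural network or the interpolation method evaluated in Table 4. It uses hypothetical Gaussian R, G, and B responses, represents the locus by the log ratio of two bands, and inverts that ratio by linear interpolation. An exposure change scales all channels by the same factor, so in this noise-free model the ratio is unaffected by it.

```python
import bisect
import math

C2 = 1.4388e-2  # second radiation constant h*c/k_B [m K]
# Hypothetical Gaussian channel responses (center, sigma) in metres; not a real camera QE
BANDS = {"R": (600e-9, 30e-9), "G": (540e-9, 30e-9), "B": (460e-9, 30e-9)}


def planck(lam, T):
    """Black-body spectral radiance up to a constant factor."""
    return lam ** -5 / math.expm1(C2 / (lam * T))


def band_signal(band, T, n=401):
    """Simulated signal of one channel for a black body at T (trapezoid rule)."""
    center, sigma = BANDS[band]
    a, h = center - 5 * sigma, 10 * sigma / (n - 1)
    total = 0.0
    for i in range(n):
        lam = a + i * h
        weight = 0.5 if i in (0, n - 1) else 1.0
        total += weight * math.exp(-0.5 * ((lam - center) / sigma) ** 2) * planck(lam, T)
    return total * h


def build_locus(pair, t_min=1000.0, t_max=2000.0, step=5.0):
    """Tabulate the Planckian locus as ln(S_a / S_b) versus T for one band pair."""
    a, b = pair
    count = int(round((t_max - t_min) / step)) + 1
    temps = [t_min + k * step for k in range(count)]
    feats = [math.log(band_signal(a, T) / band_signal(b, T)) for T in temps]
    # For these bands the log ratio decreases with T; inversion needs strict monotonicity
    if any(f_next >= f for f, f_next in zip(feats, feats[1:])):
        raise ValueError("locus is not monotonic for this band pair")
    return feats[::-1], temps[::-1]  # increasing feature order for bisect


def estimate_temperature(reading, locus, pair):
    """Linearly interpolate T from the log ratio of two usable (unsaturated) bands."""
    feats, temps = locus
    f = math.log(reading[pair[0]] / reading[pair[1]])
    k = bisect.bisect_left(feats, f)
    if k == 0 or k == len(feats):
        raise ValueError("reading outside the calibrated temperature range")
    f0, f1 = feats[k - 1], feats[k]
    return temps[k - 1] + (f - f0) * (temps[k] - temps[k - 1]) / (f1 - f0)
```

For example, `build_locus(("R", "G"))` covers the general case. If the red channel saturates, the same reading can be inverted with `build_locus(("G", "B"))` and the pair `("G", "B")`. A two-band ratio is used because it is monotonic in temperature for these hypothetical bands, which the code checks. A three-band fit would need a different inversion.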

4.3. Effect of Exposure Time

The data reported in Figures 11, 12, 14, and 15 show that the effect of exposure time is the largest source of uncertainty in the temperature measurement. The dynamic range of an 8-bit camera appears too limited to capture the wide range of intensities emitted by glowing objects. In Figures 11 and 12, a large portion of the plot shows no temperature measurement because at least one of the bands is too dim or saturated. Temperatures measured at different exposure times differ, producing the uncertainty reported in Table 3: 63.2 °C for the color camera and 24.3 °C for the multispectral one. This uncertainty is attributed to three sources:

* the instability of the dark level, which adds a bias to the signal;
* the shot noise of the sensor, which adds white noise to each reading;
* the quantization error, which becomes relevant when the exposure time is not optimal.

These contributions add up, affecting the data fed to the neural network and, therefore, the temperature estimate. A high-dynamic-range camera could reduce the quantization uncertainty, potentially improving measurement precision. Such a camera will be tested in future work to improve robustness to exposure time variations.
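The error sources listed above can be explored with a toy 8-bit channel model. In the Python sketch below, the following choices are hypothetical and do not characterize either camera:

* the conversion gain (20 electrons per DN),
* the nominal dark level (8 DN),
* the Gaussian approximation of Poisson shot noise.

In the noise-free, unquantized case, the normalized chromaticity is identical at every exposure. This is why normalization removes the direct effect of exposure time. However, an uncorrected dark-level drift, shot noise, quantization, and clipping make the result depend on exposure, most strongly when the signal is only a few DN.

```python
import random

DARK_DN = 8.0  # nominal dark level subtracted during processing [DN] (hypothetical)


def read_channel(flux, exposure, e_per_dn=20.0, dark_drift=0.0,
                 rng=None, quantize=True, max_dn=255):
    """Simulate one dark-subtracted 8-bit channel reading in digital numbers (DN).

    flux: photo-electrons per unit exposure time (hypothetical units).
    dark_drift: unknown offset between the true and the nominal dark level.
    rng: random.Random for shot noise (Gaussian approximation of Poisson), or None.
    Returns None when the channel saturates.
    """
    electrons = flux * exposure
    if rng is not None:
        electrons = rng.gauss(electrons, electrons ** 0.5)
    dn = electrons / e_per_dn + DARK_DN + dark_drift
    if quantize:
        dn = round(dn)
    if dn >= max_dn:
        return None
    return max(dn, 0) - DARK_DN


def chromaticity(fluxes, exposure, **kwargs):
    """Normalized (r, g, b); None if a band saturates or the total signal is not positive."""
    values = [read_channel(f, exposure, **kwargs) for f in fluxes]
    if any(v is None for v in values):
        return None
    total = sum(values)
    if total <= 0:
        return None
    return tuple(v / total for v in values)


if __name__ == "__main__":
    fluxes = (3000.0, 1500.0, 500.0)  # hypothetical red-dominated glowing object
    rng = random.Random(0)
    for exposure in (0.05, 0.2, 1.0, 1.5, 2.0):
        print(exposure, chromaticity(fluxes, exposure, rng=rng, dark_drift=0.5))
```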

4.4. Limits of Calibration

The accuracy of the proposed calibration procedure depends on the combined spectral response of the imaging system and the radiative properties of the observed material. Any modification to the optical assembly that alters the spectral transmission of the system requires recalibration, for example replacing the lens with one made of different materials or with different coatings. Similarly, changing the camera changes the quantum efficiency (QE) curve of the sensor, which directly determines the spectral response, and therefore also requires recalibration. The observed material is also a key factor. Switching to a different target material, such as a metal with a distinct emissivity slope, alters the spectral radiance profile and requires recalibration to preserve measurement accuracy.

Changes in working distance do not affect the calibration unless they introduce significant atmospheric absorption, which could alter the spectral composition of the received signal. Under typical laboratory or industrial conditions and over short distances, this effect is negligible and recalibration is not required. However, the spectral response could be affected in environments with long optical paths or significant temperature or gas composition gradients (e.g., high humidity or combustion gases), and recalibration may then be necessary. Since this work targets additive manufacturing, which operates over short distances, atmospheric absorption has been considered negligible, and its analysis is left for future work.

In contrast, variations in aperture, focus, or exposure time do not influence the spectral response. In particular, the normalization procedure applied in this method effectively cancels the influence of exposure time, ensuring that such adjustments do not require recalibration. Overall, while recalibration is needed for any change that significantly affects spectral transmission or material emissivity, the procedure remains straightforward and can be conducted using standard tools available in a typical additive manufacturing laboratory.