Abstract

In modern manufacturing, making accurate and timely decisions requires the ability to effectively handle multiple types of data. This paper presents a multimodal system designed specifically for smart manufacturing applications. The system combines various data sources including images, sensor data, and production records, using advanced multimodal large language models. This approach addresses common limitations of traditional single-modal methods, such as isolated data analysis and poor integration between different data types. Key contributions include a unified method for representing different data types, dynamic semantic tokenization for better data processing, strong alignment strategies across modalities, and a practical two-stage training method involving initial large-scale pretraining and later fine-tuning for specific tasks. Additionally, a novel Transformer-based model is introduced for generating both images and text, significantly improving real-time decision-making capabilities. Experiments on relevant industrial datasets show that this method consistently performs better than current state-of-the-art approaches in tasks like image–text retrieval and visual question answering. The results demonstrate the effectiveness and versatility of the proposed methods, offering important insights and practical solutions to enhance intelligent manufacturing, predictive maintenance, and anomaly detection, thus supporting the development of more efficient and reliable industrial systems.

1. Introduction

In modern industrial settings, production tasks are growing increasingly complex, driving an urgent demand for advanced intelligent systems capable of multimodal data perception, analysis, and fusion-based decision-making [1,2]. These systems must be designed to manage the collaborative processing of multi-source heterogeneous data, including visual information, sensor signals, and production records. Such data are inherently characterized by pronounced temporal dependencies and heterogeneity, posing significant challenges for conventional single-modal processing methods. These methods often fail to capture the latent relationships between modalities, thereby limiting the effectiveness of intelligent perception systems in real-world applications. As a result, issues such as data silos and poor decision-making accuracy often arise, degrading the overall efficiency and responsiveness of industrial operations.

Achieving robust multimodal data fusion, however, remains a formidable challenge. The diverse modalities involved exhibit significant disparities in structure, feature space, and temporal resolution. For example, image data typically embody high-dimensional static representations; time-series data are expressed as low-dimensional dynamic sequences; and text data consist of highly semantic, unstructured content. The interactions among these modalities are often nonlinear and intricately complex. Traditional industrial intelligent systems tend to process each modality in isolation, resulting in fragmented analysis workflows that are inefficient and incapable of uncovering deeper cross-modal associations. In this context, multimodal data fusion emerges as a critical enabling technology. By integrating visual, temporal, and textual information into a unified feature representation space, a more holistic and nuanced understanding of industrial system complexities can be attained. The ability to perform such fusion and collaborative analysis is fundamental to propelling industrial automation toward higher levels of intelligence, adaptability, and sophistication. Large multimodal models have demonstrated remarkable potential in this regard, offering robust mechanisms for the efficient fusion of heterogeneous data types such as images, sensor readings, and production logs. Compared to traditional single-modal models, these architectures exhibit superior generalization capabilities and enhanced robustness, making them well suited to the demands of real-time perception and intelligent decision-making in dynamic and complex industrial environments. Their core strength lies in the capacity to encode disparate modalities within a shared feature space, enabling deep semantic understanding and intelligent inference across data types. This capability is pivotal for achieving high-precision perception and automated decision support in modern industrial contexts.

Recent advancements in models such as GPT-4o, LLaMA, and DeepSeek have demonstrated transformative progress in multimodal data understanding and generation tasks, driven by large-scale pretraining and sophisticated cross-modal alignment strategies [3,4,5,6]. Extending the fusion mechanisms of these large multimodal models to the domain of industrial multi-source data analysis holds tremendous promise. It can effectively dismantle data silos, unlock hidden cross-modal relationships, and provide robust theoretical and technological foundations for real-time perception, predictive analysis, and decision-making in industrial applications.

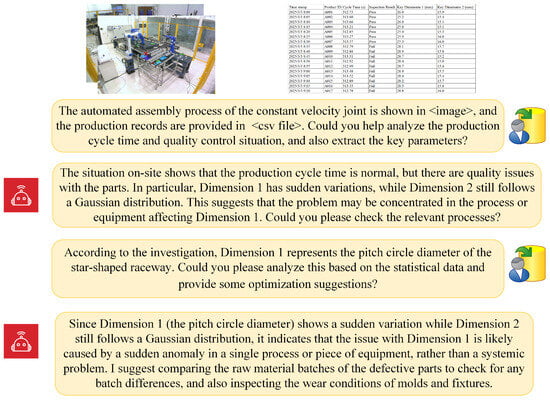

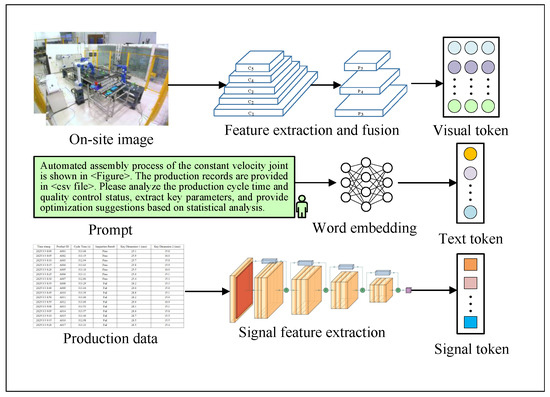

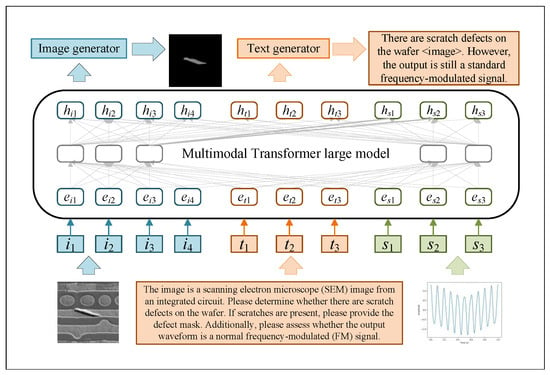

Building on this foundation, the present study proposes the development of a multimodal intelligent perception and decision-making system tailored for complex industrial scenarios. By harnessing the complementary characteristics of image data, time-series signals, and unstructured text, we introduce a unified multimodal representation learning framework. This framework employs a two-stage strategy of large-scale pretraining followed by task-specific fine-tuning, enabling the model to effectively adapt to diverse industrial applications. The core research contributions encompass the design of robust multimodal data alignment methodologies, the formulation of scalable pretraining strategies, the implementation of few-shot fine-tuning techniques for industrial deployment, and the construction of a unified bidirectional image–text generation model [7,8]. Collectively, these advancements culminate in the realization of an industrial question answering and decision-making system, illustrated in Figure 1. This system is capable of delivering accurate, efficient, and real-time intelligent Q&A services to support critical industrial functions such as production management, equipment maintenance, fault diagnosis, and anomaly detection, thereby markedly enhancing decision-making efficiency and elevating the overall intelligence of production environments.

Figure 1. Dialogue example of a multimodal large language model-based Q&A system for smart manufacturing.

In summary, the development of multimodal intelligent perception and decision-making systems has emerged as a pivotal requirement in the pursuit of next-generation intelligent manufacturing and industrial automation [9,10]. Despite substantial progress, current approaches continue to face formidable challenges in achieving deep, meaningful fusion of multimodal data and supporting high-quality, real-time decision-making in industrial contexts. This study aims to overcome these limitations by constructing a novel industrial intelligent perception and decision-making framework powered by large-scale multimodal models [11]. The proposed framework emphasizes cross-modal feature alignment and unified semantic modeling, taking into full consideration the heterogeneous nature of industrial data sources and the complex, dynamic requirements of industrial decision processes.

By uncovering the fundamental mechanisms through which multimodal data fusion can enhance industrial intelligence and decision-making efficacy, this research advances the development of an efficient, scalable, and adaptive optimization and decision-making architecture. The outcomes of this work are poised to deliver critical technological support for key domains including intelligent manufacturing, predictive maintenance, and fault diagnostics. Beyond these applications, the findings will also provide valuable theoretical guidance and practical reference for extending artificial intelligence technologies to increasingly complex industrial environments, paving the way for more autonomous, resilient, and intelligent industrial systems.

2. Related Works

2.1. Current Progress in Modality Alignment and Instruction Tuning for Multimodal Large Language Models

Large language models (LLMs) have garnered widespread recognition for their transformative impact on natural language processing (NLP) and text generation. Nevertheless, early iterations of these models were predominantly confined to single-modal inputs, presenting substantial limitations when addressing complex tasks that require the integration and analysis of multidimensional signals [12,13]. To address this challenge, the research community has progressively advanced the development of multimodal models, with the fusion of visual, signal, and textual information emerging as a particularly promising direction. These models demonstrate the capacity to simultaneously process and reason over heterogeneous data streams, unlocking new possibilities for tackling increasingly complex and diverse applications.

Notable progress in this domain includes the work of Liu et al., who extended instruction-based learning into the language–vision domain through the introduction of the LLaVA (large language and vision assistant) series, an end-to-end trained family of multimodal models [14]. By integrating a vision encoder with a large language model, LLaVA enables unified understanding across modalities and significantly enhances zero-shot generalization capabilities for novel tasks. Building on this foundation, LLaVA 1.5 further validated the effectiveness of employing a multilayer perceptron (MLP) as the vision–language connector [15]. This innovative approach encodes images through grid partitioning, allowing the model to handle arbitrary resolutions with flexibility and scalability.

To further improve the efficiency and adaptability of large language model fine-tuning, Luo et al. proposed the incorporation of lightweight connector modules between image encoders and large language models. This design not only facilitates seamless joint optimization but also integrates a routing mechanism that allows the model to automatically switch between single-modal and multimodal instruction processing. Leveraging this framework, the authors introduced LaVIN (large vision and instruction network), a novel multimodal model [16]. The LaVIN framework is underpinned by modality-mixed adaptation (MMA) and multimodal training strategies, enabling rapid adaptation to vision–language tasks without the need for extensive pretraining.

Extending the concept of multimodal fusion beyond two modalities, Yin et al. developed a multimodal instruction-tuning dataset that encompasses both images and point cloud data. This dataset emphasizes fine-grained detail and factual knowledge, with a thorough exposition of its construction methodology. In addition, they proposed a language-assisted multimodal instruction-tuning framework designed to optimize modality extension, accompanied by baseline models, experimental results, and in-depth analysis. Further expanding on these efforts, Zhang et al. introduced the large language and vision assistant with reasoning (LLaVAR) model [17]. LLaVAR aims to enhance multimodal understanding by leveraging rich text–image datasets and improves generalization to unseen tasks through instruction tuning, demonstrating strong performance in cross-modal reasoning tasks.

A summary of related work in multimodal vision-language models is given in Table 1. Despite these advancements, current research remains predominantly focused on general-purpose vision-language applications, leaving a notable gap in the field of industrial intelligent perception and decision-making [16]. Existing multimodal large models are primarily trained on natural images and open-domain text, with a conspicuous absence of domain-specific industrial data such as manufacturing process documentation, equipment operation logs, and real-time sensor signals. This deficiency significantly restricts their utility in industrial environments characterized by high data heterogeneity and complex decision-making requirements. Consequently, integrating existing multimodal large models with the demands of industrial intelligence, toward an efficient, adaptable, and transferable industrial multimodal intelligent perception and decision-making system, remains an urgent and strategically important research frontier. Addressing these challenges will be critical to advancing intelligent manufacturing, predictive maintenance, and industrial safety management, and should constitute a priority for future research endeavors.

Table 1. Summary of related work in multimodal vision-language models.

2.2. Current Applications of Large Language Models in Industrial Domains

In recent years, large language models have made transformative strides in natural language processing, computer vision, and multimodal learning. Despite these advancements, their application in industrial domains remains at an early, exploratory stage. Although select enterprises and research institutions have begun to integrate large language models into industrial intelligence pipelines, most existing industrial intelligence systems continue to rely heavily on traditional machine learning methods or rule-based decision-making frameworks. As a result, the full potential of large language models, particularly in cross-modal data fusion, intelligent perception, and autonomous decision-making, has yet to be fully leveraged. At present, applications of large language models in industrial contexts are primarily concentrated in intelligent manufacturing, predictive maintenance, intelligent quality inspection, and fault diagnosis. To better understand the current landscape and future development trajectories, this section examines two representative application scenarios: industrial vibration signal analysis and industrial anomaly detection.

In the domain of industrial vibration signal analysis, Wang et al. proposed a large-model-based analytical framework [18] that leverages a pretrained large-scale time series–text joint model to enhance the representational capacity of multidimensional signal features. This advancement has led to marked improvements in the diagnostic accuracy of bearing faults. Similarly, Ye et al. developed a large-model-based feature learning approach for vibration signals [19], employing a cross-modal knowledge distillation mechanism that enables the model to automatically extract key fault patterns from gearbox vibration data, thereby improving diagnostic precision and operational efficiency. Ribeiro et al. introduced a large language model trained on multichannel vibration signals that integrate visual, temporal, and textual information [20]. By employing cross-modal attention mechanisms and contrastive learning strategies, the model is capable of detecting and diagnosing six distinct types of motor faults. The vibration data are captured using accelerometers placed along two perpendicular axes, with the multi-head self-attention module independently processing inputs from different sensors to achieve efficient and robust feature extraction. Additionally, Li et al. proposed a self-supervised learning framework based on large language models [21] designed to diagnose gear pitting faults in scenarios where only limited raw vibration data are available.

In the field of industrial anomaly detection, multimodal large language models have demonstrated considerable potential in interpreting complex textual inputs and generating diverse outputs in combination with visual data. Jeong et al. introduced WinCLIP [22], which encodes both textual descriptions and target images, while aggregating multi-scale visual features and text embeddings to ensure coherent alignment between modalities. Zhou et al. proposed AnomalyCLIP [23], a prompt-learning approach that learns generalized representations of normal and anomalous states, thereby enhancing the ability of the model to generalize across disparate domains. This technique reduces the dependency on manually crafted prompts and broadens the applicability of the model to industrial and medical anomaly detection scenarios. Building on this concept, Gu et al. introduced AnomalyGPT [24], a novel approach that simulates anomalies from normal samples to generate descriptive textual narratives of faults. A lightweight decoder based on visual–text feature matching was designed to directly compare local visual features with textual descriptions, enabling pixel-level anomaly localization. Through prompt learning, AnomalyGPT embeds industrial anomaly detection knowledge within multimodal large language models, creating prompt embeddings that allow for seamless integration of image data, anomaly localization outcomes, and user-provided textual inputs to facilitate robust anomaly detection and localization.

A summary of large-model applications in industrial domains is given in Table 2. While these advances in multimodal data fusion and industrial intelligent decision-making lay a solid foundation for further exploration, existing studies typically address narrowly defined tasks using specialized technologies. These approaches are mostly optimized for unimodal data streams and lack the capacity for deep cross-modal interaction, making them unsuitable for highly heterogeneous and complex decision-making environments in industrial settings. A review of the current literature reveals no comprehensive framework that systematically employs large-scale multimodal models for industrial data processing, deep cross-modal alignment, and intelligent decision optimization. Therefore, this research aims to establish an industrial multimodal intelligent perception and decision-making framework capable of concurrently processing diverse data sources, including visual imagery, sensor outputs, and production logs. Moreover, the framework will explore advanced methodologies for feature alignment, information fusion, and cross-modal reasoning mechanisms, enabling it to meet the demands of dynamic optimization tasks in complex and evolving industrial environments.

Table 2. Summary of large model applications in industrial domains.

3. Materials and Methods

This section presents a multimodal methodology designed to unify visual data, production records, and textual descriptions for enhanced representation learning and cross-modal generation in industrial diagnostics and monitoring tasks. The proposed approach integrates heterogeneous data sources through a semantic tokenization mechanism and a shared Transformer-based architecture, enabling more accurate and context-aware analysis of complex operational environments. By translating dense visual features, structured signal data, and sparse linguistic inputs into a common token space, this framework facilitates a more holistic understanding of machine behavior and degradation patterns.

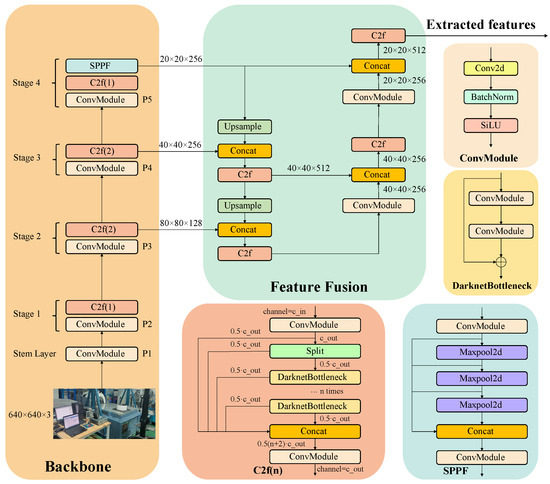

3.1. End-to-End Pretraining Based on Semantic Tokens

To learn unified representations from three modalities (visual data, production records, and textual descriptions), this study proposes an end-to-end multimodal pretraining framework. First, the entire input image is processed by a trainable CNN-based visual encoder (CSPDarknet pretrained on the ImageNet dataset) [25], followed by a convolution layer and a max-pooling layer, as shown in Figure 2. This encoding process generates grid-based feature maps without relying on predefined bounding boxes, ensuring that global contextual information critical for reasoning tasks is preserved and continuously updated during training. For the input image $I$, its feature $\mathcal{V}$ is obtained through the following formula:

$$\mathcal{V} = E(I, \theta) \in \mathbb{R}^{l \times c}$$

where the function $E(\cdot, \theta)$ denotes a visual feature encoder parameterized by $\theta$, producing a total of $l$ feature vectors, each of dimensionality $c$.

Figure 2. Comprehensive illustration of CNN-based visual encoder architecture.

To bridge the representational gap between dense visual features, structured production records, and sparse language tokens, a dynamic visual and signal dictionary was introduced. The dictionary clusters semantically similar visual features and indexed production data into shared semantic markers. These semantic embeddings are dynamically updated during training using momentum-based moving averages, and non-differentiability issues were resolved by leveraging a stop-gradient technique. Specifically, the visual dictionary is defined as a matrix $\mathcal{D} \in \mathbb{R}^{k \times c}$ composed of $k$ embedding vectors, each of dimensionality $c$. We denote the $j$-th vector in this dictionary as $d_j$. To associate a given visual feature $v_i$ with a corresponding dictionary entry, we identify its closest match in $\mathcal{D}$ by performing a nearest neighbor search:

$$h_i = \arg\min_{j} \left\lVert v_i - d_j \right\rVert_2$$

This lookup defines a mapping function $f$ that assigns each input feature to its most semantically relevant embedding in $\mathcal{D}$:

$$f(v_i) = d_{h_i}$$

where the visual feature is represented using a semantically similar embedding vector. Initially, the dictionary is populated with random embeddings and subsequently refined through a momentum-based moving average update during training. The update rule is given by:

$$\hat{d}_j = \gamma\, d_j + (1 - \gamma)\, \frac{\sum_{h_i = j} v_i}{\left| f^{-1}(j) \right|}$$

where $\hat{d}_j$ represents the updated embedding vector associated with the selected index $j$, $\left| f^{-1}(j) \right|$ is the number of visual features mapped to that index, and $\gamma$ is a momentum coefficient that controls the update rate.

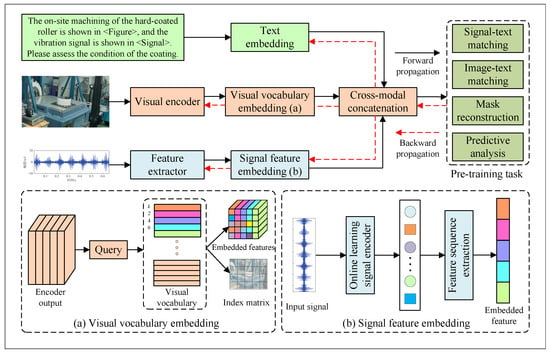

Visual and production-related features are thus transformed into discrete semantic tokens comparable to text tokens, enabling joint processing. The workflow of generating a dictionary is shown in Figure 3.
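The dictionary lookup and its moving-average refresh can be illustrated with a small NumPy sketch. It is not the authors' implementation: the function names, the toy sizes, and the momentum value of 0.99 are assumptions made for illustration, and the update assumes each selected entry moves toward the mean of the features assigned to it. The stop-gradient step is omitted because no automatic differentiation is involved here.

```python
import numpy as np

def quantize(features, dictionary):
    """Return, for each feature, the index of its nearest dictionary entry (L2)."""
    # Pairwise squared distances between l features and k entries, shape (l, k)
    dists = ((features[:, None, :] - dictionary[None, :, :]) ** 2).sum(axis=-1)
    return dists.argmin(axis=1)

def momentum_update(features, dictionary, indices, gamma=0.99):
    """Move each selected entry toward the mean of the features assigned to it."""
    updated = dictionary.copy()
    for j in np.unique(indices):
        assigned_mean = features[indices == j].mean(axis=0)
        updated[j] = gamma * dictionary[j] + (1.0 - gamma) * assigned_mean
    return updated  # entries that were never selected stay unchanged

rng = np.random.default_rng(0)
features = rng.normal(size=(6, 4))     # l = 6 feature vectors, c = 4 (toy sizes)
dictionary = rng.normal(size=(3, 4))   # k = 3 entries, randomly initialised

indices = quantize(features, dictionary)   # discrete semantic token ids
tokens = dictionary[indices]               # quantized features, shape (6, 4)
dictionary = momentum_update(features, dictionary, indices)
```

The integer `indices` play the role of discrete semantic tokens, while `tokens` holds the embeddings that would be passed on to the Transformer.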

Figure 3. Workflow of generating dynamic visual and signal dictionary for bridging representational gaps.

The multimodal representations (text embeddings from a WordPiece tokenizer, production record embeddings mapped into the same latent space, and dictionary-encoded visual features) are concatenated and fed into a multi-layer Transformer network. The Transformer simultaneously handles modality fusion and task-specific decoding. Sinusoidal position encodings are applied to maintain spatial relationships in visual and production-record embeddings.
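As a rough illustration of this input construction, the sketch below adds standard sinusoidal position encodings to the visual and production-record embeddings and concatenates all three modalities into one sequence. The embedding width, the sequence lengths, and the use of a one-dimensional encoding over flattened positions are assumptions; the paper does not specify them.

```python
import numpy as np

def sinusoidal_encoding(num_positions, dim):
    """Sinusoidal position encoding of shape (num_positions, dim); dim must be even."""
    positions = np.arange(num_positions)[:, None]                   # (n, 1)
    freqs = np.exp(-np.log(10000.0) * np.arange(0, dim, 2) / dim)   # (dim / 2,)
    encoding = np.zeros((num_positions, dim))
    encoding[:, 0::2] = np.sin(positions * freqs)   # even channels
    encoding[:, 1::2] = np.cos(positions * freqs)   # odd channels
    return encoding

rng = np.random.default_rng(0)
dim = 8                                     # hypothetical shared embedding width
text_emb = rng.normal(size=(5, dim))        # 5 WordPiece token embeddings
record_emb = rng.normal(size=(3, dim))      # 3 production-record embeddings
visual_emb = rng.normal(size=(4, dim))      # 4 dictionary-encoded visual features

# Position information is added only to the record and visual embeddings
record_emb = record_emb + sinusoidal_encoding(len(record_emb), dim)
visual_emb = visual_emb + sinusoidal_encoding(len(visual_emb), dim)

# One sequence of 5 + 3 + 4 = 12 tokens for the Transformer
sequence = np.concatenate([text_emb, record_emb, visual_emb], axis=0)
```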

3.2. Unified Bidirectional Image–Text Generation Model

The model training objectives included three pretraining tasks: masked language modeling (MLM), masked visual modeling (MVM), and multimodal matching (MTM). For MLM, random text tokens were masked and predicted using surrounding text, visual, and production information. The goal of the pretraining task is to predict masked text tokens $y_l$ by maximizing their log-likelihood, conditioned on the surrounding context formed by both masked image tokens $X^{M'}$ and masked text tokens $Y^{L'}$, where $M'$ and $L'$ denote the masked positions. With the Transformer model parameterized by $\theta$, the objective minimizes the following cross-entropy loss:

$$\mathcal{L}_{\mathrm{MLM}}(\theta) = -\sum_{l=1}^{L'} \log p_{\theta}\left(y_l \mid X^{M'}, Y^{L'}\right)$$

Since the layout structure remains fixed during this process, the objective encourages the model to capture meaningful relationships between the layout, the textual content, and the associated visual elements. For MVM, certain visual and production semantic tokens $x_m$ were masked and inferred from the context, with masking strategies adjusted to prevent trivial copying from neighboring regions. The loss function is defined as:

$$\mathcal{L}_{\mathrm{MVM}}(\theta) = -\sum_{m=1}^{M'} \log p_{\theta}\left(x_m \mid X^{M'}, Y^{L'}\right)$$

The MTM loss optimizes the ability of the model to distinguish aligned from non-aligned triplets of images, production records, and text. The model takes contextual text and image as input and outputs a binary label of either “aligned” or “misaligned”. With $z \in \{0, 1\}$ denoting the ground-truth alignment label and $\hat{z}$ the predicted probability of “aligned”, the model is optimized using the binary cross-entropy loss:

$$\mathcal{L}_{\mathrm{MTM}}(\theta) = -\left[ z \log \hat{z} + (1 - z) \log\left(1 - \hat{z}\right) \right]$$
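The two kinds of loss used by these objectives can be sketched in a few lines of NumPy. This is a minimal illustration rather than the training code: it assumes the masked-token losses are averaged over masked positions only, and the function names and toy inputs are hypothetical.

```python
import numpy as np

def masked_cross_entropy(logits, targets, mask):
    """Mean negative log-likelihood of the target tokens at masked positions only."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    token_nll = -log_probs[np.arange(len(targets)), targets]
    return float((token_nll * mask).sum() / mask.sum())

def binary_cross_entropy(prob_aligned, label, eps=1e-12):
    """Loss for one aligned (label 1) or misaligned (label 0) sample."""
    return float(-(label * np.log(prob_aligned + eps)
                   + (1 - label) * np.log(1 - prob_aligned + eps)))

# Toy sequence of 3 tokens over a vocabulary of 4; positions 0 and 2 are masked
logits = np.zeros((3, 4))               # uniform predictions
targets = np.array([0, 1, 2])           # ground-truth token ids
mask = np.array([1.0, 0.0, 1.0])

mlm_loss = masked_cross_entropy(logits, targets, mask)   # equals log(4) for uniform logits
mtm_loss = binary_cross_entropy(0.5, 1)                  # equals log(2) for a coin-flip prediction
```

The same `masked_cross_entropy` applies to text tokens (MLM) and to visual or production tokens (MVM); only the vocabulary and the masked positions differ.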

After the pretraining process, as shown in Figure 4, all modalities are unified and transformed into discrete token sequences that serve as input to the backbone large language model. Specifically, visual inputs are first processed by a CSPDarknet-based convolutional backbone [25] to extract high-level image features, which are subsequently quantized into visual tokens. Signal data are encoded via a one-dimensional convolutional ResNet architecture, capturing temporal patterns and structural information before being discretized into sequential tokens. Textual inputs are embedded using pretrained word embedding models, converting natural language into token representations compatible with the vocabulary space of the model. These unified token sequences from heterogeneous modalities are then fed into the large Transformer-based language model, enabling cross-modal representation learning and unified generation through a shared attention-based architecture.

Figure 4. Aligning multiple modalities for input to the Transformer-based language model.

To enable the model to support multiple modal inputs and output text and images simultaneously, we propose a unified bidirectional image–text generation framework based on a multimodal Transformer architecture, as shown in Figure 5. The model jointly handles image-to-text (I2T) and text-to-image (T2I) generation tasks within a single architecture, significantly reducing design complexity and improving parameter efficiency. The backbone of the framework is a multi-layer LLaMA-based Transformer consisting of multi-head self-attention, feed-forward layers, rotary positional embeddings, and normalization techniques. To stabilize training, this work follows CogView [26] by modifying the attention computation as follows:

$$\mathrm{softmax}\left(\frac{Q^{\top}K}{\sqrt{d}}\right) = \mathrm{softmax}\left(\left(\frac{Q^{\top}}{\alpha\sqrt{d}}K - \max\left(\frac{Q^{\top}}{\alpha\sqrt{d}}K\right)\right) \times \alpha\right)$$

where the hyperparameter $\alpha$ is set to 32. Most parameters are shared across I2T and T2I tasks, except for task-specific linear output layers. The Transformer accepts input sequences composed of visual and textual tokens and maps them into contextual embeddings for prediction. For T2I tasks, an additional image generator is employed to transform predicted visual token grids into high-resolution images.
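A quick numerical check shows why this kind of rescaling is safe. The sketch assumes the modification takes the form used in CogView's precision-bottleneck relaxation: queries are divided by α before the product, the row maximum is subtracted, and the result is multiplied by α. The function names and toy shapes are hypothetical, and the row-vector convention `q @ k.T` is used instead of the column convention.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def attention_weights_reference(q, k):
    """Standard scaled dot-product attention weights."""
    d = q.shape[-1]
    return softmax(q @ k.T / np.sqrt(d))

def attention_weights_relaxed(q, k, alpha=32.0):
    """Same weights, but the raw product is computed at 1/alpha of the usual scale."""
    d = q.shape[-1]
    scores = (q / (alpha * np.sqrt(d))) @ k.T            # small intermediate values
    scores = scores - scores.max(axis=-1, keepdims=True)  # row-wise shift, softmax-invariant
    return softmax(scores * alpha)

rng = np.random.default_rng(0)
q = rng.normal(size=(4, 8)) * 10.0   # 4 queries, head width 8, deliberately large values
k = rng.normal(size=(5, 8)) * 10.0   # 5 keys

reference = attention_weights_reference(q, k)
relaxed = attention_weights_relaxed(q, k)
```

Both functions return the same weights in exact arithmetic; the relaxed form only keeps the intermediate product smaller, which matters mainly in low-precision training.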

Figure 5. Unified bidirectional image–text generation framework based on multimodal Transformer architecture.

Training is performed in two stages. First, token-level training using teacher-forcing optimizes cross-entropy loss for both I2T and T2I tasks. Second, sequence-level training addresses exposure bias. For I2T, self-critical sequence training (SCST) is applied with CIDEr-D rewards. For T2I, CLIP-based [27] image-level loss is introduced to promote semantic consistency between the generated images and the input text. This loss is computed based on the cosine similarity between the CLIP-derived embeddings of the generated image and the corresponding textual description, ensuring that both modalities are aligned within a shared semantic space. The T2I generation utilizes a mask-predict non-autoregressive decoding strategy to enhance inference speed, requiring only four sampling steps.
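The mask-predict decoding loop can be sketched as follows. This is a schematic under stated assumptions, not the model's decoder: `toy_predictor` is a hypothetical stand-in for the Transformer, the sequence length and vocabulary size are arbitrary, and the number of tokens re-masked after each step is assumed to decay linearly, as in the usual mask-predict formulation.

```python
import numpy as np

MASK = -1  # hypothetical id for a masked visual token

def toy_predictor(tokens, vocab_size=16):
    """Stand-in for the Transformer: returns a token id and a confidence per position."""
    rng = np.random.default_rng(int((tokens == MASK).sum()))
    probs = rng.random((len(tokens), vocab_size))
    probs /= probs.sum(axis=1, keepdims=True)
    return probs.argmax(axis=1), probs.max(axis=1)

def mask_predict(predict_fn, length, steps=4):
    """Fill all positions in parallel, then repeatedly re-mask the least confident ones."""
    tokens = np.full(length, MASK)
    confidence = np.zeros(length)
    for step in range(1, steps + 1):
        masked = tokens == MASK
        new_tokens, new_conf = predict_fn(tokens)
        # Only currently masked positions receive new predictions
        tokens = np.where(masked, new_tokens, tokens)
        confidence = np.where(masked, new_conf, confidence)
        # Linearly decaying number of positions to re-mask; zero at the last step
        num_to_mask = int(length * (steps - step) / steps)
        if num_to_mask > 0:
            lowest = np.argsort(confidence)[:num_to_mask]
            tokens[lowest] = MASK
    return tokens

visual_tokens = mask_predict(toy_predictor, length=8, steps=4)
```

With four steps, the predictor is called four times regardless of sequence length, which is the source of the speed advantage over token-by-token autoregressive decoding.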

4. Results

This section presents a comprehensive suite of ablation studies designed to rigorously assess the performance of the proposed methodology. The ensuing analysis offers critical insights into the effectiveness of each component. Furthermore, comparative evaluations are included to benchmark the proposed approach against existing state-of-the-art methods. The proposed and comparative models are trained on a GPU workstation configured with 128.0 GB of RAM, an Intel Xeon E5-2698 CPU running at 3.6 GHz, and an NVIDIA Tesla V100 GPU. All algorithms are implemented in Python 3.10 using the PyTorch framework.

4.1. Results of Vision-Language Pretraining Tasks

This experiment uses the MS COCO [28] and Visual Genome (VG) datasets [29] for pretraining, as most vision-language pretraining tasks are built upon these datasets. To ensure fair evaluation and prevent data leakage, only the training and validation splits of these datasets are utilized during model training. The effectiveness of the model is assessed through two downstream tasks: image–text retrieval, evaluated on the Flickr30K dataset [30], and visual question answering (VQA), evaluated using the VQA 2.0 dataset [31].

4.1.1. Image–Text Retrieval

Image–text retrieval comprises two different tasks: text retrieval (TR), where the goal is to find the most relevant textual description for a given image, and image retrieval (IR), where the goal is to find the most relevant image from a set of candidates for a given textual description. As a core task in vision-language learning, image–text retrieval underpins numerous real-world applications.

In line with common approaches, this experiment constructs mini-batches by sampling t image–text pairs with correct (aligned) annotations. For each image, the remaining texts in the batch are treated as negative (misaligned) samples. The retrieval task is formulated as a binary classification problem, where the model learns to distinguish between aligned and misaligned pairs. To make this prediction, the joint embedding derived from the Transformer’s output tokens is used. Because the image–text retrieval objective closely mirrors the multimodal matching (MTM) task used during pretraining, the pretrained parameters naturally transfer well during fine-tuning.
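The in-batch negative construction described above can be written out directly. The sketch is illustrative only; the function name and the toy batch are hypothetical.

```python
def build_retrieval_pairs(batch):
    """Pair every image with every text in the batch.

    batch: list of (image_id, text) tuples that are correctly aligned.
    Returns (image_id, text, label) triples with label 1 for aligned pairs
    and label 0 for in-batch negatives.
    """
    pairs = []
    for i, (image_id, _) in enumerate(batch):
        for j, (_, text) in enumerate(batch):
            pairs.append((image_id, text, 1 if i == j else 0))
    return pairs

batch = [
    ("img_0", "a worker inspects a conveyor belt"),
    ("img_1", "a robotic arm welds a car frame"),
    ("img_2", "a technician reads a pressure gauge"),
]
pairs = build_retrieval_pairs(batch)
num_positive = sum(label for _, _, label in pairs)
```

A batch of t aligned pairs yields t positives and t(t − 1) negatives, so the classes become increasingly imbalanced as the batch grows.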

The RAdam optimizer is used, with a learning rate of , decay rates of the moment estimates set to and , and batch size set to 32. The model is trained for 30 epochs until convergence, with the learning rate halved empirically at the 5th, 10th, and 20th epochs.
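The step schedule can be expressed as a small function. The base learning rate below is a placeholder, not the value used in the experiments, and the sketch assumes the halving takes effect from the named epoch onward with epochs counted from 1.

```python
def learning_rate(epoch, base_lr, milestones=(5, 10, 20), factor=0.5):
    """Learning rate for a 1-indexed epoch, multiplied by `factor` at each milestone reached."""
    passed = sum(1 for m in milestones if epoch >= m)
    return base_lr * factor ** passed

base_lr = 1e-4   # placeholder value for illustration only
schedule = [learning_rate(epoch, base_lr) for epoch in range(1, 31)]   # 30 epochs
```

In PyTorch, `torch.optim.lr_scheduler.MultiStepLR` with `gamma=0.5` expresses the same kind of schedule on top of `torch.optim.RAdam`.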

VSE++ [32], SCAN [33], Unicoder-VL [34], BLIP-2 [35], -VLM [36] and UNITER [37] algorithms are used for comparative evaluation. The experiments are conducted on the MS COCO [28] and Flickr30k [30] datasets, with the corresponding results presented in Table 3 and Table 4. The results demonstrate that the proposed pretraining approach outperforms previous vision-language pretraining methods on most metrics for both MS COCO and Flickr30k. The performance gains highlight the effectiveness of the approach in learning high-quality image–text embeddings through an end-to-end training framework, while also demonstrating the value of the visual dictionary in capturing semantically rich visual features.

Table 3. Performance evaluation of the model on the image–text retrieval task using the MS COCO dataset. The results in bold indicate the best performance among the compared algorithms for each test set.

Table 4. Performance evaluation of the model on the image–text retrieval task using the Flickr30k dataset. The results in bold indicate the best performance among the compared algorithms for each test set.

4.1.2. Visual Question Answering

Visual question answering (VQA) challenges a model to generate answers based on both an image and a corresponding natural language question. This task is a demanding test of machine intelligence, requiring the model to perform cross-modal reasoning between vision and language in a human-like manner. In this experiment, VQA is modeled as a classification problem, where a multi-layer perceptron is trained to predict categorical answers. Binary cross-entropy loss is used as the optimization objective, with the same optimizer configuration and initial learning rate carried over from the pretraining phase.
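Treating VQA as classification amounts to scoring every candidate answer with an MLP head and applying binary cross-entropy per answer. The sketch below is a minimal illustration: the layer sizes, the two-layer ReLU head, the random weights, and the answer vocabulary of 10 entries are all assumptions.

```python
import numpy as np

def mlp_head(x, w1, b1, w2, b2):
    """Two-layer perceptron mapping joint embeddings to one logit per candidate answer."""
    hidden = np.maximum(0.0, x @ w1 + b1)   # ReLU
    return hidden @ w2 + b2

def bce_with_logits(logits, targets):
    """Numerically stable binary cross-entropy, averaged over all answer slots."""
    per_slot = np.maximum(logits, 0) - logits * targets + np.log1p(np.exp(-np.abs(logits)))
    return float(per_slot.mean())

rng = np.random.default_rng(0)
joint = rng.normal(size=(2, 16))                          # 2 image-question pairs, width 16
w1, b1 = rng.normal(size=(16, 32)) * 0.1, np.zeros(32)
w2, b2 = rng.normal(size=(32, 10)) * 0.1, np.zeros(10)    # 10 candidate answers

targets = np.zeros((2, 10))
targets[0, 3] = 1.0     # correct answer for the first question
targets[1, 7] = 1.0     # correct answer for the second question

logits = mlp_head(joint, w1, b1, w2, b2)
loss = bce_with_logits(logits, targets)
predicted = logits.argmax(axis=1)        # highest-scoring answer per question
```

Binary cross-entropy over answer slots, rather than a softmax over the vocabulary, also allows soft targets when several answers are acceptable for the same question.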

ViLBERT [38], VisualBERT [39], LXMERT [40], BEiT [41], and UNITER [37] algorithms are used for comparative evaluation, with results summarized in Table 5. Among these, LXMERT serves as the most directly comparable baseline, sharing both the backbone architecture and pretraining datasets with the proposed method. The proposed method outperforms LXMERT by 5.84% on the test-dev set and 5.57% on the test-std set. Notably, UNITER also utilizes additional out-of-domain datasets during pretraining. Even under this disadvantaged experimental setup, the proposed method still surpasses UNITER. The strong performance of the proposed method on the VQA task demonstrates the advantages of end-to-end pretraining approaches for visual question answering.

Table 5. Performance evaluation of the model on the visual question answering task using the VQA 2.0 dataset. The results in bold indicate the best performance among the compared algorithms for each test set.

4.2. Results of Multimodal Alignment Tasks

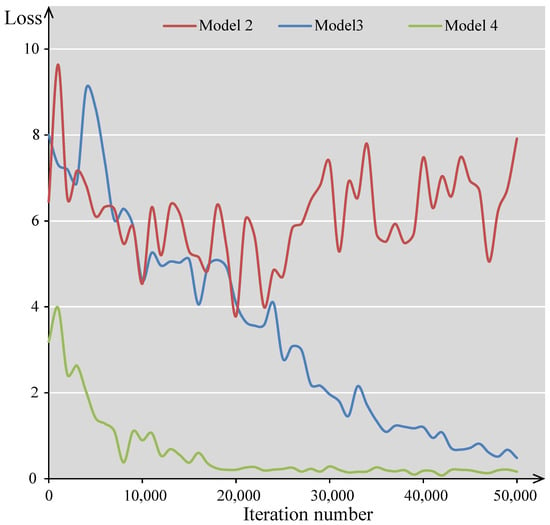

To evaluate the effectiveness of the proposed feature embedding and modality alignment, an ablation study was conducted with four models. Model 1 serves as the baseline, utilizing only textual and layout information and trained with a masked language modeling (MLM) objective. Model 2 builds upon this baseline by introducing visual information, where image patches are linearly projected and incorporated as image embeddings into the model architecture. Model 3 adds the MVM objective to Model 2 for further pretraining, and Model 4 additionally applies the MTM objective.
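The image-embedding step used in Model 2 can be pictured with a small sketch: an image is cut into non-overlapping patches, each patch is flattened, and a single linear layer maps it to an embedding that is appended to the text embeddings. The 8×8 toy image, the patch size, the embedding width, and the random weights below are illustrative assumptions, not the configuration used in the experiments.

```python
import random


def patchify(image, patch):
    # Split an H x W grayscale image (list of rows) into flattened,
    # non-overlapping patch x patch blocks in row-major order.
    height, width = len(image), len(image[0])
    assert height % patch == 0 and width % patch == 0
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            block = [image[r][c]
                     for r in range(top, top + patch)
                     for c in range(left, left + patch)]
            patches.append(block)
    return patches


def project(patches, weights, bias):
    # Linear projection: one embedding vector per flattened patch
    return [[sum(w * v for w, v in zip(row, p)) + b
             for row, b in zip(weights, bias)]
            for p in patches]


rng = random.Random(0)
image = [[rng.random() for _ in range(8)] for _ in range(8)]  # toy 8x8 image
patch_size, embed_dim = 4, 6                                  # assumed sizes
weights = [[rng.uniform(-0.1, 0.1) for _ in range(patch_size * patch_size)]
           for _ in range(embed_dim)]
bias = [0.0] * embed_dim

image_embeddings = project(patchify(image, patch_size), weights, bias)
text_embeddings = [[0.0] * embed_dim for _ in range(3)]  # placeholder text tokens
sequence = text_embeddings + image_embeddings            # combined model input
print(len(image_embeddings), len(sequence))
```

Nothing in this step supervises the visual tokens directly, which is consistent with the instability reported for Model 2 when no visual pretraining objective is present.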

Figure 6 illustrates how the loss of each of the four models evolves during fine-tuning on multimodal datasets. The experiments show that the loss of Model 2 fails to converge. This may be due to the absence of supervisory signals in the image modality, which results in ineffective alignment between visual and linguistic information. This cross-modal discrepancy likely causes the observed training instability.

Figure 6.

Loss convergence curves of the ablation models show that Model 2 fails to converge. By introducing the MVM objective, the loss begins to converge properly. The addition of the MTM objective further reduces the loss.

Table 6 presents the performance of the different models on tasks that are primarily based on structured production data, represented by the FUNSD [42] and CORD [43] datasets, and on image-centric tasks, represented by RVL-CDIP [44] and PubLayNet [45]. The results show that Model 1, despite lacking image embeddings, performs reasonably well on certain tasks. This highlights the significant contribution of the language modality, comprising both text and layout information, to document understanding. However, its overall performance remains suboptimal, and Model 1 is incapable of handling image-centric document analysis tasks, as these require visual modality input.

Incorporating visual embeddings in Model 2 by simply appending linearly projected image patches to the text embeddings leads to unexpected drawbacks. Specifically, it results in performance drops on the CORD and RVL-CDIP datasets and causes training instability (loss divergence) on PubLayNet. These outcomes indicate that, without a dedicated pretraining objective targeting the visual modality, the model struggles to learn meaningful visual features. To address this, the masked visual modeling (MVM) objective is introduced, which randomly masks regions of the image input and requires the model to reconstruct them. This promotes better visual representation learning and preserves visual information all the way to the final layer of the model.

A comparison between Model 3 and Model 2 demonstrates that MVM improves performance on both CORD and RVL-CDIP. Since the use of linear image embeddings alone already enhances performance on FUNSD, MVM does not provide further gains on that dataset. A comparison between Model 3 and Model 4 shows that the MTM objective leads to improvements across all tasks and also reduces the loss on the visual task of PubLayNet. These findings demonstrate that MTM not only strengthens visual representation learning but also enhances the ability to capture cross-modal interactions.
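The masking-and-reconstruction idea behind the MVM objective can be sketched as follows. This is a minimal illustration under stated assumptions: patches are masked by zeroing them out, the reconstruction target is the raw patch values with a mean squared error loss, and `toy_model` is a hypothetical stand-in for the real network. The actual masking ratio and reconstruction target are not specified in this section.

```python
import random


def mask_patches(patches, mask_ratio, rng):
    # Choose a random subset of patch indices and zero those patches out
    num_masked = max(1, int(round(mask_ratio * len(patches))))
    masked_idx = sorted(rng.sample(range(len(patches)), num_masked))
    corrupted = [[0.0] * len(p) if i in masked_idx else list(p)
                 for i, p in enumerate(patches)]
    return corrupted, masked_idx


def reconstruction_loss(predicted, original, masked_idx):
    # Mean squared error computed over the masked patches only
    total, count = 0.0, 0
    for i in masked_idx:
        for p, o in zip(predicted[i], original[i]):
            total += (p - o) ** 2
            count += 1
    return total / count


def toy_model(corrupted, masked_idx):
    # Hypothetical stand-in for the network: predicts the mean of the
    # visible patches at every position
    visible = [p for i, p in enumerate(corrupted) if i not in masked_idx]
    dim = len(corrupted[0])
    mean = [sum(p[d] for p in visible) / len(visible) for d in range(dim)]
    return [list(mean) for _ in corrupted]


rng = random.Random(0)
patches = [[rng.random() for _ in range(4)] for _ in range(10)]  # toy patch features
corrupted, masked_idx = mask_patches(patches, mask_ratio=0.4, rng=rng)
predicted = toy_model(corrupted, masked_idx)
loss = reconstruction_loss(predicted, patches, masked_idx)
print(f"masked {len(masked_idx)} of {len(patches)} patches, loss={loss:.4f}")
```

Because the loss is computed only at masked positions, the model can lower it only by inferring the missing visual content from the surrounding patches and the accompanying text, which is the supervisory signal that Model 2 lacks.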

Table 6.

Performance metrics of multimodal alignment tasks. The results in bold indicate the best performance among the compared algorithms for each test set.

4.3. Results of Bidirectional Image–Text Generation Model

This experiment evaluates the model on the MS COCO dataset, where each image is annotated with five image captions. The model is trained on the training set and evaluated on the validation set, with 25,000 images randomly selected for testing. For the image-to-text generation task, we follow the standard practice in most image captioning studies and evaluate our model on the Karpathy test split, a subset of the validation set containing 2500 images. The model is initialized from the pretrained X-LXMERT model [46] to enable direct comparison. This model adopts the LXMERT architecture [40] and is pretrained on the MS COCO Captions [28], Visual Genome [29], and VQA [47] datasets.

To better assess the quality of generated images, a human evaluation is conducted for comparison with existing works, and the results are visualized in Figure 4, Figure 5, Figure 6 and Figure 7. Specifically, we compare our model with two state-of-the-art publicly available models: the GAN-based DM-GAN [48] and the Transformer-based X-LXMERT [46], both of which represent strong baselines. We also include a variant of our model without CLIP loss (denoted as “No CLIP”) in the comparison. We randomly sample 300 image captions from the MS COCO test set and use each model to generate images based on those captions. During evaluation, the images generated by our model and by the baselines for the same caption are presented in random order to ten English-proficient volunteers, each with over ten years of English learning experience. The volunteers are asked to judge which image (1) looks more realistic and (2) better matches the semantics of the original image caption.

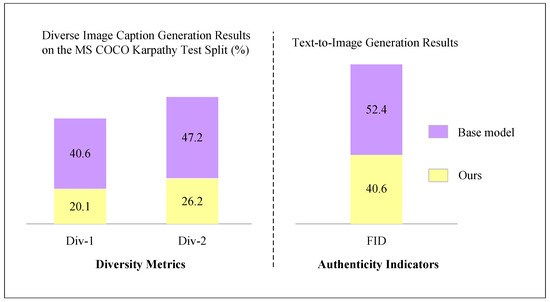

Figure 7.

Comparison of the performance of models in diverse image caption generation and content-rich image generation.

To evaluate the realism of the generated images, we adopt the Fréchet inception distance (FID) [49], where a lower score indicates a closer match between the distribution of generated images and that of real images. For the generated image captions, we use n-gram diversity (Div-n) [50] to measure diversity, and CIDEr-D [51] to assess accuracy. This experiment is conducted on the Karpathy split [52] of the MS COCO Captions dataset [28], where each image is annotated with five captions, aligning well with the objective of our method. As illustrated in Figure 7 and Table 7, the proposed training strategy proves effective in producing diverse image captions. The caption sets generated by our method show significant improvements in diversity, with absolute gains of 51.7% in Div-1 and 74.7% in Div-2 compared to baseline methods. Although the captions generated by our method are more diverse and describe the image from different perspectives, they are slightly less accurate in terms of CIDEr-D compared to those generated by baseline models.
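To make the diversity metric concrete, the sketch below computes Div-n under one common definition: the number of distinct n-grams across the set of captions generated for an image, divided by the total number of words in that set. The whitespace tokenization and the example captions are assumptions for illustration; the exact procedure in [50] may differ in details such as tokenization or averaging over images.

```python
def ngrams(tokens, n):
    # All contiguous n-grams of a token list
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def div_n(captions, n):
    # Distinct n-grams across the caption set, divided by total word count
    tokenized = [c.lower().split() for c in captions]
    total_words = sum(len(t) for t in tokenized)
    if total_words == 0:
        return 0.0
    distinct = set()
    for tokens in tokenized:
        distinct.update(ngrams(tokens, n))
    return len(distinct) / total_words


# Hypothetical caption set for a single image
captions = [
    "a man rides a horse",
    "a man rides a brown horse",
    "a person on a horse in a field",
]
print(f"Div-1={div_n(captions, 1):.3f}, Div-2={div_n(captions, 2):.3f}")
```

A caption set that repeats the same wording scores low, while a set that describes the image from different perspectives scores higher, which is why Div-n is reported alongside an accuracy metric such as CIDEr-D rather than in place of it.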

Table 7.

Diverse image caption generation results of the model on the MS COCO Karpathy test split. The results in bold indicate the best performance among the compared algorithms for each test set.

For the text-to-image generation task, incorporating diverse image descriptions improves the FID score, reducing it from 52.4 to 40.6. These results quantitatively confirm the capability of the system to generate diverse captions and content-rich images. In summary, the bidirectional image–text framework evaluated in this subsection can generate both multiple diverse image captions and semantically rich images. Built on a Transformer-based unified network, the approach considers the relationships among multiple input captions, effectively enhancing the diversity of generated captions. The effectiveness of the model is validated both quantitatively and qualitatively on the MS COCO Captions dataset.

5. Discussion

The proposed multimodal large language model-based intelligent perception and decision-making framework demonstrates substantial advancement in addressing the complex challenges associated with heterogeneous industrial data fusion. By effectively integrating visual information, production signals, and textual descriptions, our approach significantly enhances the capabilities of intelligent systems in smart manufacturing contexts. Through comprehensive evaluations, we have validated the robust performance of the model across diverse tasks including image–text retrieval, visual question answering, and multimodal alignment, thereby affirming its practical applicability and theoretical contribution to industrial automation.

Our experimental results indicate that the end-to-end pretraining strategy, complemented by semantic tokenization and unified bidirectional generation, effectively addresses the inherent challenges of modality disparity and representational gaps. Notably, our model consistently outperformed baseline and state-of-the-art methods across key evaluation metrics. In image–text retrieval tasks, our model exhibited superior precision, highlighting the effectiveness of our multimodal matching loss and masked modality prediction strategies in learning rich, discriminative embeddings. Similarly, substantial performance gains were observed in visual question answering tasks, demonstrating enhanced cross-modal reasoning capabilities facilitated by our proposed multimodal alignment framework.

The ablation studies provided valuable insights into the contributions of individual model components. The introduction of masked visual modeling was critical in stabilizing training and enabling effective visual information integration. Moreover, the multimodal matching objective further refined the ability of the model to align and exploit multimodal information, leading to noticeable improvements in downstream task performance. This layered approach not only confirms the importance of each pretraining task but also underscores the necessity of a structured and incremental training regime for optimal performance.

The unified bidirectional image–text generation framework introduced in this study significantly advances multimodal generation capabilities within industrial settings. By employing a shared Transformer architecture, our model successfully achieves seamless integration of image-to-text and text-to-image generation processes. The innovative use of a semantic dictionary for dynamic embedding updates further enables the model to bridge the semantic gap between modalities effectively, fostering a deeper and more nuanced understanding of complex industrial scenarios. Additionally, the incorporation of CLIP-based loss for text-to-image tasks substantially enhanced semantic consistency between generated images and their textual descriptions, indicating a promising direction for future multimodal generative models.

Despite these advances, our study has several limitations that point to areas for future research. First, the reliance on pre-existing datasets such as MS COCO and Visual Genome may limit the generalizability of the model to highly specialized industrial datasets, which often exhibit unique and nuanced characteristics. Thus, developing dedicated large-scale industrial multimodal datasets could significantly enhance future model robustness and adaptability. Additionally, while our current approach emphasizes feature alignment and multimodal fusion, exploring advanced model interpretability techniques could further elucidate how multimodal decisions are made, enhancing user trust and facilitating practical deployment.

Finally, future research should explore extending the capabilities of models to incorporate real-time data streaming and dynamic adaptability within actual industrial production environments. Addressing scalability, real-time inference, and efficient incremental learning will be essential to ensuring the readiness of the framework for widespread industrial adoption. By continuously refining these aspects, the proposed framework has the potential to significantly transform intelligent manufacturing, enabling more autonomous, efficient, and adaptive industrial processes.

6. Conclusions

This study presents a comprehensive multimodal intelligent perception and decision-making framework specifically designed for smart manufacturing applications. By integrating visual imagery, production signals, and textual data into a unified representation learning structure, our proposed model substantially improves the accuracy and effectiveness of industrial decision-making processes. The empirical evaluation clearly demonstrates the superior performance of our approach over existing methodologies, highlighting the efficacy of our semantic tokenization mechanism, modality alignment strategies, and unified bidirectional generation framework.

The insights gained from this research not only provide a theoretical foundation for multimodal fusion but also offer practical guidelines for the deployment of intelligent industrial systems. Nonetheless, further exploration into dedicated industrial datasets, model interpretability, real-time adaptability, and scalable implementation remains crucial. Advancing these frontiers would enhance the applicability and resilience of multimodal intelligent systems, fostering progress toward more autonomous, efficient, and sophisticated smart manufacturing environments.

Author Contributions

Conceptualization, T.W. and D.L.; methodology, T.W.; software, D.J.; validation, T.W., B.Z. and D.J.; formal analysis, B.Z.; investigation, D.J.; data curation, D.J.; writing—original draft preparation, T.W.; writing—review and editing, D.J.; visualization, B.Z.; supervision, D.L.; funding acquisition, D.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China (2023YFB3107700), National Industrial Internet Innovation and Development Program Project: “Development of Endogenous Security Industrial Switch Equipment”.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used in this study are openly available. The MS COCO dataset [28] and the Visual Genome dataset [29] used for pretraining tasks can be accessed through their respective official repositories. Additionally, the Flickr30k dataset [30] and the VQA 2.0 dataset [31] utilized for evaluation purposes are also publicly available. All supporting data can be obtained from the following sources: MS COCO: https://cocodataset.org/ (accessed on 1 May 2025). Visual Genome: https://homes.cs.washington.edu/~ranjay/visualgenome/api.html (accessed on 1 May 2025). Flickr30k: https://www.kaggle.com/datasets/hsankesara/flickr-image-dataset/data (accessed on 1 May 2025). VQA 2.0: https://visualqa.org/ (accessed on 1 May 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Alsaif, K.M.; Albeshri, A.A.; Khemakhem, M.A.; Eassa, F.E. Multimodal Large Language Model-Based Fault Detection and Diagnosis in Context of Industry 4.0. Electronics 2024, 13, 4912.

- Bai, T.; Liang, H.; Wan, B.; Xu, Y.; Li, X.; Li, S.; Yang, L.; Li, B.; Wang, Y.; Cui, B.; et al. A Survey of Multimodal Large Language Model from A Data-centric Perspective. arXiv 2024, arXiv:2405.16640.

- DeepSeek-AI; Guo, D.; Yang, D.; Zhang, H.; Song, J.; Zhang, R.; Xu, R.; Zhu, Q.; Ma, S.; Wang, P.; et al. DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning. arXiv 2025, arXiv:2501.12948.

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and Efficient Foundation Language Models. arXiv 2023, arXiv:2302.13971.

- OpenAI; Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. GPT-4 Technical Report. arXiv 2024, arXiv:2303.08774.

- DeepSeek-AI; Liu, A.; Feng, B.; Xue, B.; Wang, B.; Wu, B.; Lu, C.; Zhao, C.; Deng, C.; Zhang, C.; et al. DeepSeek-V3 Technical Report. arXiv 2025, arXiv:2412.19437.

- Jiang, X.; Li, J.; Deng, H.; Liu, Y.; Gao, B.B.; Zhou, Y.; Li, J.; Wang, C.; Zheng, F. MMAD: A Comprehensive Benchmark for Multimodal Large Language Models in Industrial Anomaly Detection. arXiv 2025, arXiv:2410.09453.

- Jiang, Y.; Lu, X.; Jin, Q.; Sun, Q.; Wu, H.; Zhuo, C. FabGPT: An Efficient Large Multimodal Model for Complex Wafer Defect Knowledge Queries. In Proceedings of the ACM/IEEE International Conference On Computer Aided Design (ICCAD), Newark, NJ, USA, 27–31 October 2024.

- Fei, N.; Lu, Z.; Gao, Y.; Yang, G.; Huo, Y.; Wen, J.; Lu, H.; Song, R.; Gao, X.; Xiang, T.; et al. Towards artificial general intelligence via a multimodal foundation model. Nat. Commun. 2022, 13, 3094.

- Geipel, M.M. Towards a Benchmark of Multimodal Large Language Models for Industrial Engineering. In Proceedings of the 2024 IEEE 29th International Conference on Emerging Technologies and Factory Automation (ETFA), Padova, Italy, 10–13 September 2024; pp. 1–4.

- Huang, D.; Yan, C.; Li, Q.; Peng, X. From Large Language Models to Large Multimodal Models: A Literature Review. Appl. Sci. 2024, 14, 5068.

- Liang, Z.; Xu, Y.; Hong, Y.; Shang, P.; Wang, Q.; Fu, Q.; Liu, K. A Survey of Multimodel Large Language Models. In Proceedings of the 3rd International Conference on Computer, Artificial Intelligence and Control Engineering (CAICE ’24), New York, NY, USA, 26–28 January 2024; pp. 405–409.

- Lin, Y.; Chang, Y.; Tong, X.; Yu, J.; Liotta, A.; Huang, G.; Song, W.; Zeng, D.; Wu, Z.; Wang, Y.; et al. A Survey on RGB, 3D, and Multimodal Approaches for Unsupervised Industrial Image Anomaly Detection. arXiv 2025, arXiv:2410.21982.

- Liu, H.; Li, C.; Wu, Q.; Lee, Y.J. Visual Instruction Tuning. In Proceedings of the 37th Conference on Advances in Neural Information Processing Systems 36 (NeurIPS 2023), New Orleans, LA, USA, 10–16 December 2023; Oh, A., Neumann, T., Globerson, A., Saenko, K., Hardt, M., Levine, S., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2023.

- Liu, H.; Li, C.; Li, Y.; Lee, Y.J. Improved Baselines with Visual Instruction Tuning. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–18 June 2024; pp. 26286–26296.

- Liu, F.; Zhu, T.; Wu, X.; Yang, B.; You, C.; Wang, C.; Lu, L.; Liu, Z.; Zheng, Y.; Sun, X.; et al. A medical multimodal large language model for future pandemics. NPJ Digit. Med. 2023, 6, 226.

- Zhang, Y.; Zhang, R.; Gu, J.; Zhou, Y.; Lipka, N.; Yang, D.; Sun, T. LLaVAR: Enhanced Visual Instruction Tuning for Text-Rich Image Understanding. arXiv 2024, arXiv:2306.17107.

- Wang, Y.; Yang, M.; Li, Y.; Xu, Z.; Wang, J.; Fang, X. A Multi-Input and Multi-Task Convolutional Neural Network for Fault Diagnosis Based on Bearing Vibration Signal. IEEE Sens. J. 2021, 21, 10946–10956.

- Ye, Z.; Yu, J. Deep morphological convolutional network for feature learning of vibration signals and its applications to gearbox fault diagnosis. Mech. Syst. Signal Process. 2021, 161, 107984.

- Ribeiro Junior, R.F.; Areias, I.A.d.S.; Campos, M.M.; Teixeira, C.E.; Silva, L.E.B.d.; Gomes, G.F. Fault detection and diagnosis in electric motors using 1d convolutional neural networks with multi-channel vibration signals. Measurement 2022, 190, 110759.

- Li, X.; Li, J.; Qu, Y.; He, D. Semi-supervised gear fault diagnosis using raw vibration signal based on deep learning. Chin. J. Aeronaut. 2020, 33, 418–426.

- Jeong, J.; Zou, Y.; Kim, T.; Zhang, D.; Ravichandran, A.; Dabeer, O. WinCLIP: Zero-/Few-Shot Anomaly Classification and Segmentation. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 19606–19616.

- Zhou, Q.; Pang, G.; Tian, Y.; He, S.; Chen, J. AnomalyCLIP: Object-agnostic Prompt Learning for Zero-shot Anomaly Detection. arXiv 2024, arXiv:2310.18961.

- Gu, Z.; Zhu, B.; Zhu, G.; Chen, Y.; Tang, M.; Wang, J. AnomalyGPT: Detecting Industrial Anomalies Using Large Vision-Language Models. In Proceedings of the 38th AAAI Conference on Artificial Intelligence/36th Conference on Innovative Applications of Artificial Intelligence/14th Symposium on Educational Advances in Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Wooldridge, M., Dy, J., Natarajan, S., Eds.; Association for the Advancement of Artificial Intelligence: Washington, DC, USA, 2024; Volume 38, No. 3, pp. 1932–1940.

- Wang, C.Y.; Mark Liao, H.Y.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A New Backbone that can Enhance Learning Capability of CNN. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 1571–1580.

- Ding, M.; Yang, Z.; Hong, W.; Zheng, W.; Zhou, C.; Yin, D.; Lin, J.; Zou, X.; Shao, Z.; Yang, H.; et al. CogView: Mastering Text-to-Image Generation via Transformers. In Proceedings of the 35th Conference on Advances in Neural Information Processing Systems 34 (NeurIPS 2021), Virtual, 6–14 December 2021; Ranzato, M., Beygelzimer, A., Dauphin, Y., Liang, P., Vaughan, J., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2021; Volume 34.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the International Conference on Machine Learning (ICML), Virtual, 18–24 July 2021; Meila, M., Zhang, T., Eds.; PMLR: Brookline, MA, USA, 2021; Volume 139.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollar, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the 13th European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Lecture Notes in Computer Science. Volume 8693, pp. 740–755.

- Krishna, R.; Zhu, Y.; Groth, O.; Johnson, J.; Hata, K.; Kravitz, J.; Chen, S.; Kalantidis, Y.; Li, L.J.; Shamma, D.A.; et al. Visual Genome: Connecting Language and Vision Using Crowdsourced Dense Image Annotations. Int. J. Comput. Vis. 2017, 123, 32–73.

- Plummer, B.A.; Wang, L.; Cervantes, C.M.; Caicedo, J.C.; Hockenmaier, J.; Lazebnik, S. Flickr30k Entities: Collecting Region-to-Phrase Correspondences for Richer Image-to-Sentence Models. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 2641–2649.

- Goyal, Y.; Khot, T.; Summers-Stay, D.; Batra, D.; Parikh, D. Making the V in VQA Matter: Elevating the Role of Image Understanding in Visual Question Answering. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6325–6334.

- Faghri, F.; Fleet, D.J.; Kiros, J.R.; Fidler, S. VSE++: Improving Visual-Semantic Embeddings with Hard Negatives. arXiv 2018, arXiv:1707.05612.

- Lee, K.H.; Chen, X.; Hua, G.; Hu, H.; He, X. Stacked Cross Attention for Image-Text Matching. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Lecture Notes in Computer Science. Springer: Cham, Switzerland, 2018; Volume 11208, pp. 212–228.

- Li, G.; Duan, N.; Fang, Y.; Gong, M.; Jiang, D. Unicoder-VL: A Universal Encoder for Vision and Language by Cross-Modal Pre-Training. In Proceedings of the 34th AAAI Conference on Artificial Intelligence/32nd Innovative Applications of Artificial Intelligence Conference/10th AAAI Symposium on Educational Advances in Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Association for the Advancement of Artificial Intelligence: Washington, DC, USA, 2020; Volume 34, pp. 11336–11344.

- Li, J.; Li, D.; Savarese, S.; Hoi, S. BLIP-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. In Proceedings of the International Conference on Machine Learning, PMLR, Honolulu, HI, USA, 23–29 July 2023; pp. 19730–19742.

- Zeng, Y.; Zhang, X.; Li, H.; Wang, J.; Zhang, J.; Zhou, W. X2-VLM: All-in-One Pre-Trained Model for Vision-Language Tasks. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 3156–3168.

- Chen, Y.C.; Li, L.; Yu, L.; El Kholy, A.; Ahmed, F.; Gan, Z.; Cheng, Y.; Liu, J. UNITER: UNiversal Image-TExt Representation Learning. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; pp. 104–120.

- Lu, J.; Batra, D.; Parikh, D.; Lee, S. ViLBERT: Pretraining Task-Agnostic Visiolinguistic Representations for Vision-and-Language Tasks. In Proceedings of the 33rd Conference on the Advances in Neural Information Processing Systems 32 (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alche Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2019; Volume 32.

- Li, L.H.; Yatskar, M.; Yin, D.; Hsieh, C.J.; Chang, K.W. VisualBERT: A Simple and Performant Baseline for Vision and Language. arXiv 2019, arXiv:1908.03557.

- Tan, H.; Bansal, M. LXMERT: Learning Cross-Modality Encoder Representations from Transformers. arXiv 2019, arXiv:1908.07490.

- Bao, H.; Dong, L.; Piao, S.; Wei, F. BEiT: BERT Pre-Training of Image Transformers. arXiv 2021, arXiv:2106.08254.

- Jaume, G.; Ekenel, H.K.; Thiran, J.P. FUNSD: A Dataset for Form Understanding in Noisy Scanned Documents. In Proceedings of the 15th IAPR 2019 International Conference on Document Analysis and Recognition Workshops (ICDARW) and 2nd International Workshop on Open Services and Tools for Document Analysis (OST), Sydney, Australia, 21–25 September 2019; IAPR: Groningen, The Netherlands, 2019; Volume 2, pp. 1–6.

- Park, S.; Shin, S.; Lee, B.; Lee, J.; Surh, J.; Seo, M.; Lee, H. CORD: A Consolidated Receipt Dataset for Post-OCR Parsing. In Proceedings of the Workshop on Document Intelligence at NeurIPS 2019, Vancouver, BC, Canada, 14 December 2019.

- Harley, A.W.; Ufkes, A.; Derpanis, K.G. Evaluation of deep convolutional nets for document image classification and retrieval. In Proceedings of the 2015 13th International Conference on Document Analysis and Recognition (ICDAR), Tunis, Tunisia, 23–26 August 2015; pp. 991–995.

- Zhong, X.; Tang, J.; Jimeno Yepes, A. PubLayNet: Largest Dataset Ever for Document Layout Analysis. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 1015–1022.

- Cho, J.; Lu, J.; Schwenk, D.; Hajishirzi, H.; Kembhavi, A. X-LXMERT: Paint, Caption and Answer Questions with Multi-Modal Transformers. arXiv 2020, arXiv:2009.11278.

- Antol, S.; Agrawal, A.; Lu, J.; Mitchell, M.; Batra, D.; Zitnick, C.L.; Parikh, D. VQA: Visual Question Answering. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 2425–2433.

- Zhu, M.; Pan, P.; Chen, W.; Yang, Y. DM-GAN: Dynamic Memory Generative Adversarial Networks for Text-to-Image Synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2019), Long Beach, CA, USA, 16–20 June 2019; IEEE Computer Society: Washington, DC, USA, 2019; pp. 5795–5803.

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium. In Proceedings of the 31st Annual Conference on Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

- Shetty, R.; Rohrbach, M.; Hendricks, L.A.; Fritz, M.; Schiele, B. Speaking the Same Language: Matching Machine to Human Captions by Adversarial Training. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4155–4164.

- Vedantam, R.; Zitnick, C.L.; Parikh, D. CIDEr: Consensus-based image description evaluation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 4566–4575.

- Karpathy, A.; Fei-Fei, L. Deep Visual-Semantic Alignments for Generating Image Descriptions. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 664–676.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).