Abstract

Camouflaged object detection (COD) is difficult because targets closely resemble their surroundings and because their features vary across scales. Current methods struggle with feature distraction, long-range dependency modeling, multiscale detail fusion, and boundary extraction. We therefore propose ATDMNet, a hybrid architecture that combines a CNN and a transformer within a multi-stage feature extraction framework. ATDMNet employs Res2Net as the foundational encoder and incorporates two essential components: multi-head agent attention (MHA) and top-k dynamic mask (TDM). MHA improves local feature sensitivity and long-range dependency modeling by incorporating agent nodes and positional biases, whereas TDM strengthens attention with top-k operations and multiscale dynamic masks. The decoding phase uses bilinear upsampling and high-level semantic guidance to refine low-level features, yielding precise segmentation. Deep supervision and a hybrid loss function further improve performance. Experiments on three COD datasets (NC4K, COD10K, CAMO) demonstrate that ATDMNet sets a new state of the art in both accuracy and efficiency.

1. Introduction

Camouflaged object detection (COD) [1] is a long-standing and challenging problem in computer vision that focuses on identifying objects that blend into complex scenes. These targets are typically concealed through variations in color, shape, texture, or illumination, which makes them difficult to identify with conventional visual inspection systems. Accurately identifying such targets is crucial for various practical applications, including target recognition in military reconnaissance, monitoring of concealed animals in wildlife protection, and detection of illegal intrusions in security surveillance systems.

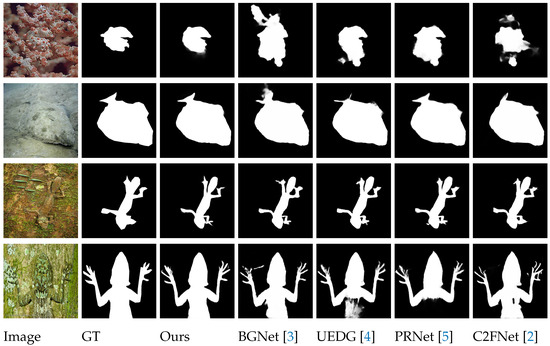

Camouflaged objects typically exhibit complicated feature spaces and multiscale information, which hampers the ability of existing methods to extract and represent these characteristics effectively and thus restricts detection accuracy. Despite breakthroughs in CNN-based COD, the following intrinsic limitations persist: (1) constrained receptive field for global context: the local nature of convolution limits the modeling of long-range dependencies; (2) inadequate multiscale feature fusion: simple fusion techniques frequently produce redundant or conflicting feature interactions; (3) boundary ambiguity: repeated pooling operations reduce spatial resolution, compromising the precision of edge delineation. Even SOTA methods [2] fall short of accurately segmenting camouflaged targets in complex scenarios. The challenge illustrated in Figure 1 stems mainly from the model’s insufficient selectivity in processing scale-related feature signals, which impedes the distinction between camouflaged objects and closely similar backgrounds. Mitigating this issue therefore calls for better multiscale feature fusion and local feature attention.

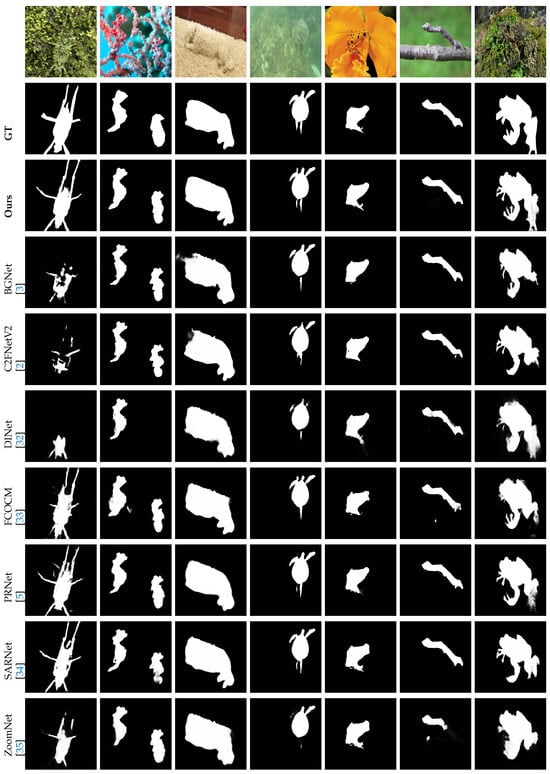

Figure 1.

A visual comparison of our ATDMNet with contemporary SOTA methods [2,3,4,5], such as BGNet [3] and PRNet [5], for COD. Certain approaches segment the region inadequately, failing to reliably capture and predict the target objects.

To address these problems, we propose a new camouflaged object detection approach, ATDMNet, that improves detection performance while preserving computational economy. ATDMNet attains SOTA results in the field, surpassing rival methods in both parameter efficiency and computational cost. Its network architecture follows an end-to-end design structured into three primary stages: feature extraction, information integration, and guided refinement.

During the feature extraction phase, ATDMNet utilizes Res2Net as the foundational encoder. The input image passes through convolutional layers, batch normalization, activation functions, and max-pooling layers, after which residual block layers extract multi-level semantic features. The features are further refined by GFM layers, merging the shallow-feature representation strengths of convolutional neural networks (CNNs) with the deep semantic modeling capabilities of transformers. This hybrid method markedly improves the model’s target localization.

During the information integration phase, the multi-head agent (MHA) module establishes a bidirectional attention mechanism with agent nodes and positional biases. The top-k dynamic mask (TDM) module is applied alongside it to create a multi-tiered attention mechanism. TDM uses convolution to produce the query, key, and value representations, incorporating multiscale global information and strengthening the model’s ability to represent and integrate features.

During the guided refinement phase, the decoder incrementally restores the feature map resolution via an attention mechanism in conjunction with bilinear upsampling. It generates a single-channel output and exploits high-level semantic information to enhance low-level features, which further strengthens feature refinement. A deep supervision technique and a hybrid loss function separately direct the learning process at each level of the decoder, markedly improving the model’s recognition and generalization ability. Collectively, these innovations enable ATDMNet to identify and segment camouflaged targets precisely, setting a new state of the art in the domain.

To investigate the viability of the proposed ATDMNet methodology, we execute extensive and thorough experiments on three prominent COD benchmark datasets, including NC4K [6], COD10K [7], and CAMO [8]. The experimental findings indicate a weighted F-measure of 0.804 and a mean absolute error (MAE) of 0.021, surpassing the second-best model, PRNet [5], which attains values of 0.799 and 0.023, respectively, on the COD10K-test dataset. ATDMNet reaches new SOTA performances across all benchmarks. This study’s principal contributions are as follows:

(1) We present a novel ATDMNet architecture that effectively blends feature information across several scales and adapts to the feature properties of camouflaged objects at various resolutions. This capacity substantially improves overall detection performance, enabling more precise identification in complex scenes.

(2) The multi-head agent (MHA) module and the top-k dynamic mask (TDM) module are introduced to improve the model’s responsiveness to local features. These modules capture long-range dependencies and enhance the global propagation of information, strengthening the model’s ability to detect camouflaged objects in various contexts.

(3) Through comprehensive quantitative and qualitative experiments, we confirm that ATDMNet is proficient at modeling scale-related information and capturing boundary details. The findings suggest that ATDMNet attains high accuracy and reliability in camouflaged object detection, setting a new state of the art in the domain.

2. Related Work

2.1. Camouflaged Object Detection

Conventional COD methods [9] typically rely on saliency detection and segmentation algorithms to identify distinctive characteristics. In complex camouflage scenes, however, these techniques frequently perform poorly because they cannot precisely capture the subtle differences between concealed objects and their surrounding background. Consequently, such techniques often require supplementary features or auxiliary data to improve detection precision and robustness in more demanding settings.

In recent years, convolutional neural network (CNN)-based strategies for COD have substantially enhanced detection accuracy. These methodologies can be classified into three primary strategies: (a) boundary guidance: BGNet [3] investigates supplementary edge semantic information pertinent to objects and delineates prominent structural characteristics of the target. BSA-Net [10] utilizes a dual-stream separation attention module to distinguish between foreground and background, integrating this with a boundary guidance module to augment boundary feature comprehension, thus enhancing detection efficacy. (b) Frequency domain information enhancement: Zhong et al. [11] incorporated frequency domain information into CNNs by employing a multi-head self-attention mechanism and high-order relationship modules to retrieve and incorporate cross-domain features, thereby augmenting the model’s capacity to detect subtle camouflage patterns. FSEL [12] introduces an entanglement transformer block (ETB) and a dual-domain inverse resolver, adeptly capturing correlations across several frequency bands and synthesizing cross-domain features for enhanced detection. FPNet [13] employs a dual-stage frequency-range-sensing technique that enhances detection efficacy via the coarse and fine localization of camouflaged targets. FGSA-Net [14] transfers features into the frequency domain and dynamically modulates the intensity of frequency components by organizing and interacting with them within non-overlapping circular sections of the spectrogram. (c) Utilization of contextual information: Spider [15], a cohesive parameter-sharing model, evolves and differentiates robust context dependence (CD) across multiple fields, combining one-shot training and image-mask group prompts to strengthen the model’s efficacy. 
C2F-Net [2] applies cross-layer context-aware features, constructs a cascaded attention-driven cross-layer fusion module (ACFM) and a dual-branch global context module (DGCM), and computes attention coefficients for multi-level features to enhance and amalgamate salient features.

2.2. Transformers in Computer Vision

Since its introduction, the transformer architecture has swiftly achieved breakthroughs in natural language processing (NLP) [16]. More recently, it has been adapted for computer vision. In contrast to typical CNNs, transformers use self-attention to capture dependencies across the entire input. This attribute makes them suitable for diverse visual tasks, and they have been extensively applied to image classification, semantic segmentation, object detection, and salient object detection.

Transformer-based models are increasingly prevalent in the COD domain. HGINet [17] employs the Hierarchical Graph Interactive Transformer (HGIT) to facilitate bidirectional communication across hierarchical features. FSPNet [18] adopts non-local techniques to augment the local representation of transformers and explores the interaction of adjacent tokens through graph-based high-order relationships. CamoFormer [19] presents a simple masked separable attention (MSA) mechanism, which splits multi-head self-attention into three components, each employing a distinct masking strategy. UGTR [20] combines probabilistic modeling with the transformer architecture and uses uncertainty to support reasoning in ambiguous situations.

Our ATDMNet is built upon the prevailing transformer structure. Rather than focusing on the creation of an entirely new architecture, our objective is to investigate alternative ways to maximize the efficacy of self-attention mechanisms in COD tasks. We feed multi-level semantic features into the multi-head agent attention modules and emphasize features at various scales through the integration of top-k operations and multiscale attention masks. This design distinguishes our study from other transformer-based COD methods.

3. Proposed ATDMNet

3.1. Overall Architecture

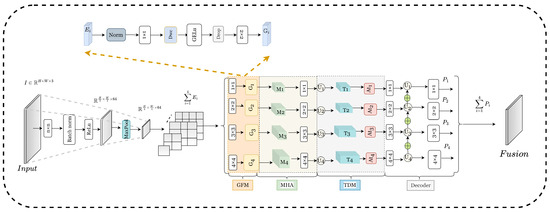

We adopt an encoder–decoder architecture to construct our ATDMNet. ATDMNet is an end-to-end trainable framework, as seen in Figure 2.

Figure 2.

ATDMNet addresses challenges in binary segmentation. The GFM and MHA modules integrate multi-level features retrieved by Res2Net across many scales within the encoder. Top-k dynamic mask (TDM) is employed to enhance feature representation. The decoder derives the single-channel output using upsampling and convolutional layers to reconstruct the feature map. The structures of MHA and TDM are presented in Figure 3 and Figure 4.

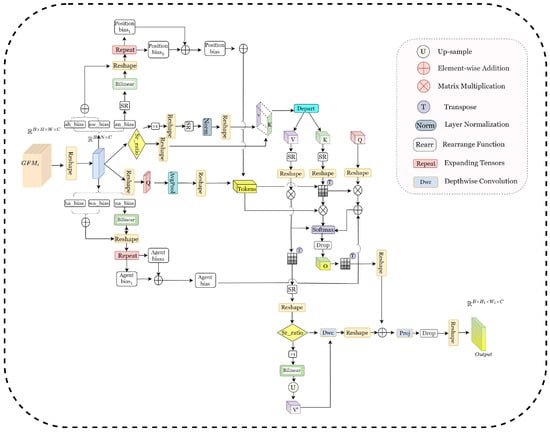

Figure 3.

Multi-head agent (MHA).

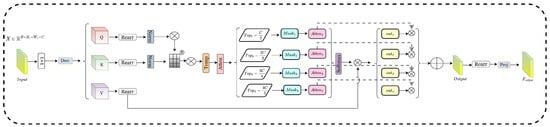

Figure 4.

Top-k dynamic mask (TDM).

Encoder: We rely on Res2Net as the encoder by default, as the visual transformer has demonstrated strong effectiveness in binary segmentation tasks. The input image is first processed through several convolutional layers with batch normalization and activation functions. A max-pooling layer then halves the spatial resolution of the resulting feature map. This is followed by four residual blocks, each generating its own output feature map.

We feed the multi-level semantic features from the encoder into the GFM. The GFM model is utilized to achieve an improved equilibrium between efficiency and performance by executing convolutions and feature transformation:

The features obtained by GFM transformation are transferred to MHA together for multiscale feature fusion to generate . The process is defined as follows:

Subsequently, within the context of the preceding feature fusion, varied attention masks are applied to spotlight the elements of diverse scales highlighted by the TDM module, hence augmenting the model’s representational capacity and generalization efficacy. This procedure is delineated as follows:

The acquired features are input into the decoder to produce a feature fusion map .

3.2. Agent Top-k Dynamic Mask (ATDM)

Acquiring scale information across multiple feature regions is essential for the detection of camouflaged objects, so augmenting feature representation and emphasizing local features are key to higher detection accuracy. We therefore present a method that integrates the conventional multi-head self-attention mechanism with agent tokens. Its two components, Agent Top-k Selection and Dynamic Masking, enhance the model’s capacity to handle long-range dependencies while preserving sensitivity to intricate local details.

In our method, distinct attention heads are assigned specific responsibilities to enhance the model’s ability to concentrate on significant features. Agent features are crucial in this process. Using adaptive average pooling, we derive the key information from the input feature map, which is subsequently used to generate the attention matrices. The top-k largest values are then selected to sharpen the attention mechanism, improving the model’s capacity to identify relevant features. This approach strengthens feature attention while decreasing computational complexity, thus promoting more precise and efficient recognition of camouflaged objects.

ATDM builds on multi-head self-attention (MHSA), which it fuses with agent tokens to reduce computation. Let $X \in \mathbb{R}^{H \times W \times C}$ denote the input feature map, where H and W signify height and width, respectively, and C denotes the number of channels. The equation for MHSA is as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{Softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

In this context, Q represents the query matrix, K denotes the key matrix, V signifies the value matrix, and $d_k$ refers to the dimension of the key vector, which scales the dot product to stabilize the Softmax function. These calculations are executed in parallel across the attention heads:

$$\mathrm{head}_i = \mathrm{Attention}\left(XW_i^{Q}, XW_i^{K}, XW_i^{V}\right)$$

Here, $W_i^{Q}$, $W_i^{K}$, and $W_i^{V}$ are the transformation matrices for the query, key, and value of the i-th head, respectively. The results from all heads are concatenated and subsequently subjected to a final linear transformation, followed by layer normalization, which generates the output of the multi-head attention.

The aforementioned facilitates the concurrent operation of several independent attention heads, each focusing on distinct facets of the incoming data. The attention mechanism is utilized to encode spatial information, allowing the model to effectively capture interdependence across spatial dimensions.
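The multi-head computation described above can be sketched in plain Python. This is a minimal illustration rather than the authors' implementation: the feature map is assumed to be already flattened into a list of token vectors, the per-head projections are replaced by identity slices of the channel axis, and the final linear transformation and layer normalization are omitted. All function names are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    """Swap the rows and columns of a matrix."""
    return [list(col) for col in zip(*m)]


def attention(q, k, v):
    """Scaled dot-product attention: Softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)


def multi_head_self_attention(x, heads):
    """Run each head on its own channel slice and concatenate the results.

    Assumption: identity projections, so head i simply sees channel slice i.
    """
    width = len(x[0]) // heads
    outputs = []
    for h in range(heads):
        part = [row[h * width:(h + 1) * width] for row in x]
        outputs.append(attention(part, part, part))
    # Concatenate the head outputs along the channel axis, token by token.
    return [sum((out[n] for out in outputs), []) for n in range(len(x))]
```

Calling `multi_head_self_attention(x, heads=2)` on a list of N token vectors with C channels returns N vectors with C channels again. The N × N score matrix built inside `attention` is the quadratic cost that the agent tokens are meant to reduce.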

3.2.1. Multi-Head Agent (MHA)

The primary distinction in our MHA is the incorporation of agent nodes (agent tokens) together with the position and agent biases, which provide multiscale feature integration and sensitivity to spatial location. To accomplish this, we first reshape the input tensor X from its spatial layout into its flattened sequence form.

If , spatial reduction () is executed first. The size of the convolution kernel is the . Setting results in the downsampling of the spatial dimensions of the input feature map. The particular operation is as follows:

Subsequently, compute the key value, where . When , we perform spatial downsampling as follows:

Finally, the result is partitioned into K and V, the query Q is computed, and adaptive average pooling is applied to Q to generate the agent tokens.

Figure 3 illustrates that in MHA, position bias and agent bias are two fundamental position-aware biases that establish bidirectional attention and simultaneously capture both global and local information. They are utilized for agent-to-grid attention and grid-to-agent attention, respectively. The model can explicitly encode relative position information through position bias and agent bias. However, their mechanisms of action are fundamentally different.

From the perspective of structural design and effect, position bias governs the attention of agent tokens towards the spatial grid and comprises three components: (1) a term modeling the spatial correlation between tokens and their immediate vicinity; (2) a term modeling the inclination of tokens towards the horizontal orientation; (3) a term modeling the inclination of tokens towards the vertical orientation. These components are initialized by sampling from a truncated normal distribution. From this, we can deduce that the positional bias is as follows:

During the computation of attention, is adjusted in size according to to correspond with the spatial dimensions of K.

Agent bias is employed in the attention computation from the spatial grid to the tokens and likewise comprises three components: (1) a term modeling the local neighborhood association of spatial positions to tokens; (2) a term modeling the preference of the horizontal position to tokens; (3) a term modeling the preference of the vertical position to tokens. Its initialization procedure mirrors that of position bias, but the dimensions are modified to correspond with the input resolution.

Regarding functional objectives, position bias serves as a dynamic receptive field controller that modulates the agent’s attention distribution range via the temperature coefficient; agent bias functions as an information routing selector, using learnable parameters to establish the information aggregation pathway from various spatial locations to the agent token, leading to a distributed feature integration akin to a voting mechanism.

Meanwhile, several positional biases are implemented to modify the attention weights. These biases establish supplementary connections between agent nodes and ordinary nodes, enabling the recording of more intricate interaction patterns and upgrading the capacity of the model to learn spatial dependencies.

For agent-to-grid attention,

For grid-to-agent attention,

Among them, and represent the positional biases from the agent to the grid and from the grid to the agent, respectively.

The output is then generated. The intermediate tensors are first reshaped; when spatial reduction has been applied, V is upsampled by bilinear interpolation, and a depthwise convolution on V is added to augment local features. Finally, a linear projection is applied. MHA enhances the model’s sensitivity to local features through the integration of agent nodes and promotes efficient information flow using a bidirectional attention mechanism.
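The two-step attention through agent tokens can be illustrated with a small sketch. It rests on several simplifying assumptions that are not taken from the paper: tokens form a 1D sequence (the model pools over a 2D grid), the position and agent biases are omitted, a single head is used, and the spatial reduction, depthwise convolution, and final projection are left out. The function names are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attend(q, k, v):
    """Softmax(q k^T / sqrt(d)) v for matrices stored as lists of rows."""
    scale = math.sqrt(len(k[0]))
    out = []
    for q_row in q:
        w = softmax([sum(a * b for a, b in zip(q_row, k_row)) / scale for k_row in k])
        out.append([sum(wi * v_row[c] for wi, v_row in zip(w, v)) for c in range(len(v[0]))])
    return out


def pool_agents(q, num_agents):
    """Adaptive average pooling of N query tokens into num_agents agent tokens."""
    n = len(q)
    agents = []
    for i in range(num_agents):
        start = (i * n) // num_agents
        end = -((-(i + 1) * n) // num_agents)  # ceiling of (i + 1) * n / num_agents
        window = q[start:end]
        agents.append([sum(col) / len(window) for col in zip(*window)])
    return agents


def agent_attention(q, k, v, num_agents):
    """Two-step attention routed through a small set of agent tokens."""
    agents = pool_agents(q, num_agents)
    # Step 1 (agent-to-grid): agents act as queries and gather from all K, V.
    agent_values = attend(agents, k, v)
    # Step 2 (grid-to-agent): every original query reads from the agent summary.
    return attend(q, agents, agent_values)
```

With N tokens and n agent tokens, both steps build score matrices of size n × N or N × n instead of N × N, which is where the computational saving comes from when n is much smaller than N.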

3.2.2. Top-k Dynamic Mask (TDM)

This module implements a customized multi-head self-attention mechanism to capture spatial and channel dependencies in feature maps, employing and layers to produce queries for the input features via convolution operations. Specifically, Q denotes the query, but K and V signify distinct representations of the input features. Meanwhile, transform into multi-head format using the function to execute attention computations across various heads.

Afterwards, normalize K and V along the final dimension.

After that, calculate the original attention matrix, where serves as the scaling factor.

is a trainable temperature parameter utilized to modulate the attention scores, enabling the model to adaptively regulate the focus intensity for each head throughout training. This module creates an initial attention matrix (Attn) by assessing the similarity between Q and K, thereafter selecting a specified number of components based on the attention scores through the top-k operation. Attention masks of varying scales ( to ) regulate the allocation of attention across many levels, progressively augmenting the quantity of selected attention elements to establish a multi-tiered attention mechanism.

Upon implementing regularization at each attention level (, , etc.), the resultant attention matrices are multiplied by the value V to produce weighted outputs ( to ).

These outputs correspondingly delineate the attention ranges of varying densities. For instance, exclusively accounts for the top 50% of attention values, while encompasses 80%.

In the concluding phase, the outputs from the several attention layers are weighted and consolidated, utilizing weighting parameters to . Thereafter, the consolidated output undergoes a projection and transformation procedure to produce the integrated multiscale attention output.
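The top-k selection and multi-level fusion described in this subsection can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: the score matrix is assumed to be precomputed from Q and K, the per-level normalization is taken to be a softmax over the kept entries, the ratios and fusion weights are passed in as plain numbers (in the model the weights are learnable), and ties at the threshold may keep slightly more than k entries. The function names are hypothetical.

```python
import math


def masked_softmax(row, keep):
    """Softmax over the `keep` largest entries of row; the rest get weight 0."""
    threshold = sorted(row, reverse=True)[keep - 1]
    peak = max(row)
    exps = [math.exp(v - peak) if v >= threshold else 0.0 for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def topk_dynamic_mask(scores, values, ratios, weights):
    """Fuse attention outputs computed at several top-k sparsity levels."""
    n_keys = len(scores[0])
    channels = len(values[0])
    fused = [[0.0] * channels for _ in scores]
    for ratio, weight in zip(ratios, weights):
        # Number of attention entries kept per query at this mask level.
        keep = max(1, int(n_keys * ratio))
        for i, row in enumerate(scores):
            attn = masked_softmax(row, keep)
            for c in range(channels):
                fused[i][c] += weight * sum(a * v[c] for a, v in zip(attn, values))
    return fused
```

For example, `topk_dynamic_mask(scores, values, ratios=[0.5, 0.8], weights=[0.5, 0.5])` averages a sparse level that keeps the top 50% of scores per query with a denser level that keeps 80%, mirroring the two ratios mentioned in the text.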

Decoder: Considering as the number of output channels, the shape of the transformed feature map is , where N signifies the batch size, indicates the output channels, and and indicate the height and width of the feature map at scale n. Refer to Figure 2 for specifics. The multiscale fusion feature map is derived by the attention mechanism and upsampling process, and it can be represented as follows:

denotes the bilinear upsampling operation employed for aligning spatial dimensions. Thereafter, the feature map undergoes processing via a convolutional layer to yield a single-channel output, which can be formally articulated as follows:

3.3. Loss Function

In accordance with [21], we refer to the predictions generated by the ATDMNet decoder as . All predicted maps , with the exception of the final prediction map , are employed for multiscale fusion as previously delineated. In the phase of training, each is upsampled to correspond with the dimensions of ground truth , and a weight matrix is calculated to highlight the significance of boundary regions. All projections are governed by the BCE loss and IoU loss, with the final loss calculated as the average of the BCE and IoU losses. The overall loss function is the aggregate of multi-stage losses, each with a distinct weight.
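A compact sketch of the hybrid loss with deep supervision is given below. It makes several assumptions beyond what the text states: prediction and ground-truth maps are flattened lists of probabilities in [0, 1], the boundary weight matrix is supplied by the caller rather than computed, the IoU term uses an additive smoothing constant of 1, and the stage weights are placeholders. The function names are hypothetical.

```python
import math


def bce_loss(pred, gt, weight):
    """Weighted binary cross-entropy over flattened probability maps."""
    eps = 1e-7
    total = 0.0
    for p, g, w in zip(pred, gt, weight):
        p = min(max(p, eps), 1.0 - eps)  # clamp to keep log() finite
        total += -w * (g * math.log(p) + (1.0 - g) * math.log(1.0 - p))
    return total / sum(weight)


def iou_loss(pred, gt, weight):
    """Weighted soft IoU loss; the +1 smoothing term is an assumption."""
    inter = sum(w * p * g for p, g, w in zip(pred, gt, weight))
    union = sum(w * (p + g) for p, g, w in zip(pred, gt, weight)) - inter
    return 1.0 - (inter + 1.0) / (union + 1.0)


def hybrid_loss(pred, gt, weight):
    """Average of the BCE and IoU terms for one prediction map."""
    return 0.5 * (bce_loss(pred, gt, weight) + iou_loss(pred, gt, weight))


def deep_supervision_loss(preds, gt, weight, stage_weights):
    """Weighted sum of the hybrid loss over all decoder stages."""
    return sum(s * hybrid_loss(p, gt, weight) for p, s in zip(preds, stage_weights))
```

Passing a weight list with larger values near object boundaries makes boundary pixels contribute more to both terms, which is the role the weight matrix plays in the description above.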

4. Experimental Results

4.1. Experiment Setup

Implementation details: We utilize the PyTorch 1.8+ library [22] to implement ATDMNet. A pretrained Res2Net [23] functions as the encoder, whereas PVTv2 [24] acts as the primary backbone. We additionally report results with alternative backbones, including the transformer-based Swin Transformer [25] and the CNN-based ResNet [26]. The optimizer is Adam [27], with weight decay applied to mitigate overfitting. The learning rate follows a CosineAnnealingLR schedule, which suits the small-batch training in this experiment and mitigates local optima through periodic restarts; the maximum period is 20, and the rate decays toward a fixed minimum learning rate. Gradient clipping with a threshold of 0.5 is employed to avert gradient explosion. All training images are resized to 448 × 448 pixels, which keeps the computational load in check while preserving details, in accordance with the layer architecture of PVTv2. Constrained by GPU memory, the model is trained end to end for 60 epochs with a batch size of 8, which takes approximately 7 h on an NVIDIA RTX 4090 24 GB (NVIDIA Corporation, Santa Clara, CA, USA); this setting balances memory utilization and batch stability.
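The learning-rate schedule and gradient clipping can be illustrated with the closed-form cosine annealing rule. The period of 20 and the clipping threshold of 0.5 come from the text; the learning-rate values used in the usage note (`1e-4` and `1e-6`) are placeholders chosen for illustration, and treating the threshold as a bound on the L2 norm of the gradient is an assumption. The function names are hypothetical.

```python
import math


def cosine_annealing_lr(step, base_lr, min_lr, t_max):
    """Closed-form cosine annealing: base_lr at step 0, min_lr at step t_max."""
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * step / t_max))


def clip_by_norm(grads, max_norm):
    """Rescale a gradient vector so its L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    return [g * max_norm / norm for g in grads]
```

For instance, `[cosine_annealing_lr(e, 1e-4, 1e-6, 20) for e in range(60)]` yields one rate per epoch for a 60-epoch run. Under this closed form the rate falls over the first 20 epochs, rises again over the next 20, and falls once more, which gives the schedule its periodic character.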

Datasets: We assess our approach using three prevalent COD benchmarks: CAMO [8], COD10K [7], and NC4K [6]. CAMO comprises 2500 photos, with an equal distribution of camouflaged objects and non-camouflaged objects. COD10K comprises 5066 camouflaged photos, 3000 background images, and 1934 non-camouflaged photographs. NC4K is an extensive dataset comprising 4121 test photos. In accordance with prior studies, we utilize 1000 images from CAMO and 3040 images from COD10K for training, reserving the remaining images for testing.

Evaluation Metrics: Following [31], we employ four metrics: mean absolute error (MAE, denoted M), adaptive F-measure [28], mean E-measure [29], and structure measure [30]. Lower values of M and higher values of the other three measures signify superior performance.
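Two of these metrics are simple enough to sketch directly. The code below assumes flattened prediction maps with values in [0, 1] and binary ground truth. The F-measure uses a fixed binarization threshold and the common convention β² = 0.3, both of which are assumptions; the adaptive variant derives the threshold from each prediction map instead. The function names are hypothetical.

```python
def mean_absolute_error(pred, gt):
    """Average per-pixel absolute difference between prediction and ground truth."""
    return sum(abs(p - g) for p, g in zip(pred, gt)) / len(pred)


def f_measure(pred, gt, threshold=0.5, beta_sq=0.3):
    """F-measure of the binarized prediction against binary ground truth."""
    binary = [1.0 if p >= threshold else 0.0 for p in pred]
    tp = sum(b * g for b, g in zip(binary, gt))
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero
    precision = tp / sum(binary)
    recall = tp / sum(gt)
    return (1 + beta_sq) * precision * recall / (beta_sq * precision + recall)
```

Because β² is below 1, this form of the F-measure weights precision more heavily than recall.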

4.2. Qualitative Evaluation

Visualization Predictions

Figure 5 presents a systematic comparison of the proposed ATDMNet against seven SOTA camouflaged object detection (COD) algorithms across several scenarios featuring camouflaged objects, ranging from Column 1 (Lizard) to Column 7 (Frog). The visual comparison indicates that current methods, such as BGNet [3], C2FNetV2 [2], and DINet [32], have considerable difficulty identifying low-contrast camouflaged objects. For example, these approaches segment the camouflaged lizard in Column 1 incompletely and produce significant false positives (e.g., erroneously categorizing background textures as part of the object). ATDMNet, by contrast, yields more complete and accurate masks; this improvement arises from its hierarchical multiscale fusion design, which incorporates cross-scale attention to strengthen discriminative feature representation.

Figure 5.

Visual comparisons of the predictions between the proposed ATDMNet and other SOTA methods (Baseline) [2,3,5,32,33,34,35]. Segmentation masks are shown in white.

4.3. Quantitative Evaluation

4.3.1. Comparison with Existing COD Methods

We contrast our ATDMNet with eight ResNet50-based SOTA COD models, including BGNet [3], BSANet [36], SegMaR [37], ZoomNet [35], C2FNet [2], FEDER [38], ICEG [39], and CamoFormer [19]; four Res2Net50-based models, including FDNet [40], SINetV2 [1], DINet [32], and FCOCM [33]; and seven transformer-based methods, including FPNet [41], FSPNet [42], SARNet [34], UEDG [4], VSCode [43], RISNet [44], and PRNet [5]. To ensure a fair comparison, the prediction results are either directly provided by the authors or generated using their well-trained models.

As observed in Table 1, our proposed ATDMNet consistently and markedly surpasses prior methods across all three benchmarks, without requiring any post-processing or additional training information. Compared with contemporary CNN-based COD methods, including ZoomNet [35], FDNet [40], and SegMaR [37], which rely on multi-stage training and inference strategies that introduce additional computational burden, ATDMNet attains significantly better results across all benchmarks. Moreover, it surpasses transformer-based models (e.g., VSCode [43] and RISNet [44]), establishing new SOTA results.

Table 1.

Quantitative comparison with the recent SOTA methods on three benchmark datasets. ↑/↓ denotes that larger/smaller is better. “-” is not available. The top two models are bolded and underlined, respectively. Red and blue indicate the best and second performance respectively. Same explanations apply to the following tables.

4.3.2. Computational Efficiency Analysis

Table 2 shows that, in terms of parameter count and MACs, ATDM-R2N in the Res2Net50 group (29.5 M/43.2 G) is markedly lighter than SINetV2 (51.1 M/68.5 G) and attains the highest FPS (88.4). This is attributable to the multiscale residual architecture of Res2Net and the dynamic mask co-optimization of TDM, which eliminates superfluous feature computations. FDNet (28.7 M/45.6 G) has a cost comparable to that of ATDM-R2N but a marginally lower FPS (85.6), suggesting that MHA’s agent nodes improve the efficiency of parallel computation.

Table 2.

Computational efficiency comparison of SOTA COD methods.

We also examine the trade-off between FPS and computational cost, contrasting transformers and CNNs. Under identical MACs, the FPS of transformer-based models markedly exceeds that of CNN approaches (e.g., ATDM-R2N), implying that transformers benefit more from GPU parallelism, particularly when the model minimizes superfluous attention computations (such as through the top-k screening of TDM). In contrast, pure CNN methods (such as BGNet and ZoomNet) are constrained by local receptive fields and need additional layers to capture long-range dependencies, which increases parameters and computational demands while yielding minimal gains in speed.

4.4. Ablative Studies

To evaluate our method’s sensitivity to local details, its capacity to incorporate global information, and the efficacy of each major module, we perform ablation studies on three datasets. We illustrate, both quantitatively and qualitatively, the impact of local feature sensitivity and long-range dependency modeling on the model.

4.4.1. Validation of Module Combination

Table 3 demonstrates that the integration of MHA boosts from 0.742 to 0.769 (+2.7%), while MAE shrinks from 0.032 to 0.029. This improvement is attributed to MHA’s capacity to equilibrate the significance of global and local information, thereby alleviating redundant computations by dynamically modulating the attention range through spatial reduction ( in Formula (9)) and explicitly modeling spatial connections ( and in Formulas (13) and (14)) with sparse interactions among agent nodes. With the integration of TDM, the for multiscale targets on NC4K increases by 0.9% ; TDM obtains cross-scale complementarity with top-k screening and multi-level mask fusion. Simultaneously, in conjunction with channel standardization (Formula (19)) and the dynamic temperature parameter (Formula (20)), the attention strength is adaptively modulated to facilitate the implicit simulation of channel dependence. In COD10K, the combined application of MHA and TDM results in a 1.6% improvement , supporting the complementary optimization of both approaches via “feature enhancement” and “feature focusing”.

Table 3.

Ablation study results (performance comparison on CAMO, COD10K, and NC4K datasets).

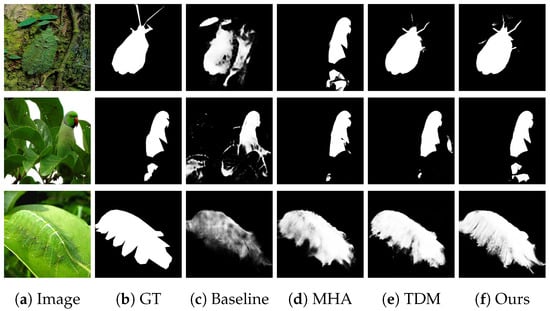

4.4.2. Feature Visualization

As illustrated in Figure 6d, the incorporation of MHA sharpens the caterpillar limb outlines from indistinct (Baseline) to distinct while diminishing false detections on similar textures (e.g., leaf textures) in the background, attributable to the amplification of local features by MHA’s agent nodes. As illustrated in Figure 6e, the incorporation of TDM turns the parrot body region from fragmented (Baseline) to intact, while the interfering response of the leaf background diminishes (MAE falls by 0.002) owing to TDM’s integration of multiscale features via dynamic masks. As illustrated in Figure 6f, the full model segments the transition zone between the insect’s transparent wings and the background more precisely, achieving a score of 0.915.

Figure 6.

Results of the ablation study visualized. (a) Input image; (b) ground truth; (c) Baseline; (d) incorporating MHA; (e) incorporating TDM; (f) ATDMNet.

4.4.3. Balance Analysis of the Number of MHA Agent Nodes

We train ATDMNet with different numbers of MHA agent nodes, using five experimental groups with 4, 8, 16, 32, and 64 agent nodes. The TDM module is held fixed at its default top-k ratio of 50% with three dynamic mask levels. As indicated in Table 4, 16 agent nodes yield the best values on several metrics for the COD10K and CAMO datasets. On the NC4K dataset, the maximum value is recorded with eight agent nodes. Increasing the number of agent nodes beyond 16 (e.g., to 32 or 64) degrades performance, possibly because of noise from redundant computation or overfitting.
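
To give a rough sense of what changing the number of agent nodes costs, the sketch below compares multiply-accumulate counts for standard softmax attention and for a generic two-step agent attention, in which agents first aggregate from all tokens and then broadcast back to them. This is a simplified cost model under stated assumptions, not the MHA module itself: it ignores the positional biases and spatial reduction described earlier, and the token count and channel width are hypothetical values chosen for illustration.

```python
def full_attention_macs(num_tokens: int, dim: int) -> int:
    """Multiply-accumulates for softmax attention: Q K^T plus weights @ V."""
    return 2 * num_tokens * num_tokens * dim


def agent_attention_macs(num_tokens: int, num_agents: int, dim: int) -> int:
    """Multiply-accumulates for a generic two-step agent attention.

    Step 1 (aggregation): agents attend to all tokens  -> 2 * n * N * d
    Step 2 (broadcast):   tokens attend to the agents  -> 2 * N * n * d
    """
    return 4 * num_tokens * num_agents * dim


if __name__ == "__main__":
    # Hypothetical sizes: a 32x32 feature map (1024 tokens), 64 channels per head.
    N, D = 1024, 64
    for n in (4, 8, 16, 32, 64):
        ratio = agent_attention_macs(N, n, D) / full_attention_macs(N, D)
        print(f"agents={n:2d}  cost relative to full attention: {ratio:.4f}")
```

Under this model the attention cost grows linearly with the number of agents, so moving from 16 to 64 agents quadruples it. Whether the extra agents help accuracy is an empirical question, which is what Table 4 addresses.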

Table 4.

Performance with varying quantities of agent nodes.

4.4.4. Adaptability Analysis of TDM Top-k Ratio

We vary the top-k ratio while keeping the number of agent nodes in the MHA module and the number of dynamic mask levels in the TDM module fixed. According to Table 5, a 70% top-k ratio achieves the best performance on all three datasets. A ratio that is too low filters out essential features and lowers the scores. Conversely, a ratio that is too high admits noise, which also degrades the metrics, including MAE. CAMO is the most sensitive to the top-k ratio, showing a substantial increase at 70%. Consequently, for complex scenes, a top-k ratio of 70% to 80% is advised to balance accuracy and robustness.
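
The effect of the top-k ratio can be illustrated with a single row of attention scores. The sketch below keeps only the largest fraction of scores given by `ratio` and renormalizes the survivors with a softmax. It is a minimal single-level illustration, assuming that k is obtained by rounding `ratio * length` up; the function name `topk_masked_softmax` is hypothetical, and the multi-level mask fusion, channel standardization, and dynamic temperature of the actual TDM module are not modeled.

```python
import math
from typing import List


def topk_masked_softmax(scores: List[float], ratio: float) -> List[float]:
    """Softmax over the top-k scores of one row; all other positions get weight 0."""
    if not scores:
        raise ValueError("scores must be non-empty")
    if not 0.0 < ratio <= 1.0:
        raise ValueError("ratio must be in (0, 1]")

    # Number of positions to keep (assumption: round up, keep at least one).
    k = max(1, math.ceil(ratio * len(scores)))

    # Indices of the k largest scores; ties keep the earlier position.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    keep = set(order[:k])

    # Numerically stable softmax restricted to the kept positions.
    peak = max(scores)
    exps = [math.exp(s - peak) if i in keep else 0.0 for i, s in enumerate(scores)]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    row = [1.0, 3.0, 2.0, 0.0]
    for ratio in (0.25, 0.5, 1.0):
        weights = topk_masked_softmax(row, ratio)
        print(ratio, [round(w, 3) for w in weights])
```

A small ratio concentrates all weight on a few positions and discards the rest, which matches the over-filtering described above, while a ratio of 1.0 reduces to an ordinary softmax and lets every position, including noisy ones, contribute.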

Table 5.

Performance across various top-k ratios.

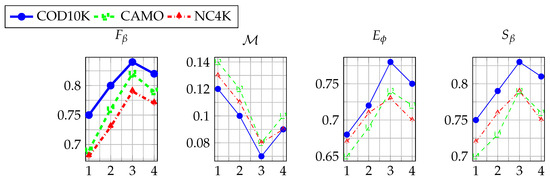

4.4.5. Optimization Analysis of Dynamic Mask Level

Building on the sensitivity study of the MHA and TDM hyperparameters, we further examine how the number of dynamic mask levels affects ATDMNet’s performance. The number of agent nodes is fixed at 16, the best-performing setting in Section 4.4.3, and the top-k ratio is set to 70%. As depicted in Figure 7, too few levels lead to inadequate fusion of multiscale features and degrade performance. Conversely, too many levels introduce computational redundancy and also reduce performance (e.g., the score on COD10K declines from 0.84 to 0.82). We conclude that three levels are the best setting among those tested, attaining peak performance on all three datasets while efficiently incorporating multiscale features.

Figure 7.

Influence of the number of dynamic mask levels on performance.

5. Conclusions

We present ATDMNet, a novel architecture for camouflaged object detection (COD) that combines a Res2Net-based encoder with a multi-head agent attention (MHA) mechanism and a top-k dynamic mask (TDM) module to address multiscale feature fusion and boundary refinement. MHA improves local sensitivity and global dependency modeling through agent nodes and positional biases, while TDM emphasizes essential features via dynamic multiscale masking. The decoder uses bilinear upsampling and high-level semantic guidance to enhance low-level features, resulting in accurate segmentation of camouflaged targets. ATDMNet’s robustness in complex scenes and its computational efficiency make it a strong baseline for future COD research.

Author Contributions

R.F. and Y.L. conceptualized the study and devised the methodology; R.F., Y.L. and C.-C.C. formulated the algorithms; R.F. and Y.D. executed software implementation and conducted experiments; R.F., Y.L., C.-C.C. and P.Y. performed validation and analysis; R.F. and K.Z. conducted formal analysis; R.F. and Y.D. examined the data; Y.L. and C.-C.C. supplied resources; P.Y. and K.Z. curated the datasets; R.F. authored the original draft; Y.L. and C.-C.C. reviewed and revised the manuscript; Y.D. generated visualizations; Y.L. oversaw the project and secured funding. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the National Undergraduate Innovation and Entrepreneurship project (202410623003), the Sichuan Science and Technology Program (2023YFH0058), and the Engineering Research Center for ICH Digitization and Multi-source Information Fusion (G3-KF2022).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets analyzed in this study and the ATDMNet source code are freely accessible on GitHub at https://github.com/dfryeel/free.git (accessed on 1 March 2025). This study did not generate any new datasets. For further information or materials, please contact the corresponding author.

Conflicts of Interest

The authors disclose no conflicts of interest. The funders played no part in the study’s design, data collection, analysis, interpretation, manuscript preparation, or publication decision.

References

- Fan, D.P.; Ji, G.P.; Cheng, M.M.; Shao, L. Concealed object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 6024–6042.

- Chen, G.; Liu, S.J.; Sun, Y.J.; Ji, G.P.; Wu, Y.F.; Zhou, T. Camouflaged object detection via context-aware cross-level fusion. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6981–6993.

- Sun, Y.; Wang, S.; Chen, C.; Xiang, T.Z. Boundary-guided camouflaged object detection. arXiv 2022, arXiv:2207.00794.

- Lyu, Y.; Zhang, H.; Li, Y.; Liu, H.; Yang, Y.; Yuan, D. UEDG: Uncertainty-Edge Dual Guided Camouflage Object Detection. IEEE Trans. Multimed. 2024, 26, 4050–4060.

- Hu, X.; Zhang, X.; Wang, F.; Sun, J.; Sun, F. Efficient Camouflaged Object Detection Network Based on Global Localization Perception and Local Guidance Refinement. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 5452–5465.

- Lv, Y.; Zhang, J.; Dai, Y.; Li, A.; Liu, B.; Barnes, N.; Fan, D.P. Simultaneously localize, segment and rank the camouflaged objects. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 11591–11601.

- Fan, D.P.; Ji, G.P.; Sun, G.; Cheng, M.M.; Shen, J.; Shao, L. Camouflaged object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 2777–2787.

- Le, T.N.; Nguyen, T.V.; Nie, Z.; Tran, M.T.; Sugimoto, A. Anabranch network for camouflaged object segmentation. Comput. Vis. Image Underst. 2019, 184, 45–56.

- Hall, J.R.; Cuthill, I.C.; Baddeley, R.; Shohet, A.J.; Scott-Samuel, N.E. Camouflage, detection and identification of moving targets. Proc. R. Soc. B Biol. Sci. 2013, 280, 20130064.

- Li, P.; Yan, X.; Zhu, H.; Wei, M.; Zhang, X.P.; Qin, J. Findnet: Can you find me? boundary-and-texture enhancement network for camouflaged object detection. IEEE Trans. Image Process. 2022, 31, 6396–6411.

- Zhong, Y.; Li, B.; Tang, L.; Kuang, S.; Wu, S.; Ding, S. Detecting camouflaged object in frequency domain. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4504–4513.

- Sun, Y.; Xu, C.; Yang, J.; Xuan, H.; Luo, L. Frequency-spatial entanglement learning for camouflaged object detection. In Proceedings of the European Conference on Computer Vision, Paris, France, 26–27 March 2025; Springer: Berlin, Germany, 2025; pp. 343–360.

- Cong, R.; Sun, M.; Zhang, S.; Zhou, X.; Zhang, W.; Zhao, Y. Frequency perception network for camouflaged object detection. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 1179–1189.

- Zhang, S.; Kong, D.; Xing, Y.; Lu, Y.; Ran, L.; Liang, G.; Wang, H.; Zhang, Y. Frequency-Guided Spatial Adaptation for Camouflaged Object Detection. arXiv 2024, arXiv:2409.12421.

- Zhao, X.; Pang, Y.; Ji, W.; Sheng, B.; Zuo, J.; Zhang, L.; Lu, H. Spider: A Unified Framework for Context-dependent Concept Segmentation. In Proceedings of the Forty-First International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024.

- Wang, H.; Wu, Z.; Liu, Z.; Cai, H.; Zhu, L.; Gan, C.; Han, S. Hat: Hardware-aware transformers for efficient natural language processing. arXiv 2020, arXiv:2005.14187.

- Yao, S.; Sun, H.; Xiang, T.Z.; Wang, X.; Cao, X. Hierarchical graph interaction transformer with dynamic token clustering for camouflaged object detection. IEEE Trans. Image Process. 2024, 33, 5936–5948.

- Huang, Z.; Dai, H.; Xiang, T.Z.; Wang, S.; Chen, H.X.; Qin, J.; Xiong, H. Feature shrinkage pyramid for camouflaged object detection with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 5557–5566.

- Yin, B.; Zhang, X.; Fan, D.P.; Jiao, S.; Cheng, M.M.; Van Gool, L.; Hou, Q. Camoformer: Masked separable attention for camouflaged object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 10362–10374.

- Yang, F.; Zhai, Q.; Li, X.; Huang, R.; Luo, A.; Cheng, H.; Fan, D.P. Uncertainty-guided transformer reasoning for camouflaged object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 4146–4155.

- Dickson, M.C.; Bosman, A.S.; Malan, K.M. Hybridised loss functions for improved neural network generalisation. In Proceedings of the Pan-African Artificial Intelligence and Smart Systems Conference, Windhoek, Namibia, 6–8 September 2021; Springer: Berlin, Germany, 2021; pp. 169–181.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019, 32, 8026–8037.

- Bi, H.; Zhang, C.; Wang, K.; Tong, J.; Zheng, F. Rethinking camouflaged object detection: Models and datasets. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 5708–5724.

- Chen, Y.; Zhuang, Z.; Chen, C. Object Detection Method Based on PVTv2. In Proceedings of the 2023 IEEE 3rd International Conference on Electronic Technology, Communication and Information (ICETCI), Changchun, China, 26–28 May 2023; pp. 730–734.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

- Koonce, B. ResNet 50. In Convolutional Neural Networks with Swift for Tensorflow: Image Recognition and Dataset Categorization; Springer: Berlin, Germany, 2021; pp. 63–72.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Margolin, R.; Zelnik-Manor, L.; Tal, A. How to evaluate foreground maps? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 248–255.

- Fan, D.P.; Gong, C.; Cao, Y.; Ren, B.; Cheng, M.M.; Borji, A. Enhanced-alignment measure for binary foreground map evaluation. arXiv 2018, arXiv:1805.10421.

- Fan, D.P.; Cheng, M.M.; Liu, Y.; Li, T.; Borji, A. Structure-measure: A new way to evaluate foreground maps. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4548–4557.

- Perazzi, F.; Krähenbühl, P.; Pritch, Y.; Hornung, A. Saliency filters: Contrast based filtering for salient region detection. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 733–740.

- Zhou, X.; Wu, Z.; Cong, R. Decoupling and Integration Network for Camouflaged Object Detection. IEEE Trans. Multimed. 2024, 26, 7114–7129.

- Zhang, J.; Zhang, J.; Ma, Z.; Li, T.; Li, X.; Ma, J. RUPT-FL: Robust Two-layered Privacy-preserving Federated Learning Framework with Unlinkability for IoV. IEEE Trans. Veh. Technol. 2024, 74, 5528–5541.

- Xing, H.; Gao, S.; Wang, Y.; Wei, X.; Tang, H.; Zhang, W. Go Closer to See Better: Camouflaged Object Detection via Object Area Amplification and Figure-Ground Conversion. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 5444–5457.

- Pang, Y.; Zhao, X.; Xiang, T.Z.; Zhang, L.; Lu, H. Zoom in and out: A mixed-scale triplet network for camouflaged object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2160–2170.

- Zhu, H.; Li, P.; Xie, H.; Yan, X.; Liang, D.; Chen, D.; Wei, M.; Qin, J. I can find you! boundary-guided separated attention network for camouflaged object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Volume 36, pp. 3608–3616.

- Jia, Q.; Yao, S.; Liu, Y.; Fan, X.; Liu, R.; Luo, Z. Segment, magnify and reiterate: Detecting camouflaged objects the hard way. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4713–4722.

- He, C.; Li, K.; Zhang, Y.; Tang, L.; Zhang, Y.; Guo, Z.; Li, X. Camouflaged Object Detection With Feature Decomposition and Edge Reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 22046–22055.

- He, C.; Li, K.; Zhang, Y.; Zhang, Y.; Guo, Z.; Li, X.; Danelljan, M.; Yu, F. Strategic Preys Make Acute Predators: Enhancing Camouflaged Object Detectors by Generating Camouflaged Objects. arXiv 2024, arXiv:2308.03166.

- Song, Y.; Li, X.; Qi, L. Camouflaged Object Detection with Feature Grafting and Distractor Aware. arXiv 2023, arXiv:2307.03943.

- Cong, R.; Sun, M.; Zhang, S.; Zhou, X.; Zhang, W.; Zhao, Y. Frequency Perception Network for Camouflaged Object Detection. arXiv 2024, arXiv:2308.08924.

- Huang, Z.; Dai, H.; Xiang, T.Z.; Wang, S.; Chen, H.X.; Qin, J.; Xiong, H. Feature Shrinkage Pyramid for Camouflaged Object Detection with Transformers. arXiv 2023, arXiv:2303.14816.

- Luo, Z.; Liu, N.; Zhao, W.; Yang, X.; Zhang, D.; Fan, D.P.; Khan, F.; Han, J. VSCode: General Visual Salient and Camouflaged Object Detection with 2D Prompt Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 17169–17180.

- Wang, L.; Yang, J.; Zhang, Y.; Wang, F.; Zheng, F. Depth-Aware Concealed Crop Detection in Dense Agricultural Scenes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 17201–17211.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).