Lightweight Deep Learning Model, ConvNeXt-U: An Improved U-Net Network for Extracting Cropland in Complex Landscapes from Gaofen-2 Images

Abstract

1. Introduction

- (1) We have developed ConvNeXt-U, a lightweight model that reduces computational resource demands and is suitable for deployment in resource-constrained environments.

- (2) The model includes an attention mechanism that automatically focuses on key regions in images, enhancing feature extraction and improving the accuracy of boundary and detail recognition in cropland.

- (3) Our model maintains high computational efficiency, making it suitable for processing large-scale remote sensing data and enhancing its applicability in agricultural management.

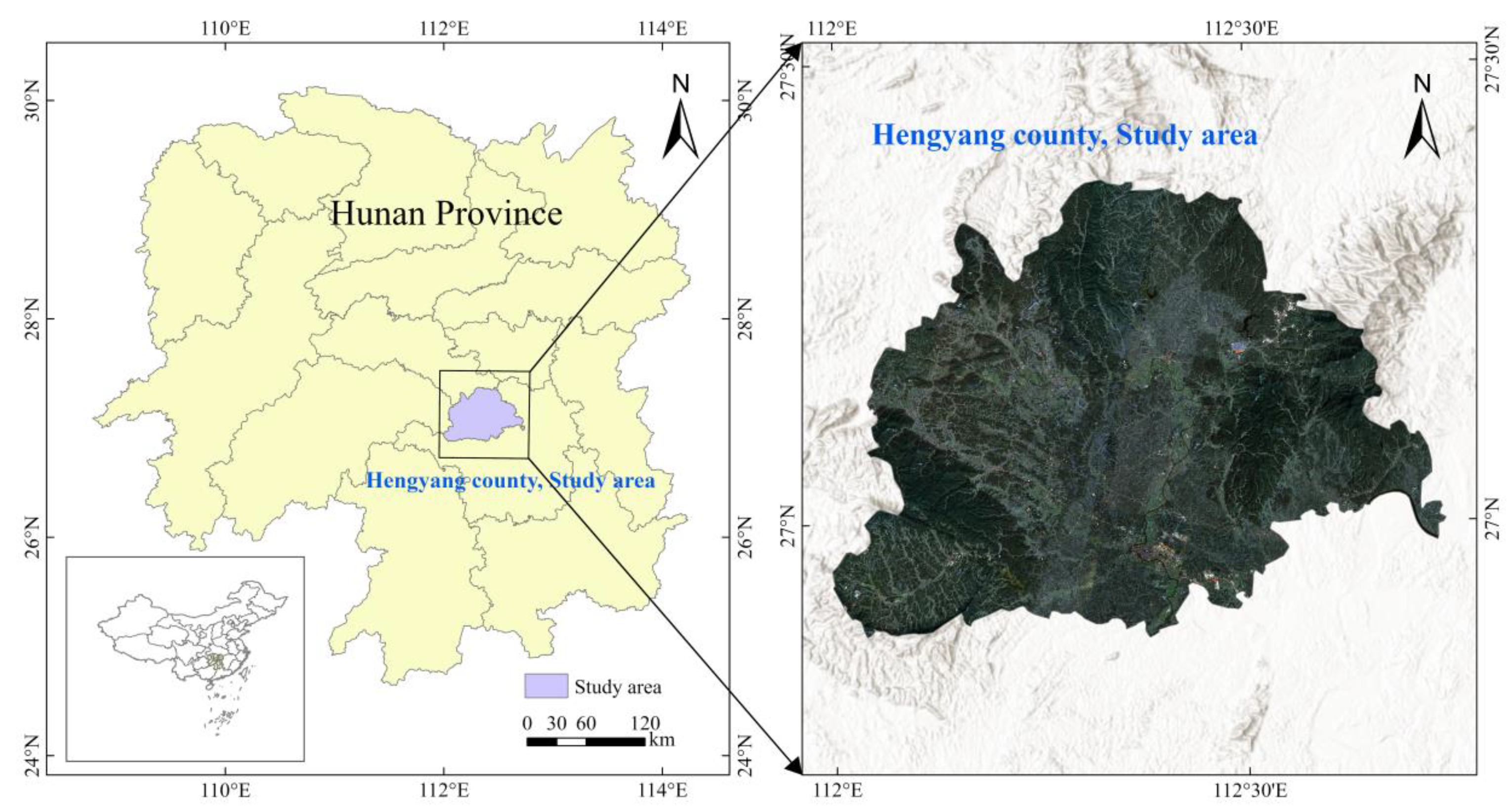

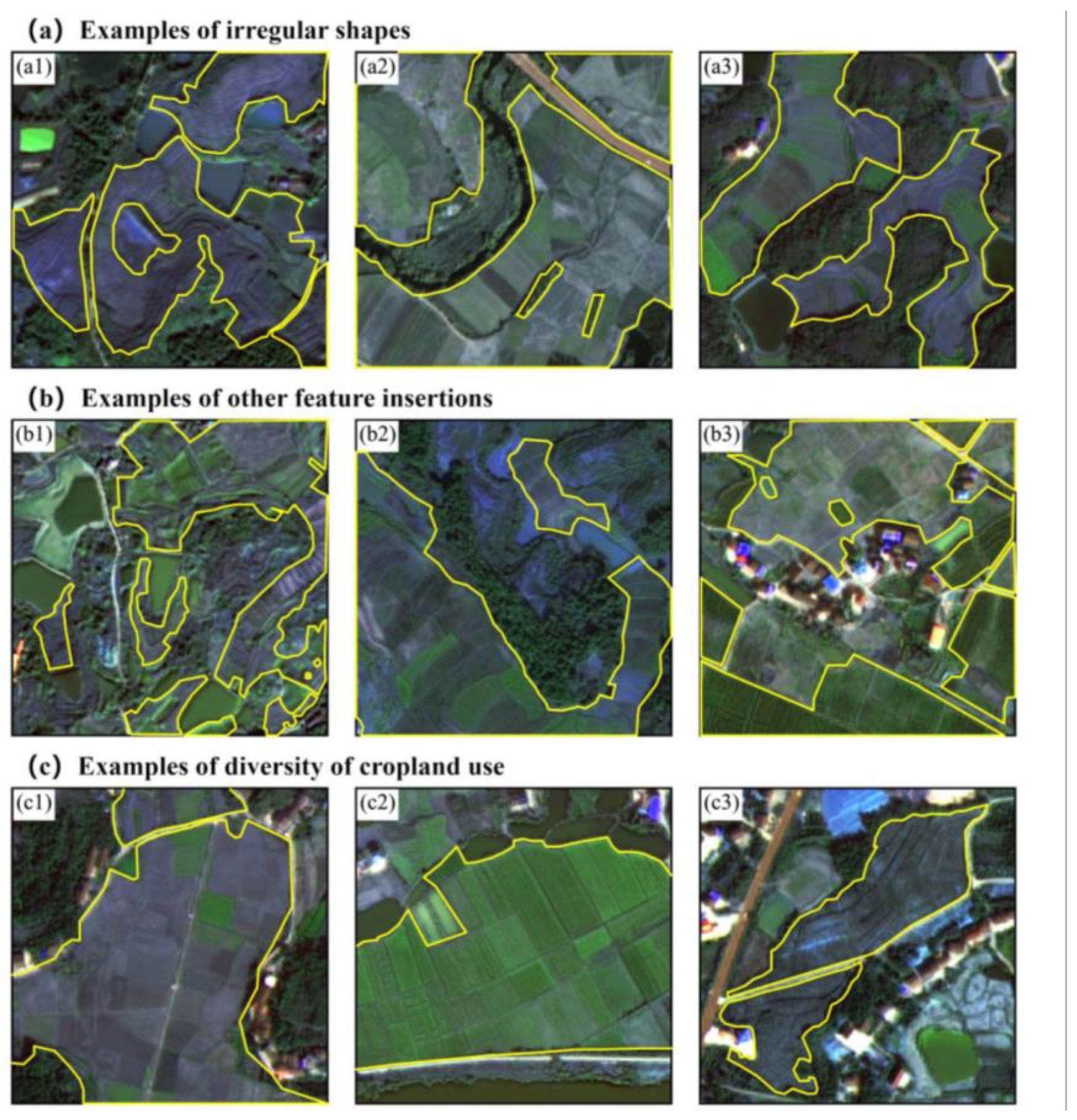

2. Study Area and Data

2.1. Study Area

2.2. Gaofen-2 Satellite Image

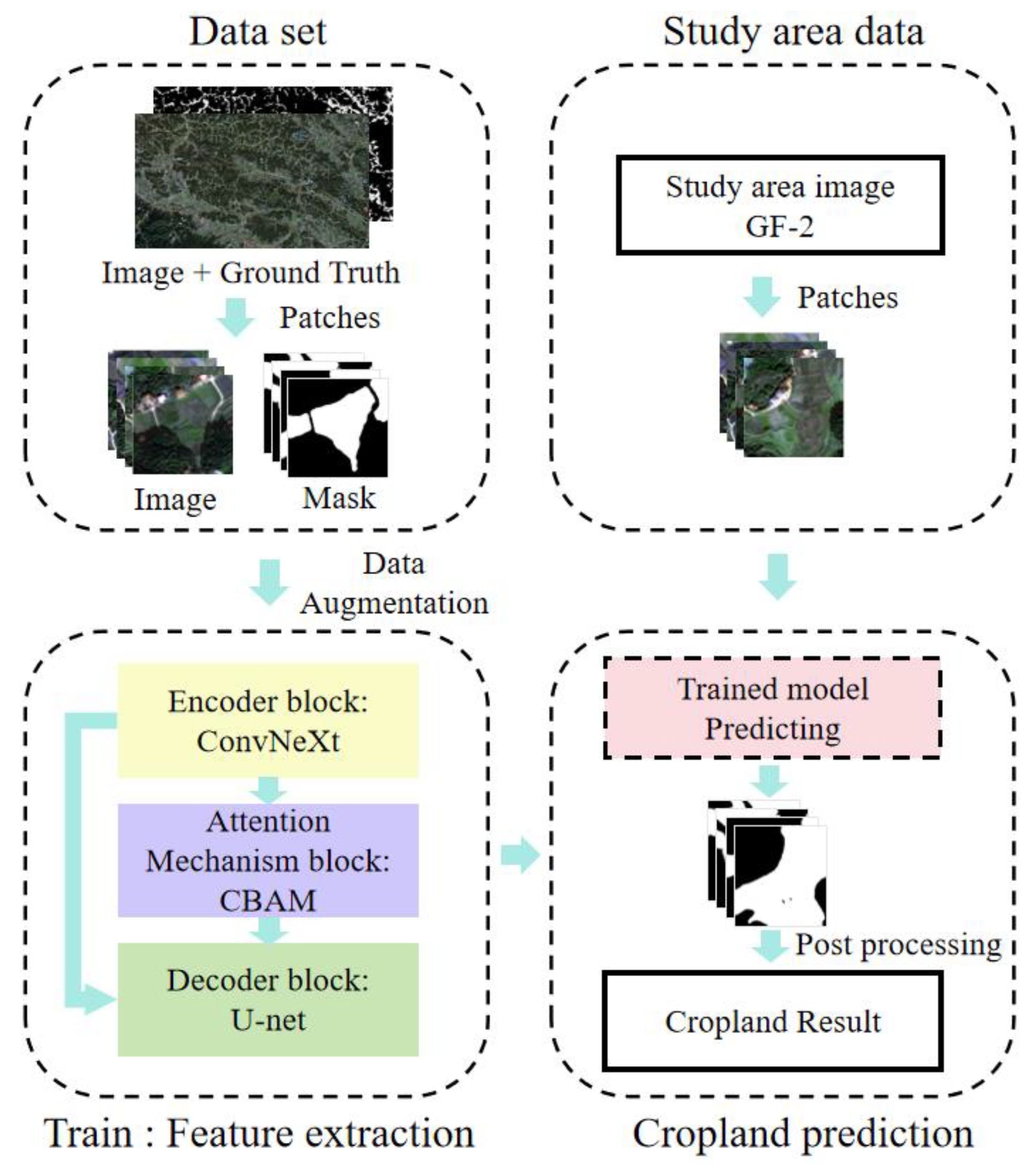

3. Methods

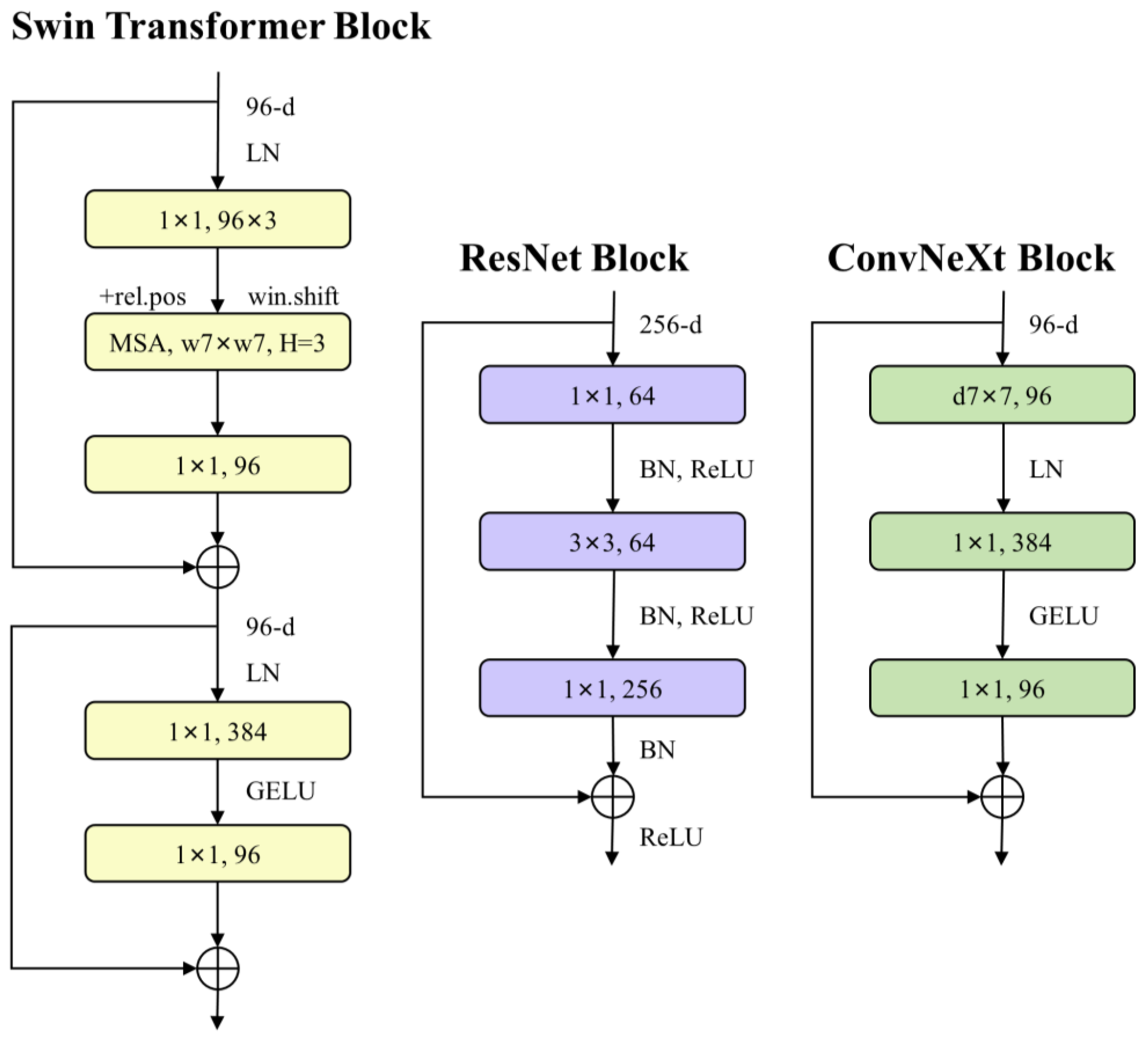

3.1. ConvNeXt

- (1) Macroscopic Design: Inspired by the multi-stage architecture of Swin Transformers, the ConvNeXt authors adjusted feature map resolutions and computational resources at various stages to enhance the network’s expressive capacity.

- (2) ResNeXt Concept: Following ResNeXt, grouped convolution splits the filters into separate groups; ConvNeXt uses its extreme form, depthwise convolution, in which the number of groups equals the number of channels. This allows the network’s width to increase without significantly raising computational demands.

- (3) Inverted Bottleneck: By adopting an inverted bottleneck structure akin to that used in Transformers, the model makes the hidden dimension of the multi-layer perceptron (MLP) block significantly larger than its input dimension, thereby reducing the network’s overall computational cost.

- (4) Large Kernel Sizes: The architecture benefits from larger convolutional kernel sizes (e.g., from 3 × 3 to 7 × 7), enhancing performance while keeping computational complexity manageable.

- (5)

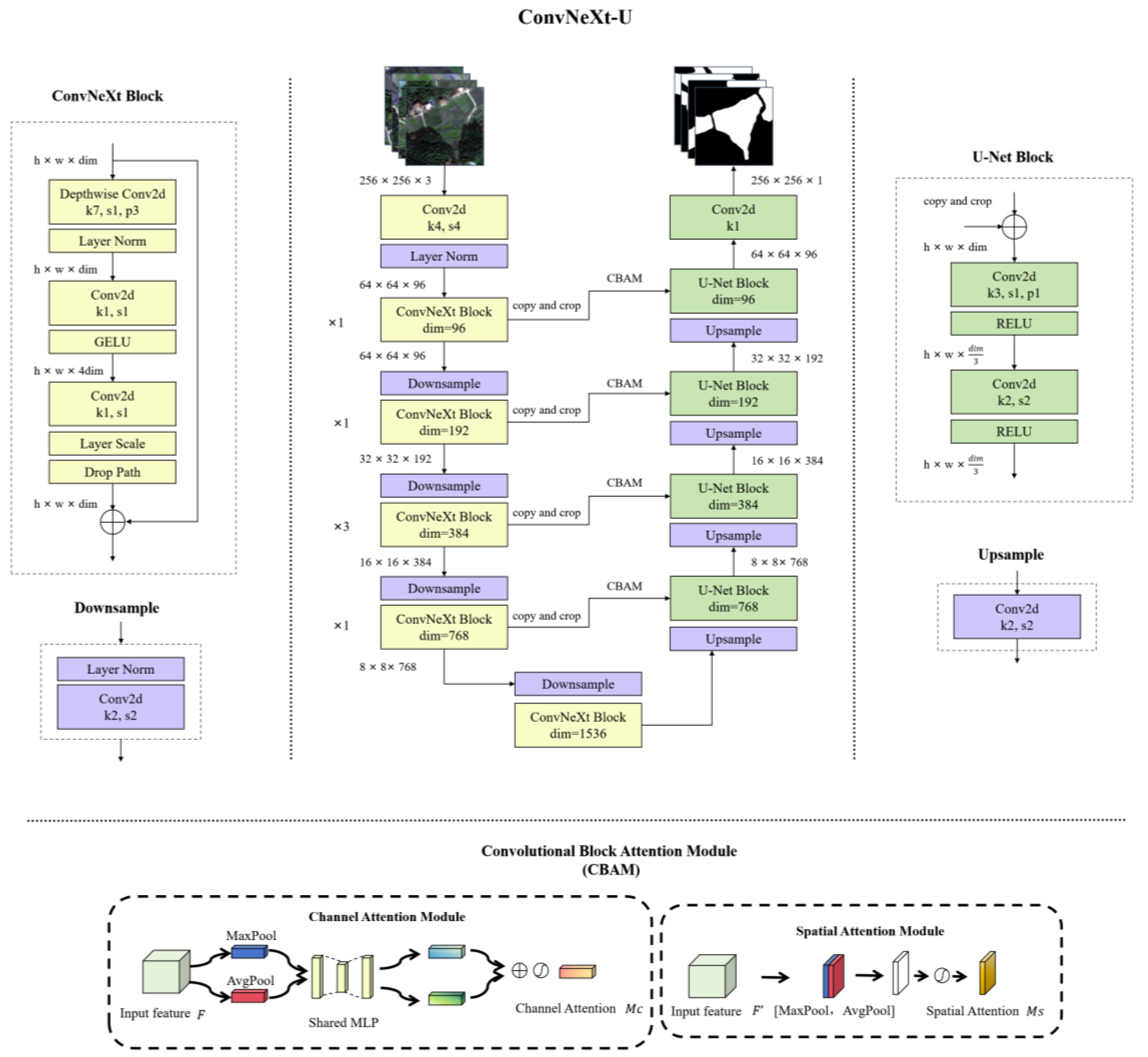
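The cost argument behind the depthwise convolution, inverted bottleneck, and large-kernel choices listed above can be checked with simple parameter arithmetic. The sketch below is only an illustration: the channel width of 96 and the expansion ratio of 4 are assumptions taken from the general ConvNeXt design rather than from this paper, and the helper names `conv_params` and `convnext_block_params` are hypothetical.

```python
def conv_params(c_in, c_out, k, groups=1, bias=True):
    """Parameter count of a k x k 2-D convolution (weights plus optional bias)."""
    assert c_in % groups == 0 and c_out % groups == 0
    weights = (c_in // groups) * c_out * k * k
    return weights + (c_out if bias else 0)


def convnext_block_params(dim, kernel=7, expansion=4):
    """Convolution parameters of one ConvNeXt-style block.

    Normalization and layer-scale parameters are omitted for brevity.
    """
    depthwise = conv_params(dim, dim, kernel, groups=dim)  # one filter per channel
    expand = conv_params(dim, expansion * dim, 1)          # 1x1 conv, widen (inverted bottleneck)
    project = conv_params(expansion * dim, dim, 1)         # 1x1 conv, back to the input width
    return depthwise + expand + project


dim = 96  # assumed channel width, for illustration only
dense_3x3 = conv_params(dim, dim, 3)
dense_7x7 = conv_params(dim, dim, 7)
depthwise_7x7 = conv_params(dim, dim, 7, groups=dim)
block_total = convnext_block_params(dim)

print("dense 3x3      :", dense_3x3)
print("dense 7x7      :", dense_7x7)
print("depthwise 7x7  :", depthwise_7x7)
print("ConvNeXt block :", block_total)
```

Under these assumptions, a depthwise 7 × 7 convolution needs far fewer parameters than even a dense 3 × 3 convolution of the same width, which is why the kernel can grow while the block stays cheap; most of the block's parameters sit in the two 1 × 1 layers.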

3.2. ConvNeXt-U
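The conclusions describe ConvNeXt-U as a U-Net whose encoder is replaced by ConvNeXt, with a CBAM attention mechanism added. As a rough illustration of what CBAM computes, the pure-Python sketch below follows the general description of the module by Woo et al. (channel attention followed by spatial attention). It is not the authors' implementation: bias terms are omitted, the weights are supplied by the caller, and all function names are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def shared_mlp(vec, w1, w2):
    """Two-layer perceptron with ReLU; w1 is (C/r x C), w2 is (C x C/r)."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, vec))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]


def channel_attention(feat, w1, w2):
    """One weight per channel, from average- and max-pooled descriptors."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feat]
    mx = [max(map(max, ch)) for ch in feat]
    a, m = shared_mlp(avg, w1, w2), shared_mlp(mx, w1, w2)
    return [sigmoid(x + y) for x, y in zip(a, m)]


def spatial_attention(feat, kernel):
    """One weight per pixel; kernel has shape (2, k, k) with odd k, zero padding."""
    C, H, W = len(feat), len(feat[0]), len(feat[0][0])
    k = len(kernel[0])
    pad = k // 2
    avg = [[sum(feat[c][i][j] for c in range(C)) / C for j in range(W)] for i in range(H)]
    mx = [[max(feat[c][i][j] for c in range(C)) for j in range(W)] for i in range(H)]
    out = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            acc = 0.0
            for plane, pooled in zip(kernel, (avg, mx)):
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < H and 0 <= x < W:
                            acc += plane[di][dj] * pooled[y][x]
            out[i][j] = sigmoid(acc)
    return out


def cbam(feat, w1, w2, kernel):
    """Apply channel attention, then spatial attention, to a (C, H, W) nested list."""
    mc = channel_attention(feat, w1, w2)
    refined = [[[mc[c] * v for v in row] for row in ch] for c, ch in enumerate(feat)]
    ms = spatial_attention(refined, kernel)
    return [[[ms[i][j] * v for j, v in enumerate(row)] for i, row in enumerate(ch)]
            for ch in refined]


# Toy input: 2 channels of 2 x 2 pixels; all-zero weights make every attention weight 0.5.
feat = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, -1.0], [2.0, 8.0]]]
w1 = [[0.0, 0.0]]                      # C/r = 1 hidden unit
w2 = [[0.0], [0.0]]
kernel = [[[0.0] * 3 for _ in range(3)] for _ in range(2)]  # 3 x 3 here; CBAM commonly uses 7 x 7
out = cbam(feat, w1, w2, kernel)
```

With zero weights both attention maps equal 0.5 everywhere, so the output is the input scaled by 0.25; trained weights would instead emphasize informative channels and pixels, such as cropland boundaries.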

3.3. Evaluation Metric
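The result tables report accuracy (Acc), precision, recall, F1, and IoU. A minimal sketch of how such metrics are conventionally computed from pixel-wise binary labels is given below. It assumes the standard definitions with cropland as the positive class, which may differ in detail from the authors' formulation; the function name `segmentation_metrics` is hypothetical.

```python
def segmentation_metrics(pred, truth):
    """Binary segmentation metrics from flat sequences of 0/1 labels (1 = cropland)."""
    tp = fp = fn = tn = 0
    for p, t in zip(pred, truth):
        if p == 1 and t == 1:
            tp += 1
        elif p == 1 and t == 0:
            fp += 1
        elif p == 0 and t == 1:
            fn += 1
        else:
            tn += 1

    def safe_div(a, b):
        # Return 0.0 instead of raising when a denominator is empty.
        return a / b if b else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return {
        "accuracy": safe_div(tp + tn, tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),
        "iou": safe_div(tp, tp + fp + fn),
    }


# Toy example with 8 pixels: 3 true positives, 1 false positive, 1 false negative.
pred = [1, 1, 1, 0, 0, 1, 0, 0]
truth = [1, 1, 0, 0, 0, 1, 1, 0]
metrics = segmentation_metrics(pred, truth)
print(metrics)
```

Under these definitions IoU can also be written as 1 / (1/P + 1/R − 1). For the Hengyang ConvNeXt-U row (precision 79.5%, recall 0.992) this gives approximately 0.790, which agrees with the reported IoU of 79.0%.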

3.4. Implementation Details

4. Results

4.1. Ablation Study for the Module

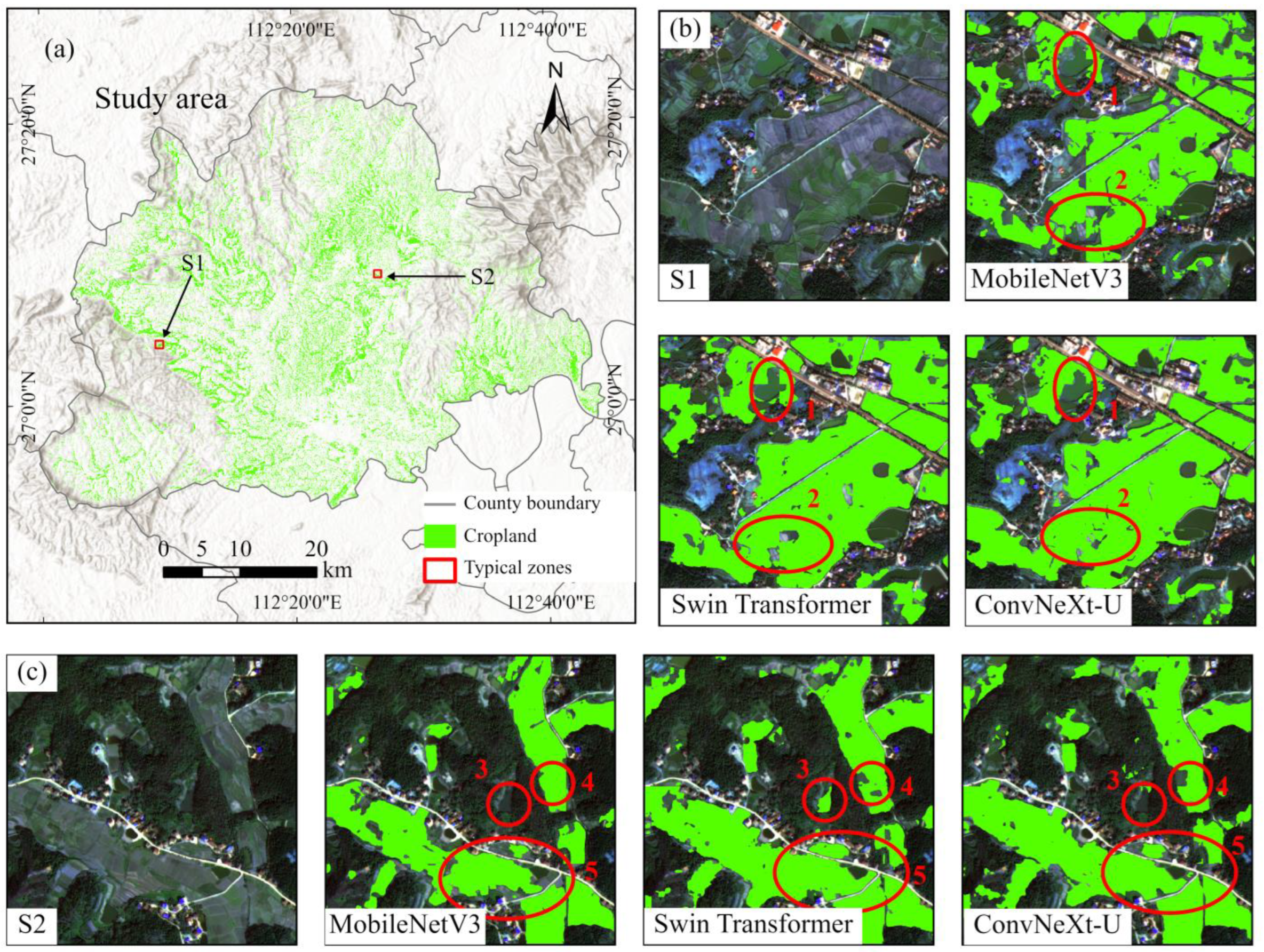

4.2. Extraction of Fragmented Cropland from Gaofen-2 Images of the Study Area

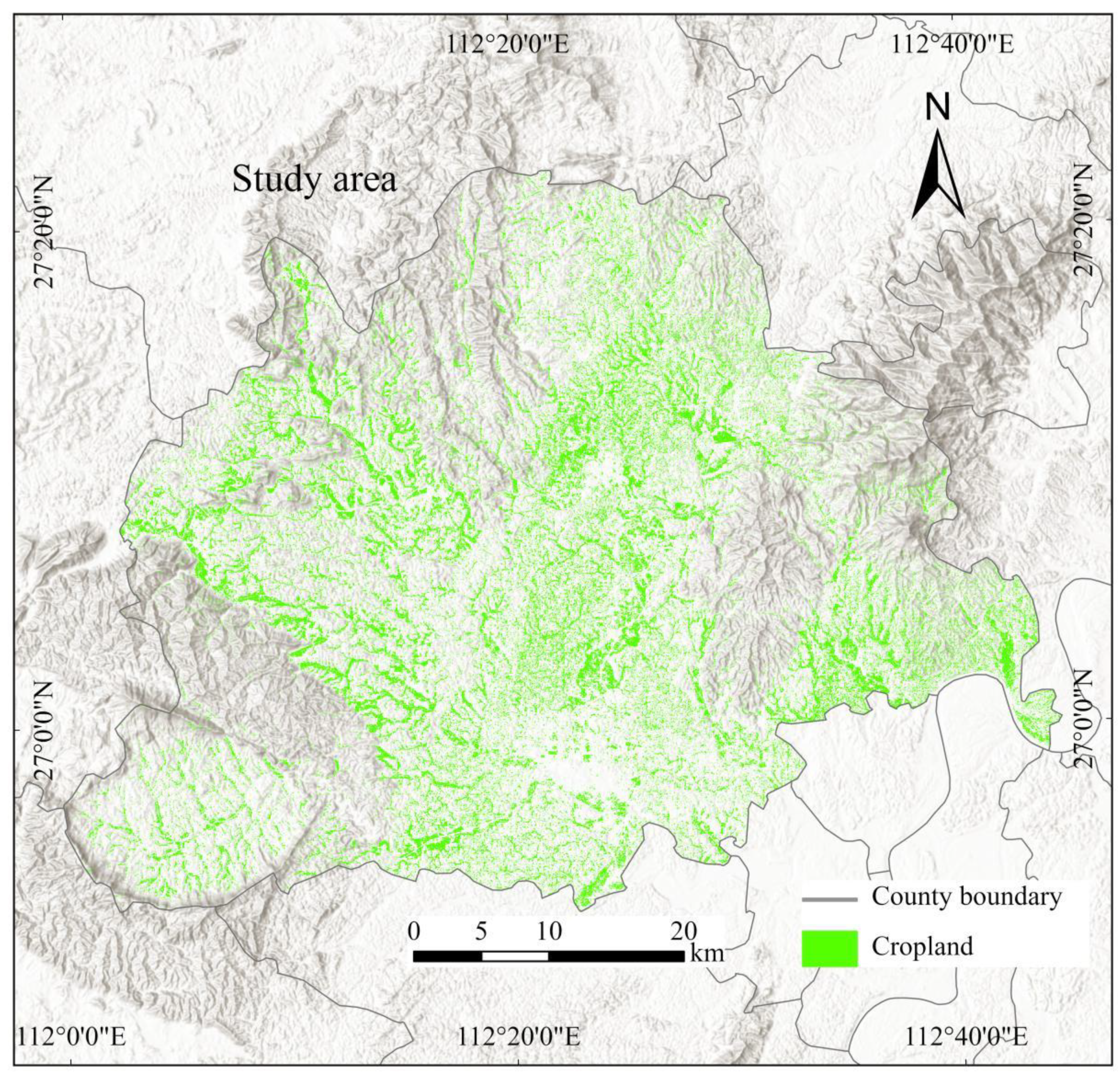

4.3. Map of Fragmented Cropland

5. Discussion

6. Conclusions

- (1) Replacing the U-Net encoder with ConvNeXt improves the model’s ability to extract fragmented cropland from remote sensing images, resulting in higher extraction accuracy compared to the original U-Net.

- (2) We incorporated the CBAM attention mechanism into both the U-Net and the enhanced ConvNeXt-U models. Comparative experiments consistently showed that CBAM-augmented models achieved superior extraction accuracy compared to their baseline counterparts. This indicates that the CBAM mechanism improves the model’s ability to capture detailed features of fragmented cropland, resulting in more accurate extraction.

- (3) A comparison with the Swin Transformer, MobileNetV3, ResUnet, and VGG16 models shows that the enhanced ConvNeXt-U (+CBAM) model outperforms them in fragmented cropland extraction, achieving the highest accuracy, F1, and IoU among the compared models.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Gaofen-2 Bands | Wavelength (nm) | Resolution (m) |
|---|---|---|
| 1 (blue) | 450–520 | 3.2 |
| 2 (green) | 520–590 | 3.2 |
| 3 (red) | 630–690 | 3.2 |
| 4 (NIR) | 760–900 | 3.2 |
| 5 (panchromatic) | 450–900 | 0.8 |

| Dataset | Model | Acc (%) | Precision (%) | Recall | F1 | IoU (%) |
|---|---|---|---|---|---|---|
| Hengyang Dataset | ConvNeXt-U | 84.6 | 79.5 | 0.992 | 0.883 | 79.0 |
| Hengyang Dataset | U-Net | 81.3 | 76.1 | 0.991 | 0.861 | 75.6 |
| Denmark Dataset | ConvNeXt-U | 90.8 | 92.1 | 0.938 | 0.929 | 86.8 |
| Denmark Dataset | U-Net | 89.4 | 86.0 | 0.951 | 0.922 | 84.8 |

| Model | Acc (%) | Precision (%) | Recall | F1 | IoU (%) |
|---|---|---|---|---|---|
| ConvNeXt-U (+CBAM) | 85.2 | 80.5 | 0.986 | 0.886 | 79.5 |
| ConvNeXt-U | 84.6 | 79.5 | 0.992 | 0.883 | 79.0 |
| U-Net (+CBAM) | 83.6 | 78.7 | 0.987 | 0.876 | 77.9 |
| U-Net | 81.3 | 76.1 | 0.991 | 0.861 | 75.6 |

| Model | Acc (%) | Precision (%) | Recall | F1 | IoU (%) |
|---|---|---|---|---|---|
| ConvNeXt-U (+CBAM) | 91.1 | 91.2 | 0.957 | 0.930 | 87.0 |
| ConvNeXt-U | 90.8 | 92.1 | 0.938 | 0.929 | 86.8 |
| U-Net (+CBAM) | 89.8 | 88.0 | 0.937 | 0.925 | 86.5 |
| U-Net | 89.4 | 86.0 | 0.951 | 0.922 | 84.8 |

| Model | Acc (%) | Precision (%) | Recall | F1 | IoU (%) |
|---|---|---|---|---|---|
| ConvNeXt-U (+CBAM) | 85.2 | 80.5 | 0.986 | 0.886 | 79.5 |
| Swin Transformer | 85.1 | 81.4 | 0.965 | 0.883 | 79.1 |
| MobileNetV3 | 83.4 | 78.4 | 0.985 | 0.874 | 77.6 |
| ResUnet | 81.8 | 76.6 | 0.992 | 0.865 | 76.1 |
| VGG16 | 80.5 | 75.8 | 0.979 | 0.855 | 74.6 |

| Model | Parameter Count | FLOPs | Throughput (images/s) |
|---|---|---|---|
| ConvNeXt-U (+CBAM) | 0.17 M | 3.030 G | 37 |
| Swin Transformer | 27.15 M | 30.922 G | 28 |
| MobileNetV3 | 3.90 M | 1.25 G | 35 |
| ResUnet | 25.56 M | 21.652 G | 0.44 |
| VGG16 | 138.36 M | 80.673 G | 0.43 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, S.; Cao, S.; Lu, X.; Peng, J.; Ping, L.; Fan, X.; Teng, F.; Liu, X. Lightweight Deep Learning Model, ConvNeXt-U: An Improved U-Net Network for Extracting Cropland in Complex Landscapes from Gaofen-2 Images. Sensors 2025, 25, 261. https://doi.org/10.3390/s25010261
