Application of Stereo Digital Image Correlation on Facial Expressions Sensing

Abstract

1. Introduction

2. Literature Review

3. Method

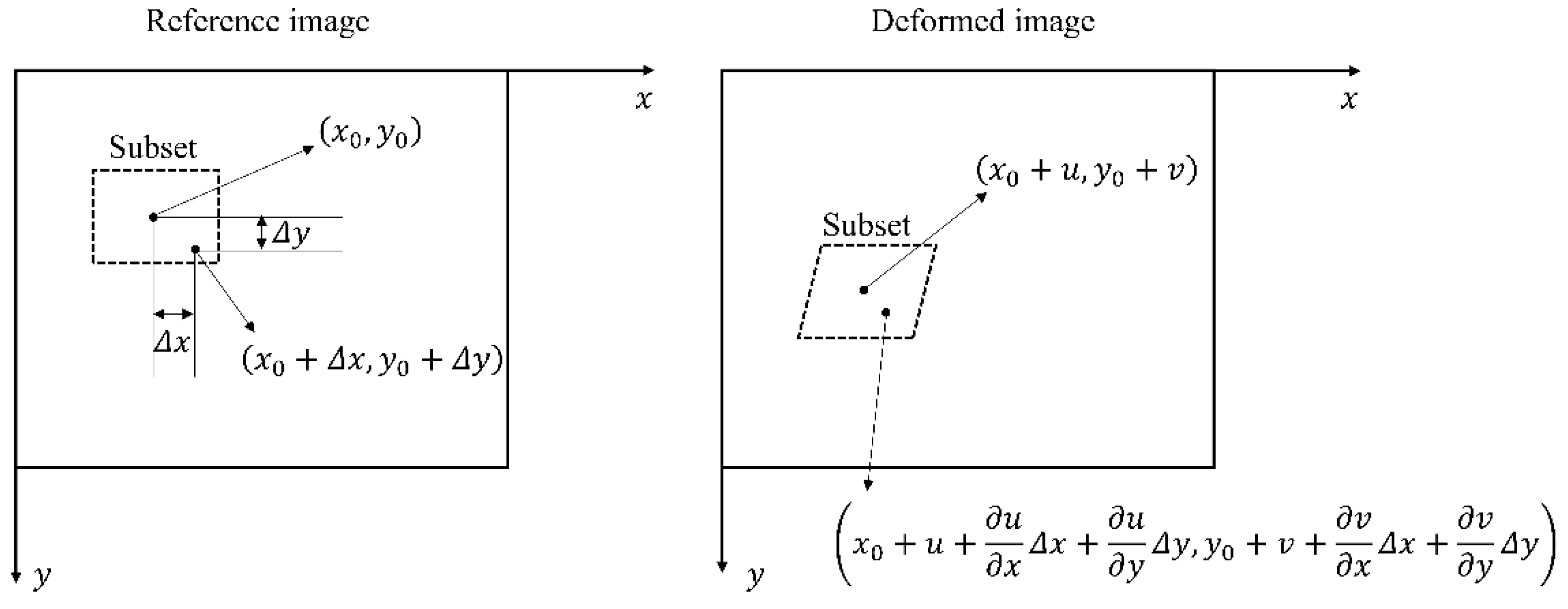

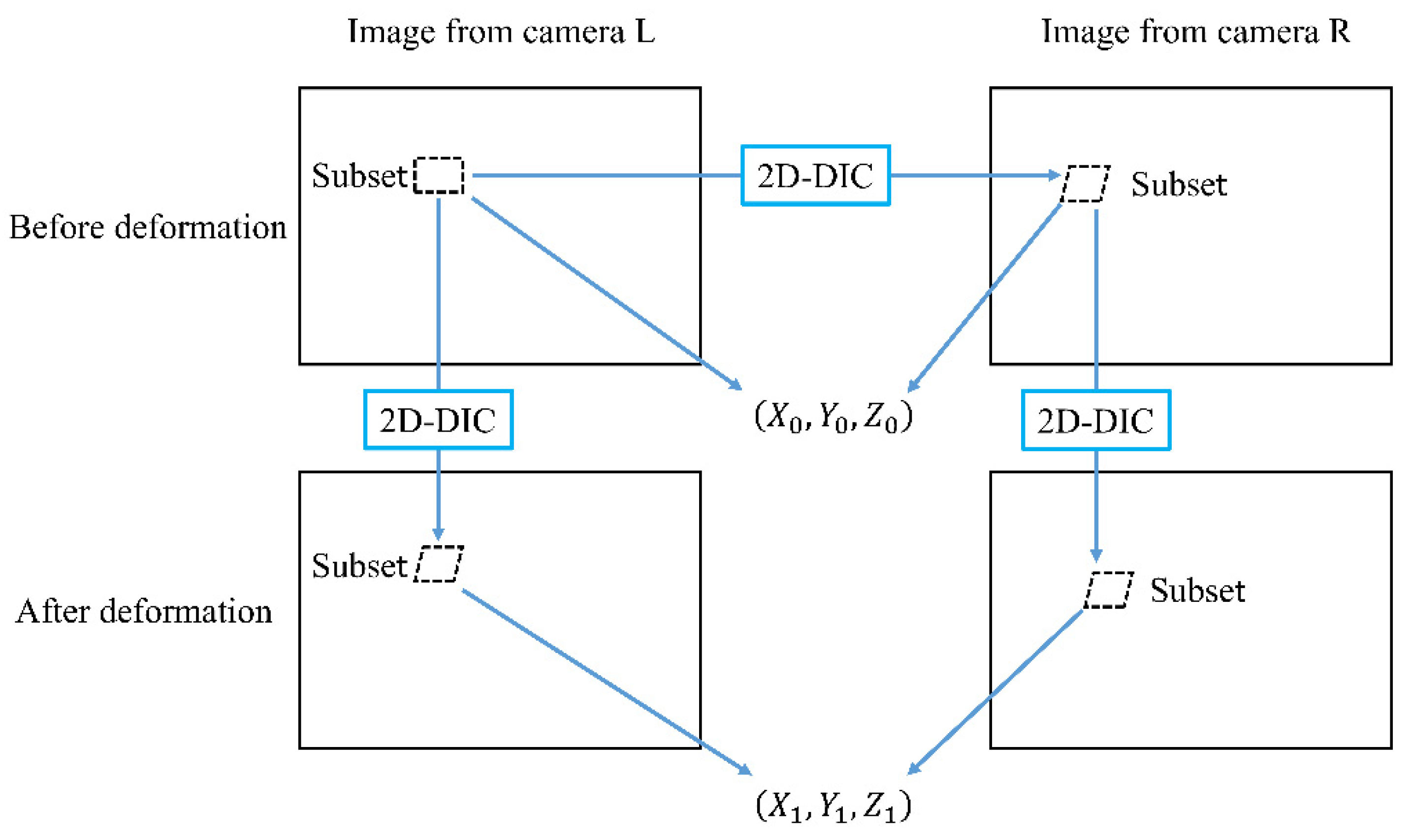

3.1. The Principle of 3D-DIC

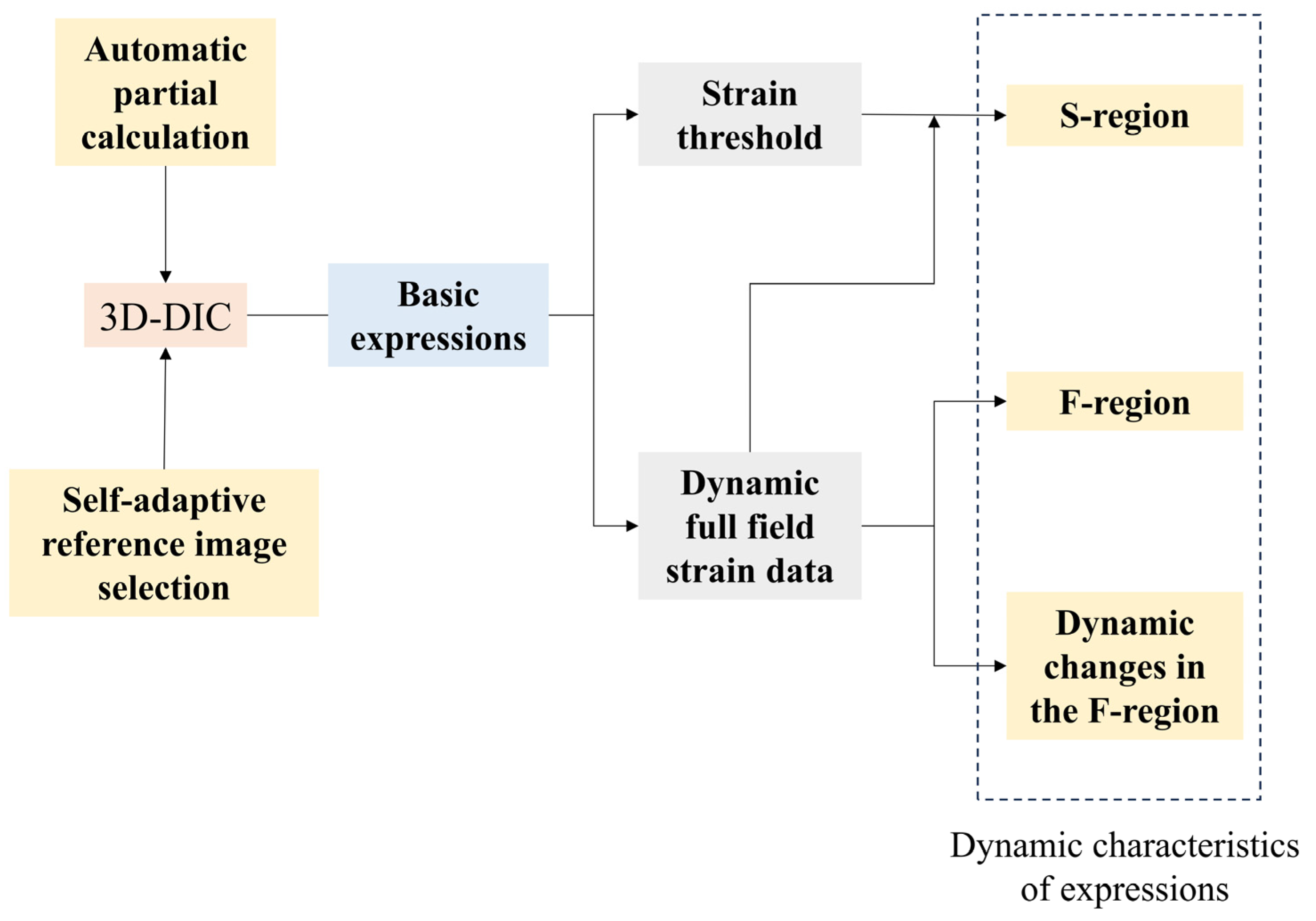

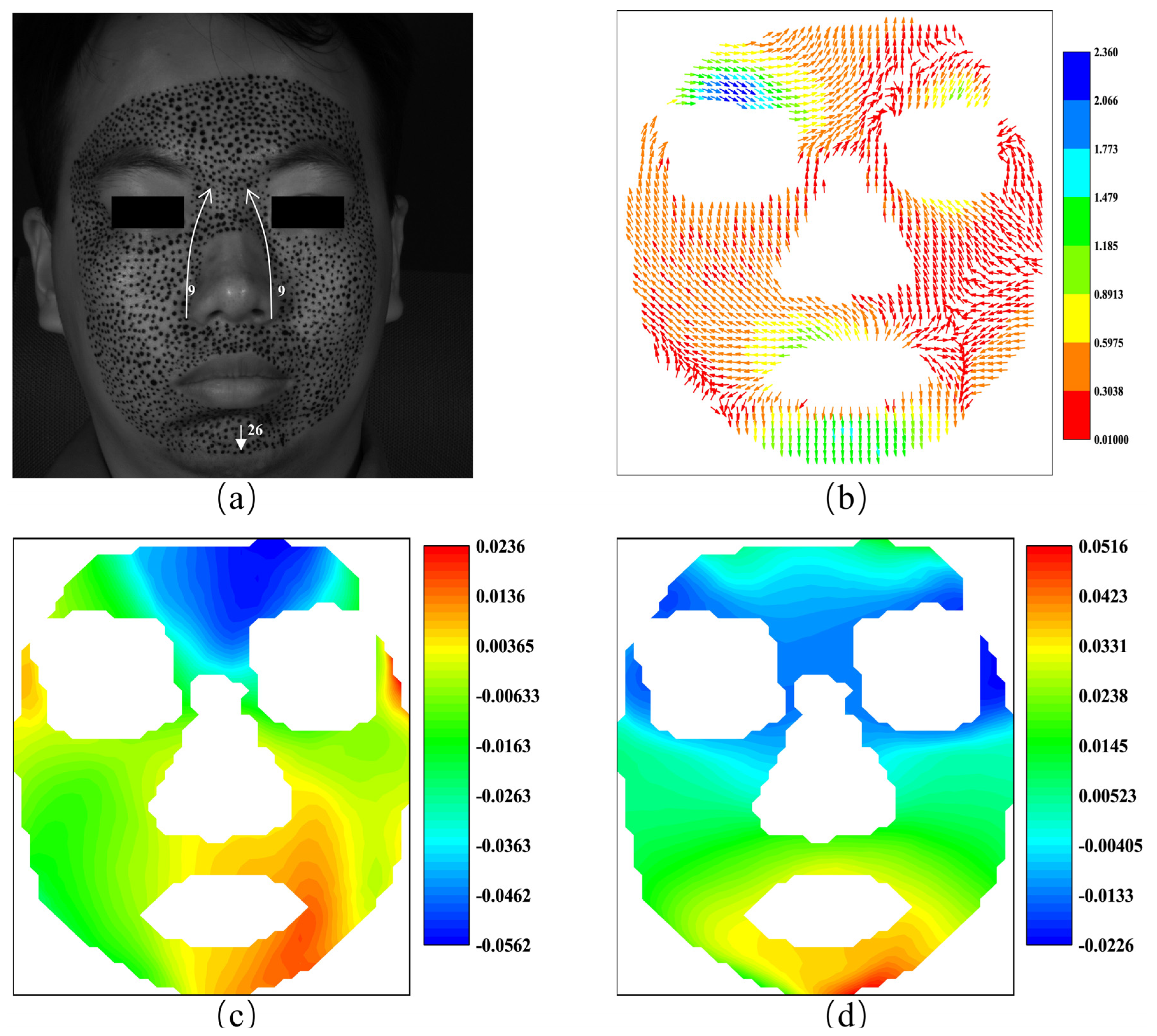

3.2. Customized 3D-DIC for Facial Deformation Analysis

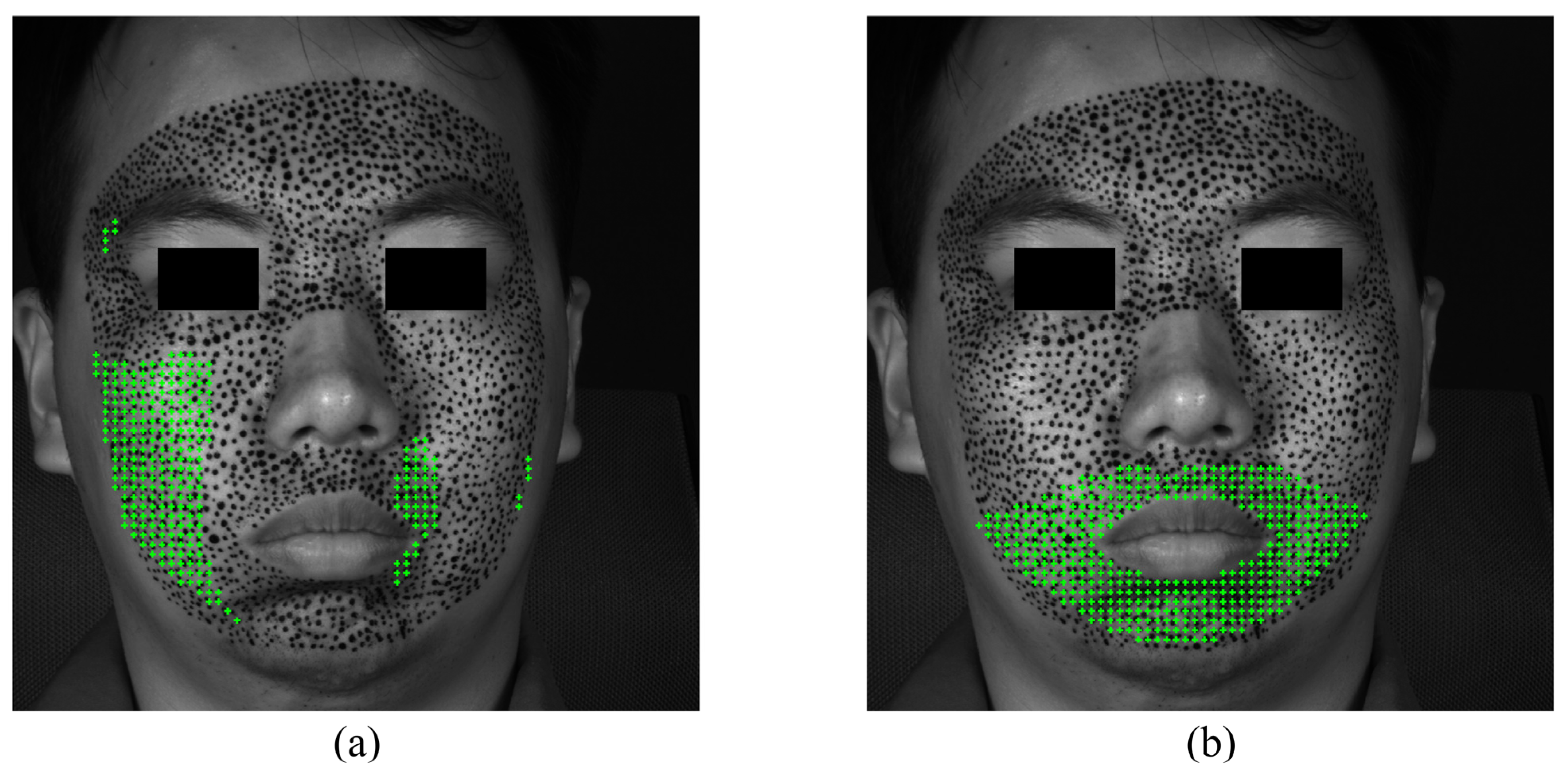

3.3. Dynamic Deformation Analytical Strategy

4. Experiments and Results

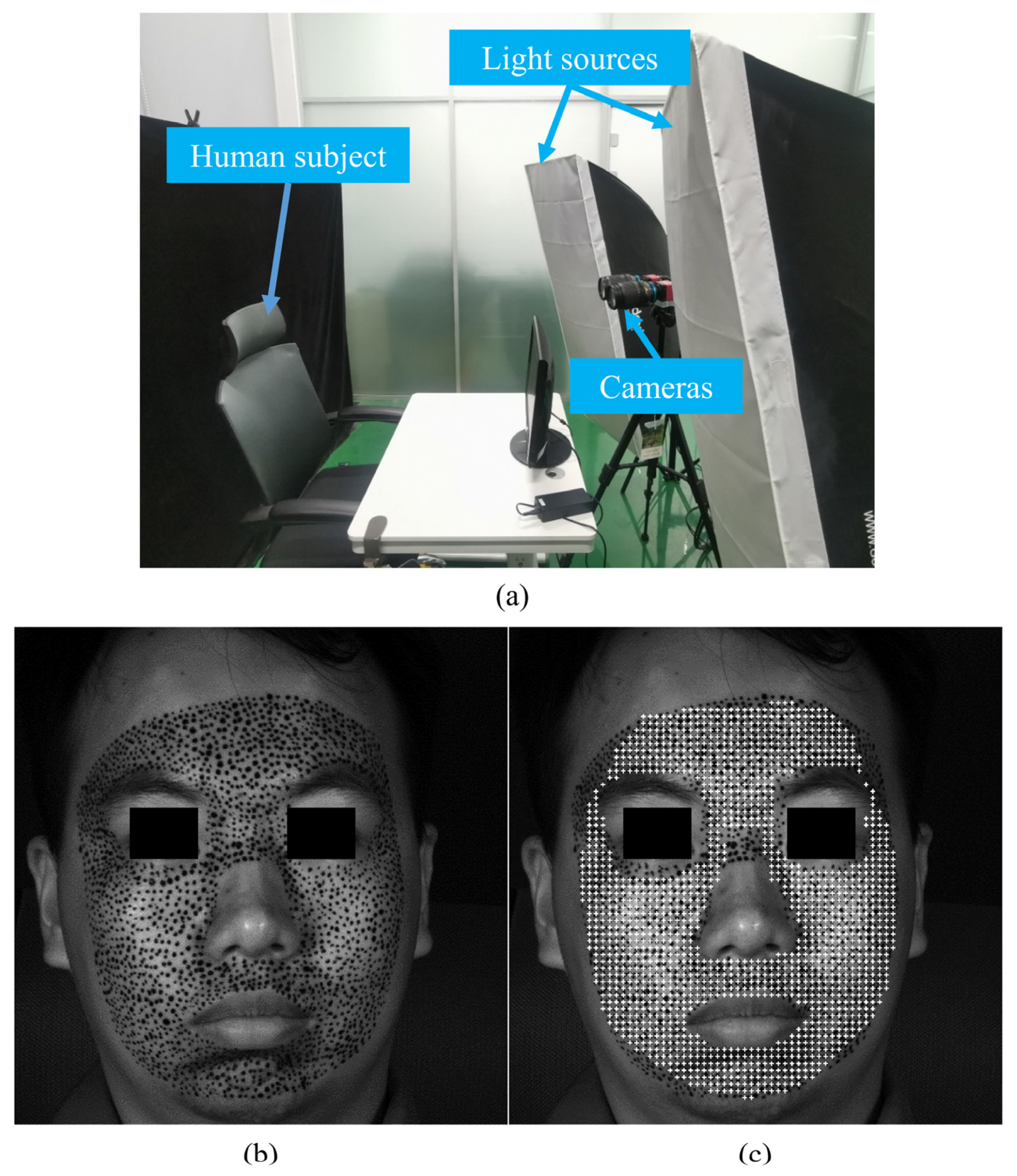

4.1. Experimental Environment and Set-Up

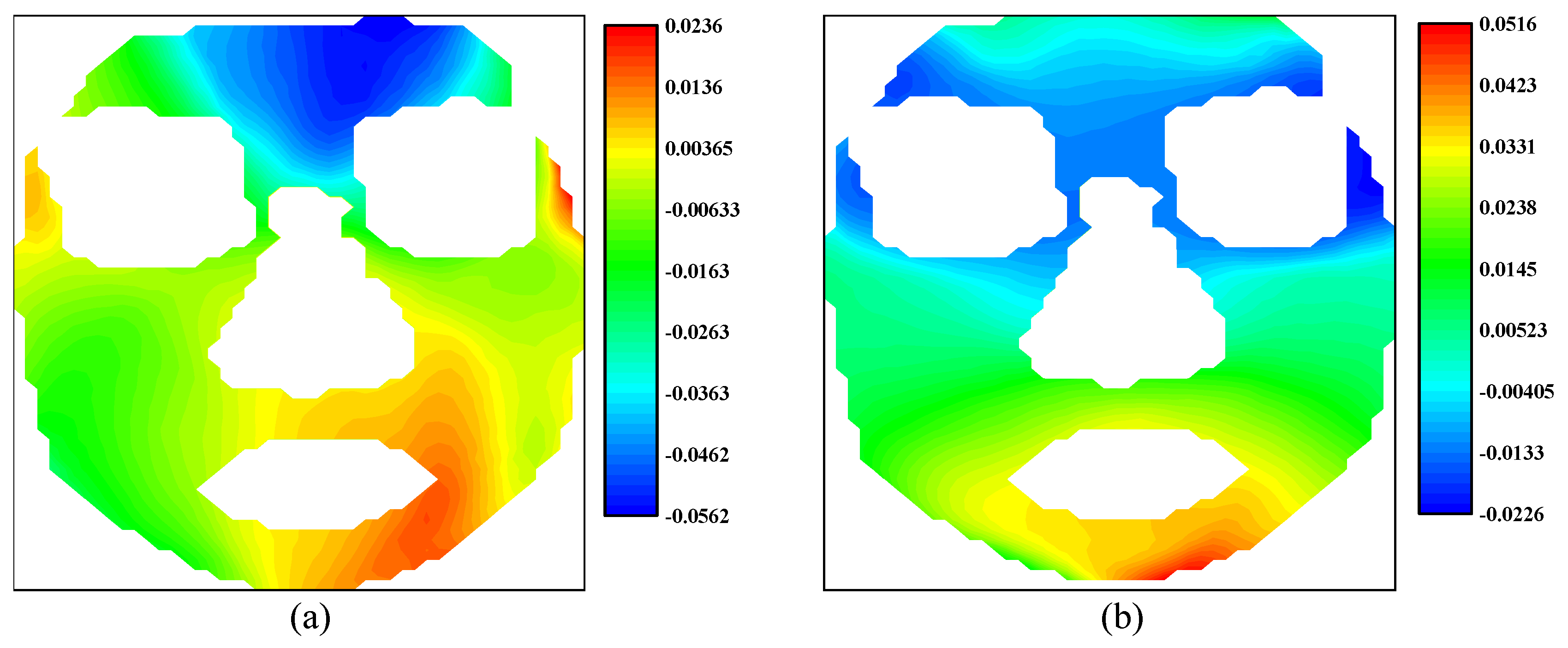

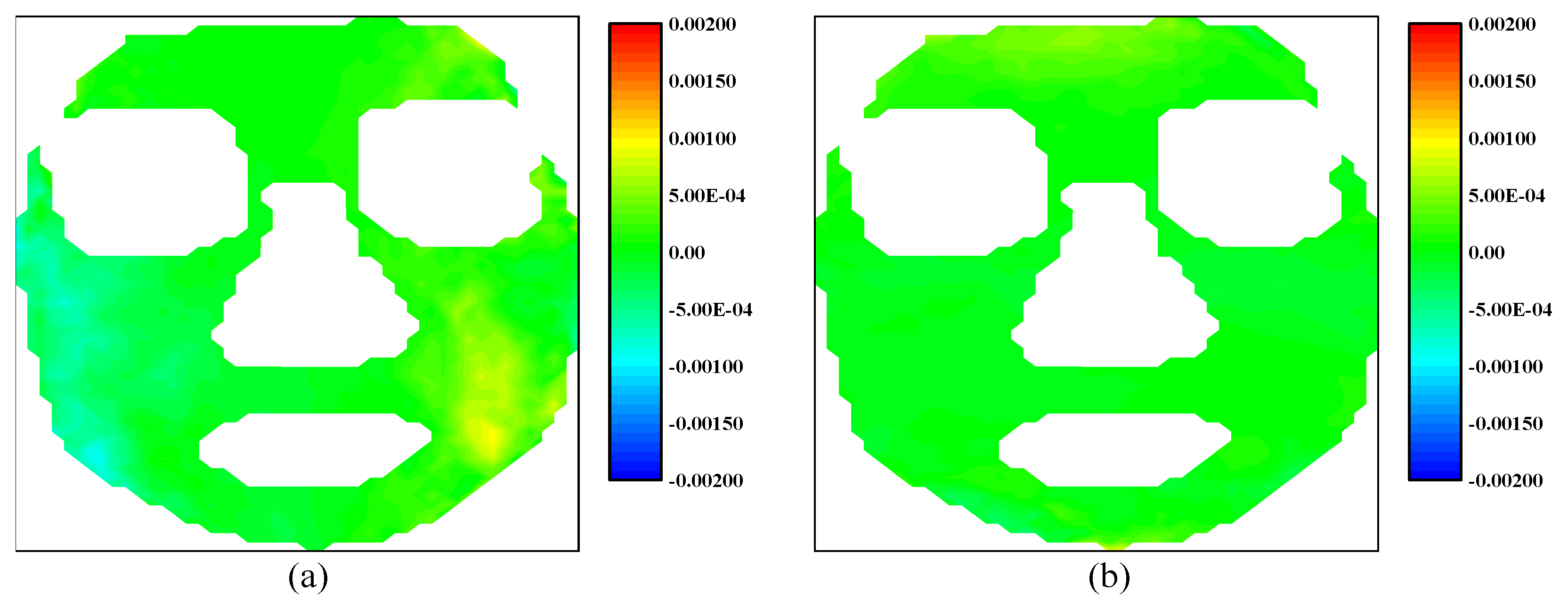

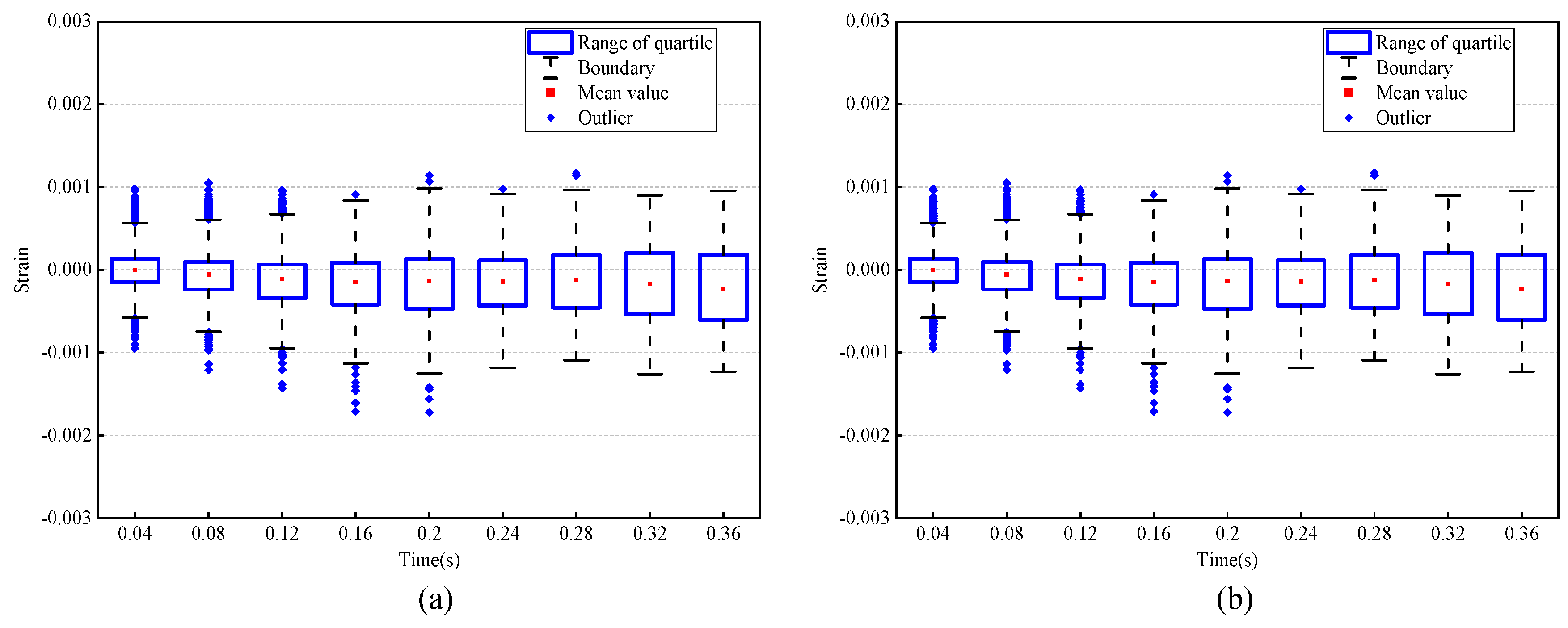

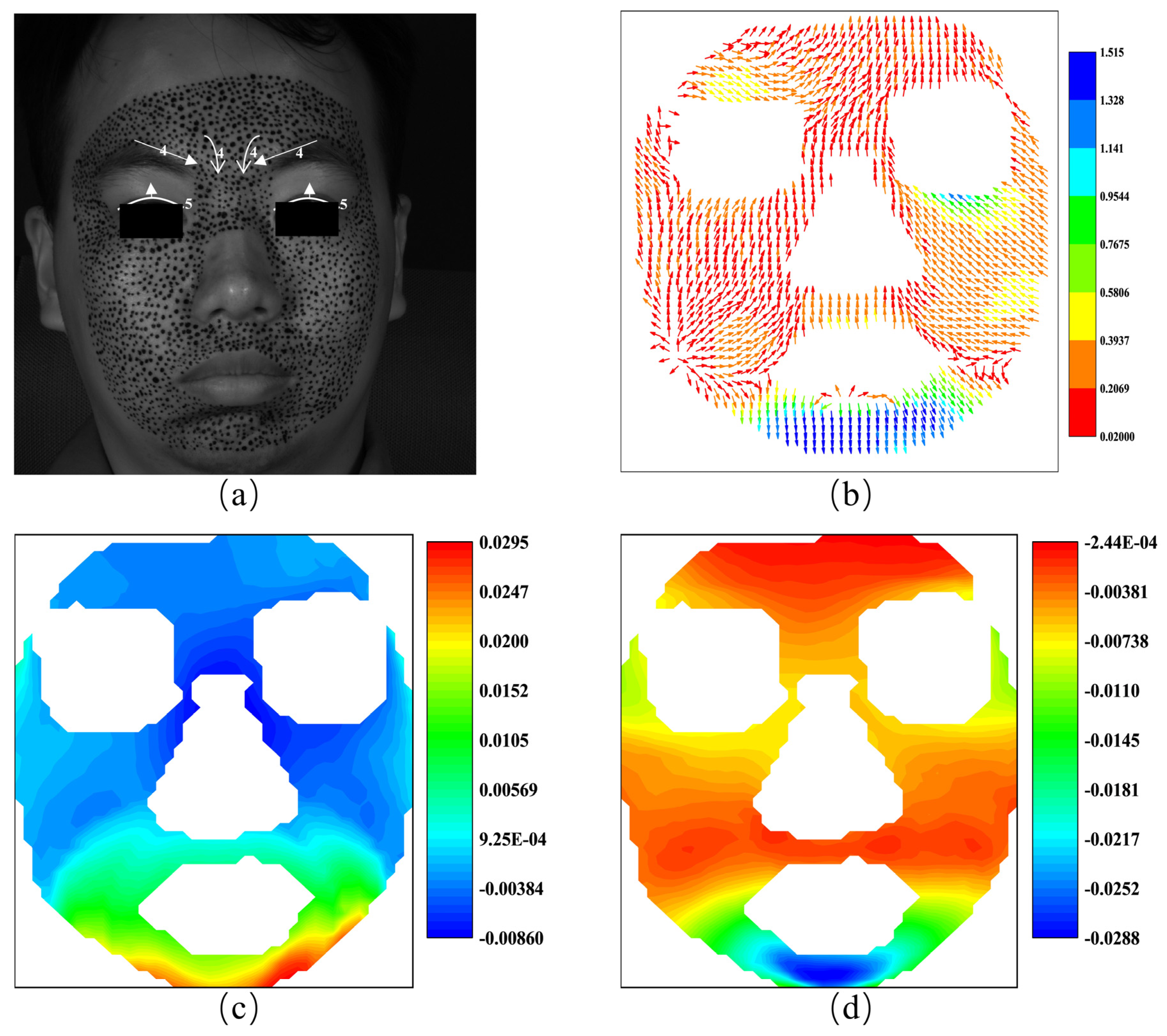

4.2. Experiment on the Neutral State

4.3. The Temporal and Spatial Characteristics of the Six Basic Expressions

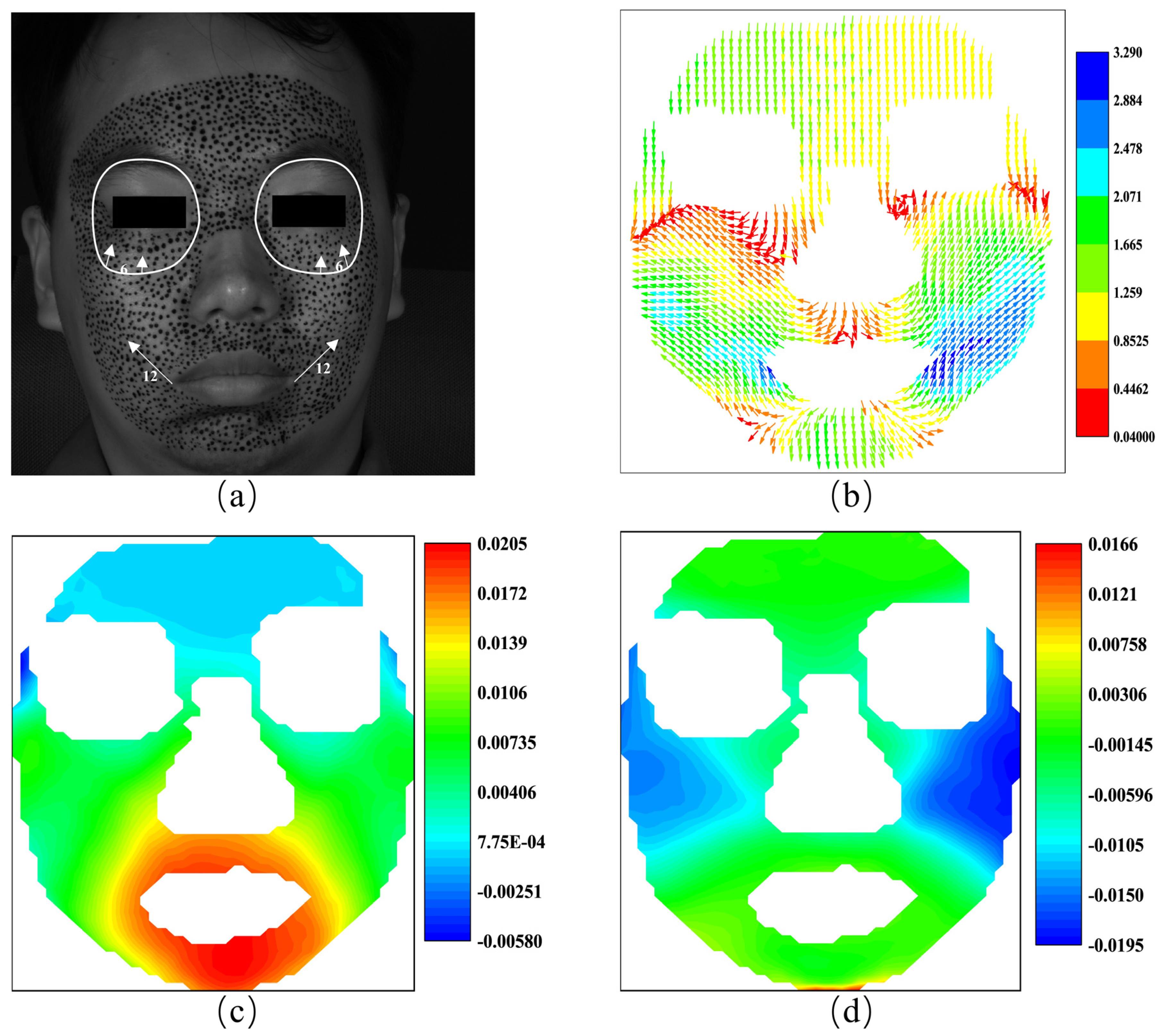

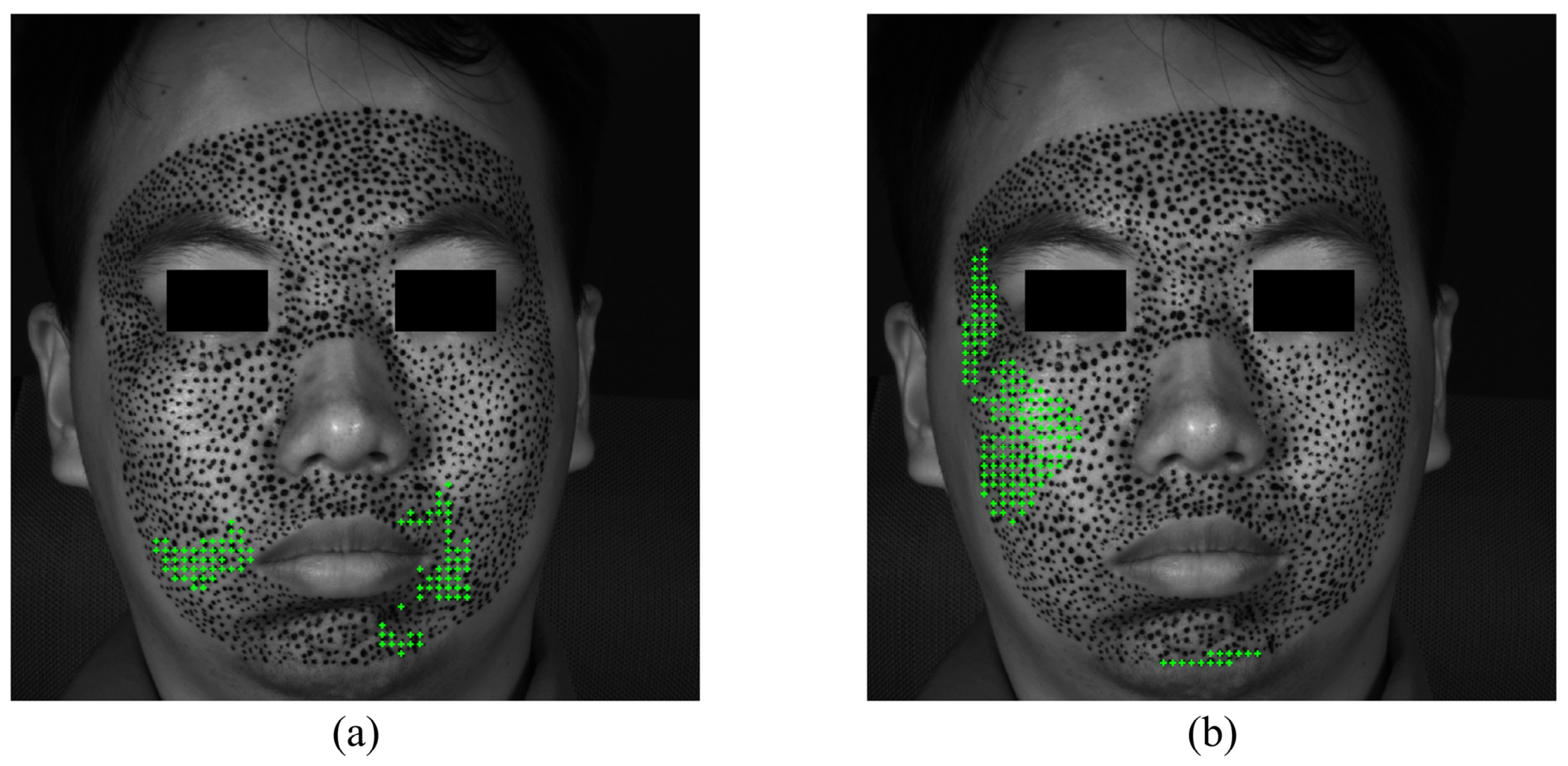

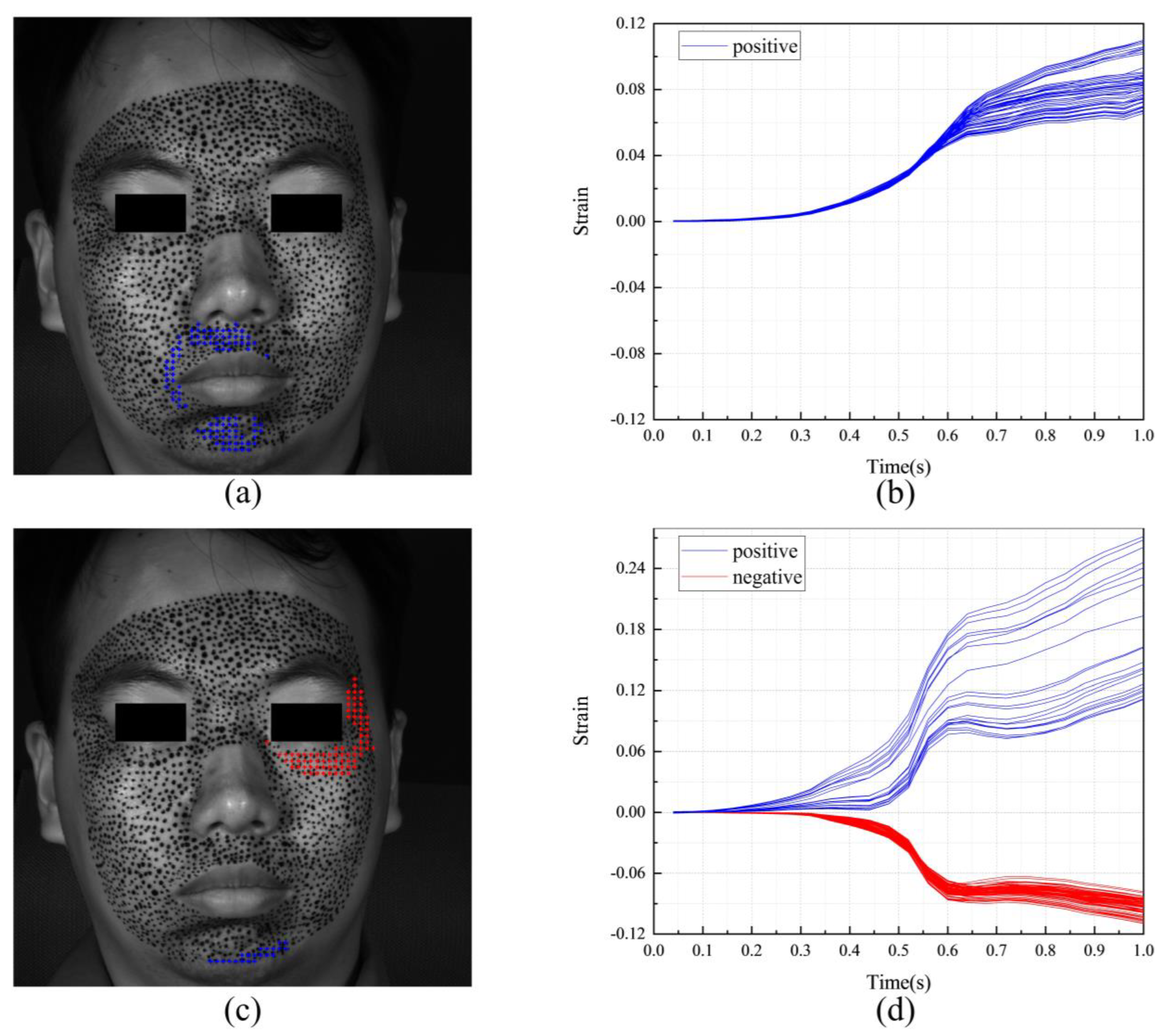

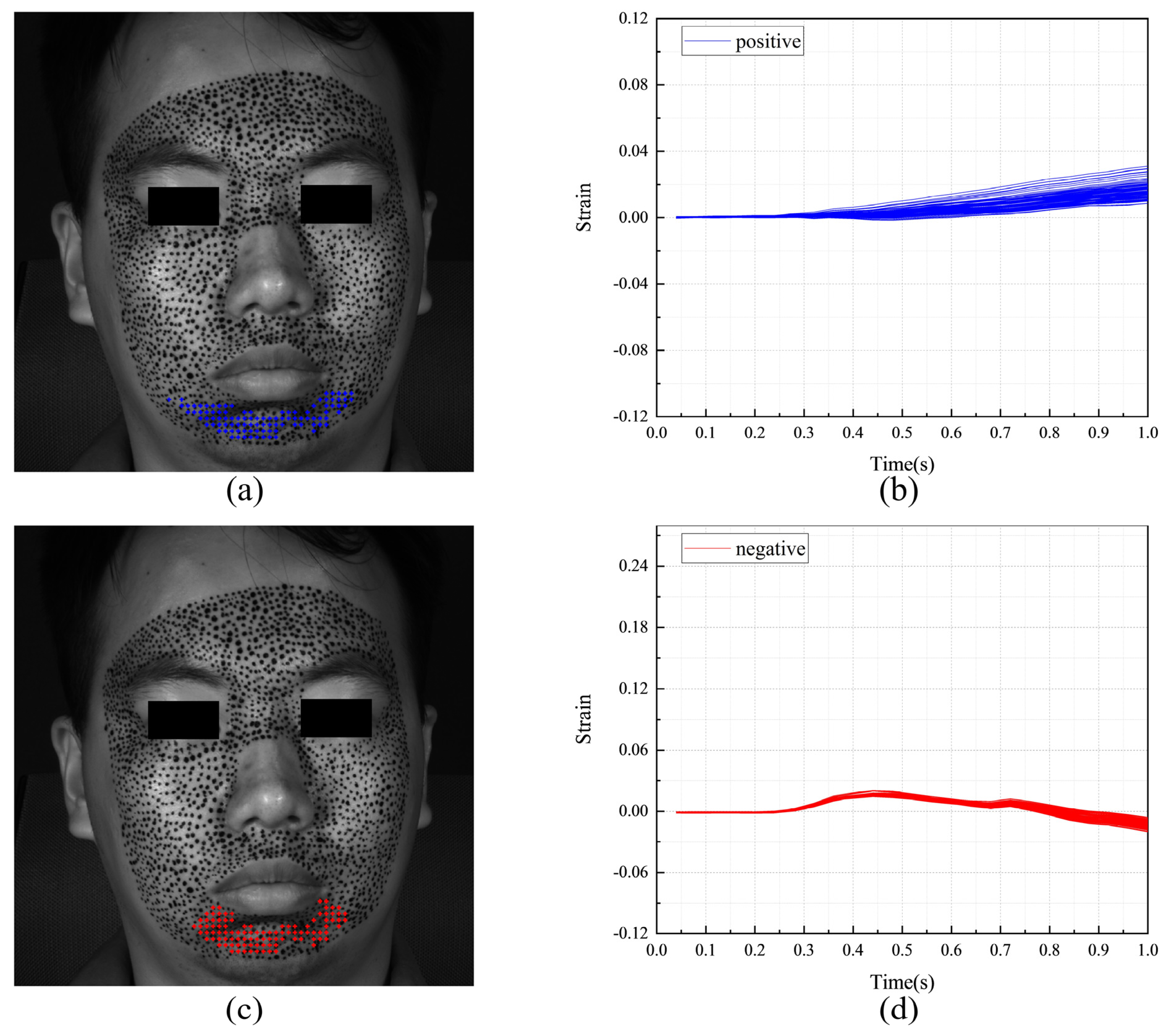

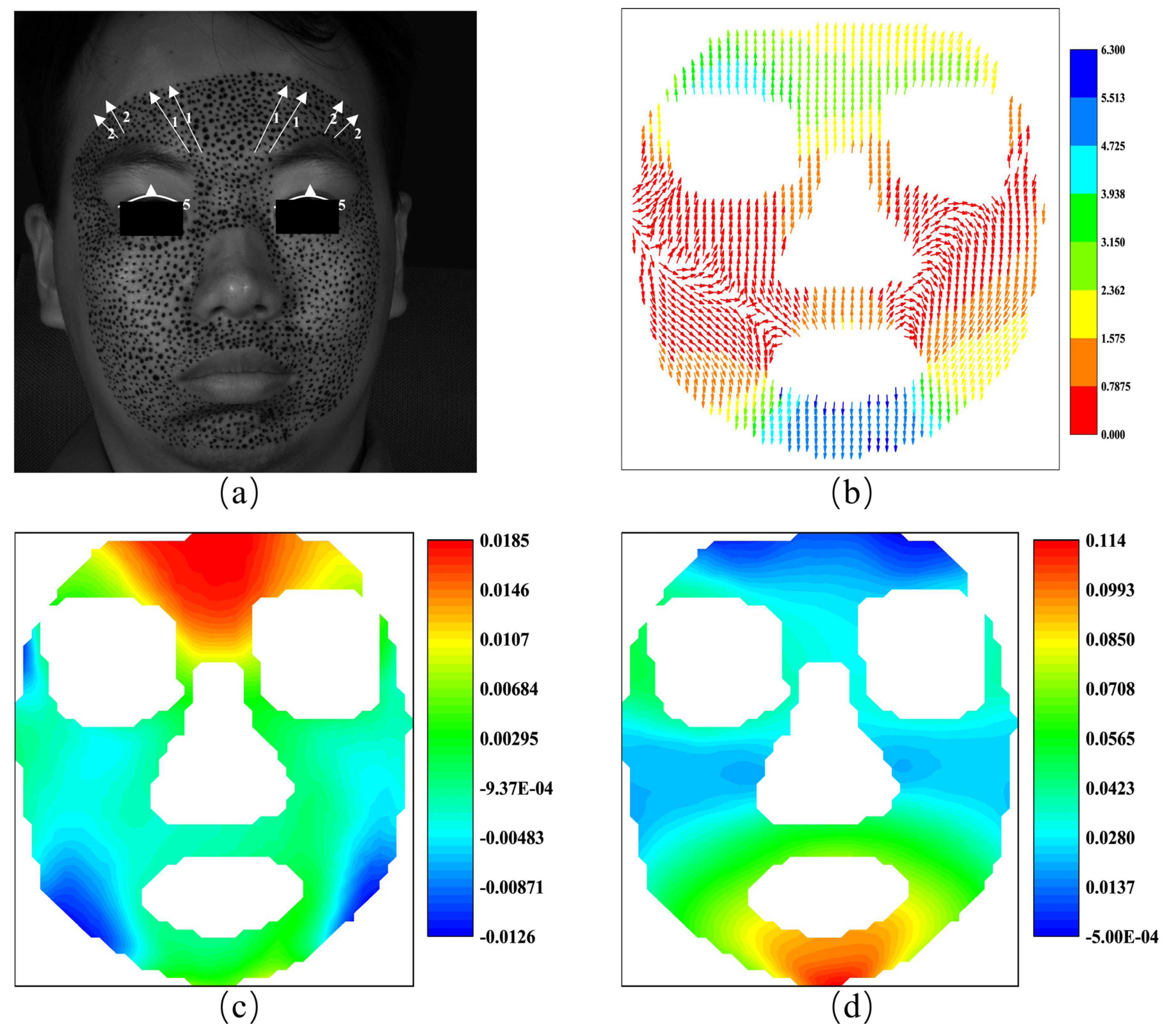

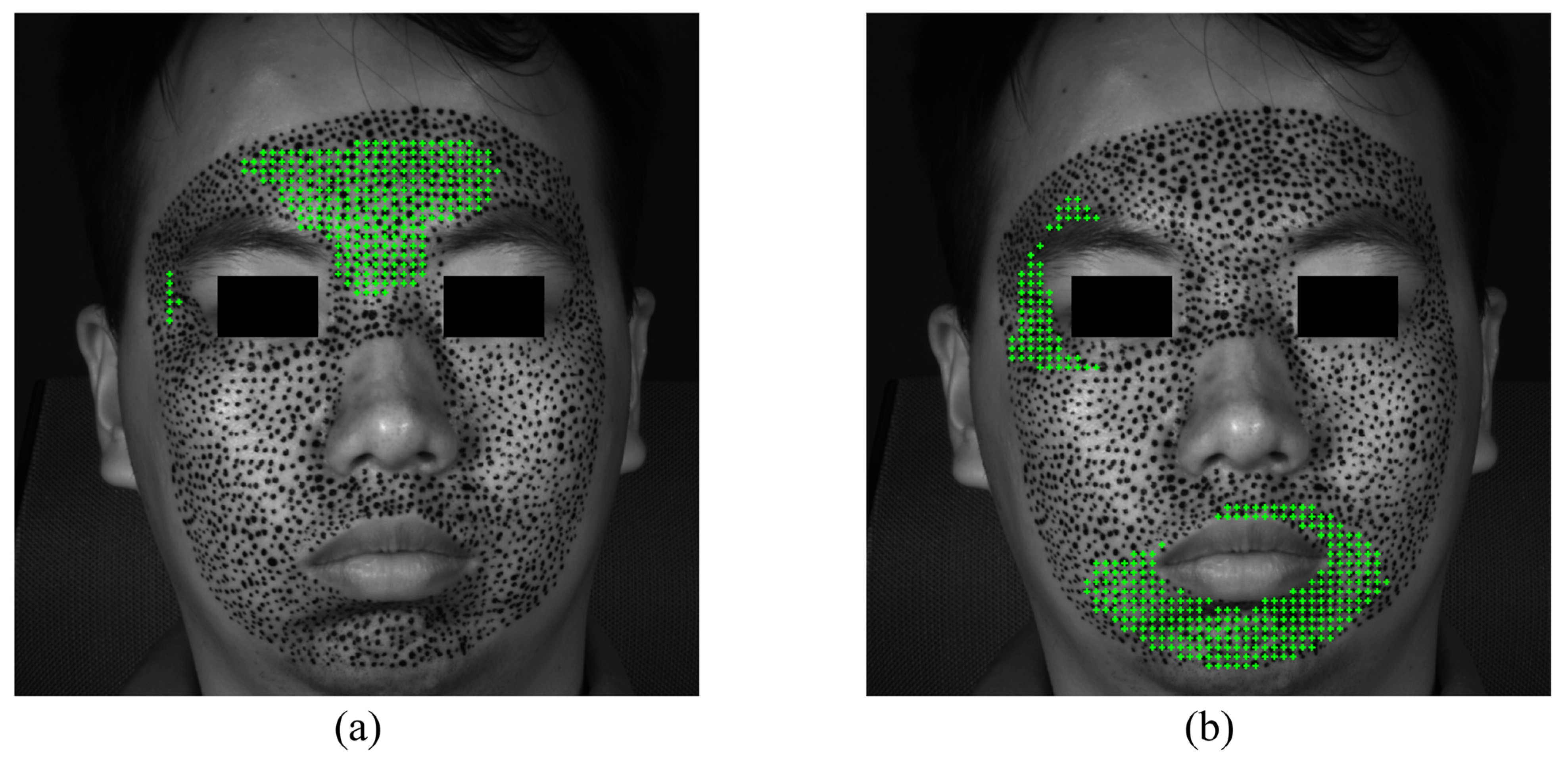

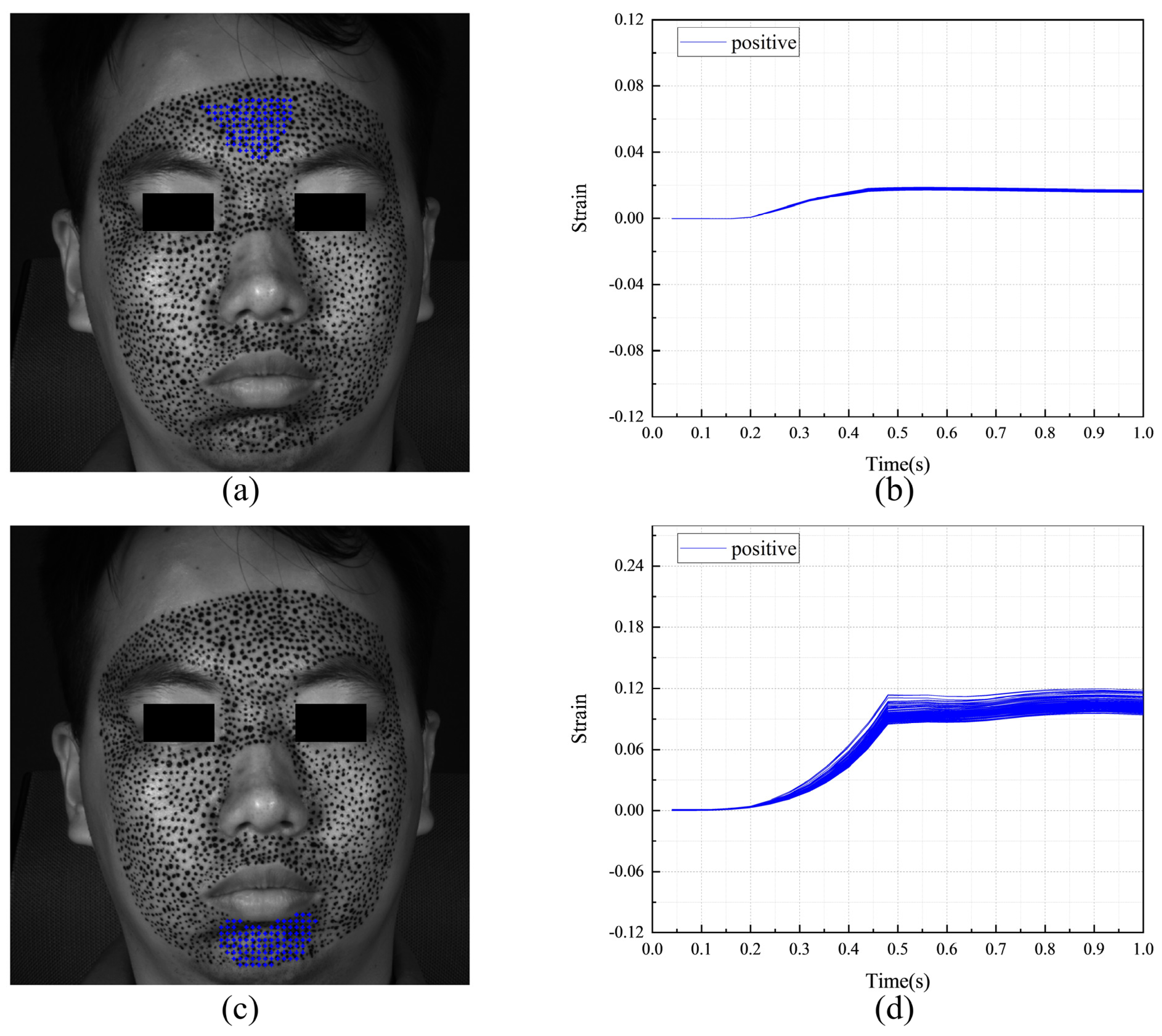

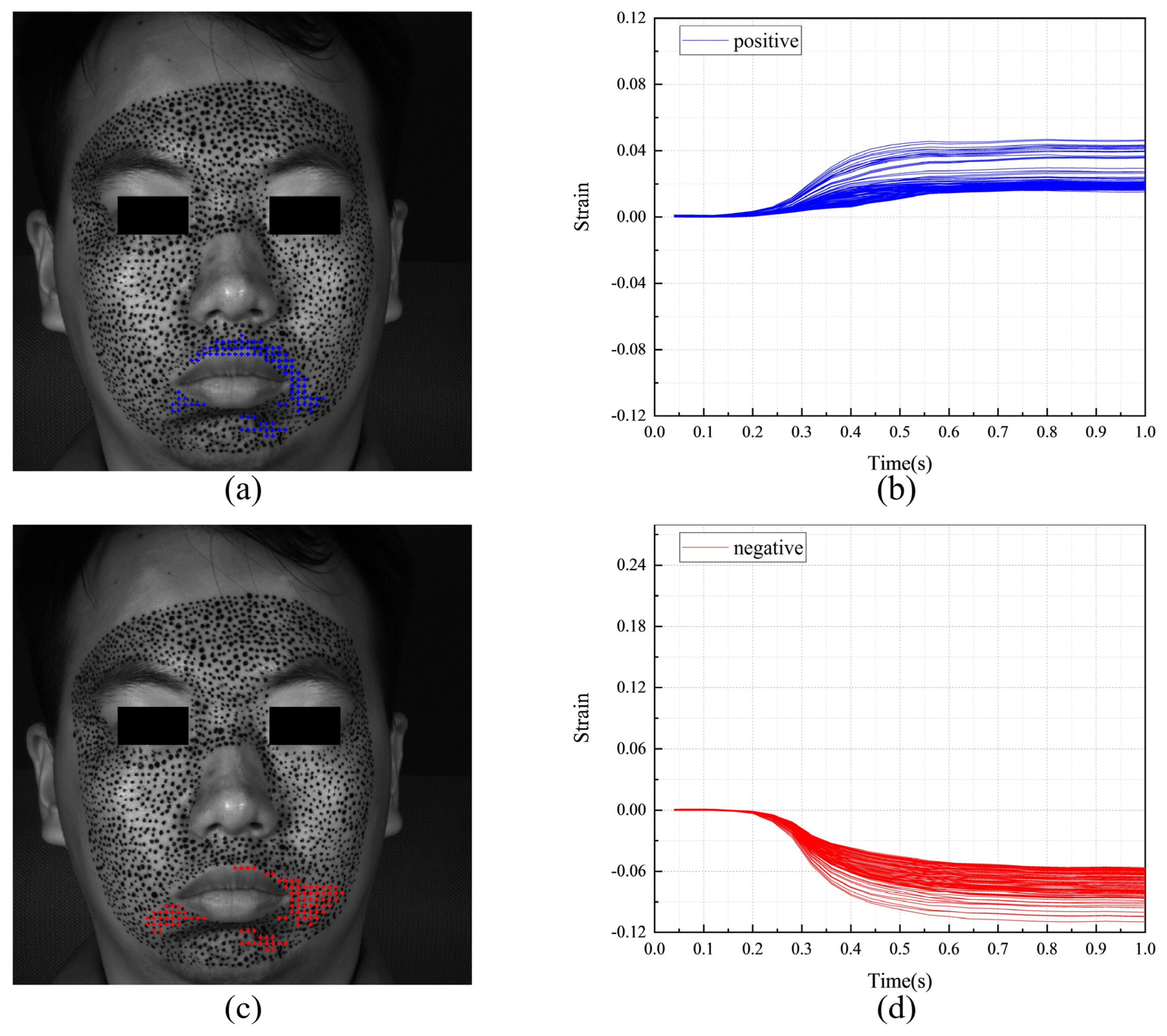

4.3.1. Happiness

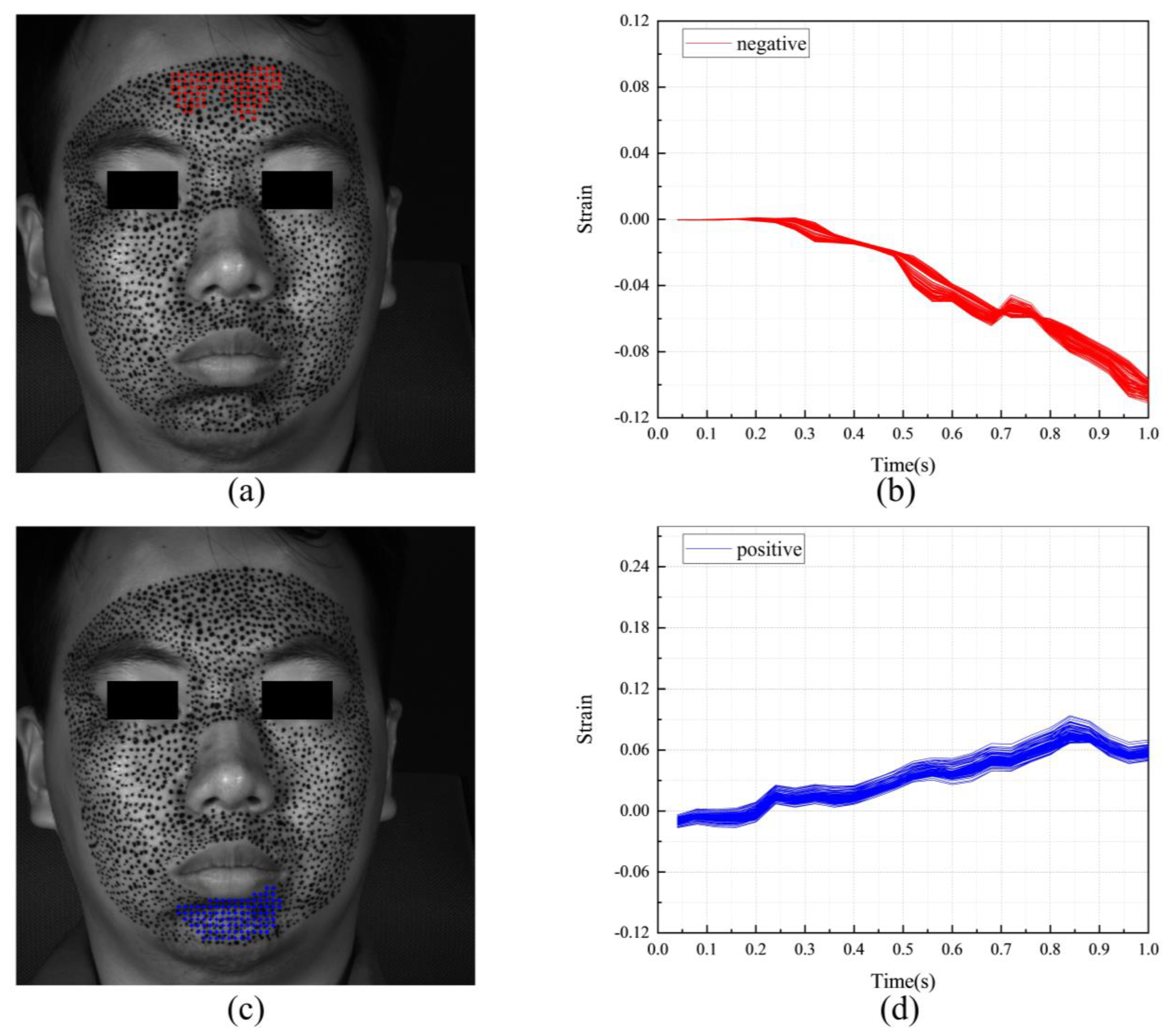

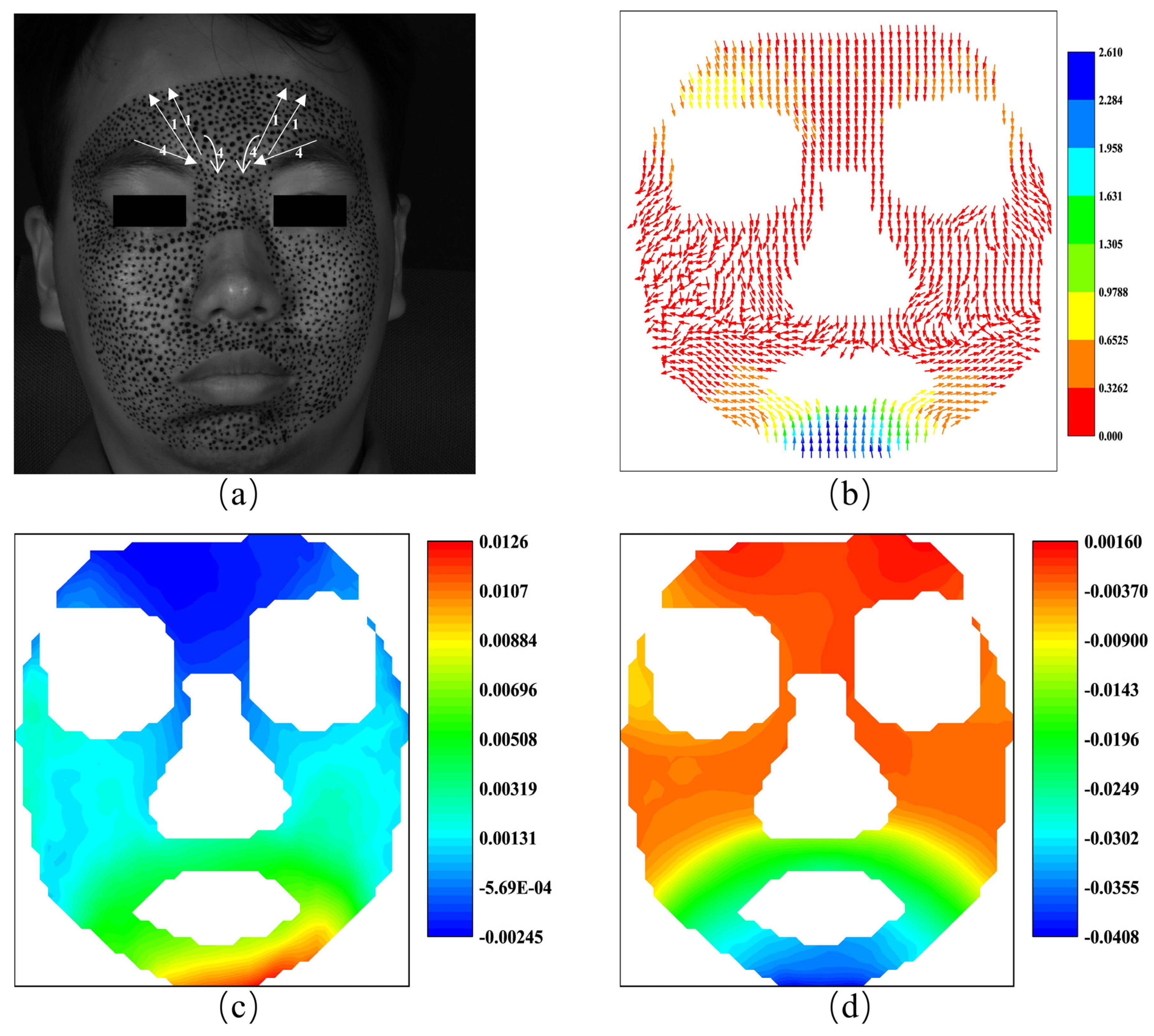

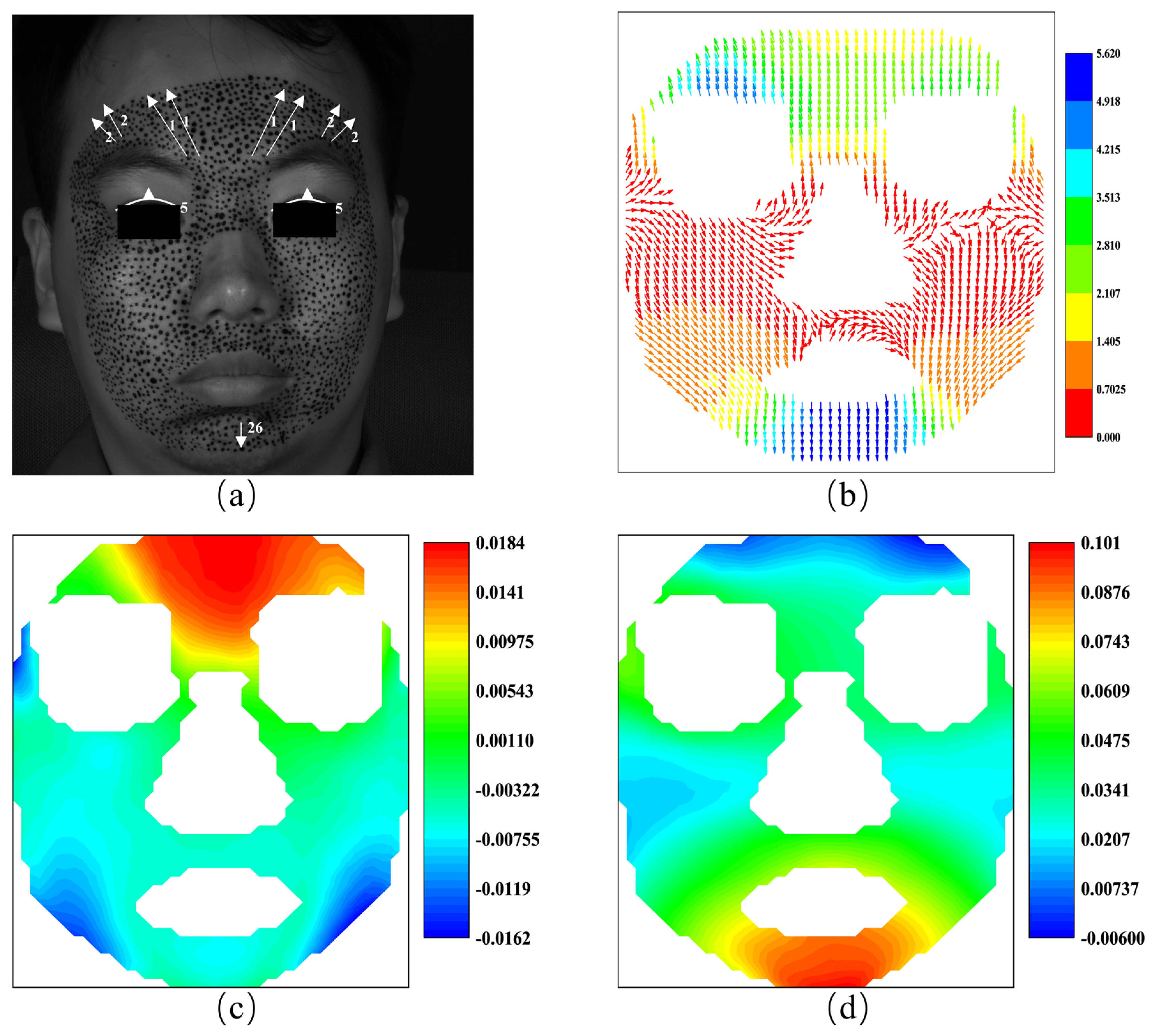

4.3.2. Anger

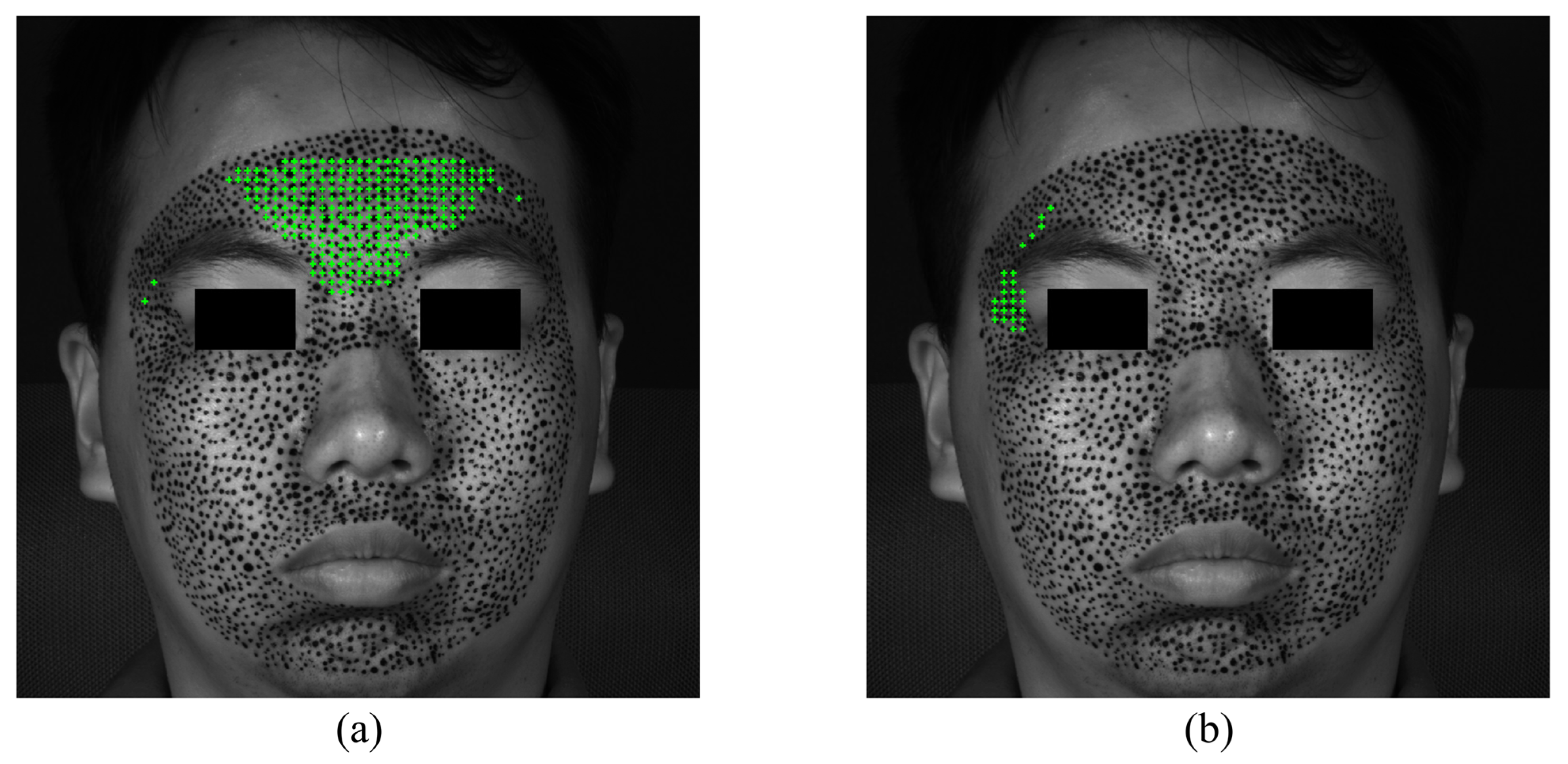

4.3.3. Disgust

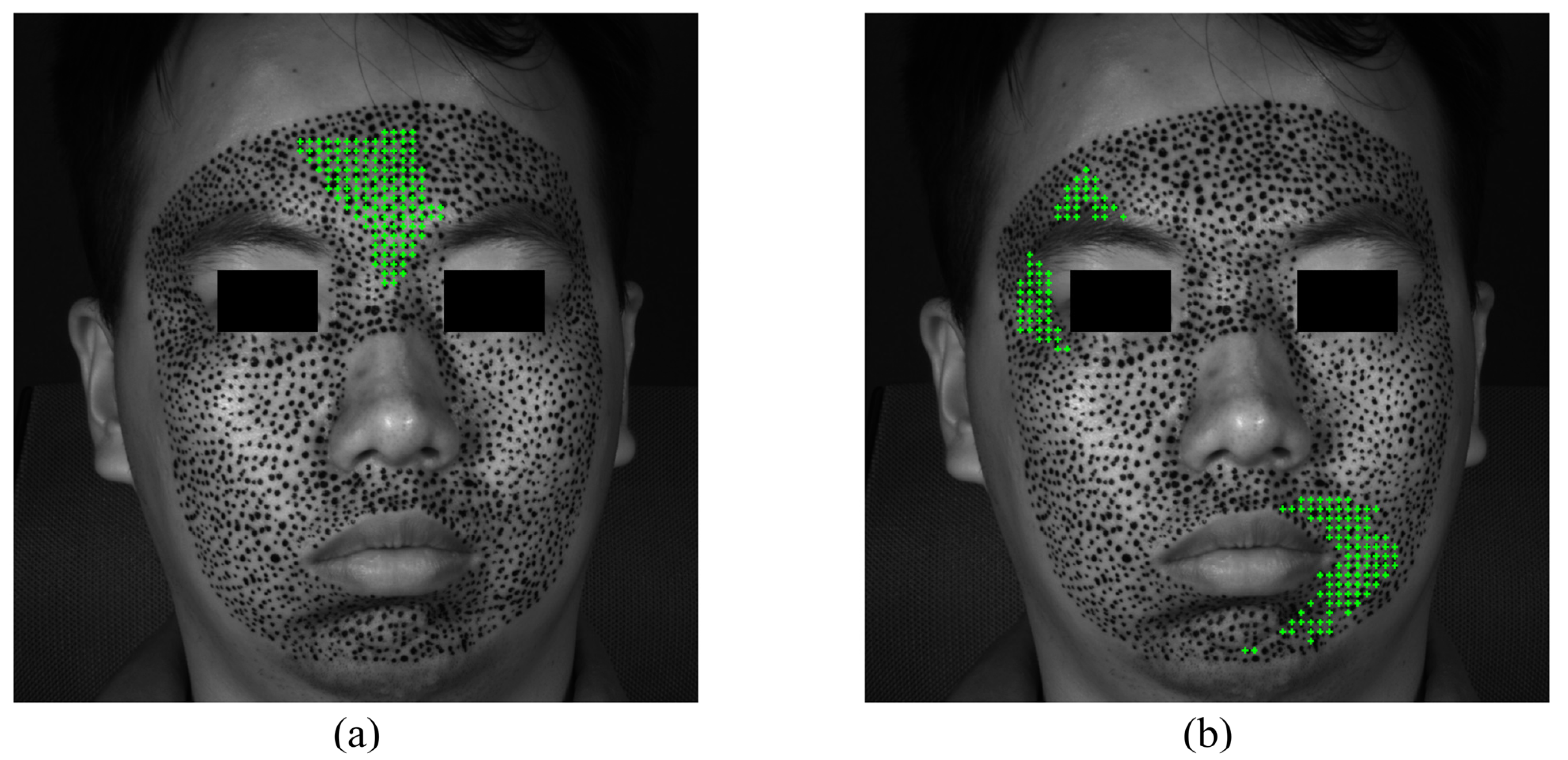

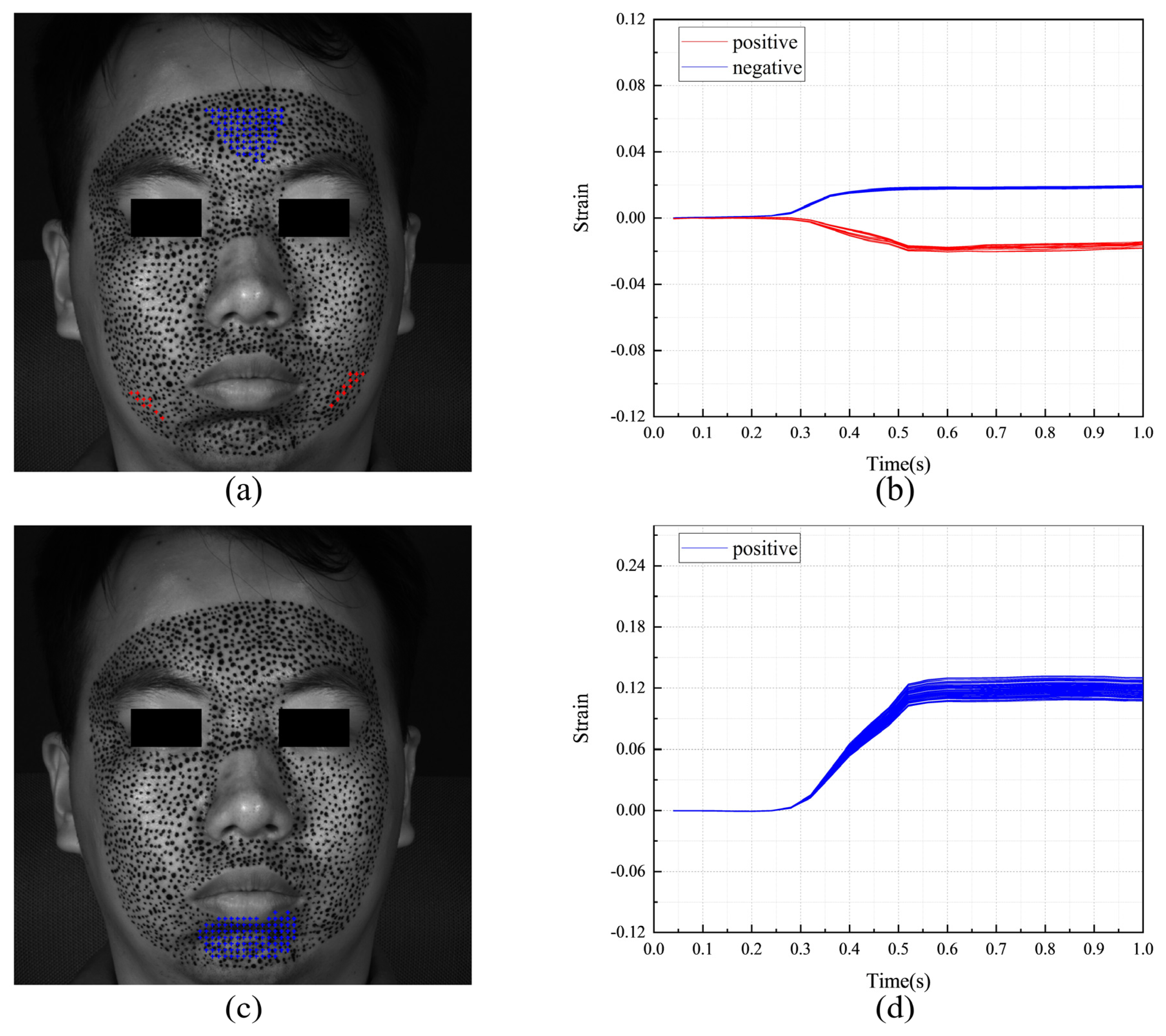

4.3.4. Fear

4.3.5. Sadness

4.3.6. Surprise

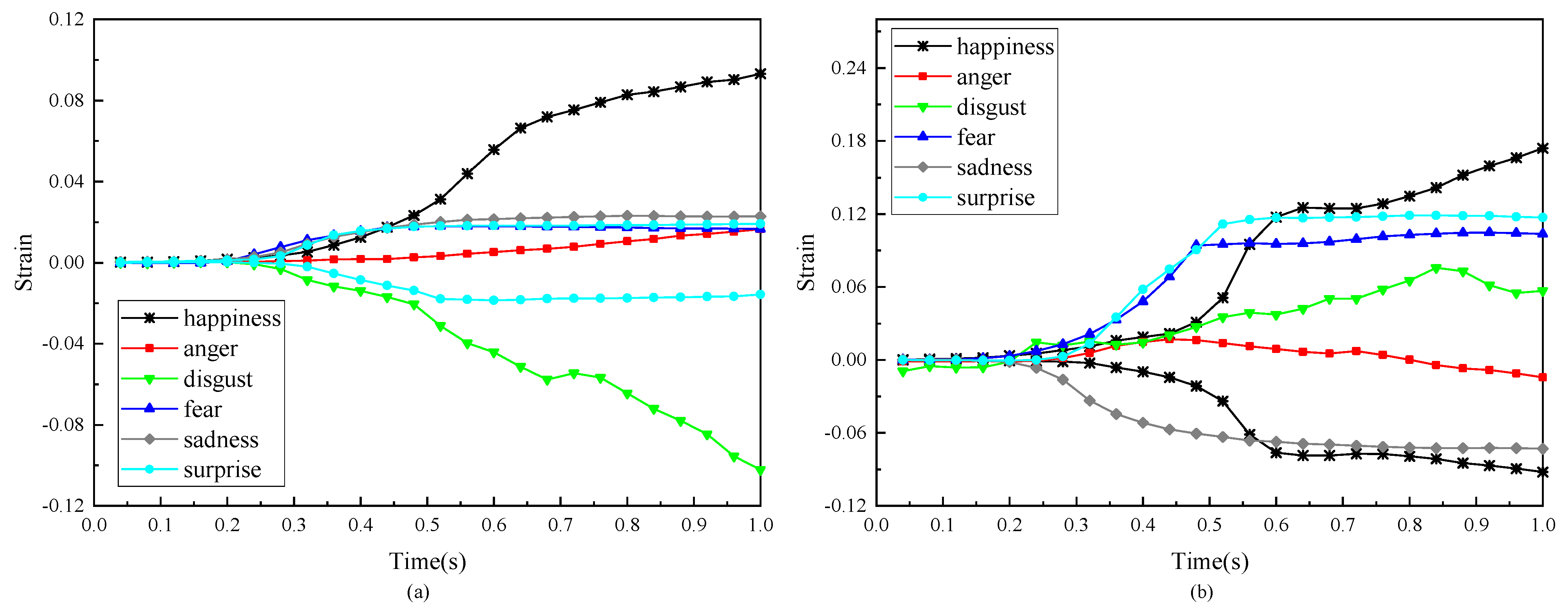

4.3.7. Comparison of the Different Expressions

4.4. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cheng, X.; Wang, S.; Wei, H.; Sun, X.; Xin, L.; Li, L.; Li, C.; Wang, Z. Application of Stereo Digital Image Correlation on Facial Expressions Sensing. Sensors 2024, 24, 2450. https://doi.org/10.3390/s24082450
