Abstract

Computer vision (CV)-based systems using cameras and recognition algorithms offer touchless, cost-effective, precise, and versatile hand tracking. These systems allow unrestricted, fluid, and natural movements without the constraints of wearable devices, gaining popularity in human–system interaction, virtual reality, and medical procedures. However, traditional CV-based systems, relying on stationary cameras, are not compatible with mobile applications and demand substantial computing power. To address these limitations, we propose a portable hand-tracking system utilizing the Leap Motion Controller 2 (LMC) mounted on the head and controlled by a single-board computer (SBC) powered by a compact power bank. The proposed system enhances portability, enabling users to interact freely with their surroundings. We present the system’s design and conduct experimental tests to evaluate its robustness under variable lighting conditions, power consumption, CPU usage, temperature, and frame rate. This portable hand-tracking solution, which has minimal weight and runs independently of external power, proves suitable for mobile applications in daily life.

1. Introduction

Wearable devices (WDs) use sensors installed directly on the hand and fingers to perform hand tracking and gesture recognition, making them haptic and precise. However, WDs are expensive, and their major limitation is their dependence on hand size and shape, which can greatly limit movements and/or require continuous adaptations.

In contrast, by using cameras surrounding the scene and recognition algorithms, computer vision (CV) is very useful for developing touchless hand-tracking and gesture recognition systems [1,2,3] that are low-cost, precise, and have high versatility in recognizing every hand size and silhouette without the necessity of re-implementation or adaptation. The primary benefit of CV-based systems lies in their ability to operate without physical contact, allowing for unrestricted, fluid, and natural movements. Moreover, the hand is not constrained by any wearable device, enabling it to naturally grasp a wide range of daily-use tools.

This is why CV-based systems are gaining popularity in several fields, like human-system interaction, virtual reality environments, and even remote operations for medical procedures and rehabilitation [4].

Usually, CV-based hand tracking uses cameras placed in a fixed position, for example, on a desk, and a computer to run CV-based strategies for tracking. However, this configuration limits daily interactions and makes these technologies incompatible with mobile or ubiquitous applications [5]. Indeed, CV-based technologies usually require considerable computing capabilities, especially for real-time applications that must sustain a minimum frame rate. As a consequence, the tracking systems cannot be transported easily or with minimal hindrance.

In systems featuring stationary cameras, users are also obliged to carry out their tasks within a predetermined field of view, restricting their activities to a specific location. A more effective solution would be to change the position of the hand-tracking sensor to be consistent with the user’s field of view, for example, placing the sensor on the user’s head.

This requirement is even more evident when virtual reality (VR) or augmented reality (AR) headsets are used. These systems are typically composed of a head-mounted display (HMD) and a physical controller or a dedicated device for hand tracking. Enabling controller-free interaction with virtual environments would allow one to grasp real-world objects, making the interaction natural and immersive [6], which is recommended, especially in AR applications.

In the last few years, several manufacturers have integrated sensors (RGB, IR, depth, or a combination of them) directly into their headsets, using CV-based strategies for hand tracking [7].

One of these sensors, already market-available and widely used, is the Leap Motion Controller (LMC) [8,9,10].

The LMC offers precise hand tracking, a high frame rate, and adequate latency [11,12] at an affordable price and compact dimensions [13].

Moreover, the LMC’s software, in its recent release, has implemented a head-mounted option that enables tracking the hand from the back instead of the palm. Therefore, both the hardware and software features make the LMC particularly suitable for grasping applications.

Head-mounted hand tracking is an effective design choice because the user’s hands are free to interact with the world without worrying about the position of the hand-tracking sensor, which consistently captures the user’s field of view [14]. Nevertheless, a limitation remains: the presence of a computer for hand-tracking operations strongly limits the portability of the system.

With the recent advancements in single-board computers (SBC), powerful, compact, and portable computing devices have become available. These devices are capable of managing LMC sensors at sufficient frame rates [15].

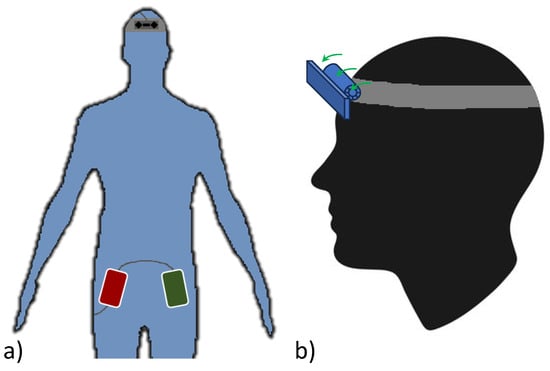

In this work, we present a portable hand-tracking system based on an LMC (version 2, released in 2023) controlled by an SBC powered by a power bank. Figure 1a illustrates an embodiment of the proposed system: the LMC is mounted on the head and connected to the SBC, which is powered by the power bank. Both the SBC and power bank are compact and can be carried in a pocket. A head-mounted support has been adapted to accommodate the LMC, allowing for the adjustment of the orientation of the sensor (as shown in Figure 1b) according to the user’s requirements.

Figure 1.

System embodiment: (a) pocket location of the SBC and power bank with respect to the head-mounted LMC; (b) details of the LMC support that enables changing the vertical orientation of the sensor.

In this embodiment, the proposed system can be adapted for several applications, leaving both hands completely free. Moreover, the system is independent of an external power supply and its weight is minimal, making it suitable for outdoor applications.

The remainder of this paper is organized as follows. Section 2 reports the related works; Section 3 describes the proposed system in detail; Section 4 presents a use case and some experimental tests, assessing the robustness of the proposed device under variable lighting conditions, as well as its power consumption, CPU usage, temperature, and frame rate; and finally, Section 5 presents the conclusions and outlines future work.

2. Related Works

One of the first works to use a head-mounted sensor for 2D hand-gesture recognition was [14], where only a single RGB camera was used to minimize the weight.

In [16], a head-mounted gaze-directed camera was used for 3D hand-tracking of everyday manipulation tasks. However, the system needed a powerful computational machine to achieve tracking at 12 frames per second (fps).

In [17], two RGB head-mounted synchronized cameras were proposed (the two cameras were calibrated offline) for 3D hand-gesture recognition, similar to [16]. The captured videos, with frames sized at 320 × 240, were processed at about 30 fps using a dedicated personal computer. The RGB system was lightweight (65 g), but its bottleneck was the personal computer, which was heavy and bulky.

In 2013, the first version of the LMC was presented for 3D hand tracking. It was a revolutionary device, being very lightweight (only 32 g), compact (80 × 30 × 11.30 mm), fast (between 40 fps and 120 fps, depending on the managing hardware), and precise (about 1 mm) [18,19]. In the beginning, the LMC was designed to be placed and used on a desk and connected to a personal computer, and several works appeared using it in diverse applications [8,20,21,22,23,24].

However, the sensor’s features made it very suitable for head-mounted hand tracking, and in 2014, the software of the LMC was updated to support VR tracking mode, designed to provide hand tracking while the device was mounted on virtual reality headsets [25].

In the following years, several works were presented using the LMC in a head-mounted assembly, also in combination with VR/AR headsets [6,26,27,28,29,30,31], confirming the sensor’s suitability for this type of task.

Nowadays, several VR/AR headsets have built-in hand-tracking capabilities, like Microsoft Hololens 2 [32,33,34] and Meta Quest [35,36].

However, in general, VR/AR headsets either continue to be connected to a personal computer (which limits their usable space) or have expensive built-in CPUs that mostly hinder access to raw data (such as Meta Quest [37]).

Recently, in [38], the authors proposed a system based on an LMC connected to a mini PC powered by a very powerful power bank. The LMC is head-mounted, whereas the mini PC and power bank are placed inside a backpack that the user has to wear. This design allows the user complete mobility but is uncomfortable. As the authors pointed out, the LMC software requires an x86 machine, limiting the possibility of using a lightweight SBC. However, in [15], the authors demonstrated that it is possible to run the LMC software on an SBC equipped with an ARM CPU architecture through virtualization, reaching almost 30 fps. The SBC used was a Raspberry Pi 4 Model B [39] (Raspberry Pi Ltd., Cambridge, UK), which we here abbreviate as RSP.

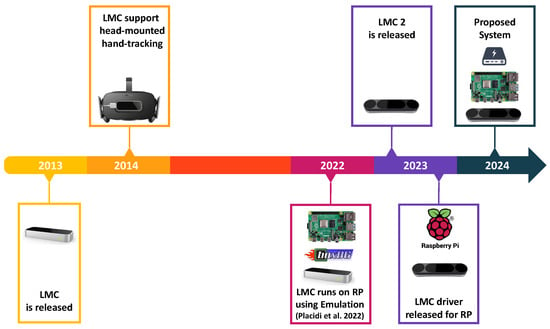

Finally, in December 2023, the LMC manufacturer released a new version that supports the RSP [40,41]. Figure 2 depicts the timeline of the LMC.

Figure 2.

Timeline of the LMC: The sensor was first designed to be driven by a personal computer. In recent embodiments over the last two years, it has started to be driven by an SBC (Placidi et al. 2022 [15]). Further, in its latest release, the ROI was increased and back-hand tracking was enabled, allowing for complete head-mounted embodiments.

The design and development of a portable forearm-tracking system requires satisfying several critical requirements: reduced costs and energy consumption, increased battery life, decreased temperature, reduced CPU usage, and maximized frame rate, among others. Without meeting these requirements, a forearm-tracking system would be incomplete. The system we propose herein satisfies the requirements for complete forearm tracking with an extensible ROI. Moreover, we have also kept the cost of the system low without compromising precision.

3. System Design

The LMC is a compact device designed for precise hand and finger tracking in three-dimensional space. Measuring just 84 mm × 20 mm × 12 mm and weighing only 29 g, it offers portability and ease of use. With low power consumption requirements of 5 volts and 500 milliamps, it efficiently integrates into various setups without compromising performance [42]. The controller uses a combination of infrared sensors and cameras to track the movements of hands and fingers with high accuracy [18]. The drivers for the LMC are compatible with a wide range of desktop CPUs, operating systems, and VR/AR headsets. Recently, they have also been made available for the RSP, resulting in minimized energy consumption and optimized performance for the LMC itself.

The RSP used is equipped with a Broadcom BCM2711 CPU (Broadcom Inc., North San Jose, CA, USA), Quad-core Cortex-A72 (ARM v8) 64-bit SoC at 1.8 GHz, and 4 GB of RAM, with the RSP enclosed in its case. This is a powerful and low-cost SBC with small dimensions and low weight (88 mm × 58 mm × 19.5 mm and 46 g) and low energy consumption (max 15 W). As a result, the RSP stands out among the SBCs that best meet the requirements of the proposed system.

Given that the LMC now supports the RSP, the natural synergy between them makes their combination an obvious choice. Together, they provide precise hand-tracking capabilities, enhancing the overall functionality and usability of the system. Moreover, the proposed system is conveniently powered by an external portable power supply, ensuring flexibility and ease of use in various settings.

In fact, through a USB-C port, the RSP is connected to a power bank (with dimensions of 105 mm × 68 mm × 20 mm, weight of 190 g, and capacity of 10,000 mAh) capable of providing a maximum power of 20 W. The RSP is connected to the LMC through a USB 3.0 port.

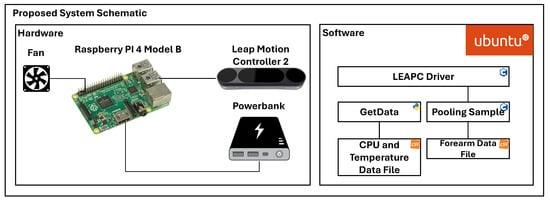

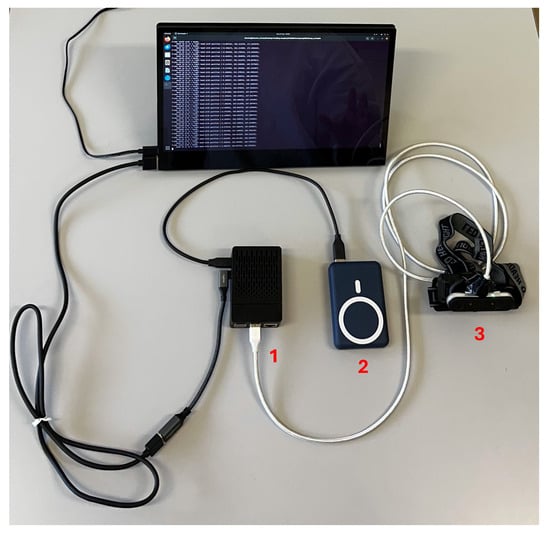

The design of the proposed system is shown in Figure 3. The RSP is placed into a protective case (not shown in Figure 3 but indicated as 1 in Figure 4) that prevents it from being exposed to dust, humidity, and impacts that could damage the board. The RSP is equipped with passive heat dissipation (heatsinks) and a fan for active dissipation to avoid overheating due to the high-intensity operations required for tracking, both hosted in the protective case.

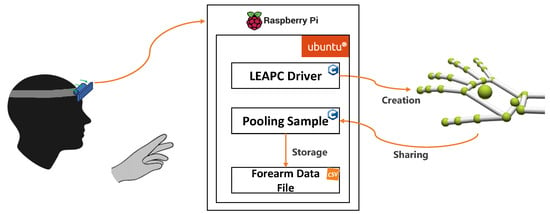

Figure 3.

System architecture.

Figure 4.

System embodiment: (1) the RSP enclosed in its case, (2) the power bank, (3) the LMC inside the head-mounted support. The presence of the monitor, not used in the final embodiment, is necessary during system calibration to set the values of the parameters.

The RSP runs on the Ubuntu 20.04 operating system optimized for ARM architectures. The official LMC software for the RSP was installed, and the LeapC API was used for the development of the scripts for data acquisition.

The “Pooling Sample” script, provided with the LMC software, was modified to enable data saving. The initial modification was to set the acquisition mode to head-mounted, running a specific function defined in the LeapC API. After the conclusion of this phase, data frame streaming was initialized. For each frame, data regarding the frame ID, the frame rate, the hand IDs, and all the coordinates of the joints of each hand are extracted and saved in a CSV file. Figure 3 also reports the “GetData” script, developed in Python 3.9, which, although not part of the final system embodiment, is used to collect data on CPU usage and temperature behavior.

A detailed illustration of how the proposed system operates, including the workflows and the interactions between the hardware and software components, is shown in Figure 5.

Figure 5.

Workflow and interactions between the hardware and software components of the proposed system.

The LMC captures hand movements within a three-dimensional coordinate system that is centered around the device itself. The LeapC driver is hosted on the RSP and acquires the raw data from the LMC. From the raw data, the LMC software creates a numerical model of the hand represented as a stick model, reflecting the real anatomy of the human hand, as shown in the right part of Figure 5.

In particular, the LMC data are organized into frames. Each frame represents a distinct snapshot in time and contains all the data captured by the controller at that moment. Within each frame, information about detected hands is provided. This includes data such as hand ID, palm position (x, y, z coordinates), palm velocity, palm normal (the direction the palm is facing), and palm direction (the direction the palm is moving). For each detected hand, detailed information about individual fingers is provided. This includes data such as finger ID, finger type (thumb, index finger, middle finger, ring finger, pinky finger), fingertip position (x, y, z coordinates), finger direction, and finger velocity. Additionally, the LMC can capture raw image data through its infrared cameras. However, these data are primarily used for internal processing and calibration, although they can potentially be accessed by developers for specialized applications. For this reason, in this work, hand data provided by the API of the LMC are used because they are precise and reliable [18], and the algorithm used by the controller is designed to be efficient for use with applications that require a high frame rate. Finally, the Pooling Sample script establishes the connection with the LeapC driver and receives the LMC data frames. We modified this script to concatenate the data frames, converting each of them into a comma-separated values (CSV) format and saving the data in a table-structured format.
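As an illustration of the frame-to-CSV conversion described above, the following Python sketch flattens one frame into one row per tracked hand. It is only a sketch under stated assumptions: the acquisition script in this work is a modified C sample built on LeapC, and the `Hand` and `Frame` classes, their field names, and the column order used here are hypothetical stand-ins, not the LeapC data structures.

```python
import csv
import io
from dataclasses import dataclass, field


# Hypothetical stand-ins for the tracking data; NOT the LeapC structures.
@dataclass
class Hand:
    hand_id: int
    joints: list  # list of (x, y, z) tuples, sensor-centered coordinates


@dataclass
class Frame:
    frame_id: int
    frame_rate: float
    hands: list = field(default_factory=list)


def frame_to_rows(frame):
    """Return one flat row per tracked hand in the frame."""
    rows = []
    for hand in frame.hands:
        row = [frame.frame_id, frame.frame_rate, hand.hand_id]
        for x, y, z in hand.joints:
            row.extend([x, y, z])
        rows.append(row)
    return rows


def write_frames(frames, stream):
    """Write the rows of every frame to an open text stream as CSV."""
    writer = csv.writer(stream)
    for frame in frames:
        writer.writerows(frame_to_rows(frame))


# Example with made-up coordinates (two joints only, for brevity).
demo = Frame(frame_id=1, frame_rate=64.0,
             hands=[Hand(hand_id=7, joints=[(1.0, 2.0, 3.0), (4.0, 5.0, 6.0)])])
buffer = io.StringIO()
write_frames([demo], buffer)
csv_text = buffer.getvalue()
```

Writing one row per hand keeps every row the same width, so the resulting file can be loaded directly as a table; a frame with no detected hand simply produces no row.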

Throughout all the tests conducted, the Graphical User Interface (GUI) was intentionally disabled. This measure was implemented with the dual purpose of conserving energy and minimizing CPU usage. It is crucial to note that this particular characteristic is not a limitation; rather, it aligns with the system’s design intention in scenarios where user–system interaction does not require visual interface elements. For this reason, the absence of a GUI and the option to do away with a monitor altogether serve to optimize the system for its intended purpose.

Figure 4 shows the final embodiment of the proposed system, arranged on a table. As stated above, the monitor is only used for calibration purposes, and for this reason, it is powered by an external power source. In the scenario depicted in Figure 4, the RSP is running the ‘Pooling Sample’ script, which prints the frame number and some specific numerical information regarding the forearms. In these conditions, where the GUI of the OS and the print of the strings in the system terminal are slowing down the system’s performance, the frame rate is about 60 fps, providing near real-time capabilities.

4. Measurements

The proposed system is characterized by its portability, for which power consumption estimation is very important because it directly affects battery duration. Additionally, CPU usage and temperature are other fundamental parameters to consider in portable applications, in which the RSP could be placed in areas with little airflow, such as pockets.

For the above reasons, some measurements, tests, and evaluations are required for the complete characterization of the system. The tests were conducted considering two different scenarios: the RSP without the case and the RSP inside the case with a fan.

The first scenario is necessary to establish baseline measurements to be used for reference. The second scenario is the operative one and represents the real operating conditions.

For each scenario, four different configurations were tested:

1. RSP is on but without a connected LMC (RSP): The RSP is not actively involved in intensive operations or processes, so it consumes its lower-bound power and generates lower-bound heat compared to when it is engaged in demanding tasks.

2. LMC is connected to RSP but not tracking (RSP + LMC): Compared to just the RSP, the LeapD daemon is running but data streaming is not requested by any software.

3. LMC is connected to RSP and data from forearm tracking is requested (RSP + LMC + Data): Compared to the RSP + LMC task, a script for data acquisition, provided as an example by the LMC manufacturer, is running. The script requests data from the LeapD.

4. LMC is connected to the RSP, forearm tracking is running, and data are printed on the console (RSP + LMC + Data + Print): Compared to the RSP + LMC + Data task, the script is also printing data on the console. This configuration represents the worst-case (upper-bound) scenario for power consumption since, in the considered portable applications, the monitor is not used.

For all the above configurations, the OS GUI was disabled, leaving only the system terminal rendered on the screen, the latter being powered by an independent power source. The only energy absorbed from the power bank is related to the video data shared through the HDMI port, which we consider negligible.

For these experiments, the proposed system was arranged on a table, and the LMC was placed on the head of the user. For configurations 3 and 4, the user typed on a keyboard and interacted with the smartphone to simulate different hand poses and occlusions. The methodology and results are reported in Section 4.1 and Section 4.2.

Another fundamental aspect to consider is the frame rate of the proposed system. The frame rate of the LMC is variable and dependent on several factors such as CPU availability, lighting conditions, the presence of hand occlusions, etc. For this reason, the proposed system was tested in real operational scenarios, in which the user interacted with different mechanical tools and under different lighting conditions. The methodology and results are reported in Section 4.3.

4.1. Power Consumption

For each scenario and configuration, we report the electric voltage, current, and total power consumption. For the measurements, we used a USB tester connected between the USB-C power input of the RSP and the USB-C power output of the power bank. The sensitivities of the USB tester used are 0.01 V, 0.01 A, and 0.01 W.

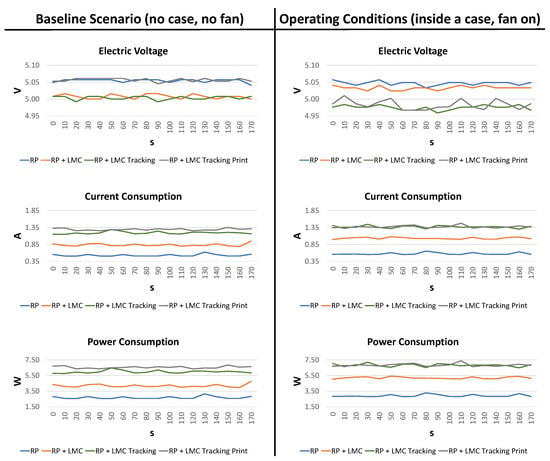

The data were collected every 10 s for a total of 170 s. Table 1 reports the averaged results for both the baseline scenario (when the RSP was not inside the case and without a fan) and the operating conditions (when the RSP was inside the case and in the presence of a switched-on fan).

Table 1.

Voltage, current, and power consumption in two different scenarios: Baseline scenario (left) and operating conditions (right). In the operating conditions, the use of a fan is also considered to maintain a low operating temperature. Averaged measurements are reported.

Starting from the RSP without the case (baseline scenario), the electric voltage was almost the same across all tasks, ranging between 4.99 V and 5.06 V.

In contrast, the absorbed current varied depending on the task. In the first configuration (RSP), the current consumption ranged between 0.50 A and 0.62 A (average 0.53 A), and in the RSP + LMC configuration, it ranged between 0.79 A and 0.95 A (average 0.84 A). Consequently, based on our measurements, 0.33 A was absorbed by the LMC, consistent with the manufacturer’s datasheet, which states that the LMC can require up to 0.5 A [42]. In fact, in this task, the LeapD was running, and the IR sensors were on, but there was no data request from any application. In the RSP + LMC + Data configuration, the absorbed current ranged between 1.15 A and 1.29 A (average 1.19 A), and finally, in the RSP + LMC + Data + Print configuration, it ranged between 1.26 A and 1.36 A (average 1.30 A). The increased current can mainly be attributed to the increased CPU usage.

Finally, we measured the active power consumption P [W], which can also be calculated as $P = V \cdot I$, where V is the electric voltage measured in volts [V] and I is the current measured in amperes [A]. We compared the values of P measured with the ones calculated, and the results were the same. When the first configuration was considered (RSP), the power consumption ranged between 2.55 W and 3.15 W (average 2.68 W). In the RSP + LMC configuration, it ranged between 3.95 W and 4.75 W (average 4.19 W); in the RSP + LMC + Data configuration, it ranged between 5.75 W and 6.47 W; and in the RSP + LMC + Data + Print configuration, it ranged between 5.75 W and 6.47 W (average 5.97 W). Since, as previously stated, the electric voltage remained almost constant across all tasks, the differences in power consumption can be attributed to the increment in current consumption, and the same considerations hold.
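The consistency between measured and calculated power can be checked with a few lines of Python. The sketch below applies P = V · I to the average currents quoted above for the first two baseline configurations, using the measured voltage range (4.99 V to 5.06 V) as bounds. The per-configuration average voltages are not quoted in the text, so bounding with the overall range is an assumption of this sketch.

```python
def power_w(voltage_v, current_a):
    """Active power of a DC load: P = V * I."""
    return voltage_v * current_a


# Voltage range and average values quoted in the text (baseline scenario).
V_MIN, V_MAX = 4.99, 5.06
avg_current_a = {"RSP": 0.53, "RSP + LMC": 0.84}
reported_avg_power_w = {"RSP": 2.68, "RSP + LMC": 4.19}


def power_bounds(current_a):
    """Power range implied by the measured voltage range."""
    return power_w(V_MIN, current_a), power_w(V_MAX, current_a)


for name, current in avg_current_a.items():
    low, high = power_bounds(current)
    print(f"{name}: calculated {low:.2f} to {high:.2f} W, "
          f"reported {reported_avg_power_w[name]:.2f} W")
```

For both configurations, the reported average falls inside the calculated range once the 0.01 W resolution of the USB tester is taken into account.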

The same measurements were repeated with the RSP using the operating configuration (fan on). In this case, the electric voltage was almost the same for the RSP and the RSP + LMC configurations, but a slight drop was measured for the RSP + LMC + Data and the RSP + LMC + Data + Print configurations. Regarding current consumption, in the RSP configuration, the values ranged between 0.55 A and 0.65 A (average 0.58 A). Compared with the RSP without a fan, the current consumption increased by 0.05 A. After the connection of the LMC, the average current consumption increased to 1.03 A, ranging between 1.00 A and 1.08 A. Compared with the same configuration without the fan, the current consumption increased by 0.19 A. In the RSP + LMC + Data configuration, the average current consumption increased to 1.37 A, ranging between 1.30 A and 1.45 A, with an increase of 0.18 A compared to the same configuration without the fan. Finally, in the RSP + LMC + Data + Print configuration, the average current consumption increased to 1.38 A, ranging between 1.32 A and 1.48 A, with an increase of 0.08 A. On average, compared with the ‘fanless’ configuration, the proposed configuration required an additional 0.13 A, in line with the current consumption stated by the manufacturer of the fan. The increments in power consumption compared to the fanless configuration were 0.23 W, 1.01 W, 0.84 W, and 0.30 W, which equates to an average of 0.60 W. The increased power consumption cannot be totally attributed to the fan. The introduction of the fan has an acceptable impact on power consumption, but as discussed in the next section, it is fundamental to keep the RSP temperature under control.
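The averages quoted for the fan overhead follow directly from the per-configuration values. The short Python sketch below recomputes them from the numbers given in the text; only the variable names are introduced here.

```python
from statistics import mean

configs = ["RSP", "RSP + LMC", "RSP + LMC + Data", "RSP + LMC + Data + Print"]
fanless_a = [0.53, 0.84, 1.19, 1.30]  # average current, baseline (no case, no fan)
fan_a = [0.58, 1.03, 1.37, 1.38]      # average current, case with fan on
power_increment_w = [0.23, 1.01, 0.84, 0.30]  # reported power increments

# Per-configuration current overhead introduced by the operating setup.
current_increment_a = [on - off for on, off in zip(fan_a, fanless_a)]

mean_current_increment_a = mean(current_increment_a)
mean_power_increment_w = mean(power_increment_w)

for name, delta in zip(configs, current_increment_a):
    print(f"{name}: +{delta:.2f} A")
print(f"mean current increment: {mean_current_increment_a:.3f} A")
print(f"mean power increment: {mean_power_increment_w:.3f} W")
```

The mean current increment is 0.125 A and the mean power increment is 0.595 W, which the text reports rounded as 0.13 A and 0.60 W.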

Finally, Figure 6 reports the plots for the baseline scenario and the operating conditions: the relevant result is that all measurements exhibit stability over time.

Figure 6.

Voltage, current, and power measurements for both considered scenarios.

4.2. CPU Usage and Temperature

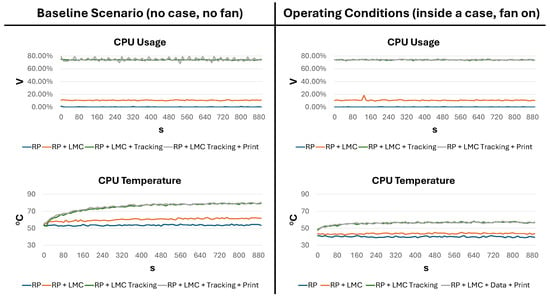

For each scenario, we report the CPU usage [%] and the CPU temperature [°C], measured using the RSP’s on-board sensor and the script GetData, as described in Section 3. Compared to the measurements of energy consumption, these measurements required more samples because the temperature took more time to converge to the final value. For this reason, a script was developed to acquire values every 10 s for 890 s. Table 2 reports the results for both the RSP baseline (when the RSP was not inside the case) and the proposed configuration (when the RSP was inside the case with the fan).
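A sampling loop of this kind can be sketched in Python as follows. This is not the GetData script itself, which is not listed here. The sketch assumes that the on-board temperature sensor is exposed through the standard Linux sysfs file `/sys/class/thermal/thermal_zone0/temp` (in millidegrees Celsius) and that CPU usage is derived from the aggregate `cpu` line of `/proc/stat`; all function names are hypothetical.

```python
import time

THERMAL_PATH = "/sys/class/thermal/thermal_zone0/temp"  # assumed sensor location
STAT_PATH = "/proc/stat"


def parse_temperature_c(raw):
    """Convert the sysfs reading (millidegrees Celsius) to degrees Celsius."""
    return int(raw.strip()) / 1000.0


def parse_cpu_times(stat_text):
    """Return (idle, total) jiffies from the aggregate 'cpu' line."""
    fields = [int(v) for v in stat_text.splitlines()[0].split()[1:]]
    idle = fields[3] + fields[4]  # idle + iowait
    total = sum(fields[:8])       # guest time is already counted in user time
    return idle, total


def cpu_usage_percent(prev, curr):
    """Busy share of CPU time between two (idle, total) samples."""
    idle_delta = curr[0] - prev[0]
    total_delta = curr[1] - prev[1]
    if total_delta <= 0:
        return 0.0
    return 100.0 * (1.0 - idle_delta / total_delta)


def read_text(path):
    with open(path) as handle:
        return handle.read()


def sample(period_s=10, duration_s=890):
    """Yield (elapsed_s, cpu_percent, temp_c) every period_s seconds."""
    prev = parse_cpu_times(read_text(STAT_PATH))
    start = time.monotonic()
    while time.monotonic() - start < duration_s:
        time.sleep(period_s)
        curr = parse_cpu_times(read_text(STAT_PATH))
        temp_c = parse_temperature_c(read_text(THERMAL_PATH))
        yield time.monotonic() - start, cpu_usage_percent(prev, curr), temp_c
        prev = curr
```

Iterating over `sample()` on the board yields one reading per 10 s interval. CPU usage is computed from the difference between two consecutive readings, so each value is the average load over the preceding interval and not an instantaneous figure.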

Table 2.

CPU usage (in %) and temperature (in °C) for both considered scenarios.

Starting from the baseline scenario, the CPU usage was minimal in the RSP configuration (the GUI was disabled). When the LeapD was running (RSP + LMC), CPU usage was almost 11%, and it reached 74.02% when data were requested from an application (RSP + LMC + Data). When printing was added (RSP + LMC + Data + Print), the average CPU usage increased slightly. Regarding the temperature, in the RSP configuration, it was 53.66 °C and increased with each subsequent configuration: 60.36 °C, 74.75 °C, and 75.49 °C. This demonstrates that without any active cooling, the temperatures reached were too high. In fact, during the most intense task (RSP + LMC + Data + Print), there was a peak temperature of 80.34 °C. As reported in the specifications, the RSP begins to throttle the processor when the temperature reaches 80 °C, and throttling increases as the temperature approaches the maximum of 85 °C [43]. This justifies the adoption of a fan installed on a case. In fact, in the proposed configuration, even though the CPU usage was almost identical to the baseline scenario, the drop in temperature was evident across all tasks. Even in the most intense task, the maximum temperature registered was 58.43 °C, which was almost 22 °C lower than in the same task without the fan, even though the RSP was enclosed in a case. Additionally, in both scenarios, passive adhesive aluminum heatsinks were used, as shown in Figure 7.

Figure 7.

The aluminum heatsinks used during the measurements for the baseline and the proposed assembly are highlighted with yellow boxes. The figure also shows the RSP enclosed in the case and the fan used for cooling in the proposed assembly.

Finally, the plots for the baseline scenario and the operating configuration are shown in Figure 8. Although CPU usage was stable, the CPU temperature significantly increased, especially in the baseline scenario. In fact, in the RSP + LMC + Data + Print configuration, the temperature exceeded 70 °C after only 160 s and was unstable, showing an increasing trend. In contrast, in the same configuration for the final RSP assembly, after the same amount of time, the temperature had already converged to a stable value at around 58 °C.

Figure 8.

CPU usage (in %) and temperature (in °C) for both scenarios.

The last significant piece of information gathered from using the proposed device in both scenarios was the power bank’s battery duration. We left the system in RSP mode for the baseline scenario until it turned off due to battery depletion, and we found that the duration was around 12 h. Then, we repeated the test under the operating conditions (fan on) in RSP + LMC + Data + Print mode, and the duration was 5 h. The results indicate that, even in the worst-case scenario, the duration of the battery is compatible with most human activities the system is involved in tracking. It is worth noting that for the baseline scenario, we did not perform measurements in the other modalities both because we were interested in the maximum duration of the battery (obtainable when only the RSP is on) and because we did not want to risk damaging the RSP due to heat.
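A rough plausibility check of these durations can be made from the power bank capacity and the measured loads. The Python sketch below is a back-of-the-envelope estimate, not a measurement. It assumes that the 10,000 mAh rating refers to a nominal Li-ion cell voltage of 3.7 V, that voltage conversion is about 85% efficient, and that the USB output is 5.0 V. None of these three values is stated in this work.

```python
def runtime_h(capacity_mah, cell_voltage_v, load_w, efficiency):
    """Estimated runtime: usable energy (Wh) divided by load (W)."""
    energy_wh = capacity_mah / 1000.0 * cell_voltage_v
    return energy_wh * efficiency / load_w


CAPACITY_MAH = 10_000   # from the text
CELL_VOLTAGE_V = 3.7    # ASSUMPTION: nominal Li-ion cell voltage
EFFICIENCY = 0.85       # ASSUMPTION: conversion efficiency of the power bank
USB_VOLTAGE_V = 5.0     # ASSUMPTION: nominal USB output voltage

idle_load_w = 2.68                      # reported average, RSP only, no fan
tracking_load_w = USB_VOLTAGE_V * 1.38  # 1.38 A average, fan on, Data + Print

idle_runtime_h = runtime_h(CAPACITY_MAH, CELL_VOLTAGE_V, idle_load_w, EFFICIENCY)
tracking_runtime_h = runtime_h(CAPACITY_MAH, CELL_VOLTAGE_V, tracking_load_w, EFFICIENCY)

print(f"RSP only: about {idle_runtime_h:.1f} h")
print(f"RSP + LMC + Data + Print (fan on): about {tracking_runtime_h:.1f} h")
```

Under these assumptions, the estimates are roughly 11.7 h and 4.6 h, close to the measured durations of about 12 h and 5 h. A different efficiency or cell voltage would shift both estimates proportionally.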

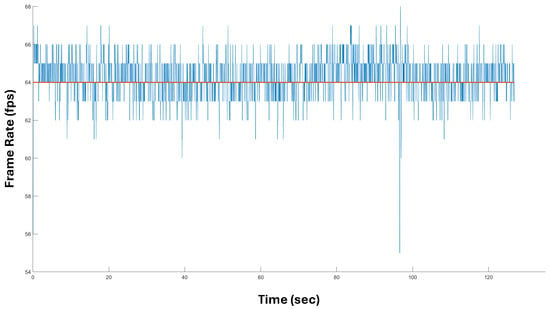

4.3. Hand Tracking

In the previous sections, the RSP demonstrated its capability of running an LMC while remaining well within acceptable energy consumption and CPU temperature limits, without saturating the CPU, when active cooling (a fan) is used. As a final test, we acquired data from both forearms in real operative conditions (RSP + LMC + Data) and checked the fps, considering that for real-time, fluid interactions, the minimal fps value is 25–35 [44,45].

We used the proposed system in the configuration shown in Figure 9, where the system was mounted on a helmet to improve safety at work (as can be seen, the RSP and the power bank were also fixed on the back part of the helmet). In addition, forearm tracking could be used to check for dangerous forearm positions with respect to a mechanical tool and proactively stop the tool in case of risk.

Figure 9.

The proposed system mounted on a safety helmet used for testing real-life scenarios.

We tested the proposed system while the forearms were performing different tasks: simple movements in the air (the person was standing up), typing on a keyboard (the person was sitting), and interacting with a manual tool (the person was walking around the room while performing tool tapping). For the latter task, the illumination conditions varied because the person was moving near a window, then in the center of the room, and finally, near the illumination of a lamp. This is an important aspect because the LMC relies on infrared light to operate: external sources of infrared light could negatively affect tracking precision. Furthermore, in this situation, the software activates specific countermeasures to compensate for this, requiring considerable CPU overhead [46]. However, in our test, the results of which are reported in Figure 10, the proposed system was capable of operating at an average of 64 fps, with values ranging between 62 fps and 66 fps. Only in one isolated case did it drop to 55 fps, corresponding to a fast passage close to the window. Ultimately, the system always operated above 30 fps, thus ensuring real-time tracking.
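A check of this kind on a logged frame-rate series can be sketched in Python as follows. The `example_log` values are illustrative only, chosen to resemble the behaviour described above; they are not the measured data. The function name and the dictionary keys are hypothetical.

```python
from statistics import mean

REAL_TIME_MIN_FPS = 30  # real-time threshold used in the text


def summarize_fps(samples, threshold=REAL_TIME_MIN_FPS):
    """Return the mean, extremes, and number of samples below the threshold."""
    return {
        "mean": mean(samples),
        "min": min(samples),
        "max": max(samples),
        "below_threshold": sum(1 for s in samples if s < threshold),
    }


# ILLUSTRATIVE values only (not the measured data): a stable series with
# one isolated dip, similar to the passage close to the window.
example_log = [64, 63, 66, 62, 55, 64, 65, 64]
summary = summarize_fps(example_log)

print(summary)
```

A count of zero in `below_threshold` corresponds to the condition stated above: the frame rate never falls under the real-time limit, even during the isolated dip.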

Figure 10.

The frame rate of the LMC was read from the internal data structure and reported. The original averaged values also contained decimals, which have been eliminated for convenience.

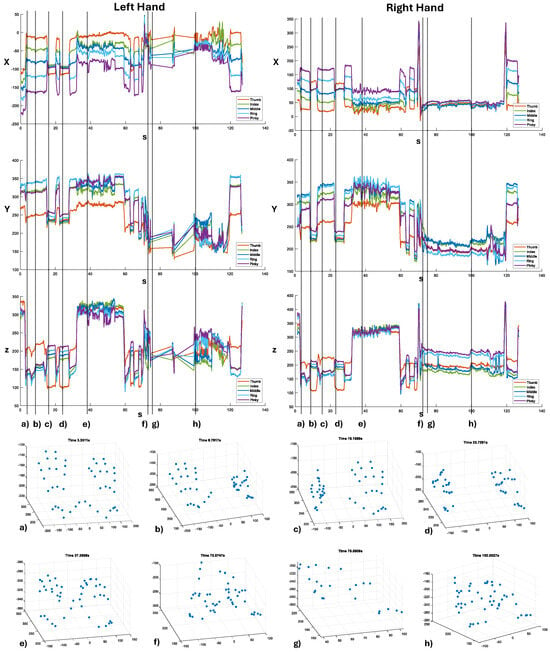

Figure 11 shows the spatial coordinates (X, Y, Z) of the fingertips of both hands throughout the data acquisition, corresponding to the movements analyzed for fps in Figure 10. To enhance the understanding of the plots, vertical lines are drawn corresponding to specific hand poses, as shown in plots (a) to (h). The plots were created using Matlab 2023a, with blue dots used to mark the joints of the hands acquired by the LMC. For the reported poses, movements were smooth except when the person was interacting with the manual tool (Figure 11f). In this case, the left hand was almost completely obscured by the tool, and the LMC lost track at some point (Figure 11g). Regarding the right hand, tracking was more stable because most of the time, the person was interacting with the index finger, and consequently, the hand was not hidden behind the tool, making it visible to the LMC. However, self-occlusions also occurred, where some fingers obstructed the view of others. In these cases, hand-tracking precision decreased significantly, and jumps occurred when the LMC recovered the right tip position.

Figure 11.

The plots of the spatial positions of each fingertip of both hands as measured by the LMC. To improve visualization, measurements for each spatial coordinate are shown in separate plots. For each coordinate, measurements of the fingertips from the same hand are shown in the same plot with different colors to facilitate comparison. The vertical dotted lines represent particular hand poses, shown in the scatter plots (a–h), to better contextualize the plots.
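The jumps described for Figure 11, where a fingertip position changes abruptly once the LMC recovers from an occlusion, can be flagged automatically in a recorded trajectory. The sketch below is one simple way to do this and is not taken from the paper: the function name `find_jumps`, the use of `None` for untracked frames, the millimetre units, and the 40 mm threshold are all illustrative assumptions.

```python
import math


def find_jumps(positions, max_step_mm=40.0):
    """Return indices of frames where the fingertip moved more than
    max_step_mm since the last tracked frame.

    positions: list of (x, y, z) tuples (assumed millimetres), or None
    for frames in which the hand was not tracked.
    """
    jumps = []
    previous = None  # last tracked position seen so far
    for i, current in enumerate(positions):
        if current is None:
            continue  # tracking lost: keep the last tracked position
        if previous is not None and math.dist(previous, current) > max_step_mm:
            jumps.append(i)
        previous = current
    return jumps


# Hypothetical trajectory with two lost frames followed by a jump.
track = [(0, 0, 200), (2, 1, 201), None, None, (60, 5, 210), (61, 6, 210)]
print(find_jumps(track))  # [4]
```

Comparing against the last tracked position, rather than the immediately preceding frame, means a recovery after a tracking gap is still reported as a jump.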

5. Conclusions and Future Work

In this work, we proposed a portable hand-tracking system based on a head-mounted LMC connected to an RSP. The system is powered by a 10,000 mAh power bank. Several measurements were conducted. Regarding power consumption, the system is capable of running for 5 h in tracking mode. CPU usage is not saturated, leaving headroom to run other applications. The temperature is kept well under control by heatsinks and a fan, even under the most intense load, which, in this case, occurs when the LMC is tracking and external software is requesting the frames and saving the data. Regarding frame rate, the system is capable of running at an average of about 60 fps without any significant drops. Tracking precision is good and stable, as demonstrated by the test we conducted under changing operating conditions, although precision can degrade when a hand is occluded by a tool or by its own fingers.
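As a rough plausibility check on the battery figures above, the 10,000 mAh capacity and the 5 h runtime can be related to an implied average power draw. The sketch below is a back-of-the-envelope calculation, not a measurement from the paper: the 3.7 V nominal cell voltage and the 85% conversion efficiency are assumptions typical of consumer power banks, and the function names are hypothetical.

```python
def stored_energy_wh(capacity_mah, cell_voltage=3.7):
    """Energy stored in the power bank in watt-hours.

    cell_voltage is an ASSUMED nominal Li-ion cell voltage.
    """
    return capacity_mah / 1000.0 * cell_voltage


def implied_average_power_w(capacity_mah, runtime_h,
                            cell_voltage=3.7, efficiency=0.85):
    """Average load (W) that would drain the bank in runtime_h hours.

    efficiency is an ASSUMED loss factor for voltage conversion.
    """
    usable_wh = stored_energy_wh(capacity_mah, cell_voltage) * efficiency
    return usable_wh / runtime_h


def estimated_runtime_h(capacity_mah, load_w,
                        cell_voltage=3.7, efficiency=0.85):
    """Runtime (h) for a given average load, under the same assumptions."""
    usable_wh = stored_energy_wh(capacity_mah, cell_voltage) * efficiency
    return usable_wh / load_w


power = implied_average_power_w(10_000, 5.0)
print(f"Implied average draw: {power:.2f} W")
```

Under these assumptions, the reported 5 h runtime corresponds to an average draw of roughly 6 W; a different cell voltage or efficiency would shift this estimate proportionally.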

These results indicate that the proposed system holds significant promise for various precise hand-tracking applications. Head-mounted hand tracking emerges as a particularly effective design choice due to its inherent advantages: users can freely engage with their surroundings without being hindered by the position of the hand-tracking sensor, which consistently aligns with their field of view. This freedom allows users to grasp real-world objects, fostering a natural and immersive interaction experience. Additionally, the system's portability is supported by the fact that the computing power of the RSP meets the demands of the LMC, with a battery life that accommodates all major tasks.

Future work will focus on finalizing the engineering of the system. First, a button to start and stop hand tracking will be added. This will make it possible to pause tracking when it is not needed, saving significant energy and, consequently, increasing usage time.

To further increase the efficiency of the system, the fan speed will be controlled according to the CPU temperature. To minimize fan usage, heatsinks with different shapes and materials with better thermal conductivity will be tested.
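Temperature-based fan control of this kind is often implemented as a simple mapping from CPU temperature to a PWM duty cycle. The sketch below illustrates one possible linear mapping and is not the authors' design: the function names and the 50 °C and 75 °C thresholds are hypothetical, and reading the temperature from `/sys/class/thermal/thermal_zone0/temp` assumes a Linux-based SBC that exposes the standard sysfs thermal interface.

```python
def fan_duty_cycle(temp_c, temp_min=50.0, temp_max=75.0):
    """Map CPU temperature (deg C) to a PWM duty cycle in [0.0, 1.0].

    Fan is off at or below temp_min, at full speed at or above temp_max,
    and scales linearly in between. Thresholds are ASSUMED values.
    """
    if temp_max <= temp_min:
        raise ValueError("temp_max must be greater than temp_min")
    if temp_c <= temp_min:
        return 0.0
    if temp_c >= temp_max:
        return 1.0
    return (temp_c - temp_min) / (temp_max - temp_min)


def read_cpu_temp_c(path="/sys/class/thermal/thermal_zone0/temp"):
    """Read the CPU temperature from sysfs (value is in millidegrees C)."""
    with open(path) as f:
        return int(f.read().strip()) / 1000.0


# Fixed example temperatures, so the sketch runs without the hardware.
for t in (40.0, 62.5, 80.0):
    print(t, fan_duty_cycle(t))
```

On the device, the result of `fan_duty_cycle(read_cpu_temp_c())` would be passed to whatever PWM interface drives the fan; adding hysteresis around `temp_min` would avoid rapid on/off switching.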

Another planned improvement is data streaming over Wi-Fi. In this way, it will be possible to run intensive computations, such as deep learning, on a dedicated workstation and retrieve the results, significantly extending the range of potential applications [47]. After these improvements, the system will be extensively tested in real-world applications involving gesture recognition and action prediction in both indoor and outdoor scenarios.
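One lightweight way to stream tracking frames to a workstation is to serialize each frame and send it as a UDP datagram. The sketch below shows this idea with the Python standard library only; it is an illustration under assumptions, not the planned implementation. The frame layout (`id`, `t_us`, `tips`), the function names, and the choice of JSON over UDP are all hypothetical.

```python
import json
import socket


def encode_frame(frame_id, timestamp_us, fingertips):
    """Serialize one tracking frame to compact UTF-8 JSON bytes.

    fingertips: dict mapping a fingertip name to [x, y, z] coordinates.
    """
    payload = {"id": frame_id, "t_us": timestamp_us, "tips": fingertips}
    return json.dumps(payload, separators=(",", ":")).encode("utf-8")


def decode_frame(data):
    """Inverse of encode_frame: bytes back to a dict."""
    return json.loads(data.decode("utf-8"))


def send_frame(sock, address, frame_bytes):
    """Send one encoded frame as a single UDP datagram."""
    sock.sendto(frame_bytes, address)


def loopback_demo():
    """Send one frame to a receiver on the local machine and return it."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        receiver.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        receiver.settimeout(2.0)
        frame = encode_frame(1, 123456, {"right_index": [10.0, 180.5, -20.0]})
        send_frame(sender, receiver.getsockname(), frame)
        data, _ = receiver.recvfrom(65535)
        return decode_frame(data)
    finally:
        sender.close()
        receiver.close()


if __name__ == "__main__":
    print(loopback_demo())
```

UDP keeps latency low and tolerates the occasional lost frame, which suits a 60 fps stream; a TCP connection would be the safer choice if every frame must arrive.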

Hand-tracking technology holds promise across various practical applications, particularly in healthcare. It can enhance hand rehabilitation by providing detailed quantitative analysis and feedback on hand movements [1,2,3,47]. Additionally, it can contribute to research endeavors focused on motor imagery development when hand motor execution data could be useful, especially when integrated with tools like electroencephalography [48]. Beyond healthcare, hand tracking has implications in gaming [49], human–computer interaction [50], and security/authentication systems [51].

Author Contributions

Conceptualization, M.P. and G.P.; methodology, M.P.; software, M.P. and A.D.M.; validation, M.P., G.P. and L.N.; investigation, M.P. and A.D.M.; writing—original draft preparation, M.P.; writing—review and editing, M.P., A.D.M., D.L., E.M., F.M., L.N., V.S. and G.P.; visualization, M.P., A.D.M., D.L., E.M., F.M., L.N., V.S. and G.P.; supervision, M.P. and G.P.; project administration, M.P. and G.P.; resources: G.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Informed consent was obtained from the subject involved in the study.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Petracca, A.; Carrieri, M.; Avola, D.; Basso Moro, S.; Brigadoi, S.; Lancia, S.; Spezialetti, M.; Ferrari, M.; Quaresima, V.; Placidi, G. A virtual ball task driven by forearm movements for neuro-rehabilitation. In Proceedings of the 2015 International Conference on Virtual Rehabilitation (ICVR), Valencia, Spain, 9–12 June 2015; IEEE: Piscataway, NJ, USA, 2015.

- Carrieri, M.; Petracca, A.; Lancia, S.; Basso Moro, S.; Brigadoi, S.; Spezialetti, M.; Ferrari, M.; Placidi, G.; Quaresima, V. Prefrontal Cortex Activation Upon a Demanding Virtual Hand-Controlled Task: A New Frontier for Neuroergonomics. Front. Hum. Neurosci. 2016, 10, 53.

- Placidi, G.; Cinque, L.; Petracca, A.; Polsinelli, M.; Spezialetti, M. A Virtual Glove System for the Hand Rehabilitation based on Two Orthogonal LEAP Motion Controllers. In Proceedings of the 6th International Conference on Pattern Recognition Applications and Methods, Porto, Portugal, 24–26 February 2017. SCITEPRESS—Science and Technology Publications.

- Theodoridou, E.; Cinque, L.; Mignosi, F.; Placidi, G.; Polsinelli, M.; Tavares, J.M.R.S.; Spezialetti, M. Hand Tracking and Gesture Recognition by Multiple Contactless Sensors: A Survey. IEEE Trans. Hum.-Mach. Syst. 2023, 53, 35–43.

- Hu, F.; He, P.; Xu, S.; Li, Y.; Zhang, C. FingerTrak: Continuous 3D hand pose tracking by deep learning hand silhouettes captured by miniature thermal cameras on wrist. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2020, 4, 1–24.

- Masurovsky, A.; Chojecki, P.; Runde, D.; Lafci, M.; Przewozny, D.; Gaebler, M. Controller-Free Hand Tracking for Grab-and-Place Tasks in Immersive Virtual Reality: Design Elements and Their Empirical Study. Multimodal Technol. Interact. 2020, 4, 91.

- Luong, T.; Cheng, Y.F.; Möbus, M.; Fender, A.; Holz, C. Controllers or Bare Hands? A Controlled Evaluation of Input Techniques on Interaction Performance and Exertion in Virtual Reality. IEEE Trans. Vis. Comput. Graph. 2023.

- Sharif, H.; Eslaminia, A.; Chembrammel, P.; Kesavadas, T. Classification of activities of daily living based on grasp dynamics obtained from a leap motion controller. Sensors 2022, 22, 8273.

- Viyanon, W.; Sasananan, S. Usability and performance of the leap motion controller and oculus rift for interior decoration. In Proceedings of the 2018 International Conference on Information and Computer Technologies (ICICT), DeKalb, IL, USA, 23–25 March 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 47–51.

- Corrêa, A.G.D.; Kintschner, N.R.; Campos, V.Z.; Blascovi-Assis, S.M. Gear VR and leap motion sensor applied in virtual rehabilitation for manual function training: An opportunity for home rehabilitation. In Proceedings of the 5th Workshop on ICTs for improving Patients Rehabilitation Research Techniques, Lisbon, Portugal, 11–13 September 2019; pp. 148–151.

- Guzsvinecz, T.; Szucs, V.; Sik-Lanyi, C. Suitability of the Kinect sensor and Leap Motion controller—A literature review. Sensors 2019, 19, 1072.

- Kincaid, C.; Johnson, P.; Charles, S.K. Feasibility of using the Leap Motion Controller to administer conventional motor tests: A proof-of-concept study. Biomed. Phys. Eng. Express 2023, 9, 035009.

- Bachmann, D.; Weichert, F.; Rinkenauer, G. Evaluation of the leap motion controller as a new contact-free pointing device. Sensors 2014, 15, 214–233.

- Hammer, J.H.; Beyerer, J. Robust hand tracking in realtime using a single head-mounted rgb camera. In Proceedings of the Human-Computer Interaction. Interaction Modalities and Techniques: 15th International Conference, HCI International 2013, Las Vegas, NV, USA, 21–26 July 2013; Proceedings, Part IV 15. Springer: Berlin/Heidelberg, Germany, 2013; pp. 252–261.

- Placidi, G.; Di Matteo, A.; Mignosi, F.; Polsinelli, M.; Spezialetti, M. Compact, Accurate and Low-cost Hand Tracking System based on LEAP Motion Controllers and Raspberry Pi. In Proceedings of the ICPRAM, Online, 3–5 February 2022; pp. 652–659.

- Sun, L.; Liu, G.; Liu, Y. 3D hand tracking with head mounted gaze-directed camera. IEEE Sens. J. 2013, 14, 1380–1390.

- Akman, O.; Poelman, R.; Caarls, W.; Jonker, P. Multi-cue hand detection and tracking for a head-mounted augmented reality system. Mach. Vis. Appl. 2013, 24, 931–946.

- Weichert, F.; Bachmann, D.; Rudak, B.; Fisseler, D. Analysis of the accuracy and robustness of the leap motion controller. Sensors 2013, 13, 6380–6393.

- Placidi, G.; Cinque, L.; Polsinelli, M.; Spezialetti, M. Measurements by a LEAP-based virtual glove for the hand rehabilitation. Sensors 2018, 18, 834.

- de Los Reyes-Guzmán, A.; Lozano-Berrio, V.; Alvarez-Rodriguez, M.; Lopez-Dolado, E.; Ceruelo-Abajo, S.; Talavera-Diaz, F.; Gil-Agudo, A. RehabHand: Oriented-tasks serious games for upper limb rehabilitation by using Leap Motion Controller and target population in spinal cord injury. NeuroRehabilitation 2021, 48, 365–373.

- Martins, R.; Notargiacomo, P. Evaluation of leap motion controller effectiveness on 2D game environments using usability heuristics. Multimed. Tools Appl. 2021, 80, 5539–5557.

- Fereidouni, S.; Sheikh Hassani, M.; Talebi, A.; Rezaie, A.H. A novel design and implementation of wheelchair navigation system using Leap Motion sensor. Disabil. Rehabil. Assist. Technol. 2022, 17, 442–448.

- Ding, I.J.; Hsieh, M.C. A hand gesture action-based emotion recognition system by 3D image sensor information derived from Leap Motion sensors for the specific group with restlessness emotion problems. Microsyst. Technol. 2022, 28, 403–415.

- Hisham, B.; Hamouda, A. Arabic sign language recognition using Ada-Boosting based on a leap motion controller. Int. J. Inf. Technol. 2021, 13, 1221–1234.

- Lindsey, S. Evaluation of Low Cost Controllers for Mobile Based Virtual Reality Headsets. Master’s Thesis, Florida Institute of Technology, Melbourne, FL, USA, 2017.

- Moser, K.R.; Swan, J.E. Evaluation of user-centric optical see-through head-mounted display calibration using a leap motion controller. In Proceedings of the 2016 IEEE Symposium on 3D User Interfaces (3DUI), Greenville, SC, USA, 19–20 March 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 159–167.

- Wright, T.; De Ribaupierre, S.; Eagleson, R. Design and evaluation of an augmented reality simulator using leap motion. Healthc. Technol. Lett. 2017, 4, 210–215.

- Chien, P.H.; Lin, Y.C. Gesture-based head-mounted augmented reality game development using leap motion and usability evaluation. In Proceedings of the 15th International Conference on Interfaces and Human Computer Interaction, IHCI 2021 and 14th International Conference on Game and Entertainment Technologies, GET, Online, 21–23 July 2021; pp. 149–156.

- Zhang, H. Head-mounted display-based intuitive virtual reality training system for the mining industry. Int. J. Min. Sci. Technol. 2017, 27, 717–722.

- Gusai, E.; Bassano, C.; Solari, F.; Chessa, M. Interaction in an immersive collaborative virtual reality environment: A comparison between leap motion and HTC controllers. In Proceedings of the New Trends in Image Analysis and Processing–ICIAP 2017: ICIAP International Workshops, WBICV, SSPandBE, 3AS, RGBD, NIVAR, IWBAAS, and MADiMa 2017, Catania, Italy, 11–15 September 2017; Revised Selected Papers 19. Springer: Berlin/Heidelberg, Germany, 2017; pp. 290–300.

- Belanová, D.; Yoshida, K. Hand Position Tracking Correction of Leap Motion Controller Attached to the Virtual Reality Headset. Int. J. Biomed. Soft Comput. Hum. Sci. Off. J. Biomed. Fuzzy Syst. Assoc. 2020, 25, 29–37.

- Microsoft Corporation. Microsoft HoloLens 2. 2024. Available online: https://www.microsoft.com/en-us/hololens (accessed on 10 January 2024).

- Zhang, S.; Ma, Q.; Zhang, Y.; Qian, Z.; Kwon, T.; Pollefeys, M.; Bogo, F.; Tang, S. Egobody: Human body shape and motion of interacting people from head-mounted devices. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 180–200.

- Doughty, M.; Ghugre, N.R. HMD-EgoPose: Head-mounted display-based egocentric marker-less tool and hand pose estimation for augmented surgical guidance. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 2253–2262.

- Schäfer, A.; Reis, G.; Stricker, D. Controlling Continuous Locomotion in Virtual Reality with Bare Hands Using Hand Gestures. In Proceedings of the International Conference on Virtual Reality and Mixed Reality, Virtual, 26 June–1 July 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 191–205.

- Abdlkarim, D.; Di Luca, M.; Aves, P.; Maaroufi, M.; Yeo, S.H.; Miall, R.C.; Holland, P.; Galea, J.M. A methodological framework to assess the accuracy of virtual reality hand-tracking systems: A case study with the Meta Quest 2. Behav. Res. Methods 2023, 1–12.

- Meta Platforms, Inc. Meta Quest 3: New Mixed Reality VR Headset. 2024. Available online: https://www.meta.com/quest/quest-3/ (accessed on 10 January 2024).

- Challenor, J.; White, D.; Murphy, D. Hand-Controlled User Interfacing for Head-Mounted Augmented Reality Learning Environments. Multimodal Technol. Interact. 2023, 7, 55.

- Raspberry Pi Ltd. Raspberry Pi Website. 2024. Available online: https://www.raspberrypi.org/ (accessed on 27 February 2024).

- Raspberry Pi and Python Users—We’ve Got News for You! Available online: https://www.reddit.com/r/Ultraleap/comments/181bohc/raspberry_pi_and_python_users_weve_got_news_for/ (accessed on 27 February 2024).

- Leap Motion Controller Software for Raspberry Pi. Available online: https://leap2.ultraleap.com/gemini-downloads/#tab-desktop (accessed on 27 February 2024).

- Leap Motion Controller 2 Datasheet. Available online: https://www.ultraleap.com/datasheets/leap-motion-controller-2-datasheet_issue13.pdf (accessed on 3 February 2024).

- The Operating Temperature For A Raspberry Pi. Available online: https://copperhilltech.com/content/The%20Operating%20Temperature%20For%20A%20Raspberry%20Pi%20%E2%80%93%20Technologist%20Tips.pdf (accessed on 3 February 2024).

- Qian, C.; Sun, X.; Wei, Y.; Tang, X.; Sun, J. Realtime and robust hand tracking from depth. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2014; pp. 1106–1113.

- Roy, K.; Akif, M.A.H. Real time hand gesture based user friendly human computer interaction system. In Proceedings of the 2022 International Conference on Innovations in Science, Engineering and Technology (ICISET), Chattogram, Bangladesh, 25–28 February 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 260–265.

- What is the Leap Motion Controller’s Operating Environment Range? Available online: https://support.ultraleap.com/hc/en-us/articles/360004328878-What-is-the-Leap-Motion-Controller-s-operating-environment-range (accessed on 2 March 2024).

- Placidi, G.; Di Matteo, A.; Lozzi, D.; Polsinelli, M.; Theodoridou, E. Patient–Therapist Cooperative Hand Telerehabilitation through a Novel Framework Involving the Virtual Glove System. Sensors 2023, 23, 3463.

- Van der Lubbe, R.H.; Sobierajewicz, J.; Jongsma, M.L.; Verwey, W.B.; Przekoracka-Krawczyk, A. Frontal brain areas are more involved during motor imagery than during motor execution/preparation of a response sequence. Int. J. Psychophysiol. 2021, 164, 71–86.

- Voigt-Antons, J.N.; Kojic, T.; Ali, D.; Möller, S. Influence of hand tracking as a way of interaction in virtual reality on user experience. In Proceedings of the 2020 Twelfth International Conference on Quality of Multimedia Experience (QoMEX), Athlone, Ireland, 26–28 May 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–4.

- Li, J.; Liu, X.; Wang, Z.; Zhang, T.; Qiu, S.; Zhao, H.; Zhou, X.; Cai, H.; Ni, R.; Cangelosi, A. Real-time hand gesture tracking for human–computer interface based on multi-sensor data fusion. IEEE Sens. J. 2021, 21, 26642–26654.

- Dayal, A.; Paluru, N.; Cenkeramaddi, L.R.; Yalavarthy, P.K. Design and implementation of deep learning based contactless authentication system using hand gestures. Electronics 2021, 10, 182.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).