Comparison of Point Cloud Registration Techniques on Scanned Physical Objects

Abstract

1. Introduction

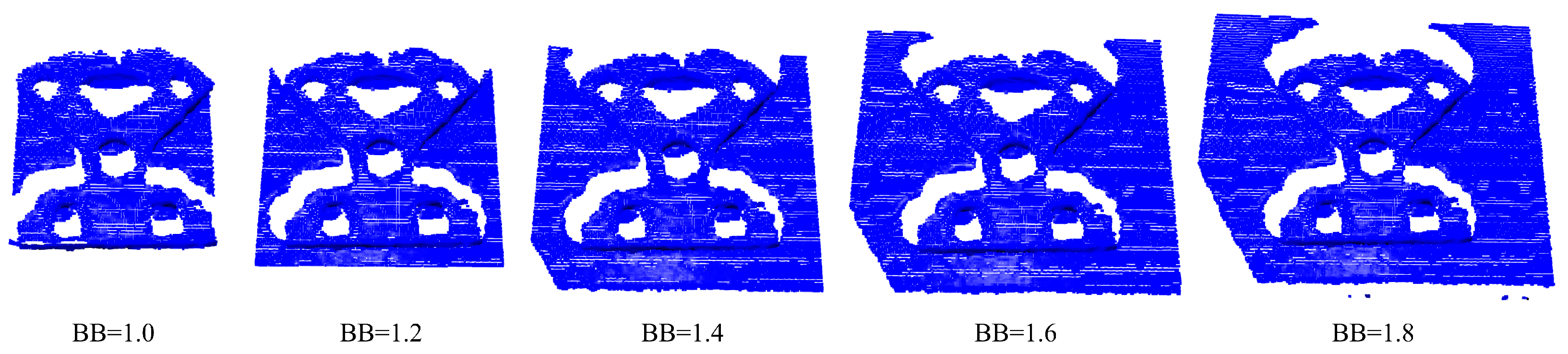

- Each point cloud contains a single object that has already been segmented from the environment. However, we vary the quality of this cutout as a parameter.

- Each point cloud is already assigned a label corresponding to the object it represents.

- A performance comparison of six registration methods, applied to real-world scans of 3D-printed objects with relatively basic geometries and to their CAD models.

- A dataset consisting of a series of real-world scans, based on the Cranfield benchmark dataset [23], with an available ground-truth estimation. The Python code used to run the experiments and the point cloud scans with their ground truth are available at https://github.com/Menthy-Denayer/PCR_CAD_Model_Alignment_Comparison.git (accessed on 14 February 2024).

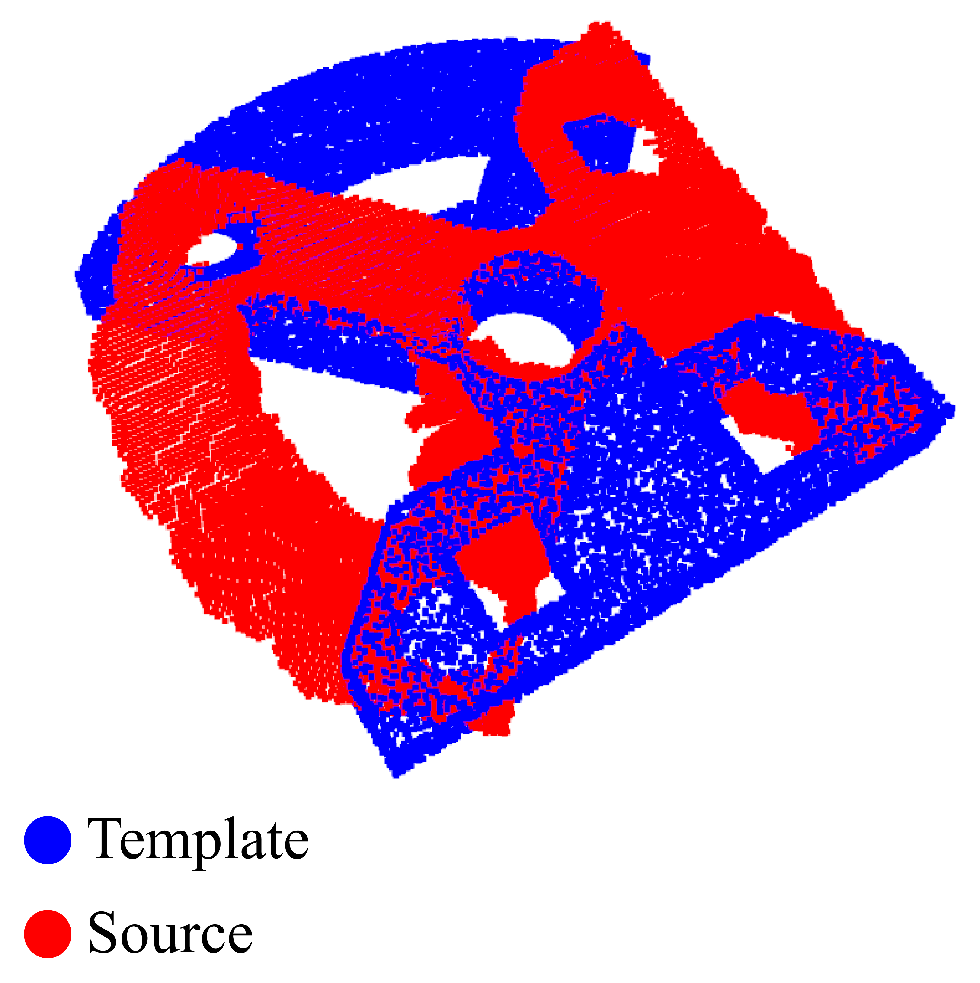

2. Methodology

2.1. Registration Methods

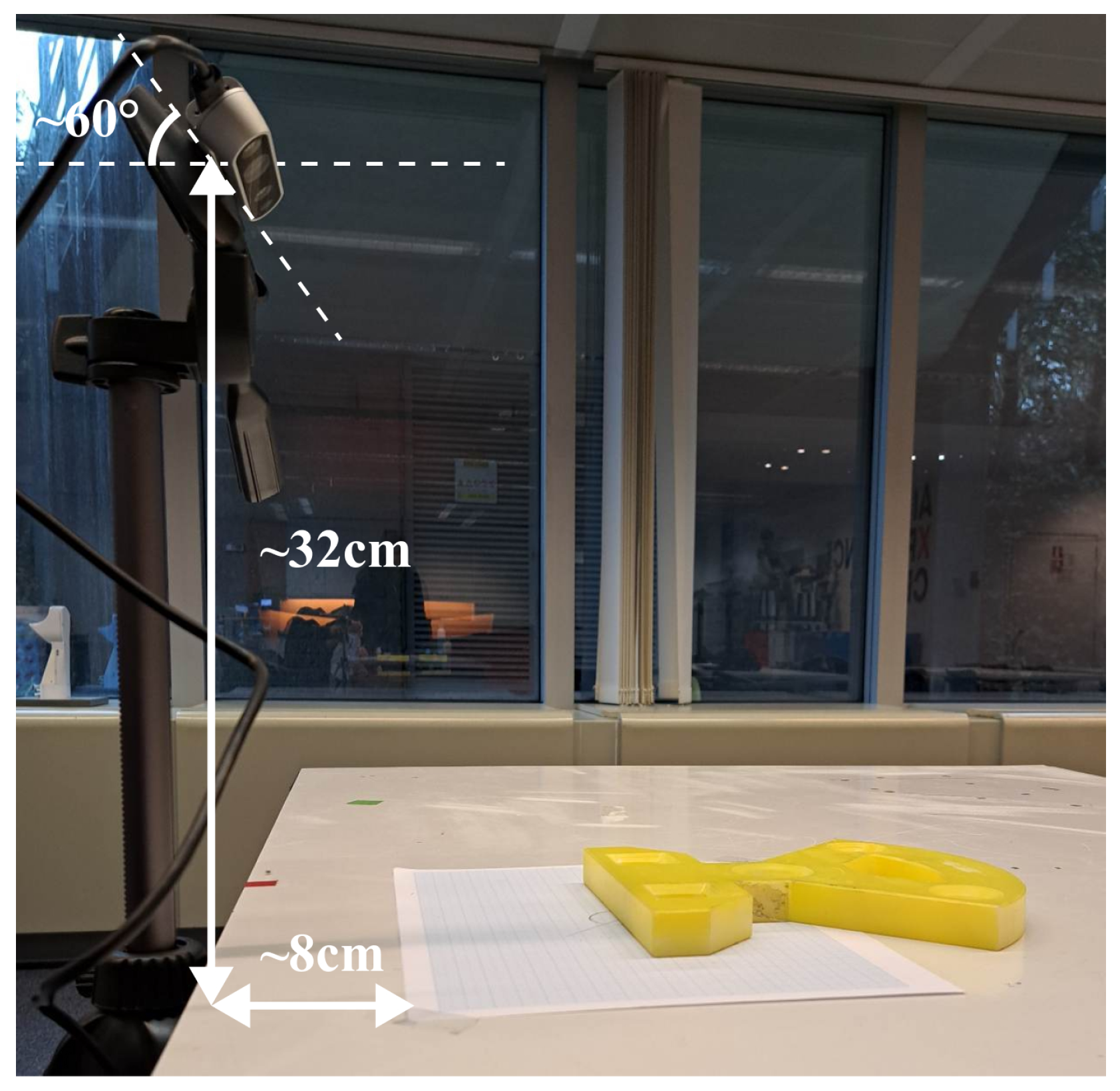

- Robustness to noise. Three-dimensional cameras were used to create the point clouds. Working in non-optimal lighting conditions or cluttered environments results in measurement errors and noise. This deforms the scan relative to the real object, making it more difficult for the registration methods to find correspondences.

- Robustness to partiality. Since we used a single camera in this paper, the object was only visible from one perspective. As a result, the captured point cloud was incomplete, missing the parts of the object that the camera could not see.

- Limited computation time. For real-time applications, the computation time of the registration process has to be limited. Timing can also be an important aspect to consider when training the learning-based methods.

- Ability to generalize to different objects. The methods have to work on a wide variety of objects to be widely applicable. Learning-based methods are trained on available CAD models and risk overfitting. Non-learning-based methods can generalize better, which may come at the cost of lower performance.

2.1.1. Non-Learning-Based Methods

- GO-ICP [8] improves upon the standard ICP method by finding the globally optimal solution. ICP-based methods solve the registration problem by minimizing a cost function. These algorithms typically establish correspondences based on distance. GO-ICP is robust to noise. However, the method tends to be slow.

- RANSAC [41] is a global registration method, often used in scene reconstruction [48]. It uses the RANSAC algorithm to find the best fit between the template and source point clouds. Features are extracted using Fast Point Feature Histograms (FPFH) [50], which is a point-based method [32]. RANSAC is robust to outliers and noise and does not require any training process. However, it requires a preliminary feature-extraction step, and there are multiple parameters to tune.

- FGR [42] is a fast registration method that requires no training. It also uses FPFH to extract features but does not recompute the correspondences during execution. It can perform partial registration but is more sensitive to noise.
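To make the ICP principle mentioned above concrete (distance-based correspondences followed by minimization of a cost function), the following is a minimal point-to-point ICP sketch in NumPy. It is an illustration under stated assumptions, not the implementation used in the experiments: the function names are hypothetical, the nearest-neighbour search is brute force, and the cost is assumed to be the mean squared distance between matched points. GO-ICP's global search is not shown.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares R, t with dst ~ src @ R.T + t for paired points (Kabsch/SVD)."""
    src_mean, dst_mean = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_mean).T @ (dst - dst_mean)  # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Guard against reflections so that det(R) = +1.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    return R, dst_mean - R @ src_mean


def nearest_neighbours(points, template):
    """Index of the closest template point per input point, plus the mean squared distance."""
    d2 = ((points[:, None, :] - template[None, :, :]) ** 2).sum(axis=2)
    idx = d2.argmin(axis=1)
    return idx, d2[np.arange(len(points)), idx].mean()


def icp(source, template, max_iter=50, tol=1e-10):
    """Point-to-point ICP: returns R, t mapping source onto template and the final cost."""
    R_total, t_total = np.eye(3), np.zeros(3)
    moved, prev_cost = source.copy(), np.inf
    for _ in range(max_iter):
        idx, cost = nearest_neighbours(moved, template)    # distance-based correspondences
        if prev_cost - cost < tol:                          # cost no longer decreases
            break
        prev_cost = cost
        R, t = best_rigid_transform(moved, template[idx])   # minimise the cost for these pairs
        moved = moved @ R.T + t
        R_total, t_total = R @ R_total, R @ t_total + t     # accumulate the transform
    _, cost = nearest_neighbours(moved, template)
    return R_total, t_total, cost
```

Because the correspondences depend on the current alignment, a local scheme like this only converges to the correct pose when the initial misalignment is small, which is the limitation that global methods such as GO-ICP, RANSAC, and FGR are meant to address.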

2.1.2. Learning-Based Methods

- PointNetLK [43] uses PointNet to generate descriptors for each point. This information is then used to compute the transformation matrix, with the network parameters learned through training. The method is robust to noise and partial data. However, its performance drops when it is applied to unseen data and for large transformations.

- RPMNet [9] combines the RPM method with deep learning. RPM itself builds upon ICP, using a permutation matrix to assign correspondences. The transformation matrix is computed using singular value decomposition (SVD). RPMNet is robust to initialization and noise, and also works for larger transformations. However, RPMNet is reported in [9] to be slower than other methods such as ICP or DCP.

- ROPNet [44] was created to solve the partial registration problem. First, a set of overlapping points is established. Afterwards, wrong correspondences are removed, turning the partial-to-partial problem into a partial-to-complete one. Finally, SVD is used to compute the transformation matrix. It is robust to noise and can generalize well.
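The SVD step shared by RPMNet and ROPNet can be sketched as follows. This is a generic weighted least-squares solution, assuming that the network has already produced a non-negative match matrix (a hard permutation matrix is the special case with a single 1 per row). The function name and the `match` argument are hypothetical; the learned feature extraction and matching stages of the actual networks are not reproduced.

```python
import numpy as np


def transform_from_matches(source, template, match):
    """4x4 rigid transform from a match matrix, solved in closed form with SVD.

    match[i, j] >= 0 is the weight with which source[i] corresponds to template[j].
    """
    weights = match.sum(axis=1)                        # confidence per source point
    # Each source point is paired with the weighted average of its matched template points.
    targets = (match @ template) / np.maximum(weights, 1e-12)[:, None]
    w = weights / weights.sum()
    src_mean, tgt_mean = w @ source, w @ targets       # weighted centroids
    H = (source - src_mean).T @ (w[:, None] * (targets - tgt_mean))
    U, _, Vt = np.linalg.svd(H)
    # Guard against reflections so that det(R) = +1.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = tgt_mean - R @ src_mean
    return T
```

Rows of `match` that sum to zero receive zero weight, which is one simple way to exclude source points that have no counterpart in the template.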

2.2. Metrics

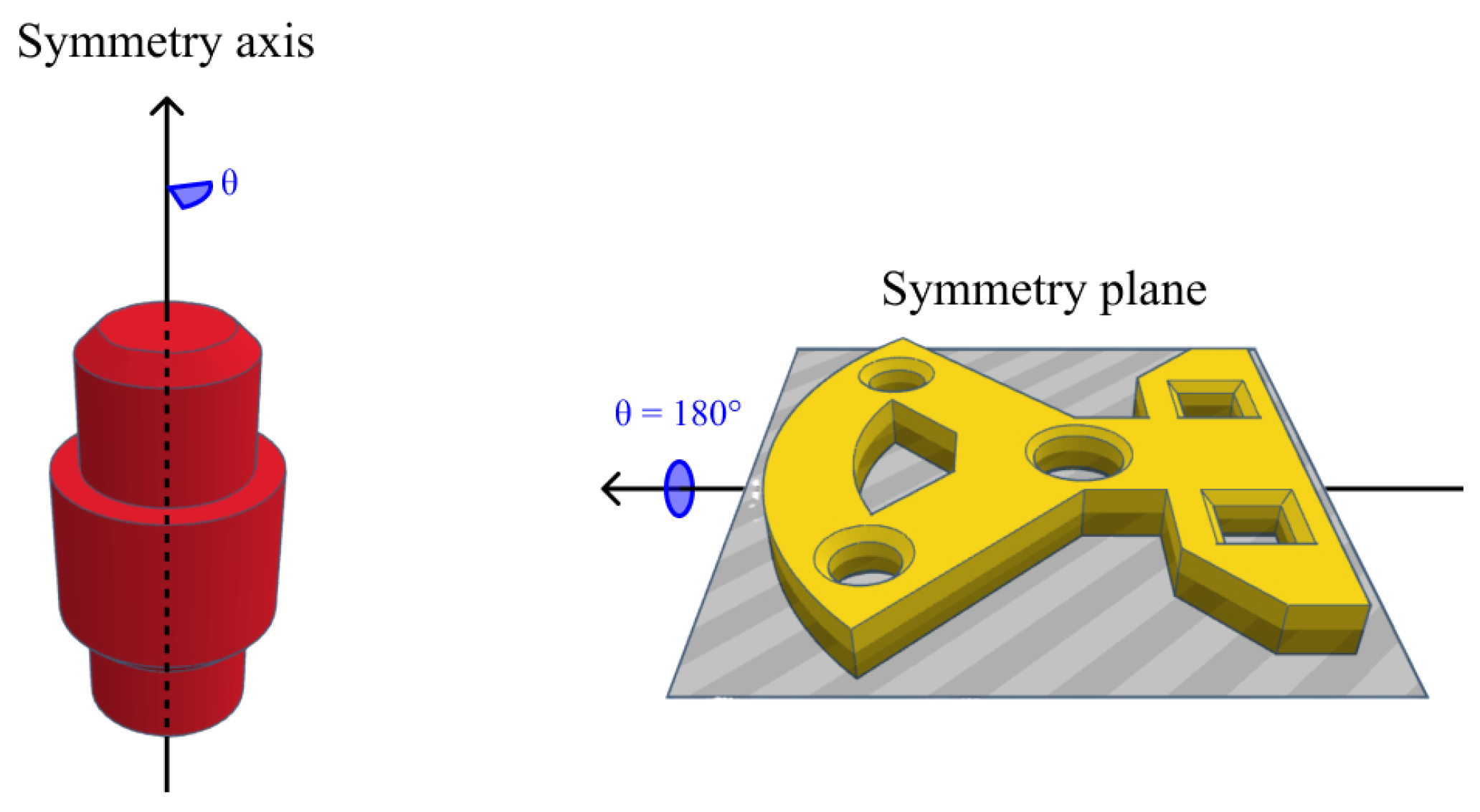
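The result tables report angular errors in degrees and translation errors in millimetres, each summarized by a mean and a standard deviation. As a generic illustration of how such quantities can be computed from an estimated and a ground-truth pose, the sketch below uses the geodesic angle between two rotation matrices and the Euclidean distance between two translation vectors. These are common choices and are assumptions here; they are not necessarily the exact definitions behind MRAE, MRTE, RMSE, or MAE, and all function names are hypothetical.

```python
import numpy as np


def rotation_error_deg(R_est, R_gt):
    """Angle (in degrees) of the residual rotation between estimate and ground truth."""
    cos_angle = (np.trace(R_gt.T @ R_est) - 1.0) / 2.0
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))


def translation_error(t_est, t_gt):
    """Euclidean distance between translations, in the unit of the inputs."""
    return float(np.linalg.norm(np.asarray(t_est) - np.asarray(t_gt)))


def summarize(values):
    """Mean and (population) standard deviation over a series of runs."""
    values = np.asarray(values, dtype=float)
    return float(values.mean()), float(values.std())
```

Clipping the cosine before `arccos` avoids NaN results when rounding pushes the value slightly outside the interval from -1 to 1.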

2.3. Materials

2.4. Ground-Truth Estimation

- We centred both point clouds on the origin by subtracting their mean.

- Using an estimate of the table's normal vector, we performed a rotation around the template x-axis, aligning the y-axes of both objects.

- Given the known rotation of the object on the table, we performed a final rotation around the new y-axis.

- We applied visual corrections to the rotation and, mainly, to the translation, similar to [6].
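The first three steps above can be sketched as the composition of a single homogeneous transform. This is a minimal illustration under several assumptions: the transform maps template points into the scan frame, only the y-z components of the table normal are used for the rotation around the x-axis, and the sign conventions of the angles are chosen arbitrarily. The function and parameter names are hypothetical, and the final visual correction is a manual step that is not modelled.

```python
import numpy as np


def rot_x(angle):
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(angle):
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def initial_ground_truth(template, scan, table_normal, yaw):
    """4x4 transform taking template points to the scan frame (before manual correction)."""
    # Step 1: centring. Both centroids are treated as the common origin.
    template_mean, scan_mean = template.mean(axis=0), scan.mean(axis=0)
    # Step 2: rotate about the template x-axis so that its y-axis follows the table normal.
    # Assumption: only the y-z components of the normal are used.
    tilt = np.arctan2(table_normal[2], table_normal[1])
    # Step 3: rotate about the new (tilted) y-axis by the known object rotation.
    R = rot_x(tilt) @ rot_y(yaw)
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = scan_mean - R @ template_mean
    return T
```

Post-multiplying by `rot_y(yaw)` applies the second rotation about the already tilted y-axis, so the y-axis alignment obtained in the previous step is preserved.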

2.5. Registration Parameters

2.6. Data Processing

3. Results

3.1. Training Validation Results

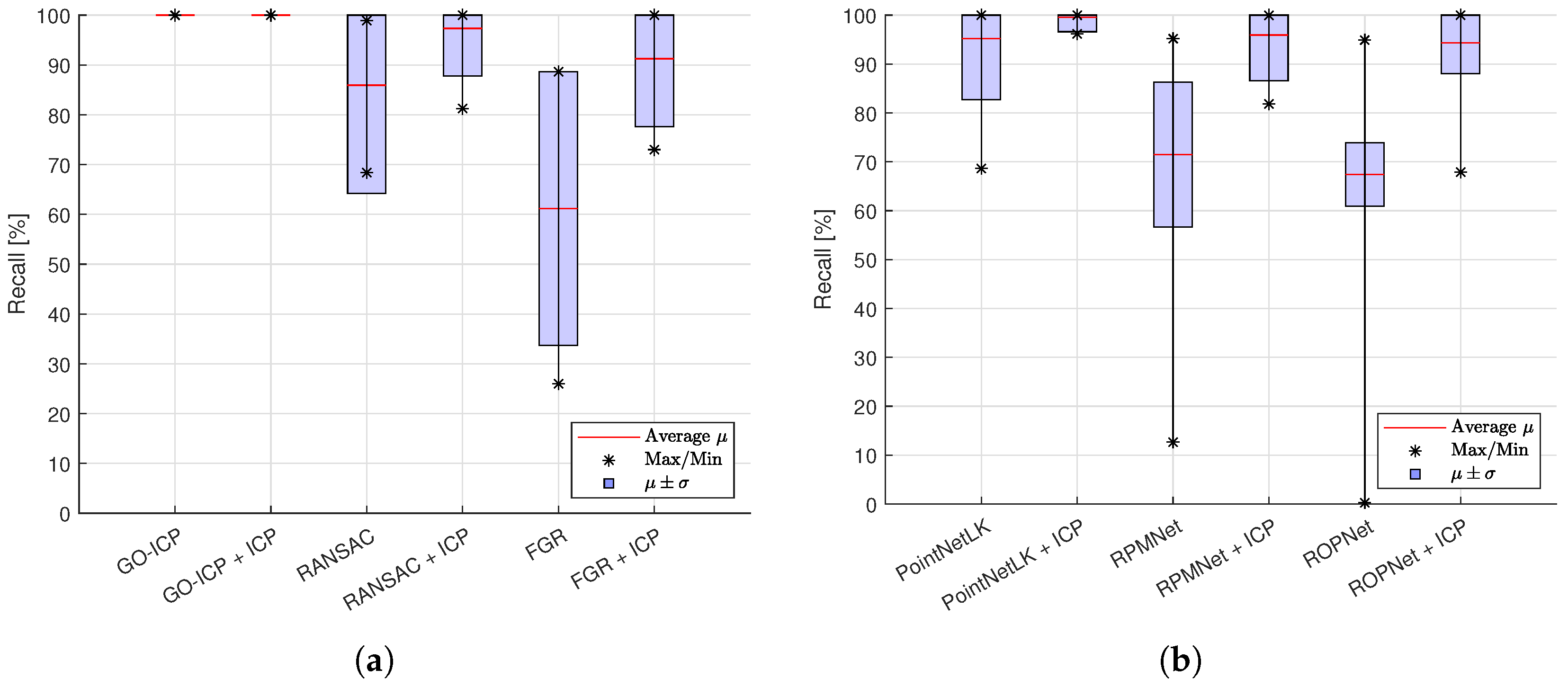

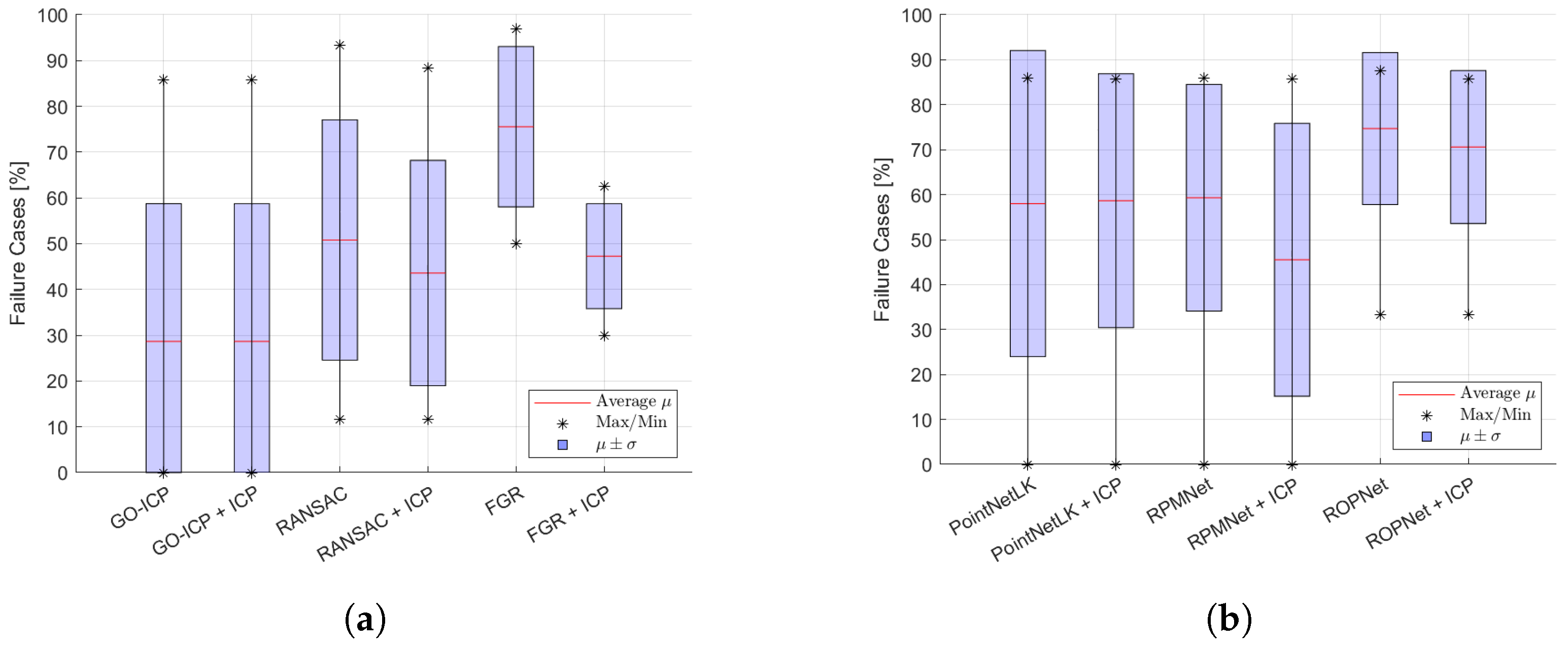

3.2. PCR Methods Comparison

4. Discussion

4.1. Registration Parameters

4.2. PCR Methods Comparison

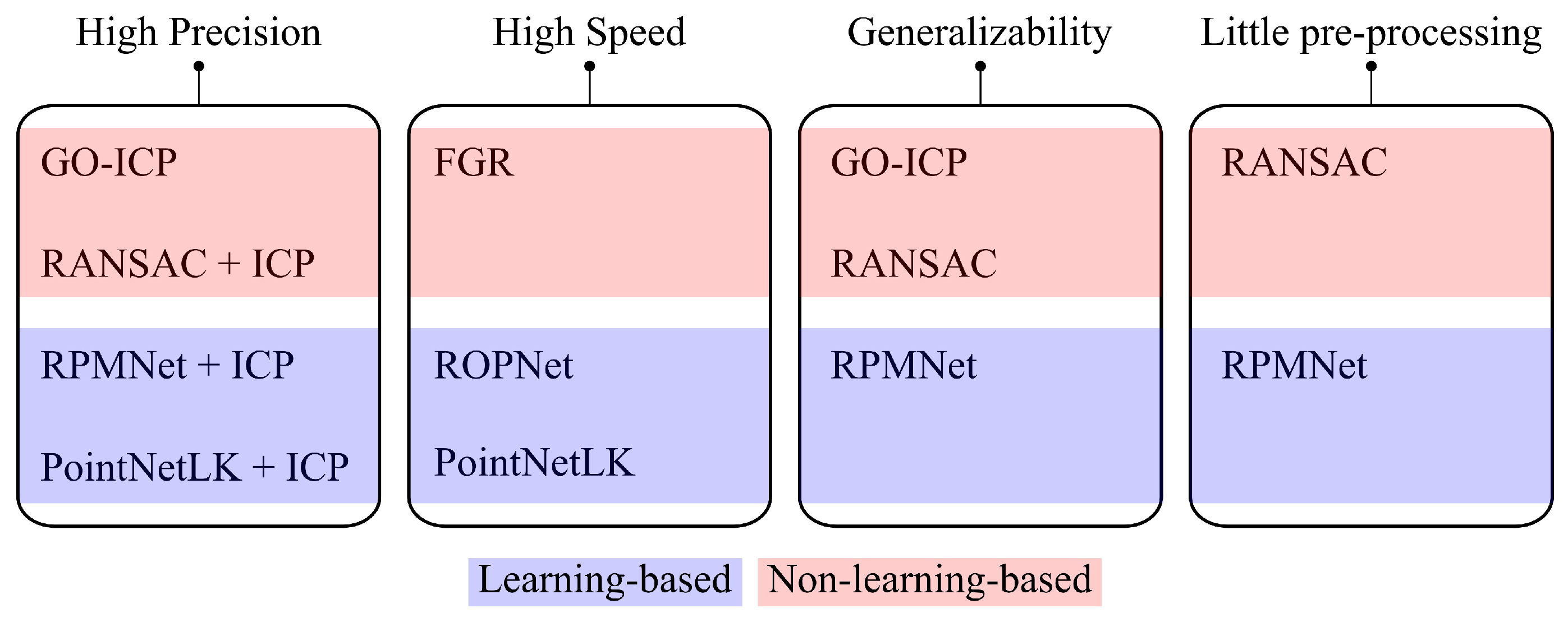

4.3. PCR Methods Guidelines

4.4. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Ground-Truth Validation

| Type | Data | MRAE [°] ↓ | MRTE [mm] ↓ | RMSE [°] ↓ | RMSE [mm] ↓ | MAE [°] ↓ | MAE [mm] ↓ | Recall [%] ↑ | R2 [/] ↑ |
|---|---|---|---|---|---|---|---|---|---|
| Cleaned point clouds | ϵ | 1.85 | 1.89 | 0.02 | 1.09 | 2.69 | 0.00 | 100 | 1.00 |
| | σ | 1.22 | 1.11 | 0.01 | 0.64 | 7.93 | 0.00 | 0.00 | 0.01 |
| | ϵ | 1.50 | 2.53 | 0.01 | 1.46 | 0.70 | 0.00 | 100 | 1.00 |
| | σ | 0.88 | 1.66 | 0.01 | 0.96 | 0.83 | 0.01 | 0.00 | 0.00 |
| | ϵ | 1.24 | 2.93 | 0.01 | 1.69 | 0.64 | 0.00 | 100 | 1.00 |
| | σ | 0.66 | 1.48 | 0.01 | 0.85 | 0.86 | 0.00 | 0.00 | 0.01 |
| | ϵ | 1.50 | 2.97 | 0.01 | 1.71 | 0.71 | 0.00 | 100 | 1.00 |
| | σ | 0.87 | 1.62 | 0.01 | 0.94 | 1.07 | 0.00 | 0.00 | 0.00 |
| | ϵ | 1.58 | 3.57 | 0.01 | 2.06 | 0.73 | 0.01 | 100 | 1.00 |
| | σ | 0.96 | 2.34 | 0.01 | 1.35 | 1.07 | 0.01 | 0.00 | 0.00 |
| | ϵ | 1.58 | 3.36 | 0.01 | 1.94 | 0.75 | 0.01 | 100 | 1.00 |
| | σ | 0.93 | 2.17 | 0.01 | 1.25 | 1.08 | 0.01 | 0.00 | 0.00 |

Appendix B. List of Averaged Metrics

| Method | Data | MRAE [°] ↓ | MRTE [mm] ↓ | RMSE [°] ↓ | RMSE [mm] ↓ | MAE [°] ↓ | MAE [mm] ↓ | Recall [%] ↑ | R2 [/] ↑ | Failure [%] ↓ | Time [s] ↓ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Non-learning-based methods | | | | | | | | | | | |
| GO-ICP | ϵ | 4.85 | 2.73 | 0.04 | 1.58 | 4.00 | 0.00 | 100 | 0.98 | 28.64 | 15.50 |
| | σ | 3.08 | 1.61 | 0.02 | 0.93 | 4.13 | 0.01 | 0.00 | 0.04 | 30.08 | 23.14 |
| GO-ICP + ICP | ϵ | 4.85 | 2.73 | 0.04 | 1.58 | 3.99 | 0.00 | 100 | 0.98 | 28.64 | 0.01 |
| | σ | 3.10 | 1.58 | 0.03 | 0.91 | 4.14 | 0.01 | 0.00 | 0.04 | 30.08 | 0.00 |
| RANSAC | ϵ | 18.62 | 6.23 | 0.16 | 3.60 | 16.43 | 0.02 | 85.94 | 0.88 | 50.82 | 0.27 |
| | σ | 12.64 | 4.20 | 0.09 | 2.42 | 31.31 | 0.03 | 21.71 | 0.13 | 26.24 | 0.22 |
| RANSAC + ICP | ϵ | 5.85 | 2.83 | 0.05 | 1.63 | 9.70 | 0.01 | 97.34 | 0.93 | 43.58 | 0.04 |
| | σ | 7.20 | 2.51 | 0.06 | 1.45 | 29.05 | 0.02 | 9.52 | 0.16 | 24.63 | 0.02 |
| FGR | ϵ | 27.41 | 11.67 | 0.29 | 6.74 | 19.08 | 0.06 | 61.18 | 0.58 | 75.57 | 0.10 |
| | σ | 38.52 | 4.56 | 0.13 | 2.63 | 14.14 | 0.06 | 27.50 | 0.20 | 17.52 | 0.17 |
| FGR + ICP | ϵ | 12.59 | 4.95 | 0.10 | 2.86 | 7.81 | 0.01 | 91.26 | 0.81 | 47.26 | 0.04 |
| | σ | 12.26 | 3.40 | 0.10 | 1.96 | 7.00 | 0.02 | 13.62 | 0.20 | 11.46 | 0.03 |
| Learning-based registration | | | | | | | | | | | |
| PointNetLK | ϵ | 14.04 | 6.36 | 0.11 | 3.67 | 12.55 | 0.02 | 95.18 | 0.86 | 57.92 | 0.12 |
| | σ | 13.86 | 1.09 | 0.11 | 0.63 | 34.82 | 0.01 | 12.44 | 0.16 | 34.05 | 0.06 |
| PointNetLK + ICP | ϵ | 4.12 | 2.36 | 0.03 | 1.36 | 7.80 | 0.00 | 99.57 | 0.97 | 58.65 | 0.05 |
| | σ | 8.55 | 1.31 | 0.07 | 0.76 | 36.18 | 0.00 | 2.95 | 0.15 | 28.24 | 0.03 |
| RPMNet | ϵ | 25.78 | 10.21 | 0.21 | 5.90 | 11.08 | 0.04 | 71.48 | 0.62 | 59.31 | 0.12 |
| | σ | 7.77 | 1.09 | 0.06 | 0.63 | 2.75 | 0.01 | 14.83 | 0.14 | 25.20 | 0.09 |
| RPMNet + ICP | ϵ | 7.59 | 3.65 | 0.06 | 2.11 | 4.52 | 0.01 | 95.94 | 0.89 | 45.52 | 0.04 |
| | σ | 8.05 | 2.80 | 0.06 | 1.62 | 6.61 | 0.01 | 9.31 | 0.22 | 30.34 | 0.03 |
| ROPNet | ϵ | 25.88 | 10.99 | 0.21 | 6.35 | 30.84 | 0.05 | 67.38 | 0.72 | 74.70 | 0.06 |
| | σ | 10.22 | 2.19 | 0.08 | 1.27 | 9.32 | 0.02 | 6.50 | 0.08 | 16.90 | 0.02 |
| ROPNet + ICP | ϵ | 7.90 | 4.89 | 0.06 | 2.82 | 31.91 | 0.01 | 94.33 | 0.95 | 70.60 | 0.04 |
| | σ | 12.31 | 3.30 | 0.09 | 1.91 | 8.76 | 0.02 | 6.29 | 0.12 | 17.01 | 0.05 |

References

1. Alizadehsalehi, S. BIM/Digital Twin-Based Construction Progress Monitoring through Reality Capture to Extended Reality (DRX). Ph.D. Thesis, Eastern Mediterranean University, İsmet İnönü Bulvarı, Gazimağusa, 2020.

2. Bhattacharya, B.; Winer, E.H. Augmented reality via expert demonstration authoring (AREDA). Comput. Ind. 2019, 105, 61–79.

3. Jerbić, B.; Šuligoj, F.; Švaco, M.; Šekoranja, B. Robot Assisted 3D Point Cloud Object Registration. Procedia Eng. 2015, 100, 847–852.

4. Ciocarlie, M.; Hsiao, K.; Jones, E.G.; Chitta, S.; Rusu, R.B.; Şucan, I.A. Towards Reliable Grasping and Manipulation in Household Environments. In Experimental Robotics; Khatib, O., Kumar, V., Sukhatme, G., Eds.; Springer Tracts in Advanced Robotics; Springer: Berlin/Heidelberg, Germany, 2014; Volume 79, pp. 241–252.

5. Cheng, L.; Chen, S.; Liu, X.; Xu, H.; Wu, Y.; Li, M.; Chen, Y. Registration of Laser Scanning Point Clouds: A Review. Sensors 2018, 18, 1641.

6. Yang, H.; Shi, J.; Carlone, L. TEASER: Fast and Certifiable Point Cloud Registration. IEEE Trans. Robot. 2021, 37, 314–333.

7. Sarode, V.; Li, X.; Goforth, H.; Aoki, Y.; Srivatsan, R.A.; Lucey, S.; Choset, H. PCRNet: Point Cloud Registration Network using PointNet Encoding. arXiv 2019, arXiv:1908.07906.

8. Yang, J.; Li, H.; Campbell, D.; Jia, Y. Go-ICP: A Globally Optimal Solution to 3D ICP Point-Set Registration. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 2241–2254.

9. Yew, Z.J.; Lee, G.H. RPM-Net: Robust Point Matching Using Learned Features. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11821–11830.

10. Li, L.; Wang, R.; Zhang, X. A Tutorial Review on Point Cloud Registrations: Principle, Classification, Comparison, and Technology Challenges. Math. Probl. Eng. 2021, 2021, 9953910.

11. Stilla, U.; Xu, Y. Change detection of urban objects using 3D point clouds: A review. ISPRS J. Photogramm. Remote Sens. 2023, 197, 228–255.

12. Gu, X.; Wang, X.; Guo, Y. A Review of Research on Point Cloud Registration Methods. Mater. Sci. Eng. 2019, 782, 022070.

13. Zhang, Z.; Dai, Y.; Sun, J. Deep learning based point cloud registration: An overview. Virtual Real. Intell. Hardw. 2020, 2, 222–246.

14. Huang, X.; Mei, G.; Zhang, J.; Abbas, R. A comprehensive survey on point cloud registration. arXiv 2021, arXiv:2103.02690.

15. Huang, X.; Mei, G.; Zhang, J. Cross-source point cloud registration: Challenges, progress and prospects. Neurocomputing 2023, 548, 126383.

16. The Stanford 3D Scanning Repository. Available online: https://graphics.stanford.edu/data/3Dscanrep (accessed on 14 September 2023).

17. Zeng, A.; Song, S.; Niessner, M.; Fisher, M.; Xiao, J.; Funkhouser, T. 3DMatch: Learning Local Geometric Descriptors from RGB-D Reconstructions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 199–208.

18. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

19. Monji-Azad, S.; Hesser, J.; Löw, N. A review of non-rigid transformations and learning-based 3D point cloud registration methods. ISPRS J. Photogramm. Remote Sens. 2023, 196, 58–72.

20. Fontana, S.; Cattaneo, D.; Ballardini, A.L.; Vaghi, M.; Sorrenti, D.G. A benchmark for point clouds registration algorithms. Robot. Auton. Syst. 2021, 140, 103734.

21. Osipov, A.; Ostanin, M.; Klimchik, A. Comparison of Point Cloud Registration Algorithms for Mixed-Reality Cross-Device Global Localization. Information 2023, 14, 149.

22. Drost, B.; Ulrich, M.; Bergmann, P.; Hartinger, P.; Steger, C. Introducing MVTec ITODD—A Dataset for 3D Object Recognition in Industry. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017; pp. 2200–2208.

23. Abu-Dakka, F.J.; Nemec, B.; Kramberger, A.; Buch, A.G.; Krüger, N.; Ude, A. Solving peg-in-hole tasks by human demonstration and exception strategies. Ind. Robot. Int. J. 2014, 41, 575–584.

24. Hattab, A.; Taubin, G. 3D Modeling by Scanning Physical Modifications. In Proceedings of the 2015 28th SIBGRAPI Conference on Graphics, Patterns and Images, Salvador, Bahia, Brazil, 26–29 August 2015; pp. 25–32.

25. Decker, N.; Wang, Y.; Huang, Q. Efficiently registering scan point clouds of 3D printed parts for shape accuracy assessment and modeling. J. Manuf. Syst. 2020, 56, 587–597.

26. Kumar, G.A.; Patil, A.K.; Chai, Y.H. Alignment of 3D point cloud, CAD model, real-time camera view and partial point cloud for pipeline retrofitting application. In Proceedings of the 2018 International Conference on Electronics, Information, and Communication (ICEIC), Honolulu, HI, USA, 24–27 January 2018; pp. 1–4.

27. Xu, H.; Chen, G.; Wang, Z.; Sun, L.; Su, F. RGB-D-Based Pose Estimation of Workpieces with Semantic Segmentation and Point Cloud Registration. Sensors 2019, 19, 1873.

28. Si, H.; Qiu, J.; Li, Y. A Review of Point Cloud Registration Algorithms for Laser Scanners: Applications in Large-Scale Aircraft Measurement. Appl. Sci. 2022, 12, 10247.

29. Liu, L.; Liu, B. Comparison of Several Different Registration Algorithms. Int. J. Adv. Netw. Monit. Control 2020, 5, 22–27.

30. Brightman, N.; Fan, L. A brief overview of the current state, challenging issues and future directions of point cloud registration. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, X-3/W1-2022, 17–23.

31. Zhao, B.; Chen, X.; Le, X.; Xi, J.; Jia, Z. A Comprehensive Performance Evaluation of 3-D Transformation Estimation Techniques in Point Cloud Registration. IEEE Trans. Instrum. Meas. 2021, 70, 5018814.

32. Xu, N.; Qin, R.; Song, S. Point cloud registration for LiDAR and photogrammetric data: A critical synthesis and performance analysis on classic and deep learning algorithms. ISPRS Open J. Photogramm. Remote Sens. 2023, 8, 100032.

33. Ao, S.; Hu, Q.; Yang, B.; Markham, A.; Guo, Y. SpinNet: Learning a General Surface Descriptor for 3D Point Cloud Registration. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 11748–11757.

34. Zhao, Y.; Fan, L. Review on Deep Learning Algorithms and Benchmark Datasets for Pairwise Global Point Cloud Registration. Remote Sens. 2023, 15, 2060.

35. Qian, J.; Tang, D. RRGA-Net: Robust Point Cloud Registration Based on Graph Convolutional Attention. Sensors 2023, 23, 9651.

36. Yuan, W.; Eckart, B.; Kim, K.; Jampani, V.; Fox, D.; Kautz, J. DeepGMR: Learning Latent Gaussian Mixture Models for Registration. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 733–750.

37. Biber, P.; Strasser, W. The normal distributions transform: A new approach to laser scan matching. In Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No.03CH37453), Las Vegas, NV, USA, 27 October–1 November 2003; Volume 3, pp. 2743–2748.

38. Myronenko, A.; Song, X. Point-Set Registration: Coherent Point Drift. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 2262–2275.

39. Lee, D.; Hamsici, O.C.; Feng, S.; Sharma, P.; Gernoth, T. DeepPRO: Deep Partial Point Cloud Registration of Objects. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 5663–5672.

40. Yew, Z.J.; Lee, G.H. REGTR: End-to-end Point Cloud Correspondences with Transformers. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 6667–6676.

41. Fischler, M.A.; Bolles, R.C. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Graph. Image Process. 1981, 24, 381–395.

42. Zhou, Q.Y.; Park, J.; Koltun, V. Fast Global Registration. In Computer Vision–ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2016; Volume 9906, pp. 766–782.

43. Aoki, Y.; Goforth, H.; Srivatsan, R.A.; Lucey, S. PointNetLK: Robust & Efficient Point Cloud Registration Using PointNet. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 7156–7165.

44. Zhu, L.; Liu, D.; Lin, C.; Yan, R.; Gómez-Fernández, F.; Yang, N.; Feng, Z. Point Cloud Registration using Representative Overlapping Points. arXiv 2021, arXiv:2107.02583.

45. Ge, X. Non-rigid registration of 3D point clouds under isometric deformation. ISPRS J. Photogramm. Remote Sens. 2016, 121, 192–202.

46. Chen, Q.Y.; Feng, D.Z.; Hu, H.S. A robust non-rigid point set registration algorithm using both local and global constraints. Vis. Comput. 2023, 39, 1217–1234.

47. Zhou, Q.Y.; Park, J.; Koltun, V. Open3D: A Modern Library for 3D Data Processing. arXiv 2018, arXiv:1801.09847.

48. Mahmood, B.; Han, S. 3D Registration of Indoor Point Clouds for Augmented Reality. In Computing in Civil Engineering; American Society of Civil Engineers: Reston, VA, USA, 2019; p. 8.

49. Wang, S.; Kang, Z.; Chen, L.; Guo, Y.; Zhao, Y.; Chai, Y. Partial point cloud registration algorithm based on deep learning and non-corresponding point estimation. Vis. Comput. 2023, Online.

50. Rusu, R.B.; Blodow, N.; Beetz, M. Fast Point Feature Histograms (FPFH) for 3D registration. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 3212–3217.

51. Sarode, V. Learning3D: A Modern Library for Deep Learning on 3D Point Clouds Data. Available online: https://github.com/vinits5/learning3d (accessed on 4 November 2022).

52. Wang, Y.; Solomon, J.M. PRNet: Self-Supervised Learning for Partial-to-Partial Registration. arXiv 2019, arXiv:1910.12240v2.

53. Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3D ShapeNets: A deep representation for volumetric shapes. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1912–1920.

54. Wang, Y.; Solomon, J. Deep Closest Point: Learning Representations for Point Cloud Registration. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3522–3531.

55. Zhao, J. Point Cloud Denoise. 2023. Original-Date: 2019-05-07T06:25:29Z. Available online: https://github.com/aipiano/guided-filter-point-cloud-denoise (accessed on 30 April 2023).

56. He, K.; Sun, J.; Tang, X. Guided Image Filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409.

57. Han, X.F.; Jin, J.S.; Wang, M.J.; Jiang, W.; Gao, L.; Xiao, L. A review of algorithms for filtering the 3D point cloud. Signal Process. Image Commun. 2017, 57, 103–112.

58. Hurtado, J.; Gattass, M.; Raposo, A. 3D point cloud denoising using anisotropic neighborhoods and a novel sharp feature detection algorithm. Vis. Comput. 2023, 39, 5823–5848.

59. Wu, H.; Miao, Y.; Fu, R. Point cloud completion using multiscale feature fusion and cross-regional attention. Image Vis. Comput. 2021, 111, 104193.

) indicates the parameter is checked, but no convergence was reached. Training models are reported in Section 2.3.

| Method | GO-ICP | RANSAC | FGR | PointNetLK | RPMNet | ROPNet |
|---|---|---|---|---|---|---|
| Zero mean | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Refinement | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Bounding box |  | / |  |  |  |  |
| Voxel size [m] |  |  |  |  |  |  |
| MSE Threshold [m] | / | / | / | / | / |  |
| Trim fraction [/] | / | / | / | / | / |  |
| Training model | / | / | / | ✓ | ✓ | ✓ |

| Dataset | Data [A/L] | MRAE [°] ↓ | MRTE [mm] ↓ | RMSE [°] ↓ | RMSE [mm] ↓ | MAE [°] ↓ | MAE [mm] ↓ | Recall [%] ↑ | R2 [/] ↑ |
|---|---|---|---|---|---|---|---|---|---|
| PointNetLK | | | | | | | | | |
| 1 | A | 2.13 | 0.78 | 0.07 | 0.05 | 0.37 | 0.00 | 91.71 | 1.00 |
| 2 | L | 0.31 | 2.94 | 0.00 | 2.88 | 0.13 | 0.01 | 97.82 | 1.00 |
| RPMNet | | | | | | | | | |
| 1 | A | 2.60 | 3.00 | 0.04 | 2.23 | 0.82 | 0.00 | 70.76 | 1.00 |
| 2 | A | 2.90 | 3.16 | 0.05 | 2.14 | 0.67 | 0.00 | 72.82 | 1.00 |
| 3 | A | 2.71 | 16.42 | 0.04 | 10.52 | 0.85 | 0.11 | 49.78 | 1.00 |
| 4 | A | 2.40 | 25.76 | 0.02 | 17.94 | 0.98 | 0.32 | 30.40 | 1.00 |
| ROPNet | | | | | | | | | |
| 1 | A | 0.02 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 100.00 | 1.00 |
| 2 | A | 0.24 | 1.76 | 0.00 | 1.22 | 0.11 | 0.00 | 99.91 | 1.00 |
| 3 | A | 0.99 | 8.83 | 0.02 | 11.95 | 0.49 | 0.14 | 79.67 | 1.00 |
| 4 | A | 1.12 | 11.51 | 0.01 | 7.33 | 0.48 | 0.05 | 69.58 | 1.00 |

) indicates no convergence.

| Method ↓ | Precision | Variance | Speed | Generalizability | Pre-Processing |
|---|---|---|---|---|---|
| Metric → | R2 ↑ | σ(R2) ↓ | Time [s] ↓ | Failure [%] ↓ | Recall [%] for BB = 1.8 |
| GO-ICP | 0.98 | 0.04 | 15.50 | 28.64 |  |
| RANSAC | 0.93 | 0.16 | 0.27 | 43.58 | 21.70 |
| FGR | 0.81 | 0.20 | 0.10 | 47.26 | 12.65 |
| PointNetLK | 0.97 | 0.15 | 0.12 | 58.65 | 0 |
| RPMNet | 0.89 | 0.22 | 0.12 | 45.52 | 48.87 |
| ROPNet | 0.95 | 0.12 | 0.06 | 70.60 | / |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Denayer, M.; De Winter, J.; Bernardes, E.; Vanderborght, B.; Verstraten, T. Comparison of Point Cloud Registration Techniques on Scanned Physical Objects. Sensors 2024, 24, 2142. https://doi.org/10.3390/s24072142
