Securing Your Airspace: Detection of Drones Trespassing Protected Areas

Abstract

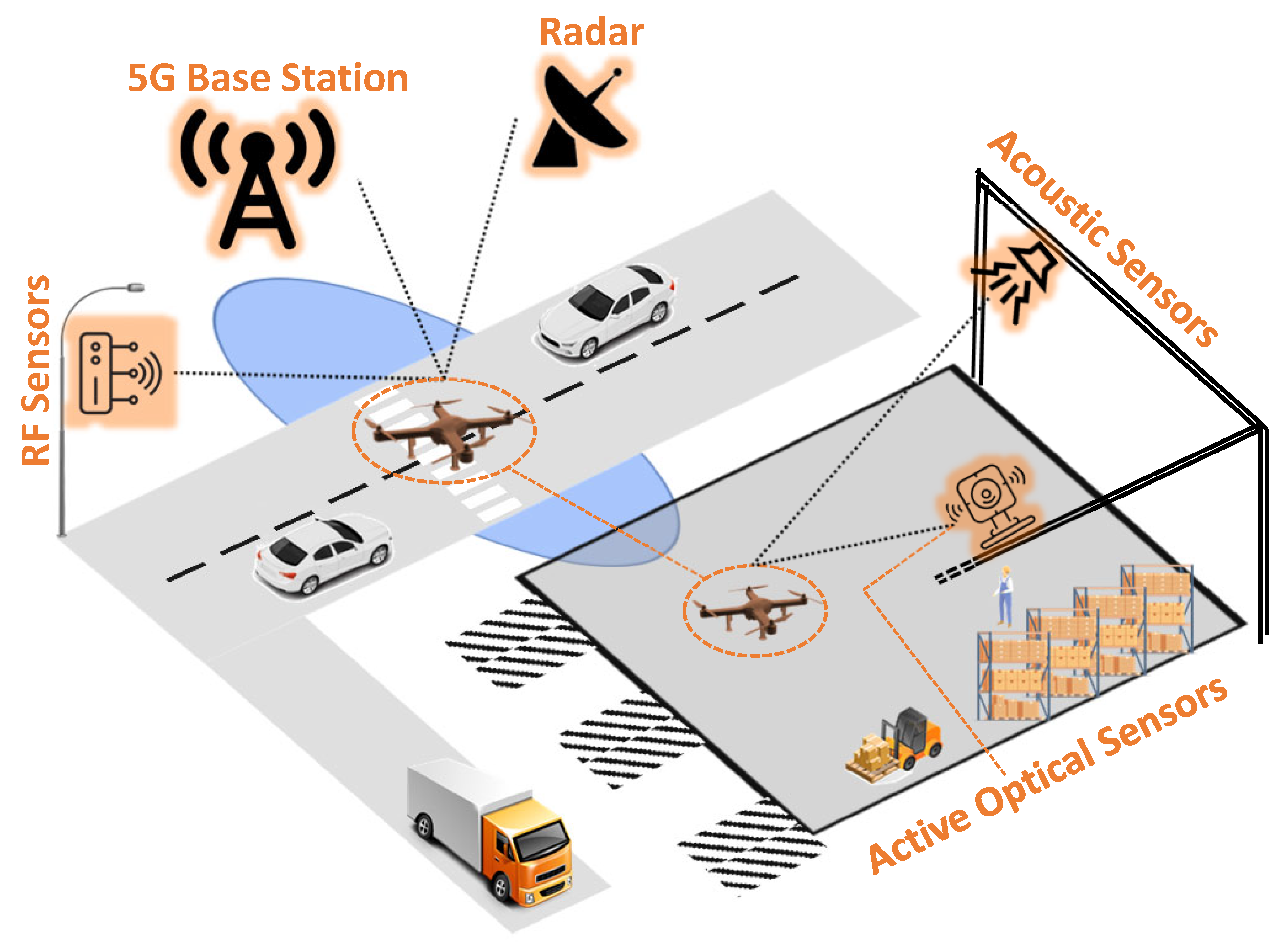

1. Introduction

2. Radars

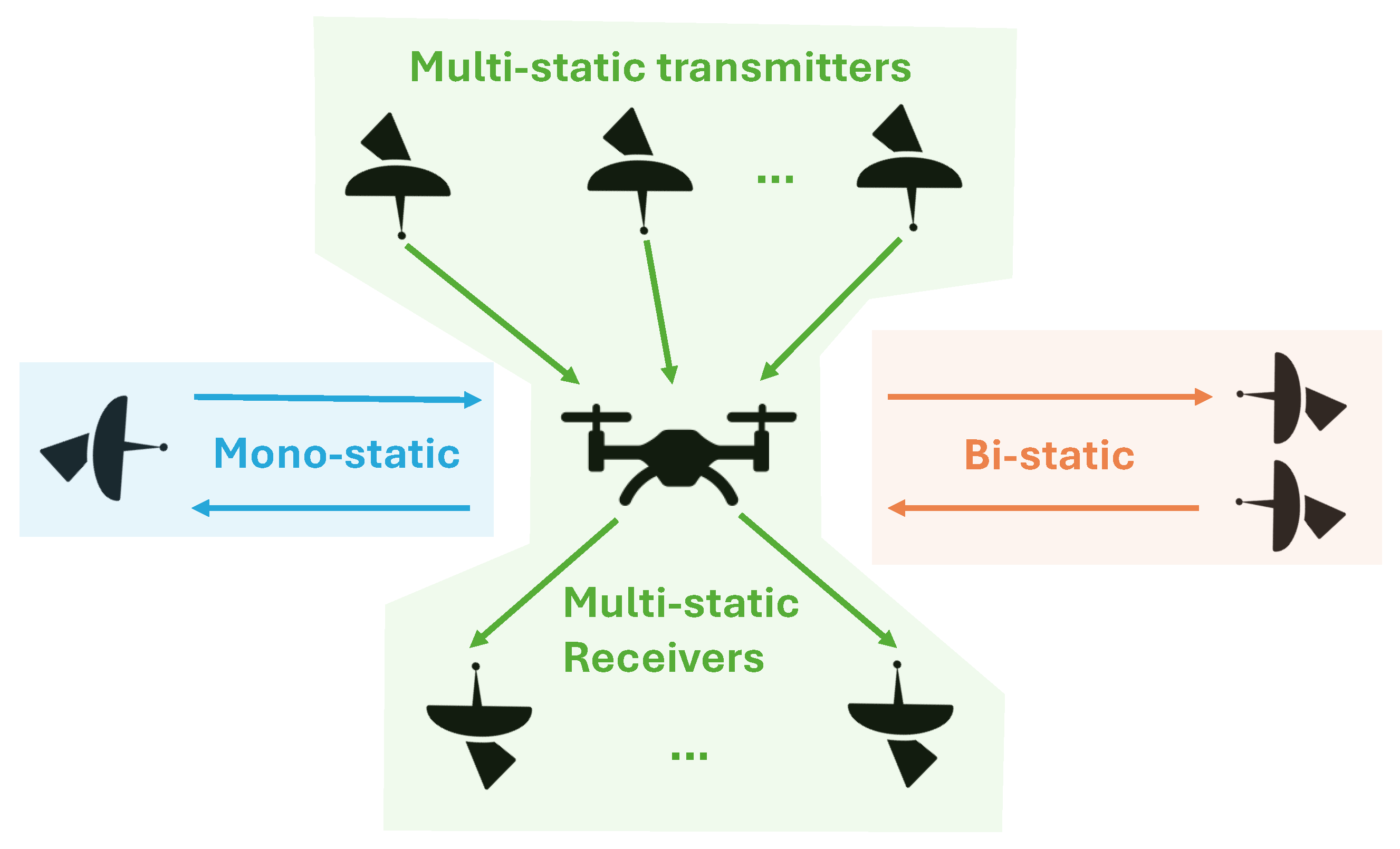

2.1. Radar Configurations

2.1.1. Monostatic Radar Configurations

2.1.2. Bistatic Radar Configurations

2.1.3. Multistatic Radar Configurations

2.2. Radar Cross Section

2.3. Frequency and Bandwidth

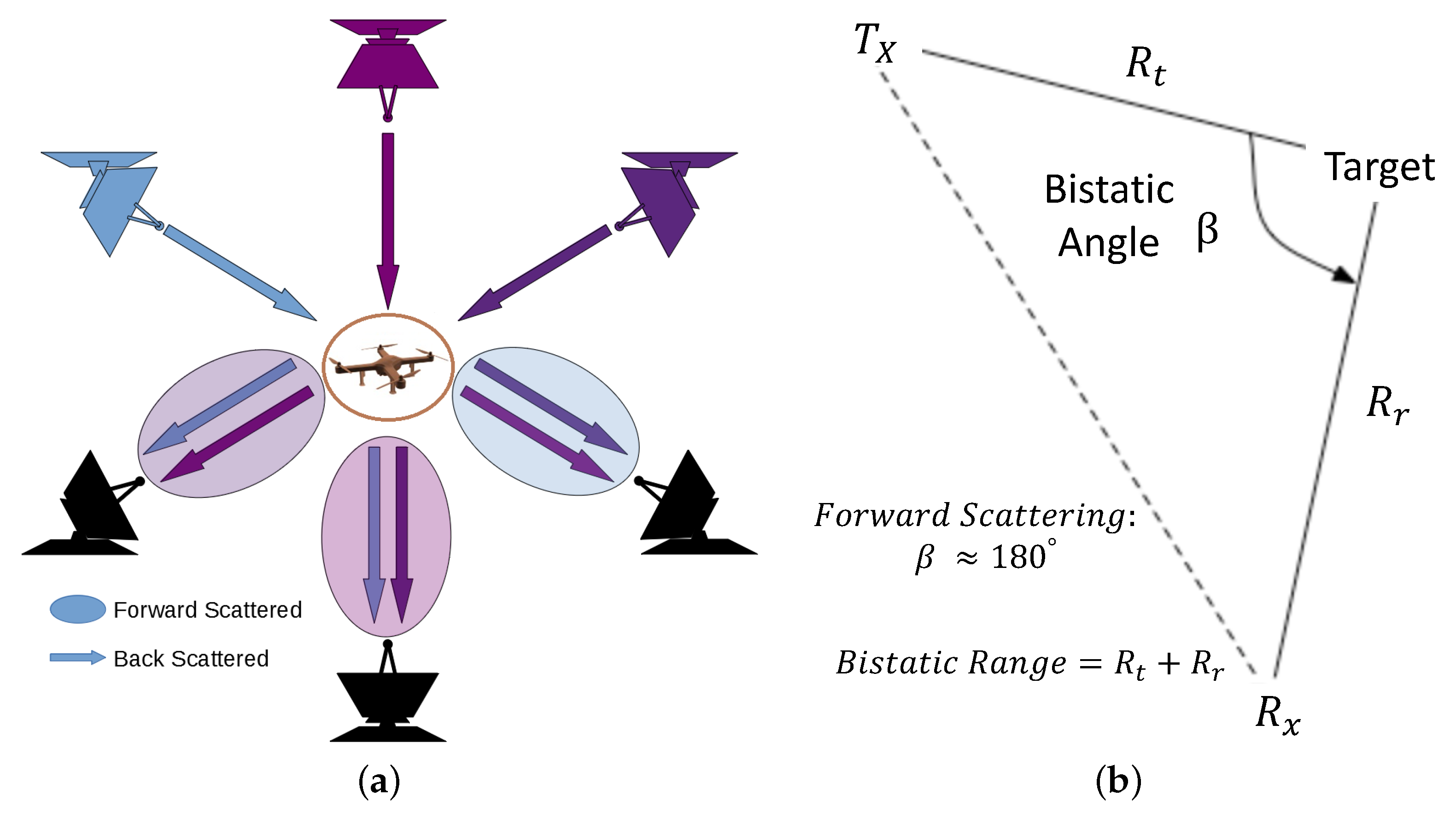

2.4. Radar Scattering

2.5. Radar Signal Power

2.6. Active or Passive

2.7. Beam Steering

2.8. Mechanical or Multi-Channel Scanning

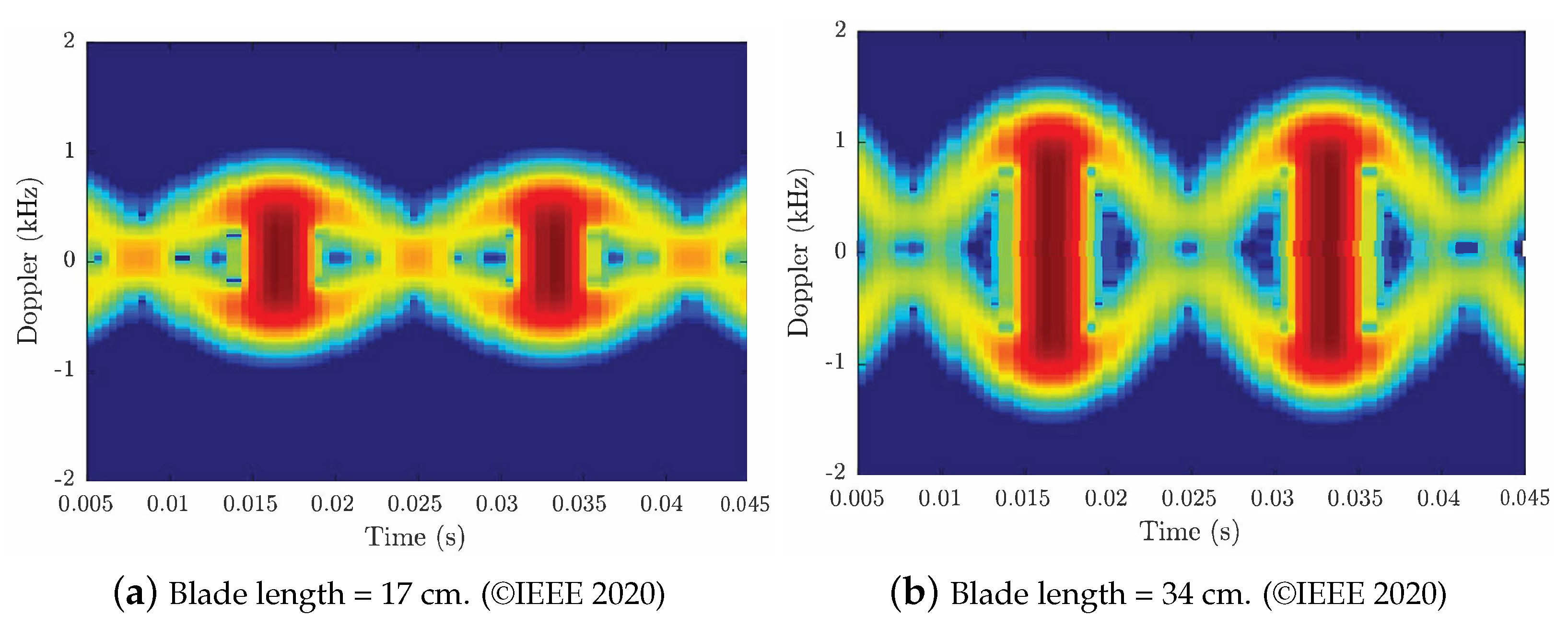

2.9. Micro-Doppler Analysis

2.10. Future Radar Drone Detection

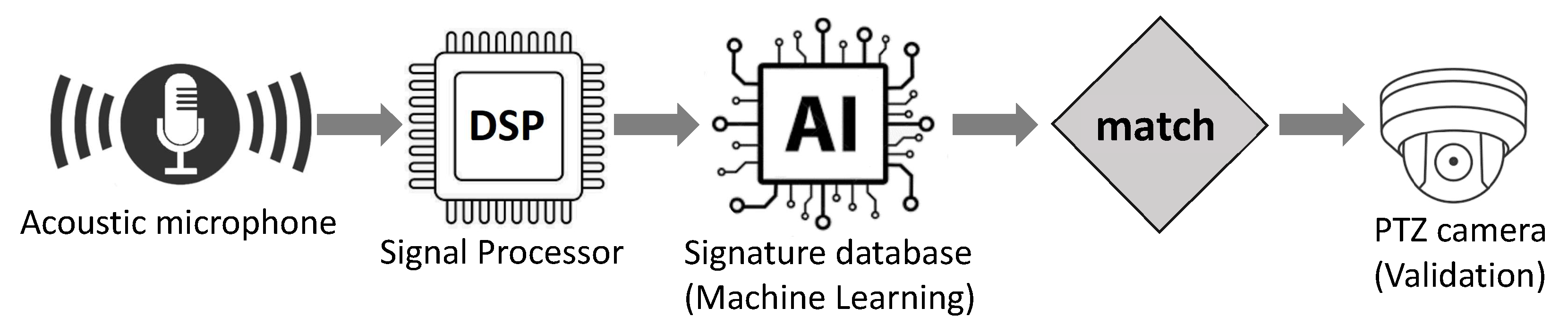

3. Acoustic Sensors

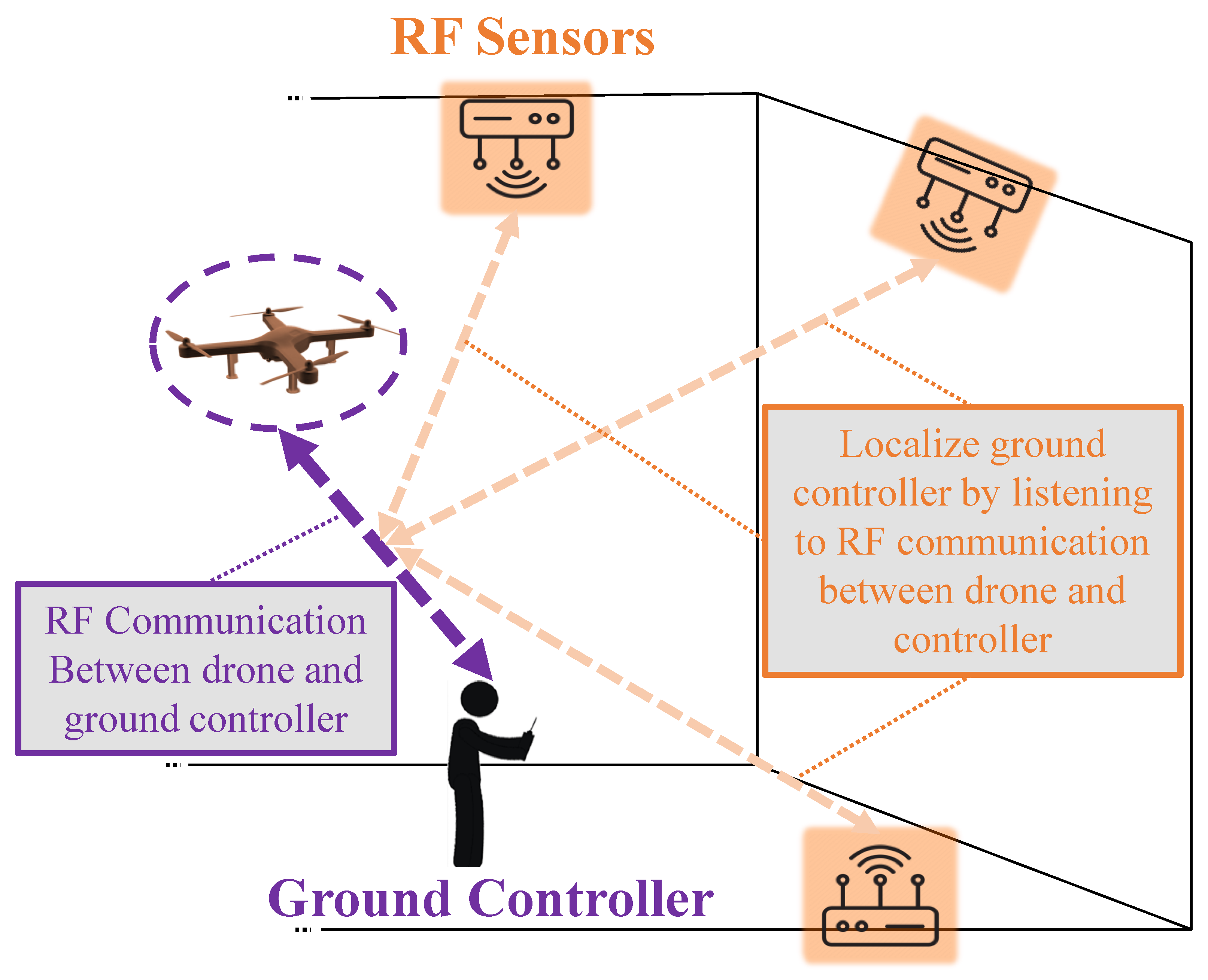

4. RF Ground Communication Sensors

5. Optical Sensors

6. Multi-Sensor Approach

7. Discussion

8. Conclusions & Future Work Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned Aerial Vehicle |
| RF | Radio Frequency |
| RCS | Radar Cross Section |
| CW | Continuous Wave |
| FMCW | Frequency Modulated Continuous Wave |
| LoS | Line of Sight |
| PTZ | Pan Tilt Zoom |
| MIMO | Multiple-Input Multiple-Output |
| GAN | Generative Adversarial Network |
| CNN | Convolutional Neural Networks |
| RNN | Recurrent Neural Network |
| CRNN | Convolutional Recurrent Neural Network |
| SimAM | Simplified Attention Module |
| UWB | Ultra-Wideband |
| TCSPC | Time-Correlated Single Photon Counting |
| FOV | Field of View |
| UAM | Urban Air Mobility |
| SOCP | Second Order Cone Program |
| CoNNs | Concurrent Neural Networks |
| MEMS | Micro-Electro-Mechanical Systems |
| RPASs | Remotely Piloted Aircraft Systems |

| Detection Techniques | Advantages | Disadvantages |

|---|---|---|

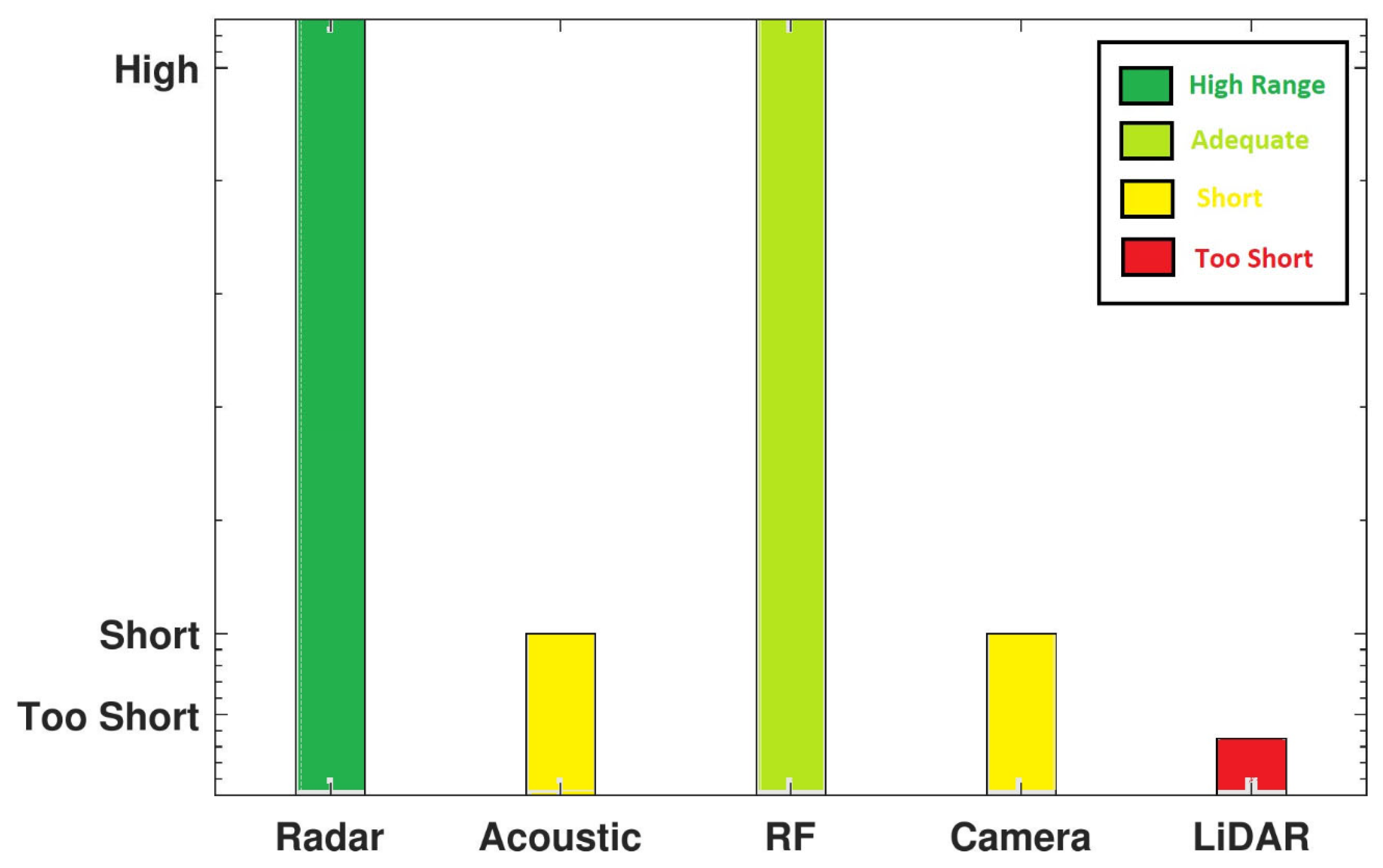

| Radar | Operates in day/night, acoustically noisy, and visually impaired environments. Long range. Constant tracking. Performs even if drone flies autonomously (without RF emissions). Drone size/type detection via micro-Doppler analysis. | Small RCS can affect performance (in cmWave radars, for regular-sized commercial drones, RCS typically ranges from dBsm to dBsm). Active radars require transmission license and frequency check to prevent interference. |

| Acoustic microphone array | Operates independent of visual conditions (day, night, fog, etc.). Performs even if drone flies autonomously. Operates in LoS/NLoS. Low-cost implementation. Low energy consumption. Easy to deploy. | Short detection range (detection range < 500 m). Performance degrades in loud and noisy environments. May work poorly in crowded urban environments due to acoustic noise. |

| RF signals | Operates in day/night, acoustically noisy, visually impaired, and LoS/NLoS environments. No licence required. Low cost sensors (e.g., SDRs). Can locate the controller of the drone on the ground. | Detection fails in cases where there is no RF signal transmission from the drone. May work poorly in crowded urban areas due to RF interference. |

| Vision-based | Offers ancillary information to classify the exact type of drone. Can record images as forensic evidence for use in eventual prosecution. | Short detection range (e.g., LiDAR sensors’ range < 50 m). Requires LoS. Relative expensive sensors. High computational cost. Performance degrades under different weather conditions (fog, dust, clouds, etc) and in visually impaired environments. |

| Multi-sensor | Combines advantages of multiple methods. Has better overall performance. Robust under different scenarios and environmental conditions. | Increased cost and computational complexity compared to single sensors. |

| Stations | Detection | Validation | Localization |
|---|---|---|---|
| Urban | 5G cell tower antennas functioning as high-resolution phased-array active radars | Surveillance drones equipped with cameras (optical sensors) that can fly close to the target and provide a better view | RF sensing to localize the controller of the drone on the ground |
| Rural | Machine learning program to analyze data captured with acoustic antenna arrays | PTZ camera to provide a better view of the target | RF sensing to localize the controller of the drone on the ground |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Famili, A.; Stavrou, A.; Wang, H.; Park, J.-M.; Gerdes, R. Securing Your Airspace: Detection of Drones Trespassing Protected Areas. Sensors 2024, 24, 2028. https://doi.org/10.3390/s24072028