Deep Learning-Based Multi-Class Segmentation of the Paranasal Sinuses of Sinusitis Patients Based on Computed Tomographic Images

Abstract

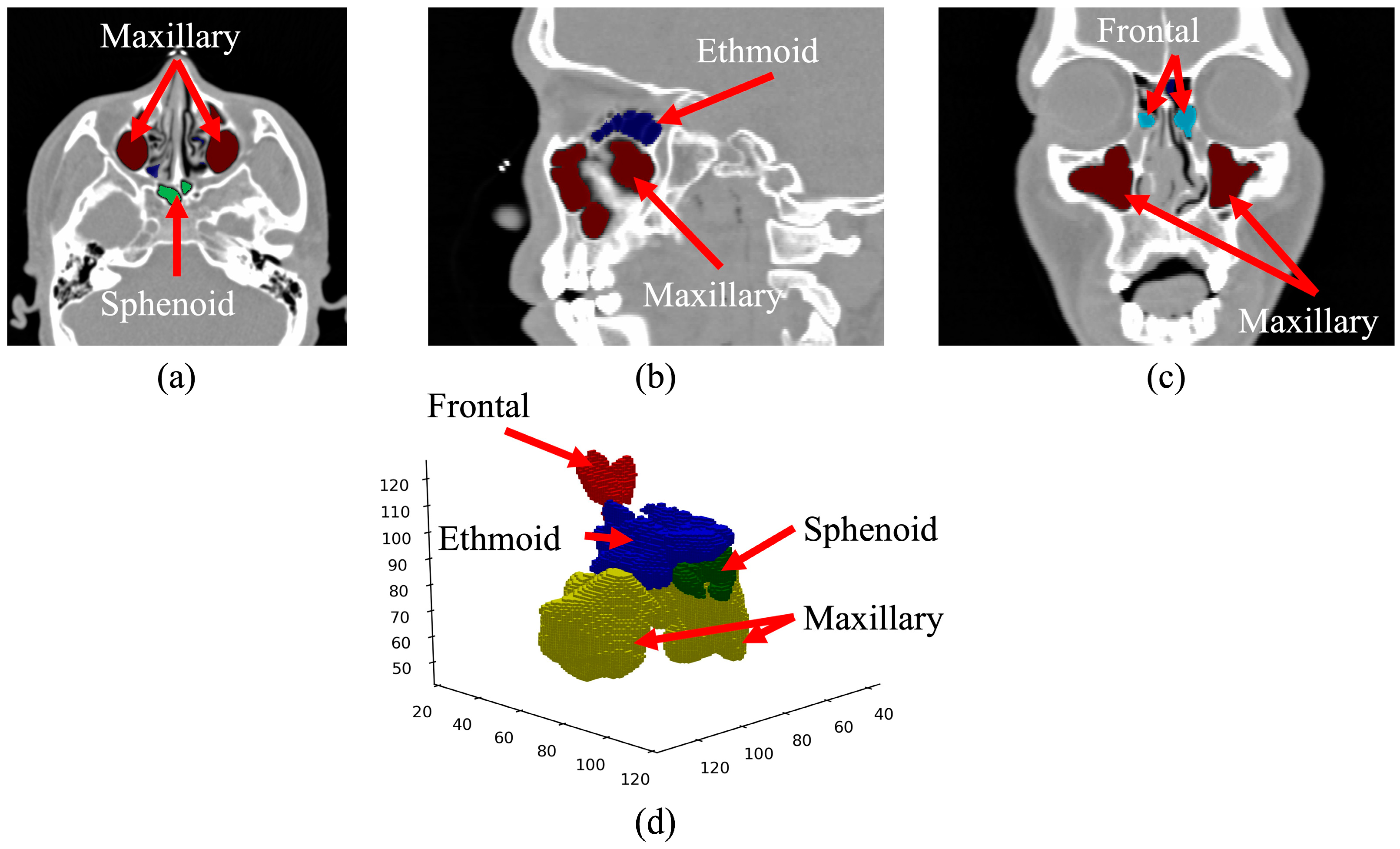

1. Introduction

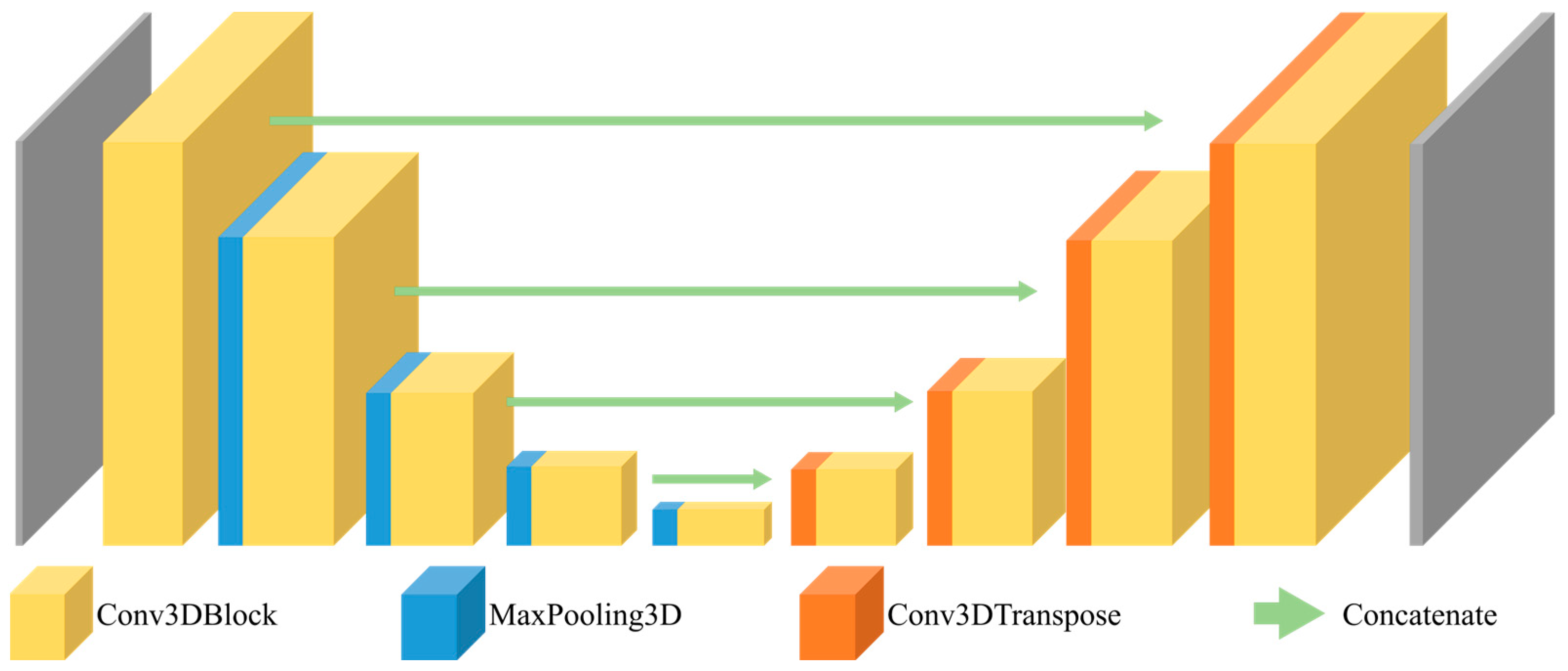

2. Materials and Methods

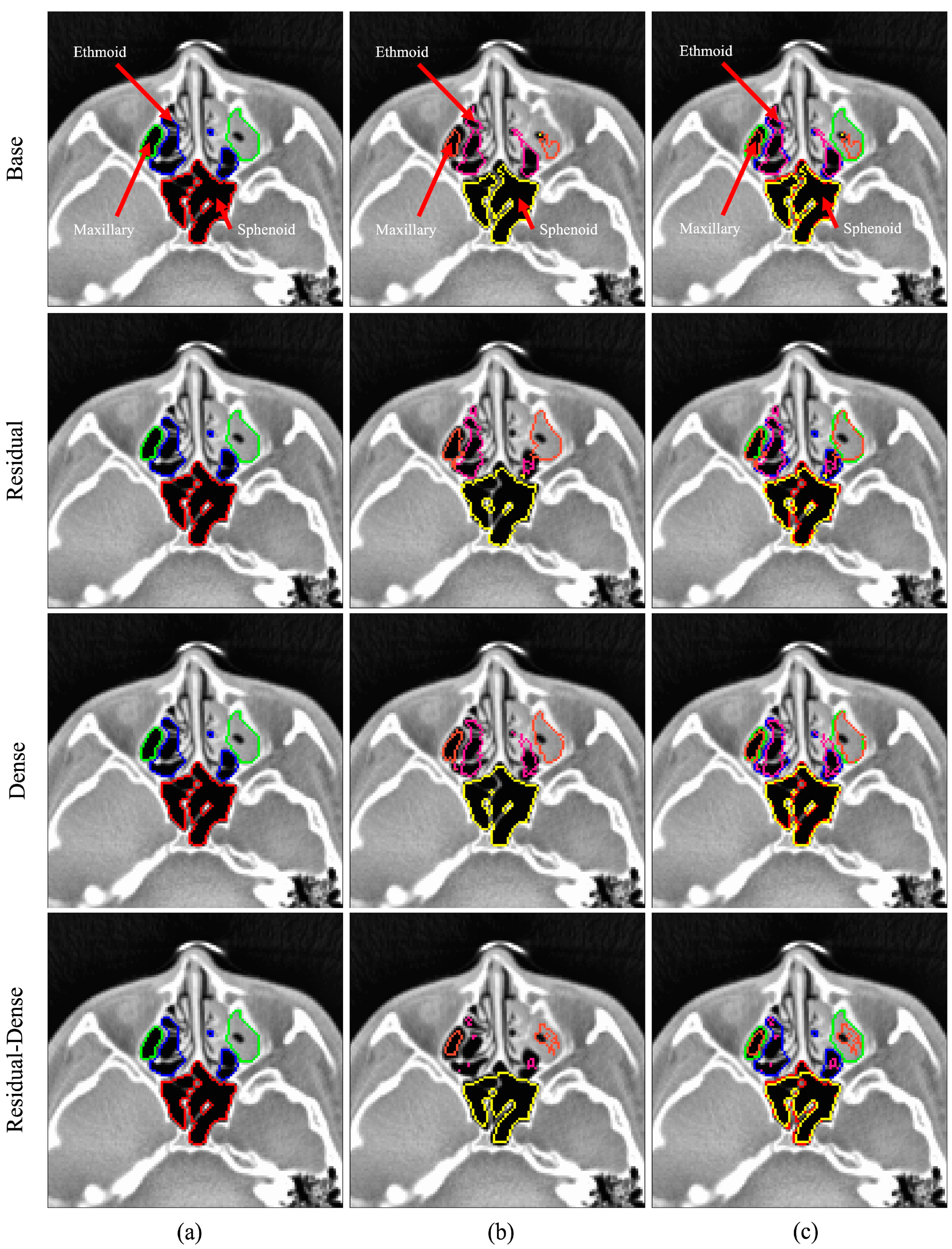

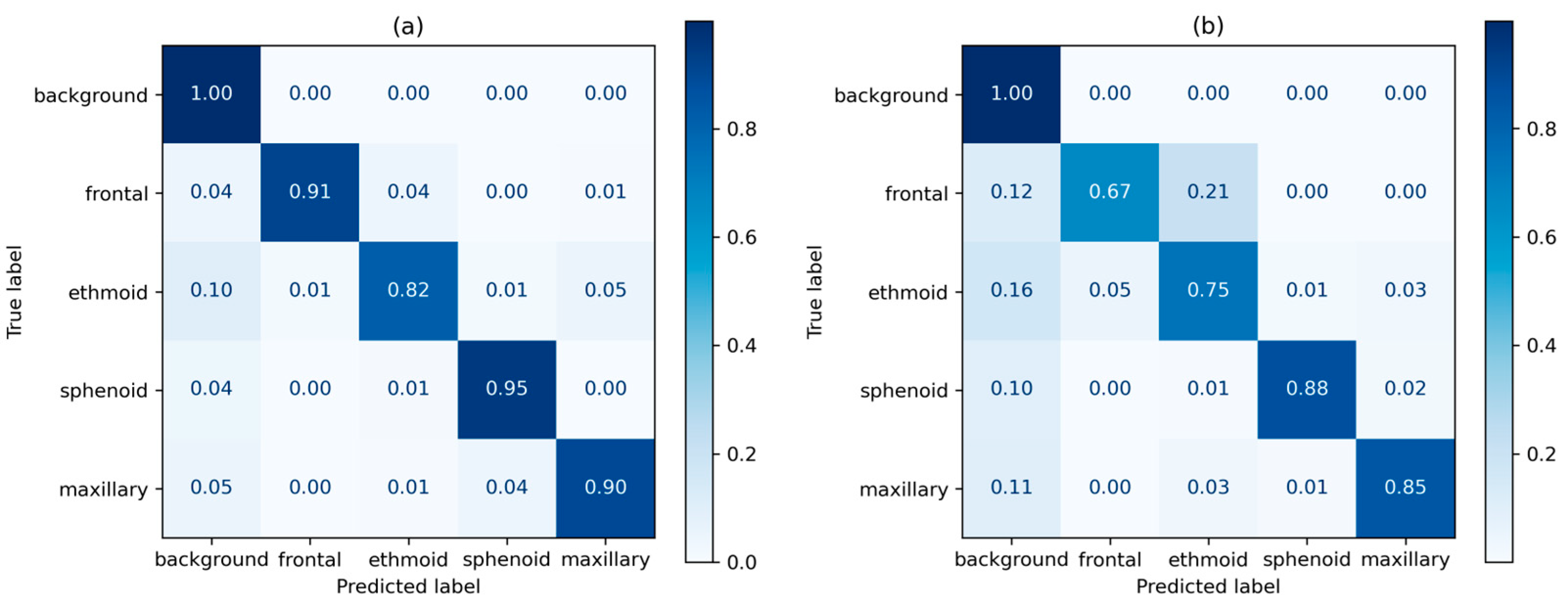

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Age Group | Male | Male Ratio | Female | Female Ratio | Total | Ratio by Age |
|---|---|---|---|---|---|---|
| 10–20 | 6 | 40.00% | 9 | 60.00% | 15 | 7.58% |
| 20–30 | 15 | 68.18% | 7 | 31.82% | 22 | 11.11% |
| 30–40 | 21 | 84.00% | 4 | 16.00% | 25 | 12.63% |
| 40–50 | 14 | 63.64% | 8 | 36.36% | 22 | 11.11% |
| 50–60 | 34 | 62.96% | 20 | 37.04% | 54 | 27.27% |
| 60–70 | 32 | 71.11% | 13 | 28.89% | 45 | 22.73% |
| 70–80 | 8 | 72.73% | 3 | 27.27% | 11 | 5.56% |
| ≥80 | 2 | 50.00% | 2 | 50.00% | 4 | 2.02% |
| Total | 132 | 66.67% | 66 | 33.33% | 198 | 100% |

| Block | 3D U-Net Parameters | 3D U-Net Layers | Residual Parameters | Residual Layers | Dense Parameters | Dense Layers | Residual-Dense Parameters | Residual-Dense Layers |
|---|---|---|---|---|---|---|---|---|
| block 1 | 7376 | 4 | 4456 | 13 | 42,352 | 10 | 84,480 | 17 |
| block 2 | 41,536 | 4 | 19,840 | 13 | 125,088 | 9 | 388,416 | 17 |
| block 3 | 166,016 | 4 | 78,592 | 13 | 499,008 | 9 | 1,550,976 | 17 |
| block 4 | 663,808 | 4 | 312,832 | 13 | 1,993,344 | 9 | 6,198,528 | 17 |
| block 5 | 2,654,720 | 3 | 1,248,256 | 12 | 2,657,664 | 8 | 24,783,360 | 16 |
| block 6 | 1,589,632 | 4 | 517,632 | 13 | 4,117,504 | 9 | 10,622,208 | 18 |
| block 7 | 397,504 | 4 | 129,792 | 13 | 1,063,424 | 9 | 2,656,896 | 18 |
| block 8 | 99,424 | 4 | 32,640 | 13 | 266,496 | 9 | 664,896 | 18 |
| block 9 | 24,880 | 4 | 8256 | 13 | 73,872 | 9 | 138,624 | 18 |
| Output | 85 | 1 | 165 | 1 | 85 | 1 | 4325 | 1 |
| Total | 5,644,981 | 36 | 2,352,461 | 117 | 10,838,837 | 82 | 47,092,709 | 157 |
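The per-block parameter counts in the 3D U-Net column above can be reproduced with simple arithmetic. The sketch below is not taken from the article: it assumes each block holds two 3 × 3 × 3 convolutions with bias, each decoder block starts with a 2 × 2 × 2 transposed convolution followed by concatenation with the skip connection, the output layer is a 1 × 1 × 1 convolution to 5 classes, and no other layer carries trainable parameters. The function names `conv3d_params` and `unet3d_block_params` are hypothetical. These assumptions are inferred only because they match the tabulated numbers for the base network; the other three variants are not covered.

```python
def conv3d_params(c_in, c_out, kernel=3):
    """Weights plus biases of one 3D convolution with a cubic kernel."""
    return kernel ** 3 * c_in * c_out + c_out


def unet3d_block_params(base_filters=16, in_channels=1, num_classes=5, depth=4):
    """Per-block parameter counts for an assumed plain 3D U-Net layout."""
    counts = {}
    # Filter widths double at every level: 16, 32, 64, 128, 256 (bridge).
    filters = [base_filters * 2 ** i for i in range(depth + 1)]

    # Encoder blocks 1-4 and the bridge (block 5): two 3x3x3 convolutions each.
    c_in = in_channels
    for i, f in enumerate(filters, start=1):
        counts[f"block {i}"] = conv3d_params(c_in, f) + conv3d_params(f, f)
        c_in = f

    # Decoder blocks 6-9: a 2x2x2 transposed convolution halves the channels,
    # the skip connection doubles them again, then two 3x3x3 convolutions.
    for j, f in enumerate(reversed(filters[:-1]), start=depth + 2):
        upsample = conv3d_params(2 * f, f, kernel=2)
        counts[f"block {j}"] = (
            upsample + conv3d_params(2 * f, f) + conv3d_params(f, f)
        )

    # Output layer: 1x1x1 convolution mapping base_filters to class scores.
    counts["Output"] = conv3d_params(base_filters, num_classes, kernel=1)
    return counts


if __name__ == "__main__":
    counts = unet3d_block_params()
    for name, value in counts.items():
        print(f"{name:8s} {value:>10,d}")
    print(f"{'Total':8s} {sum(counts.values()):>10,d}")
```

Under these assumptions the computed per-block values and their sum agree with the 3D U-Net column, which suggests that normalization or activation layers were not counted as trainable parameters there.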

| Path | Name | 3D U-Net/Dense 3D U-Net Feat Maps (Input) | 3D U-Net/Dense 3D U-Net Feat Maps (Output) | Residual 3D U-Net/Residual-Dense 3D U-Net Feat Maps (Input) | Residual 3D U-Net/Residual-Dense 3D U-Net Feat Maps (Output) |
|---|---|---|---|---|---|
| Encoding path | conv3d_block_1 | 192 × 128 × 128 × 1 | 192 × 128 × 128 × 16 | 192 × 128 × 128 × 1 | 192 × 128 × 128 × 32 |
| Encoding path | maxpool3d_1 | 192 × 128 × 128 × 16 | 96 × 64 × 64 × 16 | 192 × 128 × 128 × 32 | 96 × 64 × 64 × 32 |
| Encoding path | conv3d_block_2 | 96 × 64 × 64 × 16 | 96 × 64 × 64 × 32 | 96 × 64 × 64 × 32 | 96 × 64 × 64 × 64 |
| Encoding path | maxpool3d_2 | 96 × 64 × 64 × 32 | 48 × 32 × 32 × 32 | 96 × 64 × 64 × 64 | 48 × 32 × 32 × 64 |
| Encoding path | conv3d_block_3 | 48 × 32 × 32 × 32 | 48 × 32 × 32 × 64 | 48 × 32 × 32 × 64 | 48 × 32 × 32 × 128 |
| Encoding path | maxpool3d_3 | 48 × 32 × 32 × 64 | 24 × 16 × 16 × 64 | 48 × 32 × 32 × 128 | 24 × 16 × 16 × 128 |
| Encoding path | conv3d_block_4 | 24 × 16 × 16 × 64 | 24 × 16 × 16 × 128 | 24 × 16 × 16 × 128 | 24 × 16 × 16 × 256 |
| Encoding path | maxpool3d_4 | 24 × 16 × 16 × 128 | 12 × 8 × 8 × 128 | 24 × 16 × 16 × 256 | 12 × 8 × 8 × 256 |
| Bridge | Bridge | 12 × 8 × 8 × 128 | 12 × 8 × 8 × 256 | 12 × 8 × 8 × 256 | 12 × 8 × 8 × 512 |
| Decoding path | conv3d_trans_1 | 12 × 8 × 8 × 256 | 24 × 16 × 16 × 128 | 12 × 8 × 8 × 512 | 24 × 16 × 16 × 256 |
| Decoding path | conv3d_block_5 | 24 × 16 × 16 × 128 | 24 × 16 × 16 × 128 | 24 × 16 × 16 × 256 | 24 × 16 × 16 × 256 |
| Decoding path | conv3d_trans_2 | 24 × 16 × 16 × 128 | 48 × 32 × 32 × 64 | 24 × 16 × 16 × 256 | 48 × 32 × 32 × 128 |
| Decoding path | conv3d_block_6 | 48 × 32 × 32 × 64 | 48 × 32 × 32 × 64 | 48 × 32 × 32 × 128 | 48 × 32 × 32 × 128 |
| Decoding path | conv3d_trans_3 | 48 × 32 × 32 × 64 | 96 × 64 × 64 × 32 | 48 × 32 × 32 × 128 | 96 × 64 × 64 × 64 |
| Decoding path | conv3d_block_7 | 96 × 64 × 64 × 32 | 96 × 64 × 64 × 32 | 96 × 64 × 64 × 64 | 96 × 64 × 64 × 64 |
| Decoding path | conv3d_trans_4 | 96 × 64 × 64 × 32 | 192 × 128 × 128 × 16 | 96 × 64 × 64 × 64 | 192 × 128 × 128 × 32 |
| Decoding path | conv3d_block_8 | 192 × 128 × 128 × 16 | 192 × 128 × 128 × 5 | 192 × 128 × 128 × 32 | 192 × 128 × 128 × 5 |
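The encoder rows of the feature-map table above follow a regular pattern: each convolution block changes only the channel count, and each max-pooling step halves the three spatial dimensions. The sketch below traces that pattern for a 192 × 128 × 128 × 1 input. It is an illustration rather than the authors' code; the helper name `encoder_shapes`, the 2 × 2 × 2 pooling, and the doubling of filters per level are assumptions read off the table.

```python
def encoder_shapes(input_shape, base_filters, depth=4):
    """List (name, input shape, output shape) for the encoder and bridge.

    Shapes are (depth, height, width, channels). Assumes 'same' padding in
    the convolution blocks and 2x2x2 max pooling, as the table suggests.
    """
    d, h, w, c = input_shape
    filters = base_filters
    rows = []
    for level in range(1, depth + 1):
        # The convolution block keeps the spatial size and sets the channels.
        rows.append((f"conv3d_block_{level}", (d, h, w, c), (d, h, w, filters)))
        c = filters
        # Max pooling halves every spatial dimension and keeps the channels.
        rows.append((f"maxpool3d_{level}", (d, h, w, c), (d // 2, h // 2, w // 2, c)))
        d, h, w = d // 2, h // 2, w // 2
        filters *= 2
    # The bridge doubles the channels once more at the lowest resolution.
    rows.append(("bridge", (d, h, w, c), (d, h, w, filters)))
    return rows


if __name__ == "__main__":
    for base in (16, 32):  # 16: 3D U-Net/Dense, 32: Residual/Residual-Dense
        print(f"base_filters = {base}")
        for name, shape_in, shape_out in encoder_shapes((192, 128, 128, 1), base):
            print(f"  {name:15s} {shape_in} -> {shape_out}")
```

Because the input is halved four times, each spatial dimension must be divisible by 16, which 192 × 128 × 128 satisfies.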

| Metrics | Base | Residual | Dense | Residual-Dense |
|---|---|---|---|---|
| F1 score | 0.843 ± 0.699 | 0.785 ± 0.066 | 0.790 ± 0.073 | 0.802 ± 0.093 |
| Accuracy | 0.995 ± 0.003 | 0.992 ± 0.001 | 0.993 ± 0.002 | 0.993 ± 0.003 |
| Precision | 0.857 ± 0.056 | 0.789 ± 0.059 | 0.801 ± 0.060 | 0.822 ± 0.073 |
| Recall | 0.854 ± 0.064 | 0.821 ± 0.060 | 0.822 ± 0.068 | 0.836 ± 0.078 |
| Mean IoU | 0.787 ± 0.071 | 0.703 ± 0.067 | 0.714 ± 0.074 | 0.742 ± 0.092 |

| Metrics | Base | Residual | Dense | Residual-Dense |
|---|---|---|---|---|
| F1 score | 0.793 ± 0.063 | 0.741 ± 0.069 | 0.747 ± 0.074 | 0.740 ± 0.095 |
| Accuracy | 0.994 ± 0.002 | 0.991 ± 0.002 | 0.992 ± 0.002 | 0.991 ± 0.003 |
| Precision | 0.839 ± 0.057 | 0.779 ± 0.067 | 0.785 ± 0.071 | 0.793 ± 0.089 |
| Recall | 0.785 ± 0.067 | 0.755 ± 0.076 | 0.756 ± 0.068 | 0.745 ± 0.092 |
| Mean IoU | 0.717 ± 0.061 | 0.653 ± 0.063 | 0.666 ± 0.074 | 0.670 ± 0.089 |
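The five metrics reported in the tables above have standard definitions in terms of per-class true positives (TP), false positives (FP), and false negatives (FN). The dependency-free sketch below computes them from flattened voxel labels. It is illustrative only: the function name `segmentation_metrics` is hypothetical, and macro-averaging over all classes is an assumption, since this section does not state how the authors averaged across classes or patients or whether background was included.

```python
from collections import Counter


def segmentation_metrics(y_true, y_pred, num_classes):
    """Macro-averaged precision, recall, F1 and IoU, plus overall accuracy.

    y_true and y_pred are flat sequences of integer class labels, one per
    voxel. Classes absent from both sequences score 0 in this sketch.
    """
    pairs = Counter(zip(y_true, y_pred))  # (true label, predicted label) -> count
    precision, recall, f1, iou = [], [], [], []
    for c in range(num_classes):
        tp = pairs[(c, c)]
        fp = sum(n for (t, p), n in pairs.items() if p == c and t != c)
        fn = sum(n for (t, p), n in pairs.items() if t == c and p != c)
        precision.append(tp / (tp + fp) if tp + fp else 0.0)
        recall.append(tp / (tp + fn) if tp + fn else 0.0)
        # F1 equals the Dice coefficient for a single class.
        f1.append(2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0)
        iou.append(tp / (tp + fp + fn) if tp + fp + fn else 0.0)

    correct = sum(n for (t, p), n in pairs.items() if t == p)
    total = sum(pairs.values())
    return {
        "f1": sum(f1) / num_classes,
        "accuracy": correct / total if total else 0.0,
        "precision": sum(precision) / num_classes,
        "recall": sum(recall) / num_classes,
        "mean_iou": sum(iou) / num_classes,
    }


if __name__ == "__main__":
    # Toy example with three classes and six voxels (not data from the study).
    truth = [0, 0, 1, 1, 2, 2]
    prediction = [0, 1, 1, 1, 2, 0]
    for name, value in segmentation_metrics(truth, prediction, 3).items():
        print(f"{name:10s} {value:.3f}")
```

Accuracy counts every correctly labeled voxel, so a large background class can keep it near 1 even when overlap-based scores such as F1 and IoU are noticeably lower, which is consistent with the pattern in the tables.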

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Whangbo, J.; Lee, J.; Kim, Y.J.; Kim, S.T.; Kim, K.G. Deep Learning-Based Multi-Class Segmentation of the Paranasal Sinuses of Sinusitis Patients Based on Computed Tomographic Images. Sensors 2024, 24, 1933. https://doi.org/10.3390/s24061933
