Abstract

While striving to optimize overall efficiency, smart manufacturing systems face various problems posed by the aging workforce in modern society. The proportion of aging workers is rapidly increasing worldwide, and visual perception, which plays a key role in quality control, is particularly susceptible to the effects of aging. It is therefore necessary to understand these changes and to apply state-of-the-art technologies as solutions. In this study, we investigate how to mitigate the negative effects of aging on visual recognition through real-time monitoring technology that combines cameras and AI in polymer tube production. Strategically positioned cameras combined with sophisticated AI within the manufacturing environment enable real-time defect detection and identification and thus an immediate response. An immediate response to defects minimizes facility downtime and enhances the productivity of manufacturing industries. With excellent detection performance (mAP@.5 of approximately 99.24%) and speed (approximately 20 ms per identification), simultaneous defects in a tube can be accurately detected in real time. Finally, real-time monitoring technology with adaptive features and superior performance can mitigate the negative impact of decreased visual perception in aging workers and is expected to improve quality consistency and quality management efficiency.

1. Introduction

In this era characterized by continuous technological advancements, industries are increasingly paying attention to implementing smart manufacturing systems. While striving to optimize overall efficiency, these systems confront various problems presented by the aging workforce in contemporary society. Changes in human physical and cognitive abilities are inevitable owing to aging, and it is necessary to fully understand these changes and implement state-of-the-art technologies as solutions [1,2,3]. Among these changing conditions, the foremost concern lies in the diminishing visual perception capabilities of aging workers in the manufacturing industry. Advances in healthcare and the increase in life expectancy have precipitated a visible surge in the proportion of older workers either joining the workforce or prolonging their tenure. In Europe, the percentage of the working-age population in the 15–24 age group is expected to drop by 5% by 2040 compared with 1990. In contrast, the 55–64 age group is expected to increase by nearly 6% [4]. It is predicted that by 2025, the proportion of working people over the age of 50 years will be 32% in Europe, 30% in North America, 21% in Asia, and 17% in Latin America. Consequently, many aging workers are affected by age-related problems [5]. This demographic change directly impacts productivity and efficiency because of the potential decline in the physical activity and cognitive ability of aging workers [6,7,8]. In this context, visual perception, which plays a key role in quality control, is susceptible to the effects of aging [5]. Alterations in vision and cognitive processing contribute to a reduction in workers’ ability to identify and monitor products and defects visually. Real-time camera monitoring can be implemented as a solution to this limitation [9,10]. Installing cameras at strategic locations within the manufacturing environment can monitor process errors in real time. 
In addition, videos can be analyzed in detail using sophisticated artificial intelligence (AI) algorithms to promote real-time defect detection and identification, enabling an immediate response from shopfloor workers. The combination of AI and real-time monitoring goes beyond the simple identification of defects and offers a way to improve the market competitiveness of manufacturing companies [11,12]. The convergence of these two technologies can simplify defect detection and enhance its efficiency, compensating for the declining visual perception of aging workers [1,13,14]. Furthermore, the adaptive nature of such a system allows it to learn over time, progressively refining its accuracy and performance. Implementing such a system not only lessens the negative impact of aging on visual perception in manufacturing industries but also improves the accuracy and efficiency of defect detection and quality control. As the workforce ages, the adaptability of manufacturing industries becomes more important, and real-time monitoring systems that combine cameras and AI have emerged as a means of addressing the decline in visual recognition skills among aging workers. Therefore, in this study, we investigated visual recognition technology that mitigates the adverse effects of aging among workers through the synergistic effects of real-time monitoring technology combining cameras and AI in polymer tube production.

We therefore propose solutions for improving the working environment of aging workers and for facilitating their adaptation to the work environment in the manufacturing sector. The main objective of this study is to explore methods for integrating aging workers and new technologies, addressing the decline in their visual perception through technological means. Accordingly, we propose a solution based on the convergence of a real-time monitoring system, cameras, and AI. The proposed solution was applied to polymer tube manufacturing, where defects are currently detected only by the worker’s eye. A reliable quality assurance method based on advanced quality inspection technology is necessary to overcome the decline in the visual perception and monitoring abilities of aging workers in the manufacturing industry.

Research on detecting surface defects using AI and cameras is currently being carried out in diverse sectors, including metal and polymer compound manufacturing. In many studies, line scan cameras are positioned either solely at the top or at both the top and bottom of the inspection object, an arrangement suited to detecting defects on objects with flat cross-sections [15,16]. Additionally, direct lighting often causes disturbances. Attempts have been made to alleviate these disturbances using monochrome cameras, yet this approach has drawbacks, such as producing a detection screen that is difficult for workers to interpret or causing distortion during image processing.

In contrast, our study employs a distinct approach. Through careful camera arrangement and an indirect lighting environment, our methodology can be adapted for inspecting objects with circular shapes or with non-uniform reflection characteristics. Furthermore, by incorporating advanced technology capable of detecting surface defects with high performance and displaying them intuitively in the same RGB color as they appear in reality, we aim to overcome the challenges faced by manufacturing fields and aging workers.

The most significant contribution of this study is the development of a new understanding and solutions for addressing visual perception decline issues among aging workers. Our results aim to expand knowledge in the manufacturing industry and address the negative impact of aging on workers by leveraging the synergistic effect of real-time monitoring technology combining cameras and AI in polymer tube manufacturing.

2. Experimental Method

2.1. Defect Detection Target

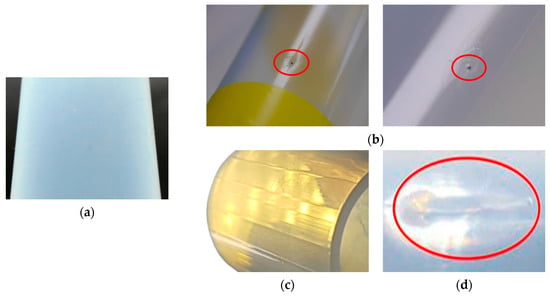

Among polymers, perfluoroalkoxy (PFA) tubes, known for their excellent chemical and corrosion resistances, are widely used to transport chemical substances [17]. However, typical defects occur on the tubing surface during the extrusion of PFA tubes. As shown in Figure 1b–d, the defects exhibit unique characteristics depending on their causes [18,19,20]. These defects can directly affect the manufacturing quality and durability of the PFA tubes [21]. Moreover, they can pose a fatal threat to worker safety in an industrial setting that handles hazardous substances and may lead to industrial accidents [22,23].

Figure 1.

Tube surfaces: (a) normal; (b) inclusion; (c) scratch; (d) lumpy surface.

In this study, we aimed to identify and detect defects that frequently occur on the surface of PFA tubes using a real-time monitoring technology that combines cameras and AI. Figure 1a shows the surface of a flawless translucent normal tube. Figure 1b shows an inclusion formed during the tube extrusion process when foreign substances, such as dust, adhere to the hot surface of the tube and undergo carbonization. Figure 1c shows a scratch that occurs because of physical friction between the tube surface and another object. Compared to the normal tube surface, inclusions appear as black spots on the tube surface, whereas scratches are visible as white lines.

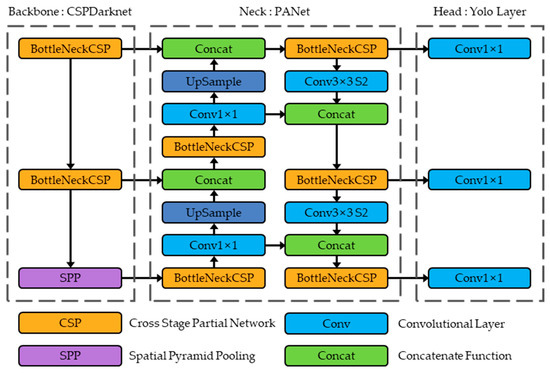

2.2. AI Algorithm for Defect Detection

Cameras and image-processing algorithms have been used for many years to assess product quality and detect defects [24,25]. However, the filter-based algorithms used in image processing extract data characteristics by filtering against a threshold, so their performance depends on the threshold applied. Their detection performance is limited when the inspection target has uneven reflection characteristics or when the boundaries of defect areas are ambiguous. Furthermore, surface defects occurring at industrial sites can be small, and multiple defects may occur simultaneously. Therefore, relying solely on filtering and image processing for defect detection is inappropriate.
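To illustrate why a fixed threshold is fragile, the following minimal sketch applies one global threshold to a synthetic one-dimensional intensity profile. The pixel values, the threshold of 100, and the function name are illustrative assumptions and are not data from this study.

```python
def detect_dark_pixels(profile, threshold):
    """Return indices whose intensity falls below a fixed threshold."""
    return [i for i, value in enumerate(profile) if value < threshold]


# Hypothetical 8-bit intensities along a line across the tube surface.
# Index 4 is a dark inclusion; all other pixels are normal surface.
evenly_lit = [180, 182, 179, 181, 60, 180, 183, 181]

# Same surface, but a reflection gradient darkens the right-hand side.
unevenly_lit = [180, 170, 150, 130, 40, 95, 90, 85]

THRESHOLD = 100  # assumed value, tuned for the evenly lit case

hits_even = detect_dark_pixels(evenly_lit, THRESHOLD)
hits_uneven = detect_dark_pixels(unevenly_lit, THRESHOLD)

print(hits_even)    # [4]
print(hits_uneven)  # [4, 5, 6, 7]
```

Under even lighting the threshold isolates the inclusion, whereas under the uneven profile three normal pixels are also flagged. This is the kind of sensitivity that motivates a learned detector.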

In this study, we implemented real-time monitoring technology using YOLOv5 (You Only Look Once version 5), a one-stage object detection neural network. Figure 2 depicts the architecture of YOLOv5 [26]. Its advantages include excellent detection performance and speed, surpassing the R-CNN series of algorithms, which evolved as two-stage detectors in the early object detection field [26,27]. Because it classifies and localizes defects precisely and rapidly, this AI algorithm is suitable for the real-time detection of simultaneous defects on the surfaces of PFA tubes, which are extruded at speeds ranging from 0.004 to 0.012 m/s.

Figure 2.

Architecture of YOLOv5 [26].

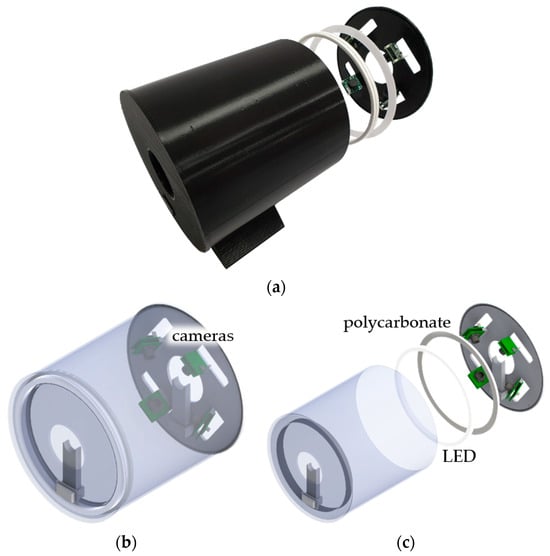

2.3. Development of Monitoring Housing

Figure 3 depicts a cylindrical (Φ 210 × 206 mm) monitoring housing prototype produced using a 3D printer. It was designed to block disturbance factors that can obscure the characteristics of surface defects on PFA tubes. Composite resins, metals, and other materials, including PFA tubes, exhibit uneven reflective properties depending on their surrounding environment and lighting conditions. Therefore, the environment for mining and monitoring defect image data should be designed with the reflective characteristics of the inspected object in mind [28]. The interior of the monitoring housing was constructed using black PLA filament to create a darkroom environment that is not affected by external lighting. Furthermore, a polycarbonate ring (Φ 200 mm) was placed in front of a surface-emitting LED (Φ 190 mm) that has no prominent light spots, providing indirect lighting with uniform light diffusion and minimizing the impact of the interior lighting.

Figure 3.

Monitoring housing: (a) prototype; (b) assembly diagram; (c) decomposition diagram.

USB camera modules (See3CAM_CU135) were positioned around the PFA tube to mine and monitor the defect image data. Optical and physical design elements, such as the minimum focus distance, field of view, and reflective properties, were considered together. Four cameras (n = 4) were equally spaced in the plane perpendicular to the direction of tube movement, allowing for simultaneous 360° monitoring of the tube circumference.

Furthermore, the monitoring housing was designed modularly, allowing for easy adjustment of the number of cameras (n). This is applicable to the surface defect monitoring of PFA tubes with various outer diameters ranging from approximately 3 to 60 mm.
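The camera arrangement can be reasoned about with simple geometry. The sketch below computes the angular positions of n equally spaced cameras and the share of the tube circumference that each must cover at a minimum. The function names are hypothetical, and the calculation ignores overlap between neighboring views and the foreshortening that occurs toward the edges of a curved surface.

```python
import math


def camera_angles(n_cameras):
    """Angular positions (degrees) of n cameras equally spaced around the tube."""
    step = 360.0 / n_cameras
    return [i * step for i in range(n_cameras)]


def arc_per_camera_mm(outer_diameter_mm, n_cameras):
    """Circumference share each camera must cover, ignoring any overlap."""
    return math.pi * outer_diameter_mm / n_cameras


print(camera_angles(4))  # [0.0, 90.0, 180.0, 270.0]

# Smallest and largest outer diameters mentioned in the text.
for diameter in (3.0, 60.0):
    print(diameter, round(arc_per_camera_mm(diameter, 4), 2))
# 3.0 2.36
# 60.0 47.12
```

For larger tubes the arc per camera grows linearly with diameter, which is one reason an adjustable number of cameras is useful.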

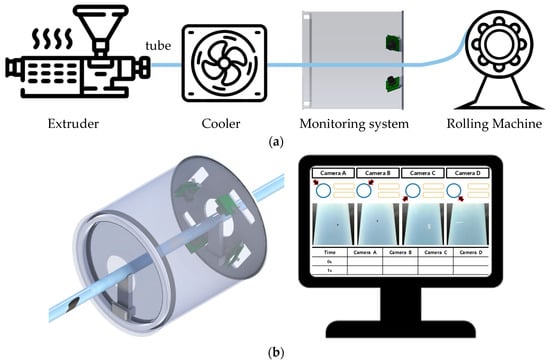

The real-time monitoring system, shown schematically in Figure 4a, can be integrated into the production process without hindering the operating machinery. The detection and notification of defects through real-time monitoring, as shown in Figure 4b, can help workers respond promptly.

Figure 4.

Schematic of a real-time monitoring system: (a) integrated into the production process; (b) defect detection notification screen.

2.4. Defect Monitoring Technology Implementation

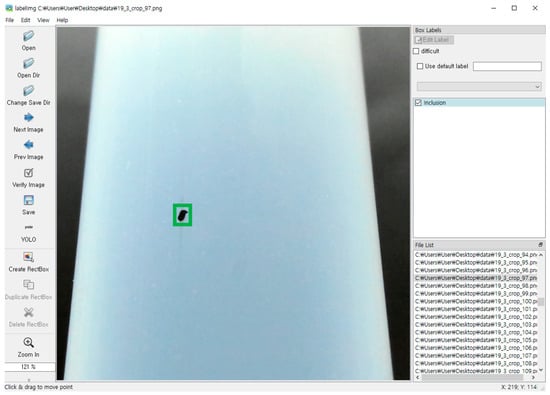

Figure 5a shows the environment used for mining surface defect data. Figure 5b presents samples of surface defects found on PFA tubes in an industrial setting. These defects were captured by a camera located within the monitoring housing, resulting in a dataset of 2000 defect images (1000 inclusions and 1000 scratches). As illustrated in Figure 6, the distinct features of these defects (inclusions and scratches) were annotated with ground truth bounding boxes using the LabelImg tool for supervised learning. This process was intended to help the trained object detection algorithm generalize across defect appearances and accurately detect defects not seen during training.

Figure 5.

Data mining environment and samples: (a) data mining using monitoring housing; (b) surface defects samples.

Figure 6.

Defect labeling using LabelImg tool.
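YOLOv5 reads one text line per ground truth box in the form `class x_center y_center width height`, with coordinates normalized to the image size. LabelImg can export this format directly. The sketch below shows the conversion from a pixel bounding box, assuming a 640 × 480 image, a hypothetical box, and a class-index mapping that is not specified in the article.

```python
def to_yolo_label(class_id, box, image_size):
    """Convert a pixel box (xmin, ymin, xmax, ymax) to a YOLO label line."""
    xmin, ymin, xmax, ymax = box
    img_w, img_h = image_size
    x_center = (xmin + xmax) / 2 / img_w
    y_center = (ymin + ymax) / 2 / img_h
    width = (xmax - xmin) / img_w
    height = (ymax - ymin) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Assumed class indices; the article does not state the mapping.
CLASS_IDS = {"inclusion": 0, "scratch": 1}

# Hypothetical inclusion occupying a 40 x 40 pixel region.
label = to_yolo_label(CLASS_IDS["inclusion"], (300, 200, 340, 240), (640, 480))
print(label)  # 0 0.500000 0.458333 0.062500 0.083333
```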

An artificial neural network trained on insufficient, biased, or monotonous data that lack prominent features does not perform optimally. These common failure modes are known as overfitting and underfitting [29]. Thus, the defect image data should be mined and composed so as to prevent both.

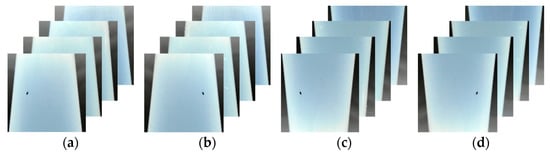

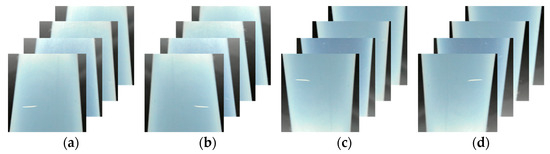

Several studies have demonstrated that data augmentation through image processing, such as rotation, flipping, blurring, and brightness and contrast adjustment, helps prevent overfitting and underfitting and improves the performance of AI networks [30,31,32]. Rotation and flipping are geometric processing techniques that transform the pixels of image data using spatial and geometric calculations such as scaling, rotation, and translation. Figure 7 and Figure 8 show the augmented defect images created using the horizontal flip, vertical flip, and 180° rotation techniques. In this study, a dataset of 8000 defect images was constructed using geometric processing for data augmentation. In addition, the data were divided into training, validation, and test sets at a 6:2:2 ratio, stratified so that each set included the characteristics of the defects, and used for the supervised learning of the object detection AI network. The network was trained for 1000 epochs with a batch size of 32 on a PC with an Intel i9-10940X CPU @ 3.30 GHz, 128 GB of RAM, and an NVIDIA GeForce RTX 2080 Ti GPU (11 GB VRAM).

Figure 7.

Data augmentation of inclusion: (a) original data; (b) left/right flip; (c) up/down flip; (d) 180° rotation.

Figure 8.

Data augmentation of scratch: (a) original data; (b) left/right flip; (c) up/down flip; (d) 180° rotation.
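The three geometric transforms named in Figures 7 and 8 can be sketched with NumPy as follows. The tiny array stands in for a defect image, and the helper names are hypothetical. The box helper shows how a normalized YOLO label would have to be transformed together with the image, which is an assumption about the labeling workflow and not a step described in the article.

```python
import numpy as np


def augment(image):
    """Return the original image plus its three geometric variants."""
    return {
        "original": image,
        "left_right_flip": np.fliplr(image),
        "up_down_flip": np.flipud(image),
        "rotate_180": np.rot90(image, 2),
    }


def transform_box(box, kind):
    """Apply the same transform to a normalized YOLO box (xc, yc, w, h)."""
    xc, yc, w, h = box
    if kind == "left_right_flip":
        return (1.0 - xc, yc, w, h)
    if kind == "up_down_flip":
        return (xc, 1.0 - yc, w, h)
    if kind == "rotate_180":
        return (1.0 - xc, 1.0 - yc, w, h)
    return box


# Tiny stand-in for a defect image (2 x 3 pixels, single channel).
image = np.array([[1, 2, 3],
                  [4, 5, 6]])
variants = augment(image)
print(variants["rotate_180"])
# [[6 5 4]
#  [3 2 1]]

# 2000 labelled originals x 4 variants each is consistent with the
# 8000 images reported in the text.
print(2000 * len(variants))  # 8000
```

Because flips and a 180° rotation leave the box width and height unchanged, only the box center needs to be mirrored.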

2.5. Validation of Trained Model

The performance of the trained object detection model was verified and evaluated using K-fold cross-validation and mean average precision (mAP). K-fold cross-validation divides an entire dataset into K groups and iteratively trains an AI network, designating each group in turn as training, validation, or test data, to ensure a reliable performance assessment [29,33]. Its advantage is that it provides a more accurate estimate of a model’s performance than a single train–test split. It also reduces the impact of random variation in the data by averaging the results over multiple iterations. In addition, K-fold cross-validation helps identify potential problems in the model, such as overfitting or underfitting. In this study, we validated the performance of the object detection model using five iterations (K = 5). Stratified K-fold cross-validation was used to partition the groups; it keeps the class proportions in each group approximately the same as those of the entire dataset, so that the features of the surface defects are not split in a biased manner.
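One way five stratified folds could produce the 6:2:2 ratio is to rotate the roles of the folds: one fold for testing, one for validation, and the remaining three for training. The sketch below is an assumption about the procedure and not the authors' code. It uses a hand-rolled partition to stay dependency-free, although scikit-learn's `StratifiedKFold` serves the same purpose. The class counts of 4000 per defect type are inferred from the 1000 originals per class and the fourfold augmentation.

```python
from collections import defaultdict


def stratified_folds(labels, k):
    """Assign each sample index to one of k folds, class by class."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        # Deal the samples of each class round-robin across the folds.
        for position, index in enumerate(indices):
            folds[position % k].append(index)
    return folds


def rotate_splits(folds):
    """Yield (train, val, test) index lists, one rotation per fold."""
    k = len(folds)
    for i in range(k):
        test = folds[i]
        val = folds[(i + 1) % k]
        train = [idx for j, fold in enumerate(folds)
                 if j not in (i, (i + 1) % k) for idx in fold]
        yield train, val, test


# Assumed class balance: 4000 inclusion and 4000 scratch images.
labels = ["inclusion"] * 4000 + ["scratch"] * 4000
folds = stratified_folds(labels, k=5)

for train, val, test in rotate_splits(folds):
    print(len(train), len(val), len(test))  # 4800 1600 1600
```

A practical implementation would also shuffle the samples and might keep all augmented variants of one original image in the same fold, so that near-duplicates do not appear in both the training and test data.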

The detection performance of the object detection model was evaluated by measuring the mAP once per fold, K times in total. The mAP is a validation metric, computed from the precision and recall values derived from the confusion matrix, that allows a quantitative comparison of the performance of AI networks [29]. In each iteration, the object detection algorithm was trained and validated using the training and validation data, and the mAP was measured using test data that were not used for training.
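As a rough illustration of what mAP@.5 measures, the sketch below counts a detection as a true positive when its intersection over union (IoU) with a ground truth box is at least 0.5, computes the area under the precision-recall curve for each class, and averages over classes. The detections are made up, and the all-point interpolation used here is one common convention; evaluation toolkits differ in such details, so their values may differ slightly.

```python
def iou(box_a, box_b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    ix = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    iy = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = ix * iy
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter)


def average_precision(detections, n_ground_truth):
    """Area under the precision-recall curve for one class.

    detections: list of (confidence, is_true_positive) pairs.
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    precisions, recalls = [], []
    tp = 0
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        tp += int(is_tp)
        precisions.append(tp / rank)
        recalls.append(tp / n_ground_truth)
    # Make precision non-increasing as recall grows (precision envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, previous_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - previous_recall) * p
        previous_recall = r
    return ap


# A predicted box is a true positive at the 0.5 threshold if IoU >= 0.5.
print(round(iou((0, 0, 10, 10), (2, 0, 12, 10)), 4))  # 0.6667
print(round(iou((0, 0, 10, 10), (5, 0, 15, 10)), 4))  # 0.3333

# Hypothetical ranked detections per class, two ground truth boxes each.
ap_inclusion = average_precision([(0.9, True), (0.8, False), (0.7, True)], 2)
ap_scratch = average_precision([(0.95, True), (0.6, True)], 2)
mean_ap = (ap_inclusion + ap_scratch) / 2
print(round(ap_inclusion, 4), round(ap_scratch, 4), round(mean_ap, 4))
# 0.8333 1.0 0.9167
```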

3. Experimental Results

Defect image data were mined in a uniform indirect lighting environment inside a monitoring housing prototype manufactured using a 3D printer and utilized for training. In an industrial setup, the uniform indirect lighting environment of the monitoring housing can be maintained robustly under uneven lighting conditions.

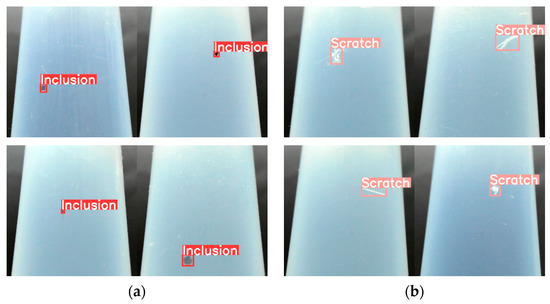

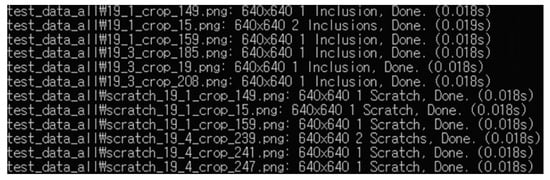

Figure 9 shows the results of the trained object detection AI network model for detecting defects in the test data. The model was verified to classify inclusions and scratches accurately and to indicate the location of the detected defects. Figure 10 shows that the identification time was approximately 20 ms, and it was possible to identify and assess the surface defects on the PFA tubes at approximately 30–50 frames per second. A multicamera system that allows simultaneous monitoring of the entire circumference of the tube was designed to ensure the uninterrupted operation of the tube production facility. This system was demonstrated to enable real-time monitoring when a tube is extruded.

Figure 9.

Results of defect detection: (a) inclusion; (b) scratch.

Figure 10.

Identification time of monitoring system.
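The reported figures can be related by simple arithmetic. An identification time of 20 ms corresponds to 50 frames per second, and at the extrusion speeds of 0.004 to 0.012 m/s the tube advances only a fraction of a millimeter between consecutive frames. The sketch below works this out; the function names are hypothetical, and the calculation assumes one inference per frame on a single camera stream.

```python
def frames_per_second(interval_ms):
    """Frame rate corresponding to a given per-frame processing time."""
    return 1000.0 / interval_ms


def travel_per_frame_mm(speed_m_per_s, interval_ms):
    """Tube displacement during one frame interval, in millimeters.

    (m/s) * ms = 1e-3 m = mm, so no further unit conversion is needed.
    """
    return speed_m_per_s * interval_ms


INFERENCE_MS = 20.0  # identification time reported in the text

print(frames_per_second(INFERENCE_MS))  # 50.0

# Slowest and fastest extrusion speeds reported in the text.
for speed in (0.004, 0.012):
    print(speed, round(travel_per_frame_mm(speed, INFERENCE_MS), 3))
# 0.004 0.08
# 0.012 0.24
```

Even at the lower end of the reported frame rate (30 frames per second, about 33 ms per frame), the tube travels roughly 0.4 mm per frame at the highest speed, so consecutive frames overlap heavily as long as the field of view along the tube axis spans many millimeters.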

Data augmentation through image processing and stratified K-fold cross-validation help prevent common errors and improve the performance of AI networks, resulting in reliable outcomes. Table 1 shows the mAP@.5 measured for each fold (K = 1, 2, 3, 4, 5) of the K-fold cross-validation. The model demonstrated excellent performance, with an mAP@.5 of approximately 99.32% on the training data and approximately 99.24% on the test data. Furthermore, the large amount of data accumulated from defect detection can be a decisive advantage of the AI-network-based real-time monitoring technology proposed in this study, allowing for continuous improvement in defect detection performance.

Table 1.

mAP@.5 of the train and test data.

In summary, the mAP measurements and real-time defect-detection tests provide objective, quantitative evidence of the excellent detection performance and technical validity of the proposed technology.

4. Conclusions

In this study, we presented a camera- and AI-network-based real-time monitoring technology as a solution to the impaired defect identification and monitoring abilities that result from the decline in visual perception in an aging workforce. The feasibility of this approach was experimentally validated. The following conclusions were drawn from this study:

- With the convergence of appropriate camera placement and AI networks, a synergistic effect can be achieved to facilitate the prompt response of aging workers to defects.

- An immediate response to defects minimizes facility downtime and enhances the productivity of manufacturing industries.

- Real-time monitoring technology with adaptive features and superior performance can mitigate the negative impact of decreased visual perception in aging workers and is expected to improve quality consistency and quality management efficiency.

- By implementing sophisticated yet simple real-time monitoring technologies, manufacturing industries can overcome limitations and promote coexistence with aging workers, securing market competitiveness.

This technology can be implemented to detect inclusions, scratches, and other defects, such as porosity, that may occur during extrusion. Furthermore, in a follow-up study, we plan to compare the results of the YOLO algorithm with those of two-stage algorithms based on a region proposal network (RPN), such as Faster R-CNN. Finally, the AI-network-based real-time monitoring technology proposed in this research is expected to be scalable to surface defect detection for polymers such as PFA tubes and for shape-critical items such as plastic products or semiconductor components.

Author Contributions

Conceptualization, B.H.K.; methodology, B.H.K. and Y.H.S.; software, C.M.J.; validation, C.M.J. and W.K.J.; formal analysis, C.M.J. and W.K.J.; investigation, C.M.J. and W.K.J.; resources, C.M.J.; data curation, C.M.J.; writing—original draft preparation, C.M.J. and W.K.J.; writing—review and editing, B.H.K. and Y.H.S.; visualization, C.M.J.; supervision, B.H.K. and Y.H.S.; project administration, B.H.K.; funding acquisition, B.H.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the “Regional Innovation Strategy (RIS)” through the National Research Foundation of Korea (NRF), funded by the Ministry of Education (MOE) (2022RIS-005), and by the Technology Innovation Program (20012834, Development of CNC control system for smart manufacturing equipment) funded by the Ministry of Trade, Industry & Energy (MOTIE, Korea).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Calzavara, M.; Battini, D.; Bogataj, D.; Sgarbossa, F.; Zennaro, I. Ageing workforce management in manufacturing systems: State of the art and future research agenda. Int. J. Prod. Res. 2020, 58, 729–747.

2. Ellis, R.D. Performance implications of older workers in technological manufacturing environments: A task-analysis/human reliability perspective. Int. J. Comput. Integr. Manuf. 1999, 12, 104–112.

3. Takahashi, T.; Kudo, Y.; Ishiyama, R. Intelli-wrench: Smart navigation tool for mechanical assembly and maintenance. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; Volume 2016, pp. 752–753.

4. Cardás, T. Safer and Healthier Work at Any Age–Final Overall Analysis Report; Publications Office of the European Union: Luxembourg, 2016.

5. Peruzzini, M.; Pellicciari, M. A framework to design a human-centred adaptive manufacturing system for aging workers. Adv. Eng. Inform. 2017, 33, 330–349.

6. Thun, J.H.; Größler, A.; Miczka, S. The impact of the demographic transition on manufacturing: Effects of an ageing workforce in German industrial firms. J. Manuf. Technol. Manag. 2007, 18, 985–999.

7. Barrios, J.; Reyes, K.S. Bridging the Gap: Using Technology to Capture the Old and Encourage the New. IEEE Ind. Appl. Mag. 2016, 22, 40–44.

8. Bouma, H. Accommodating older people at work. Gerontechnology 2013, 11, 489–492.

9. Vogel, C.; Walter, C.; Elkmann, N. Safeguarding and supporting future human-robot cooperative manufacturing processes by a projection- and camera-based technology. Procedia Manuf. 2017, 11, 39–46.

10. Vogel, C.; Elkmann, N. Novel safety concept for safeguarding and supporting humans in human-robot shared workplaces with high-payload robots in industrial applications. In Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2017; pp. 315–316.

11. Arinez, J.F.; Chang, Q.; Gao, R.X.; Xu, C.; Zhang, J. Artificial intelligence in advanced manufacturing: Current status and future outlook. J. Manuf. Sci. Eng. 2020, 142, 110804.

12. Chien, C.-F.; Dauzère-Pérès, S.; Huh, W.T.; Jang, Y.J.; Morrison, J.R. Artificial intelligence in manufacturing and logistics systems: Algorithms, applications, and case studies. Int. J. Prod. Res. 2020, 58, 2730–2731.

13. Jovane, F.; Westkämper, E.; Williams, D. The ManuFuture road to high-adding-value competitive sustainable manufacturing. In The ManuFuture Road: Towards Competitive and Sustainable High-Adding-Value Manufacturing; 2009; pp. 149–163.

14. Krüger, J.; Lien, T.K.; Verl, A. Cooperation of human and machines in assembly lines. CIRP Ann. 2009, 58, 628–646.

15. Lei, H.; Wang, B.; Wu, H.; Wang, A. Defect Detection for Polymeric Polarizer Based on Faster R-CNN. J. Inf. Hiding Multim. Signal Process. 2018, 9, 1414–1420.

16. Jiang, Q.; Tan, D.; Li, Y.; Ji, S.; Cai, C.; Zheng, Q. Object detection and classification of metal polishing shaft surface defects based on convolutional neural network deep learning. Appl. Sci. 2019, 10, 87.

17. Ministry of Trade, Industry and Energy, Core Engineering Composite Fluoropolymer Manufacturing Technology Development Technical Support Performance Report, 2003. Available online: https://scienceon.kisti.re.kr/srch/selectPORSrchReport.do?cn=TRKO201200005134# (accessed on 6 January 2024).

18. Vergnes, B. Extrusion defects and flow instabilities of molten polymers. Int. Polym. Process. 2015, 30, 3–28.

19. Chemours, R. Extrusion Processing Guide, 2018. Available online: https://www.scribd.com/document/545512545/teflon-ptfe-ram-extrusion-guide (accessed on 6 January 2024).

20. Arif, A.F.M.; Sheikh, A.K.; Qamar, S.Z.; Raza, M.K.; Al-Fuhaid, K.M. Product defects in aluminum extrusion and its impact on operational cost. In Proceedings of the 6th Saudi Engineering Conference, Dhahran, Saudi Arabia, 14–17 December 2002; KFUPM: Dhahran, Saudi Arabia, 2002; pp. 14–17.

21. Khan, J.G.; Dalu, R.S.; Gadekar, S.S. Defects in extrusion process and their impact on product quality. Int. J. Mech. Eng. Robot. Res. 2014, 3, 187.

22. Moon, J.Y.; Chon, Y.W.; Kim, H.J.; Hwang, Y.W. A study on improvement plan through analysis of chemical accidents in Korea. Korean J. Hazard. Mater. 2016, 4, 30–35.

23. Oh, J.-K.; Yoon, E.S. Review of expert system applications to chemical process fault diagnosis. J. Inst. Control Robot. Syst. 1987, 674–679.

24. Yun, J.P.; Jung, D.; Park, C. Surface defect inspection system for hot slabs. J. Inst. Control Robot. Syst. 2016, 22, 627–632.

25. Baygin, M.; Karakose, M.; Sarimaden, A.; Akin, E. Machine vision based defect detection approach using image processing. In Proceedings of the International Artificial Intelligence and Data Processing Symposium (IDAP), Malatya, Turkey, 16–17 September 2017; IEEE Publications: Piscataway, NJ, USA, 2017; pp. 1–5.

26. Glenn, J. YOLOv5. 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 6 January 2024).

27. Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object Detection in 20 Years: A Survey. Proc. IEEE 2019, 111, 257–276.

28. Chondronasios, A.; Popov, I.; Jordanov, I. Feature selection for surface defect classification of extruded aluminum profiles. Int. J. Adv. Manuf. Technol. 2016, 83, 33–41.

29. Géron, A. Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2022.

30. Tao, X.; Wang, Z.; Zhang, Z.; Zhang, D.; Xu, D.; Gong, X.; Zhang, L. Wire defect recognition of spring-wire socket using multitask convolutional neural networks. IEEE Trans. Compon. Packag. Manuf. Technol. 2018, 8, 689–698.

31. Kim, J.; Seo, K. Performance analysis of data augmentation for surface defects detection. Trans. Korean Inst. Electr. Eng. 2018, 67, 669–674.

32. Cubuk, E.D.; Zoph, B.; Mane, D.; Vasudevan, V.; Le, Q.V. Autoaugment: Learning augmentation strategies from data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 113–123.

33. Lee, Y.E.; Choi, N.J.; Byun, Y.H.; Kim, D.W.; Kim, K.C. Rubber O-ring defect detection system using K-fold cross validation and support vector machine. J. Korean Soc. Vis. 2021, 19, 68–73.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).