Abstract

This paper introduces a method for measuring the three-dimensional deformation of ground targets and outlines the associated processing workflow. First, ground-based synthetic aperture radar (GB-SAR) was used to monitor the radial deformation of targets, while optical equipment monitored pixel-level deformation in the plane perpendicular to the line of sight. Next, a regression model was established to convert pixel-level deformation into two-dimensional deformation in a basic length unit, and the radar and optical deformation monitoring data were fused. Finally, the fused data were used to solve for the comprehensive three-dimensional deformation of the target. In physical data acquisition experiments, the comprehensive three-dimensional deformation of targets was obtained and compared with the actual deformation. The experimental results show that the method achieves a relative error below 10% and millimeter-level monitoring accuracy.

1. Introduction

In recent years, frequent geological disasters have been caused by the disruption of geological structures through crustal activity such as volcanic eruptions and earthquakes, by shifts in climate patterns due to global warming, and by human activities such as mineral extraction and deforestation [1,2]. Beyond natural hazards, deformation also affects built structures: bridges, dams, and high-rise buildings commonly undergo deformation and settlement over their life cycles, sometimes leading to severe incidents such as collapse [3].

Geological disasters typically unfold gradually, with deformation accumulating over the long term rather than occurring suddenly [4]; before a major event, potential hazards often deform slowly and incrementally until instability arises [5,6]. Identifying these subtle deformation processes in time and issuing early warnings enables targeted disaster mitigation, effectively preventing casualties and economic losses [7]. It is therefore necessary to carry out long-term, continuous, real-time monitoring of slopes, mines, dilapidated buildings, and large structures at risk of landslide or collapse, to evaluate their stability, and to issue early warnings when necessary [8].

Differential interferometric synthetic aperture radar (DInSAR) has played a key role in surface monitoring since its inception, offering high precision, extensive coverage, and all-weather capability. However, space-based SAR still faces limitations in measurement accuracy and resolution because of temporal decorrelation, long spatial baselines, and constrained incidence angles [9,10]. Following space-based and airborne SAR, ground-based synthetic aperture radar (GB-SAR) has been widely adopted for high-precision deformation monitoring of areas affected by natural disasters, dams, alpine glaciers, and bridge piers, owing to its short spatial and temporal baselines, high resolution, short measurement period, and ease of operation [11,12,13].

As an emerging technology, GB-SAR provides detailed and accurate measurements of ground deformation [14,15]. Despite significant advances in GB-SAR research in recent years, challenges remain [16]. In particular, GB-SAR captures only the projection of the target's deformation onto the radar radial (LOS) direction, which can lead to underestimated deformation. This limitation is most evident when the angle between the target's deformation direction and the radar radial direction is large: even a significant deformation then appears very small in GB-SAR measurements [17,18]. For example, a displacement at 60° to the LOS appears at only half its true magnitude, since the measured projection scales with the cosine of that angle. This discrepancy creates a risk of missed alarms and undetected hazards [19]. To address this issue, some researchers have used multi-station monitoring to capture the multi-dimensional deformation of a target. However, this approach increases equipment costs and deployment difficulty, and its accuracy currently remains at the centimeter level [20].

Besides GB-SAR deformation monitoring, optical imaging deformation techniques are widely applied in fields such as materials science and medicine [21]. Optical deformation monitoring captures displacement in the plane perpendicular to the line of sight (LOS). We aim to use optical images to supply the deformation components that radar monitoring cannot observe, achieving comprehensive three-dimensional deformation analysis of ground targets and reducing the likelihood of missed alarms. This also provides technical support for natural disaster warning and building deformation monitoring [22,23].

In this paper, a technique for obtaining the comprehensive three-dimensional deformation of ground targets is proposed. First, radar deformation monitoring and optical deformation monitoring are carried out using GB-SAR and optical images, respectively, and a nonlinear fitting regression model is established. The pixel-level deformation obtained by optical monitoring is converted into deformation in the basic length unit, and the two sets of monitoring data are fused to solve for the comprehensive three-dimensional deformation of the target.

Key innovations in this paper include the following:

- Proposing a method to convert pixel deformation quantity from optical deformation monitoring into deformation quantity under a basic length unit (mm).

- Conducting three-dimensional deformation inversion by integrating optical and radar deformation information.

- Establishing a three-dimensional solution model and validating the feasibility and superiority of three-dimensional deformation inversion through measured data. In single-station monitoring scenarios, the accuracy of deformation monitoring within tens of meters reaches the millimeter level, with a controlled relative error below 10%.

The paper is organized as follows. Section 2 describes the principles of deformation monitoring using GB-SAR interferometry. Section 3 presents the proposed method, including an optical deformation monitoring technique based on a fitting regression model and a three-dimensional deformation inversion technique that integrates optical and radar images. Section 4 validates the feasibility and effectiveness of the proposed method experimentally. Finally, Section 5 discusses the proposed method in comparison with conventional approaches and examines the trade-off between accuracy and monitoring distance.

2. GB-SAR Deformation Monitoring

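The image obtained by GB-SAR monitoring is a single-look complex (SLC) image, i.e., each pixel value is a complex number. For N radar SLC images in a time series, differential interferometric processing is performed on each target point between acquisitions i and j (i < j):

$$\varphi_n^{ij} = \arg\left\{ s_j(n)\, s_i^{*}(n) \right\}$$

where $\varphi_n^{ij}$ is the interferometric phase of the nth target point, $s_i$ and $s_j$ are the ith and jth radar echo (SLC) images, and the superscript ∗ denotes complex conjugation. The interferometric phase of the nth target point in the differential interferogram can be modeled as

$$\varphi_n^{ij} = \frac{4\pi}{\lambda}\, d_n^{\mathrm{LOS}} + \varphi_n^{\mathrm{atm}} + \varphi_n^{\mathrm{noise}} + 2\pi k \tag{2}$$

where $d_n^{\mathrm{LOS}}$ is the LOS displacement, $\lambda$ is the radar wavelength, $\varphi_n^{\mathrm{atm}}$ is the atmospheric delay phase caused by changes in the atmospheric refractive index between acquisitions, $\varphi_n^{\mathrm{noise}}$ is the incoherent noise phase, which can be suppressed with a low-pass phase filter, and $2\pi k$ is the ambiguity phase, with k an integer representing the phase ambiguity [24].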

Note that in this experiment we assume that error phases such as the atmospheric phase have little effect, and the monitored targets are strong scatterers that are relatively insensitive to these errors. The proposed method therefore neglects atmospheric phase error and random noise error and solves for deformation directly from the interferometric phase obtained by GB-SAR [25].

GB-SAR deformation monitoring uses differential interferometry, which jointly analyzes two SAR complex images of the same target area acquired at different times. By comparing the phase of the target at the different acquisition times, the deformation of the target is obtained with millimeter-level precision. Each pixel in a GB-SAR image is a complex number, whose amplitude is usually used to interpret the imaged scene and study its scattering characteristics. When GB-SAR is used for deformation measurement, the radar position is fixed, so the spatial baseline between images is zero, and differential interferometry is achieved by complex conjugate multiplication of corresponding pixels in the two images. GB-SAR thus repeatedly observes the same area from the same position, acquiring two (or more) SLC images to form interferograms [26].

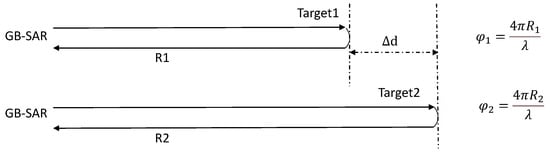

Figure 1 depicts the schematic diagram of GB-SAR differential interferometric deformation monitoring. Target 1 denotes the initial position of the target, and Target 2 its position after displacement. The radar measures the target both before and after deformation, recording phases $\varphi_1$ and $\varphi_2$, respectively. The phase difference between the two is then

$$\Delta\varphi = \varphi_2 - \varphi_1 = \frac{4\pi}{\lambda}\left(R_2 - R_1\right)$$

where $R_1$ and $R_2$ are the radar-to-target ranges before and after deformation.

Figure 1.

Principle of differential interferometry.

Using this relationship between phase difference and range, the deformation of the target projected onto the GB-SAR LOS direction is obtained as

$$d_{\mathrm{LOS}} = \frac{\lambda}{4\pi}\,\Delta\varphi$$

where $\Delta\varphi$ is the phase difference, $\lambda$ is the radar wavelength, and $d_{\mathrm{LOS}}$ is the deformation of the target projected onto the LOS direction.
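The phase-to-displacement relation can be sketched in a few lines of Python. This is a minimal illustration, not the processing chain used in this paper: it assumes a pixel phase proportional to +4πR/λ (the sign convention of the phase model above), a hypothetical 10 GHz X-band carrier (the actual system parameters are those in Table 1), and no phase unwrapping, so it is only valid while the LOS displacement stays below λ/4 in magnitude. The helper names are illustrative.

```python
import numpy as np

# Hypothetical X-band carrier (10 GHz); substitute the wavelength from Table 1.
WAVELENGTH_MM = 299_792_458.0 / 10e9 * 1e3  # ~29.98 mm


def los_displacement_mm(s_before: complex, s_after: complex,
                        wavelength_mm: float = WAVELENGTH_MM) -> float:
    """LOS displacement d = lambda / (4*pi) * dphi from two co-registered SLC pixels.

    dphi is the wrapped phase of s_after * conj(s_before) (zero spatial baseline).
    Without unwrapping, the result is unambiguous only for |d| < lambda / 4.
    """
    dphi = np.angle(s_after * np.conj(s_before))
    return float(wavelength_mm / (4 * np.pi) * dphi)


def simulated_pixel(range_mm: float, wavelength_mm: float = WAVELENGTH_MM) -> complex:
    """Unit-amplitude echo of a point target with two-way path phase 4*pi*R/lambda."""
    return complex(np.exp(1j * 4 * np.pi * range_mm / wavelength_mm))


# Usage: a target at 9.82 m moves 2.13 mm away from the radar.
before = simulated_pixel(9820.0)
after = simulated_pixel(9820.0 + 2.13)
print(round(los_displacement_mm(before, after), 4))  # 2.13
```

Only the phase difference matters, so the large absolute range phase cancels in the conjugate product, which is exactly why a zero-baseline GB-SAR can resolve millimeter motion at tens of meters.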

3. Methodology

3.1. Method Flowchart

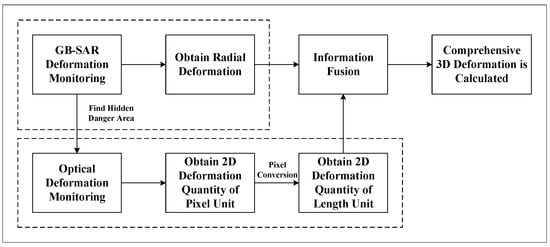

Since optical deformation monitoring provides information within the plane perpendicular to the line of sight, we use optical images to supply the deformation components that radar monitoring cannot obtain. The workflow of the proposed method is shown in Figure 2. The main steps are GB-SAR radial deformation monitoring, optical deformation monitoring, conversion of pixel deformation into deformation in the basic length unit, data fusion, and three-dimensional deformation calculation.

Figure 2.

The process of three-dimensional deformation monitoring.

3.2. Optical Deformation Monitoring

Deformation monitoring based on optical images uses optical measurement principles and imaging technology to monitor the deformation of objects [21]. It acquires two or more optical images before and after the target deforms and analyzes them to extract deformation information. The technique is widely used in materials science, physics, medicine, and other fields, for example to measure the expansion, bending, and torsion of materials. Digital image correlation (DIC) is one of the most commonly used optical deformation monitoring methods: it obtains the deformation of a region of interest by correlating two digital images of the specimen taken before and after deformation [27]. The region of interest in the reference (pre-deformation) image is divided into a grid of subsets, each of which is assumed to undergo rigid motion. For each subset, a pre-defined correlation function is evaluated over candidate positions in the deformed image; the position with the maximum correlation coefficient is taken as the subset's location after deformation, which gives the subset's displacement. The method offers strong anti-interference ability and high measurement accuracy [28].
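The subset-matching principle can be illustrated with a brute-force, integer-pixel search in Python/NumPy. This is a simplified sketch, not the authors' implementation: it uses the covariance (zero-normalized) correlation coefficient, one of the correlation functions discussed below, assumes a pure translation of each subset, and omits the sub-pixel refinement that practical DIC codes add. The function names and the synthetic speckle image are illustrative only.

```python
import numpy as np


def zncc(f: np.ndarray, g: np.ndarray) -> float:
    """Zero-normalized (covariance) correlation coefficient of two equal-size subsets."""
    f0 = f - f.mean()
    g0 = g - g.mean()
    denom = np.sqrt((f0 ** 2).sum() * (g0 ** 2).sum())
    return float((f0 * g0).sum() / denom) if denom > 0 else 0.0


def match_subset(ref, deformed, top, left, size, search):
    """Integer-pixel DIC: shift (dy, dx) of a square subset that maximizes ZNCC.

    The subset is treated as a rigid translation, as in the basic DIC principle.
    """
    f = ref[top:top + size, left:left + size]
    best_score, best_shift = -np.inf, (0, 0)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            r, c = top + dy, left + dx
            # Skip candidate windows that fall outside the deformed image.
            if r < 0 or c < 0 or r + size > deformed.shape[0] or c + size > deformed.shape[1]:
                continue
            score = zncc(f, deformed[r:r + size, c:c + size])
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift, best_score


# Usage: a random speckle image translated by (4, 6) pixels.
rng = np.random.default_rng(0)
ref = rng.random((100, 100))
deformed = np.roll(ref, shift=(4, 6), axis=(0, 1))
shift, score = match_subset(ref, deformed, top=40, left=40, size=21, search=8)
print(shift, round(score, 6))  # (4, 6) 1.0
```

Subtracting the subset means makes the coefficient insensitive to uniform brightness and contrast changes between the two images, which is one reason the covariance form is preferred over direct correlation.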

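The goal of the correlation calculation is to assess the similarity between the template and the target image, so choosing an appropriate correlation function is essential in the digital image correlation method. Common correlation functions include the direct correlation, normalized correlation, and covariance correlation functions. The correlation function is defined here through the mutual information of the two images:

$$I(X,Y) = H(X) + H(Y) - H(X,Y)$$

where $H(X)$ is the information entropy of image X, calculated as

$$H(X) = -\sum_{i=0}^{N-1} p_i \log p_i, \qquad p_i = \frac{n_i}{\sum_{k=0}^{N-1} n_k}$$

where $n_i$ is the number of pixels in image X with grayscale value i, N is the number of grayscale levels in image X, and $p_i$ is the probability of grayscale i occurring. $H(X,Y)$ is the joint entropy of the two images X and Y:

$$H(X,Y) = -\sum_{i=0}^{N-1}\sum_{j=0}^{N-1} p_{ij} \log p_{ij}$$

where $p_{ij}$ is the probability that a pixel has grayscale i in X and grayscale j in Y, and $I(X,Y)$ is the mutual information of images X and Y. Because the direct correlation function has poor noise robustness, the normalized correlation function and the covariance correlation function are commonly used instead:

$$C_{\mathrm{N}} = \frac{\sum_{x,y} f(x,y)\, g(x',y')}{\sqrt{\sum_{x,y} f(x,y)^2 \, \sum_{x,y} g(x',y')^2}}$$

$$C_{\mathrm{Cov}} = \frac{\sum_{x,y} \left[f(x,y) - \bar{f}\right]\left[g(x',y') - \bar{g}\right]}{\sqrt{\sum_{x,y} \left[f(x,y) - \bar{f}\right]^2 \, \sum_{x,y} \left[g(x',y') - \bar{g}\right]^2}}$$

where f(x, y) is the gray value at point (x, y) of the reference (pre-deformation) subset, g(x′, y′) is the gray value at the corresponding point (x′, y′) of the deformed subset, and $\bar{f}$ and $\bar{g}$ are the mean gray values of the two subsets.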

By searching the deformed image for the position with the maximum correlation coefficient, the location of the target subset after displacement is determined; comparing it with the subset's initial location in the reference image gives the pixel-level deformation of the target in the two-dimensional optical image plane.
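The entropy, joint entropy, and mutual information defined above can be computed directly from gray-level histograms. The sketch below assumes 8-bit images (256 gray levels) and base-2 logarithms, so results are in bits; it illustrates the definitions rather than reproducing the implementation used in this work.

```python
import numpy as np


def entropy(img: np.ndarray, levels: int = 256) -> float:
    """Shannon entropy H(X) in bits from the gray-level histogram (p_i = n_i / total)."""
    counts = np.bincount(img.ravel(), minlength=levels)
    p = counts[counts > 0] / counts.sum()
    return float(-(p * np.log2(p)).sum())


def joint_entropy(x: np.ndarray, y: np.ndarray, levels: int = 256) -> float:
    """Joint entropy H(X, Y) in bits from the 2-D histogram of co-located gray values."""
    # Encode each (gray_x, gray_y) pair as a single integer bin index.
    pair_codes = x.ravel().astype(np.int64) * levels + y.ravel().astype(np.int64)
    counts = np.bincount(pair_codes, minlength=levels * levels)
    p = counts[counts > 0] / counts.sum()
    return float(-(p * np.log2(p)).sum())


def mutual_information(x: np.ndarray, y: np.ndarray, levels: int = 256) -> float:
    """I(X, Y) = H(X) + H(Y) - H(X, Y)."""
    return entropy(x, levels) + entropy(y, levels) - joint_entropy(x, y, levels)


x = np.array([[0, 1], [2, 3]], dtype=np.uint8)  # four equally likely gray levels
print(entropy(x), mutual_information(x, x))  # 2.0 2.0
```

Mutual information is maximal when one image fully predicts the other and drops to zero when they are statistically independent, for example when one of them is constant.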

3.3. Pixel Conversion Based on Fitting Regression

3.3.1. The Proposed Idea

Optical deformation monitoring methods, typified by digital image correlation, have many advantages. However, they yield only pixel-level deformation. In the fields where they are widely used, such as materials science and physics, it is usually sufficient to analyze deformation qualitatively, or to use the known size of the target as prior information to roughly convert pixel deformation into actual deformation. Neither qualitative analysis of pixel deformation nor rough conversion based on prior information can be applied to deformation monitoring of physical targets in real scenes. Converting the pixel deformation measured at an arbitrary position into actual deformation is therefore the key problem in applying optical deformation monitoring in practice.

This paper proposes a regression-fitting method that converts the pixel deformation obtained by optical deformation monitoring into deformation in the basic length unit. By fitting optical measurements of a series of standard samples at known detection distances, the method predicts, for any position within the fitting range, the ratio between deformation in the basic length unit and pixel deformation. The basic process of the method is shown in Figure 3.

Figure 3.

The basic process of pixel conversion based on fitting regression.

In this process, data acquisition collects the diagonal pixel values of identical standard samples at different positions. Data preprocessing includes steps such as image interpolation and edge detection. The prediction step uses the fitted model to obtain the predicted diagonal pixel values of standard samples at different positions, from which the pixel conversion ratio is calculated and the pixel-level optical deformation is finally converted into deformation in the basic length unit.

3.3.2. Construction Model

The nonlinearity observed in camera imaging stems primarily from the multiple refractions within the lens system. The lens is the key component of the imaging system, and a single thin lens acts on the optical field as a phase transformation:

$$t_l(x,y) = \exp\left[-\mathrm{j}\,\frac{k}{2f}\left(x^2+y^2\right)\right]$$

where k is the wavenumber of the light, f is the focal length of the lens, and (x, y) are co-ordinates in the lens plane. Because a single lens acts through an exponential function, an exponential model is adopted for the nonlinear fitting of camera imaging:

$$P = a\,e^{bR}$$

where R is the object (detection) distance and P is the diagonal size, in pixels, of the standard sample at detection distance R. Because a camera lens usually contains several lens elements, the fitting model should contain at least two exponential terms to give better predictions:

$$P = a\,e^{bR} + c\,e^{dR} \tag{13}$$

where a, b, c, and d are the fitting parameters.

After the diagonal pixel size P of the standard sample at detection distance R has been obtained, the pixel conversion ratio r is defined as

$$r = \frac{D}{P} \tag{14}$$

where D and P are the diagonal size of the same standard sample at detection distance R, expressed in millimeters (mm) and in pixels, respectively, so r is in mm per pixel. In this study, the standard samples are a set of optical calibration boards with a pattern size of 80 mm × 80 mm, so their diagonal size is $D = \sqrt{80^2 + 80^2} = 80\sqrt{2} \approx 113.1$ mm.

The detection distance can be obtained with the ranging function of GB-SAR: in an actual monitoring scene, GB-SAR directly reads the detection distance of a deformation point once it has been monitored. When the optical sensor is placed at the radar center of the GB-SAR, the detection distance measured by GB-SAR is used as R.

With the nonlinear fitting model, the diagonal pixel size P of the standard sample at any position within the fitting range is obtained from Formula (13), and the pixel conversion ratio is then obtained from Formula (14). Multiplying the pixel-level deformation measured in the optical image (Section 3.2) by this ratio converts it into deformation in the basic length unit.
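The conversion chain of Formulas (13) and (14) can be sketched as follows. The coefficients are the fitted values reported in Section 4.1, the diagonal size D = 113.12 mm and the three-fold interpolation factor are taken from the experiments, and the function names are hypothetical; the model is only meaningful inside the fitted distance range.

```python
import math

# Fitted coefficients of P = a*exp(b*R) + c*exp(d*R) reported in Section 4.1 (R in metres).
FIT_A, FIT_B, FIT_C, FIT_D = 756.3, -0.5089, 109.3, -0.04932
DIAGONAL_MM = 113.12  # diagonal size of the 80 x 80 mm calibration pattern


def diagonal_pixels(range_m: float) -> float:
    """Predicted diagonal size P (pixels) of the calibration pattern at range R."""
    return FIT_A * math.exp(FIT_B * range_m) + FIT_C * math.exp(FIT_D * range_m)


def pixels_to_mm(pixel_shift: float, range_m: float, interp_factor: int = 3) -> float:
    """Convert a pixel displacement measured on an interpolated image to millimetres."""
    ratio = DIAGONAL_MM / diagonal_pixels(range_m)  # r = D / P, mm per original pixel
    return pixel_shift * ratio / interp_factor      # interpolation shrinks the pixel pitch


print(round(diagonal_pixels(9.82), 2), round(pixels_to_mm(6, 9.82), 2))    # 72.45 3.12
print(round(pixels_to_mm(3, 13.73), 2), round(pixels_to_mm(4, 13.73), 2))  # 2.01 2.68
```

The printed values reproduce the intermediate results of Sections 4.1 and 4.3 (P = 72.45 pixels at 9.82 m, and 3.12 mm, 2.01 mm, and 2.68 mm after conversion), which is a useful consistency check on the fitted model.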

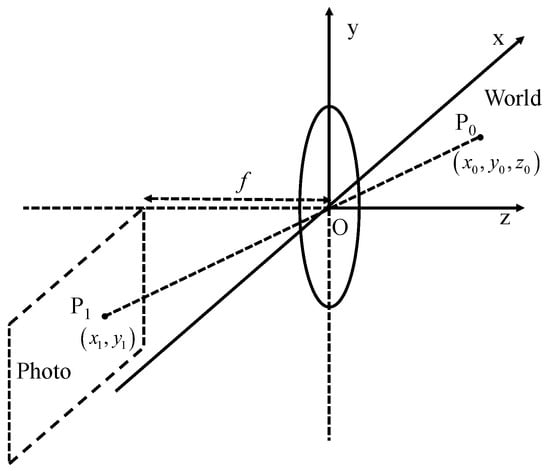

3.4. Image Fusion

Compared with spaceborne SAR imaging, GB-SAR imaging spans a large range of detection distances, so its signal processing exhibits strong spatial variation and its images show obvious geometric distortion [29]. Consequently, the conventional approaches to fusing spaceborne SAR and optical images are not applicable to GB-SAR and optical image fusion. This paper combines GB-SAR and optical images through co-ordinate system transformation, using transformation matrices and control points [30]. Consider a target point in the actual scene and its corresponding point in the optical image, as shown in Figure 4.

Figure 4.

Schematic diagram of optical imaging co-ordinate transformation.

According to the lens imaging principle of optical cameras, with f the focal length of the camera, the co-ordinates of the target point in the actual scene are related to those of its point in the optical image through a transformation matrix A, which can be shown to be invertible.

The target point also has a corresponding point in the GB-SAR image, with co-ordinates in a rectangular co-ordinate system. Given the azimuth resolution and the range resolution of the GB-SAR, the co-ordinates in the actual scene are likewise related to those in the GB-SAR image through a matrix B.

Matrix B can also be shown to be invertible, so the optical and GB-SAR image co-ordinates are related directly through a single transformation matrix C.

Matrix C can be calculated from control points. In practical data collection, four to six corner reflectors are typically used as pre-defined control points; after the optical and GB-SAR images are acquired, the co-ordinates of these control points are extracted manually from both images.
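The form of C is not spelled out here, so the following is only a sketch under an assumption: it models C as a 2 × 3 affine transform from GB-SAR image co-ordinates to optical image co-ordinates and estimates it by least squares from the control-point pairs. Four to six corner reflectors give 8 to 12 equations for the 6 unknowns, so the system is overdetermined. The function names and control-point values are hypothetical.

```python
import numpy as np


def estimate_affine(src_pts, dst_pts) -> np.ndarray:
    """Least-squares 2x3 matrix C such that dst ≈ C @ [x, y, 1] for every control point."""
    src = np.asarray(src_pts, dtype=float)
    dst = np.asarray(dst_pts, dtype=float)
    if src.shape != dst.shape or src.ndim != 2 or src.shape[1] != 2 or len(src) < 3:
        raise ValueError("need at least 3 matching (x, y) control-point pairs")
    design = np.hstack([src, np.ones((len(src), 1))])        # n x 3 homogeneous co-ordinates
    solution, *_ = np.linalg.lstsq(design, dst, rcond=None)  # 3 x 2 least-squares solution
    return solution.T


def apply_affine(c_mat: np.ndarray, pts) -> np.ndarray:
    """Map (x, y) points with a 2x3 affine matrix."""
    pts = np.asarray(pts, dtype=float)
    return pts @ c_mat[:, :2].T + c_mat[:, 2]


# Usage with synthetic control points (e.g. five corner reflectors).
c_true = np.array([[1.8, 0.2, 35.0], [-0.1, 2.1, 12.0]])
radar_pts = np.array([[10, 20], [50, 25], [30, 80], [70, 60], [15, 55]], dtype=float)
optical_pts = apply_affine(c_true, radar_pts)
c_est = estimate_affine(radar_pts, optical_pts)
print(np.allclose(c_est, c_true))  # True
```

With real, manually picked control points, the residuals `apply_affine(c_est, radar_pts) - optical_pts` give a quick check of registration quality; if GB-SAR geometric distortion makes an affine model too coarse, a higher-order mapping would be needed.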

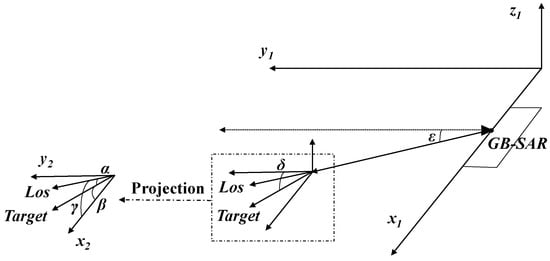

3.5. Construct a Three-Dimensional Solution Model

After obtaining the radial deformation and two-dimensional plane deformation of the target, the actual three-dimensional deformation of the target can be solved by establishing a three-dimensional solution model. A basic three-dimensional deformation solution model is shown in Figure 5.

Figure 5.

Three-dimensional deformation model.

In Figure 5, the target deformation and the radar LOS are projected onto a vertical plane, in which the pitch angle of the target and the pitch angle of the GB-SAR LOS are defined; in the horizontal plane, the figure defines the angle between the radar radial direction and the target deformation direction, the target azimuth, and the azimuth angle of GB-SAR. The three-dimensional deformation is solved from these angles together with the measured components.

Here, $d_x$, $d_z$, and $d_l$ are the monitoring results along the X-axis, the Z-axis, and the radar radial direction, respectively, and $d$ is the real deformation of the target. Finally, the radial deformation measured by GB-SAR and the plane deformation measured by the optical camera are substituted into the model to obtain the complete deformation d.
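The geometry can be illustrated with a simplified Python sketch. It is not the paper's solution model: it assumes that the optical image plane is exactly perpendicular to the radar LOS (camera on the radar boresight), builds an orthonormal frame from an assumed LOS pitch and azimuth, and composes d_x, d_z, and d_l in that frame. It ignores the target-dependent angle terms of Figure 5, so with the values measured in Section 4.3 (d_x = 2.01 mm, d_z = 2.68 mm, d_l = 2.13 mm) it returns about 3.97 mm rather than the 3.91 mm obtained with the full model. Function names are hypothetical.

```python
import numpy as np


def sensor_basis(pitch_rad: float, azimuth_rad: float) -> np.ndarray:
    """Rows: image-plane horizontal axis e_x, image-plane vertical axis e_z, LOS unit vector u.

    Assumed ground frame: X horizontal across the scene, Y horizontal forward, Z up.
    The LOS azimuth is measured from +Y towards +X; the pitch from the horizontal plane.
    """
    cp, sp = np.cos(pitch_rad), np.sin(pitch_rad)
    ca, sa = np.cos(azimuth_rad), np.sin(azimuth_rad)
    u = np.array([cp * sa, cp * ca, sp])  # radar line of sight
    e_x = np.array([ca, -sa, 0.0])        # horizontal and perpendicular to the LOS
    e_z = np.cross(e_x, u)                # perpendicular to both; points up (+Z) at zero pitch
    return np.vstack([e_x, e_z, u])


def deformation_vector(d_x: float, d_z: float, d_l: float,
                       pitch_rad: float = 0.0, azimuth_rad: float = 0.0) -> np.ndarray:
    """Ground-frame deformation vector d_x*e_x + d_z*e_z + d_l*u."""
    return np.array([d_x, d_z, d_l]) @ sensor_basis(pitch_rad, azimuth_rad)


v = deformation_vector(2.01, 2.68, 2.13)
print(round(float(np.linalg.norm(v)), 2))  # 3.97
```

Because the frame is orthonormal, the magnitude does not depend on the assumed angles; the angles only matter for expressing the deformation vector in ground co-ordinates.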

4. Experiments and Analysis

To demonstrate the accuracy and efficacy of the proposed method, we carried out outdoor experiments to obtain measured data for verification. Section 4.1 examines the effectiveness of the pixel conversion method, Section 4.2 examines image fusion, and Section 4.3 presents the comprehensive three-dimensional deformation obtained for the target.

4.1. Optical Deformation Monitoring Based on Fitting Regression Models

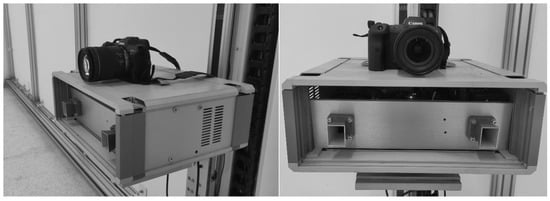

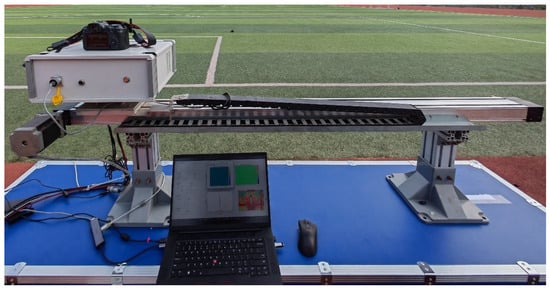

First, the parameters of Formula (13) were determined experimentally. To obtain the detection range of the targets, the experimental system combines an X-band GB-SAR with an optical camera, as shown in Figure 6.

Figure 6.

Optical GB-SAR deformation monitoring system.

The parameters of the system are shown in Table 1.

Table 1.

Index parameters of the system.

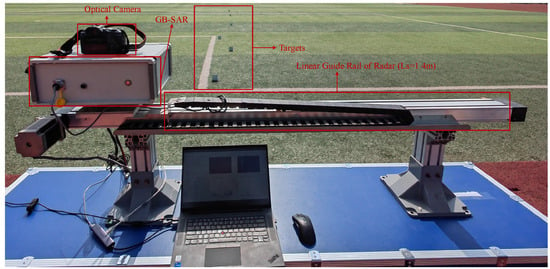

The lens of the optical camera is placed at the center of the GB-SAR radar line of sight so that GB-SAR can provide information such as the detection range and azimuth angle of the targets. The experimental scene is shown in Figure 7.

Figure 7.

Optical deformation monitoring scene.

Identical optical calibration plates are placed at the experimental site as targets. The pattern size of each calibration plate is 80 × 80 mm, so its diagonal size in the basic length unit is D = 113.12 mm. The detection distances of these calibration plates are collected to fit the model parameters; the detection distances and diagonal pixel sizes of the six calibration boards are listed in Table 2.

Table 2.

Obtaining fitting data.

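Fitting the nonlinear model of Section 3.3.2 to these data gives a = 756.3, b = −0.5089, c = 109.3, and d = −0.04932, so the nonlinear regression model within the fitting range is

$$P = 756.3\,e^{-0.5089R} + 109.3\,e^{-0.04932R} \tag{24}$$

where R is in meters and P is in pixels.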

The experiment was then repeated with the calibration plates moved to new positions, and the measured detection distances and diagonal pixel sizes of the six plates were compared with the predictions of Equation (24), as shown in Table 3.

Table 3.

Comparison of fitting prediction effect.

The root mean square error (RMSE) and mean relative error (MRE) of the model are calculated, as shown in Table 4.

Table 4.

Comparison of fitting prediction effect.

Once its parameters have been solved, the nonlinear fitting model can be reused. The root mean square error is 0.9301 pixels and the mean relative error is within 3%, indicating good predictive performance. To verify the pixel conversion method in actual optical deformation monitoring, a corner reflector used as the target was moved horizontally by 3.0 mm at the experimental site, and images were acquired before and after the movement.

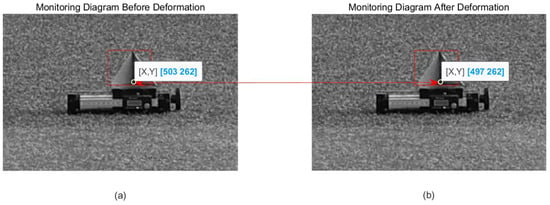

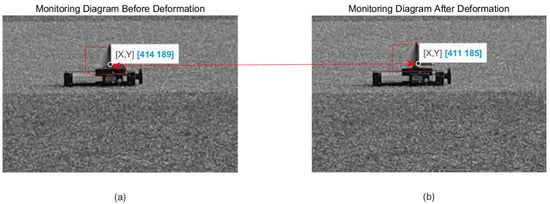

After the images were interpolated by a factor of three, optical deformation monitoring gave a pixel deformation of six pixels for the target, as shown in Figure 8.

Figure 8.

Optical deformation monitoring result: (a) before deformation; (b) after deformation.

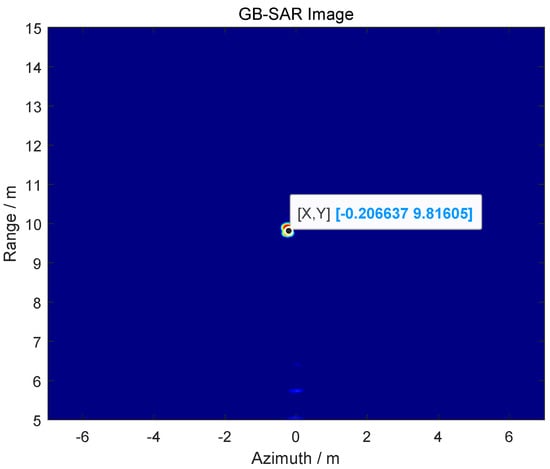

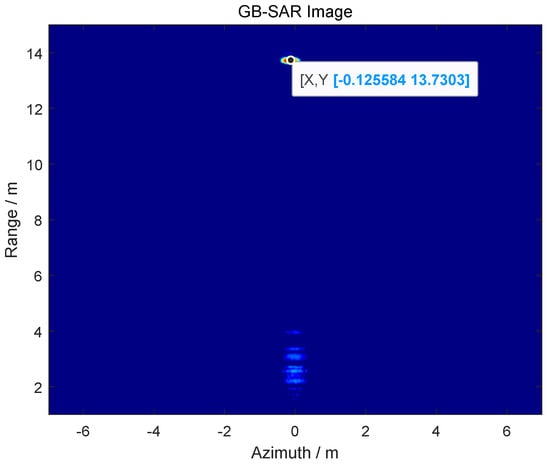

GB-SAR gives a detection range of R = 9.82 m for the target, as shown in Figure 9.

Figure 9.

GB-SAR imaging to obtain detection range.

Based on the nonlinear fitting model of Section 3.3, the diagonal pixel size of the standard sample at this position is

$$P = 756.3\,e^{-0.5089 \times 9.82} + 109.3\,e^{-0.04932 \times 9.82} \approx 72.45\ \text{pixels}$$

The original pixel conversion ratio at this position is therefore r = 113.12/72.45 ≈ 1.56 mm/pixel. Because the optical monitoring image was interpolated by a factor of three, the pixel conversion ratio in the interpolated image is r = 1.56/3 = 0.52 mm/pixel. The measured pixel deformation thus corresponds to 6 × 0.52 = 3.12 mm in the basic length unit, a relative error of 4% with respect to the actual 3.0 mm movement of the target.

In order to better test the performance of the method, we changed the deformation distance of the target, repeated the experiment, and obtained the results shown in Table 5:

Table 5.

Optical deformation monitoring results with a detection distance of 9.82 m.

The accuracy of optical deformation monitoring with this method reaches the sub-millimeter level within 10 m, and the relative error is within 6%. To further verify the applicability and effectiveness of the proposed method, the detection distance was increased and the above experiments were repeated; the results are shown in Table 6:

Table 6.

Optical deformation monitoring results with a detection distance of 55.62 m.

It can be seen that the accuracy of optical deformation monitoring using this method can reach the millimeter level at a detection distance of tens of meters, and the relative error can be controlled within 7%.

These experiments show that the proposed method performs real-time pixel conversion at any position within a range of a few meters to several tens of meters. Once the model parameters have been solved, they need not be solved again and can be reused in subsequent experiments. The method thus addresses the difficulty of converting pixel deformation into deformation in a basic length unit in optical deformation monitoring and improves the applicability and practicality of the overall deformation monitoring system.

4.2. Image Fusion

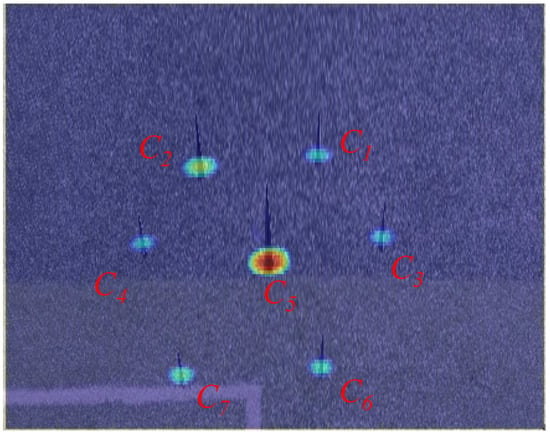

To verify the effectiveness of the algorithm proposed in Section 3.4, we carried out multi-target image fusion experiments outdoors. First, several corner reflector targets were randomly arranged at the experimental site, as shown in Figure 10.

Figure 10.

Optical image of some corner reflectors.

The GB-SAR image and the optical image of these corner reflectors are shown in Figure 11, where the labels mark the corresponding position of the nth corner reflector in the optical and radar images.

Figure 11.

GB-SAR image and an optical image of the corner reflectors.

Based on the algorithm in Section 3.4, the images are merged, as shown in Figure 12.

Figure 12.

Fusion image.

The fusion result shows good alignment between the targets in the GB-SAR and optical images, with no significant dislocation, giving a natural and clear visualization. Fusing GB-SAR and optical images allows potential hazards to be identified by radar and the corresponding areas to be selected in the optical image for optical deformation monitoring. The fused data from GB-SAR radial deformation monitoring and optical two-dimensional deformation monitoring are then used to calculate the comprehensive three-dimensional deformation of the target.

4.3. Obtaining Three-Dimensional Deformation Quantity

To verify the feasibility of the proposed method, we carried out data acquisition experiments in actual outdoor scenes to measure the three-dimensional deformation of a target; the experimental scene is shown in Figure 13.

Figure 13.

Three-dimensional deformation monitoring experimental scene.

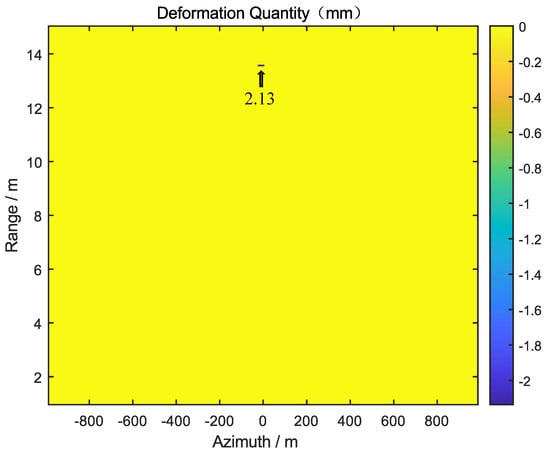

The target was moved along the X-, Y-, and Z-axes by 2.0 mm, 2.0 mm, and 2.5 mm, respectively, so its actual three-dimensional deformation is 3.77 mm. First, GB-SAR was used to monitor the radial deformation of the target; the measured radial deformation $d_l$ is 2.13 mm, as shown in Figure 14.
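For reference, the actual deformation quoted above is the Euclidean norm of the three applied axis displacements:

$$d = \sqrt{2.0^2 + 2.0^2 + 2.5^2} = \sqrt{14.25} \approx 3.77\ \text{mm}$$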

Figure 14.

GB-SAR acquires the radial deformation of the target.

The optical camera was then used for two-dimensional optical deformation monitoring of the target, giving deformations of $d_x$ = 3 pixels and $d_z$ = 4 pixels, as shown in Figure 15.

Figure 15.

Optical deformation monitoring result: (a) before deformation; (b) after deformation.

The GB-SAR ranging function gives a detection range of R = 13.73 m for the target, as shown in Figure 16.

Figure 16.

GB-SAR image of the target.

Using the pixel conversion algorithm of Section 3.3, the diagonal size of the calibration board at this detection distance is P = 56.23 pixels, so the pixel conversion ratio is r = 113.12/56.23 ≈ 2.01 mm/pixel. Because the optical monitoring image was interpolated by a factor of three, the pixel conversion ratio in the interpolated image is r = 2.01/3 = 0.67 mm/pixel, giving optical deformation monitoring results of $d_x$ = 2.01 mm and $d_z$ = 2.68 mm in the basic length unit. Substituting the optical and GB-SAR monitoring data into the three-dimensional solution model shown in Figure 5 gives a final comprehensive three-dimensional deformation of 3.91 mm, a relative error of 3.71%.

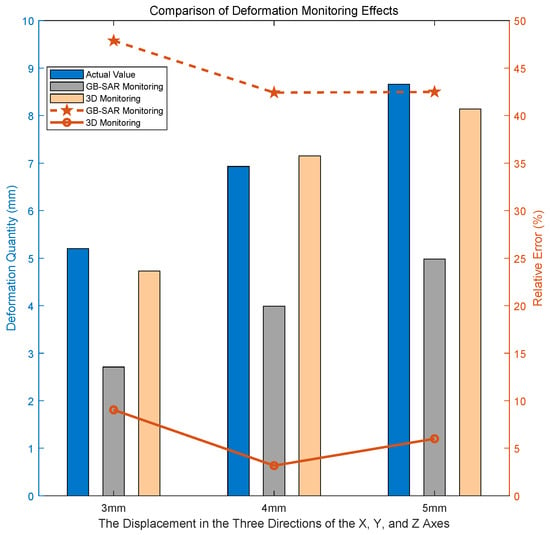

In order to better show the effect of the experiment, the target was moved the same distance along the X-axis, Y-axis, and Z-axis by 3 mm, 4 mm, and 5 mm, respectively. The comparative experimental results are illustrated in Figure 17. The term “GB-SAR Monitoring” denotes the outcomes obtained through the exclusive use of GB-SAR for deformation monitoring, and “3D Monitoring” signifies the results obtained by applying the method proposed in this paper to deformation monitoring.

Figure 17.

Comparison of monitoring effects at R = 13.73 m.

The results show that when the angle between the actual deformation direction of the target and the radar radial direction is large, the radial deformation monitored by the radar notably underestimates the real deformation. With the supplementary optical image information, the three-dimensional monitoring results agree more closely with the actual deformation, which helps prevent missed alarms caused by large angles between the deformation direction and the radar radial direction.

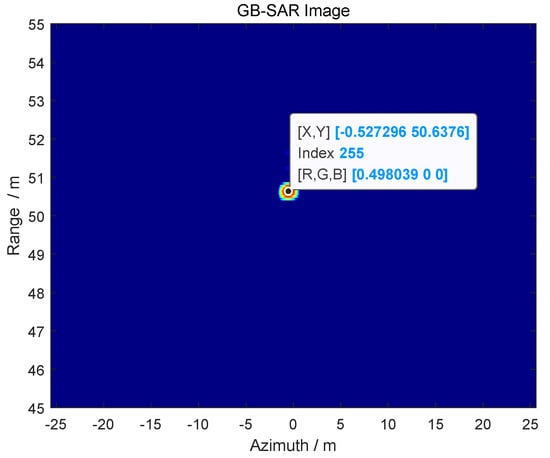

In order to better verify the applicability and effectiveness of the proposed method, the detection distance of the target was changed. The radar imaging is shown in Figure 18, and the detection distance is R = 50.64 m.

Figure 18.

GB-SAR image of the target.

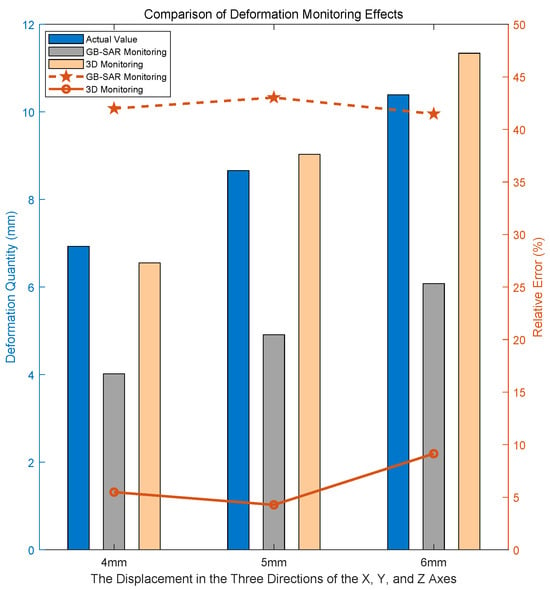

The outcomes of the repeated experiments showcasing three-dimensional deformation monitoring are illustrated in Figure 19.

Figure 19.

Comparison of monitoring effects at R = 50.64 m.

The mean relative error (MRE) and root mean square error (RMSE) of the experimental results of the proposed method under different detection distances were calculated, as shown in Table 7.

Table 7.

The monitoring effect under different detection distances.

These experiments demonstrate that the proposed method can effectively monitor the comprehensive three-dimensional deformation of targets within a range of tens of meters. Sub-millimeter-level accuracy is achieved at a detection distance of about 10 m, and millimeter-level accuracy at detection distances of tens of meters. The mean relative error is within 10% and the root mean square error is within 0.7 mm, indicating that the method measures the comprehensive three-dimensional deformation of monitored targets effectively.
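The two accuracy metrics can be computed as follows, assuming the standard definitions are the ones behind Tables 4 and 7. Since the table data are not reproduced in the text, the usage example only checks the single-case relative errors quoted in Sections 4.1 and 4.3 (3.12 mm vs. 3.0 mm, and 3.91 mm vs. 3.77 mm).

```python
import numpy as np


def rmse(pred, ref) -> float:
    """Root mean square error between measured/predicted values and reference values."""
    pred, ref = np.asarray(pred, dtype=float), np.asarray(ref, dtype=float)
    return float(np.sqrt(np.mean((pred - ref) ** 2)))


def mre(pred, ref) -> float:
    """Mean relative error mean(|pred - ref| / |ref|), returned as a fraction."""
    pred, ref = np.asarray(pred, dtype=float), np.asarray(ref, dtype=float)
    return float(np.mean(np.abs(pred - ref) / np.abs(ref)))


print(round(100 * mre([3.12], [3.0]), 2), round(100 * mre([3.91], [3.77]), 2))  # 4.0 3.71
```

RMSE carries the unit of the measured quantity (mm for deformation, pixels for the fitted diagonal size), whereas MRE is dimensionless, which makes it the more convenient figure for comparing results across detection distances.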

5. Discussions

5.1. Comparison of Conventional Methods and the Proposed Method

Most research on three-dimensional deformation monitoring has focused on spaceborne and airborne SAR, and few methods are tailored to ground targets. Existing work on the three-dimensional deformation monitoring of ground targets follows two main directions: multi-station three-dimensional deformation monitoring, and the use of prior terrain information to predict deformation in various directions.

For multi-station three-dimensional deformation monitoring, the predominant method uses multiple GB-SARs for multi-view interferometric measurements. This technique requires at least two GB-SARs, and three are often deployed simultaneously for higher accuracy. However, its accuracy is currently limited to the centimeter level, and its high cost and deployment difficulty significantly impede the widespread adoption of GB-SARs.
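The multi-station principle can be illustrated with a small inversion. Each GB-SAR i measures d_i = u_i · D, the projection of the three-dimensional displacement D onto its unit LOS vector u_i. With three stations viewing from sufficiently different directions, the system has full rank and D follows from least squares. The NumPy sketch below uses a hypothetical viewing geometry and is a generic illustration of LOS inversion, not the specific procedure of any particular multi-station system. With only two stations the matrix is rank-deficient, so additional assumptions would be needed; the function reports this case as an error.

```python
import numpy as np

def invert_los_to_3d(los_vectors, los_displacements):
    """Least-squares estimate of a 3-D displacement from several LOS observations."""
    A = np.asarray(los_vectors, dtype=float)
    A = A / np.linalg.norm(A, axis=1, keepdims=True)  # normalise each viewing direction
    b = np.asarray(los_displacements, dtype=float)
    d, _, rank, _ = np.linalg.lstsq(A, b, rcond=None)
    if rank < 3:
        raise ValueError("viewing geometry does not constrain all three components")
    return d

# Hypothetical geometry: three radars viewing the target from different directions.
los = np.array([[1.0, 0.0, 0.3],
                [0.0, 1.0, 0.3],
                [-0.7, 0.7, 0.6]])
true_D = np.array([1.0, -2.0, 0.5])                          # mm, illustrative
unit_los = los / np.linalg.norm(los, axis=1, keepdims=True)
observed = unit_los @ true_D                                 # what each radar would measure

estimate = invert_los_to_3d(los, observed)
print(np.round(estimate, 6))                                 # recovers true_D
```

In practice, the conditioning of the geometry matrix (how different the viewing directions are) governs how strongly measurement noise is amplified in the estimate.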

In contrast, the three-dimensional deformation monitoring method proposed in this article requires only single-station monitoring. Compared with the multi-station approach, this substantially reduces the cost and deployment complexity of three-dimensional deformation monitoring.

Moreover, approaches that predict deformation from prior terrain information rely on prior knowledge of the terrain and on time series analysis combined with geographic reference models. The proposed method requires no geographic reference model or similar data. This flexibility makes it adaptable to diverse scenarios and effectively expands the application range of GB-SAR.

5.2. Conflict between Accuracy and Monitoring Distance

As the experiments in Section 4.3 show, the accuracy of the proposed three-dimensional deformation monitoring method decreases as the monitoring distance increases. This limitation stems mainly from the current constraints of optical imaging equipment. At present, the method achieves millimeter-level three-dimensional deformation monitoring accuracy at distances of tens of meters, with the relative error controlled within 10%, as shown in Figure 17, Figure 19, and Table 7. However, when the detection distance increases to the 100-meter range, the accuracy of the method decreases to the centimeter level. As optical devices continue to develop rapidly, long-distance three-dimensional deformation monitoring will become feasible. At that stage, the atmospheric phase correction described in Formula (2) will also become relevant, further extending the applicability of the proposed method.
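A simple pinhole-camera model shows why the optical term limits accuracy at long range. This model is our assumption rather than the paper's error model. The object-space footprint of one pixel is R·p/f for range R, pixel pitch p, and focal length f, so a fixed sub-pixel matching precision translates into a displacement uncertainty that grows linearly with R. The camera parameters below are hypothetical, and the printed figures illustrate the scaling only; they are not the accuracies reported in Table 7.

```python
def mm_per_pixel(range_m, pixel_pitch_um, focal_length_mm):
    """Object-space size of one pixel (mm) under a pinhole model: R * p / f."""
    range_mm = range_m * 1000.0
    pitch_mm = pixel_pitch_um / 1000.0
    return range_mm * pitch_mm / focal_length_mm

def optical_uncertainty_mm(range_m, pixel_pitch_um, focal_length_mm, matching_precision_px):
    """Displacement uncertainty (mm) implied by a given sub-pixel matching precision."""
    return matching_precision_px * mm_per_pixel(range_m, pixel_pitch_um, focal_length_mm)

# Hypothetical camera and matching precision, chosen only to show the trend.
PIXEL_PITCH_UM = 3.45
FOCAL_LENGTH_MM = 50.0
MATCHING_PRECISION_PX = 0.1

for r in (10.0, 50.64, 100.0):
    scale = mm_per_pixel(r, PIXEL_PITCH_UM, FOCAL_LENGTH_MM)
    sigma = optical_uncertainty_mm(r, PIXEL_PITCH_UM, FOCAL_LENGTH_MM, MATCHING_PRECISION_PX)
    print(f"R = {r:6.2f} m: {scale:.3f} mm/pixel, about {sigma:.3f} mm uncertainty")
```

Under this model, a longer focal length or a finer pixel pitch shrinks the per-pixel footprint proportionally, which is one way improved optical devices could extend the usable range.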

6. Conclusions

This paper introduces a new method for obtaining the three-dimensional deformation of a target by combining ground-based synthetic aperture radar deformation monitoring with optical deformation monitoring. The method requires only single-station monitoring, which avoids the high cost and difficult deployment of multi-station monitoring, and it yields the comprehensive three-dimensional deformation of ground-based targets. Compared with conventional deformation monitoring using GB-SAR alone, it effectively reduces monitoring error and avoids potential safety hazards such as missed alarms. Outdoor physical acquisition experiments show that the proposed method achieves sub-millimeter monitoring accuracy within a range of 10 m and millimeter-level accuracy at ranges of tens of meters, with the mean relative error within 10% and the root mean square error within 0.7 mm. The method can be applied effectively to the three-dimensional deformation monitoring of ground-based targets and offers a new approach to natural disaster early warning and building deformation monitoring.

Author Contributions

Conceptualization, Y.C., Y.M., H.H. and T.L.; Methodology, Y.C., H.H. and T.L.; Software, Y.C. and Y.M.; Validation, Y.C. and Y.M.; Formal analysis, Y.C. and Y.M.; Investigation, Y.C., Y.M. and H.H.; Resources, Y.C. and H.H.; Data curation, Y.C., Y.M., H.H. and T.L.; Writing—original draft, Y.C.; Writing—review & editing, Y.C., Y.M., H.H. and T.L.; Visualization, Y.C. and T.L.; Supervision, H.H. and T.L.; Project administration, H.H. and T.L.; Funding acquisition, H.H. and T.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Key Areas of R&D Projects in Guangdong Province (2019B111101001), the introduced innovative R&D team project of “The Pearl River Talent Recruitment Program” (2019ZT08X751), the Shenzhen Science Technology Planning Project (JCYJ20190807153416984), and the Natural Science Foundation of China (62071499).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Wang, Y.; Hong, W.; Zhang, Y.; Lin, Y.; Li, Y.; Bai, Z.; Zhang, Q.; Lv, S.; Liu, H.; Song, Y. Ground-based differential interferometry SAR: A review. IEEE Geosci. Remote Sens. Mag. 2020, 8, 43–70.

- Zhang, Z.; Suo, Z.; Tian, F.; Qi, L.; Tao, H.; Li, Z. A Novel GB-SAR System Based on TD-MIMO for High-Precision Bridge Vibration Monitoring. Remote Sens. 2022, 14, 6383.

- Ruiz, J.J.; Lemmetyinen, J.; Lahtinen, J.; Uusitalo, J.; Häkkilä, T.; Kontu, A.; Pulliainen, J.; Praks, J. Investigation of cryosphere processes in the boreal forest zone using ground-based SAR. In Proceedings of the 2022 52nd European Microwave Conference (EuMC), Milan, Italy, 27–29 September 2022; pp. 83–86.

- Mo, Y.; Lai, T.; Wang, Q.; Huang, H. Modeling and compensation for repositioning error in discontinuous GBSAR monitoring. IEEE Geosci. Remote Sens. Lett. 2023, 20, 4012705.

- Nie, Q.; Sun, B.; Li, Z. Resolution analysis of sector scan GB-SAR for wide landslides monitoring. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 1393–1396.

- Deng, Y.; Tian, W.; Xiao, T.; Hu, C.; Yang, H. High-Quality Pixel Selection Applied for Natural Scenes in GB-SAR Interferometry. Remote Sens. 2021, 13, 1617.

- Chan, Y.; Koo, V.; Hii, W.H.; Lim, C. A Ground-Based Interferometric Synthetic Aperture Radar Design and Experimental Study for Surface Deformation Monitoring. IEEE Aerosp. Electron. Syst. Mag. 2021, 36, 4–15.

- Leva, D.; Nico, G.; Tarchi, D.; Fortuny-Guasch, J.; Sieber, A.J. Temporal analysis of a landslide by means of a ground-based SAR interferometer. IEEE Trans. Geosci. Remote Sens. 2003, 41, 745–752.

- Zhan, D.; Yu, L.; Xiao, J.; Chen, T. Multi-camera and structured-light vision system (MSVS) for dynamic high-accuracy 3D measurements of railway tunnels. Sensors 2015, 15, 8664–8684.

- Zhu, Y.; Xu, B.; Li, Z.; Li, J.; Hou, J.; Mao, W. Joint Estimation of Ground Displacement and Atmospheric Model Parameters in Ground-Based Radar. Remote Sens. 2023, 15, 1765.

- Sun, H.; Zhang, Q.; Zhao, C.; Yang, C.; Sun, Q.; Chen, W. Monitoring land subsidence in the southern part of the lower Liaohe plain, China with a multi-track PS-InSAR technique. Remote Sens. Environ. 2017, 188, 73–84.

- Xue, F.; Lv, X.; Dou, F.; Yun, Y. A review of time-series interferometric SAR techniques: A tutorial for surface deformation analysis. IEEE Geosci. Remote Sens. Mag. 2020, 8, 22–42.

- Wang, P.; Xing, C.; Pan, X.; Zhou, X.; Shi, B. Microdeformation monitoring by permanent scatterer GB-SAR interferometry based on image subset series with short temporal baselines: The Geheyan Dam case study. Measurement 2021, 184, 109944.

- Takahashi, K.; Matsumoto, M.; Sato, M. Continuous observation of natural-disaster-affected areas using ground-based SAR interferometry. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2013, 6, 1286–1294.

- Kang, X.; Zhu, J.; Geng, L.Y. Application of InSAR Technique to Monitor Time-series Displacements of Transmission Towers Located in Mining Area. Electr. Power Surv. Des. 2017, 2, 11–14.

- Mo, Y.; Lai, T.; Wang, Q.; Huang, H. Study on Repositioning Error Model in GBSAR Discontinuous Observation for Building Deformation Monitoring. In Proceedings of the IGARSS 2023–2023 IEEE International Geoscience and Remote Sensing Symposium, Pasadena, CA, USA, 16–21 July 2023; pp. 2199–2202.

- Meinan, Z.; Yixuan, L.; Kazhong, D.; Chenliang, Z.; Jun, F. Monitoring and prediction of railway deformation based on DinSAR and probability integral method. Bull. Surv. Mapp. 2017, 106.

- Borah, S.B.; Chatterjee, R.S.; Thapa, S. Detection of underground mining induced land subsidence using Differential Interferometric SAR (D-InSAR) in Jharia coalfields. Adbu J. Eng. Technol. 2017, 6.

- Lin, Y.; Liu, Y.; Wang, Y.; Ye, S.; Zhang, Y.; Li, Y.; Li, W.; Qu, H.; Hong, W. Frequency domain panoramic imaging algorithm for ground-based ArcSAR. Sensors 2020, 20, 7027.

- Deng, Y.; Hu, C.; Tian, W.; Zhao, Z. 3-D deformation measurement based on three GB-MIMO radar systems: Experimental verification and accuracy analysis. IEEE Geosci. Remote Sens. Lett. 2020, 18, 2092–2096.

- Jiang, Z.; Kemao, Q.; Miao, H.; Yang, J.; Tang, L. Path-independent digital image correlation with high accuracy, speed and robustness. Opt. Lasers Eng. 2015, 65, 93–102.

- Yang, D.; Buckley, S.M. Estimating high-resolution atmospheric phase screens from radar interferometry data. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3117–3128.

- Osmanoğlu, B.; Sunar, F.; Wdowinski, S.; Cabral-Cano, E. Time series analysis of InSAR data: Methods and trends. ISPRS-J. Photogramm. Remote Sens. 2016, 115, 90–102.

- Baffelli, S.; Frey, O.; Hajnsek, I. Geostatistical analysis and mitigation of the atmospheric phase screens in Ku-band terrestrial radar interferometric observations of an Alpine glacier. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7533–7556.

- Nico, G.; Cifarelli, G.; Miccoli, G.; Soccodato, F.; Feng, W.; Sato, M.; Miliziano, S.; Marini, M. Measurement of pier deformation patterns by ground-based SAR interferometry: Application to a bollard pull trial. IEEE J. Ocean. Eng. 2018, 43, 822–829.

- Cheng, Y.; Huang, H.; Lai, T.; Ou, P. An Image Fusion-Based Multi-Target Discrimination Method in Minimum Resolution Cell for Ground-Based Synthetic Aperture Radar. In Proceedings of the 2023 4th International Seminar on Artificial Intelligence, Networking and Information Technology (AINIT), Nanjing, China, 16–18 June 2023; pp. 637–641.

- Eremin, A.; Lyubutin, P.; Panin, S.; Sunder, R. Application of digital image correlation and Williams series approximation to characterize mode I stress intensity factor. Acta Mech. 2022, 233, 5089–5104.

- Rokoš, O.; Peerlings, R.; Hoefnagels, J.; Geers, M. Integrated digital image correlation for micro-mechanical parameter identification in multiscale experiments. Int. J. Solids Struct. 2023, 267, 112130.

- Enomoto, K.; Sakurada, K.; Wang, W.; Kawaguchi, N.; Matsuoka, M.; Nakamura, R. Image translation between SAR and optical imagery with generative adversarial nets. In Proceedings of the IGARSS 2018–2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 1752–1755.

- Hughes, L.H.; Marcos, D.; Lobry, S.; Tuia, D.; Schmitt, M. A deep learning framework for matching of SAR and optical imagery. ISPRS-J. Photogramm. Remote Sens. 2020, 169, 166–179.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).