Predictions of Aeroengines’ Infrared Radiation Characteristics Based on HKELM Optimized by the Improved Dung Beetle Optimizer

Abstract

1. Introduction

2. Principles and Modeling

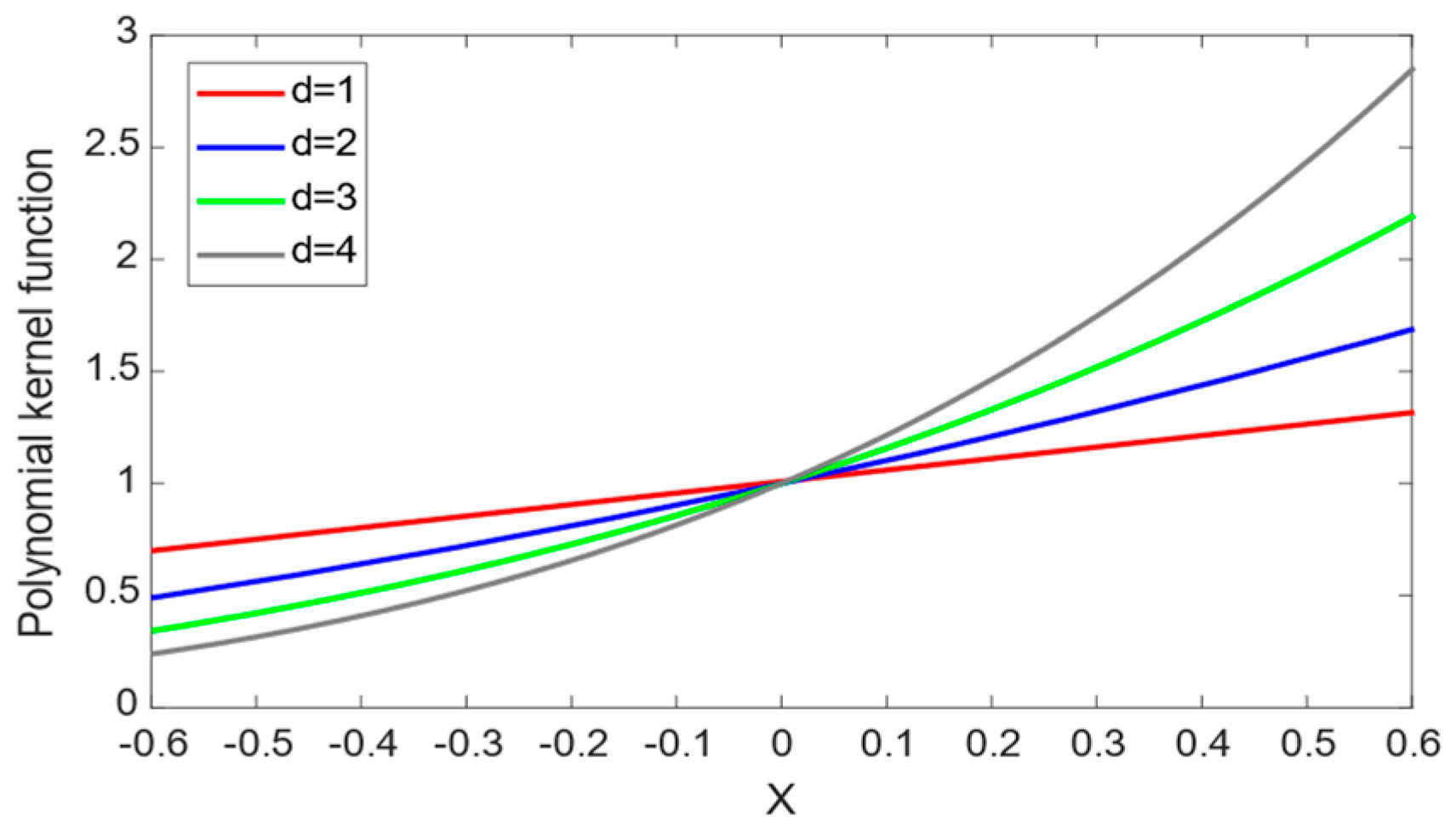

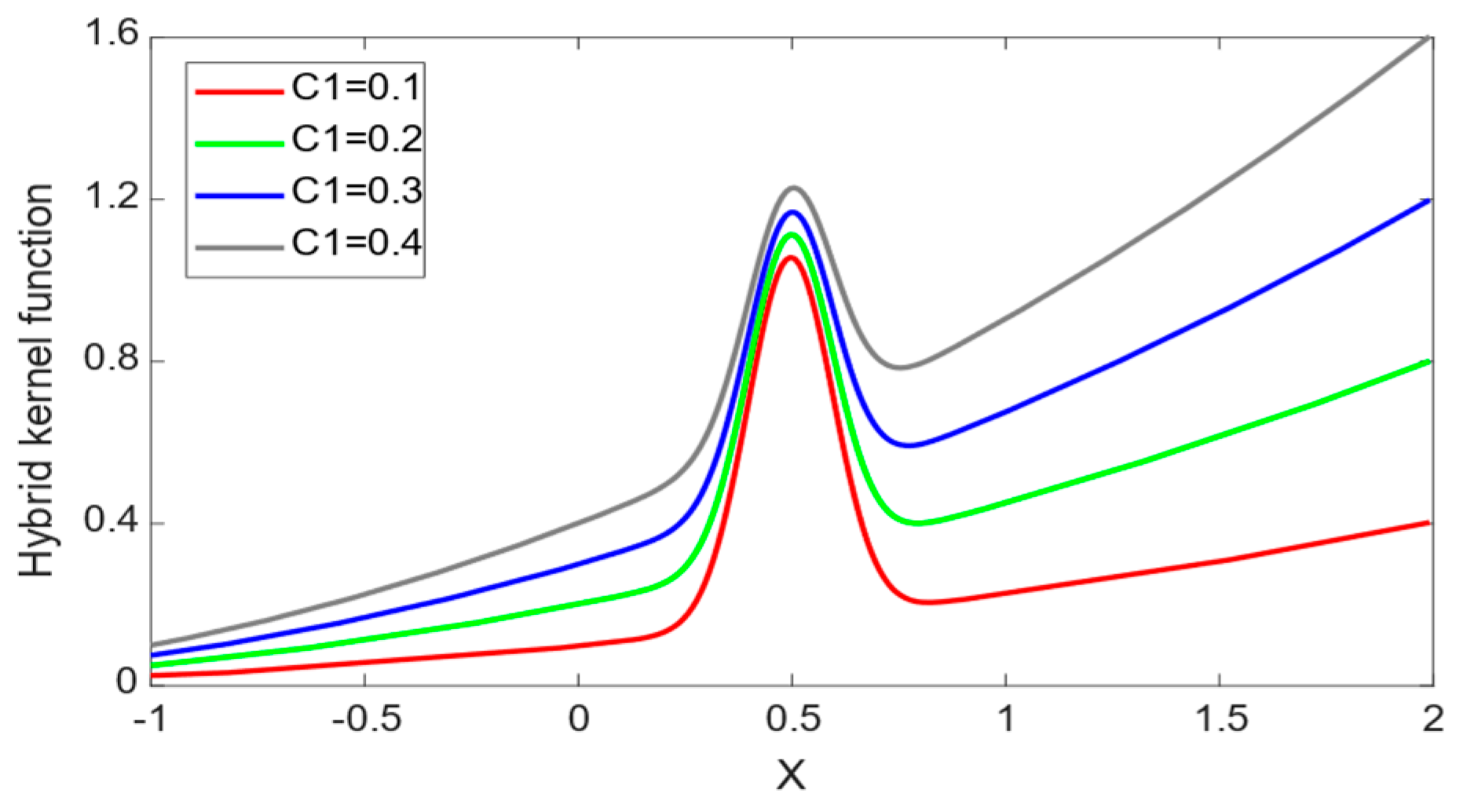

2.1. Principle of the Hybrid Kernel Extreme Learning Machine

2.2. Principle of the Dung Beetle Optimizer

2.3. Improvement of the DBO

2.3.1. Levy Flight Strategy

2.3.2. Variable Spiral Strategy

- (1) Initialize the IDBO algorithm’s parameters and the dung beetle swarm.

- (2) Calculate the fitness values of all agents according to the objective function.

- (3) Update the positions of the ball-rolling dung beetles using Equations (13) and (14), of the thieves using Equation (20), of the brood balls using Equation (26), and of the small dung beetles using Equation (27).

- (4) Check whether each agent lies outside the search boundary.

- (5) Re-evaluate the fitness value of the current optimal solution.

- (6) Repeat the preceding steps until the termination criterion is satisfied.
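
The control flow of steps (1) to (6) can be sketched as a generic swarm loop. This is only a minimal sketch under stated assumptions: the per-role updates of Equations (13), (14), (20), (26), and (27) are not reproduced here, so a placeholder move toward the current best agent stands in for them. The names `sphere`, `clip`, and `optimize`, the greedy acceptance rule, and clipping as the boundary treatment are all hypothetical choices made for illustration.

```python
import random


def sphere(x):
    """Toy objective (sum of squares); a stand-in for the real fitness function."""
    return sum(v * v for v in x)


def clip(x, lower, upper):
    """Step (4): pull any coordinate that left the search bounds back inside."""
    return [min(max(v, lower), upper) for v in x]


def optimize(objective, dim=5, pop_size=30, max_iter=200,
             lower=-10.0, upper=10.0, seed=0):
    rng = random.Random(seed)

    # Step (1): initialize the settings and the swarm.
    swarm = [[rng.uniform(lower, upper) for _ in range(dim)]
             for _ in range(pop_size)]

    # Step (2): evaluate the fitness of every agent.
    fitness = [objective(x) for x in swarm]
    best_idx = min(range(pop_size), key=fitness.__getitem__)
    best_x, best_f = list(swarm[best_idx]), fitness[best_idx]
    history = [best_f]

    # Step (6): repeat until the termination criterion (iteration budget) is met.
    for t in range(max_iter):
        shrink = 1.0 - t / max_iter  # noise scale decays over the run
        for i in range(pop_size):
            # Step (3): PLACEHOLDER update, not the paper's equations.
            # Each agent moves a random fraction toward the best agent
            # and receives shrinking Gaussian noise.
            candidate = [xi + rng.random() * (bi - xi) + shrink * rng.gauss(0.0, 1.0)
                         for xi, bi in zip(swarm[i], best_x)]

            # Step (4): handle agents that left the search space.
            candidate = clip(candidate, lower, upper)

            # Step (5): re-evaluate and update the current optimum.
            f = objective(candidate)
            if f < fitness[i]:  # greedy acceptance (assumption)
                swarm[i], fitness[i] = candidate, f
            if f < best_f:
                best_x, best_f = list(candidate), f
        history.append(best_f)

    return best_x, best_f, history


if __name__ == "__main__":
    x, f, hist = optimize(sphere)
    print("best fitness:", f)
```

In a real implementation, the placeholder in step (3) would be replaced by one update rule per agent role, and `history` would supply the data for a convergence curve.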

3. IDBO Algorithm Performance Test

3.1. Test Functions and Parameter Settings

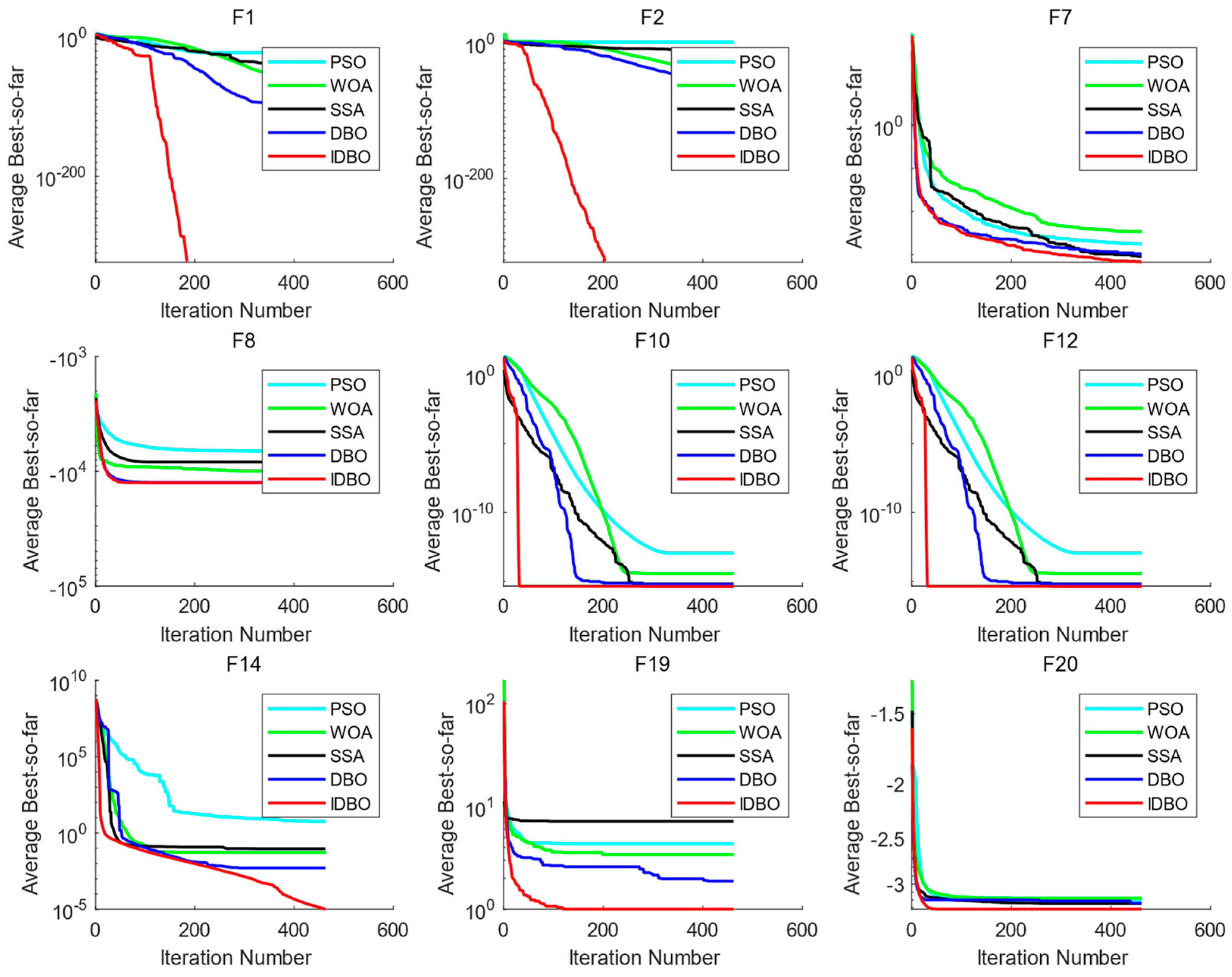

3.2. Optimization Capability of IDBO (Functions F1–F23)

3.3. Comparative Analysis of the Algorithms’ Convergence Curves

3.4. Statistical Analysis: Rank Sum Test

4. Practical Application and Analysis of the Results

4.1. Data Preparation

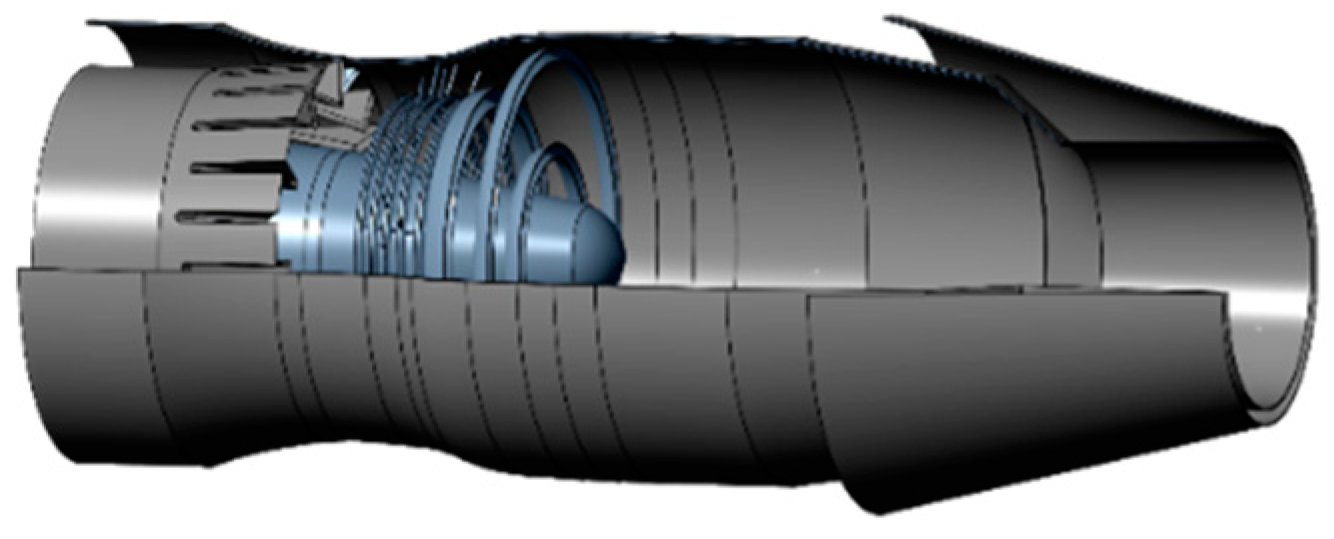

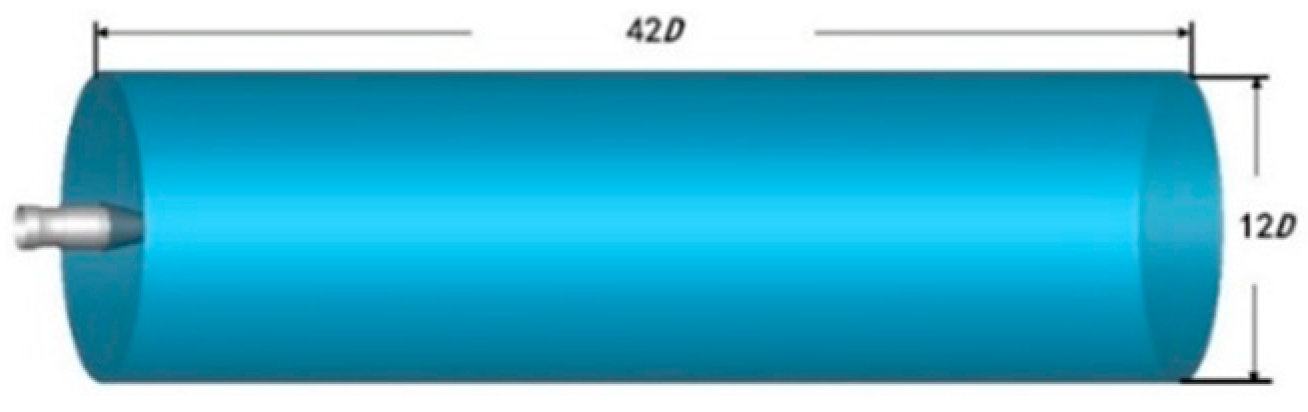

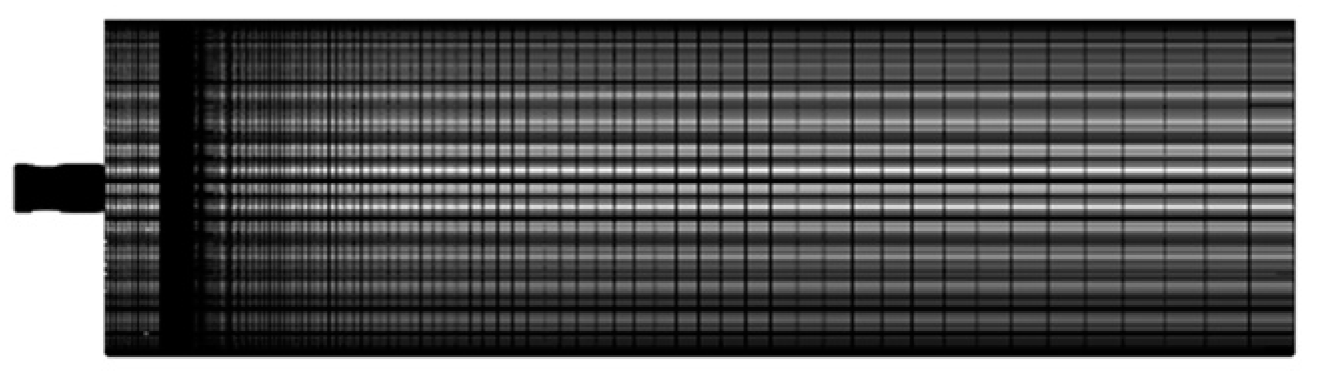

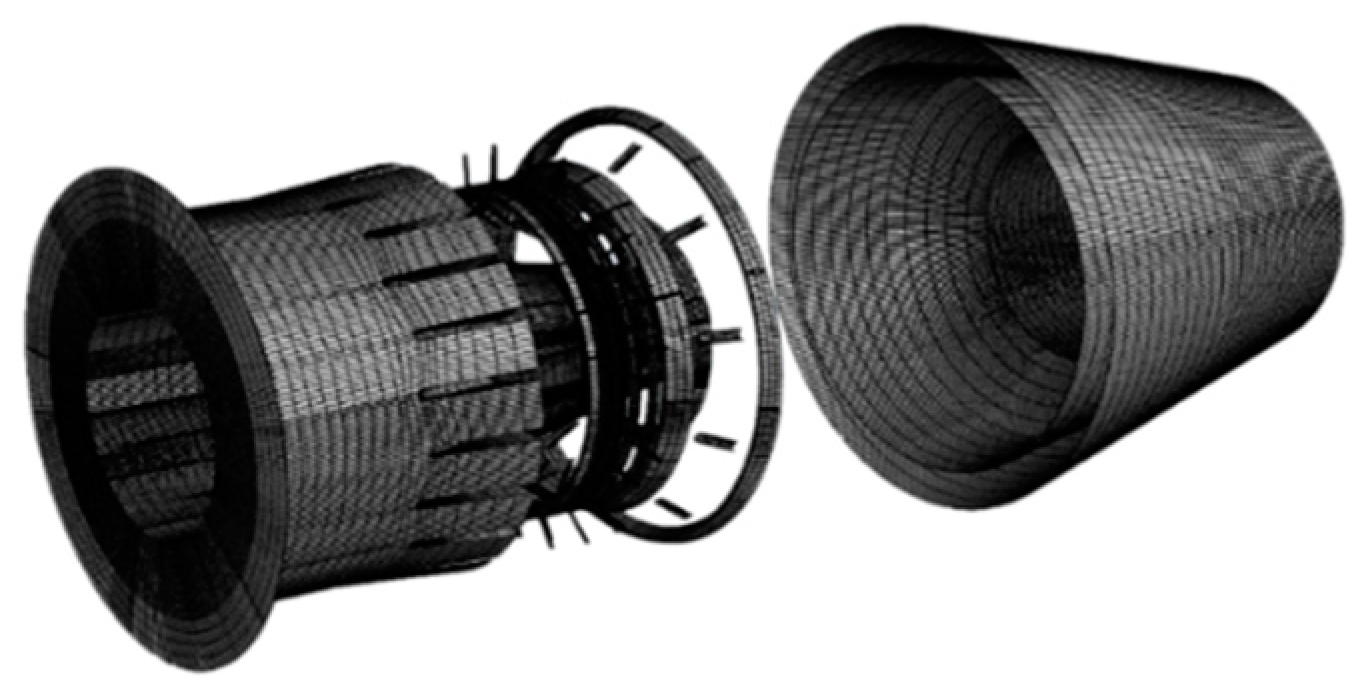

4.1.1. Flow Field Calculation

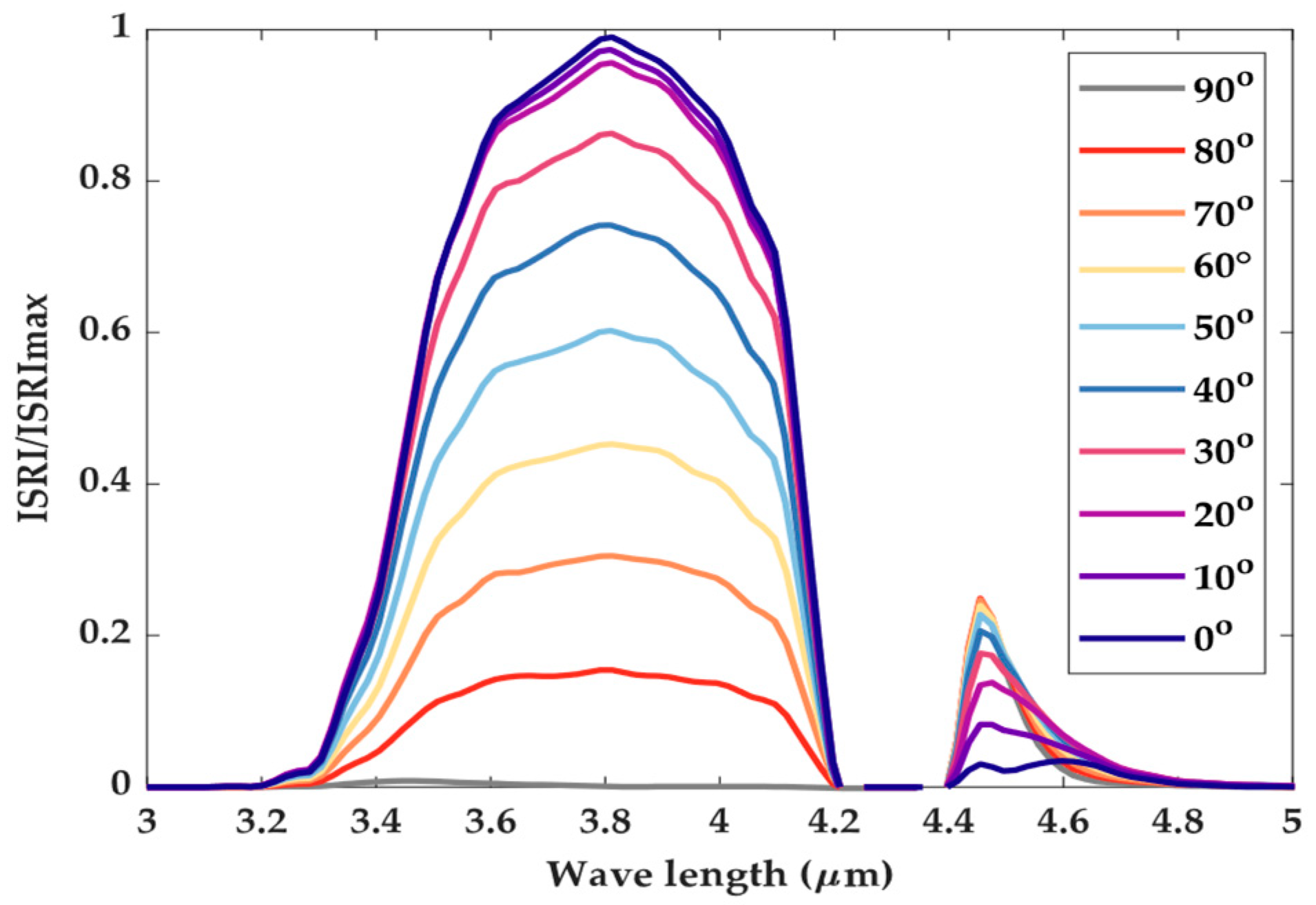

4.1.2. Calculation of the Characteristics of Infrared Radiation

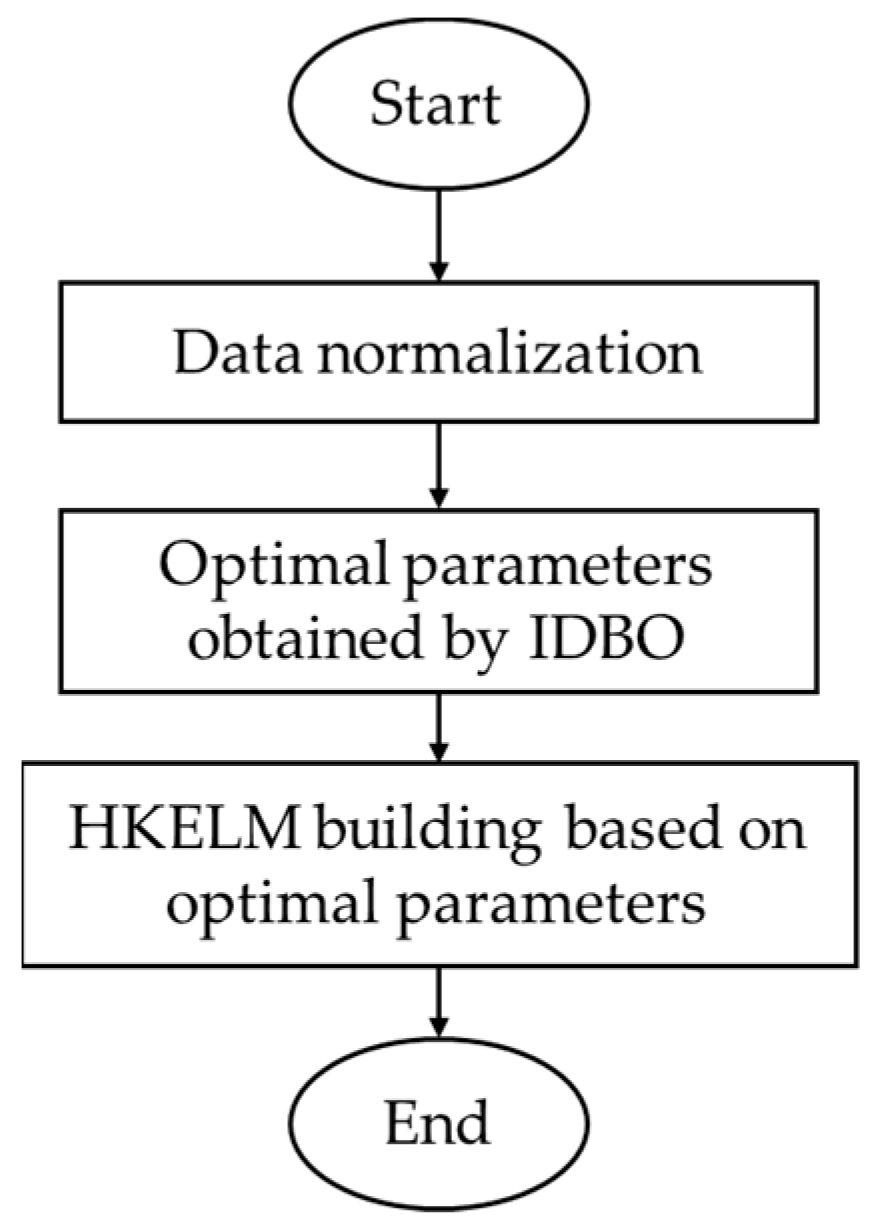

4.2. IDBO-HKELM Prediction Flow

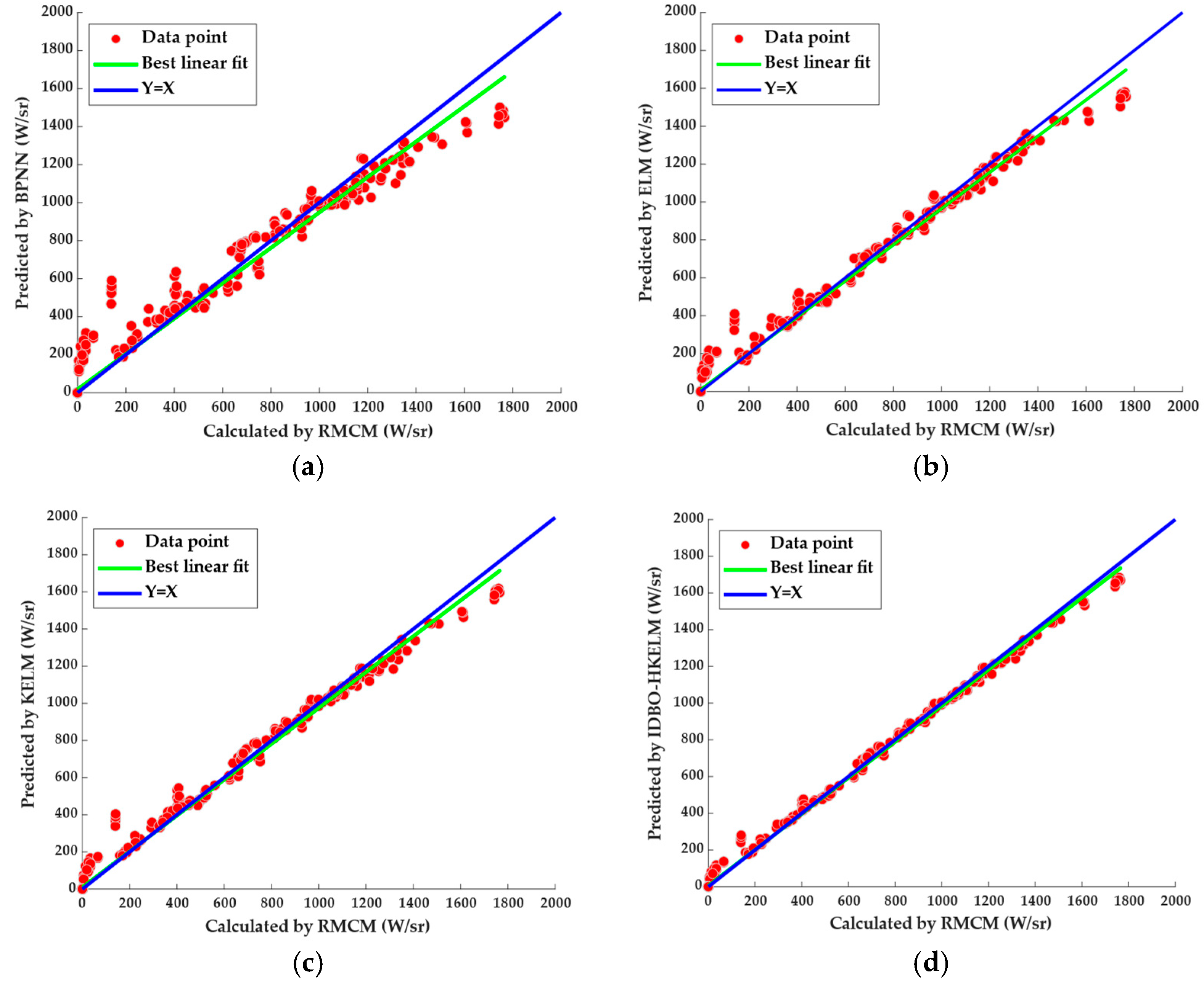

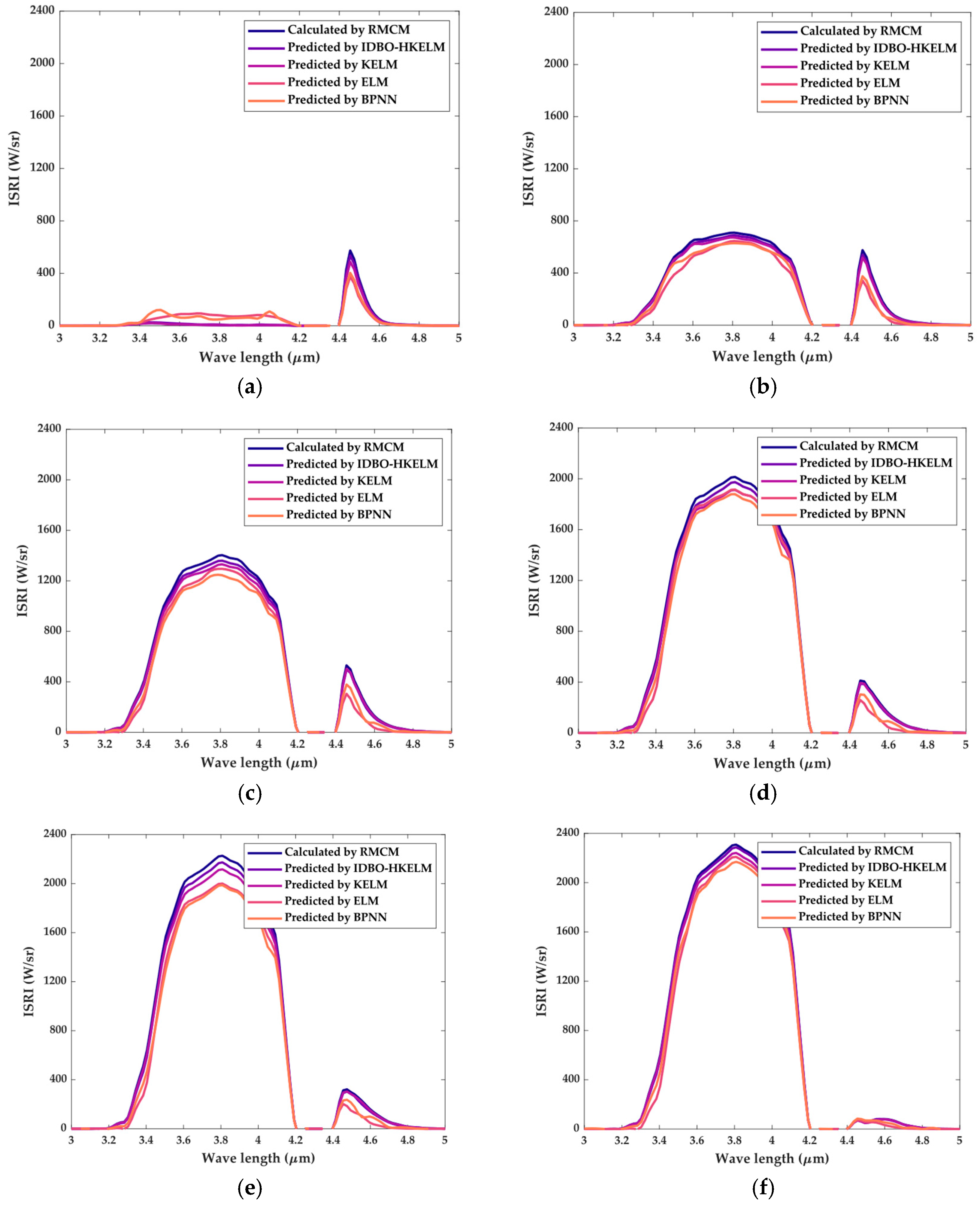

4.3. Comparative Analysis of the Models’ Prediction Effect

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| F | IDBO | DBO | SSA | WOA | PSO |
|---|---|---|---|---|---|
| F1 | 0.00 | 1.67 × 10^−112 | 2.24 × 10^−87 | 1.35 × 10^−73 | 1.96 × 10^−27 |
| | (0.00) | (8.79 × 10^−112) | (4.80 × 10^−87) | (5.02 × 10^−73) | (5.48 × 10^−27) |
| F2 | 0.00 | 3.41 × 10^−52 | 1.35 × 10^−45 | 1.35 × 10^−52 | 8.03 × 10^−17 |
| | (0.00) | (1.86 × 10^−51) | (1.30 × 10^−45) | (3.38 × 10^−52) | (5.28 × 10^−17) |
| F3 | 1.20 × 10^−99 | 8.82 × 10^−57 | 4.57 × 10^−27 | 2.08 × 10^−22 | 1.03 × 10^−5 |
| | (6.60 × 10^−99) | (4.83 × 10^−56) | (2.08 × 10^−26) | (8.61 × 10^−22) | (2.22 × 10^−5) |
| F4 | 2.19 × 10^−66 | 1.00 × 10^−53 | 1.22 × 10^−26 | 2.73 × 10^−37 | 5.26 × 10^−7 |
| | (1.19 × 10^−65) | (5.50 × 10^−53) | (6.68 × 10^−26) | (3.32 × 10^−37) | (4.51 × 10^−7) |
| F5 | 2.86 × 10^1 | 2.57 × 10^1 | 2.59 × 10^1 | 2.78 × 10^1 | 2.71 × 10^1 |
| | (3.20 × 10^−1) | (2.60 × 10^−1) | (3.70 × 10^−1) | (5.40 × 10^−1) | (7.85 × 10^−1) |
| F6 | 1.40 × 10^−2 | 1.05 × 10^−2 | 7.32 × 10^−1 | 4.44 × 10^−1 | 1.89 × 10^3 |
| | (5.27 × 10^−2) | (5.47 × 10^−2) | (3.90 × 10^−1) | (2.52 × 10^−1) | (8.87 × 10^1) |
| F7 | 1.01 × 10^−3 | 8.77 × 10^−4 | 6.64 × 10^−4 | 3.36 × 10^−3 | 1.68 × 10^−3 |
| | (7.61 × 10^−4) | (7.94 × 10^−4) | (3.41 × 10^−4) | (3.03 × 10^−3) | (1.01 × 10^−3) |
| F8 | −1.26 × 10^4 | −1.25 × 10^4 | −8.57 × 10^3 | −1.05 × 10^3 | −7.53 × 10^3 |
| | (1.14) | (6.37 × 10^2) | (6.76 × 10^2) | (1.81 × 10^2) | (9.28 × 10^2) |
| F9 | 0.00 | 0.00 | 0.00 | 3.78 × 10^−15 | 1.20 |
| | (0.00) | (0.00) | (0.00) | (1.44 × 10^−14) | (2.37) |
| F10 | 4.44 × 10^−16 | 4.44 × 10^−16 | 4.44 × 10^−16 | 3.16 × 10^−15 | 7.31 |
| | (0.00) | (0.00) | (0.00) | (2.58 × 10^−15) | (5.43) |
| F11 | 0.00 | 0.00 | 0.00 | 3.36 × 10^−3 | 4.63 × 10^−3 |
| | (0.00) | (0.00) | (0.00) | (7.25 × 10^−3) | (2.53 × 10^−2) |
| F12 | 1.33 × 10^−4 | 6.86 × 10^−4 | 5.03 × 10^−2 | 2.84 × 10^−2 | 4.01 |
| | (6.77 × 10^−4) | (2.41 × 10^−3) | (2.45 × 10^−2) | (4.33 × 10^−2) | (1.93) |
| F13 | 2.63 × 10^−1 | 7.50 × 10^−1 | 6.43 × 10^−1 | 5.30 × 10^−1 | 1.23 × 10^1 |
| | (1.63 × 10^−1) | (5.16 × 10^−1) | (2.42 × 10^−1) | (2.89 × 10^−1) | (8.60) |
| F14 | 9.98 × 10^−1 | 1.88 | 7.16 | 3.41 | 4.36 |
| | (0.00) | (2.14) | (5.64) | (3.58) | (3.84) |
| F15 | 3.07 × 10^−4 | 8.07 × 10^−4 | 3.48 × 10^−4 | 7.02 × 10^−3 | 1.09 × 10^−3 |
| | (4.57 × 10^−7) | (4.40 × 10^−4) | (1.74 × 10^−4) | (8.61 × 10^−3) | (2.22 × 10^−3) |
| F16 | −1.03 | −1.03 | −1.03 | −1.03 | −1.03 |
| | (1.43 × 10^−5) | (6.11 × 10^−16) | (5.13 × 10^−16) | (4.81 × 10^−9) | (2.79 × 10^−5) |
| F17 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 |
| | (6.48 × 10^−16) | (1.80 × 10^−5) | (1.23 × 10^−5) | (1.71 × 10^−6) | (1.45 × 10^−5) |
| F18 | 3.00 | 3.00 | 3.00 | 3.00 | 3.00 |
| | (1.18 × 10^−15) | (2.44 × 10^−15) | (1.01 × 10^−7) | (1.35 × 10^−6) | (1.13 × 10^−5) |
| F19 | −3.85 | −3.81 | −3.81 | −3.81 | −3.81 |
| | (2.68 × 10^−15) | (3.39 × 10^−3) | (2.26 × 10^−15) | (1.84 × 10^−2) | (2.72 × 10^−3) |
| F20 | −3.29 | −3.24 | −3.27 | −3.26 | −3.18 |
| | (1.49 × 10^−7) | (7.53 × 10^−2) | (5.99 × 10^−2) | (9.17 × 10^−2) | (1.53 × 10^−1) |
| F21 | −8.89 | −8.46 | −1.01 × 10^1 | −7.69 | −9.61 |
| | (2.36) | (2.42) | (4.89 × 10^−3) | (2.92) | (1.54) |
| F22 | −8.11 | −1.03 × 10^1 | −1.02 × 10^1 | −8.08 | −1.02 × 10^1 |
| | (6.60 × 10^−3) | (2.65 × 10^−1) | (9.62 × 10^−1) | (2.88) | (5.06 × 10^−1) |
| F23 | −8.67 | −1.05 × 10^1 | −1.01 × 10^1 | −7.75 | −1.02 × 10^1 |
| | (7.73 × 10^−3) | (2.55) | (1.74) | (3.10) | (1.57) |

| F | IDBO vs. DBO | IDBO vs. SSA | IDBO vs. WOA | IDBO vs. PSO |
|---|---|---|---|---|
| F1 | 1.21 × 10^−12 | 1.21 × 10^−12 | 1.21 × 10^−12 | 1.21 × 10^−12 |
| F2 | 1.21 × 10^−12 | 1.21 × 10^−12 | 1.21 × 10^−12 | 1.21 × 10^−12 |
| F3 | 1.65 × 10^−9 | 1.36 × 10^−11 | 6.47 × 10^−12 | 6.47 × 10^−12 |
| F4 | 3.54 × 10^−10 | 1.96 × 10^−10 | 6.47 × 10^−12 | 6.47 × 10^−12 |
| F5 | 3.01 × 10^−11 | 3.01 × 10^−11 | 1.38 × 10^−6 | 4.18 × 10^−9 |
| F6 | 3.01 × 10^−11 | 3.01 × 10^−11 | 3.01 × 10^−11 | 3.01 × 10^−11 |
| F7 | 5.60 × 10^−2 | 8.70 × 10^−1 | 3.36 × 10^−6 | 7.73 × 10^−6 |
| F8 | 1.85 × 10^−2 | 3.01 × 10^−11 | 3.01 × 10^−11 | 3.01 × 10^−11 |
| F9 | NaN | NaN | 1.61 × 10^−2 | 1.09 × 10^−12 |
| F10 | NaN | NaN | 7.20 × 10^−7 | 1.20 × 10^−12 |
| F11 | NaN | NaN | 1.10 × 10^−2 | 1.21 × 10^−12 |
| F12 | 1.95 × 10^−3 | 3.01 × 10^−11 | 3.01 × 10^−11 | 3.01 × 10^−11 |
| F13 | 1.68 × 10^−4 | 6.52 × 10^−8 | 6.76 × 10^−5 | 3.01 × 10^−11 |
| F14 | 1.24 × 10^−5 | 5.69 × 10^−9 | 1.21 × 10^−12 | 1.21 × 10^−12 |
| F15 | 4.19 × 10^−10 | 1.86 × 10^−6 | 3.01 × 10^−11 | 2.37 × 10^−10 |
| F16 | 1.13 × 10^−11 | 7.57 × 10^−12 | 3.01 × 10^−11 | 2.28 × 10^−1 |
| F17 | NaN | NaN | 1.21 × 10^−12 | 1.21 × 10^−12 |
| F18 | 5.50 × 10^−4 | 3.60 × 10^−10 | 1.77 × 10^−11 | 1.77 × 10^−11 |
| F19 | 6.41 × 10^−5 | 4.10 × 10^−12 | 6.70 × 10^−3 | 3.80 × 10^−7 |
| F20 | 2.00 × 10^−3 | 5.10 × 10^−3 | 1.09 × 10^−11 | 1.09 × 10^−11 |
| F21 | 4.13 × 10^−3 | 1.17 × 10^−2 | 6.73 × 10^−6 | 5.10 × 10^−3 |
| F22 | 4.13 × 10^−3 | 1.02 × 10^−6 | 8.29 × 10^−6 | 8.12 × 10^−4 |
| F23 | 3.90 × 10^−2 | 5.18 × 10^−7 | 5.26 × 10^−4 | 8.00 × 10^−3 |
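
To make the p-values in the table above easier to interpret, the following is a minimal sketch of a two-sided Wilcoxon rank-sum test using the normal approximation with a tie correction. It is an illustration only, not necessarily the routine used to produce the table; the function name `rank_sum_p_value` and the sample data are hypothetical. It also shows one plausible origin of NaN entries: when every pooled value is identical (for instance, two algorithms that both return exactly 0.00 on every run), the variance of the rank sum is zero and the statistic is undefined.

```python
import math


def rank_sum_p_value(a, b):
    """Two-sided rank-sum p-value (normal approximation, tie-corrected).

    Returns NaN when all pooled observations are identical, because the
    variance of the rank sum is then zero and z is undefined.
    """
    n1, n2 = len(a), len(b)
    n = n1 + n2
    # Pool both samples, remembering which group each value came from.
    pooled = sorted((v, g) for g, sample in enumerate((a, b)) for v in sample)

    rank_sum_a = 0.0  # sum of (average) ranks of sample a
    tie_term = 0.0    # sum of t^3 - t over groups of tied values
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0  # mean of the 1-based ranks i+1 .. j
        t = j - i
        tie_term += t ** 3 - t
        rank_sum_a += avg_rank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        i = j

    mean = n1 * (n + 1) / 2.0
    var = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var <= 0.0:
        return float("nan")
    z = (rank_sum_a - mean) / math.sqrt(var)
    return math.erfc(abs(z) / math.sqrt(2.0))


if __name__ == "__main__":
    identical = rank_sum_p_value([0.0] * 30, [0.0] * 30)
    separated = rank_sum_p_value(list(range(30)), list(range(100, 130)))
    print("identical samples:", identical)
    print("fully separated samples:", separated)
```

Under the usual 5% significance level, a p-value below 0.05 indicates a statistically significant difference between the two algorithms on that function, while a NaN means the test could not distinguish the two samples at all.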

| Parameters | | | | | |
|---|---|---|---|---|---|
| Optimal value | 21.37 | 0.23 | 2 | 68.92 | 85.09 |

| Models | MAE | RMSE |
|---|---|---|
| BPNN | 27.74 | 69.09 |
| ELM | 15.44 | 41.05 |
| KELM | 14.72 | 36.93 |
| IDBO-HKELM | 8.33 | 20.64 |
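
For reference, the two error metrics reported in the table above follow their standard definitions: the mean absolute error (MAE) averages the absolute residuals, and the root mean square error (RMSE) takes the square root of the mean squared residual. The sketch below uses made-up numbers, not data from this study, and the function names `mae` and `rmse` are illustrative.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error: average of |true - predicted|."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error: sqrt of the average squared residual."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


if __name__ == "__main__":
    # Hypothetical targets and predictions, for illustration only.
    y_true = [10.0, 20.0, 30.0, 40.0]
    y_pred = [12.0, 18.0, 33.0, 40.0]
    print("MAE :", mae(y_true, y_pred))
    print("RMSE:", rmse(y_true, y_pred))
```

Because squaring weights large residuals more heavily, RMSE is never smaller than MAE on the same predictions, which is consistent with every row of the table.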

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qiao, L.; Chen, L.; Li, Y.; Hua, W.; Wang, P.; Cui, Y. Predictions of Aeroengines’ Infrared Radiation Characteristics Based on HKELM Optimized by the Improved Dung Beetle Optimizer. Sensors 2024, 24, 1734. https://doi.org/10.3390/s24061734
