1. Introduction

The Multi-Objective Optimization Problem (MOP) is widely used to model problems in economics, engineering, and the Internet of Things (IoT). In IoT systems with numerous sensors, related studies primarily focus on tasks such as minimizing request response time and energy consumption [1], developing optimal scheduling strategies to conserve energy [2], and identifying malicious traffic. These tasks must jointly consider factors such as the service response time, workload, and energy consumption of each sensor, and these objectives conflict with one another. Solving multi-objective optimization problems is therefore a critical issue in IoT and in many other applications. Single-objective optimization focuses on just one objective function, so a single best solution attains its optimal value. An MOP, in contrast, involves two or more objectives that usually conflict: improving one objective is very likely to degrade at least one other. Therefore, a set of equally optimal solutions, each representing a different trade-off among all of the objectives, must be computed; this set is the Pareto Optimal Set (PS).

For MOPs, the complexity of the solution set poses challenges for exact algorithms [3]. Because conventional approaches struggle to handle MOPs effectively, heuristic [4] and meta-heuristic algorithms [5] have been explored for improved performance. Notable examples include the Genetic Algorithm (GA), Ant Colony Optimization (ACO), Particle Swarm Optimization (PSO), the Fruit Fly Optimization Algorithm (FOA), and Differential Evolution (DE). More recently, the Multi-Objective Grey Wolf Optimizer (MOGWO) [6], which builds on the Grey Wolf Optimizer (GWO), has emerged as a promising swarm intelligence algorithm that converges faster than its counterparts. MOGWO employs a fixed-size external archive to retain non-dominated solutions and uses a grid-based method to evaluate the Pareto front throughout the optimization process. However, the traditional MOGWO converges slowly in its later stages and is susceptible to local optima.
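To make the archive mechanism concrete, the following is a minimal Python sketch of Pareto dominance and a non-dominated archive update, assuming every objective is minimized. The function names are illustrative, and the overflow rule is a deliberate simplification: MOGWO removes members from crowded grid cells, whereas this sketch drops random members.

```python
import random
from typing import List, Sequence


def dominates(u: Sequence[float], v: Sequence[float]) -> bool:
    """True if u Pareto-dominates v when every objective is minimized."""
    no_worse = all(a <= b for a, b in zip(u, v))
    strictly_better = any(a < b for a, b in zip(u, v))
    return no_worse and strictly_better


def update_archive(archive: List[List[float]], candidates: List[List[float]],
                   capacity: int, rng: random.Random) -> List[List[float]]:
    """Merge candidates into the archive, keeping only non-dominated, unique points.

    Simplification: on overflow, random members are removed, whereas MOGWO
    removes members from the most crowded grid cells.
    """
    pool = archive + candidates
    front: List[List[float]] = []
    for p in pool:
        dominated = any(dominates(q, p) for q in pool)
        if not dominated and p not in front:  # also drop exact duplicates
            front.append(p)
    while len(front) > capacity:
        front.pop(rng.randrange(len(front)))
    return front


if __name__ == "__main__":
    rng = random.Random(0)
    costs = [[1.0, 4.0], [2.0, 2.0], [3.0, 3.0], [4.0, 1.0]]
    print(update_archive([], costs, capacity=10, rng=rng))
    # [[1.0, 4.0], [2.0, 2.0], [4.0, 1.0]]  ([3.0, 3.0] is dominated by [2.0, 2.0])
```

The three surviving points are mutually non-dominated, so together they form the approximation of the Pareto front held in the archive.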

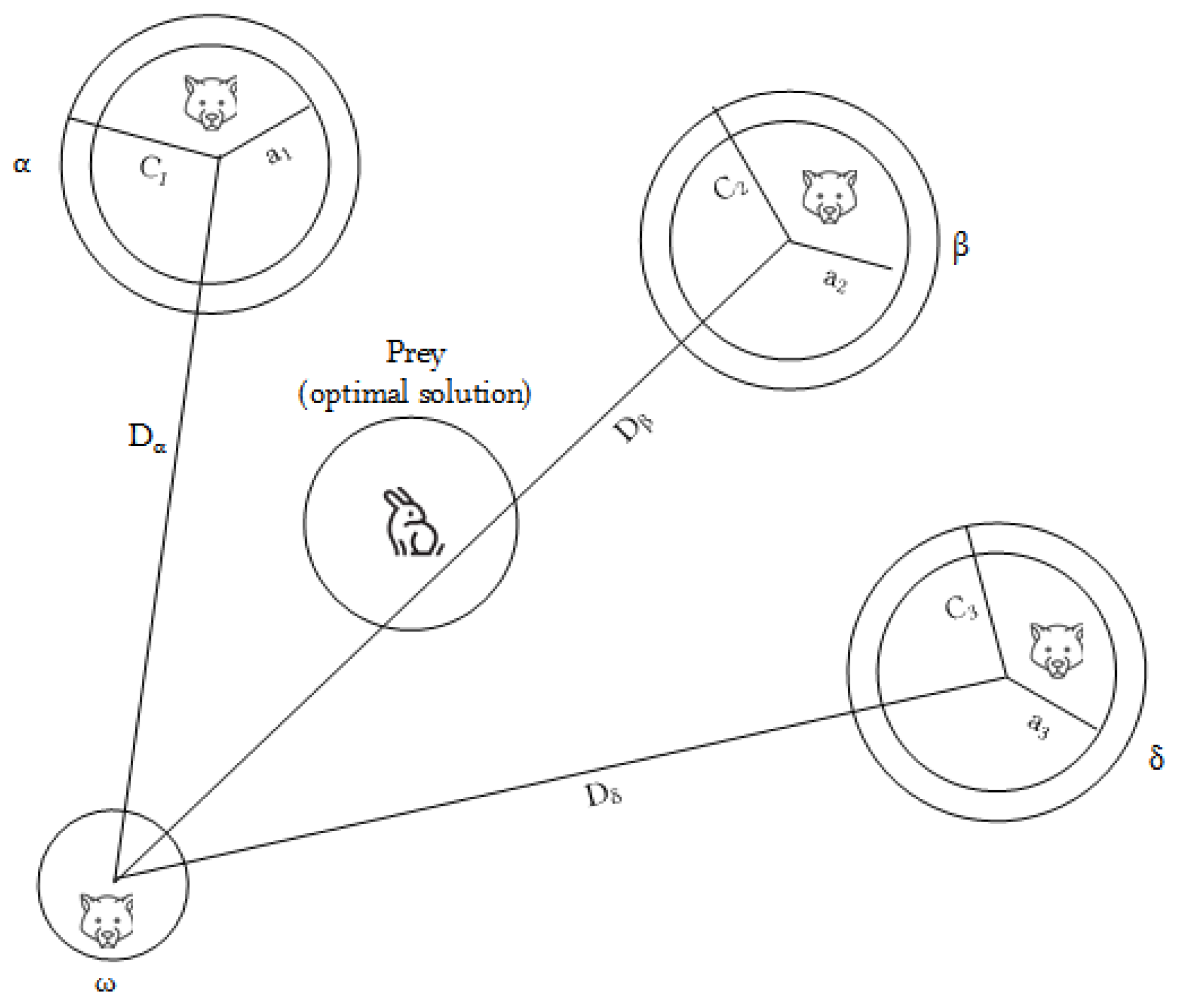

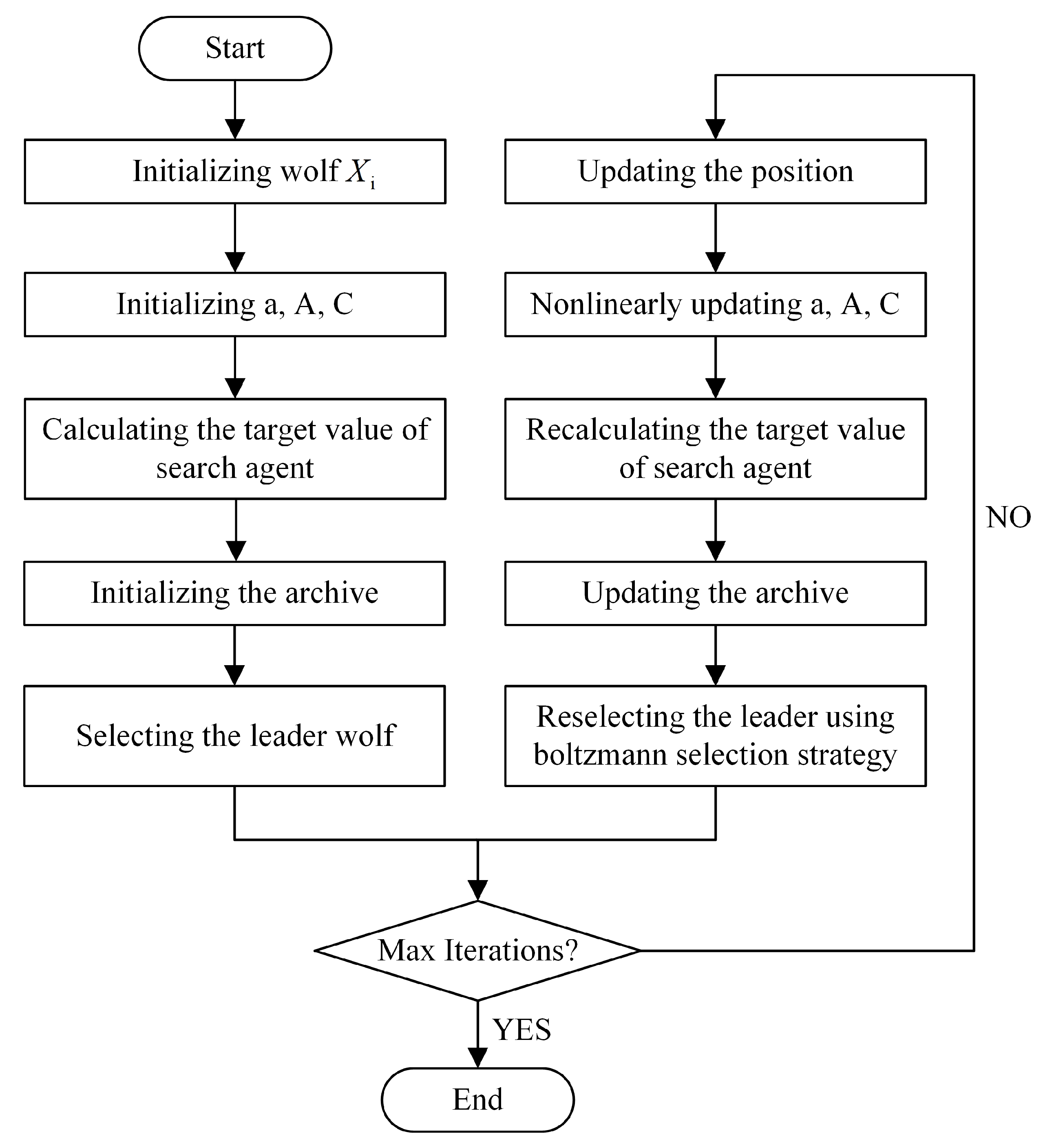

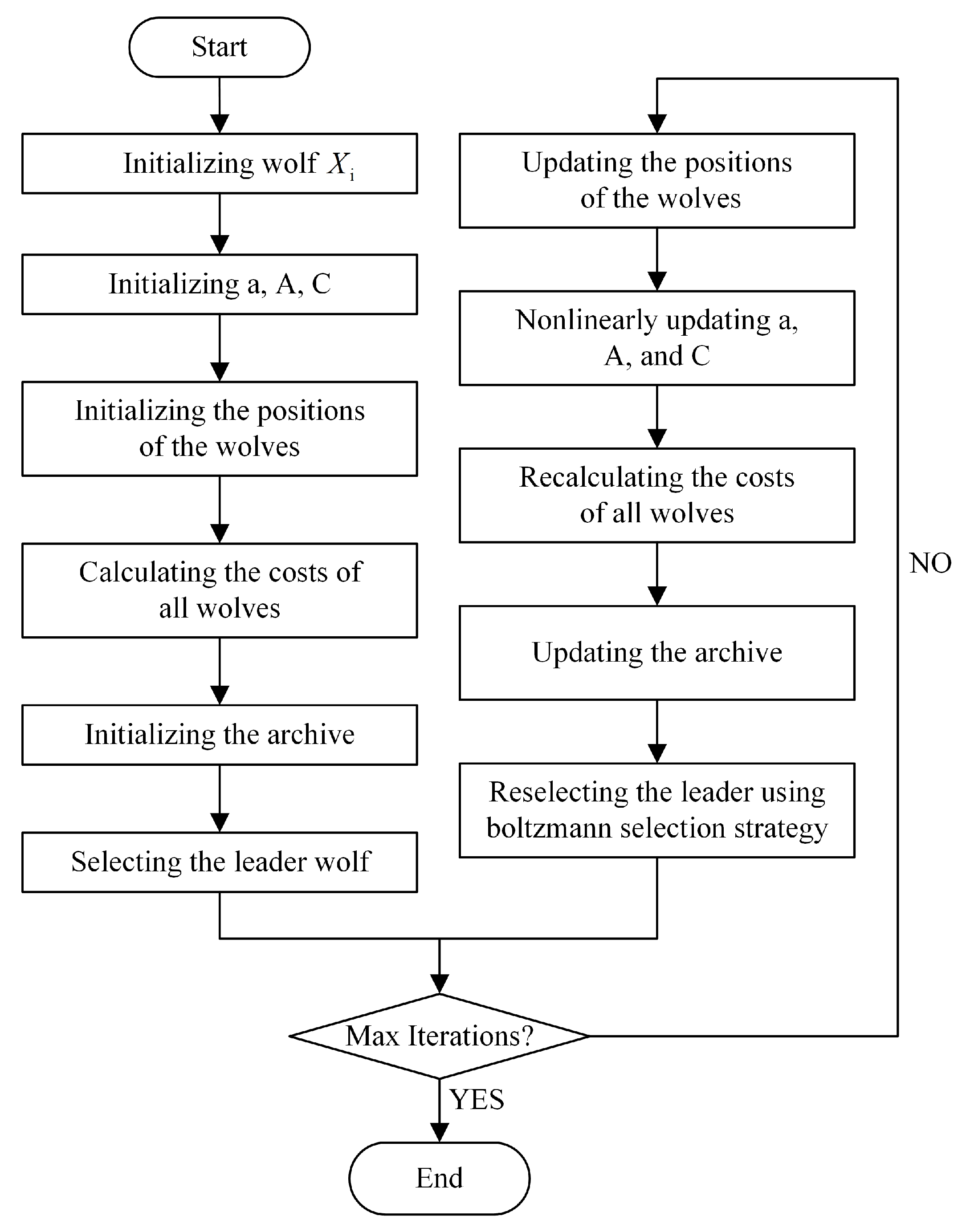

The traditional MOGWO algorithm consists of three primary steps: initializing the wolf pack, updating the positions of the leader wolves and of the entire pack, and obtaining the solution set through repeated iteration. MOGWO exhibits a high degree of randomness during wolf pack initialization. Its search strategy relies predominantly on the values of a and A during iteration, both of which shrink as the number of iterations grows. This tendency makes the algorithm prone to falling into local optima, so the search strategy of MOGWO needs a more effective parameter update rule. In addition, the position of the leader wolf is crucial to the convergence of MOGWO. The primary research focus of this paper is therefore to optimize both the search strategy of MOGWO and its leader wolf selection strategy. We propose a Modified Boltzmann-Based MOGWO, named MBB-MOGWO. MBB-MOGWO replaces the convergence factor used in the wolf position update with a cosine-based variant and adopts a Boltzmann selection strategy to better balance the exploration and exploitation phases. We use multi-objective benchmark functions from CEC2009 [7] and ZDT [8] for the experimental evaluation and compare MBB-MOGWO with four representative algorithms: MOGWO, NSGA-II, MOPSO, and MOEA/D. The experimental results show that MBB-MOGWO overcomes flaws of the traditional algorithms: with these two optimizations, it converges quickly, improves the precision of the solutions, and is much less likely to be trapped in local optima.

Furthermore, within the IoT domain, web service composition can be leveraged to build complex intelligent systems with numerous sensors. By composing services for sensor data collection, device control, and user management, functions such as smart home automation and remote control can be realized. To demonstrate the effectiveness of MBB-MOGWO on real optimization problems, we further apply it to the MOP that arises when composing web service components. The MOP in web service composition is non-linear and high-dimensional. A web service is a platform-independent, loosely coupled, programmable software application [9]. Because a single traditional service cannot meet the demands of complex tasks, web service composition has emerged: a web service system assembles existing web services into a more powerful, value-added service. The number of available services is increasing rapidly, and many services offer similar functions but different quality attributes, or conflict with one another [10]. Users therefore face a dilemma in selecting a suitable web service for each subtask so that the whole web service system runs optimally. Many studies have proposed effective solutions to web service composition problems, as surveyed in [11,12,13,14]. Nevertheless, computing the optimal solution for this composition scenario still merits in-depth study. In this paper, we apply MBB-MOGWO to the MOP in web service composition and use the real data records in the QWS dataset [15] to evaluate the composition results. Compared with four representative algorithms, our MBB-MOGWO-based method achieves higher solution precision in web service composition.

The main contributions of this study are summarized as follows: (1) We propose a Modified Boltzmann-Based MOGWO that optimizes the wolf pack position update strategy of MOGWO, reducing the likelihood of falling into local optima and yielding better solutions. (2) We propose a new leader wolf selection strategy based on Boltzmann selection to improve the convergence speed of the algorithm. (3) Experimental results on several multi-objective test functions show that the proposed method is effective in terms of both the quality of the solutions and the speed at which they are obtained. We further extend the method to the web service composition problem and verify its effectiveness.

The rest of this paper is organized as follows. Related work on MOGWO is discussed in Section 2. Section 3 presents the details of the MBB-MOGWO algorithm. Section 4 evaluates the performance of MBB-MOGWO on common multi-objective benchmark functions. Section 5 integrates MBB-MOGWO into the web service composition problem and evaluates it with the real data records in the QWS dataset. Finally, Section 6 concludes our work and discusses future work.

2. Related Work

Compared with single-objective optimization, an MOP tends to be more complex, because it considers two or more objectives that usually conflict. Dedicated multi-objective optimization algorithms are therefore needed to optimize these objectives simultaneously. Among recently proposed algorithms, MOGWO is one of the most popular, owing to its concise structure and small number of parameters to tune. Existing improvements to MOGWO fall into three categories: improved population initialization, improvements to the search mechanism, and the design of hybrid algorithms. We briefly discuss each below.

2.1. Improved Population Initialization

In MOGWO, the first stage initializes the population, after which the optimal solution set is obtained through stepwise iteration. Population initialization is therefore crucial for MOGWO: the quality of the initial population strongly affects both the global convergence speed and the availability of solutions, and a well-diversified initial population helps the search find the optimal solution. Luo et al. [16] drew on complex-valued encoding, which greatly expands the information capacity of each individual. The genes of a grey wolf can be expressed as Equation (1):

$$x = R + \mathrm{i}\,I \tag{1}$$

where the real part $R$ and the imaginary part $I$ indicate the genes of the grey wolf. The two variables are updated independently, which enhances population diversity. Madhiarasan et al. [17] refined the traditional grey wolf hierarchy by dividing the population into three groups, namely theta (θ), zeta (ζ), and psi (ψ). During the update phase, the worst position in each group is taken into account to reduce convergence time and improve performance. Long et al. [18] introduced the good point set method to improve population initialization. For the same number of points, the point sequence generated by the good point set method is distributed more evenly over the whole feasible region, which yields better individual diversity in the initial population.

The original MOGWO uses simple random initialization, which does not preserve population diversity and easily converges to a local optimum.
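The contrast between random initialization and the good point set method of Long et al. [18] can be illustrated with a short Python sketch. It assumes the common construction in which the generator values are r_j = 2cos(2πj/p), with p the smallest prime satisfying p ≥ 2D + 3 for dimension D, and the i-th point takes the fractional parts of i·r_j. The helper names are hypothetical, and this is not necessarily the exact variant used in [18].

```python
import math
import random
from typing import List, Sequence


def smallest_prime_at_least(n: int) -> int:
    """Smallest prime p with p >= n."""
    def is_prime(k: int) -> bool:
        return k >= 2 and all(k % d for d in range(2, math.isqrt(k) + 1))
    while not is_prime(n):
        n += 1
    return n


def good_point_set(n: int, lb: Sequence[float], ub: Sequence[float]) -> List[List[float]]:
    """n initial wolves spread over the box [lb, ub] via a good point set."""
    dim = len(lb)
    p = smallest_prime_at_least(2 * dim + 3)          # ensures (p - 3) / 2 >= dim
    r = [2 * math.cos(2 * math.pi * j / p) for j in range(1, dim + 1)]
    wolves = []
    for i in range(1, n + 1):
        frac = [(r[j] * i) % 1.0 for j in range(dim)]   # fractional part {i * r_j}
        wolves.append([lb[j] + frac[j] * (ub[j] - lb[j]) for j in range(dim)])
    return wolves


def random_init(n: int, lb: Sequence[float], ub: Sequence[float],
                rng: random.Random) -> List[List[float]]:
    """Plain uniform random initialization, as in the original MOGWO."""
    return [[rng.uniform(lo, hi) for lo, hi in zip(lb, ub)] for _ in range(n)]


if __name__ == "__main__":
    print(good_point_set(5, [0.0, 0.0], [1.0, 1.0]))
    print(random_init(5, [0.0, 0.0], [1.0, 1.0], random.Random(0)))
```

Unlike random_init, good_point_set is deterministic for a given population size and dimension, so its coverage of the search box does not depend on a random seed.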

2.2. Improvements to the Search Mechanism

In the original MOGWO, the parameters a and A are used to update the positions of the grey wolves. Because a decreases linearly, MOGWO has a weak exploration capability and easily becomes trapped in local optima; strong randomness is present only when the wolf positions are initialized. Muangkote et al. [19] employed two different update strategies for the wolves' positions, including a new strategy for calculating the distance vectors in which randomly selected index values are used to update the vectors and thereby increase randomness. Saremi et al. [20] presented a position update method based on Evolutionary Population Dynamics (EPD): in each iteration, the worst individuals are removed and repositioned around the best solutions, such as the α, β, and δ wolves, or at random positions in the search space, which yields better solutions. Malik et al. [21] proposed the Weighted Distance Grey Wolf Optimizer, which modifies the original position update strategy by using the weighted average of the three positions, instead of their simple average, as the new position.
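For reference, the standard GWO position update that these works modify can be sketched in Python as below. It follows the usual GWO equations (A = 2a·r1 − a, C = 2·r2, D = |C·X_leader − X|, candidate X_leader − A·D), averaged over the α, β, and δ leaders; the optional weights argument is a hypothetical hook that mimics a weighted-average variant such as [21], and its values are left to the caller.

```python
import random
from typing import List, Optional, Sequence


def gwo_step(x: Sequence[float], leaders: Sequence[Sequence[float]], a: float,
             rng: random.Random,
             weights: Optional[Sequence[float]] = None) -> List[float]:
    """Standard GWO update of one wolf x guided by the alpha, beta, and delta leaders.

    With weights=None the three candidate positions are simply averaged, as in the
    original GWO; passing weights gives a weighted-average variant (the weight
    values are the caller's assumption).
    """
    if weights is None:
        weights = [1.0 / len(leaders)] * len(leaders)
    new_x = []
    for d, x_d in enumerate(x):
        candidates = []
        for leader in leaders:
            A = 2 * a * rng.random() - a       # A lies in [-a, a)
            C = 2 * rng.random()               # C lies in [0, 2)
            D = abs(C * leader[d] - x_d)       # distance to this leader
            candidates.append(leader[d] - A * D)
        new_x.append(sum(w * c for w, c in zip(weights, candidates)))
    return new_x


if __name__ == "__main__":
    rng = random.Random(42)
    leaders = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]   # toy alpha, beta, delta
    print(gwo_step([5.0, 5.0], leaders, a=1.5, rng=rng))
```

When a = 0, A is always 0, so every wolf moves exactly onto the (weighted) average of the leaders; this is the limiting case that makes late iterations purely exploitative.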

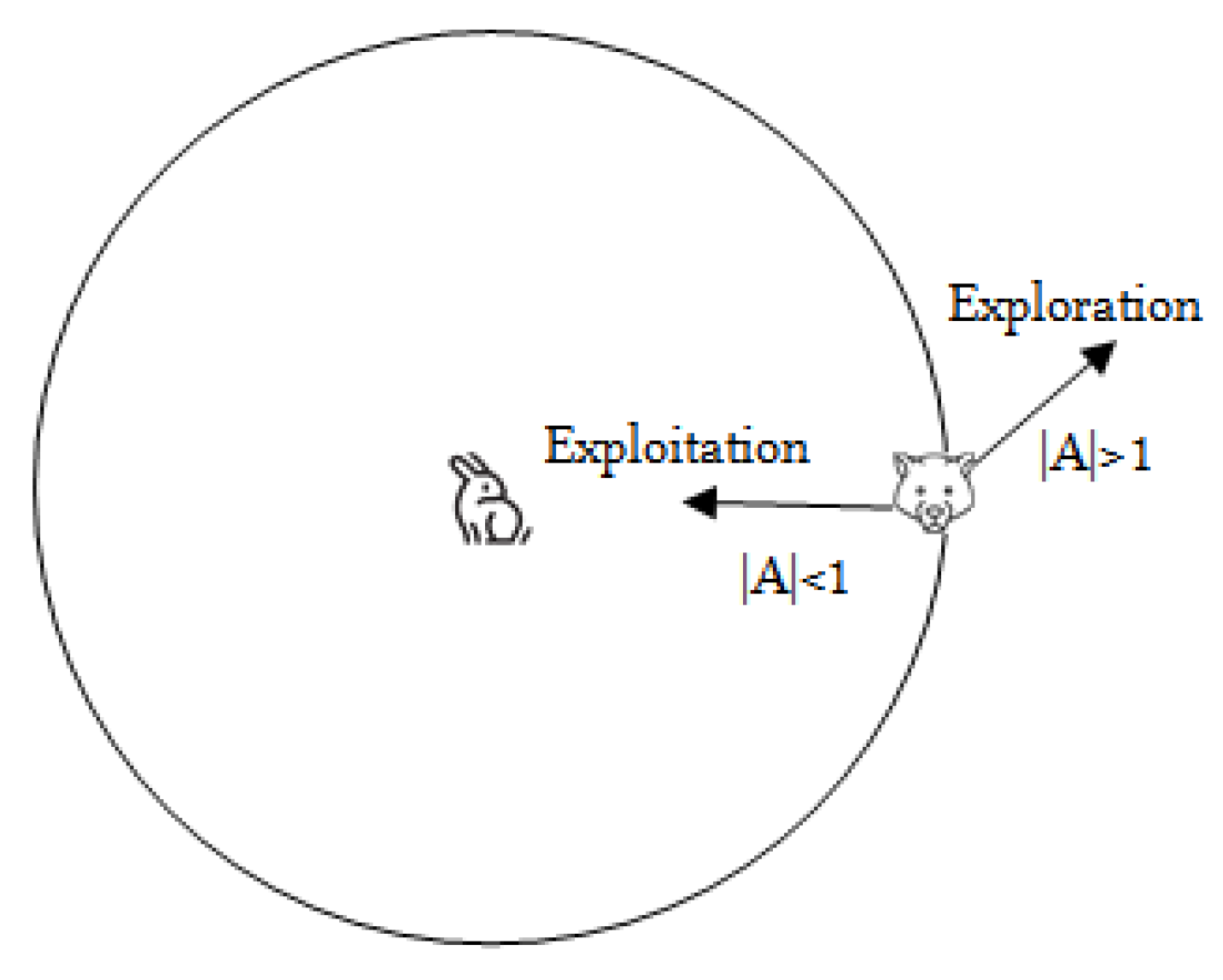

The exploration and exploitation phases of the original MOGWO depend mainly on A, which in turn is updated according to a. When |A| > 1, the wolves diverge to search for prey, which is called the exploration phase; when |A| < 1, they close in on the prey, which is called the exploitation phase. Hence a, through A, is one of the key factors in balancing exploration and exploitation. All of the above studies improved the position update strategy but left the update strategy of a unchanged, so the algorithm easily enters the exploitation phase and becomes trapped in local optima. In our work, a is updated with a nonlinear function instead, which extends the exploration phase and helps the algorithm avoid local optima.
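Because |A| ≤ a, exploration (|A| > 1) is only possible while a > 1. The Python sketch below compares the linear schedule of the original MOGWO with a cosine-shaped schedule, a(t) = 2cos(πt/(2T)). This cosine curve is an illustrative assumption, not necessarily the exact rule of MBB-MOGWO given in Section 3; T = 250 matches the iteration budget of Section 4.1.

```python
import math


def a_linear(t: int, T: int) -> float:
    """Original GWO/MOGWO schedule: a falls linearly from 2 to 0."""
    return 2.0 - 2.0 * t / T


def a_cosine(t: int, T: int) -> float:
    """Hypothetical cosine-shaped schedule: a falls from 2 to 0 along a cosine curve."""
    return 2.0 * math.cos(math.pi * t / (2 * T))


def exploration_share(schedule, T: int) -> float:
    """Fraction of iterations with a > 1, i.e. where |A| > 1 is still possible."""
    return sum(schedule(t, T) > 1.0 for t in range(T)) / T


if __name__ == "__main__":
    T = 250
    print(exploration_share(a_linear, T))   # 0.5
    print(exploration_share(a_cosine, T))   # 0.668
```

Under these assumptions the cosine schedule keeps exploration available for about two thirds of the run instead of one half, which is the kind of extension of the exploration phase described above.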

2.3. The Design of Hybrid Algorithms

Part of the literature focuses on integrating multiple algorithms to improve MOGWO. Zhang et al. [22] presented a hybrid MOGWO with elite opposition, called EOGWO, in which elite opposition-based learning is merged into GWO. Singh et al. [23] hybridized the Whale Optimizer Algorithm with the Mean Grey Wolf Optimizer, naming the result HAGWO; in HAGWO, the spiral equation of the whale algorithm updates the positions of the three leader wolves, which maintains the balance between exploration and exploitation. Elgayyar et al. [24] used GWO to explore the search space efficiently and the Bat swarm optimizer (BA) to refine the solutions. Similarly, Zhang et al. [25] hybridized Biogeography-Based Optimization (BBO) with GWO to exploit the advantages of both. Tawhid et al. [26] integrated a genetic algorithm with GWO: to diversify the search, the population was partitioned, and genetic mutation operators were applied to the whole population. Their experimental results showed that the algorithm was effective at finding or approximating global minima. Similarly, Bouzary et al. [27] integrated a genetic algorithm with the grey wolf optimizer and applied it to service composition and optimal selection (SCOS) problems. Mirjalili et al. [28] proposed a MOGWO based on decomposition, which cooperatively approximates the Pareto front by defining neighborhood relations among the scalarized subproblems into which the multi-objective problem is decomposed. In this paper, we combine ideas from genetic algorithms with MOGWO by applying Boltzmann selection to choose the leader wolves.
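Boltzmann selection, borrowed from genetic algorithms, picks candidate i with probability proportional to exp(−score_i / T). A minimal Python sketch of drawing the three leaders from the archive is shown below. It assumes each archive member has a scalar score where lower is better; how MBB-MOGWO scores members and cools the temperature is defined in Section 3, so the scores here are hypothetical and only the initial temperature (800) comes from Section 4.1.

```python
import math
import random
from typing import List, Sequence


def boltzmann_probabilities(scores: Sequence[float], temperature: float) -> List[float]:
    """P(i) proportional to exp(-score_i / T); a lower score marks a better candidate."""
    best = min(scores)  # shifting by the best score avoids overflow in exp
    weights = [math.exp(-(s - best) / temperature) for s in scores]
    total = sum(weights)
    return [w / total for w in weights]


def select_leaders(scores: Sequence[float], temperature: float, k: int,
                   rng: random.Random) -> List[int]:
    """Draw up to k distinct archive indices (alpha, beta, delta) by Boltzmann selection."""
    remaining = list(range(len(scores)))
    chosen: List[int] = []
    while remaining and len(chosen) < k:
        probs = boltzmann_probabilities([scores[i] for i in remaining], temperature)
        idx = rng.choices(remaining, weights=probs, k=1)[0]
        chosen.append(idx)
        remaining.remove(idx)
    return chosen


if __name__ == "__main__":
    scores = [3.0, 1.0, 2.0, 5.0]                  # hypothetical archive scores
    print(boltzmann_probabilities(scores, 800.0))  # nearly uniform at high T
    print(boltzmann_probabilities(scores, 0.5))    # concentrated on index 1
    print(select_leaders(scores, 800.0, k=3, rng=random.Random(0)))
```

The temperature controls the exploration/exploitation trade-off: a high T selects leaders almost uniformly, while a low T almost always selects the best-scored members.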

4. Experiments and Results Analysis

To verify whether the modified algorithm overcomes the deficiencies of the traditional algorithm, we tested it on the CEC 2009 and ZDT benchmark functions and compared it with four representative algorithms.

4.1. Experiment Environment

The experimental configuration is shown in Table 1.

The key parameters are set without loss of generality: the maximum number of iterations is 250, the number of grey wolves is 100, and the initial temperature is 800. Each algorithm is run 20 times on each benchmark function.

4.2. Performance Metrics

We use HV (hypervolume) [30], IGD (Inverted Generational Distance) [31], and Spread [32] as performance indicators. These are widely used to evaluate multi-objective optimization methods and cover both the convergence and the diversity of the obtained solutions.

HV measures the volume of the region in objective space that is dominated by the solution set and bounded by a reference point, as shown in Equation (10):

$$HV = \mathrm{volume}\left(\bigcup_{i=1}^{|S|} v_i\right) \tag{10}$$

where $|S|$ is the number of solutions in the non-dominated solution set $S$, and $v_i$ is the hypervolume of the region bounded by the $i$-th solution in $S$ and the reference point $R$. The larger the HV, the better the convergence and diversity of the solutions.
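For two minimized objectives, the union in Equation (10) can be computed exactly with a sweep over the solutions sorted by the first objective. The Python sketch below is a standard 2-D computation offered for illustration; higher-dimensional HV, such as for the tri-objective UF9, needs a more elaborate algorithm.

```python
from typing import Sequence, Tuple


def hypervolume_2d(points: Sequence[Tuple[float, float]],
                   ref: Tuple[float, float]) -> float:
    """Area dominated by a bi-objective (minimization) front and bounded by ref."""
    # Only points that strictly dominate the reference point contribute.
    pts = sorted(p for p in points if p[0] < ref[0] and p[1] < ref[1])
    area = 0.0
    prev_f2 = ref[1]
    for f1, f2 in pts:                 # sweep in increasing order of f1
        if f2 < prev_f2:               # skip points dominated by an earlier one
            area += (ref[0] - f1) * (prev_f2 - f2)
            prev_f2 = f2
    return area


if __name__ == "__main__":
    front = [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0)]
    print(hypervolume_2d(front, ref=(4.0, 4.0)))  # 6.0
```

Each step adds a horizontal strip of the union, so dominated points contribute nothing and the result equals the volume of the union of the boxes v_i.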

IGD is the average distance from each reference point on the true Pareto front to its nearest obtained solution, as shown in Equation (11):

$$IGD(P, P^*) = \frac{\sum_{x \in P^*} \min_{y \in P} d(x, y)}{|P^*|} \tag{11}$$

where $P$ is the set of solutions obtained by the algorithm, $P^*$ is the set of reference points sampled from the true Pareto front, and $d(x, y)$ is the Euclidean distance between points $x$ and $y$. IGD is a comprehensive measure of both the convergence and the diversity of an algorithm, and thus of its overall accuracy. A smaller IGD value indicates better performance.
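Equation (11) translates directly into a few lines of Python. The sketch below assumes the reference set is given as a list of points sampled from the true Pareto front; the function name is illustrative.

```python
import math
from typing import Sequence


def igd(obtained: Sequence[Sequence[float]], reference: Sequence[Sequence[float]]) -> float:
    """Mean distance from each reference point to its nearest obtained solution."""
    total = sum(min(math.dist(r, p) for p in obtained) for r in reference)
    return total / len(reference)


if __name__ == "__main__":
    true_front = [(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)]     # toy reference set
    print(igd(true_front, true_front))                    # 0.0
    print(igd([(0.0, 1.0)], true_front))                  # covers only one end
```

Because the distances are measured from the reference points, a front that converges well but covers only part of the true front is still penalized, which is why IGD reflects diversity as well as convergence.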

Spread measures the extent and uniformity of the distribution of the solutions, as shown in Equation (12):

$$\Delta = \frac{d_f + d_l + \sum_{i=1}^{|S|-1} \left| d_i - \bar{d} \right|}{d_f + d_l + (|S| - 1)\,\bar{d}} \tag{12}$$

where $d_i$ is the Euclidean distance between consecutive solutions in $S$, $\bar{d}$ is the average of all $d_i$, and $d_f$ and $d_l$ are the minimum Euclidean distances from the solutions in $S$ to the two extreme (boundary) solutions of the true Pareto front. The smaller the Spread, the better the distribution of the solutions.
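A minimal Python sketch of Equation (12) for a bi-objective front is given below. It assumes the obtained solutions can be ordered along the front by sorting on the first objective and that the two extreme points of the true front are supplied by the caller.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def spread(front: Sequence[Point], extreme_first: Point, extreme_last: Point) -> float:
    """Spread (Delta) of a bi-objective front with at least two solutions."""
    pts = sorted(front)                       # order solutions along the first objective
    gaps = [math.dist(pts[i], pts[i + 1]) for i in range(len(pts) - 1)]   # d_i
    d_mean = sum(gaps) / len(gaps)            # d-bar
    d_f = min(math.dist(extreme_first, p) for p in pts)
    d_l = min(math.dist(extreme_last, p) for p in pts)
    numerator = d_f + d_l + sum(abs(d - d_mean) for d in gaps)
    denominator = d_f + d_l + len(gaps) * d_mean
    return numerator / denominator


if __name__ == "__main__":
    ends = ((0.0, 1.0), (1.0, 0.0))           # extreme points of a toy true front
    print(spread([(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)], *ends))   # 0.0
    print(spread([(0.0, 1.0), (0.1, 0.9), (1.0, 0.0)], *ends))   # about 0.8
```

An evenly spaced front that reaches both extremes scores 0, while clustering two solutions near one end raises the value even though the extremes are still covered.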

4.3. Results and Discussion

We use these multi-objective performance metrics to evaluate the five algorithms. Each algorithm is run 20 times on each of the benchmark functions UF2, UF5, UF9, ZDT2, and ZDT3. UF2 and ZDT2 are unconstrained bi-objective functions with continuous Pareto fronts, UF5 is an unconstrained bi-objective function with a discrete Pareto front, ZDT3 is an unconstrained bi-objective function with a discontinuous Pareto front, and UF9 is a tri-objective function. We calculate the mean and standard deviation of HV, IGD, and Spread; the results are shown in Table 2.

The comparison shows that our method outperforms the other algorithms in most cases. We identified the best value of each metric across the algorithms. On all five benchmark functions, our method achieves the best average IGD, and except on UF9 it also attains the best average HV. On UF9, although MOEA/D achieves a higher average HV, its average Spread is excessively large, indicating a limited distribution range for its solutions; this suggests that the solutions obtained by MOEA/D may lack the precision of ours. However, on UF2, ZDT2, and ZDT3, although our method performs better in HV and IGD, its Spread values are large, indicating that its solutions are less evenly distributed.

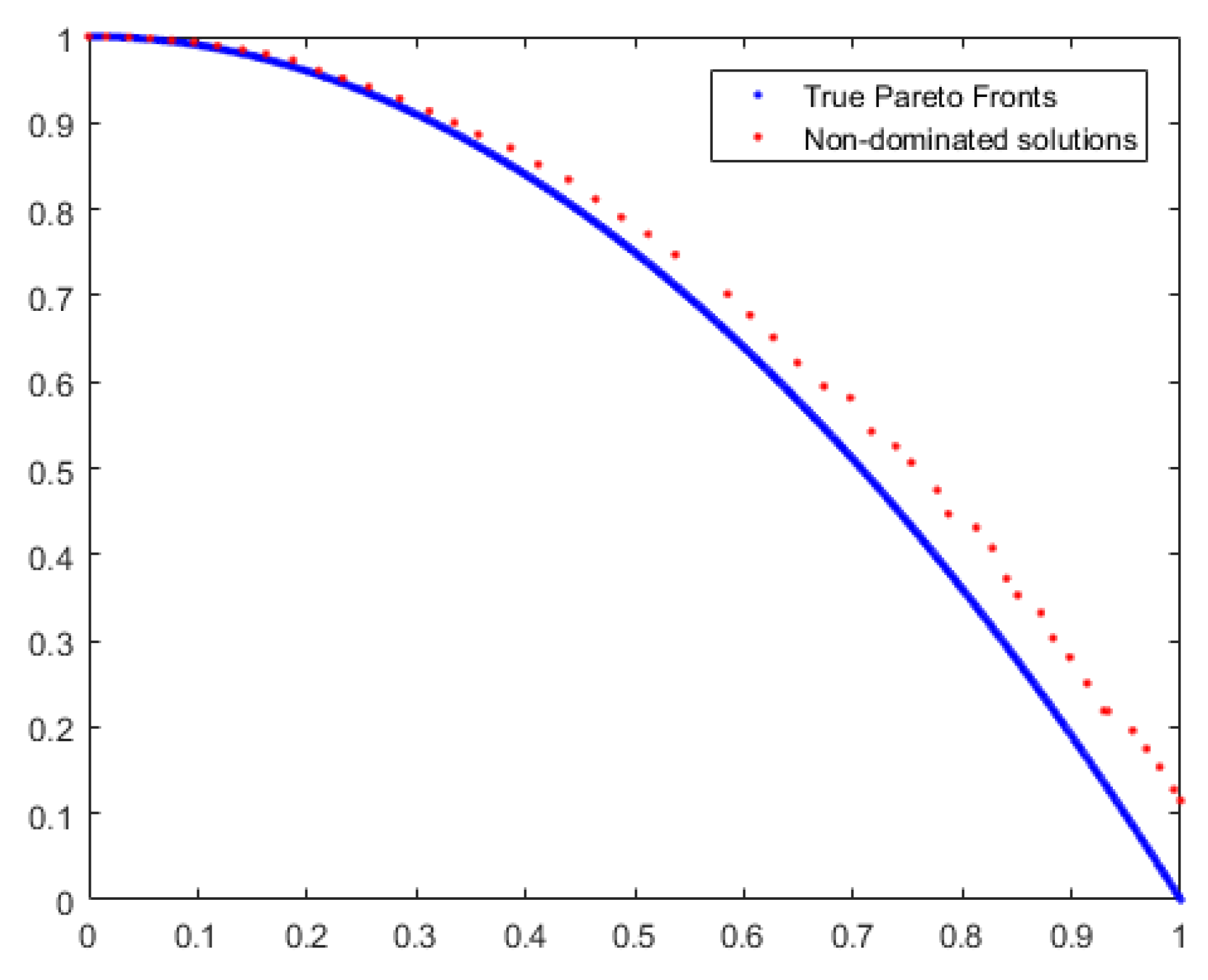

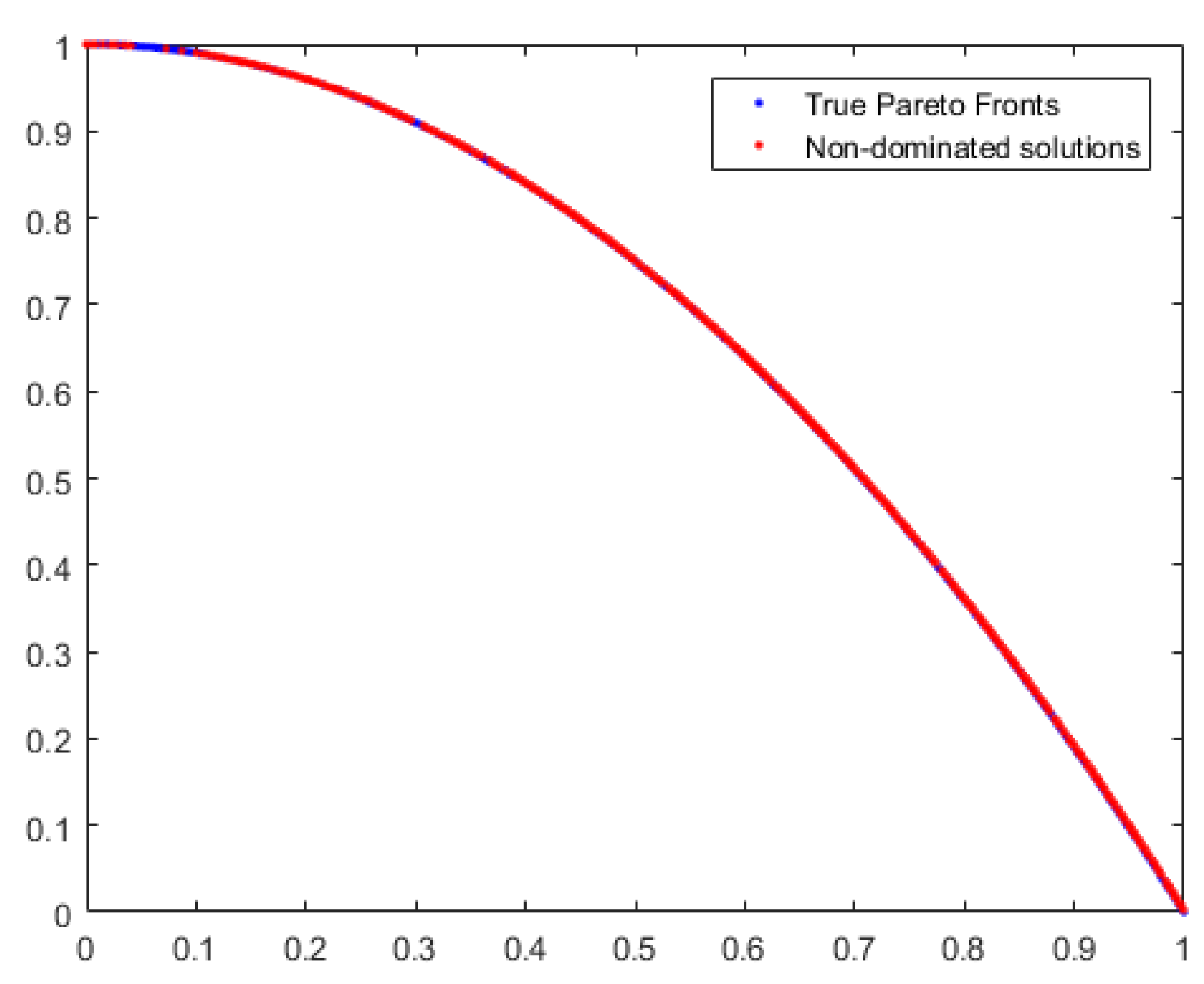

To demonstrate the accuracy of our method, we compare the non-dominated solutions generated by MOEA/D and MBB-MOGWO with the true Pareto front of UF9 and assess accuracy by how well the solutions cover it. The comparison results are depicted in Figure 4 and Figure 5.

The blue dots represent the true Pareto front of UF9, and the red dots represent the non-dominated solutions obtained by MOEA/D and MBB-MOGWO. The more red dots fall on the blue dots, the higher the coverage of the solutions. Figure 4 and Figure 5 show that the solutions obtained by our method have wider coverage and higher accuracy.

According to Table 2, MOPSO has the lowest average Spread on UF2, MOEA/D has the lowest average Spread on ZDT2, and NSGA-II has the lowest Spread on ZDT3, but MBB-MOGWO has the best average IGD and average HV on these functions. On ZDT2 and ZDT3, its average IGD is even two orders of magnitude lower than those of these algorithms. Therefore, although the average Spread of MBB-MOGWO is not the lowest, the solutions it obtains are better. Taking ZDT2 as an example, Figure 6 and Figure 7 illustrate that our algorithm obtains better solutions.

As before, the blue and red dots represent the reference solutions and the obtained solutions, respectively. Again, the solutions obtained by our method have wider coverage and higher accuracy.

Overall, the performance metrics of the five algorithms on the five benchmark functions and the plotted coverage of the solutions show that our method achieves faster convergence and better diversity, and that its solutions have wider coverage and higher accuracy.

5. MBB-MOGWO-Based Web Service Composition

In this section, we transform the web service composition problem into a QoS-aware multi-objective optimization problem, in which the optimal web service composition is obtained by optimizing the QoS metrics. The key question is how to optimize multiple QoS metrics simultaneously. We then apply the proposed algorithm to the web service composition scenario and evaluate it with the QWS dataset.

5.1. Modeling the Web Service Composition

Web services usually have functional attributes and QoS attributes. The functional attributes describe the functions that a web service provides, while the QoS attributes comprise metrics such as throughput, response time, reliability, and availability. In IoT optimization problems, these metrics are used to gauge critical properties of sensors, such as the cost of resource scheduling, system stability, and real-time performance. When users combine different web services, provided the functional requirements are satisfied, higher QoS means better service quality and a better composition. Since QoS is measured by multiple metrics, the web service composition problem can be abstracted as a QoS-aware multi-objective optimization problem. Below we abstract web service composition and give the relevant definitions.

Definition 6 (QoS multi-tuple). QoS denotes the quality of a web service. The QoS multi-tuple is represented by the vector $Q = (q_1, q_2, \ldots, q_m)$, where $m$ is the total number of QoS metrics and $q_i$ is the value of the $i$-th metric, $1 \le i \le m$.

QoS attributes fall into two categories. Positive attributes are those for which larger values mean better QoS, such as throughput, availability, and reliability. Negative attributes are those for which larger values mean worse QoS, such as price and response time.

Definition 7 (Abstract web service). We define an abstract web service as a two-tuple consisting of Q, the QoS of the specific web service, and the execution relationship between web services.

There are four execution relationships between web services: sequence, loop, parallel, and branch, as shown in Figure 8. In a sequence, subtasks are executed one by one; in a parallel relationship, all subtasks are executed simultaneously without interfering with each other; in a loop, subtasks are executed iteratively; and in a branch, only one branch is selected for execution.

Definition 8 (Abstract web service composition). We define an abstract web service composition as a tri-tuple consisting of the composition s, which comprises k different web services; its global QoS, whose $i$-th component is the value of the $i$-th of the $m$ global QoS metrics ($1 \le i \le m$); and the weight of each QoS metric.

Through the above abstract description, we transform the web service composition problem into a QoS-aware multi-objective optimization problem. By measuring the global QoS of a web service composition, we can judge the merits of different composition solutions. The global QoS is aggregated from the QoS of each web service in the composition, and different execution relationships use different aggregation equations to calculate the global QoS metrics. Table 3 lists these aggregation equations for the six QoS metrics.

5.2. Application of MBB-MOGWO on Web Service Composition

The execution flow of MBB-MOGWO-based web service composition is shown in Figure 9.

Combining MBB-MOGWO with web service composition mainly involves initializing the positions of the wolves, calculating the costs of all wolves, and updating their positions. For these three parts, we design an encoding scheme, a fitness function, and a position update strategy, respectively, to adapt the method to the problem. We discuss each below.

5.2.1. Encoding

In web service composition, the global QoS depends on the execution relationships among the subtasks; this paper considers a sequential workflow. Each wolf represents one solution to the web service composition and has a position vector and a cost vector. The dimension of a wolf's position equals the number of required subtasks, and each dimension ranges over the candidate services for that subtask. We select one candidate service for every subtask and save the resulting global QoS as the wolf's cost vector. Finally, by comparing costs, MBB-MOGWO updates the archive and obtains the optimal web service composition.

To make the algorithm better suited to the web service composition problem, we use integer encoding to indicate which candidate service is selected for each subtask. For example, consider a composition with four subtasks in which subtask 1 selects candidate service 3, subtask 2 selects candidate service 4, and so on. Here s is the position vector of a grey wolf, and S represents each grey wolf.
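The following Python sketch decodes an integer position vector into the chosen services and aggregates their QoS for a sequential workflow. The aggregation rules (sums for delays, products for probabilities, minimum for throughput) are a convention common in the literature and are assumed here; Table 3 defines the paper's own equations, which this sketch may not match exactly. The metric keys and the function name are hypothetical.

```python
from math import prod
from typing import Dict, List, Sequence

# Hypothetical aggregation rules for a purely sequential workflow.
SEQUENTIAL_RULES = {
    "response_time": sum,   # delays add up along the chain
    "latency": sum,
    "availability": prod,   # every service in the chain must be available
    "reliability": prod,
    "success_rate": prod,
    "throughput": min,      # the slowest service is the bottleneck
}


def global_qos(position: Sequence[int],
               candidates: List[List[Dict[str, float]]]) -> Dict[str, float]:
    """Global QoS of the composition encoded by an integer position vector.

    position[j] is the 1-based index of the candidate service chosen for subtask j,
    and candidates[j][c - 1] holds the QoS record of that candidate.
    """
    chosen = [candidates[j][c - 1] for j, c in enumerate(position)]
    return {metric: rule(service[metric] for service in chosen)
            for metric, rule in SEQUENTIAL_RULES.items()}


if __name__ == "__main__":
    def svc(rt, lat, av, rel, sr, tp):  # toy QoS record, values are made up
        return {"response_time": rt, "latency": lat, "availability": av,
                "reliability": rel, "success_rate": sr, "throughput": tp}
    cands = [[svc(100, 10, 0.9, 0.8, 1.0, 5), svc(50, 5, 0.5, 0.5, 0.5, 9)],
             [svc(20, 2, 1.0, 1.0, 1.0, 3), svc(30, 3, 0.8, 0.9, 1.0, 7)]]
    print(global_qos([1, 2], cands))
```

In the experiments of Section 5.3, a wolf's position would have 10 entries, each an index into 250 candidates.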

5.2.2. Fitness Function

In the optimization process, the fitness function judges the adaptability of each wolf, and wolves with higher fitness are retained so that the pack keeps approaching the optimal solution. Since QoS has two types of attributes, positive and negative, we transform the web service composition problem into a bi-objective problem that optimizes the positive and negative attributes, as shown in Formula (13), where P represents the positive attribute and T, A, and S represent throughput, availability, and success rate, respectively; N represents the negative attribute and t, l, and p represent response time, latency, and price, respectively; and each QoS metric carries its own weight. The smaller the values of P and N, the higher the fitness of the wolf, that is, the better the web service composition solution.
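One way to obtain two costs that are both minimized, as the text requires, is sketched below in Python. The exact form of Formula (13) is not reproduced here, so this is an assumed form: metrics are already normalized to [0, 1], positive metrics enter as (1 − q) so that smaller P is better, and negative metrics enter directly. The metric names and equal weights are illustrative.

```python
from typing import Dict, Sequence, Tuple

POSITIVE = ("throughput", "availability", "success_rate")   # larger is better
NEGATIVE = ("response_time", "latency", "price")            # smaller is better


def fitness(norm_qos: Dict[str, float], weights: Dict[str, float],
            positive: Sequence[str] = POSITIVE,
            negative: Sequence[str] = NEGATIVE) -> Tuple[float, float]:
    """Bi-objective cost (P, N) of one wolf under an assumed form; both are minimized."""
    p_cost = sum(weights[m] * (1.0 - norm_qos[m]) for m in positive)
    n_cost = sum(weights[m] * norm_qos[m] for m in negative)
    return p_cost, n_cost


if __name__ == "__main__":
    best = {"throughput": 1.0, "availability": 1.0, "success_rate": 1.0,
            "response_time": 0.0, "latency": 0.0, "price": 0.0}
    w = {m: 1 / 3 for m in best}     # hypothetical equal weights per attribute group
    print(fitness(best, w))          # (0.0, 0.0)
```

The ideal composition, with every positive metric at its maximum and every negative metric at its minimum, attains (0, 0); the positive and negative metric tuples can be swapped for the six metrics actually used in Section 5.3.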

In the fitness function, each QoS metric (such as throughput) is calculated by the aggregation equations in Section 5.1. Because the values of different metrics differ widely in scale, they must be normalized before use. Letting $q_h$ denote the $h$-th QoS metric, we normalize all metrics with Equation (14), after which every value lies in [0, 1].
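A minimal Python sketch of scaling one metric into [0, 1] is shown below, assuming Equation (14) is standard min-max normalization over the candidate pool. Mapping a constant column to 0 is an additional assumption to avoid division by zero.

```python
from typing import List, Sequence


def min_max_normalize(values: Sequence[float]) -> List[float]:
    """Scale one QoS metric, taken over all candidates, into [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:                       # constant metric: avoid division by zero
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


if __name__ == "__main__":
    response_times = [120.0, 300.0, 210.0]   # toy values in ms
    print(min_max_normalize(response_times))  # [0.0, 1.0, 0.5]
```

Computing the minimum and maximum over all candidates of a metric, rather than per composition, keeps normalized values comparable across wolves.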

5.2.3. Position Update

In MBB-MOGWO, wolf positions vary over a continuous range, and the calculation rules of the algorithm are likewise defined for continuous variables. However, as described in the previous subsection, the candidate service for each subtask is a discrete integer, so the continuous variables must be discretized.

There are three main discretization strategies: probabilistic processing, operator redefinition, and direct conversion. Probabilistic processing has limited applicability, and operator redefinition has higher complexity, so we use direct conversion to discretize the wolves' positions: after each position update, the actual position of the grey wolf is replaced by the nearest value in the discrete domain. Although many continuous values may map to the same discrete value, our results show that in this high-dimensional optimization problem the algorithm remains stable after discretization and does not fall into local optima.
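The direct conversion step can be sketched in Python as rounding each coordinate to the nearest integer and clipping it to the valid candidate range. The 1-based indexing follows the integer encoding above; the function name is illustrative.

```python
from typing import List, Sequence


def discretize(position: Sequence[float], n_candidates: int) -> List[int]:
    """Direct conversion: snap each coordinate to the nearest valid 1-based index.

    round() picks the nearest integer (Python breaks exact .5 ties toward the even
    integer), and the result is clipped to [1, n_candidates].
    """
    return [min(max(round(x), 1), n_candidates) for x in position]


if __name__ == "__main__":
    continuous = [0.2, 3.6, 250.9, 17.5]
    print(discretize(continuous, n_candidates=250))  # [1, 4, 250, 18]
```

Clipping is what makes many continuous values share one discrete value at the boundaries, which is the many-to-one effect noted above.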

5.3. Experiments and Results Analysis

According to the No Free Lunch (NFL) theorem [33], no single optimization method is best for all kinds of optimization problems: an optimizer that excels on one type of problem is not necessarily effective on another. We therefore evaluate our modified method on the QWS dataset, which is commonly used in web service composition studies. The QWS dataset contains records of 2507 real web services [15], each described by 9 QoS metrics; the metrics and their descriptions are shown in Table 4.

From these QoS metrics, we selected six of the most important: availability, reliability, throughput, response time, success rate, and latency. Response time and latency are negative attributes, and the rest are positive attributes. We use 2500 records from the QWS dataset in the experiments, assuming that a web service composition consists of 10 subtasks and that each subtask has 250 candidate services.

In the experiments, we compare the MBB-MOGWO algorithm with NSGA-II algorithm, MOEA/D algorithm, MOPSO algorithm and MOGWO algorithm. The experiment environment is the same as

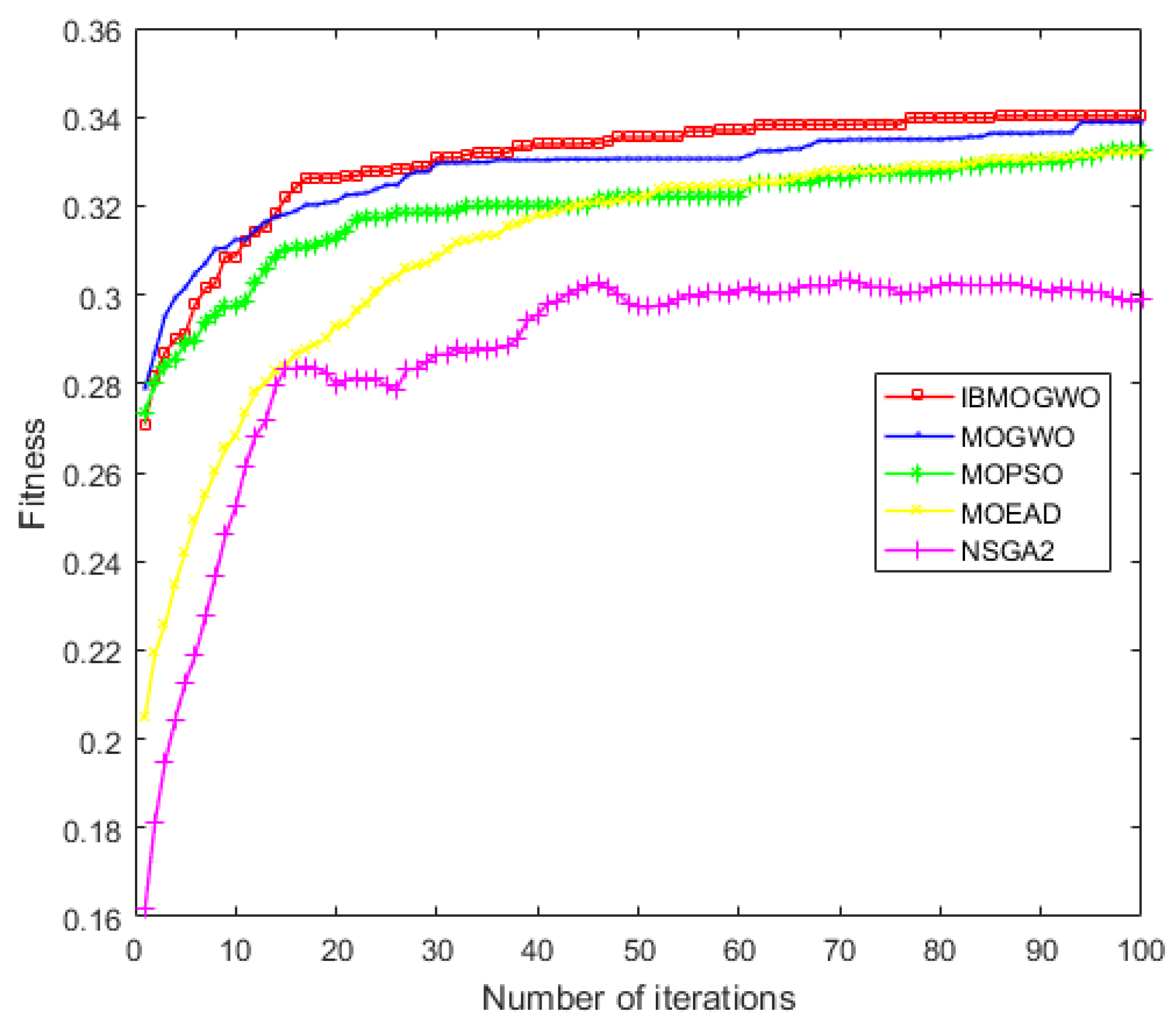

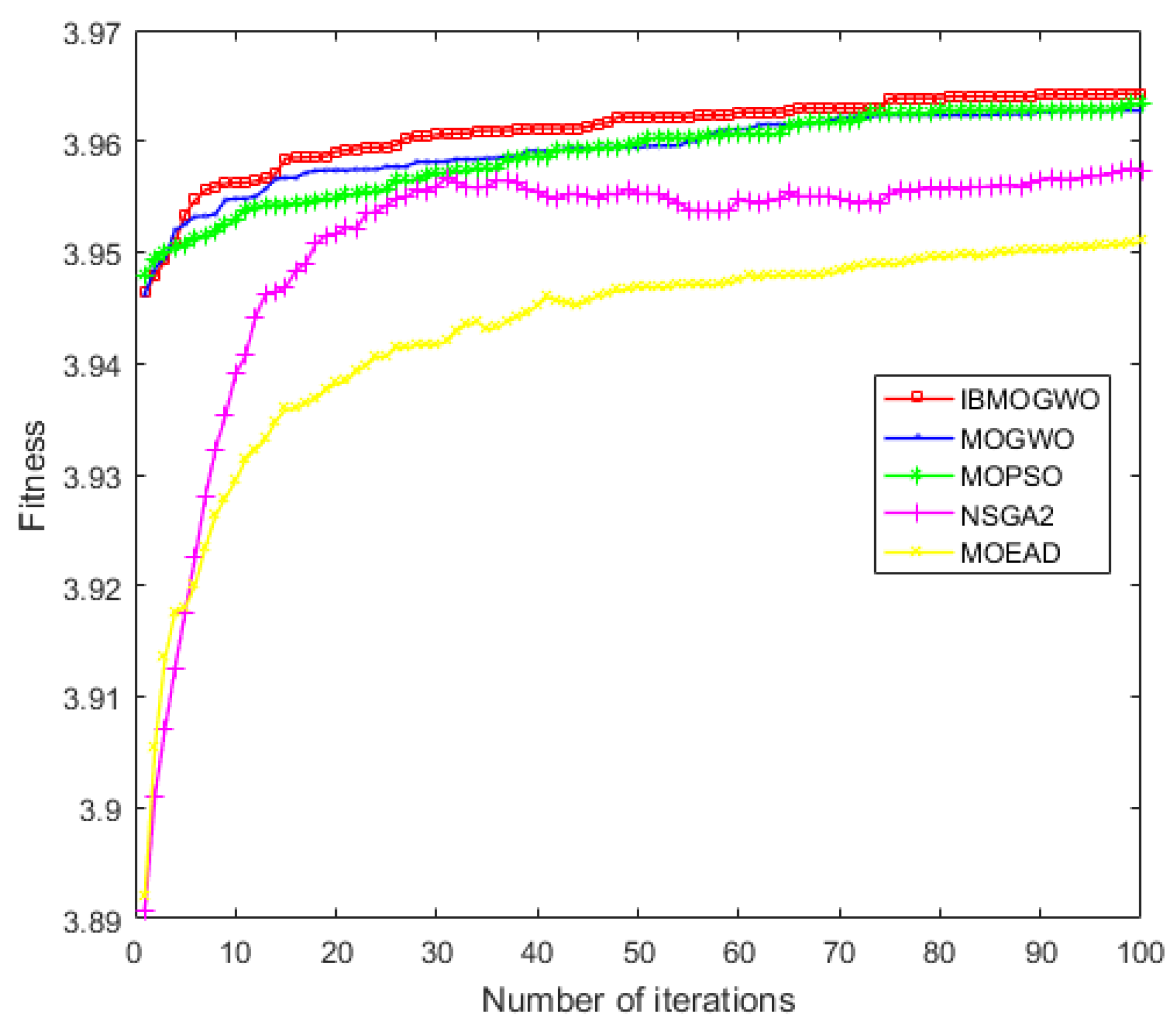

Section 4.1 and the key parameters are configured as follows. The maximum of iterations is set to 100. The number of grey wolves is set to 100. Initial temperature is set to 600. We perform experiments in the way that each algorithm tests 20 times.

To evaluate our method, we calculated the best, worst, average, and standard deviation of HV over 20 web service composition experiments for each algorithm, as shown in Table 5. In both the best and worst cases, the HV values of MBB-MOGWO surpass those of the other algorithms, and its average HV is the highest, suggesting that MBB-MOGWO achieves superior convergence and diversity on web service composition problems. Notably, MBB-MOGWO has the lowest HV standard deviation among the five algorithms, indicating greater stability than its counterparts.

To further illustrate the advantages of MBB-MOGWO, we averaged the results of the 20 runs for each algorithm and plotted the trend of the fitness values, as shown in Figure 10 and Figure 11.

The horizontal axis represents iterations, and the vertical axis represents the fitness values of the positive/negative attribute. The fitness values of all methods increase rapidly at the beginning and stabilize as the iterations proceed. Eventually, the fitness of our method is higher than that of the baseline algorithms; that is, the web service composition found by our method has better QoS.

The evaluation shows that MBB-MOGWO performs better on the web service composition problem, with fast convergence and good diversity. By balancing the exploration and exploitation phases, it avoids falling easily into local optima, which improves the accuracy of the solution. MBB-MOGWO is therefore better suited to finding high-quality web service compositions.