Remote Emotion Recognition Using Continuous-Wave Bio-Radar System

Abstract

1. Introduction

- Demonstrates that the radar system can recognize emotions with an accuracy of 99.7%, a significant advance in non-contact emotional analysis. These results are specific to the population studied, the conditions of the study, and the emotion induction protocol used;

- Establishes that the radar system can match or even exceed the performance of traditional contact-based emotion recognition systems, showcasing its viability as an effective alternative;

- Applies an emotion induction protocol, validated in previous studies [9,11,12,13], that effectively mitigated emotional contamination. The protocol supported the successful elicitation of emotions while controlling for confounding variables, providing a controlled environment for emotion induction and enhancing the reliability of the observed emotional responses.

2. Related Work

3. Materials and Methods

3.1. Setup and Experiment Protocol Description

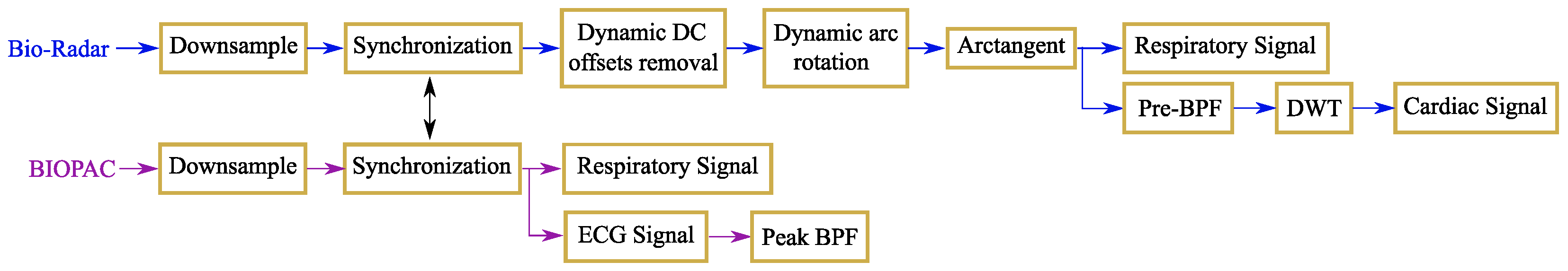

3.2. Vital Signs Extraction

3.3. Window-Based HRV Parameters

4. Features Extraction

5. Feature Selection

Feature Selection Discussion

6. Classification Results

- Cross-validation () using the leave-one-out strategy [10];

- Testing stage () using a hold-out strategy where of the dataset of each condition was used to train the model and the remaining was used to test it. The partition of data was performed randomly and repeated 20 times. The results are presented as the mean value and standard deviation of the accuracy and F1-score.
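
The two evaluation stages above can be sketched in plain Python. This is only an illustration under stated assumptions, not the authors' pipeline: a 1-nearest-neighbour rule stands in for the SVM, KNN and RFO classifiers, leave-one-out is assumed to hold out one observation at a time, the F1-score is macro-averaged, and `train_fraction` is a hypothetical parameter because the split ratio is not stated in this passage. It also assumes every condition has enough observations to leave a non-empty test set.

```python
import random
import statistics
from collections import defaultdict


def nearest_neighbor_predict(train_x, train_y, sample):
    """Return the label of the training point closest to `sample` (1-NN)."""
    best = min(
        range(len(train_x)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_x[i], sample)),
    )
    return train_y[best]


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1-scores (assumed averaging scheme)."""
    scores = []
    for label in sorted(set(y_true)):
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return statistics.mean(scores)


def leave_one_out_accuracy(x, y):
    """Hold out each observation once and train on all the others."""
    preds = [
        nearest_neighbor_predict(x[:i] + x[i + 1:], y[:i] + y[i + 1:], x[i])
        for i in range(len(x))
    ]
    return accuracy(y, preds)


def repeated_holdout(x, y, train_fraction, repetitions=20, seed=0):
    """Random hold-out split drawn separately for each condition, repeated.

    Returns ((mean accuracy, std accuracy), (mean F1, std F1)).
    """
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for idx, label in enumerate(y):
        by_label[label].append(idx)
    accs, f1s = [], []
    for _ in range(repetitions):
        train_idx, test_idx = [], []
        for indices in by_label.values():
            shuffled = indices[:]
            rng.shuffle(shuffled)
            cut = max(1, round(train_fraction * len(shuffled)))
            train_idx += shuffled[:cut]
            test_idx += shuffled[cut:]
        train_x = [x[i] for i in train_idx]
        train_y = [y[i] for i in train_idx]
        y_true = [y[i] for i in test_idx]
        y_pred = [nearest_neighbor_predict(train_x, train_y, x[i]) for i in test_idx]
        accs.append(accuracy(y_true, y_pred))
        f1s.append(macro_f1(y_true, y_pred))
    return (
        (statistics.mean(accs), statistics.stdev(accs)),
        (statistics.mean(f1s), statistics.stdev(f1s)),
    )


if __name__ == "__main__":
    # Toy two-feature data for three conditions; the values are made up.
    toy_x = [[0.0, 0.1], [0.1, 0.0], [0.2, 0.1], [0.1, 0.2],
             [5.0, 5.1], [5.1, 5.0], [5.2, 5.1], [5.1, 5.2],
             [9.0, 0.1], [9.1, 0.0], [9.2, 0.1], [9.1, 0.2]]
    toy_y = ["H"] * 4 + ["N"] * 4 + ["F"] * 4
    print("leave-one-out accuracy:", leave_one_out_accuracy(toy_x, toy_y))
    # train_fraction=0.5 is an illustrative value, not the ratio used in the study.
    print("hold-out (mean, std):", repeated_holdout(toy_x, toy_y, train_fraction=0.5))
```

Drawing the split separately inside each condition keeps the class proportions equal in the training and test sets, which is one reading of "the dataset of each condition" in the text above.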

6.1. Binary Problem

6.2. Multiclass Problem

7. Discussion of the State-of-the-Art Related Works

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Boric-Lubecke, O.; Lubecke, V.; Droitcour, A.; Park, B.; Singh, A. Doppler Radar Physiological Sensing; Wiley: Hoboken, NJ, USA, 2015.

2. Connelly, M.A.; Brown, J.T.; Kearns, G.L.; Anderson, R.A.; St Peter, S.D.; Neville, K.A. Pupillometry: A non-invasive technique for pain assessment in paediatric patients. Arch. Dis. Child. 2014, 99, 1125–1131.

3. Vasa, R.A.; Keefer, A.; Reaven, J.; South, M.; White, S.W. Priorities for advancing research on youth with autism spectrum disorder and co-occurring anxiety. J. Autism Dev. Disord. 2018, 48, 925–934.

4. Mauss, I.B.; Robinson, M.D. Measures of emotion: A review. Cogn. Emot. 2009, 23, 209–237.

5. Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis Using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.

6. Laborde, S.; Mosley, E.; Thayer, J. Heart Rate Variability and Cardiac Vagal Tone in Psychophysiological Research—Recommendations for Experiment Planning, Data Analysis, and Data Reporting. Front. Psychol. 2017, 8, 213.

7. Shaffer, F.; Ginsberg, J.P. An overview of heart rate variability metrics and norms. Front. Public Health 2017, 5, 258.

8. Nawaz, M.; Saleem, S.; Masood, M.; Rashid, J.; Nazir, T. COVID-ECG-RSNet: COVID-19 classification from ECG images using swish-based improved ResNet model. Biomed. Signal Process. Control 2024, 89, 105801.

9. Pinto, G.; Carvalho, J.M.; Barros, F.; Soares, S.C.; Pinho, A.J.; Brás, S. Multimodal emotion evaluation: A physiological model for cost-effective emotion classification. Sensors 2020, 20, 3510.

10. Gouveia, C.; Tomé, A.; Barros, F.; Soares, S.C.; Vieira, J.; Pinho, P. Study on the usage feasibility of continuous-wave radar for emotion recognition. Biomed. Signal Process. Control 2020, 58, 101835.

11. Ferreira, J.; Parma, V.; Alho, L.; Silva, C.F.; Soares, S.C. Emotional body odors as context: Effects on cardiac and subjective responses. Chem. Senses 2018, 43, 347–355.

12. Barros, F.; Figueiredo, C.; Brás, S.; Carvalho, J.M.; Soares, S.C. Multidimensional assessment of anxiety through the State-Trait Inventory for Cognitive and Somatic Anxiety (STICSA): From dimensionality to response prediction across emotional contexts. PLoS ONE 2022, 17, e0262960.

13. Ferreira, J.; Brás, S.; Silva, C.F.; Soares, S.C. An automatic classifier of emotions built from entropy of noise. Psychophysiology 2017, 54, 620–627.

14. Zhang, L.; Fu, C.H.; Hong, H.; Xue, B.; Gu, X.; Zhu, X.; Li, C. Non-Contact Dual-Modality Emotion Recognition System by CW Radar and RGB Camera. IEEE Sens. J. 2021, 21, 23198–23212.

15. Zhao, M.; Adib, F.; Katabi, D. Emotion recognition using wireless signals. In Proceedings of the 22nd Annual International Conference on Mobile Computing and Networking, New York, NY, USA, 3–7 October 2016; pp. 95–108.

16. Siddiqui, H.U.R.; Shahzad, H.F.; Saleem, A.A.; Khan Khakwani, A.B.; Rustam, F.; Lee, E.; Ashraf, I.; Dudley, S. Respiration Based Non-Invasive Approach for Emotion Recognition Using Impulse Radio Ultra Wide Band Radar and Machine Learning. Sensors 2021, 21, 8336.

17. Gao, Q.; Zhang, L.; Yan, J.; Zhao, H.; Ding, C.; Hong, H.; Zhu, X. Non-contact emotion recognition via CW Doppler radar. In Proceedings of the 2018 Asia-Pacific Microwave Conference (APMC), IEEE, Kyoto, Japan, 6–9 November 2018; pp. 1468–1470.

18. Yin, H.; Yu, S.; Zhang, Y.; Zhou, A.; Wang, X.; Liu, L.; Ma, H.; Liu, J.; Yang, N. Let IoT Know You Better: User Identification and Emotion Recognition Through Millimeter-Wave Sensing. IEEE Internet Things J. 2022, 10, 1149–1161.

19. Huang, L.; Yu, K.; Song, T.; Wang, H.; Yuan, F.; Zhang, Y.; Yang, H. Research on Emotion Recognition Based on Multisource Signals. In Proceedings of the 2023 IEEE Statistical Signal Processing Workshop (SSP), IEEE, Hanoi, Vietnam, 2–5 July 2023; pp. 280–284.

20. Siddiqui, H.U.R.; Zafar, K.; Saleem, A.A.; Raza, M.A.; Dudley, S.; Rustam, F.; Ashraf, I. Emotion classification using temporal and spectral features from IR-UWB-based respiration data. Multimed. Tools Appl. 2023, 82, 18565–18583.

21. Dang, X.; Chen, Z.; Hao, Z. Emotion recognition method using millimetre wave radar based on deep learning. IET Radar Sonar Navig. 2022, 16, 1796–1808.

22. Zeng, K.; Liu, G. Emotion recognition based on millimeter wave radar. In Proceedings of the 2023 3rd International Conference on Bioinformatics and Intelligent Computing, Sanya, China, 10–12 February 2023; pp. 232–236.

23. Alam, A.; Urooj, S.; Ansari, A.Q. Design and Development of a Non-Contact ECG-Based Human Emotion Recognition System Using SVM and RF Classifiers. Diagnostics 2023, 13, 2097.

24. Gouveia, C.; Albuquerque, D.; Vieira, J.; Pinho, P. Dynamic Digital Signal Processing Algorithm for Vital Signs Extraction in Continuous-Wave Radars. Remote Sens. 2021, 13, 4079.

25. Kim, J.G.; Sim, S.H.; Cheon, S.; Hong, S. 24 GHz circularly polarized Doppler radar with a single antenna. In Proceedings of the 2005 European Microwave Conference, IEEE, Paris, France, 4 October 2005; Volume 2, p. 4.

26. de Groot, J.H.; Smeets, M.A.; Rowson, M.J.; Bulsing, P.J.; Blonk, C.G.; Wilkinson, J.E.; Semin, G.R. A sniff of happiness. Psychol. Sci. 2015, 26, 684–700.

27. Gouveia, C.; Albuquerque, D.; Pinho, P.; Vieira, J. Evaluation of Heartbeat Signal Extraction Methods using a 5.8 GHz Doppler Radar System in a Real Application Scenario. IEEE Sens. J. 2022, 22, 7979–7989.

28. Park, B.K.; Lubecke, V.; Boric-Lubecke, O.; Host-Madsen, A. Center tracking quadrature demodulation for a Doppler radar motion detector. In Proceedings of the IEEE/MTT-S International Microwave Symposium, Honolulu, HI, USA, 3–8 June 2007; pp. 1323–1326.

29. Park, B.K.; Boric-Lubecke, O.; Lubecke, V.M. Arctangent demodulation with DC offset compensation in quadrature Doppler radar receiver systems. IEEE Trans. Microw. Theory Tech. 2007, 55, 1073–1079.

30. Jang, Y.I.; Sim, J.Y.; Yang, J.R.; Kwon, N.K. The Optimal Selection of Mother Wavelet Function and Decomposition Level for Denoising of DCG Signal. Sensors 2021, 21, 1851.

31. Kathirvel, P.; Manikandan, M.S.; Prasanna, S.; Soman, K. An efficient R-peak detection based on new nonlinear transformation and first-order Gaussian differentiator. Cardiovasc. Eng. Technol. 2011, 2, 408–425.

32. Jaakkola, J.; Jaakkola, S.; Lahdenoja, O.; Hurnanen, T.; Koivisto, T.; Pänkäälä, M.; Knuutila, T.; Kiviniemi, T.O.; Vasankari, T.; Airaksinen, K.J. Mobile phone detection of atrial fibrillation with mechanocardiography: The MODE-AF Study (Mobile Phone Detection of Atrial Fibrillation). Circulation 2018, 137, 1524–1527.

33. Kaisti, M.; Tadi, M.J.; Lahdenoja, O.; Hurnanen, T.; Saraste, A.; Pänkäälä, M.; Koivisto, T. Stand-alone heartbeat detection in multidimensional mechanocardiograms. IEEE Sens. J. 2018, 19, 234–242.

34. Gouveia, C.; Albuquerque, D.F.; Pinho, P.; Vieira, J. Bio-Radar Cardiac Signal Model Used for HRV Assessment and Evaluation Using Adaptive Filtering. IEEE Trans. Instrum. Meas. 2022, 71, 1–10.

35. Ramachandran, G.; Singh, M. Three-dimensional reconstruction of cardiac displacement patterns on the chest wall during the P, QRS and T-segments of the ECG by laser speckle interferometry. Med. Biol. Eng. Comput. 1989, 27, 525–530.

36. Task Force of the European Society of Cardiology and the North American Society of Pacing and Electrophysiology. Heart rate variability: Standards of measurement, physiological interpretation, and clinical use. Circulation 1996, 93, 1043–1065.

37. Rojo-Álvarez, J.L.; Sanchez-Sanchez, A.; Barquero-Perez, O.; Goya-Esteban, R.; Everss, E.; Mora-Jimenez, I.; Garcia-Alberola, A. Analysis of physiological meaning of detrended fluctuation analysis in heart rate variability using a lumped parameter model. In Proceedings of the 2007 Computers in Cardiology, IEEE, Durham, NC, USA, 30 September–3 October 2007; pp. 25–28.

38. Picard, R.; Vyzas, E.; Healey, J. Toward machine emotional intelligence: Analysis of affective physiological state. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 1175–1191.

39. Hahs-Vaughn, D.L.; Lomax, R.G. Statistical Concepts—A Second Course, 5th ed.; Routledge: London, UK, 2020.

40. Zita, I. Automatic Audio Signal Analysis for the Detection of Anomalies in Calls. Master’s Thesis, University of Aveiro, Aveiro, Portugal, 2021.

41. Li, C.; Xiao, Y.; Lin, J. Design guidelines for radio frequency non-contact vital sign detection. In Proceedings of the 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, IEEE, Lyon, France, 22–26 August 2007; pp. 1651–1654.

42. Quintana, D.S. Statistical considerations for reporting and planning heart rate variability case-control studies. Psychophysiology 2017, 54, 344–349.

43. McGinley, J.J.; Friedman, B.H. Autonomic specificity in emotion: The induction method matters. Int. J. Psychophysiol. 2017, 118, 48–57.

44. Rainville, P.; Bechara, A.; Naqvi, N.; Damasio, A.R. Basic emotions are associated with distinct patterns of cardiorespiratory activity. Int. J. Psychophysiol. 2006, 61, 5–18.

45. Wilhelm, F.H.; Grossman, P. Emotions beyond the laboratory: Theoretical fundaments, study design, and analytic strategies for advanced ambulatory assessment. Biol. Psychol. 2010, 84, 552–569.

| Category | Feature No. | Description | Applied Signal |
|---|---|---|---|
| Waveform | F1–F2 | Signal Rate | CS and RS |
| Waveform | F3–F4 | AppEn | CS and RS |
| Waveform | F5–F7 | First derivative | RS, RS-N, IBI-CS |
| Waveform | F8–F10 | Second derivative | RS, RS-N, IBI-CS |
| Waveform | F11 | Energy Ratio | RS |
| Waveform | F12 | Kurtosis | RS |
| Waveform | F13 | Peak Width | RS |
| Waveform | F14 | Variance | RS |
| Statistical | F15–F18 | Sk, Med, IQR, Av | IBI-CS |
| Statistical | F19–F22 | Sk, Med, IQR, Av | IBI-RS |
| Statistical | F23–F26 | Sk, Med, IQR, Av | CS |
| Statistical | F27–F30 | Sk, Med, IQR, Av | RS |
| Statistical | F31–F34 | Sk, Med, IQR, Av | Inhale (RS) |
| Statistical | F35–F38 | Sk, Med, IQR, Av | Exhale (RS) |
| Spectral | F39–F44 | PSD | RS |
| Spectral | F45 | PSD ratio | RS |
| HRV parameters | F46–F47 | SDNN | IBI-CS, IBI-RS |
| HRV parameters | F48–F49 | RMSSD | IBI-CS, IBI-RS |
| HRV parameters | F50 | pNN50 | IBI-CS |
| HRV parameters | F51–F52 | DFA2 and | IBI-CS |
| HRV parameters | F53–F56 | Poincaré plot for | IBI-CS |
| HRV parameters | F57–F60 | Poincaré plot for | IBI-CS |
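
As a rough illustration of the HRV rows in the table above, the sketch below computes SDNN, RMSSD, pNN50 and the Poincaré descriptors SD1 and SD2 from a list of inter-beat intervals. It uses the common textbook definitions (see [7] and [36]) and rests on assumptions that this text does not confirm: the IBI series is given in milliseconds, sample (n − 1) statistics are used, and the Poincaré plot uses a lag of one beat. The function name `hrv_parameters` and the example values are hypothetical.

```python
import math
import statistics


def hrv_parameters(ibi_ms):
    """Time-domain and Poincaré HRV descriptors from inter-beat intervals (ms)."""
    if len(ibi_ms) < 3:
        raise ValueError("need at least three inter-beat intervals")

    # Successive differences between consecutive inter-beat intervals.
    diffs = [b - a for a, b in zip(ibi_ms, ibi_ms[1:])]

    # SDNN: standard deviation of the intervals.
    sdnn = statistics.stdev(ibi_ms)

    # RMSSD: root mean square of the successive differences.
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))

    # pNN50: percentage of successive differences larger than 50 ms.
    pnn50 = 100.0 * sum(abs(d) > 50 for d in diffs) / len(diffs)

    # Poincaré descriptors (lag 1, an assumption): SD1 is the spread across
    # the identity line, SD2 the spread along it.
    var_diff = statistics.variance(diffs)
    sd1 = math.sqrt(0.5 * var_diff)
    sd2 = math.sqrt(max(2.0 * sdnn ** 2 - 0.5 * var_diff, 0.0))

    return {"SDNN": sdnn, "RMSSD": rmssd, "pNN50": pnn50, "SD1": sd1, "SD2": sd2}


if __name__ == "__main__":
    # Made-up interval series, for illustration only.
    print(hrv_parameters([800, 810, 790, 860, 800]))
```

In a window-based setting, the same function could be applied to the IBI values falling inside each window, for either the cardiac (IBI-CS) or the respiratory (IBI-RS) series.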

| Bio-Radar Feature | Bio-Radar Description | BIOPAC Feature | BIOPAC Description |
|---|---|---|---|
| F28 | Median of RS | F25 | IQR of CS |
| F34 | Mean of inhale time | F55 | SD12 for |
| F2 | RS rate | F24 | Median of CS |
| F43 | PSD in 0.4–0.9 Hz band | F51 | DFA2 |
| F23 | Skewness of CS | F34 | Median of inhale time |
| F3 | AppEn of CS | F2 | RS rate |
| F42 | PSD in 0.3–0.4 Hz band | F45 | PSD ratio |
| F9 | Second derivative of RS-N | F27 | Skewness of RS |
| F27 | Skewness of RS | F41 | PSD in 0.2–0.3 Hz band |
| F25 | IQR of CS | F3 | AppEn of CS |
| F58 | SD2 for | F10 | Second derivative of IBI-CS |
| F51 | DFA2 | F4 | AppEn of RS |
| F37 | IQR of exhale time | F12 | Kurtosis of RS |
| F40 | PSD in 0.1–0.2 Hz band | F35 | Skewness of exhale time |
| F18 | Mean of IBI-CS | F54 | SD2 for |
| F45 | PSD ratio | F43 | PSD in 0.4–0.9 Hz band |
| F39 | PSD in 0–0.1 Hz band | F19 | Skewness of IBI-RS |
| F5 | First derivative of RS | F9 | Second derivative of RS-N |
| F55 | SD12 for | F48 | RMSSD for IBI-CS |
| F11 | Energy ratio | | |
| F12 | Kurtosis of RS | | |
| F15 | Skewness of IBI-CS | | |
| F47 | SDNN of IBI-RS | | |

| Accuracy (%) | | HN [] | | FH [] | | FN [] | |
|---|---|---|---|---|---|---|---|
| SVM | bR | | | | | | |
| SVM | bP | | | | | | |
| KNN | bR | | | | | | |
| KNN | bP | | | | | | |
| RFO | bR | | | | | | |
| RFO | bP | | | | | | |

| Accuracy [] | F1-Score [] | | | |
|---|---|---|---|---|
| SVM | bR | | | |
| SVM | bP | | | |
| KNN | bR | | | |
| KNN | bP | | | |
| RFO | bR | | | |
| RFO | bP | | | |

| Work References | [14] | [15] | [16] | [21] | [19] | Current Study |
|---|---|---|---|---|---|---|
| Setup | CW radar @ 2.4 GHz + RGB camera | FMCW radar @ 5.46–7.25 GHz | UWB radar @ 7.29–8.79 GHz | FMCW radar @ 76–81 GHz | CW radar @ 2.4 GHz + camera | CW radar @ 5.8 GHz |
| Vital Signs | RS and CS | RS and CS | RR | RS and CS | CS | RS and CS |
| No. of Observations | 2010 (1 min) of 18 Sub. | 400 (2 min) of 11 Sub. | 315 (5 min) of 35 Sub. | 1200 (1 min) of 20 Sub. | 512 (1 min) of 10 Sub. | 1626 (1 min) of 20 Sub. |
| Tested Classifiers | RFO | SVM | KNN, ETC, ADB, GBM, SV, HV, CNN, MLP | ER-CNN, 1D-CNN, Bi-LSTM | CNN | SVM, KNN, RFO |
| Emotions | H, N, F, S | H, S, A, P | H, F, D | H, N, S, A | H, S, N, F | H, N, F |
| No. of Features | 63 → 23 | 27 | 3 → 1 | - | - | 60 → 23 |
| Performance Evaluation (CV) | 10-fold | - | 10-fold | - | | Leave-one-out |
| Performance Evaluation (Test) | Hold-out with 70:30 ratio over the dataset | Hold-out with 11/12 and 1/12 for test | Hold-out with various ratios over the dataset | Hold-out with 80:10 ratio over the dataset and 10 ratio for validation | Hold-out with 80:10 ratio over the dataset and 10 ratio for validation | Hold-out with 70:30 ratio over 19/20 and 1/20 for test |
| Results (CV) | - | - | - | | | |
| Results (Test accuracy) | with 80:20 ratio | 90.8 | with 70:30 ratio | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gouveia, C.; Soares, B.; Albuquerque, D.; Barros, F.; Soares, S.C.; Pinho, P.; Vieira, J.; Brás, S. Remote Emotion Recognition Using Continuous-Wave Bio-Radar System. Sensors 2024, 24, 1420. https://doi.org/10.3390/s24051420