Online Learning State Evaluation Method Based on Face Detection and Head Pose Estimation

Abstract

1. Introduction

- (1) We propose GA-Face, a face detection network based on the ghost module and parameter-free attention. The ghost module [7] reduces the parameters and computation that the feature extraction network spends on generating redundant features, and the parameter-free attention module SimAM [8] focuses the network on important features.

- (2)

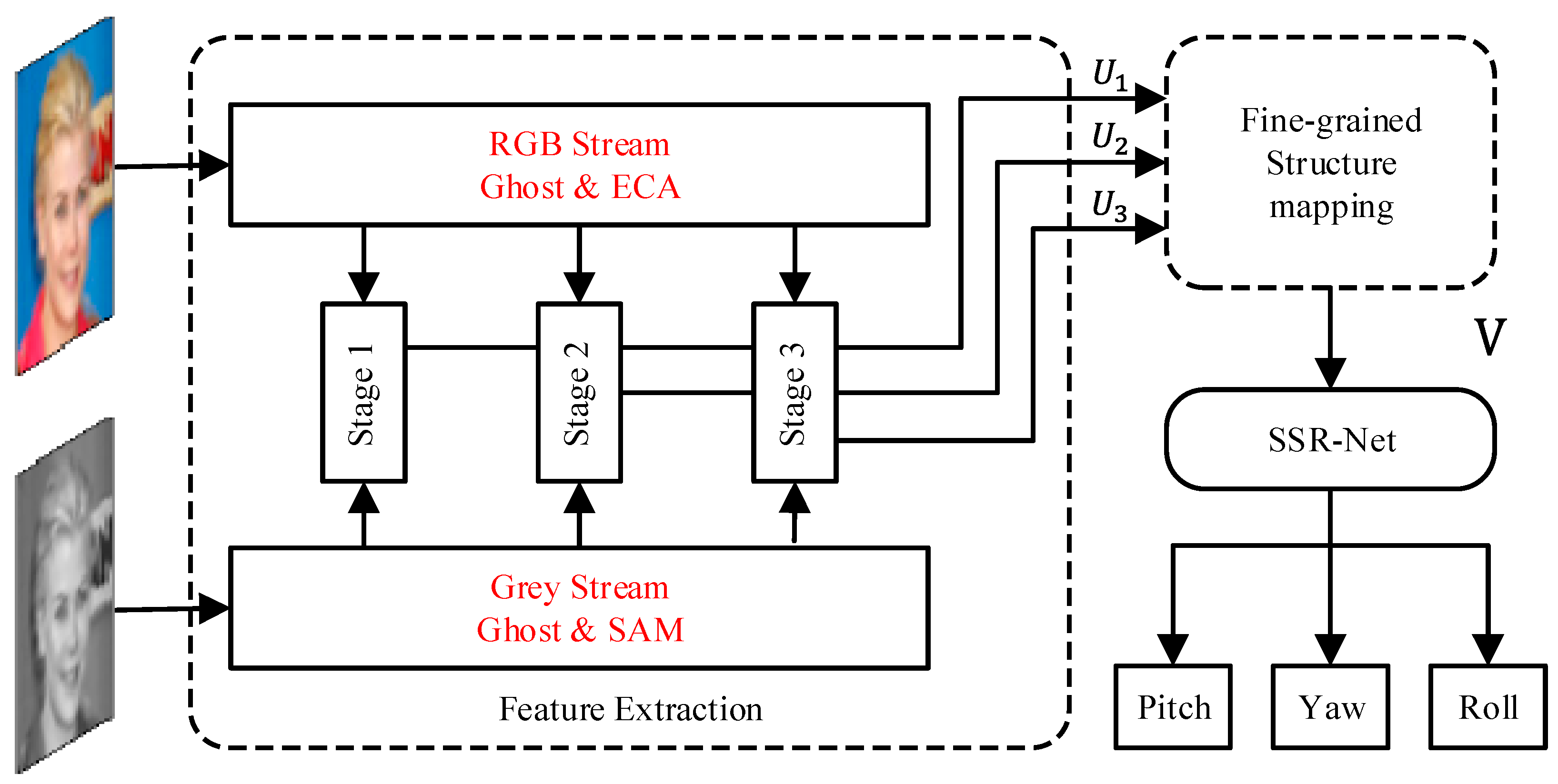

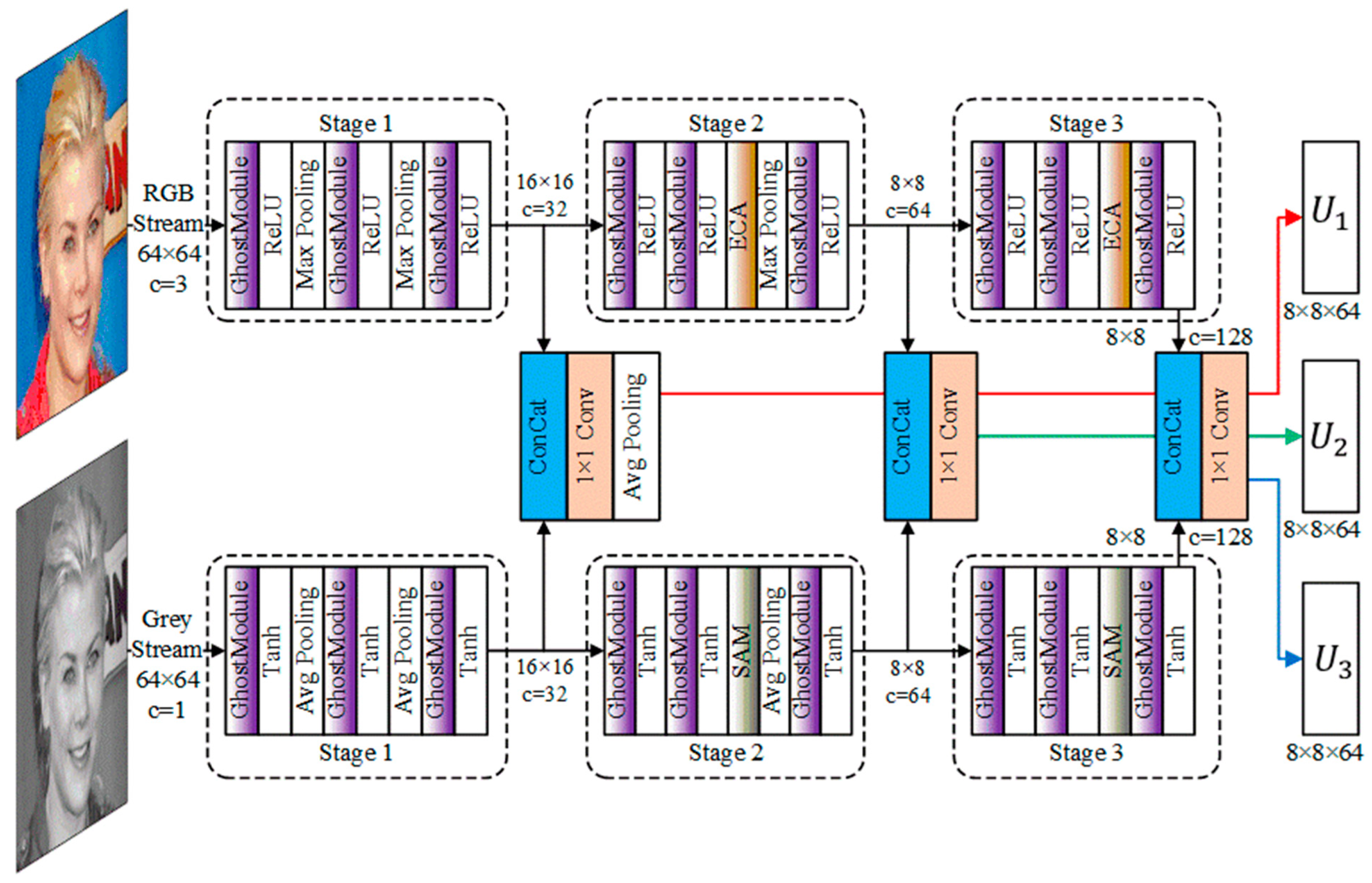

- We propose a head pose estimation network, DB-Net, based on dual-branch attention. DB-Net has two feature extraction branches: color and grayscale. DB-Net uses the ghost module to enhance its ability to extract deep semantic information and reduce its parameter count. DB-Net uses convolutional fusion methods to prevent the generation of useless features.

- (3) We achieve real-time detection of students’ head position and pose on mobile devices using GA-Face and DB-Net, and we score student attention with a learning state evaluation algorithm.
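To make the idea of parameter-free attention concrete, the following is a minimal sketch of the SimAM weighting as published in [8], written in plain Python for a single feature-map channel. The function name `simam_channel` and the default `e_lambda` value are illustrative assumptions; where and how GA-Face inserts SimAM is not specified here, so this is not the authors' implementation.

```python
import math


def simam_channel(x, e_lambda=1e-4):
    """Apply SimAM-style attention to one channel given as a 2D list of floats.

    Assumption: follows the published SimAM formulation; e_lambda is a
    hypothetical default chosen for illustration.
    """
    values = [v for row in x for v in row]
    n = len(values) - 1  # number of "other" positions in the channel
    if n < 1:
        raise ValueError("channel needs at least two values")
    mu = sum(values) / len(values)
    # Squared deviation of every position from the channel mean.
    sq_dev = [[(v - mu) ** 2 for v in row] for row in x]
    var = sum(d for row in sq_dev for d in row) / n
    out = []
    for row, dev_row in zip(x, sq_dev):
        out_row = []
        for v, d in zip(row, dev_row):
            # Inverse energy: positions that stand out from the mean score higher.
            inv_energy = d / (4.0 * (var + e_lambda)) + 0.5
            gate = 1.0 / (1.0 + math.exp(-inv_energy))  # sigmoid
            out_row.append(v * gate)
        out.append(out_row)
    return out


# A 3 x 3 channel with one distinctive activation in the center.
feature = [[1.0, 1.0, 1.0], [1.0, 5.0, 1.0], [1.0, 1.0, 1.0]]
weighted = simam_channel(feature)
center_gate = weighted[1][1] / feature[1][1]
corner_gate = weighted[0][0] / feature[0][0]
print(f"center gate {center_gate:.3f}, corner gate {corner_gate:.3f}")
```

The weighting adds no learnable parameters: each gate is computed from the channel statistics alone, which is why the module does not change the parameter count of the network.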

2. Related Work

2.1. Facial Detection

2.2. Head Pose Estimation

3. Approach

3.1. Face Detection Network: GA-Face

3.1.1. Architecture of GA-Face

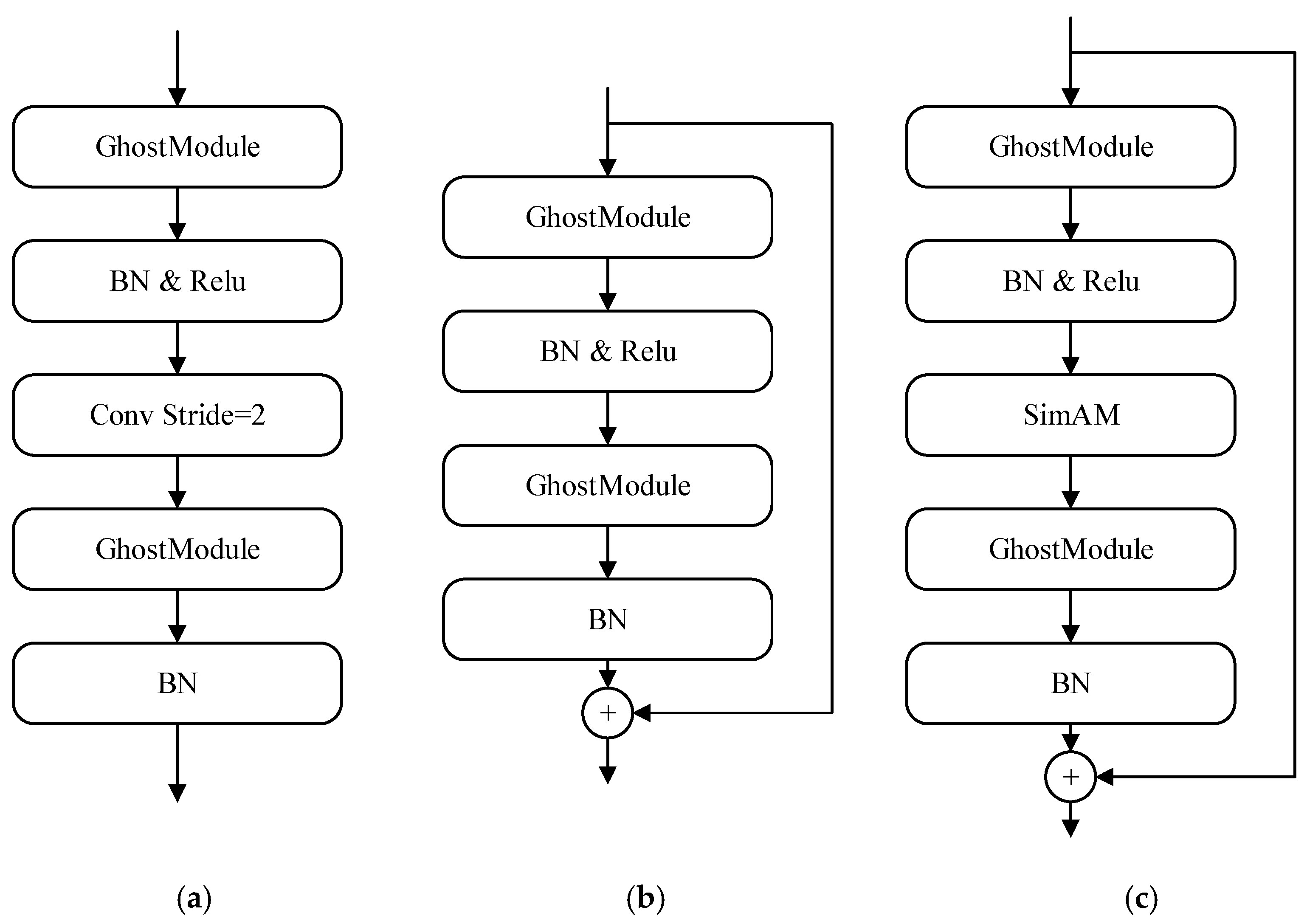

3.1.2. Feature Extraction Module for Facial Detection Network

3.1.3. The Loss Function of Face Detection Networks

3.2. Head Pose Estimation Network: DB-Net

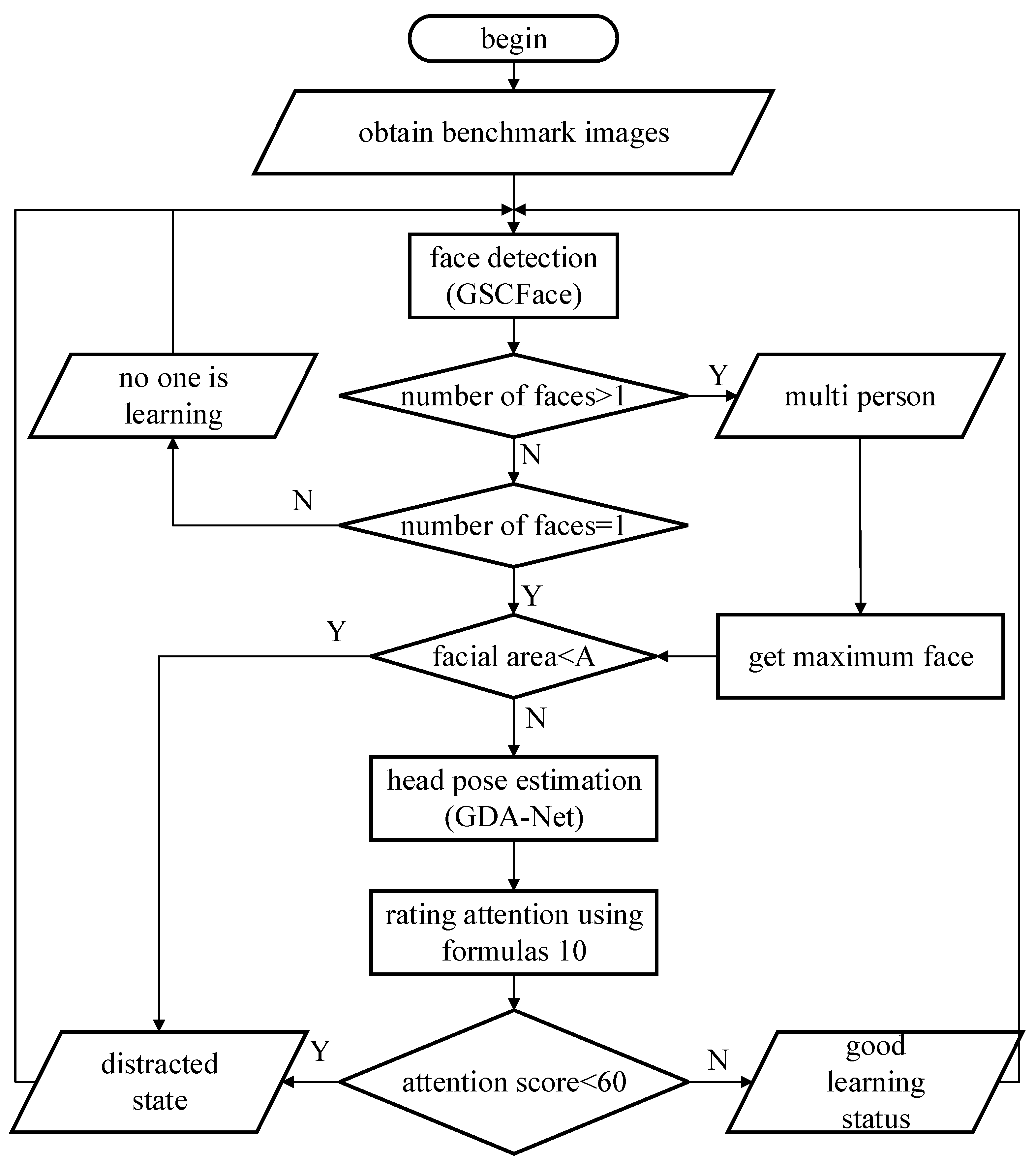

3.3. Learning State Evaluation Algorithm

4. Experiment

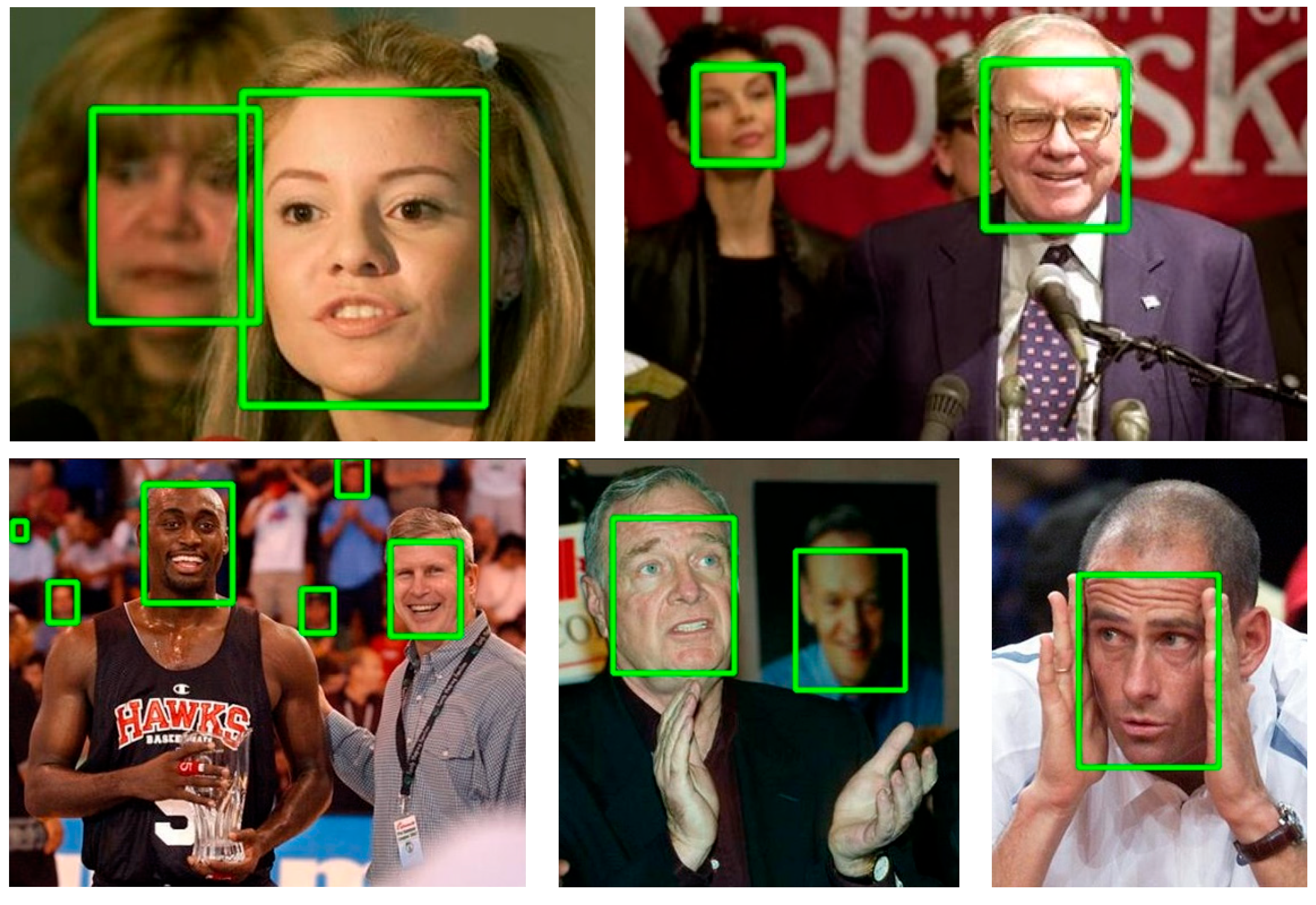

4.1. Effectiveness Evaluation of Face Detection Network GA-Face

- (1) RetinaFace is more accurate than CenterFace on both datasets, but because it relies on approximately 100,000 preset anchor boxes it runs at only 16 FPS, which makes real-time face detection on mobile devices unattainable.

- (2) On the FDDB dataset, the average precision of GA-Face is 1.3 percentage points higher than that of CenterFace and 0.6 percentage points higher than that of RetinaFace. On WiderFace, GA-Face is on par with CenterFace at the Easy and Medium difficulty levels and slightly lower at the Hard level. This is because the ghost module sacrifices some basic features, which reduces the ability to detect extremely small and blurry faces; this does not affect the network’s use in online learning scenarios. GA-Face has 55% fewer parameters than CenterFace. Its frame rate is about twice that of RetinaFace and 32% higher than that of CenterFace. Deployed on mobile devices, the network reaches 24 FPS, achieving real-time detection.

- (3) ASFD comes in multiple versions: ASFD-D0 is designed for mobile devices, and ASFD-D6 is the most comprehensive version. ASFD-D6 has the highest accuracy, but also the most parameters and the lowest inference speed. ASFD-D0, the mobile-specific version, is the fastest, but its accuracy is lower than that of GA-Face.

- (4) GA-Face (Ghost only) is less accurate than the full GA-Face on both datasets. GA-Face (SimAM only) is about as accurate as GA-Face but slower. SimAM slightly reduces the speed of the network while improving its accuracy. Combining the two, GA-Face achieves a good balance between speed and accuracy.
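The relative figures quoted in the observations above can be recomputed from the face detection comparison table. The sketch below does this for three of the methods; the dictionary layout and the helper name `relative_change` are illustrative assumptions, while the numbers are copied from the table (FDDB AP, CPU FPS, parameters in millions).

```python
# Values copied from the face detection comparison table.
results = {
    "RetinaFace": {"fddb_ap": 95.2, "fps_cpu": 16, "params_m": 0.73},
    "CenterFace": {"fddb_ap": 94.5, "fps_cpu": 25, "params_m": 1.80},
    "GA-Face": {"fddb_ap": 95.8, "fps_cpu": 33, "params_m": 0.81},
}


def relative_change(new, old):
    """Signed change of `new` relative to `old`, in percent."""
    return (new - old) / old * 100.0


ga = results["GA-Face"]
center = results["CenterFace"]
retina = results["RetinaFace"]

# Accuracy gaps are differences of AP values, i.e. percentage points.
ap_gain_vs_center = round(ga["fddb_ap"] - center["fddb_ap"], 1)
ap_gain_vs_retina = round(ga["fddb_ap"] - retina["fddb_ap"], 1)

# Parameter and speed changes are relative percentages.
param_change = relative_change(ga["params_m"], center["params_m"])
fps_change = relative_change(ga["fps_cpu"], center["fps_cpu"])
fps_ratio_vs_retina = ga["fps_cpu"] / retina["fps_cpu"]

print(f"AP gain vs CenterFace: {ap_gain_vs_center} points")
print(f"AP gain vs RetinaFace: {ap_gain_vs_retina} points")
print(f"Parameter change vs CenterFace: {param_change:.0f}%")
print(f"FPS change vs CenterFace: {fps_change:.0f}%")
print(f"FPS ratio vs RetinaFace: {fps_ratio_vs_retina:.2f}x")
```

Note that the accuracy comparisons are absolute differences between AP values, whereas the parameter and speed comparisons are relative changes.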

4.2. Effectiveness Evaluation of Head Pose Estimation Network DB-Net

- (1) DB-Net (ECA&SAM only) is slightly more accurate than FSA-Net, indicating that both ECA and SAM enhance the network’s feature extraction ability. However, ECA and SAM also slightly increase the number of network parameters, which slightly reduces the running speed.

- (2) DB-Net (Ghost only) is also slightly more accurate than FSA-Net, indicating that the ghost module, which deepens the network and enlarges the receptive field, has a positive effect on accuracy. At the same time, the ghost module reduces the number of parameters and the amount of computation, so the network runs faster.

- (3) On both datasets, the MAE of DB-Net is 0.36 lower than that of FSA-Net; the number of network parameters decreases by 27%, and the running speed increases by 16%. On mobile phones the network reaches 126 FPS, meeting the requirements of real-time operation.
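As a check on the figures above, the sketch below recomputes the MAE as the mean of the pitch, yaw and roll errors and derives the changes relative to FSA-Net. The numbers are copied from the head pose estimation tables; the helper names `mae` and `relative_change` are illustrative assumptions.

```python
# Per-angle errors (pitch, yaw, roll) copied from the head pose accuracy table.
fsa_net = {"AFLW2000": (6.22, 4.34, 4.65), "BIWI": (6.46, 4.83, 3.56)}
db_net = {"AFLW2000": (5.83, 4.09, 4.22), "BIWI": (5.64, 4.83, 3.31)}


def mae(angles):
    """Mean of the pitch, yaw and roll errors."""
    return sum(angles) / len(angles)


def relative_change(new, old):
    """Signed change of `new` relative to `old`, in percent."""
    return (new - old) / old * 100.0


# MAE reduction of DB-Net relative to FSA-Net on each dataset.
mae_drop = {
    name: round(mae(fsa_net[name]) - mae(db_net[name]), 2) for name in fsa_net
}

# Parameters (millions) and CPU FPS copied from the speed table.
param_change = relative_change(0.21, 0.29)
fps_change = relative_change(336, 288)

print(mae_drop)
print(f"Parameter change: {param_change:.1f}%")
print(f"FPS change: {fps_change:.1f}%")
```

The MAE reduction comes out at 0.36 on both datasets. The parameter and speed changes evaluate to roughly -27.6% and +16.7%, so the 27% and 16% quoted in the text correspond to truncating rather than rounding these values.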

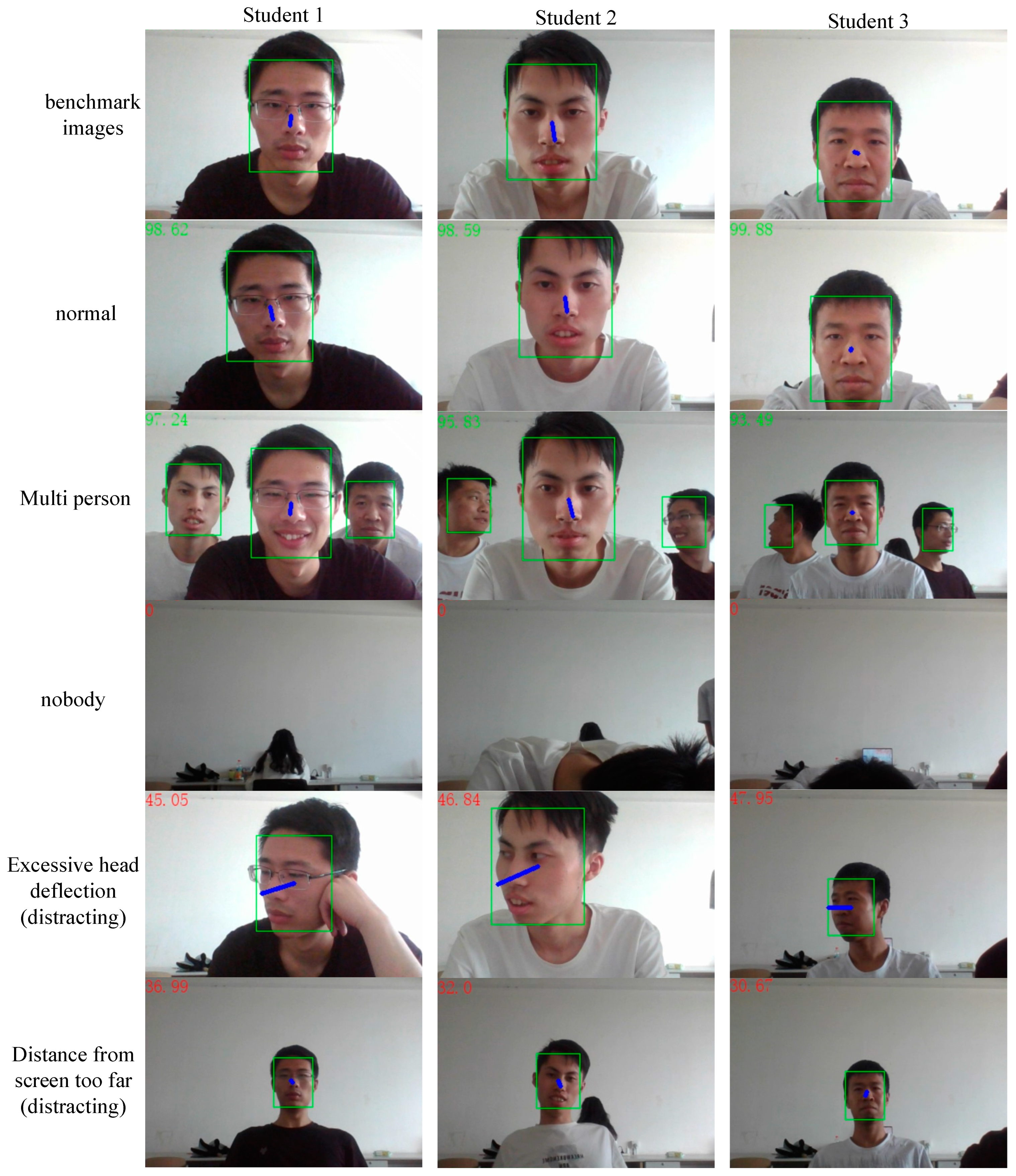

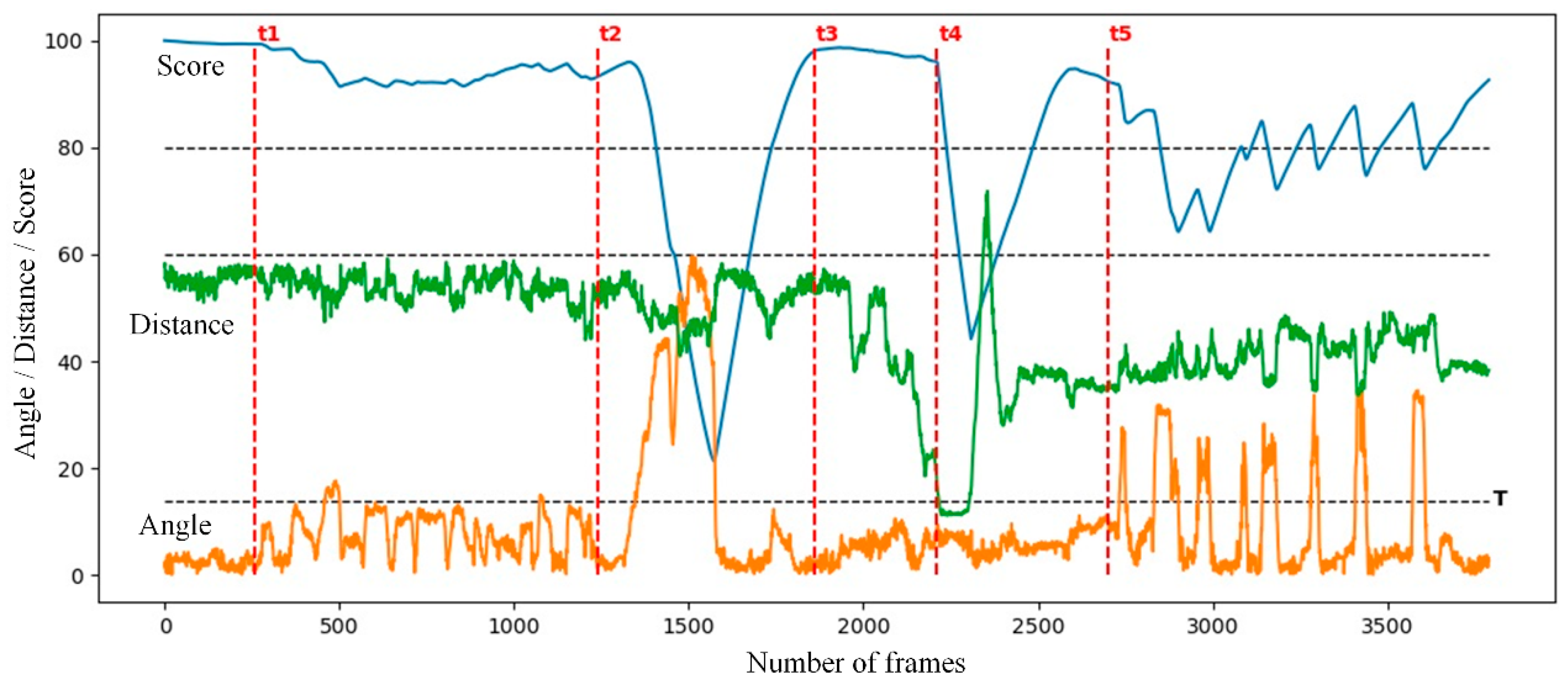

4.3. Evaluation of the Effectiveness of Learning State Evaluation Algorithms

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. de Avila, U.E.R.; Braga, I.C.; de França Campos, F.R.; Nafital, A.C. Attention detection system based on the variability of heart rate. J. Sens. Technol. 2019, 09, 54–70.
2. Zhang, S.; Yan, Z.; Sapkota, S.; Zhao, S.; Ooi, W.T. Moment-to-moment continuous attention fluctuation monitoring through consumer-grade EEG device. Sensors 2021, 21, 3419.
3. Sharma, P.; Zhang, Z.; Conroy, T.B.; Hui, X.; Kan, E.C. Attention Detection by Heartbeat and Respiratory Features from Radio-Frequency Sensor. Sensors 2022, 22, 8047.
4. Palinko, O.; Rea, F.; Sandini, G.; Sciutti, A. Robot reading human gaze: Why eye tracking is better than head tracking for human-robot collaboration. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 5048–5054.
5. Veronese, A.; Racca, M.; Pieters, R.S.; Kyrki, V. Probabilistic Mapping of human Visual attention from head Pose estimation. Front. Robot. AI 2017, 4, 53.
6. Li, J.; Ngai, G.; Leong, H.V.; Chan, S.C. Multimodal human attention detection for reading from facial expression, eye gaze, and mouse dynamics. ACM SIGAPP Appl. Comput. Rev. 2016, 16, 37–49.
7. Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. Ghostnet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1580–1589.
8. Yang, L.; Zhang, R.-Y.; Li, L.; Xie, X. Simam: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Virtual Only, 18–24 July 2021; pp. 11863–11874.
9. Xiong, S.; Li, B.; Zhu, S. DCGNN: A single-stage 3D object detection network based on density clustering and graph neural network. Complex Intell. Syst. 2023, 9, 3399–3408.
10. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.
11. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.
12. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.
13. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.
14. He, Y.; Xu, D.; Wu, L.; Jian, M.; Xiang, S.; Pan, C. Lffd: A light and fast face detector for edge devices. arXiv 2019, arXiv:1904.10633.
15. Zhang, F.; Fan, X.; Ai, G.; Song, J.; Qin, Y.; Wu, J. Accurate Face Detection for High Performance. arXiv 2019, arXiv:1905.01585.
16. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.
17. Zhu, Y.; Cai, H.; Zhang, S.; Wang, C.; Xiong, Y. Tinaface: Strong but simple baseline for face detection. arXiv 2020, arXiv:2011.13183.
18. Law, H.; Deng, J. Cornernet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.
19. Zhou, X.; Wang, D.; Krähenbühl, P. Objects as points. arXiv 2019, arXiv:1904.07850.
20. Xu, Y.; Yan, W.; Yang, G.; Luo, J.; Li, T.; He, J. CenterFace: Joint face detection and alignment using face as point. Sci. Program. 2020, 2020, 7845384.
21. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.
22. Lepetit, V.; Moreno-Noguer, F.; Fua, P. EPnP: An accurate O(n) solution to the PnP problem. Int. J. Comput. Vis. 2009, 81, 155–166.
23. Xin, M.; Mo, S.; Lin, Y. Eva-gcn: Head pose estimation based on graph convolutional networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 1462–1471.
24. Zhang, H.; Wang, M.; Liu, Y.; Yuan, Y. FDN: Feature decoupling network for head pose estimation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12789–12796.
25. Zhou, Y.; Gregson, J. Whenet: Real-time fine-grained estimation for wide range head pose. arXiv 2020, arXiv:2005.10353.
26. Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; pp. 6105–6114.
27. Ruiz, N.; Chong, E.; Rehg, J.M. Fine-grained head pose estimation without keypoints. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2074–2083.
28. Yang, T.-Y.; Chen, Y.-T.; Lin, Y.-Y.; Chuang, Y.-Y. Fsa-net: Learning fine-grained structure aggregation for head pose estimation from a single image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 1087–1096.
29. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11534–11542.
30. Zhu, X.; Cheng, D.; Zhang, Z.; Lin, S.; Dai, J. An empirical study of spatial attention mechanisms in deep networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6688–6697.
31. Jain, V.; Learned-Miller, E. Fddb: A benchmark for face detection in unconstrained settings. UMass Amherst Tech. Rep. 2010, 2, 1–11.
32. Yang, S.; Luo, P.; Loy, C.-C.; Tang, X. Wider face: A face detection benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 5525–5533.
33. Deng, J.; Guo, J.; Zhou, Y.; Yu, J.; Kotsia, I.; Zafeiriou, S. Retinaface: Single-stage dense face localisation in the wild. arXiv 2019, arXiv:1905.00641.
34. Li, J.; Zhang, B.; Wang, Y.; Tai, Y.; Zhang, Z.; Wang, C.; Li, J.; Huang, X.; Xia, Y. ASFD: Automatic and scalable face detector. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021; pp. 2139–2147.
35. Zhu, X.; Lei, Z.; Liu, X.; Shi, H.; Li, S.Z. Face alignment across large poses: A 3d solution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 146–155.
36. Fanelli, G.; Dantone, M.; Gall, J.; Fossati, A.; Van Gool, L. Random forests for real time 3d face analysis. Int. J. Comput. Vis. 2013, 101, 437–458.

| Block | Input Resolution | Convolutional Kernel Size | Input Channels | Intermediate Channels | Output Channels | Composition Structure |
|---|---|---|---|---|---|---|
| block_1 | 224 × 224 | 3 | 3 | / | 16 | Conv |
| | 112 × 112 | 3 | 16 | 48 | 24 | (a) |
| | 56 × 56 | 3 | 24 | 72 | 24 | (b) |
| block_2 | 56 × 56 | 3 | 24 | 72 | 40 | (a) |
| | 28 × 28 | 3 | 40 | 120 | 40 | (c) |
| block_3 | 28 × 28 | 3 | 40 | 240 | 80 | (a) |
| | 14 × 14 | 3 | 80 | 200 | 80 | (c) |
| | 14 × 14 | 3 | 80 | 184 | 80 | (b) |
| | 14 × 14 | 3 | 80 | 184 | 80 | (b) |
| | 14 × 14 | 3 | 80 | 480 | 112 | (b) |
| | 14 × 14 | 3 | 112 | 672 | 112 | (c) |
| block_4 | 14 × 14 | 3 | 112 | 672 | 160 | (a) |
| | 7 × 7 | 3 | 160 | 960 | 160 | (c) |
| | 7 × 7 | 3 | 160 | 960 | 160 | (b) |
| | 7 × 7 | 3 | 160 | 960 | 160 | (b) |
| | 7 × 7 | 3 | 160 | 960 | 160 | (c) |
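To illustrate why expanding channels with a ghost module is cheaper than with an ordinary convolution, the sketch below compares weight counts for two of the input-to-intermediate channel expansions listed in the table above. It follows the parameter accounting of the GhostNet paper [7]; the 1 × 1 primary convolution, the 3 × 3 depthwise cheap operation and the ratio of 2 are GhostNet defaults assumed here for illustration, not settings confirmed for GA-Face, and biases and normalization layers are ignored.

```python
import math


def conv_params(c_in, c_out, k=1):
    """Weights of an ordinary k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def ghost_params(c_in, c_out, k=1, d=3, ratio=2):
    """Weights of a ghost module producing c_out channels (bias ignored).

    Assumed defaults: k x k primary convolution with k = 1, d x d depthwise
    cheap operation with d = 3, and ratio = 2.
    """
    intrinsic = math.ceil(c_out / ratio)      # channels from the primary convolution
    primary = c_in * intrinsic * k * k
    cheap = intrinsic * (ratio - 1) * d * d   # one depthwise d x d filter per ghost channel
    return primary + cheap


# Channel expansions taken from the table: 16 -> 48 and 160 -> 960.
for c_in, c_mid in [(16, 48), (160, 960)]:
    plain = conv_params(c_in, c_mid)
    ghost = ghost_params(c_in, c_mid)
    print(f"{c_in} -> {c_mid}: conv {plain}, ghost {ghost}, ratio {ghost / plain:.2f}")
```

Under these assumptions the saving approaches one half as the input channel count grows, because the fixed cost of the depthwise filters becomes small relative to the primary convolution.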

| Method | FDDB (AP%) | WiderFace Easy (AP%) | WiderFace Medium (AP%) | WiderFace Hard (AP%) | WiderFace (mAP%) | FPS (CPU) | FPS (Phone) | Parameters (×10⁶) |
|---|---|---|---|---|---|---|---|---|
| RetinaFace | 95.2 | 90.7 | 90.1 | 87.4 | 89.4 | 16 | - | 0.73 |
| LFFD | 93.2 | 85.8 | 82.1 | 74.1 | 80.7 | 21 | - | 2.15 |
| ASFD-D6 | 98.1 | 95.8 | 94.7 | 86.0 | 92.2 | 5 | - | 8.61 |
| ASFD-D0 | 92.4 | 90.1 | 87.5 | 74.4 | 84.0 | 36 | 28 | 0.68 |
| CenterFace | 94.5 | 88.1 | 87.5 | 82.5 | 86.0 | 25 | 18 | 1.80 |
| GA-Face (Ghost only) | 92.7 | 85.2 | 84.4 | 78.7 | 82.8 | 35 | 25 | 0.81 |
| GA-Face (SimAM only) | 94.9 | 88.6 | 87.9 | 81.9 | 86.1 | 24 | 18 | 1.80 |
| GA-Face | 95.8 | 88.3 | 87.3 | 81.0 | 85.5 | 33 | 24 | 0.81 |

| Method | AFLW2000 Pitch | AFLW2000 Yaw | AFLW2000 Roll | AFLW2000 MAE | BIWI Pitch | BIWI Yaw | BIWI Roll | BIWI MAE |
|---|---|---|---|---|---|---|---|---|
| EVA-GCN | 5.97 | 4.38 | 4.23 | 4.86 | 5.92 | 4.53 | 3.37 | 4.60 |
| HopeNet | 6.56 | 6.47 | 5.44 | 6.16 | 6.60 | 4.81 | 3.27 | 4.90 |
| WHENet | 5.76 | 4.35 | 4.29 | 4.80 | 5.44 | 4.38 | 3.30 | 4.37 |
| FSA-Net | 6.22 | 4.34 | 4.65 | 5.07 | 6.46 | 4.83 | 3.56 | 4.95 |
| DB-Net (ECA&SAM only) | 5.79 | 4.16 | 4.52 | 4.82 | 6.18 | 4.95 | 3.60 | 4.91 |
| DB-Net (Ghost only) | 5.89 | 4.22 | 4.47 | 4.86 | 5.97 | 5.02 | 3.45 | 4.81 |
| DB-Net | 5.83 | 4.09 | 4.22 | 4.71 | 5.64 | 4.83 | 3.31 | 4.59 |

| Method | FPS (CPU) | FPS (Cell Phone) | Parameters (×10⁶) |
|---|---|---|---|
| EVA-GCN | 56 | - | 6.22 |
| HopeNet | 22 | - | 23.9 |
| WHENet | 119 | 52 | 4.40 |
| FSA-Net | 288 | 98 | 0.29 |
| DB-Net (ECA&SAM only) | 265 | 96 | 0.31 |
| DB-Net (Ghost only) | 356 | 134 | 0.19 |
| DB-Net | 336 | 126 | 0.21 |

| Method | Inference Time (640 × 480) | FPS (640 × 480) | Inference Time (320 × 240) | FPS (320 × 240) |
|---|---|---|---|---|
| Face detection (GA-Face) | 41 ms | 24 | 29 ms | 34 |
| Head pose estimation (DB-Net) | 8 ms | 126 | 8 ms | 126 |
| Learning state evaluation | 3 ms | 384 | 3 ms | 384 |
| Total | 52 ms | 19 | 40 ms | 25 |
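The Total row of the table above is consistent with the three stages running one after another on each frame, so that their latencies add and the end-to-end frame rate is 1000 divided by the summed latency in milliseconds. The sketch below reproduces that arithmetic; sequential execution is an assumption inferred from the table, and the dictionary layout and function name are illustrative.

```python
# Per-stage latencies in milliseconds, copied from the table above.
stage_latency_ms = {
    "640x480": {"GA-Face": 41, "DB-Net": 8, "evaluation": 3},
    "320x240": {"GA-Face": 29, "DB-Net": 8, "evaluation": 3},
}


def fps_from_latency(latency_ms):
    """Frames per second achievable when one frame takes latency_ms."""
    return 1000.0 / latency_ms


pipeline = {}
for resolution, stages in stage_latency_ms.items():
    total_ms = sum(stages.values())  # stages assumed to run sequentially
    pipeline[resolution] = (total_ms, int(fps_from_latency(total_ms)))

for resolution, (total_ms, fps) in pipeline.items():
    print(f"{resolution}: {total_ms} ms per frame, {fps} FPS")
```

Face detection dominates the budget at both resolutions, which is why lowering the input resolution from 640 × 480 to 320 × 240 raises the end-to-end rate from 19 to 25 FPS.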

| Method | Inference Time | FPS |
|---|---|---|
| Ours | 37 ms | 25 |
| Baseline | 54 ms | 18 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, B.; Liu, P. Online Learning State Evaluation Method Based on Face Detection and Head Pose Estimation. Sensors 2024, 24, 1365. https://doi.org/10.3390/s24051365
