Characterization of Partial Discharges in Dielectric Oils Using High-Resolution CMOS Image Sensor and Convolutional Neural Networks

Abstract

1. Introduction

2. Theoretical and Experimental Background

2.1. Experimental Design of the Test Device

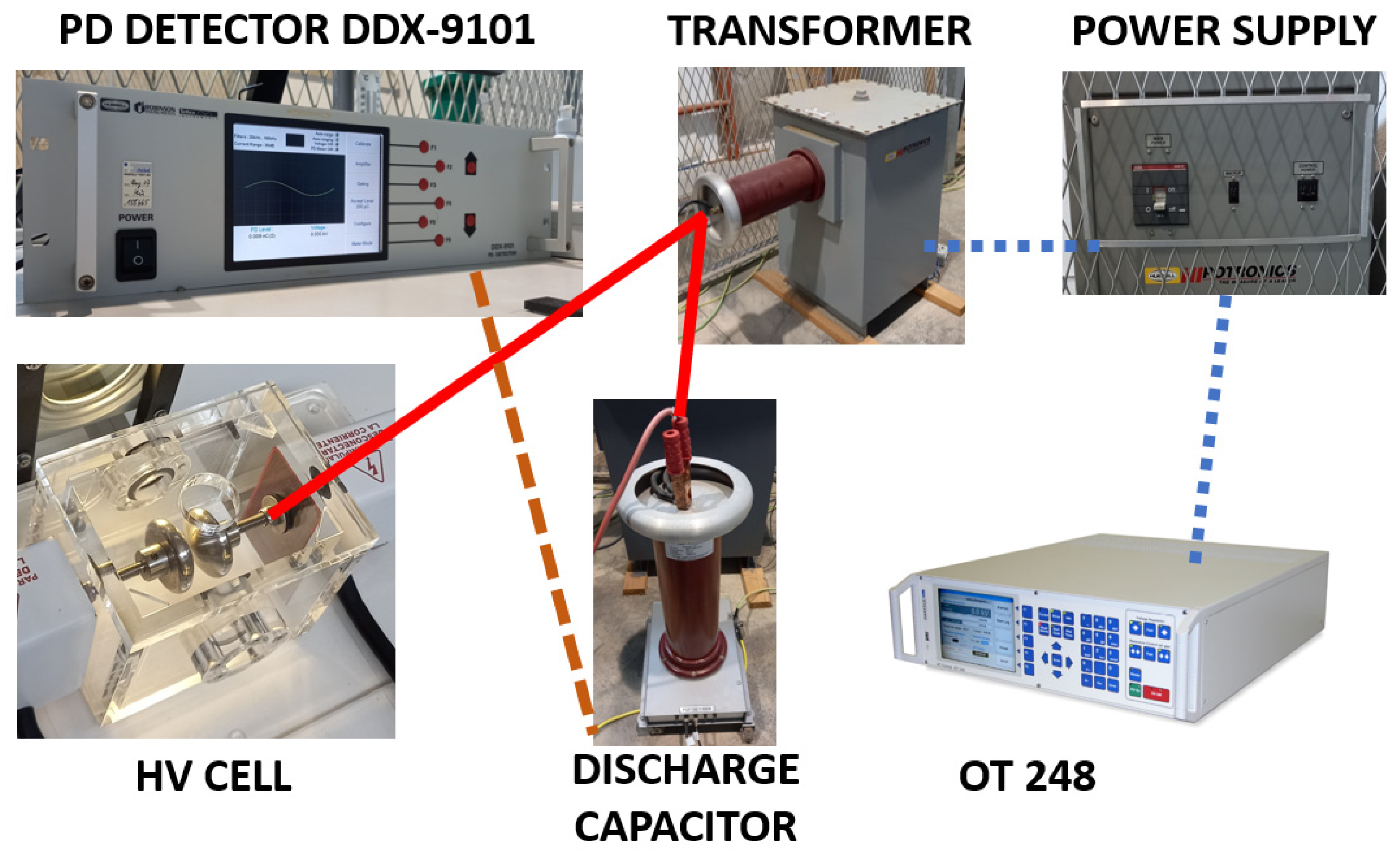

2.1.1. HV Laboratory Description

2.1.2. Raspberry Pi HQ and V2 Cameras

2.1.3. Description of the Raspberry Pi 4 Computer

2.1.4. Description of the Camera Control Software

2.2. Optical Characterization of the Measurement System

2.2.1. CFL and Laser Light Spectrum Analysis

2.2.2. Optimal Position of the Polarizers

2.2.3. PD Detection Using Kerr Effect and HQ Camera

3. PD in Gaseous Bubbles Dissolved in Oil

3.1. Validation of the Permanent Regime of the Electric Field of a Bubble

3.2. Numerical and Experimental Results with Two-Bubble Rupture

4. CNN Design, Training, and Validation

4.1. Introduction

4.2. Image Collection and Classification into Classes

- Convolutional: each filter slides across the image in successive positions, and the convolution at each position produces one value of a feature map.

- Pooling: this layer reduces the size of the feature maps, which lowers the computational load of the subsequent layers.

- Fully connected: this layer takes the features produced by the last convolutional layer, flattened into a vector, and makes the prediction.
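The three layer types can be illustrated with a deliberately tiny, pure-Python sketch on a single-channel 6 × 6 image. All names here are hypothetical and chosen only for illustration:

- the hand-picked 3 × 3 vertical-edge kernel;
- the dense weights;
- the function names.

In a real Keras model, the kernels and weights are learned, the inputs have several channels, and the operations run on tensors. As in most deep learning libraries, the "convolution" below does not flip the kernel (strictly, it is a cross-correlation).

```python
import math


def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    fmap = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch under the kernel at position (i, j).
            s = 0.0
            for a in range(kh):
                for b in range(kw):
                    s += image[i + a][j + b] * kernel[a][b]
            row.append(s)
        fmap.append(row)
    return fmap


def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling: keep the maximum of each size x size window."""
    return [
        [max(fmap[i + a][j + b] for a in range(size) for b in range(size))
         for j in range(0, len(fmap[0]) - size + 1, size)]
        for i in range(0, len(fmap) - size + 1, size)
    ]


def flatten(fmap):
    """Concatenate the rows of a 2D feature map into one vector."""
    return [v for row in fmap for v in row]


def dense_softmax(x, weights, biases):
    """Fully connected layer (one weight row per output unit) followed by softmax."""
    z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    m = max(z)  # subtract the maximum for numerical stability
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]


# 6 x 6 image: dark on the left, bright in the two rightmost columns.
image = [[0, 0, 0, 0, 1, 1] for _ in range(6)]
edge_kernel = [[-1, 0, 1]] * 3  # responds to dark-to-bright vertical edges

fmap = conv2d_valid(image, edge_kernel)   # 4 x 4 feature map
pooled = max_pool2d(fmap)                 # 2 x 2 after pooling
features = flatten(pooled)                # vector of length 4
probs = dense_softmax(features, [[1, 0, 1, 0], [0, 1, 0, 1]], [0.0, 0.0])
print(pooled, probs)
```

The pooled map is `[[0.0, 3.0], [0.0, 3.0]]`: the edge response survives pooling while the map shrinks from 16 to 4 values.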

- Class 0—PD (with PD): This category is illustrated in Figure 22, which shows eight images randomly selected from a set of 500. These images show different instances of PD, each verified experimentally by electrical measurements through the discharge capacitor.

- Class 1—NO_PD (without PD): Figure 23 presents eight random images out of a total of 500 that belong to this category. These images represent moments in which the PD detector DDX-9101 does not detect any PD.

- Class 2—ARC (total rupture of the electric arc): Figure 24 shows images that correspond to the moment of total rupture of the electric arc.

- Class 3—BREAK (post-arc break with a large number of bubbles): Finally, Figure 25 shows a random selection of eight images out of a total of 500 belonging to this class.

4.3. Image Transformation

- “rescale = 1/255” is a simple normalization, where the value of each pixel in the image is divided by 255. This is done to ensure that the pixel values are in the range [0, 1], which facilitates processing and improves convergence during training.

- “rotation_range = 30” allows us to randomly rotate images within a range of ±30 degrees. This helps the model to be more robust to different object orientations.

- “width_shift_range = 0.25” and “height_shift_range = 0.25” shift images horizontally and vertically by up to ±25% of the original image size. This simulates variations in object position and helps the model tolerate greater variability in object location.

- “shear_range = 15” applies a random shear transformation with an angle of up to ±15 degrees. This is useful for working with images taken from different angles.

- “zoom_range = [0.5, 1.5]” applies a random zoom factor between 0.5 and 1.5. This simulates variations in the distance from the camera to the object and improves the model’s ability to recognize objects at different sizes.

- “validation_split = 0.2” sets the proportion of data used for validation. In this case, 20% of the data were set aside as a validation set, and the remaining 80% were used for training.

- “/content/drive/MyDrive/Colab_Notebooks/dataset” is the path to the directory containing the training data. In this case, the data are organized into subfolders, where each subfolder represents an object class; in our case “PD,” “NO_PD,” “ARC” and “BREAK.”

- “target_size = (224, 224)” sets the size to which all images will be resized. The images were resized to a size of 224 × 224 pixels, which is a size commonly used in many deep learning applications.

- “batch_size = 32” defines the batch size for training. During training, the images were grouped into batches of 32 images each to optimize the training process.

- “shuffle = True” reshuffles the order of the images at each training epoch, so the composition of the batches changes from epoch to epoch. This helps ensure that the model does not learn the order of the images.

- “classes = ["PD", "NO_PD", "ARC", "BREAK"]” sets the list of class names, which must match the names of the subfolders. This is essential so that the data generator assigns the correct label to each image based on the folder structure.
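The bookkeeping implied by these settings can be sketched in plain Python. The sketch covers three things:

- pixel rescaling;
- the mapping from the `classes` list to label indices and one-hot vectors, which is the label format that categorical cross-entropy expects;
- the size of an 80/20 split in batches of 32.

The per-class image counts are hypothetical. Section 4.2 reports 500 images for three of the classes, and the ARC count is assumed here. The sketch also assumes that the split is applied within each class folder. Helper names are illustrative.

```python
import math

CLASSES = ["PD", "NO_PD", "ARC", "BREAK"]
# Label index follows the order of the `classes` list: PD -> 0, ..., BREAK -> 3.
CLASS_INDICES = {name: i for i, name in enumerate(CLASSES)}


def rescale(pixels):
    """Map 8-bit pixel values 0..255 to the range [0, 1] (rescale = 1/255)."""
    return [p / 255.0 for p in pixels]


def one_hot(label):
    """One-hot label vector, e.g. "ARC" -> [0, 0, 1, 0]."""
    vec = [0] * len(CLASSES)
    vec[CLASS_INDICES[label]] = 1
    return vec


def split_counts(n_images, validation_split=0.2):
    """Return (training, validation) image counts for one class folder."""
    n_val = math.floor(n_images * validation_split)
    return n_images - n_val, n_val


# Hypothetical counts; the ARC value is an assumption, not taken from the paper.
counts = {"PD": 500, "NO_PD": 500, "ARC": 500, "BREAK": 500}
n_train = sum(split_counts(n)[0] for n in counts.values())
n_val = sum(split_counts(n)[1] for n in counts.values())
batch_size = 32
print(n_train, n_val, math.ceil(n_train / batch_size), math.ceil(n_val / batch_size))
```

Under these assumptions, the training set has 1600 images (50 batches per epoch) and the validation set has 400 images (13 batches, the last one partial).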

4.4. Creation of the CNN Model

- “tf.keras.layers.Conv2D(32, (3,3), input_shape = (224,224,3), activation = "relu")” is a 2D convolution layer that applies 32 filters, also known as kernels, to the input image. Each filter is 3 × 3 pixels in size.

- “input_shape = (224,224,3)” specifies the size of the input image: 224 × 224 pixels with three RGB color channels. The “relu” (rectified linear unit) activation function, f(x) = max(0, x), is applied after the convolution. This function is common in CNNs and introduces nonlinearity into the network.

- “tf.keras.layers.MaxPooling2D(2,2)” is a pooling layer that reduces the spatial dimensions of the output of the convolution layer. It uses a 2 × 2 window and retains only the maximum value within each window, which halves the height and width of the feature maps. This reduces the number of parameters and operations in the subsequent layers, which helps reduce the risk of overfitting and improves computational efficiency.

- “tf.keras.layers.Conv2D(64, (3,3), activation = "relu")” is similar to the first convolution layer, but it uses 64 filters instead of 32.

- “tf.keras.layers.Dropout(0.5)” is a dropout layer that, during training, randomly sets 50% of its input units to zero at each step. Dropout is a regularization technique that helps prevent overfitting by reducing interdependence between neurons.

- “tf.keras.layers.Flatten()” converts the output of the previous layer into a one-dimensional vector.
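The effect of these layers on tensor shapes and trainable parameters can be checked by hand. The sketch below assumes the Keras defaults: stride 1 and `padding="valid"` for `Conv2D`, and a stride equal to the window size for `MaxPooling2D(2,2)`. It propagates a 224 × 224 × 3 input through the layers listed above.

The final `Dense(4)` output layer is an assumption made for this sketch: four classes and categorical cross-entropy imply such a layer, but it is not among the listed layers. The complete model may also contain further layers, so the totals apply only to this sequence. The helper names are hypothetical.

```python
def conv2d(shape, filters, k):
    """Conv2D with Keras defaults (stride 1, padding='valid'); returns (shape, params)."""
    h, w, c = shape
    return (h - k + 1, w - k + 1, filters), k * k * c * filters + filters  # weights + biases


def max_pool(shape, pool=2):
    """MaxPooling2D(pool, pool): window = stride = pool; no trainable parameters."""
    h, w, c = shape
    return (h // pool, w // pool, c), 0


def dropout(shape):
    """Dropout changes neither the shape nor the parameter count."""
    return shape, 0


def flatten(shape):
    h, w, c = shape
    return (h * w * c,), 0


def dense(shape, units):
    (n_in,) = shape
    return (units,), n_in * units + units


def summarize(layers, input_shape=(224, 224, 3)):
    """Propagate the input shape through `layers` and total the trainable parameters."""
    shape, total, rows = input_shape, 0, []
    for name, layer in layers:
        shape, params = layer(shape)
        total += params
        rows.append((name, shape, params))
    return rows, total


# Layers listed above, plus an ASSUMED Dense(4) softmax output for the four classes.
listed = [
    ("Conv2D(32, 3x3)", lambda s: conv2d(s, 32, 3)),
    ("MaxPooling2D(2,2)", max_pool),
    ("Conv2D(64, 3x3)", lambda s: conv2d(s, 64, 3)),
    ("Dropout(0.5)", dropout),
    ("Flatten()", flatten),
    ("Dense(4) [assumed]", lambda s: dense(s, 4)),
]
# Same network without the pooling layer, to show its effect on the parameter count.
no_pooling = [layer for layer in listed if layer[0] != "MaxPooling2D(2,2)"]

rows, total = summarize(listed)
for name, shape, params in rows:
    print(f"{name:20s} {str(shape):18s} {params:>10,d}")
print("total:", total, "| without pooling:", summarize(no_pooling)[1])
```

Under these assumptions, the listed sequence has about 3.06 million trainable parameters, almost all of them in the dense layer after `Flatten()`. Without the pooling layer, the total grows to about 12.4 million. This illustrates the point made above about max pooling.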

4.5. Compilation Stage

- “optimizer = "Adam"”: Adam is an optimization algorithm that adapts the step size of each weight during training, using running averages of its gradients and of their squares.

- “loss = "categorical_crossentropy"” is the loss function minimized during training. Since this is a multiclass classification problem, categorical cross-entropy is a common choice. It measures the difference between the class probabilities predicted by the model and the true labels of the data.

- “metrics = "accuracy"” defines the metric used to evaluate the performance of the model during training and evaluation. Accuracy measures the proportion of correctly classified samples.
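The quantities named in the compilation stage can be written out in a few lines of plain Python. The sketch below does two things:

- It computes categorical cross-entropy and accuracy for a hypothetical batch of two one-hot-labeled samples.
- It applies one step of the textbook Adam update (Kingma and Ba) with Keras' default hyperparameters: learning rate 0.001, beta_1 = 0.9, beta_2 = 0.999 and epsilon = 1e-7.

Keras' internal implementation may differ in details, such as exactly where epsilon enters. The function names are illustrative.

```python
import math


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean over samples of -sum_k y_k * log(p_k), with p clipped to avoid log(0)."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        total -= sum(tk * math.log(min(max(pk, eps), 1.0 - eps)) for tk, pk in zip(t, p))
    return total / len(y_true)


def _argmax(v):
    return max(range(len(v)), key=v.__getitem__)


def accuracy(y_true, y_pred):
    """Fraction of samples whose most probable class equals the true class."""
    return sum(_argmax(t) == _argmax(p) for t, p in zip(y_true, y_pred)) / len(y_true)


def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-7):
    """One Adam update for a scalar parameter at step t (t starts at 1)."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias corrections
    v_hat = v / (1 - beta2 ** t)
    return theta - lr * m_hat / (math.sqrt(v_hat) + eps), m, v


y_true = [[1, 0, 0, 0], [0, 1, 0, 0]]                   # PD, NO_PD
y_pred = [[0.7, 0.1, 0.1, 0.1], [0.6, 0.2, 0.1, 0.1]]   # second sample misclassified
print(categorical_crossentropy(y_true, y_pred), accuracy(y_true, y_pred))

# The first Adam step moves the weight by about lr, whatever the gradient scale.
for g in (10.0, 0.01):
    print(g, adam_step(0.0, g, 0.0, 0.0, t=1)[0])
```

On the first step, both a gradient of 10 and a gradient of 0.01 move the weight by almost exactly the learning rate. This is what "adapting the step size" means in practice: each update is normalized by the running magnitude of that weight's gradients.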

4.6. CNN Model Training

- “training_data” is the training set used to fit the model. For each batch, it supplies a pair of tensors: one with the input features (the images) and one with the corresponding labels.

- “epochs” specifies the number of training cycles. One epoch is complete when the entire training dataset has been used once to update the model weights. This work used “epochs = 50.”

- “batch_size = 32” defines the number of samples used in each training step. In each epoch, the training data are divided into batches of this size, and each batch is used to compute the gradient and update the model weights. Larger batches mean fewer weight updates per epoch and can speed up training, but they require more memory.
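How `epochs` and `batch_size` interact can be seen in a toy mini-batch gradient-descent loop. This is not the authors' CNN: it fits a hypothetical straight line y = 3x + 1 to 200 synthetic points. It does, however, use the same values, epochs = 50 and batch_size = 32, and it reshuffles the samples every epoch, as shuffle = True does.

With 200 samples, each epoch performs ceil(200 / 32) = 7 weight updates, the last one on a smaller batch of 8 samples, so 50 epochs give 350 updates. The learning rate of 0.3 is an arbitrary choice for this toy problem.

```python
import random


def train_minibatch(xs, ys, epochs=50, batch_size=32, lr=0.3, seed=0):
    """Fit y ~ w*x + b by mini-batch gradient descent on the mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    idx = list(range(len(xs)))
    updates = 0
    for _ in range(epochs):
        rng.shuffle(idx)  # new sample order every epoch
        for start in range(0, len(idx), batch_size):
            batch = idx[start:start + batch_size]  # the last batch may be smaller
            n = len(batch)
            errs = [(w * xs[i] + b) - ys[i] for i in batch]
            # Gradient of the batch MSE with respect to w and b.
            grad_w = 2.0 / n * sum(e * xs[i] for e, i in zip(errs, batch))
            grad_b = 2.0 / n * sum(errs)
            w -= lr * grad_w
            b -= lr * grad_b
            updates += 1  # one weight update per batch
    return w, b, updates


xs = [i / 199 for i in range(200)]
ys = [3.0 * x + 1.0 for x in xs]
w, b, updates = train_minibatch(xs, ys)
print(round(w, 3), round(b, 3), updates)
```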

4.7. Convergence for Three Classes

4.7.1. The CNN Method with Knowledge Transfer

4.7.2. CNN Method without Dropout

4.7.3. CNN Method with Dropout

4.8. Convergence for Four Classes

4.9. CNN Test

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | V2 | HQ |
|---|---|---|
| Sensor | CMOS image sensor Sony IMX219 | CMOS image sensor Sony IMX477 |
| Resolution | 3280 × 2464 pixels (8 megapixels) | 4056 × 3040 pixels (12.3 megapixels) |
| Sensor image area | 3.68 × 2.76 mm (4.6 mm diagonal) | 6.287 × 4.712 mm (7.9 mm diagonal) |
| Pixel size | 1.12 × 1.12 µm | 1.55 × 1.55 µm |
| Horizontal field of view | 62.2 degrees | Depends on lens |
| Vertical field of view | 48.8 degrees | Depends on lens |
| IR cut filter | Eliminated | Integrated |
| Back focus length of lens | 3.04 mm | 2.6–11.8 mm (M12 mount); 12.5–22.4 mm (CS mount) |
| Substrate material | Silicon | Silicon |
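The geometric entries of this table are mutually consistent, and a short calculation shows this:

- The sensor image area is the resolution multiplied by the pixel size.
- For the V2 module, the pinhole-camera relation FOV = 2·atan(d / 2f) reproduces the tabulated fields of view.

This sketch treats the 3.04 mm listed for the V2 lens as its focal length, which is an assumption. The results agree with the table to within rounding: about 0.01 mm for dimensions and 0.1 degree for angles.

```python
import math


def sensor_size_mm(width_px, height_px, pixel_um):
    """Active area = resolution x pixel pitch; returns (width, height, diagonal) in mm."""
    w = width_px * pixel_um / 1000.0
    h = height_px * pixel_um / 1000.0
    return w, h, math.hypot(w, h)


def field_of_view_deg(size_mm, focal_mm):
    """Pinhole-camera angle of view for one sensor dimension and a focal length."""
    return math.degrees(2.0 * math.atan(size_mm / (2.0 * focal_mm)))


v2_w, v2_h, v2_diag = sensor_size_mm(3280, 2464, 1.12)
hq_w, hq_h, hq_diag = sensor_size_mm(4056, 3040, 1.55)
hfov = field_of_view_deg(v2_w, 3.04)  # focal length assumed to be 3.04 mm
vfov = field_of_view_deg(v2_h, 3.04)
print(f"V2: {v2_w:.2f} x {v2_h:.2f} mm, diagonal {v2_diag:.1f} mm, HFOV {hfov:.1f}, VFOV {vfov:.1f}")
print(f"HQ: {hq_w:.3f} x {hq_h:.3f} mm, diagonal {hq_diag:.1f} mm")
```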

| Sample Number | fps (R) | fps (C) | fps (L) | T [°C] | Vrup [kV] |
|---|---|---|---|---|---|
| 1 | 86.0 | 84.0 | 83.0 | 17.2 | 23.8 |
| 2 | 86.4 | 85.1 | 84.0 | 17.2 | 21.9 |
| 3 | 87.0 | 84.6 | 86.1 | 16.8 | 20.5 |
| 4 | 87.0 | 84.7 | 86.1 | 16.8 | 24.0 |
| 5 | 87.0 | 84.7 | 86.3 | 16.9 | 24.0 |
| 6 | 87.0 | 84.7 | 86.2 | 16.9 | 34.3 |
| 7 | 87.0 | 84.6 | 86.2 | 17.1 | 22.1 |
| 8 | 87.0 | 84.6 | 86.0 | 17.2 | 24.7 |

| Sample Number | fps (R) | fps (C) | fps (L) | T [°C] | Vrup [kV] |
|---|---|---|---|---|---|
| 1 | 87.1 | 84.7 | 86.0 | 17.3 | 23.9 |
| 2 | 87.0 | 84.7 | 86.2 | 17.3 | 22.1 |
| 3 | 87.0 | * | 86.3 | 17.5 | 29.1 |
| 4 | 86.8 | 84.6 | 86.3 | 17.5 | 35.9 |
| 5 | 87.0 | 84.7 | 86.3 | 17.8 | 21.4 |
| 6 | 87.0 | 84.7 | 86.2 | 17.7 | 21.4 |
| 7 | 87.1 | 84.7 | 86.2 | 17.6 | 31.0 |
| 8 | 87.0 | 84.6 | 86.1 | 17.6 | 32.8 |

| Sample Number | fps (R) | fps (C) | fps (L) | T [°C] | Vrup [kV] |
|---|---|---|---|---|---|
| 1 | 87.0 | 84.7 | 86.2 | 17.1 | 24.4 |
| 2 | 87.0 | 84.7 | 86.2 | 17.1 | 24.4 |
| 3 | 87.0 | 84.7 | 86.3 | 17.1 | 23.5 |
| 4 | 86.9 | 84.7 | 86.2 | 17.2 | 23.3 |
| 5 | 87.0 | 85.0 | 86.2 | 17.3 | 23.1 |
| 6 | 87.0 | 84.7 | 86.1 | 17.3 | 29.1 |
| 7 | 87.0 | 84.7 | 86.1 | 17.3 | 30.0 |
| 8 | 87.1 | 84.7 | 86.1 | 17.3 | 28.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Monzón-Verona, J.M.; González-Domínguez, P.; García-Alonso, S. Characterization of Partial Discharges in Dielectric Oils Using High-Resolution CMOS Image Sensor and Convolutional Neural Networks. Sensors 2024, 24, 1317. https://doi.org/10.3390/s24041317
