Customized Tracking Algorithm for Robust Cattle Detection and Tracking in Occlusion Environments

Abstract

1. Introduction

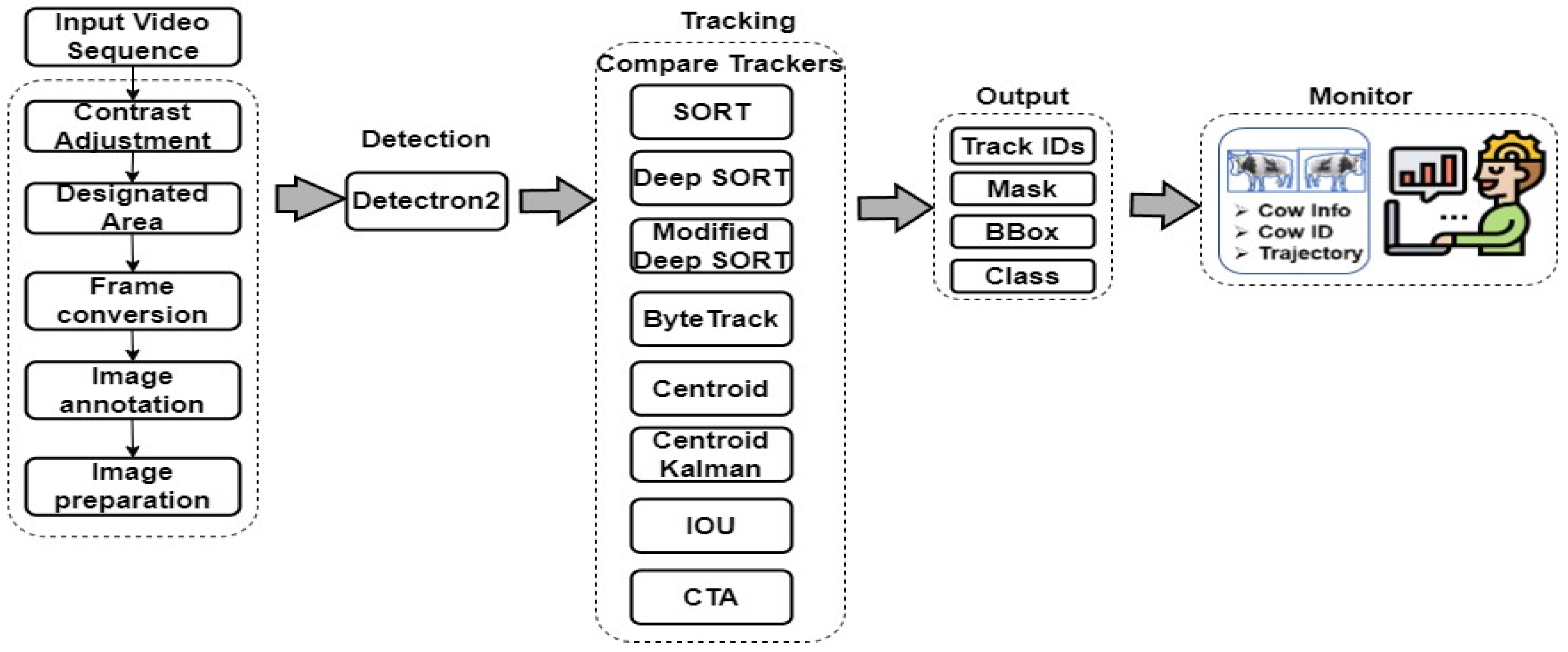

- (1)

- Development of a general framework for cattle detection and segmentation: the study proposes a framework that utilizes tailored modifications embedded in Detectron2, leveraging scientific principles such as mask region-based convolutional neural networks (Mask R-CNNs) to enhance the accuracy and efficiency of cattle detection and segmentation in diverse agricultural settings.

- (2)

- Integration of different trackers with Detectron2 detection: the study combines the detection capabilities of Detectron2 with various trackers such as Simple Online Real-time Tracking (SORT), Deep SORT, Modified Deep SORT, ByteTrack, Centroid, Centroid with Kalman filter, IOU, and our CTA.

- (3)

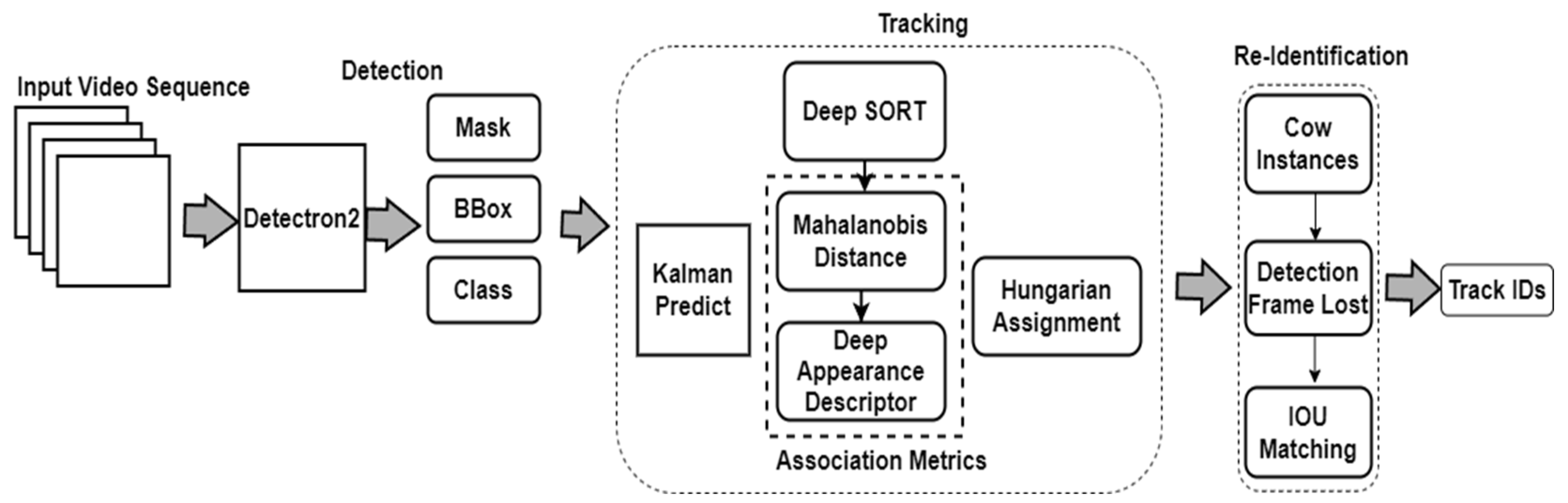

- Modification of the Deep SORT tracking algorithm: the study modifies the Deep SORT tracking algorithm by combining it with a modified re-identification process, leading to improved performance in cattle tracking.

- (4)

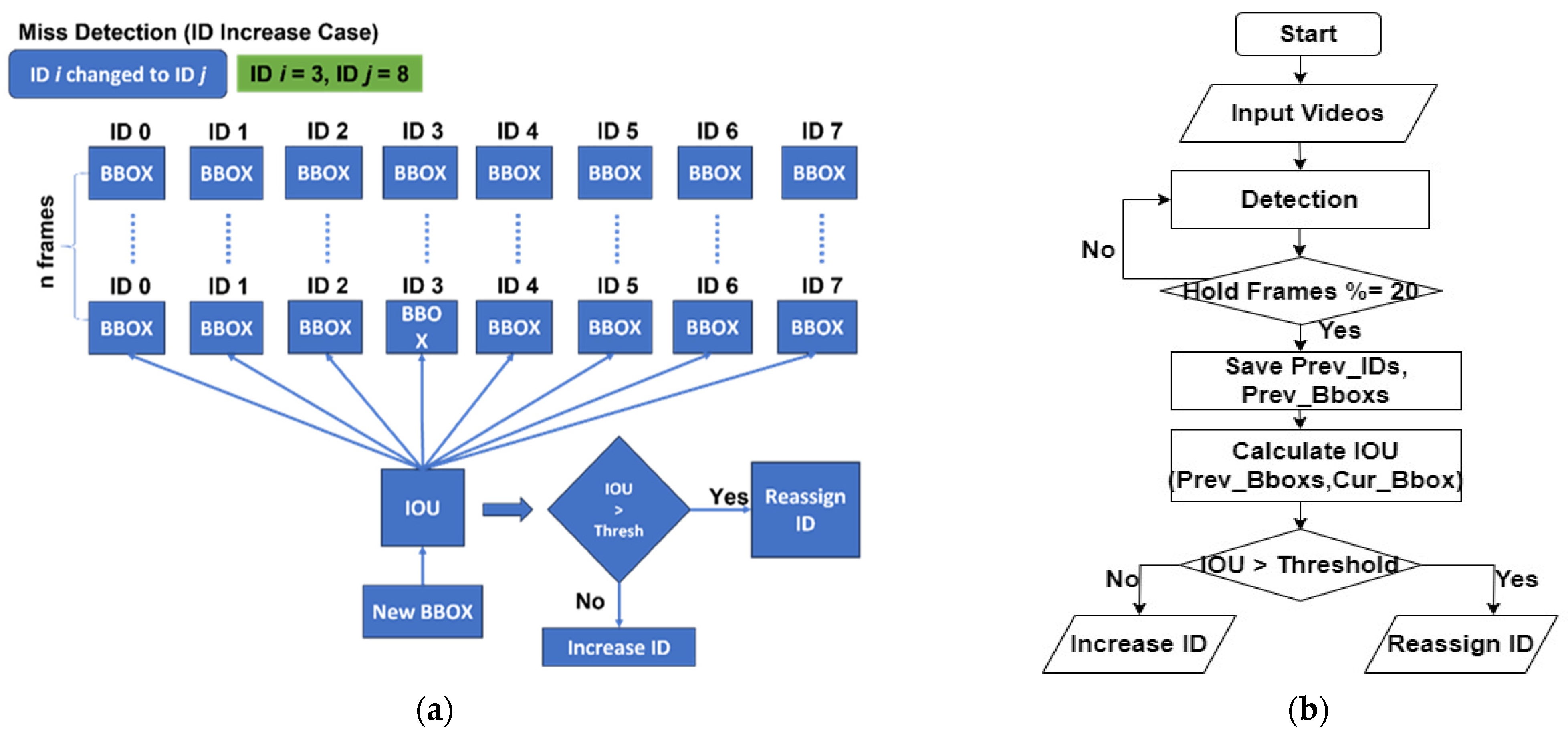

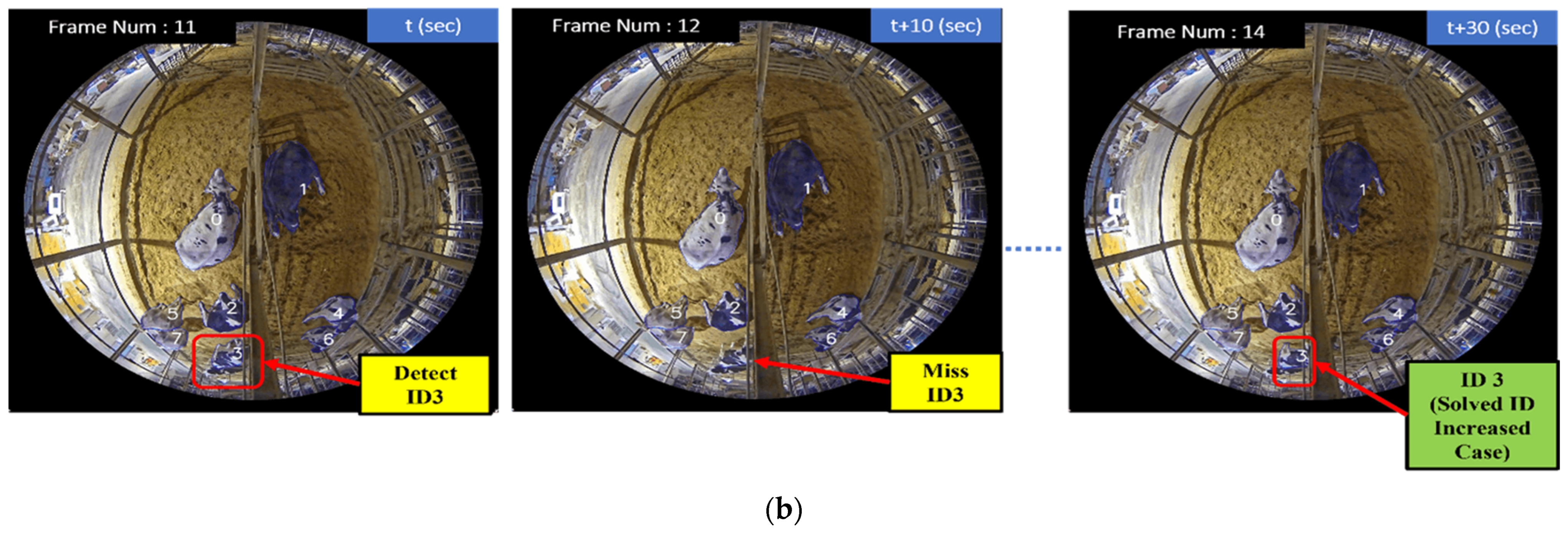

- Implementation of CTA: The study leverages the IOU bounding box (BB) calculation and finds the missing track-IDs for the re-identification process. Our CTA offers accurate and reliable cattle tracking results and minimizes the occurrence of identification switch cases caused by different occlusions. It can also control duplicated track-IDs and handle cases without track-ID increments for miss detection to test long-time videos. Our CTA has the remarkable ability to retain the original track-ID even in cases of track-ID switch occurrences.

- (5)

- In addition to the above contributions, the research focuses on developing a real-time system for the automatic detection and tracking of cattle. The research addresses the challenge of accurately detecting foreground objects, particularly cattle, amidst a mixture of other objects, such as people and various background elements. Furthermore, the study tackles the complexity of track-ID switch cases in large-size calving pen environments, specifically addressing the occlusion challenges encountered when tracking cattle.
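The idea behind contribution (4), IOU matching combined with reuse of missing track-IDs, can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `iou` and `assign_ids`, the fixed ID range `1..max_ids`, the greedy matching order, and the `iou_threshold` value of 0.3 are all assumptions made for illustration.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def assign_ids(
    tracks: Dict[int, Box],
    detections: List[Box],
    max_ids: int,
    iou_threshold: float = 0.3,  # hypothetical value, not from the paper
) -> Dict[int, Box]:
    """Hypothetical per-frame update: IOU matching plus missing-ID reuse."""
    # Score every (track, detection) pair and visit the best overlaps first.
    pairs = sorted(
        (
            (iou(box, det), tid, di)
            for tid, box in tracks.items()
            for di, det in enumerate(detections)
        ),
        reverse=True,
    )
    updated: Dict[int, Box] = {}
    used = set()
    for score, tid, di in pairs:
        if score < iou_threshold:
            break
        if tid in updated or di in used:
            continue
        updated[tid] = detections[di]
        used.add(di)

    # Re-identification (assumed rule): an unmatched detection takes the
    # lowest ID that is missing in this frame instead of a brand-new ID.
    missing = [tid for tid in range(1, max_ids + 1) if tid not in updated]
    for di, det in enumerate(detections):
        if di in used:
            continue
        if not missing:
            break  # surplus detections are dropped, so no duplicate IDs
        updated[missing.pop(0)] = det

    # Missed detection: keep the last known box so the ID is not lost.
    for tid, box in tracks.items():
        updated.setdefault(tid, box)
    return updated


previous = {1: (0, 0, 10, 10), 2: (20, 20, 30, 30)}
# Cow 2 reappears far from its last box; it still gets ID 2, not ID 3.
current = assign_ids(previous, [(1, 1, 11, 11), (50, 50, 60, 60)], max_ids=2)
```

Bounding the IDs by the known number of animals in the pen is what keeps the ID counter from growing in this sketch; a tracker without that bound would have to decide when a lost track is really gone.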

2. Research Background and Related Works

3. Materials and Methods

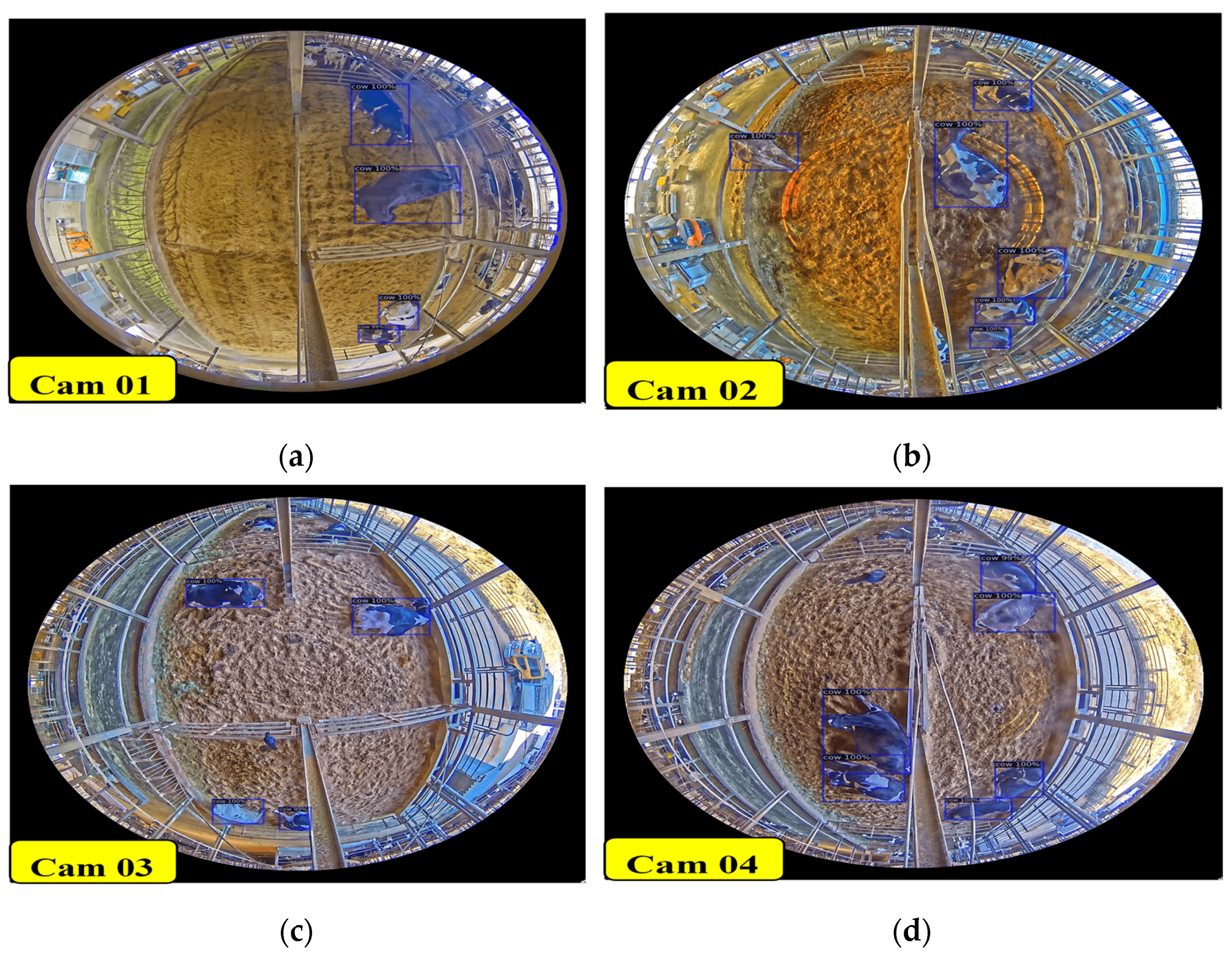

3.1. Data Preparation

3.2. Data Preprocessing

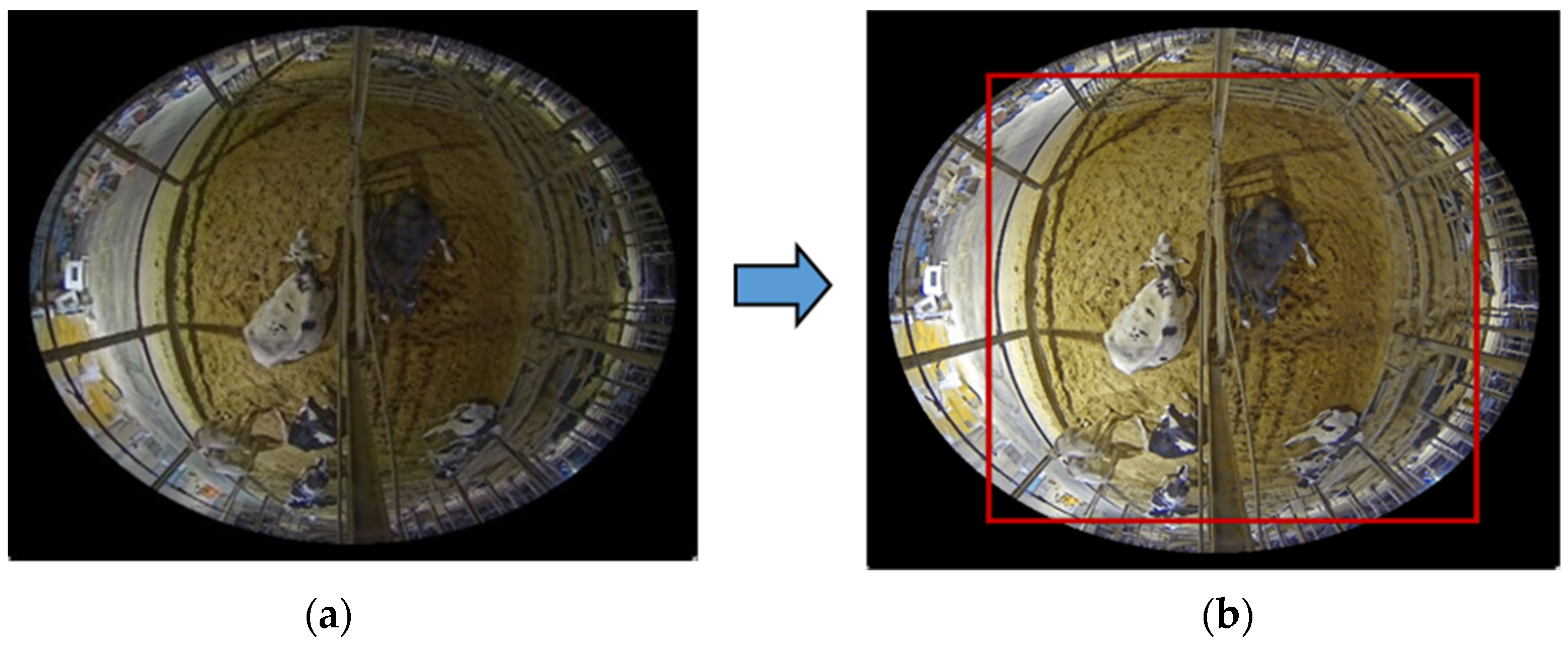

Contrast Adjustment and Designated Area

3.3. Cattle Detection

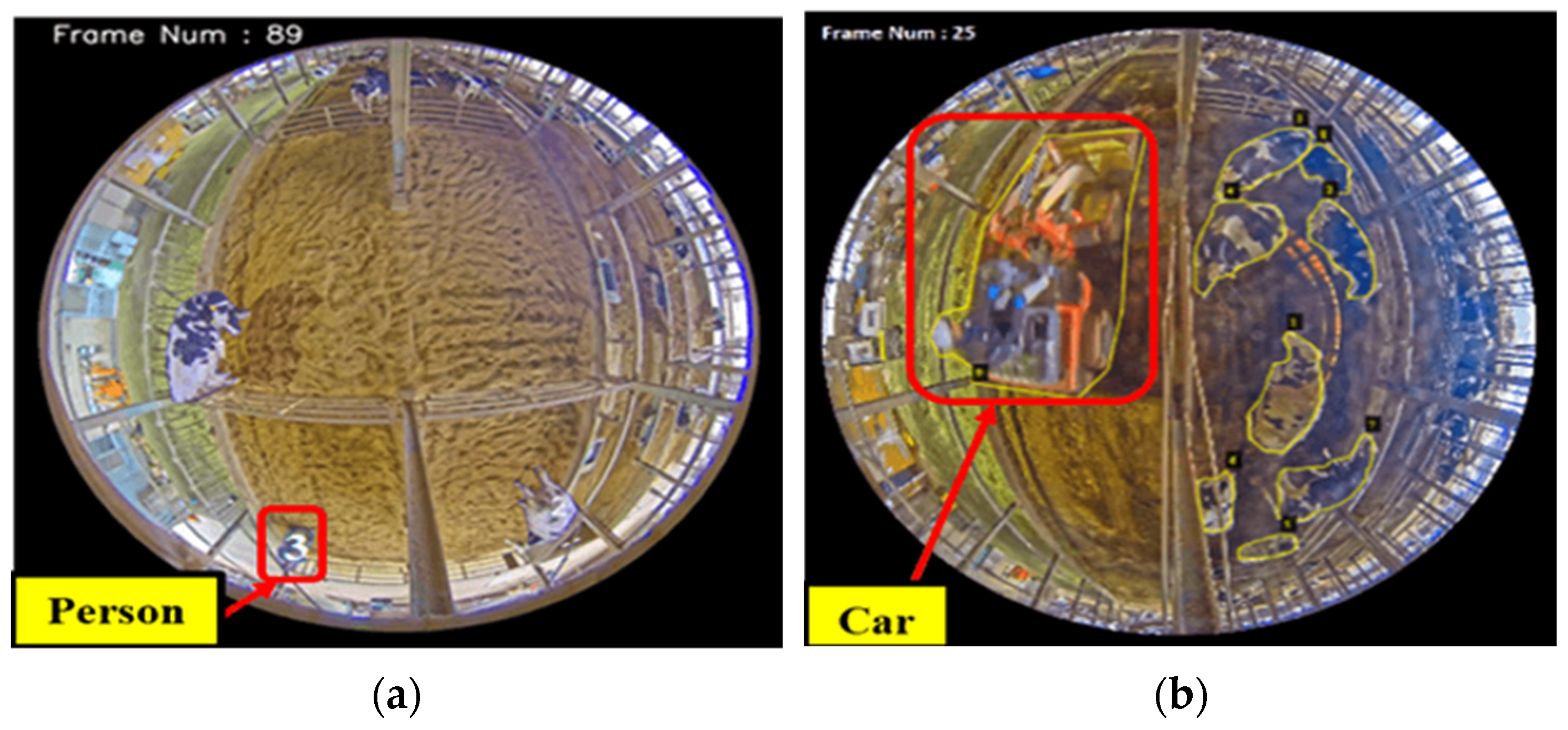

Noise Removal

3.4. Cattle Tracking

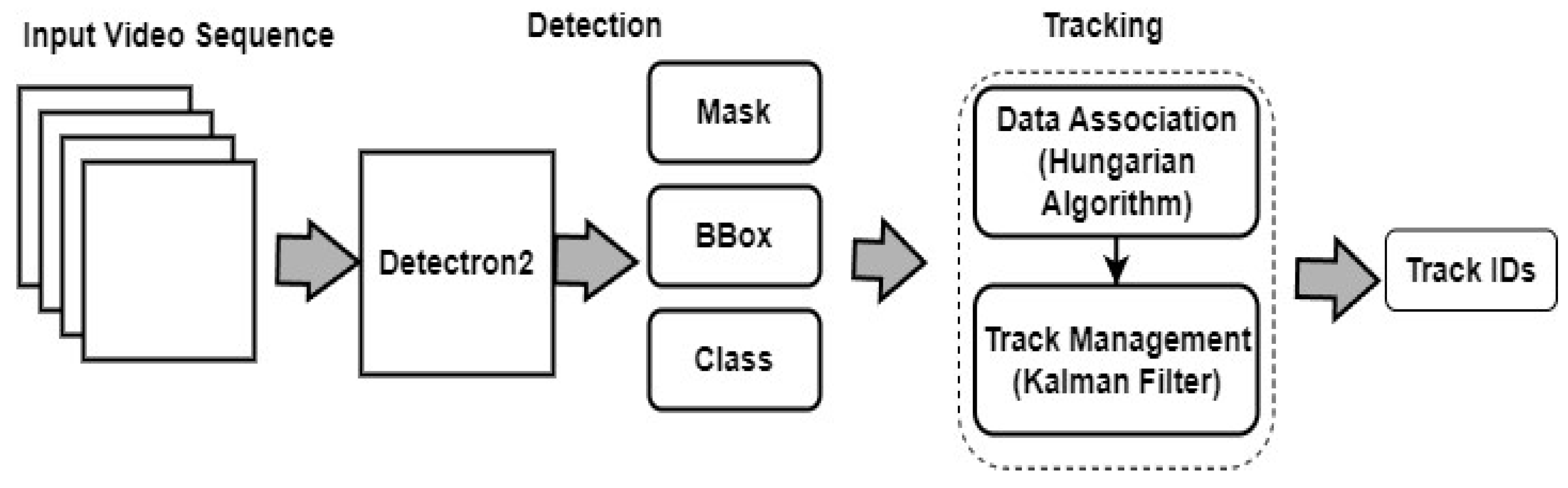

3.4.1. SORT Algorithm

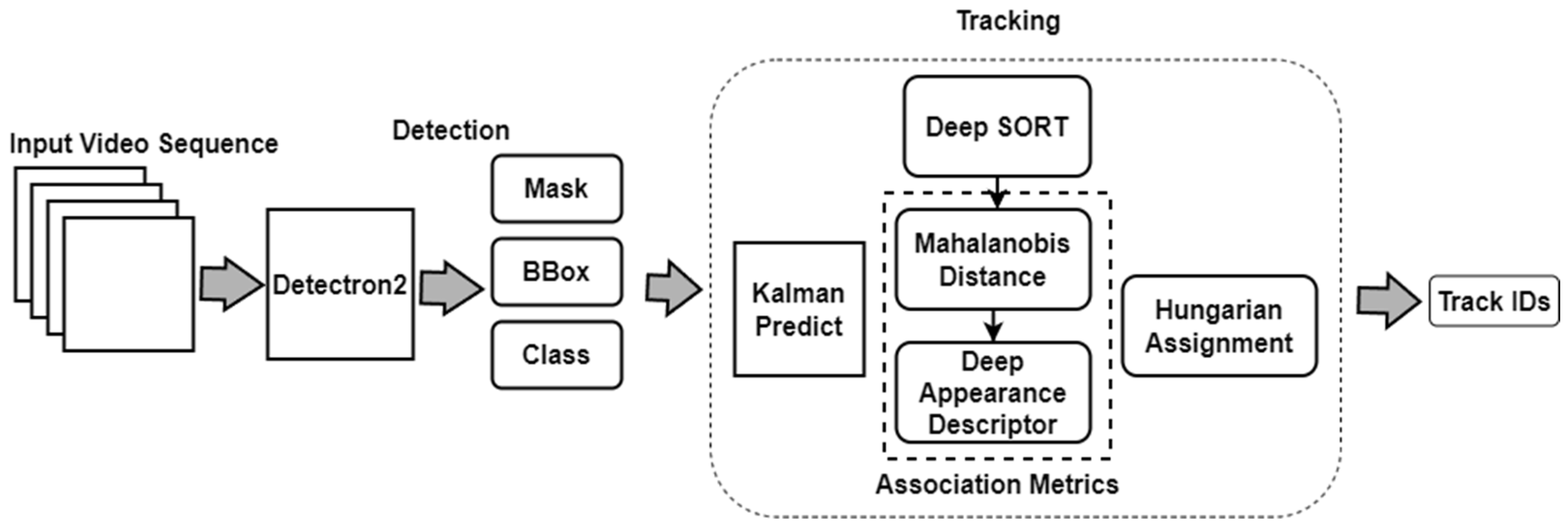

3.4.2. Deep SORT Algorithm

3.4.3. Modified Deep SORT Algorithm

3.4.4. ByteTrack Algorithm

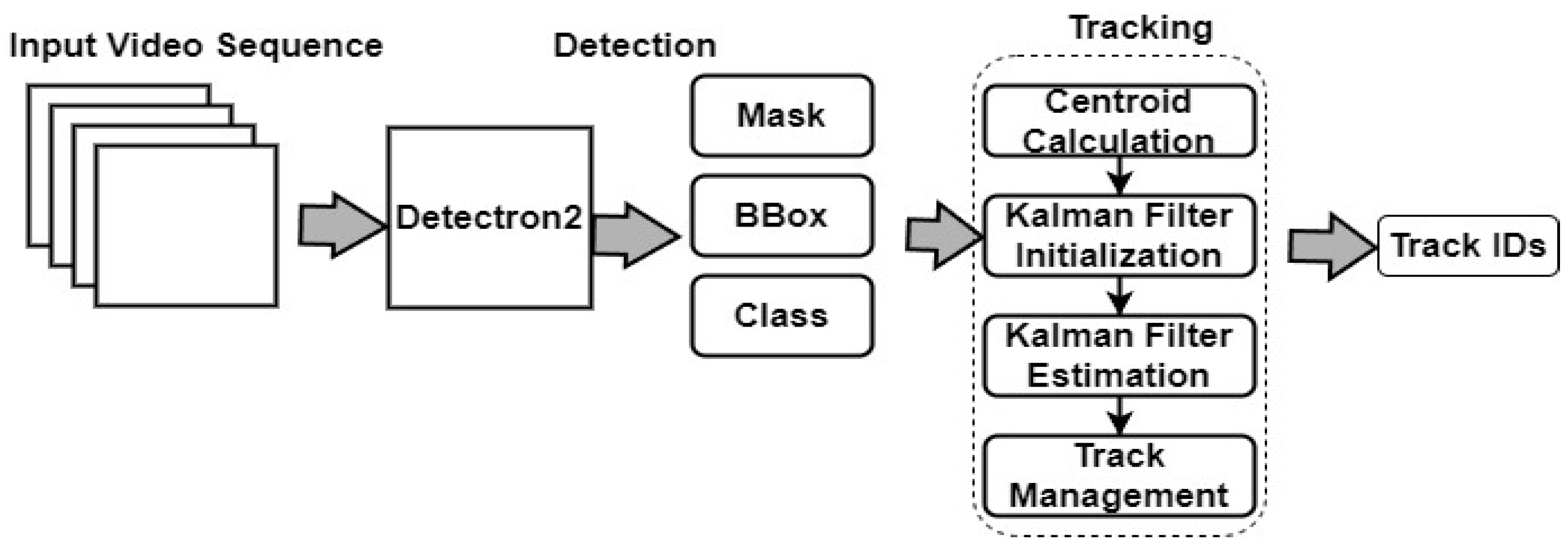

3.4.5. Centroid Tracking Algorithm

3.4.6. Centroid with Kalman Filter Algorithm

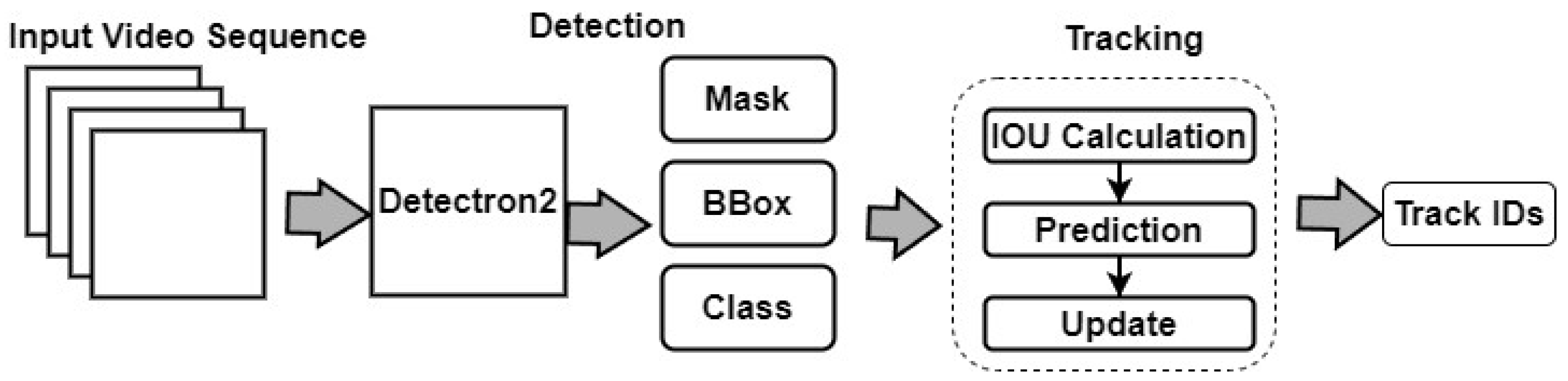

3.4.7. IOU Tracking

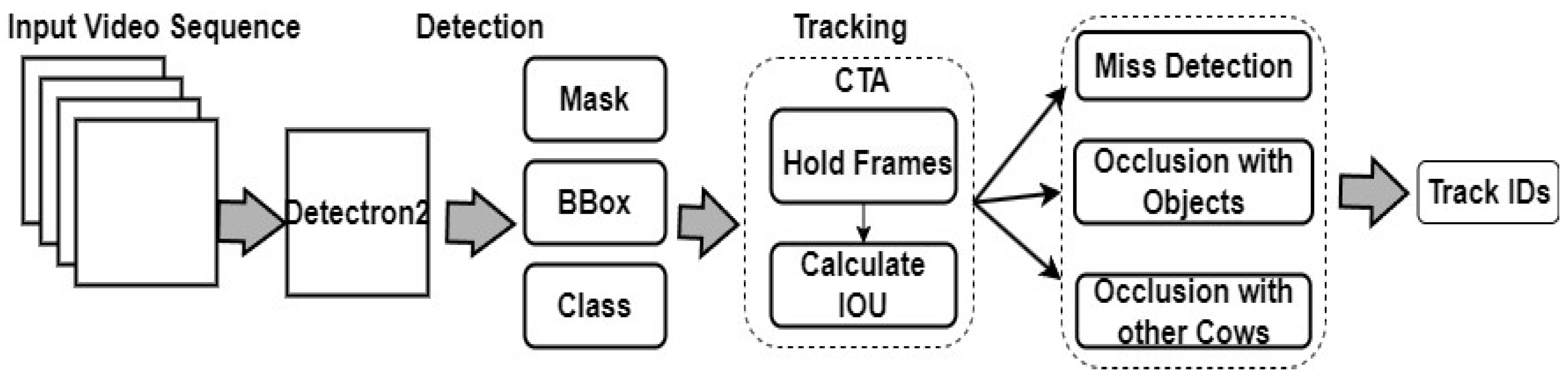

3.4.8. Customized Tracking Algorithm (CTA)

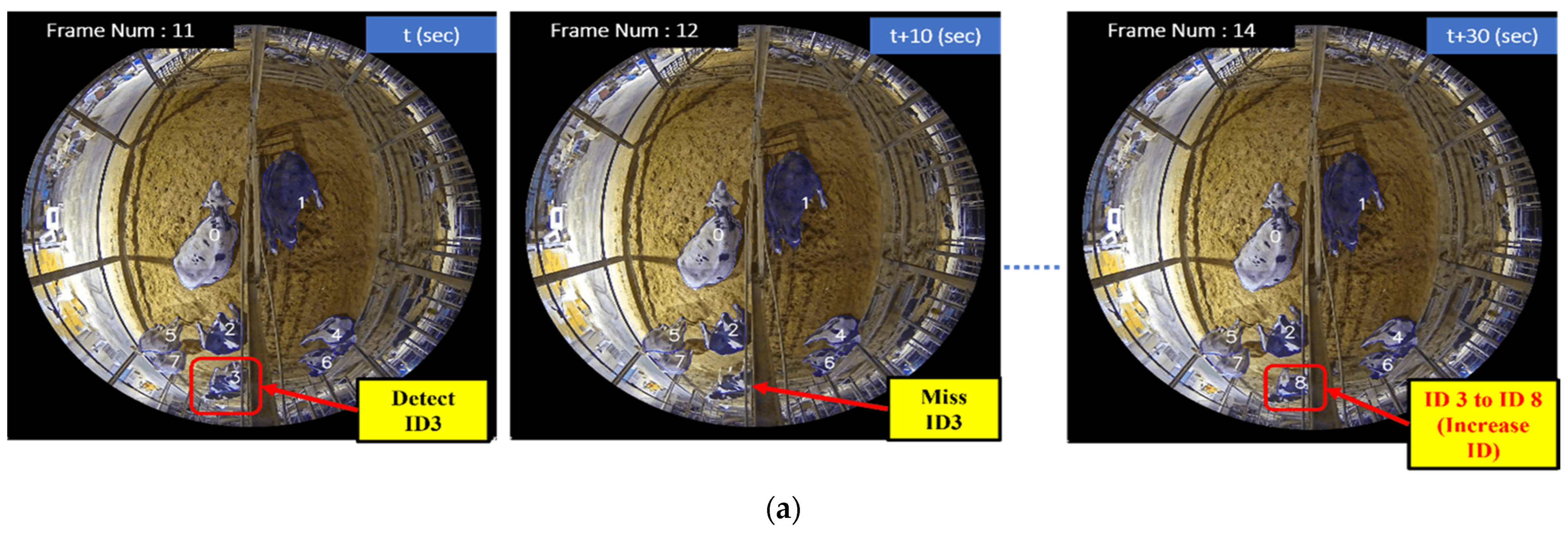

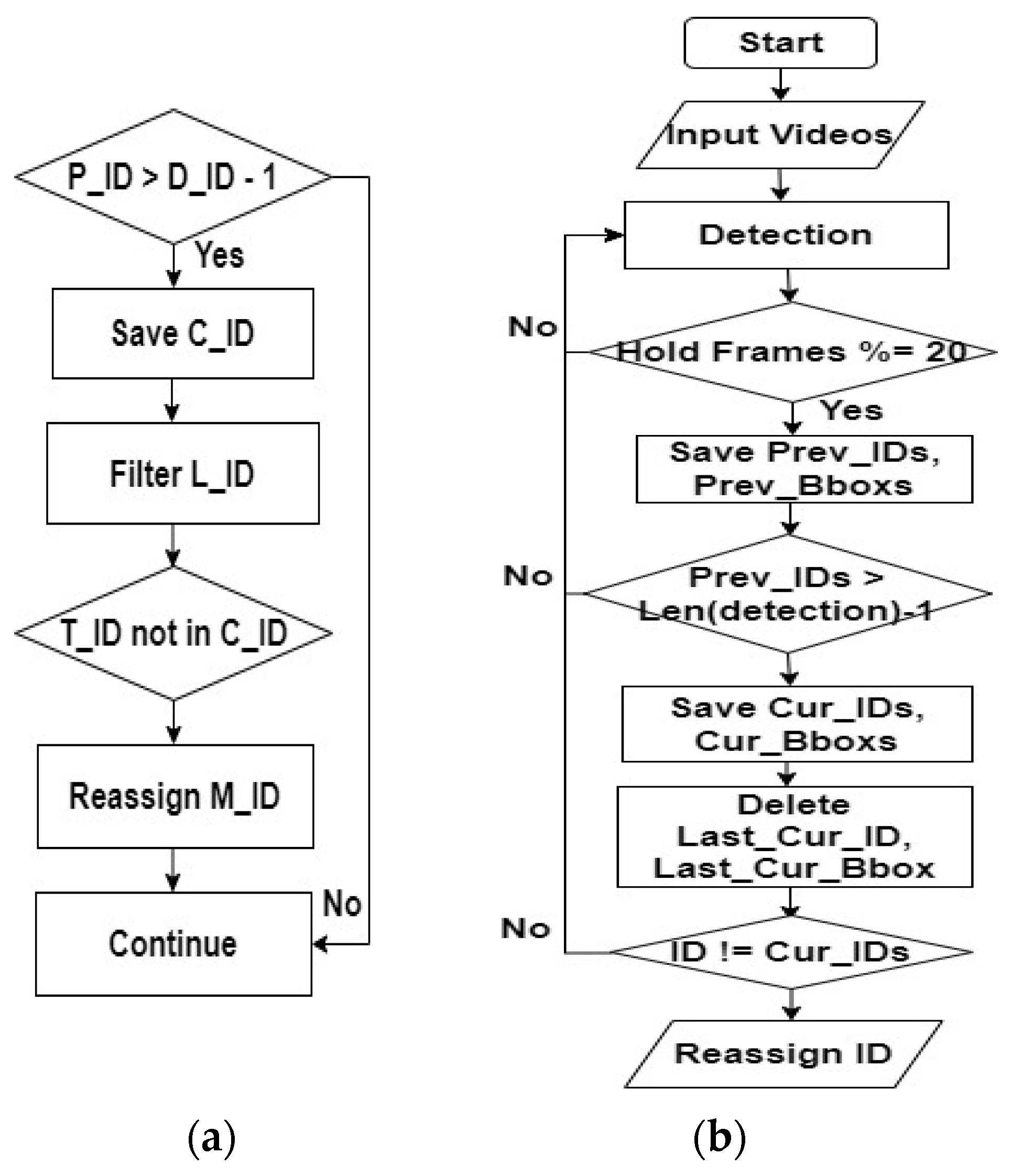

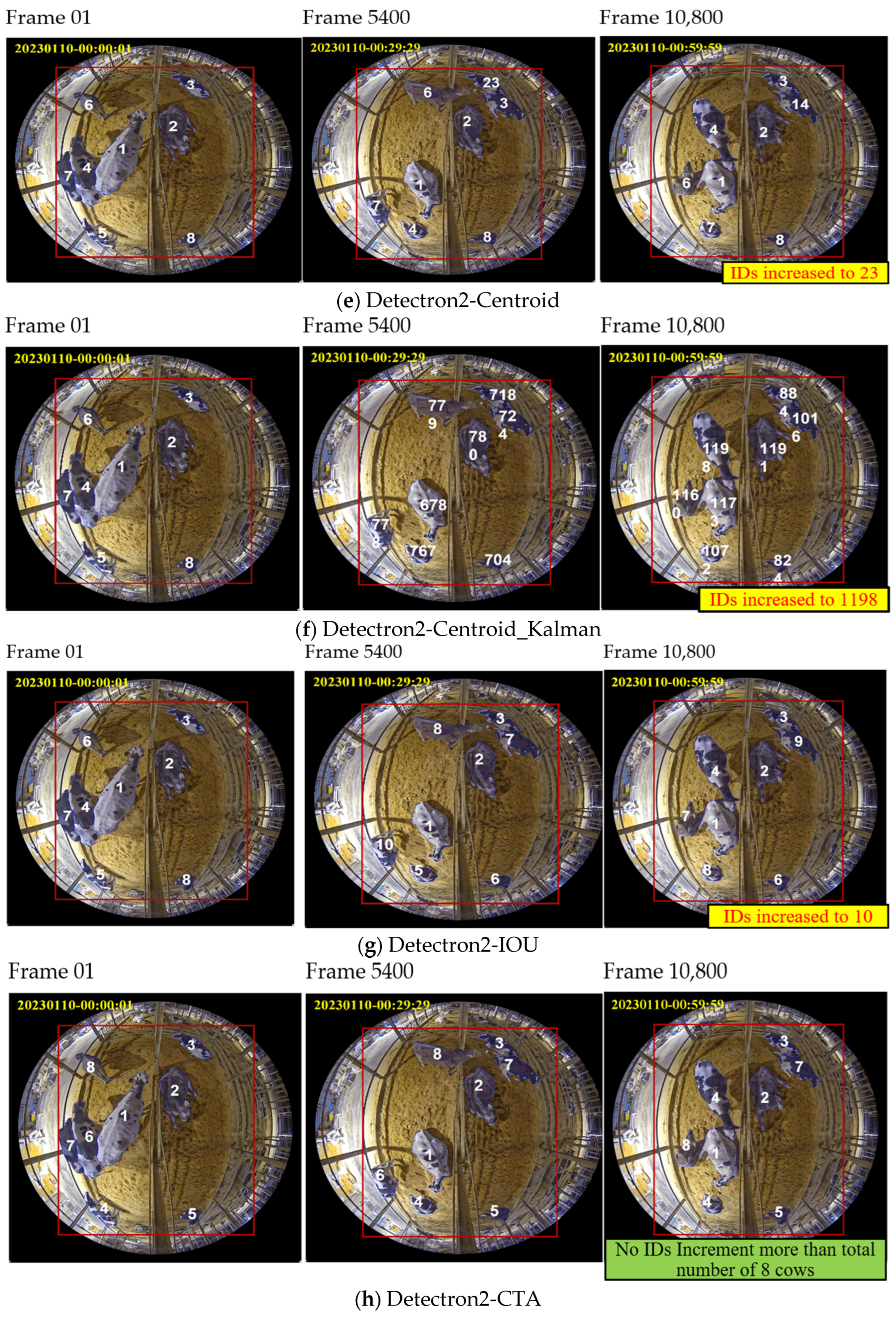

CTA—Miss Detection

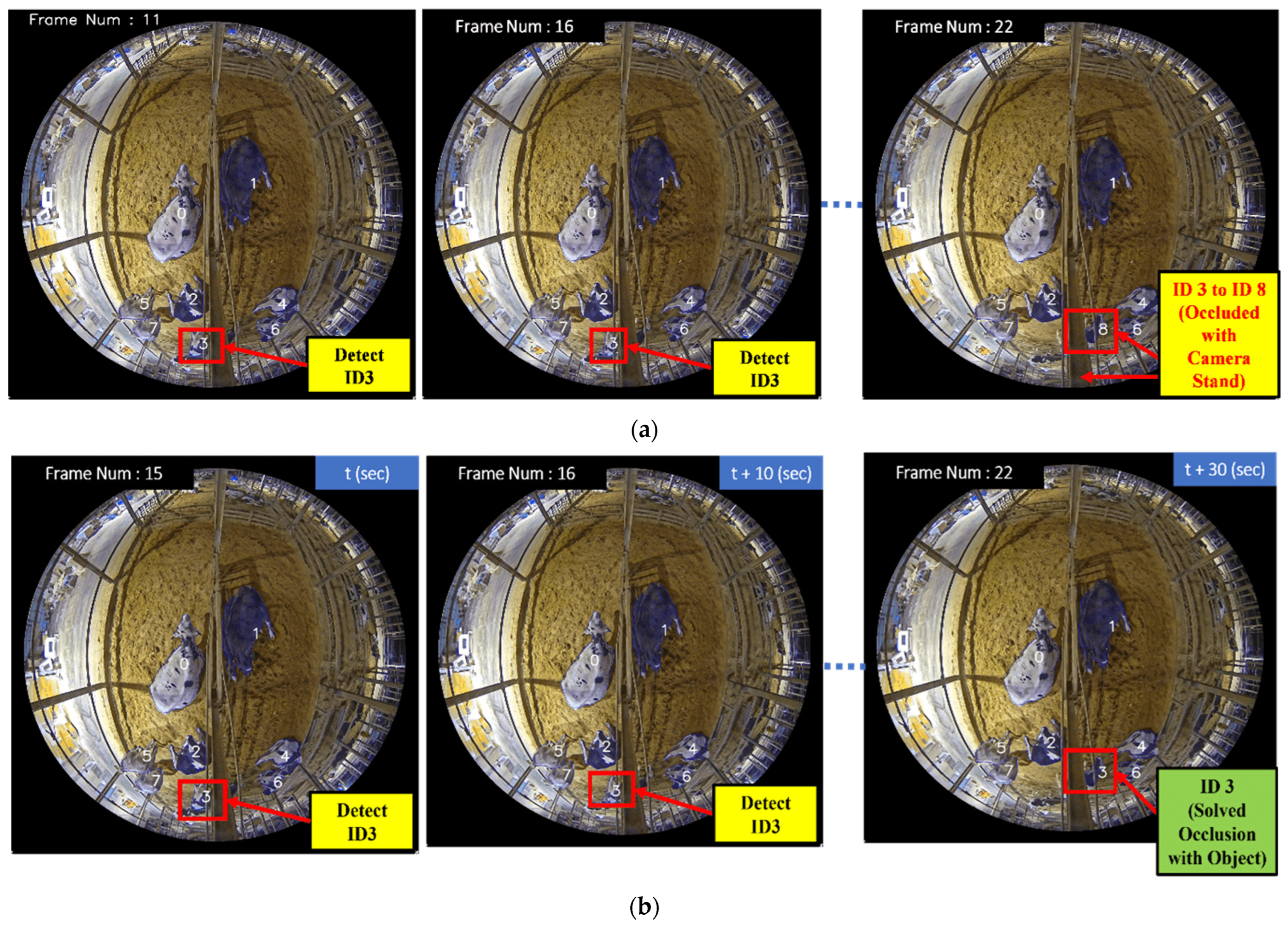

CTA—Occlusion with Objects

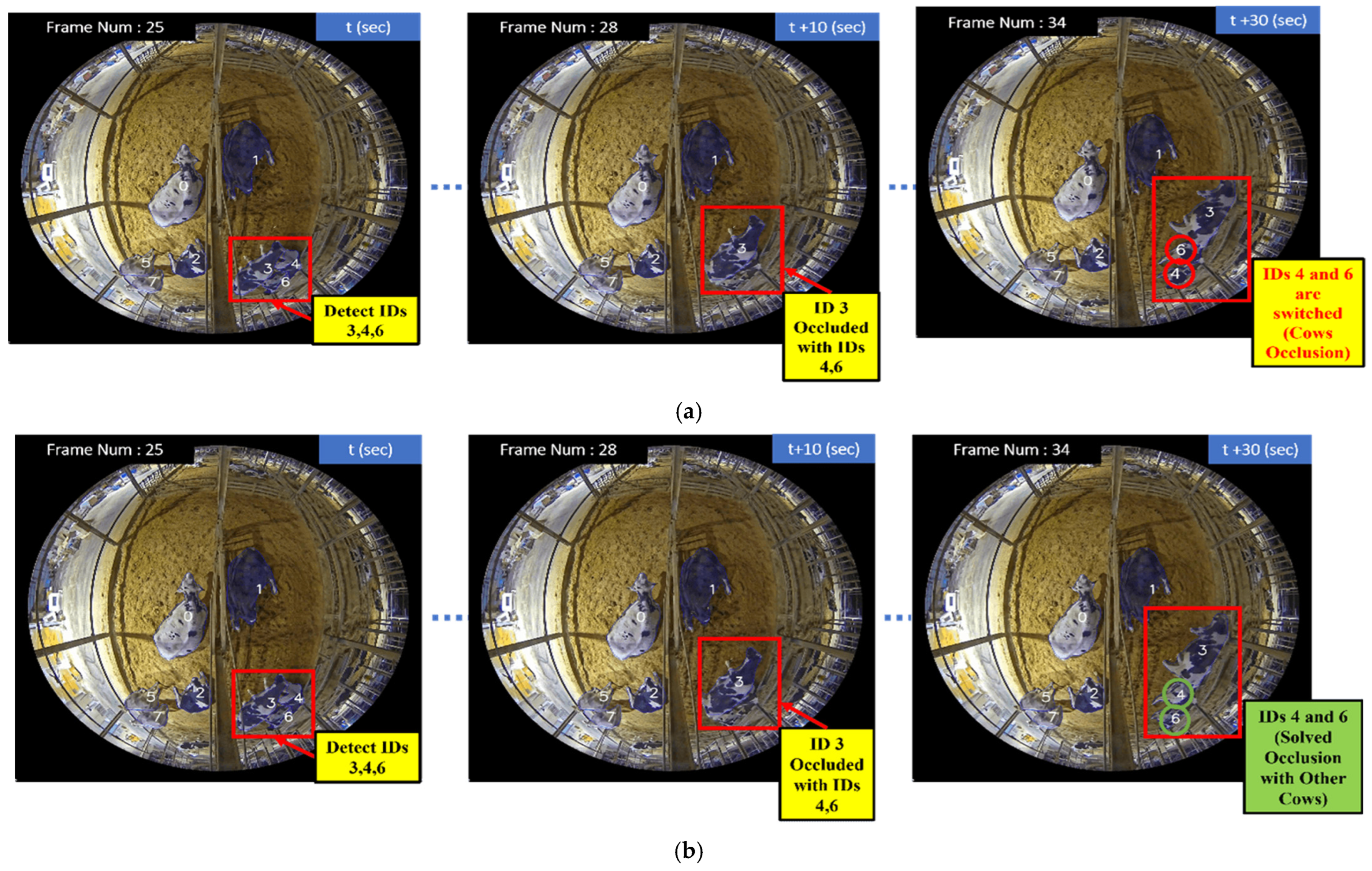

CTA—Occlusion with Other Cows

4. Experimental Implementation Results and Analysis

4.1. Performance Analysis of Cattle Detection Results

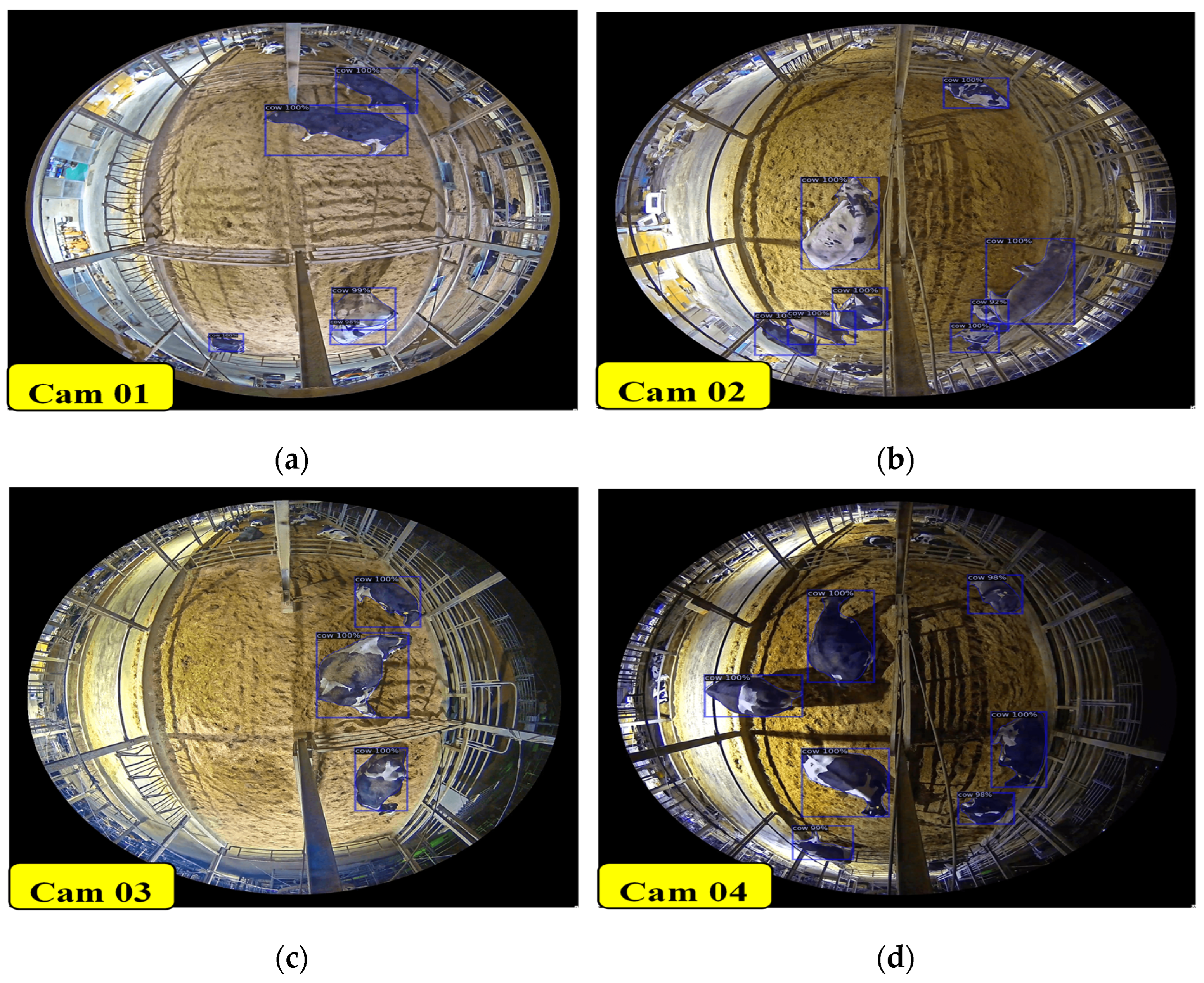

4.1.1. Nighttime Detection Accuracy and Results

4.1.2. Daytime Detection Accuracy and Results

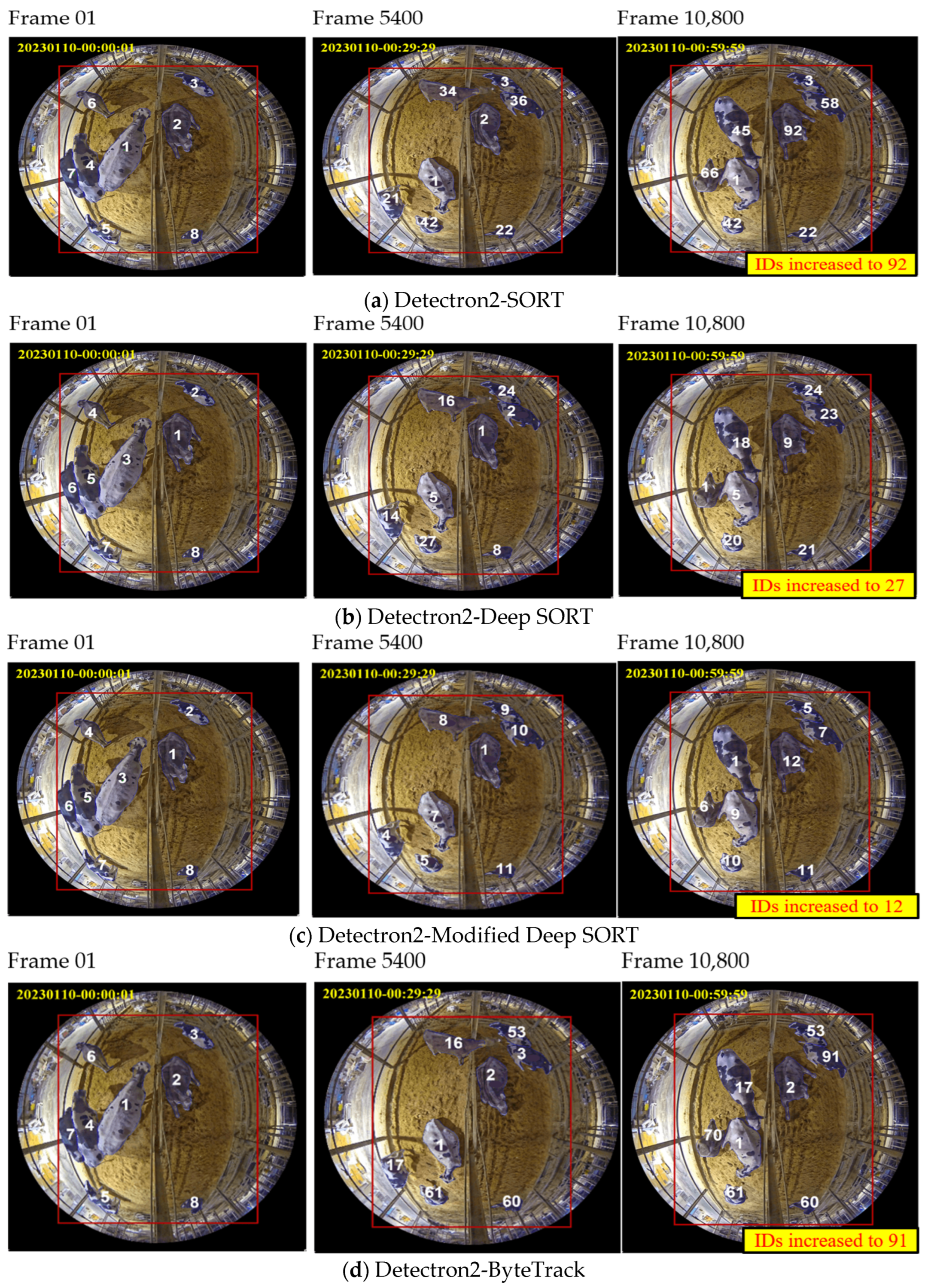

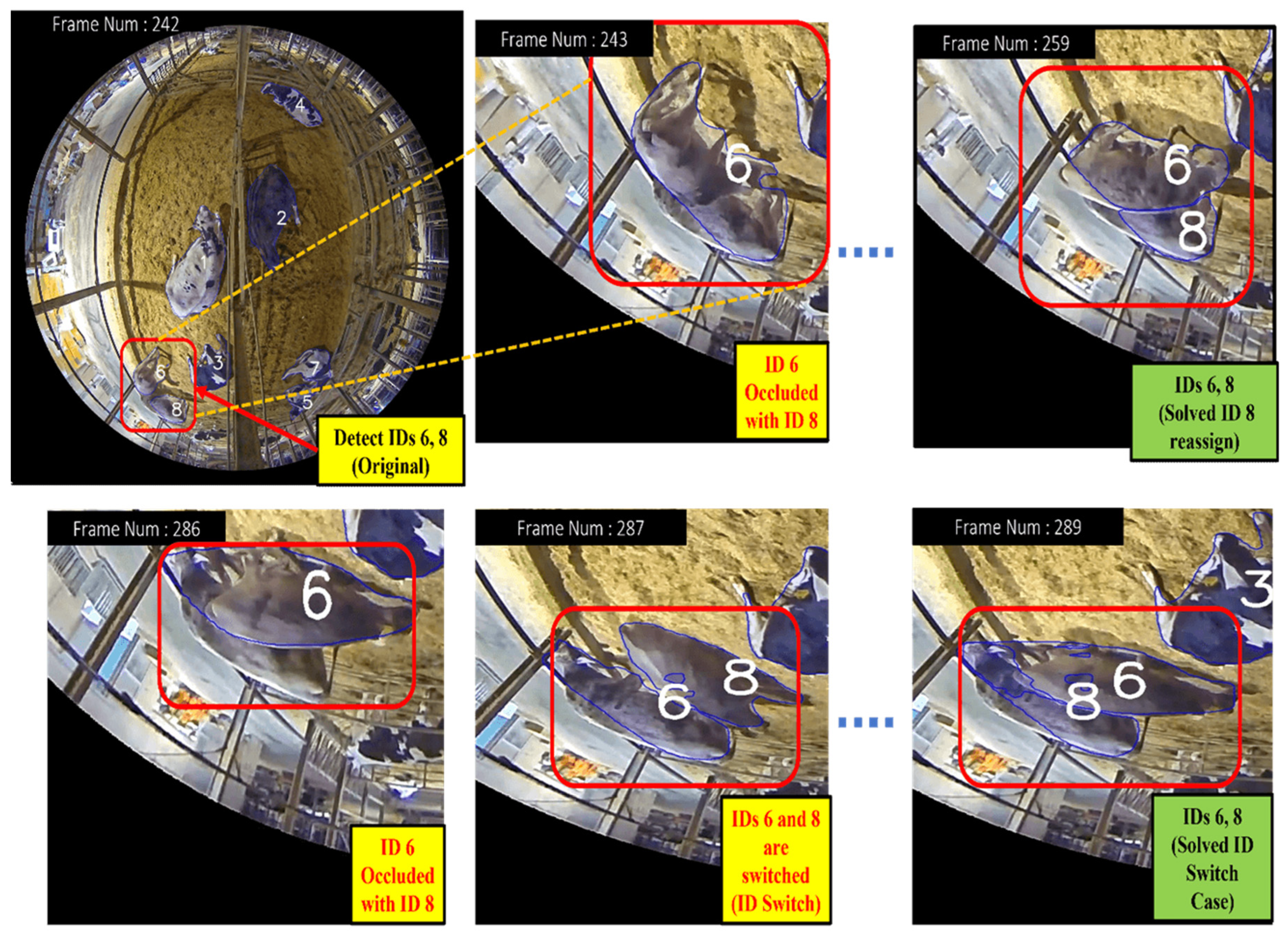

4.2. Performance Analysis of Cattle Tracking Results

4.2.1. Nighttime Tracking Accuracy and Results

4.2.2. Performance Analysis of Miss Detection and Occlusion Results

4.2.3. Daytime Tracking Accuracy and Results

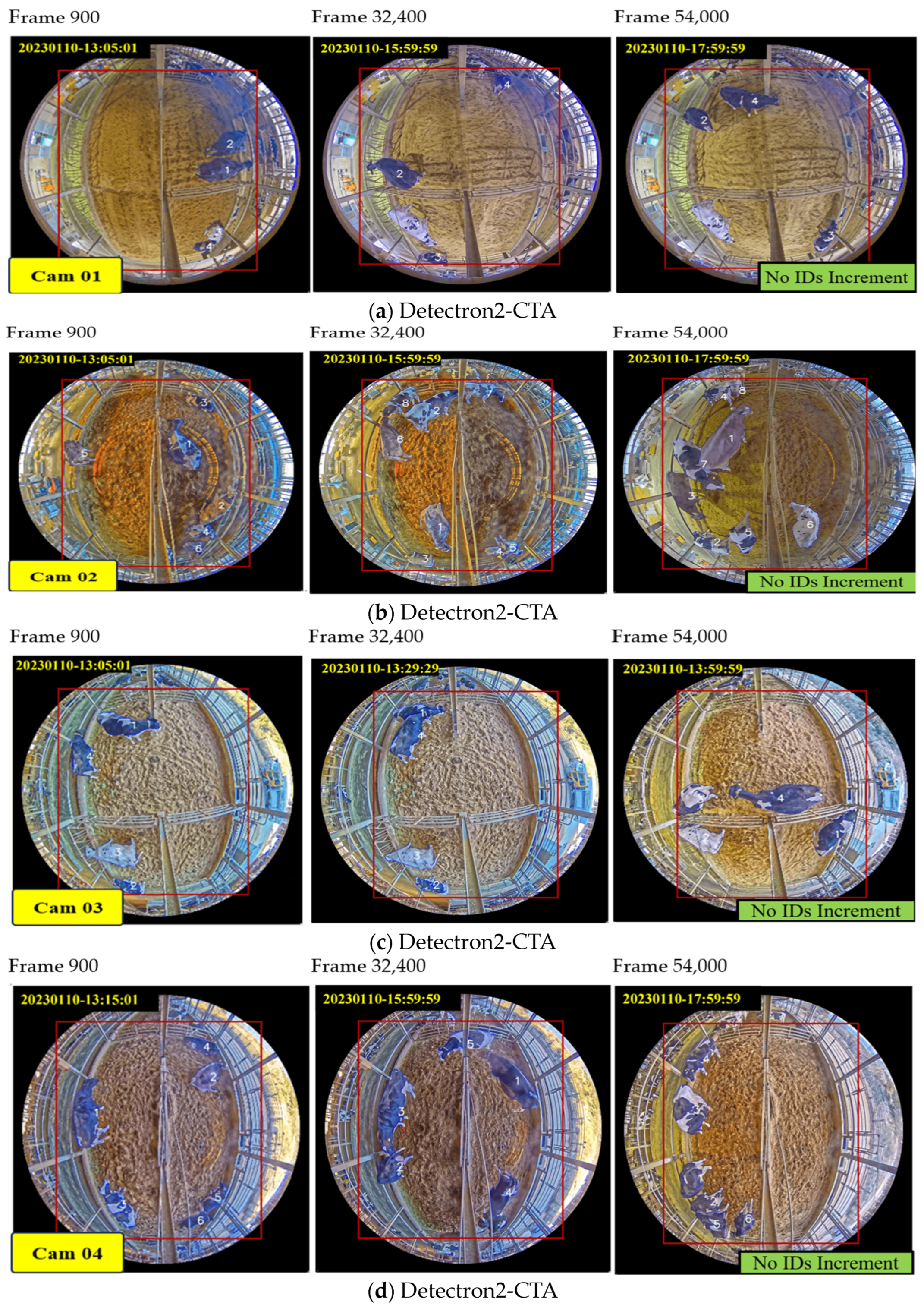

4.2.4. Performance Analysis of Testing Duration

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| No. | Existing Algorithms | Advantages | Drawbacks |
|---|---|---|---|
| 1. | YOLO_SORT | Efficient tracking of multiple objects. | Susceptibility to identity switches in crowded scenes. |
| 2. | CenterNet_DeepSORT | Improved tracking accuracy through deep learning. | May struggle with occlusions in crowded scenes. |
| 3. | YOLOv4_DeepSORT | Enhanced tracking accuracy with Kalman filter integration. | Increased computational complexity due to filter usage and struggle with occlusions. |
| 4. | Probability Gradient Pattern (PGP) | Offers robustness to noise and varying illumination. | Limited effectiveness in scenes with complex backgrounds and extensive occlusions. |
| 5. | LIBS_GMM | Effective in changing lighting conditions. | Limited capability to handle complex background scenes with extensive occlusions. |

| Dataset | Date | #Frames | #Instances |
|---|---|---|---|
| Training | 2021: October~November; 2022: March~April, June, September~November; 2023: January~February | 1080 | 7725 |
| Validation | 2021: December; 2022: January; 2023: January | 240 | 2375 |

| System Component | Specification |
|---|---|
| Operating System | Windows 10 Pro |
| Processor | 3.20 GHz 12th Gen Intel Core i9-12900K |
| Memory | 64 GB |
| Storage | 1 TB HDD |
| Graphics Processing Unit (GPU) | NVIDIA GeForce RTX 3090 |

| Dataset | BBox AP (%) | BBox AP50 (%) | BBox AP75 (%) | Mask AP (%) | Mask AP50 (%) | Mask AP75 (%) |
|---|---|---|---|---|---|---|
| Training | 92.53 | 98.17 | 95.99 | 90.23 | 97.87 | 95.81 |
| Validation | 91.12 | 97.67 | 95.67 | 89.56 | 97.17 | 95.13 |

| Cam. | Date | Period | #Frames | TP | FP | TN | FN | Accuracy (%) |
|---|---|---|---|---|---|---|---|---|
| 01 | 10 January 2023 | 00:00:00~05:00:00 | 18,000 | 17,990 | 0 | 0 | 10 | 99.94 |
| 02 | 10 January 2023 | 00:00:00~05:00:00 | 18,000 | 17,980 | 0 | 0 | 20 | 99.89 |
| 03 | 10 January 2023 | 00:00:00~05:00:00 | 18,000 | 17,978 | 0 | 0 | 22 | 99.88 |
| 04 | 10 January 2023 | 00:00:00~05:00:00 | 18,000 | 17,988 | 0 | 0 | 12 | 99.93 |
| Average Accuracy | | | 72,000 | 71,936 | 0 | 0 | 64 | 99.91 |
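The accuracy column is consistent with the standard definition of accuracy over the confusion counts. The table does not state the formula, so the definition below is an assumption; it is shown here because it reproduces the reported values, for example for camera 01 and for the four-camera total:

$$
\text{Accuracy} = \frac{TP + TN}{TP + FP + TN + FN} \times 100\%
$$

$$
\text{Cam. 01: } \frac{17{,}990 + 0}{17{,}990 + 0 + 0 + 10} \times 100\% \approx 99.94\%,
\qquad
\text{Total: } \frac{71{,}936 + 0}{71{,}936 + 0 + 0 + 64} \times 100\% \approx 99.91\%
$$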

| Cam. | Date | Period | #Frames | TP | FP | TN | FN | Accuracy (%) |
|---|---|---|---|---|---|---|---|---|
| 01 | 10 January 2023 | 13:00:00~18:00:00 | 18,000 | 17,990 | 0 | 0 | 10 | 99.94 |
| 02 | 10 January 2023 | 13:00:00~18:00:00 | 18,000 | 17,980 | 0 | 0 | 20 | 99.89 |
| 03 | 10 January 2023 | 13:00:00~18:00:00 | 18,000 | 17,978 | 0 | 0 | 22 | 99.88 |
| 04 | 10 January 2023 | 13:00:00~18:00:00 | 18,000 | 17,988 | 0 | 0 | 12 | 99.93 |
| Average Accuracy | | | 72,000 | 71,861 | 0 | 0 | 139 | 99.81 |

| Cam. | Methods | #Cows | GT | FP | FN | IDS | MOTA (%) |
|---|---|---|---|---|---|---|---|
| 01 | Detectron2_SORT | 4 | 213,915 | 0 | 10 | 10,314 | 95.17 |
| 01 | Detectron2_DeepSORT | 4 | 213,915 | 0 | 10 | 12,314 | 94.24 |
| 01 | Detectron2_Modified_DeepSORT | 4 | 213,915 | 0 | 10 | 1610 | 99.24 |
| 01 | Detectron2_ByteTrack | 4 | 213,915 | 0 | 10 | 3348 | 98.43 |
| 01 | Detectron2_Centroid | 4 | 213,915 | 0 | 10 | 1401 | 99.34 |
| 01 | Detectron2_Centroid_Kalman | 4 | 213,915 | 0 | 10 | 11,314 | 94.71 |
| 01 | Detectron2_IOU | 4 | 213,915 | 0 | 10 | 1312 | 99.38 |
| 01 | Detectron2_CTA | 4 | 213,915 | 0 | 10 | 3 | 99.99 |
| 02 | Detectron2_SORT | 8 | 354,100 | 0 | 20 | 11,472 | 96.75 |
| 02 | Detectron2_DeepSORT | 8 | 354,100 | 0 | 20 | 12,413 | 96.49 |
| 02 | Detectron2_Modified_DeepSORT | 8 | 354,100 | 0 | 20 | 4031 | 98.86 |
| 02 | Detectron2_ByteTrack | 8 | 354,100 | 0 | 20 | 11,872 | 96.64 |
| 02 | Detectron2_Centroid | 8 | 354,100 | 0 | 20 | 3431 | 99.03 |
| 02 | Detectron2_Centroid_Kalman | 8 | 354,100 | 0 | 20 | 12,292 | 96.52 |
| 02 | Detectron2_IOU | 8 | 354,100 | 0 | 20 | 2796 | 99.20 |
| 02 | Detectron2_CTA | 8 | 354,100 | 0 | 20 | 43 | 99.98 |
| 03 | Detectron2_SORT | 8 | 354,100 | 0 | 22 | 11,517 | 96.74 |
| 03 | Detectron2_DeepSORT | 8 | 354,100 | 0 | 22 | 12,933 | 96.34 |
| 03 | Detectron2_Modified_DeepSORT | 8 | 354,100 | 0 | 22 | 3931 | 98.88 |
| 03 | Detectron2_ByteTrack | 8 | 354,100 | 0 | 22 | 11,341 | 96.79 |
| 03 | Detectron2_Centroid | 8 | 354,100 | 0 | 22 | 3231 | 99.08 |
| 03 | Detectron2_Centroid_Kalman | 8 | 354,100 | 0 | 22 | 12,192 | 96.55 |
| 03 | Detectron2_IOU | 8 | 354,100 | 0 | 22 | 2894 | 99.18 |
| 03 | Detectron2_CTA | 8 | 354,100 | 0 | 22 | 48 | 99.98 |
| 04 | Detectron2_SORT | 7 | 323,870 | 0 | 12 | 10,417 | 96.78 |
| 04 | Detectron2_DeepSORT | 7 | 323,870 | 0 | 12 | 12,243 | 96.22 |
| 04 | Detectron2_Modified_DeepSORT | 7 | 323,870 | 0 | 12 | 1741 | 99.46 |
| 04 | Detectron2_ByteTrack | 7 | 323,870 | 0 | 12 | 3901 | 98.79 |
| 04 | Detectron2_Centroid | 7 | 323,870 | 0 | 12 | 1641 | 99.49 |
| 04 | Detectron2_Centroid_Kalman | 7 | 323,870 | 0 | 12 | 10,992 | 96.60 |
| 04 | Detectron2_IOU | 7 | 323,870 | 0 | 12 | 1413 | 99.56 |
| 04 | Detectron2_CTA | 7 | 323,870 | 0 | 12 | 37 | 99.98 |
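The MOTA column can be reproduced from the GT, FP, FN and IDS columns with the usual multiple object tracking accuracy definition, MOTA = 1 − (FN + FP + IDS) / GT. The table does not state the formula, so that definition is an assumption, and the helper name `mota` below is hypothetical; the sketch simply recomputes two camera 01 rows.

```python
def mota(gt: int, fp: int, fn: int, ids: int) -> float:
    """Return MOTA in percent: 100 * (1 - (FN + FP + IDS) / GT)."""
    if gt <= 0:
        raise ValueError("gt must be positive")
    return 100.0 * (1.0 - (fn + fp + ids) / gt)


# Camera 01 rows from the table above.
sort_score = mota(gt=213_915, fp=0, fn=10, ids=10_314)  # Detectron2_SORT
cta_score = mota(gt=213_915, fp=0, fn=10, ids=3)        # Detectron2_CTA
print(f"{sort_score:.2f} {cta_score:.2f}")  # prints "95.17 99.99"
```

Because FP and FN are identical for every tracker on a given camera (all share the same Detectron2 detections), the differences in MOTA come entirely from the ID-switch count.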

| Cam. | Methods | #Cows | GT | FP | FN | IDS | MOTA (%) |
|---|---|---|---|---|---|---|---|
| 01 | Detectron2_SORT | 4 | 213,915 | 0 | 66 | 10,214 | 95.19 |
| 01 | Detectron2_DeepSORT | 4 | 213,915 | 0 | 66 | 12,214 | 94.26 |
| 01 | Detectron2_Modified_DeepSORT | 4 | 213,915 | 0 | 66 | 1510 | 99.26 |
| 01 | Detectron2_ByteTrack | 4 | 213,915 | 0 | 66 | 3248 | 98.45 |
| 01 | Detectron2_Centroid | 4 | 213,915 | 0 | 66 | 1301 | 99.36 |
| 01 | Detectron2_Centroid_Kalman | 4 | 213,915 | 0 | 66 | 11,214 | 94.73 |
| 01 | Detectron2_IOU | 4 | 213,915 | 0 | 66 | 1212 | 99.40 |
| 01 | Detectron2_CTA | 4 | 213,915 | 0 | 66 | 4 | 99.97 |
| 02 | Detectron2_SORT | 8 | 354,100 | 0 | 23 | 11,317 | 96.80 |
| 02 | Detectron2_DeepSORT | 8 | 354,100 | 0 | 23 | 12,213 | 96.54 |
| 02 | Detectron2_Modified_DeepSORT | 8 | 354,100 | 0 | 23 | 3431 | 99.02 |
| 02 | Detectron2_ByteTrack | 8 | 354,100 | 0 | 23 | 10,872 | 96.92 |
| 02 | Detectron2_Centroid | 8 | 354,100 | 0 | 23 | 2831 | 99.19 |
| 02 | Detectron2_Centroid_Kalman | 8 | 354,100 | 0 | 23 | 11,892 | 96.64 |
| 02 | Detectron2_IOU | 8 | 354,100 | 0 | 23 | 2776 | 99.21 |
| 02 | Detectron2_CTA | 8 | 354,100 | 0 | 23 | 51 | 99.98 |
| 03 | Detectron2_SORT | 8 | 354,100 | 0 | 32 | 11,417 | 96.77 |
| 03 | Detectron2_DeepSORT | 8 | 354,100 | 0 | 32 | 12,813 | 96.37 |
| 03 | Detectron2_Modified_DeepSORT | 8 | 354,100 | 0 | 32 | 3831 | 98.91 |
| 03 | Detectron2_ByteTrack | 8 | 354,100 | 0 | 32 | 11,201 | 96.83 |
| 03 | Detectron2_Centroid | 8 | 354,100 | 0 | 32 | 3131 | 99.11 |
| 03 | Detectron2_Centroid_Kalman | 8 | 354,100 | 0 | 32 | 12,092 | 96.58 |
| 03 | Detectron2_IOU | 8 | 354,100 | 0 | 32 | 2784 | 99.20 |
| 03 | Detectron2_CTA | 8 | 354,100 | 0 | 32 | 34 | 99.98 |
| 04 | Detectron2_SORT | 7 | 323,870 | 0 | 18 | 10,317 | 96.81 |
| 04 | Detectron2_DeepSORT | 7 | 323,870 | 0 | 18 | 12,113 | 96.25 |
| 04 | Detectron2_Modified_DeepSORT | 7 | 323,870 | 0 | 18 | 1631 | 99.50 |
| 04 | Detectron2_ByteTrack | 7 | 323,870 | 0 | 18 | 3801 | 98.82 |
| 04 | Detectron2_Centroid | 7 | 323,870 | 0 | 18 | 1531 | 99.52 |
| 04 | Detectron2_Centroid_Kalman | 7 | 323,870 | 0 | 18 | 10,892 | 96.63 |
| 04 | Detectron2_IOU | 7 | 323,870 | 0 | 18 | 1313 | 99.59 |
| 04 | Detectron2_CTA | 7 | 323,870 | 0 | 18 | 4 | 99.99 |

| No. | Methods | Calculation Time (Nighttime) | Calculation Time (Daytime) |
|---|---|---|---|
| 1. | Detectron2_SORT | 6 h 42 min | 6 h 49 min |
| 2. | Detectron2_DeepSORT | 6 h 40 min | 6 h 55 min |
| 3. | Detectron2_Modified_DeepSORT | 5 h 39 min | 5 h 56 min |
| 4. | Detectron2_ByteTrack | 5 h 39 min | 5 h 42 min |
| 5. | Detectron2_Centroid | 5 h 24 min | 5 h 46 min |
| 6. | Detectron2_Centroid_Kalman | 6 h 17 min | 6 h 31 min |
| 7. | Detectron2_IOU | 5 h 11 min | 5 h 19 min |
| 8. | Detectron2_CTA | 4 h 45 min | 4 h 51 min |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mg, W.H.E.; Tin, P.; Aikawa, M.; Kobayashi, I.; Horii, Y.; Honkawa, K.; Zin, T.T. Customized Tracking Algorithm for Robust Cattle Detection and Tracking in Occlusion Environments. Sensors 2024, 24, 1181. https://doi.org/10.3390/s24041181