Optimized OTSU Segmentation Algorithm-Based Temperature Feature Extraction Method for Infrared Images of Electrical Equipment

Abstract

1. Introduction

2. The Infrared Image Segmentation Algorithm

2.1. OTSU Algorithm Principle

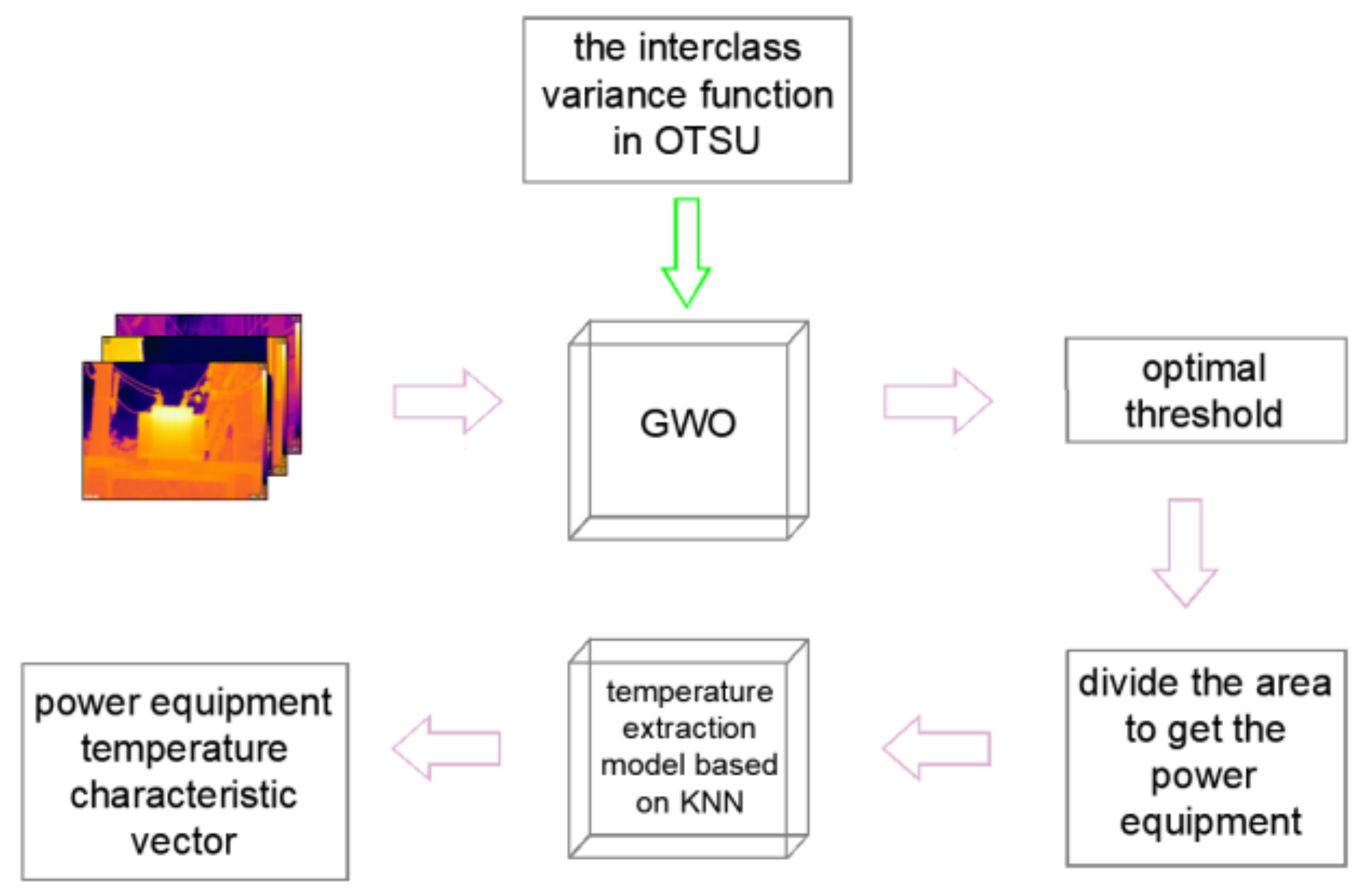
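The OTSU method picks the threshold that maximizes the between-class (inter-class) variance of the gray-level histogram. The minimal sketch below runs the standard exhaustive search. It assumes an 8-bit grayscale image flattened to a list of integers from 0 to 255. The helper names (`histogram`, `between_class_variance`, `otsu_threshold`) are illustrative and do not come from the paper. Because every candidate threshold is evaluated, this exhaustive scan is the cost that a metaheuristic search tries to avoid.

```python
def histogram(pixels, levels=256):
    """Count how many pixels fall on each gray level 0..levels-1."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    return hist


def between_class_variance(hist, t):
    """sigma_B^2(t) = w0 * w1 * (mu0 - mu1)^2, with gray levels 0..t as class C0
    (background) and t+1..L-1 as class C1 (foreground)."""
    total = sum(hist)
    n0 = sum(hist[: t + 1])
    n1 = total - n0
    if n0 == 0 or n1 == 0:
        return 0.0  # one class is empty, so this split separates nothing
    mu0 = sum(i * hist[i] for i in range(t + 1)) / n0
    mu1 = sum(i * hist[i] for i in range(t + 1, len(hist))) / n1
    w0, w1 = n0 / total, n1 / total
    return w0 * w1 * (mu0 - mu1) ** 2


def otsu_threshold(hist):
    """Exhaustive OTSU: evaluate every threshold and keep the first maximizer."""
    return max(range(len(hist) - 1), key=lambda t: between_class_variance(hist, t))


# Two flat regions at gray levels 10 and 200.
t = otsu_threshold(histogram([10] * 50 + [200] * 50))
print(t)
```

For this toy image, every threshold from 10 to 199 separates the two regions equally well. `max` returns the first such threshold.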

2.2. The GWO-Optimized OTSU Segmentation Algorithm

Algorithm 1: GWO Algorithm Pseudocode

1. Input: population_size, the size of the population; num_iterations, the number of iterations; lower_bound and upper_bound, the lower and upper bounds of the variables.

2. Initialization: create a population of population_size individuals, randomly initialize the position of each individual, and compute its fitness value.

3. Find the optimal solution. In each iteration:
   - compute the fitness value of each individual;
   - denote the individual with the highest fitness value in the current population as alpha, the one with the second-highest fitness value as beta, and the one with the third-highest fitness value as delta;
   - update the position of every individual, dimension by dimension, based on the positions of alpha, beta, and delta;
   - if a new position is out of bounds, set it to the boundary value.

   Finally, return the position of the individual with the highest fitness value.

4. Main program: initialize the population; in each iteration, find the position of the best individual, output the current iteration number and the fitness value of the best solution, and update the population.

5. Output: the position and fitness value of the best solution.
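The GWO loop in Algorithm 1 can be applied to OTSU by treating each wolf as a candidate threshold. Fitness is the between-class variance, so a higher value is better. The following is a minimal one-dimensional sketch, not the authors' implementation. It uses the standard GWO update rules: D = |C·X_p − X|, X_p − A·D for each leader, with A = 2a·r1 − a, C = 2·r2, and a decreasing linearly from 2 to 0. The new position is the mean of the three leader-guided moves. The function name `gwo_otsu`, the defaults (10 wolves, 20 iterations), the fixed seed, and rounding real-valued positions to integer thresholds are all assumptions made for illustration.

```python
import random


def between_class_variance(hist, t):
    """OTSU fitness: w0 * w1 * (mu0 - mu1)^2 for the split {0..t} | {t+1..L-1}."""
    total = sum(hist)
    n0 = sum(hist[: t + 1])
    n1 = total - n0
    if n0 == 0 or n1 == 0:
        return 0.0
    mu0 = sum(i * hist[i] for i in range(t + 1)) / n0
    mu1 = sum(i * hist[i] for i in range(t + 1, len(hist))) / n1
    return (n0 / total) * (n1 / total) * (mu0 - mu1) ** 2


def gwo_otsu(hist, population_size=10, num_iterations=20, seed=0):
    """Search for the OTSU threshold with a one-dimensional Grey Wolf Optimizer.

    Returns (threshold, fitness) of the best wolf seen during the search.
    """
    if population_size < 3:
        raise ValueError("GWO needs at least three wolves (alpha, beta, delta)")
    rng = random.Random(seed)
    lower_bound, upper_bound = 0.0, float(len(hist) - 2)

    def fitness(x):
        return between_class_variance(hist, int(round(x)))

    # Initialization: random positions inside the bounds.
    wolves = [rng.uniform(lower_bound, upper_bound) for _ in range(population_size)]
    best_x, best_f = wolves[0], fitness(wolves[0])

    for it in range(num_iterations):
        # Alpha, beta and delta are the three fittest wolves of the current pack.
        alpha, beta, delta = sorted(wolves, key=fitness, reverse=True)[:3]
        if fitness(alpha) > best_f:
            best_x, best_f = alpha, fitness(alpha)

        # a decreases linearly from 2 towards 0: exploration first, exploitation later.
        a = 2.0 - 2.0 * it / num_iterations
        updated = []
        for x in wolves:
            moves = []
            for leader in (alpha, beta, delta):
                A = 2.0 * a * rng.random() - a
                C = 2.0 * rng.random()
                D = abs(C * leader - x)
                moves.append(leader - A * D)
            x_new = sum(moves) / 3.0
            # Out-of-bounds positions are set to the boundary value.
            updated.append(min(max(x_new, lower_bound), upper_bound))
        wolves = updated

    # Evaluate the final pack once more before returning.
    for x in wolves:
        if fitness(x) > best_f:
            best_x, best_f = x, fitness(x)
    return int(round(best_x)), best_f


# Two equal peaks at gray levels 50 and 200: any threshold in 50..199 is optimal.
hist = [0] * 256
hist[50] = hist[200] = 100
threshold, variance = gwo_otsu(hist)
print(threshold, variance)
```

Each iteration costs population_size fitness evaluations instead of one per gray level. That is where a population search can save time over the exhaustive scan. The trade-off is that, with few iterations, it is not guaranteed to land exactly on the global maximum.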

3. Infrared Image Temperature Feature Extraction Method Based on an Optimized OTSU Algorithm

3.1. Traditional Linear Temperature Extraction Method

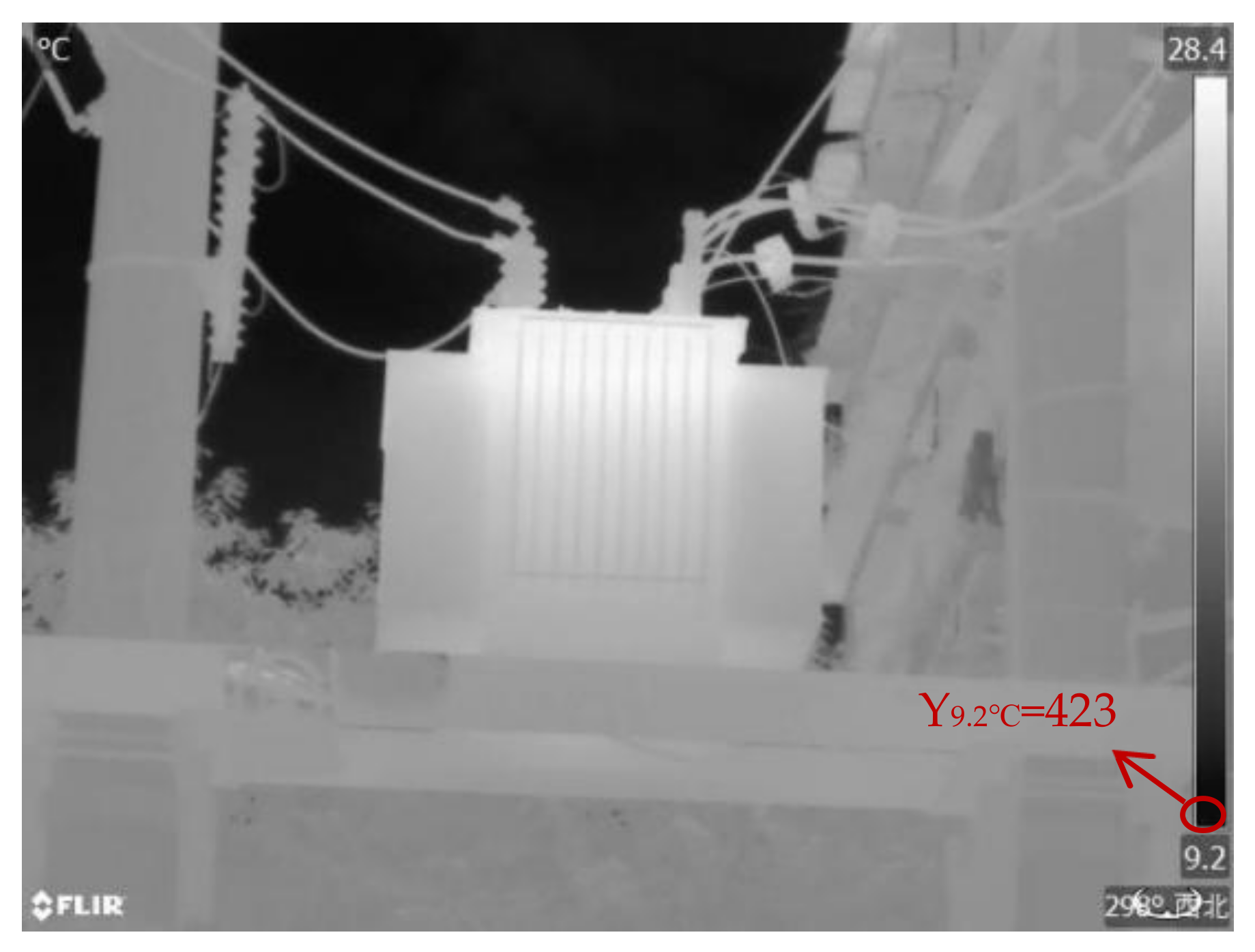
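As a point of reference for the traditional approach, the sketch below assumes the simplest linear model. The gray level g of a pixel is mapped onto the temperature range [T_min, T_max] printed on the image's scale bar, giving T = T_min + (T_max − T_min)·g/255 for 8-bit images. The exact fitting used in the paper is not reproduced in this section, and the function name `linear_temperature` is hypothetical.

```python
def linear_temperature(gray, t_min, t_max, levels=256):
    """Map a gray level to temperature by linear interpolation between the
    scale-bar limits: gray 0 -> t_min, gray levels-1 -> t_max."""
    if not 0 <= gray <= levels - 1:
        raise ValueError("gray level out of range")
    return t_min + (t_max - t_min) * gray / (levels - 1)


# Scale bar from 20 to 80 degrees C: gray level 51 lies one fifth of the way up.
print(linear_temperature(51, 20.0, 80.0))
```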

3.2. KNN-Based Infrared Image Temperature Value Extraction Method
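One way to read "KNN-based temperature extraction" is as nearest-neighbor matching of a pixel's color against the image's color scale bar. The sketch below rests on several assumptions that this section does not confirm:

- the scale bar is sampled as an ordered list of RGB colors from coldest to hottest;
- temperatures are spread evenly between the bar's end labels;
- a pixel's temperature is the mean temperature of its k nearest bar colors (KNN regression with Euclidean RGB distance).

`build_colorbar_reference`, `knn_temperature`, and the default `k=3` are hypothetical names and values.

```python
import math


def build_colorbar_reference(colorbar_rgb, t_min, t_max):
    """Pair each color-bar sample (ordered cold -> hot) with a temperature,
    assuming temperatures are spread evenly between the bar's end labels."""
    n = len(colorbar_rgb)
    return [(rgb, t_min + (t_max - t_min) * i / (n - 1)) for i, rgb in enumerate(colorbar_rgb)]


def knn_temperature(pixel_rgb, reference, k=3):
    """Estimate a pixel's temperature as the mean temperature of its k nearest
    color-bar samples (Euclidean distance in RGB space)."""
    nearest = sorted(reference, key=lambda item: math.dist(pixel_rgb, item[0]))[:k]
    return sum(t for _, t in nearest) / len(nearest)


# A toy three-color bar: blue = 20, green = 30, red = 40 degrees C.
reference = build_colorbar_reference([(0, 0, 255), (0, 255, 0), (255, 0, 0)], 20.0, 40.0)
print(knn_temperature((250, 5, 5), reference, k=1))
```

A real scale bar has many more samples. With more samples, k > 1 smooths out JPEG color noise at the cost of slightly blurring sharp color transitions.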

3.3. A Temperature Feature Extraction Method for Infrared Images Based on an Optimized OTSU Algorithm

1. Extraction of maximum and minimum temperatures

2. Extraction of average temperature
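The two extraction steps above can be sketched as follows. Once each pixel has a temperature and the OTSU threshold has segmented the equipment region, the maximum, minimum, and average temperatures are simple statistics over that region. The sketch assumes that the foreground (equipment) consists of pixels whose gray level exceeds the threshold, that images are given as lists of rows, and that the function name `region_temperature_features` is hypothetical.

```python
def region_temperature_features(temps, gray, threshold):
    """Return (max, min, mean) temperature over the segmented region, taken here
    as the pixels whose gray level is above the OTSU threshold."""
    region = [
        t
        for t_row, g_row in zip(temps, gray)
        for t, g in zip(t_row, g_row)
        if g > threshold
    ]
    if not region:
        raise ValueError("segmented region is empty")
    return max(region), min(region), sum(region) / len(region)


temps = [[20.0, 35.0], [40.0, 22.0]]  # per-pixel temperatures in degrees C
gray = [[10, 120], [200, 30]]         # gray levels of the same pixels
print(region_temperature_features(temps, gray, threshold=100))
```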

4. Method Validation

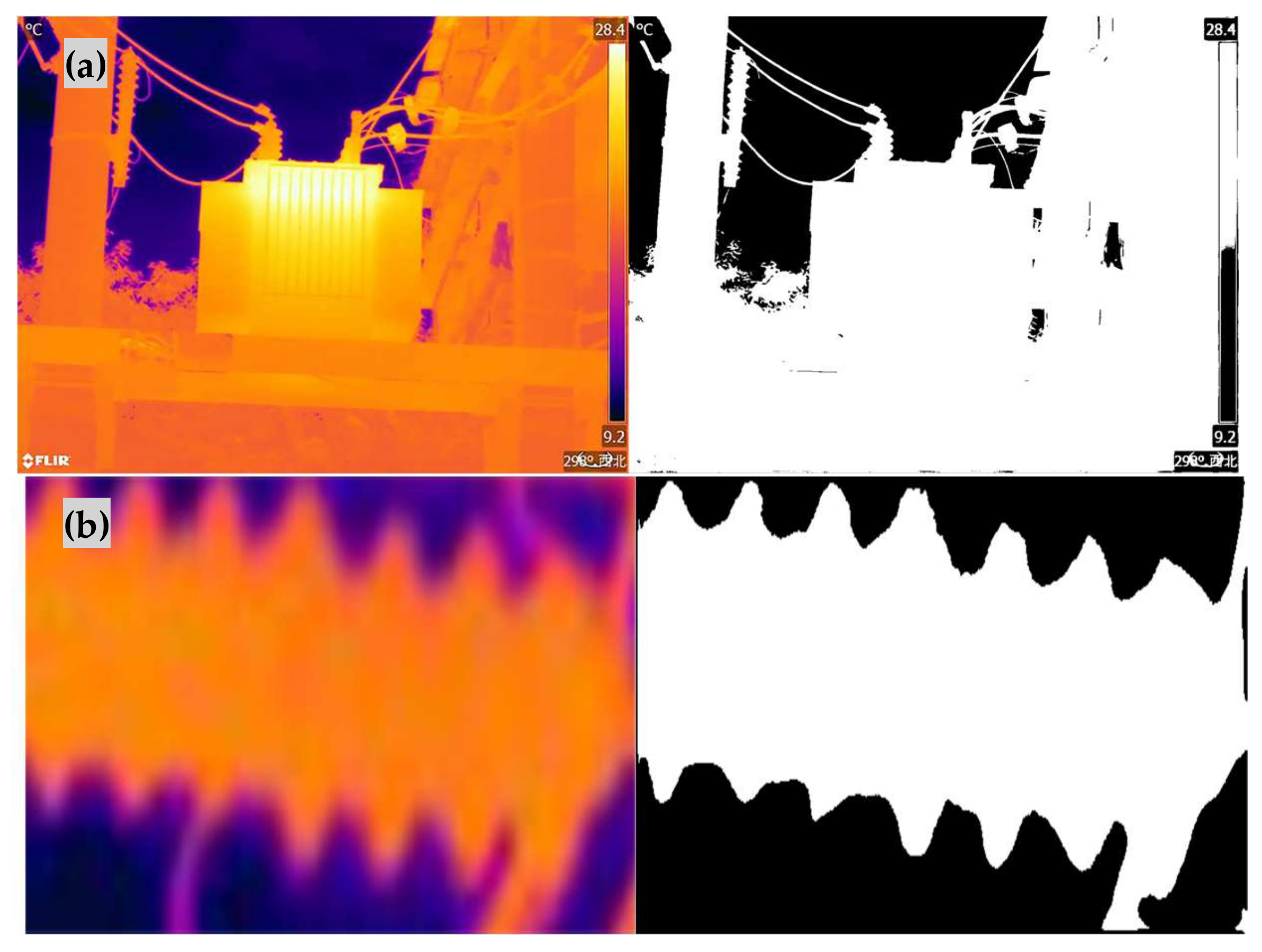

4.1. Infrared Image Segmentation
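The segmentation comparison reports PSNR for each method. For reference, the sketch below implements the standard definition for 8-bit images, PSNR = 10·log10(255² / MSE). This section does not state which pair of images the authors compared (for example, original versus segmented output), so the inputs here are just two equally sized grayscale images given as lists of rows. The function name `psnr` is illustrative.

```python
import math


def psnr(original, processed, max_value=255):
    """Peak signal-to-noise ratio in dB between two equally sized gray images."""
    if len(original) != len(processed) or any(
        len(a) != len(b) for a, b in zip(original, processed)
    ):
        raise ValueError("images must have the same size")
    diffs = [(a - b) ** 2 for row_a, row_b in zip(original, processed) for a, b in zip(row_a, row_b)]
    mse = sum(diffs) / len(diffs)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


print(psnr([[0, 0], [0, 0]], [[0, 0], [0, 255]]))  # one of four pixels fully wrong
```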

4.2. Temperature Feature Vector Extraction

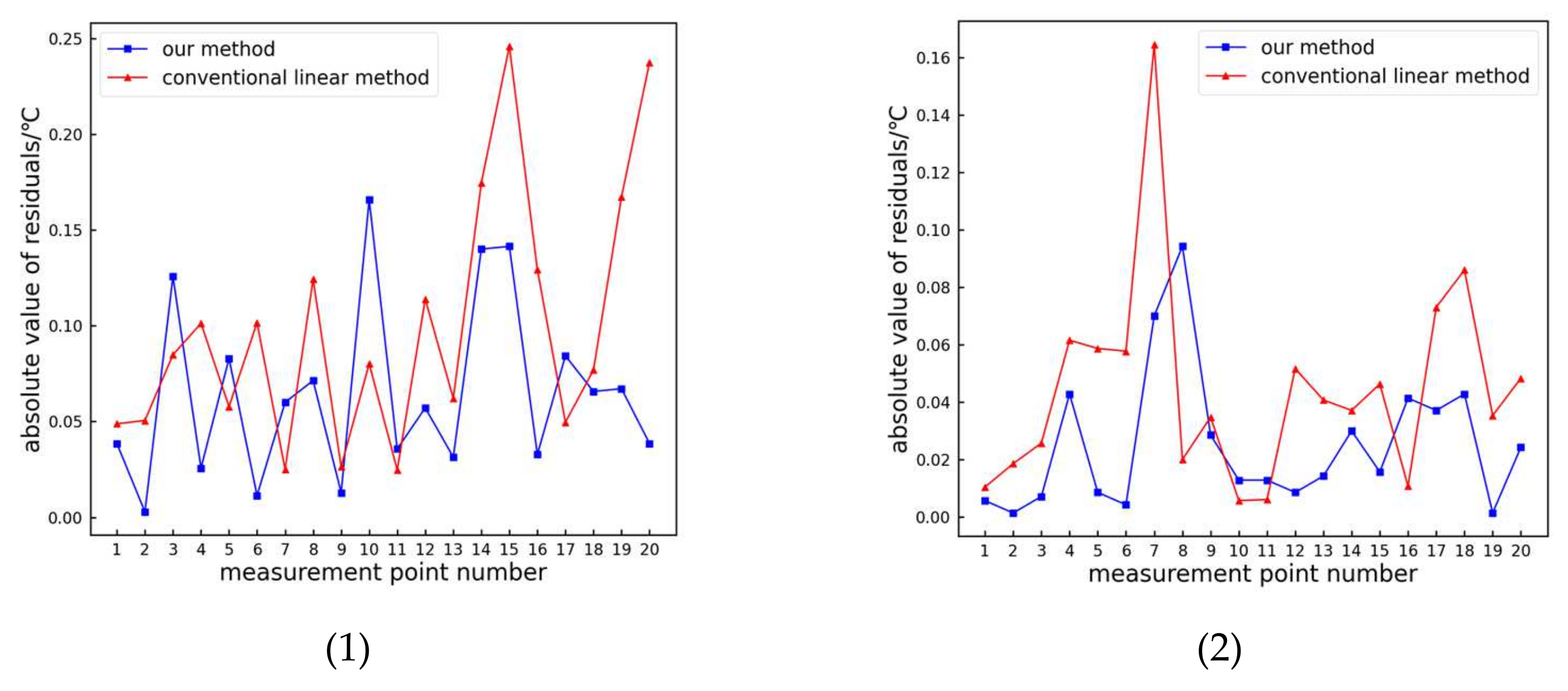

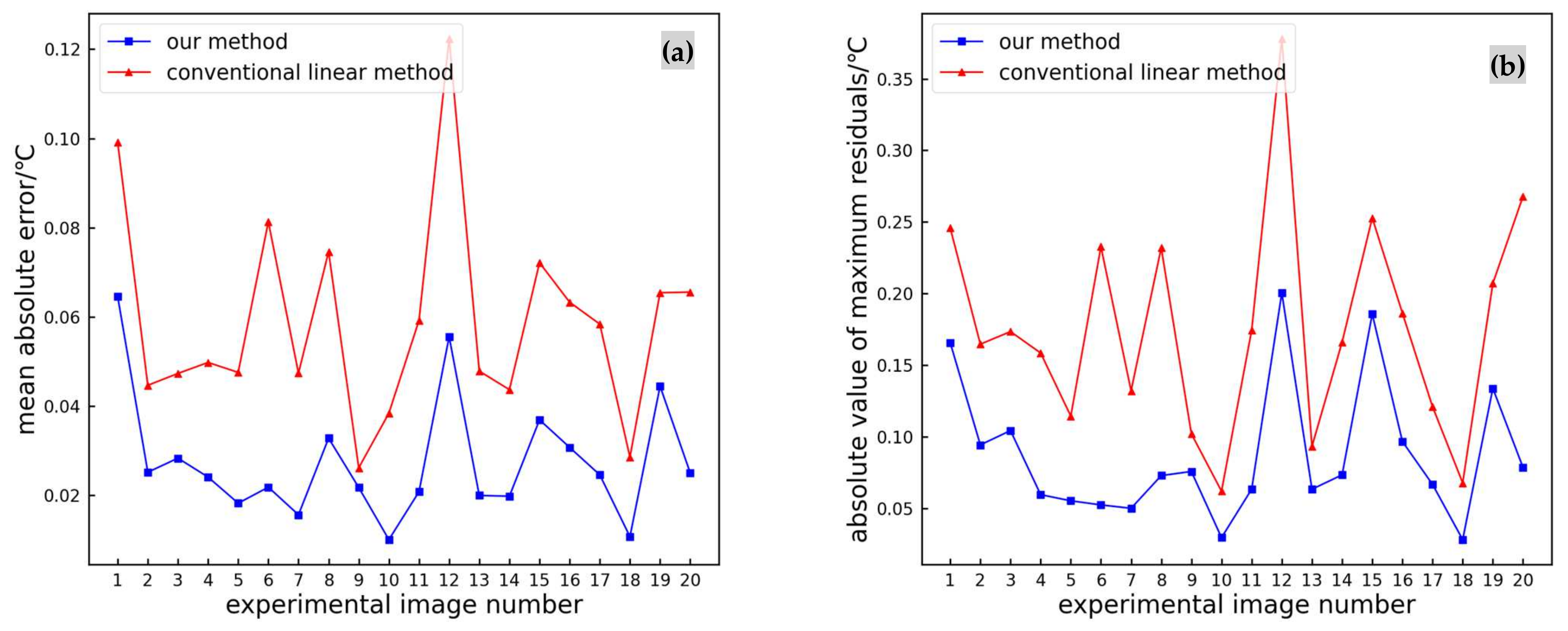

4.2.1. Extraction of Temperature Values from Normal Infrared Images

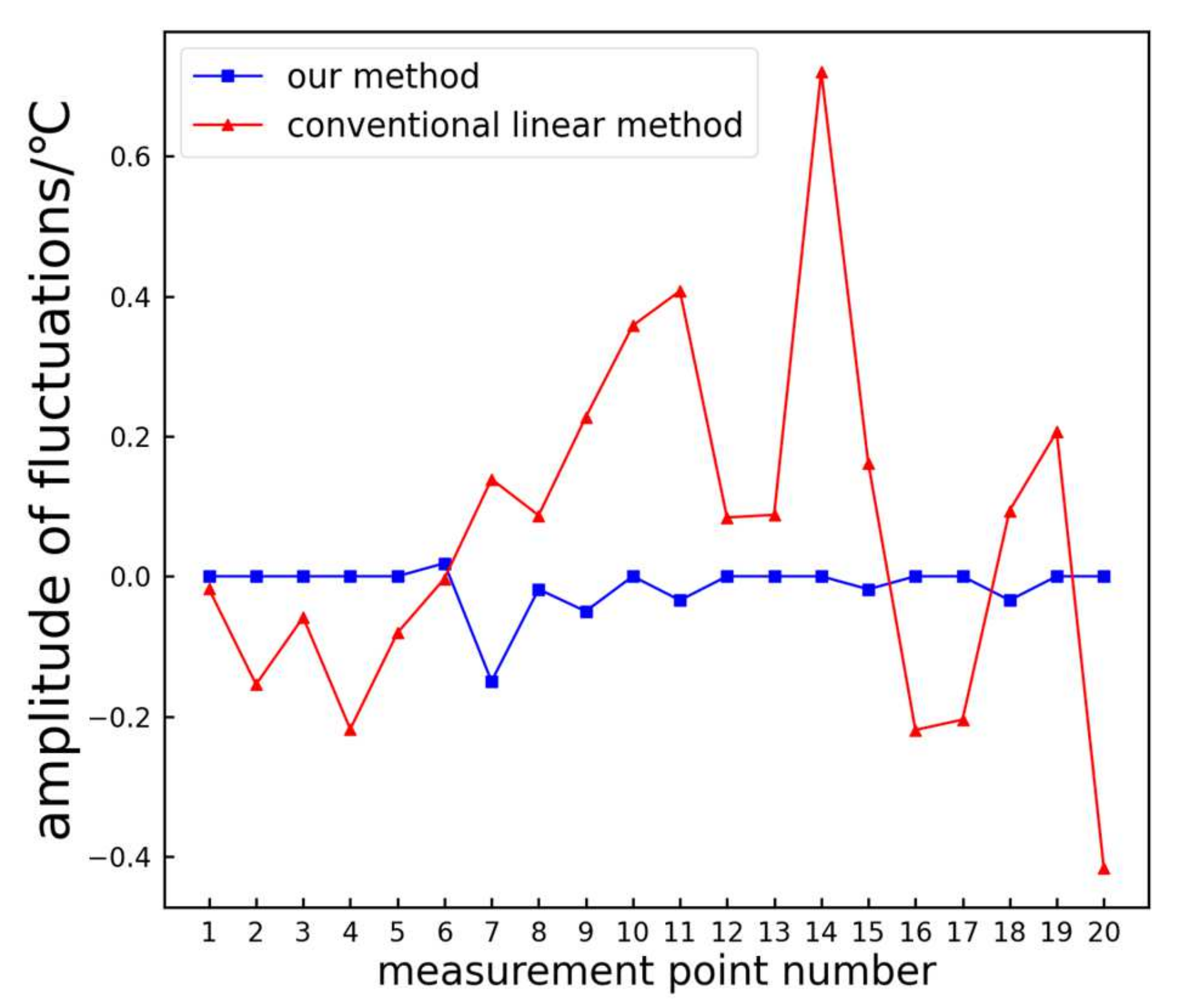

4.2.2. Extraction of Temperature Values from Infrared Images with Added Noise

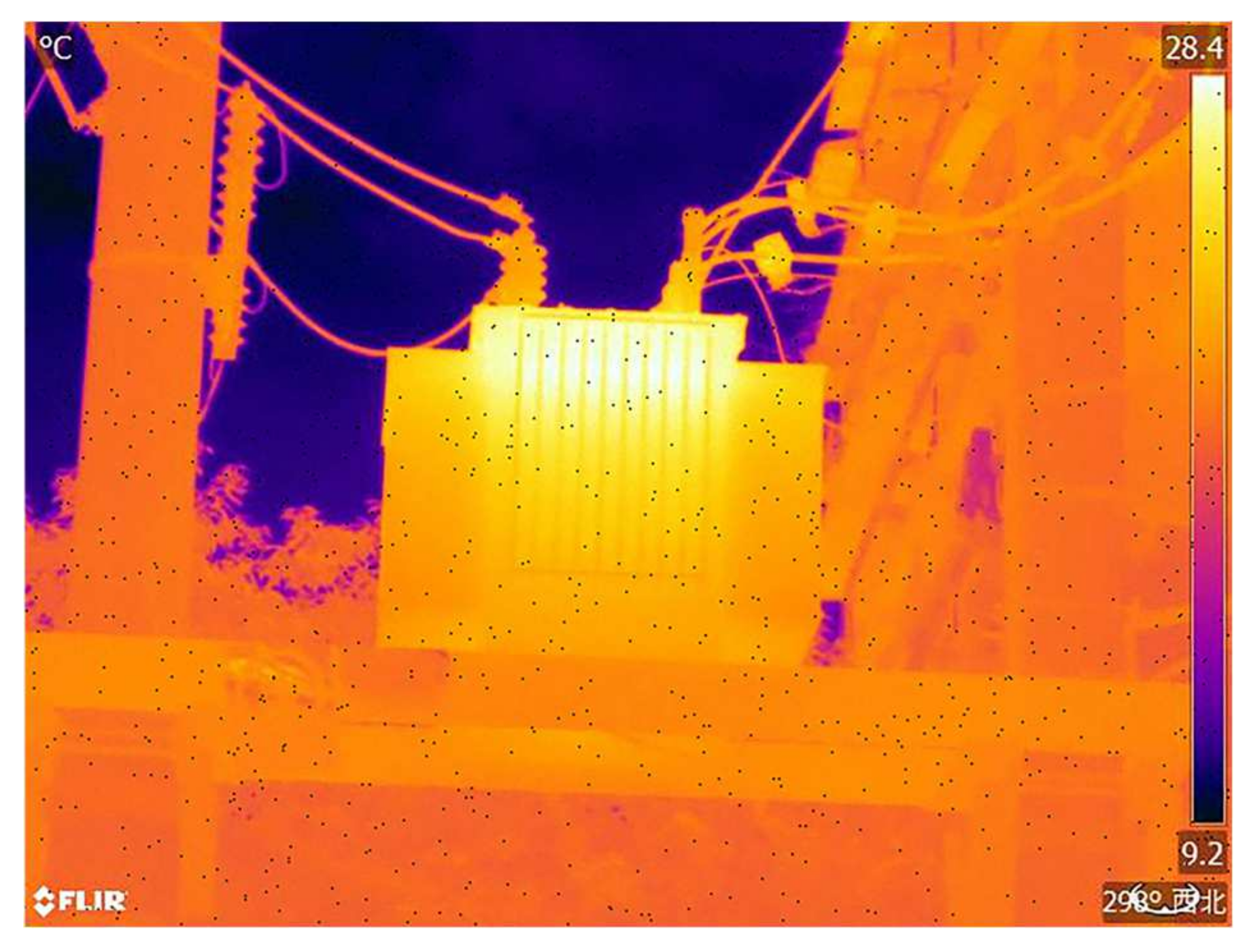

4.2.3. Extraction of Temperature Feature Vectors from Power Equipment Infrared Images

5. Conclusions

- Using the Grey Wolf Optimizer (GWO) to search for the threshold that maximizes the OTSU between-class variance yields a GWO-optimized OTSU segmentation algorithm that finds the optimal segmentation threshold faster. In the experiments, the proposed method reduced the average computation time for the optimal threshold by 83.99% while maintaining a similar segmentation effect.

- The K-nearest neighbor (KNN) algorithm is used to extract temperature values from infrared images of power equipment, which addresses the large temperature errors of the traditional linear fitting method. Compared with the traditional linear method, the proposed method reduces the absolute residual by 73.68% and the average residual by 78.95%, demonstrating higher accuracy.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Metric | Image | GWO–OTSU | SSA–OTSU | GA–OTSU | WOA–OTSU | OTSU |
|---|---|---|---|---|---|---|
| Threshold | (a) | 90 | 90 | 92 | 90 | 90 |
| | (b) | 93 | 93 | 93 | 93 | 93 |
| | (c) | 112 | 112 | 111 | 113 | 112 |
| | (d) | 96 | 96 | 94 | 94 | 96 |
| PSNR/dB | (a) | 27.91 | 27.91 | 27.92 | 27.91 | 27.91 |
| | (b) | 27.40 | 27.40 | 27.40 | 27.40 | 27.40 |
| | (c) | 28.41 | 28.41 | 28.34 | 28.40 | 28.41 |
| | (d) | 28.01 | 28.01 | 28.00 | 28.00 | 28.01 |
| SSIM | (a) | 0.5030 | 0.5030 | 0.4999 | 0.5030 | 0.5030 |
| | (b) | 0.3624 | 0.3624 | 0.3624 | 0.3624 | 0.3624 |
| | (c) | 0.4723 | 0.4723 | 0.4787 | 0.4665 | 0.4723 |
| | (d) | 0.2390 | 0.2390 | 0.2414 | 0.2414 | 0.2390 |
| Elapsed time/ms | (a) | 21.87 | 43.76 | 42.29 | 38.68 | 147.82 |
| | (b) | 21.77 | 43.86 | 39.83 | 38.63 | 134.38 |
| | (c) | 22.04 | 42.94 | 40.62 | 38.26 | 133.76 |
| | (d) | 21.98 | 44.24 | 39.76 | 38.13 | 131.56 |
| Average running time/ms | All | 21.915 | 43.7 | 40.625 | 38.425 | 136.88 |
| Average running-time reduction vs. OTSU/% | All | 83.99 | 68.07 | 70.32 | 71.93 | |
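The last row of the table above appears to be the relative time saving against exhaustive OTSU, (T_OTSU − T_method)/T_OTSU × 100. Recomputing it from the per-image elapsed times reproduces the tabulated averages and percentages. The short check below copies the times from the table; the dictionary keys use plain hyphens.

```python
# Elapsed times (ms) for images (a)-(d), copied from the comparison table.
times = {
    "GWO-OTSU": [21.87, 21.77, 22.04, 21.98],
    "SSA-OTSU": [43.76, 43.86, 42.94, 44.24],
    "GA-OTSU": [42.29, 39.83, 40.62, 39.76],
    "WOA-OTSU": [38.68, 38.63, 38.26, 38.13],
    "OTSU": [147.82, 134.38, 133.76, 131.56],
}

averages = {name: sum(v) / len(v) for name, v in times.items()}
baseline = averages["OTSU"]  # exhaustive OTSU is the reference
reduction = {
    name: 100.0 * (baseline - avg) / baseline
    for name, avg in averages.items()
    if name != "OTSU"
}
for name, r in reduction.items():
    print(f"{name}: {averages[name]:.3f} ms on average, {r:.2f}% less time than OTSU")
```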

| Metric | Image | GWO–OTSU | SSA–OTSU | GA–OTSU | WOA–OTSU | OTSU |
|---|---|---|---|---|---|---|
| Threshold | (1) | 50 | 50 | 50 | 51 | 50 |
| | (2) | 84 | 84 | 83 | 85 | 84 |
| | (3) | 28 | 28 | 28 | 27 | 28 |
| | (4) | 63 | 63 | 63 | 63 | 63 |
| | (5) | 54 | 54 | 54 | 54 | 54 |
| | (6) | 77 | 77 | 78 | 78 | 77 |
| | (7) | 41 | 41 | 39 | 42 | 41 |
| | (8) | 33 | 33 | 34 | 33 | 33 |
| | (9) | 43 | 43 | 43 | 42 | 43 |
| | (10) | 37 | 37 | 36 | 38 | 37 |
| | (11) | 67 | 67 | 64 | 69 | 67 |
| | (12) | 76 | 76 | 77 | 74 | 76 |
| | (13) | 35 | 35 | 32 | 34 | 35 |
| | (14) | 84 | 84 | 84 | 82 | 84 |
| | (15) | 16 | 16 | 19 | 16 | 16 |
| | (16) | 31 | 31 | 33 | 30 | 31 |
| | (17) | 62 | 62 | 59 | 63 | 62 |
| | (18) | 45 | 45 | 45 | 46 | 45 |
| | (19) | 66 | 66 | 66 | 69 | 66 |
| | (20) | 60 | 60 | 63 | 58 | 60 |
| Elapsed time/ms | (1) | 22.66 | 43.96 | 40.63 | 37.08 | 131.47 |
| | (2) | 22.15 | 43.79 | 37.12 | 40.07 | 131.16 |
| | (3) | 22.29 | 44.76 | 39.57 | 39.59 | 136.79 |
| | (4) | 22.87 | 45.83 | 40.96 | 36.65 | 133.47 |
| | (5) | 21.85 | 44.66 | 40.08 | 38.09 | 139.70 |
| | (6) | 19.99 | 42.37 | 36.50 | 42.55 | 131.48 |
| | (7) | 21.01 | 42.67 | 34.42 | 38.40 | 133.83 |
| | (8) | 20.29 | 42.53 | 39.08 | 37.19 | 132.44 |
| | (9) | 20.19 | 41.64 | 33.96 | 34.69 | 132.31 |
| | (10) | 21.19 | 42.02 | 38.04 | 37.92 | 134.32 |
| | (11) | 20.79 | 40.70 | 35.23 | 35.58 | 133.84 |
| | (12) | 20.22 | 44.27 | 34.52 | 37.59 | 133.85 |
| | (13) | 21.45 | 41.37 | 32.92 | 38.22 | 139.19 |
| | (14) | 20.87 | 42.42 | 39.84 | 37.78 | 131.57 |
| | (15) | 20.98 | 42.48 | 42.56 | 35.42 | 131.56 |
| | (16) | 21.97 | 42.50 | 38.28 | 35.77 | 131.17 |
| | (17) | 20.51 | 42.46 | 38.51 | 37.09 | 130.29 |
| | (18) | 20.77 | 42.35 | 42.08 | 35.99 | 132.69 |
| | (19) | 20.50 | 43.87 | 39.10 | 37.96 | 132.79 |
| | (20) | 21.83 | 43.67 | 40.77 | 37.65 | 135.10 |
| Average running time/ms | All | 21.219 | 43.016 | 38.2085 | 37.564 | 133.451 |
| Average running-time reduction vs. OTSU/% | All | 84.10 | 67.77 | 71.37 | 71.85 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, X.; Zhang, Z.; Hao, Y.; Zhao, H.; Yang, Y. Optimized OTSU Segmentation Algorithm-Based Temperature Feature Extraction Method for Infrared Images of Electrical Equipment. Sensors 2024, 24, 1126. https://doi.org/10.3390/s24041126
