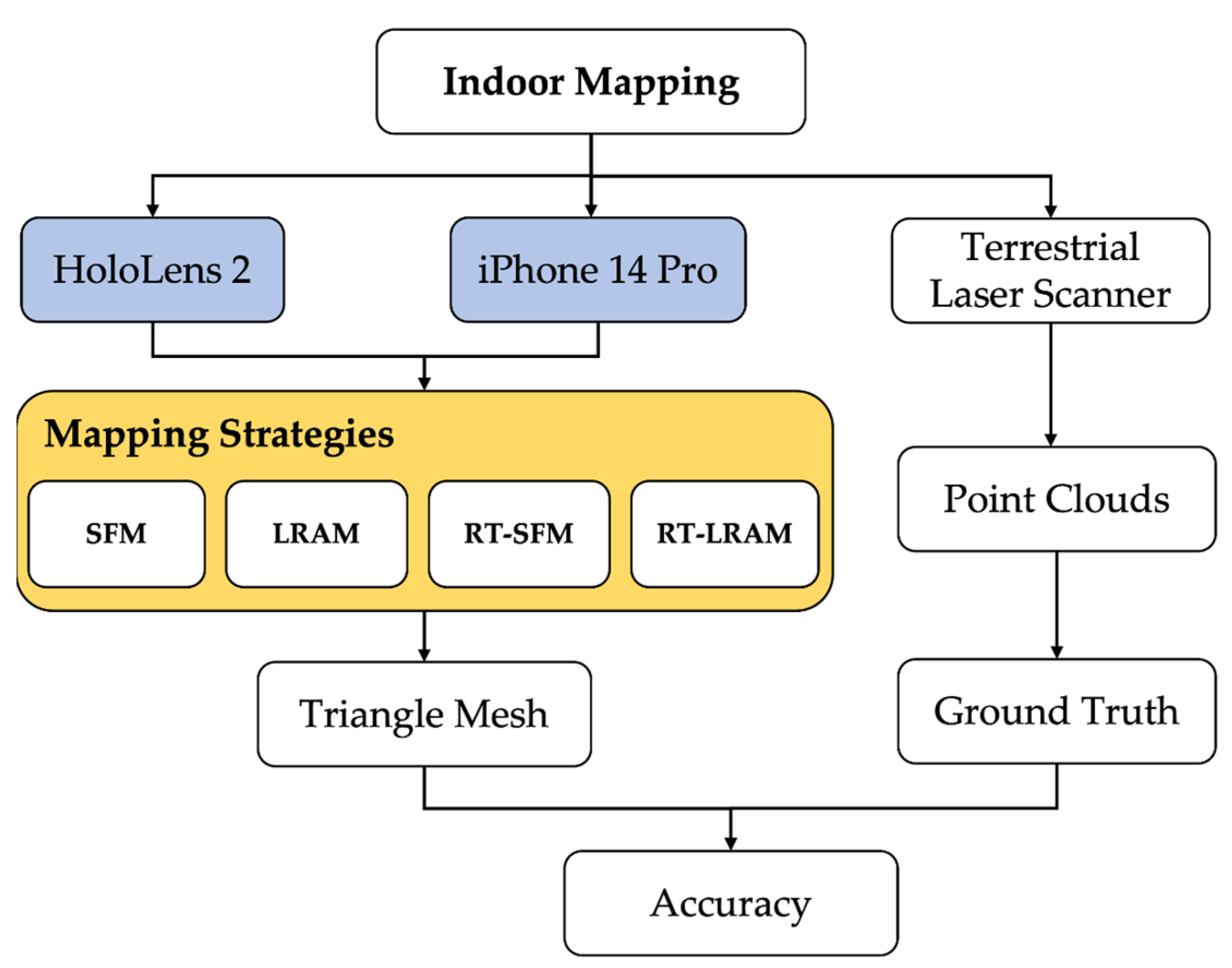

Indoor Mapping with Entertainment Devices: Evaluating the Impact of Different Mapping Strategies for Microsoft HoloLens 2 and Apple iPhone 14 Pro

Abstract

1. Introduction

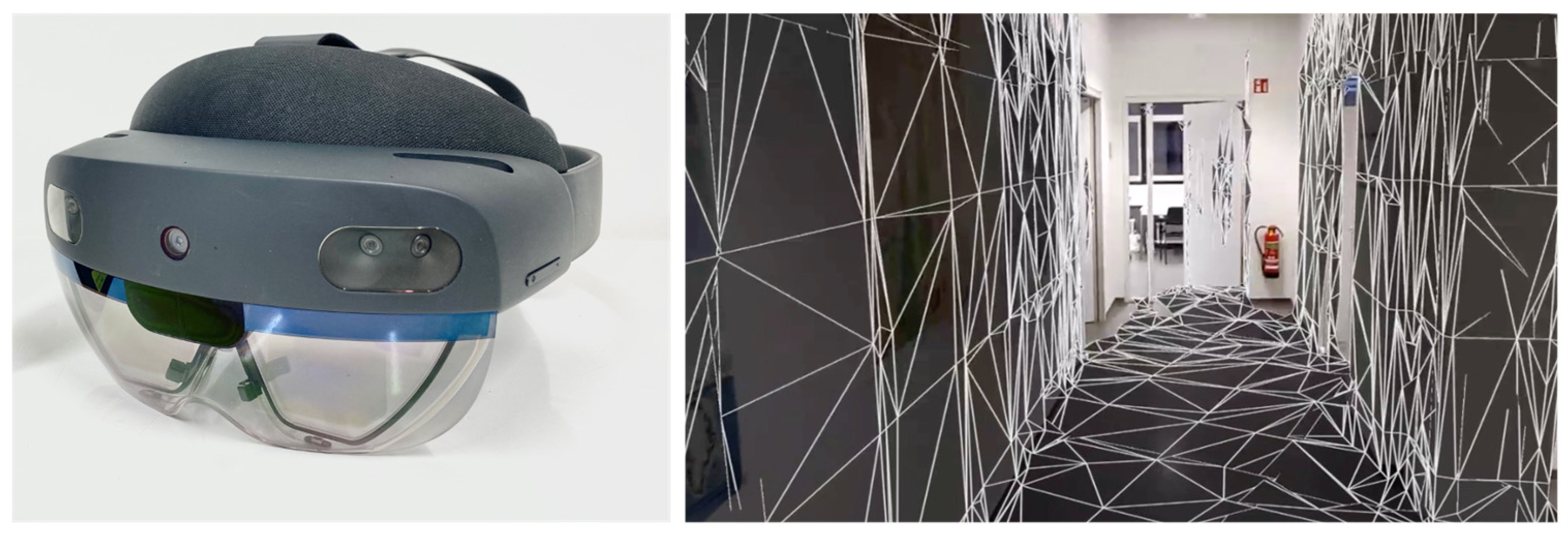

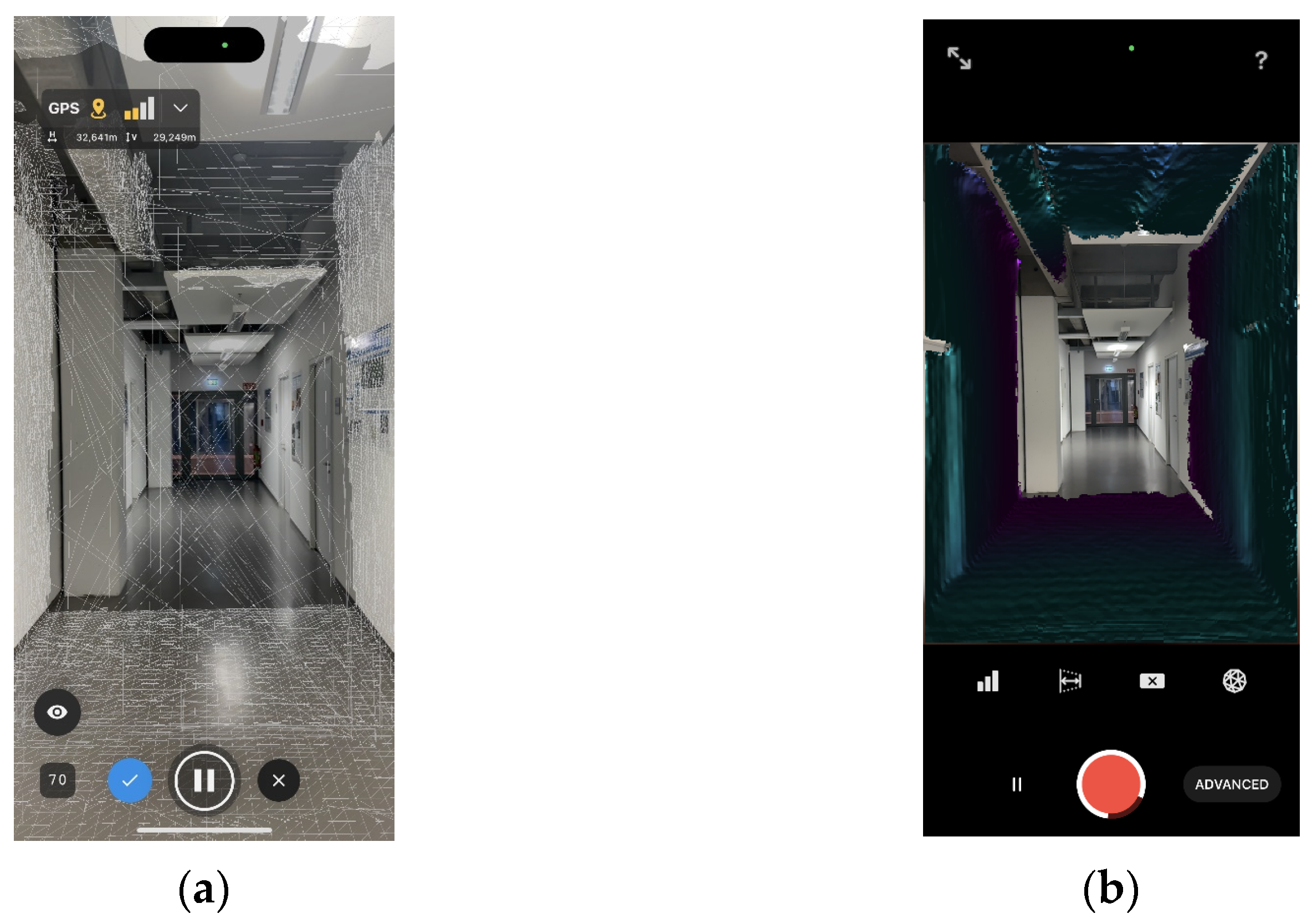

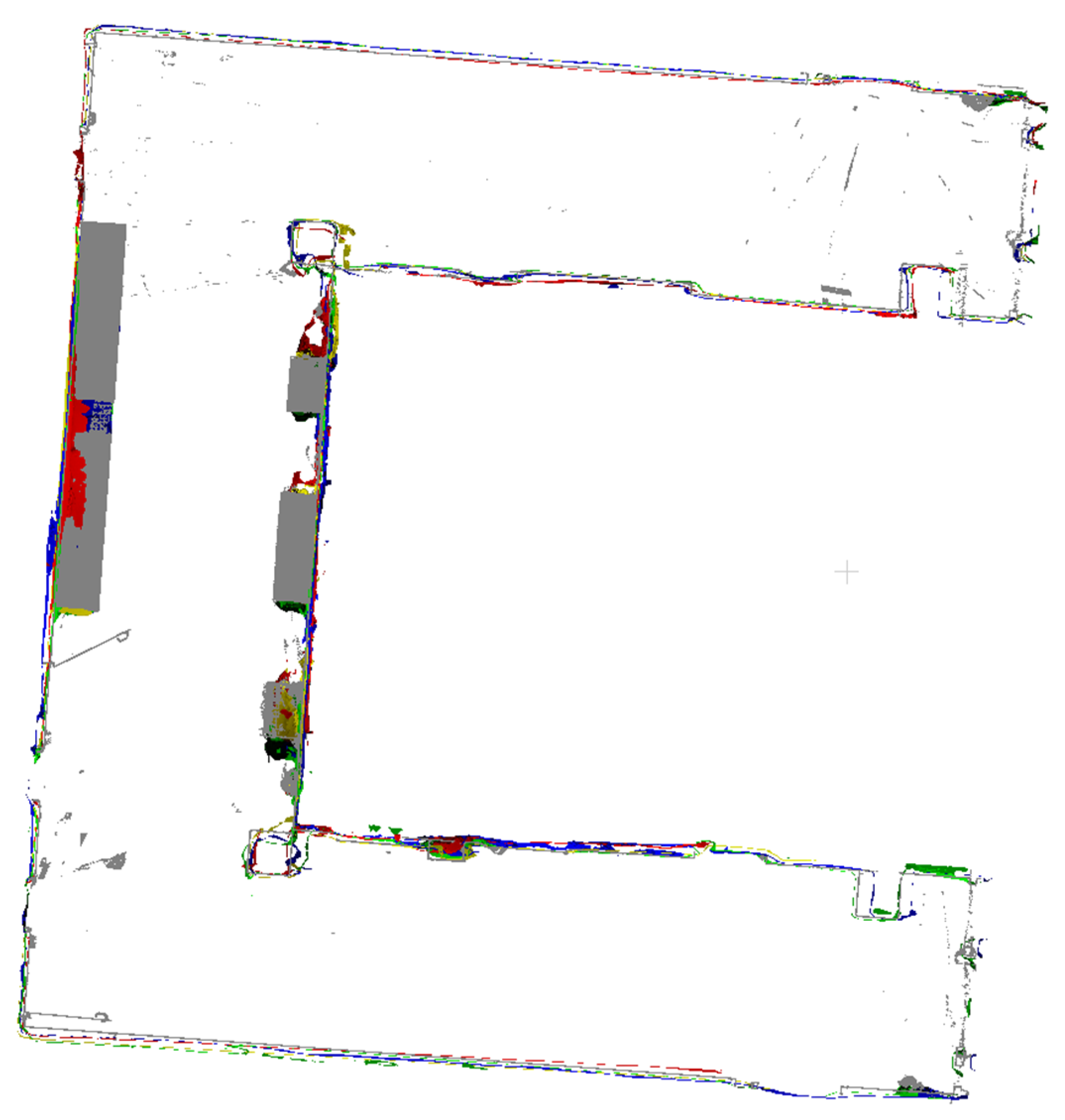

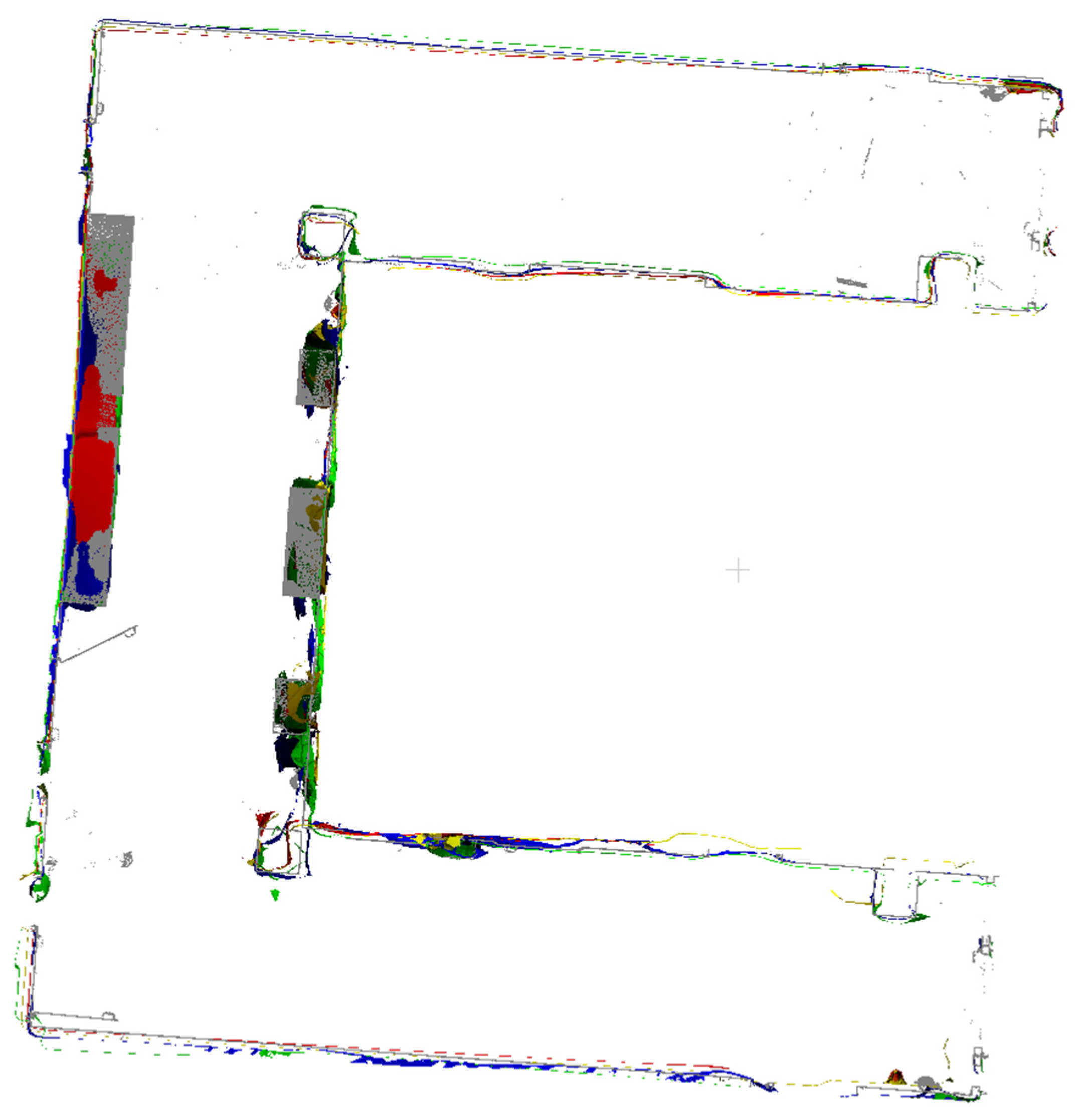

2. Materials and Methods

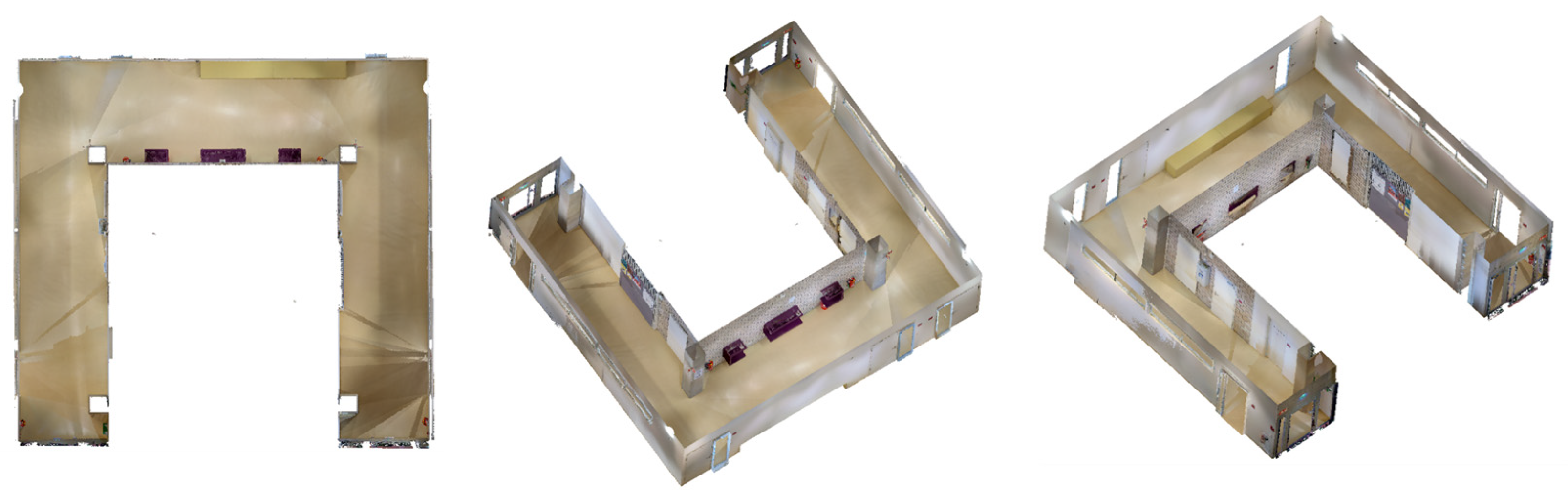

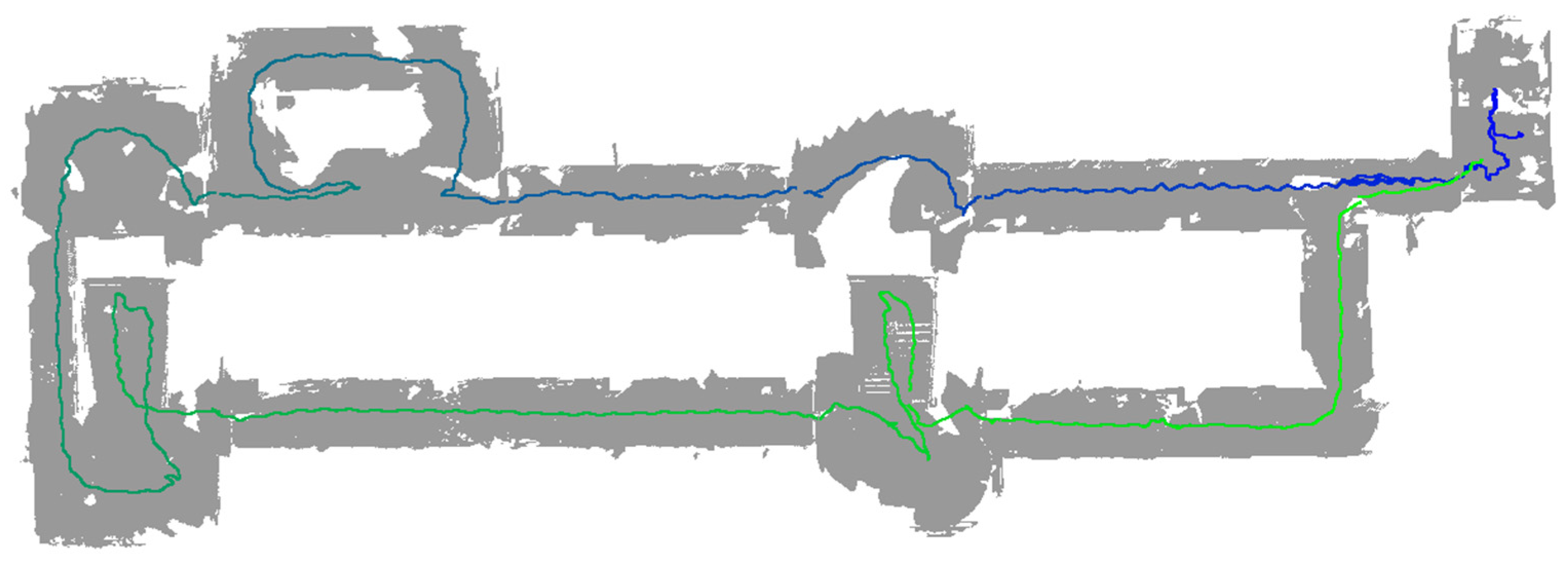

2.1. Experimental Space

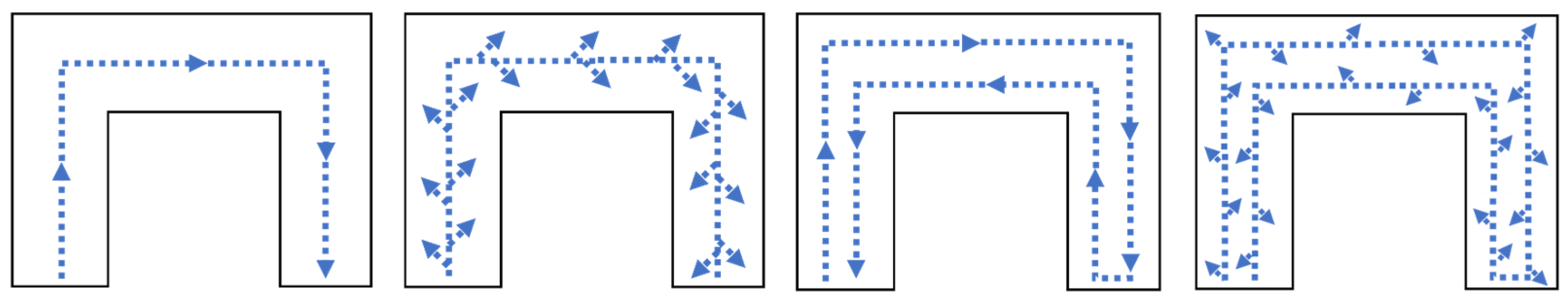

2.2. Strategies Description

- Straight-Forward Mapping (SFM): The devices are oriented directly ahead during mapping.

- Left–Right Alternating Mapping (LRAM): The devices oscillate horizontally, alternating left and right, during mapping.

- Round-Trip Straight-Forward Mapping (RT-SFM): The user walks a round trip through the experimental environment while the devices are oriented directly ahead during mapping.

- Round-Trip Left–Right Alternating Mapping (RT-LRAM): As in RT-SFM, the user walks a round trip, but the devices oscillate horizontally during mapping.
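
The four strategies above form a two-by-two design: the walking path (a single pass, which this sketch calls "one-way", or a round trip) crossed with the device motion (straight ahead or left–right alternating). The Python sketch below is a hypothetical encoding of that structure; the factor labels and the `strategy_code` helper are illustrative assumptions, not part of the authors' workflow.

```python
from itertools import product

# Hypothetical encoding of the four mapping strategies as combinations of
# two factors: the walking path and the device motion.
# "one-way" is an assumed label for the non-round-trip strategies.
PATHS = {"one-way": "", "round-trip": "RT-"}
MOTIONS = {"straight-forward": "SFM", "left-right alternating": "LRAM"}


def strategy_code(path: str, motion: str) -> str:
    """Return the abbreviation used in the text for a path/motion combination."""
    return PATHS[path] + MOTIONS[motion]


if __name__ == "__main__":
    # Enumerate all four combinations of the two factors.
    for path, motion in product(PATHS, MOTIONS):
        print(f"{path:10} + {motion:22} -> {strategy_code(path, motion)}")
```

Treating the strategies as two crossed factors makes it easy to compare, for example, all round-trip strategies against all single-pass strategies.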

2.3. Evaluation Method

3. Results

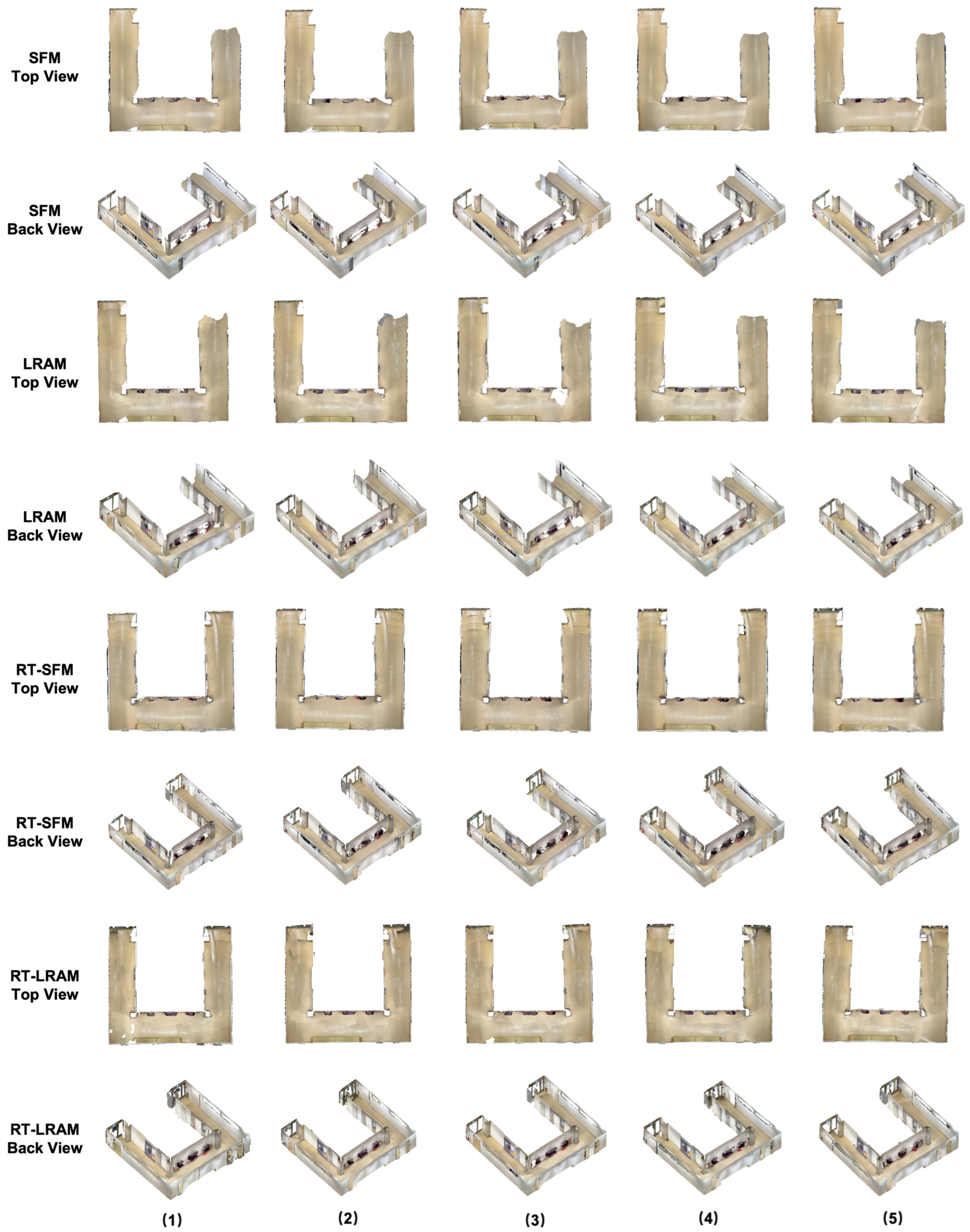

3.1. Indoor Mapping Outputs

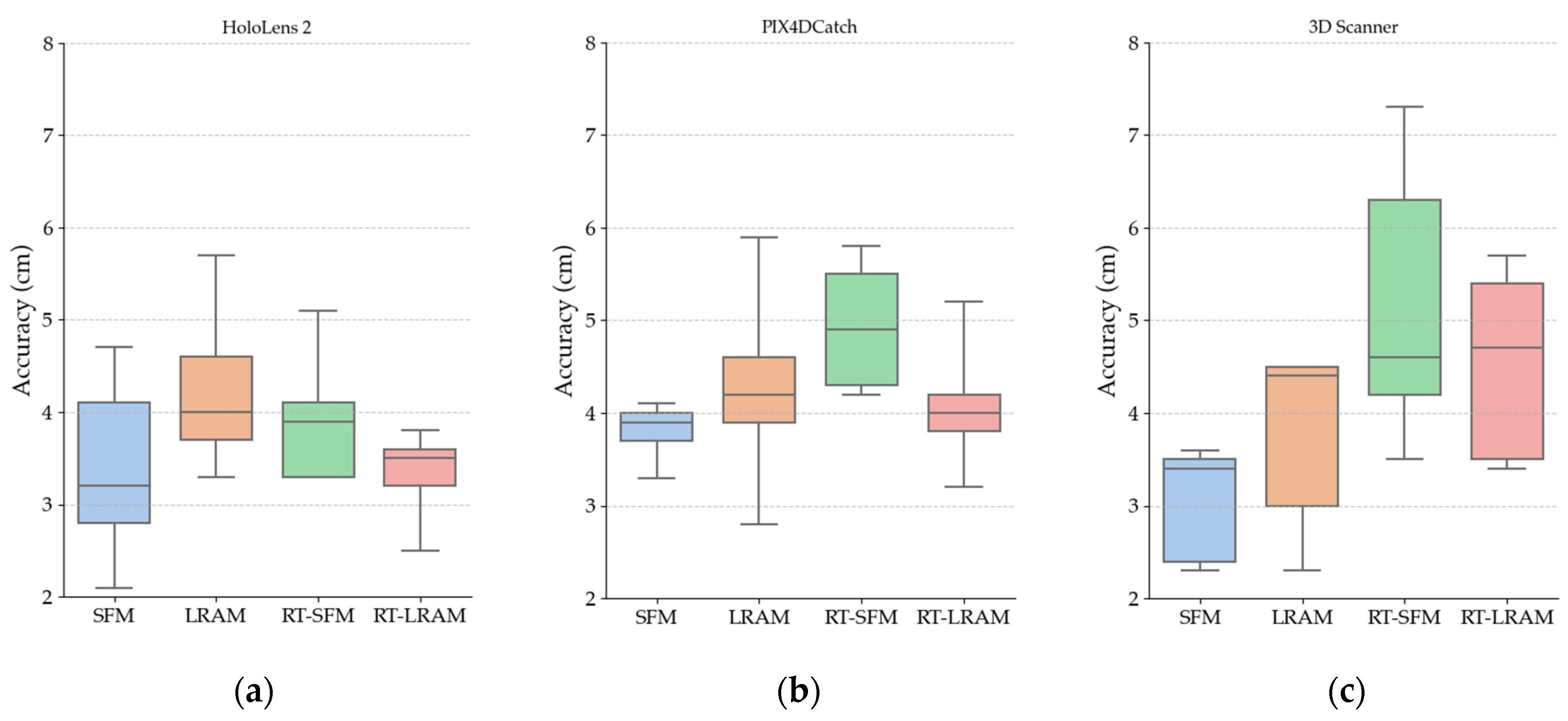

3.2. Indoor Mapping Accuracy

4. Discussion

4.1. Indoor Mapping Outputs Analysis

4.2. Indoor Mapping Accuracy Evaluation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Devices | Sequence | SFM | LRAM | RT-SFM | RT-LRAM |
|---|---|---|---|---|---|
| HoloLens 2 | 1 | 2.1 | 4.6 | 3.9 * | 3.2 |
| | 2 | 2.8 | 5.7 | 4.1 | 3.5 * |
| | 3 | 4.7 | 4.0 * | 3.3 | 2.5 |
| | 4 | 3.2 * | 3.7 | 5.1 | 3.6 |
| | 5 | 4.1 | 3.3 | 3.3 | 3.8 |
| | Average | 3.4 | 4.3 | 3.9 | 3.3 |
| iPhone 14 Pro with PIX4Dcatch | 1 | 3.3 | 3.9 | 4.2 | 4.2 |
| | 2 | 4.0 | 5.9 | 4.9 * | 3.8 |
| | 3 | 3.9 * | 2.8 | 4.3 | 3.2 |
| | 4 | 4.1 | 4.2 * | 5.5 | 4.0 * |
| | 5 | 3.7 | 4.6 | 5.8 | 5.2 |
| | Average | 3.8 | 4.3 | 4.9 | 4.1 |
| iPhone 14 Pro with 3D Scanner | 1 | 3.4 * | 4.5 | 3.5 | 3.5 |
| | 2 | 2.3 | 2.3 | 7.3 | 4.7 * |
| | 3 | 2.4 | 4.4 * | 4.2 | 5.7 |
| | 4 | 3.6 | 4.5 | 6.3 | 3.4 |
| | 5 | 3.5 | 3.0 | 4.6 * | 5.4 |
| | Average | 3.0 | 3.7 | 5.2 | 4.5 |
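
The Average rows appear to be the arithmetic means of the five sequences for each device and strategy, rounded to one decimal; for example, the HoloLens 2 SFM column gives (2.1 + 2.8 + 4.7 + 3.2 + 4.1) / 5 = 3.38, reported as 3.4. A minimal Python sketch of that consistency check for the first table follows. The dictionary and function names are illustrative assumptions; the values are copied verbatim from the table, and the units and the meaning of the asterisks are not stated in this excerpt.

```python
from statistics import mean

# Per-sequence values (sequences 1-5) copied from the first table,
# keyed by device and then by mapping strategy.
TABLE_1 = {
    "HoloLens 2": {
        "SFM": [2.1, 2.8, 4.7, 3.2, 4.1],
        "LRAM": [4.6, 5.7, 4.0, 3.7, 3.3],
        "RT-SFM": [3.9, 4.1, 3.3, 5.1, 3.3],
        "RT-LRAM": [3.2, 3.5, 2.5, 3.6, 3.8],
    },
    "iPhone 14 Pro with PIX4Dcatch": {
        "SFM": [3.3, 4.0, 3.9, 4.1, 3.7],
        "LRAM": [3.9, 5.9, 2.8, 4.2, 4.6],
        "RT-SFM": [4.2, 4.9, 4.3, 5.5, 5.8],
        "RT-LRAM": [4.2, 3.8, 3.2, 4.0, 5.2],
    },
    "iPhone 14 Pro with 3D Scanner": {
        "SFM": [3.4, 2.3, 2.4, 3.6, 3.5],
        "LRAM": [4.5, 2.3, 4.4, 4.5, 3.0],
        "RT-SFM": [3.5, 7.3, 4.2, 6.3, 4.6],
        "RT-LRAM": [3.5, 4.7, 5.7, 3.4, 5.4],
    },
}

# "Average" row as reported in the same table.
REPORTED_AVERAGE = {
    "HoloLens 2": {"SFM": 3.4, "LRAM": 4.3, "RT-SFM": 3.9, "RT-LRAM": 3.3},
    "iPhone 14 Pro with PIX4Dcatch": {"SFM": 3.8, "LRAM": 4.3, "RT-SFM": 4.9, "RT-LRAM": 4.1},
    "iPhone 14 Pro with 3D Scanner": {"SFM": 3.0, "LRAM": 3.7, "RT-SFM": 5.2, "RT-LRAM": 4.5},
}


def check_averages(table, reported, tol=0.05):
    """Return (device, strategy, mean) triples whose mean disagrees with the reported average."""
    mismatches = []
    for device, strategies in table.items():
        for strategy, values in strategies.items():
            m = mean(values)
            # A one-decimal value is consistent if the exact mean lies within
            # half a unit (0.05) of it; 1e-9 absorbs floating-point noise.
            if abs(m - reported[device][strategy]) > tol + 1e-9:
                mismatches.append((device, strategy, m))
    return mismatches


if __name__ == "__main__":
    print(check_averages(TABLE_1, REPORTED_AVERAGE) or "all averages consistent")
```

An empty result means every reported average is consistent with the five sequences above it.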

| Devices | Sequence | SFM | LRAM | RT-SFM | RT-LRAM |
|---|---|---|---|---|---|
| HoloLens 2 | 1 | 3.4 | 5.5 | 3.9 | 3.5 |
| | 2 | 4.0 | 5.5 | 4.0 | 4.2 |
| | 3 | 5.3 | 4.4 * | 4.3 * | 3.0 |
| | 4 | 4.1 * | 3.9 | 5.0 | 3.6 * |
| | 5 | 4.8 | 4.1 | 4.3 | 3.8 |
| | Average | 4.3 | 4.7 | 4.3 | 3.6 |
| iPhone 14 Pro with PIX4Dcatch | 1 | 3.2 | 4.3 * | 4.2 | 4.8 |
| | 2 | 3.5 * | 5.2 | 4.4 * | 3.5 |
| | 3 | 3.5 | 3.7 | 4.3 | 3.8 |
| | 4 | 3.6 | 4.0 | 4.5 | 3.9 * |
| | 5 | 3.6 | 4.3 | 5.2 | 4.9 |
| | Average | 3.5 | 4.3 | 4.5 | 4.2 |
| iPhone 14 Pro with 3D Scanner | 1 | 3.7 | 4.9 | 4.0 | 3.8 |
| | 2 | 2.4 | 3.6 | 6.0 | 4.8 * |
| | 3 | 2.6 | 4.9 | 4.3 * | 6.5 |
| | 4 | 3.3 * | 4.5 * | 5.9 | 4.2 |
| | 5 | 3.9 | 3.8 | 4.2 | 5.1 |
| | Average | 3.2 | 4.3 | 4.9 | 4.9 |
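
The second table uses the same layout. The tables report only the per-sequence values and their average, so as an illustrative summary that is not part of the source, the spread of the five repeated sequences can be quantified alongside the mean. The sketch below does this for the HoloLens 2 rows; the choice of sample standard deviation and range as spread measures, and the `summarize` helper, are assumptions of this example.

```python
from statistics import mean, stdev

# HoloLens 2 rows (sequences 1-5) copied from the second table.
HOLOLENS2_TABLE_2 = {
    "SFM": [3.4, 4.0, 5.3, 4.1, 4.8],
    "LRAM": [5.5, 5.5, 4.4, 3.9, 4.1],
    "RT-SFM": [3.9, 4.0, 4.3, 5.0, 4.3],
    "RT-LRAM": [3.5, 4.2, 3.0, 3.6, 3.8],
}


def summarize(rows):
    """Mean, sample standard deviation and range of the sequences per strategy."""
    return {
        strategy: {
            "mean": mean(values),
            "stdev": stdev(values),  # sample (n - 1) standard deviation
            "range": max(values) - min(values),
        }
        for strategy, values in rows.items()
    }


if __name__ == "__main__":
    for strategy, stats in summarize(HOLOLENS2_TABLE_2).items():
        print(f"{strategy:8} mean={stats['mean']:.2f} "
              f"stdev={stats['stdev']:.2f} range={stats['range']:.1f}")
```

The same function can be applied to the other devices' rows by copying them into dictionaries of the same shape.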

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hou, J.; Hübner, P.; Schmidt, J.; Iwaszczuk, D. Indoor Mapping with Entertainment Devices: Evaluating the Impact of Different Mapping Strategies for Microsoft HoloLens 2 and Apple iPhone 14 Pro. Sensors 2024, 24, 1062. https://doi.org/10.3390/s24041062
