Material Visual Perception and Discharging Robot Control for Baijiu Fermented Grains in Underground Tank

Abstract

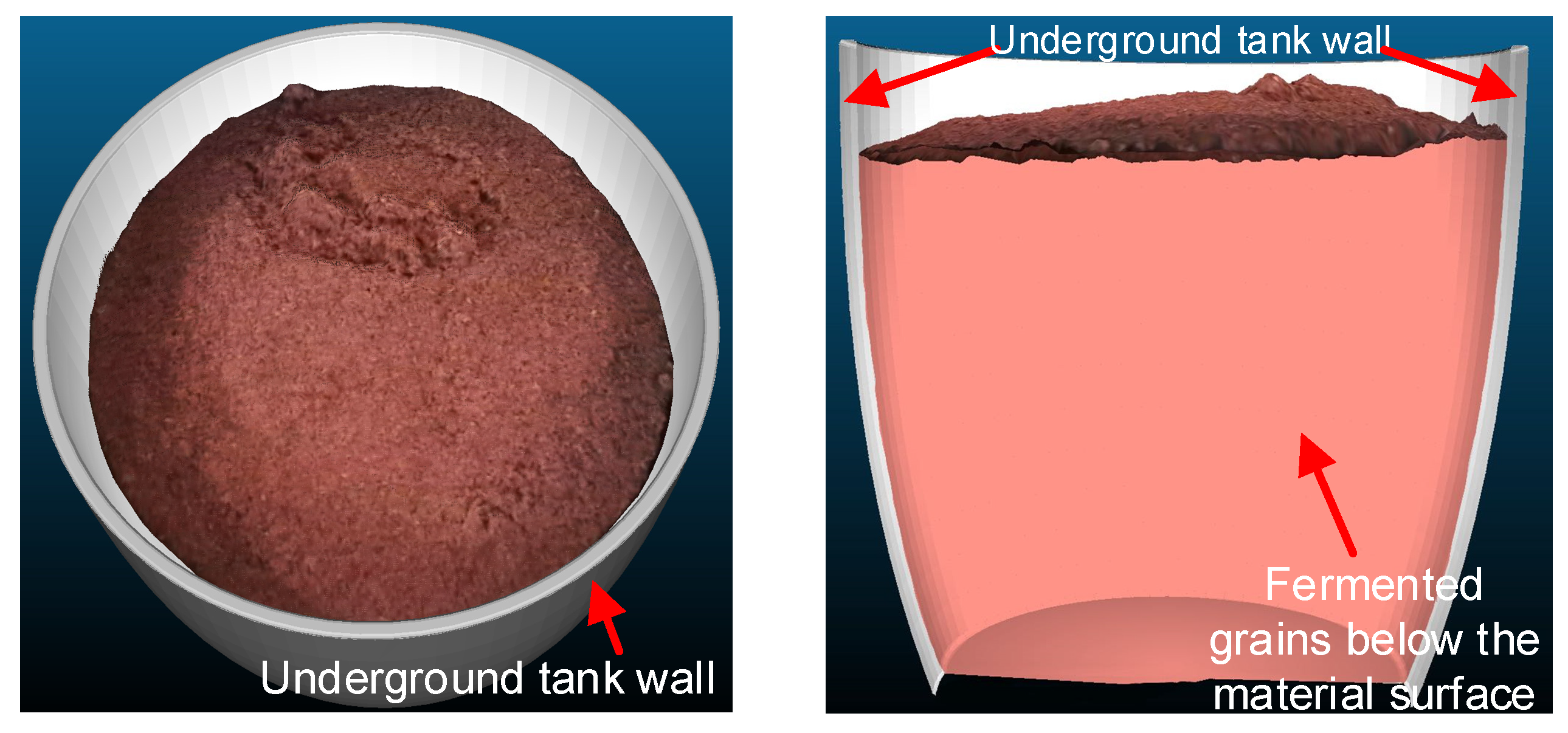

1. Introduction

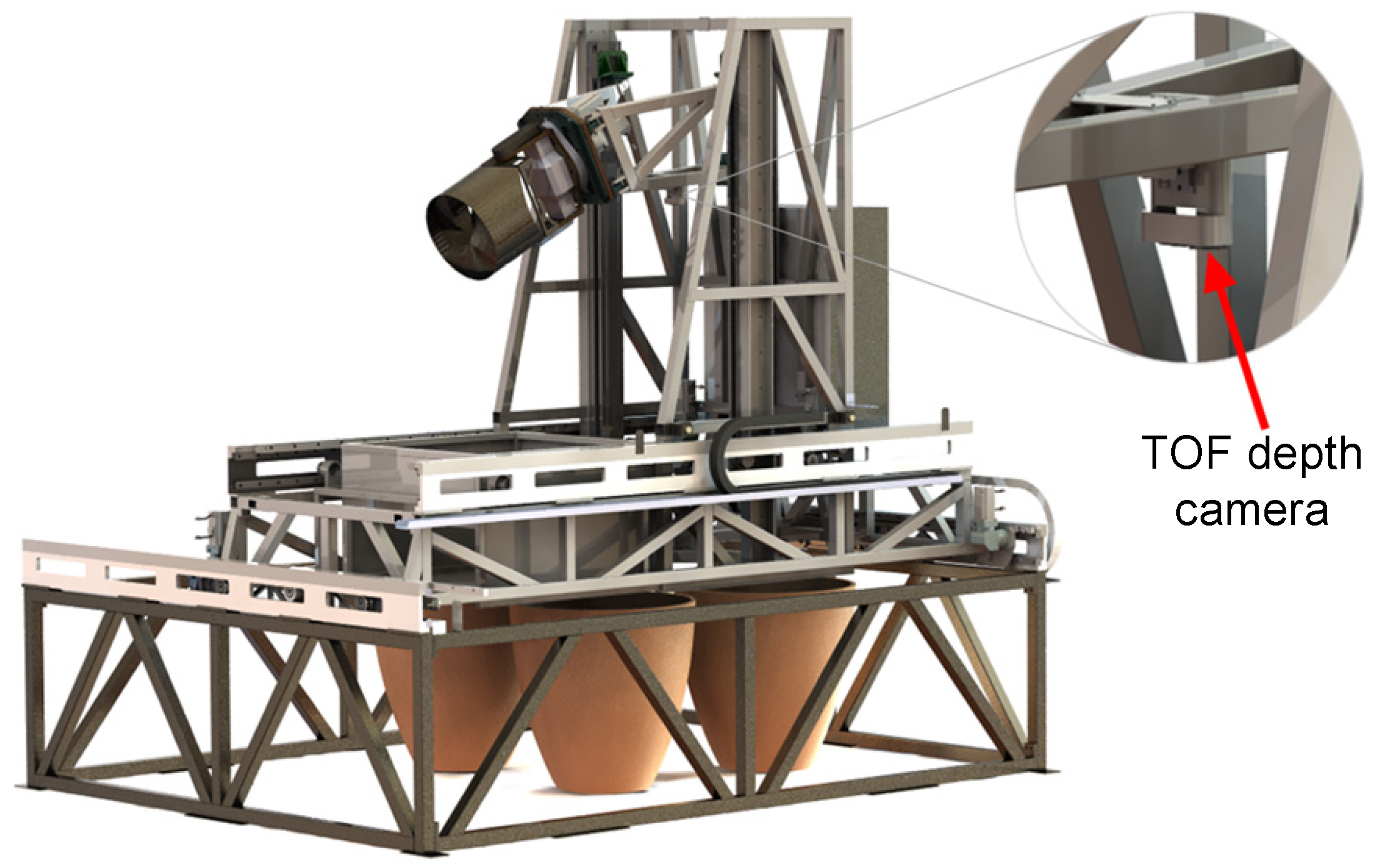

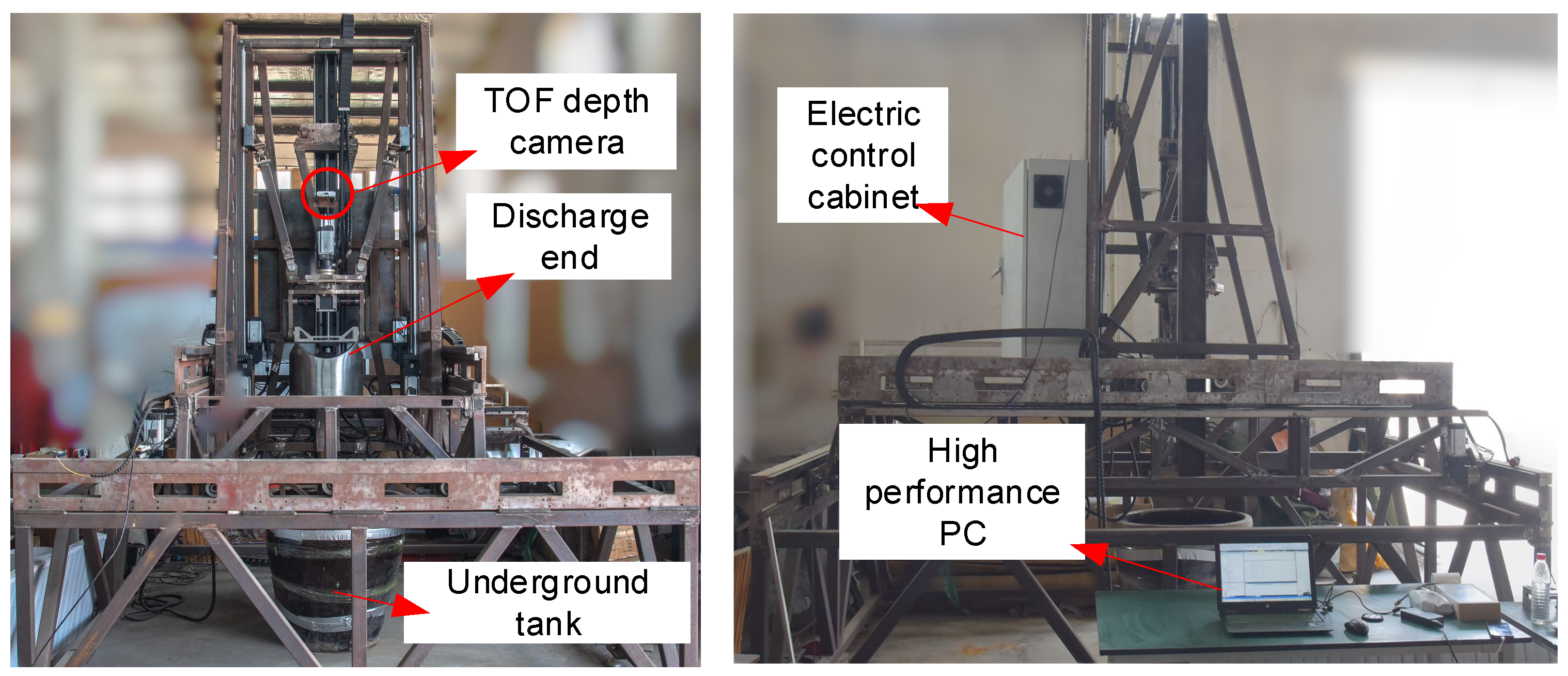

2. Discharge System

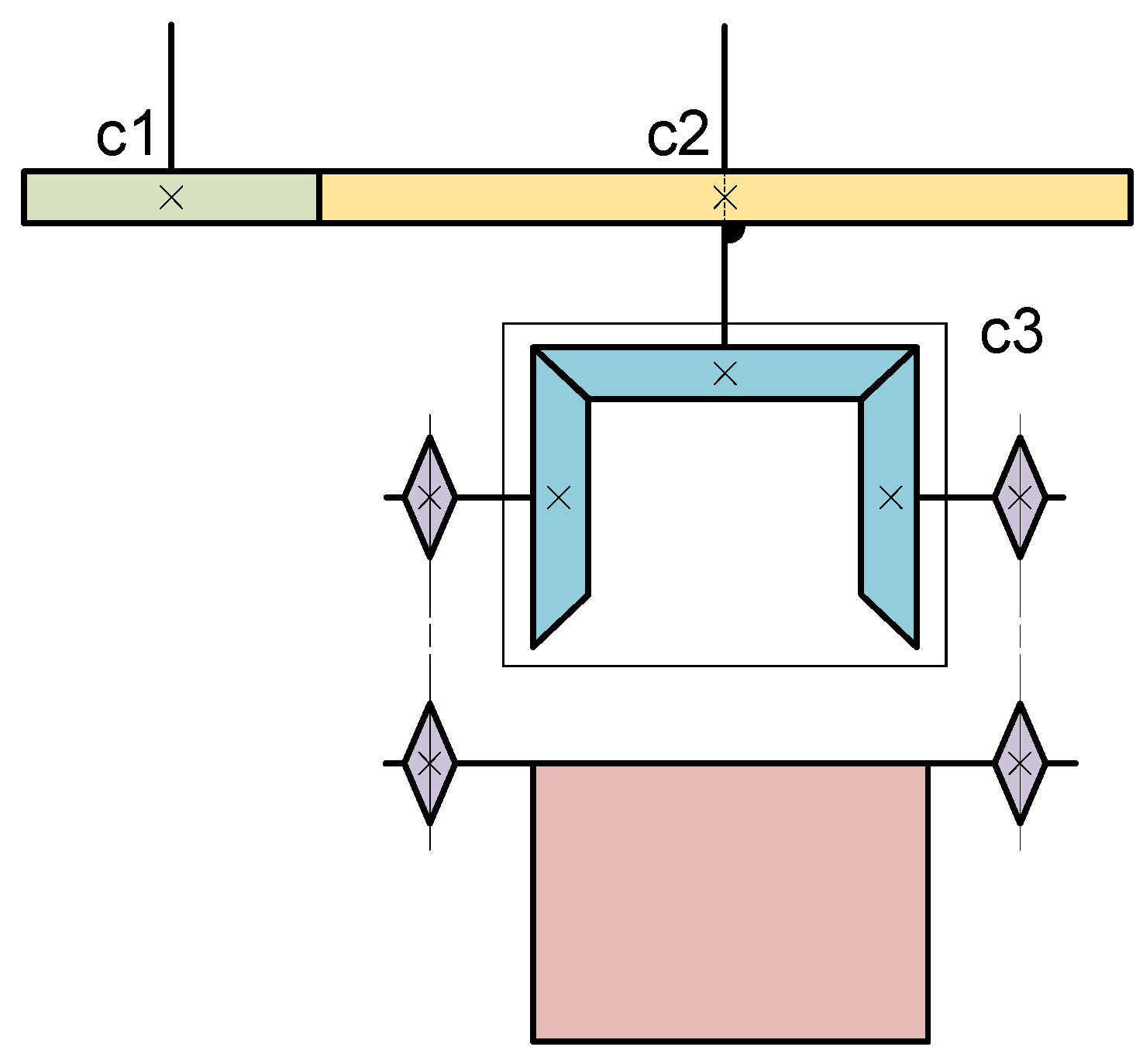

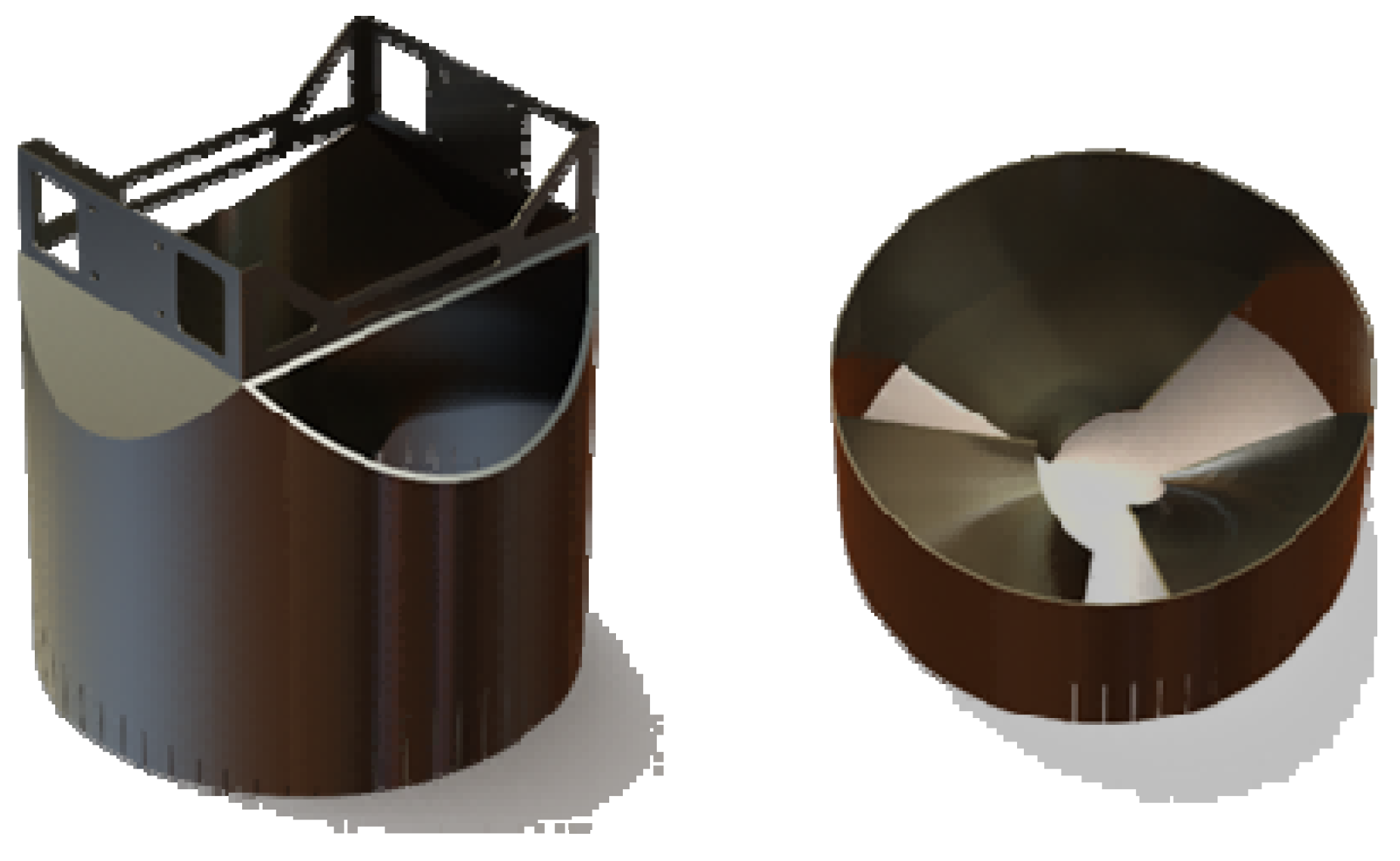

2.1. The Introduction of Hybrid Discharge Robot

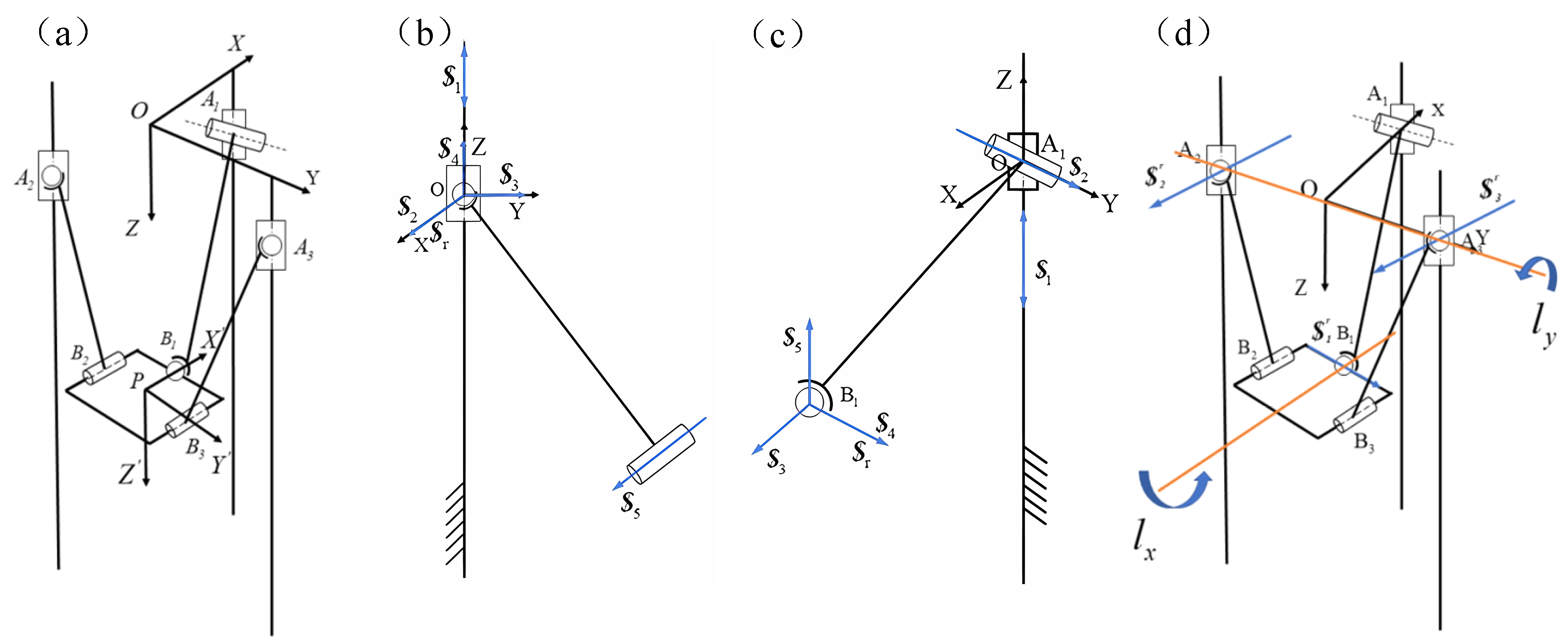

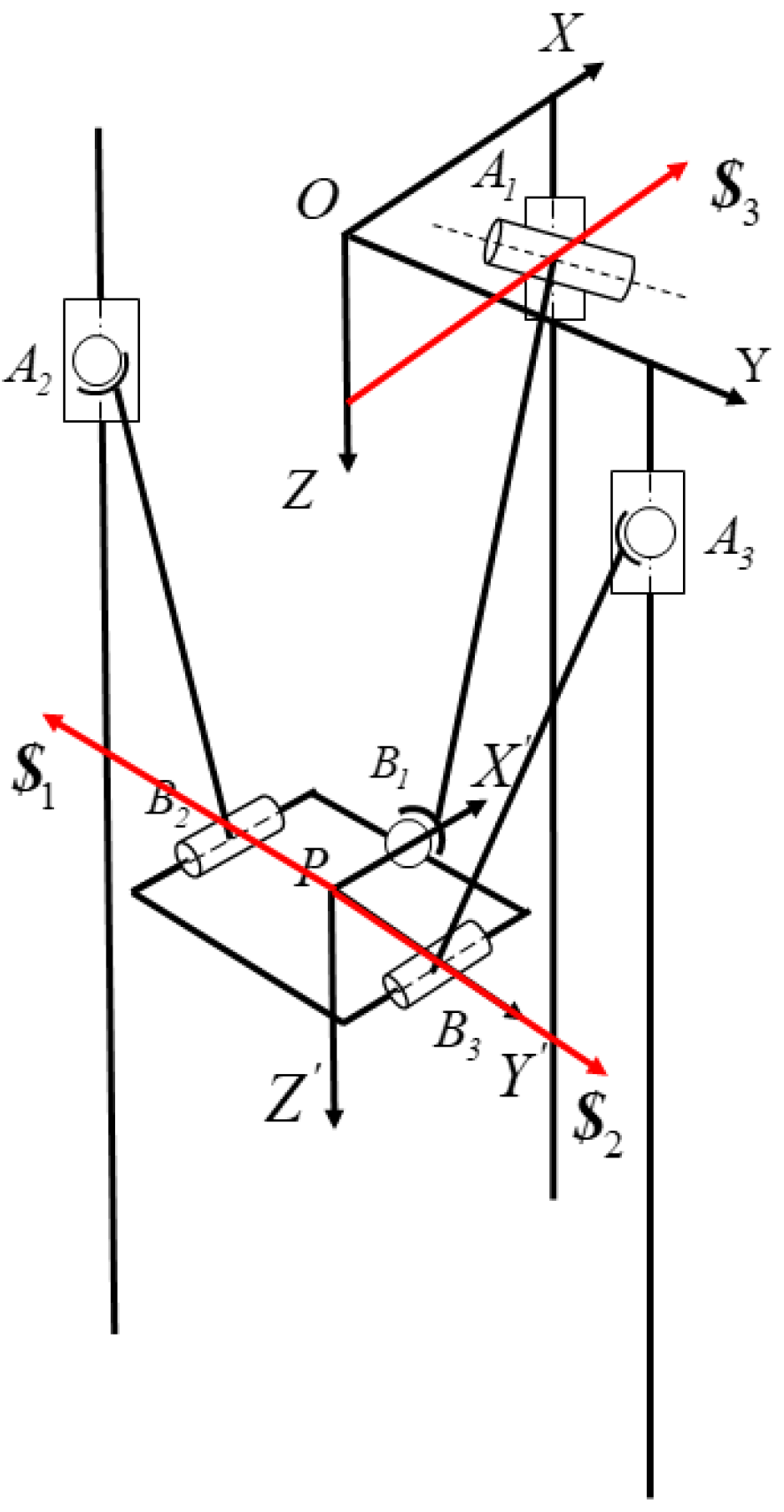

2.2. Analysis of 2PSR/1PRS Parallel Mechanism

2.2.1. Calculation of Degrees of Freedom

2.2.2. Inverse Kinematics

2.2.3. Forward Kinematics

2.2.4. Velocity Analysis of the Discharge End

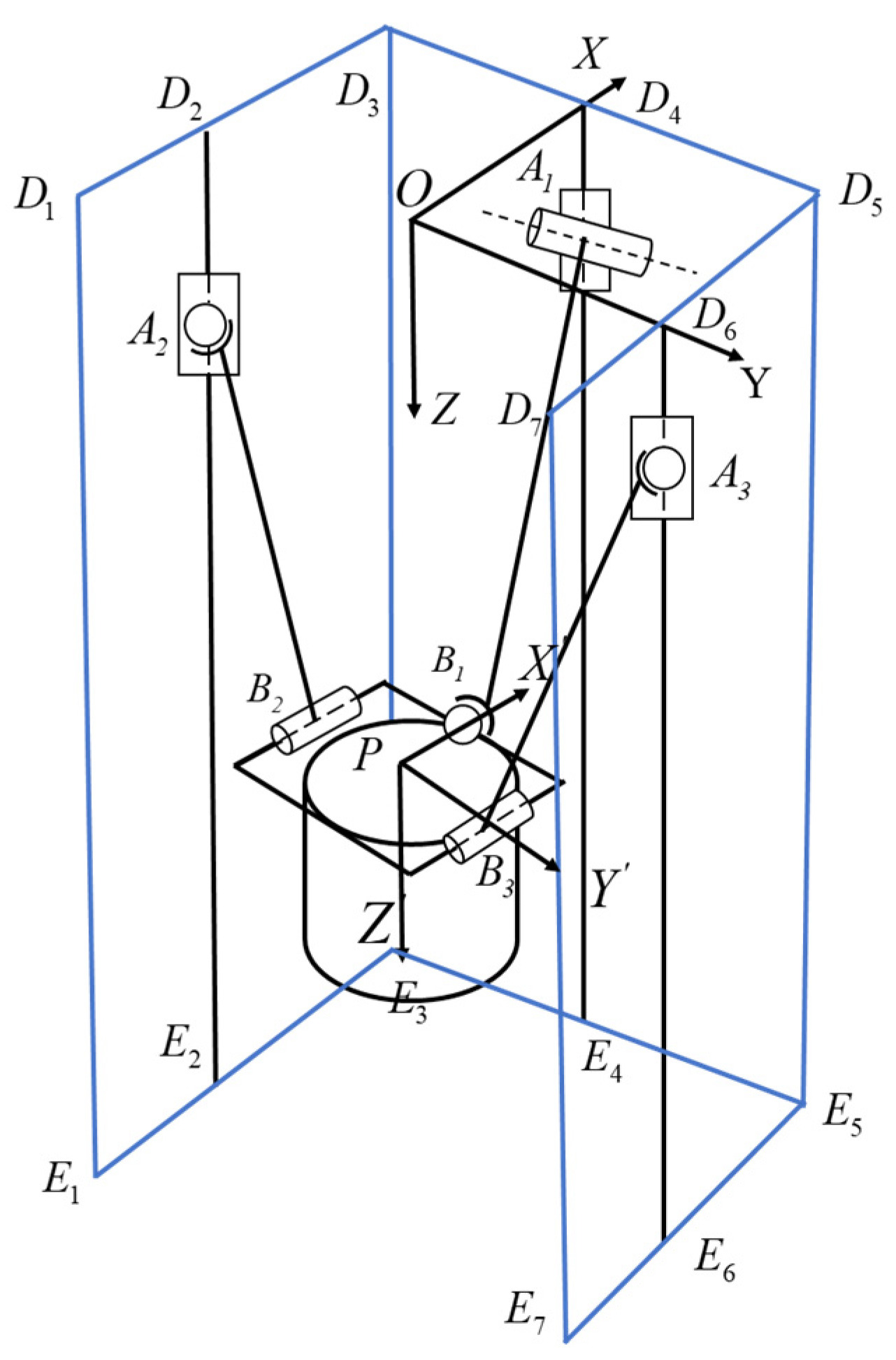

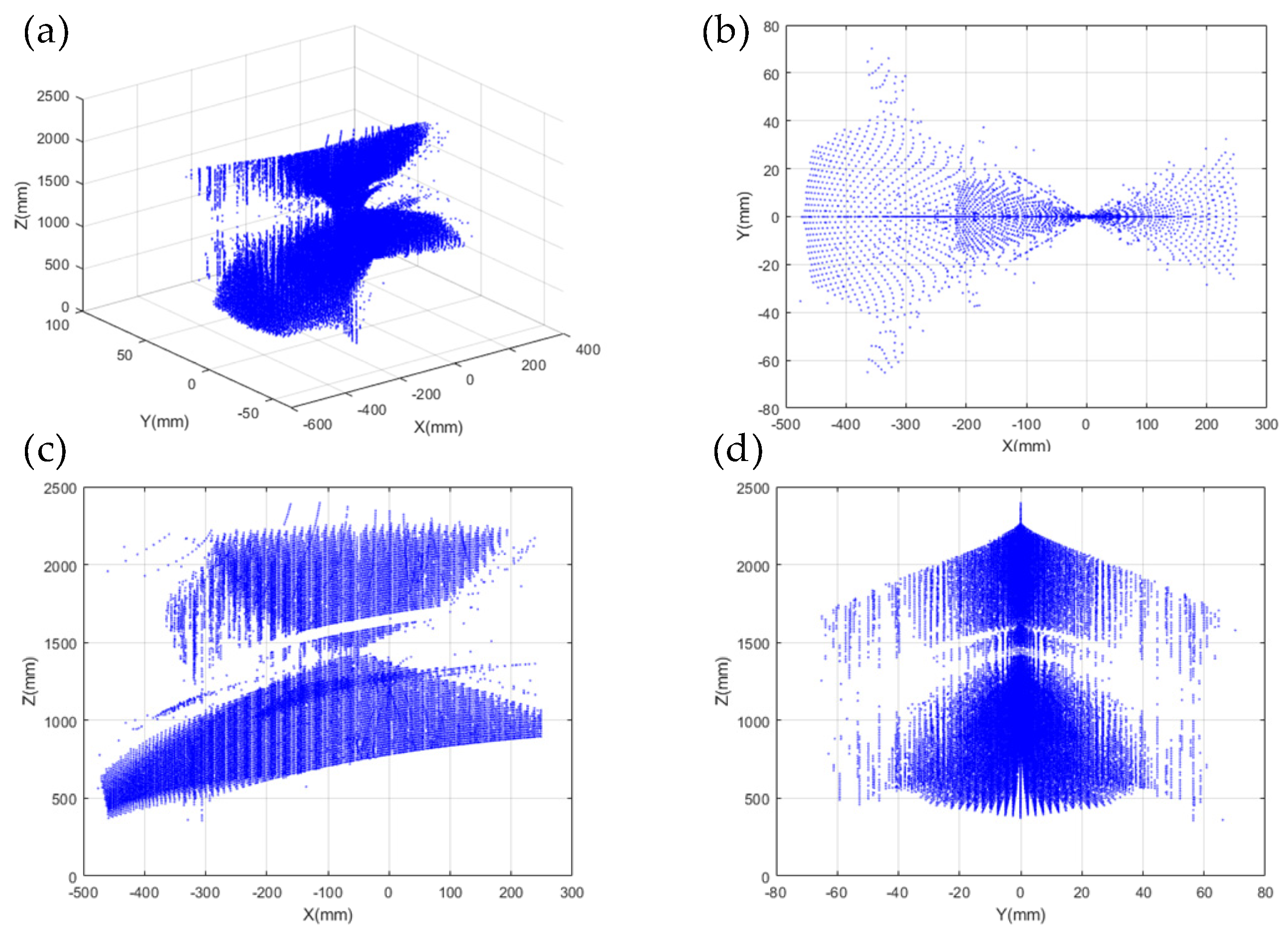

2.2.5. Workspace Analysis

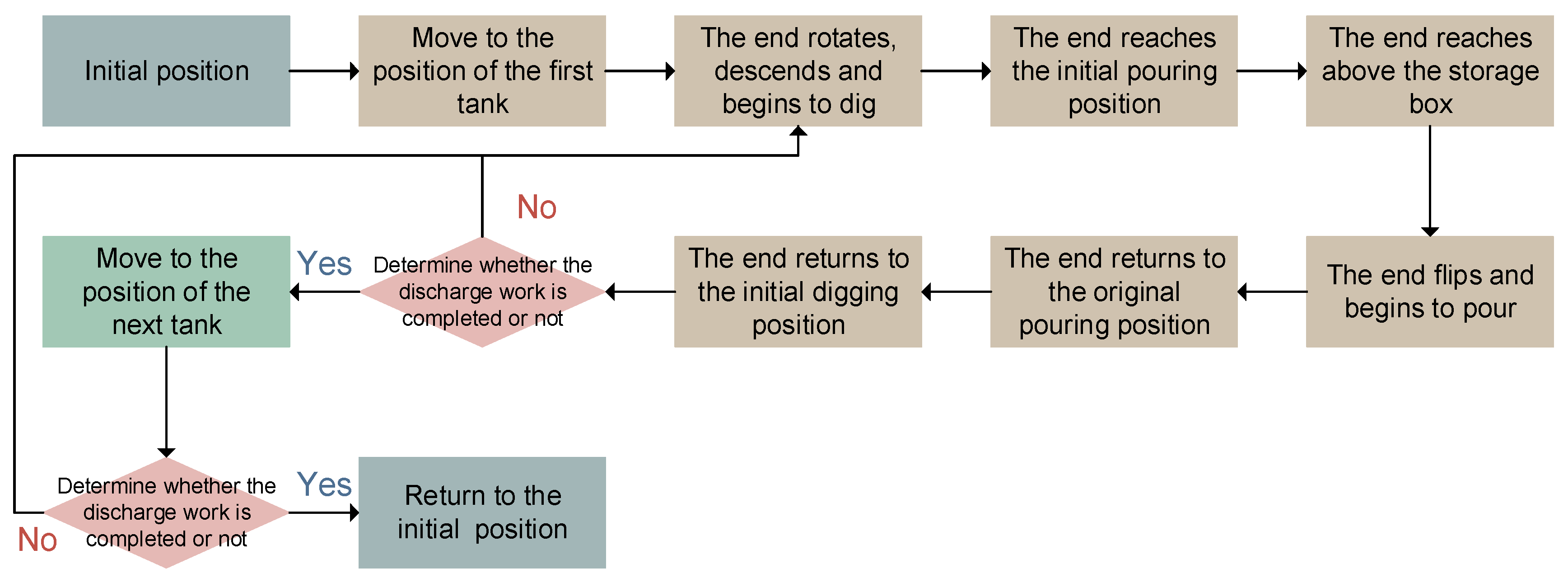

3. Intelligent Discharge Strategy

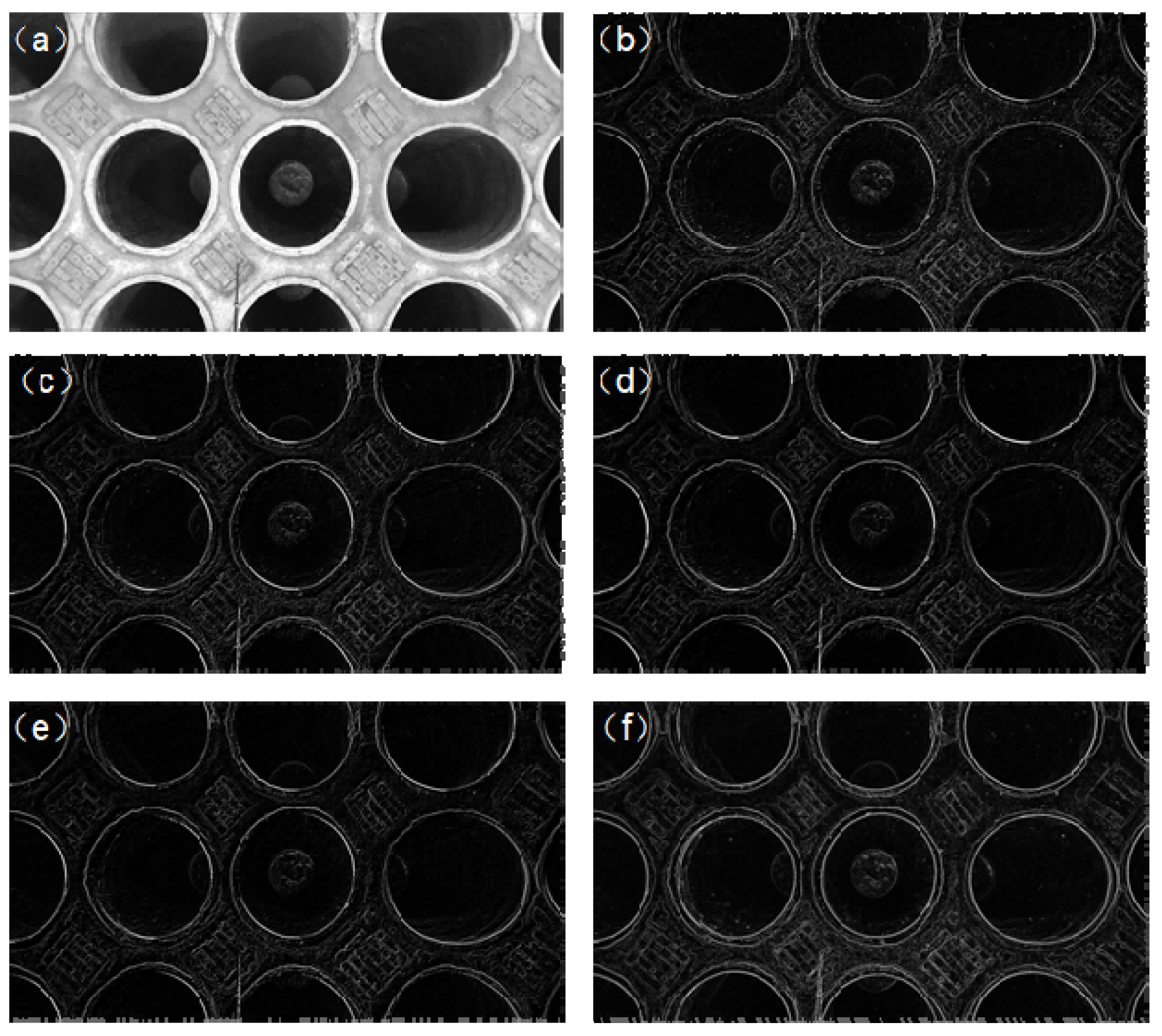

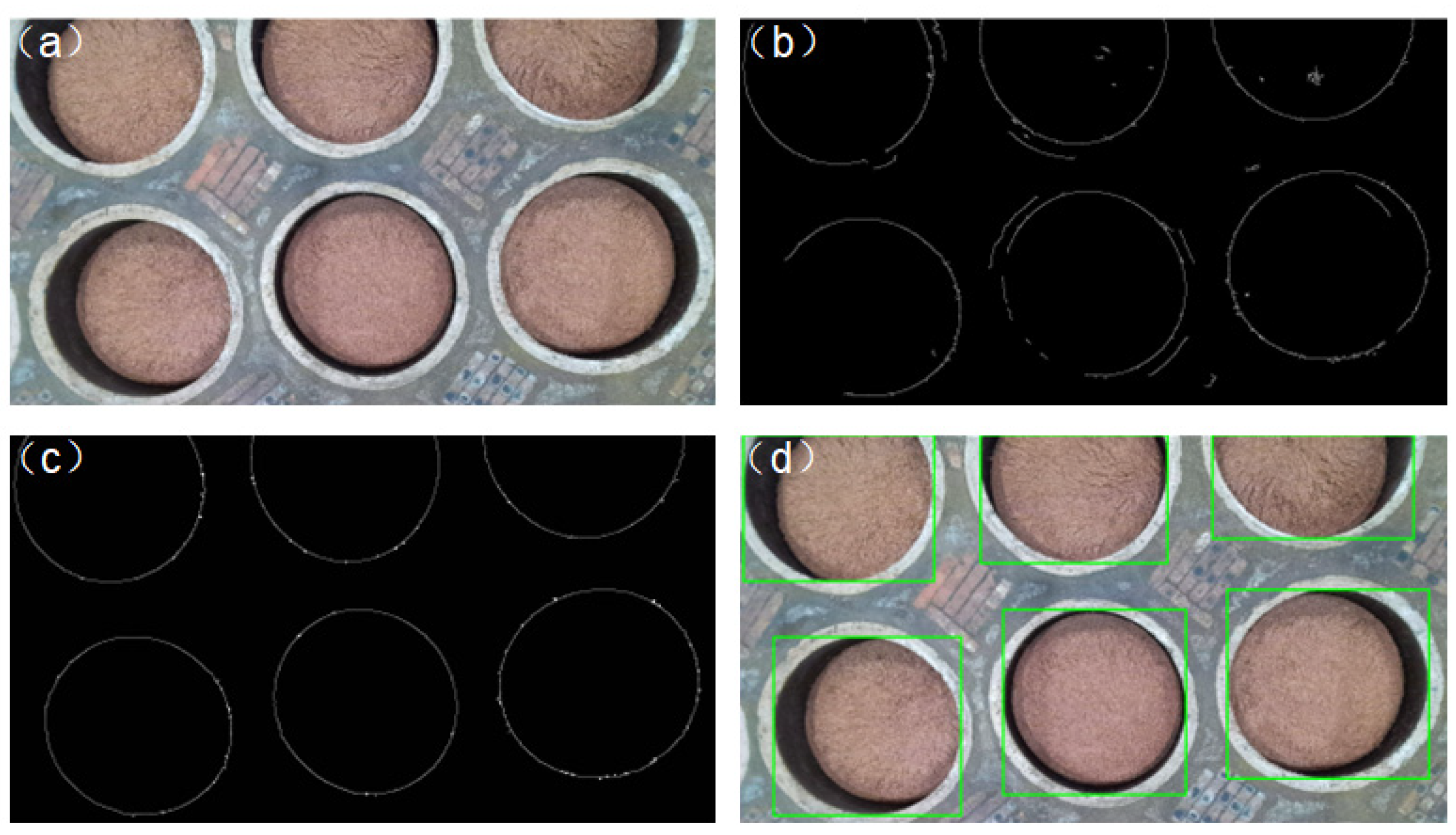

3.1. Improved Canny Edge Detection Algorithm

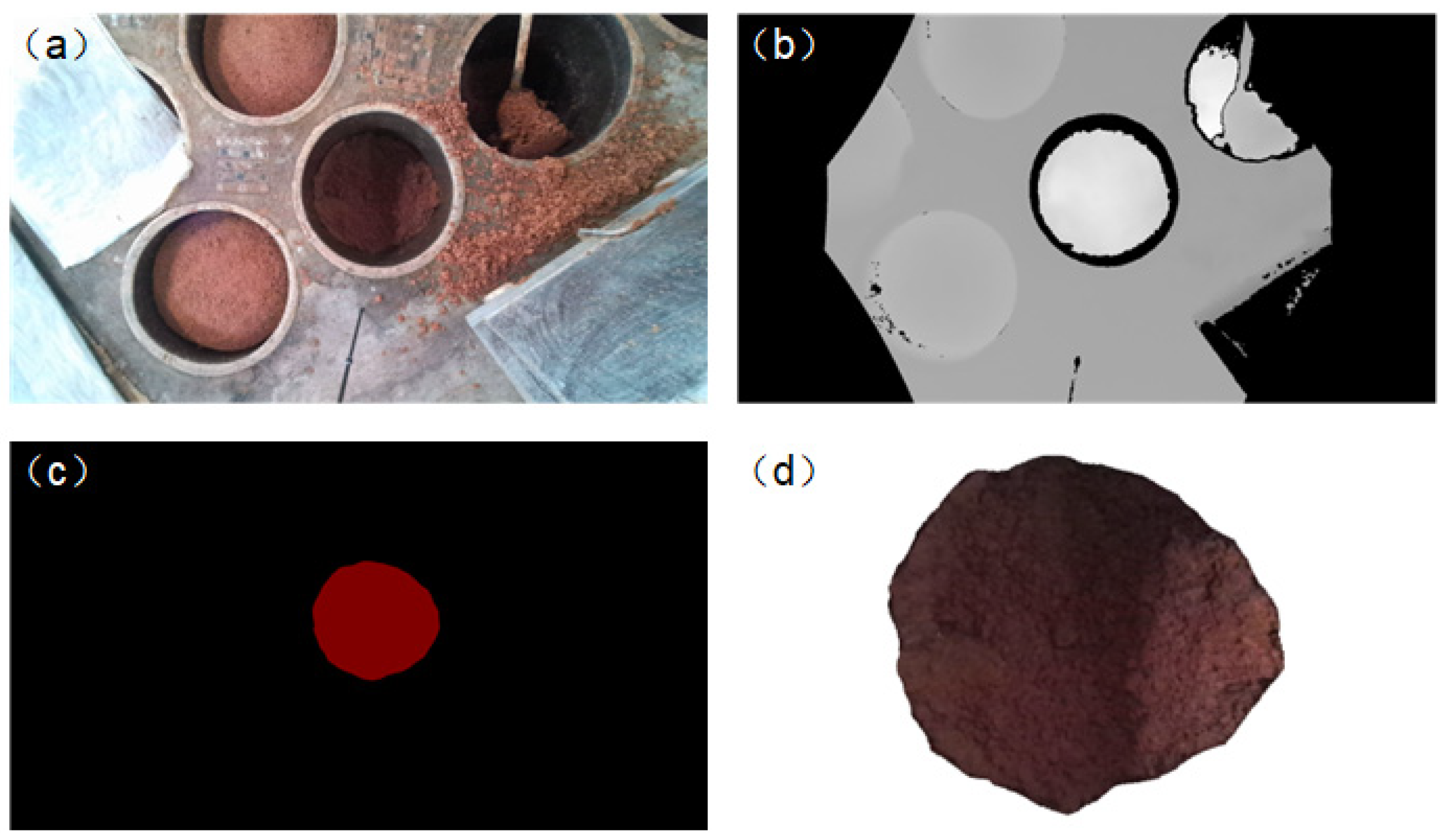

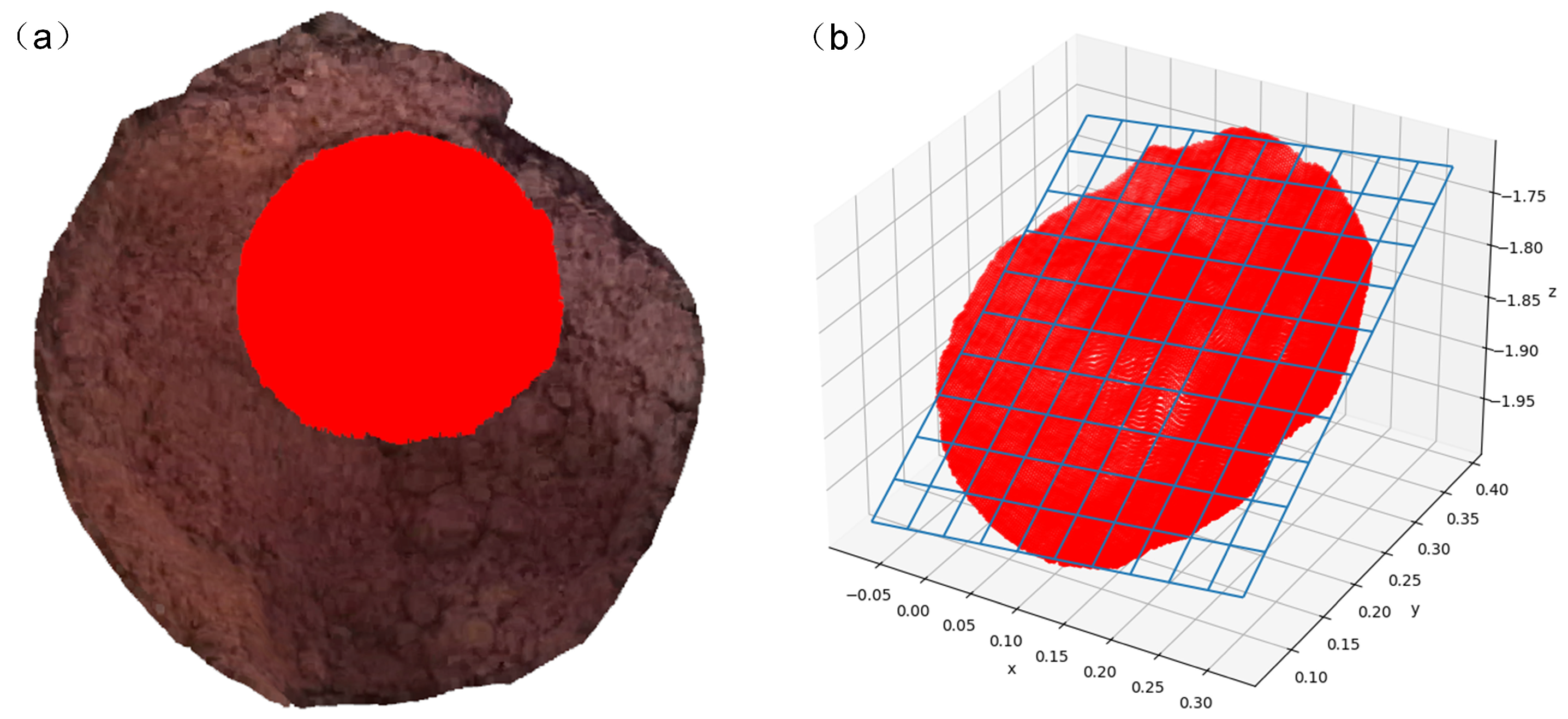

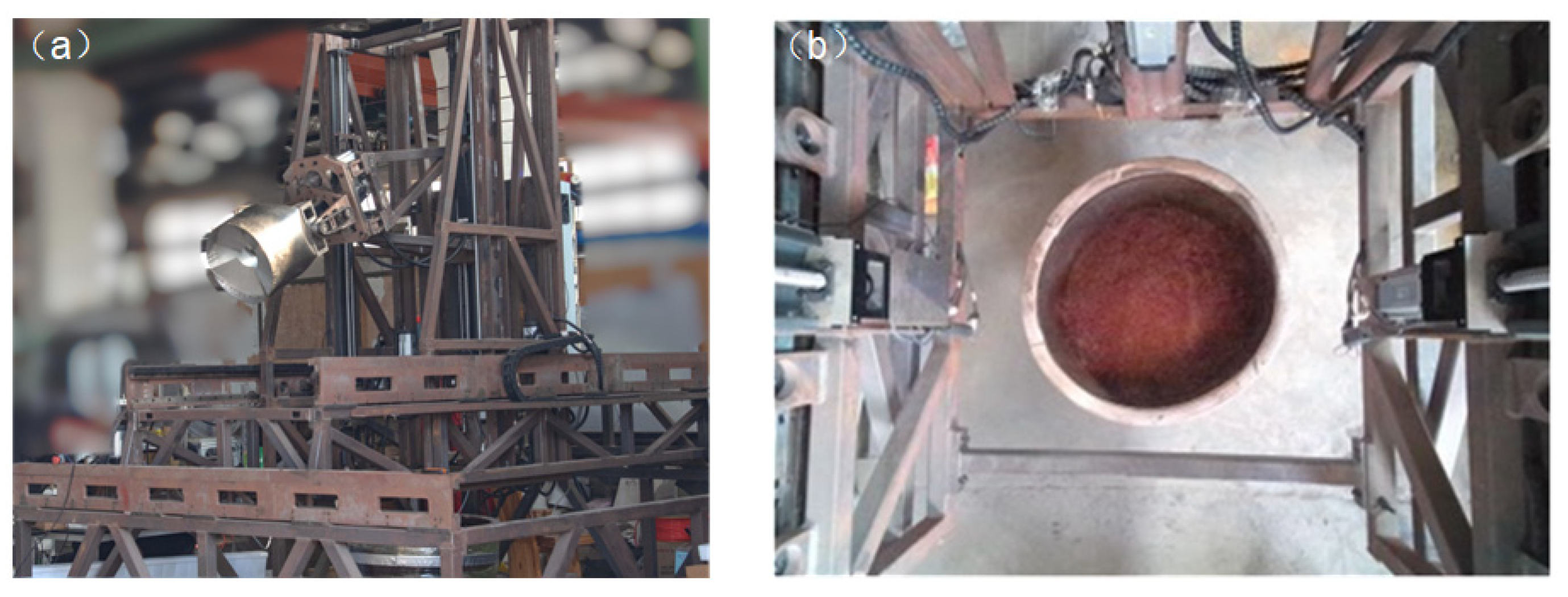

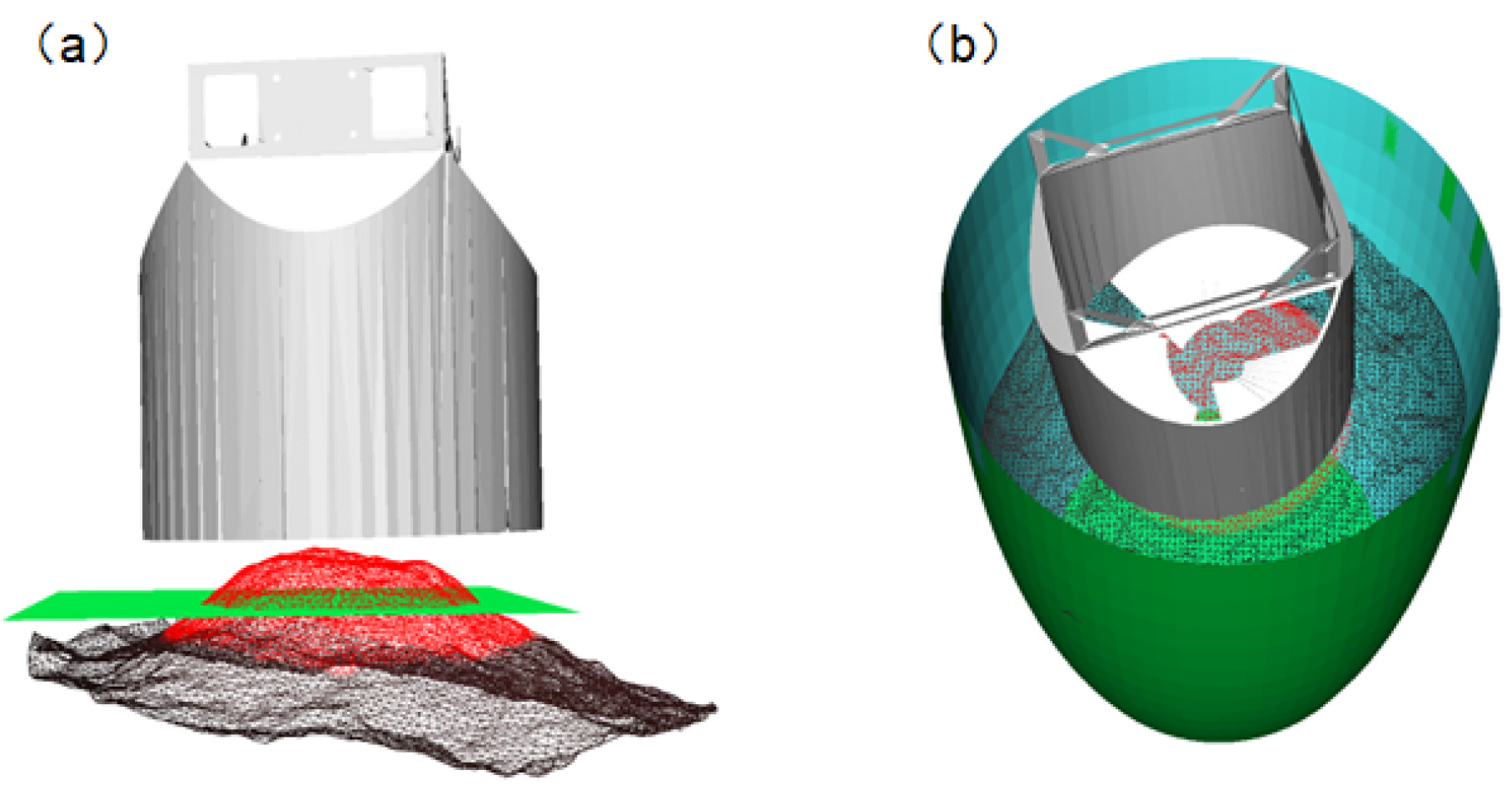

3.2. Acquisition and Processing of Fermented Grains Surface Point Cloud

3.2.1. Acquisition of Fermented Grains Point Cloud

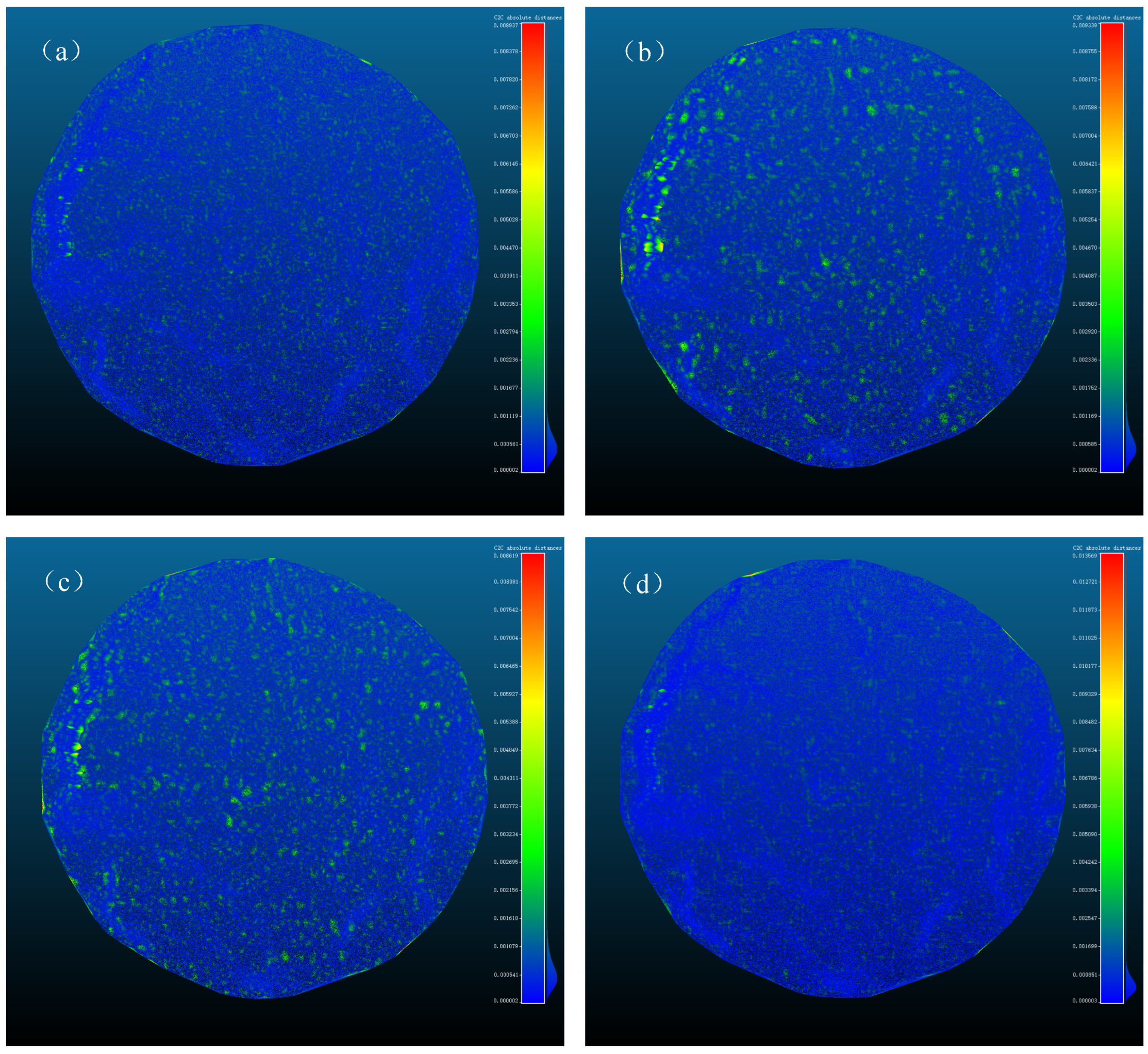

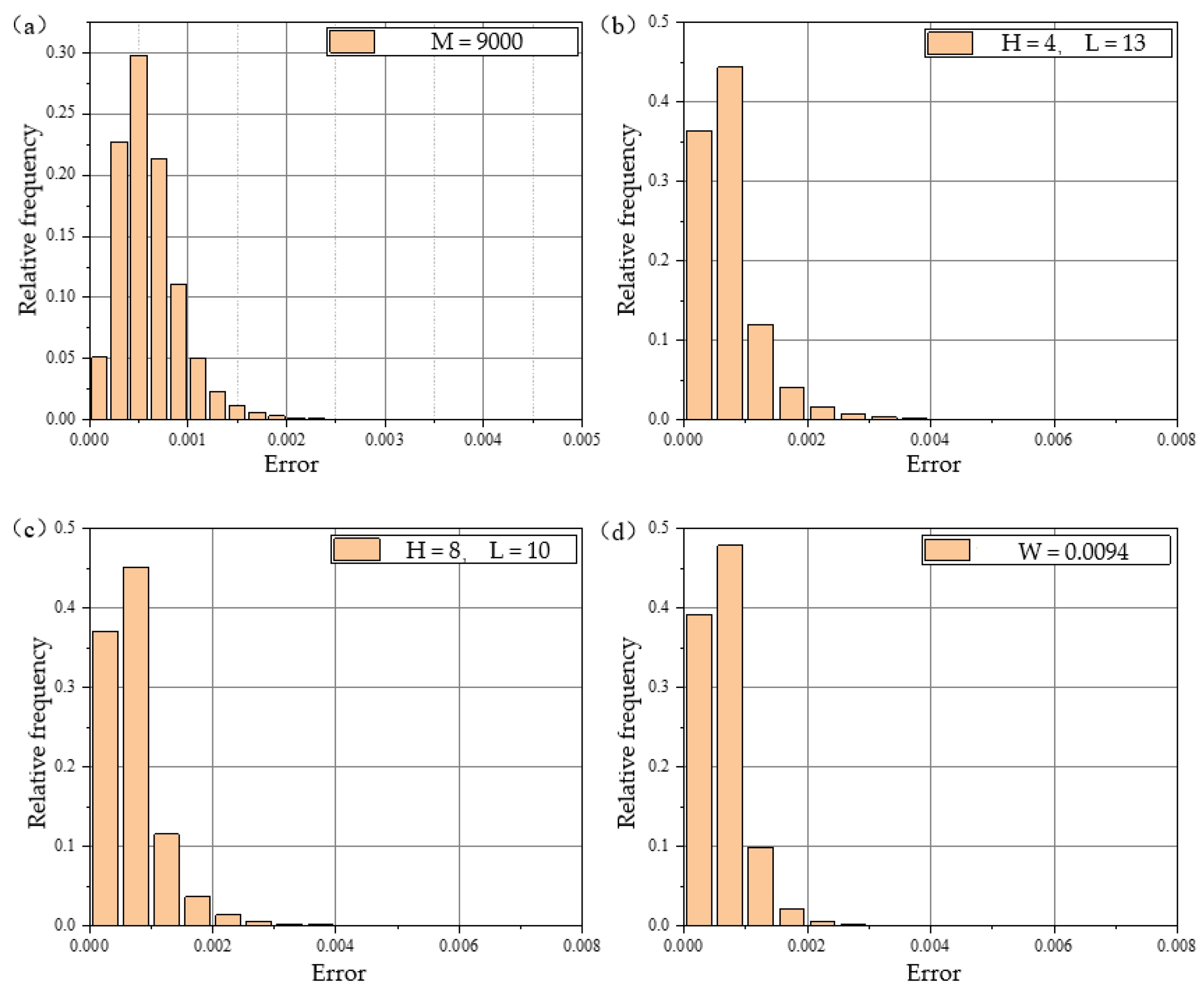

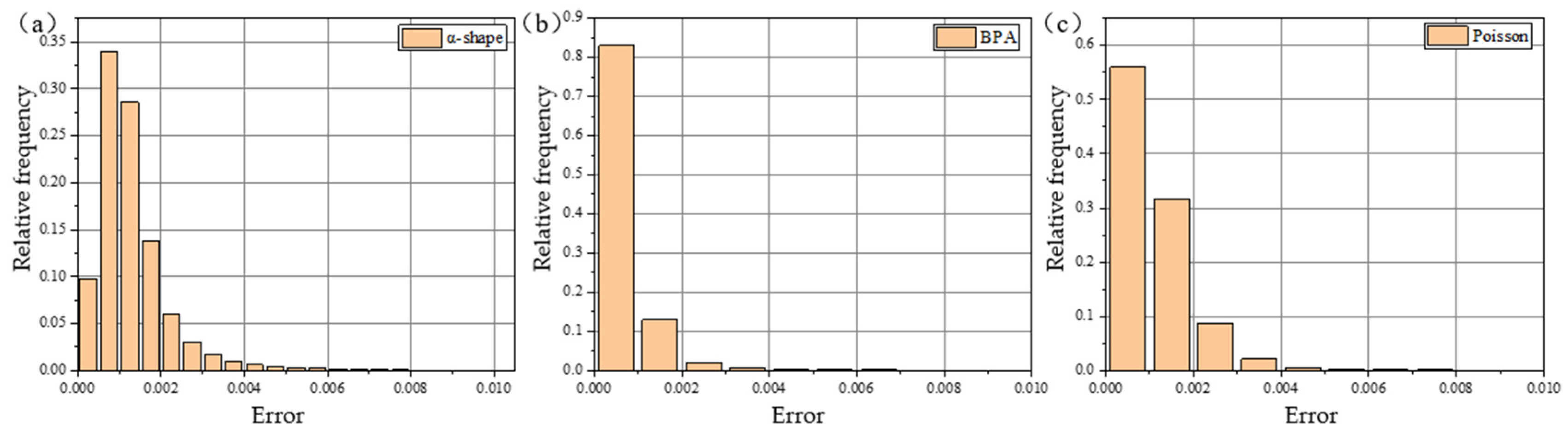

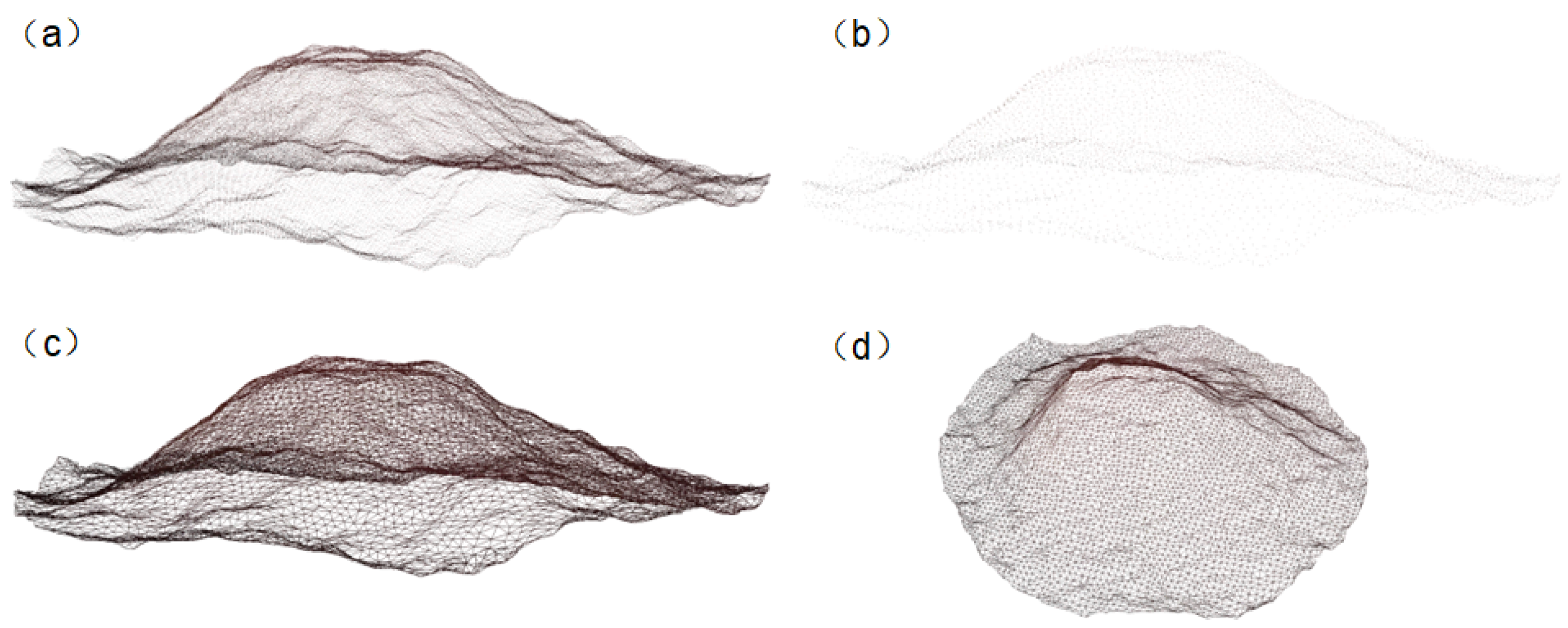

3.2.2. Point Cloud Downsampling and Three-Dimensional Reconstruction

3.3. Motion Control of Fermented Grains Based on Visual Perception

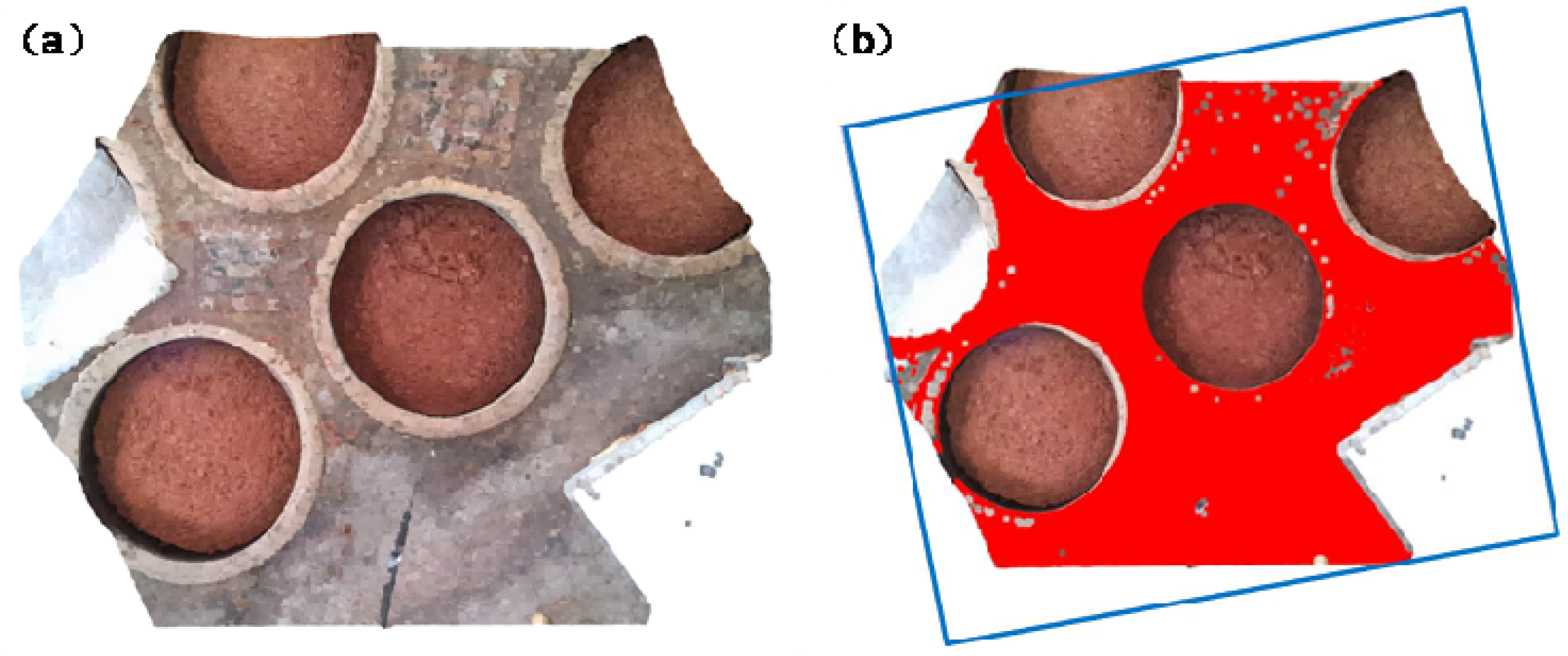

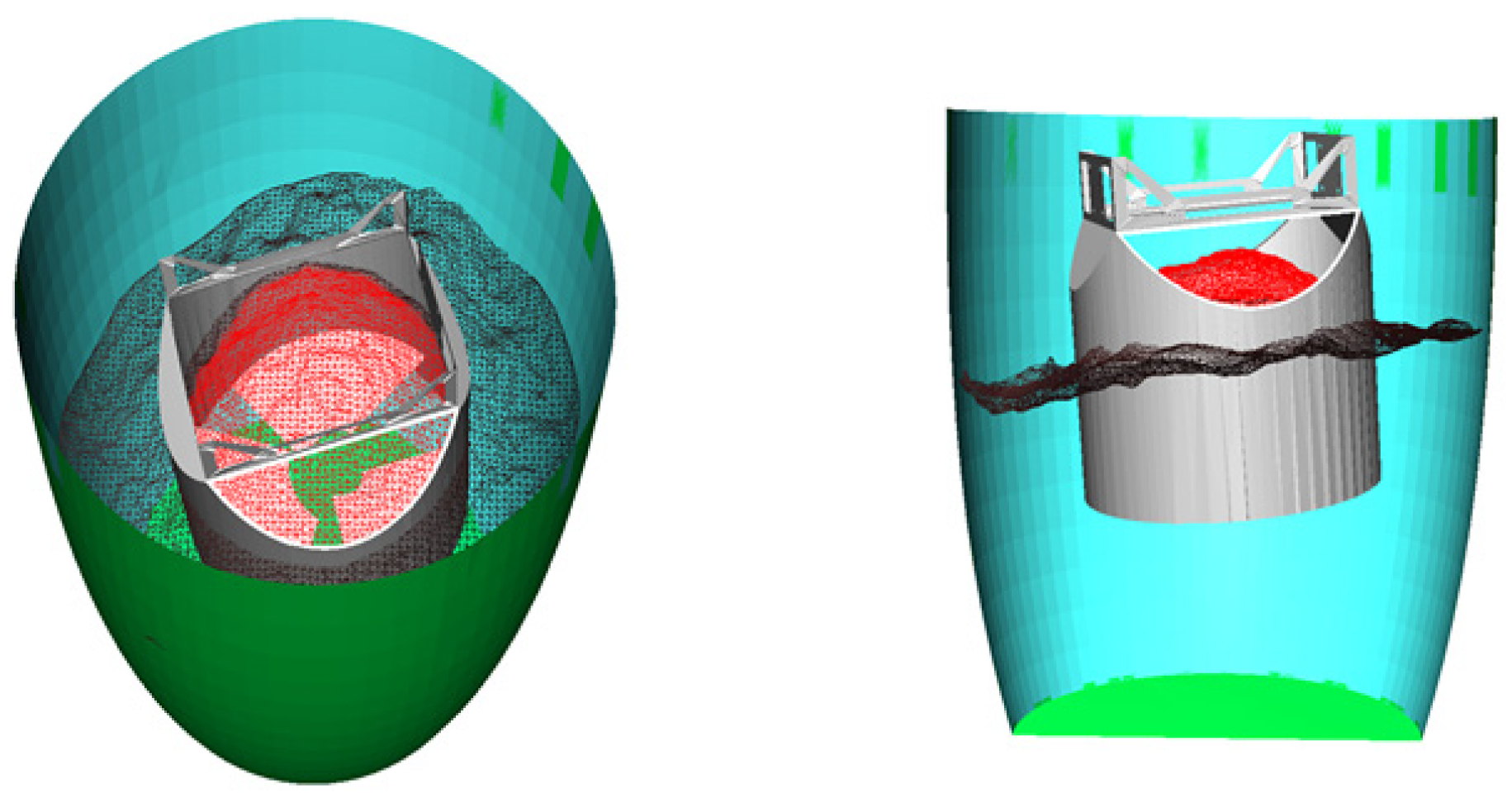

3.3.1. In-Tank Space Perception

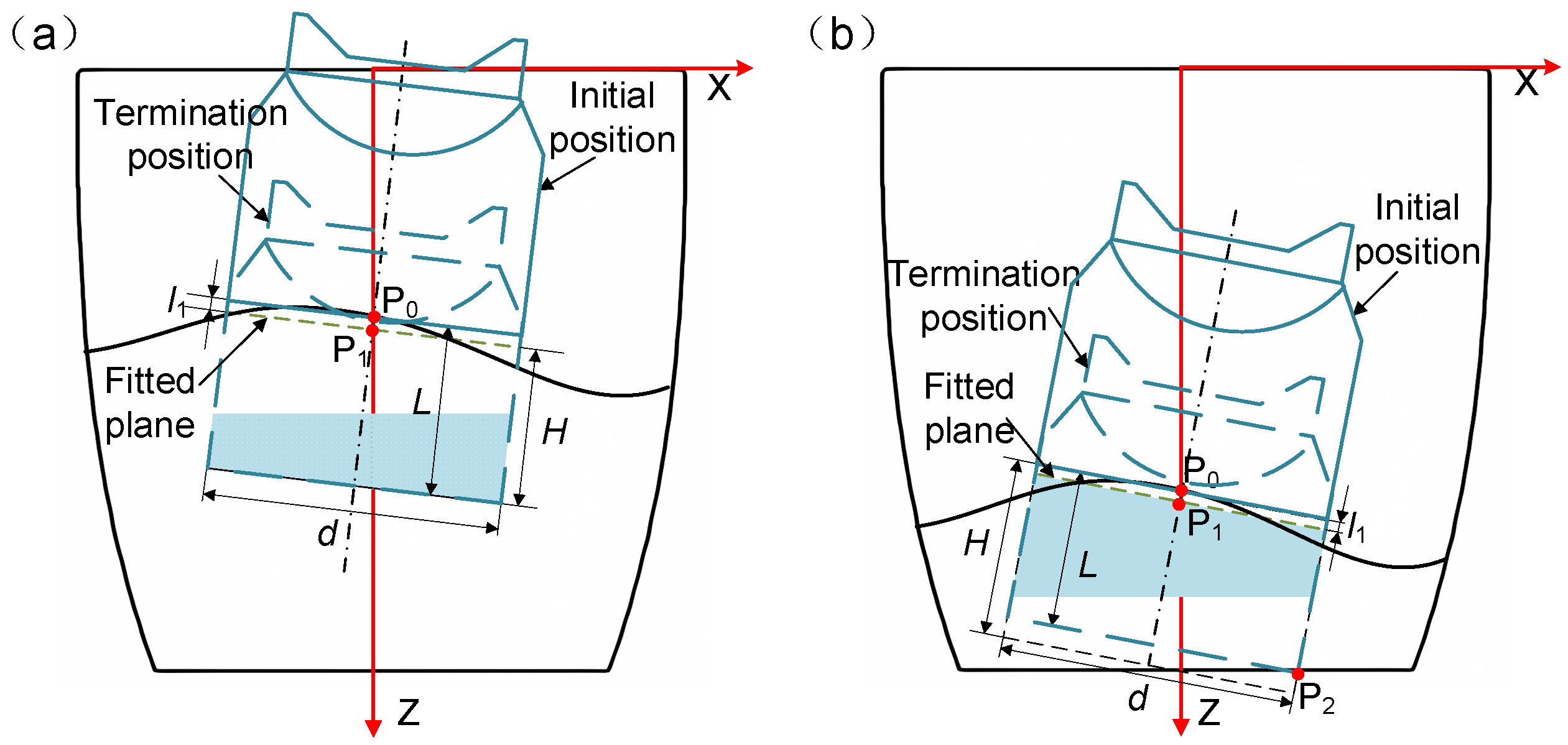

3.3.2. Motion Control Method of Fermented Grains

4. Experiment

4.1. Improved Canny Underground Tank Edge Detection Results

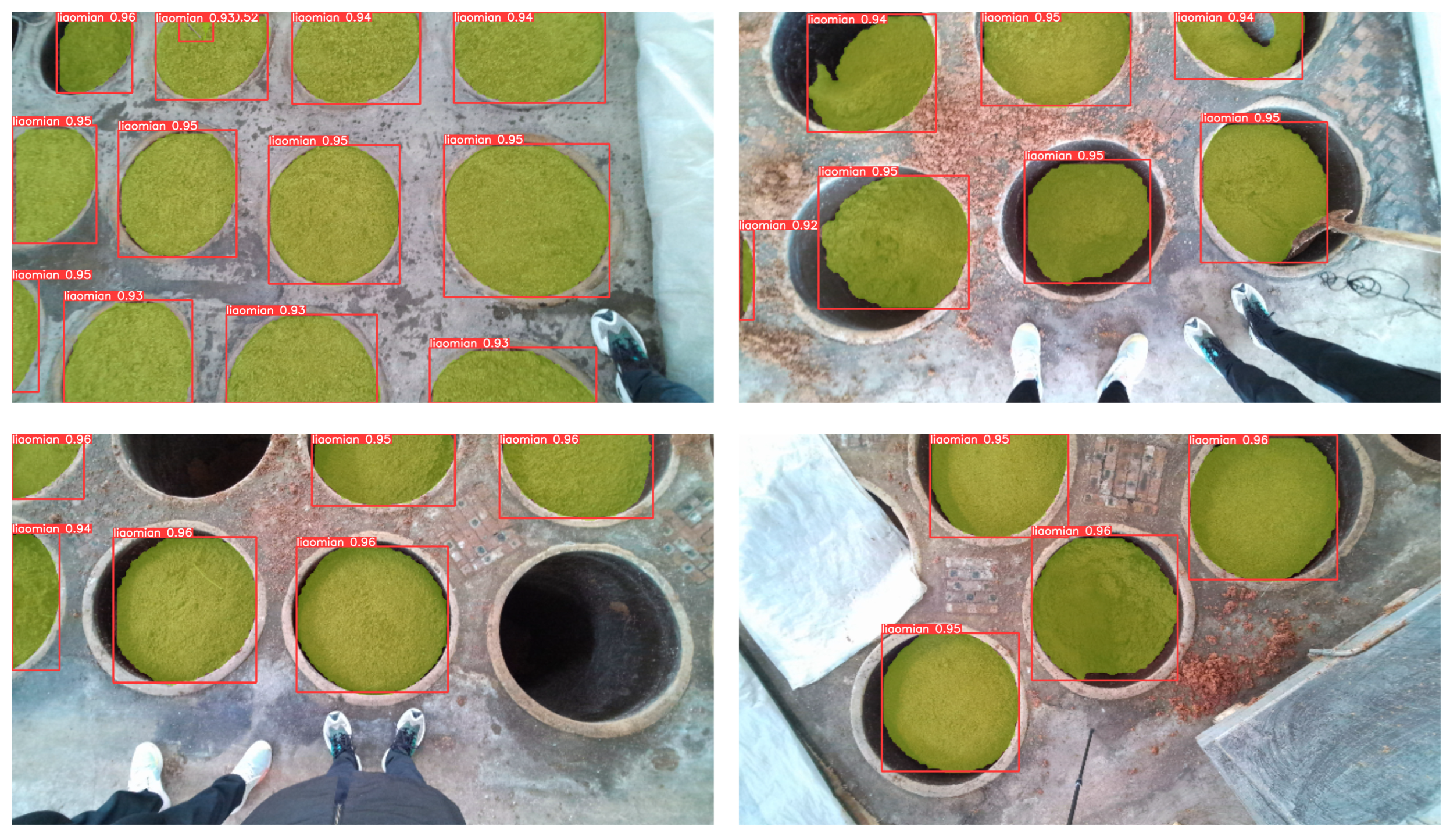

4.2. YOLO Model Training and Material Surface Segmentation

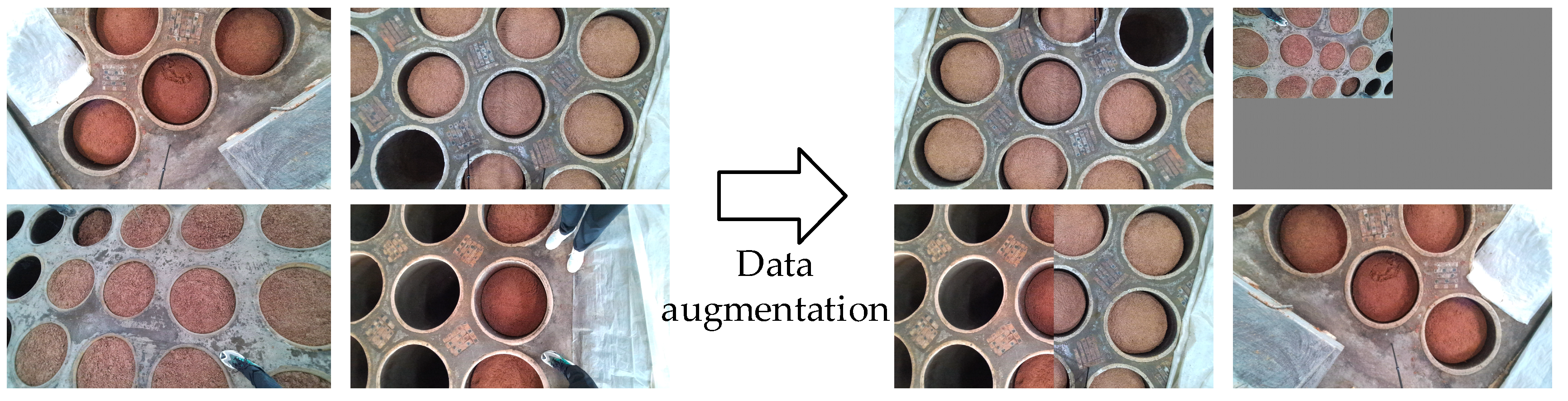

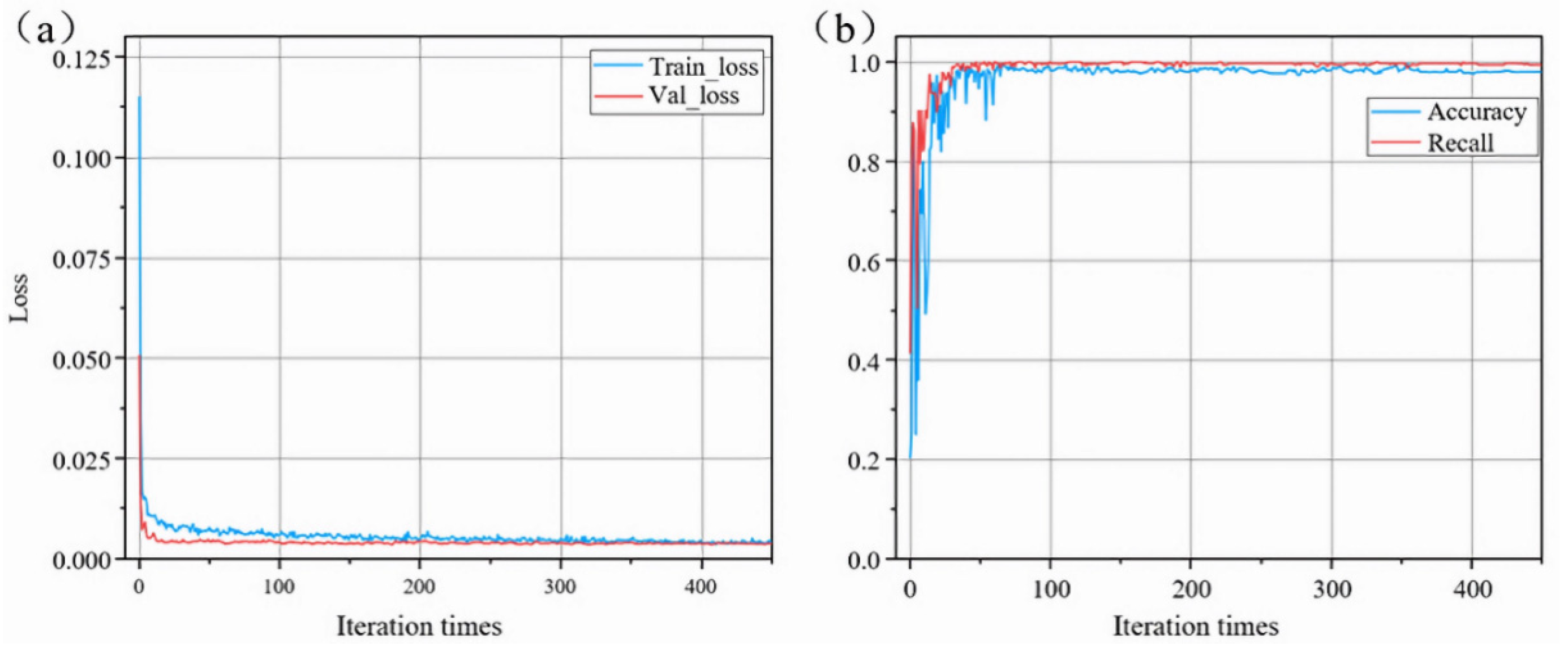

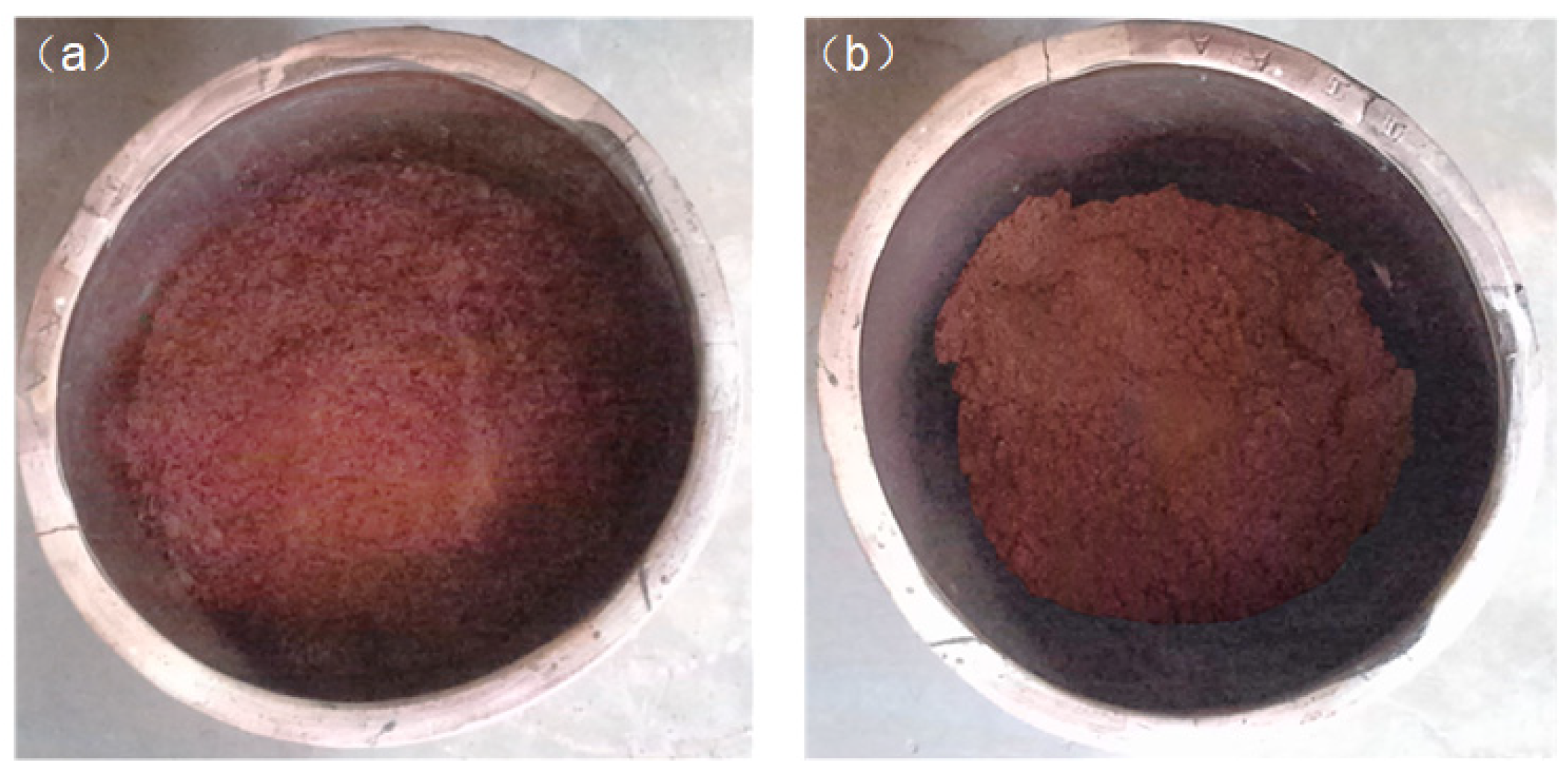

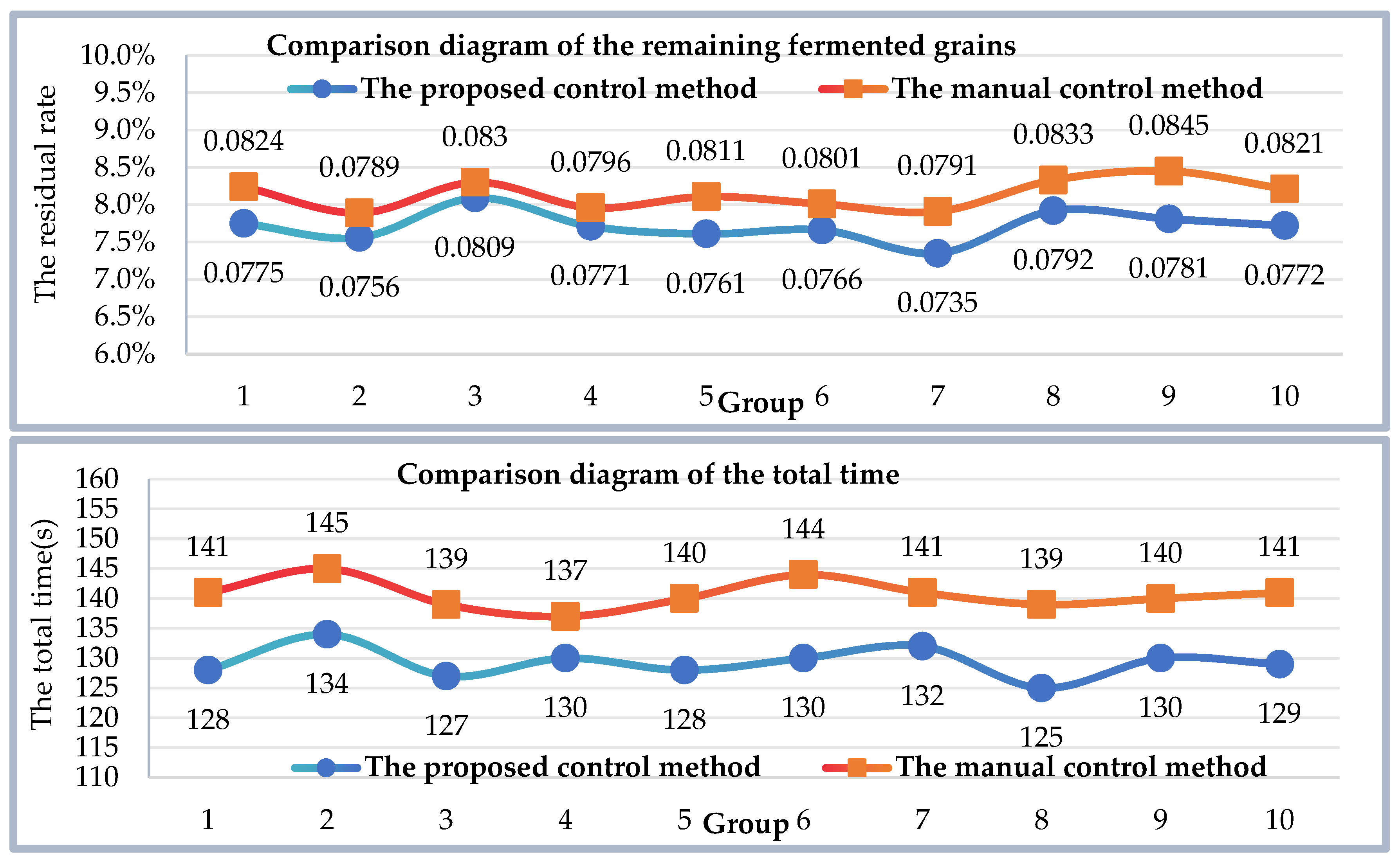

4.3. Experimental Validation of Digging Motion Control

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Name | Numerical Value |
|---|---|
| | 1 |
| | 5 |
| | 70 |
| | 120/34 |
| | 30 |

| Parameters | Numerical Value |
|---|---|
| | 1680 mm |
| The height of the cylinder | 748 mm |
| | 400 mm |
| | 550 mm |
| | 534 mm |
| | 825 mm |
| | 650 mm |
| | 650 mm |
| The maximum rotation angle of the spherical joint | 14° |

| Algorithms | Parameters | Number of Points | Runtime (s) | RMSE |
|---|---|---|---|---|
| FPS | M = 9000 | 9000 | 0.801523 | 0.0006908300 |
| Curvature-based downsampling | H = 4, L = 13 | 9046 | 1.977056 | 0.0009401315 |
| Curvature-based downsampling | H = 8, L = 10 | 9040 | 1.964903 | 0.0008853890 |
| Voxel downsampling | W = 0.0094 | 9086 | 0.001998 | 0.0007709814 |
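
The voxel downsampling row above is the fastest of the compared methods. As a rough illustration of why, the following is a minimal sketch of centroid-based voxel downsampling in plain Python. It is not the authors' implementation: the function name `voxel_downsample` and the sample points are hypothetical, and `W = 0.0094` is assumed to be the voxel edge length in the same (unstated) units as the point cloud.

```python
from collections import defaultdict
from math import floor


def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same cubic voxel by their centroid.

    points: iterable of (x, y, z) tuples; voxel_size: voxel edge length (> 0).
    """
    buckets = defaultdict(list)
    for x, y, z in points:
        # Integer voxel index along each axis; floor also handles negatives.
        key = (floor(x / voxel_size), floor(y / voxel_size), floor(z / voxel_size))
        buckets[key].append((x, y, z))

    centroids = []
    for pts in buckets.values():
        n = len(pts)
        centroids.append((
            sum(p[0] for p in pts) / n,
            sum(p[1] for p in pts) / n,
            sum(p[2] for p in pts) / n,
        ))
    return centroids


# Hypothetical sample: the first two points share a voxel, the third does not.
sample = [(0.001, 0.001, 0.001), (0.003, 0.003, 0.003), (0.020, 0.0, 0.0)]
reduced = voxel_downsample(sample, voxel_size=0.0094)  # W from the table, assumed edge length
print(len(reduced))  # 2
```

Each point is visited once and assigned to a bucket in constant time, which is consistent with voxel downsampling having the shortest runtime in the table, whereas FPS and curvature-based methods need distance or neighbourhood computations.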

| Algorithms | Average Error | SD | Runtime (s) | RMSE |
|---|---|---|---|---|
| BPA | 0.000747 | 0.000733 | 0.088995 | 0.001307 |
| α-shape | 0.001306 | 0.000923 | 0.616996 | 0.001609 |
| Poisson | 0.000987 | 0.000616 | 0.771998 | 0.001162 |

| Group | Total Number of Underground Tanks | Number of Underground Tanks Detected | Recognition Rate |
|---|---|---|---|
| 1 | 83 | 74 | 0.891566 |
| 2 | 75 | 70 | 0.933333 |
| 3 | 62 | 54 | 0.870968 |
| 4 | 71 | 64 | 0.901408 |
| 5 | 57 | 53 | 0.929825 |
| 6 | 73 | 65 | 0.890411 |
| 7 | 69 | 61 | 0.884058 |
| 8 | 74 | 68 | 0.918919 |
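
The recognition rate in each row is the number of detected tanks divided by the total number of tanks in that group. The short sketch below reproduces the per-group values from the table and also computes a pooled rate over all eight groups. The pooled figure is derived here from the table rows and is not a value reported in the source; the names `groups`, `recognition_rate`, and `pooled` are illustrative.

```python
# (total tanks, detected tanks) for groups 1 to 8, copied from the table above
groups = [(83, 74), (75, 70), (62, 54), (71, 64),
          (57, 53), (73, 65), (69, 61), (74, 68)]


def recognition_rate(total, detected):
    """Fraction of underground tanks whose edge was detected."""
    return detected / total


# Per-group rates, rounded to six decimals as in the table
rates = [round(recognition_rate(t, d), 6) for t, d in groups]

# Pooled rate: all detected tanks over all tanks (509 / 564)
total_tanks = sum(t for t, _ in groups)
total_detected = sum(d for _, d in groups)
pooled = total_detected / total_tanks

print(rates)
print(total_detected, total_tanks, round(pooled, 6))
```

Pooling weights each tank equally, so it can differ slightly from the unweighted mean of the eight per-group rates.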

| Hyperparameters | Numerical Value |
|---|---|
| Batch size | 10 |
| Learning rate | 0.01 |
| Epochs | 1000 |
| Optimizer | Adam |
| Ratio of training, validation, and test sets | 8:1:1 |
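
To make the 8:1:1 ratio concrete, the sketch below turns a ratio into per-subset sample counts. The dataset size is not given in this section, so the value 1000 is purely hypothetical, as is the helper name `split_counts`. Assigning any rounding remainder to the test set is an assumption of this sketch, not something stated in the source.

```python
def split_counts(n_samples, ratios=(8, 1, 1)):
    """Return (train, validation, test) counts for the given integer ratio."""
    total = sum(ratios)
    n_train = n_samples * ratios[0] // total
    n_val = n_samples * ratios[1] // total
    # Whatever is left after integer division goes to the test set (assumption).
    n_test = n_samples - n_train - n_val
    return n_train, n_val, n_test


# Hypothetical dataset of 1000 labelled images
print(split_counts(1000))  # (800, 100, 100)
```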

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, Y.; Wang, Z.; Li, H.; Wang, C.; Zhang, J.; Zhu, J.; Liu, X. Material Visual Perception and Discharging Robot Control for Baijiu Fermented Grains in Underground Tank. Sensors 2024, 24, 8215. https://doi.org/10.3390/s24248215
