4.2. Proposed Method with SiamRPN++

To assess object tracking performance, SiamRPN++ was chosen as the foundational algorithm. The integration of the proposed algorithm with SiamRPN++ is described in detail in Section 3.3. The VOT2018 [39] dataset, which contains 60 videos with 21,296 frames, was used in the experiments on short-term single-object tracking. The baseline SiamRPN++ code was taken from [41]. SiamRPN++ was executed on the GPU and the proposed method on the CPU.

Table 2 presents a comparison of the performance metrics among different methods:

(SiamRPN++ using ResNet backbone), frame skipping (

), and the proposed method without confidence level evaluation (

) and with confidence level evaluation (

).

denotes the maximum number of consecutive frames skipped without running SiamRPN++. The abbreviations A, R, L, EOA, S, and FPS denote accuracy, robustness, lost count, expected average overlap, skip rate, and frames per second, respectively. For

, the initial frame was tracked using SiamRPN++. The subsequent

frames were skipped without tracking, and their bounding boxes were replicated from the bounding box of the initial frame. For instance, with

, only frames indexed as 1, 5, 9, 13, etc., underwent tracking using SiamRPN++, whereas frames (2, 3, 4), (6, 7, 8), etc., were skipped. The table indicates that increasing

significantly enhances the processing speed. While SiamRPN++(

) operated at 58.3 FPS,

with

achieved 428.2 FPS. However, as

increased, the accuracy, robustness, and EOA metrics decreased, thus indicating that the bounding box of the previous frame is often not applicable to future frames. By contrast,

involves tracking these frames using the lightweight object tracking method, which predicts the position of the bounding box. This prediction enhances accuracy, robustness, and EOA performance over

. Although this method outperforms

, inaccurately predicted bounding boxes can still degrade performance relative to SiamRPN++.
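The fixed frame-skipping baseline described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: `track_with_fixed_skipping`, `heavy_tracker`, and `max_skip` are hypothetical names, and the heavy tracker is assumed to be a callable that takes a frame and the previous box. With `max_skip = 3`, the sketch reproduces the pattern in the text, where frames 1, 5, 9, 13 are tracked and the frames in between reuse the last tracked box.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height)


def track_with_fixed_skipping(
    frames: Sequence[object],
    init_box: Box,
    heavy_tracker: Callable[[object, Box], Box],
    max_skip: int,
) -> Tuple[List[Box], List[bool]]:
    """Run the heavy tracker on every (max_skip + 1)-th frame only.

    Skipped frames simply copy the most recent bounding box.
    Returns the per-frame boxes and a flag telling which frames were tracked.
    """
    boxes: List[Box] = [init_box]  # the first frame is initialised, not skipped
    tracked: List[bool] = [True]
    for index in range(1, len(frames)):
        if index % (max_skip + 1) == 0:
            # Hypothetical heavy tracker call (e.g. a Siamese tracker).
            boxes.append(heavy_tracker(frames[index], boxes[-1]))
            tracked.append(True)
        else:
            # Skipped frame: replicate the previous bounding box unchanged.
            boxes.append(boxes[-1])
            tracked.append(False)
    return boxes, tracked
```

Because skipped frames copy a stale box, any target motion during the skipped interval directly reduces overlap, which is consistent with the drop in accuracy and robustness reported for larger skip values.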

In

, SiamRPN++ is applied to frames with a confidence level below a given threshold. Hence, the value of S(%) for

was lower than that for

, as presented in Table 2. For instance, with

and T = 0.5, S(%) values for

and

were 49.8 and 34.1, respectively. The difference indicates that approximately 15.7% of all frames had a confidence level below the threshold and were therefore tracked with SiamRPN++ instead of being skipped. This process significantly improved the performance of

in terms of accuracy, robustness, and EOA, compared with

. This demonstrates that the proposed confidence level is an effective indicator of the accuracy of the predicted bounding boxes. Comparing

(T = 0.5 and

) with SiamRPN++ (

), the proposed

accelerated the processing speed by approximately 1.5 times, whereas their accuracies were almost identical. However, the robustness and EOA of

(T = 0.5 and

) were slightly lower than those of SiamRPN++. This slight degradation may stem from the seven additional lost frames in

and the absence of bounding box size updates in the lightweight object tracking method. In

, as

increased, the accuracy, robustness, lost counts, and EOA performance degraded significantly. However, in

, even as

increased, the decline in performance metrics was relatively minimal. For example, in

, when

was 1, the number of lost counts was 87; however, when

increased to 10, the number of lost counts increased by approximately 2.6 times to 225. This indicates that indiscriminate frame skipping negatively impacted tracking performance. By contrast, for

, when

was 1, the number of lost counts was 59, and when

increased to 10, the number of lost counts only increased to 84, approximately 1.42 times higher. This demonstrates that the confidence evaluation effectively identifies unreliable frames and triggers robust tracking to prevent tracking failures.
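The confidence-gated skipping discussed in this paragraph can be sketched as a simple loop. This is a hedged illustration under stated assumptions rather than the method of Section 3.3: `light_tracker` is a hypothetical callable returning a predicted box and a confidence value, `heavy_tracker` stands in for SiamRPN++, `max_skip` is the maximum number of consecutive skips, and `threshold` plays the role of T. Treating a confidence exactly equal to the threshold as reliable is also an assumption.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height)


def track_with_confidence_gate(
    frames: Sequence[object],
    init_box: Box,
    heavy_tracker: Callable[[object, Box], Box],
    light_tracker: Callable[[object, Box], Tuple[Box, float]],
    max_skip: int,
    threshold: float,
) -> Tuple[List[Box], List[bool], float]:
    """Skip the heavy tracker only while the light tracker is confident.

    Returns per-frame boxes, per-frame skip flags, and the skip rate in percent.
    """
    boxes: List[Box] = [init_box]
    skipped: List[bool] = [False]
    consecutive = 0  # number of frames skipped in a row so far
    for frame in frames[1:]:
        # Force the heavy tracker once the consecutive-skip budget is used up.
        use_heavy = consecutive >= max_skip
        if not use_heavy:
            candidate, confidence = light_tracker(frame, boxes[-1])
            # Low confidence means the light prediction is not trusted.
            use_heavy = confidence < threshold
        if use_heavy:
            boxes.append(heavy_tracker(frame, boxes[-1]))
            skipped.append(False)
            consecutive = 0
        else:
            boxes.append(candidate)
            skipped.append(True)
            consecutive += 1
    skip_rate = 100.0 * sum(skipped) / len(boxes)
    return boxes, skipped, skip_rate
```

In this sketch a larger skip budget only raises the number of frames that may be skipped; whether they actually are skipped still depends on the confidence check, which is one way to read the much smaller growth in lost counts reported above.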

Table 3 lists the performance variation with respect to different threshold values. As the threshold value decreased, the S(%) value increased. An important observation is that

with

= 1 and T = 0.67 outperformed SiamRPN++ (

) in terms of accuracy, robustness, lost counts, and EOA by a small margin. Although SiamRPN++ yielded better tracking results than the lightweight object tracking method, there were instances in which the latter performed better. A threshold value of 0.67 with

= 1 corresponded to a scenario where

matched or slightly surpassed the performance of SiamRPN++ in terms of accuracy, robustness, lost counts, and EOA. (Note that the processing speed of

is significantly higher than that of SiamRPN++.) Despite extensive experiments, determining the optimal threshold value remains a challenge. High threshold values lead to erratic performance owing to the factors mentioned earlier. For instance, the threshold value of 0.33 delivered better performance than the threshold values of 0.4 and 0.5, not only in speed but also in accuracy, robustness, lost counts, and EOA (Table 3). However, as the threshold value decreased further, accuracy, robustness, lost counts, and EOA deteriorated.

Table 4 presents a comparison of

with

in terms of FPS for some sequences. For

(SiamRPN++), the difference in FPS across the sequences was not pronounced. The highest speed, 63.7 FPS, was achieved in the ant3 sequence, whereas the lowest speed, 51.8 FPS, was observed in the ant1 sequence. By contrast, for

, the variation in FPS across the sequences was significant. The highest speed, 117 FPS, was reached in the handball1 sequence, whereas the lowest speed, 58.9 FPS, was recorded in the book sequence. Here,

and T were set to 1 and 0.5, respectively. The speed of the proposed method depends on the degree of change between successive frames within a sequence. When the target's motion is relatively small, the lightweight tracking method accurately tracks the target and achieves significant speed gains. However, when the target's motion is substantial, the proposed method incurs processing overhead without a corresponding improvement in speed. For example, in the book sequence, the target exhibits highly complex motion. As lightweight object tracking relies on a block-matching algorithm that considers only translational motion, accurately tracking a rotating book is difficult. Owing to the inaccurate tracking results, the confidence levels dropped below the threshold, and the tracking speed did not improve. By contrast, in the fish1 sequence, the target (a fish) exhibited minimal motion. The confidence levels surpassed the threshold, and the proposed method skipped executing SiamRPN++ for most frames, thus achieving maximum acceleration.
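To illustrate why a translation-only block matcher struggles with rotation, the following is a minimal sketch of full-search block matching on grayscale frames. The sum of absolute differences (SAD) as the matching cost, the function name, and the `search_range` parameter are assumptions made for illustration; the source states only that a full-search block-matching algorithm considering translational motion is used.

```python
import numpy as np


def full_search_block_match(prev_frame, cur_frame, box, search_range):
    """Find the best translated position of `box` in `cur_frame`.

    `box` is (x, y, width, height) in integer pixels and must lie inside
    `prev_frame`. Every integer shift within +/- search_range is tested,
    so only translation is modelled; the box size never changes.
    Returns the matched box and its SAD cost (lower is better).
    """
    x, y, w, h = box
    template = prev_frame[y:y + h, x:x + w].astype(np.int64)
    height, width = cur_frame.shape[:2]
    best_cost = None
    best_xy = (x, y)
    for dy in range(-search_range, search_range + 1):
        for dx in range(-search_range, search_range + 1):
            nx, ny = x + dx, y + dy
            # Ignore candidate positions that fall outside the frame.
            if nx < 0 or ny < 0 or nx + w > width or ny + h > height:
                continue
            candidate = cur_frame[ny:ny + h, nx:nx + w].astype(np.int64)
            cost = int(np.abs(candidate - template).sum())  # assumed SAD cost
            if best_cost is None or cost < best_cost:
                best_cost, best_xy = cost, (nx, ny)
    return (best_xy[0], best_xy[1], w, h), best_cost
```

A rotated or deformed target has no shifted copy in the next frame, so even the best candidate keeps a high matching cost. The exhaustive search also visits (2R + 1)^2 positions for a search range R, so the cost grows quadratically as the range is widened.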

Li [34] proposed a fast variant of SiamRPN++ using a MobileNet [42] backbone, denoted as

in Table 5. This SiamRPN++ variant (

) enhances processing speed compared with

(SiamRPN++). In

, the proposed method is combined with

to further increase the tracking speed.

Table 5 lists the tracking speed enhancement of

using the proposed method at various threshold values. As presented in the table,

improved the tracking speed over

while providing comparable performance.

further improved the tracking speed of

while minimizing performance degradation. Notably, when

= 1 and T = 0.33,

achieved performance accuracy comparable to

while improving the tracking speed by approximately 1.64 times.

Given the compact size of the target objects in VOT2018, the 3% overhead incurred by lightweight object tracking, which employs a full-search block-matching algorithm implemented in C, was practically negligible. However, larger targets may require a wider search range, in which case fast search techniques become essential.

4.3. Proposed Method Combined with Other Methods Including MixFormer

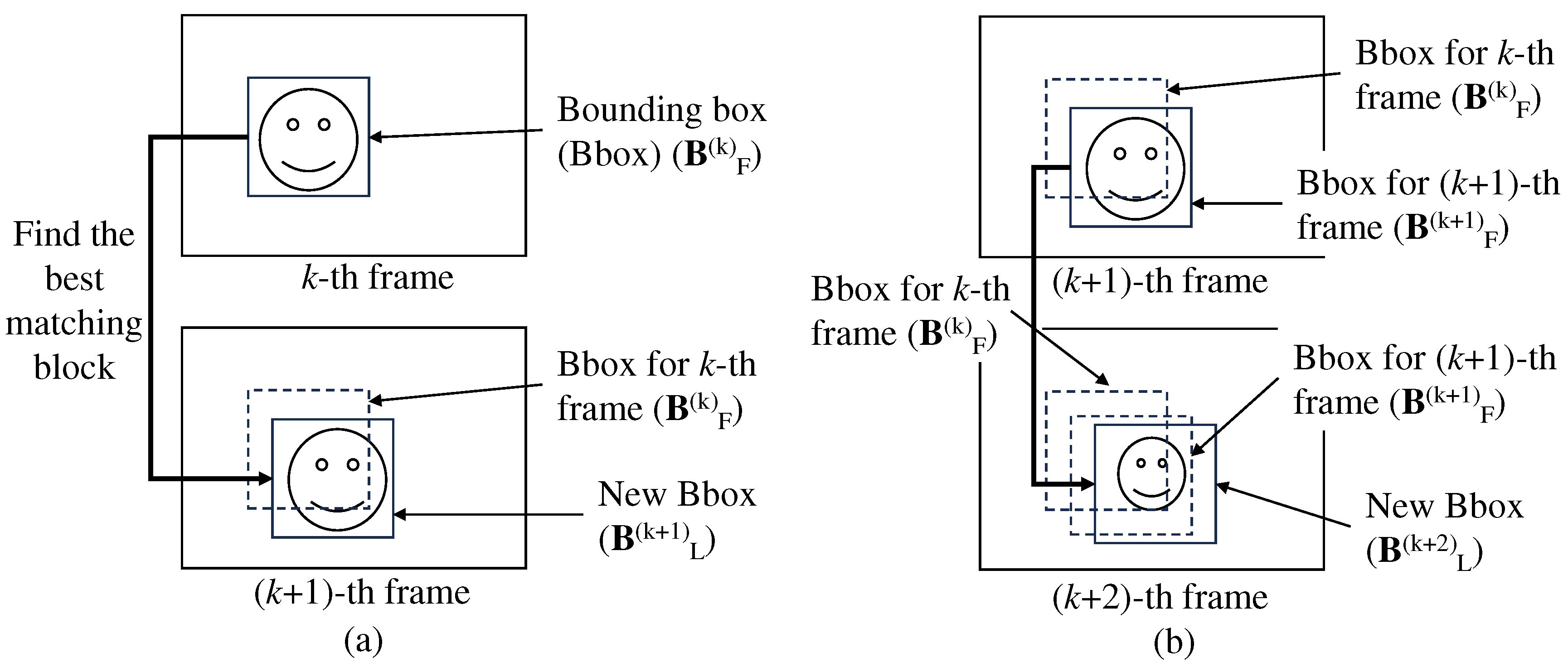

As depicted in Figure 1, the lightweight object tracking method was used to find the best candidate for a target from the previous frame within the current frame. Subsequently, the confidence level,

, was calculated and used to determine whether to invoke a robust but complex existing method. Therefore, for a given target, the existing method did not influence the skipping decision. The only impact of the existing method was that if it failed to detect the target object, an incorrect target object was fed into the lightweight object tracking model. Consequently, the performance of the proposed method is not dependent on the specific existing method combined with it. Instead, the major factor affecting the performance of the proposed method is the characteristics of the input video, such as texture, motion amount, object deformation, and other factors.

To validate this, two experiments were designed as follows: In the first experiment, we assumed the existence of an ultimate tracking algorithm,

, that always provided the ground truth. The proposed method combined with

is denoted as

. Given the ground truth for the dataset,

consistently provides the correct tracking results for the corresponding frames. Since recent tracking methods generally outperform

(SiamRPN++), their performance will likely fall between that of

and

. In the second experiment, the proposed method was combined with a real tracking algorithm, MixFormer [22,23], whose source code is available in [40]. In the proposed method combined with MixFormer,

is denoted as

. Since the optimal parameters for MixFormer on the VOT2018 dataset were not specified, default values were used in this experiment.

Table 6 shows the comparison results of

,

, and

in terms of skipping rate (%) for

= 1, 2, and 5. The threshold value was set to 0.5. The table shows the average skipping rates across the entire VOT2018 dataset and presents the skipping rates for selected video sequences from the dataset. As shown in the table, the average skipping rates for

= 1, 2, and 5 were approximately 0.34, 0.45, and 0.55, respectively, regardless of the algorithms used. Despite the significantly superior tracking performance of

compared to

and

, the average skipping rates across the three methods were similar. This observation underscores that the choice of algorithm has minimal impact on the skipping decision. Instead, the skipping rate is highly influenced by the characteristics of the video sequence. For example, the skipping rates for the fish1 sequence at

= 2 were 0.63, 0.65, and 0.65 for

,

, and

, respectively. On the other hand, the skipping rates for the book sequence at

= 2 were 0.05, 0.06, and 0.03 for the same methods, respectively.

Table 7 presents the accuracy, robustness, and EOA for

,

, and

. Since

always provided the correct tracking results, there were no lost frames, resulting in a robustness value of 0 for

. Although the proposed algorithm accelerated the tracking speed by frame skipping, the performance degradation remained minimal.
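The zero robustness value for the ground-truth tracker follows directly from how overlap-based metrics behave, as the sketch below illustrates. It is a simplification under stated assumptions: boxes are axis-aligned `(x, y, width, height)` tuples, a frame counts as lost when its overlap with the ground truth is zero, and accuracy is the mean overlap over the remaining frames. The function names are hypothetical, and the re-initialisation steps of the full VOT protocol are not modelled.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def summarize(predicted: Sequence[Box], ground_truth: Sequence[Box]) -> Tuple[float, int]:
    """Return (mean overlap over non-lost frames, number of lost frames)."""
    overlaps: List[float] = [iou(p, g) for p, g in zip(predicted, ground_truth)]
    lost = sum(1 for o in overlaps if o == 0.0)  # assumed failure criterion
    kept = [o for o in overlaps if o > 0.0]
    accuracy = sum(kept) / len(kept) if kept else 0.0
    return accuracy, lost
```

An oracle that returns the ground-truth box for every frame it processes yields an overlap of 1 and no lost frames on those frames, so any deviation from perfect scores in the combined method comes only from the frames handled by the lightweight tracker.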

The ground truth represents the theoretical upper bound of tracking performance, as no tracking algorithm can outperform it. By combining the proposed method with the ground truth, the experiments in Table 6 and Table 7 demonstrate the maximum potential performance when integrated with an ideal tracker. These experimental results show that the proposed method provides consistent performance, in terms of both accuracy and acceleration, regardless of the existing method it is combined with.

To further validate the proposed method, experiments were conducted on two additional widely used datasets, namely OTB100 [5] and UAV123 [43], using MixFormer as the baseline tracker, as presented in Table 8. Compared to

, the MixFormer combined with the proposed method,

significantly accelerates processing speed with only minimal degradation in AUC score and precision for the OTB100 dataset. Interestingly, for the UAV123 dataset, the proposed method (

) not only accelerated the processing speed but also slightly improved tracking performance (AUC and precision). This improvement may be attributed to the characteristics of UAV123, where objects exhibit relatively predictable motion and minimal background changes, allowing the lightweight tracker to perform effectively even with frame skipping. These results confirm that the proposed method is not limited to specific datasets but is effective across various tracking scenarios, highlighting its generalizability and robustness.

Figure 4 illustrates examples of successful and unsuccessful tracking. In the fish sequence, MixFormer (

) slightly missed the target across the frames, while MixFormer combined with the proposed method (

) accurately tracked the target throughout the sequence. This highlights how the lightweight tracker can enhance tracking accuracy in some scenarios. Conversely, in the

ant1 sequence, where the target underwent slight rotation,

failed to track the target, while

successfully maintained the target’s position, though with a less accurate bounding box. In the

ball2 sequence,

successfully tracked the target, whereas

failed. These examples illustrate specific cases where the performance of

and

diverge. Note, however, that in the majority of cases, the results of

and

were nearly identical.