Research on Parameter Compensation Method and Control Strategy of Mobile Robot Dynamics Model Based on Digital Twin

Abstract

1. Introduction

- (1) We need a dynamic model that remains valid in unknown situations in order to improve the positioning accuracy of outdoor mobile robots [34,35]. However, because a mobile robot is a complicated multi-DOF system, it is difficult to build an accurate dynamic model: the model depends on many factors, including the slope and smoothness of the road surface [36].

- (2) No existing platform for robotic surveillance systems can be customized to fit different application scenarios, features, controls, visualization, and other needs [37].

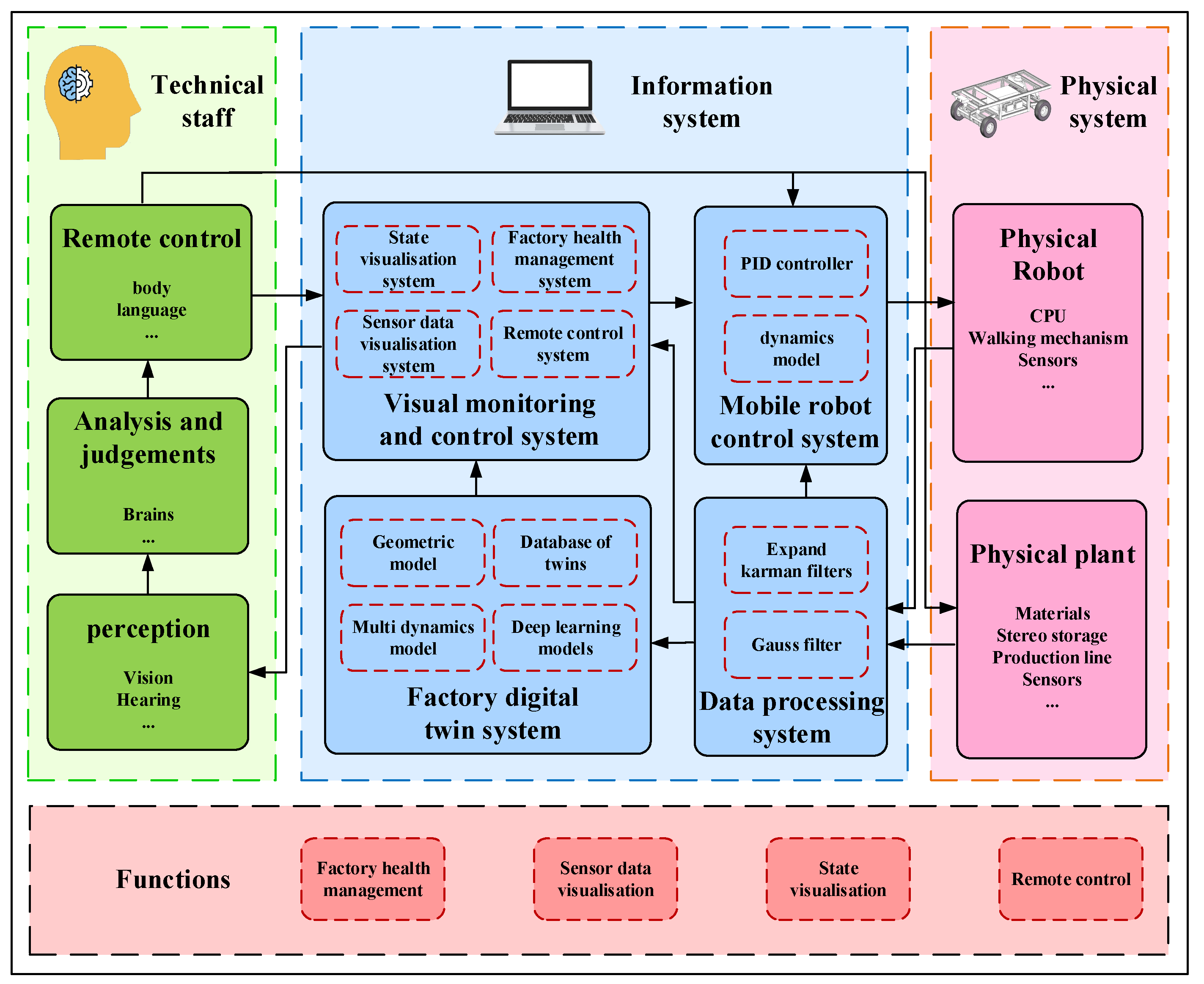

2. Visual Monitoring and Control System for Inspection Robots

2.1. System Architecture for Inspection Robots Based on Digital Twin

2.2. Digital Twin System for High-Risk Factories

2.2.1. Digital Twin System Architecture

2.2.2. Digital Twin System for Mobile Robots

2.3. Design of Visual Monitoring and Control System

3. Nonlinear Dynamics Parameter Compensation Strategy

3.1. Nonlinear Inverse Dynamics Model for Mobile Robots

3.2. Strategy for Compensating Parameters in a Nonlinear Dynamics Model

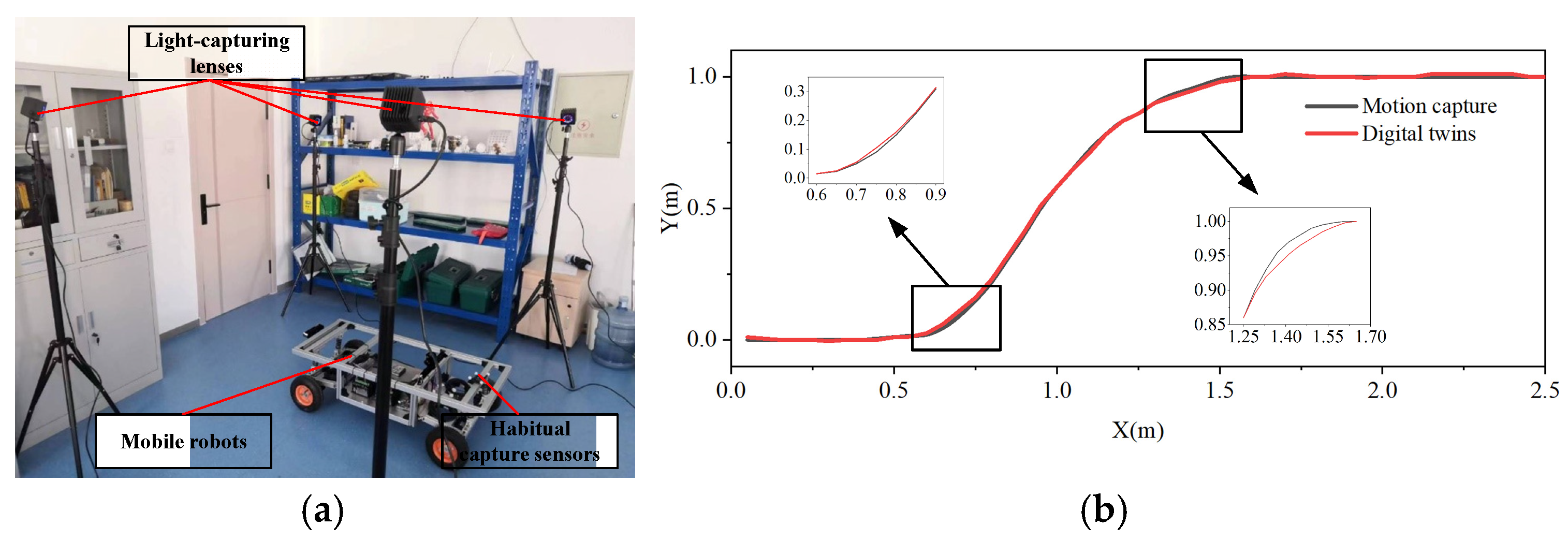

3.2.1. Acquisition of Motion Datasets

3.2.2. Calculation and Compensation of Dynamic Model Errors

4. Experiment

4.1. Physical Platform of Mobile Robot

4.2. Virtual–Real Consistency Experiment

4.3. Visualization Monitoring and Control Experiment

4.4. Positioning Accuracy Experiment

5. Conclusions

- (1) A control approach for both indoor and outdoor inspection robots is proposed, based on a five-dimensional spatial model of a digital twin system. Sensor data from the mobile robot are uploaded to the twin database via a wireless network. The system evaluates the robot's operational state and sends the appropriate motion parameters to the physical mobile robot. Compared with existing approaches, this makes it more practical to monitor and control the past and present operating states of factories and mobile robots. It also increases the accuracy of mobile robot control and broadens the range of applications of mobile robots.

- (2) A visual monitoring and control system for indoor and outdoor inspection robots has been established. Using virtual reality technology, it provides three-dimensional visual monitoring and anomaly monitoring of the robot status, operating environment, and sensor information during inspection, so that mobile robots and industrial machinery can be monitored visually in several dimensions. In contrast to Yong Zhou's approach [33], this paper integrates the visual monitoring system, the factory health management system, and the mobile robot remote control system into a single platform. The higher level of system integration improves the usability and inspection efficiency of the mobile robots and helps ensure the stable and safe operation of high-risk factories.

- (3) When operating on complicated road surfaces, mobile robots jitter and position imprecisely, because most current robot dynamics models are linear and therefore inaccurate. This work therefore proposes a parameter compensation technique for the nonlinear inverse dynamics model of indoor and outdoor inspection robots. We construct a multi-degree-of-freedom dynamics model for the mobile robot using digital twin and finite element technologies. A data-driven method then estimates the unknown parameters in the dynamics model from motion data collected under different pavement conditions: flat, concave, raised (convex), and complex pavement. Improving the accuracy of the dynamics model in this way increases the stability and positioning accuracy of outdoor mobile robots.

- (4) Experiments on the mobile inspection robot, involving coordinated scheduling and operation planning for the mobile robot and production equipment, have demonstrated the interoperability of the modules of the visual monitoring and control system and enabled visual inspection in high-risk factories. The parameter compensation approach for the mobile inspection robot dynamics model significantly reduces positioning errors, especially on unstructured road surfaces.
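The data-driven parameter estimation summarized in conclusion (3) can be illustrated with a minimal sketch. It does not reproduce the multi-degree-of-freedom nonlinear inverse dynamics model of this paper. As a simplifying assumption, it uses a one-dimensional longitudinal model, force = mass · acceleration + damping · velocity + offset, and fits the three unknown parameters by ordinary least squares. The function names, the model form, and all numeric values are hypothetical; the motion samples are synthetic.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # (acceleration, velocity, measured force)


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: motion data are not rich enough")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        partial = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - partial) / m[i][i]
    return x


def fit_parameters(samples: Sequence[Sample]) -> List[float]:
    """Least-squares fit of [mass, damping, offset] via the normal equations."""
    rows = [(a, v, 1.0) for a, v, _ in samples]
    forces = [f for _, _, f in samples]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atf = [sum(r[i] * f for r, f in zip(rows, forces)) for i in range(3)]
    return solve_linear(ata, atf)


def predict_force(theta: Sequence[float], accel: float, velocity: float) -> float:
    """Evaluate the assumed model for a parameter vector theta."""
    mass, damping, offset = theta
    return mass * accel + damping * velocity + offset


# Hypothetical "true" parameters for one pavement type (illustrative values only).
TRUE_THETA = [20.0, 3.5, 1.2]
LEVELS = (0.2, 0.4, 0.6, 0.8, 1.0)

# Synthetic, noise-free motion data on a grid of accelerations and speeds.
samples = [(a, v, predict_force(TRUE_THETA, a, v)) for a in LEVELS for v in LEVELS]
theta = fit_parameters(samples)

# Compensation term: fitted model minus a nominal model that only knows the mass.
NOMINAL_THETA = [20.0, 0.0, 0.0]
compensation = predict_force(theta, 0.5, 0.5) - predict_force(NOMINAL_THETA, 0.5, 0.5)
```

In this sketch one parameter vector would be fitted per pavement type, and the difference between the fitted and the nominal model would be added to the controller's feedforward term. Real data are noisy, so the fit only approximates the underlying parameters.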

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Sun, Y.; Lu, Y.; Bao, J.; Tao, F. Prognostics and health management via long short-term digital twins. J. Manuf. Syst. 2023, 68, 560–575.

2. Liu, C.; Mu, F. Design and Research of Intelligent Logistics Robot based on STM32. Recent Adv. Electr. Electron. Eng. 2021, 14, 44–51.

3. Ouyang, D.; Chen, M.; Huang, Q.; Weng, J.; Wang, Z.; Wang, J. A review on the thermal hazards of the lithium-ion battery and the corresponding countermeasures. Appl. Sci. 2019, 12, 2483.

4. Shelton, G.A. Evaluation and design of structures to contain lithium-ion battery hazards. Process Saf. Prog. 2022, 41, 433–436.

5. Schismenos, S.; Chalaris, M.; Stevens, G. Battery hazards and safety: A scoping review for lead acid and silver-zinc batteries. Saf. Sci. 2021, 140, 105290.

6. Ramūnas, B. Beyond the Human Eye: AI Improves Inspection in Manufacturing. Assembly 2024, 67, 25.

7. Whitney, D.; Rosen, E.; Ullman, D.; Phillips, E.; Tellex, S. ROS Reality: A Virtual Reality Framework Using Consumer-Grade Hardware for ROS-Enabled Robots. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018.

8. Suwanan, K.; Santi, N.; Panuthat, B. Design and Development of Cyber Physical System for Real-Time Web-based Visualization and Control of robot arm. In Proceedings of the 2020 5th International Conference on Control and Robotics Engineering (ICCRE), Osaka, Japan, 24–26 April 2020.

9. Yan, Z.; Zhen, J. Overview of Patrol Robots Application and Its Prospects in Airports. Mod. Comput. 2022, 28, 50–55.

10. Ma, C.; Guo, S.; Wu, J. Research On Omnidirectional Mobile Robot Tracking Location And Motion Trajectory In A Complex Environment. Telecommun. Radio Eng. 2021, 80, 65–76.

11. Sathian, P.; Raihan, E.A.; Mohan, R.E.; Prabakaran, V. AI-Enabled Vibrotactile Feedback-Based Condition Monitoring Framework for Outdoor Mobile Robots. Mathematics 2023, 11, 3804.

12. Deng, F.; Zhu, X.; He, C. Vision-based real-time traversable region detection for mobile robot in the outdoors. Sensors 2017, 17, 2101.

13. Zhang, F.; Ge, D.; Song, J.; Xiang, W. Outdoor scene understanding of mobile robot via multi-sensor information fusion. J. Ind. Inf. Integr. 2022, 30, 100392.

14. Payam, N.; Fiona, J.S.M.; Will, N.B. In situ skid estimation for mobile robots in outdoor environments. J. Field Robot. 2023, 41, 179–194.

15. Sarcevic, P.; Csík, D.; Pesti, R.; Stančin, S.; Tomažič, S.; Tadic, V.; Rodriguez-Resendiz, J.; Sárosi, J.; Odry, A. Online Outdoor Terrain Classification Algorithm for Wheeled Mobile Robots Equipped with Inertial and Magnetic Sensors. Electronics 2023, 12, 3238.

16. Qiu, Z.; Zhuang, Y.; Yan, F.; Hu, H.; Wang, W. RGB-DI Images and Full Convolution Neural Network-Based Outdoor Scene Understanding for Mobile Robots. IEEE Trans. Instrum. Meas. 2019, 68, 27–37.

17. Sango, M.; Kimitoshi, Y.; Yoshitaka, H.; Takashi, T. Traversable Region Estimation for Mobile Robots in an Outdoor Image. J. Intell. Robot. Syst. 2018, 92, 453–463.

18. Pookkuttath, S.; Palanisamy, A.P.; Elara, R.M. AI-Enabled Condition Monitoring Framework for Outdoor Mobile Robots Using 3D LiDAR Sensor. Mathematics 2023, 11, 3594.

19. Sathian, P.; Povendhan, A.P.; Mohan, R.E. Outdoor Localization for a Mobile Robot under Different Weather Conditions Using a Deep Learning Algorithm. J. Eur. Systèmes Autom. 2023, 56, 1.

20. Atiyah, A.H.; Hassan, Y.M. Outdoor Localization of 4-Wheels for Mobile Robot Using CNN with 3D Data. Int. J. Adv. Sci. Eng. Inf. Technol. 2022, 12, 1403–1409.

21. Lee, S.W.; Choi, H.M.; Lim, H.J. Unscented Kalman Filter Based 3D Localization of Outdoor Mobile Robots. J. Korean Soc. Precis. Eng. 2020, 37, 331–338.

22. Lee, S.W.; Choi, H.M.; Lim, H.J. Extended Kalman Filter Based 3D Localization Method for Outdoor Mobile Robots. J. Korean Soc. Precis. Eng. 2019, 36, 851–858.

23. Lee, S.W.; Lim, H.J. Unscented Kalman Filter based Outdoor Localization of a Mobile Robot. J. Korean Soc. Precis. Eng. 2019, 36, 183–190.

24. Sivapong, N.; Delowar, H.; Shin-ichiro, K.; Genci, C. Deep learning-based landmark detection for mobile robot outdoor localization. Machines 2019, 7, 25.

25. Ron, S.K.; Jacob, B. The digital twin in Industry 4.0: A wide-angle perspective. Qual. Reliab. Eng. Int. 2021, 38, 1357–1366.

26. Uhlemann, H.T.; Lehmann, C.; Steinhilper, R. The Digital Twin: Realizing the Cyber-Physical Production System for Industry 4.0. Procedia CIRP 2017, 61, 335–340.

27. Enrique, C.; Toshio, U.; Ixchel, G.; Ramirez, A. A Path to Industry 5.0 Digital Twins for Human–Robot Collaboration by Bridging NEP+ and ROS. Robotics 2024, 13, 28.

28. Zhao, Y.; Liu, Y.; Feng, J.; Gao, J.; Zhang, L. A framework for development of digital twin industrial robot production lines based on a mechatronics approach. Int. J. Model. Simul. Sci. Comput. 2022, 14, 2341025.

29. Kroell, N.; Maghmoumi, A.; Dietl, T.; Chen, X.; Küppers, B.; Scherling, T.; Feil, A.; Greiff, K. Towards digital twins of waste sorting plants: Developing data-driven process models of industrial-scale sensor-based sorting units by combining machine learning with near-infrared-based process monitoring. Resour. Conserv. Recycl. 2024, 200, 107257.

30. Hajjaj, H.S.S.; Sahari, M.S.K. Developing a Portable Human–Robot Interaction (HRI) Framework for Outdoor Robots Through Selective Compartmentalization. Arab. J. Sci. Eng. 2019, 44, 9779–9786.

31. Doris, A.; Jonas, S.I.R.; Daniëlle, V.T.; Joris, V.D.; Zoltan, R. Mirrorlabs—Creating accessible Digital Twins of robotic production environment with Mixed Reality. In Proceedings of the 2020 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), Utrecht, The Netherlands, 14–18 December 2020.

32. Li, X.; He, B.; Wang, Z.; Zhou, Y.; Li, G.; Jiang, R. Semantic-Enhanced Digital Twin System for Robot–Environment Interaction Monitoring. IEEE Trans. Instrum. Meas. 2021, 70, 7502113.

33. Zhou, Y.; Fu, Z.; Zhang, J.; Li, W.; Gao, C. A Digital Twin-Based Operation Status Monitoring System for Port Cranes. Sensors 2022, 22, 3216.

34. Zhang, H.; Huang, J.; He, Z.; Li, Q.B.E.; Khan, F.A.; Boada, M.J.L.; Yu, R.; Ding, S.; Chen, Y. A hierarchical constraint approach for dynamic modeling and trajectory tracking control of a mobile robot. J. Vib. Control. 2022, 28, 564–576.

35. Han, X.; Ge, M.; Cui, J.; Wang, H.; Zhuang, W. Motion modeling of a non-holonomic wheeled mobile robot based on trajectory tracking control. Trans. Can. Soc. Mech. Eng. 2020, 44, 228–233.

36. Kee, D.C. Motion Tracking of Four-Wheeled Mobile Robots in Outdoor Environments Using Bayes’ Filters. Int. J. Precis. Eng. Manuf. 2023, 24, 767–786.

37. Tao, F.; Sun, X.; Cheng, J.; Zhu, Y.; Liu, W.; Wang, Y.; Xu, H.; Hu, T.; Liu, X.; Liu, T.; et al. makeTwin: A reference architecture for digital twin software platform. Chin. J. Aeronaut. 2024, 37, 1–18.

38. Grieves, M.; Vickers, J. Digital Twin: Mitigating Unpredictable, Undesirable Emergent Behavior in Complex Systems. In Transdisciplinary Perspectives on Complex Systems; Springer International Publishing: Berlin/Heidelberg, Germany, 2017; pp. 85–113.

39. Tao, F.; Cheng, J.; Qi, Q.; Zhang, M.; Zhang, H.; Sui, F. Digital twin-driven product design, manufacturing and service with big data. Int. J. Adv. Manuf. Technol. 2018, 94, 3563–3576.

40. Tao, F.; Liu, W.; Zhang, M.; Hu, T.; Luo, Y. Five-dimension digital twin model and its ten applications. Comput. Integr. Manuf. Syst. 2019, 25, 1–18.

41. Newrzella, S.R.; Franklin, D.W.; Haider, S. 5-Dimension Cross-Industry Digital Twin Applications Model and Analysis of Digital Twin Classification Terms and Models. IEEE Access 2021, 9, 131306–131321.

42. Wu, C.; Zhou, Y.; Pessôa, P.V.M.; Peng, Q.; Tan, R. Conceptual digital twin modeling based on an integrated five-dimensional framework and TRIZ function model. J. Manuf. Syst. 2021, 58, 79–93.

43. Zou, Y.; Li, R.; Zhang, X.; Song, J. Five-dimensional model research of complex product assembly driven by digital twin. Int. J. Wirel. Mob. Comput. 2021, 21, 198–206.

44. Sun, L.; Pei, A.; Qi, X.; Cao, S.; Yang, R.; Liu, X. Dynamic Analysis of Digital Twin System Based on Five-Dimensional Model. J. Phys. Conf. Ser. 2020, 1486, 072038.

45. Xu, J.; Guo, T. Application and research on digital twin in electronic cam servo motion control system. Int. J. Adv. Manuf. Technol. 2021, 112, 1145–1158.

46. Stączek, P.; Pizoń, J.; Danilczuk, W.; Gola, A. A Digital Twin Approach for the Improvement of an Autonomous Mobile Robots (AMR’s) Operating Environment—A Case Study. Sensors 2021, 21, 7830.

47. Cheng, Q.; Cao, Y.; Liu, Z.; Cui, L.; Zhang, T.; Xu, L. A Health Management Technology Based on PHM for Diagnosis, Prediction of Machine Tool Servo System Failures. Appl. Sci. 2024, 14, 2656.

48. Zhang, X.; Li, J. Research and Innovation in Predictive Remote Control Technology for Mobile Service Robots. Adv. Comput. Signals Syst. 2023, 7, 1–6.

49. Yi, Y. Design of Robot Remote Control System Based on Sensor Fusion Technology. Comput. Meas. Control. 2023, 31, 142–147+182.

50. Kao, I.H.; Su, J.A.; Perng, J.W. Design of Outdoor Autonomous Moble Robot. arXiv 2022, arXiv:2201.12605.

51. Ohno, K.; Tsubouchi, T.; Shigematsu, B.; Yuta, S. Differential GPS and odometry-based outdoor navigation of a mobile robot. Adv. Robot. 2004, 18, 611–635.

52. Edwin, L.L.; Fabián, R.J. Global Map Generation and SLAM using LiDAR and Stereo Camera for tracking motion of Mobile Robot. ITECKNE Innovación Investig. Ing. 2019, 16, 58–70.

| Category | Constraints |
|---|---|
| Straight-line start | Pavement: flat, concave, raised, complex; Speed: 0–1 m/s; Acceleration: 0.2, 0.4, 0.6, 0.8, and 1.0 m/s² |
| Straight line at constant speed | Pavement: flat, concave, raised, complex; Speed: 0.2, 0.4, 0.6, 0.8, and 1.0 m/s |
| Turning start | Pavement: flat, concave, raised, complex; Speed: 0–1 m/s; Acceleration: 0.2, 0.4, and 0.6 m/s²; Turning radius: 1, 2, and 3 m |
| Turning at constant speed | Pavement: flat, concave, raised, complex; Speed: 0.2, 0.4, and 0.6 m/s; Turning radius: 1, 2, and 3 m |
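The motion categories and constraints in the table above define a grid of test conditions. The sketch below enumerates that grid, assuming a full factorial design with one run per combination of pavement and factor levels; the table does not state how the combinations were sampled or repeated, so this is an illustration only. The dictionary keys and the function name are hypothetical.

```python
from itertools import product
from typing import Dict, List, Tuple

PAVEMENTS: Tuple[str, ...] = ("flat", "concave", "raised", "complex")

# Factor levels per motion category, taken from the table above.
# For the two "start" categories the speed ramps from 0 to 1 m/s,
# so speed is not an independent factor there.
CATEGORIES: Dict[str, Dict[str, Tuple[float, ...]]] = {
    "straight_start": {"acceleration_mps2": (0.2, 0.4, 0.6, 0.8, 1.0)},
    "straight_constant": {"speed_mps": (0.2, 0.4, 0.6, 0.8, 1.0)},
    "turning_start": {
        "acceleration_mps2": (0.2, 0.4, 0.6),
        "turning_radius_m": (1.0, 2.0, 3.0),
    },
    "turning_constant": {
        "speed_mps": (0.2, 0.4, 0.6),
        "turning_radius_m": (1.0, 2.0, 3.0),
    },
}


def enumerate_runs() -> List[dict]:
    """Return one record per (category, pavement, factor levels) combination."""
    runs = []
    for category, factors in CATEGORIES.items():
        names = list(factors)
        for pavement, *levels in product(PAVEMENTS, *factors.values()):
            run = {"category": category, "pavement": pavement}
            run.update(zip(names, levels))
            runs.append(run)
    return runs


runs = enumerate_runs()
runs_per_category = {
    name: sum(1 for run in runs if run["category"] == name) for name in CATEGORIES
}
```

Under the full factorial assumption this gives 20 runs for each straight-line category and 36 for each turning category, 112 in total.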

| Part | Parameter | Value |
|---|---|---|
| 3D camera | Resolution | 1920 × 1080 |
| 3D camera | Frame rate | 30 fps |
| Lidar | Ranging principle | TOF |
| Lidar | Measuring radius | 30 m |
| Lidar | Scan frequency | 10 Hz |
| Raspberry Pi 4B | CPU | 1.5 GHz, 4 cores |
| Raspberry Pi 4B | Running memory | 8 GB |
| Raspberry Pi 4B | Operating system | Ubuntu 22.04 |
| IMU | Number of axes | 9 |
| IMU | Output frequency | 100 Hz |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, R.; Shang, X.; Wang, Y.; Liu, C.; Song, L.; Zhang, Y.; Gu, L.; Zhang, X. Research on Parameter Compensation Method and Control Strategy of Mobile Robot Dynamics Model Based on Digital Twin. Sensors 2024, 24, 8101. https://doi.org/10.3390/s24248101
