The details are as follows. First, we analyzed the principle and constitution of the DWS, including its basic working principle (A-1), constituent components (A-2), and graded warning mechanisms (A-3), which clarified the structure of the warning system. Next, we conducted an in-depth analysis of the AR-HUD principle (B-1), examined different warning interface cases (B-2), and summarized the characteristics of AR-HUD warning interface design (B-3). Then, we analyzed cognitive load theory (CLT), covering its basic concepts (C-1), the different types of cognitive load (C-2), and the application of cognitive load in the driving domain (C-3). Finally, we explored the theory and methods of ecological interface design (EID) (D-1), including abstraction hierarchy analysis (D-2) and the SRK classification framework (D-3), as well as how to transform interface design elements through ecological interface semantic mapping. These theories provide design guidance for creating an intuitive and efficient AR-HUD driving warning interface. Together, they give the interface design its theoretical foundation and establish evaluation standards for constructing a cognitively balanced AR-HUD driving warning system.

2.1. Ecological Interface Cognitive Balanced Design Strategy

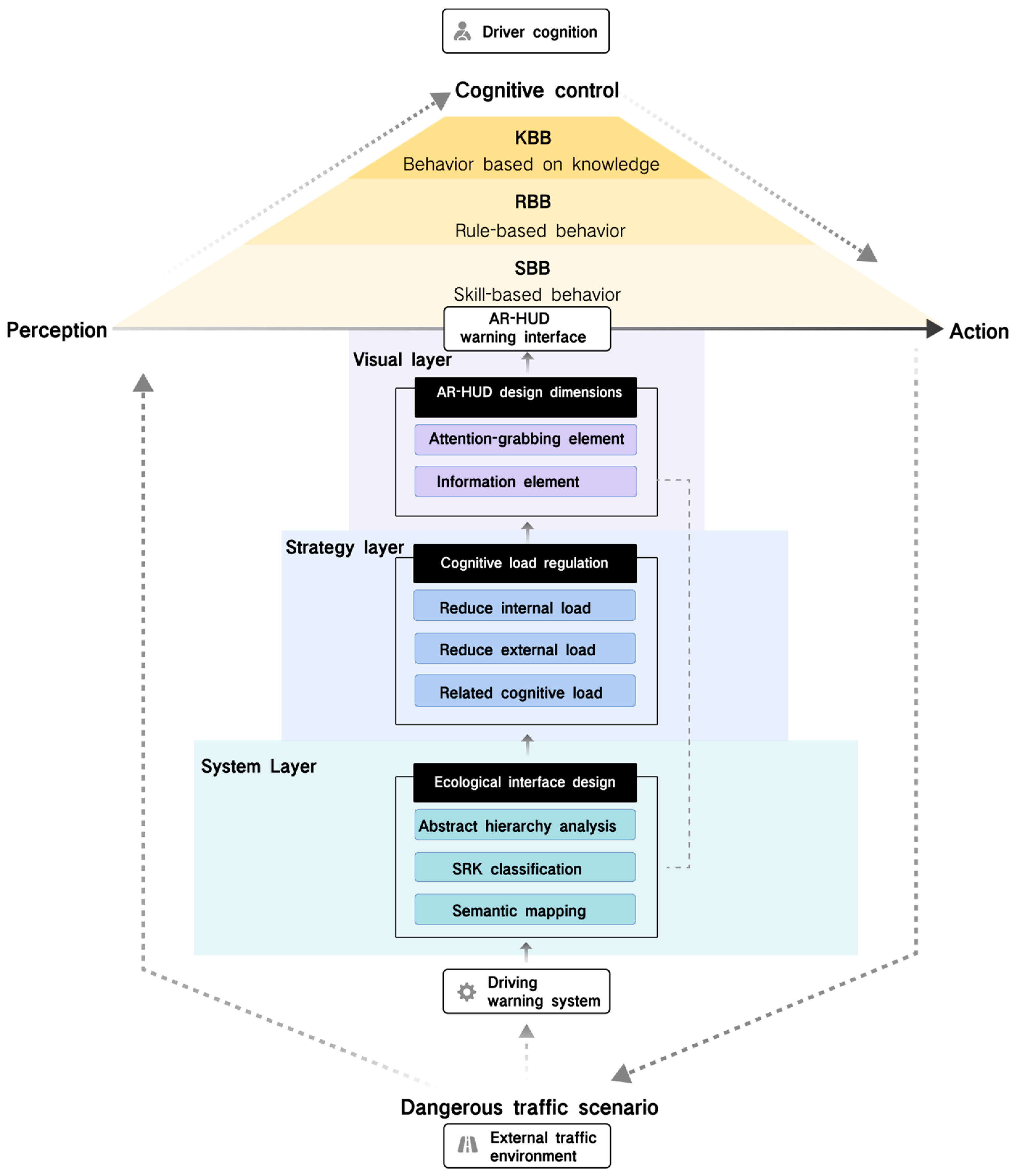

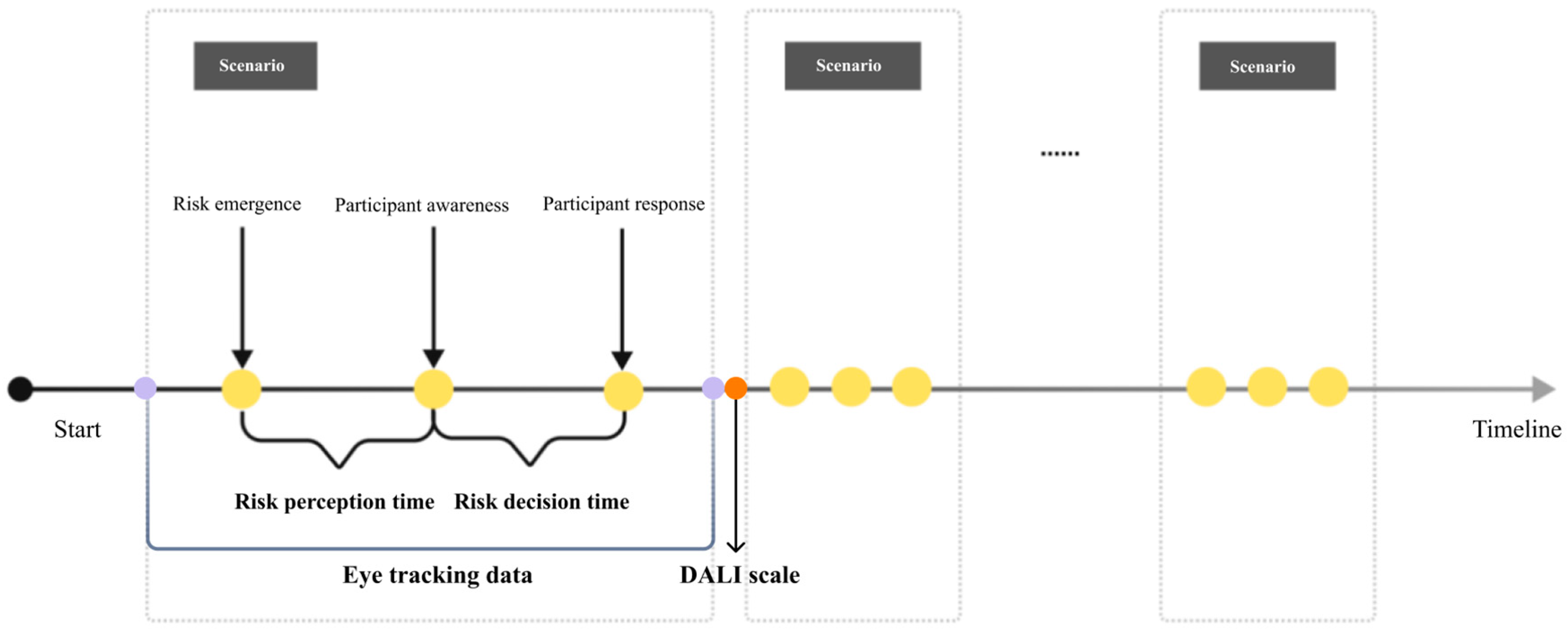

Based on the research on DWS, AR-HUD interface design, CLT, and the EID method, a three-layer AR-HUD warning interface with balanced cognitive load can be constructed, as shown in Figure 1. First, at the system layer, the external environment and the architecture of the warning system are analyzed through ecological interface design. Second, at the strategy layer, the interface design strategy is adjusted along the causal dimension of cognitive load. Finally, at the visual layer, the design process is considered from the visual design dimension of the AR-HUD. This comprehensive dynamic analysis ensures that the warning interface meets the needs of the system architecture, the driver’s cognition, and the AR-HUD’s characteristics. The three layers are organically combined. For example, the physical form layer of the abstraction hierarchy presents the function intuitively through the AR-HUD design dimension, and the SRK classification also uses these dimensions to classify the types of warning information. In addition, in terms of semantic mapping, the visual design is optimized by combining the visual thesaurus with the AR-HUD warning interface. Applying these three layers helps to balance the cognitive load, match the driver’s internal cognition with the external environment, promote skill-based behavior, let the driver react quickly in dangerous scenarios, and improve the driver’s ability to acquire driving schemas.

System layer: analysis of environment and system by ecological interface design.

In the cognitive load balance design of the AR-HUD warning system, it is important to analyze and construct the relationship between the external environment and the warning system by adopting the principle of ecological interface design (EID). The goal of EID is to create an intuitive representation that makes system status and operation clear and understandable to the driver, enabling efficient decision making. First of all, through the abstract hierarchy analysis of EID, the overall framework of the AR-HUD system can be deeply understood, and the interaction and dependence of various layers in the purpose–means structure can be revealed. Secondly, the whole–part structure helps to identify the constraints on variables at each layer and explore their interactions. These variables can be mapped to the SRK classification to identify the cognitive needs of different driving tasks. Finally, semantic mapping translates these constraints and information requirements into specific interface elements to ensure that information presentation matches driver cognitive patterns. Through this series of analyses, the AR-HUD warning system interface is carefully constructed to support fast, intuitive cognitive processing to help drivers maintain cognitive load balance and optimize the overall driving experience.

Strategy layer: interface elements of cognitive load regulation.

In the strategy layer of the AR-HUD warning system, the goal of cognitive load regulation is to transmit warning information effectively without exceeding the driver’s processing capacity. This requires careful design of interface elements so that information can be absorbed and understood rapidly while unnecessary cognitive load is reduced. The nature of the task, the driver’s ability, and their interaction must be considered together in order to reduce the internal (intrinsic) and external (extraneous) cognitive load and to increase the related (germane) cognitive load. To this end, a modular holistic task strategy is adopted to present information in stages, helping drivers understand and process the warning information. At the same time, it is necessary to avoid unnecessary comparisons between goals and problem states, reduce information redundancy, and integrate information sources in emergencies to prevent distraction. The interface design should also be adjusted to the driver’s level of expertise to provide appropriate information and support. To increase the related cognitive load, task variation design and embedded scaffolding design can be adopted. Task variation design helps drivers identify key features and promotes schema transfer. Embedded scaffolds, that is, implicit and explicit supports such as cues and feedback, help drivers identify and respond to potential hazards. These design strategies aim to improve the driver’s cognitive processing efficiency and reduce the safety risks caused by improper information processing. In summary, a balance must be found among interface elements, information presentation, and user interaction, so that every design decision improves task efficiency and safety while reducing cognitive stress, and the AR-HUD warning interface is aligned with the driver’s cognitive patterns and needs.

Visual layer: visual interface presented from the AR-HUD design dimension.

In the visual layer design of the AR-HUD, the warning system should use augmented reality technology to present visual information effectively and to match the driver’s visual perception and cognitive processes. The design must attend not only to the accurate transmission of information but also to the impact of visual presentation on the driver’s understanding and reaction. The design dimensions of the AR-HUD interface should combine the characteristics of warning information with the display characteristics of AR technology, distinguishing attention-grabbing elements from information content elements. The visual design task is to ensure that the warning elements attract the driver’s attention while keeping the message clear and easy to read. Attention-grabbing elements include registration type, visual field position, and visual design factors. The registration types are 3D registration, 2D registration, angle simulation, and unregistered forms, which differ in their limitations in visual space and depth. The design should exploit the spatial and depth perception advantages of the AR-HUD to integrate warning information into the driver’s field of view and create an intuitive information layer. Visual field position determines where information is presented, while visual design factors cover color, transparency, dynamic frequency, and saturation. Information content elements are divided into location-specific elements, dynamic environment elements, and action suggestions, corresponding to knowledge-based behavior (KBB), rule-based behavior (RBB), and skill-based behavior (SBB) in the SRK classification, respectively. Combined with the visual thesaurus of ecological interface design, visual languages can be developed that conform to cognitive habits and improve information acceptance and warning effects.
In short, the interface design of the AR-HUD warning system needs to comprehensively consider the synergy of visual elements to maximize the effect of information transmission and ensure its practicality and effectiveness in warning scenarios.
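
The design dimensions described above can be captured in a small data model. The following is a minimal Python sketch, not part of the original study: the `WarningElement` record and its field names are hypothetical, while the registration types and the mapping from information content elements to SRK levels follow the text.

```python
from dataclasses import dataclass
from enum import Enum


class SRK(Enum):
    """SRK behavior levels, abbreviated as in the text."""
    SBB = "skill-based behavior"
    RBB = "rule-based behavior"
    KBB = "knowledge-based behavior"


class Registration(Enum):
    """Registration types listed for attention-grabbing elements."""
    REGISTERED_3D = "3D registration"
    REGISTERED_2D = "2D registration"
    ANGLE_SIMULATION = "angle simulation"
    UNREGISTERED = "unregistered"


# Mapping stated in the text: information content element -> SRK level.
CONTENT_TO_SRK = {
    "location-specific": SRK.KBB,
    "dynamic environment": SRK.RBB,
    "action suggestion": SRK.SBB,
}


@dataclass(frozen=True)
class WarningElement:
    """Hypothetical record describing one warning element (illustrative only)."""
    name: str
    content_type: str            # one of the keys of CONTENT_TO_SRK
    registration: Registration   # attention-grabbing: registration type
    field_position: str          # attention-grabbing: visual field position
    color: str                   # visual design factor
    transparency: float          # visual design factor, 0.0 opaque to 1.0 clear

    @property
    def srk(self) -> SRK:
        """SRK level implied by the element's information content type."""
        return CONTENT_TO_SRK[self.content_type]


# Example values below are made up for illustration.
lane_boundary = WarningElement(
    name="lane departure safe area",
    content_type="location-specific",
    registration=Registration.REGISTERED_3D,
    field_position="road surface ahead",
    color="green",
    transparency=0.5,
)
print(lane_boundary.srk.name)  # KBB
```

Keeping the SRK level as a derived property rather than a stored field means an element cannot be tagged with a level that contradicts its information content type.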

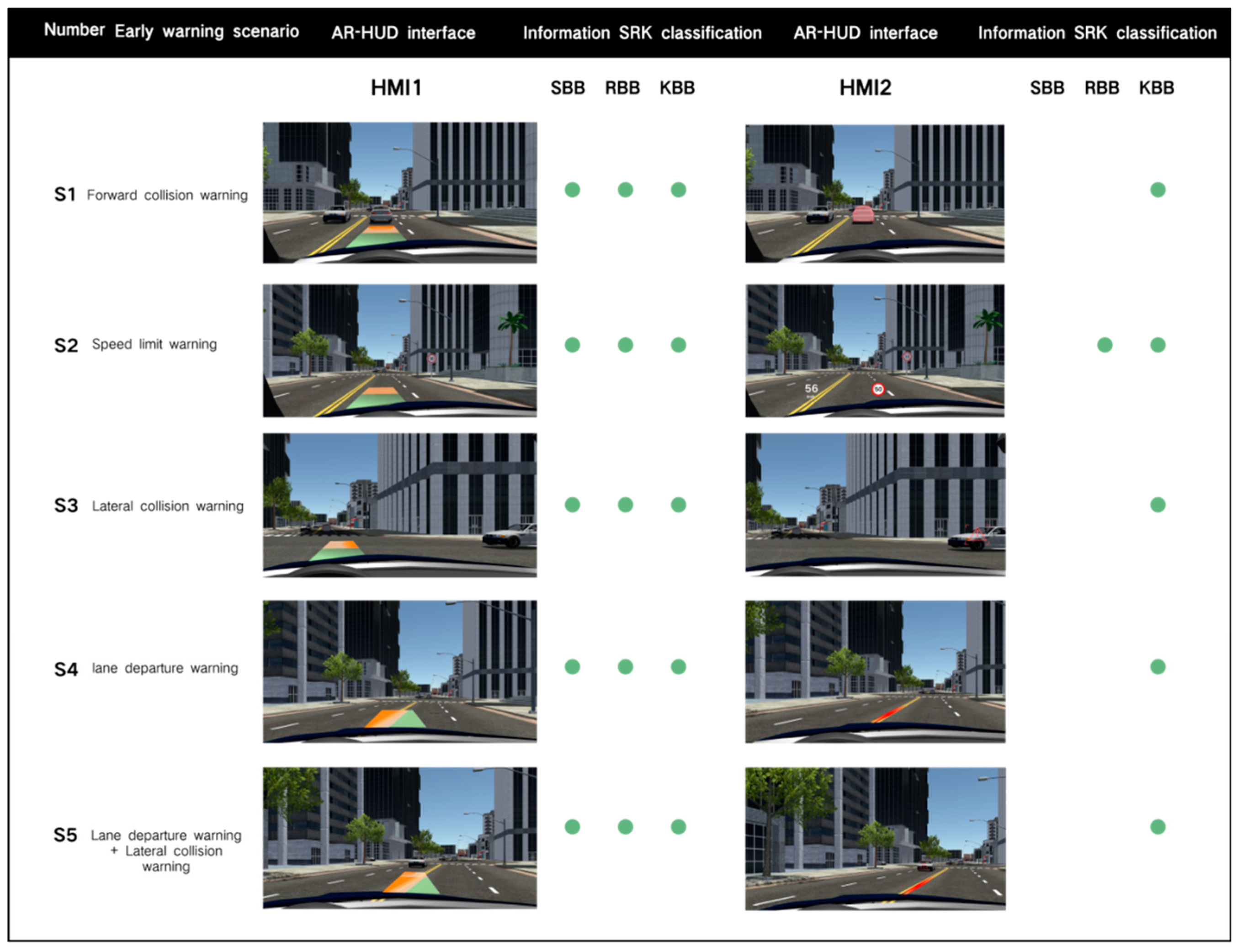

2.2. Ecological Interface Design of AR-HUD Warning System

Based on the proposed design strategy, the information analysis, design, and construction of the AR-HUD interface of the warning system are carried out at the system layer, strategy layer, and visual layer. At the system layer, the interface information architecture is obtained from the purpose–means structure of the abstraction hierarchy analysis of the AR-HUD warning system. Combined with the whole–part structure, the key variables corresponding to each piece of information can be associated, the information can be classified under SRK, and the type of the corresponding information element can be determined. At the strategy layer, the internal (intrinsic) cognitive load of warning information is reduced by using the modular holistic task strategy. The external (extraneous) load of the interface design is reduced by considering the different cognitive load effects and the expertise reversal effect. The related (germane) cognitive load is increased by varying the structure of the task itself and embedding cognitive scaffolds. On this basis, the cognitive load regulation analysis, interface information architecture, and presentation strategy are carried out. Finally, the AR-HUD warning interface design framework is constructed by integrating the semantic mapping of the visual layer with the analysis of the AR-HUD interface design dimensions.

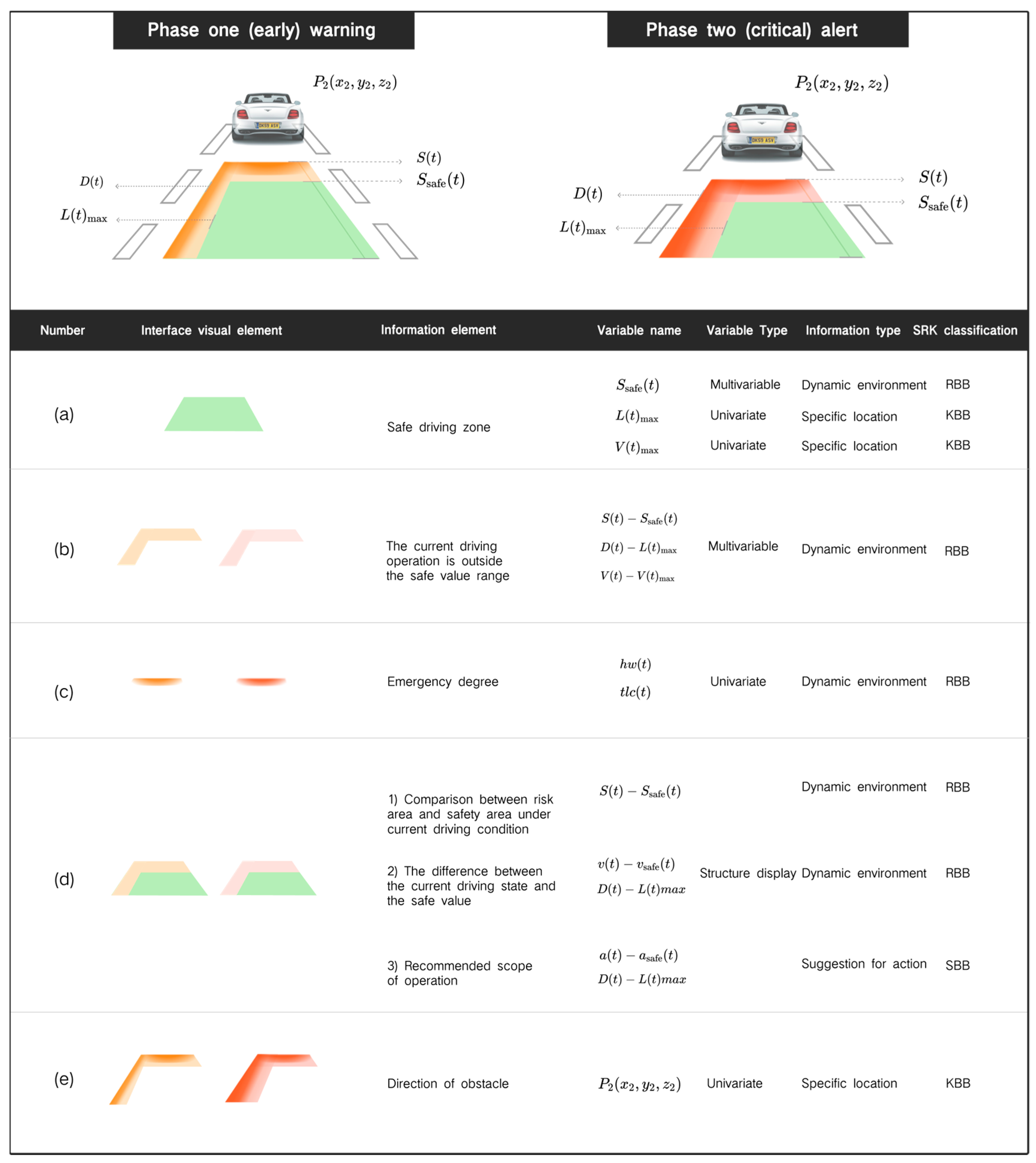

According to the previous analysis of interface design dimensions, warning visual design elements are constructed from two categories: attention-grabbing elements and information content elements.

At present, most AR-HUD interfaces use only one type of information element to provide warning information, which easily leads to an imbalance of cognitive load in the warning interface. Therefore, this study considers both the integration of the three information elements into the overall structure and the presentation of different information elements to drivers with different cognitive levels, that is, the independence of the three elements. After comprehensively considering the information design architecture and principles of the system layer, strategy layer, and visual layer described above, the overall AR-HUD warning interface design framework is analyzed and constructed, as shown in Figure 2 (see Supplementary Note S1 for details).

From an overall visual point of view, the AR-HUD warning interface is displayed in the shape of a “carpet”, a visual combination of multiple warning information components. The interface uses three warning colors, red, yellow, and green, which are widely recognized as warning signals and are also the three traffic-light colors. They denote different warning levels: green is safe, yellow is the phase-one early warning (notice), and red is the phase-two critical warning. The interface elements are presented through a combination of univariate mapping, multivariable mapping, and structure display, and are divided into three subcomponents (a–c) and two combined components (d, e). In different warning subsystems, each component carries a different warning meaning.

Figure 2a shows the safe driving area in the current traffic environment; its width equals the width of the vehicle body. In different warning scenarios, this component refers to different safety variables, and its SRK and information types differ accordingly. For example, in collision warning, its vertical boundary value is the time-varying safety distance, which is related to the speed difference between the vehicle and the obstacle and to the headway threshold. It therefore belongs to the RBB information type in a dynamic environment. In lane departure warning, the lateral boundary value represents the maximum allowable lateral departure, which can be presented directly in the coordinates of the driver’s perspective using the 3D registration display mode of the AR-HUD. Because it informs the driver of the location of the specific safety area, it belongs to the KBB classification type.

Figure 2b indicates the extent to which the current driving operation exceeds the safe value. The larger the area in the transverse and longitudinal directions, the more the current driving behavior (transverse and longitudinal acceleration) exceeds the safe value. The area changes in real time with the driver’s operation and maps the change in the key driving values. For example, in the longitudinal direction, the faster the car travels, the larger the longitudinal area. In the AR-HUD interface, the current displacement along the Y-axis can be estimated as follows:

$$\mathbf{v}(t) = \mathbf{v}_0 + \int_0^t \mathbf{a}(\tau)\,\mathrm{d}\tau, \qquad \mathbf{s}(t) = \mathbf{s}_0 + \int_0^t \mathbf{v}(\tau)\,\mathrm{d}\tau$$

Here, $\mathbf{v}(t)$ is the velocity vector at time $t$, $\mathbf{v}_0$ is the initial velocity vector, and $\mathbf{a}(t)$ is the acceleration vector at time $t$; $\mathbf{s}(t)$ is the displacement vector at time $t$, $\mathbf{s}_0$ is the initial displacement vector, and $\mathbf{v}(t)$ is the velocity vector at time $t$. The driver can therefore perceive both the change in speed and its magnitude from the change in displacement, and accelerate or decelerate accordingly. This also helps the driver build a schema of how risk factors change in real time with driving operations.
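
The relation between acceleration, velocity, and displacement described above can be approximated numerically from sampled acceleration. The following Python sketch uses trapezoidal integration along the longitudinal (Y) axis only; the function name, the sampling interval, and the example values are assumptions for illustration and are not taken from the study.

```python
def integrate_motion(accels, dt, v0=0.0, s0=0.0):
    """Integrate sampled longitudinal acceleration with the trapezoidal rule.

    accels: acceleration samples (m/s^2), one every dt seconds.
    v0, s0: initial velocity (m/s) and initial displacement (m).
    Returns (velocities, displacements), one value per sample.
    """
    velocities = [v0]
    displacements = [s0]
    for a_prev, a_next in zip(accels, accels[1:]):
        v_prev = velocities[-1]
        # Velocity is the running integral of acceleration.
        v_next = v_prev + 0.5 * (a_prev + a_next) * dt
        # Displacement is the running integral of velocity.
        s_next = displacements[-1] + 0.5 * (v_prev + v_next) * dt
        velocities.append(v_next)
        displacements.append(s_next)
    return velocities, displacements


# Illustrative input: constant 2 m/s^2 for 1 s, starting at 10 m/s.
v, s = integrate_motion([2.0] * 11, dt=0.1, v0=10.0)
print(round(v[-1], 6), round(s[-1], 6))  # 12.0 11.0
```

Under constant acceleration the trapezoidal rule reproduces the closed-form result, which makes this case a convenient sanity check before feeding in real sensor samples.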

Figure 2c shows a gradual color block whose saturation increases as the current warning risk value increases, indicating the urgency of the current situation. In phase one of the warning, a light-yellow gradient appears, and as the risk level continues to increase, it gradually becomes dark yellow. If the risk increases to phase two of the warning, the dark yellow gradient changes to light red, and then continues to deepen to dark red, indicating that the warning level continues to increase.
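
The graded color behavior of this component can be sketched as a mapping from a normalized risk value to a hue and saturation. The Python example below is illustrative only: the thresholds `PHASE_ONE` and `PHASE_TWO`, the saturation range, and the assumption that risk is normalized to [0, 1] are hypothetical and are not specified in the text.

```python
import colorsys

# Hypothetical thresholds on a normalized risk value in [0, 1].
PHASE_ONE = 0.3   # below this: safe, no gradient block shown
PHASE_TWO = 0.7   # at or above this: critical (red) warning
YELLOW_HUE = 60 / 360
RED_HUE = 0.0
MIN_SAT, MAX_SAT = 0.3, 1.0   # assumed "light" to "dark" saturation range


def warning_color(risk):
    """Return (level, rgb) for a risk value; rgb is None when safe."""
    risk = max(0.0, min(1.0, risk))
    if risk < PHASE_ONE:
        return "safe", None
    if risk < PHASE_TWO:
        level, hue, low, high = "phase one", YELLOW_HUE, PHASE_ONE, PHASE_TWO
    else:
        level, hue, low, high = "phase two", RED_HUE, PHASE_TWO, 1.0
    # Saturation grows linearly with risk inside the current phase.
    progress = (risk - low) / (high - low)
    saturation = MIN_SAT + (MAX_SAT - MIN_SAT) * progress
    return level, colorsys.hsv_to_rgb(hue, saturation, 1.0)


for r in (0.1, 0.4, 0.6, 0.75, 1.0):
    print(r, warning_color(r))
```

Restarting the saturation ramp at each phase boundary reproduces the described sequence: light yellow deepening to dark yellow, then light red deepening to dark red.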

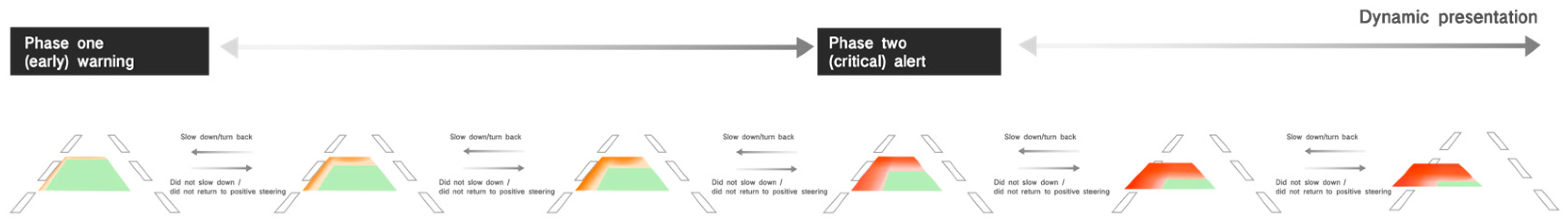

Combining Figure 2b,c, the interface showing the dynamic change of warning risk is presented in Figure 3. The higher the warning risk, the larger the area in Figure 2b (that is, the area in Figure 2e) and the higher the saturation in Figure 2c.

Because the driver’s horizontal and vertical operations change the current risk state in real time, a critical risk may also revert to an early risk. In that case the area and the saturation both decrease until the component gradually disappears, showing a dynamic change process. At the same time, the visual changes in area and color saturation in Figure 2b,c help the driver understand how risk changes under dynamic operation and help the driver build the related (germane) cognitive load for dangerous scenarios.

Figure 2d is a composite of Figure 2a,b and conveys three main information elements: (1) the comparison between the risk area and the safety area under the current driving state; (2) the difference between the current driving condition and the safe value; and (3) the recommended scope of operation. For the first element, the risk area and the safety area shown in Figure 2d are the combined components (a) and (b), and comparing the two reflects how far the current driving operation exceeds the safety range. Since (b) changes in real time with the driver’s operation, if (b) keeps shrinking toward (a) and its area keeps decreasing (as shown in Figure 3), the change in driving behavior is reducing the risk; otherwise, the risk continues to increase. For the second element, because (d) is real-time feedback and an intuitive mapping of the difference between the safe value and the driving behavior, it can serve as action suggestion information that stimulates SBB and informs the driver of the direction of safe operation. As shown in Figure 2, (b) contracts toward (a): longitudinal (Y-axis) contraction represents deceleration, and lateral (X-axis) contraction represents returning the steering to center. This visual schema lets the driver perceive the association between the current driving operation and the change in hazard level, and reduce the risk of an accident by controlling the area of (b). For the third element, the visual effect also informs the driver of the reasonable operation range. As shown in Figure 2, when (b) shrinks toward (a) until its area reaches 0 and it disappears, the warning is lifted and the state shown in (a) is reached (an all-green trapezoid).
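
The logic that component (d) conveys can be sketched in code. The following Python example is a simplified illustration under stated assumptions: the extent of (b) is represented by two non-negative excess values along the X and Y axes, and the function names and the "unchanged" outcome are hypothetical additions not described in the text.

```python
def excess(current, safe):
    """Amount by which a driving value exceeds its safe value (never negative)."""
    return max(0.0, current - safe)


def suggest_operation(excess_x, excess_y):
    """Map the X/Y extent of component (b) to the operation it suggests."""
    suggestions = []
    if excess_y > 0:
        # Longitudinal (Y-axis) contraction corresponds to deceleration.
        suggestions.append("decelerate")
    if excess_x > 0:
        # Lateral (X-axis) contraction corresponds to re-centering the steering.
        suggestions.append("return steering to center")
    # When (b) has no extent left, only the green safe area (a) remains.
    return suggestions or ["warning lifted"]


def risk_trend(previous_extent, current_extent):
    """Compare the overall extent of (b) between two display frames."""
    if current_extent < previous_extent:
        return "risk decreasing"
    if current_extent > previous_extent:
        return "risk increasing"
    return "unchanged"  # assumption: not distinguished in the text


# Illustrative values only.
over_y = excess(current=3.5, safe=2.0)
print(suggest_operation(0.0, over_y))   # ['decelerate']
print(suggest_operation(0.0, 0.0))      # ['warning lifted']
print(risk_trend(1.5, 0.4))             # risk decreasing
```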

Figure 2e is a composite of Figure 2b,c. The orientation and transparency changes of the visual element in Figure 2c represent the direction of the obstacle. The derivation is as follows:

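The direction vector of the obstacle is the vector from the observation point $P$ to the obstacle $O$, which can be represented by $\overrightarrow{PO}$, as follows:

$$\overrightarrow{PO} = O - P$$

Here, we take the unit vector to represent the direction of the obstacle:

$$\hat{\mathbf{d}} = \frac{\overrightarrow{PO}}{\lVert \overrightarrow{PO} \rVert}$$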

The display area of Figure 2c does not exceed the area of Figure 2e, which represents the relationship between the collision risk of the obstacle and the current speed. The closer the obstacle, the larger the proportion of Figure 2b that Figure 2c occupies. If the speed changes to a safe speed, that is, if Figure 2b gradually shrinks toward Figure 2a until it disappears, then Figure 2e, which represents the direction, disappears at the same time. This helps the driver construct a schema relating obstacle distance to driving operation.
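
The obstacle direction used by component (e) can be computed as a unit vector from the observation point to the obstacle. The Python sketch below assumes two-dimensional ground-plane coordinates with the Y-axis pointing forward; the function name, the coordinate frame, and the bearing convention are assumptions for illustration.

```python
import math


def obstacle_direction(observer, obstacle):
    """Unit vector pointing from the observation point to the obstacle.

    Both arguments are (x, y) tuples in the same ground-plane frame.
    """
    dx = obstacle[0] - observer[0]
    dy = obstacle[1] - observer[1]
    norm = math.hypot(dx, dy)
    if norm == 0.0:
        raise ValueError("observer and obstacle coincide; direction undefined")
    return dx / norm, dy / norm


# Illustrative values: obstacle 3 m to the right and 4 m ahead.
direction = obstacle_direction((0.0, 0.0), (3.0, 4.0))
# Bearing measured from the forward (Y) axis, positive toward +X.
bearing_deg = math.degrees(math.atan2(direction[0], direction[1]))
print(direction, round(bearing_deg, 2))
```

Normalizing the vector separates direction from distance, so the orientation of the visual element can be driven by the unit vector while distance drives its proportion within the risk area.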