Time-Series Representation Feature Refinement with a Learnable Masking Augmentation Framework in Contrastive Learning

Abstract

1. Introduction

- It introduces a contrastive learning framework that integrates a learnable masking network into a conventional contrastive learning pipeline and trains both under a dedicated loss function. The framework builds on existing augmentation techniques rather than replacing them, and the learnable mask enables the model to learn and refine features more effectively. By coupling feature learning with the masking mechanism, we aim to achieve better feature refinement and therefore stronger augmented features, which in turn improve performance on time-series representation tasks. This integrated approach has the potential to advance time-series analysis by providing more robust and context-aware representations.

- It proposes a feature extraction module that exploits the intermediate features produced while reconstructing masked time-series data, improving how those features are used in contrastive learning. By drawing on these intermediate representations, the module aims to extract meaningful features that capture the complex structure of time-series data. This improves the overall effectiveness of contrastive learning and supports a more nuanced modeling of temporal dynamics, contributing to better time-series representation learning.

- It validates the proposed approach to time-series representation learning through performance evaluations on a diverse set of datasets: HAR [27], Sleep-EDF [28], and Epilepsy [29]. These evaluations are intended to demonstrate the robustness and applicability of the method across different application domains.
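The contributions above pair a learnable mask with a contrastive objective. The sketch below is a minimal NumPy illustration under explicit assumptions, not the authors' implementation: the mask is a per-time-step sigmoid gate over hypothetical logits (`mask_logits`) that a masking network would output, the encoder is a stand-in random linear projection (`encode`), and the contrastive term is the SimCLR-style NT-Xent loss [35] with an assumed temperature `tau = 0.2`. The reconstruction branch and the feature extraction module are omitted.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def soft_mask(x, mask_logits):
    """Gate each time step of x by sigmoid(mask_logits).

    x: (batch, channels, time); mask_logits: (batch, time). In a trainable
    model, mask_logits would come from a (hypothetical) masking network; the
    smooth gate keeps the masked view differentiable w.r.t. those logits.
    """
    gate = sigmoid(mask_logits)[:, None, :]  # broadcast the gate over channels
    return x * gate

def nt_xent(z1, z2, tau=0.2):
    """SimCLR-style NT-Xent loss; row i of z1 and row i of z2 form a positive pair."""
    n = z1.shape[0]
    z = np.concatenate([z1, z2], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # unit vectors -> cosine similarity
    sim = (z @ z.T) / tau
    np.fill_diagonal(sim, -np.inf)                     # a sample is never its own negative
    positives = np.concatenate([np.arange(n, 2 * n), np.arange(n)])
    # Numerically stable row-wise log-softmax, read off at each row's positive partner
    row_max = sim.max(axis=1, keepdims=True)
    log_norm = row_max + np.log(np.exp(sim - row_max).sum(axis=1, keepdims=True))
    log_prob = sim - log_norm
    return -log_prob[np.arange(2 * n), positives].mean()

# Toy usage: 8 three-channel series of length 128 (shapes are arbitrary)
rng = np.random.default_rng(0)
x = rng.standard_normal((8, 3, 128))
mask_logits = rng.standard_normal((8, 128))          # stand-in for masking-network output
W = rng.standard_normal((3 * 128, 32)) / np.sqrt(3 * 128)

def encode(v):
    """Stand-in encoder: flatten each sample and apply a fixed random projection."""
    return v.reshape(v.shape[0], -1) @ W

x_masked = soft_mask(x, mask_logits)
loss = nt_xent(encode(x), encode(x_masked))
print(loss)
```

The sigmoid gate is the part that would let gradients from the contrastive loss reach a masking network; NumPy is used only to keep the example dependency-light, so actually training the mask would require an automatic-differentiation framework.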

2. Related Work

2.1. Self-Supervised Learning in Time Series Representation

2.2. Contrastive Learning in Time Series Representation

2.3. Reconstruction-Based Method in Time Series Representation

3. Methodology

3.1. Pre-Training Stage

3.2. Training Stage

3.3. Fine-Tuning Stage

3.4. Loss

3.4.1. Reconstruction Loss

3.4.2. Pairwise Loss

3.4.3. Contrastive Loss

4. Experiment

4.1. Datasets

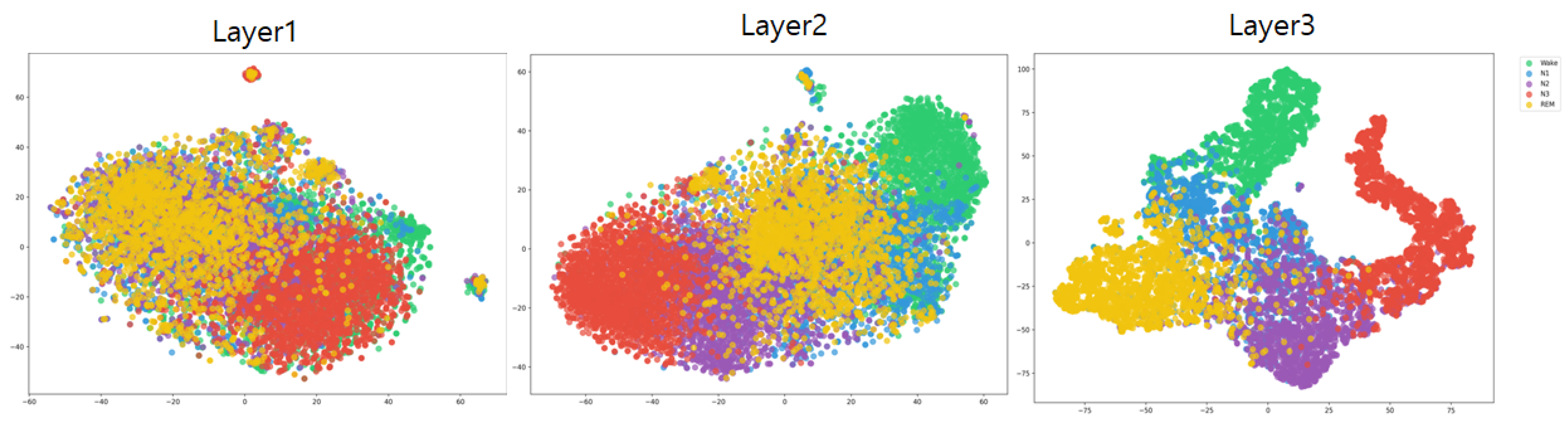

4.1.1. Sleep-EDF

4.1.2. HAR

4.1.3. Epilepsy

4.2. Implementation Details

4.2.1. Augmentation

4.2.2. Parameters

4.2.3. Environment

4.3. Comparative Results

4.4. Ablation Studies

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Abid, M.; Khabou, A.; Ouakrim, Y.; Watel, H.; Chemcki, S.; Mitiche, A.; Benazza-Benyahia, A.; Mezghani, N. Physical activity recognition based on a parallel approach for an ensemble of machine learning and deep learning classifiers. Sensors 2021, 21, 4713.

2. Sanchez Guinea, A.; Sarabchian, M.; Mühlhäuser, M. Improving wearable-based activity recognition using image representations. Sensors 2022, 22, 1840.

3. Zhao, H.; Fang, Y.; Zhao, Y.; Tian, Z.; Zhang, W.; Feng, X.; Yu, L.; Li, W.; Fan, H.; Mu, T. Time-Series Representation Learning in Topology Prediction for Passive Optical Network of Telecom Operators. Sensors 2023, 23, 3345.

4. Pouromran, F.; Lin, Y.; Kamarthi, S. Personalized Deep Bi-LSTM RNN based model for pain intensity classification using EDA signal. Sensors 2022, 22, 8087.

5. Xu, Z.; Liu, T.; Xia, Z.; Fan, Y.; Yan, M.; Dang, X. SSG-Net: A Multi-Branch Fault Diagnosis Method for Scroll Compressors Using Swin Transformer Sliding Window, Shallow ResNet, and Global Attention Mechanism (GAM). Sensors 2024, 24, 6237.

6. Lee, Y.; Min, J.; Han, D.K.; Ko, H. Spectro-temporal attention-based voice activity detection. IEEE Signal Process. Lett. 2019, 27, 131–135.

7. Ahn, S.; Ko, H. Background noise reduction via dual-channel scheme for speech recognition in vehicular environment. IEEE Trans. Consum. Electron. 2005, 51, 22–27.

8. Park, S.; Mun, S.; Lee, Y.; Ko, H. Acoustic Scene Classification Based on Convolutional Neural Network Using Double Image Features. In Proceedings of the DCASE 2017, Munich, Germany, 16 November 2017; pp. 98–102.

9. AlMuhaideb, S.; AlAbdulkarim, L.; AlShahrani, D.M.; AlDhubaib, H.; AlSadoun, D.E. Achieving More with Less: A Lightweight Deep Learning Solution for Advanced Human Activity Recognition (HAR). Sensors 2024, 24, 5436.

10. Azadi, B.; Haslgrübler, M.; Anzengruber-Tanase, B.; Sopidis, G.; Ferscha, A. Robust feature representation using multi-task learning for human activity recognition. Sensors 2024, 24, 681.

11. Radhakrishnan, B.L.; Ezra, K.; Jebadurai, I.J.; Selvakumar, I.; Karthikeyan, P. An Autonomous Sleep-Stage Detection Technique in Disruptive Technology Environment. Sensors 2024, 24, 1197.

12. Wen, Q.; Sun, L.; Yang, F.; Song, X.; Gao, J.; Wang, X.; Xu, H. Time series data augmentation for deep learning: A survey. arXiv 2020, arXiv:2002.12478.

13. Ching, T.; Himmelstein, D.S.; Beaulieu-Jones, B.K.; Kalinin, A.A.; Do, B.T.; Way, G.P.; Ferrero, E.; Agapow, P.M.; Zietz, M.; Hoffman, M.M.; et al. Opportunities and obstacles for deep learning in biology and medicine. J. R. Soc. Interface 2018, 15, 20170387.

14. Yang, W.; Wang, Y.; Hu, J.; Yuan, T. Sleep CLIP: A multimodal sleep staging model based on sleep signals and sleep staging labels. Sensors 2023, 23, 7341.

15. Wang, B.; Xu, Y.; Peng, S.; Wang, H.; Li, F. Detection Method of Epileptic Seizures Using a Neural Network Model Based on Multimodal Dual-Stream Networks. Sensors 2024, 24, 3360.

16. Oh, C.; Han, S.; Jeong, J. Time-series data augmentation based on interpolation. Procedia Comput. Sci. 2020, 175, 64–71.

17. Gui, J.; Chen, T.; Zhang, J.; Cao, Q.; Sun, Z.; Luo, H.; Tao, D. A Survey on Self-Supervised Learning: Algorithms, Applications, and Future Trends. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 9052–9071.

18. Shwartz Ziv, R.; LeCun, Y. To compress or not to compress—Self-supervised learning and information theory: A review. Entropy 2024, 26, 252.

19. Zhang, K.; Wen, Q.; Zhang, C.; Cai, R.; Jin, M.; Liu, Y.; Zhang, J.Y.; Liang, Y.; Pang, G.; Song, D.; et al. Self-supervised learning for time series analysis: Taxonomy, progress, and prospects. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 6775–6794.

20. Liu, Z.; Alavi, A.; Li, M.; Zhang, X. Self-supervised contrastive learning for medical time series: A systematic review. Sensors 2023, 23, 4221.

21. Luo, D.; Cheng, W.; Wang, Y.; Xu, D.; Ni, J.; Yu, W.; Zhang, X.; Liu, Y.; Chen, Y.; Chen, H.; et al. Time series contrastive learning with information-aware augmentations. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 4534–4542.

22. Demirel, B.U.; Holz, C. Finding order in chaos: A novel data augmentation method for time series in contrastive learning. Adv. Neural Inf. Process. Syst. 2024, 36, 30750–30783.

23. Wang, X.; Qi, G.J. Contrastive learning with stronger augmentations. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 5549–5560.

24. Xia, J.; Wu, L.; Chen, J.; Hu, B.; Li, S.Z. Simgrace: A simple framework for graph contrastive learning without data augmentation. In Proceedings of the ACM Web Conference 2022, Virtual, 25–29 April 2022; pp. 1070–1079.

25. Luo, R.; Wang, Y.; Wang, Y. Rethinking the effect of data augmentation in adversarial contrastive learning. arXiv 2023, arXiv:2303.01289.

26. Eldele, E.; Ragab, M.; Chen, Z.; Wu, M.; Kwoh, C.K.; Li, X.; Guan, C. Time-series representation learning via temporal and contextual contrasting. arXiv 2021, arXiv:2106.14112.

27. Garcia-Gonzalez, D.; Rivero, D.; Fernandez-Blanco, E.; Luaces, M.R. A public domain dataset for real-life human activity recognition using smartphone sensors. Sensors 2020, 20, 2200.

28. Goldberger, A.L.; Amaral, L.A.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a new research resource for complex physiologic signals. Circulation 2000, 101, e215–e220.

29. Andrzejak, R.G.; Lehnertz, K.; Mormann, F.; Rieke, C.; David, P.; Elger, C.E. Indications of nonlinear deterministic and finite-dimensional structures in time series of brain electrical activity: Dependence on recording region and brain state. Phys. Rev. E 2001, 64, 061907.

30. Dong, J.; Wu, H.; Zhang, H.; Zhang, L.; Wang, J.; Long, M. SimMTM: A simple pre-training framework for masked time-series modeling. Adv. Neural Inf. Process. Syst. 2024, 36, 29996–30025.

31. Malhotra, P.; Tv, V.; Vig, L.; Agarwal, P.; Shroff, G. TimeNet: Pre-trained deep recurrent neural network for time series classification. arXiv 2017, arXiv:1706.08838.

32. Sagheer, A.; Kotb, M. Unsupervised pre-training of a deep LSTM-based stacked autoencoder for multivariate time series forecasting problems. Sci. Rep. 2019, 9, 19038.

33. Abid, A.; Zou, J. Autowarp: Learning a warping distance from unlabeled time series using sequence autoencoders. arXiv 2018, arXiv:1810.10107.

34. Tonekaboni, S.; Eytan, D.; Goldenberg, A. Unsupervised representation learning for time series with temporal neighborhood coding. arXiv 2021, arXiv:2106.00750.

35. Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 13–18 July 2020; pp. 1597–1607.

36. Yang, L.; Hong, S. Unsupervised time-series representation learning with iterative bilinear temporal-spectral fusion. In Proceedings of the International Conference on Machine Learning, PMLR, Baltimore, MD, USA, 17–23 July 2022; pp. 25038–25054.

37. Zhang, K.; Liu, Y. Unsupervised feature learning with data augmentation for control valve stiction detection. In Proceedings of the 2021 IEEE 10th Data Driven Control and Learning Systems Conference (DDCLS), Suzhou, China, 14–16 May 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1385–1390.

38. Abdulaal, A.; Liu, Z.; Lancewicki, T. Practical approach to asynchronous multivariate time series anomaly detection and localization. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, Virtual, 14–18 August 2021; pp. 2485–2494.

39. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS’17), Long Beach, CA, USA, 4–9 December 2017.

40. Sun, H.; Li, B.; Dan, Z.; Hu, W.; Du, B.; Yang, W.; Wan, J. Multi-level feature interaction and efficient non-local information enhanced channel attention for image dehazing. Neural Netw. 2023, 163, 10–27.

41. Pan, H.; Guo, Y.; Deng, Q.; Yang, H.; Chen, J.; Chen, Y. Improving fine-tuning of self-supervised models with contrastive initialization. Neural Netw. 2023, 159, 198–207.

42. Mousavi, S.; Afghah, F.; Acharya, U.R. SleepEEGNet: Automated sleep stage scoring with sequence to sequence deep learning approach. PLoS ONE 2019, 14, e0216456.

43. Supratak, A.; Dong, H.; Wu, C.; Guo, Y. DeepSleepNet: A model for automatic sleep stage scoring based on raw single-channel EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1998–2008.

44. Perslev, M.; Jensen, M.; Darkner, S.; Jennum, P.J.; Igel, C. U-Time: A fully convolutional network for time series segmentation applied to sleep staging. Adv. Neural Inf. Process. Syst. 2019, 32, 4415–4426.

45. Phan, H.; Mikkelsen, K.; Chén, O.Y.; Koch, P.; Mertins, A.; De Vos, M. Sleeptransformer: Automatic sleep staging with interpretability and uncertainty quantification. IEEE Trans. Biomed. Eng. 2022, 69, 2456–2467.

46. Sun, S.; Li, C.; Lv, N.; Zhang, X.; Yu, Z.; Wang, H. Attention based convolutional network for automatic sleep stage classification. Biomed. Eng./Biomed. Tech. 2021, 66, 335–343.

47. Phan, H.; Andreotti, F.; Cooray, N.; Chén, O.Y.; De Vos, M. SeqSleepNet: End-to-end hierarchical recurrent neural network for sequence-to-sequence automatic sleep staging. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 400–410.

48. Sarkar, P.; Etemad, A. Self-supervised ECG representation learning for emotion recognition. IEEE Trans. Affect. Comput. 2020, 13, 1541–1554.

49. Oord, A.v.d.; Li, Y.; Vinyals, O. Representation learning with contrastive predictive coding. arXiv 2018, arXiv:1807.03748.

50. Saeed, A.; Ozcelebi, T.; Lukkien, J. Multi-task self-supervised learning for human activity detection. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2019, 3, 1–30.

51. Haresamudram, H.; Beedu, A.; Agrawal, V.; Grady, P.L.; Essa, I.; Hoffman, J.; Plötz, T. Masked reconstruction based self-supervision for human activity recognition. In Proceedings of the 2020 ACM International Symposium on Wearable Computers, Virtual, 12–16 September 2020; pp. 45–49.

52. Wang, J.; Zhu, T.; Chen, L.L.; Ning, H.; Wan, Y. Negative selection by clustering for contrastive learning in human activity recognition. IEEE Internet Things J. 2023, 10, 10833–10844.

53. Singh, S.P.; Sharma, M.K.; Lay-Ekuakille, A.; Gangwar, D.; Gupta, S. Deep ConvLSTM with self-attention for human activity decoding using wearable sensors. IEEE Sensors J. 2020, 21, 8575–8582.

54. Liu, D.; Wang, T.; Liu, S.; Wang, R.; Yao, S.; Abdelzaher, T. Contrastive self-supervised representation learning for sensing signals from the time-frequency perspective. In Proceedings of the 2021 International Conference on Computer Communications and Networks (ICCCN), Athens, Greece, 19–22 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–10.

| Dataset | Method | ACC (%) | MF1 (%) |
|---|---|---|---|
| SleepEDF-78 | SLEEPEEG [42] | 80 | 73.6 |
| | DEEPSLEEP [43] | 80.8 | 74.2 |
| | U-TIME [44] | 81.3 | 76.3 |
| | transformer [45] | 81.4 | 74.3 |
| | 1-MAXCNN [46] | 81.9 | 73.8 |
| | SEQSLEEP [47] | 82.6 | 76.4 |
| | Ours | 84.6 | 79.0 |
| SleepEDF-20 | SSL-ECG [48] | 74.58 | 65.44 |
| | SIMCLR [35] | 78.91 | 68.6 |
| | CPC [49] | 82.82 | 73.94 |
| | TS-TCC [26] | 83 | 73.57 |
| | Ours | 85.55 | 75.53 |
| UCI-HAR | SSL-ECG [48] | 65.34 | 63.75 |
| | SIMCLR [35] | 80.97 | 80.19 |
| | Multi-task SSL [50] | N/A | 89.81 |
| | MaskedRe [51] | N/A | 81.89 |
| | ClusterCLHAR [52] | N/A | 92.63 |
| | DeepConvLSTM [53] | 82.6 | 76.4 |
| | CPC [49] | 83.85 | 83.27 |
| | TS-TCC [26] | 90.37 | 90.38 |
| | TNC [34] | 92.03 | N/A |
| | STF-CSL [54] | 93.96 | 94.10 |
| | Ours | 94.26 | 94.12 |
| Epilepsy | SSL-ECG [48] | 93.72 | 89.15 |
| | SIMCLR [35] | 96.05 | 93.53 |
| | CPC [49] | 96.61 | 94.44 |
| | TS-TCC [26] | 97.23 | 95.54 |
| | Ours | 97.12 | 96.25 |
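The comparison above reports accuracy (ACC) and macro-averaged F1 (MF1). Assuming the standard definitions, for a K-class confusion matrix C whose entry C_ij counts samples of true class i predicted as class j, per-class precision (PR), recall (RE), and F1 and the two summary scores are:

```latex
\begin{aligned}
\mathrm{PR}_k &= \frac{C_{kk}}{\sum_{i=1}^{K} C_{ik}}, \qquad
\mathrm{RE}_k = \frac{C_{kk}}{\sum_{j=1}^{K} C_{kj}}, \qquad
\mathrm{F1}_k = \frac{2\,\mathrm{PR}_k\,\mathrm{RE}_k}{\mathrm{PR}_k + \mathrm{RE}_k}, \\
\mathrm{ACC} &= \frac{\sum_{k=1}^{K} C_{kk}}{\sum_{i=1}^{K}\sum_{j=1}^{K} C_{ij}}, \qquad
\mathrm{MF1} = \frac{1}{K}\sum_{k=1}^{K} \mathrm{F1}_k .
\end{aligned}
```

Because MF1 weights every class equally, a poorly recognized minority class, such as N1 in the SleepEDF-78 confusion matrix, lowers MF1 much more than it lowers ACC.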

| SleepEDF-78 (rows: true stage; columns: predicted stage) | W | N1 | N2 | N3 | R | PR (%) | RE (%) | F1 (%) |
|---|---|---|---|---|---|---|---|---|
| W | 63,893 | 3562 | 327 | 25 | 640 | 93.6 | 93.3 | 93.5 |
| N1 | 3529 | 9914 | 6182 | 58 | 1839 | 55.8 | 46.1 | 50.5 |
| N2 | 506 | 3071 | 61,406 | 1861 | 2288 | 84.2 | 88.8 | 86.5 |
| N3 | 45 | 33 | 2822 | 10,125 | 14 | 83.8 | 77.7 | 80.6 |
| R | 279 | 1198 | 2157 | 12 | 22,189 | 82.3 | 85.9 | 84.0 |
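To make the derived columns of the SleepEDF-78 confusion matrix reproducible, the following plain-Python sketch recomputes per-class precision (PR), recall (RE), and F1, plus ACC and MF1, from the raw counts, treating rows as true stages and columns as predicted stages. The helper name `per_class_metrics` is illustrative and does not come from the paper.

```python
# SleepEDF-78 counts copied from the confusion matrix (rows: true, columns: predicted)
labels = ["W", "N1", "N2", "N3", "R"]
cm = [
    [63893, 3562, 327, 25, 640],
    [3529, 9914, 6182, 58, 1839],
    [506, 3071, 61406, 1861, 2288],
    [45, 33, 2822, 10125, 14],
    [279, 1198, 2157, 12, 22189],
]

def per_class_metrics(cm):
    """Return a (precision, recall, f1) tuple for every class of a square confusion matrix."""
    k = len(cm)
    out = []
    for i in range(k):
        tp = cm[i][i]
        true_total = sum(cm[i])                       # samples whose true label is i
        pred_total = sum(cm[r][i] for r in range(k))  # samples predicted as i
        pr, re = tp / pred_total, tp / true_total
        f1 = 2 * pr * re / (pr + re)
        out.append((pr, re, f1))
    return out

metrics = per_class_metrics(cm)
acc = sum(cm[i][i] for i in range(len(cm))) / sum(map(sum, cm))
mf1 = sum(f1 for _, _, f1 in metrics) / len(metrics)

for name, (pr, re, f1) in zip(labels, metrics):
    print(f"{name}: PR={100 * pr:.1f} RE={100 * re:.1f} F1={100 * f1:.1f}")
print(f"ACC={100 * acc:.1f} MF1={100 * mf1:.1f}")
```

Rounded to one decimal, the output matches the PR, RE, and F1 columns of the table and gives ACC = 84.6 and MF1 = 79.0, the SleepEDF-78 scores listed for "Ours" in the comparison table.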

| Masking Ratio (SleepEDF-78) | ACC (%) | MF1 (%) |
|---|---|---|
| 0 | 83.5 | 76.9 |
| 0.1 | 83.9 | 77.5 |
| 0.25 | 84.6 | 79.0 |
| 0.3 | 84.3 | 78.8 |
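The ablation above varies the masking ratio, but this section does not state how the ratio constrains the learnable mask. The NumPy sketch below shows one plausible reading, stated purely as an assumption: a hypothetical masking network assigns a score to every time step, and for a ratio r the ceil(r * T) highest-scoring steps of a length-T series are masked (set to zero on all channels). The names `topk_time_mask` and `apply_mask` are illustrative, not the authors'.

```python
import numpy as np

def topk_time_mask(scores, ratio):
    """Boolean mask hiding the ceil(ratio * T) highest-scoring time steps per sample.

    scores: (batch, T) per-step scores, assumed to come from a hypothetical
    masking network; ratio in [0, 1], where 0 disables masking.
    """
    batch, t = scores.shape
    k = int(np.ceil(ratio * t))
    mask = np.zeros((batch, t), dtype=bool)
    if k == 0:
        return mask
    # Indices of the k largest scores in each row (order within those k is arbitrary)
    top = np.argpartition(-scores, k - 1, axis=1)[:, :k]
    np.put_along_axis(mask, top, True, axis=1)
    return mask

def apply_mask(x, mask, fill=0.0):
    """Replace masked steps of x (batch, channels, T) with `fill` on every channel."""
    return np.where(mask[:, None, :], fill, x)

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 3, 128))      # toy batch: 4 three-channel series, T = 128
scores = rng.standard_normal((4, 128))    # stand-in for masking-network scores
for r in (0.0, 0.1, 0.25, 0.3):           # ratios from the ablation table
    m = topk_time_mask(scores, r)
    print(r, m.sum(axis=1))
```

With T = 128, the ratios 0.1, 0.25, and 0.3 mask 13, 32, and 39 steps per sample. A hard top-k selection is not differentiable with respect to the scores, so a trainable version would need a relaxation such as a straight-through estimator; which mechanism the authors use is not specified here.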

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, J.; Ham, I.; Kim, Y.; Ko, H. Time-Series Representation Feature Refinement with a Learnable Masking Augmentation Framework in Contrastive Learning. Sensors 2024, 24, 7932. https://doi.org/10.3390/s24247932

Lee J, Ham I, Kim Y, Ko H. Time-Series Representation Feature Refinement with a Learnable Masking Augmentation Framework in Contrastive Learning. Sensors. 2024; 24(24):7932. https://doi.org/10.3390/s24247932

Chicago/Turabian Style: Lee, Junyeop, Insung Ham, Yongmin Kim, and Hanseok Ko. 2024. "Time-Series Representation Feature Refinement with a Learnable Masking Augmentation Framework in Contrastive Learning" Sensors 24, no. 24: 7932. https://doi.org/10.3390/s24247932

APA Style: Lee, J., Ham, I., Kim, Y., & Ko, H. (2024). Time-Series Representation Feature Refinement with a Learnable Masking Augmentation Framework in Contrastive Learning. Sensors, 24(24), 7932. https://doi.org/10.3390/s24247932